MambaDPF-Net: A Dual-Path Fusion Network with Selective State Space Modeling for Robust Low-Light Image Enhancement

Abstract

1. Introduction

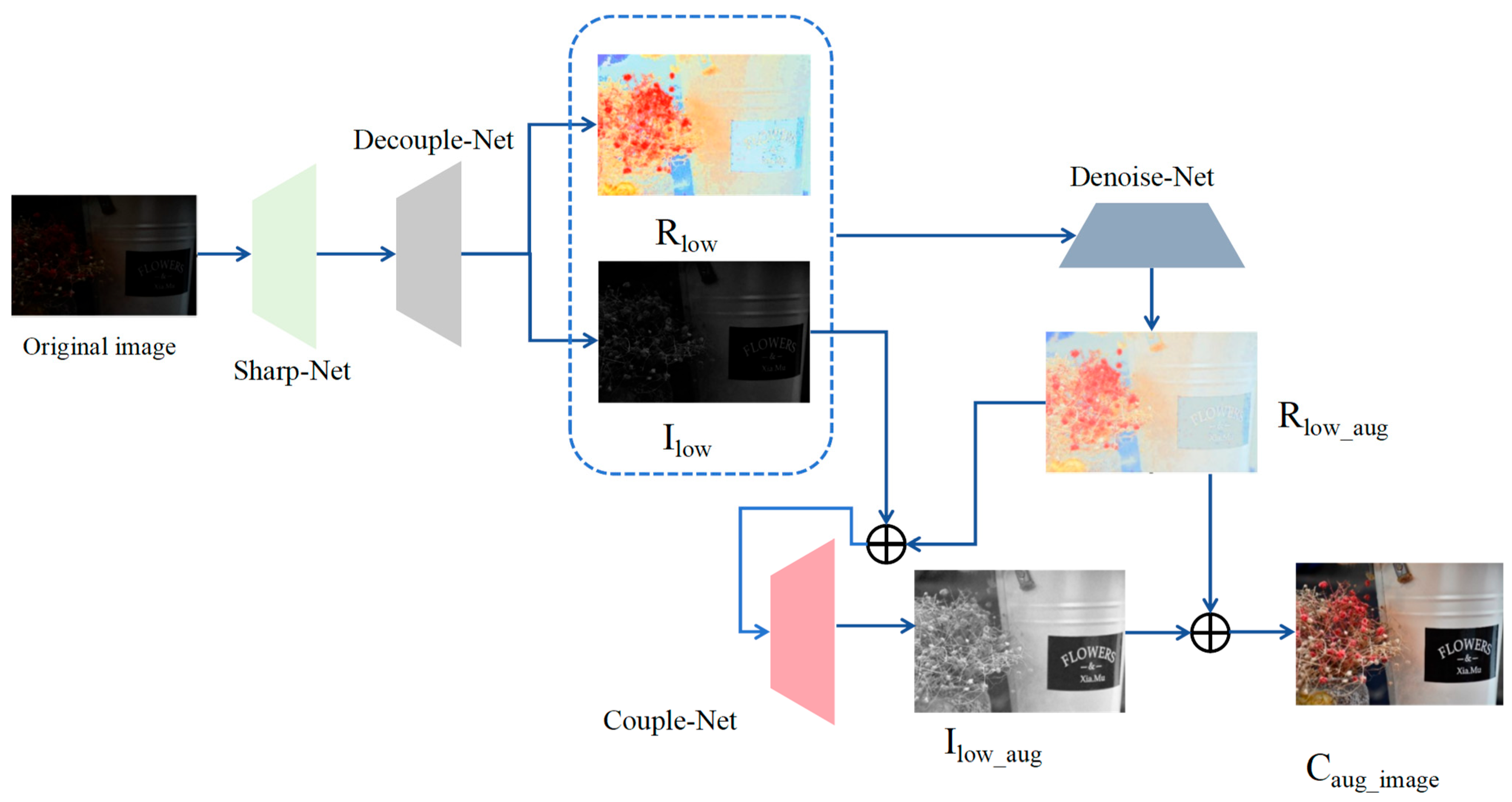

- We propose MambaDPF-Net, a dual-path fusion network for low-light enhancement guided by the Retinex model. It forms an integrated framework in which the sharpening, decoupling, denoising, and coupling sub-networks operate jointly.

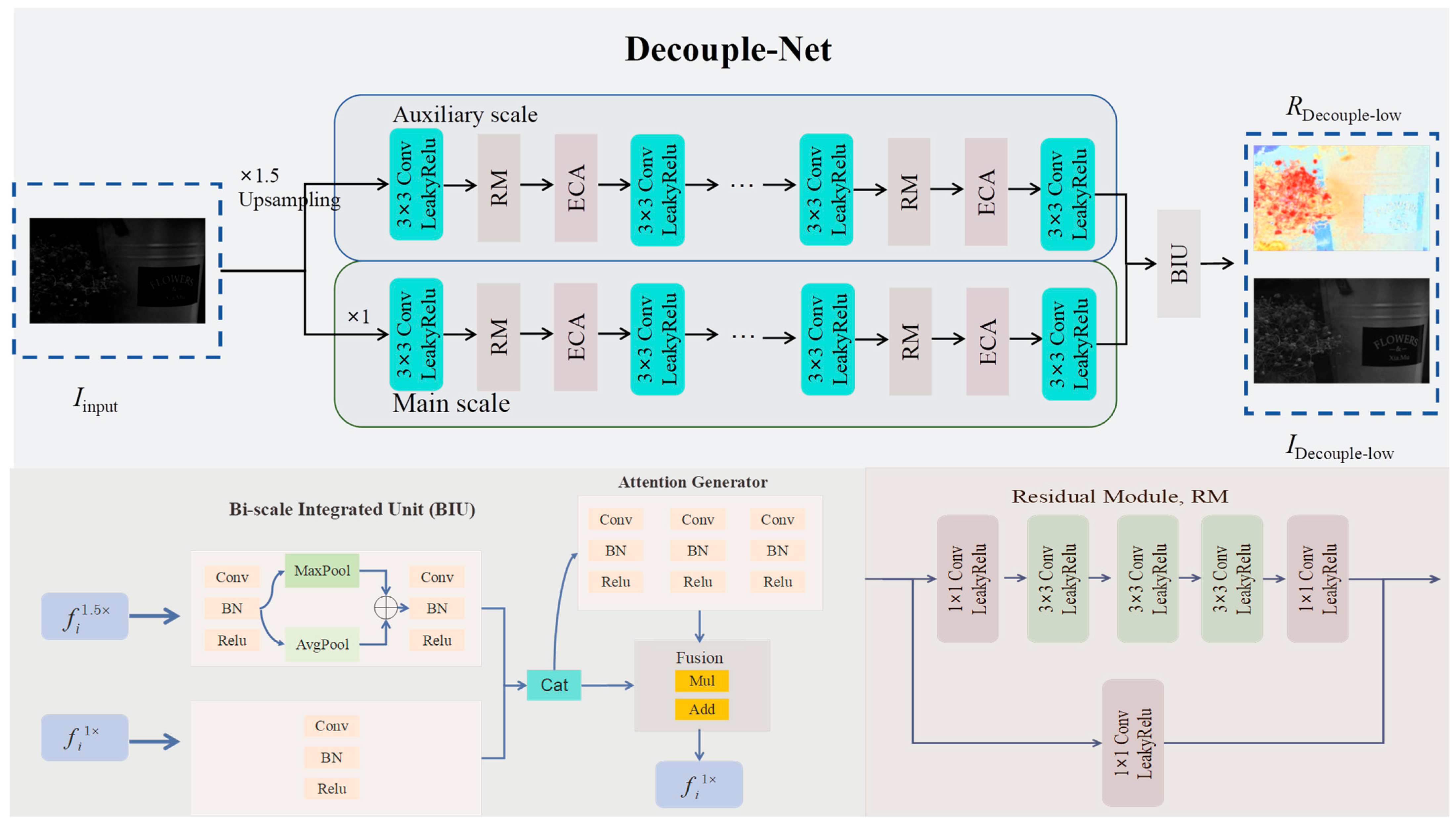

- The decoupling branch employs dual-scale feature aggregation to robustly estimate illumination and reflectance maps, achieving physically interpretable component representations.

- A dedicated denoising branch for the reflectance domain is constructed, incorporating illumination noise correction to suppress artefacts while avoiding excessive smoothing.

- The coupling branch incorporates a Mamba selective state space module. This mechanism is well suited to the non-uniform nature of low-light images and enables content-aware fusion: it models long-range dependencies in structured, well-lit regions while suppressing noise propagation from dark, low-signal areas.
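To make the idea of a selective state space module concrete, the following is a minimal NumPy sketch of a generic Mamba-style selective scan. It is not the Couple-Net implementation from this paper: the function name `selective_scan`, the projection matrices `W_delta`, `W_B`, `W_C`, and all shapes are assumptions chosen for illustration. The point it shows is that the step size and the input/output matrices are computed from each token, so the recurrence can decide per position how much of the incoming signal enters the state.

```python
import numpy as np


def softplus(z):
    # Numerically stable softplus; keeps the step size strictly positive.
    return np.logaddexp(0.0, z)


def selective_scan(x, A, W_delta, W_B, W_C):
    """Generic selective SSM recurrence over a token sequence (illustrative).

    x:       (T, D) input tokens, e.g. flattened image features (assumed layout)
    A:       (D, N) state decay parameters, expected to be negative
    W_delta: (D, D) hypothetical projection giving the per-token step size
    W_B:     (D, N) hypothetical projection giving the per-token input matrix
    W_C:     (D, N) hypothetical projection giving the per-token output matrix
    """
    T, D = x.shape
    N = A.shape[1]
    h = np.zeros((D, N))            # hidden state, one N-dim state per channel
    y = np.empty((T, D))
    for t in range(T):
        delta = softplus(x[t] @ W_delta)       # (D,) input-dependent step size
        B_t = x[t] @ W_B                       # (N,) input-dependent input matrix
        C_t = x[t] @ W_C                       # (N,) input-dependent output matrix
        A_bar = np.exp(delta[:, None] * A)     # (D, N) zero-order-hold decay
        B_bar = delta[:, None] * B_t[None, :]  # (D, N) Euler-discretized input
        h = A_bar * h + B_bar * x[t][:, None]  # state update
        y[t] = h @ C_t                         # (D,) readout
    return y


# Example call on random data (sizes are arbitrary).
rng = np.random.default_rng(0)
T, D, N = 16, 4, 8
x = rng.standard_normal((T, D))
A = -np.abs(rng.standard_normal((D, N)))
y = selective_scan(
    x,
    A,
    rng.standard_normal((D, D)),
    rng.standard_normal((D, N)),
    rng.standard_normal((D, N)),
)
```

In this formulation a small step size leaves the state almost unchanged and largely ignores the current token, while a large step size lets the current token overwrite the state. That gating behaviour is one plausible reading of how a selective module could limit noise propagation from low-signal regions. Production Mamba layers use a fused parallel scan rather than this Python loop.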

2. Related Work

2.1. Traditional Methods

2.2. Deep Learning-Based Methods

3. Approach

3.1. Overview

3.2. Sharp-Net

3.3. Decouple-Net

3.4. Denoise-Net

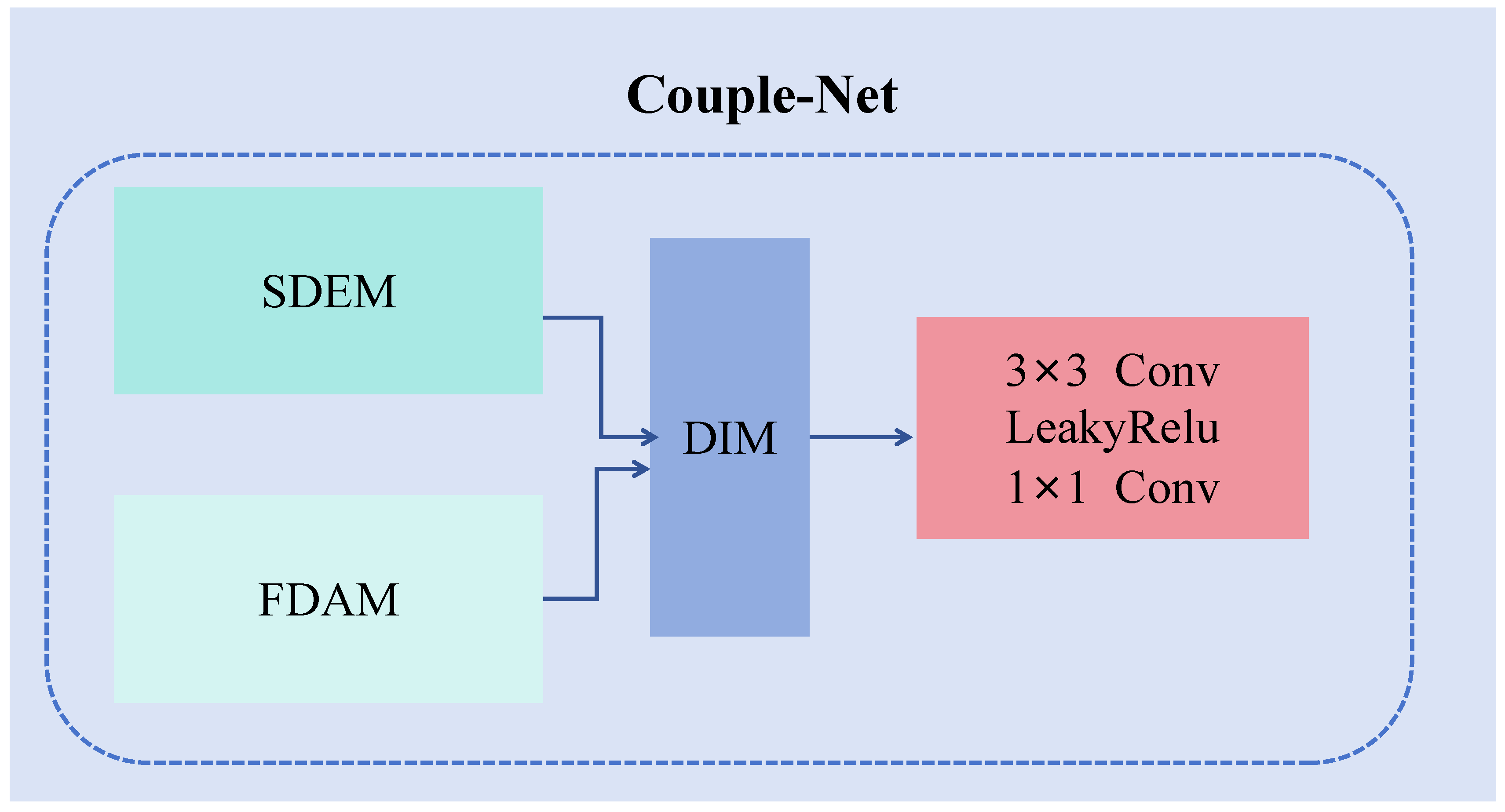

3.5. Couple-Net

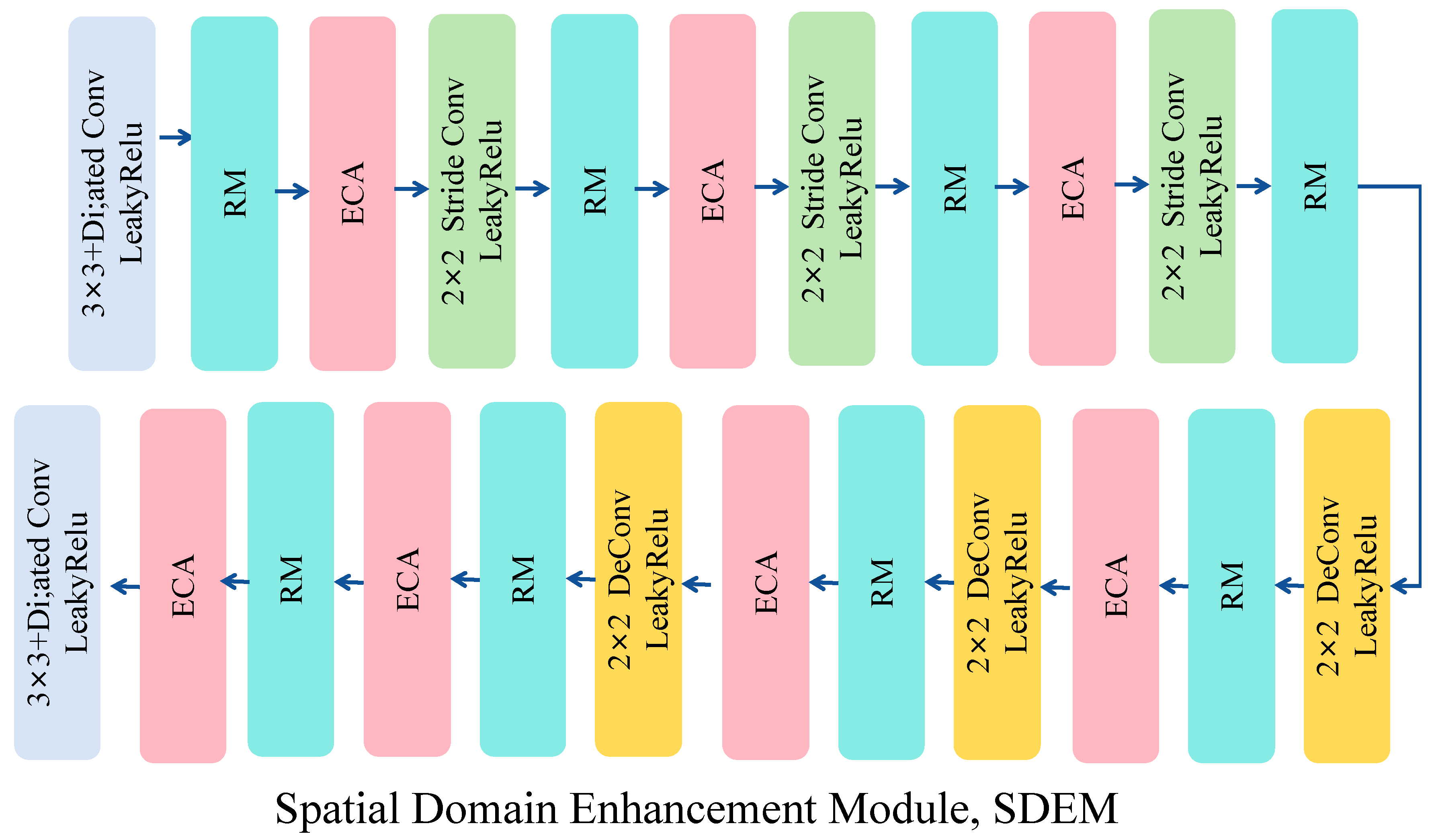

3.5.1. Spatial Domain Enhancement Module (SDEM)

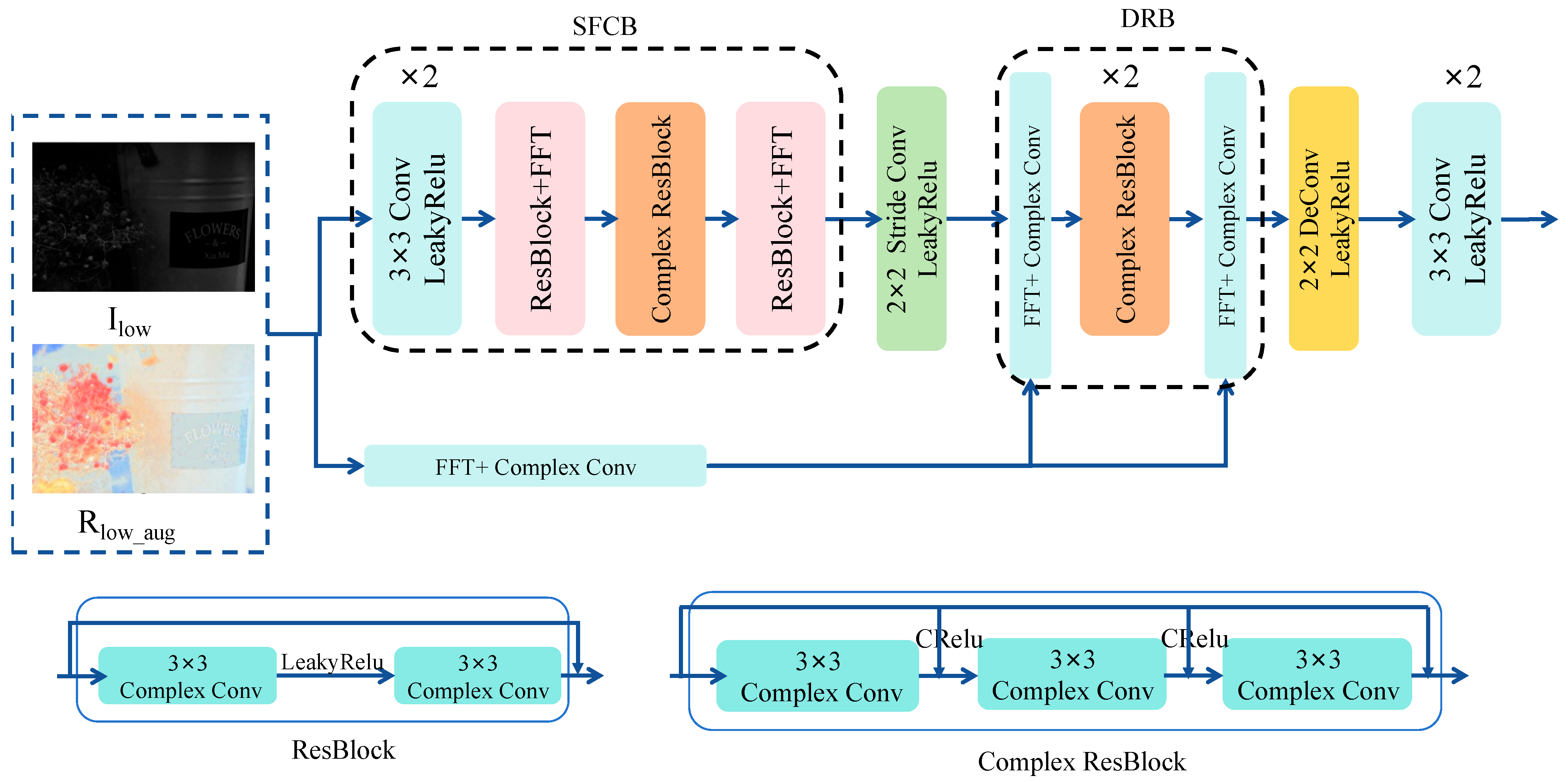

3.5.2. Frequency Domain Augmentation Module (FDAM)

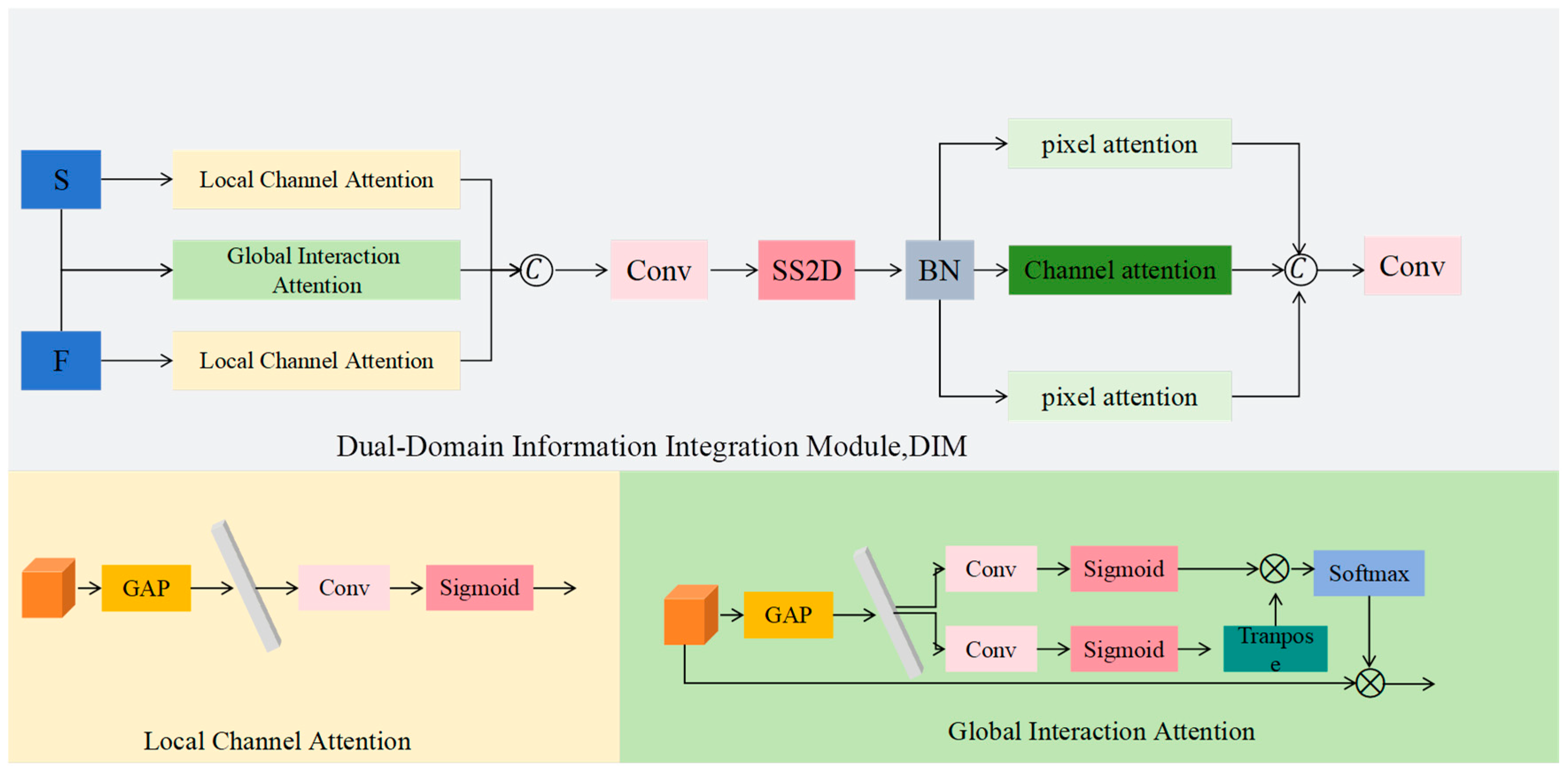

3.5.3. Dual-Domain Information Integration Module (DIM)

3.6. Multi-Task Training Learning Framework

4. Experiments

4.1. Implementation Details

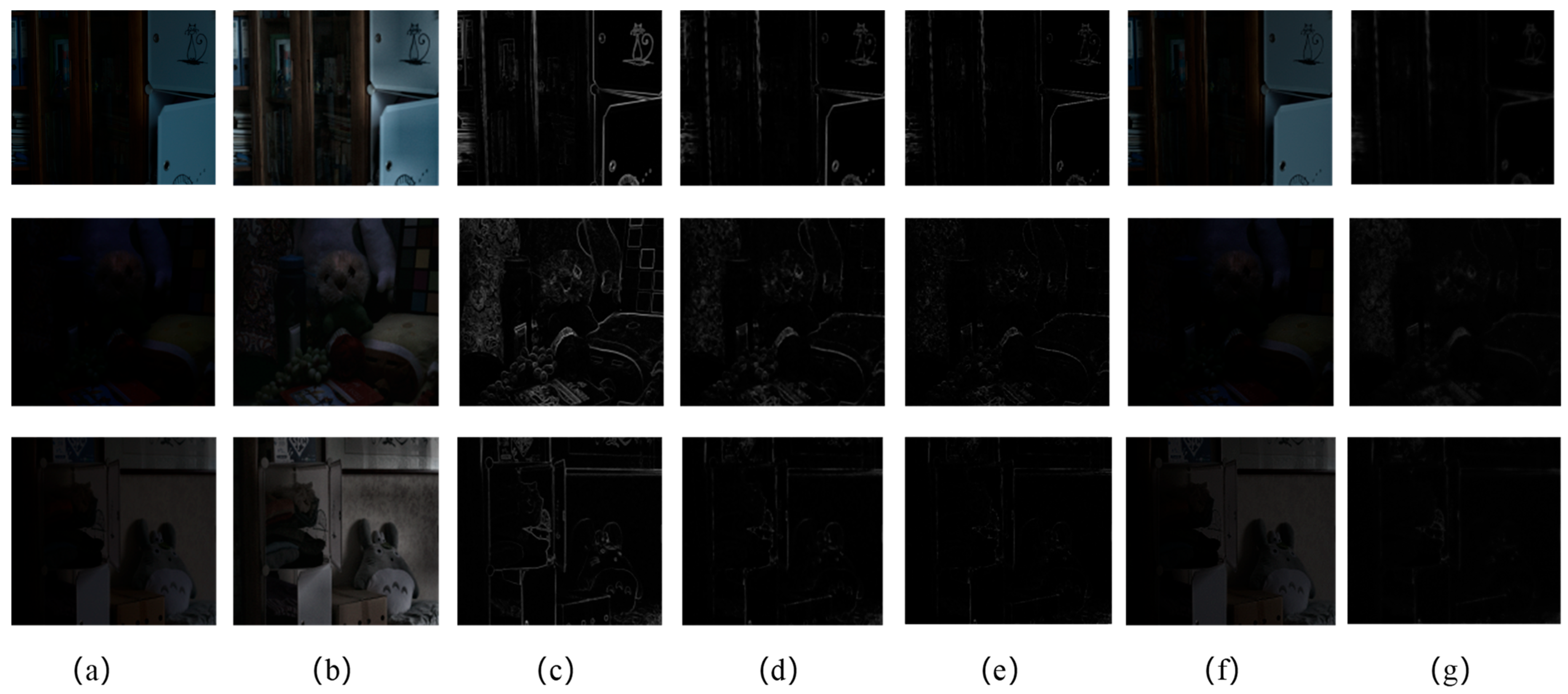

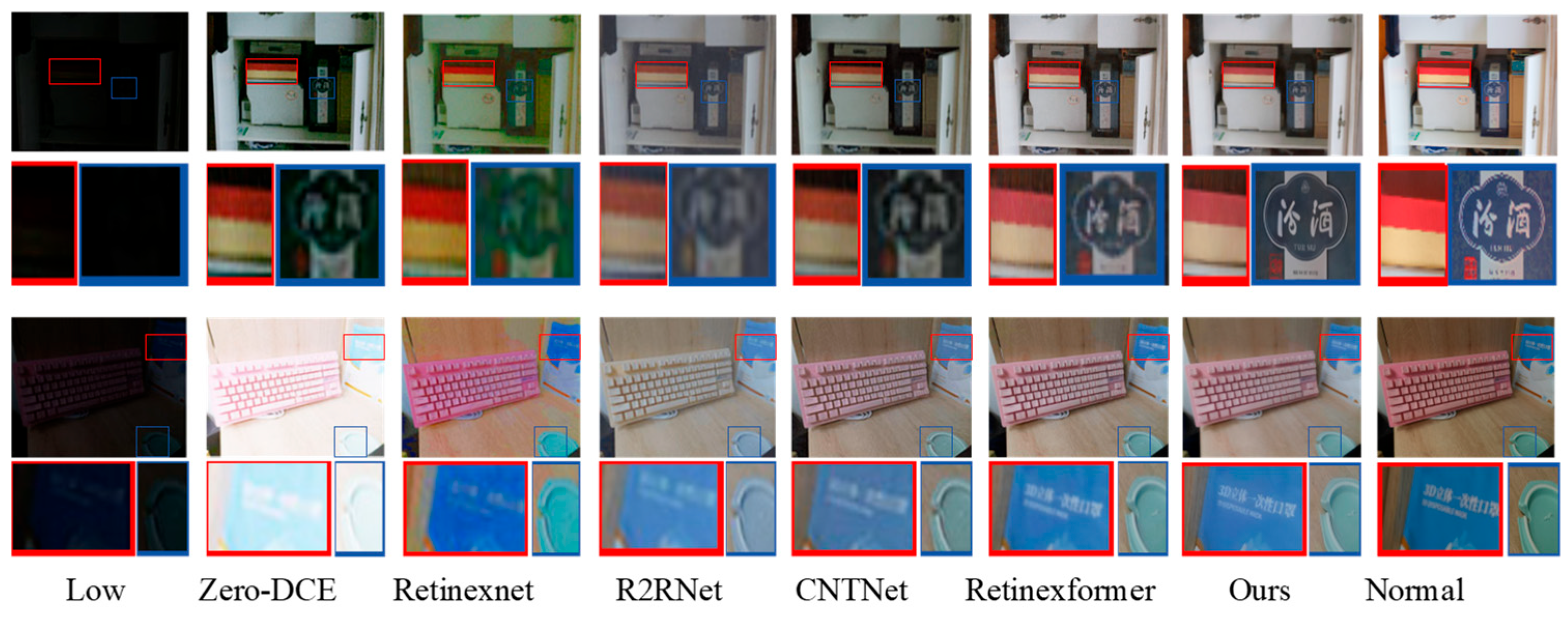

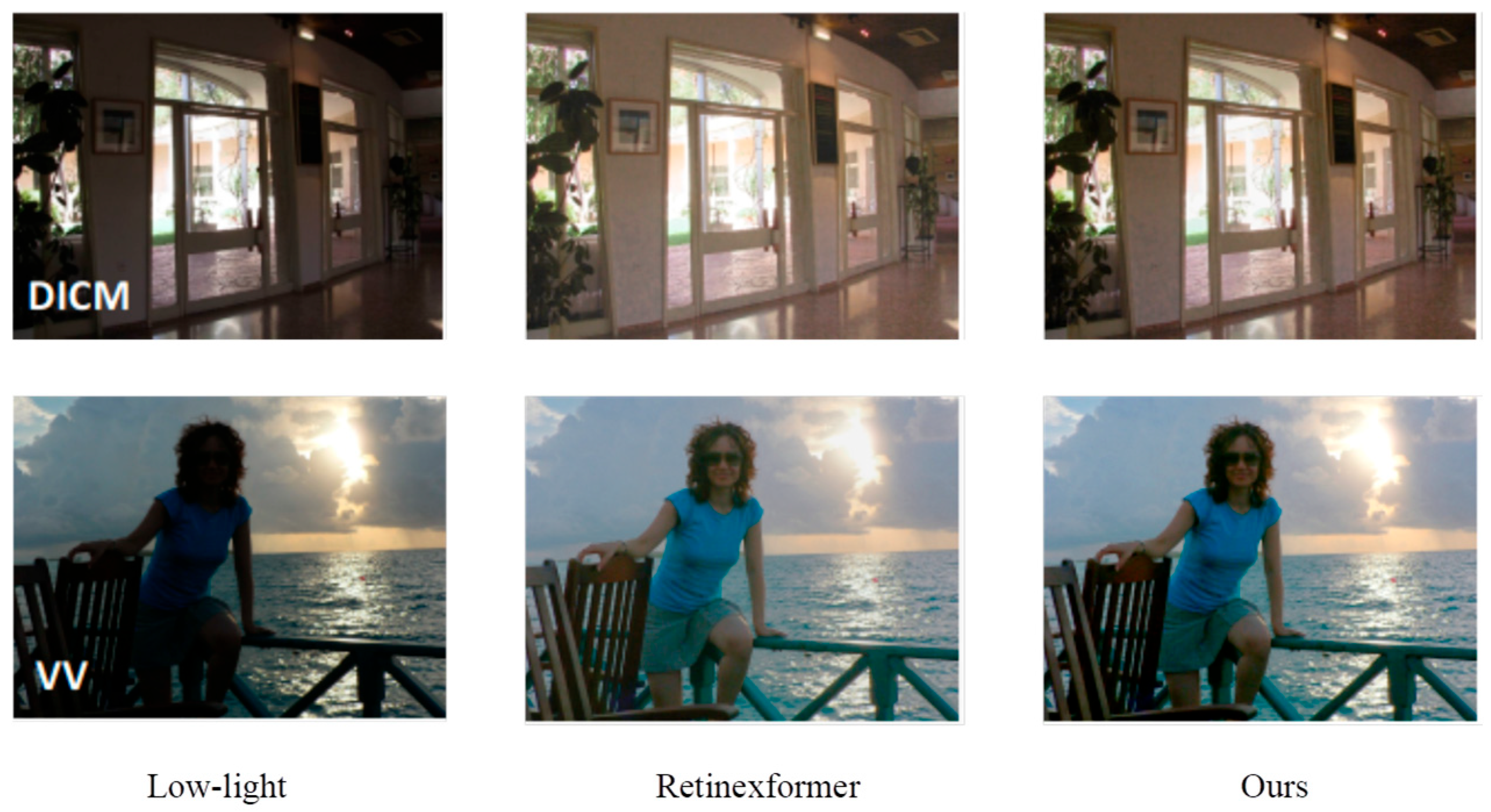

4.2. Comparison with State-of-the-Art Methods on Real Datasets

4.3. Ablation Experiment

| Methods | LOL (PSNR) | LOL (SSIM) | LSRW (PSNR) | LSRW (SSIM) |
|---|---|---|---|---|
| Baseline | 18.131 | 0.712 | 20.207 | 0.816 |
| Baseline + Sharp | 18.374 | 0.728 | 20.211 | 0.816 |
| Baseline + Sharp + BIU | 22.222 | 0.831 | 20.216 | 0.817 |
| Ours | 26.24 | 0.943 | 20.259 | 0.838 |
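For readers less familiar with the PSNR columns, the sketch below shows the standard definition, PSNR = 10 log10(MAX² / MSE), for a single image pair. The function name `psnr` and the assumption that images are floating-point arrays scaled to [0, 1] are illustrative; the exact evaluation protocol used for the table (colour space, data range, averaging over the test set) is not given here.

```python
import numpy as np


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape.

    max_value is the largest possible pixel value (1.0 for images scaled to
    [0, 1], 255 for 8-bit images); which one applies is an assumption.
    """
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


# A uniform error of 0.1 on a [0, 1] image gives MSE = 0.01, i.e. 20 dB.
clean = np.zeros((4, 4, 3))
noisy = clean + 0.1
value = psnr(clean, noisy)
```

Because the scale is logarithmic, a gap of about 4 dB between two results on the same image pair corresponds to roughly 2.5 times lower mean squared error. Dataset-level PSNR is usually an average of per-image values, so this ratio is only indicative for the rows above.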

4.4. Complexity and Efficiency Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNNs | Convolutional Neural Networks |
| BIU | Bimodal Integration Unit |
| RM | Residual Module |
| SDEM | Spatial Domain Enhancement Module |
| FDAM | Frequency Domain Augmentation Module |
| DIM | Dual-Domain Information Integration Module |
| SFCB | Spatial-Frequency Conversion Block |
| DRB | Detail Recovery Block |
| CAM | Cross-Attention Module |
| LCA | Local Channel Attention |
| GISA | Global Interaction Semantic Attention |

References

1. Zhang, B.; Shu, D.; Fu, P.; Yao, S.; Chong, C.; Zhao, X.; Yang, H. Multi-Feature Fusion YOLO Approach for Fault Detection and Location of Train Running Section. Electronics 2025, 14, 3430.

2. Rodríguez-Lira, D.-C.; Córdova-Esparza, D.-M.; Terven, J.; Romero-González, J.-A.; Alvarez-Alvarado, J.M.; González-Barbosa, J.-J.; Ramírez-Pedraza, A. Recent Developments in Image-Based 3D Reconstruction Using Deep Learning: Methodologies and Applications. Electronics 2025, 14, 3032.

3. Guan, Y.; Liu, M.; Chen, X.; Wang, X.; Luan, X. FreqSpatNet: Frequency and Spatial Dual-Domain Collaborative Learning for Low-Light Image Enhancement. Electronics 2025, 14, 2220.

4. Sun, Y.; Hu, S.; Xie, K.; Wen, C.; Zhang, W.; He, J. Enhanced Deblurring for Smart Cabinets in Dynamic and Low-Light Scenarios. Electronics 2025, 14, 488.

5. Choi, D.H.; Jang, I.H.; Kim, M.H.; Kim, N.C. Color Image Enhancement Based on Single-Scale Retinex with a JND-Based Nonlinear Filter. In Proceedings of the 2007 IEEE International Symposium on Circuits and Systems (ISCAS), New Orleans, LA, USA, 27–30 May 2007.

6. Rahman, Z.; Jobson, D.J.; Woodell, G.A. Multi-Scale Retinex for Color Image Enhancement. In Proceedings of the 3rd IEEE International Conference on Image Processing, Lausanne, Switzerland, 19 September 1996; pp. 1003–1006.

7. Parthasarathy, S.; Sankaran, P. An Automated Multi Scale Retinex with Color Restoration for Image Enhancement. In Proceedings of the 2012 National Conference on Communications (NCC), Kharagpur, India, 3–5 February 2012.

8. Fu, Y.; Hong, Y.; Chen, L.; You, S. LE-GAN: Unsupervised Low-Light Image Enhancement Network Using Attention Module and Identity Invariant Loss. Knowl. Based Syst. 2022, 240, 108010.

9. Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-Reference Deep Curve Estimation for Low-Light Image Enhancement. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 1780–1789.

10. Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. EnlightenGAN: Deep Light Enhancement without Paired Supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349.

11. Lore, K.G.; Akintayo, A.; Sarkar, S. LLNet: A Deep Autoencoder Approach to Natural Low-Light Image Enhancement. Pattern Recognit. 2017, 61, 650–662.

12. Lv, F.; Lu, F.; Wu, J.; Lim, C.S. MBLLEN: Low-Light Image/Video Enhancement Using CNNs. In Proceedings of the British Machine Vision Conference (BMVC 2018), Newcastle, UK, 3–6 September 2018.

13. Moran, S.; Marza, P.; McDonagh, S.; Parisot, S.; Slabaugh, G. DeepLPF: Deep Local Parametric Filters for Image Enhancement. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

14. Sharma, A.; Tan, R.T. Nighttime Visibility Enhancement by Increasing the Dynamic Range and Suppression of Light Effects. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Online, 19–25 June 2021; pp. 11977–11986.

15. Hai, J.; Hao, Y.; Zou, F.; Lin, F.; Han, S. Advanced RetinexNet: A Fully Convolutional Network for Low-Light Image Enhancement. Signal Process. Image Commun. 2023, 112, 116916.

16. Zhang, Y.; Zhang, J.; Guo, X. Kindling the Darkness: A Practical Low-Light Image Enhancer. In Proceedings of the 27th ACM International Conference on Multimedia; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1632–1640.

17. Liu, R.; Ma, L.; Zhang, J.; Fan, X.; Luo, Z. Retinex-Inspired Unrolling with Cooperative Prior Architecture Search for Low-Light Image Enhancement. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Online, 19–25 June 2021.

18. Wang, R.; Zhang, Q.; Fu, C.W.; Shen, X.; Zheng, W.S.; Jia, J. Underexposed Photo Enhancement Using Deep Illumination Estimation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

19. Jobson, D.J.; Rahman, Z.; Woodell, G.A. A Multiscale Retinex for Bridging the Gap between Color Images and the Human Observation of Scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

20. Guo, X.; Li, Y.; Ling, H. LIME: Low-Light Image Enhancement Via Illumination Map Estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

21. Fu, X.; Zeng, D.; Huang, Y.; Liao, Y.; Ding, X.; Paisley, J. A Fusion-Based Enhancing Method for Weakly Illuminated Images. Signal Process. 2016, 129, 82–96.

22. Li, M.; Liu, J.; Yang, W.; Sun, X.; Guo, Z. Structure-Revealing Low-Light Image Enhancement Via Robust Retinex Model. IEEE Trans. Image Process. 2018, 27, 2828–2841.

23. Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep Retinex Decomposition for Low-Light Enhancement. arXiv 2018, arXiv:1808.04560.

24. Subramani, B.; Veluchamy, M. Fuzzy Gray Level Difference Histogram Equalization for Medical Image Enhancement. J. Med. Syst. 2020, 44, 103.

25. Weng, J.; Yan, Z.; Tai, Y.; Qian, J.; Yang, J.; Li, J. MambaLLIE: An Efficient Low-Light Image Enhancement Model Based on State Space. arXiv 2024, arXiv:2405.16105v1.

26. Li, C.; Guo, C.; Loy, C.C. Learning to Enhance Low-Light Image Via Zero-Reference Deep Curve Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4225–4238.

27. Yang, S.; Zhou, D. Efficient Low-Light Image Enhancement with Model Parameters Scaled Down to 0.02M. Int. J. Mach. Learn. Cyber. 2024, 15, 1575–1589.

28. Zhou, H.; Zeng, X.; Lin, B.; Li, D.; Ali Shah, S.A.; Liu, B.; Guo, K.; Guo, Z. Polarization Motivating High-Performance Weak Targets’ Imaging Based on a Dual-Discriminator GAN. Opt. Express 2024, 32, 3835–3851.

29. Fan, X.; Ding, M.; Lv, T.; Sun, X.; Lin, B.; Guo, Z. Meta-Dnet-Upi: Efficient Underwater Polarization Imaging Combining Deformable Convolutional Networks and Meta-Learning. Opt. Laser Technol. 2025, 187, 112900.

30. Lin, B.; Qiao, L.; Fan, X.; Guo, Z. Large-Range Polarization Scattering Imaging with an Unsupervised Multi-Task Dynamic-Modulated Framework. Opt. Lett. 2025, 50, 3413–3416.

31. Chen, S.; Yang, X. An Enhanced Adaptive Sobel Edge Detector Based on Improved Genetic Algorithm and Non-Maximum Suppression. In Proceedings of the 2021 China Automation Congress (CAC), Beijing, China, 22–24 October 2021.

32. Hai, J.; Xuan, Z.; Yang, R.; Hao, Y.; Zou, F.; Lin, F.; Han, S. R2RNet: Low-Light Image Enhancement Via Real-Low to Real-Normal Network. J. Vis. Commun. Image Represent. 2023, 90, 103712.

33. Cai, Y.; Bian, H.; Lin, J.; Wang, H.; Timofte, R.; Zhang, Y. Retinexformer: One-Stage Retinex-Based Transformer for Low-Light Image Enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023.

| Methods | LOL-v1 PSNR | LOL-v1 SSIM | LOL-v2 (real) PSNR | LOL-v2 (real) SSIM | LSRW PSNR | LSRW SSIM |
|---|---|---|---|---|---|---|
| RetinexNet [23] | 16.77 | 0.560 | 15.47 | 0.567 | 16.76 | 0.566 |
| R2RNet [32] | 20.21 | 0.816 | 18.96 | 0.772 | 20.20 | 0.820 |
| Retinexformer [33] | 25.16 | 0.845 | 25.67 | 0.930 | 19.54 | 0.586 |
| Ours | 25.31 | 0.849 | 26.24 | 0.943 | 20.259 | 0.838 |

| Methods | LIME | VV | DICM |
|---|---|---|---|
| RetinexNet [23] | 4.361 | 3.816 | 4.209 |
| Zero-DCE [9] | 3.912 | 3.217 | 2.835 |
| R2RNet [32] | 3.176 | 3.093 | 3.503 |
| Proposed | 3.042 | 3.009 | 2.713 |

| Methods | Parameters (M) | FLOPs (G) | FPS |
|---|---|---|---|
| R2RNet | 1.5 | 7.5 | 20 |
| Retinexformer | 1.6 | 15.6 | 35 |
| Ours | 2.0 | 13.7 | 30 |
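The FPS column can be read as per-frame latency: 20, 35, and 30 FPS correspond to 50.0 ms, about 28.6 ms, and about 33.3 ms per frame. The sketch below shows one common way such throughput figures are measured and converted. The helper names `measure_fps` and `latency_ms`, the warm-up and iteration counts, and the stand-in workload are all assumptions; the hardware and input resolution behind the table are not stated here.

```python
import time


def measure_fps(run_once, warmup=3, iters=20):
    """Estimate frames per second of a zero-argument callable.

    run_once stands in for one forward pass on one image (hypothetical).
    Warm-up calls are excluded so one-time setup cost does not skew timing.
    """
    for _ in range(warmup):
        run_once()
    start = time.perf_counter()
    for _ in range(iters):
        run_once()
    elapsed = time.perf_counter() - start
    return iters / elapsed


def latency_ms(fps):
    """Convert a throughput in frames per second to milliseconds per frame."""
    return 1000.0 / fps


# Stand-in workload; replace with a real model call when benchmarking.
fps = measure_fps(lambda: sum(i * i for i in range(10_000)))
per_frame = latency_ms(fps)
```

When timing on a GPU, the device must also be synchronized before reading the clock, otherwise asynchronous kernel launches make the measured FPS look higher than it is.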


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Z.; Yin, S. MambaDPF-Net: A Dual-Path Fusion Network with Selective State Space Modeling for Robust Low-Light Image Enhancement. Electronics 2025, 14, 4533. https://doi.org/10.3390/electronics14224533
