Nonverbal Interactions with Virtual Agents in a Virtual Reality Museum

Abstract

1. Introduction

2. Developing the VA and Virtual Environment

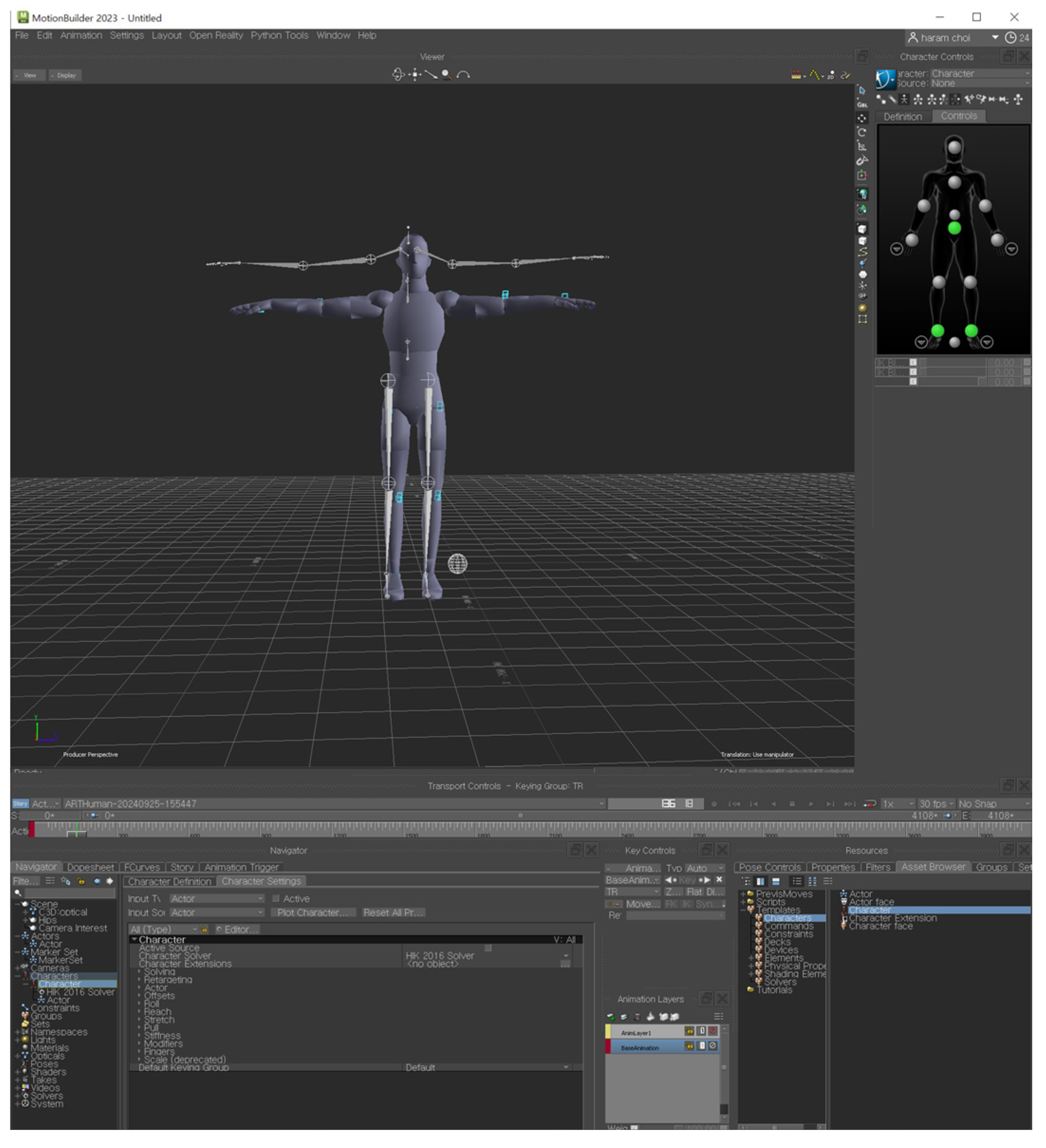

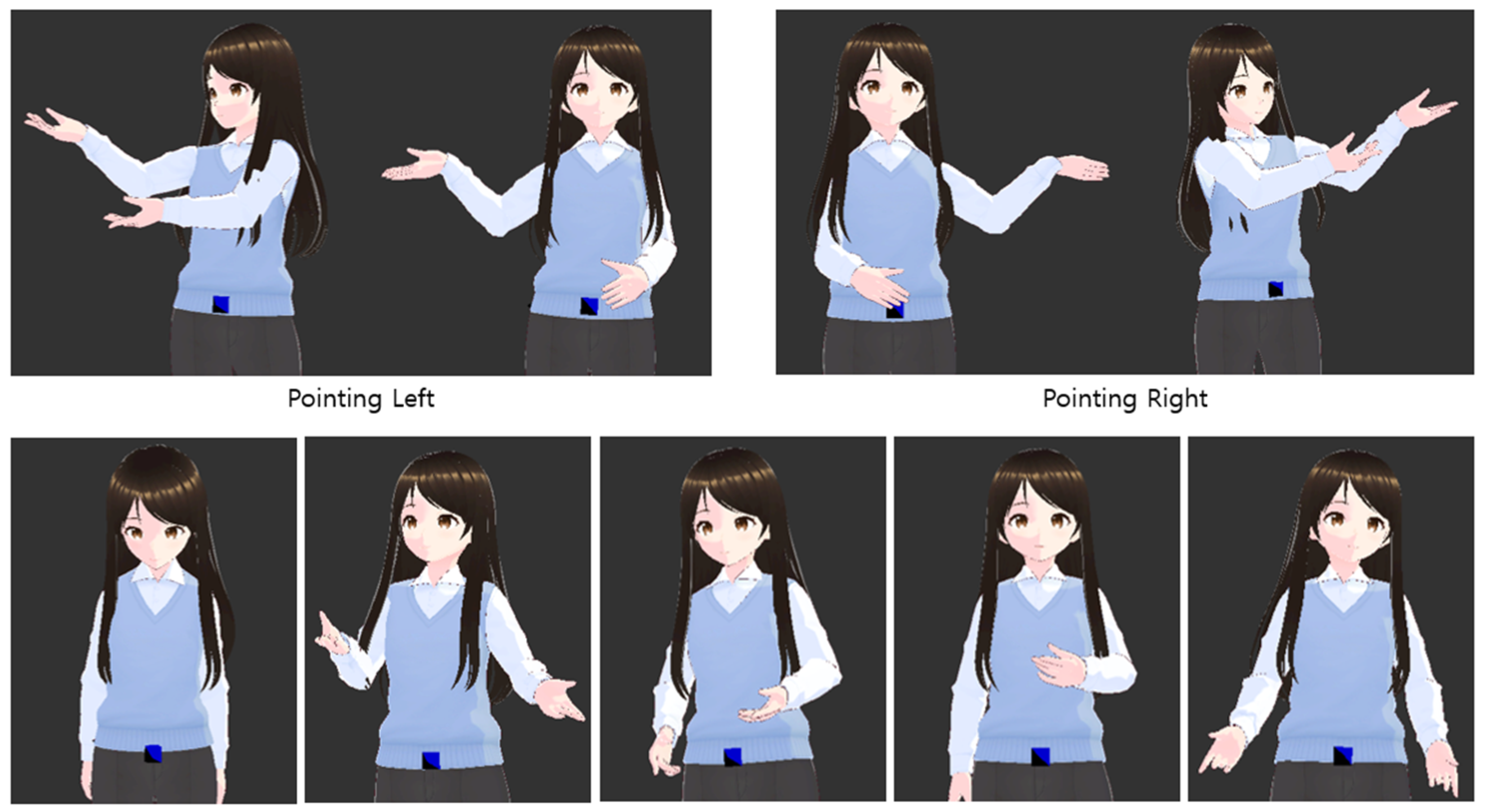

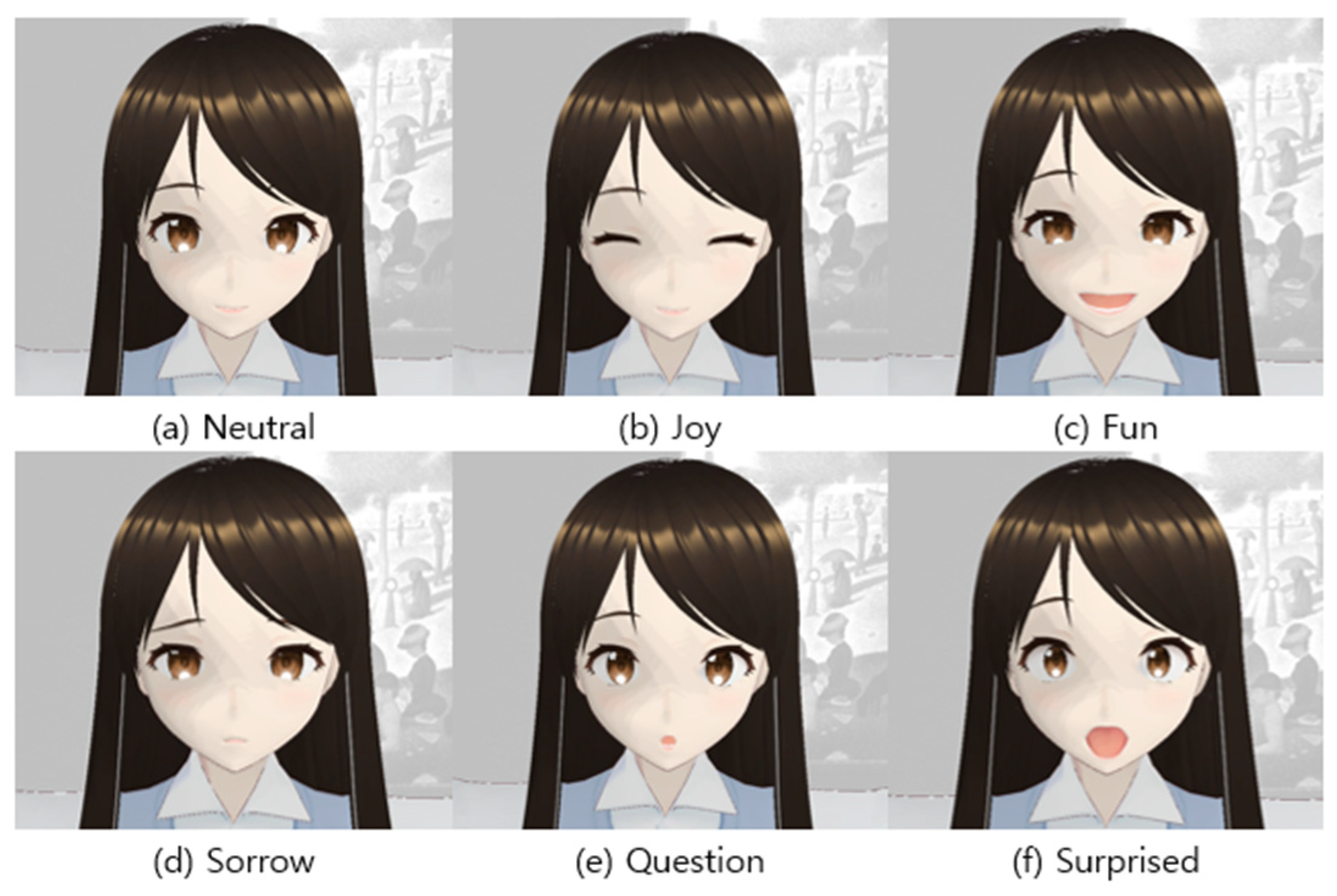

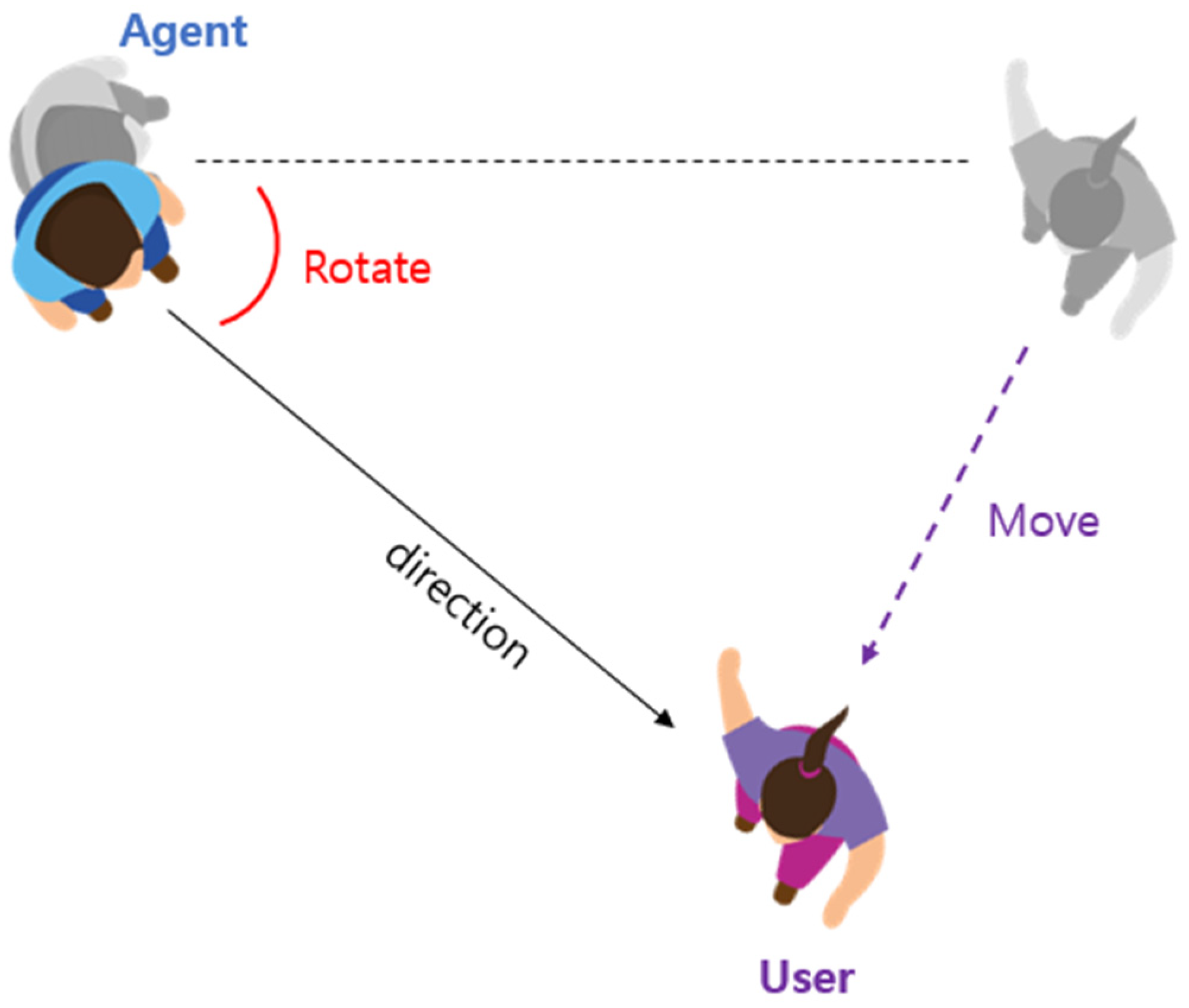

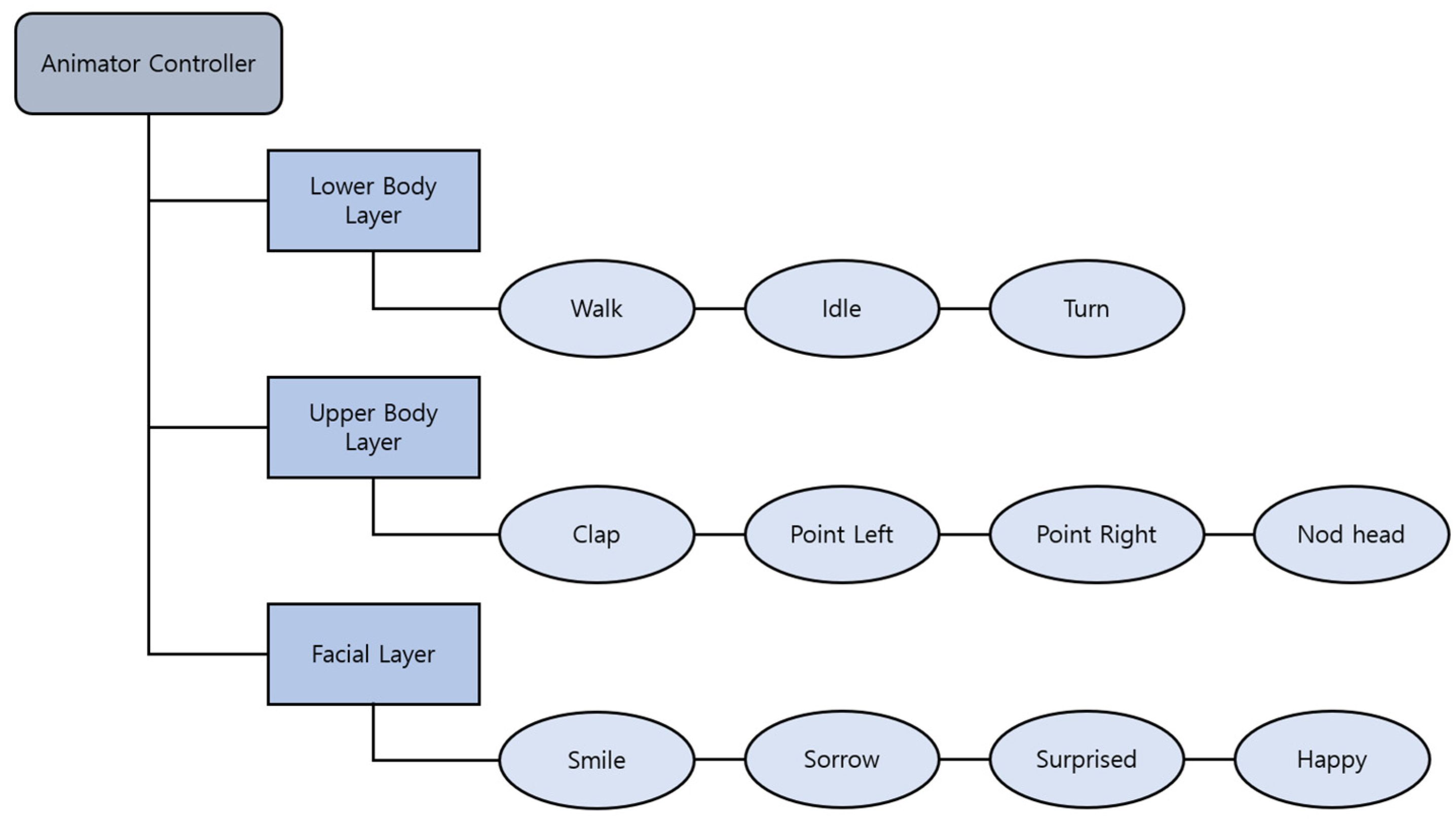

2.1. VA Implementation

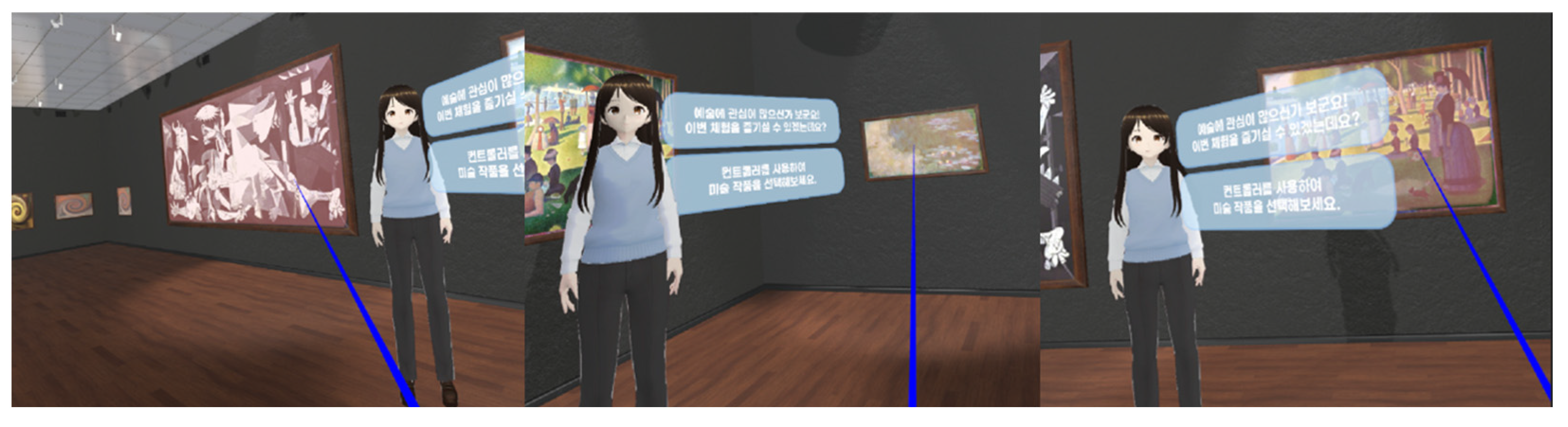

2.2. VRLE

3. Experiment

3.1. Experimental Design

3.2. User Experiment

3.3. Experiment Method

4. Result Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ECA | Embodied conversational agent |
| FIVE | Framework for immersive virtual environments |
| HMD | Head-mounted display |
| IPQ | Igroup presence questionnaire |
| VA | Virtual agent |
| VR | Virtual reality |
| VRLE | Virtual reality learning environment |


| Situation | BRW (Eyebrow) | EYE (Eyes) | MTH (Mouth) | ALL | Note |
|---|---|---|---|---|---|
| Default | - | - | - | Neutral = 100 | Natural smile |
| End of curation | - | Joy = 80 | - | - | Folded-eye smile |
| Positive | Fun = 70 | Fun = 100 | Fun = 65, Joy = 40 | - | Strong smile |
| Sad | Sorrow = 60 | Sorrow = 50 | Angry = 50 | - | Downturned eyes, brows, and mouth |
| Inquisitive | Surprised = 50 | Sorrow = 100 | - | - | Subtle doubtful expression |
| Surprised | Surprised = 80 | Surprised = 80 | Surprised = 50 | - | Wide eyes and open mouth |
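The expression presets in the table above can be read as a lookup from situation to per-region blendshape weights. The following is a minimal sketch of that reading, not the authors' implementation: the dictionary layout, the key names, the `normalized_weights` helper, and the rescaling from the table's 0 to 100 values to a 0 to 1 range are all assumptions made for illustration.

```python
from typing import Dict

# Weights per facial region on the table's 0-100 scale.
# Region keys (BRW, EYE, MTH, ALL) follow the table's column headers;
# the nested-dict layout itself is a hypothetical choice.
Preset = Dict[str, Dict[str, int]]

EXPRESSION_PRESETS: Dict[str, Preset] = {
    "default": {"ALL": {"Neutral": 100}},
    "end_of_curation": {"EYE": {"Joy": 80}},
    "positive": {
        "BRW": {"Fun": 70},
        "EYE": {"Fun": 100},
        "MTH": {"Fun": 65, "Joy": 40},
    },
    "sad": {
        "BRW": {"Sorrow": 60},
        "EYE": {"Sorrow": 50},
        "MTH": {"Angry": 50},
    },
    "inquisitive": {"BRW": {"Surprised": 50}, "EYE": {"Sorrow": 100}},
    "surprised": {
        "BRW": {"Surprised": 80},
        "EYE": {"Surprised": 80},
        "MTH": {"Surprised": 50},
    },
}


def normalized_weights(situation: str) -> Dict[str, float]:
    """Flatten one preset into {"REGION_Shape": weight in [0, 1]}."""
    preset = EXPRESSION_PRESETS[situation]
    return {
        f"{region}_{shape}": value / 100.0
        for region, shapes in preset.items()
        for shape, value in shapes.items()
    }


if __name__ == "__main__":
    for name in EXPRESSION_PRESETS:
        print(name, normalized_weights(name))
```

Keeping the presets as data rather than branching logic means a new situation can be added by extending the dictionary, and regions that the table marks with "-" are simply absent from the preset.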

| Category | Items |
|---|---|
| Human Likeness | The museum curator closely imitated human behavior. |
| Attractiveness | The museum curator appeared attractive. |
| Eeriness | The museum curator evoked eeriness. |
| Comfort | Interacting with the museum curator felt comfortable. |
| Warmth | The museum curator felt warm and friendly. |

| Category | Items |
|---|---|
| Self-Reported Copresence | I felt like I was sharing the same space as the curator. |
| | I was continuously aware of the curator’s presence during the interaction. |
| Perceived Other’s Copresence | I felt that the curator was aware of my presence. |
| | I felt like the curator was acting like she was with me in the same real space. |
| | I felt that the curator was interacting with me and responding to my actions. |
| Social Presence | Interacting with the curator felt natural. |
| | I felt that the curator understood my emotions and responses. |
| | Interacting with the curator was an enjoyable and meaningful experience. |
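A questionnaire grouped like the one above is commonly scored by averaging the item ratings within each category. The sketch below illustrates that convention only; this excerpt does not state the rating scale or the authors' scoring procedure, so the per-item integer ratings, the `score_subscales` helper, and the example responses are hypothetical.

```python
from statistics import mean
from typing import Dict, List

# Number of items per category, counted from the questionnaire table.
SUBSCALE_SIZES: Dict[str, int] = {
    "Self-Reported Copresence": 2,
    "Perceived Other's Copresence": 3,
    "Social Presence": 3,
}


def score_subscales(responses: List[int]) -> Dict[str, float]:
    """Average one participant's ratings per category, in table order."""
    expected = sum(SUBSCALE_SIZES.values())
    if len(responses) != expected:
        raise ValueError(f"expected {expected} ratings, got {len(responses)}")
    scores: Dict[str, float] = {}
    start = 0
    for name, size in SUBSCALE_SIZES.items():
        scores[name] = mean(responses[start:start + size])
        start += size
    return scores


if __name__ == "__main__":
    # Hypothetical ratings for the eight items, in the order listed.
    example = [5, 4, 4, 3, 5, 4, 4, 3]
    for category, score in score_subscales(example).items():
        print(f"{category}: {score:.2f}")
```

Group-level means and standard deviations would then be computed over these per-participant category scores.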

| Category | Items |
|---|---|
| Spatial Presence | I felt as if I were actually inside the virtual museum. |
| | I was able to intuitively understand the layout of the exhibition space and my position within it. |
| | Moving around in the virtual museum felt similar to walking in a real physical space. |
| | I could easily perceive my position and orientation in the virtual museum. |
| Involvement | I was deeply focused on the exhibits in the virtual museum. |
| | The information and experiences provided by the virtual museum kept me engaged. |
| | Interacting with the interactive elements (such as explanations and guides) in the virtual museum was enjoyable. |
| Experienced Realism | The spatial design of the virtual museum felt realistic. |
| | The information provided by the virtual museum (such as explanations, texts, and audio) was similar to what I would expect from a real museum experience. |

| Category | Group | n | Mean | SD | p |
|---|---|---|---|---|---|
| Uncanny Valley | A | 15 | 4.35 | 0.57 | 0.001 |
| | B | 15 | 3.52 | 0.68 | |
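The group comparison above can be examined directly from the reported summary statistics. This excerpt does not name the statistical test or report an effect size, so the following is only a sketch under the assumption of an independent-samples comparison: it computes Welch's t statistic with its approximate degrees of freedom, plus Cohen's d with a pooled standard deviation. The function names are illustrative.

```python
import math
from typing import Tuple


def welch_t(mean_a: float, sd_a: float, n_a: int,
            mean_b: float, sd_b: float, n_b: int) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    var_a = sd_a ** 2 / n_a  # squared standard error of group A's mean
    var_b = sd_b ** 2 / n_b  # squared standard error of group B's mean
    t = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    df = (var_a + var_b) ** 2 / (
        var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1)
    )
    return t, df


def cohens_d(mean_a: float, sd_a: float, n_a: int,
             mean_b: float, sd_b: float, n_b: int) -> float:
    """Standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)


if __name__ == "__main__":
    # Uncanny Valley row: group A vs. group B, values from the table.
    t, df = welch_t(4.35, 0.57, 15, 3.52, 0.68, 15)
    d = cohens_d(4.35, 0.57, 15, 3.52, 0.68, 15)
    print(f"t = {t:.2f}, df = {df:.1f}, d = {d:.2f}")
```

With the tabulated values this gives t of about 3.62 on roughly 27 degrees of freedom and d of about 1.32. The p-value would come from the t distribution's cumulative distribution function, which is left out to keep the sketch free of third-party dependencies.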

| Category | Group | n | Mean | SD | p |
|---|---|---|---|---|---|
| Self-Reported Copresence | A | 15 | 4.47 | 0.55 | 0.053 |
| | B | 15 | 4.00 | 0.70 | |
| Perceived Other’s Copresence | A | 15 | 4.20 | 0.54 | <0.001 |
| | B | 15 | 3.07 | 0.80 | |
| Social Presence | A | 15 | 4.10 | 0.66 | <0.001 |
| | B | 15 | 2.93 | 0.76 | |

| Category | Group | n | Mean | SD | p |
|---|---|---|---|---|---|
| Spatial Presence | A | 15 | 4.77 | 0.24 | 0.004 |
| | B | 15 | 4.27 | 0.54 | |
| Involvement | A | 15 | 4.73 | 0.29 | <0.001 |
| | B | 15 | 3.64 | 0.82 | |
| Experienced Realism | A | 15 | 4.73 | 0.53 | 0.026 |
| | B | 15 | 4.17 | 0.77 | |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sung, C.; Nam, S. Nonverbal Interactions with Virtual Agents in a Virtual Reality Museum. Electronics 2025, 14, 4534. https://doi.org/10.3390/electronics14224534