Abstract

Network slicing (NS) plays a vital role in enabling flexible and efficient resource allocation, tailored to diverse use cases and network domains. This survey paper explores the synergy between NS and Artificial Intelligence (AI), emphasizing how Machine Learning (ML) techniques can address challenges across the slice life-cycle. A key contribution of this work is an in-depth analysis of AI and primarily ML applications in each phase of the slice life-cycle, delving into their specific tasks and discussing the techniques applied to these tasks. Furthermore, we present a taxonomy based on different slicing criteria, offering a structured perspective to enhance understanding and implementation.

1. Introduction

The 5G and Beyond 5G (B5G) networks are designed to serve different types of users, i.e., verticals, with a plethora of different services. They achieve higher performance in terms of latency, throughput, and reliability, fostering the digital transformation of vertical industries []. The International Telecommunication Union (ITU) categorized 5G services [] into (a) Enhanced Mobile Broadband (eMBB), for capacity enhancement; (b) Ultra-Reliable and Low-Latency Communications (URLLC), for robust connectivity with very low latency; and (c) Massive Machine Type Communications (mMTC), to support low-rate, bursty communication among a huge number of devices.

The diverse nature and different performance requirements of these services have driven both researchers and industry professionals to seek a technology that enables a unified network infrastructure capable of meeting these varied requirements effectively. Network Slicing (NS) has emerged as a solution to this challenge and is widely recognized by academia and industry as a key enabler for the delivery of customized and on-demand network services []. NS exploits Network Function Virtualization (NFV) and Software-Defined Networking (SDN) to create end-to-end, on-demand logical networks, called network slices, each capable of supporting the demands of a specific type of service. Each slice can span all network domains, i.e., the Radio Access Network (RAN), the Transport Network (TN), and the Core Network (CN). It is created to provide a specific service whose performance requirements differ from those of the services provided by other slices.

Each network slice progresses through multiple phases, from its initial request for creation to its eventual decommissioning when it is no longer required. These phases compose the slice life-cycle and have been defined by the 3rd Generation Partnership Project (3GPP) [].

The application of NS involves tasks such as the network slice design, construction, deployment, operation, control, and management. These tasks require real-time analysis of a large volume of complex data, along with dynamic decision making, to ensure that the deployed network slice effectively meets the targeted Quality of Service (QoS) requirements. Given the volume of data to be processed and the time constraints, tasks related to slice creation and operation are not always feasible to perform manually, and hence must be automated [].

In order to address the challenges arising from the application of NS, Artificial Intelligence (AI) and especially Machine Learning (ML) have been widely proposed as key enablers to make its implementation more feasible and efficient. The use of such techniques can automate many tasks related to the creation, deployment, and operation of network slices, as well as enable the optimized real-time reconfiguration of parameters. Building on such research efforts, the adoption of AI/ML methods for NS has also been standardized by leading global Standards Development Organizations (SDOs), which have defined specific use cases for these methods and the requirements they must meet in this context [].

Many research works in the literature propose AI, and mainly ML, methods to deal with different problems arising from the application of NS in 5G and B5G networks. These problems are related to different tasks that need to be performed in each phase of the slice life-cycle. Thus, the proposed AI/ML applications can be associated with specific slice life-cycle phases.

The primary motivation for this work is to fill the gap in the literature by providing a comprehensive survey on the application of ML methods to various tasks across each phase of the network slice life-cycle, as outlined in ref. []. Our goal is to summarize the tasks associated with each phase and the corresponding ML methods proposed to address the challenges related to these tasks. Additionally, we aim to contribute to the broader discourse on intelligent networks, and offer readers a valuable resource that covers the fundamental concepts of both NS and ML.

The main contributions of this work can be summarized as follows:

- We present the state-of-the-art related surveys focusing on the application of ML to NS, and discuss their respective contributions.

- We provide insights about the basic functionalities of NS, as well as existing architectural approaches, and slicing types. Moreover, we present the main technology enablers of NS and the different phases that compose the slice life-cycle.

- We introduce the foundational concepts of ML techniques and their respective categories.

- We present the findings of our extensive literature review on the applications of ML methods to NS. Specifically, for each phase of the life-cycle and each task associated with a phase, we outline the ML applications proposed in the literature, the challenges they address, and the methods employed.

- Whenever feasible, we also associate each proposed application with the different types of slicing, considering the resource allocation approach, use case application, and network domain in which NS is applied.

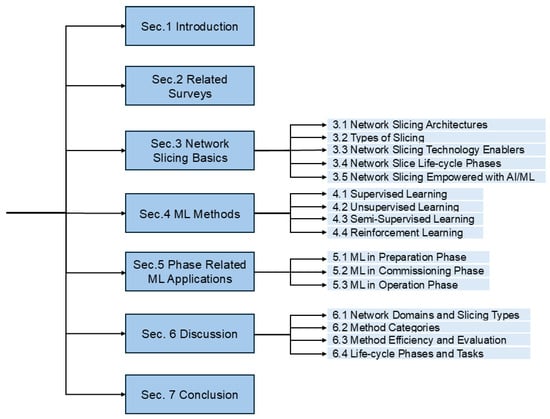

The remainder of this survey is organized as follows: Section 2 presents the state-of-the-art related surveys and their contributions. Section 3 introduces the basics of NS, including the different architectures, slicing types, the technology enablers of NS, the phases of the slice life-cycle, and how AI/ML empowers NS. Section 4 provides key information about ML, including different training approaches and the resulting ML categories. Section 5 highlights the main contributions of this survey, focusing on ML applications proposed to address challenges arising from the deployment of NS for each slice life-cycle phase. Section 6 discusses issues related to specific ML methods identified during this survey, and finally, Section 7 concludes our work. The structure of our survey is illustrated in Figure 1.

Figure 1.

Structure of the survey.

2. Related Surveys

Since the term “network slicing” first appeared, several surveys and reviews have been published, exploring various aspects of the concept. Initially, researchers primarily focused on the fundamental principles of NS, its architectures, and their associated open challenges.

More precisely, Foukas et al. [] presented the 5G architecture and its challenges. Afolabi et al. [] focused on the principal concepts of NS, its enabling technologies and solutions, as well as the current standardization efforts. Kaloxylos [] summarized existing NS solutions, while Zhang [] outlined slicing approaches, key technologies, and open issues. Chahbar et al. [] presented end-to-end 5G NS models. Moreover, the majority of papers reviewed 5G NS architectures that primarily leverage SDN and NFV. The authors in ref. [] focused on the European Telecommunications Standards Institute (ETSI) and Open Networking Foundation (ONF) architectures and their SDN and NFV capabilities, while Barakabitze et al. [] highlighted 5G NS using SDN and NFV solutions.

Several other surveys focus on the mathematical models that can be applied to NS. Debbabi et al. [] investigated NS architectures from an algorithmic perspective. Su et al. [] categorized the mathematical models of NS resource allocation into general, economic, game, prediction, and robustness and failure recovery models. The authors also mentioned that prediction models can use ML algorithms; however, their discussion of this topic is limited. Wijethilaka et al. [] focused on the role of NS in realizing the Internet of Things (IoT) in 5G networks. They also highlighted the need to integrate ML algorithms with NS to achieve automated functionalities, but likewise did not discuss this topic in depth.

Since the beginning of 2020, several surveys have investigated the use of AI/ML algorithms in NS. Shen et al. [] surveyed AI-assisted RAN slicing and its challenges. Special attention was given to the implementation of NS in the IoT environment. Khan et al. [] highlighted recent advances, provided a taxonomy, and discussed the requirements and challenges of NS for IoT applications. The authors also suggested the use of federated learning (FL) for future NS; however, no further details were given. Wu et al. [] focused on the application of NS in smart applications, mainly smart transportation, smart energy, and smart factories. The authors also mentioned the benefits of intelligent NS but did not delve into AI/ML-based NS. Ssengonzi et al. [] emphasized the application of Deep Reinforcement Learning (DRL) for NS in 5G and beyond networks. Dangi et al. [] highlighted security aspects of ML-based NS in 5G networks during the network slice life-cycle. Donatti et al. [] surveyed the different ML techniques that can be applied in the different phases of the slice life-cycle; still, the authors focused only on specific tasks of these phases. Phyu et al. [] also discussed the application of ML approaches to specific NS tasks, i.e., traffic forecasting, admission control, and resource allocation. Azimi et al. [] surveyed works that employed ML techniques for resource management in RAN slicing. Hamdi et al. [] focused on ML techniques and NS for the Internet of Vehicles (IoV). Ebrahimi et al. [] studied NS resource management in cross-domain and End-to-End (E2E) contexts, while Novanana et al. [] focused on E2E 5G slicing and diversity strategies to increase network performance. Finally, Sun et al. [] reviewed the current and future role of Explainable AI (XAI) in 6G communications, focusing on its application in network slicing. Their work categorizes XAI methods and highlights their impact on transparency and reliability in critical scenarios, such as vehicular networks.

Table 1 summarizes the aforementioned works by year of publication in ascending order. In this survey, we also focus on the application of AI/ML techniques in the different phases of the slice life-cycle. However, we go one step further, delving deeper into the specific tasks of each phase of the life-cycle by presenting and discussing the ML techniques applied to these tasks, as outlined in ref. [], and by proposing a taxonomy based on different slicing criteria.

Table 1.

Summary of related surveys.

3. Network Slicing Basics

The concept of the network slice was introduced in 2015 by the Next Generation Mobile Networks (NGMN) Alliance to accommodate the diverse service requirements of 5G use cases over a common network infrastructure. According to the 3GPP, a network slice is defined as “a logical network that provides specific network capabilities and network characteristics, supporting various service properties for network slice customers” []. Moreover, according to Afolabi et al. [], NS should adhere to the following seven principles:

- Automation: Enables on-demand slice setup without manual effort, specifying Service Level Agreements (SLAs) and timing via signaling.

- Isolation: Ensures that slices sharing the same physical infrastructure do not interfere with one another, guaranteeing performance, security, and privacy for each tenant.

- Customization: Tailors resources to tenant needs with programmable policies and value-added services.

- Elasticity: Adjusts resources dynamically to maintain SLAs under changing conditions.

- Programmability: Open Application Programming Interfaces (APIs) allow third parties to manage slice resources flexibly.

- End-to-end: Ensures seamless service delivery across domains and technologies.

- Hierarchical abstraction: Allows recursive resource sharing, enabling layered services.

Thus, the benefits of NS are numerous and can be summarized as follows [,]:

- By multiplexing virtual networks, NS can support multi-tenancy, leading to reduced expenditure in both network deployment and operation.

- It can achieve service differentiation and ensure the fulfillment of SLAs for each type of service.

- The on-demand creation and adjustment of slices, along with their termination when no longer needed, can increase flexibility and adaptability in network management.

3.1. Network Slicing Architectures

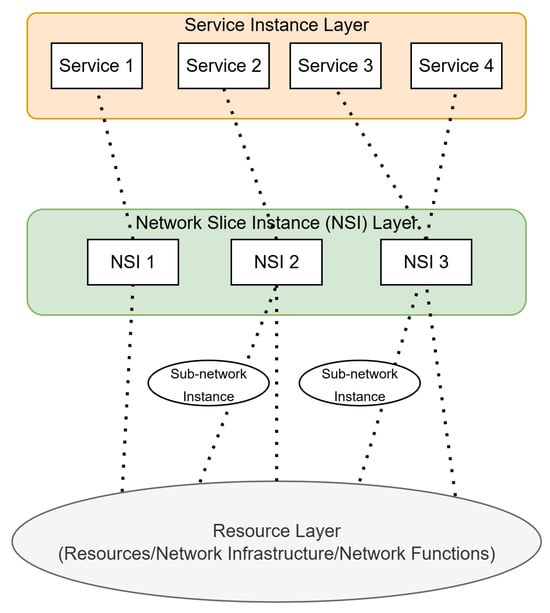

Several SDOs and industry bodies, such as the 3GPP and the NGMN Alliance, have focused on the development of NS-oriented architectures [,]. The NGMN slicing architecture, as depicted in Figure 2, consists of the following layers [,]: (1) resource (infrastructure) layer; (2) network slice instance layer; and (3) application (service instance) layer. The resource layer provides all the virtual or physical resources to the network slice instance layer, which furnishes the network characteristics required by a service instance. Finally, the application layer comprises all services (end-user or business services). A detailed overview of the NS architectures of SDOs and related NS projects can be found in ref. [].

Figure 2.

The NGMN architecture.

3.2. Types of Slicing

NS can be classified according to different criteria, as summarized in Table 2. Firstly, based on the resource allocation method (slice elasticity) used, NS can be distinguished into the following categories [,]:

Table 2.

Types of network slicing.

- Static Slicing, where each Virtual Network (VN) gets a fixed portion of physical resources for its entire service life. The main advantage of this approach is its simplicity, as no continuous control signaling or coordination is required. However, since static slicing lacks the flexibility to adapt to varying traffic loads, it often leads to inefficient resource utilization. Moreover, determining the optimal fixed allocation among slices is a very challenging task.

- Dynamic Slicing, where operators are able to dynamically design, deploy, customize, and optimize the slices according to the service requirements or network conditions. This approach enhances flexibility, resource efficiency, and responsiveness, since it allows slices to be deployed or expanded whenever needed. Moreover, predictive or adaptive mechanisms can further optimize allocation by anticipating demand variations. However, dynamic slicing increases system complexity and requires accurate traffic prediction and orchestration.

- Semi-Static or Semi-Dynamic Slicing, where part of the resources is allocated statically, and can therefore be guaranteed, while the remaining part is allocated dynamically. This hybrid model balances stability and adaptability; however, it cannot guarantee efficient resource utilization, and it still requires partial dynamic control mechanisms.
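To make the contrast between the three allocation methods concrete, the following Python sketch splits a hypothetical pool of 100 resource units among three slices. The function names, the integer weights, the demand figures, and the proportional-sharing rule used for the dynamic part are illustrative assumptions, not a scheme taken from the cited works.

```python
from typing import Dict

def static_allocation(total: int, weights: Dict[str, int]) -> Dict[str, int]:
    """Static slicing: a fixed split decided once, regardless of demand."""
    weight_sum = sum(weights.values())
    return {s: total * w // weight_sum for s, w in weights.items()}

def dynamic_allocation(total: int, demand: Dict[str, int]) -> Dict[str, int]:
    """Dynamic slicing: resources follow the current demand of each slice."""
    total_demand = sum(demand.values())
    if total_demand <= total:
        return dict(demand)  # enough capacity: every slice gets what it asks for
    # overload: share the capacity proportionally to demand (rounded down)
    return {s: total * d // total_demand for s, d in demand.items()}

def semi_static_allocation(total: int, guaranteed: Dict[str, int],
                           demand: Dict[str, int]) -> Dict[str, int]:
    """Semi-static slicing: a guaranteed static part plus a dynamic share."""
    pool = total - sum(guaranteed.values())
    if pool < 0:
        raise ValueError("guaranteed shares exceed the available resources")
    # only demand above the guaranteed part competes for the shared pool
    extra_demand = {s: max(0, demand[s] - guaranteed[s]) for s in demand}
    extra = dynamic_allocation(pool, extra_demand)
    return {s: guaranteed[s] + extra[s] for s in demand}

demand = {"eMBB": 80, "URLLC": 10, "mMTC": 30}
print(static_allocation(100, {"eMBB": 5, "URLLC": 3, "mMTC": 2}))
# → {'eMBB': 50, 'URLLC': 30, 'mMTC': 20}
print(dynamic_allocation(100, demand))
# → {'eMBB': 66, 'URLLC': 8, 'mMTC': 25}
print(semi_static_allocation(100, {"eMBB": 20, "URLLC": 20, "mMTC": 10}, demand))
# → {'eMBB': 57, 'URLLC': 20, 'mMTC': 22}
```

In this toy setting, the static split leaves URLLC with 20 idle units while eMBB and mMTC are under-served, the dynamic split follows demand, and the semi-static split keeps the 20 guaranteed units for URLLC even though only 10 are requested, which illustrates the utilization trade-off noted above.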

In addition, depending on the use case application, NS can be applied as shown below []:

- Vertically, where each slice is customized to meet the specific requirements of different vertical industries or applications (e.g., automotive, smart grid, healthcare). In this context, vertical industries collaborate with the core network to address diverse QoS and Quality of Experience (QoE) demands across different use cases []. This approach ensures strong performance isolation; however, it comes at the cost of increased orchestration complexity.

- Horizontally, where resources are distributed evenly across different slices to meet the diverse needs of various users or services within the network. This approach promotes fair resource sharing and scalability; however, it cannot guarantee QoS differentiation or latency assurance.

Based on the ownership, NS can also be classified into the following categories []:

- Local 5G Operator (L5GO) Slicing, which allows the local 5G operator to create and manage network slices that meet the specific requirements of various use cases, such as hospitals, universities, and industrial environments. This approach supports localized control and enhances performance isolation, but in many countries, it may face limitations due to spectrum availability and regulatory constraints.

- Mobile Network Operator (MNO) Slicing, in which the MNO manages the entire slice life-cycle. This allows the MNO to achieve centralized control and efficient resource allocation; however, it comes at the cost of increased capital and operational expenditures and greater complexity in orchestration, tenant isolation, and SLA assurance.

Finally, according to the network domain wherein slicing is applied, NS can be classified into the following categories:

- Radio Access Network (RAN) Slicing, which focuses on virtualizing radio resources, such as spectrum and scheduling, and other RAN components, including base stations and antennas. It enables flexible sharing of radio resources among slices, but it faces challenges in real-time resource allocation, interference management, and coordination between base stations.

- Core Slicing, which involves partitioning the CN elements and functions, such as routers, gateways, and servers, to create virtualized network slices. It enables customized service paths and the independent operation of each slice, but it faces issues regarding scaling, security, and keeping slices properly isolated.

- Transport slicing, which encompasses partitioning the network transport infrastructure, including optical fibers, switches, and routers, into separate virtual slices. It enables differentiated and personalized 5G services tailored to specific applications and user needs. However, integration with legacy networks and coordination across multiple transport layers remain significant challenges.

- E2E Slicing, which refers to the orchestration and coordination of network slices across the entire network, from the core to the edge, to provide seamless connectivity and service delivery to end-users. It offers stable service quality but is hard to implement, because it requires cross-domain coordination, multi-vendor interoperability, and complex service management.

3.3. Network Slicing Technology Enablers

The feasibility of implementing the NS concept relies on several technologies that enable the softwarization and virtualization of the network. This subsection overviews the key enabling technologies of NS, namely SDN, NFV, Multi-access Edge Computing (MEC), and Cloud Computing [].

3.3.1. Software-Defined Networking (SDN)

The SDN approach enables networks to orchestrate and control applications/services in a more granular and E2E manner, bringing intelligence, flexibility, and centralized control with a global view of the entire network. Moreover, SDN makes it possible to respond rapidly to changing network conditions, as well as to business-market and end-user needs. By introducing a virtualized control plane decoupled from the data plane, SDN bridges the gap between service provisioning and network management and enables intelligent management decisions across network functions. It uses standardized south-bound interfaces (SBIs) to make network control directly programmable [].

3.3.2. Network Function Virtualization (NFV)

With NFV, certain network functions (NFs) can be virtualized and run on top of commodity hardware. These virtualized NFs can be easily deployed and dynamically allocated, independently of the proprietary software and hardware of traditional vendor offerings. This allows network resources to be efficiently allocated to Virtual Network Functions (VNFs) through dynamic scaling and supports Service Function Chaining (SFC). NFV also enables optimized resource provisioning to end-users with high QoS, and it ensures VNF operational performance by enforcing latency and failure-rate thresholds [].

3.3.3. Multi-Access Edge Computing (MEC) and Cloud Computing

With MEC, data is processed near the point of generation and consumption, close to end-users. This approach provides cloud computing capabilities to application and content providers, along with an IT service environment at the mobile network’s edge. As a result, the network can deliver ultra-low latency services essential for business-critical applications while also supporting interactive user experiences in high-traffic areas. In 2012, Cisco coined the term fog computing [], specifically for IoT architectures. According to Yi et al. [], “Fog Computing is a geographically distributed computing architecture with a resource pool which consists of one or more ubiquitously connected heterogeneous devices (including edge devices) at the edge of network and not exclusively seamlessly backed by Cloud services, to collaboratively provide elastic computation, storage and communication (and many other new services and tasks) in isolated environments to a large scale of clients in proximity”. Depending on the exact location of computation, alternative variants, such as Mist Computing [], have also been proposed.

3.4. Network Slice Life-Cycle Phases

According to 3GPP TS 28.530 [], the management aspects of NS can be divided into four phases, namely Preparation, Commissioning, Operation, and Decommissioning. Every task relevant to slice design, instantiation, management, etc., belongs to one of these phases. Table 3 summarizes the tasks of each phase.

Table 3.

Tasks related to each slice life-cycle phase. Adapted from ref. [].

3.4.1. Preparation Phase

Before the creation/instantiation of a network slice, the following preparatory tasks must be carried out. Slice Design (SD) involves the design of a requirements template that should be fulfilled by the new slice. These requirements are derived from the services provided to the tenants or to the users that will be associated with the new slice. Capacity Planning (CP) involves the estimation of the network load that the new slice should be able to handle, in conjunction with the overall network traffic. Network Function Evaluation (NFE) involves the association of specific VNFs with the slice to be created and the assessment of the performance of these functions. Network Environment Preparation (NEP) involves the necessary preparations and decision making to be performed prior to the instantiation of the new slice. This may include the modification of network parameters affecting already running slices and the acceptance or rejection of a new slice request based on the current status of the network environment, i.e., admission control, one of the most important decision-making tasks in the Preparation phase.

3.4.2. Commissioning Phase

After the completion of the previous tasks, network slices should be created according to the slice requests that have been admitted in the previous phase. Moreover, in this phase, the following tasks have to be performed. The Necessary Resources Reservation (NRR) task is performed first in order to ensure that the essential amount of resources for the new slice will be available upon its creation. Then, the Slice Instance Creation (SIC) task is performed in order to create the Network Slice Instance (NSI) of the new slice. Finally, the Resources Initial Allocation and Configuration (RIAC) task is performed, during which the reserved resources are allocated to the new slice.

3.4.3. Operation Phase

Following the Commissioning phase, the slice enters the Operation phase, ready to provide service. The first task performed in this phase is the Slice Activation (SA) of the NSI, a decision-making task that checks whether the NSI is prepared to support communication services. Once the NSI is activated, the reserved resources are allocated to the network slice, enabling it to schedule these resources and provide services to the subscribed end-users.

After activation, the Monitoring (MON) and Performance Reporting (PREP) tasks are carried out continuously, as they supervise specific Key Performance Indicators (KPIs) and QoS fulfillment. Furthermore, to maintain the efficiency of the allocated resources, the Resource Capacity Planning (RCP) task calculates the slice resource usage and the performance relative to the allocated resources. This task may also take traffic predictions into account in order to estimate forthcoming performance. The outcome of this task may trigger the Slice Parameter Modification (SPM) task, which generates modification policies that may affect certain slice parameters. The SPM task may also be triggered by new network slice requirements or new supervision/reporting results. AI/ML has been proposed to enhance all of the aforementioned tasks of this phase.

Finally, the Operation phase includes the deactivation task, which is triggered by reports indicating that a specific network slice is no longer required. This task renders the NSI inactive and stops the provision of communication services to the end-users associated with that slice.

3.4.4. Decommissioning Phase

In this phase, all reserved resources that were dedicated to the specific slice are released. Meanwhile, resources that were shared among the deactivated slice and other slices are modified to remove any configuration specific to the deactivated slice.
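The ordering of the four phases can be summarized as a small state machine. The Python sketch below is a simplified, hypothetical model: the class and method names are illustrative and are not defined by 3GPP TS 28.530, the Commissioning tasks (NRR, SIC, RIAC) are collapsed into a single step, and admission control is reduced to one capacity check.

```python
from enum import Enum
from typing import Dict, List

class Phase(Enum):
    PREPARATION = 1
    COMMISSIONING = 2
    OPERATION = 3
    DECOMMISSIONING = 4

# Tasks per phase, using the abbreviations introduced in this section
TASKS: Dict[Phase, List[str]] = {
    Phase.PREPARATION: ["SD", "CP", "NFE", "NEP"],
    Phase.COMMISSIONING: ["NRR", "SIC", "RIAC"],
    Phase.OPERATION: ["SA", "MON", "PREP", "RCP", "SPM", "deactivation"],
    Phase.DECOMMISSIONING: ["resource release"],
}

class SliceInstance:
    """Hypothetical tracker of one network slice instance through its life-cycle."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.phase = Phase.PREPARATION
        self.active = False
        self.reserved_units = 0

    def admit(self, requested_units: int, free_units: int) -> bool:
        """NEP admission control; on acceptance, reserve and commission (NRR, SIC, RIAC)."""
        if self.phase is not Phase.PREPARATION:
            raise RuntimeError("admission control belongs to the Preparation phase")
        if requested_units > free_units:
            return False  # request rejected, the slice stays in Preparation
        self.reserved_units = requested_units
        self.phase = Phase.COMMISSIONING
        return True

    def activate(self) -> None:
        """SA: the commissioned NSI starts serving end-users."""
        if self.phase is not Phase.COMMISSIONING:
            raise RuntimeError("only a commissioned slice can be activated")
        self.phase = Phase.OPERATION
        self.active = True

    def deactivate(self) -> None:
        """Deactivation: stop service provision, still within the Operation phase."""
        if not self.active:
            raise RuntimeError("slice is not active")
        self.active = False

    def decommission(self) -> int:
        """Release the reserved resources and return how many units were freed."""
        if self.phase is not Phase.OPERATION or self.active:
            raise RuntimeError("deactivate the slice before decommissioning it")
        freed, self.reserved_units = self.reserved_units, 0
        self.phase = Phase.DECOMMISSIONING
        return freed

nsi = SliceInstance("urllc-1")
print(nsi.admit(50, free_units=40))  # → False
print(nsi.admit(30, free_units=40))  # → True
nsi.activate()
nsi.deactivate()
print(nsi.decommission(), nsi.phase.name)  # → 30 DECOMMISSIONING
```

Raising errors on out-of-order calls mirrors the strict phase ordering of the life-cycle; in a real management system, the SPM and monitoring tasks would run repeatedly while the slice remains in the Operation phase.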

3.5. Network Slicing Empowered with AI/ML

The complexity of creating, operating, and managing a sliced network is very high, considering that multiple network slices must coexist on top of the same infrastructure, sharing common physical resources while being logically isolated, independent, and operated by different tenants. This renders traditional human-driven network management approaches inadequate and leaves machine-driven management as the only viable option []. This is why AI, and more specifically ML, approaches have been widely proposed in the literature to empower NS.

Furthermore, the 5G System (5GS) is fully specified in 3GPP TS 23.501 [] and consists of various Network Functions (NFs) for both the user and the control plane, each responsible for implementing specific functionalities. For instance, one of the most prominent NFs is the Access and Mobility Management Function (AMF), a key control plane function of the 5G CN, which is responsible for registering User Equipment (UE) with the network, authenticating it, and authorizing its access to services. Each NF exposes appropriate service interfaces for exchanging the necessary information with other NFs or other sources. For instance, the AMF uses the Namf and N1 interfaces to exchange information with other NFs and the UE, respectively.

An NS-related NF in the 5GS is the Network Slice Selection Function (NSSF), which is responsible for selecting the appropriate slice for a UE. The AMF exchanges UE-related data with the NSSF through the N22 interface and, after processing these data, the NSSF informs the AMF of the slice that best fits the UE. This is, in brief, the slice selection process, one of the processes performed during the Operation phase of the slice life-cycle. Every process that needs to be carried out during the slice life-cycle is part of a corresponding NF, which exchanges the necessary input and output data through the corresponding interfaces.
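As a rough illustration of slice selection, the Python sketch below identifies slices by S-NSSAI values, which in 3GPP consist of an 8-bit Slice/Service Type (SST) and an optional 24-bit Slice Differentiator (SD), with standardized SST values such as 1 (eMBB), 2 (URLLC), and 3 (MIoT). The selection rule itself is a heavily simplified, hypothetical stand-in for the actual NSSF/AMF procedures: it keeps the requested slices that are both subscribed and supported in the UE's tracking area, and otherwise falls back to subscribed slices supported there. The function name `select_slices` is illustrative.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set, Tuple

@dataclass(frozen=True)
class SNSSAI:
    """Single Network Slice Selection Assistance Information."""
    sst: int                  # Slice/Service Type, 8 bits
    sd: Optional[int] = None  # optional Slice Differentiator, 24 bits

    def __post_init__(self) -> None:
        if not 0 <= self.sst <= 0xFF:
            raise ValueError("SST must fit in 8 bits")
        if self.sd is not None and not 0 <= self.sd <= 0xFFFFFF:
            raise ValueError("SD must fit in 24 bits")

def _sort_key(s: SNSSAI) -> Tuple[int, int]:
    return (s.sst, -1 if s.sd is None else s.sd)

def select_slices(requested: Iterable[SNSSAI], subscribed: Set[SNSSAI],
                  supported_in_ta: Set[SNSSAI]) -> List[SNSSAI]:
    """Hypothetical, simplified selection of the slices a UE may use."""
    allowed = [s for s in requested if s in subscribed and s in supported_in_ta]
    if allowed:
        return allowed
    # simplification: fall back to any subscribed slice available in the area
    return sorted(subscribed & supported_in_ta, key=_sort_key)

EMBB, URLLC, MIOT = SNSSAI(1), SNSSAI(2, 0x000001), SNSSAI(3)
print(select_slices([URLLC, MIOT], {EMBB, URLLC}, {EMBB, URLLC, MIOT}))
# → [SNSSAI(sst=2, sd=1)]
```

The frozen dataclass makes S-NSSAI values hashable, so they can be compared and stored in sets just as the NSSF compares requested, subscribed, and supported slices.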

ML methods have been widely proposed to tackle the challenges and solve the problems arising in the different NFs related to almost all the tasks of the first three phases of the network slice life-cycle, which were described in Section 3.4. Briefly, in the Preparation phase, ML has been proposed to perform slice feature selection and extraction, traffic and congestion prediction, VNF embedding or placement, and admission control. In the Commissioning phase, ML has been proposed for adaptive resource reservation and for both SIC and RIAC. Finally, in the Operation phase, ML has been proposed for solving decision-making and optimization problems, for various predictions, and for allocating resources. These applications are presented in detail in Section 5.

4. ML Methods

ML is a subset of AI that focuses on algorithms that learn from data, improving their performance on a task without being explicitly programmed for it. Depending on the nature of the data used for training, ML techniques can be broadly categorized into four main groups: Supervised Learning (SL), Unsupervised Learning (UL), Semi-Supervised Learning (SSL), and Reinforcement Learning (RL) [,]. However, besides these well-known categories, which are based on the nature of the feedback provided to the algorithm during learning, the literature contains many other classifications following different criteria, such as the purpose for which ML is used, the manner in which the model evolves in response to feedback [], or the approach used to exchange the processed dataset []. In this survey, we adopt the classification based on the nature of the data used for training. Furthermore, Neural Networks (NNs) cannot be confined to a single category, as they serve as fundamental tools applicable across all four categories. For this reason, NNs are positioned here within both SL and RL, with specific variants, such as Feed-Forward NNs (FFNNs), included in both categories.

It is important to note that this work focuses solely on presenting the ML methods proposed in the literature to address problems related to NS applications in 5G and Beyond 5G Networks. A detailed description of the functionality of these methods is beyond the scope of this study. Readers are encouraged to consult the respective referenced articles for more comprehensive information on the functionality of these methods and their various intricacies.

4.1. Supervised Learning

In SL techniques, labeled training datasets are used to build models. This process involves providing the algorithm with sample data pairs of inputs and desired outputs as training data. The primary objective of these techniques is to construct a function that maps the inputs to the outputs. Consequently, given new inputs, this function will be capable of estimating the corresponding unknown outputs. The output of the function can be either continuous numeric values (in the case of regression) or class labels for the input values (in the case of classification) [].

Indicative methods of this category include Support Vector Machine (SVM), Least Absolute Shrinkage and Selection Operator (LASSO), Random Forest (RF), k-Nearest Neighbor (KNN), Gradient Boosting Decision Tree (GBDT), and several different Artificial Neural Networks (ANNs) like the Multi-Layer Perceptron (MLP), Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), etc.
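The two supervised settings can be illustrated with a short, dependency-free Python sketch: an ordinary least-squares line fitted to a hypothetical per-hour traffic series (regression), and a k-Nearest Neighbor vote that assigns a service type to a new request (classification). All data values and feature choices are made up for illustration; practical models, such as those in the cited works, would use richer features and feature scaling.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def knn_classify(train: List[Tuple[Tuple[float, float], str]],
                 query: Tuple[float, float], k: int = 3) -> str:
    """Majority label among the k training points closest to the query."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Regression: hypothetical traffic load (Gbps) observed at hours 1..4
intercept, slope = fit_line([1, 2, 3, 4], [12, 14, 16, 18])
print(intercept + slope * 5)  # → 20.0, the forecast for hour 5

# Classification: hypothetical (latency budget in ms, throughput in Mbps) pairs
train = [((1, 10), "URLLC"), ((2, 20), "URLLC"), ((50, 500), "eMBB"),
         ((40, 800), "eMBB"), ((1000, 0.1), "mMTC")]
print(knn_classify(train, (3, 15)))  # → URLLC
```

In both cases, the model is built only from labeled input/output pairs and is then used to estimate the unknown output for a new input, which is the defining property of SL described above.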

4.2. Unsupervised Learning

In UL techniques, unstructured or unlabeled data are provided to the learning algorithm as input. The algorithm is tasked with identifying patterns or structures within the input data without any explicit feedback. Such techniques are mainly used for data clustering, dimensionality reduction, and density estimation [,].

Algorithms in this category include K-means, Spectral Clustering, Principal Component Analysis (PCA), Sparse Autoencoder (SAE), and Expectation Maximization (EM).
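To illustrate the clustering use of UL, the following Python sketch implements plain K-means (Lloyd's algorithm) on two-dimensional points, for example hypothetical service feature vectors to be grouped, as in the slice-design work discussed in Section 5.1. For reproducibility, it initializes the centroids with the first k points, an assumption made here for simplicity; common implementations use random or k-means++ initialization instead.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]

def kmeans(points: Sequence[Point], k: int,
           max_iter: int = 100) -> Tuple[List[int], List[Point]]:
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    centroids = [tuple(p) for p in points[:k]]  # deterministic initialization
    labels = [-1] * len(points)
    for _ in range(max_iter):
        # assignment step: each point joins its nearest centroid
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                      for p in points]
        if new_labels == labels:
            break  # assignments are stable, so the algorithm has converged
        labels = new_labels
        # update step: move each centroid to the mean of its members
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return labels, centroids

labels, cents = kmeans([(0, 0), (0, 1), (10, 10), (10, 11)], k=2)
print(labels)  # → [0, 0, 1, 1]
print(cents)   # → [(0.0, 0.5), (10.0, 10.5)]
```

No labels are supplied at any point; the grouping emerges only from the distances between the input points.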

4.3. Semi-Supervised Learning

SSL techniques combine both labeled and unlabeled data for training. Typically, a large amount of unlabeled data and a small amount of labeled data are used, mainly to build an appropriate data classification model [].

An algorithm in this category that has been applied to NS is the Variational Autoencoder (VAE) for semi-supervised learning.

4.4. Reinforcement Learning

RL techniques involve two entities: the agent (i.e., the learning algorithm) and the environment. The learning process is based on a series of interactions between them. Specifically, the agent first receives percepts containing the state of the environment (or system). The agent then performs an action that changes the environment’s state. Based on this new state, the agent receives feedback in the form of a reward or a penalty. This action-feedback cycle is repeated until the agent learns to navigate the environment effectively. The two key factors that characterize RL techniques are the transition model (which defines how the environment transitions from one state to the next) and the policy (which determines the action taken in a given state) [].
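The agent-environment loop can be sketched with tabular Q-learning on a hypothetical five-state chain, where the only reward is obtained on reaching the rightmost state. The function `step` plays the role of the transition model, and the greedy choice over the Q-table defines the learned policy; the environment, learning rate, discount factor, and exploration rate are illustrative assumptions.

```python
import random

N_STATES = 5      # hypothetical chain with states 0..4; state 4 is the terminal goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right

def step(state, action):
    """Toy transition model: deterministic move, reward 1 only on reaching the goal."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward, nxt == N_STATES - 1

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]

    def greedy(s):
        best = max(q[s])
        return rng.choice([a for a in ACTIONS if q[s][a] == best])  # random tie-breaking

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy behavior: explore with probability epsilon.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(state)
            nxt, reward, done = step(state, action)
            # Q-learning update: bootstrap from the best action in the next state.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: always move right toward the goal
```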

Formulations and algorithms in this category include the Markov Decision Process (MDP) framework, State–Action–Reward–State–Action (SARSA), Q-Learning (QL), Linear Function Approximation (LFA), the Multi-Armed Bandit (MAB), etc.

5. Phase-Related ML Applications

The exploitation of ML methods is a key enabler of the NS concept in 5G. Consequently, the majority of research works in the field of NS refer to AI/ML applications as a cornerstone for making the NS concept feasible. Some key applications of ML techniques in the context of NS include classification, prediction, clustering, and pattern recognition.

In this section, we summarize, for each phase of the slice life-cycle, the AI/ML applications that have been proposed in the literature to address challenges that arise during NS implementation.

5.1. ML in Preparation Phase

Various ML techniques can be applied to different tasks of the Preparation phase. Singh et al. [] used SVM and K-Means for slice design (SD). More specifically, the authors, in their Network Sub-Slicing Framework, applied SVM for feature selection and K-Means for grouping similar services, for use cases such as IoT. Experimental results showed improved performance in terms of latency and energy efficiency.

In addition to slice design, ML methods can be incorporated during the Network Environment Preparation (NEP) task. To this end, Bega et al. [] analyzed 5G infrastructure markets from the perspective of a mobile network operator, focusing on how the operator should manage network slice requests to maximize revenue while ensuring that existing slice guarantees are upheld. They modeled this challenge as a Semi-Markov Decision Process (SMDP) and proposed a QL algorithm for slice admission control. Their evaluation demonstrates that the QL algorithm performs nearly as well as the optimal Value Iteration policy in terms of revenue and gain, and it significantly outperforms the optimal policy in perturbed scenarios due to its adaptive nature. Moreover, in ref. [], to deal with scalability issues, the authors extended their previous work by introducing the Network-slicing Neural Network Admission Control (N3AC) algorithm, a DRL algorithm that uses two FFNNs to calculate rewards for accepting or rejecting requests. Its goal is to maximize the number of accepted slice requests while maintaining QoS for existing slices. Simulation results demonstrated that N3AC achieves performance close to the optimal policy. Bakri et al. [] focused on maximizing the Infrastructure Providers’ (InPs) revenue while minimizing the SLA violation penalties. Specifically, the authors evaluated the QL, the Deep QL (DQL), and the Regret Matching (RM) algorithms’ ability to identify optimal admission policies and assessed their performance in both offline and online scenarios, which is crucial for practical deployment. The results indicated that QL and DQL perform better when trained offline before online deployment, whereas RM outperforms both QL and DQL in real-time online usage. Raza et al. 
[] proposed a slice admission strategy that increases InPs’ revenue by accepting as many slice requests as possible, while minimizing SLA violation penalties by rejecting requests that could degrade other services. To achieve this goal, they use an RL approach based on an ANN. Performance evaluation against non-ML approaches, including static and threshold-based heuristics, showed that their RL-driven admission policy outperforms the other approaches in terms of overall loss, which includes both the potential revenue lost from rejected services and the losses from service degradation when resource slices cannot be scaled up as needed. Business profit is also the focus in ref. []. Specifically, the authors focused on the Admission Control for Service Federation (ACSF) problem, where the admission controller either selects the domain in which a service is deployed or rejects the service, so as to maximize long-term profit while accounting for federation costs. To deal with this problem, the authors applied a specific type of RL, called average reward learning, along with QL. The results showed that the proposed method outperforms QL, which struggles due to its reliance on the reward discount factor. Sciancalepore et al. [] introduced Online Network Slicing (ONETS), an online slice broker that approves or denies slice requests by analyzing past data and using resource overbooking to maximize revenue and minimize SLA violations. They modeled the problem as a Budgeted Lock-up Multi-Armed Bandit (BLMAB) and enhanced the Upper Confidence Bound (UCB) approach to reduce complexity. Evaluation showed that ONETS outperforms non-ML-based solutions in system utilization, multiplexing gain, and SLA violation reduction. Rezazadeh et al. [,] worked on the joint slice admission control and resource allocation problem, which lies in both the Preparation and Commissioning phases; thus, their work is presented in Section 5.2.
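ONETS builds on the UCB approach to the multi-armed bandit problem. The sketch below shows only the classical UCB1 rule on a hypothetical Bernoulli bandit, not the budgeted lock-up variant (BLMAB) or the complexity-reducing enhancements of ONETS; the arms and their success probabilities are assumptions for illustration.

```python
import math
import random

def ucb1(success_probs, rounds=2000, seed=1):
    """Classical UCB1 on a Bernoulli bandit; returns how often each arm was played."""
    rng = random.Random(seed)
    n_arms = len(success_probs)
    counts = [0] * n_arms
    means = [0.0] * n_arms
    for t in range(1, rounds + 1):
        if t <= n_arms:
            arm = t - 1  # play every arm once to initialize its estimate
        else:
            # Optimism under uncertainty: empirical mean plus a confidence bonus.
            arm = max(range(n_arms),
                      key=lambda a: means[a] + math.sqrt(2 * math.log(t) / counts[a]))
        reward = 1.0 if rng.random() < success_probs[arm] else 0.0
        counts[arm] += 1
        means[arm] += (reward - means[arm]) / counts[arm]  # incremental mean update
    return counts

# Hypothetical arms, e.g., three admission/overbooking options with unknown success rates.
counts = ucb1([0.3, 0.5, 0.8])
print(counts)
```

Because the confidence bonus shrinks as an arm is played more often, the best arm (index 2 here) ends up being played far more often than the others.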

Beyond these studies, several works in the literature apply ML to both the NEP and Network Function Evaluation (NFE) tasks. Guan et al. [] presented a hierarchical resource management framework for customized slicing where multiple InPs manage multi-tenant E2E slices. The authors introduced a global resource manager that, within its functional plane, deploys a Service Broker responsible for the admission control of real-time slice requests from multiple tenants using the DQL algorithm. The framework aims to optimize admission control decisions and maximize the average reward by reserving resources for requests that generate higher revenue for the InP. Evaluation results demonstrated that the proposed intelligent framework achieves better service quality satisfaction compared to non-intelligent approaches. Sulaiman et al. [] proposed a multi-agent DRL solution to address NS and admission control in 5G Cloud-RAN (CRAN), aiming to improve long-term InP revenue. Their multi-agent DRL approach uses separate reward functions for each agent based on admission control and slicing policies, which allows both agents to work synergistically without interference. The evaluation results showed that the proposed solution outperforms greedy and single-agent DRL approaches in terms of InP revenue and average available bandwidth. Yan et al. [] tackled the automatic Virtual Network Embedding (VNE) problem, where Virtual Network Requests (VNRs) must be mapped onto substrate network resources. Each VNR requires specific resources; if these are available, they are allocated; otherwise, the VNE algorithm may reject or postpone the request.
The authors proposed a DRL-based VNE scheme that uses a Graph Convolutional Network (GCN) for feature extraction and Asynchronous Advantage Actor–Critic (A3C) for parallel policy gradient training, optimizing the VNE policy while accounting for request acceptance, long-term revenue, load balance, and policy exploration. Simulations showed that the proposed A3C-GCN scheme significantly improves the acceptance ratio and average revenue compared to other state-of-the-art methods. Rkhami et al. [] also addressed the VNE problem in core slices using DRL. They proposed a two-step approach: first, applying a heuristic to find a sub-optimal solution, then optimizing it with DRL. In their model, called Improving the Quality of VNE Heuristics (IQH), the system states are represented as heterogeneous graphs and encoded with a Relational GCN (RGCN), and action probabilities are calculated with an MLP. The goal is to improve the revenue-to-cost metric. Simulations showed significant improvements when IQH was applied on top of the First-Fit and Best-Fit heuristics. Esteves et al. [] proposed a Heuristically Assisted DRL (HA-DRL) approach for the Network Slice Placement (NSP) problem, using a GCN for feature extraction and A3C for solving the NSP. HA-DRL aims to minimize resource consumption, maximize slice acceptance, and balance node load. It incorporates a modified Actor Network with a heuristic layer based on the Power of Two Choices (P2C) algorithm. HA-DRL was compared against pure DRL and P2C, showing faster convergence, near-real-time placement, and better acceptance ratios. Kibalya et al. [] tackled the slice deployment challenge across multi-provider infrastructures, focusing on the candidate search problem. In response, they proposed the Candidate Search Algorithm (CaSA), which filters feasible InPs based on network topology and slice constraints. A DRL agent based on a neural network then selects the optimal InP set, maximizing the revenue-to-cost ratio for slice deployment.
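Several of the works above use a GCN to extract features from the substrate network graph. A minimal sketch of a single GCN layer with the widely used symmetric-normalization propagation rule, ReLU(D^-1/2 (A + I) D^-1/2 X W), is given below in plain Python. The three-node topology, the free-CPU node feature, and the identity weight matrix are hypothetical, and the architectures in the cited works are more elaborate.

```python
import math

def gcn_layer(adj, features, weights):
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 X W) with plain nested lists."""
    n = len(adj)
    # Add self-loops so that each node keeps part of its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric normalization by the degrees of both endpoints.
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # Neighborhood aggregation: (normalized adjacency) x (node feature matrix).
    n_feat = len(features[0])
    agg = [[sum(norm[i][k] * features[k][f] for k in range(n)) for f in range(n_feat)]
           for i in range(n)]
    # Linear transform with the weight matrix, followed by ReLU.
    out_dim = len(weights[0])
    return [[max(0.0, sum(agg[i][f] * weights[f][o] for f in range(n_feat)))
             for o in range(out_dim)] for i in range(n)]

# Hypothetical substrate path 0 - 1 - 2 with one feature per node (free CPU cores).
adjacency = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
free_cpu = [[4.0], [2.0], [8.0]]
embeddings = gcn_layer(adjacency, free_cpu, weights=[[1.0]])
print([round(row[0], 3) for row in embeddings])  # [2.816, 5.566, 4.816]
```

Each node embedding now mixes its own free capacity with that of its neighbors, which is the kind of topology-aware input a DRL agent can use when choosing where to embed a request.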

Another task in which ML can be incorporated in the Preparation phase is Capacity Planning (CP). Aboeleneen et al. [] proposed the Error-aware, Cost-effective and Proactive NS Framework (ECP) for CP, predicting traffic levels and proactively creating slices for increased demand. ECP operates in two phases: the first uses prediction models like the RF, the AutoRegressive Integrated Moving Average (ARIMA), and the Long Short Term Memory (LSTM) to forecast future load, while the second uses a DRL method with Proximal Policy Optimization (PPO) to create cost-effective slices. Simulations showed that LSTM provides the highest accuracy, and ECP allocates lower-cost slices efficiently. ECP’s first phase aligns with the Preparation phase, and the second phase aligns with the Commissioning phase. Dandachi et al. [] proposed a Cross-slice Admission and Congestion Control algorithm, dealing with both NEP and CP tasks. Their solution combines admission and congestion controllers using the SARSA algorithm with LFA in order to optimize resource utilization and system performance by reducing the rejection of best-effort slice requests and increasing the acceptance rate of guaranteed QoS slices.
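The core of SARSA with LFA is a single temporal-difference update of a weight vector, where Q(s, a) is approximated as the dot product of the weights and a feature vector. The sketch below shows that update; the feature design (a bias term, the fraction of capacity in use, and an accept/reject indicator) and the hyperparameter values are hypothetical, not those used by Dandachi et al.

```python
def sarsa_lfa_update(w, phi_sa, reward, phi_next_sa, alpha=0.1, gamma=0.9, terminal=False):
    """One SARSA update with linear function approximation, Q(s, a) ~= w . phi(s, a).

    Unlike Q-learning, the target uses phi(s', a') for the action a' actually chosen
    next (on-policy), not the greedy maximum over actions.
    """
    q_sa = sum(wi * fi for wi, fi in zip(w, phi_sa))
    q_next = 0.0 if terminal else sum(wi * fi for wi, fi in zip(w, phi_next_sa))
    td_error = reward + gamma * q_next - q_sa
    # The gradient of w . phi with respect to w is phi, so the step is along phi(s, a).
    return [wi + alpha * td_error * fi for wi, fi in zip(w, phi_sa)]

# Hypothetical features: [bias, fraction of capacity in use, 1 if the request is accepted].
w = sarsa_lfa_update([0.0, 0.0, 0.0], [1.0, 0.5, 1.0], 1.0, [1.0, 0.7, 0.0])
w2 = sarsa_lfa_update(w, [1.0, 0.7, 0.0], 0.0, [1.0, 0.7, 1.0])
print(w, w2)
```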

Finally, in the Preparation phase, according to ref. [], another task in which ML can be applied is the prediction of service demand. Techniques like RNNs can analyze historical data to make accurate predictions of service demand for a slice, which can then inform decision making during the Commissioning phase.

Table 4 summarizes, for each of the works referred to in the Preparation phase, the related tasks, the allocation, the use case and network domain types, the ML category, the proposed method, and whether that method was tested.

Table 4.

Summary of ML-based NS in the Preparation phase (SD: slice design, NFE: Network Function Evaluation, NEP: Network Environment Preparation, CP: Capacity Planning).

5.2. ML in Commissioning Phase

The main tasks to be carried out during the Commissioning phase involve slice creation, including resource reservation and orchestration, service grouping, and resource allocation. Once a slice request is accepted and preparation for its creation begins, the necessary resources must first be reserved to ensure that they are available for allocation to the new slice later.

According to the network services that each slice should provide, the appropriate VNFs should be assigned to it. For this placement, AI/ML methods, like Deep Learning (DL), can be applied [] to ensure a dynamic approach that adapts to time-varying service demands while meeting service delay requirements. Additionally, the appropriate resources must be reserved for each slice, prior to its creation, based on the service demands that each slice is expected to handle. Since these demands are influenced by time-varying data traffic loads, resource reservation should be adaptive to these variations. To address this, RL methods, such as Deep Deterministic Policy Gradient (DDPG), can be employed for efficient resource management.

Extending the aforementioned VNF placement task, the authors of ref. [] state that the placement of VNFs on slices needs to be carried out autonomously, as this is a key aspect of Zero-touch network and Service Management (ZSM) in 5G and beyond networks. Accordingly, they propose SCHEMA, a ZSM scheme that emphasizes the scalability of multi-domain networks and the minimization of service latency. The complex multi-domain slice service placement problem is modeled as a Distributed MDP and solved with a Distributed RL approach that places an RL agent in each domain, so that each agent orchestrates its domain’s VNFs independently of the agents in other domains. The evaluation showed that the proposed scheme significantly reduces the average service latency compared with a centralized RL solution.

The last task of the Commissioning phase, which marks the creation of the network slice, is the initial allocation of network resources to the new slice. The resources to be allocated vary and include communication (radio), caching, and computing resources, and AI/ML methods can be applied in this allocation process [,]. The initial allocation takes place in this phase to enable the slice, even though the amount of allocated resources may change according to the needs of the slice in the next phase.

In ref. [], Zhang et al. addressed the problem of vehicular multi-slice optimization, focusing on slice allocation strategies. Specifically, their proposal aims to calculate the priority queue of slice requests waiting to be deployed on the RAN, taking into account the system status, available spectrum resources, and the deployment delay constraints of each request. The optimization objective is to select a priority queue that minimizes average latency while maximizing overall service utility. To solve this optimization problem, the authors employed QL and developed an algorithm called the Intelligent Vehicular Multi-slice Optimization (IVMO) algorithm. To demonstrate the effectiveness of their approach, they conducted simulations and compared IVMO’s performance, measured in terms of the number of requests in the queue and service utility, against two non-ML-based methods (fair allocation and a greedy algorithm). According to the simulation results, the proposed IVMO algorithm achieves better performance on both metrics.

In ref. [], Quang et al. worked on the VNF-Forwarding Graph (VNF-FG) embedding problem, which involves deploying service requests on the CN by allocating resources to meet the service requirements in terms of QoS, while adhering to the constraints of the underlying infrastructure. Each VNF-FG request consists of VNFs connected by Virtual Links (VLs). VNFs require specific computing resources, such as CPU, RAM, and storage, while VLs are characterized by network-oriented metrics, e.g., bandwidth, latency, and packet loss rate. The authors formulated the VNF-FG allocation problem as an MDP with appropriate states and actions, aiming to maximize the number of accepted VNF-FGs. A VNF-FG is considered accepted only if all its VNFs and VLs are allocated and the QoS requirements are satisfied. To address this complex problem, the authors proposed an enhanced DDPG algorithm called Enhanced Exploration DDPG (E2D2PG). E2D2PG incorporates the concepts of “replay memory” and the Heuristic Fitting Algorithm (HFA) to determine the embedding strategy. To evaluate the performance of their proposed algorithm, the authors conducted simulations comparing E2D2PG with the standard DDPG. The results demonstrated that E2D2PG outperforms DDPG in terms of acceptance ratio and the percentage of deployed VLs.
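The acceptance condition for a VNF-FG (all VNFs and VLs allocated, QoS satisfied) can be expressed as a feasibility check. The sketch below assumes that a mapping of VNFs to substrate nodes and of VLs to substrate paths is already given, and checks only CPU, RAM, bandwidth, and latency (packet loss is omitted). The data classes, the function name `is_accepted`, and the example topology are hypothetical; in E2D2PG, the mapping itself is produced by the DRL agent together with the HFA, which this sketch does not model.

```python
from dataclasses import dataclass

@dataclass
class Vnf:
    name: str
    cpu: float  # required CPU cores
    ram: float  # required RAM in GB

@dataclass
class VirtualLink:
    src: str            # name of the source VNF
    dst: str            # name of the destination VNF
    bandwidth: float    # required bandwidth in Mbps
    max_latency: float  # end-to-end latency bound in ms (QoS requirement)

def is_accepted(vnfs, links, node_of, node_cap, path_of, link_cap, link_latency):
    """Return True only if every VNF and every VL of the forwarding graph can be embedded.

    node_of: VNF name -> substrate node; node_cap: node -> {"cpu": ..., "ram": ...}
    path_of: (src VNF, dst VNF) -> list of substrate links; link_cap/link_latency: link -> value
    Usage is accumulated, so VNFs sharing a node (or VLs sharing a link) share its capacity.
    """
    used_cpu, used_ram = {}, {}
    for v in vnfs:
        n = node_of[v.name]
        used_cpu[n] = used_cpu.get(n, 0.0) + v.cpu
        used_ram[n] = used_ram.get(n, 0.0) + v.ram
        if used_cpu[n] > node_cap[n]["cpu"] or used_ram[n] > node_cap[n]["ram"]:
            return False  # node capacity exceeded
    used_bw = {}
    for vl in links:
        path = path_of[(vl.src, vl.dst)]
        if sum(link_latency[e] for e in path) > vl.max_latency:
            return False  # latency requirement violated
        for e in path:
            used_bw[e] = used_bw.get(e, 0.0) + vl.bandwidth
            if used_bw[e] > link_cap[e]:
                return False  # link bandwidth exceeded
    return True

# Hypothetical two-VNF chain: firewall on node A, NAT on node B, one VL over link A-B.
vnfs = [Vnf("fw", cpu=2, ram=4), Vnf("nat", cpu=1, ram=2)]
links = [VirtualLink("fw", "nat", bandwidth=100, max_latency=5)]
node_cap = {"A": {"cpu": 4, "ram": 8}, "B": {"cpu": 2, "ram": 4}}
args = ({"fw": "A", "nat": "B"}, node_cap, {("fw", "nat"): [("A", "B")]},
        {("A", "B"): 1000}, {("A", "B"): 2})
print(is_accepted(vnfs, links, *args))  # True
```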

In ref. [], Zhao and Li proposed an RL-based approach for the cooperative mapping of slice requests to physical nodes and links. Their proposed algorithm, named RLCO, aims to deploy network slice requests to the CN by determining the optimal slicing policy. This policy cooperatively maps requests to physical nodes and links, considering the real-time resource status of the substrate network while maximizing the request acceptance rate and the earning-to-cost ratio. In the proposed mapping scheme, RLCO handles node mapping, Dijkstra’s algorithm is used for link mapping, and an RL-based iterative process is applied to identify the mapping strategy that maximizes a reward function. This reward function accounts for the cross-impact among benefits, costs, nodes, and links.
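In RLCO, link mapping relies on Dijkstra’s algorithm. The sketch below shows a standard heap-based Dijkstra and a hypothetical `map_virtual_link` helper that, as an assumption for illustration, first prunes substrate links whose residual bandwidth is below the virtual link’s demand and then uses a unit cost per hop; the cost model in RLCO may differ.

```python
import heapq

def dijkstra(graph, source, target):
    """Shortest path on a weighted graph; graph maps node -> {neighbor: cost}.

    Returns (cost, path), or (inf, []) if the target is unreachable.
    """
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in visited:
            continue  # stale heap entry
        visited.add(u)
        if u == target:
            break
        for v, w in graph.get(u, {}).items():
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if target not in dist:
        return float("inf"), []
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]

def map_virtual_link(residual_bw, src, dst, demand):
    """Hypothetical link mapping: keep links with enough residual bandwidth, cost 1 per hop."""
    pruned = {u: {v: 1.0 for v, bw in nbrs.items() if bw >= demand}
              for u, nbrs in residual_bw.items()}
    return dijkstra(pruned, src, dst)

residual_bw = {  # hypothetical residual bandwidth (Mbps) of each substrate link, both directions
    "A": {"B": 50, "C": 200},
    "B": {"A": 50, "D": 200},
    "C": {"A": 200, "D": 200},
    "D": {"B": 200, "C": 200},
}
print(map_virtual_link(residual_bw, "A", "D", demand=100))  # (2.0, ['A', 'C', 'D'])
```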

There also exist approaches that tackle problems spanning both the Preparation and Commissioning phases. For instance, the ECP NS framework proposed in ref. [], already presented in Section 5.1, has two phases, the first of which belongs to the Preparation phase and the second to the Commissioning phase. During the second phase, a PPO method is used to create cost-effective slices based on the load forecast derived in the first phase. Another example is presented in ref. [], where the authors focused on a joint slice admission control and resource allocation problem in a CRAN. They first formulated this problem as an MDP and then applied Twin Delayed DDPG (TD3), an advanced DRL method for continuous action spaces, to solve it. The TD3 algorithm, based on the actor–critic model, aims to optimize policies over time, enabling the Central Unit to autonomously reconfigure computing resource allocations across slices. This approach minimizes latency, energy consumption, and the instantiation overhead of VNFs for each slice.

In their later work [], the authors tackled the same joint problem in a B5G RAN environment. In particular, they proposed an NS resource allocation algorithm based on a lifelong zero-touch framework. This algorithm, termed prioritized twin delayed distributional DDPG (D-TD3), differs from TD3 in that it employs distributional return learning instead of directly estimating the Q-value; here, the Q-value is represented as a distribution function of state–action returns. Additionally, the authors incorporated a replay buffer, allowing D-TD3 agents to memorize and reuse past experiences. The proposed algorithm’s performance was assessed in terms of admission rate, latency, CPU utilization, and energy consumption against other state-of-the-art DRL approaches, such as TD3, DDPG, and Soft Actor–Critic (SAC). The results demonstrated that D-TD3 outperforms these methods on all evaluated metrics.
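TD3, described above and extended by D-TD3, computes its critic target with clipped double-Q learning and target policy smoothing. The sketch below computes that target for a scalar action, with the target actor and the two target critics passed in as plain functions. The function names, the hyperparameter values, and the toy actor and critics are assumptions; TD3’s third ingredient, delayed actor updates, is not shown, and D-TD3’s distributional critics are not modeled.

```python
import random

def td3_target(reward, next_state, done, actor_target, critic1_target, critic2_target,
               gamma=0.99, noise_std=0.2, noise_clip=0.5,
               action_low=-1.0, action_high=1.0, rng=None):
    """Clipped double-Q target used by TD3, for a scalar continuous action."""
    rng = rng if rng is not None else random.Random()
    # Target policy smoothing: add clipped Gaussian noise to the target actor's action.
    noise = max(-noise_clip, min(noise_clip, rng.gauss(0.0, noise_std)))
    next_action = max(action_low, min(action_high, actor_target(next_state) + noise))
    # Clipped double-Q: use the smaller of the two target critics to curb overestimation.
    q_next = min(critic1_target(next_state, next_action),
                 critic2_target(next_state, next_action))
    return reward + gamma * (0.0 if done else q_next)

# Hypothetical target networks, reduced to simple functions for illustration.
def actor(state):
    return 0.5

def critic1(state, action):
    return 10.0 + action

def critic2(state, action):
    return 8.0 + action

y = td3_target(1.0, None, False, actor, critic1, critic2, gamma=0.9, noise_std=0.0)
print(round(y, 4))  # 8.65
```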

Table 5 summarizes, for each of the works referred to in the Commissioning phase, the related tasks, the allocation, the use case and network domain types, the ML category, the proposed method, and whether that method was tested.

Table 5.

Summary of ML-based NS in the Commissioning Phase (SIC: Slice Instance Creation, RIAC: Resources’ Initial Allocation and configuration, NRR: Necessary Resources Reservation).

5.3. ML in Operation Phase

The Operation phase has attracted the most attention from the research community for applying ML methods to its related tasks. This is because the tasks in this phase have the greatest impact on the performance and effectiveness of NS and require complex real-time computations. For instance, ultra-low latency (≤1 ms for URLLC applications such as autonomous driving or industrial automation), high reliability (≥99.999% availability for mission-critical services), massive connectivity (up to 1 million devices per square kilometer in mMTC scenarios such as smart cities or sensor networks), and guaranteed high throughput (multi-Gbps rates for eMBB services, such as AR/VR and UHD video streaming) must all be achieved. These requirements are highly dynamic and context-dependent, making real-time adaptation essential.
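To put the reliability figure into perspective, the short calculation below converts an availability target into the maximum downtime it allows per year (ignoring leap years). At 99.999% availability, a service may be unavailable for only about 5.26 minutes per year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years

def max_downtime_minutes(availability):
    """Maximum yearly downtime allowed by an availability target given as a fraction."""
    return (1.0 - availability) * MINUTES_PER_YEAR

for a in (0.999, 0.9999, 0.99999):
    print(f"{a:.3%} availability -> {max_downtime_minutes(a):.2f} min/year")
```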

Because of the large volume of research in this phase, we group the works into broader task categories rather than by the individual tasks listed in Table 3, so that each group encompasses several of the tasks mentioned there. The resulting groups are as follows:

- Decision Making–Optimization–Classification

- Monitoring–Prediction

- Resource Allocation

5.3.1. Decision Making–Optimization–Classification

This group involves works that deal with problems related to the tasks of slice activation (SA), slice parameter modification (SPM), Performance Reporting (PREP) and Resource Capacity Planning (RCP).

In SA, the decision on whether a slice instance should be activated is made. For example, Phyu et al. [] proposed a MAB-based slice activation/deactivation approach that considers user QoS and network energy consumption. Specifically, the authors formulated the slice activation/deactivation problem as an MDP and then used the MAB approach to identify a near-optimal activation/deactivation decision while ensuring that the QoS requirements of individual users are maintained. Furthermore, the authors extended their previous work in ref. [] by considering the joint slice activation/deactivation and user association problem. The proposed multi-agent, fully cooperative, decentralized framework (ICE-CREAM) uses a Decentralized Partially Observable MDP (Dec-POMDP) to formulate the problem and a multi-agent partially observable state-aware MAB (POMAB) to find a near-optimal solution.

Slice selection is another type of decision-making task performed in this phase. In ref. [], the authors proposed an ML model based on a CNN to determine the most suitable network slice for a device to associate with. Their model, DeepSlice, first predicts the traffic load for each slice (as discussed in the next subsection). Then, based on the requested service parameters, it employs a DLNN to make the slice selection decision. In ref. [], Xi et al. considered the resource slicing concept to maximize Mobile Virtual Network Operators’ (MVNOs) benefits from the shared RAN infrastructure. They proposed a mechanism for assigning users to slices, aiming to optimize the MVNOs’ long-term benefits while considering resource availability. They modeled the problem as an SMDP and employed a DRL-based algorithm to address the curse of dimensionality and enable online optimization. Specifically, they used a Deep Q-Network (DQN) and compared its performance with conventional QL and Random Resource Allocation (RRA). Their results showed that DQN converges significantly faster than QL. Moreover, in scenarios with many users, DQN achieves higher network throughput and total MVNO utility than both QL and RRA. Shome et al. [] considered 3GPP Release 16, which enables User Equipment (UE) to connect to up to 8 network slices, an aspect overlooked in related works. They proposed a DRL-based slice selection and bandwidth allocation approach that considers Quality of Experience (QoE), price satisfaction, and spectral efficiency. In this approach, each virtual base station is assigned a DQN agent, which determines the slice(s) to which a user will connect, based on the service requirements and available resources. Compared to another DQN approach, the proposed method achieves better performance in terms of user satisfaction, convergence time, and bandwidth savings per user. In ref. [], Tang et al.
focused on intelligent vehicular networks, where vehicles need to offload their intelligent tasks to appropriate slices. These slices, called resource slices, must provide sufficient resources to meet the demands of the connected vehicles’ tasks. The authors proposed a Slice Selection-based Online Offloading (SSOO) algorithm, based on a Deep Neural Network (DNN), that leverages distributed intelligence to perform resource slice selection and vehicle assignment while taking into account the current system environment, with the aim of minimizing the system’s energy cost. To evaluate their scheme’s performance, they compared it with three baseline schemes: ETCORA, DRL-CORA, and GA-NSS. The selected performance metrics included average device energy consumption, total energy consumption, task completion rate, and the number of tasks processed by slices. The experimental results demonstrate that the proposed SSOO algorithm outperforms the other algorithms.

Another decision-making task related to this phase is the allocation of user service requests to slices. In ref. [], Zhang et al. proposed a slicing model and a resource allocation scheme for the CN. The main challenge they addressed was determining the slice in which a service request should be deployed to ensure a high transmission success rate. To achieve this, they proposed a Multi-output Classification Edge-based Graph Convolutional Network (MCEGCN), which is essentially an SL-based resource allocation model that predicts the slice on which a request should be deployed to maximize the number of successfully transmitted requests. The model consists of two Edge-based Graph CNNs (EGCNs) that can extract the spatial correlations of the network’s edge features. The authors chose this approach over a DNN because, as they state, DNNs may fail in prediction since they cannot exploit the graph structure of the network’s links. For evaluation, they compared the performance of their proposed model with other approaches, including an MLP, another neural network-based model. The performance metrics included the deployment success rate and total transmission across different utilization rates, and the results showed that MCEGCN outperforms the others. In ref. [], Tsourdinis et al. presented a framework for slice allocation based on the services running on top of a cloud-native network, enabling the creation of a fully service-aware network for B5G applications. More specifically, this framework makes accurate slice allocation decisions by classifying, in real time, the traffic exchanged by different users and by predicting the future connectivity needs of applications. The authors explored the adoption of several ML models for this task (FFNN, RNN, LSTM, and Bidirectional LSTM (BiLSTM)) and concluded that a hybrid CNN-LSTM distributed learning scheme provides the best performance.
Specifically, the CNN is applied to user-generated traffic for feature extraction, noise reduction, and dimensionality reduction. The processed information is then passed to the LSTM, which captures data patterns using memory components, enabling the forecasting of future data trends.

In the Operation phase, there are also tasks related to optimizing slice parameters, policies, or even profit-related values. To this end, the authors in ref. [] proposed a computing resource allocation optimization approach for a fog-RAN scenario that includes a cluster of fog nodes coordinated by an edge controller (EC). The proposed DQN-based approach learns the network’s dynamics and adapts to them by optimizing the computing resource allocation policy of the edge controllers. Mei et al. [] introduced an intelligent network slicing architecture for Vehicle-to-Everything (V2X) services, which includes a Slice Deployment Controller (SDCon) that adjusts the network slicing configuration to ensure the QoS requirements of V2X services and reduce the network cost for mobile network operators. The authors proposed a DQN that incorporates an LSTM to make the adaptation decisions, which improves QoS compared to a non-ML-based approach. Zhang et al. [] proposed a DDPG-based pricing and resource allocation scheme for optical data centers, which encourages tenants, i.e., the service providers (SPs), to request resources in a load-balanced manner that reduces the cumulative blocking probability. Similarly, in ref. [], Lu et al. proposed a DRL-based scheme for an inter-datacenter optical network (IDCON). Simulation results confirmed that, compared to a traditional centralized approach, the proposed scheme increases the InPs’ profit and reduces computational complexity. In ref. [], Khodapanah et al. proposed a slice-aware radio resource management framework that ensures the fulfillment of slice KPIs by fine-tuning the slice-related control parameters of the packet scheduler and the admission controller. For this purpose, the authors employed an SL-based ANN that provides the set of control parameters that maximizes the KPIs.

Several works address slice reconfiguration and decision making under uncertainty. Wei et al. [] investigated the Network Slice Reconfiguration Problem (NSRP) caused by traffic variability. Specifically, the authors reformulated the problem as an MDP and then employed a Dueling Double DQN (DDQN) to solve it, forming the proposed Intelligent NS Reconfiguration Algorithm (INSRA). Numerical results illustrate that INSRA can minimize long-term resource consumption and avoid unnecessary reconfigurations. Yang et al. [] presented an intent-driven optical network architecture combining DRL-based slicing policy generation and reconfiguration. The architecture uses a DDPG algorithm to derive fine-grained slicing strategies, including spectrum, computing resource, and storage resource slicing, which affect network performance metrics such as blocking probability, load balancing, and delay. In the evaluation, the authors compared their approach with DQN and showed that the proposed method outperforms DQN in terms of learning time, blocking probability, and resource utilization.
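INSRA relies on a Dueling Double DQN. The two ideas behind that name can be sketched separately: the dueling head combines a state value V(s) with mean-centered action advantages A(s, a), and the Double DQN target lets the online network select the next action while the target network evaluates it, which reduces overestimation. The network outputs below are hypothetical numbers rather than outputs of trained networks, and the function names are illustrative.

```python
def dueling_q(value, advantages):
    """Dueling aggregation: Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a')."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]

def double_dqn_target(reward, done, q_online_next, q_target_next, gamma=0.99):
    """Double DQN target: the online network selects a', the target network evaluates it."""
    if done:
        return reward
    best = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return reward + gamma * q_target_next[best]

# Hypothetical dueling-head outputs for the next state s' (three reconfiguration actions).
online_next = dueling_q(1.0, [0.5, -0.5, 0.0])  # approx. [1.5, 0.5, 1.0]
target_next = dueling_q(0.8, [0.2, 0.4, -0.6])  # approx. [1.0, 1.2, 0.2]
y = double_dqn_target(0.5, False, online_next, target_next, gamma=0.9)
print(round(y, 4))  # 1.4
```

With these numbers, a plain DQN target would use the largest target-network value (1.2) instead, giving 0.5 + 0.9 × 1.2 = 1.58.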

In some works, the reconfiguration of slice parameters is based on the exchange of network state information between the InPs and the tenants. Rago et al. [] proposed a tenant-driven RAN slice enforcement scheme, in which the InP enforces slices based on the output of a DDPG algorithm that each tenant uses to calculate adaptive bandwidth requests for its slices. Each tenant is aware of the overall network status because the InP uses a DL scheme, namely a convolutional autoencoder, to compress the network status and share it with the tenants. Similarly, in ref. [], the authors leveraged an SAE that encodes network contextual information, such as SNR and data load patterns, in order to exchange this information between the Base Band Units (BBUs) and the Remote Radio Units (RRUs) in an open RAN scenario.

Besides works focused solely on decision making or optimization tasks, some works jointly address both. In ref. [], the authors explored Fog-RAN slicing in a scenario with a hotspot and Vehicle-to-Infrastructure (V2I) slice instances on a RAN segment composed of Fog Access Points (F-APs) and RRUs. Specifically, they proposed a DRL-based approach to address the interdependence of caching decisions and UE associations under dynamic conditions. Experimental results showed that the proposed DRL-based solution outperforms non-ML approaches in terms of cache hit ratio and cumulative reward.

The last task in this group is classification, in which ML methods are applied to classify slices, use case scenarios, and traffic. Endes and Yuksekkaya [] proposed a 5-layer NN to classify users based on their service requirements and assign them to the most suitable network slice. Abbas et al. [] employed a K-means clustering algorithm to efficiently group base stations. Wu et al. [] proposed an AI-based traffic classification algorithm that classifies traffic and allocates it to the appropriate slice based on the current service and network state. The authors used several ML methods in their classifier and showed that RF and GBDT outperform XGBoost and KNN in terms of accuracy.

For each of the referenced works in the Decision Making–Optimization–Classification group, Table 6 summarizes the related tasks, the allocation type, the use case and network domain types, the ML category, the proposed ML method, and whether that method was tested.

Table 6.

Summary of works related to Decision Making–Optimization–Classification (SA: slice activation, SPM: slice parameter modification, PREP: Performance Reporting, RCP: Resource Capacity Planning).

5.3.2. Monitoring-Prediction

This group includes works that address the tasks of Monitoring (MON), Performance Reporting (PREP), and Resource Capacity Planning (RCP). All of the following works apply ML methods to predict different slice-related quantities.

Several works focus on traffic prediction and slice selection. Thantharate et al. [] proposed an ML-based model that first uses RF to predict the traffic load of each slice and then, based on the prediction outcome, applies a DLNN to make the assignment decision. Song et al. [] introduced an ML-based traffic-aware dynamic slicing framework. This framework uses a three-layer FFNN for traffic prediction and allocates network resources accordingly to reduce delay and blocking probability.
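The predict-then-allocate pattern described above can be sketched as follows: forecast each slice's next-interval load, then divide a fixed bandwidth budget in proportion to the forecasts. This is not the RF/DLNN model of Thantharate et al. or the FFNN of Song et al. Simple exponential smoothing stands in for the learned predictor, and the slice histories, the smoothing factor `alpha`, and the proportional rule are all assumptions made for illustration.

```python
def exp_smooth_forecast(history, alpha=0.5):
    """One-step-ahead forecast by simple exponential smoothing (stand-in for RF/FFNN)."""
    level = history[0]
    for y in history[1:]:
        level = alpha * y + (1 - alpha) * level
    return level


def allocate_proportionally(forecasts, capacity):
    """Split a bandwidth budget across slices in proportion to predicted load."""
    total = sum(forecasts.values())
    if total == 0:
        return {s: capacity / len(forecasts) for s in forecasts}  # equal split fallback
    return {s: capacity * f / total for s, f in forecasts.items()}


# Hypothetical per-slice traffic histories (e.g. Mbps per interval).
history = {
    "eMBB": [40, 42, 44, 46],
    "URLLC": [5, 5, 5, 5],
    "mMTC": [10, 9, 11, 10],
}
forecasts = {s: exp_smooth_forecast(h) for s, h in history.items()}
shares = allocate_proportionally(forecasts, capacity=100.0)
```

A proportional split is only one possible allocation rule. A practical scheme might also reserve headroom for latency-critical slices such as URLLC, whose low average load understates their needs.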

SLA assurance for slice services is the focus of ref. []. Specifically, the authors proposed a method for forecasting SLA violations in slice services. SLA breach prediction relies on VNF bandwidth prediction, which is performed by a hybrid model combining LSTM with ARIMA, a non-ML statistical model.
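Turning a bandwidth forecast into an SLA-breach flag can be sketched in a few lines. The cited work forecasts VNF bandwidth with a hybrid LSTM/ARIMA model. In this minimal sketch, an ordinary least-squares linear trend over a short window takes that model's place. A likely breach is flagged when the forecast exceeds the provisioned bandwidth. The horizon, the window, and this decision rule are assumptions made for illustration.

```python
def linear_trend_forecast(series, horizon=1):
    """Fit y = a + b*t by ordinary least squares and extrapolate `horizon` steps."""
    n = len(series)
    ts = range(n)
    t_mean = (n - 1) / 2
    y_mean = sum(series) / n
    sxx = sum((t - t_mean) ** 2 for t in ts)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, series))
    slope = sxy / sxx if sxx else 0.0  # a single sample gives a flat forecast
    intercept = y_mean - slope * t_mean
    return intercept + slope * (n - 1 + horizon)


def predict_sla_breach(series, provisioned_bw, horizon=1):
    """Flag a probable SLA violation if forecast demand exceeds provisioned bandwidth."""
    return linear_trend_forecast(series, horizon) > provisioned_bw


demand = [60, 65, 70, 75, 80]  # hypothetical Mbps samples for one VNF
breach_soon = predict_sla_breach(demand, provisioned_bw=90, horizon=3)  # True: 95 > 90
```

The forecasting horizon determines how much warning the operator receives. A longer horizon gives more time to reconfigure the slice, at the cost of a less reliable forecast.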

Many studies focus on predicting future workloads and resource needs, which helps network operators prepare in advance and avoid allocating more resources than necessary. Camargo et al. [] proposed two ML models, LSTM and RF, to forecast the expected throughput of network slices. The results showed that RF outperformed LSTM, which lacked generalization. Kafle et al. [] adopted the LASSO regression model to predict server workloads in a CN scenario. Bega et al. proposed two schemes. The first, DeepCog [], is an ML-based slice capacity forecasting scheme designed to enable anticipatory resource allocation. The second, AZTEC [], is a capacity allocation framework that uses a multi-timescale forecasting model to allocate capacity effectively to individual slices. Specifically, DeepCog forecasts future capacity needs while minimizing resource overprovisioning and SLA violations, using a 3D CNN for encoding and MLPs for decoding. AZTEC is likewise implemented with DNNs, more specifically 3-Dimension CNNs (3D-CNNs). These networks allow different slices to be examined for prediction in parallel and are very efficient at extracting spatio-temporal features. An evaluation of the forecasting ability of the different DNNs that make up AZTEC showed that their predictions follow the fluctuations of the slice traffic.
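The tension between overprovisioning and SLA violations that DeepCog targets can be illustrated without reproducing its actual training loss. In this minimal sketch, the cost function is a hypothetical piecewise-linear one. Each unit of idle capacity costs 1, and each unit of unmet demand costs `alpha`. Choosing the capacity that minimizes this cost over past demand samples lands on a high quantile of demand rather than its mean. Both `alpha` and the demand samples are assumptions made for illustration.

```python
def provisioning_cost(capacity, demands, alpha):
    """Idle capacity costs 1 per unit; unmet demand (SLA violation) costs alpha per unit."""
    cost = 0.0
    for d in demands:
        if d > capacity:
            cost += alpha * (d - capacity)  # under-provisioning penalty
        else:
            cost += capacity - d            # over-provisioning penalty
    return cost


def best_capacity(demands, alpha):
    """Pick the capacity, among observed demand values, with the lowest total cost.

    The cost is convex and piecewise linear with breakpoints at the demands,
    so a minimiser always lies at one of the observed values.
    """
    return min(sorted(set(demands)), key=lambda c: provisioning_cost(c, demands, alpha))


cap = best_capacity(list(range(1, 11)), alpha=3.0)  # 8
```

With `alpha = 3`, the minimiser sits near the 0.75 quantile of demand (that is, alpha / (alpha + 1)). Raising the relative price of SLA violations therefore pushes the provisioned capacity upward.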

Several works explore how ML can be applied to determine whether new flows can be accepted without compromising QoS. Garrido et al. [] presented a Context-Aware Traffic Predictor (CATP) and a Prediction-Based Admission Control (Pre-BAC) mechanism that exploits advanced time-series forecasting. Both approaches rely on ML-based predictions: Pre-BAC uses RL, while CATP uses several DNN variants (LSTM, 3D-CNN, Spatio-Temporal NN, and Convolutional LSTM (ConvLSTM)). Buyakar et al. [] employed LSTM to predict future bandwidth requirements and Mondrian Random Forests (MRF) to predict E2E delay. These predictions feed their admission control algorithm, which determines whether a flow with specific QoS requirements can be admitted without violating the QoS of already admitted flows. Yan et al. [] proposed an intelligent Resource Scheduling Strategy (iRSS) that combines DL and RL to manage network and traffic dynamics. The iRSS uses LSTM to perform periodic, large-time-scale traffic predictions, and the predicted data are used for resource allocation. In their evaluation, the authors demonstrated that LSTM achieves accurate traffic predictions. Tayyaba et al. [] proposed a policy framework for optimized resource allocation in SDN-based 5G cellular networks. The framework consists of several modules, including an adaptive policy generator, a resource manager, a traffic scheduler, and a traffic classifier. In the traffic classifier module, the authors employed LSTM, CNN, and DNN methods for traffic prediction and evaluated their detection accuracy. LSTM achieved the highest accuracy, followed by CNN, with DNN showing the lowest. Monteil et al. [] presented a solution based on DNN and LSTM methods for determining the optimal resource reservation for a service provider. The authors compared their framework with a baseline ARIMA prediction model. They found that DNN and LSTM performed better because these models captured the daily and seasonal trends of the data.
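The admission test that Buyakar et al. build on top of their bandwidth (LSTM) and delay (MRF) predictions can be sketched as follows. This sketch assumes that the predictions are already available as numbers, so the forecasters themselves are not shown. It also assumes that a flow is described only by a bandwidth demand and an E2E delay bound. `FlowRequest`, `admit`, and all parameter names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FlowRequest:
    bandwidth: float      # requested rate, e.g. Mbps
    max_delay_ms: float   # E2E delay bound the flow needs


def admit(flow, predicted_bw_in_use, capacity, predicted_delay_ms, admitted_delay_bounds):
    """Admit only if the forecasts say neither the new flow nor admitted flows are at risk.

    predicted_bw_in_use:   forecast bandwidth of already admitted flows
    predicted_delay_ms:    forecast E2E delay if the new flow is added
    admitted_delay_bounds: delay bounds of flows already in the slice
    """
    if predicted_bw_in_use + flow.bandwidth > capacity:
        return False  # predicted bandwidth would exceed the slice capacity
    tightest = min([flow.max_delay_ms, *admitted_delay_bounds])
    return predicted_delay_ms <= tightest
```

For example, `admit(FlowRequest(10, 20), 80, 100, 15, [30, 25])` returns `True`. The predicted load stays within capacity, and the predicted 15 ms delay meets the tightest bound of 20 ms.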

Special attention has been given to vehicular and mobile networks because of their highly dynamic traffic patterns. Cui et al. [] aimed to optimize slice weights and reduce delay. For traffic prediction, they used ConvLSTM, which combines CNN and LSTM, to model the spatio-temporal dependencies of slice service traffic. Performance evaluations showed that their predictive approach significantly reduces slice resource allocation delay compared to a non-predictive approach. In later studies [,], Cui et al. proposed LSTM-DDPG, an algorithm that outperformed traditional ARIMA models. It uses LSTM to predict long-term traffic demand by extracting temporal correlations in data sequences, and DDPG for fine-grained resource scheduling. Khan et al. [] focused on joint resource allocation for URLLC and eMBB slices in vehicular networks. They used a DNN to estimate Channel State Information (CSI), achieving better resource allocation without excessive signaling.
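Whether the predictor is an LSTM, a ConvLSTM, or the LSTM stage of LSTM-DDPG, a traffic series is normally converted into supervised (input window, future target) pairs before training. The following minimal sketch shows that preprocessing step. The `window` and `horizon` parameters are hypothetical choices, not values taken from the cited works.

```python
def make_windows(series, window, horizon=1):
    """Turn a 1-D series into (past `window` values, value `horizon` steps ahead) pairs."""
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        x = series[start:start + window]
        y = series[start + window + horizon - 1]
        pairs.append((x, y))
    return pairs


pairs = make_windows([1, 2, 3, 4, 5], window=3)  # [([1, 2, 3], 4), ([2, 3, 4], 5)]
```

A larger `horizon` corresponds to the long-term demand prediction used to drive slower decisions. A horizon of 1 matches the short-term, fine-grained case.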

Other works present specialized prediction models that reduce complexity or overhead. Sapavath et al. [] proposed a Sparse Bayesian Linear Regression (SBLR) algorithm for predicting CSI in large-scale multiple-input multiple-output (MIMO) wireless networks. This algorithm achieves better prediction accuracy while avoiding the overfitting and high complexity associated with neural networks. Matoussi et al. [] introduced a real-time user-centric RAN slicing scheme for CRAN-based applications. The scheme uses a BiLSTM-based DNN and optimizes resource allocation to maximize user throughput and minimize deployment cost. Finally, Jiang et al. [] presented a framework that integrates AI for intelligent tasks in NS, and examined two use cases: MIMO channel prediction and security anomaly detection. In the first use case, a three-layer RNN was used to predict fading channels, improving transmit antenna selection. In the second, RF and SVM were used to detect security threats in industrial networks, achieving high detection accuracy.
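The closed-form Bayesian update underlying SBLR is easiest to see for a single coefficient. This minimal sketch assumes a hypothetical AR(1) channel model h[t+1] ≈ w·h[t] with real-valued gains. It places a zero-mean Gaussian prior on w (precision `alpha`) and assumes Gaussian noise (precision `beta`). It is plain Bayesian linear regression: it omits the per-weight hyperparameter learning that makes SBLR sparse, and the data are illustrative.

```python
def bayes_scalar_regression(xs, ys, alpha=1.0, beta=100.0):
    """Posterior of w in y = w*x + noise, with prior w ~ N(0, 1/alpha) and noise precision beta.

    Returns (posterior mean, posterior variance).
    """
    precision = alpha + beta * sum(x * x for x in xs)
    mean = beta * sum(x * y for x, y in zip(xs, ys)) / precision
    return mean, 1.0 / precision


# Hypothetical real-valued channel gains decaying by 0.9 per step.
h = [1.0, 0.9, 0.81, 0.729, 0.6561]
mean_w, var_w = bayes_scalar_regression(h[:-1], h[1:])
next_gain = mean_w * h[-1]  # one-step-ahead channel prediction
```

The prior shrinks the estimate slightly toward zero (here about 0.897 instead of 0.9). The posterior variance also quantifies how uncertain the prediction is, which a plain least-squares fit does not provide.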

Slice brokering and orchestration are also frequent research topics. Gutterman et al. [] proposed X-LSTM, a short-time-scale prediction model for RAN slice brokers. The model is a modification of LSTM inspired by ARIMA and the X-11 statistical method. Their simulations showed that X-LSTM outperforms ARIMA and standard LSTM in prediction accuracy, resulting in lower slice costs. Sciancalepore et al. [] introduced the Reinforcement Learning-based 5G Network Slice Broker (RL-NSB), a framework that helps network slice brokers (NSBs) map SLA requirements to physical resources. RL-NSB includes a traffic forecasting module, an admission control algorithm, and a slice scheduler. Abbas et al. [] proposed a framework for multi-domain slice resource orchestration that uses LSTM to predict resource utilization (CPU, RAM, storage) and throughput for VNFs running on CN slices. It also employs K-means clustering to process the predicted dataset and determine whether reconfiguration is needed.

Inter-slice balance and fairness constitute another research direction. Silva et al. [] evaluated SVM, ANN, and kNN for predicting QoS degradation, with SVM achieving the best performance. Bouzidi et al. [] integrated LSTM and Logistic Regression (LR) to predict congestion and support proactive slice adaptation in an SDN-based architecture. Finally, Thantharate et al. [] presented ADAPTIVE6G, an adaptive learning framework for resource management and load prediction in NS applications for B5G and 6G systems. ADAPTIVE6G aims to improve network load estimation and thereby promote fairer, more uniform resource management. The authors combined DL and TL for load prediction across different slices. Their experimental results showed that the framework outperforms traditional DNN approaches.
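Of the three classifiers compared by Silva et al., kNN is the simplest to write down. The following minimal sketch predicts QoS degradation by majority vote among the nearest labelled samples. The two features (utilisation and normalised latency), the labels, and `k = 3` are hypothetical choices, not the setup of the cited work.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Majority vote among the k training samples closest to `query` (Euclidean distance)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical (utilisation, normalised latency) -> QoS label
train = [
    ((0.2, 0.1), "ok"), ((0.3, 0.2), "ok"), ((0.25, 0.15), "ok"),
    ((0.9, 0.8), "degraded"), ((0.85, 0.9), "degraded"), ((0.95, 0.7), "degraded"),
]
label = knn_predict(train, (0.88, 0.85))
```

kNN requires no training phase, but every prediction scans the stored samples. This cost grows with the monitoring history, which is one practical argument for model-based classifiers such as SVM.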

For each of the referenced works in the monitoring–prediction task group, Table 7 summarizes the related tasks, the allocation type, the use case and network domain types, the ML category, the proposed ML method, and whether that method was tested.

Table 7.

Summary of works related to monitoring–prediction (MON: Monitoring, RCP: Resource Capacity Planning, SPC: Slice Parameter Classification).

5.3.3. Resource Allocation

This group includes works focused on resource allocation, which address two tasks. The first is Performance Reporting (PREP), which relies on observations of slice performance. The second is Slice Parameter Modification (SPM), which reconfigures specific resource allocation parameters to enhance slice performance.

Some works address distributed and collaborative resource management, in which different network stakeholders, such as MVNOs, infrastructure providers, and cloud providers, work together. For example, Hu et al. [] proposed a federated slicing approach based on blockchain and RL that helps all parties make fair and efficient allocation decisions. Li et al. [] took a more centralized approach, applying DQL to better match resource supply with user demand in both the RAN and the CN. Cui et al. [,] focused on vehicular networks, combining LSTM and DDPG to predict long-term traffic and adjust resources in real time. This approach showed strong performance in maintaining QoS.

Other researchers have turned to multi-agent or hybrid learning methods to handle more complex scenarios. Wang et al. [] combined a multi-agent RL algorithm with an RF classifier to allocate both radio and core network resources in networks that include public and private slices. Moon et al. [] presented an ensemble method that blends fast-learning and high-performance algorithms to improve RAN slicing. Hua et al. [] introduced the Generative Adversarial Network-powered Deep Distributional Q Network (GAN-DDQN) for better spectrum sharing and SLA satisfaction. Chen et al. [] addressed dual connectivity scenarios using an LSTM-enhanced DQN to balance QoE, throughput, and energy consumption. Shome et al. [] proposed a DRL-based approach to slice selection and bandwidth allocation. This approach considers UEs connected to multiple slices and uses multiple DQN agents to decide the bandwidth allocated to these UEs.

A number of studies focus on demand-aware or spectrum-sensitive resource tuning. Meng et al. [] used DRL to optimize bandwidth allocation in smart grid networks, while Shi et al. [] accounted for radar systems coexisting with 5G users in their spectrum allocation model. Albonda et al. [] studied how to balance resources between eMBB and V2X slices, combining Q-learning with heuristic rules for better QoS. Nouri et al. [] addressed the joint allocation of power and Physical Resource Blocks (PRBs) to UEs while ensuring QoS requirements. To optimize the overall network utility, they leveraged SS-VAE, a semi-supervised learning approach that combines a VAE with a contrastive loss.
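The tabular Q-learning update at the core of schemes such as that of Albonda et al. can be sketched on a toy problem. The setup here is entirely hypothetical. The state is the current demand level of one slice (0 to 2 resource units), the action is how many units to grant, and the reward penalises any mismatch. Demand evolves independently of the action. The toy omits the heuristic rules and the second slice of the cited work, and it explores with a uniformly random behaviour policy.

```python
import random


def train_q_table(n_levels=3, steps=5000, lr=0.1, gamma=0.9, seed=1):
    """Tabular Q-learning with a uniformly random (off-policy) behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0] * n_levels for _ in range(n_levels)]  # q[state][action]
    state = rng.randrange(n_levels)
    for _ in range(steps):
        action = rng.randrange(n_levels)           # explore uniformly
        reward = -abs(action - state)              # penalise over- or under-allocation
        next_state = rng.randrange(n_levels)       # demand evolves independently (toy)
        target = reward + gamma * max(q[next_state])
        q[state][action] += lr * (target - q[state][action])
        state = next_state
    return q


def greedy_action(q, state):
    """Action with the highest learned value in `state`."""
    return max(range(len(q[state])), key=lambda a: q[state][a])


q = train_q_table()
policy = [greedy_action(q, s) for s in range(3)]  # learned units to grant per demand level
```

The learned greedy policy grants exactly the demanded number of units. Because the reward of a matched allocation is zero, those entries of the table stay at zero, while all mismatched entries become negative.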

To deal with interference and slice-specific needs, Zambianco et al. [] applied DQN to mixed-numerology issues, reducing interference while maintaining slice capacity. Shao et al. [] designed a multi-agent system with Graph Attention Networks to manage resource sharing in dense networks. Chergui et al. [] used neural networks trained on KPI data to ensure that RAN resource allocation decisions also respect operator cost constraints.

Some works explore resource control under constraints or in real-time environments. Xu et al. [] introduced a constrained version of the SAC algorithm to handle energy and delay constraints. Liu et al. [] followed a similar idea, using a constrained MDP model and Interior-Point Policy Optimization (IPPO) to allocate bandwidth while considering latency. Chen et al. [] used multi-agent DQNs to optimize resource allocation across slices and tenants, reporting noticeable improvements in performance metrics. In their proposed iRSS, Yan et al. [] combined large-time-scale traffic predictions obtained with LSTM and short-term resource scheduling performed by A3C. In their evaluation, the authors demonstrated that A3C outperforms state-of-the-art methods such as QL, AC, and a heuristic resource scheduling algorithm in terms of cumulative reward and resource utilization.
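One common way to handle constrained MDPs is Lagrangian relaxation. Each decision maximizes the reward minus λ times the cost, while λ is raised whenever the cost limit is exceeded and lowered otherwise. The minimal sketch below shows this primal-dual loop. It is not necessarily the mechanism of the constrained SAC variant of Xu et al. IPPO, in particular, handles constraints with logarithmic barrier terms rather than a learned multiplier. The two allocation options and their reward/cost numbers are hypothetical.

```python
def lagrangian_choice(actions, lam):
    """Pick the action maximising reward - lam * cost."""
    return max(actions, key=lambda a: a["reward"] - lam * a["cost"])


def primal_dual(actions, cost_limit, eta=0.1, steps=1000):
    """Dual (sub)gradient ascent on the multiplier of the constraint E[cost] <= cost_limit."""
    lam, costs = 0.0, []
    for _ in range(steps):
        a = lagrangian_choice(actions, lam)
        costs.append(a["cost"])
        lam = max(0.0, lam + eta * (a["cost"] - cost_limit))  # raise lam when violating
    return lam, costs


# Hypothetical options: more bandwidth gives more reward but a higher delay cost.
actions = [
    {"name": "aggressive", "reward": 10.0, "cost": 5.0},
    {"name": "conservative", "reward": 6.0, "cost": 1.0},
]
lam, costs = primal_dual(actions, cost_limit=2.0)
avg_recent_cost = sum(costs[-400:]) / 400
```

Neither option alone is ideal: the aggressive one violates the limit, while the conservative one leaves reward unused. The multiplier settles near the value that makes the two options equally attractive (λ ≈ 1). The resulting alternation between them keeps the average cost at the limit of 2.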

A wide range of works also focus on vehicular and edge networks. Sun et al. [] proposed a DRL-based resource controller for D2D vehicle communications, while Yu et al. [] presented a two-stage bandwidth allocation model using DDPG for V2X. Li et al. [] built an E2E framework that coordinates resource allocation across the RAN and the CN, improving user access. Akyildiz et al. [] introduced a hierarchical multi-agent system to manage resource sharing between URLLC and eMBB slices. Zhou et al. [] followed a similar approach, using cooperative Q-learning to allocate resources fairly between competing slices.
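Statements about allocating resources "fairly" between slices are often quantified with Jain's fairness index, J = (Σx)² / (n·Σx²). The cited works may use other metrics, so this is only an illustrative assumption. The minimal sketch below computes the index for a list of per-slice allocations. It treats an all-zero allocation as perfectly fair, which is a convention chosen here rather than part of the definition.

```python
def jain_index(allocations):
    """Jain's fairness index: 1.0 for equal shares, 1/n when one slice gets everything."""
    n = len(allocations)
    total = sum(allocations)
    squares = sum(x * x for x in allocations)
    # all-zero allocations are treated as perfectly fair (assumed convention)
    return (total * total) / (n * squares) if squares else 1.0


equal = jain_index([5, 5, 5])    # 1.0
skewed = jain_index([10, 0])     # 0.5
```

The index is scale-invariant, so it compares how evenly resources are shared, independently of how much total capacity is available.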

In contrast, several works tackle resource allocation at the link level or in energy-efficient ways. Wang et al. [] proposed a GCN-based solution called LinkSlice for better PRB scheduling. Li et al. [] showed how combining LSTM with A2C leads to better bandwidth allocation, especially in balancing spectral efficiency with SLA compliance. Alcaraz et al. [] focused on resource-constrained environments and proposed a lightweight, kernel-based RL method that offers strong performance without excessive computation.
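LinkSlice is built on graph convolutional networks. A single graph-convolution layer in the widely used Kipf and Welling form, H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), can be sketched without libraries. This is not necessarily the exact variant used in LinkSlice. The path graph, node features, and weight matrix below are hypothetical.

```python
import math


def matmul(a, b):
    """Dense matrix product of nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gcn_layer(adj, features, weights):
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 . H . W)."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]  # add self-loops
    deg = [sum(row) for row in a_hat]
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(norm, features), weights)
    return [[max(0.0, v) for v in row] for row in out]  # ReLU


# Hypothetical 3-node path graph (e.g. links 0-1-2) with one feature per node.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
features = [[1.0], [0.0], [0.0]]
weights = [[1.0]]
out = gcn_layer(adj, features, weights)
```

After one layer, only node 0 and its direct neighbour 1 carry the signal that starts at node 0, while node 2 remains at zero. Stacking layers widens this receptive field by one hop per layer, which lets link-level schedulers account for interactions among neighbouring links.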