1. Introduction

The integration of emotional intelligence into artificial intelligence systems has emerged as a key factor in the advancement of human–computer interaction [1,2,3,4]. In particular, the development of conversational agents capable of expressing empathy is gaining increasing attention, given their potential to foster user-centric interactions.

Empathy is a multidimensional construct defined as the ability to understand and share another individual’s emotional states while maintaining a clear distinction between self and other. In the scientific literature, empathy is typically divided into two main components: affective empathy, which involves emotional sharing, and cognitive empathy, which refers to the rational understanding of another’s emotions [5].

From a neuroscientific perspective, empathy involves specific brain circuits, including the medial prefrontal cortex and the insula, supporting the hypothesis that the brain internally simulates the emotional states of others [6].

In a clinical context, empathy is crucial in the physician–patient relationship. It is defined as the ability of a healthcare professional to understand the experiences of a patient and communicate this understanding effectively. Studies have shown that higher levels of physician empathy are associated with better clinical outcomes, increased treatment adherence, and reduced patient anxiety [7].

Furthermore, empathy is a central element in communication protocols such as the SPIKES model, used in oncology. It also serves as a protective factor against burnout in healthcare professionals, contributing to their psychological well-being and the quality of care they provide [8].

Empathetic chatbots hold significant promise to improve user experience in a wide range of domains, including customer service, education, and mental health support. Their ability to simulate empathetic responses contributes to more meaningful and emotionally attuned interactions, which are particularly valuable in contexts involving psychological vulnerability or distress.

Consider, for instance, a scenario in which a user expresses feelings of anxiety. A conventional neutral response such as “It’s important to stay calm and find a way to relax” may offer practical advice but lacks emotional resonance. In contrast, an empathetic response like “I understand that you’re feeling anxious right now, and it is okay to feel this way. Let’s take a moment to focus on something that can help you relax” demonstrates emotional attunement and validation, which are central to the therapeutic potential of empathetic communication. This distinction underscores the importance of integrating affective and cognitive empathy mechanisms into conversational agents, thereby fostering trust, emotional safety, and user engagement.

The direct development and deployment of fully empathetic AI chatbots present substantial practical, economic, and strategic challenges. Many organizations have already committed significant financial and technical resources to the creation of domain-specific conversational agents that are deeply embedded within operational infrastructures. Modifying or replacing these systems to incorporate empathetic capabilities would not only incur considerable additional costs but also pose risks of performance degradation and operational instability. This issue is particularly pronounced in vertical domains such as legal advisory, financial consulting, healthcare triage, and technical support, where chatbots are meticulously fine-tuned to deliver accurate and context-sensitive information. Embedding empathy into these models would require extensive retraining using domain-specific empathetic datasets, which are often scarce and can compromise the original task performance of the chatbot. Furthermore, the end-to-end development of a new empathetic chatbot is resource-intensive, involving multiple stages—data acquisition and annotation, model training and validation, system integration, and regulatory compliance testing. For organizations operating under budgetary constraints or lacking access to high-performance infrastructure, such an undertaking may be economically and logistically infeasible.

To address these constraints, we propose a modular architecture based on an empathy rephrasing layer. This layer, implemented through a large language model (LLM), takes the original chatbot output and returns a semantically equivalent response enriched with an empathetic tone. By decoupling the generation of empathetic expression from the core dialogue functionality, this approach enables the seamless integration of advanced affective capabilities into existing conversational systems. The result is an enhancement in user engagement and the promotion of more nuanced, human-centric interactions, without compromising the original intent of the chatbot’s response.

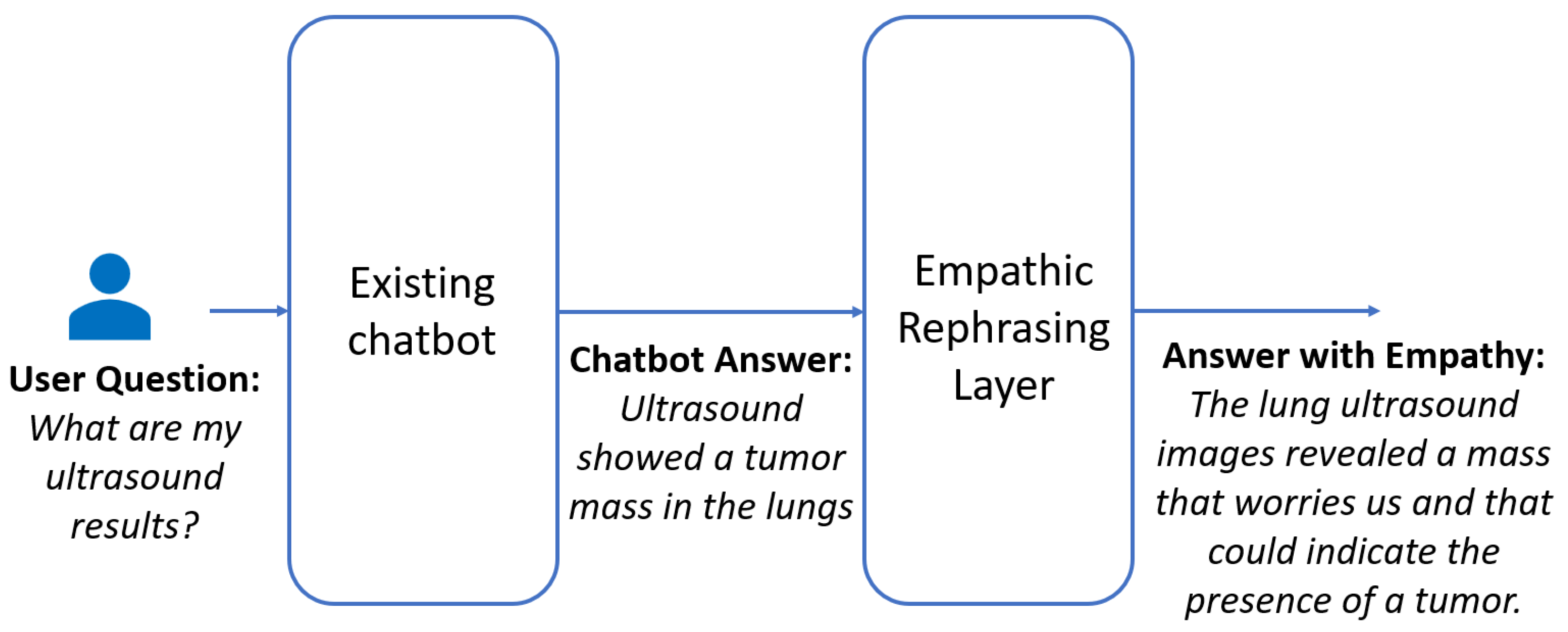

As shown in Figure 1, the modular architecture proposed in this work, built around an empathetic rephrasing layer, offers a cost-effective and low-risk alternative. This layer operates independently of the core chatbot, taking its original output and transforming it into a semantically equivalent response enriched with empathetic language. This design ensures that the factual accuracy and domain-specific relevance of the original response are preserved, while enhancing its emotional resonance.

Crucially, this approach enables seamless integration of empathetic capabilities into existing systems without requiring structural modifications or retraining of the base model. For example, a financial chatbot already optimized for regulatory compliance and investment advice can be augmented with empathetic responses simply by cascading the rephrasing layer, thereby improving user experience without compromising precision. Similarly, in healthcare applications, where clarity and correctness are paramount, the layer ensures that empathetic phrasing does not alter the medical intent of the original message. This modularity makes the solution highly scalable and adaptable across diverse sectors, offering a strategic pathway to human-centric AI interaction while significantly reducing development time, infrastructure costs, and operational risks.
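The cascading idea described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the implementation used in this study: the names `with_empathy_layer`, `toy_finance_bot`, and `toy_rephraser` are hypothetical, and the rephraser is a hard-coded stand-in for what would be an LLM call in practice.

```python
from typing import Callable

# Hypothetical type alias: both the chatbot and the rephraser map text to text.
TextFn = Callable[[str], str]


def with_empathy_layer(base_chatbot: TextFn, rephraser: TextFn) -> TextFn:
    """Cascade a rephrasing layer after an existing chatbot without modifying it."""

    def respond(user_message: str) -> str:
        base_reply = base_chatbot(user_message)  # domain logic stays untouched
        return rephraser(base_reply)             # style-only transformation

    return respond


# Stand-ins for illustration only; a real deployment would call models here.
def toy_finance_bot(user_message: str) -> str:
    return "Your loan application was declined."


def toy_rephraser(base_reply: str) -> str:
    return "I understand this may be disappointing. " + base_reply


empathetic_bot = with_empathy_layer(toy_finance_bot, toy_rephraser)
print(empathetic_bot("Was my loan approved?"))
```

Because the wrapper only composes two functions, the base chatbot can be swapped or updated independently of the rephrasing layer, which is the decoupling property the architecture relies on.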

This architecture is particularly advantageous in domains where emotional sensitivity is critical. In mental health support, it facilitates reassurance and validation; in customer service, it improves perceived helpfulness; and in education, it fosters motivational engagement. The modularity of the system ensures scalability and adaptability across sectors, offering a cost-effective pathway to human-centric AI interaction.

To implement and evaluate this approach, we conducted an extensive experimental campaign involving ten small and medium-sized LLMs, selected for their deployability on single-GPU setups. We explored both few-shot learning and fine-tuning strategies using the IDRE (Italian Dialogue for Empathetic Responses) dataset [9], which comprises 480 curated triplets focused primarily on healthcare scenarios. Each triplet includes a user message, an initial chatbot reply, and an empathetically rephrased version.
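To make the triplet structure concrete, the following sketch shows one way such records could be represented and turned into a few-shot prompt. The field names, the instruction wording, and the English example are assumptions made for illustration; they are not the actual IDRE schema or the prompts used in this study (IDRE is in Italian).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triplet:
    # Hypothetical field names mirroring the three elements of a triplet.
    user_message: str
    chatbot_reply: str
    empathetic_reply: str


def build_few_shot_prompt(examples: list[Triplet], user_message: str, chatbot_reply: str) -> str:
    """Format demonstration triplets followed by the new pair to be rephrased."""
    parts = ["Rewrite the chatbot reply with an empathetic tone, keeping its meaning."]
    for ex in examples:
        parts.append(
            f"User: {ex.user_message}\n"
            f"Reply: {ex.chatbot_reply}\n"
            f"Empathetic reply: {ex.empathetic_reply}"
        )
    # The final block is left open so that the model completes it.
    parts.append(f"User: {user_message}\nReply: {chatbot_reply}\nEmpathetic reply:")
    return "\n\n".join(parts)


demo = Triplet(
    user_message="I am worried about my test results.",
    chatbot_reply="Results will be available in three days.",
    empathetic_reply="I understand that waiting is stressful. Your results will be available in three days.",
)
prompt = build_few_shot_prompt([demo], "I feel anxious.", "Try to relax.")
print(prompt)
```

The same triplets could serve as supervised pairs for fine-tuning, with the first two fields as input and the third as target.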

Model performance was assessed through a comprehensive, multi-layered evaluation strategy. Central to our approach is the adoption of the LLM-as-a-judge paradigm, specifically leveraging the G-Eval framework, which utilizes a state-of-the-art large language model (GPT-4o [10]) as an objective evaluator for the outputs generated by smaller models. This automated evaluation is inspired by established protocols such as SPIKES, widely used in medical communication, and is tailored to ensure a nuanced and contextually relevant assessment across five key dimensions: empathy, knowledge, coherence, fluency, and lexical variety. While the primary focus of the dataset and evaluation was the healthcare domain, this study also systematically explored the generalizability of the rephrasing capability by generating and evaluating empathetic responses in additional domains, including work, social, legal, and financial contexts. We validated the G-Eval results and ensured inter-method agreement by integrating a human annotation study. Expert annotators, trained in chatbot evaluation, assessed a representative sample of model outputs using a Likert scale across the same five dimensions; inter-annotator consistency was quantified via Fleiss’ kappa. Finally, to fully triangulate and quantitatively support our findings, we integrated traditional NLP similarity metrics BLEU [11], ROUGE [12], and BERTScore [13]. These metrics provided an essential, independent measure of the two core objectives of the task: style transfer (introducing empathetic language) and meaning preservation (maintaining semantic fidelity to the original response). This multi-pronged evaluation framework ensures both the robustness and the interpretability of our results.

The results indicate that fine-tuning large language models (LLMs) with the IDRE dataset is an effective strategy for instilling empathetic capabilities, with particularly promising outcomes on smaller models such as Llama-3.2-1B-Instruct. Moreover, fine-tuning corrected limitations observed in few-shot learning, enhancing both lexical diversity and empathy, as shown in the case of Gemma-3-1B. Finally, the empathetic skills acquired in the medical domain were shown to be transferable to other contexts, suggesting the broad generalizability of empathetic dialogue systems across diverse sectors.

These findings open new opportunities for deploying empathetic AI in a wide range of real-world applications. One potential application is in telemedicine platforms, where empathetic responses can improve patient trust and adherence to medical advice. In elderly care, empathetic chatbots can provide companionship and emotional support, helping to reduce feelings of isolation. In educational settings, tutors enhanced with empathetic capabilities can adapt their tone to better support students facing difficulties, thereby fostering a more inclusive and motivating learning environment. In addition, legal and financial advisory bots can benefit from empathetic rephrasing to deliver sensitive information—such as debt management or legal obligations—in a more considerate and human-centric manner. Finally, in human resources and workplace wellness tools, empathetic AI can assist in managing employee concerns, offering support during stressful periods, and promoting a healthier organizational climate.

Although this study focused on the Italian language, the methodology for dataset creation, model adaptation, and evaluation is inherently language-agnostic and can be extended to other linguistic contexts.

The contributions of this research are twofold. First, the primary innovation lies in the development and validation of IDRE, a resource that addresses a gap in the Italian scientific literature. Second, leveraging this dataset, we propose a novel modular architecture that demonstrates practical and scalable applicability. This architecture incorporates an empathetic reformulation layer within a decoupled design framework, enabling the integration of emotional capabilities into existing chatbot systems without requiring costly retraining or structural modifications. This approach effectively addresses both practical and economic challenges associated with empathy integration, significantly reducing development time and infrastructure costs while ensuring adaptability across diverse application domains.

To support transparency and reproducibility, the dataset, the prompts, all fine-tuned models, and the Python scripts developed in this study are publicly available through Hugging Face repositories, as detailed in Appendix A.

2. Related Works

The development of empathetic chatbots capable of understanding and responding to human emotions is an increasingly important research area [14]. However, achieving this goal requires high-quality datasets that capture human–machine interactions enriched with empathetic elements.

Despite the growing availability of datasets for machine learning and natural language processing, there is a notable lack of resources specifically designed for empathetic chatbots in the Italian language, which presents a significant challenge. While some datasets, such as those referenced in [15,16,17,18], include emotional information, they primarily focus on labeling words or sentences with general emotional tags. These resources often lack the contextual depth and complexity needed to support the development of truly empathetic conversational agents.

Within the current research landscape, a line of studies has emerged comparing the empathetic capabilities of LLMs with those of humans. The results indicate that LLMs can, in certain contexts, outperform humans in empathy-related tasks. A systematic review [19] showed that 78.6% of responses generated by ChatGPT-3.5 were preferred over those produced by humans. Further investigations [20], conducted on a sample of 1000 participants, confirmed the statistically significant superiority of LLMs in generating empathetic responses. In particular, the GPT-4o model showed a 31% increase in responses judged as good compared to the human baseline, while other models like Llama-2 (24%), Mixtral-8x7B (21%), and Gemini-Pro (10%) showed smaller improvements.

To further enhance the empathetic capabilities of LLMs, various methodologies have been proposed [21]. These include semantically similar in-context learning, a two-phase interactive generation approach, and the integration of knowledge bases. Experimental evidence suggests that applying these techniques can significantly improve model performance, helping to surpass the state of the art.

Evaluation remains a critical challenge, as it is inherently multidimensional and subjective, making objective comparison difficult. In this context, Ref. [22] highlighted that general-purpose LLMs like GPT-4o can show limited performance when evaluated using multidimensional metrics. In contrast, classifiers based on smaller models, such as Flan-T5, fine-tuned on task-specific data, have been shown to outperform larger models on certain evaluation criteria.

Recent works have also explored novel architectures and evaluation strategies for empathetic chatbots. For example, Ref. [23] proposed a system that integrates neural and physiological signals to enhance real-time emotion recognition and empathetic response generation. Ref. [24] conducted a systematic review of empathetic conversational agents in mental health, highlighting the effectiveness of hybrid ML engines. Ref. [25] provided a comprehensive review of emotionally intelligent chatbot architectures and evaluation methods. Ref. [26] introduced a communication framework based on psychological empathy and positive psychology, which demonstrated improved user well-being in mental health applications.

The task of generating empathetic responses can be conceptualized as a text style transfer (TST) problem, a subfield of natural language generation focused on altering the stylistic properties (e.g., sentiment, formality, and tone) while preserving semantic content. Transforming a non-empathetic response into an empathetic one requires the model to maintain the core meaning (“what” is being said) while modifying the mode of expression (“how” it is said), a process that directly aligns with the definition of TST.

Historically, research on TST has been hampered by a shortage of parallel training data and a lack of standardized evaluation metrics. Early work attempted to overcome these challenges through the use of non-parallel data. For example, Ref. [27] introduced a generative neural model based on Variational Auto-Encoders (VAEs) to learn disentangled latent representations, enabling controlled text generation by manipulating specific attributes such as sentiment or tense. Similarly, Ref. [28] proposed a cross-alignment method for style transfer on non-parallel texts, relying on the separation of style and content and assuming a shared latent content distribution across corpora with different styles. This approach has been successfully applied to tasks such as sentiment modification and formality conversion.

A significant advancement was made by Ref. [29], which formalized the evaluation problem and introduced two new metrics, “transfer strength” and “content preservation,” both highly correlated with human judgments. This shifted the community’s focus toward more rigorous and objective evaluation. More recently, Ref. [30] conducted a “meta-evaluation” of existing metrics, assessing their robustness and correlation with human judgments across multiple languages, including Italian, Brazilian Portuguese, and French.

Within this context, empathetic rephrasing emerges as a specific and complex case of TST, where the style to be transferred involves not just lexical changes but a nuanced combination of linguistic and structural choices aimed at conveying empathy.

A critical issue highlighted by these studies is the trade-off between performance and computational costs, given that the most advanced and high-performing models require a considerable expenditure of resources. In this work, we focus on leveraging small and medium-sized models to limit costs while evaluating their effectiveness on the empathetic rephrasing task. Additionally, we employ automated evaluation to further reduce the need for costly human evaluators.

4. Results and Discussion

This section presents and discusses the results of the empathetic rephrasing task, designed to improve chatbot communication by enriching responses with empathetic content. The evaluation was conducted using the IDRE dataset as the primary resource, applying both fine-tuning and few-shot learning techniques across a range of large language models.

The analysis is structured in five parts. It begins with an assessment of model performance within the medical domain, the original context of the IDRE dataset. It then examines the generalization of empathetic capabilities to other domains, including legal, financial, social, and workplace settings. Subsequently, it focuses on models specifically adapted for the Italian language, evaluating their configurations and effectiveness. The fourth part introduces the validation of evaluation methods, where we performed agreement analysis using Fleiss’ kappa and complemented this with standard rephrasing quality metrics such as BLEU, ROUGE and BERTScore to assess lexical and semantic fidelity. The final part addresses efficiency, comparing model performance with computational costs and discussing the feasibility of automated evaluation in contrast to human annotation.

4.1. Medical Domain Performance

The first part of the analysis focuses on the medical domain, where the IDRE dataset was originally designed and annotated. This domain is particularly relevant for empathetic communication, as it involves sensitive and emotionally charged interactions.

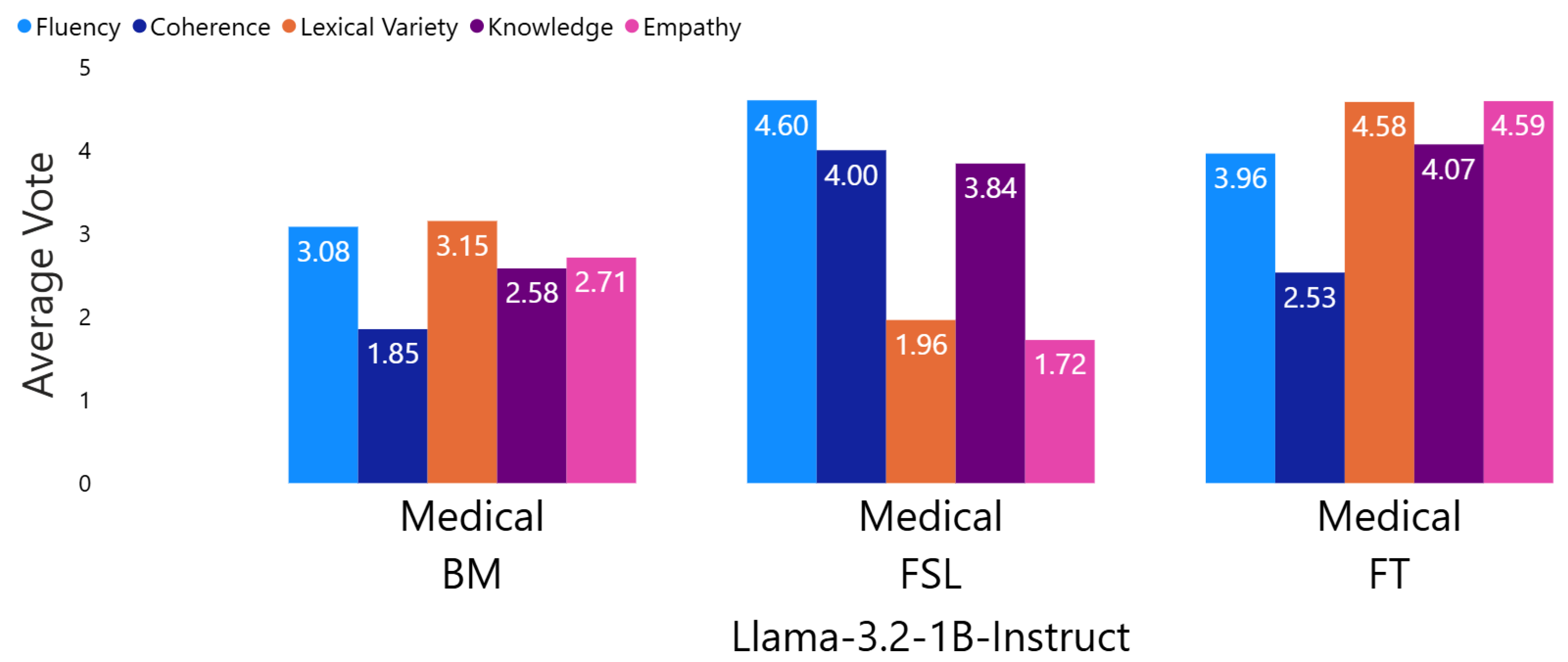

Overall, fine-tuned models consistently outperformed few-shot baselines, especially in empathy-related metrics. Notably, smaller models such as Llama-3.2-1B-Instruct and Gemma-3-1B demonstrated the most significant improvements. Llama-3.2-1B-Instruct showed an increase of 17.08% with FSL and a remarkable 32.3% with FT (see Table 5). These increments were calculated by measuring the average percentage improvement of each model across all evaluated dimensions for each configuration (FSL and FT) compared to the baseline performance of the BM (see tables in Appendix B). For each dimension, the score of the model using FSL or FT was compared to the corresponding score of the BM, and the relative percentage increase was computed. The final reported value represents the mean of these percentage increases across all dimensions, providing a comprehensive view of the model’s overall enhancement. The overall mean increments are reported together with their 95% confidence intervals (CIs): FSL: 0.013 (95% CI: −0.053, 0.080) and FT: 0.067 (95% CI: 0.001, 0.133). These results suggest that, for models with fewer parameters, FSL and FT techniques can have a proportionally greater impact, enabling them to achieve performance levels closer to those of larger, computationally more expensive models. This opens promising perspectives for implementing lighter models in resource-constrained environments.
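The increment computation described above can be sketched as follows. The function name and the scores are illustrative assumptions only; the numbers below are not taken from the tables of this study.

```python
def mean_relative_improvement(base: dict[str, float], variant: dict[str, float]) -> float:
    """Mean over dimensions of the percentage change of `variant` relative to `base`."""
    changes = [100.0 * (variant[dim] - base[dim]) / base[dim] for dim in base]
    return sum(changes) / len(changes)


# Made-up scores for the five evaluation dimensions (BM vs. a fine-tuned variant).
bm = {"empathy": 2.0, "knowledge": 4.0, "coherence": 4.0, "fluency": 5.0, "lexical_variety": 2.5}
ft = {"empathy": 3.0, "knowledge": 4.0, "coherence": 4.4, "fluency": 5.0, "lexical_variety": 3.0}

# Per-dimension changes are +50%, 0%, +10%, 0%, +20%, so the mean is 16%.
print(round(mean_relative_improvement(bm, ft), 2))
```

Averaging relative rather than absolute changes means that dimensions with a low baseline score weigh more heavily, which is one reason small models with weak baselines can show large increments.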

A peculiar case is represented by the Gemma-3-1B model. Initially, the application of FSL led to a significant penalty of −20.42%. This performance decrease was attributed to the model’s tendency, in FSL mode, to reproduce the original sentence instead of generating an effective rephrasing. This behavior negatively impacted the evaluation dimensions, particularly “lexical variety” (average score of 1.28) and “empathy” (average score of 1.59), as shown in Figure 5. However, the model’s behavior was substantially corrected with the application of fine-tuning. With FT, “lexical variety” drastically increased to 4.34 and “empathy” to 4.93, positioning Gemma-3-1B among the top-performing models for these specific evaluation dimensions after fine-tuning. This phenomenon suggests that for certain models, fine-tuning can act as a corrective mechanism for intrinsic limitations observed during few-shot learning, significantly enhancing their ability to generate diverse and empathetic text, which are crucial attributes in the medical domain for effective and patient-centered communication. A similar phenomenon, although starting from lower performance than the base model, is also observed with the Llama-3.2-1B-Instruct model, as illustrated in Figure 6.

Table 6 illustrates an example of a rephrased sentence generated by the LLaMAntino-3-ANITA-8B-Inst-DPO-ITA model in its base model (BM), fine-tuning (FT), and few-shot learning (FSL) configurations. It is observed that the original sentence directly conveys information about a severe respiratory infection.

In the BM version, the model reformulates the sentence with all the information from the original but without an increase in empathy.

With FSL, the model inserts phrases such as “I am concerned” (“sono preoccupato” in the original Italian) and “I want to reassure you” (“voglio assicurarmi” in the original Italian) with the aim of empathizing with the patient; however, the need for urgent treatment is not mentioned.

Conversely, in the FT version, the concerning health status is immediately highlighted, followed by information regarding the presence of a severe respiratory infection, and finally, details about the urgent intervention are provided.

This example clearly demonstrates how the FT-generated sentence is the most empathetic while retaining all the essential information from the original statement.

Table 7 presents the relative performance improvements of all models in FT and FSL configurations compared to the baseline model across all evaluation dimensions.

Among these, empathy emerges as the metric with the most substantial improvement, particularly in the FT configuration, where gains reach up to +40% relative to the BM.

Conversely, models with approximately 1 billion parameters (LLaMA-3.2-1B-Instruct and Gemma-3-1B) exhibited poor performance in FSL for empathy and lexical variety. Qualitative analysis indicates that these models frequently failed to perform meaningful rephrasing in FSL settings, often replicating the input sentence verbatim. This tendency is further supported by the high scores observed in the coherence dimension for these models (53.75% and 37.2%, respectively). Elevated coherence scores suggest that the output sentences maintained a high degree of internal consistency—an outcome that is expected when the input is minimally altered or directly copied. Therefore, the lack of rephrasing in FSL settings is not only evident qualitatively but also quantitatively reflected in coherence metrics. This behavior likely stems from the limited contextual information provided in few-shot prompts, which may be insufficient for smaller models to generalize effectively.

A notable trend is observed in the coherence metric under FSL, where most models experienced a performance drop. This phenomenon appears to be linked to the models’ tendency to prioritize empathetic expression over semantic fidelity when exposed to minimal examples. For instance, given the original input sentence “Your illness is terminal.” an FSL-generated reformulation was “I’m very concerned about your situation and understand how difficult this news must be. I’m here to support you every step of the way, to listen, to accompany you, and to help you manage your better self during this difficult time.”

While the reformulated sentence demonstrates increased empathy, it significantly diverges from the original content, thereby reducing coherence. However, this phenomenon does not occur in the FT versions of the models, where a higher coherence is observed instead.

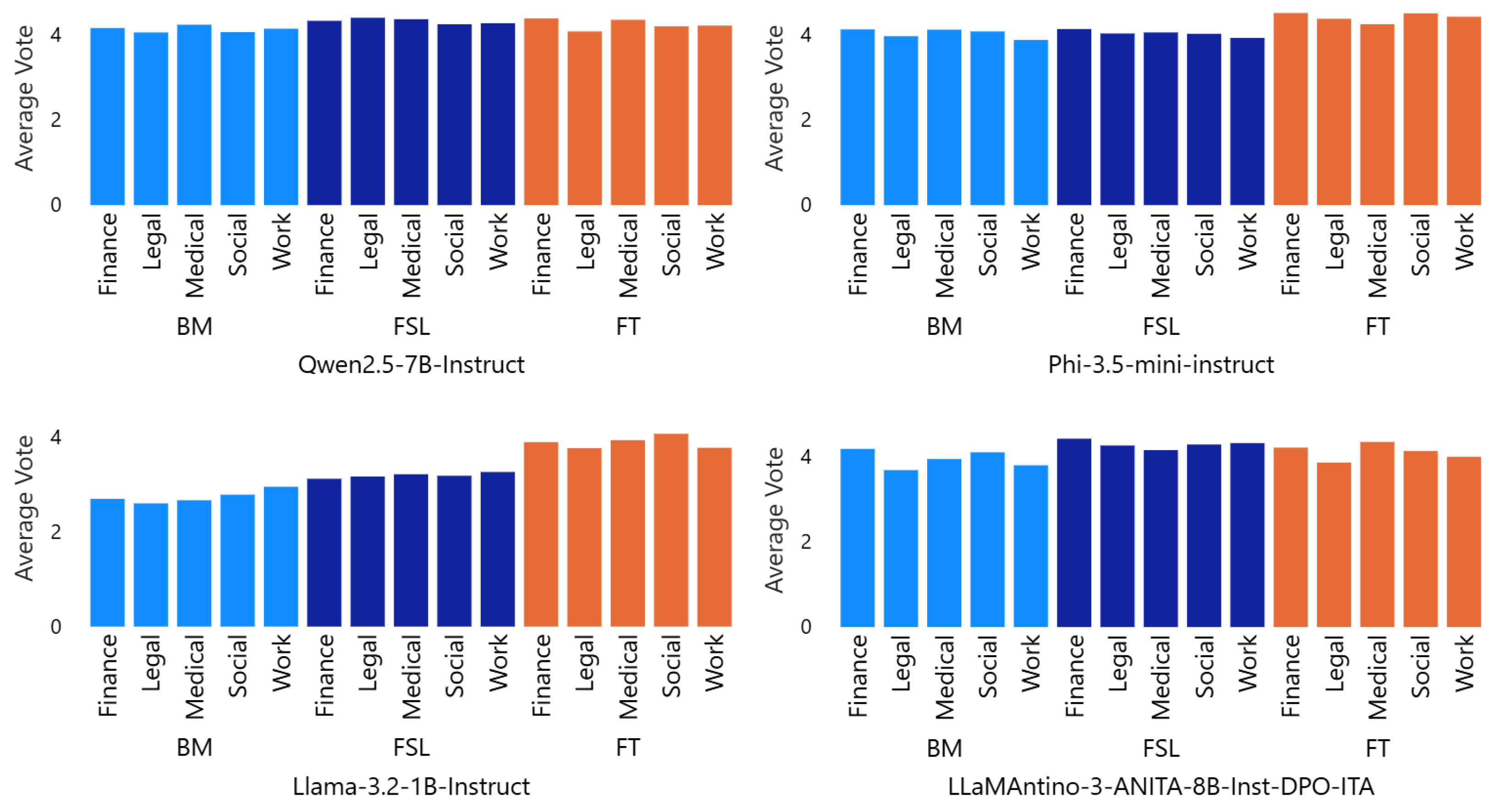

4.2. Cross-Domain Generalization

An analysis of model performance on sentences from non-medical domains reveals that most models exhibit a satisfactory ability to generalize, maintaining empathy levels comparable to those observed in the medical context. This trend is illustrated in Figure 7, which reports the average scores achieved by four models (Qwen2.5-7B-Instruct, Phi-3.5-mini-instruct, Llama-3.2-1B-Instruct, and LLaMAntino-3-ANITA-8B-Inst-DPO-ITA) across all topics. Notably, the models’ performance in finance, legal, social, and work domains appears broadly aligned with their results in the medical domain.

Table 8 provides a comparative analysis of empathy-related performance improvements, measured in both FSL and FT configurations relative to the BM.

However, some exceptions emerge. The Minerva-7B-instruct-v1.0 model, for instance, showed significantly lower performance in non-medical domains, with negative empathy improvements in almost all cases (finance: FSL −17.86%, FT −7.61%; legal: FSL −34.57%, FT −45.33%; work: FSL −26.15%, FT −7.89%; and social: FT −9.64%). This contrasts with its positive gains in the medical domain (FSL +10.11%, FT +12.73%), suggesting limited generalization capabilities.

Smaller models, such as gemma-3-1b and Llama-3.2-1B-Instruct, displayed a recurring pattern: suboptimal performance in the FSL configuration, followed by substantial improvements after fine-tuning. This underscores the critical role of FT in adapting lightweight models for empathetic tasks across diverse domains.

Conversely, models like Phi-3.5-mini-instruct and Llama-3.1-8B-Instruct achieved greater empathy improvements in non-medical domains compared to the medical one. For example, Phi-3.5-mini-instruct recorded a peak improvement of 18.33% (FT, social) versus 5.11% in the medical domain, while Llama-3.1-8B-Instruct reached 33.02% (FSL, work) compared to 8.17% (FSL, medical). These results suggest that such models may be inherently more versatile in general contexts than in specialized ones.

Finally, the granite-3.1-8b-instruct model maintained stable performance, with small but consistent empathy improvements across all domains. This behavior likely reflects its high intrinsic empathy baseline, leaving a narrower margin for improvement compared to models starting from lower initial performance.

4.3. Italian Model Evaluation

An additional focus of this study is the evaluation of sentence generation quality in models specifically tailored for the Italian language:

LLaMAntino-3-ANITA-8B-Inst-DPO-ITA demonstrates substantial improvements over the baseline, with performance gains of +5.06% in FSL and +9.22% in FT. These results highlight the effectiveness of the combined instruction tuning and DPO approach, particularly when supported by deep adaptation via fine-tuning. Notably, LLaMAntino excels in the empathy dimension, achieving a +23.74% improvement in FT—one of the highest scores across the entire evaluation. The model also demonstrates balanced performance across other dimensions: it enhances text fluency and lexical diversity, indicating strong stylistic control and vocabulary enrichment without compromising semantic coherence. However, structural coherence shows some instability in FSL, which is effectively mitigated through FT, suggesting that supervised optimization can address architectural limitations. In terms of knowledge integration, LLaMAntino performs well, with consistent improvements likely attributable to careful source selection during training.

Minerva-7B-instruct-v1.0 presents a more nuanced profile. It achieves greater improvement in FSL (+4.19%) than in FT (+2.41%), suggesting strong intrinsic comprehension and generalization capabilities, likely stemming from its pre-training phase. The model appears particularly well-suited for extracting relevant information from limited examples, reducing reliance on fine-tuning. In the empathy dimension, Minerva also performs well, with gains of +10.11% in FSL and +12.73% in FT, although less pronounced than LLaMAntino. In the knowledge dimension, Minerva surprisingly outperforms the baseline in FSL, but exhibits weaknesses in coherence and fluency under FT, indicating a higher sensitivity to adaptation strategies. Lexical diversity improves moderately, and empathetic expression remains one of its core strengths.

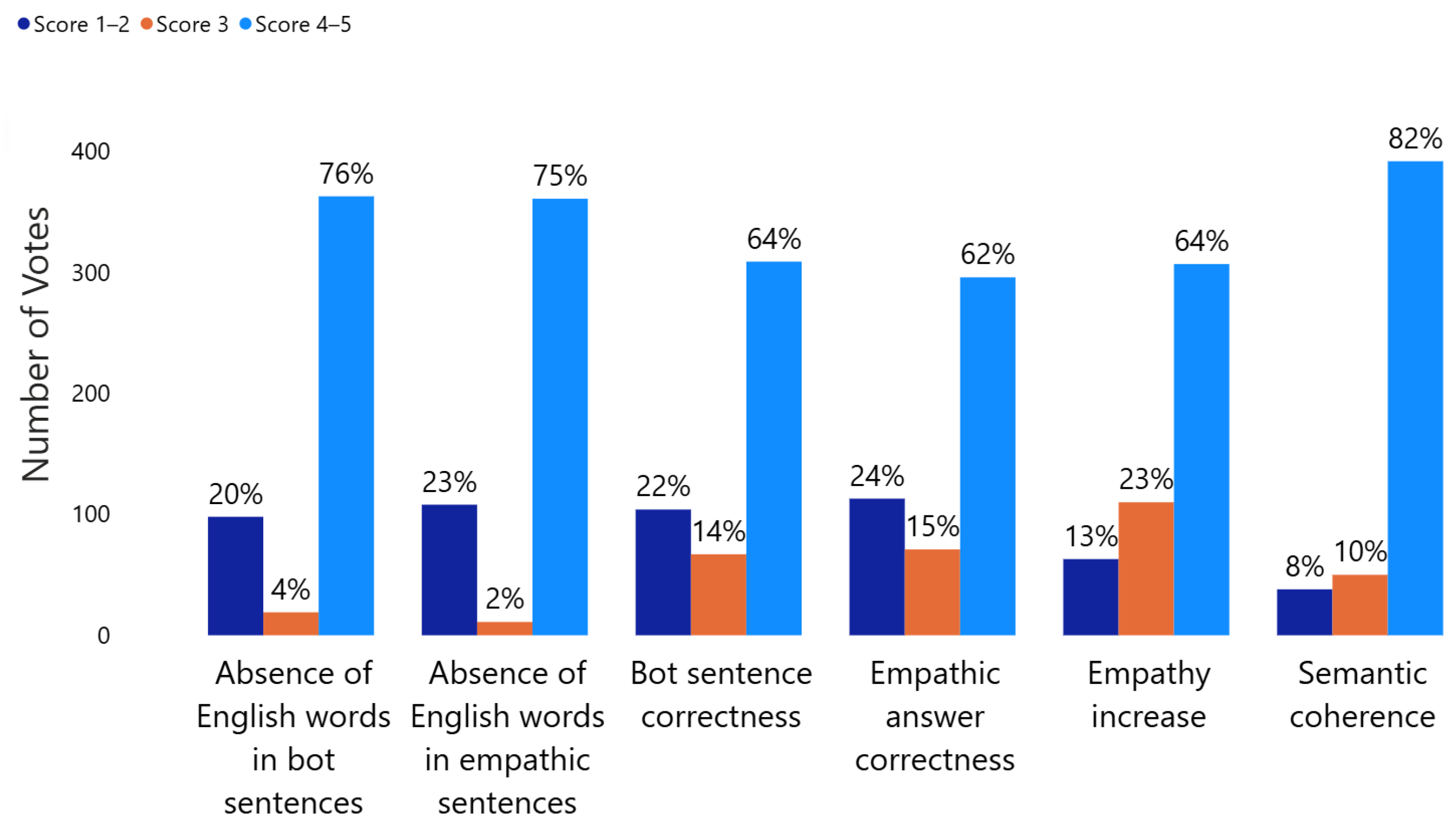

4.4. Inter-Rater Agreement and Metric Validation

To assess the impact of including G-Eval as an annotator, Fleiss’ kappa coefficient was calculated under two scenarios: three human annotators and a combination of two human annotators plus G-Eval. All human annotators were experts in the NLP domain and received dedicated training on the evaluation criteria prior to the assessment. The total number of raters was kept constant to avoid the statistical artifact introduced by increasing the number of annotators, which tends to lower the kappa value simply due to the higher probability of disagreement. This methodological choice enables us to attribute any observed variation in kappa specifically to the inclusion of G-Eval. The results obtained are reported in Table 9.
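For reference, Fleiss’ kappa for a fixed number of raters per item can be computed as in the sketch below. This is a plain-Python illustration of the standard formula, not the script used in this study, and the Likert ratings are invented solely to show the two-scenario comparison (three humans versus two humans plus an automated rater).

```python
from collections import Counter


def fleiss_kappa(ratings: list[list[int]]) -> float:
    """Fleiss' kappa, where ratings[i][j] is the category given to item i by rater j.

    Every item must have the same number of ratings. The statistic is undefined
    (division by zero) when all ratings fall into a single category.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if any(len(row) != n_raters for row in ratings):
        raise ValueError("each item needs the same number of ratings")

    category_totals: Counter = Counter()
    per_item_agreement = []
    for row in ratings:
        counts = Counter(row)
        category_totals.update(counts)
        # Fraction of rater pairs that agree on this item.
        agreeing_pairs = sum(c * (c - 1) for c in counts.values())
        per_item_agreement.append(agreeing_pairs / (n_raters * (n_raters - 1)))

    p_observed = sum(per_item_agreement) / n_items
    total = n_items * n_raters
    p_expected = sum((c / total) ** 2 for c in category_totals.values())
    return (p_observed - p_expected) / (1 - p_expected)


# Invented 1-5 Likert ratings for four outputs, three raters each.
three_humans = [[5, 5, 5], [4, 4, 4], [5, 5, 4], [3, 3, 3]]
two_humans_plus_auto = [[5, 5, 4], [4, 4, 4], [5, 5, 4], [3, 3, 4]]
print(round(fleiss_kappa(three_humans), 3))
print(round(fleiss_kappa(two_humans_plus_auto), 3))
```

Keeping three raters in both scenarios, as done in this study, means the two kappa values are computed over the same number of rater pairs per item and are therefore directly comparable.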

The findings show a reduction in agreement across all metrics, with the smallest decrease observed for fluency and the largest for empathy (see Table 9). Fluency and knowledge exhibit only marginal decreases, suggesting that G-Eval is relatively consistent with human raters when evaluating structural and informational aspects. Coherence and empathy show more pronounced reductions, confirming that semantic and affective dimensions are more challenging for an automated model to replicate. Lexical variety records a significant drop, likely due to divergent interpretations of sentences where the rephrasing consisted of adding a brief empathetic segment at the beginning or end of the original sentence. In these cases, some annotators reported uncertainty about the appropriate score, oscillating between low ratings (since most of the text was unchanged) and high ratings (because the addition introduced a stylistic change perceived as relevant). It is important to note that empathy is inherently subjective: the annotators reported difficulties in establishing uniform criteria, particularly when the empathetic tone was implicit or ambiguous, which contributed to increased variability in the ratings. Similarly, for knowledge, some raters highlighted the complexity of distinguishing between informational clarity and empathetic tone, resulting in variability in the ratings.

In addition to inter-rater agreement, we further assessed the outputs using automatic similarity metrics to provide a complementary perspective on style transfer and semantic preservation, as summarized in Table 10.

The combination of these results strongly supports the hypothesis that the empathetic rephrasing layer operates effectively, achieving its objectives while maintaining appropriate constraints on meaning.

The low scores for BLEU-4 (0.17) and ROUGE-L (0.38) validate the success of the style transfer. These values confirm that the model’s output is not a mere verbatim copy or a trivial synonym substitution of the source sentence. Instead, the model introduced significant lexical and structural changes, such as opening phrases for empathy and modal modifiers, which necessarily penalize n-gram-based metrics but are essential for the required emotional shift.
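To illustrate why adding empathetic phrasing lowers n-gram-based scores, the sketch below computes a simplified ROUGE-L F1 from the longest common subsequence (LCS) of two sentences. It assumes lowercase whitespace tokenization and equal weighting of precision and recall; the exact ROUGE implementation and settings used in this study may differ, and the example sentences are invented.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference: str, candidate: str) -> float:
    """Simplified ROUGE-L: F1 of LCS-based precision and recall."""
    ref, cand = reference.lower().split(), candidate.lower().split()
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


original = "your test results are ready"
rephrased = "i know waiting is hard your test results are ready"
print(round(rouge_l_f1(original, rephrased), 3))
```

In this toy case the rephrased sentence keeps every original token (recall of 1.0) but only half of its tokens come from the original (precision of 0.5), so the score drops to about 0.667 even though no information is lost.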

The most critical finding for substantiating the model’s viability is the BERTScore F1 value of 0.70. This result demonstrates a substantially preserved level of semantic coherence between the original neutral message and the empathetic output. Although this score is not maximal (which is typically in the 0.85–0.95 range for pure paraphrasing tasks where the style is unchanged), the 0.70 figure must be interpreted within the inherent style transfer vs. meaning preservation trade-off.

The observed deviation from the ideal BERTScore is a direct consequence of the empathetic intervention. Introducing subjective, emotional modifiers (e.g., “I understand this is difficult,” or “I am sorry to hear that”) inevitably alters the global semantic vector of the sentence, even when the factual kernel remains untouched. This slight reduction in semantic identity is thus considered the necessary cost for achieving emotional efficacy. The 0.70 value is strong evidence that the model successfully avoids semantic drift or factual inconsistencies, confirming that the rephrasing layer is a controlled mechanism that enhances emotional intelligence without fundamentally compromising the informational integrity of the base task.

4.5. Time and Cost Analysis

This section provides a quantitative assessment of the time and costs associated with the fine-tuning process and subsequent phrase generation. As outlined in Section 3.2.1, the evaluated LLMs were grouped into two categories based on their parameter count. All measurements were conducted using a Standard_NC16as_T4_v3 virtual machine within the Microsoft Azure cloud environment, as described in Section 3.2.3. To evaluate efficiency and cost-effectiveness, a benchmark was performed by generating 100k empathetic phrases per model.

The fine-tuning analysis revealed that small-scale models required an average of 5 min per training session, with an estimated cost of EUR 0.08. In contrast, medium-scale models exhibited longer training durations, ranging from 8 to 15 min, with corresponding estimated costs between EUR 0.15 and EUR 0.30.

For the generation task, small-scale models produced 100k phrases in approximately 17 h, incurring a cost of EUR 18. Medium-scale models completed the same task in around 50 h, with an estimated cost of EUR 54. For comparative purposes, the cost of generating 100k equivalent empathetic phrases in Italian via human annotators on the Amazon Mechanical Turk (MTurk) [53] platform was estimated. Assuming a reward of USD 0.1 per phrase and a 20% platform fee, the total cost for human-based generation amounted to USD 12k (approximately EUR 11,280). To provide a benchmark with state-of-the-art large language models, we estimated the cost of generating 100k empathetic phrases using GPT-4o via API. Assuming an average of 50 tokens per phrase, the total input and output tokens required would be 5 million each. According to OpenAI’s pricing (USD 5 per 1 M input tokens and USD 15 per 1 M output tokens), the total cost for generating 100k phrases is USD 100 (approximately EUR 94). The costs are summarized in Table 11.
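The arithmetic behind these estimates can be reproduced with the short sketch below. All inputs are the figures stated above, except the exchange rate of 0.94 EUR per USD, which is an assumption inferred from the reported conversions (USD 12k to approximately EUR 11,280).

```python
N_PHRASES = 100_000
USD_TO_EUR = 0.94  # assumption: rate implied by the reported USD/EUR conversions

# Human annotation on MTurk: USD 0.10 reward per phrase plus a 20% platform fee.
mturk_usd = N_PHRASES * 0.10 * 1.20

# GPT-4o via API: 50 input and 50 output tokens per phrase,
# priced at USD 5 (input) and USD 15 (output) per million tokens.
tokens_each_way = N_PHRASES * 50
gpt4o_usd = (tokens_each_way / 1e6) * 5 + (tokens_each_way / 1e6) * 15

costs_eur = {
    "small local model": 18.0,   # reported generation cost
    "medium local model": 54.0,  # reported generation cost
    "GPT-4o API": gpt4o_usd * USD_TO_EUR,
    "MTurk annotators": mturk_usd * USD_TO_EUR,
}
for name, eur in costs_eur.items():
    print(f"{name:20s} EUR {eur:>10,.2f}")
```

Under these assumptions, locally hosted small models are roughly five times cheaper than the GPT-4o API estimate and several hundred times cheaper than human annotation for the same 100k phrases.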