Abstract

The development of artificial intelligence has inevitably led to the proliferation of deepfake images, videos, human voices, and other synthetic media. Deepfake detection is essential, especially when deepfakes are used for unethical and illegal purposes. This study presents a novel approach to image deepfake detection by introducing the Custom-Made Facial Recognition Algorithm (CMFRA), which employs four distinct features to differentiate between authentic and deepfake images. The proposed method combines facial landmark detection with advanced statistical analysis, integrating the mean Mahalanobis distance and three head pose coordinates (yaw, pitch, and roll). The landmarks are extracted using the Google Vision API. This multi-feature approach assesses facial structure and orientation, capturing subtle inconsistencies indicative of deepfake manipulation. A key innovation of this work is the introduction of the mean Mahalanobis distance as a core feature for quantifying spatial relationships between facial landmarks. The research also emphasizes anomaly analysis, focusing solely on authentic facial data to establish a baseline for natural facial characteristics. By analyzing deviations from this baseline, the anomaly detection model recognizes when a face has been modified, without requiring extensive training on deepfake samples. The CMFRA achieved a detection accuracy of 90%. The proposed algorithm distinguishes between authentic and deepfake images under varied conditions.

Keywords:

artificial intelligence; head pose estimation; randomized PCA; image authenticity; Google Vision API; yaw; pitch; roll; mouth; nose; eye

1. Introduction

Advances in artificial intelligence (AI) have expanded the techniques for generating digital media, with deepfake technology at the forefront of this transformation. Deepfakes use complex algorithms to create realistic synthetic images and videos, blurring the line between authentic and fabricated content. This study presents the progression of deepfake technologies, evaluates current detection strategies, and proposes a new framework as a potential direction for exploration in image deepfake detection.

Evaluations of deepfake detection methodologies show varying levels of success. Guarnera et al. [1] introduce a technique that analyzes Discrete Cosine Transform (DCT) coefficients; the analysis captures a generalizable distinction between real and synthetic images. Similarly, Noreen et al. [2] explore the application of histograms of oriented gradients in conjunction with deep learning (DL) models, demonstrating their effectiveness in identifying deepfake images. Additionally, Gupta et al. [3] point out that human evaluators frequently struggle to differentiate between authentic and deepfake visuals. This finding underscores the need for automated detection systems that offer superior accuracy.

Contemporary research reports quantitative performance metrics for detection algorithms. For instance, the ResNet50 architecture has been integrated into various detection frameworks using datasets such as Celeb-DF and FaceForensics++ [4]. Furthermore, Kang et al. [5] demonstrate that methodologies leveraging residual noise and manipulation traces improve the identification of deepfake images.

Deepfake technology employs advanced generative models, particularly Generative Adversarial Networks (GANs), to create hyper-realistic synthetic images and videos. The foundational architecture of GANs allows for generating images that convincingly mimic real human faces. Among the various GAN architectures employed in deepfake generation, StyleGAN and its subsequent iterations stand out due to their exceptional ability to generate high-resolution images that are challenging to differentiate from authentic photographs [6]. These models are trained on extensive datasets of authentic images, which allows them to generate new images that preserve the statistical characteristics of the original data. This capability, in turn, makes it possible to swap faces between two people.

The advancement of deepfake technology has simultaneously driven the development of corresponding detection methods. As deepfake generation techniques become more advanced, so do the approaches for their identification. Recent research has concentrated on refining detection algorithms through adversarial training and on identifying unique artifacts produced during deepfake generation. The main objective of deepfake generation technologies, by contrast, is to deceive the human eye so that it cannot detect a deepfake at the image level or at the frame level in video content [7,8,9].

1.1. Paper Contributions

The contributions of this study are as follows:

- Design of Customized Mahalanobis Facial Recognition Algorithm (CMFRA). The authors developed the CMFRA, a novel algorithm designed to improve deepfake detection by combining statistical analysis with facial recognition. The CMFRA uses the Google Vision API for landmark extraction and was implemented in C# for data processing and anomaly detection.

- Integration of mean Mahalanobis distance for landmark analysis. This study introduces the mean Mahalanobis distance as a key tool for assessing spatial relationships between facial landmarks. Unlike traditional measures such as the Euclidean distance, the Mahalanobis distance incorporates correlations between features, improving sensitivity to minor distortions in facial geometry. This statistical measure was calculated by combining landmark data from the Google Vision API with a custom-developed C# statistical script.

- Use of anomaly detection model for deepfake identification. To differentiate deepfake images, we deviated from traditional binary classification by adopting an anomaly detection approach. By concentrating on authentic facial characteristics to define a baseline of normality, our model identifies deviations from this baseline as potential deepfakes, mitigating the risk of overfitting that can arise from extensive training on manipulated data. The model was trained on a dataset of 200 authentic facial images of the same person and validated on 80 additional images.

- Incorporation of head pose estimation. Head pose estimation was another key feature used in the CMFRA. We propose using yaw, pitch, and roll, derived from the facial landmarks, as features that provide additional geometric data to increase detection accuracy. The authors used the OpenCV library (through the SolvePnP method) integrated into a C# framework to estimate head orientation, enriching the overall feature set used for anomaly detection.

- Training the anomaly model. The authors innovatively combined multiple features (geometric facial landmarks, Mahalanobis distance metrics, and head pose angles) to form a feature set. This hybrid approach allowed the model to capture a variety of facial inconsistencies introduced during deepfake manipulation. The Randomized Principal Component Analysis (PCA) method was implemented to handle dimensionality reduction, thus streamlining the anomaly detection process.

- Performance evaluation of the CMFRA pipeline. The authors developed an entire detection pipeline in C# using the Visual Studio platform. This pipeline included modules for feature extraction, data processing, and deepfake detection, demonstrating effective integration of all components. The model showed a detection accuracy of 90% on test images, which included a mix of authentic and synthetic images generated by advanced deepfake tools. The authors provided a detailed performance analysis, including a confusion matrix that highlighted a recall rate of 100% for detecting deepfakes.

1.2. Paper Structure

This study is organized into six sections. The review of the literature is described in Section 2. Section 3 provides a comprehensive overview of the CMFRA framework, including feature extraction methodologies, Mahalanobis distance utilization, and anomaly detection implementation using Google Vision API and C#. The performance of the developed model is meticulously evaluated in Section 4, with a particular emphasis on detection accuracy and validation metrics. A detailed discussion of the findings is presented in Section 5, encompassing an assessment of the model’s limitations, such as dataset size constraints, and proposing avenues for future research, including exploring larger datasets and developing hybrid model architectures. Finally, Section 6 summarizes this research’s key contributions, underscores the anomaly detection approach’s significance, and outlines promising future work directions.

2. Literature Review

2.1. Identification of Deepfakes in Images

Significant research in the specialized literature has been devoted to identifying content with traces of deepfake manipulation. Most of these studies use convolutional neural networks (CNNs) for deepfake detection. Ashani et al. [10] indicate that CNN architectures such as VGG16 and ResNet50 can distinguish deepfake images from authentic ones by scrutinizing the distinctive features and artifacts introduced during their creation. The problem with these detectors lies in their limited ability to generalize. In other words, a detector trained on the Deepfake Detection Challenge (DFDC) dataset cannot reliably identify deepfakes from the FaceForensics++ dataset. For this reason, improving generalization by training on as many datasets as possible is studied in [11].

Another progressive technique integrates facial landmark detection with architectures like the Video Vision Transformer (ViViT). This methodology increases the model’s capacity to identify facial features frequently altered in deepfake images [12]. Wang et al. [13] demonstrate that methods that identify image noise can also differentiate authentic images from deepfakes. These methods analyze intrinsic noise characteristics in images, making it possible to examine details that the human eye cannot perceive. The techniques presented in the paper by Atamna et al. [14] identify deepfakes even when originating from different generative models by examining temporal variations in video sequences or concentrating on residual noise patterns [15,16].

CNNs extract and analyze facial features, mainly identifying inconsistencies that indicate manipulation. For instance, Shahzad et al. highlighted the application of CNNs alongside other machine learning (ML) algorithms, such as logistic regression (LR) and multilayer perceptron (MLP), for detecting fake faces, demonstrating the versatility of these approaches in handling different datasets, including the deepfake TIMIT benchmark dataset [17]. Tran et al. [18] emphasize the importance of attention mechanisms in CNNs to focus on target-specific regions within images, thereby increasing detection accuracy. Advancements in this field combine segmentation-based noise modeling [19] and face boundary detection through Face X-ray representations [20].

Recent research has explored hybrid models that combine different DL architectures. For instance, the CNN-RNN (recurrent neural network) model is optimized with particle swarm optimization (PSO) to improve detection rates compared to traditional methods [21]. This hybrid approach reflects a growing trend in the field, where researchers leverage the strengths of multiple algorithms to tackle the complexities of deepfake detection. Although there are numerous methods for deepfake detection in the specialized literature, the technology faces problems that have not yet been solved, such as the low quality of images that hinders correct identification [22].

2.2. Human Subject Position in Image Processing Context

Head pose estimation (HPE) is a research field in computer vision. Various methodologies have been developed to accurately estimate head orientation in three-dimensional space, typically represented by yaw, pitch, and roll angles.

Many HPE methodologies employ three-dimensional face models and depth information. For example, Kong and Mbouna [23] demonstrate combining three-dimensional (3D) face morphing with depth parameters. Their methodology estimates the position of the head across different orientations by extracting features to align a 3D model with a two-dimensional (2D) image. A key strength of the method is that it produces estimates even when the head is inclined or rotated. Heredia-Lidón et al. [24] explore a multi-view strategy employing CNNs for yaw prediction, showcasing the capability of DL to manage intricate head orientations. Barros et al. [25] introduce a hybrid framework that combines facial landmark detection with salient feature tracking, using a particle filter to refine pose estimates.

Feature detection algorithms constitute another component of HPE. Maes et al. [26] validate the meshSIFT algorithm for identifying features on 3D facial surfaces. Complementarily, Johnson et al. [27] employ the Scale-Invariant Feature Transform (SIFT) to establish correspondences between facial features and model points, thereby increasing the dependability of pose estimation in uncalibrated monocular video scenarios. Recent technical applications demonstrate the use of advanced simulation, data acquisition, and automated assessment systems in diverse engineering contexts [28,29,30].

Incorporating depth data has proven beneficial for achieving real-time performance in HPE. Li et al. [31] describe an algorithm that integrates depth information with Kalman filtering, resulting in more rapid and stable head pose estimations.

Google Vision API provides AI services that perform image processing. Ali et al. [32] present the integration of Google Vision API into technologies such as Google Glass. This integration enables real-time scene analysis for users with visual impairments [33]. The method improves the quality of life for individuals with visual disabilities by eliminating the need for an intermediary device such as a smartphone. Azure Face API is a direct competitor, but it is currently unusable due to technical limitations, making Google Vision the better choice for code-level integrations. The API’s reliability in image labeling and classification has been affirmed through qualitative analyses, which reveal its superiority over human annotators in image labeling tasks.

Google Vision API is not without limitations. Apte et al. [34] identify challenges related to inconsistent labeling, especially when processing rotated images, which can result in inaccuracies. Adversarial attacks have been shown to exploit vulnerabilities within the API, raising concerns about its reliability in embedded systems, IoT, and industrial real-time applications.

The versatility of the Google Vision API extends to social media analysis and cultural studies. For example, Smith et al. [35] used it to analyze the semantic content of memes and study the interaction between visual elements and textual components. In digital humanities, the API supports research on gender biases and misinformation in visual content [36,37].

2.3. Mahalanobis Distance

Tursman et al. [38] use Mahalanobis distance to identify deepfakes in video content. The distance is calculated for points associated with the lips and analyzed in relation to different angles of the face. Mahalanobis distance is used in this research to evaluate the differences between authentic and fake videos. The paper uses clustering models and reports an accuracy of 75%.

Numerical integration of complex mathematical models has been extensively studied for the simulation of various tasks [39]. These mathematical models, regardless of their complexity, have been examined from the perspective of their applicability in various contexts [40], most often with a focus on options for simplifying their implementation [41].

Yu et al. [42] highlight the low performance of deepfake detection algorithms on video content, reporting an accuracy of 65.18% for these algorithms. The Mahalanobis distance is also used to analyze facial landmarks relative to a background point to detect whether a frame is a deepfake.

In the paper by Yang [43], the Mahalanobis distance is used to evaluate if frames in a video contain deepfake information. For this, the Mahalanobis distance is applied to various facial points to measure how much a suspect image deviates from the values of a natural model. Testing was conducted using K-fold cross-validation (K = 5 to 10), and the main performance metric was the error rate. The best result achieved was an error rate of 0.15.

In the paper by Anwar et al. [44], the Mahalanobis distance is employed to calculate the width of the mouth, the height between the extreme points of the mouth, and the distance from the middle of the forehead to the middle of the eyebrows. These values are then used to determine the emotional state in the video content. The paper compares the emotions detected in original and deepfake videos, using the mean squared error (MSE) to quantify the comparison. Tests showed notable differences between the emotions in the two types of videos. Additionally, a method based on the entropy of histograms was used to assess image quality, revealing a reduction in entropy for deepfake videos.

Mahalanobis distance is also used to detect abnormal behaviors in social media posts. In this research, the Mahalanobis distance is used to evaluate deviations from the usual language patterns. Thus, Silva et al. [45] model the vector distributions of social media posts using linguistic features and contextual relationships between words. They compute the Mahalanobis distance for each post, allowing for the automatic identification of the most likely examples of suspicious content.

Mahalanobis distance is introduced as an instrument to identify the acoustic particularities of multiple languages [46]. The research evaluates how much specific phonemes deviate from typical distributions, better capturing the correlations among acoustic coefficients compared to Euclidean distance. The paper demonstrates that using the Mahalanobis distance leads to superior results in phoneme recognition.

2.4. Accuracy of Deepfake Detection Models and Anomaly Detection AI Models

Models for identifying deepfake images rely on various techniques to detect these manipulations, resulting in a wide spectrum of accuracy levels [47].

CNNs have proven effective in differentiating between authentic and manipulated images. The study by Vardhan et al. [48] reported an accuracy of 93.62% using a DL framework designed explicitly for deepfake detection. Gedela et al. [49] employed a modified DL architecture that achieved high accuracy in detecting deepfake videos. Zhang et al. [50] developed SRTNet, a deepfake recognition tool that analyzes images collected from videos by combining the spatial and residual domains. Tests conducted on the FaceForensics++ (FF++) dataset showed that SRTNet outperforms existing algorithms in detecting deepfakes. The VGG-19 model, proposed by Nassif et al. [51], attained an accuracy exceeding 80% in identifying deepfake images within FF++, outperforming earlier approaches that achieved lower accuracy using binary classification techniques.

Anomaly detectors form a particular category of ML models. The primary objective of anomaly detection is to recognize instances that do not conform to the anticipated norm, which may indicate fraud, faults, or other significant events [52,53]. Anomaly detection techniques are categorized into three main classes based on the availability of labeled data: supervised, semi-supervised, and unsupervised. Supervised anomaly detection relies on labeled training data to learn the characteristics of normal and abnormal instances. In contrast, unsupervised methods operate without labeled data, identifying anomalies based solely on the data’s inherent structure. Semi-supervised approaches use a small amount of labeled data alongside a larger set of unlabeled data to enhance detection performance [54,55,56].

Recent advancements have introduced hybrid models that combine multiple techniques to improve detection accuracy. For example, ensemble methods integrating various anomaly detection algorithms have shown promising performance gains over single-method approaches [57]. Transfer learning frameworks allow models trained in one domain to be adapted to another, addressing the challenge of limited availability of anomalous data [58].

In cybersecurity, anomaly detection is employed to identify unauthorized access or network intrusions [59]. In healthcare, it aids in detecting unusual patterns in patient data that may indicate medical errors or fraud [60], and in transportation it supports the detection of road defects such as potholes and cracks [61]. In industrial settings, anomaly detection is used to monitor equipment performance and predict failures, thereby facilitating proactive maintenance [62,63].

Randomized PCA is a technique for handling large-scale datasets, particularly in genomics, image processing, and ML [64,65]. It operates by constructing a smaller matrix that captures, with high probability, the dominant structure of the original data, specifically its top eigenvalues and eigenvectors. Randomized PCA is applied to genomic problems but is not limited to them; in image processing, it is used to increase the accuracy of classification tasks. Pan et al. [66] applied a kernel-based approach to PCA for detecting anomalies in chemical sensors, demonstrating that randomized techniques outperform traditional methods in speed and accuracy. This is further supported by the findings of Zhang et al. [67], who recommended employing PCA alongside the Mahalanobis distance to obtain better estimates.
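To make the idea of "constructing a smaller matrix" concrete, the following Python sketch implements the randomized range-finder form of PCA with NumPy. It is an illustration under stated assumptions, not the solver used by ML.NET or by the cited works: the function name `randomized_pca`, the power-iteration count `n_iter`, and the default `oversampling` value are our own choices. The data are centered, projected onto a random subspace of dimension rank + oversampling, orthonormalized, and an exact SVD is then computed only on the small projected matrix.

```python
import numpy as np


def randomized_pca(X, rank, oversampling=10, n_iter=2, seed=0):
    """Approximate the top-`rank` principal components of X (samples x features).

    Hypothetical helper: randomized range finder followed by a small exact SVD.
    Returns (mean, components, explained_variance).
    """
    rng = np.random.default_rng(seed)
    mean = X.mean(axis=0)
    Xc = X - mean                                   # PCA operates on centered data
    n_samples, n_features = Xc.shape
    # Sketch size: requested rank plus oversampling, capped by the matrix size.
    l = min(rank + oversampling, n_samples, n_features)
    omega = rng.standard_normal((n_features, l))    # random test matrix
    Y = Xc @ omega                                  # random samples of the range of Xc
    for _ in range(n_iter):                         # power iterations sharpen the spectrum
        Y = Xc @ (Xc.T @ Y)
    Q, _ = np.linalg.qr(Y)                          # orthonormal basis, n_samples x l
    B = Q.T @ Xc                                    # small l x n_features matrix
    _, s, Vt = np.linalg.svd(B, full_matrices=False)
    components = Vt[:rank]                          # principal directions, one per row
    explained_variance = s[:rank] ** 2 / (n_samples - 1)
    return mean, components, explained_variance
```

Oversampling and a couple of power iterations trade a little extra computation for a more accurate subspace. When rank + oversampling reaches the number of features, as with four-dimensional feature vectors, the sketch spans the whole feature space and the result coincides with exact PCA.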

3. Materials and Methods

This study explores the potential of the CMFRA to distinguish between real faces and deepfakes generated through image manipulation software. We use the Google Vision API for facial feature detection and landmark extraction. From the landmarks that the Google Vision API detects on a face, the CMFRA extracts the yaw, pitch, and roll values, which represent the head’s three-dimensional orientation relative to the camera. The mean Mahalanobis distance is calculated from the spatial relationships between these landmarks. Together, these four values (yaw, pitch, roll, and mean Mahalanobis distance, each treated as a feature) form the description of an authentic image. This approach enables the CMFRA to capture a face’s geometric and spatial properties, making it possible to differentiate between real and potentially deepfake images. By aggregating these values over multiple real images, we construct the dataset required to train the model.

Instead of classifying a face into predefined categories (e.g., “real” or “fake”), we propose analyzing anomalies based on the four extracted features, so the model is not trained on two classes. This approach offers several advantages. It eliminates the need to build a dataset for two separate classes, reducing the risk of bias and overfitting associated with imbalanced datasets. By focusing solely on patterns observed in authentic images, the model learns the intrinsic characteristics of authentic faces, which simplifies the training process and helps the model generalize to unseen anomalies.

The CMFRA comprises three major components that work synergistically to increase the accuracy of distinguishing real faces from deepfake images. These components use the Google Vision API to detect and map key facial landmarks, such as the eyes, nose, mouth, and jawline, providing data that allow the algorithm to analyze the structure of the face, as follows:

- The proposed Mean Mahalanobis Distances Module (MMDM) uses the facial landmarks to calculate a global measure of multiple facial characteristics that is unique to a human subject and correlated with the head pose.

- The three head pose coordinates, yaw, pitch, and roll, describe the face’s orientation in 3D space and are computed using the Head Pose Module (HPM).

- ML pipeline built with ML.NET for anomaly detection using a Randomized PCA trainer: this unsupervised anomaly detection algorithm identifies patterns in the data by projecting them into a lower-dimensional space, where the model learns the distribution of regular patterns. This compact pipeline combines feature engineering and anomaly detection, leveraging ML.NET’s modular design.

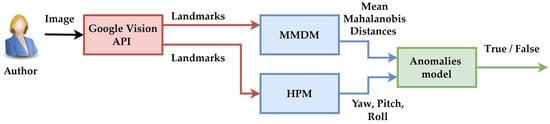

The diagram presented in Figure 1 outlines the process employing a pipeline for deepfake detection using the CMFRA. It presents the structured methodology for evaluating and detecting deepfake images, leveraging the three modules based on facial features. The overall pipeline analysis highlights how multiple technologies and techniques are integrated to address the complex problem of deepfake detection.

Figure 1.

Conceptual CMFRA diagram.

Figure 1 presents the conceptual architecture of the CMFRA method. The process first extracts facial features (landmarks) using the Google Vision API. These data are then passed to two analytical modules: the MMDM, which evaluates the geometric consistency of the face through statistical distances, and the HPM, which estimates the head orientation in three-dimensional space (yaw, pitch, roll). The four extracted features are combined into a feature vector, which serves as the input to an anomaly detection model built with ML.NET. This model returns an anomaly score for each analyzed image; if the score exceeds a predefined threshold, the image is classified as a potential deepfake. Figure 1 highlights the modularity of the pipeline and illustrates the data flow between its components. The input of the pipeline is a raw image, and the output is a binary decision based on the anomaly score: True (deepfake) or False (authentic). This modular and synergistic design supports high accuracy in detecting deepfake images.
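The data flow of Figure 1 can be sketched in Python as a small orchestration object. This is a hypothetical illustration, not the authors’ C#/ML.NET code: `DeepfakePipeline` and its fields are names introduced here, and each stage (landmark extraction through the Google Vision API, the MMDM, the HPM, and the trained anomaly model) is passed in as a plain function so that the sketch does not depend on any external service. It shows the two points the text emphasizes: the four features are assembled into one vector, and the binary output is True (deepfake) whenever the anomaly score exceeds the threshold.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical landmark representation: landmark name -> (x, y) pixel coordinates.
Landmarks = Dict[str, Tuple[float, float]]


@dataclass
class DeepfakePipeline:
    """Hypothetical wiring of the CMFRA stages; every stage is injected."""
    extract_landmarks: Callable[[bytes], Landmarks]               # e.g., a Google Vision API wrapper
    mean_mahalanobis: Callable[[Landmarks], float]                # MMDM
    head_pose: Callable[[Landmarks], Tuple[float, float, float]]  # HPM: (yaw, pitch, roll)
    anomaly_score: Callable[[List[float]], float]                 # trained anomaly model
    threshold: float                                              # decision threshold

    def features(self, image: bytes) -> List[float]:
        """Build the 4-D feature vector [mean Mahalanobis distance, yaw, pitch, roll]."""
        landmarks = self.extract_landmarks(image)
        yaw, pitch, roll = self.head_pose(landmarks)
        return [self.mean_mahalanobis(landmarks), yaw, pitch, roll]

    def is_deepfake(self, image: bytes) -> bool:
        """True (deepfake) if the anomaly score exceeds the threshold, else False (authentic)."""
        return self.anomaly_score(self.features(image)) > self.threshold
```

Injecting the stages as functions keeps the decision rule separate from the feature extractors, which mirrors the modularity the figure highlights; in a test, each stage can be replaced by a stub that returns fixed values.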

3.1. CMFRA Methodology

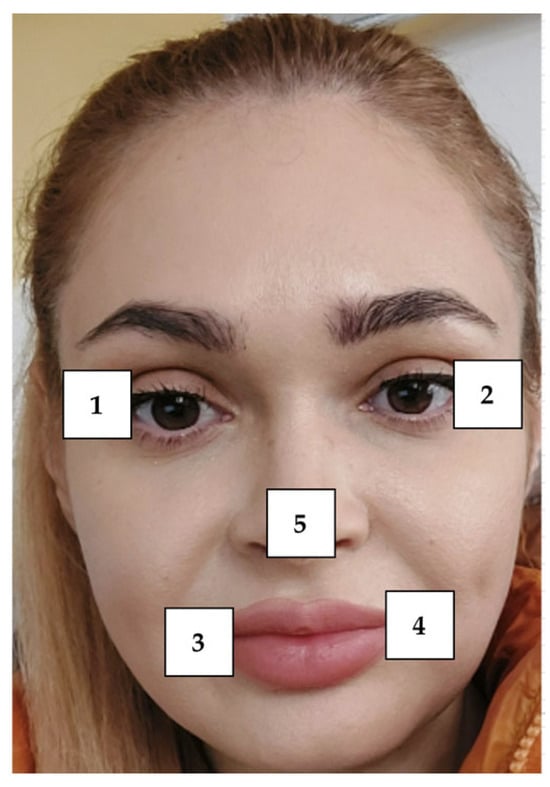

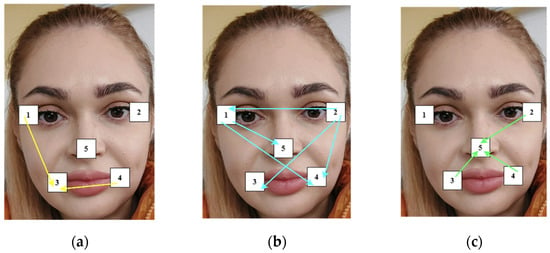

The Google Vision API was used to identify key facial features and landmarks for each image in the dataset. The CMFRA uses the following Google Vision API facial landmarks in Figure 2 for the MMDM: LEFT_EYE (point 1 in Figure 2), RIGHT_EYE (point 2), MOUTH_LEFT (point 3), MOUTH_RIGHT (point 4), and NOSE_TIP (point 5).

Figure 2.

CMFRA-analyzed landmarks.

The MMDM calculates the Mahalanobis distance using the facial landmarks returned by the Google Vision API. In this context, each of the five specified facial landmarks is represented as a pair of coordinates $(x_i, y_i)$, so there are n = 5 landmarks for each image. When comparing sets of landmarks, we aim to compute the distance between the landmark configurations while accounting for their correlations, scale, and possible distortions.

Each set of facial landmarks is represented using Equation (1) [38]:

$$X = \left[(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)\right] \tag{1}$$

where X is a vector of size n representing the coordinates of the facial landmarks.

The Mahalanobis formalism was adapted for the MMDM to compute the Mahalanobis distance between facial landmarks. The following procedure is applied to the reference image and, next, to the test image [38]:

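1. Compute the mean point $(\bar{x}, \bar{y})$ of the landmark points $(x_i, y_i)$, $i = 1, \ldots, n$, using Equation (2):

   $$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad \bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i \tag{2}$$

   where n is the number of landmarks, $x_i$ is the x coordinate of landmark i, and $y_i$ is the y coordinate of landmark i.

2. Calculate the deviations $\mathbf{d}_i$ using Equation (3):

   $$\mathbf{d}_i = \begin{bmatrix} x_i - \bar{x} \\ y_i - \bar{y} \end{bmatrix}, \qquad i = 1, \ldots, n \tag{3}$$

3. Calculate the covariance matrix $S$, adapted for the MMDM, from the deviations using Equation (4):

   $$S = \frac{1}{n-1}\sum_{i=1}^{n} \mathbf{d}_i \mathbf{d}_i^{T} = \begin{bmatrix} S_{11} & S_{12} \\ S_{21} & S_{22} \end{bmatrix} \tag{4}$$

4. Calculate the inverse covariance matrix $S^{-1}$ using Equation (5):

   $$S^{-1} = \frac{1}{S_{11}S_{22} - S_{12}S_{21}}\begin{bmatrix} S_{22} & -S_{12} \\ -S_{21} & S_{11} \end{bmatrix} \tag{5}$$

5. Compute the difference vector $\Delta_{jk}$ for two specific landmarks j and k using Equation (6):

   $$\Delta_{jk} = \begin{bmatrix} x_j - x_k \\ y_j - y_k \end{bmatrix} \tag{6}$$

6. Calculate the Mahalanobis distance $D_M(j, k)$ for the two specific landmarks using Equation (7):

   $$D_M(j, k) = \sqrt{\Delta_{jk}^{T}\, S^{-1}\, \Delta_{jk}} \tag{7}$$

   Steps 5 and 6 are repeated for all landmark pairs (j, k) with j < k; for n = 5 landmarks, there are n(n − 1)/2 = 10 pairs.

7. Calculate the mean Mahalanobis distance $\bar{D}_M$ over all landmark pairs using Equation (8), which provides a tool for comparing the Mahalanobis distances calculated for two images:

   $$\bar{D}_M = \frac{2}{n(n-1)}\sum_{j=1}^{n-1}\sum_{k=j+1}^{n} D_M(j, k) \tag{8}$$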

By applying the generalized Mahalanobis distance formulas and computing the mean distance across all landmark pairs, we measured the spatial relationships among the facial landmarks. This approach enables quantitative comparison of different sets of landmarks, assessment of facial symmetry and structural variations, and the establishment of baseline metrics for further analyses.
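The seven MMDM steps can be sketched in a few lines of NumPy. The sketch assumes a sample covariance with an n − 1 denominator for Equation (4) and an average over all n(n − 1)/2 landmark pairs for Equation (8); the function name `mean_mahalanobis_distance` and the example coordinates are hypothetical, and the authors’ C# implementation may differ in details such as normalization.

```python
import itertools

import numpy as np


def mean_mahalanobis_distance(points) -> float:
    """Mean pairwise Mahalanobis distance between 2-D facial landmarks.

    points: sequence of n (x, y) coordinates, e.g., LEFT_EYE, RIGHT_EYE,
    MOUTH_LEFT, MOUTH_RIGHT, and NOSE_TIP (n = 5).
    """
    P = np.asarray(points, dtype=float)           # shape (n, 2)
    n = P.shape[0]
    mean = P.mean(axis=0)                          # Eq. (2): mean point
    D = P - mean                                   # Eq. (3): deviations d_i
    S = D.T @ D / (n - 1)                          # Eq. (4): 2x2 covariance (n - 1 assumed)
    S_inv = np.linalg.inv(S)                       # Eq. (5): ill-conditioned if points are (nearly) collinear
    distances = []
    for j, k in itertools.combinations(range(n), 2):    # all pairs with j < k
        delta = P[j] - P[k]                        # Eq. (6): difference vector
        distances.append(np.sqrt(delta @ S_inv @ delta))  # Eq. (7)
    return float(np.mean(distances))               # Eq. (8): mean over all pairs


# Hypothetical landmark coordinates (eyes, mouth corners, nose tip) in pixels.
landmarks = [(30.0, 40.0), (70.0, 40.0), (38.0, 80.0), (62.0, 80.0), (50.0, 60.0)]
print(mean_mahalanobis_distance(landmarks))
```

Because the covariance is estimated from the same landmark set, this sketch returns the same value if the landmarks are translated, rescaled, or otherwise transformed by an invertible affine map. Under the n − 1 assumption, the squared pairwise distances also always sum to 2n(n − 1), so for five landmarks the mean distance cannot exceed 2; what varies between faces is how that total is distributed across the pairs.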

3.2. HPM Description

The head pose (yaw, pitch, and roll) is calculated from facial landmarks and a predefined 3D model of facial points. Six key facial landmarks were used by the HPM to calculate the head pose in 3D space: the nose tip, which serves as a central reference point; the chin (gnathion), which represents the lower boundary of the face; the left and right eyes, which define the horizontal axis; and the left and right mouth corners, which capture the lower facial region (NOSE_TIP, CHIN_GNATHION, LEFT_EYE, RIGHT_EYE, MOUTH_LEFT, MOUTH_RIGHT). These landmarks provide the spatial information needed to compute the three head pose parameters: yaw, pitch, and roll. The procedure uses the SolvePnP method in OpenCV for head pose estimation and includes the following steps:

1. Obtain the 2D facial landmarks from the image using a facial detection system: NOSE_TIP, CHIN_GNATHION (the lowest point on the chin), LEFT_EYE, RIGHT_EYE, MOUTH_LEFT, and MOUTH_RIGHT;

2. Use a 3D facial model that approximates the structure of the human face;

3. Map each detected 2D landmark to its corresponding 3D point in the facial model;

4. Create the camera matrix, which represents the intrinsic parameters of the camera:

   - Focal length ($f$): typically set to the image width for simplicity;

   - Principal point ($c_x$, $c_y$): the center of the image (width/2, height/2);

   - Example camera matrix:

   $$K = \begin{bmatrix} f & 0 & c_x \\ 0 & f & c_y \\ 0 & 0 & 1 \end{bmatrix}$$

5. Estimate the pose with SolvePnP, which determines the head’s rotation and translation relative to the camera from the mapped 2D points and the 3D model;

6. Convert the rotation vector into a rotation matrix using the Rodrigues transformation, and calculate the Euler angles from the rotation matrix:

   - Yaw: the side-to-side rotation (e.g., looking left or right);

   - Pitch: the up-and-down rotation (e.g., looking up or down);

   - Roll: the tilt of the head (e.g., tilting left or right).

7. Convert the Euler angles to degrees for interpretability. The output is represented as follows:

   - Yaw: horizontal rotation in degrees;

   - Pitch: vertical rotation in degrees;

   - Roll: tilt in degrees.

This procedure was implemented in C# using the Emgu CV library.
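The camera matrix, the Rodrigues conversion, and the Euler-angle extraction described in the HPM procedure can be illustrated without OpenCV. In OpenCV’s Python bindings, the rotation vector would come from `cv2.solvePnP` and its conversion to a matrix from `cv2.Rodrigues`; the NumPy sketch below reimplements only the camera matrix, the Rodrigues formula, and the Euler-angle extraction so that the arithmetic is visible. The function names are hypothetical, and the Euler convention (R = Rz(roll) · Ry(yaw) · Rx(pitch), with yaw about the camera’s vertical y axis) is an assumption, since the text does not state which convention the Emgu CV implementation uses.

```python
import math

import numpy as np


def camera_matrix(width, height):
    """Intrinsic matrix with focal length = image width and principal point at the center."""
    f = float(width)
    cx, cy = width / 2.0, height / 2.0
    return np.array([[f, 0.0, cx],
                     [0.0, f, cy],
                     [0.0, 0.0, 1.0]])


def rodrigues(rvec):
    """Rotation vector -> 3x3 rotation matrix (the role cv2.Rodrigues plays in OpenCV)."""
    r = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(r)                 # rotation angle in radians
    if theta < 1e-12:
        return np.eye(3)                      # no rotation
    k = r / theta                             # unit rotation axis
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])        # skew-symmetric cross-product matrix
    return np.eye(3) + math.sin(theta) * K + (1.0 - math.cos(theta)) * (K @ K)


def euler_angles_deg(R):
    """Return (yaw, pitch, roll) in degrees, assuming R = Rz(roll) @ Ry(yaw) @ Rx(pitch)."""
    sy = math.hypot(R[0, 0], R[1, 0])         # |cos(yaw)|
    if sy > 1e-6:
        pitch = math.atan2(R[2, 1], R[2, 2])
        yaw = math.atan2(-R[2, 0], sy)
        roll = math.atan2(R[1, 0], R[0, 0])
    else:                                     # gimbal lock: yaw close to +/-90 degrees
        pitch = math.atan2(-R[1, 2], R[1, 1])
        yaw = math.atan2(-R[2, 0], sy)
        roll = 0.0
    return tuple(math.degrees(a) for a in (yaw, pitch, roll))


print(camera_matrix(640, 480))
print(euler_angles_deg(rodrigues([0.0, math.radians(30.0), 0.0])))  # a pure 30-degree yaw
```

Setting the focal length to the image width avoids a camera calibration step at the cost of some absolute accuracy in the angles; because every image is processed with the same approximation, the resulting angles remain comparable across the dataset.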

3.3. CMFRA Description

The anomaly model was trained using data extracted from real facial images of one of the authors, so that it learned authentic patterns of human facial geometry and orientation. The training dataset consisted of four facial features: the mean Mahalanobis distance, which quantifies the statistical deviation of facial landmarks, and the three head pose coordinates (yaw, pitch, and roll), which describe the 3D orientation of the face. Together, these features represent authentic facial characteristics and capture the structure and position of the face, as well as the correlations between them.

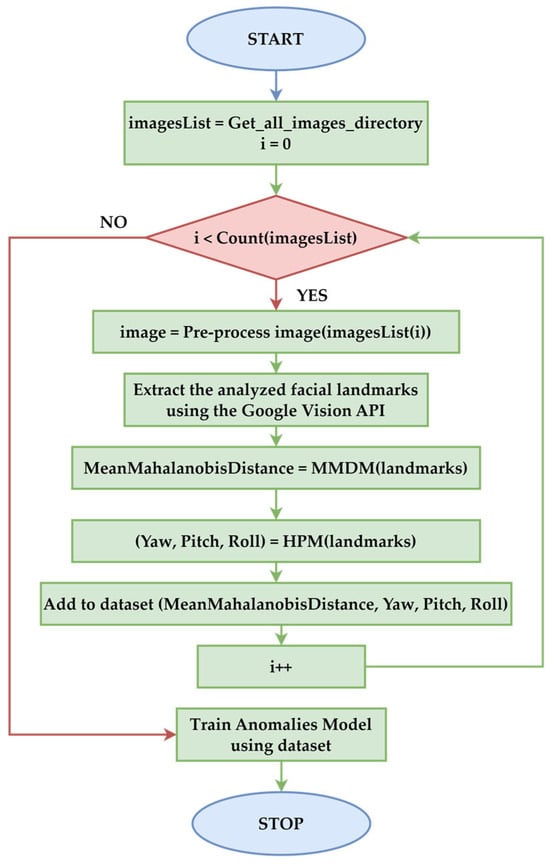

Figure 3 outlines the training process for an anomaly model designed to distinguish authentic images from potential deepfakes. The process begins by building a list of all images in a training directory. For each image, the first step is pre-processing to ensure that it meets the requirements for further analysis (e.g., resizing or normalization). Next, the algorithm extracts the analyzed facial landmarks using the Google Vision API, and the MMDM calculates the mean Mahalanobis distance. The HPM computes the three-dimensional orientation of the face, extracting the yaw, pitch, and roll values from the landmarks. These features (mean Mahalanobis distance, yaw, pitch, and roll) are then added to a dataset as the feature vector representation of the current image. Once the dataset is constructed, it is used to train the Anomalies Model, which learns the patterns of authentic facial data from these extracted features. The process concludes with a trained model ready to detect anomalies in unseen images and identify potential deepfakes.

Figure 3.

Workflow of the training process.

The anomaly detector is trained with the RandomizedPcaTrainer algorithm from the ML.NET library, with the projection rank set to 2 and the oversampling parameter set to 20 to provide numerical stability when extracting the principal components. The algorithm compresses the input data into a lower-dimensional space, within which the model learns the distribution of points considered normal. A decision threshold is then applied to the anomaly score returned for each sample. The PCA algorithm is integrated into the pipeline automatically and requires no manual pre-processing, since ML.NET internally handles the calculation of eigenvalues and the eigenvector projection. Training is performed by calling the Fit() method on the dataset of authentic images, and predictions are obtained by calling the Transform() method on the test images. In this way, the model learns the distribution of authentic features, and any statistical deviation from it is classified as an anomaly.
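The idea behind a PCA-based anomaly detector can be illustrated with a plain-Python sketch. It is not ML.NET's RandomizedPcaTrainer and does not reproduce its score normalization; the class `PcaAnomalyModel` and its methods are hypothetical. Three assumptions should be noted. First, the four features are standardized before PCA (our choice, because yaw, pitch, and roll in degrees would otherwise dominate the much smaller Mahalanobis values). Second, the top principal directions are found by power iteration with Gram–Schmidt orthogonalization instead of a randomized range finder, which is affordable for four features and therefore needs no oversampling. Third, the anomaly score is the norm of the residual left after projecting a standardized sample onto the rank-2 subspace. Because this score scale differs from ML.NET's, the 0.001 threshold used in the paper does not carry over to the sketch.

```python
import math
import random


class PcaAnomalyModel:
    """Hypothetical PCA anomaly detector scored by reconstruction residual."""

    def __init__(self, rank=2, iterations=500, seed=0):
        self.rank = rank              # number of principal directions kept
        self.iterations = iterations  # power-iteration steps per direction
        self.seed = seed
        self.components = []

    def _standardize(self, row):
        return [(x - m) / s for x, m, s in zip(row, self.mean, self.std)]

    def _orthonormalize(self, v):
        # Remove the parts of v along already-found components, then normalize.
        for c in self.components:
            dot = sum(a * b for a, b in zip(v, c))
            v = [a - dot * b for a, b in zip(v, c)]
        norm = math.sqrt(sum(a * a for a in v))
        return [a / norm for a in v] if norm > 1e-12 else None

    def fit(self, rows):
        n, d = len(rows), len(rows[0])
        self.mean = [sum(r[j] for r in rows) / n for j in range(d)]
        # Sample standard deviation; a constant feature is left unscaled.
        self.std = [
            math.sqrt(sum((r[j] - self.mean[j]) ** 2 for r in rows) / (n - 1)) or 1.0
            for j in range(d)
        ]
        z = [self._standardize(r) for r in rows]
        cov = [[sum(v[i] * v[j] for v in z) / (n - 1) for j in range(d)] for i in range(d)]
        rng = random.Random(self.seed)
        self.components = []
        for _ in range(self.rank):
            v = self._orthonormalize([rng.gauss(0.0, 1.0) for _ in range(d)])
            for _ in range(self.iterations):
                w = self._orthonormalize(
                    [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
                )
                if w is None:  # no variance left outside the earlier components
                    break
                v = w
            self.components.append(v)
        return self

    def score(self, row):
        # Norm of the part of the standardized sample not explained by the components.
        residual = self._standardize(row)
        for c in self.components:
            proj = sum(a * b for a, b in zip(residual, c))
            residual = [a - proj * b for a, b in zip(residual, c)]
        return math.sqrt(sum(a * a for a in residual))

    def is_anomaly(self, row, threshold):
        return self.score(row) > threshold
```

A sample that follows the correlations learned from authentic data lies close to the retained subspace and receives a small residual, whereas a sample that breaks those correlations receives a large one; the threshold test in `is_anomaly` relies on exactly this contrast.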

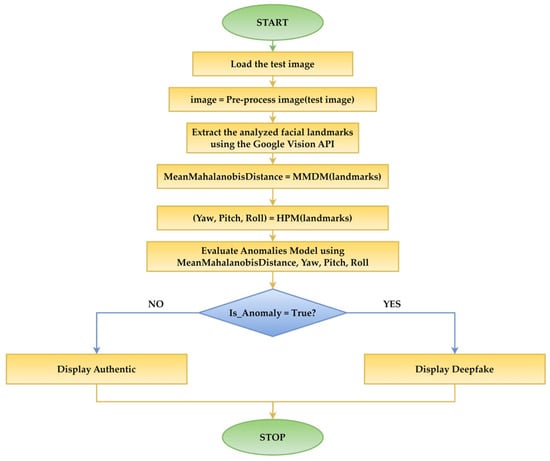

To evaluate new images, whether authentic or deepfake, the MMDM calculates the Mahalanobis distance by analyzing the spatial relationships between facial landmarks, providing a measure of global facial consistency. The HPM extracts the yaw, pitch, and roll coordinates, determining the head’s orientation in 3D space. These modules generate a feature set analogous to the training data, as depicted in Figure 4.

Figure 4.

Workflow of the evaluation process.

Figure 4 presents a flowchart of the evaluation process. The extracted features from the input image are then processed by the Anomalies Model, which uses ML to evaluate whether the feature combination aligns with the learned patterns of real facial data. The model identifies deviations from these patterns as anomalies, classifying such images as deepfakes. We use an anomaly-based detection approach because it does not require examples of manipulated or deepfake photos for training.

The implemented anomaly detection pipeline leverages randomized PCA, a dimensionality reduction technique, to analyze the input data and detect anomalies. Although it differs from a traditional neural network (NN), certain conceptual parallels help explain how it works. In this model, the input features (mean Mahalanobis distance, yaw, pitch, and roll) are analogous to the neurons of an input layer, each representing one dimension of the dataset.

The rank parameter, which defines the dimensionality of the reduced space, is set to 2, so this stage acts like a hidden layer with two neurons. The input data are compressed onto the two principal components that retain most of their variance, while the remaining low-variance directions, which mostly carry noise, are discarded. The algorithm computes the principal components in a single step, and the oversampling parameter (set to 20, its default value) improves the accuracy of the principal component approximation by sampling additional random vectors.

The model outputs an anomaly score, similar to a decision layer, which evaluates how much a sample deviates from the learned patterns. The pipeline identifies anomalies by applying a threshold (set at 0.001).

4. Results

The Anomalies Model was trained using a dataset of 200 images of the same individual. These images were selected to cover a broad range of variations in facial appearance, ensuring that the model could learn to recognize the subtle features of an authentic face across different scenarios. The dataset included selfies taken from various angles, which allowed the model to learn how facial landmarks shift and adjust in 3D space due to changes in head pose. The images encompassed a variety of hairstyles, makeup applications, and lighting conditions. Hairstyles were chosen to reflect common daily changes an individual may undergo, ensuring the model detects faces with hair in different positions, lengths, and styles. Makeup variations, including light and heavy applications, were included to simulate how cosmetic alterations affect facial features. These variations allowed the model to distinguish between natural changes in appearance and manipulations indicative of deepfake images.

The anomaly threshold in the CMFRA pipeline was set empirically to 0.001. This value was established after a set of preliminary tests during the validation phase, whose objective was to maximize the separation between the anomaly scores of authentic images and those of deepfake images. In practice, this threshold offered the best compromise between the false-positive (FP) and false-negative (FN) rates, yielding a precision of 83.3% and a recall of 100%. Alternative threshold values were also tested, but they either increased the number of FPs or decreased the recall. For this reason, 0.001 was retained as the optimal value for the model trained on the analyzed subject. Cross-validation was not applied in the traditional sense because the model is not a supervised classifier with explicit labels. Instead, validation was performed on a set of authentic images not used in training, containing natural variations, together with deepfake images generated with Pixlr AI Face Swap.
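The threshold selection described above, which keeps recall at 100% while limiting FPs, can be sketched as a sweep over candidate thresholds on validation scores. The function `pick_threshold`, the caller-supplied candidate grid, and the tie-breaking rule (among thresholds with no FNs, choose the one with the fewest FPs and, on ties, the largest threshold) are assumptions for illustration; the exact search procedure is not documented here. An image is treated as a deepfake when its score exceeds the threshold.

```python
def pick_threshold(authentic_scores, deepfake_scores, candidates):
    """Return (threshold, fp, fn) with fn == 0 and the fewest FPs, or None."""
    best = None
    for t in sorted(candidates):
        fp = sum(s > t for s in authentic_scores)   # authentic images flagged as deepfakes
        fn = sum(s <= t for s in deepfake_scores)   # deepfakes accepted as authentic
        # Ascending order means that, among equal FP counts, the largest threshold wins.
        if fn == 0 and (best is None or fp <= best[1]):
            best = (t, fp, fn)
    return best
```

Requiring zero FNs first mirrors the priority on recall; dropping that condition and minimizing FP + FN instead would favor overall accuracy.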

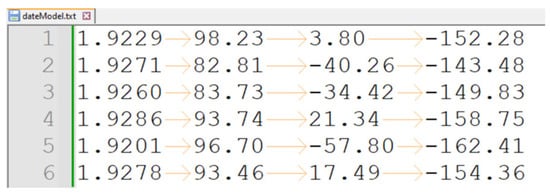

Figure 5 depicts an excerpt of the dataset used for model training, featuring the four numerical attributes: mean Mahalanobis distance, yaw, pitch, and roll. Each row represents a unique sample, capturing the statistical and geometric characteristics of the face and its orientation.

Figure 5.

Feature set representation for Anomalies Model training.

The images were taken under different lighting conditions, such as dim, bright, and mixed lighting scenarios. These variations influence how facial features are captured, affecting shadowing, color representation, and detail visibility. By exposing the model to a diverse range of lighting, we trained it to handle real-world conditions, where lighting often varies, and to reduce its sensitivity to such factors.

The combination of these varied conditions (angles, hairstyles, makeup, and lighting) was intended to give the model sufficient diversity to distinguish reliably between authentic and altered facial images. This training approach aimed to equip the model with the ability to generalize, so that it can identify deepfakes despite real-world variations in facial appearance.

4.1. Computation of Mean Mahalanobis Distances

We consider the following five facial landmarks provided by the Google Vision API and presented in Figure 2 for the reference image: LEFT_EYE (0.726, 1.114), RIGHT_EYE (0.718, 0.800), MOUTH_LEFT (0.986, 1.082), MOUTH_RIGHT (0.984, 0.823), and NOSE_TIP (0.876, 0.981). Next, we present an example of calculating the Mahalanobis distance for the LEFT_EYE–RIGHT_EYE pair.

Table 1 presents the deviations of the analyzed landmarks, and Table 2 presents the Mahalanobis distances between all landmark pairs together with their unified (mean) value for this scenario.

Table 1.

Deviations for the reference image.

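1. Initially, we compute the mean point of the five landmarks:

   $$\left(\frac{0.726 + 0.718 + 0.986 + 0.984 + 0.876}{5},\ \frac{1.114 + 0.800 + 1.082 + 0.823 + 0.981}{5}\right) = (0.858,\ 0.960)$$

2. We calculate the deviation of each landmark from the mean point, and the results are presented in Table 1.

3. By applying Equation (4), we compute the Covariance Matrix (S). First, we compute the outer product of each landmark's deviation with itself, and then we sum these outer products. For the LEFT_EYE, whose deviation is (−0.132, 0.154), the outer product is:

   $$\begin{pmatrix} -0.132 \\ 0.154 \end{pmatrix}\begin{pmatrix} -0.132 & 0.154 \end{pmatrix} = \begin{pmatrix} 0.017424 & -0.020328 \\ -0.020328 & 0.023716 \end{pmatrix}$$

   The sum of the outer products of the five landmarks is:

   $$\begin{pmatrix} 0.069608 & 0.000804 \\ 0.000804 & 0.083410 \end{pmatrix}$$

   The value for the Matrix S is:

4. We calculate the Inverse Covariance Matrix ($S^{-1}$), as follows:

5. We compute the difference vectors and the corresponding Mahalanobis distance for each landmark pair. Next, we exemplify the calculation for the LEFT_EYE–RIGHT_EYE pair and summarize the results for all landmark pairs in Table 2.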

Table 2. Mahalanobis distance for landmark pairs.


6. We calculate the mean Mahalanobis distance using the values presented in Table 2.

By applying the generalized Mahalanobis distance formula and computing the mean distance across all landmark pairs, we quantified the spatial relationships among the facial landmarks. This approach enables quantitative comparisons between different sets of landmarks, supports the assessment of facial symmetry and structural variations, and provides baseline metrics for further facial comparisons.
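The steps of this worked example can be reproduced for the five reference landmarks with the Python sketch below. Two details are assumptions, because Equation (4) is not restated in this section: the reference point is the centroid of the landmarks, and the covariance matrix uses the 1/(n − 1) normalization (pass `ddof=0` for 1/n). The distances it prints therefore illustrate the procedure and may not match the values in Table 2. The function name `mahalanobis_summary` is hypothetical.

```python
import itertools
import math

LANDMARKS = {
    "LEFT_EYE": (0.726, 1.114),
    "RIGHT_EYE": (0.718, 0.800),
    "MOUTH_LEFT": (0.986, 1.082),
    "MOUTH_RIGHT": (0.984, 0.823),
    "NOSE_TIP": (0.876, 0.981),
}


def mahalanobis_summary(points, ddof=1):
    names = list(points)
    n = len(names)
    # Step 1: mean point (centroid) of the landmarks.
    mx = sum(points[k][0] for k in names) / n
    my = sum(points[k][1] for k in names) / n
    # Step 2: deviation of each landmark from the mean point.
    dev = {k: (points[k][0] - mx, points[k][1] - my) for k in names}
    # Step 3: sum of outer products, then normalization to obtain S = [[a, b], [b, c]].
    sxx = sum(dx * dx for dx, _ in dev.values())
    syy = sum(dy * dy for _, dy in dev.values())
    sxy = sum(dx * dy for dx, dy in dev.values())
    a, b, c = sxx / (n - ddof), sxy / (n - ddof), syy / (n - ddof)
    # Step 4: closed-form inverse of the 2x2 covariance matrix.
    det = a * c - b * b
    ia, ib, ic = c / det, -b / det, a / det
    # Step 5: Mahalanobis distance for every landmark pair.
    dist = {}
    for p, q in itertools.combinations(names, 2):
        dx = points[p][0] - points[q][0]
        dy = points[p][1] - points[q][1]
        dist[(p, q)] = math.sqrt(ia * dx * dx + 2 * ib * dx * dy + ic * dy * dy)
    # Step 6: mean over all pairs.
    mean_dist = sum(dist.values()) / len(dist)
    return (mx, my), (sxx, sxy, syy), dist, mean_dist


centroid, outer_sum, pair_dist, mean_dist = mahalanobis_summary(LANDMARKS)
print("mean point:", tuple(round(v, 3) for v in centroid))
print("LEFT_EYE-RIGHT_EYE:", round(pair_dist[("LEFT_EYE", "RIGHT_EYE")], 3))
print("mean Mahalanobis distance:", round(mean_dist, 3))
```

Because the covariance matrix is recomputed from the same landmarks, scaling or shifting all coordinates together leaves every distance unchanged, so the mean distance does not depend on the overall size or position of the face in the image.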

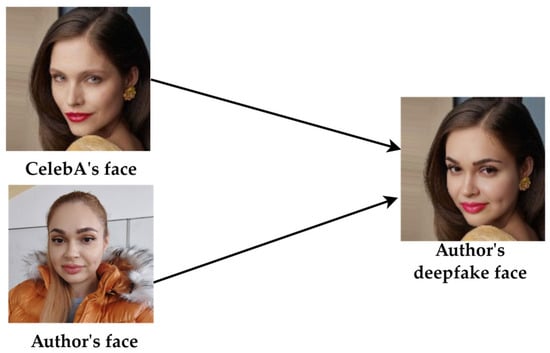

4.2. Protocol for Testing CMFRA

To evaluate the CMFRA, a synthetic face-swapped image was generated using an advanced deepfake AI tool. The face of a celebrity from the CelebA dataset [68] was selected as the source face, and an image of one of the authors of the present study served as the target face. Pixlr’s AI Face Swap online tool [69] was employed to superimpose the source face onto the target face within the image. This tool creates a realistic face swap by aligning facial features and blending skin tones. The result was a synthesized image in which the celebrity’s facial features were mapped onto the author’s face, creating a plausible yet counterfeit representation, as depicted in Figure 6.

Figure 6.

Generating deepfake image process.

In the following, we present two distinct scenarios that demonstrate the application of the Anomalies Model to unseen images. These scenarios evaluated the model’s capability to differentiate between authentic images and deepfake content using the mean Mahalanobis distance.

The first scenario (Figure 7a) involves an authentic image of the same subject whose data were used during training; however, this image was not part of the training dataset. It differs from the training images in angle, lighting, hairstyle, or facial expression, exposing the model to novel but realistic variations. This scenario assesses the model’s ability to generalize and to classify a new, real image correctly as authentic by analyzing its mean Mahalanobis distance and head pose features.

Figure 7.

Test images: (a) authentic; (b) deepfake.

The second scenario (Figure 7b) presents a deepfake image obtained by swapping the celebrity’s face onto the same individual, following the process described in Figure 6.

The authentic image (Figure 7a) is characterized by a head pose with coordinates yaw: 146.56°, pitch: 53.13°, roll: −153.85°. The deepfake image is described by a head pose with coordinates yaw: −172.61°, pitch: 40.44°, roll: −158.98°. The following section analyzes these scenarios by comparing their mean Mahalanobis distances.

4.3. Comparative Mean Mahalanobis Distances

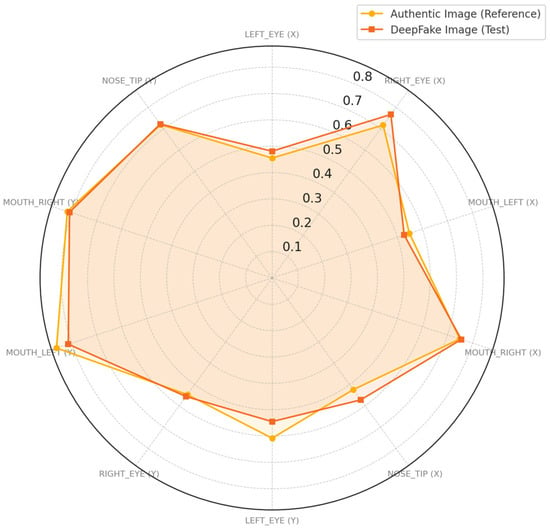

By computing the Mahalanobis distances between all pairs of facial landmarks within each image and calculating the mean Mahalanobis distance, we quantitatively assessed the spatial relationships of the facial features in the two analyzed images presented in Figure 7. The Mahalanobis distances provide a statistical measure that accounts for the covariance among landmarks.

Figure 8 compares the landmark coordinates of the authentic and deepfake images from Figure 7. The graph plots the key facial landmarks LEFT_EYE, RIGHT_EYE, MOUTH_LEFT, MOUTH_RIGHT, and NOSE_TIP for both images. The values were produced by the C# implementation of the CMFRA, together with the response returned by the Anomalies Model.

Figure 8.

Landmark coordinates of authentic and deepfake images from Figure 7.

The overall structure of the two polygons is similar, indicating that the deepfake generation attempts to replicate closely the spatial positioning of the facial landmarks of authentic images. Deviations are noted around the MOUTH_LEFT, NOSE_TIP, RIGHT_EYE, and LEFT_EYE coordinates, where the deepfake polygon slightly diverges. These deviations point to imperfections in the landmark alignment performed during deepfake synthesis in the central and lower regions of the face. Combined with the other features, such misalignments enable the identification of deepfake images. These results support the ability of the CMFRA to distinguish between authentic and manipulated facial images.

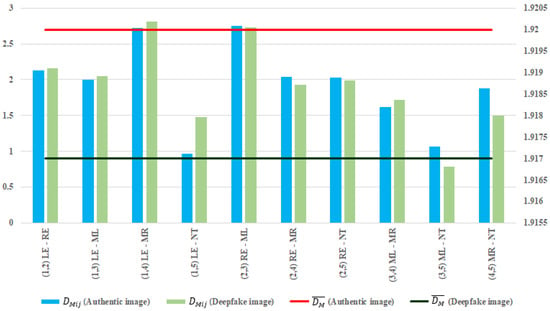

Figure 9 depicts the Mahalanobis distances of each landmark pair and the mean Mahalanobis distances of the authentic and deepfake images from Figure 7. Each bar represents the distance calculated between specific facial landmarks, in normalized units.

Figure 9.

Mahalanobis distances and mean Mahalanobis distances of authentic and deepfake images from Figure 7.

The lowest Mahalanobis distance among the landmark pairs of the authentic and deepfake images is recorded for RIGHT_EYE–MOUTH_LEFT (0.019), whereas the highest is recorded for LEFT_EYE–NOSE_TIP (0.512). According to the difference between the authentic and deepfake distances of each pair, the landmark pairs can be grouped into three categories. The first category (yellow lines in Figure 10a) includes the pairs whose authentic and deepfake Mahalanobis distances are closest (differing by less than 0.005), such as RIGHT_EYE–MOUTH_RIGHT and MOUTH_LEFT–MOUTH_RIGHT. This reflects that deepfake manipulation attempts to preserve the proportions between the height and width of the person’s face to maintain visual plausibility.

Figure 10.

Landmark pairs groups. 1: LEFT_EYE; 2: RIGHT_EYE; 3: MOUTH_LEFT; 4: MOUTH_RIGHT; 5: NOSE_TIP.

The second category (turquoise lines in Figure 10b) comprises the pairs with medium differences between the authentic and deepfake Mahalanobis distances (between 0.005 and 0.1), such as LEFT_EYE–RIGHT_EYE, LEFT_EYE–MOUTH_LEFT, LEFT_EYE–MOUTH_RIGHT, RIGHT_EYE–MOUTH_LEFT, and RIGHT_EYE–NOSE_TIP. These pairs underline the limitations of Pixlr’s AI Face Swap online tool in maintaining accurate facial geometry around all five landmarks of the person’s face.

Finally, the third category (green lines in Figure 10c) consists of the pairs with the largest differences between the authentic and deepfake Mahalanobis distances (higher than 0.1): MOUTH_LEFT–NOSE_TIP, MOUTH_RIGHT–NOSE_TIP, and LEFT_EYE–NOSE_TIP. For these pairs, Pixlr’s AI Face Swap online tool recorded the highest error in mapping the correlation between the person’s nose and the mouth outline.

The difference between the mean Mahalanobis distances of authentic and deepfake images underscores the effectiveness of this indicator in detecting deepfake images.

4.4. CMFRA Implementation

The CMFRA was implemented using Visual Studio with the C# programming language. To evaluate the performance of the Anomalies Model, 80 test images, comprising 40 authentic images and 40 deepfake images, were used. The tests revealed that the CMFRA correctly classified 80% of the authentic images as non-anomalous (authentic), while 100% of the deepfake images were correctly identified as anomalous (deepfake).

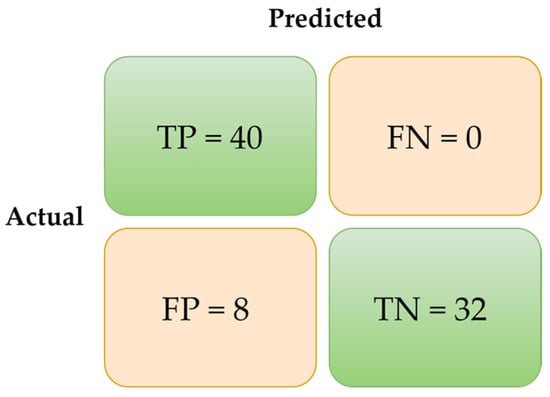

The results indicate that the CMFRA can identify manipulated images, particularly deepfakes, under varied conditions. The confusion matrix for the CMFRA tests in Figure 11 shows high values for correctly identified deepfakes (true positives, TP = 40) and authentic images (true negatives, TN = 32), and low values for authentic images misclassified as deepfakes (false positives, FP = 8) and deepfakes misclassified as authentic (false negatives, FN = 0).

Figure 11.

Confusion matrix for the CMFRA tests.

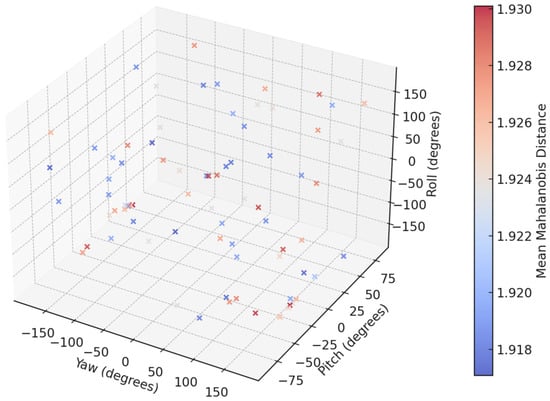

Figure 12 represents the 80 test images as points in a multidimensional space, where each point is described by its yaw, pitch, and roll values (3D axes) and its mean Mahalanobis distance (color intensity). The points are divided into 40 authentic and 40 deepfake images, with authentic images generally exhibiting lower Mahalanobis distances (blue shades) and deepfakes showing higher distances (red shades). The spatial distribution highlights variations in head pose parameters (yaw, pitch, and roll), while the color gradient provides insight into the alignment accuracy of facial landmarks. This representation helps identify distinguishing patterns between authentic and manipulated facial images.

Figure 12.

4D visualization of the features corresponding to the test images.

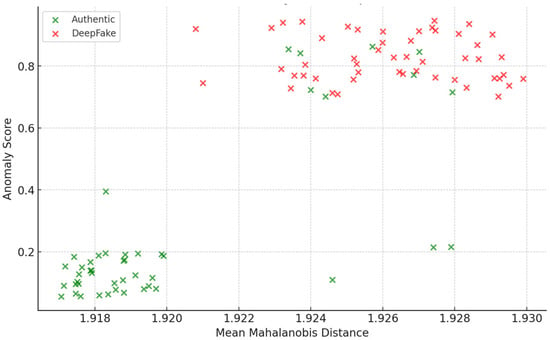

The scatter plot presented in Figure 13 compares the mean Mahalanobis distance against the anomaly score for 40 authentic (green) and 40 deepfake (red) images. Authentic images generally cluster in the lower-left region, characterized by lower anomaly scores and smaller Mahalanobis distances, indicating consistent facial feature alignment. In contrast, deepfake images dominate the mid-right and upper areas, reflecting higher anomaly scores and distances and highlighting inconsistencies in feature synthesis. FPs appear in the overlapping regions, reducing the clarity of the separation. Despite this, the plot shows distinct patterns for the two categories, supporting the use of these metrics to differentiate authentic images from deepfakes in anomaly detection systems.

Figure 13.

CMFRA execution for the deepfake image.

The anomaly score quantifies the extent to which an observation deviates from the expected distribution, thereby identifying outliers. In our case, it measures discrepancies in facial features relative to the head pose alignment. Higher scores suggest deepfake-like inconsistencies, while lower scores indicate authentic images. The score thus supports distinguishing manipulated content from authentic images in anomaly detection frameworks.

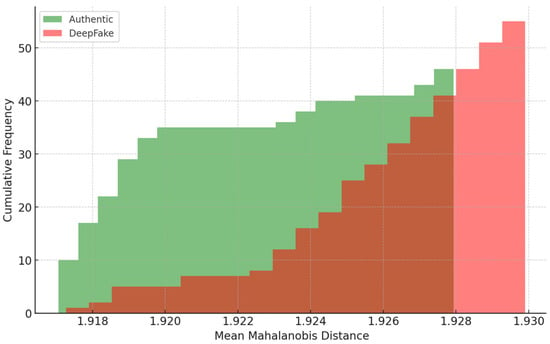

The cumulative distribution plot highlights the separation between authentic and deepfake images based on the mean Mahalanobis distance, consistent with the 90% classification accuracy of the CMFRA. The authentic images (green area in Figure 14) cluster at lower Mahalanobis distances, reflecting consistent facial landmark alignment and geometry. Their high cumulative frequency at low Mahalanobis distances suggests high recognition reliability. Conversely, deepfake images (red area in Figure 14) dominate higher Mahalanobis distances, revealing inconsistencies in the synthesized facial features and geometry. Their cumulative frequency is low at low Mahalanobis distances, with only a limited area of overlap with the authentic distribution (dark red area in Figure 14). This separation shows the utility of the mean Mahalanobis distance in anomaly detection systems for distinguishing between manipulated and authentic content.

Figure 14.

Cumulative distribution of mean Mahalanobis distance.

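The accuracy, precision, recall, and F1-Score metrics are computed from the confusion matrix in Figure 11. With all 40 deepfakes correctly flagged (TP = 40, FN = 0) and 32 of the 40 authentic images accepted (TN = 32, FP = 8), they take the following values:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} = \frac{40 + 32}{80} = 0.90$$

$$\text{Precision} = \frac{TP}{TP + FP} = \frac{40}{48} \approx 0.833$$

$$\text{Recall} = \frac{TP}{TP + FN} = \frac{40}{40} = 1.00$$

$$\text{F1-Score} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2 \cdot 0.833 \cdot 1}{0.833 + 1} \approx 0.91$$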

At 90% accuracy, the model correctly classifies most images, which reflects the CMFRA’s reliability in handling authentic and manipulated content. A precision of 83.3% means that most images flagged as deepfakes were indeed deepfakes, while 8 of the 40 authentic images were flagged as FPs. The recall of 100% shows that the model identified all deepfake images. The F1-Score of 91% balances precision and recall, reflecting the CMFRA’s ability to detect deepfakes while keeping the number of FPs limited.
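These figures can be checked from the confusion-matrix counts implied by the test results above (all 40 deepfakes flagged, 32 of the 40 authentic images accepted). The helper `classification_metrics` is a hypothetical illustration that applies the standard definitions.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


acc, prec, rec, f1 = classification_metrics(tp=40, tn=32, fp=8, fn=0)
print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f} f1={f1:.3f}")
# prints accuracy=0.900 precision=0.833 recall=1.000 f1=0.909
```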

5. Discussion

The present study focuses on distinguishing between authentic and deepfake images by implementing the CMFRA methodology, which utilizes a combination of facial landmark detection and Mahalanobis distance analysis. The primary findings demonstrate the accuracy of the CMFRA in identifying deepfakes, achieving a notable 90% overall accuracy in classification tests.

The CMFRA methodology proposes a unique combination of the mean Mahalanobis distance and head pose parameters (yaw, pitch, and roll) to characterize the spatial relationships of facial landmarks. This approach, which focuses on anomalies in facial symmetry and orientation, allows for differentiation between real and manipulated images. By training the anomaly detection model exclusively on authentic data, we avoid the overfitting risks that typically arise when training on deepfake samples, which is intended to keep the system adaptable to different types of manipulated images. The evaluation phase, which used synthetic deepfake images generated by aligning a celebrity’s face with the subject’s face, indicated that the model detects facial inconsistencies that are often imperceptible to the human eye.

The implications of this study are multifaceted. In a world increasingly vulnerable to the misuse of deepfake technology, a detection method like the CMFRA can help mitigate potential threats to privacy, media authenticity, and even national security. The method’s ability to detect subtle manipulations in facial features suggests its applicability in high-stakes environments, such as political campaigns and the entertainment industry, where deepfakes can be employed to manipulate public opinion. The findings underline the value of using geometric and statistical facial features in combating deepfake technology.

In the literature, several works address deepfake detection using different models. Guarnera et al. [1] use a CNN-based method built on convolutional traces, achieving an accuracy of 97%. Vardhan et al. [48] also apply a CNN to images, achieving an accuracy of 93.62%. An accuracy of over 90% is also reported by Zhang et al. [50], who perform deepfake detection using CNN methods with spatial residuals. Nassif et al. [51] apply Visual Geometry Group (VGG)-19 to images extracted from videos and report an accuracy of over 80%. The paper by Tursman et al. [38] is a reference work that applies the Mahalanobis distance to video frames, with a declared accuracy of 75%. These results are summarized for comparison in Table 3.

Table 3.

Deepfake detection comparison.

The research presented in Table 3 uses standardized datasets, such as FaceForensics++, MANFA, Celeb-DF, or DFDC, and most studies employ CNN methods or hybrid networks. The study by Tursman et al. [38] is the closest to CMFRA because it uses statistical models such as the Mahalanobis distance. Analyzing these results, a direct comparison in terms of accuracy or other metrics is impossible because the integration method and the final objective differ. For example, the paper [38] applies the Mahalanobis distance pointwise, on pairs of features at the video content level, with the aim of detecting temporal inconsistencies between frames. Unlike the research in [38], CMFRA takes an anomaly-based approach applied to static images, combining global facial statistics with 3D orientation coordinates to construct a model of facial normality. Another reason why a direct comparison at the accuracy level is impossible concerns the model’s size and structure. For example, the paper [38] uses the Mahalanobis distance as a consistency score in a frame analysis, while the current proposal uses an entire ML.NET pipeline, with training on authentic images, randomized PCA for dimensionality reduction, and the calculation of an anomaly score.

For these reasons, a direct comparison between the results is impossible, even though the same or similar statistical methods are behind the mathematical apparatus. The way they are integrated into the overall model means that these performance characteristics are not a direct benchmark for the novelty of the research.

Some limitations need to be discussed. First, the model was trained on a limited dataset of 200 images, with 80 additional images used for testing purposes. This small sample size could restrict the model’s ability to generalize facial characteristics. Furthermore, the difficulty of generating realistic deepfake images for the test set was another challenge. The deepfake generation process is often time-consuming. Given these constraints, the results should be interpreted cautiously.

The model proposed in this study was trained exclusively on a dataset constructed from images of a single person, the first author of this article. The images were acquired in a controlled environment to validate an anomaly detection mechanism. The authors therefore emphasize that the model’s objective is not to build a system that generalizes as a binary classifier. This choice of training offers methodological benefits: it avoids overfitting on manipulated datasets and focuses on determining the natural characteristics of a human face. Because the model is designed as an anomaly detector rather than a traditional classifier, testing was performed on a single set of images; testing on other image sets or individuals would contradict the detection architecture and philosophy adopted in this research. In addition, introducing datasets of a second person would require additional approval procedures regarding the agreement to use these data. The purpose of this research is not to validate generalization to the wider population but to demonstrate the concept of using statistical deviations in facial geometry to identify forged images. This objective was achieved by the CMFRA algorithm, which obtained an accuracy of 90% and a deepfake identification rate of 100%. Conducting additional tests on other data or individuals was therefore neither possible nor necessary within the scope of this study, given that images of a different person could not be acquired in a controlled environment. Introducing additional sets would require a completely different approach, focused on intersubjective generalization, which is foreseen as a direction for future research.

The computational cost of the CMFRA is also relevant for real-time implementation scenarios. The CMFRA pipeline is fully implemented in C# using the ML.NET platform, the Emgu CV library, and the Google Vision API. The average processing time for an image, including facial feature extraction, Mahalanobis distance calculation, and head pose estimation, was approximately 0.8 to 1.2 s on a system with an Intel i5 processor, 8 GB RAM, and no dedicated GPU, with a download speed of 156.9 Mbps and an upload speed of 41.8 Mbps. The method is therefore suitable for integration into desktop, mobile, or embedded applications without GPU infrastructure, provided that network access to the Google Vision API is available. The processing time is influenced by the internet connection speed because the facial landmarks, from which the three-dimensional head orientation is computed, are obtained through requests to the Google Vision API service. Compared with deep neural network detectors, which typically rely on GPU inference and consume a lot of memory, CMFRA is more efficient in scenarios with such computational constraints, as it does not require the computational effort of training on a large dataset and uses only a small training volume.

The CMFRA model achieved 90% accuracy and a 100% recall rate for deepfake images. Since no FNs occurred, the errors consist of FPs, that is, authentic images flagged as deepfakes. Analyzing these cases, we identified the following factors that underlie them:

- Intense makeup that alters the natural contours of the mouth, eyes, or nose, generating statistical deviations from the learned model.

- Non-invasive aesthetic procedures, such as lip fillers or botulinum toxin injections, which subtly alter facial geometry.

- Water retention or temporary facial swelling, which affects the natural proportions between facial landmarks.

- Exaggerated facial expressions, such as grimaces, which distort the position of key features.

- Extreme lighting conditions, which affect the landmarks returned by the Google Vision API in shadowed or overexposed areas.

- Atypical capture angles, which generate unusual combinations of yaw–pitch–roll values that were not included in the training set.

These observations show that the model is sensitive not only to digital forgeries but also to a range of natural, but unusual, variations in facial appearance. They also underline how strongly the results depend on the composition of the training dataset and of the images submitted for anomaly detection.

The CMFRA model is built as an anomaly detection system trained exclusively on authentic images. It does not use traditional binary classification on a balanced dataset. Therefore, Receiver Operating Characteristic (ROC) or precision–recall curves do not reflect the model’s performance in this case. For these reasons, visual representations such as the cumulative distribution of Mahalanobis distance, scatter plots, and the confusion matrix were used because they offer a direct interpretation of the model’s separability.

Future research should prioritize expanding the diversity and scale of the training dataset. Including more subjects with varying facial characteristics, such as age, gender, and ethnicity, would enhance the model’s accuracy. Moreover, incorporating additional features, such as Procrustes distance analysis, may provide a better understanding of facial feature manipulation, potentially improving detection rates. We also recommend further exploration of hybrid models that combine statistical methods like Mahalanobis distance with DL architectures.

The authors acknowledge the limitations of this study stemming from the analysis of a single individual, which some readers may consider a significant restriction. Training ML models on massive volumes of data raises a series of problems, which the authors avoided precisely through this approach. The limitation is intentional: a small, homogeneous dataset with authentic data from a single individual, namely one of the authors, was used to test the feasibility of an anomaly detection method based solely on the geometric modeling of the human face in relation to its three-dimensional position. The goal was not to build a system capable of generalization. Instead, the authors aimed to demonstrate that the subtle deviations in facial features generated by deepfakes can be identified using statistical methods, without going through the issues associated with neural network training. Extending the method to diverse datasets may introduce a series of problems that would complicate the integration of the CMFRA algorithm.

By implementing these recommendations, future studies could provide a more accurate evaluation of deepfake detection techniques, ultimately contributing to the safe and ethical use of generative AI technologies.

6. Conclusions

This study presented a novel approach to detecting deepfake images using the CMFRA, combining facial landmark detection with statistical analysis through Mahalanobis distance. The main research question aimed to determine whether statistical anomalies in facial landmark configurations could be effectively used to distinguish authentic faces from manipulated ones. Our results indicate that this method is feasible and effective, achieving an accuracy rate of 90% in distinguishing real images from deepfakes. This outcome supports the argument that statistical inconsistencies in facial geometry can serve as reliable indicators of deepfake manipulations.

The CMFRA methodology utilized the combination of the Google Vision API for facial landmark extraction, Mahalanobis distance for statistical anomaly detection, and head pose analysis (yaw, pitch, and roll) to identify discrepancies in manipulated images. Focusing on authentic facial characteristics rather than training the model on manipulated images helped reduce overfitting.

This research contributes knowledge by demonstrating that deepfake detection can be approached through the lens of statistical anomaly detection, focusing on geometric consistency in facial features. This contribution is important because, unlike traditional DL models that rely on training with vast numbers of manipulated samples, our method focuses on learning what constitutes an authentic face. By employing the Mahalanobis distance as a comparative tool for facial landmarks, we provide a computationally efficient framework capable of identifying discrepancies that might escape visual examination.

We recommend several avenues for future work. Increasing the number of subjects to cover a wider range of demographic characteristics, such as ethnicity, age, and gender, should improve the generalizability of the model. We also plan to compare training on a specific subject with training on a larger, more general set of subjects. Moreover, integrating Procrustes distance analysis could improve the model’s ability to evaluate the alignment and transformation of facial features. Future work should also investigate hybrid approaches that combine statistical methods, such as the Mahalanobis and Procrustes distances, with DL frameworks.
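
Because Procrustes distance analysis is proposed as a possible extension, a minimal sketch may clarify what it would measure. The function below computes the full Procrustes distance between two 2D landmark configurations with the same point order, after removing translation, scale, and rotation (reflections are not allowed). It represents points as complex numbers, so that for centered, unit-norm configurations z and w the distance reduces to the square root of 1 - |c|^2, where c is the sum of conj(z_k) * w_k. The function names and the 2D restriction are assumptions for illustration only.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _center_and_scale(points: Sequence[Point]) -> List[complex]:
    """Represent points as complex numbers, remove the centroid, scale to unit norm."""
    zs = [complex(x, y) for x, y in points]
    centroid = sum(zs) / len(zs)
    zs = [z - centroid for z in zs]
    norm = math.sqrt(sum(abs(z) ** 2 for z in zs))
    if norm == 0:
        raise ValueError("degenerate configuration (all points coincide)")
    return [z / norm for z in zs]


def procrustes_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Full Procrustes distance between two ordered 2D landmark sets (range 0 to 1)."""
    if len(a) != len(b):
        raise ValueError("configurations must have the same number of landmarks")
    za, zb = _center_and_scale(a), _center_and_scale(b)
    # |c| measures the best match achievable by rotating and rescaling za onto zb
    c = sum(z1.conjugate() * z2 for z1, z2 in zip(za, zb))
    # Clamp tiny negative values caused by floating-point rounding
    return math.sqrt(max(0.0, 1.0 - abs(c) ** 2))
```

A distance of 0 means the two configurations have the same shape once position, size, and in-plane rotation are factored out, which is why it complements a Mahalanobis score computed on raw landmark positions.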

In conclusion, the CMFRA has shown promise as a method for detecting deepfake images by leveraging statistical anomalies in facial landmarks and head pose data. Our research contributes a distinct perspective to deepfake detection, emphasizing the potential of anomaly detection rather than relying solely on classification-based approaches. By focusing on the geometric and statistical consistency of facial features, our method provides a foundation for further exploration of deepfake detection techniques. These contributions offer insights for researchers and practitioners working to counter the negative impacts of deepfake technology.

Author Contributions

Conceptualization, C.-M.R.; methodology, C.-M.R. and A.S.; software, C.-M.R.; validation, C.-M.R. and A.S.; formal analysis, C.-M.R. and A.S.; investigation, A.S.; resources, A.S.; data curation, C.-M.R.; writing—original draft preparation, C.-M.R. and A.S.; writing—review and editing, A.S.; visualization, C.-M.R. and A.S.; supervision, C.-M.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Petroleum-Gas University of Ploiesti, Romania.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this study will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| 2D | Two-dimensional |

| 3D | Three-dimensional |

| AI | Artificial intelligence |

| CMFRA | Custom-Made Facial Recognition Algorithm |

| CNN | Convolutional neural network |

| DCT | Discrete Cosine Transform |

| DFDC | Deepfake Detection Challenge |

| DL | Deep learning |

| FF++ | FaceForensics++ |

| FN | False negative |

| FP | False positive |

| GAN | Generative Adversarial Network |

| HPE | Head pose estimation |

| HPM | Head Pose Module |

| LR | Logistic regression |

| ML | Machine learning |

| MLP | Multilayer perceptron |

| MMDM | Mean Mahalanobis Distances Module |

| MSE | Mean squared error |

| NN | Neural network |

| PCA | Principal Component Analysis |

| PSO | Particle swarm optimization |

| RNN | Recurrent neural network |

| ROC | Receiver Operating Characteristic |

| SIFT | Scale-Invariant Feature Transform |

| TN | True negative |

| TP | True positive |

| VGG | Visual Geometry Group |

| ViViT | Video Vision Transformer |

References

- Guarnera, L.; Giudice, O.; Battiato, S. DeepFake detection by analyzing convolutional traces. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 2841–2850.

- Noreen, I.; Muneer, M.S.; Gillani, S. Deepfake attack prevention using steganography GANs. PeerJ Comput. Sci. 2022, 8, e1125.

- Gupta, G.; Raja, K.; Gupta, M.; Jan, T.; Whiteside, S.T.; Prasad, M. A Comprehensive Review of DeepFake Detection Using Advanced Machine Learning and Fusion Methods. Electronics 2023, 13, 95.

- Borade, S.; Jain, N.; Patel, B.; Kumar, V.; Godhrawala, M.; Kolaskar, S.; Nagare, Y.; Shah, P.; Shah, J. ResNet50 DeepFake Detector: Unmasking Reality. Indian J. Sci. Technol. 2024, 17, 1263–1271.

- Kang, J.; Ji, S.K.; Lee, S.; Jang, D.; Hou, J.U. Detection Enhancement for Various Deepfake Types Based on Residual Noise and Manipulation Traces. IEEE Access 2022, 10, 69031–69040.

- Moon, K.H.; Ok, S.Y.; Lee, S.H. SupCon-MPL-DP: Supervised Contrastive Learning with Meta Pseudo Labels for Deepfake Image Detection. Appl. Sci. 2024, 14, 3249.

- Guarnera, L.; Giudice, O.; Guarnera, F.; Ortis, A.; Puglisi, G.; Paratore, A.; Bui, L.M.; Fontani, M.; Coccomini, D.A.; Caldelli, R.; et al. The Face Deepfake Detection Challenge. J. Imaging 2022, 8, 263.

- Roșca, C.M.; Bold, R.A.; Gerea, A.E. A Comprehensive Patient Triage Algorithm Incorporating ChatGPT API for Symptom-Based Healthcare Decision-Making. In Emerging Trends and Technologies on Intelligent Systems, Proceedings of the 4th International Conference ETTIS 2024, Noida, India, 27–28 March 2024; Lecture Notes in Networks and Systems; Springer: Singapore, 2025; pp. 167–178.

- Siino, M.; Falco, M.; Croce, D.; Rosso, P. Exploring LLMs Applications in Law: A Literature Review on Current Legal NLP Approaches. IEEE Access 2025, 13, 18253–18276.

- Ashani, Z.N.; Ilias, I.S.C.; Ng, K.Y.; Ariffin, M.R.K.; Jarno, A.D.; Zamri, N.Z. Comparative Analysis of Deepfake Image Detection Method Using VGG16, VGG19 and ResNet50. J. Adv. Res. Appl. Sci. Eng. Technol. 2024, 47, 16–28.

- Shao, R.; Wu, T.; Liu, Z. Robust Sequential DeepFake Detection. Int. J. Comput. Vis. 2025, 133, 3278–3295.

- Ramadhani, K.N.; Munir, R.; Utama, N.P. Improving Video Vision Transformer for Deepfake Video Detection Using Facial Landmark, Depthwise Separable Convolution and Self Attention. IEEE Access 2024, 12, 8932–8939.

- Wang, T.; Chow, K.P. Noise Based Deepfake Detection via Multi-Head Relative-Interaction. Proc. AAAI Conf. Artif. Intell. 2023, 37, 14548–14556.

- Atamna, M.; Tkachenko, I.; Miguet, S. Improving Generalization in Facial Manipulation Detection Using Image Noise Residuals and Temporal Features. In Proceedings of the IEEE International Conference on Image Processing, Kuala Lumpur, Malaysia, 8–11 October 2023; pp. 3424–3428.

- Rosca, C.M.; Stancu, A.; Iovanovici, E.M. The New Paradigm of Deepfake Detection at the Text Level. Appl. Sci. 2025, 15, 2560.

- Pagliaro, A.; Compagnino, A.A.; Sangiorgi, P. Advanced AI and Machine Learning Techniques for Time Series Analysis and Pattern Recognition. Appl. Sci. 2025, 15, 3165.

- Shahzad, H.F.; Rustam, F.; Flores, E.S.; Luís Vidal Mazón, J.; de la Torre Diez, I.; Ashraf, I. A Review of Image Processing Techniques for Deepfakes. Sensors 2022, 22, 4556.

- Tran, V.-N.; Lee, S.-H.; Le, H.-S.; Kwon, K.-R. High Performance DeepFake Video Detection on CNN-Based with Attention Target-Specific Regions and Manual Distillation Extraction. Appl. Sci. 2021, 11, 7678.

- Kong, C.; Chen, B.; Li, H.; Wang, S.; Rocha, A.; Kwong, S. Detect and Locate: Exposing Face Manipulation by Semantic- and Noise-Level Telltales. IEEE Trans. Inf. Forensics Secur. 2022, 17, 1741–1756.

- Li, L.; Bao, J.; Zhang, T.; Yang, H.; Chen, D.; Wen, F.; Guo, B. Face X-Ray for More General Face Forgery Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5000–5009.

- Al-Adwan, A.; Alazzam, H.; Al-Anbaki, N.; Alduweib, E. Detection of Deepfake Media Using a Hybrid CNN–RNN Model and Particle Swarm Optimization (PSO) Algorithm. Computers 2024, 13, 99.

- Zhang, W.; Zhao, C.; Li, Y. A Novel Counterfeit Feature Extraction Technique for Exposing Face-Swap Images Based on Deep Learning and Error Level Analysis. Entropy 2020, 22, 249.

- Kong, S.G.; Mbouna, R.O. Head Pose Estimation from a 2D Face Image Using 3D Face Morphing with Depth Parameters. IEEE Trans. Image Process. 2015, 24, 1801–1808.

- Heredia-Lidón, Á.; Martínez-Abadías, N.; Sevillano, X. Full-Range Yaw Prediction: A Multi-View Approach for 3D Head Model Pose Estimation Using Convolutional Neural Networks. In Artificial Intelligence Research and Development, Proceedings of the 25th International Conference of the Catalan Association for Artificial Intelligence, Barcelona, Spain, 25–27 October 2023; IOS Press: Amsterdam, The Netherlands, 2023; pp. 90–93.

- Barros, J.M.D.; Garcia, F.; Mirbach, B.; Varanasi, K.; Stricker, D. Combined framework for real-time head pose estimation using facial landmark detection and salient feature tracking. In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Funchal, Portugal, 27–29 January 2018; pp. 123–133.

- Maes, C.; Fabry, T.; Keustermans, J.; Smeets, D.; Suetens, P.; Vandermeulen, D. Feature detection on 3D face surfaces for pose normalisation and recognition. In Proceedings of the Fourth IEEE International Conference on Biometrics: Theory, Applications and Systems, Washington, DC, USA, 27–29 September 2010; pp. 1–6.

- Johnson, D.; Yuan, X.; Roy, K. Using Ensemble Convolutional Neural Network to Detect Deepfakes Using Periocular Data. In Intelligent Systems and Applications. IntelliSys 2023; Lecture Notes in Networks and Systems; Arai, K., Ed.; Springer: Cham, Switzerland, 2024; Volume 823, pp. 546–563.

- Zamfir, F.; Paraschiv, N.; Pricop, E. Performance analysis in WiMAX networks using random linear network coding. In Proceedings of the 4th International Conference on Control, Decision and Information Technologies, Barcelona, Spain, 5–7 April 2017; pp. 590–594.

- Paraschiv, N.; Pricop, E.; Fattahi, J.; Zamfir, F. Towards a reliable approach on scaling in data acquisition. In Proceedings of the 23rd International Conference on System Theory, Control and Computing, Sinaia, Romania, 9–11 October 2019; pp. 84–88.

- Zamfir, F.S.; Pricop, E. On the design of an interactive automatic Python programming skills assessment system. In Proceedings of the 14th International Conference on Electronics, Computers and Artificial Intelligence, Ploiesti, Romania, 30 June–1 July 2022; pp. 1–5.

- Li, Y.; Sun, P.; Qi, H.; Lyu, S. Toward the Creation and Obstruction of DeepFakes. In Handbook of Digital Face Manipulation and Detection; Advances in Computer Vision and Pattern Recognition; Rathgeb, C., Tolosana, R., Vera-Rodriguez, R., Busch, C., Eds.; Springer: Cham, Switzerland, 2022; pp. 71–96.

- Rao, S.U.; Ranganath, S.; Ashwin, T.S.; Reddy, G.R.M. A Google Glass Based Real-Time Scene Analysis for the Visually Impaired. IEEE Access 2021, 9, 166351–166369.

- Rosca, C.-M.; Stancu, A.; Popescu, M. The Impact of Cloud Versus Local Infrastructure on Automatic IoT-Driven Hydroponic Systems. Appl. Sci. 2025, 15, 4016.

- Apte, A.; Bandyopadhyay, A.; Shenoy, K.A.; Andrews, J.P.; Rathod, A.; Agnihotri, M.; Jajodia, A. Countering Inconsistent Labelling by Google’s Vision API for Rotated Images. In Innovations in Computational Intelligence and Computer Vision; Advances in Intelligent Systems and Computing; Sharma, M.K., Dhaka, V.S., Perumal, T., Dey, N., Tavares, J.M.R.S., Eds.; Springer: Singapore, 2021; Volume 1189, pp. 202–213.

- Smith, A.O.; Tacheva, J.; Hemsley, J. Visual Semantics of Memes: (Re)Interpreting Memetic Content and Form for Information Studies. Proc. Assoc. Inf. Sci. Technol. 2022, 59, 800–802.

- Omena, J.J.; Pilipets, E.; Gobbo, B.; Chao, J. The Potentials of Google Vision API-based Networks to Study Natively Digital Images. Diseña 2021, 1–25.

- Rosca, C.-M.; Stancu, A.; Tănase, M.R. A Comparative Study of Azure Custom Vision Versus Google Vision API Integrated into AI Custom Models Using Object Classification for Residential Waste. Appl. Sci. 2025, 15, 3869.

- Tursman, E.; George, M.; Kamara, S.; Tompkin, J. Towards untrusted social video verification to combat deepfakes via face geometry consistency. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 2784–2793.

- Cărbureanu, M. The Calculation of the Integrals using the Sample Mean Method. Bul. Univ. Pet.–Gaze Din Ploieşti Ser. Mat.-Informatică-Fiz. 2006, LVIII, 79–86. Available online: http://www.unde.ro/bmif/docs/20062/13%20CarbureanuM.pdf (accessed on 24 July 2025).

- Cărbureanu, M. A Factor Analysis Method Applied in Development Field. In Analele Universităţii “Constantin Brâncuşi” din Târgu Jiu, Seria Economie; Constantin Brâncuși University of Târgu Jiu: Târgu Jiu, Romania, 2010; pp. 187–194. Available online: https://www.utgjiu.ro/revista/ec/pdf/2010-01/17_MADALINA_CARBUREANU.pdf (accessed on 24 July 2025).