Abstract

This paper critically reviews deep learning-based face recognition models, with a focus on security and surveillance applications. Existing systems perform well under controlled conditions but remain susceptible to pose variation, occlusion, low resolution and aging. The authors systematically evaluate four state-of-the-art architectures (FaceNet, ArcFace, OpenFace and SFace) on five benchmark datasets: LFW, CPLFW, CALFW, AgeDB-30 and QMUL-SurvFace. Performance is measured by the area under the receiver operating characteristic curve (ROC-AUC), accuracy, precision and F1-score. The results show that FaceNet and ArcFace achieve the highest accuracy in well-lit, frontal settings, whereas SFace is more robust on degraded, low-resolution surveillance images. The performance of OpenFace drops on all of the datasets as data size grows, which exposes the weaknesses of traditional embedding methods. These results underscore the main contribution of this study: a comparison of the models under difficult real-life conditions and the observation of a trade-off between generalization and specialization that is inherent to every model. Specifically, ArcFace and FaceNet are optimized for constrained settings and SFace for in-the-wild ones. Model selection must therefore be matched closely to the deployment context, and future studies should focus on architectures, such as hybrid architectures, that maintain performance under fluctuating conditions.

1. Introduction

Face recognition has recently become an essential part of modern security systems, both biometric and non-biometric, because it offers a safe and time-efficient method of identity retrieval. It is also widely used in fields such as security, healthcare and education, for example in criminal investigations, patient identification and attendance monitoring. However, changes in lighting conditions, facial expressions, head orientation and background scenes tend to reduce recognition accuracy. Deep learning has significantly advanced facial recognition technology by enabling the extraction of complex visual patterns, thereby exceeding the performance of more traditional methods. Despite these developments, a single feature extraction method might not be sufficient in varied environments. Combining two or more models can improve recognition, but selecting the most significant features among the models is a major challenge that affects both the accuracy and the computational efficiency of the system. Recent advances, such as Fast-FaceNet and Siamese-based lightweight models, show that optimizing the network architecture through the integration of MobileNet, depth-wise separable convolutions and a balanced memory access cost (MAC) allows face recognition to run on mobile and embedded systems in real time with competitive accuracy.

The spread of video surveillance, intelligent video stream analysis platforms and sensor infrastructure is driven by the growth of cities and the adoption of Smart City strategies. Face recognition technologies are important elements of such systems: they support not only security and law enforcement solutions but also civilian ones, including access control, attendance monitoring and even targeted marketing. Although many face detection and recognition solutions have already been implemented, most of them perform poorly in real time and under poor lighting, unusual facial poses, low image resolution and partial occlusions. Moreover, achieving high accuracy in image processing often demands a large amount of computing power, which makes such systems impractical on mobile and low-power devices [,,,].

Practical surveillance situations are even more complex, because scenes are dynamic and many targets can enter them under uncontrolled circumstances. Face recognition in dynamic scenes demands systems that recognize faces across a variety of poses, illuminations, scales and occlusions while still running in real time. These problems have motivated recent work on more powerful algorithms, including attention-based mechanisms and transformer architectures that enable models to learn long-range dependencies and occlusion-invariant features. Alternative approaches exploit spatiotemporal modeling within video streams by pairing Convolutional Neural Networks (CNNs) with either Recurrent Neural Networks (RNNs) or temporal transformers, which allows systems to identify faces across frames in temporal sequences [,,,]. Moreover, to implement dynamic face recognition on edge devices, methods such as lightweight model optimization and knowledge distillation have been proposed; they allow faster inference with lower resource utilization. More successful and efficient face recognition in active urban environments therefore requires that these advanced recognition methods be incorporated into surveillance systems.

Beyond technical issues, special attention must be paid to questions of scalability, data privacy and the ethical use of face recognition systems—particularly in public and open spaces [,,,]. All these factors highlight the relevance of developing robust, adaptive and resource-efficient face recognition models. This work focuses on the investigation, evaluation and comparative analysis of modern face detection and recognition models under conditions close to real-time operation and challenging environmental settings. In the experimental phase, detection was implemented through fine-tuning of state-of-the-art models on diverse datasets, taking into account variations in lighting, image quality and viewing angles. Recognition was assessed by running and testing modern embedding-based models across a range of open and specialized datasets, covering factors such as age differences, facial expressions, resolution changes and head pose variations. The paper presents experimental results, accuracy and speed metrics, and provides an analysis of model effectiveness in the context of real-world applications.

2. Literature Review

2.1. Classical Methods

Before the emergence of deep learning, face recognition systems were mainly based on statistical and appearance-based methods. Eigenfaces, Fisherfaces and Local Binary Patterns (LBP) are among the most influential of them. These early methods formed the basis of contemporary facial recognition research and are still applicable where fast execution and explainability matter. The Eigenfaces [] approach uses Principal Component Analysis (PCA) to reduce the dimensionality of facial image data and isolate the most important features. Faces are expressed as a linear combination of a set of eigenvectors, referred to as Eigenfaces, of the covariance matrix of the training images. Although this greatly simplifies computation, the approach is very sensitive to changes in lighting and facial expression. The Fisherfaces method [] addresses this issue by adding Linear Discriminant Analysis (LDA) to PCA. In contrast to Eigenfaces, which seeks directions of maximum variance without regard to class labels, Fisherfaces minimizes intra-class variance and maximizes inter-class variance. This makes it more robust to changes in illumination and facial appearance. LBP, introduced by Ojala et al. (2002) [], is a descriptor that captures local spatial patterns in grayscale images. By thresholding the neighbourhood of each pixel and representing the result as a binary number, LBP yields highly discriminative histograms that are somewhat invariant to monotonic intensity changes. LBP is simple and efficient enough to be used in real-time face detection and recognition systems in resource-constrained environments.
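To make the Eigenfaces projection concrete, the following is a minimal NumPy sketch, not the implementation of any cited work. It assumes flattened grayscale images and uses synthetic random data as a stand-in for a face dataset; the function names `fit_eigenfaces` and `project` are illustrative.

```python
import numpy as np

def fit_eigenfaces(images, num_components):
    """images: (n_samples, n_pixels) array of flattened grayscale faces."""
    mean_face = images.mean(axis=0)
    centered = images - mean_face
    # The right singular vectors of the centered data are the eigenvectors
    # of its covariance matrix, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_face, vt[:num_components]

def project(images, mean_face, eigenfaces):
    """Express each face as weights on the leading Eigenfaces."""
    return (images - mean_face) @ eigenfaces.T

# Synthetic stand-in for 20 flattened 8x8 face images (assumption).
rng = np.random.default_rng(0)
faces = rng.random((20, 64))
mean_face, eigenfaces = fit_eigenfaces(faces, num_components=5)
weights = project(faces, mean_face, eigenfaces)
```

Recognition would then typically compare the low-dimensional `weights` of a probe face with those of enrolled faces, for example by nearest neighbour.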

Although deep learning-based models have replaced these classical techniques in high-performance applications, the classical techniques remain useful. They are interpretable, computationally cheap and simple to implement, and they can be applied in embedded systems, for initial feature extraction or within hybrid recognition systems.

2.1.1. Eigenface

The paper [] proposes a face recognition framework that combines a Normalized Eigenface with Histogram Equalization (HE) to deal with lighting variation in images. The technique improves recognition accuracy by preprocessing images with HE, normalizing pixel intensities and using PCA for feature extraction. The proposed system was compared with regular Eigenface techniques and performed better on the Yale B, Extended Yale B and AR datasets under different degrees of illumination. Recognition performance was reported to improve by 13.6–222.9% over the conventional Eigenface technique. The article establishes the usefulness of normalization and contrast enhancement for robust face recognition.
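The histogram equalization step mentioned above can be sketched as follows. This is a generic textbook formulation for 8-bit grayscale images, written under the assumption that the cited work uses standard global HE; the test image is synthetic.

```python
import numpy as np

def equalize_histogram(image):
    """Global histogram equalization of a 2-D uint8 image."""
    hist = np.bincount(image.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]          # CDF value at the darkest level present
    total = image.size
    if total == cdf_min:               # constant image: nothing to stretch
        return image.copy()
    # Map each grey level so that the output CDF is approximately linear.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[image]

# Synthetic low-contrast image with intensities in [50, 100] (assumption).
rng = np.random.default_rng(0)
dark = rng.integers(50, 101, size=(32, 32), dtype=np.uint8)
equalized = equalize_histogram(dark)
```

After equalization the intensities span the full 0 to 255 range, which reduces the effect of global lighting differences before PCA is applied.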

The paper [] compares FaceNet+SVM and Eigenface+SVM for student emotion recognition in a learning environment, particularly in scenarios with varying light and occlusion. With only one image per learner, FaceNet achieved 98% accuracy, whereas Eigenface achieved only 84% under ideal conditions and 9% under poor lighting. The paper highlights FaceNet’s robustness to visual interference, which makes it suitable for emotion-aware learning systems. The major preprocessing steps were data augmentation and MTCNN face detection. The study indicates that deep learning outperforms Eigenface-based recognition in a real-life learning environment.

This detailed survey [] examines how Eigenfaces have been applied to face detection, their mathematical basis, their application to real-world problems and their limitations. The paper covers classifications of approaches, benchmark datasets, including lighting and occlusion datasets, and the difficulties of scaling datasets. It also reviews hybrid and augmented PCA-based methods, with improvements proposed via preprocessing and the use of GPUs. The review concludes that Eigenfaces are inaccurate across a wide variety of conditions, which limits their practical usefulness. A future research direction is the integration of Eigenfaces with deep learning to improve their real-time applicability and generalization.

This systematic literature review [] presents current advances and open issues in facial recognition technology, with special attention to system concepts, performance measures and the health, societal and security applications of the technology. The review notes that deep learning and CNN-based approaches have revolutionized recognition accuracy and efficiency. It also identifies unresolved concerns, such as privacy, ethical dilemmas and algorithmic bias, that require responsible and regulated application. The paper ends by identifying future research directions for enhancing system reliability, eliminating bias and building user trust through ethical conduct and privacy protection.

The paper [] presents a face recognition algorithm that uses quaternions to represent RGB images efficiently, since such images can be described by quaternion matrices. To handle the high dimensionality, the approach projects the data onto a subspace that retains the important information; in addition, a new Jacobi method is developed to solve the quaternion Hermitian eigenproblem. Experiments on a standard face recognition dataset show that the method attains comparable accuracy with a small number of Eigenfaces, which improves execution speed and scalability. The algorithm, implemented in Julia, has a low execution time and can work with larger image dimensions.

The authors of [] examine the use of CNNs for face recognition under constraints such as limited image data and variation in lighting and facial expression. The CNN model is compared with standard methods such as Eigenfaces, Fisherfaces, LBPH and MLPs, and it achieves higher accuracy and stability. The proposed CNN-based system maintained high recognition accuracy even though it was evaluated in a classroom environment with noisy, uncontrolled data. The work emphasizes the importance of fine-tuning the CNN architecture and the potential of CNNs under constrained conditions. According to the study, CNNs are the better choice for real-life, low-quality data.

2.1.2. Fisherfaces

The paper [] proposes a face recognition method for uncontrolled environments, referred to as the Enhanced Local Binary Pattern (EnLBP) method. EnLBP improves on traditional LBP by dividing input images into 3×3 sub-regions, computing the arithmetic mean of each sub-region and then applying LBP encoding, which reduces the dimensionality without losing essential texture information. Face matching uses cosine similarity, and the method is tested on the LFW-a benchmark, where it achieves better recall (61.43%) than existing LBP-based systems (56.75%). Furthermore, EnLBP has far lower computational complexity, which makes real-time implementation possible, and it is resilient to inconsistent lighting and a wide range of facial expressions, which is a significant advance.
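For orientation, the sketch below shows basic 8-neighbour LBP encoding followed by cosine-similarity matching of the resulting histograms. It is the traditional LBP baseline only, under the assumption of a single whole-image histogram; the sub-region averaging of EnLBP is not reproduced, and all function names are illustrative.

```python
import numpy as np

def lbp_codes(image):
    """8-neighbour LBP codes for the interior pixels of a 2-D grayscale array."""
    img = image.astype(np.int32)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    # Neighbour offsets in clockwise order, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Threshold the neighbour against the centre and set one bit per neighbour.
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes

def lbp_histogram(image):
    """Normalized 256-bin histogram of LBP codes."""
    hist = np.bincount(lbp_codes(image).ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

def cosine_similarity(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Synthetic image and a brightened, contrast-stretched copy (assumption).
rng = np.random.default_rng(0)
face = rng.integers(0, 100, size=(16, 16))
relit = 2 * face + 10
score = cosine_similarity(lbp_histogram(face), lbp_histogram(relit))
```

Because the codes depend only on the ordering of pixel intensities, the strictly increasing transformation applied to `relit` leaves them unchanged, which illustrates the robustness of LBP to monotonic illumination changes.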

The authors of [] provide a systematic review of facial recognition technologies, detailing how the systems work, advances in algorithm development, performance measures and operational use cases. The review emphasizes how much deep learning, and especially convolutional neural networks (CNNs), has improved accuracy and efficiency. It also highlights persistent obstacles, such as privacy concerns, ethical questions and algorithmic discrimination, that must be resolved for responsible adoption. The paper ends by recommending future studies that would further improve system reliability, minimize bias and support the ethical and regulated application of facial recognition technology.

The paper [] introduces a novel pooling method called Robust LBP Guiding Pooling (G-RLBP) to enhance the noise robustness of CNN-based face recognition systems. The method uses Robust Local Binary Pattern (RLBP) to estimate noise-affected pixels and assigns weights during pooling to reduce their impact on feature maps. Integrated into the first pooling layer of standard CNNs like AlexNet and ZF-5Net, G-RLBP significantly improves recognition accuracy under noisy conditions. Experimental results on ORL and AR datasets demonstrate that the proposed method outperforms traditional pooling, particularly when images are distorted by Gaussian or salt-and-pepper noise. The approach offers a practical enhancement to CNN architectures in real-world face recognition applications where image quality is often compromised.

2.2. Deep Neural Network Models

The advent of deep learning has redefined face recognition entirely, offering previously unseen levels of accuracy and stability in practice. At the center of this change are CNNs, Siamese networks and attention-based models, each of which brings different benefits for feature learning and representation. Recent studies, such as SwinFace and ViT-Face, demonstrate that Transformer-based architectures can capture long-range dependencies and spatiotemporal features, thereby improving robustness in dynamic surveillance scenarios. These strategies underscore the need for sophisticated deep learning approaches to address substantive problems such as motion blur, occlusion and dynamic illumination under real-world conditions.

2.2.1. CNNs

Most current face recognition systems are based on CNNs. Using hierarchically organized stacks of convolutional filters, CNNs automatically learn spatially local features that characterize facial structure at increasingly high levels of abstraction. Groundbreaking models such as DeepFace [], FaceNet [] and VGGFace [] showed that networks trained on large-scale datasets can reach near-human results on benchmark tasks. The advantage of CNNs is that they generate highly discriminative, high-dimensional embeddings that are largely invariant to changes in pose, lighting and occlusion.

Ref. [] constructed a dataset of 2.6 million images spanning 2622 identities, combining web-scraped data with human-in-the-loop validation to balance scale and purity. This provided the largest publicly available dataset of its kind at the time—surpassing WDRef, CelebFaces and LFW. End-to-end CNN models were trained for both face identification and verification using this dataset, achieving state-of-the-art results on major benchmarks of the time, including LFW and YouTube Faces (YTF), using a relatively streamlined CNN architecture. Additionally, the authors explored the balance between dataset size and label accuracy; incorporating the rough filtering of noisy identities enabled scaling up without severely degrading model efficiency.

The paper [] introduces MTCNN, a three-stage cascade of CNNs (P-Net, R-Net and O-Net) that jointly performs face detection and facial landmark localization in real time. Each stage uses increasingly complex CNNs to refine candidate regions and landmark positions. MTCNN simultaneously learns to detect faces and align them, leveraging the correlation between tasks to improve both accuracy and robustness. MTCNN achieves state-of-the-art results in both face detection and five-point facial landmark localization tasks on benchmark datasets like FDDB and WIDER FACE.

Ref. [] introduces lightweight models that combine the local feature extraction of CNNs with the global context awareness of Transformers. It also describes an AlexNet enhanced with generative adversarial training for age-variant recognition, and notes that ResNet offers high accuracy and fast convergence via inception-residual structures.

Ref. [] applied CLAHE (Contrast Limited Adaptive Histogram Equalization) and adaptive gamma correction for illumination normalization. The authors used MTCNN for accurate face detection and alignment across various poses and lighting conditions to stabilize feature extraction. They also fine-tuned multiple pretrained CNN backbones—VGG16, VGG19, ResNet50, ResNet101 and MobileNetV2 []—on targeted datasets (e.g., CASIA3D, 105PinsFace) to capture diverse facial representations.

The paper [] reviews the significant progress made in deep learning face recognition, including CNNs, transfer learning and face feature extraction techniques. In doing so, the paper identifies persistent issues: fairness and bias in recognition across demographics; privacy and security risks; and vulnerability to adversarial attacks, especially spoofing.

2.2.2. Siamese Networks

A different paradigm is the Siamese network, which was originally developed for signature verification and was applied to face verification by Chopra et al. in 2005 []. These models consist of two twin subnetworks that share weights and process pairs of images as input. Training teaches the network a distance measure that is minimized between embeddings of similar faces and maximized between embeddings of dissimilar faces. Among the best-known implementations is FaceNet, which employs a triplet loss to enforce this similarity constraint. Siamese-based architectures work especially well in few-shot learning settings, where little labeled data is available for new identities.

The authors of the paper [] address the limitations of classical face recognition techniques, especially under varying conditions like pose, lighting and occlusion. The proposed method leverages a Siamese network architecture, composed of two identical convolutional branches that learn to measure the similarity between face image pairs. The approach eliminates the need for face alignment by using multi-view and multi-illumination face samples during training, ensuring robustness across diverse scenarios. The system comprises two stages: face detection (using Haar feature-based cascades) and face recognition (using a Siamese CNN). The network is trained layer-by-layer using supervised learning and stochastic gradient descent. It is tested on a combined dataset consisting of LFW and lab-collected images, achieving an accuracy of 98.21%, which is competitive with state-of-the-art models like FaceNet and DeepID. Comparative experiments show the effectiveness of the approach, especially due to its efficient architecture and reduced reliance on complex preprocessing. The study concludes that Siamese CNNs are highly suitable for similarity-based biometric recognition and encourages further research in deep learning-based face recognition.

The paper [] proposes a complete low-resolution face recognition (LRFR) system that integrates face detection, super resolution (SR) and face recognition components. The system is specifically designed for real-world scenarios where high-resolution images are unavailable, such as from surveillance cameras or low-quality webcams. The core innovation lies in employing a Siamese network within the face recognition module to support one-shot learning and unbounded identity recognition.

The paper [] introduces a novel approach to low-resolution face recognition (LRFR) using a multi-stream CNN embedded in a Siamese architecture. The system is designed to handle facial images captured in uncontrolled conditions—common in real-world surveillance scenarios—where images suffer from blur, occlusion, pose variation and poor lighting. The proposed model features eight parallel CNN streams, each employing depth-wise separable convolutions to reduce complexity while preserving representational power. A spatial dropout layer and joint identification–verification supervisory signals are employed to improve feature learning and prevent overfitting. The network uses contrastive loss for metric learning to ensure that similar faces are mapped closely in the embedding space. Additionally, the paper proposes a learned thresholding mechanism rather than relying on a fixed similarity threshold. This enhances classification performance by adapting the decision boundary during training.
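The contrastive loss used for metric learning in Siamese architectures can be sketched as below. This is one common formulation (squared distance for matching pairs, squared hinge on the margin for non-matching pairs) and is given only as an illustration; the margin value and the function name are assumptions, and the cited work may use a different variant.

```python
import numpy as np

def contrastive_loss(emb_a, emb_b, same_identity, margin=1.0):
    """Mean contrastive loss over a batch of embedding pairs.

    emb_a, emb_b: (batch, dim) embeddings from the two weight-sharing branches.
    same_identity: (batch,) array with 1 for matching pairs and 0 otherwise.
    """
    dist = np.linalg.norm(emb_a - emb_b, axis=1)
    # Matching pairs are pulled together.
    pull = same_identity * dist ** 2
    # Non-matching pairs are pushed apart until they are at least `margin` away.
    push = (1 - same_identity) * np.maximum(margin - dist, 0.0) ** 2
    return float(np.mean(pull + push))

# Two toy pairs: identical embeddings, and embeddings at distance 5.
emb_a = np.array([[0.0, 0.0], [0.0, 0.0]])
emb_b = np.array([[0.0, 0.0], [3.0, 4.0]])
well_placed = contrastive_loss(emb_a, emb_b, np.array([1, 0]))
badly_placed = contrastive_loss(emb_a, emb_b, np.array([0, 1]))
```

At inference time, a pair is declared a match when its embedding distance falls below a threshold; a learned threshold, as proposed in the paper, replaces a hand-picked constant for that decision.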

The paper [] addresses the problem of face recognition under degraded conditions, such as occlusions (masks, sunglasses), illumination changes, facial expressions and pose variations, which commonly reduce the accuracy of traditional systems. The authors propose a few-shot learning approach using a Siamese network built upon a pretrained Inception-v3 model to enhance face recognition performance, particularly in uncontrolled environments with limited data. A novel Siamese network architecture based on Inception-v3 is designed for multi-class face recognition in degraded conditions. The network is trained using contrastive loss, which measures the similarity between image pairs, enabling robust face embedding even with few samples.

In the paper [], two streamlined architectures, (i) Simple SqueezeFaceNet (SSN) and (ii) Channel-Split Network (CSN), are proposed to achieve high-performance identification that is efficient in both space and time and runs in real time on embedded and mobile systems. The authors contrast traditional methods, which mainly optimize the FLOP count, with the newer measure of memory access cost (MAC), which is a more reliable indicator of inference speed. By balancing MAC and FLOPs, the CSN variants (CSN-fast, CSN-faster, CSN-fastest) reach an accuracy of 0.992 on LFW at 155–180 FPS, greatly exceeding MobileFaceNet in speed with only a small loss of accuracy. In sum, the paper shows that efficient parameterization and MAC optimization can yield compact, high-speed face recognition suitable for real-time video applications.

2.2.3. Attention-Based

Recently, attention-based models have become an influential alternative or complement to ordinary CNNs. Motivated by their success in natural language processing, these approaches use self-attention mechanisms or full Transformer architectures, in which computation is directed to the most informative parts of the face. In particular, Vision Transformers (ViTs) and hybrid models such as SwinFace [] and TransFace have achieved state-of-the-art results on many of the benchmarks on which they were evaluated. Attention improves the model’s ability to capture long-range dependencies and spatial context, which is particularly helpful when the quality of the facial region is poor or when there is large variation. Together, these deep learning-based methods have reshaped the technical landscape of face recognition. Although they require considerable computational resources and large training datasets, their ability to generalize, their flexibility and their high accuracy have made them the dominant paradigm in both scholarly research and commercial applications.

The paper [] develops a recognition algorithm for occluded faces based on a multi-scale attention mechanism that improves recognition in adverse conditions. Attention modules at different scales are combined so that the model attends to the visible, informative parts of the face while minimizing the influence of the occluded regions. Experiments on several standard face recognition datasets with different combinations of occlusions show that the method significantly improves recognition accuracy over baseline algorithms. The article establishes that multi-scale attention improves robustness and flexibility, so the model can be used for face recognition in practice, where occlusions are likely to occur.

The paper [] proposes an occluded face recognition method that combines an attention mechanism with damaged feature masking to improve robustness to partial occlusions. The damaged feature masking strategy suppresses unreliable features in occluded areas, whereas the attention module allows the network to focus on visible and informative facial regions. In experiments on benchmark datasets under various occlusion settings, the proposed method outperforms traditional methods with a substantial increase in recognition accuracy. The analysis shows the value of combining attention with feature masking for real-world occluded face recognition tasks.

The paper [] addresses the persistent challenge of face recognition in unconstrained environments where facial occlusions are common, such as in crowds, extreme head poses, or where there is partial visibility due to obstacles. The authors propose a novel deep learning approach for partial face recognition that leverages an attention-based architecture built upon a truncated ResNet-50 backbone. The proposed method demonstrates that attentional re-calibration and region-specific aggregation significantly enhance partial face recognition, making it feasible to match incomplete or occluded face images effectively. This solution is especially relevant for surveillance, forensic analysis and real-world applications where complete face visibility cannot be guaranteed.

The proposed model in [] adopts ConvNeXt-T as the backbone and incorporates the Efficient Channel Attention (ECA) mechanism. This combination enhances the extraction of discriminative features from the visible (unmasked) regions of the face while maintaining computational efficiency and avoiding unnecessary dimensionality reduction. The model achieved 99.76% accuracy on real-world masked face datasets and maintained 99.48% accuracy under challenging conditions such as poor lighting and contrast.
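The ECA mechanism can be illustrated with the NumPy sketch below, which follows the commonly described form of the module: global average pooling, a 1-D convolution across neighbouring channels, and a sigmoid gate. It is not the model of the cited paper; the kernel weights are hypothetical placeholders for values that would be learned during training.

```python
import numpy as np

def eca(feature_map, kernel):
    """Efficient Channel Attention on a single (C, H, W) feature map.

    kernel: 1-D array of odd length; learned in practice, fixed here.
    """
    # Squeeze: one descriptor per channel via global average pooling.
    pooled = feature_map.mean(axis=(1, 2))
    # Local cross-channel interaction: a 1-D convolution over the channel
    # axis with zero padding, so no channel dimensionality reduction occurs.
    k = len(kernel)
    padded = np.pad(pooled, k // 2)
    mixed = np.array([padded[i:i + k] @ kernel for i in range(len(pooled))])
    # Sigmoid gate in (0, 1), broadcast over the spatial dimensions.
    gate = 1.0 / (1.0 + np.exp(-mixed))
    return feature_map * gate[:, None, None]

rng = np.random.default_rng(0)
features = rng.random((8, 4, 4))                      # synthetic activations
attended = eca(features, np.array([0.2, 0.5, 0.2]))   # hypothetical weights
```

The channel descriptor keeps its full length throughout, in contrast to attention blocks that first compress it through a bottleneck layer, and the module adds only as many parameters as the kernel has weights.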

2.3. Deep Learning Architectures

2.3.1. FaceNet

More recently, specialized deep learning architectures have been proposed that directly address the constraints of face recognition at scale and in unconstrained environments. Some of the most notable are FaceNet, ArcFace (additive angular margin) and CosFace, along with similar models that introduce new loss functions and embedding approaches to maximize feature discrimination together with intra-class compactness. The most important step in this direction was the introduction of a unified framework in which a single model [,,] embeds facial images in a low-dimensional Euclidean space. The network is optimized with a triplet loss, which requires the distance between an anchor and a positive (same identity) to be smaller than the distance between the anchor and a negative (different identity) by a certain margin. The method does not require an intermediate classification layer, and high-performance face verification, clustering and identification are possible with a single model. Ref. [] pushed research towards face verification in real-world conditions by capturing natural variation in pose, lighting, occlusion and expression. Over 50 methods have been evaluated using LFW, spanning descriptor-based approaches (e.g., LBP, SIFT+Fisher vectors), metric and subspace learning, and CNN-based models. High-performing systems such as FaceNet achieved 99.6% accuracy, matching or surpassing human-level performance, with only a handful of residual errors that are often tied to labeling issues [].
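The triplet constraint described above can be written as a short NumPy sketch. It assumes squared Euclidean distances on L2-normalized embeddings and a margin of 0.2, which are illustrative choices; triplet selection (mining), which matters greatly in practice, is not shown.

```python
import numpy as np

def l2_normalize(x):
    """Scale each embedding to unit length."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean triplet loss over a batch of (anchor, positive, negative) embeddings."""
    d_pos = np.sum((anchor - positive) ** 2, axis=1)   # same identity
    d_neg = np.sum((anchor - negative) ** 2, axis=1)   # different identity
    # Penalize triplets where the negative is not at least `margin` farther
    # from the anchor than the positive.
    return float(np.mean(np.maximum(d_pos - d_neg + margin, 0.0)))

anchor = l2_normalize(np.array([[1.0, 0.0]]))
positive = l2_normalize(np.array([[1.0, 0.0]]))
far_negative = l2_normalize(np.array([[-1.0, 0.0]]))

satisfied = triplet_loss(anchor, positive, far_negative)   # constraint holds
violated = triplet_loss(anchor, positive, anchor)          # negative collapses onto anchor
```

A triplet that already satisfies the margin contributes zero loss, so training effort concentrates on triplets that violate the constraint.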

The paper [] presents a CNN-based system for automated attendance monitoring through real-time face recognition. The system utilizes two prominent deep learning models, FaceNet and VGG-16, aiming to identify multiple faces simultaneously in live settings such as classrooms or offices. The FaceNet model is used for face embedding and identification, while Haar Cascade is applied for initial face detection. A custom dataset containing images of 32 students was created and stored on Amazon RDS, ensuring cloud-based, secure storage and fast access. The system workflow involves capturing images through a camera, detecting faces, extracting features using FaceNet, comparing them against a registered database and logging attendance automatically.

The article [] introduces Low-FaceNet, a deep learning framework designed to improve face recognition performance in low-light conditions, where traditional models often fail due to degraded visual quality. Unlike prior approaches, Low-FaceNet integrates low-light image enhancement (LLE) and face recognition into a single unified model, enabling mutual benefits between the enhancement and recognition tasks.

The paper [] presents Fast-FaceNet, a lightweight face recognition system that integrates MobileNet into FaceNet to reduce computational cost and increase processing speed. Depth-wise separable convolutions and the triplet loss function are used to learn compact, discriminative feature embeddings. Experiments on LFW demonstrate that Fast-FaceNet has accuracy similar to the original FaceNet with a speedup of more than 2.5×, which makes it suitable for real-time and mobile face recognition applications. Overall, it offers an effective trade-off between accuracy and speed.
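The saving offered by depth-wise separable convolutions can be checked with simple arithmetic. The sketch below counts weights and multiply-add operations for one layer, assuming stride 1, an output of the same spatial size as the input and no bias terms; the layer dimensions are illustrative and are not taken from Fast-FaceNet.

```python
def standard_conv_cost(k, c_in, c_out, h, w):
    """Weights and multiply-adds of a standard k x k convolution."""
    params = k * k * c_in * c_out
    return params, params * h * w

def depthwise_separable_cost(k, c_in, c_out, h, w):
    """Weights and multiply-adds of a depth-wise k x k convolution
    followed by a 1 x 1 point-wise convolution."""
    depthwise = k * k * c_in        # one k x k filter per input channel
    pointwise = c_in * c_out        # 1 x 1 convolution mixes the channels
    params = depthwise + pointwise
    return params, params * h * w

# Illustrative layer: 3 x 3 kernel, 64 -> 128 channels, 56 x 56 feature map.
k, c_in, c_out, h, w = 3, 64, 128, 56, 56
std_params, std_ops = standard_conv_cost(k, c_in, c_out, h, w)
sep_params, sep_ops = depthwise_separable_cost(k, c_in, c_out, h, w)
ratio = sep_ops / std_ops
```

For this layer the ratio equals 1/c_out + 1/k², roughly 0.12, so the separable version needs about one eighth of the arithmetic. Operation counts alone do not determine speed, however, which is why memory access cost is also considered in lightweight designs.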

Building on the idea of embedding-based learning in FaceNet, CosFace [] and ArcFace [] introduced margin-based Softmax losses that further enhanced the discriminative capacity of the learned features.

2.3.2. CosFace

CosFace adds an additive cosine margin to the Softmax loss, which enlarges the angular margin between classes. Ref. [] introduced ArcFace, a loss function that adds an explicit angular margin in normalized hyperspherical space, directly corresponding to the geodesic distance between feature vectors and class centers. Unlike SphereFace’s multiplicative margin or CosFace’s cosine margin, ArcFace’s additive margin has a clear, exact geometric interpretation, which enhances convergence stability and computational efficiency. It outperformed previous methods across ten face recognition benchmarks, including massive image and video datasets, improving large-scale recognition accuracy. Unlike A-Softmax (SphereFace), which uses a multiplicative angular margin and leads to unstable, non-monotonic decision boundaries, the additive margin of CosFace introduced in ref. [] is geometrically intuitive and monotonic, improving optimization stability. This formed the foundation for later margin-based losses such as ArcFace and ElasticFace. Ref. [] states that, unlike margin-based losses such as ArcFace or CosFace that focus solely on enhancing the target class, RPCL introduces a reverse mechanism that suppresses the rival logit for each sample. This increases inter-class separation and reduces misclassification, especially under low-resolution conditions. RPCL outperforms existing margin-based and uncertainty-aware methods on multiple low-resolution (LR) face datasets, demonstrating significantly improved robustness under severe resolution degradation.
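The additive cosine margin can be sketched in a few lines of NumPy. The sketch below assumes L2-normalized embeddings and class weights, a scale `s` and a margin `m` subtracted from the target-class cosine only; the default values of `s` and `m` and all function names are illustrative assumptions rather than settings taken from the reviewed papers.

```python
import numpy as np

def cosface_logits(embeddings, class_weights, labels, s=30.0, m=0.35):
    """Scaled cosine logits with an additive cosine margin on the target class."""
    e = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = class_weights / np.linalg.norm(class_weights, axis=1, keepdims=True)
    cos = e @ w.T                          # cosine similarity to every class centre
    rows = np.arange(len(labels))
    cos[rows, labels] -= m                 # penalize only the ground-truth class
    return s * cos

def softmax_cross_entropy(logits, labels):
    """Numerically stable mean cross-entropy over a batch."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())

# One sample perfectly aligned with class 0 (hypothetical values).
x = np.array([[1.0, 0.0]])
W = np.array([[1.0, 0.0], [0.0, 1.0]])
y = np.array([0])

with_margin = cosface_logits(x, W, y)
loss_margin = softmax_cross_entropy(with_margin, y)
loss_plain = softmax_cross_entropy(cosface_logits(x, W, y, m=0.0), y)
```

Even for a perfectly aligned sample, the margin lowers the target logit, so the loss stays higher than with a plain normalized Softmax and keeps pushing the classes apart.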

2.3.3. ArcFace

ArcFace goes further by adding an angular margin penalty that not only keeps classes well separated but also draws features of the same class together into a compact cluster. These changes yield better results on common face recognition benchmarks, namely LFW, MegaFace and the IJB datasets. Other architectures of note are SphereFace [], with a multiplicative angular margin, and MagFace [], which adds magnitude-aware learning to condition the feature representation on the quality of face images. These innovations aim to tackle practical problems such as intra-class variability, class imbalance and quality-aware recognition. These designs owe much of their success to their careful shaping of the embedding space, since the geometry of the learned features is directly related to recognition performance. When coupled with large-scale datasets and a strong CNN backbone (e.g., ResNet, MobileFaceNet), these models scale well and achieve high accuracy.
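The angular margin penalty can be illustrated with a simplified NumPy sketch: the margin `m` is added to the angle between an embedding and its ground-truth class centre before the cosine is taken. The values of `s` and `m` and the function name are assumptions for illustration, and the sketch omits the handling of angles that exceed π after the margin is added, which practical implementations usually include.

```python
import numpy as np

def arcface_logits(embeddings, class_weights, labels, s=64.0, m=0.5):
    """Scaled logits with an additive angular margin on the target class.

    Simplified: no special case for theta + m > pi.
    """
    e = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = class_weights / np.linalg.norm(class_weights, axis=1, keepdims=True)
    cos = np.clip(e @ w.T, -1.0, 1.0)      # guard arccos against rounding errors
    theta = np.arccos(cos)                 # angle to every class centre
    rows = np.arange(len(labels))
    theta[rows, labels] += m               # widen the angle for the true class only
    return s * np.cos(theta)

# One sample perfectly aligned with class 0 (hypothetical values).
x = np.array([[1.0, 0.0]])
W = np.array([[1.0, 0.0], [0.0, 1.0]])
y = np.array([0])

logits = arcface_logits(x, W, y)
```

Because the margin acts on the angle itself, it corresponds to a fixed arc length on the unit hypersphere, which is the geometric interpretation usually attributed to this family of losses.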

The paper [] proposes AM-Softmax, a modified Softmax classifier that incorporates a fixed additive margin in the cosine similarity space to better separate classes. AM-Softmax was contrasted with multiplicative margin losses (SphereFace) and additive angular margin losses (later seen in ArcFace), demonstrating stable convergence and competitive accuracy while maintaining algorithmic simplicity.

2.3.4. OpenFace

The paper [] proposed a new technique for identifying suitable hard-negative examples during training with triplet loss. The method makes use of sample pairs that would otherwise be discarded and thus improves model accuracy. To handle the risk of premature convergence caused by the added hard-negative samples, it uses the Adaptive Moment Estimation (Adam) optimization algorithm. The proposed method achieved an accuracy of 0.955 and an AUC of 0.989 on the LFW verification benchmark, exceeding the accuracy of 0.929 and AUC of 0.973 of the original OpenFace model.

The UCEC-Face dataset [] consists of 7395 images of 130 subjects, of whom 44 are men and 86 are women. To confirm that there is still room to improve accuracy in Asian face verification, the authors also apply four face verification models, including OpenFace, ArcFace and VGG-Face, along with gender, expression and age recognition, to UCEC-Face.

The paper [] presents OpenFace, an open-source face recognition library that aims to close the accuracy gap between publicly available and state-of-the-art private face recognition systems. As cameras become integrated into the Internet of Things (IoT), face recognition can be used to improve contextual understanding. OpenFace achieves state-of-the-art, near-human accuracy on the LFW benchmark and is also evaluated on a new classification benchmark that focuses on the mobile setting. Targeting non-experts, the paper also provides a simplified overview of the deep neural networks used in the system.

In the article [], the authors developed a face recognition system based on OpenFace combined with an adaptive training technique known as S-DDL (Self-Detection, Decision, and Learning). Through an incremental SVM algorithm, S-DDL allows the model to be updated in real time, in contrast to the fixed SVM model of conventional systems. This adaptive method increases recognition performance for particular user groups while keeping training times reasonable. Experimental findings show that the system is promising, with high accuracy and real-time performance, and that the incremental SVM outperforms the traditional SVM.

2.3.5. SFace

Deep face recognition has advanced thanks to large-scale training datasets and to improved loss functions that reduce intra-class distance while increasing inter-class distance. However, current techniques are ill-equipped to account for low-quality training images, which leads to overfitting. In response, the authors of [] introduce a new loss, the Sigmoid-Constrained Hypersphere Loss (SFace), which uses sigmoid functions to smooth the optimization process and to balance the intra- and inter-class objectives. Experiments on a variety of benchmark datasets show that SFace enhances the robustness and recognition capability of a model.

Recent deep face recognition models achieve their best results with large-scale face datasets such as MS-Celeb-1M and VGGFace2, which were later removed because of privacy issues. To address this matter, the work [] investigates a synthetically generated, privacy-friendly face dataset, also called SFace, which was produced with a class-conditional GAN. The study finds that the synthetic images are difficult to trace back to identities in the original dataset and proposes three training strategies: multi-class classification, label-free knowledge transfer and a combination of the two. The experimental findings show that models trained on SFace perform well on real-world benchmarks, reaching, for example, an accuracy of 99.13% on LFW, which confirms both the performance and the privacy benefits of the approach.

The privacy, ethical and legal issues surrounding authentic face databases such as MS-Celeb-1M and VGGFace2 have since raised further interest in synthetic data for training face recognition architectures. The work [] presents SFace as a class-conditional GAN-based generator of labeled synthetic face images and establishes, through an extensive study, that the generated images do not pose a risk to the identity of people in the original training datasets. Three training strategies are proposed and tested on operational benchmarks as viable uses of synthetic data when developing privacy-aware models. To further boost identity discrimination, the revised SFace2 procedure samples from the GAN latent w-space, which improves face recognition performance.

2.4. Face Detection

There are many proposals aimed at improving the accuracy and speed of face detection. However, there is still no universal solution that works effectively under various conditions such as low lighting, poor image quality and different viewing angles. Therefore, it is necessary to investigate modern approaches and models, identify their strengths and weaknesses and develop a more universal and reliable solution that would work quickly and efficiently in challenging conditions. In recent years, several models have been proposed to address the challenges of real-time face detection. Yisihak and Li [] explore the application of YOLOv8, demonstrating its high accuracy and efficiency in real-time conditions. Their study highlights how the integration of YOLOv8 into AI systems can improve performance in security and surveillance applications, making it an attractive choice for such environments.

The issue of detecting small faces at long distances in crowded settings is addressed by Chang et al. []. They enhance YOLOv3-C by incorporating multi-scale feature extraction, which improves the model’s sensitivity to small faces. This development proves valuable in complex scenarios, such as those involving high-density crowds or large surveillance areas.

In a similar vein, Adarsh et al. [] focus on balancing detection accuracy and processing speed. Their work presents an improved version of YOLOv3-Tiny, optimized for real-time applications, especially in resource-constrained environments like mobile and embedded systems. This makes the model suitable for deployment on devices with limited computational power, similar to the need addressed by Chang et al. for crowded environments.

While these studies focus on YOLO variants, other models have been proposed to tackle face detection in different ways. For example, Yan et al. [] introduce an improved Faster RCNN with a novel non-maximum suppression (NMS) algorithm, which reduces false positives and missed detections, particularly in challenging conditions such as occlusion or uneven lighting. This approach focuses on improving detection accuracy in scenarios where YOLO-based models might face limitations.
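For context, the baseline that improved NMS variants build on is standard greedy non-maximum suppression, sketched below in NumPy. This is the conventional algorithm, not the novel NMS proposed by Yan et al.; the `[x1, y1, x2, y2]` box format, the IoU threshold of 0.5 and the function names are assumptions for illustration.

```python
import numpy as np

def iou(box, boxes):
    """Intersection over union between one box and an array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Keep the highest-scoring box, drop boxes overlapping it, and repeat."""
    order = np.argsort(scores)[::-1]       # indices sorted by descending score
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]
    return keep

# Two heavily overlapping detections and one separate detection (hypothetical).
boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
kept = greedy_nms(boxes, scores)
```

The hard threshold is what makes greedy NMS prone to missed detections under occlusion, since a genuinely distinct but overlapping face is discarded together with duplicates.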

Liu and Yu [] take a different approach with a lightweight version of RetinaFace, achieving high detection accuracy while maintaining computational efficiency. Their model outperforms the original RetinaFace and other lightweight detectors, making it particularly suitable for applications requiring both accuracy and efficiency in real-time systems.

Meanwhile, Owusu et al. [] propose a face detection method based on a multilayer feed-forward neural network with Haar features, achieving impressive results in terms of detection accuracy (98.5%) on several datasets, including FDDB and CMU-MIT. This method is noted for its speed and efficiency, which is beneficial for real-time applications in environments with limited processing power.

Another relevant contribution comes from Wang et al. [], who focus on improving the accuracy of eye detection in complex environments by introducing a specialized eye regression network. Their improved MTCNN network achieves remarkable results on benchmark datasets, including LFW and YouTube Faces DB, further emphasizing the importance of model optimization for specific detection tasks.

Finally, Xu et al. [] propose CenterFace, a joint face detection and facial landmark alignment method that runs efficiently even on devices with limited computational resources. The model, which is anchor-free, demonstrates high accuracy on challenging benchmarks like WIDERFACE and FDDB, making it ideal for low-power edge environments.

While the majority of recent works have focused on improving the accuracy and speed of face detection models, this stage represents only the foundation of the broader facial analysis pipeline. As previously noted, the effectiveness of face recognition systems is highly dependent on the quality and precision of the detection stage. However, face recognition itself introduces an additional layer of complexity. In real-world applications, systems must contend not only with basic alignment but also with significant intra-class variations (such as changes in facial expression, aging or lighting) and inter-class similarities (such as individuals with similar appearance), all of which can dramatically impact recognition performance.

To address these challenges, several modern approaches have been proposed that aim to increase the robustness and accuracy of face recognition systems in practical scenarios. For example, Schroff et al. [] propose FaceNet, a deep learning system that directly maps facial images to a compact Euclidean embedding space, where distances correspond to facial similarity. Unlike earlier approaches that relied on intermediate representations, FaceNet learns the embedding directly through training with triplets of matched and non-matched face images using a novel online triplet mining strategy. This enables high representational efficiency, requiring only 128 bytes per face descriptor, and achieves state-of-the-art accuracy on benchmarks such as LFW (99.63%) and YouTube Faces DB (95.12%), reducing the previous error rate by 30%. The model is well-suited for recognition, verification and clustering tasks in large-scale scenarios.

Building on this foundation, Deng et al. [] introduce ArcFace, which incorporates an additive angular margin loss into the softmax framework to enhance the discriminative power of learned features. This loss function provides a clear geometric interpretation and significantly improves inter-class separability and intra-class compactness. Recognizing the susceptibility of ArcFace to label noise, the authors further propose sub-center ArcFace, which introduces multiple sub-centers per class to better handle noisy and hard examples. Beyond recognition, the model demonstrates its versatility by generating identity-preserving face images without training separate generative models, using only gradients and Batch Normalization priors.

To further improve adaptability in real-world conditions, Boutros et al. [] present ElasticFace, which relaxes the fixed-margin constraint used in traditional margin-based loss functions such as ArcFace and CosFace. ElasticFace introduces a stochastic margin sampled from a normal distribution during training, allowing the model to better adapt to inconsistent inter- and intra-class variations. This flexible margin encourages dynamic decision boundaries, enhancing both discriminability and generalization. The method outperforms previous approaches across seven of nine standard benchmarks, making it a robust solution for face recognition in complex settings.
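The stochastic margin idea can be sketched by replacing the fixed angular margin with a value drawn per sample from a normal distribution. The sketch below applies this to an additive angular margin; the mean `m`, the standard deviation `sigma`, the scale `s` and the function name are illustrative assumptions, and the code is a simplified reading of the idea rather than the authors' implementation.

```python
import numpy as np

def elastic_angular_logits(embeddings, class_weights, labels, rng,
                           s=64.0, m=0.5, sigma=0.05):
    """Angular-margin logits whose margin is sampled per training sample."""
    e = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = class_weights / np.linalg.norm(class_weights, axis=1, keepdims=True)
    theta = np.arccos(np.clip(e @ w.T, -1.0, 1.0))
    # One margin per sample, drawn from N(m, sigma^2); sigma=0 gives a fixed margin.
    margins = rng.normal(loc=m, scale=sigma, size=len(labels))
    rows = np.arange(len(labels))
    theta[rows, labels] += margins
    return s * np.cos(theta), margins

# Two samples aligned with their class centres (hypothetical values).
x = np.array([[1.0, 0.0], [0.0, 1.0]])
W = np.array([[1.0, 0.0], [0.0, 1.0]])
y = np.array([0, 1])

rng = np.random.default_rng(0)
fixed_logits, _ = elastic_angular_logits(x, W, y, rng, sigma=0.0)
elastic_logits, sampled = elastic_angular_logits(x, W, y, rng)
```

With `sigma=0.0` the function reduces to a fixed-margin loss, which makes the relationship to ArcFace-style margins explicit.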

Kim and Lee [] shift focus to facial expression recognition under occlusion and intra-similarity challenges. Their proposed method combines a Spatial Transformer Network (STN) with an attention mechanism to isolate expression-relevant facial regions. In parallel, a triplet loss function is employed to minimize intra-class variability caused by differing facial structures among individuals expressing the same emotion. This dual strategy proves effective, yielding performance gains of 2.09% and 0.48% over classical and modified ResNet-based methods on the CK+ and FER2013 datasets, respectively. The model’s robustness under occlusion highlights its practical applicability in real-world FER scenarios.

Efficiency for deployment on edge devices is addressed by Chen et al. [], who develop MobileFaceNets, a family of highly compact CNN architectures optimized for real-time face verification. These models use fewer than one million parameters and achieve superior accuracy compared to traditional mobile networks, with more than double the speed of MobileNetV2. Despite their lightweight design, MobileFaceNets trained with ArcFace loss reach 99.55% accuracy on LFW and 92.59% TAR@FAR1e-6 on MegaFace, matching much larger CNNs. Their small size (4 MB) and fast inference (as low as 18 ms on mobile devices) make them ideal for embedded applications.

Lastly, Li and Lee [] focus on the persistent challenge of cross-age face recognition. They propose an attention-based feature decomposition framework that isolates age-invariant identity features from facial representations. The model uses a channel attention mechanism to separate identity and age-related attributes and applies a direct sum loss to suppress aging interference. This end-to-end architecture reduces training complexity and improves performance on cross-age benchmarks (CACD-VS, AgeDB, CALFW) as well as general-purpose datasets (LFW), demonstrating enhanced robustness to aging effects.

Together, these advancements represent a multifaceted response to the evolving demands of face recognition systems. From embedding learning and margin adaptation to expression recognition, lightweight architectures and age-invariant modeling, the literature reflects a sustained effort to meet real-world requirements in both accuracy and efficiency.

In summary, despite significant advancements in both face detection and recognition, there remains a clear gap in research that comprehensively addresses both tasks under diverse challenging conditions—such as low lighting, poor image quality, occlusions and varied viewing angles. Most existing studies tend to focus exclusively on either improving face detection or enhancing recognition algorithms, often considering only one specific challenging factor at a time.

In contrast, this work aims to fill this gap by conducting an integrated investigation involving the fine-tuning and experimental evaluation of state-of-the-art face detection models, alongside the testing and assessment of face recognition systems across multiple datasets that reflect a broad range of real-world scenarios. This holistic approach not only enhances the robustness and generalizability of facial analysis systems but also provides a more complete understanding of their performance under diverse conditions.

2.5. Research Gaps

Despite significant progress in the field of face recognition using deep learning, a number of unresolved issues persist, especially in environments beyond controlled scenarios. The key research gaps identified based on an analysis of the existing literature are presented below in Table 1:

Table 1.

Research gaps.

3. Materials and Methods

3.1. Datasets

To measure and compare the face recognition algorithms in different real-life situations, a wide range of freely available datasets was used. Each dataset was chosen for its particular contribution to a face recognition challenge, namely pose variation, aging effects and low-resolution surveillance. This section gives brief information about each dataset (Table 2).

Table 2.

Information about datasets used.

The Labeled Faces in the Wild (LFW) dataset is one of the most common benchmarks for studying unconstrained face recognition. The dataset was created by researchers at the University of Massachusetts, Amherst. The faces were detected with the Viola–Jones face detector, and the images are provided in several aligned versions. The deep-funneled version is reported to give better results than the others for most face verification algorithms, and it is the one used in this study. The dataset also includes metadata files that aid in the generation of training/testing protocols. Specifically, 1680 of the people in the dataset have two or more distinct images.

The Cross-Pose LFW (CPLFW) dataset is built upon LFW and focuses on pose-induced face variation as a challenge for unconstrained face recognition. It provides 6000 test pairs in the form [filename1, filename2, is_same] and can thus be used for face verification. Though rooted in the same image pool as LFW, this set isolates and highlights the impact of pose differences, which requires algorithms to be robust to facial orientation.

To address the lack of age-related diversity in LFW, the Cross-Age LFW (CALFW) dataset introduces intra-class age variation by constructing 3000 positive pairs of faces with large age differences. The negative pairs are also carefully curated to be gender- and race-balanced, which minimizes inter-class attribute bias. This deliberate construction makes the face verification task more challenging and more realistic. Experiments report that performance degrades by 10–17% compared to LFW, which underlines the need for age-invariant models.

AgeDB-30 is a collection of face images that emphasizes age variability; it covers 440 people aged between 3 and 101 years. The dataset includes identity, age and gender annotations; thus, it can be used for age estimation, age-invariant face recognition and age progression modeling. Its wide age range and realistic image variety make AgeDB-30 a valuable tool for assessing how an algorithm copes with changes across the lifespan.

QMUL-SurvFace is a large-scale dataset prepared for low-resolution, unconstrained face recognition in surveillance settings. It consists of people captured in natural surveillance video across different locations, spatial scales and times. Unlike many datasets that simulate low resolution by down-sampling higher-resolution pictures, SurvFace contains native low-resolution images. It is particularly suited to the open-set tasks typical of surveillance, where distractors unrelated to the targets make recognition much more complex.

3.2. Evaluation Metrics

For real-time, unconstrained face recognition systems, proper evaluation with appropriate measures is essential for fair comparison with current state-of-the-art models. This section defines the most common measures used in face recognition work, especially those that assess classification and verification performance, and describes the computational environment of the experiments.

All experiments were performed on a laptop with an Intel Core i7-10750H CPU, an NVIDIA GeForce RTX 2060 GPU and 16 GB of DDR4 RAM, which provided sufficient capacity for computation and data processing. The software stack was based on Python 3.12.7 with DeepFace and TensorFlow-GPU to speed up training and inference.
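A verification run with this kind of stack might be organized as in the sketch below, which scores a list of image pairs and collects distances and ground-truth labels for later metric computation. The helper name `score_pairs`, the pair format and the `verify_fn` parameter are hypothetical; only `DeepFace.verify` and its `img1_path`, `img2_path`, `model_name` and `enforce_detection` arguments come from the DeepFace library, and the exact configuration used in the experiments is not stated here.

```python
def score_pairs(pairs, model_name="ArcFace", verify_fn=None):
    """Return (distances, labels) for an iterable of (img1, img2, is_same) pairs.

    `verify_fn` is a hypothetical hook that allows the verifier to be replaced
    (for example in tests); by default DeepFace.verify is used.
    """
    if verify_fn is None:
        # Imported lazily so the helper can be loaded without DeepFace installed.
        from deepface import DeepFace
        verify_fn = DeepFace.verify

    distances, labels = [], []
    for img1, img2, is_same in pairs:
        result = verify_fn(
            img1_path=img1,
            img2_path=img2,
            model_name=model_name,       # e.g. "Facenet", "ArcFace", "OpenFace", "SFace"
            enforce_detection=False,     # do not raise when no face is detected
        )
        distances.append(result["distance"])  # smaller distance = more similar
        labels.append(int(is_same))
    return distances, labels

# Usage (paths are placeholders):
# distances, labels = score_pairs([("a.jpg", "b.jpg", True)], model_name="SFace")
```

Collecting raw distances rather than the library's thresholded decision keeps the threshold-dependent metrics, such as EER, under the experimenter's control.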

The following evaluation metrics were used to assess the performance of the fine-tuned models developed during the experiments: ROC-AUC, EER (Equal Error Rate), Threshold at EER, Accuracy, Precision, Recall and F1-Score.

ROC-AUC measures how well the system can differentiate genuine matches (same person) from impostor matches (different persons) in face recognition, specifically in verification problems, in which the system decides whether two faces belong to the same person []. A high AUC value indicates that the system tends to rank genuine matches above impostor matches regardless of the chosen threshold.

EER is of special interest in face-based biometric systems, where the aim is to keep both false acceptances (unauthorized persons accepted) and false rejections (authorized persons rejected) low []. EER is the error rate at the operating point where the false acceptance rate (FAR) equals the false rejection rate (FRR); the lower the EER, the more balanced and reliable the recognition system.

The threshold at EER [] is the value of the decision score (e.g., a similarity threshold) at which FAR equals FRR. It is often used as a default operating point for decision-making, since it yields balanced performance in practical deployments.
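The three threshold-related measures above can be computed from a list of similarity scores and ground-truth labels, as in the following minimal NumPy sketch. It assumes that higher scores mean greater similarity (distances would have to be negated first) and approximates the EER by the threshold at which FAR and FRR are closest; the function names and toy scores are illustrative assumptions.

```python
import numpy as np

def far_frr(scores, labels):
    """FAR and FRR at every distinct score used as an acceptance threshold."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    genuine, impostor = scores[labels == 1], scores[labels == 0]
    thresholds = np.unique(scores)
    far = np.array([(impostor >= t).mean() for t in thresholds])  # impostors accepted
    frr = np.array([(genuine < t).mean() for t in thresholds])    # genuines rejected
    return thresholds, far, frr

def roc_auc(scores, labels):
    """AUC as the probability that a genuine pair outscores an impostor pair."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    diff = scores[labels == 1][:, None] - scores[labels == 0][None, :]
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())    # ties count half

def eer_and_threshold(scores, labels):
    """Approximate EER and the threshold where |FAR - FRR| is smallest."""
    thresholds, far, frr = far_frr(scores, labels)
    i = int(np.argmin(np.abs(far - frr)))
    return float((far[i] + frr[i]) / 2.0), float(thresholds[i])

# Toy similarity scores: three genuine pairs, three impostor pairs (hypothetical).
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.2]
labels = [1, 1, 1, 0, 0, 0]

auc = roc_auc(scores, labels)
eer, thr = eer_and_threshold(scores, labels)
```

In this toy example one genuine pair scores below one impostor pair, so the AUC is 8/9, and FAR and FRR meet at one third when pairs scoring at least 0.6 are accepted.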

Accuracy measures the proportion of correct decisions, for example the share of individuals correctly identified in closed-set identification, where every person is known and present in the training dataset [,]. Although easy to calculate, it can be misleading when the dataset is imbalanced or when the system is used in open-set conditions (i.e., when unknown individuals may appear).

In face recognition and face detection, a high Precision score is necessary when false positives have significant consequences (e.g., security access) [], and a high Recall score is needed when missing a genuine identity is costly in economic or security terms (e.g., identifying criminals in video surveillance).

The F1-score is the harmonic mean of Precision and Recall. For example, when face recognition is used within a Smart City framework to personalize marketing content in a public space, a high F1-score indicates that the content reaches most of the intended people (high recall) and that most of the people it reaches are indeed the intended ones (high precision), thus reducing both false alerts and missed alerts.
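For reference, Accuracy, Precision, Recall and F1-score all follow directly from the four cells of a confusion matrix. The sketch below uses the standard definitions; the function name and the example counts are hypothetical and are not taken from the experimental results.

```python
def verification_metrics(tp, tn, fp, fn):
    """Standard threshold-dependent metrics from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0   # accepted pairs that are genuine
    recall = tp / (tp + fn) if (tp + fn) else 0.0      # genuine pairs that are accepted
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)            # harmonic mean of the two
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical counts for 200 verification pairs.
m = verification_metrics(tp=90, tn=80, fp=20, fn=10)
```

With these counts the accuracy is 0.85 and the recall is 0.90, while the 20 false positives pull the precision down to roughly 0.82, which the F1-score reflects.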

4. Results

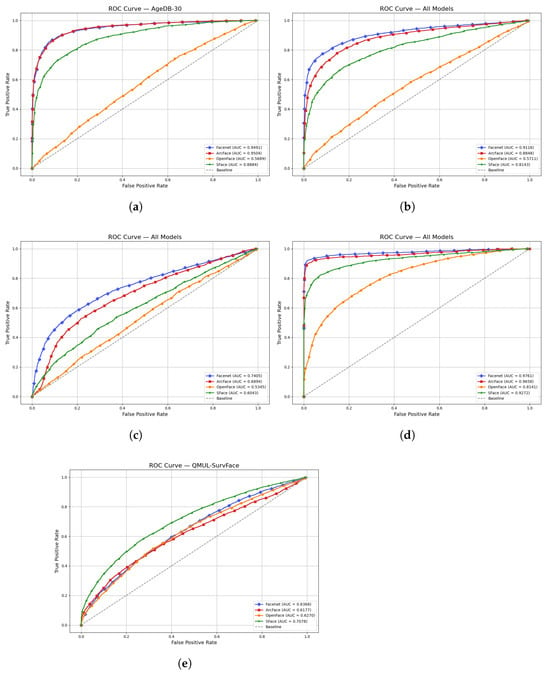

This section presents the comparative performance analysis of four face recognition models (FaceNet, ArcFace, OpenFace and SFace) evaluated across five benchmark datasets. The assessment is based on ROC curves, as illustrated in Figure 1. On the LFW dataset, which contains images captured in unconstrained conditions, FaceNet, ArcFace and SFace demonstrated strong verification performance. FaceNet achieved the highest AUC of 0.9761, followed closely by ArcFace (0.9658) and SFace (0.9272). OpenFace, which relies on more traditional embedding techniques, yielded a significantly lower AUC of 0.8141, indicating its limitations in modeling complex facial variations under unconstrained scenarios.

Figure 1.

ROC Curve: (a) AgeDB-30. (b) CALFW. (c) CPLFW. (d) LFW. (e) QMUL-SurvFace.

The CPLFW dataset introduces pose variations to the LFW dataset, increasing the difficulty of face verification. The performance of all models declined under this setting. FaceNet remained the best-performing model with an AUC of 0.7405, followed by ArcFace (0.6894) and SFace (0.6043). OpenFace exhibited a drastic performance drop, recording the lowest AUC of 0.5345, close to random guessing. These results highlight the vulnerability of classical models and the relative robustness of deep models to pose variations.

On AgeDB-30, a dataset that captures age-related intra-class variations, both FaceNet and ArcFace maintained high performance, achieving AUCs of 0.9491 and 0.9504, respectively. SFace also performed well with an AUC of 0.8884, while OpenFace demonstrated poor generalization across age gaps, yielding an AUC of only 0.5689. These findings suggest that deep models, particularly ArcFace and FaceNet, are more effective at preserving identity despite significant age differences.

The CALFW dataset further evaluates models under age variation, with additional challenges due to image quality. FaceNet again led with an AUC of 0.9116, followed by ArcFace (0.8848) and SFace (0.8143). OpenFace’s performance remained subpar with an AUC of 0.5711. The consistent superiority of FaceNet and ArcFace on age-varied datasets demonstrates their robustness in long-term facial appearance changes.

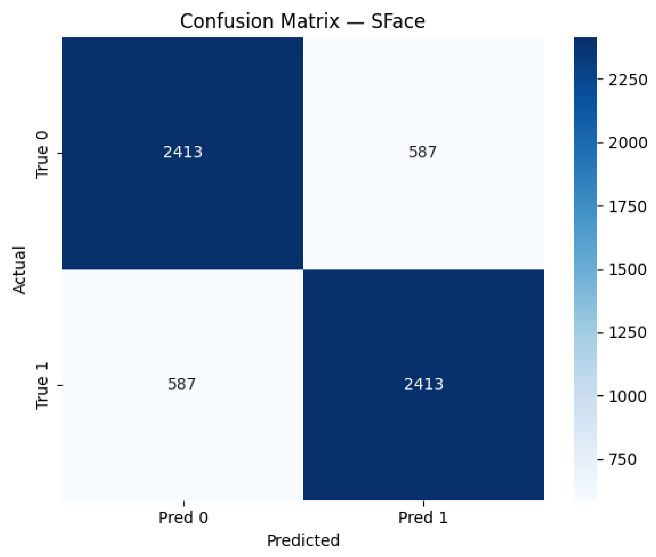

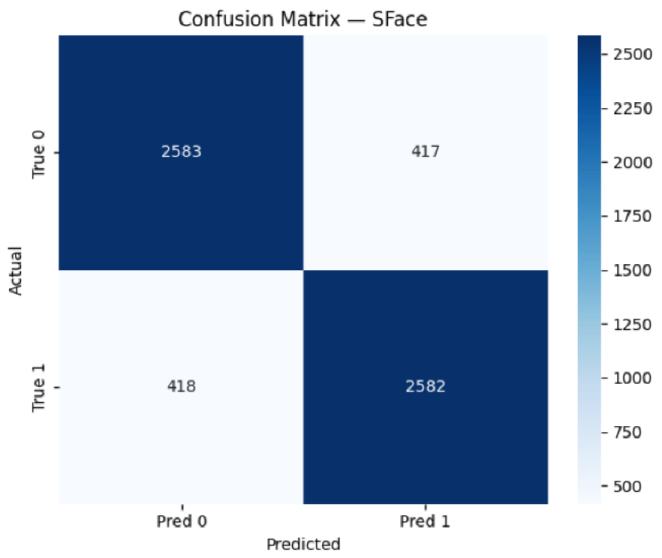

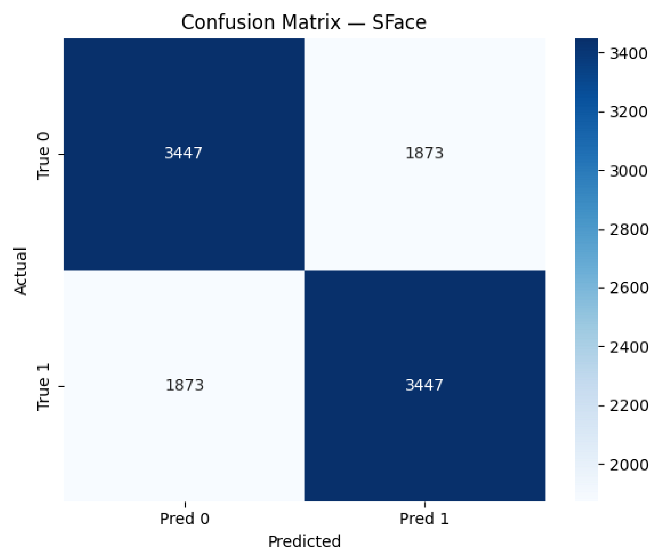

The QMUL-SurvFace dataset simulates real-world surveillance scenarios, characterized by low resolution and poor image quality. In contrast to previous datasets, SFace achieved the highest AUC of 0.7078, suggesting better adaptation to degraded inputs. FaceNet and ArcFace recorded AUCs of 0.6368 and 0.6177, respectively, while OpenFace achieved 0.6270. These results imply that SFace may offer greater potential for deployment in low-quality or surveillance-based environments.

The comparative evaluation reveals that FaceNet and ArcFace consistently deliver superior verification performance across most conditions, particularly on clean and moderately challenging datasets. SFace demonstrates balanced and competitive results, excelling in low-quality surveillance conditions. Conversely, OpenFace, based on traditional embedding techniques, exhibits limited robustness to pose, age and quality variations, consistently underperforming relative to its deep learning counterparts.

Table 3 presents a comprehensive performance comparison of each face recognition model on the five benchmark datasets. FaceNet showed the best accuracy, precision, recall and F1-score on the LFW dataset, with an accuracy of 94.07% and an F1-score of 0.9406. On the more difficult CPLFW and CALFW datasets, FaceNet again led the other models, although its margin was significantly smaller because of the higher complexity of these datasets. OpenFace performed worst on all datasets, especially on CPLFW and CALFW, where its F1-scores were 0.5230 and 0.5533, respectively. Despite performing worse than FaceNet in general, SFace showed the top performance on the QMUL-SurvFace dataset, with an F1-score of 0.6479, demonstrating strong performance in the long-range surveillance setting.

Table 3.

Model performance comparison across datasets.

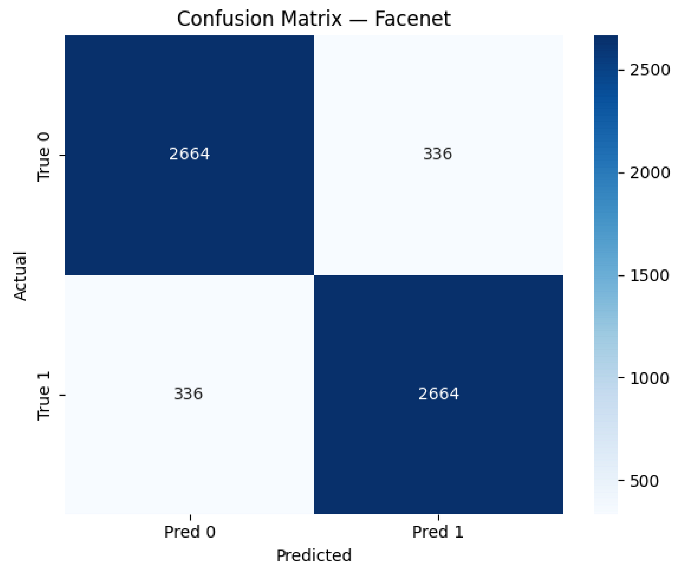

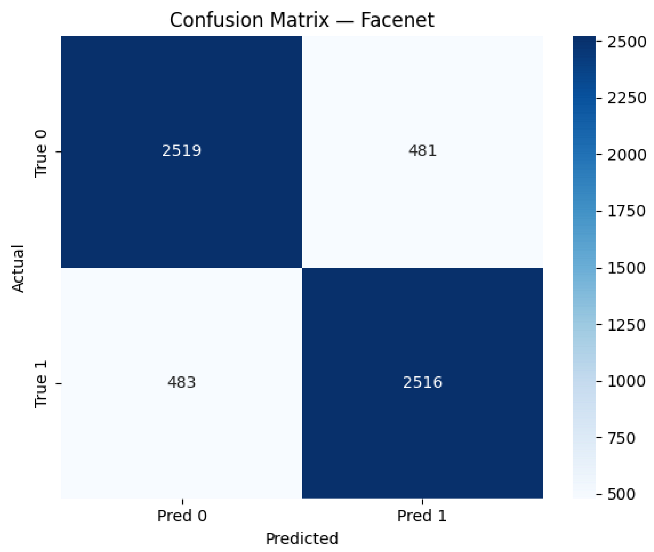

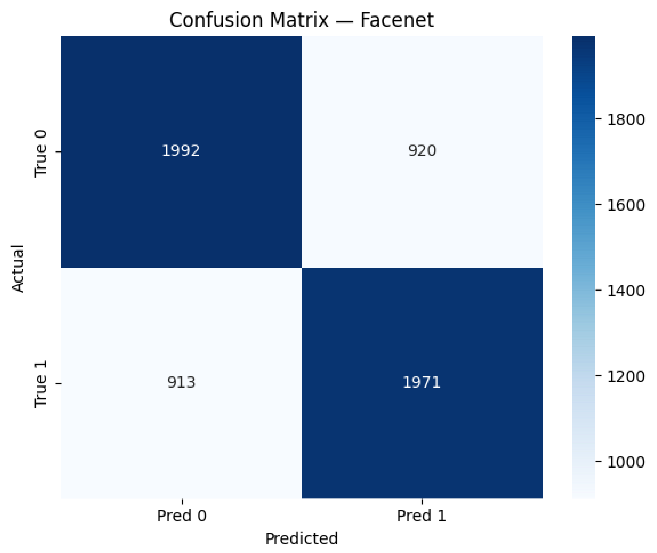

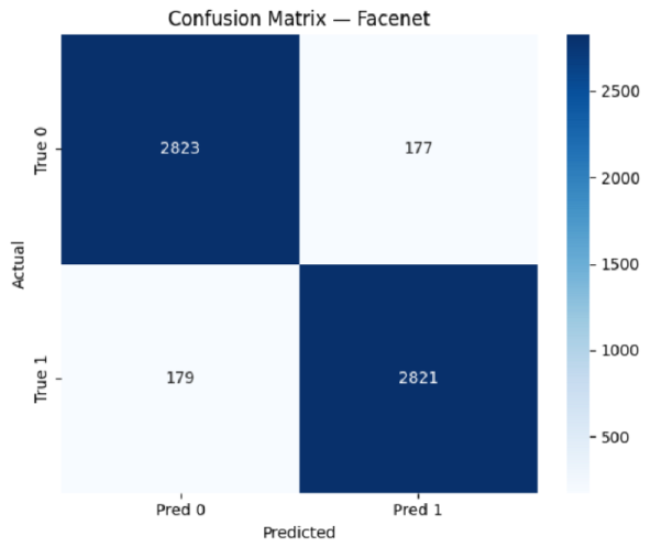

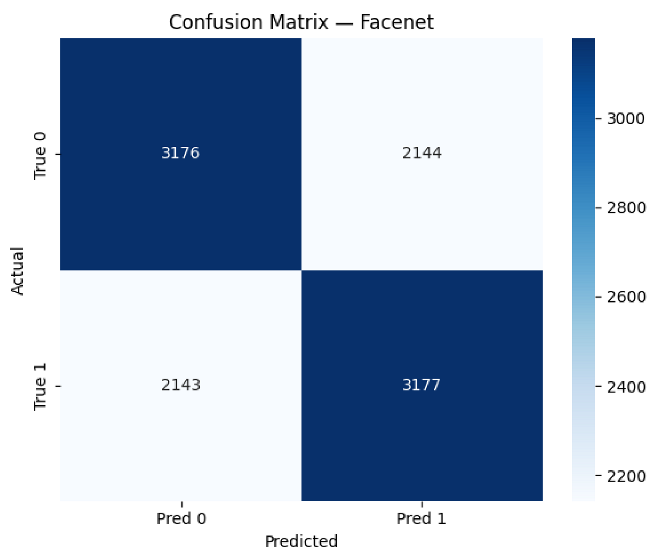

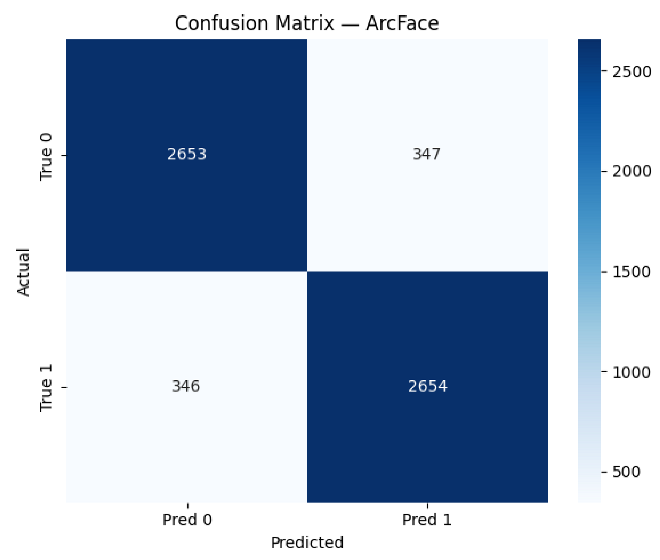

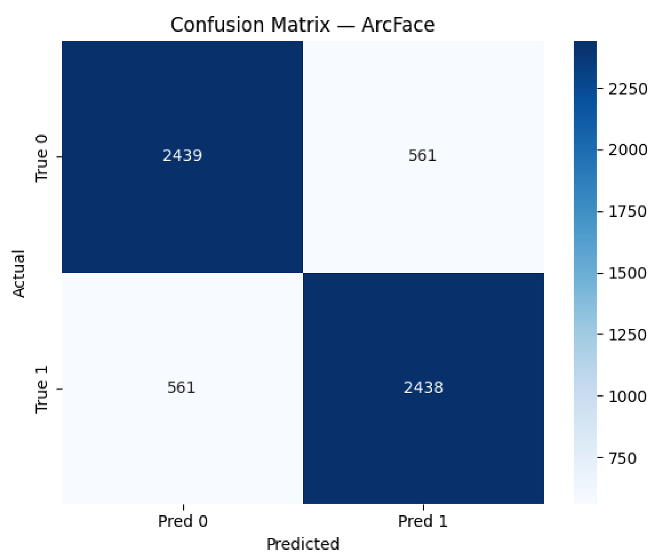

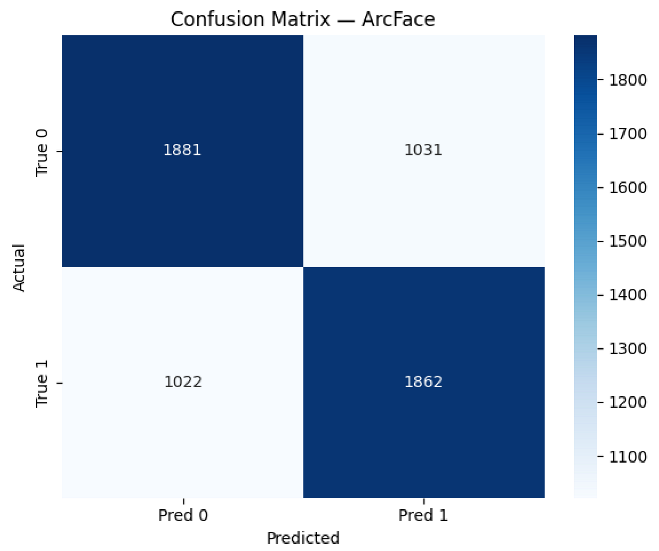

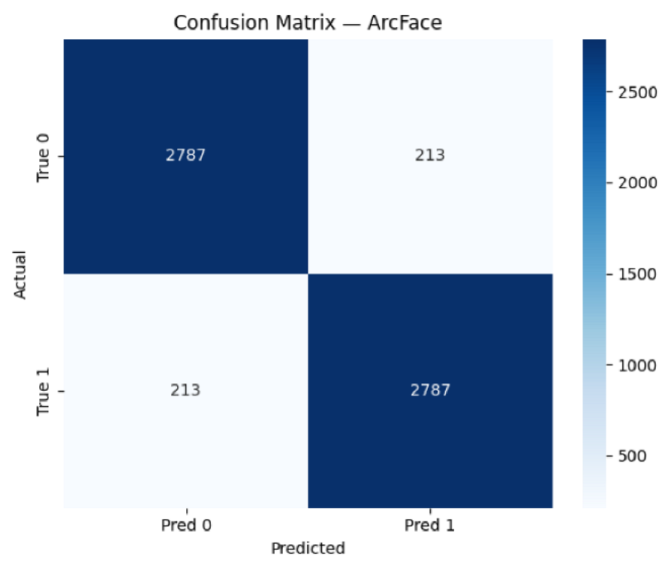

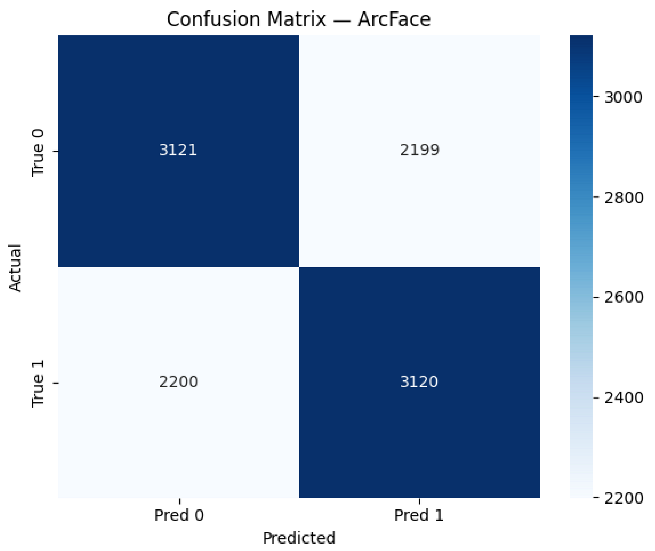

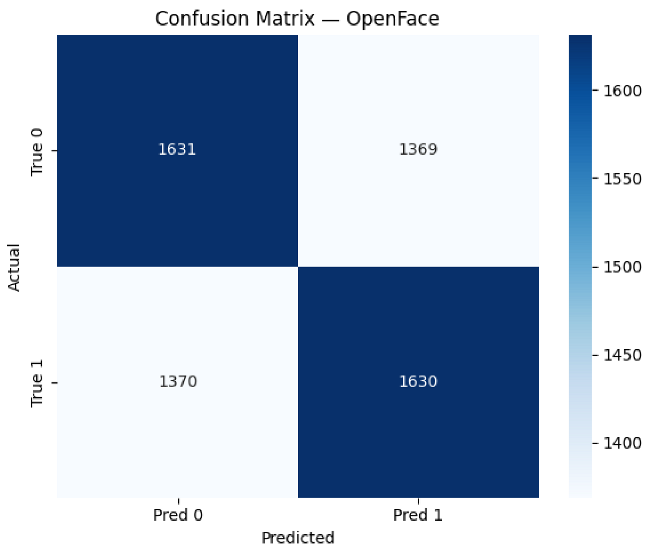

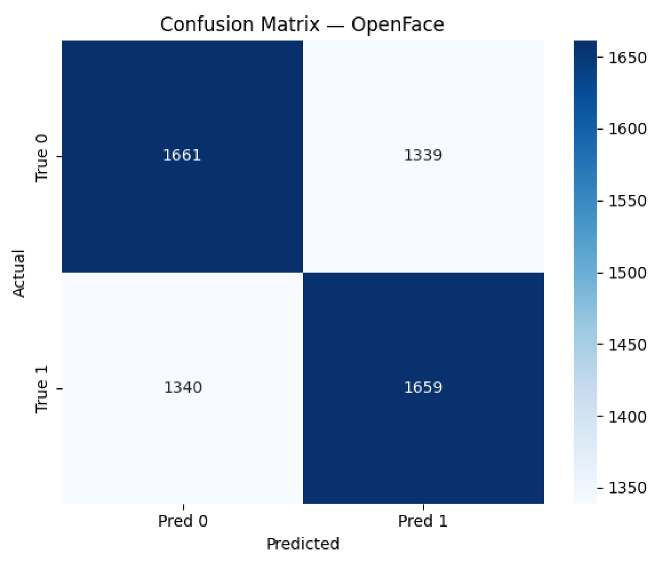

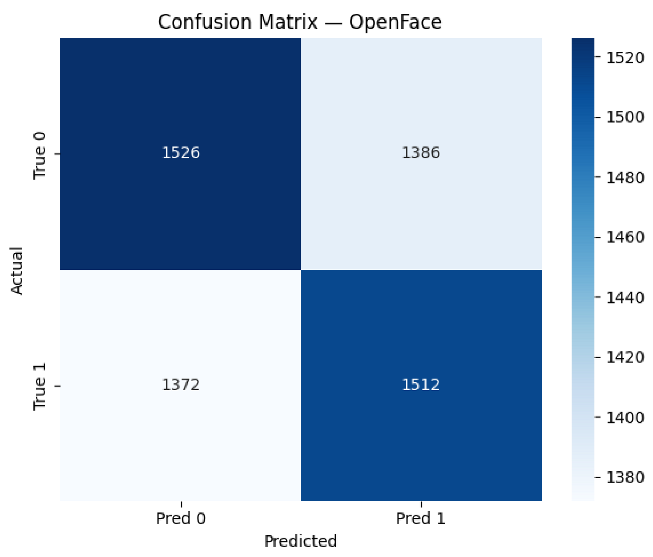

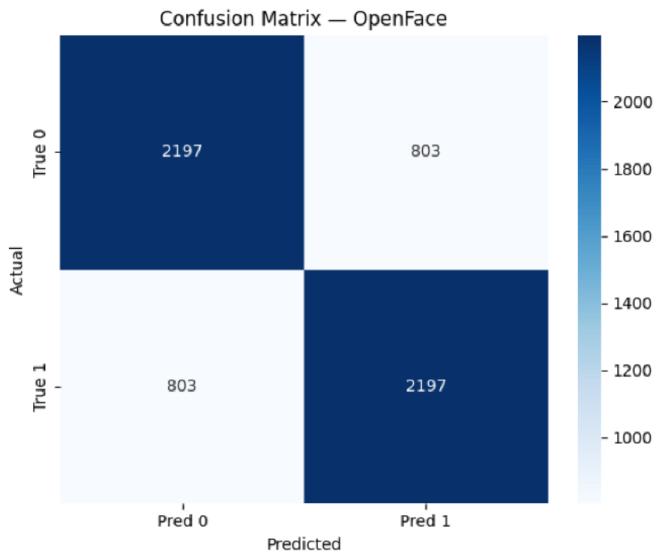

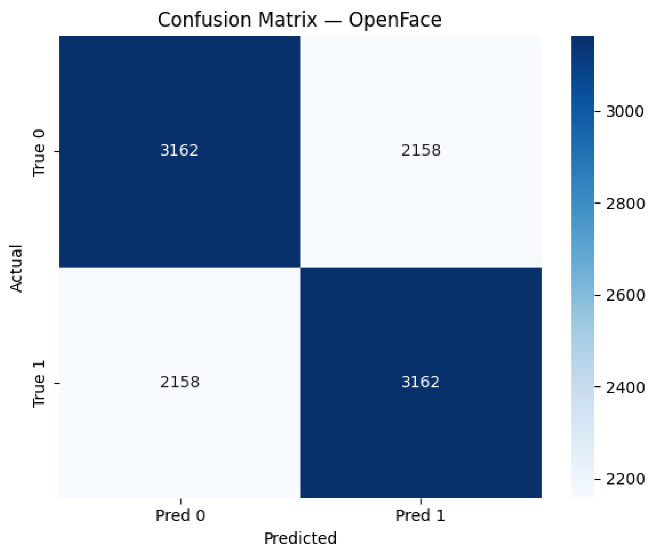

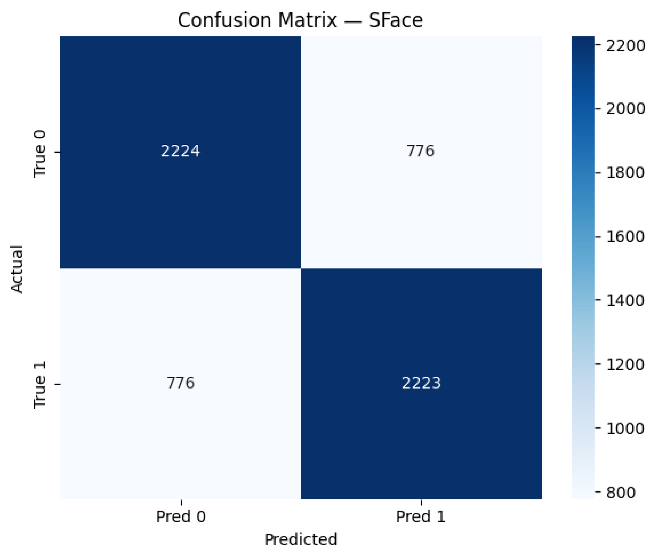

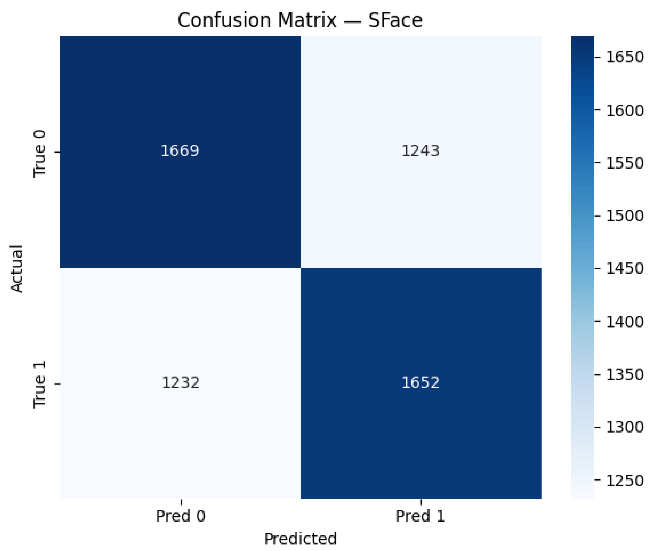

Table 4 shows the confusion matrices of all face recognition architectures on each dataset. Across the datasets, ArcFace maintained consistent and stable performance in terms of true positives (TP) and true negatives (TN). In particular, ArcFace produced an almost evenly balanced classification on AgeDB-30 (TP: 2120, TN: 2154). It was also accurate on LFW (TP: 2157, TN: 2107), which suggests that it generalizes well to unconstrained face verification tasks. FaceNet obtained competitive results on AgeDB-30 (TP: 2104, TN: 2164) and LFW (TP: 2151, TN: 2150) but showed a reduction in performance on the more demanding datasets, CPLFW and QMUL-SurvFace, where false negatives (FN) and false positives (FP) were more frequent. For example, FaceNet misclassified a significant number of CPLFW pairs (FP: 528, FN: 525), which reveals the difficulty of achieving pose and age invariance.

SFace also performed rather well, with good results on LFW (TP: 2160, TN: 2180) and QMUL-SurvFace (TP: 2147, TN: 2147). However, it struggled on CALFW, where a large number of errors was observed (FP: 707, FN: 707), indicating that intra-class variability interfered with the discriminative capacity of the model. In contrast, OpenFace produced considerably more misclassifications on AgeDB-30 and QMUL-SurvFace. For example, it recorded a lower TN count (1364) and a larger number of FPs (1396) on AgeDB-30, pointing to its lack of robustness against age-related changes. Overall, ArcFace and SFace performed better than the other models on most datasets, demonstrating their ability to preserve discriminative embeddings under different conditions. FaceNet continued to report better results than OpenFace, especially in complex surveillance settings.

Table 4.

Confusion matrices.

5. Discussion

The comparative analysis conducted in this study offers several key insights into the robustness and generalization capabilities of modern face recognition models under varying conditions. The results reveal not only the relative strengths of each model but also the challenges posed by specific dataset characteristics such as pose, age variation and image degradation.

FaceNet provided the most balanced classification across most of the datasets, particularly on LFW and CALFW. The triplet loss formulation is effective at producing identity-preserving embeddings in clean and moderately posed settings. However, the confusion matrices show a significant decline in performance on CPLFW, where pose misalignment introduces false positives and false negatives. This finding is in line with a similar drop in the AUC scores, indicating that pose variability remains an obstacle for FaceNet despite its strong performance on frontal or high-quality facial images.
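The triplet loss mentioned above can be sketched as follows. This is a minimal pure-Python illustration of the usual formulation, max(0, ||a - p||² - ||a - n||² + margin), and not the training code used in this study. The helper names, the margin value and the toy two-dimensional embeddings are assumptions chosen for readability.

```python
def squared_l2(u, v):
    """Squared Euclidean distance between two embeddings."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss for a single (anchor, positive, negative) triplet.

    The loss is zero once the negative is farther from the anchor than the
    positive by at least `margin` (an illustrative default, assumed here).
    """
    gap = squared_l2(anchor, positive) - squared_l2(anchor, negative) + margin
    return max(0.0, gap)


# Toy unit-length embeddings (hypothetical values).
anchor = [1.0, 0.0]
positive = [0.8, 0.6]        # same identity, different pose
hard_negative = [0.96, 0.28]  # different identity that lies close to the anchor
easy_negative = [0.0, 1.0]    # different identity that is already far away

print(triplet_loss(anchor, positive, hard_negative))
print(triplet_loss(anchor, positive, easy_negative))
```

In this toy case the hard negative yields a positive loss, while the easy negative contributes nothing. A large pose change that pushes a genuine pair apart has the same effect as a hard negative, which is one way to read the CPLFW errors discussed above.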

ArcFace combines two components, an additive angular margin loss and feature normalization, which reinforce compactness within the same class and enhance separability between distinct classes. The confusion matrices show that this model maintains relatively low misclassification rates even in the face of challenging datasets such as CPLFW and QMUL-SurvFace. It showed marginally better performance on AgeDB-30 and stable results on the surveillance data, demonstrating robust generalization in both controlled and unconstrained settings.

On LFW and CALFW, SFace is not the best-performing model; however, in all other experiments it achieves a significantly higher accuracy, particularly on the QMUL-SurvFace dataset, as shown by the confusion matrix and the AUC value. The SFace design, with its concept of symmetry, seems to be less vulnerable to the demands of real-life surveillance, such as low spatial resolution, partial occlusion and varying illumination. The model's ability to sustain performance on degraded images makes it relevant to security and monitoring applications.
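The AUC value referred to above can be interpreted as the probability that a randomly chosen genuine pair receives a higher similarity score than a randomly chosen impostor pair. The sketch below computes it directly from that definition. The function name, the scores and the labels are hypothetical, and the quadratic pairwise loop is chosen for clarity rather than speed.

```python
def roc_auc(scores, labels):
    """ROC-AUC as the fraction of (genuine, impostor) pairs ranked correctly.

    `labels` uses 1 for genuine (same-identity) pairs and 0 for impostor pairs.
    Ties between a genuine and an impostor score count as half a win.
    """
    genuine = [s for s, y in zip(scores, labels) if y == 1]
    impostor = [s for s, y in zip(scores, labels) if y == 0]
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor pair")
    wins = 0.0
    for g in genuine:
        for i in impostor:
            if g > i:
                wins += 1.0
            elif g == i:
                wins += 0.5
    return wins / (len(genuine) * len(impostor))


# Hypothetical similarity scores for six verification pairs.
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
labels = [1, 1, 1, 0, 0, 0]
auc = roc_auc(scores, labels)
print(auc)
```

Unlike the confusion matrix, this measure does not depend on a single decision threshold, which is why the two are reported together.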

In contrast, OpenFace, which is based on traditional deep metric learning with hand-crafted features, shows the worst performance on all datasets. Its confusion matrices show very high false positive and false negative rates, particularly on AgeDB-30 and CPLFW, where aging and pose variability are extreme. These drawbacks illustrate the limitations of conventional approaches to face identification in unconstrained settings. The poor performance of all models on both CPLFW and CALFW is indicative of the complexity of pose and age variations.

Even ArcFace and FaceNet, the two best-performing systems, are still considerably affected by these variations, as reflected in their AUC and classification accuracy. Even though state-of-the-art deep learning solutions have achieved substantial improvements, the results reveal unresolved research problems in handling pose and age inconsistencies. Taken together, the results show that ArcFace and FaceNet are stronger in controlled and moderately complex tasks, whereas SFace is more adaptable in highly challenging environments that are representative of real-world surveillance. The poor performance of OpenFace on our datasets is a further reason to adopt modern deep systems. The results indicate a trade-off between model generality and specialization: ArcFace and FaceNet can outperform SFace when clean data is available but perform less well on more heterogeneous data. These findings underline the importance of aligning model selection with the deployment environment and encourage further investigation into combining different types of architectures to maintain high accuracy across many real-life scenarios.

6. Conclusions

The current study offers a comprehensive evaluation of modern face recognition algorithms, with a focus on their performance on real-world problems such as pose variation, age-related degradation, partial occlusion and poor image quality. The cross-dataset benchmark shows that, in controlled and semi-constrained conditions, the deep learning-based models ArcFace and FaceNet achieve higher accuracy. SFace, on the other hand, proves highly robust in surveillance-style and low-resolution settings. OpenFace produced the worst results, which reflects the limitations of established embedding procedures. These results point to an important trade-off between the specialization and the generalization of the models, and suggest that deployment requirements should guide model selection. Although the literature reports notable improvements, pose and age variation, as well as poor image quality in general, remain unresolved issues. Future studies should explore hybrid architectures that combine the benefits of the various frameworks and improve performance under heterogeneous conditions without compromising computational efficiency in real-time systems.

Author Contributions

Conceptualization, B.A.; methodology, A.Z.; software, A.S.; validation, A.S.; formal analysis, B.A. and A.Z.; investigation, A.Z.; resources, A.Z. and B.A.; data curation, A.Z.; writing—original draft preparation, A.Z.; writing—review and editing, A.Z. and B.A.; visualization, A.S. and A.Z.; supervision, B.A.; funding acquisition, B.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Committee of Science of the Ministry of Science and Higher Education of the Republic of Kazakhstan (Grant No. BR24992852 “Intelligent models and methods of Smart City digital ecosystem for sustainable development and the citizens’ quality of life improvement”).

Data Availability Statement

Links to all publicly archived datasets analyzed during the study are listed in Table 2.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ROC-AUC | Area Under the Receiver Operating Characteristic Curve |
| LBP | Local Binary Patterns |
| PCA | Principal Component Analysis |
| LDA | Linear Discriminant Analysis |
| HE | Histogram Equalization |
| MTCNN | Multi-Task Cascaded Convolutional Neural Networks |
| SVM | Support Vector Machine |
| CNN | Convolutional Neural Networks |
| RNN | Recurrent Neural Networks |
| LBPH | Local Binary Pattern Histogram |
| MLP | Multilayer Perceptron |
| G-RLBP | Robust LBP Guiding Pooling |
| RLBP | Robust Local Binary Pattern |
| CLAHE | Contrast Limited Adaptive Histogram Equalization |
| MAC | Memory Access Cost |

References

- Wu, G.; Tao, J.; Xu, X. Occluded Face Recognition Based on the Deep Learning. In Proceedings of the 2019 Chinese Control and Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 793–797. [Google Scholar] [CrossRef]

- Zhao, Y.; Wang, L.; Tan, M.; Yan, X.; Zhang, X.; Feng, H. Face Recognition with Partial Occlusion Based on Attention Mechanism. In Proceedings of the 2021 International Conference on Electronic Information Engineering and Computer Science (EIECS), Dalian, China, 17–19 September 2021; pp. 562–566. [Google Scholar] [CrossRef]

- Luo, Y. Research on Occlusion Face Detection Method in Complex Environment. In Proceedings of the 2022 IEEE 5th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Chengdu, China, 23–25 September 2022; pp. 1488–1491. [Google Scholar] [CrossRef]

- Huang, B.; Wang, Z.; Jiang, K.; Zou, Q.; Tian, X.; Lu, T.; Han, Z. Joint Segmentation and Identification Feature Learning for Occlusion Face Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 10875–10888. [Google Scholar] [CrossRef]

- Khan, A.; Rauf, Z.; Sohail, A.; Khan, A.; Asif, H.; Asif, A.; Farooq, U. A survey of the Vision Transformers and their CNN-Transformer based Variants. Artif. Intell. Rev. 2023, 56, 2917–2970. [Google Scholar] [CrossRef]

- Shaikh, M.B.; Chai, D.; Islam, S.M.S.; Akhtar, N. From CNNs to Transformers in Multimodal Human Action Recognition: A Survey. ACM Trans. Multimedia Comput. Commun. Appl. 2024, 20, 1–24. [Google Scholar] [CrossRef]

- Otroshi Shahreza, H.; George, A.; Marcel, S. Knowledge Distillation for Face Recognition Using Synthetic Data with Dynamic Latent Sampling. IEEE Access 2024, 12, 187800–187812. [Google Scholar] [CrossRef]

- Boutros, F.; Siebke, P.; Klemt, M.; Damer, N.; Kirchbuchner, F.; Kuijper, A. PocketNet: Extreme Lightweight Face Recognition Network Using Neural Architecture Search and Multistep Knowledge Distillation. IEEE Access 2022, 10, 46823–46833. [Google Scholar] [CrossRef]

- Mishra, S.; Reza, H. A Face Recognition Method Using Deep Learning to Identify Mask and Unmask Objects. In Proceedings of the 2022 IEEE World AI IoT Congress (AIIoT), Seattle, WA, USA, 6–9 June 2022; pp. 91–99. [Google Scholar] [CrossRef]

- Li, Y.; Zhan, X.; Gao, Y.; Li, H.; Zhang, W. Research on Occlusion Perception Facial Feature Correlation Based on Less-Class Learning. Signal Image Video Process. (SIViP) 2025, 19, 616. [Google Scholar] [CrossRef]

- Naseem, S.; Rathore, S.S.; Kumar, S.; Gangopadhyay, S.; Jain, A. An Approach to Occluded Face Recognition Based on Dynamic Image-to-Class Warping Using Structural Similarity Index. Appl. Intell. 2023, 53, 28501–28519. [Google Scholar] [CrossRef]

- Zhao, R.; Hua, F.; Wei, B.; Li, C.; Ma, Y.; Wong, E.S.W.; Liu, F. A Review of Abnormal Crowd Behavior Recognition Technology Based on Computer Vision. Appl. Sci. 2024, 14, 9758. [Google Scholar] [CrossRef]

- Turk, M.; Pentland, A. Eigenfaces for recognition. J. Cogn. Neurosci. 1991, 3, 71–86. [Google Scholar] [CrossRef] [PubMed]

- Belhumeur, P.N.; Hespanha, J.P.; Kriegman, D.J. Eigenfaces vs. Fisherfaces: Recognition Using Class Specific Linear Projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 711–720. [Google Scholar] [CrossRef]

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987. [Google Scholar] [CrossRef]

- Taigman, Y.; Yang, M.; Ranzato, M.; Wolf, L. DeepFace: Closing the Gap to Human-Level Performance in Face Verification. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 1701–1708. [Google Scholar] [CrossRef]

- Ghaida, D.R.; Siedharta, V.V.; Hakim, U.; Mutijarsa, K.; Septiana, A.I.; Rosmansyah, Y. SVM-Classified FaceNet and Eigenface Models under Lighting and Occlusion Variations for Face Recognition. In Proceedings of the 2024 International Seminar on Application for Technology of Information and Communication (iSemantic), Semarang, Indonesia, 21–22 September 2024; pp. 415–420. [Google Scholar] [CrossRef]

- Ho, H.-T.; Nguyen, L.V.; Le, T.H.T.; Lee, O.-J. Face Detection Using Eigenfaces: A Comprehensive Review. IEEE Access 2024, 12, 118406–118426. [Google Scholar] [CrossRef]

- EL Fadel, N. Facial Recognition Algorithms: A Systematic Literature Review. J. Imaging 2025, 11, 58. [Google Scholar] [CrossRef]

- Carević, A.; Slapničar, I. Fast Quaternion Algorithm for Face Recognition. Mathematics 2025, 13, 1958. [Google Scholar] [CrossRef]

- Phankokkruad, M. Convolutional neural network models for deep face recognition on limitation and interfering factors in image dataset. In Proceedings of the 2018 IEEE/ACIS 17th International Conference on Computer and Information Science (ICIS), Singapore, 27–29 June 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Rahman, M.F.; Sthevanie, F.; Ramadhani, K.N. Face Recognition in Low Lighting Conditions Using Fisherface Method and CLAHE Techniques. In Proceedings of the 2020 8th International Conference on Information and Communication Technology (ICoICT), Yogyakarta, Indonesia, 24–26 June 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Qu, L.; Pei, Y. A Comprehensive Review on Discriminant Analysis for Addressing Challenges of Class-Level Limitations, Small Sample Size, and Robustness. Processes 2024, 12, 1382. [Google Scholar] [CrossRef]

- Khalili Mobarakeh, A.; Cabrera Carrillo, J.A.; Castillo Aguilar, J.J. Robust Face Recognition Based on a New Supervised Kernel Subspace Learning Method. Sensors 2019, 19, 1643. [Google Scholar] [CrossRef] [PubMed]

- Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A Unified Embedding for Face Recognition and Clustering. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 815–823. [Google Scholar] [CrossRef]

- Parkhi, O.M.; Vedaldi, A.; Zisserman, A. Deep Face Recognition. In Proceedings of the British Machine Vision Conference (BMVC), Swansea, UK, 7–10 September 2015; pp. 41.1–41.12. Available online: http://www.bmva.org/bmvc/2015/papers/paper041/index.html (accessed on 9 July 2025).

- Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint Face Detection and Alignment Using Multitask Cascaded Convolutional Networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503. [Google Scholar] [CrossRef]

- Li, C. Advancements and Challenges of Deep Learning in Facial Recognition. In Proceedings of the International Conference on Applied and Computational Engineering (ACE), Nanjing, China, 8 November 2024; Volume 82, pp. 45–53. [Google Scholar] [CrossRef]

- Chitrapu, P.; Morampudi, M.K.; Kalluri, H.K. Robust Face Recognition Using Deep Learning and Ensemble Classification. IEEE Access 2025, 13, 99957–99969. [Google Scholar] [CrossRef]

- Rehman, A.; Mujahid, M.; Elyassih, A.; AlGhofaily, B.; Bahaj, S.A.O. Comprehensive Review and Analysis on Facial Emotion Recognition: Performance Insights into Deep and Traditional Learning with Current Updates and Challenges. Comput. Mater. Continua 2025, 82, 41–72. [Google Scholar] [CrossRef]

- Siddiqui, M.M.; Valsalan, P. AI-Based Human Face Recognition System. J. Electr. Syst. 2024, 20, 357–362. [Google Scholar] [CrossRef]

- Chopra, S.; Hadsell, R.; LeCun, Y. Learning a Similarity Metric Discriminatively, with Application to Face Verification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 539–546. [Google Scholar] [CrossRef]

- Wu, H.; Xu, Z.; Zhang, J.; Yan, W.; Ma, X. Face Recognition Based on Convolution Siamese Networks. In Proceedings of the 2017 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Shanghai, China, 14–16 October 2017. [Google Scholar]