Abstract

In recent years, the rapid growth of virtual reality has opened up new possibilities for creating immersive virtual worlds known as metaverses. With advancements in technology, such as virtual reality headsets, and the emergence of applications with social interaction, metaverses offer exciting opportunities for STEM (Science, Technology, Engineering, and Mathematics) education. This article proposes the integration of metaverses into STEM courses, enabling students to forge connections between different subjects within their curriculum, to leverage current technological advancements, and to revolutionize project presentations and audience interaction. Drawing upon a case study conducted with students enrolled in the “Proyectos RII 1: Organización y Escenarios” course at the Escuela Politécnica Superior de Alcoy of the Technical University of Valencia (Spain), this article provides a comprehensive description of the methodology employed by the students and professors. It outlines the process followed, shedding light on the innovative use of metaverses in their projects. Moreover, the article shares valuable insights obtained through surveys and personal opinions gathered from students throughout the course and upon its completion. By exploring the intersection of metaverses and STEM education, this study showcases the transformative potential of integrating interactive virtual environments. Furthermore, this work highlights the benefits of increased student engagement, motivation, and collaboration, as well as the novel ways in which projects can be presented and interactions with audiences can be redefined.

1. Introduction

STEM education, encompassing Science, Technology, Engineering, and Mathematics, has become a cornerstone of contemporary learning, with implications for various sectors of society. In an era marked by rapid technological advancements and a dynamic global landscape, the importance of fostering STEM literacy cannot be overstated. STEM education equips students with critical skills, enabling them to analyze complex problems, develop innovative solutions, and engage in data-driven decision making. By nurturing curiosity, problem solving abilities, and collaboration, STEM education empowers students to thrive in a world increasingly shaped by scientific and technological progress [1,2].

Yet, despite its undeniable significance, STEM education faces certain limitations that hinder its full realization. Traditional classroom approaches may struggle to capture the dynamic, interactive, and immersive qualities that characterize real-world STEM applications. Static textbooks and conventional lectures can fall short in igniting students’ passion and curiosity for STEM disciplines. Furthermore, the demand for well-qualified STEM professionals far exceeds the supply, revealing a gap between educational offerings and industry requirements. To bridge this gap, innovative approaches are imperative, in order to inspire and engage students in STEM subjects [3].

Interactive metaverses are emerging as a promising solution, to address the challenges and limitations faced by traditional STEM education. The notion of the metaverse transitioning from a concept to a tangible reality in the foreseeable future is widely recognized, particularly in conjunction with the evolution of social media and the emergence of perceptual immersion in “virtual worlds”. Entities are now exploring its possibilities and considering its integration into existing business models, including its applicability to the tourism sector [4].

The metaverse consists of a shared virtual world or space that emerges from the combination of Virtual Reality (VR) and the internet, facilitating a group experience where several users co-exist. Although the concept of the “metaverse” is not tied to a specific technology, it usually revolves around users’ interactions with such technologies.

The metaverse is expected to have transformative impacts across various domains, such as tourism, education, leisure, marketing, social networks, and health [5]. Individuals who engage with the metaverse traverse seamlessly between the physical and virtual realms, unlocking a vast array of opportunities [6].

However, certain current limitations of the metaverse are associated with weaker social connections in virtual scenarios, potential privacy implications, and challenges in adapting to the real world [7]. Nevertheless, the metaverse holds enormous potential for emerging educational environments. It offers considerable flexibility, providing an immersive setting where users are able to create and share experiences [8]. A recent study [9] highlighted that hybrid learning environments are still in preliminary development phases, necessitating further improvement in educators’ abilities to facilitate successful teaching and learning in virtual environments.

The metaverse comprises fundamental elements, regarding interface, environment, security, and interaction [10]. Visual and sound elements are employed, to produce an atmosphere, and rendering scenes and objects is sometimes necessary, to enhance immersion in the metaverse. Motion rendering plays a crucial role in reproducing natural avatar movements [5]. There are two main methods of content presentation: through hand-based input devices and Head-Mounted Displays (HMDs), or via web browsers, screens, and mice. In both situations, interaction is fundamental, as it emulates natural behavior and enables communication with other individuals.

Avatars are employed within virtual spaces, to customize and enhance socialization, representing digital versions of individuals. Through their avatars, humans can interact and recreate real-life spaces, harnessing the possibilities of VR components [11].

1.1. Motivation

Undoubtedly, VR applications, including metaverses, are poised to unlock a multitude of opportunities across various industries in the near future [12]. Beyond their conventional use in entertainment and gaming, these technologies offer vast potential in diverse domains, such as education, healthcare, architecture, engineering, and beyond.

In education, the integration of VR and metaverses offers a transformative approach to learning and training. Students can now immerse themselves in interactive and captivating learning experiences, enabling them to explore complex concepts with heightened engagement and practicality. For instance, in STEM courses, where abstract mathematical principles are often difficult to grasp, VR applications can provide tangible applications that demonstrate the real-world relevance of foundational concepts.

Moreover, educational metaverses offer an unparalleled platform for effective communication and presentation skills development. By showcasing their solutions in a virtual environment, students can overcome personal challenges, like stage fright, and they can deliver their ideas more confidently.

With the goal of exploring the potential benefits and drawbacks of integrating VR and metaverses into STEM courses, this study focuses on the development of an educational metaverse. In this metaverse, students will have the opportunity to design and present their improvement solutions for specific problems, thereby harnessing the power of VR to enhance their learning experience and skills development. Through this research, the authors of this work seek to illuminate the transformative impact of these cutting-edge technologies on education and beyond.

1.2. Related Work

Within the realm of educational metaverses, Second Life emerged as a pioneering platform that underscored the potential of virtual environments for fostering learning, collaboration, and exploration [13,14,15,16]. This platform quickly garnered recognition from educational institutions and educators, as it showcased an ability to transcend traditional classroom boundaries and to deliver a dynamic, engaging, and often experiential learning arena. However, despite its initial influence, Second Life exhibited several limitations within the context of education, ultimately paving the way for more tailored metaverse platforms, like Spatial.io, which are specifically designed to suit educational objectives.

One of the prominent issues with Second Life is its general-purpose nature, which contrasts with the focused metaverse platforms crafted for specific applications, such as education. The platform’s broad scope encompasses a wide array of activities, diluting its educational focus. In contrast, newer platforms concentrate on distinct niches, making them better suited for educational applications. Furthermore, content moderation challenges in Second Life have raised concerns among educational institutions, regarding content safety and appropriateness. By contrast, contemporary platforms prioritize a user-friendly experience, a pivotal consideration within educational settings, where users might vary in their technological proficiency. Advanced features, like spatial audio, hand gestures, and facial expressions, offered by platforms such as Spatial.io, contribute to a heightened sense of immersion.

The metaverse is poised to revolutionize the educational landscape in the coming years [17]. Its adoption and implementation in educational settings are rapidly gaining popularity across all levels of education. Within these immersive virtual environments that incorporate game elements, students can engage in more intriguing, enjoyable, and interactive learning experiences, stimulating their imagination and their individual and collective intelligence, and enhancing their short-term memory [18]. Additionally, the metaverse shows promise in improving students’ problem-solving skills and critical thinking [19], boosting academic performance, learning outcomes, and comprehension of subjects [20].

Previous research has demonstrated the positive impact of VR in various educational fields, providing students with authentic real-world application contexts [21]. For instance, studies by Gilliam [22] and Aslam [23] revealed that learning in authentic contexts improves students’ achievements and problem-solving abilities. Moreover, Kukulska [24] highlighted the value of authentic contexts in fostering connections between acquired knowledge, leading to enhanced retention, transfer of learning, and increased interest and motivation in the learning process.

According to Kye [8], the metaverse holds the potential to create a new educational environment that facilitates social communication through immersive learning experiences. By leveraging the success of VR-based games in the gaming industry, the gamification of learning resources within the metaverse can effectively engage and motivate learners [25]. While the debate over the metaverse’s impact on education continues [26], there is considerable interest among stakeholders in developing and delivering educational content in the metaverse. Frameworks, such as the one proposed by Wang [27], set guidelines for future education systems in this domain.

Other notable works exploring the implementation of the metaverse in education include [28], which proposed a metaverse framework with potential core stacks, including hardware, computing, networking, virtual platforms, interchange tools and standards, payments service, content, service, and assets, and discussed its reasons briefly. Additionally, the work presented in [29] divided the metaverse into three essential components (i.e., hardware, software, and contents) and three approaches (i.e., user interaction, implementation, and application), from a general perspective. Moreover, ref. [30] presented some valuable insights into roles (i.e., intelligent tutors, intelligent tutees, and intelligent peers), in providing educational services, and potential applications of the metaverse for educational settings, from the perspective of AI.

In the practical implementation of metaverse technology in an educational setting, Duan [31] successfully created a virtual space within a university campus, examining students’ behavior within the metaverse. The results demonstrated how a simple economic system and democratic activity were seamlessly facilitated within this virtual environment.

1.3. Objectives and Main Contributions

This article proposes the integration of VR into STEM subjects, to enhance students’ motivation and performance. The primary focus is on creating an educational metaverse, providing students with a virtual space to design and propose robotic systems for improving industrial processes. The ultimate goal is to familiarize students with the application of current technology in their degree subjects and future professional endeavors, enabling them to present their proposals in a more natural and interactive manner.

The article extensively describes the educational metaverse’s design and introduces a work methodology that leverages version control repositories, facilitating the development of proposals across various STEM disciplines.

To validate the effectiveness of the educational metaverse for presentations, the article presents results from students enrolled in the “Proyectos RII 1: Organización y Escenarios” course at the Escuela Politécnica Superior de Alcoy of the Technical University of Valencia (Spain) during the academic year 2022/2023.

The key contributions of this work are as follows:

- Motivating students in STEM courses, through project-based learning, employing VR tools tailored to their specific subjects.

- Designing an educational metaverse prototype, granting students individual spaces to develop their projects and present them interactively.

- Proposing a work methodology centered around version control repositories, enabling STEM teachers to seamlessly integrate the educational metaverse innovation into their classes.

The sections of this article are as follows. First, the proposed metaverse and methodology are thoroughly described in Section 2. Subsequently, Section 3 provides insights into the usability of the interface and covers other relevant aspects. Towards the end of the article, Section 3.2 offers a comprehensive discussion, while Section 4 presents concluding remarks on the findings.

2. Materials and Methods

2.1. Participants

This work had a cohort of 40 students who were enrolled in the “Proyectos RII 1: Organización y Escenarios” course at the Escuela Politécnica Superior de Alcoy of the Technical University of Valencia (Spain) during the academic year 2022/2023. Notably, the vast majority of the participants had no prior experience with VR. The students worked collaboratively in groups, with each group comprising two or three members. Within these groups, one member was designated to deliver the presentation within the virtual environment. It is worth highlighting that all the groups received instruction from the same professor, while the evaluation phase involved the assessment of their work by three different professors.

2.2. Development of the Application

The application was custom-tailored for the subject “Proyectos RII 1: Organización y Escenarios”, where students were assigned the task of designing a robotic system to improve the palletizing and strawberry packaging line process (for more details, refer to https://www.youtube.com/watch?v=KKQBlbZ1sY8, accessed on 2 August 2023). Consequently, the application’s environment was carefully designed to provide an industrial setting, ensuring full immersion for the students throughout the experience.

Additionally, it was crucial to allow each group of students the freedom to customize and modify their environment based on their unique robotic system elements, without any interference from other groups. To accomplish this, a multi-room application design was implemented, facilitating efficient collaboration during work and seamless access to specific environments.

During presentations, it is crucial to create a sense of genuine interaction among users, which includes the ability both to listen to and to speak with others within the virtual environment. To achieve this immersive experience, avatars with expressive animations are seamlessly integrated. These avatars are designed to synchronize eye and mouth movements with the user’s speech, providing a more lifelike communication experience. Additionally, the avatars are programmed to respond with arm and head movements based on the gestures made by the users, further enhancing the sense of realistic interaction.

For this project, Meta [32] avatars were chosen, primarily because they were compatible with the Meta Quest 2 hardware used during the experiments [33]. However, it is essential to mention that other avatar options could have been used, without compromising the core objectives of the study. The focus remained on creating a dynamic and engaging communication environment during presentations, where users felt as though they were genuinely interacting with one another, leading to a more effective and immersive learning experience.

Furthermore, the application accounts for the differences between virtual and real-world environments, especially in terms of space. Students and professors need to navigate the virtual world freely, despite potential obstacles in the real-world classroom setting, such as chairs and tables. As a result, the application is equipped with the functionality of “teleporting”, allowing users to jump within the virtual space without physical movement in the real world.

To provide users with maximum freedom and natural interactions, the application employs hand tracking technology. VR headsets with vision cameras can track users’ hands and/or pupils, enabling specific hand gestures to control virtual elements and user movements in the virtual world. In this work, hand tracking was used to interact with the virtual environment and to perform actions like “teleporting” through a hand gesture.

PhotonEngine [34] was employed to implement the multi-user functionality of the application. PhotonEngine is a multiplayer framework, developed by Exit Games, designed for real-time online gaming. It operates on a client-server model, which helps ensure fairness in gameplay, and it offers low-latency data transmission and scalability. Furthermore, the framework supports a range of networking models and cross-platform deployment, and it provides developers with documentation and SDKs for integration.

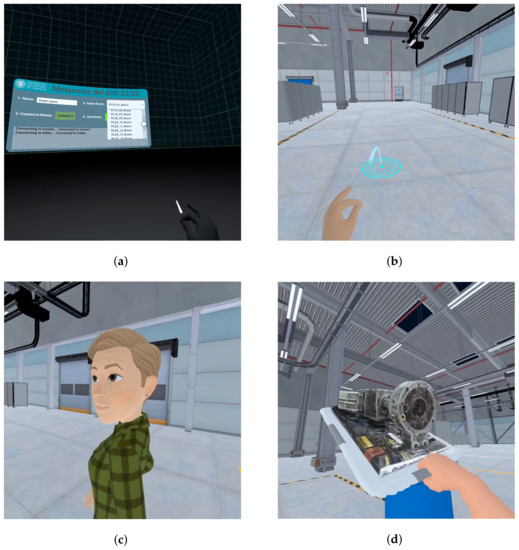

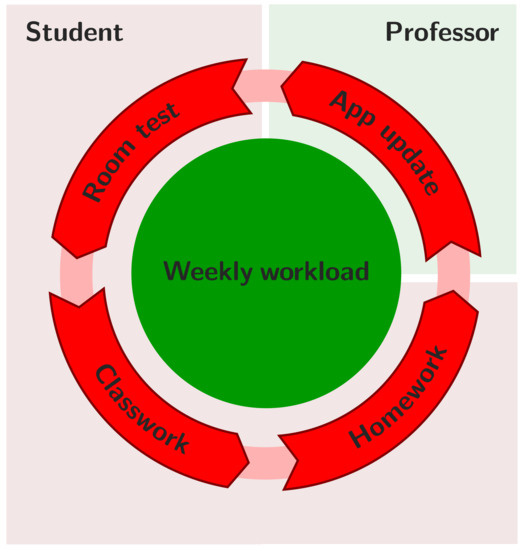

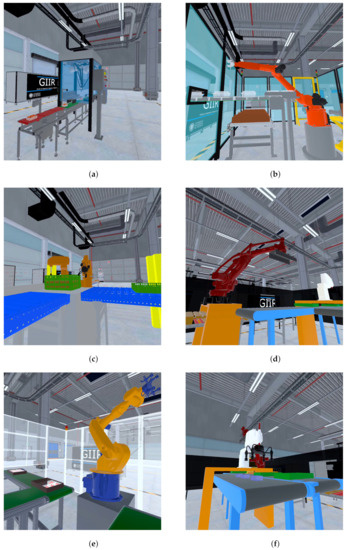

In light of the aforementioned details, Figure 1 illustrates the VR application provided as a template for the workgroups. The key components include an initial “lobby” scene, where groups connect to the application and select their workrooms (Figure 1a), and dedicated “room” work scenes, where students incorporate their robotic system designs (Figure 1b–f). As depicted in Figure 1a, upon launching the application, each user enters the lobby, where they can connect and select their desired room.

Once inside the chosen room, users can navigate the virtual environment naturally, either by physically walking through the real space or by using the teleporting functionality, which is activated by performing a pinch gesture with the index finger and thumb of the left hand. This gesture triggers a blue target to appear, indicating the selected teleport destination. To confirm the teleportation, users simply separate their index finger and thumb. This intuitive and user-friendly feature enhances the immersive experience within the virtual environment.

Furthermore, in a multi-user scenario within the room, the avatars of other users are visible, as exemplified in Figure 1c. Notably, the application is designed to access Meta’s proprietary credentials, so the avatar displayed is identical to the one the user selected in the Meta account associated with their Meta Quest 2 headset. This integration ensures a personalized and consistent experience for each user, fostering a sense of individuality and familiarity within the shared virtual environment.

Figure 1. Educational industrial metaverse developed: (a) lobby; (b) room, first-person view; (c) room, example of Meta’s avatar; (d) interaction with virtual objects; (e) adding new virtual objects; (f) exit room functionality.

As previously stated, the application is designed to enable hands-only interaction with the virtual world, eliminating the need for external controllers. Users can manipulate virtual objects in the environment by using their hands, replicating real-world gestures, as illustrated in Figure 1d. Moreover, users can introduce new objects into the environment. Although this functionality was not required for the case study presented in this work, Figure 1e demonstrates how users can bring their own mobile phones into the virtual environment, to capture photos of their experience. To achieve this, users simply look at the palm of their left hand, prompting a green button symbolizing a mobile phone to appear. Upon pressing the button, the virtual phone is introduced into the environment, visible to all present users, who can interact with it and take it, if desired.

To exit the virtual environment, users need to focus on the palm of their right hand and press the red exit button displayed in Figure 1f. This action brings up a menu exclusive to the user who wishes to leave, ensuring a confirmation step to avoid accidental exits. This thoughtful approach prevents unintended interactions and provides a user-friendly experience throughout the application.
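The pinch-to-teleport interaction described in this section can be summarized as a small state machine. The following is a minimal, engine-agnostic sketch and not the application's actual code: the class name `TeleportController`, the 2 cm pinch threshold, and the idea of passing fingertip positions and a pointed-at location on every frame are all assumptions made for illustration.

```python
from dataclasses import dataclass
from math import dist
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical threshold: fingertips closer than 2 cm count as a pinch.
PINCH_THRESHOLD_M = 0.02


@dataclass
class TeleportController:
    """Turns left-hand pinch and release events into teleport jumps."""

    position: Vec3 = (0.0, 0.0, 0.0)  # current user position in the room
    target: Optional[Vec3] = None     # teleport target, shown only while pinching

    def update(self, thumb_tip: Vec3, index_tip: Vec3, pointed_at: Vec3) -> None:
        """Process one tracking frame from the (assumed) hand-tracking source."""
        pinching = dist(thumb_tip, index_tip) < PINCH_THRESHOLD_M
        if pinching:
            # While the pinch is held, the target follows where the hand points.
            self.target = pointed_at
        elif self.target is not None:
            # Fingers separated after a pinch: confirm the jump, hide the target.
            self.position = self.target
            self.target = None
```

Under these assumptions, a frame with the fingertips 1 cm apart makes the target appear, and a following frame with the fingertips 10 cm apart moves the user to that target without any physical displacement in the classroom.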

2.3. Methodology Framework

As mentioned earlier, the course comprised 40 students organized into working groups of 2 or 3 members, with each group assigned a specific room to modify. To streamline collaboration and ensure a secure workflow for both the professors and students, GitHub was selected as the version control tool [35]. GitHub, a web-based platform utilizing Git version control, offers features for efficient codebase management, collaboration, and change tracking. Its code review, issue tracking, and project management tools make it a widely used choice for open-source and private projects, fostering a community-driven development approach.

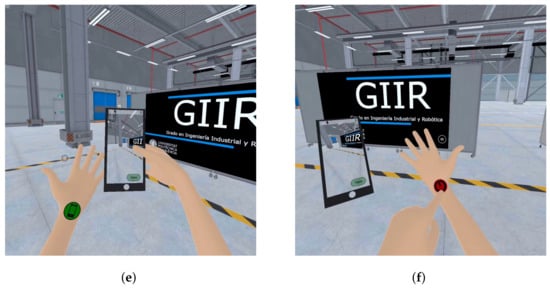

Figure 2 depicts the methodology used for updating the application and the interaction between the professor and student groups through GitHub. The professor acts as the administrator and creates a remote repository on GitHub. The main branch or master branch contains a copy of the primary application (template) used by the professor on their local repository (i.e., the professor’s PC). To maintain the functional integrity of the application, only the professor has permission to modify this main branch.

Figure 2. Repository workflow.

Once the workgroups are formed, each group creates a GitHub user, to access the remote repository containing the application template. The professor sets up individual work branches for each group and grants them read and write permissions, allowing them to work independently on their assigned tasks without affecting other groups’ work. These branches start with identical copies of the main application. Workgroups use a Git manager, like GitHub Desktop, to “clone” the content of their branch into their local repositories (i.e., student PCs), facilitating their work on the application.

Within the application, each working group has a designated folder, to incorporate their proposals into the virtual environment. This may include 3D models (e.g., obj or fbx files), materials, and small scripts. When a modification is made, the group can easily update the remote repository, by committing the changes and pushing them to their branch. The professor can then review the modifications made, by accessing the respective branches on both the remote and local repositories.

When a group wants to view the results of their modifications in the VR application, using the headsets, they initiate a pull request. The professor assesses potential conflicts with the main application in the main branch or master. If everything appears in order, with no conflicts, the professor proceeds to update the main branch with the group’s changes.

Once the application is successfully updated in the remote repository, the professor pulls the main branch or master into their local repository. This allows the professor to compile the updated application and send it to the Meta bundle, updating the version of the application on all devices that can access it. This seamless process ensures that the latest version of the VR application, incorporating the contributions of all groups, is readily available for use on the headsets. Leveraging GitHub’s version control capabilities enables the professor to efficiently manage updates and to seamlessly integrate the collective efforts of the student groups into the final application version.
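The branch-per-group workflow described above maps onto a short sequence of standard Git commands. The sketch below only builds those commands as argument lists, so that the order of operations is explicit; it does not run them. The repository URL, branch name, folder path, and both function names are hypothetical, and the main branch is assumed to be called `main`.

```python
from typing import List

Command = List[str]


def student_sync_commands(repo_url: str, branch: str, folder: str,
                          message: str) -> List[Command]:
    """Git commands a workgroup would run, in order (hypothetical helper).

    The first command is a one-time setup; the remaining ones are run inside
    the cloned directory every time the group publishes a modification.
    """
    return [
        # Clone only the group's own branch of the template application.
        ["git", "clone", "--branch", branch, "--single-branch", repo_url],
        # Stage only the group's designated folder (models, materials, scripts).
        ["git", "add", folder],
        ["git", "commit", "-m", message],
        # Publish the work to the group's branch on the remote repository.
        ["git", "push", "origin", branch],
    ]


def professor_update_commands(main_branch: str = "main") -> List[Command]:
    """Commands the professor runs after merging a pull request on GitHub."""
    return [
        ["git", "checkout", main_branch],
        # Bring the merged contributions into the local copy before compiling.
        ["git", "pull", "origin", main_branch],
    ]
```

For example, `student_sync_commands("https://example.com/course/app.git", "group-07", "Assets/Group07", "Add conveyor model")` ends with `["git", "push", "origin", "group-07"]`; the pull request itself is then opened on GitHub, where the professor checks it for conflicts with the main branch.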

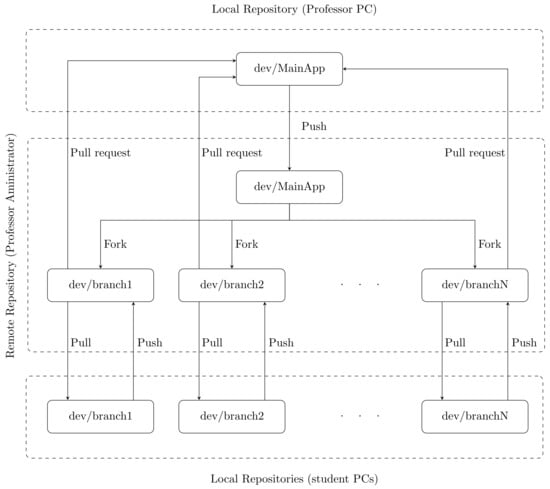

To maintain synchronization and ensure all students can view collective progress, the professor follows a weekly update schedule (see Figure 3). After in-class work on modifications in the associated room, the students continue working on the project at home throughout the week. The professor then updates the main application with the latest changes submitted up to the established date and time. During the subsequent class session, the working groups utilize VR devices, to review advancements made during the previous week. This structured workflow promotes efficiency, avoids delays, and guarantees that all students can experience their contributions in the virtual environment, fostering a smooth and productive learning experience.

Figure 3. Weekly workload diagram.
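The weekly cut-off can be expressed as a simple filter over the pull requests received during the week. This is an illustrative sketch only: the `PullRequest` record, its fields, and the function `select_for_weekly_build` are hypothetical and are not part of GitHub or of the course tooling.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class PullRequest:
    """Hypothetical record of one group's request to update the main branch."""

    group: str
    submitted_at: datetime
    has_conflicts: bool


def select_for_weekly_build(requests: List[PullRequest],
                            cutoff: datetime) -> List[str]:
    """Return the groups whose changes enter this week's build.

    A request is included only if it arrived by the established date and
    time and does not conflict with the main application.
    """
    return sorted(
        pr.group
        for pr in requests
        if pr.submitted_at <= cutoff and not pr.has_conflicts
    )
```

Requests that miss the cut-off or show conflicts are simply carried over to the following week, which keeps the build installed on the headsets stable during each class session.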

In summary, the proposed work methodology offers valuable learning opportunities, enhancing students’ technical skills while promoting efficient project management and collaboration. Working with shared projects and utilizing version control through GitHub equips students with essential teamwork, communication, and version control practices, highly relevant in real-world development scenarios. Leveraging version control ensures the security of students’ work and simplifies project management for the professor.

Overall, this methodology not only prepares students for successful real-world software development but also fosters a collaborative and organized approach to project development, equipping them with the skills necessary for professional success.

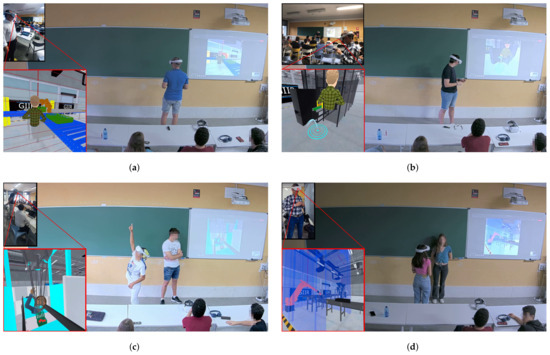

2.4. Research Design and Validation

The ultimate goal of this study was to assess the feasibility, advantages, and drawbacks of students presenting their work in a fully immersive virtual environment. To achieve this, each working group had to ensure that their final solutions functioned correctly within the virtual space. Figure 4 showcases various examples of solutions proposed by the students, to enhance the palletizing and strawberry packaging line processes.

Figure 4. Illustrative examples of robotic systems developed by the students: (a) conveyor belt with a parallel robot used for pick and place; (b) conveyor belt with a robotic arm used for pick and place; (c) several conveyor belts together with several robotic arms; (d) small conveyor belt with a robotic arm used for pick and place; (e) conveyor belt and a robotic arm with a tool that can simultaneously pick and place up to four pieces; (f) conveyor belt with a SCARA robot used for pick and place.

The evaluation process for this subject included an oral presentation of the adopted solution, which formed a part of the assessment. Each working group was allotted a maximum of 10 min to deliver their complete presentation. Following the presentation, the subject professors engaged in a question-and-answer session, to address any doubts or queries that arose.

During the designated time, the students were advised to allocate approximately 3 min to explain their proposal within the developed metaverse. The presentations were structured to begin with a section where one or more members of the group elucidated the motivation behind their work and the problems they aimed to address. They also provided a well-reasoned account of the chosen devices and robots. In this segment of the presentation, the students utilized a projector and multimedia materials, such as PowerPoint presentations or similar software, videos, and other visual aids.

Next, one of the group members entered the virtual world alongside one of the subject professors. The experience was broadcast to the rest of the class via streaming, due to the limited availability of VR devices. During this immersive segment, the student thoroughly explained all aspects of the adopted solution and interacted with the professor, to address any doubts or seek clarifications. Once the explanation in the virtual world concluded, both the student and the professor exited the virtual environment and continued with the rest of the presentation. This enabled further analysis of aspects like feasibility, assumptions, conclusions, and future work.

This presentation format offered an innovative and interactive approach, allowing students to showcase their solutions within the virtual environment while ensuring the entire class could follow the demonstration. The subsequent question and answer session aided in comprehensive evaluation and fostered a deeper understanding of the students’ work.

Figure 5 displays several groups conducting presentations of their work while explaining their proposed and implemented solutions within the metaverse. It highlights the significance of teleportation functionality in such applications, as it compensates for the limited physical space available for movement in the real world environment.

Figure 5. Illustrative examples of students presenting their work in the classroom using the metaverse: (a) a student is presenting his work in the metaverse while another student accesses his presentation in the metaverse; (b) a student is checking the presentation of another student in the metaverse; (c) two students present their work in the classroom, one of them using the metaverse; (d) two students present their work in the classroom while another student accesses their presentation in the metaverse.

2.5. Data Analysis

In line with [36,37,38,39], this study conducted usability tests, along with interviews with the students, to evaluate the proposed approach and assess the advantages of the developed method.

In this context, the students who conducted the presentations in the virtual environment were asked to complete a standard questionnaire, known as the System Usability Scale (SUS) [40]. The SUS questionnaire was primarily designed to evaluate the usability of the developed VR application. Table 1 displays the ten questions that comprise the SUS questionnaire.

Table 1. SUS questions [40].

Furthermore, all students were asked to participate in a custom questionnaire and to share their feedback on the methodologies utilized throughout the course. It is essential to emphasize that both questionnaires were completely anonymous, guaranteeing confidentiality and promoting candid input from the students. The mean and standard deviation were utilized as statistical measures.

Finally, some students spontaneously shared their opinions with the professors, providing valuable feedback that will be taken into account for future developments. Such feedback from the students is essential for continuous improvement and enhancement of the course and methodologies.

3. Results and Discussion

3.1. Usability Results and Student Satisfaction

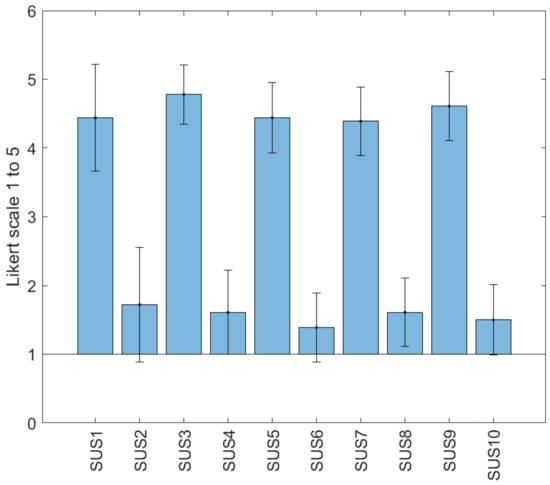

Firstly, the application’s usability was evaluated with the 18 students who presented their work in the virtual space.

The main demographic data of these students are as follows: 66.67% of the participants (12 of 18) were male and the remaining 33.33% (6 of 18) were female. The case study involved first-year students, aged between 18 and 25 years old, all pursuing bachelor’s studies or equivalent qualifications. Only 11.11% of the students (2 of 18) had prior experience with VR devices, while the remaining 88.89% reported no prior experience.

According to the results of the SUS questions (see Figure 6, which uses a five-point Likert-type scale), the global perceived usability of the application was 87.08 out of 100 (min: 75, max: 95; SD: 6.66). These scores indicate that the developed application achieved a very good level of usability.

Figure 6.

SUS results (mean and standard deviation).
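For readers unfamiliar with how ten five-point answers become a score out of 100, the following minimal sketch applies the standard SUS scoring rule from [40]: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5. The function name `sus_score` and the three response vectors are hypothetical and are not the data collected in this study; the use of the sample standard deviation is likewise an assumption, since the text does not state which variant was computed.

```python
from statistics import mean, stdev


def sus_score(responses):
    """Return the 0-100 SUS score for one participant's ten 1-5 answers."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    total = 0
    for index, r in enumerate(responses):
        # Index 0, 2, 4, ... holds items 1, 3, 5, ... (positively worded):
        # they contribute r - 1. The remaining items contribute 5 - r.
        total += (r - 1) if index % 2 == 0 else (5 - r)
    return total * 2.5


# Hypothetical answers for three participants, NOT the study's data.
hypothetical = [
    [5, 1, 5, 2, 4, 1, 5, 1, 5, 2],
    [4, 2, 4, 1, 5, 2, 4, 2, 4, 1],
    [5, 2, 4, 2, 4, 1, 5, 2, 4, 3],
]

scores = [sus_score(answers) for answers in hypothetical]
print("per-participant scores:", scores)
print("mean:", mean(scores), "min:", min(scores), "max:", max(scores))
print("sample SD (assumed variant):", round(stdev(scores), 2))
```

The global score reported above corresponds to the mean of such per-participant scores, which is why a minimum, maximum, and standard deviation can be quoted alongside it.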

Furthermore, the students were asked to provide comments regarding their experience presenting their work within the metaverse. The majority of users expressed a strong interest in frequently using this new form of presenting, emphasizing the advantages of effectively showcasing their designs. Additionally, most students agreed that having more VR devices during the development and presentation phases would be beneficial.

Moreover, the 40 students were asked about their preference for using VR to present their projects. They responded using a five-point Likert-type scale, where 5 indicated a strong preference for using VR, and 1 indicated a dislike for using VR. The result was a mean score of 4.35, with a standard deviation of 0.80. This indicates a positive inclination towards using VR as a preferred presentation method for their projects.
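As an illustration of how a single Likert item of this kind can be summarized, the sketch below tallies the answers per level and reports the mean together with both the sample and the population standard deviation. The ratings are hypothetical and are not the responses collected in this study, and the text does not specify which standard deviation variant was used, so both are shown.

```python
from collections import Counter
from statistics import mean, pstdev, stdev

# Hypothetical 1-5 ratings, NOT the study's responses.
ratings = [5, 4, 5, 3, 4, 5, 5, 2, 4, 5]

# Frequency of each Likert level, including levels nobody selected.
counts = Counter(ratings)
distribution = {level: counts.get(level, 0) for level in range(1, 6)}

summary = {
    "n": len(ratings),
    "mean": round(mean(ratings), 2),
    "sd_sample": round(stdev(ratings), 2),       # divides by n - 1
    "sd_population": round(pstdev(ratings), 2),  # divides by n
}

print(distribution)
print(summary)
```

Reporting the distribution next to the mean helps show whether a high average comes from broad agreement or from a few very high ratings offsetting lower ones.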

Finally, the majority of students added some comments, providing their personal opinions. Some of these comments were as follows:

- “The subject has been surprising”;

- “I think it has been a great learning to introduce us to the world of robotics”;

- “We must adapt to new technologies and the use of these tools is a clear example of what the future will be, not too far away”;

- “It is a very good way to work, especially considering that in the university you expect much more traditional classes”;

- “From my point of view, I think that the methodology used in this subject has seemed good to me, therefore I would use it for future subjects”.

3.2. Additional Observations and Remarks

This section discusses other significant aspects that the professors of the subject observed through the implementation of this innovation.

Firstly, the incorporation of VR has proven to be a powerful motivator for students, encouraging them to engage with the subject’s content in a novel and immersive way. This heightened motivation has led students to show increased interest in their project proposals. In some cases, professors had to manage and limit the students’ enthusiasm to ensure they stayed within the designated timeframe and objectives, as exceeding them might have negatively impacted their performance in other subjects.

Another remarkable aspect is how VR can effectively help students overcome stage fright, particularly those in the first year of their academic journey, such as the participants in the case study presented here. VR’s capacity for immersion is well known, and the devices currently available contribute to creating a convincing sense of presence in a virtual environment.

During the case study, one student in a group struggled with stage fright. The professors suggested that she be the one to present the demonstration of the robotic system developed by her group. Recognizing her nervousness during preparations, the professors advised her to enter the metaverse from the beginning of the presentation, since she was solely responsible for that part. As the presentation progressed, the student immersed herself in the metaverse, and when the time came for her to perform the demonstration in the virtual space, no signs of nervousness or stage fright were evident. Afterward, when asked about her experience, she expressed that, although she was initially nervous upon entering the metaverse, she quickly became focused on the virtual objects and explanations, losing awareness of the real-world surroundings.

The aforementioned case indicates that the utilization of educational metaverses for presentations can have broader positive effects beyond just academic achievements. It can empower students to overcome personal challenges, such as stage fright, and it can provide them with a unique platform to showcase their work with confidence and proficiency. The integration of VR into educational settings opens up a realm of possibilities for enhancing students’ overall learning experience.

At this point, the authors of this work would like to also acknowledge the inherent challenges and considerations that come with VR technologies. In addition to the technical limitations, an important aspect to address is the user-centric perspective. Previous studies have demonstrated that not all users have the same level of comfort and preference when it comes to using metaverse hardware, such as VR headsets.

Some individuals may experience discomfort, including dizziness, headaches, and even nausea, when exposed to VR imagery, although none of the students who participated in this work reported such symptoms. This phenomenon has been reported by users who may be sensitive to the immersive nature of VR experiences.

To overcome this challenge, it is essential to explore various strategies that can enhance the comfort and usability of metaverse hardware. This might include using hardware with improved ergonomics, offering adjustable settings, and providing guidance to users on how to manage potential discomfort. By addressing user concerns and providing practical solutions, educators and developers can ensure that VR experiences are more inclusive and accessible to a wider range of learners.

4. Conclusions

In this work, a novel approach to presenting the work of university students in STEM degrees, leveraging the potential of VR, has been introduced.

The present study has presented and detailed the functionalities and key features of the educational metaverse designed for conducting interactive presentations. Furthermore, an application methodology has been proposed that utilizes version control repositories, such as GitHub, to facilitate seamless collaboration between professors and students during the development and modification of the metaverse application, to suit the specific requirements of their work.

Through a case study involving 40 students from the course “Proyectos RII 1: Organización y Escenarios” at the Escuela Politécnica Superior de Alcoy of the Universitat Politècnica de València (Spain) during the academic year 2022/2023, the feasibility and effectiveness of this innovative approach have been demonstrated. The results obtained from standard questionnaires and student feedback revealed that the adoption of this new method of presenting their projects not only motivated the students but also highlighted the practical application of cutting-edge technology in showcasing their solutions in a more natural and engaging manner.

Additionally, it has been observed how this VR-based innovation can have positive effects on other important aspects, such as alleviating stage fright and reducing its impact on students who experience it.

Looking ahead, the authors are currently exploring the possibility of conducting entire presentations within the metaverse, rather than just a portion, as demonstrated in the case study. In particular, the intention is to enable students to integrate their multimedia materials, such as PowerPoint presentations, dynamically within their assigned virtual space. By doing so, they can interact seamlessly with other avatars present, directing the flow of their presentations coherently and without interruptions. The authors of this work believe that this improvement has the potential to benefit students across various disciplines, extending beyond STEM fields.

Moreover, the flexibility of the educational metaverse designed in this study allows users to access it from any location with internet access. This aspect opens up new possibilities, particularly in scenarios where students may face limitations to presenting their work in person, such as in the case of final degree projects, which are often presented through video conferencing. It would be intriguing to investigate how presentations in an educational metaverse for final degree projects could enhance interaction and elevate the overall quality of the presentations.

In conclusion, the amalgamation of VR technology and the creation of educational metaverses have exhibited promising outcomes in revolutionizing the dynamics of student presentations and audience engagement. This technology possesses the capacity to reshape educational presentations significantly, offering an immersive and interactive platform for students to effectively showcase their concepts and solutions. Nevertheless, the seamless integration of VR and metaverses comes with certain challenges and constraints that require attention. These include limitations, such as the finite battery life of VR devices, potentially curbing extended immersive learning sessions. The cost of hardware can render VR experiences inaccessible for some educational institutions and students, while classroom space constraints need to be considered, to ensure sufficient physical room for all students to partake in VR activities.

Moreover, health concerns—like discomfort, motion sickness, or visual fatigue after prolonged use of VR devices—need to be addressed. Certain medical conditions, such as epilepsy, might preclude the use of VR devices for specific students. The maintenance and support of a fleet of VR devices necessitate consistent attention, as malfunctions, updates, and repairs can disrupt the learning process, if not promptly resolved. Additionally, the potential for distraction poses a challenge; while VR can augment engagement, it can also divert students from their primary learning goals. Tackling these limitations presents an opportunity to foster a more inclusive, captivating, and efficacious educational experience for students utilizing VR devices, as exemplified by the case study presented here.

Author Contributions

Conceptualization, J.E.S., S.M.-J., E.G.-L. and V.C.-R.; methodology, J.E.S. and S.M.-J.; software, J.E.S. and A.M.; validation, J.E.S., S.M.-J., E.G.-L. and V.C.-R.; formal analysis, J.E.S., S.M.-J., E.G.-L. and V.C.-R.; writing—original draft preparation, J.E.S.; writing-review and editing, J.E.S. and L.G.; supervision, J.E.S.; project administration, J.E.S. and L.G.; funding acquisition, J.E.S., L.G. and A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Spanish Government (Grant PID2020-117421RB-C21 funded by MCIN/AEI/10.13039/501100011033).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Li, Y.; Xiao, Y.; Wang, K.; Zhang, N.; Pang, Y.; Wang, R.; Qi, C.; Yuan, Z.; Xu, J.; Nite, S.B.; et al. A systematic review of high impact empirical studies in STEM education. Int. J. STEM Educ. 2022, 9, 72.

2. Montés, N.; Zapatera, A.; Ruiz, F.; Zuccato, L.; Rainero, S.; Zanetti, A.; Gallon, K.; Pacheco, G.; Mancuso, A.; Kofteros, A.; et al. A Novel Methodology to Develop STEAM Projects According to National Curricula. Educ. Sci. 2023, 13, 169.

3. Leung, W.M.V. STEM Education in Early Years: Challenges and Opportunities in Changing Teachers’ Pedagogical Strategies. Educ. Sci. 2023, 13, 490.

4. Martí-Testón, A.; Muñoz, A.; Gracia, L.; Solanes, J.E. Using WebXR Metaverse Platforms to Create Touristic Services and Cultural Promotion. Appl. Sci. 2023, 13, 8544.

5. Dwivedi, Y.K.; Hughes, L.; Baabdullah, A.M.; Ribeiro-Navarrete, S.; Giannakis, M.; Al-Debei, M.M.; Dennehy, D.; Metri, B.; Buhalis, D.; Cheung, C.M.; et al. Metaverse beyond the hype: Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int. J. Inf. Manag. 2022, 66, 102542.

6. Zyda, M. Building a Human-Intelligent Metaverse. Computer 2022, 55, 120–128.

7. Barráez-Herrera, D.P. Metaverse in a virtual education context. Metaverse 2022, 3, 1–9.

8. Kye, B.; Han, N.; Kim, E.; Park, Y.; Jo, S. Educational applications of metaverse: Possibilities and limitations. J. Educ. Eval. Health Prof. 2021, 18, 32.

9. Alam, A.; Mohanty, A. Metaverse and Posthuman Animated Avatars for Teaching-Learning Process: Interperception in Virtual Universe for Educational Transformation. In Innovations in Intelligent Computing and Communication; Panda, M., Dehuri, S., Patra, M.R., Behera, P.K., Tsihrintzis, G.A., Cho, S.B., Coello Coello, C.A., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 47–61.

10. Kim, J. Advertising in the Metaverse: Research Agenda. J. Interact. Advert. 2021, 21, 141–144.

11. Cheong, B.C. Avatars in the metaverse: Potential legal issues and remedies. Int. Cybersecur. Law Rev. 2022, 3, 467–494.

12. Michalikova, K.; Suler, P.; Robinson, R. Virtual Hiring and Training Processes in the Metaverse: Remote Work Apps, Sensory Algorithmic Devices, and Decision Intelligence and Modeling. Psychosociol. Issues Hum. Resour. Manag. 2022, 10, 50–63.

13. Baker, S.C.; Wentz, R.K.; Woods, M.M. Using Virtual Worlds in Education: Second Life® as an Educational Tool. Teach. Psychol. 2009, 36, 59–64.

14. Wang, F.; Burton, J.K. Second Life in education: A review of publications from its launch to 2011. Br. J. Educ. Technol. 2013, 44, 357–371.

15. Sarac, H.S. Benefits and Challenges of Using Second Life in English Teaching: Experts’ Opinions. Procedia-Soc. Behav. Sci. 2014, 158, 326–330.

16. Gashan, A.K.; Bamanger, E. Living as an Avtar: EFL Learners’ Attitudes towards Utilizing Second Life Virtual Learning. Stud. Engl. Lang. Teach. 2014, 11, 1–15.

17. ‘asher’ Rospigliosi, P. Metaverse or Simulacra? Roblox, Minecraft, Meta and the turn to virtual reality for education, socialisation and work. Interact. Learn. Environ. 2022, 30, 1–3.

18. Díaz, J.E.M.; Saldaña, C.A.D.; Avila, C.A.R. Virtual World as a Resource for Hybrid Education. Int. J. Emerg. Technol. Learn. 2020, 15, 94–109.

19. Kolmos, A.; Holgaard, J.E.; Clausen, N.R. Progression of student self-assessed learning outcomes in systemic PBL. Eur. J. Eng. Educ. 2021, 46, 67–89.

20. Reyes, C.E.G. Percepción de Estudiantes de Bachillerato Sobre el uso de Metaverse en Experiencias de Aprendizaje de Realidad Aumentada en Matemáticas. 2020. Available online: https://redined.educacion.gob.es/xmlui/handle/11162/199075 (accessed on 2 August 2023).

21. Chang, C.Y.; Lai, C.L.; Hwang, G.J. Trends and research issues of mobile learning studies in nursing education: A review of academic publications from 1971 to 2016. Comput. Educ. 2018, 116, 28–48.

22. Gilliam, M.; Jagoda, P.; Fabiyi, C.; Lyman, P.; Wilson, C.; Hill, B.; Bouris, A. Alternate Reality Games as an Informal Learning Tool for Generating STEM Engagement among Underrepresented Youth: A Qualitative Evaluation of the Source. J. Sci. Educ. Technol. 2017, 26, 295–308.

23. Aslam, F.; Adefila, A.; Bagiya, Y. STEM outreach activities: An approach to teachers’ professional development. J. Educ. Teach. 2018, 44, 58–70.

24. Kukulska-Hulme, A.; Viberg, O. Mobile collaborative language learning: State of the art. Br. J. Educ. Technol. 2018, 49, 207–218.

25. Park, S.; Kim, S. Identifying World Types to Deliver Gameful Experiences for Sustainable Learning in the Metaverse. Sustainability 2022, 14, 1361.

26. Tlili, A.; Huang, R.; Shehata, B.; Liu, D.; Zhao, J.; Metwally, A.H.S.; Wang, H.; Denden, M.; Bozkurt, A.; Lee, L.H.; et al. Is Metaverse in education a blessing or a curse: A combined content and bibliometric analysis. Smart Learn. Environ. 2022, 9, 1–31.

27. Wang, M.; Yu, H.; Bell, Z.; Chu, X. Constructing an Edu-Metaverse Ecosystem: A New and Innovative Framework. IEEE Trans. Learn. Technol. 2022, 15, 685–696.

28. Kang, Y. Metaverse Framework and Building Block. J. Korea Inst. Inf. Commun. Eng. 2021, 25, 1263–1266.

29. Park, S.M.; Kim, Y.G. A Metaverse: Taxonomy, Components, Applications, and Open Challenges. IEEE Access 2022, 10, 4209–4251.

30. Huang, H.; Hwang, G.J.; Chang, S.C. Facilitating decision making in authentic contexts: An SVVR-based experiential flipped learning approach for professional training. Interact. Learn. Environ. 2021, 1–17.

31. Duan, H.; Li, J.; Fan, S.; Lin, Z.; Wu, X.; Cai, W. Metaverse for Social Good: A University Campus Prototype. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual, 20–24 October 2021.

32. Wang, S.; Mihajlovic, M.; Ma, Q.; Geiger, A.; Tang, S. MetaAvatar: Learning Animatable Clothed Human Models from Few Depth Images. arXiv 2021, arXiv:2106.11944.

33. Solanes, J.E.; Muñoz, A.; Gracia, L.; Tornero, J. Virtual Reality-Based Interface for Advanced Assisted Mobile Robot Teleoperation. Appl. Sci. 2022, 12, 6071.

34. Photon. Photonengine SDK. 2023. Available online: https://www.photonengine.com/sdks (accessed on 2 August 2023).

35. GitHub. 2023. Available online: https://github.com (accessed on 2 August 2023).

36. Blattgerste, J.; Strenge, B.; Renner, P.; Pfeiffer, T.; Essig, K. Comparing Conventional and Augmented Reality Instructions for Manual Assembly Tasks. In Proceedings of the 10th International Conference on PErvasive Technologies Related to Assistive Environments, Island of Rhodes, Greece, 21–23 June 2017; ACM: New York, NY, USA, 2017; pp. 75–82.

37. Attig, C.; Wessel, D.; Franke, T. Assessing Personality Differences in Human-Technology Interaction: An Overview of Key Self-report Scales to Predict Successful Interaction. In HCI International 2017—Posters’ Extended Abstracts; Stephanidis, C., Ed.; Springer International Publishing: Cham, Switzerland, 2017; pp. 19–29.

38. Muñoz, A.; Martí, A.; Mahiques, X.; Gracia, L.; Solanes, J.E.; Tornero, J. Camera 3D positioning mixed reality-based interface to improve worker safety, ergonomics and productivity. CIRP J. Manuf. Sci. Technol. 2020, 28, 24–37.

39. Franke, T.; Attig, C.; Wessel, D. A Personal Resource for Technology Interaction: Development and Validation of the Affinity for Technology Interaction (ATI) Scale. Int. J. Hum.-Comput. Interact. 2018, 35, 456–467.

40. Brooke, J. “SUS-A Quick and Dirty Usability Scale.” Usability Evaluation in Industry; CRC Press: London, UK, 1996; ISBN 9780748404605.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).