Abstract

As a newly developed metaheuristic algorithm, the artificial bee colony (ABC) has garnered a lot of interest because of its strong exploration ability and easy implementation. However, its exploitation ability is poor and dramatically deteriorates for high-dimension and/or non-separable functions. To fix this defect, a self-adaptive ABC with a candidate strategy pool (SAABC-CS) is proposed. First, several search strategies with different features are assembled in the strategy pool. The top 10% of the bees make up the elite bee group. Then, we choose an appropriate strategy and implement this strategy for the present population according to the success rate learning information. Finally, we simultaneously implement some improved neighborhood search strategies in the scout bee phase. A total of 22 basic benchmark functions and the CEC2013 set of tests were employed to prove the usefulness of SAABC-CS. The impact of combining the five methods and the self-adaptive mechanism inside the SAABC-CS framework was examined in an experiment with 22 fundamental benchmark problems. In the CEC2013 set of tests, the comparison of SAABC-CS with a number of state-of-the-art algorithms showed that SAABC-CS outperformed these widely-used algorithms. Moreover, despite the increasing dimensions of CEC2013, SAABC-CS was robust and offered a higher solution quality.

1. Introduction

Optimization problems are omnipresent in industrial manufacturing and scientific activities. In general, these problems are complex and characterized by non-convexity, non-differentiability, discontinuity, etc. Such problems are hard to handle with traditional mathematical methods, because these methods impose strict requirements on the mathematical properties of the problem. In recent years, swarm algorithms (SAs) have received much attention as a powerful tool for solving these kinds of complex optimization problems. A wide variety of SAs have been developed, often inspired by the behaviors of organisms in the natural world, including the genetic algorithm (GA) [1,2], the firefly algorithm (FA) [3], the ant colony algorithm (ACO) [4], the differential evolution algorithm (DE) [5,6,7], the particle swarm algorithm (PSO) [8,9], and the artificial bee colony (ABC) [10,11].

The ABC algorithm, which replicates the close collaboration of employed bees, onlooker bees, and scout bees in discovering suitable food sources, was first described by Karaboga et al. [12] in 2005. Due to its straightforward design, few control parameters, and strong robustness, the ABC has attracted researchers’ interest and has been applied to route planning, resource scheduling, and other related problems. However, it is very difficult for a single operator to solve all kinds of optimization problems well. The ABC is no exception and faces the following challenges:

- In comparison to other SAs, ABC converges slowly, because its search equation updates only one dimension at a time. One difficulty that should be addressed is therefore how to increase the algorithm’s convergence speed while maintaining high performance;

- The problems that can be solved using the artificial bee colony algorithm are limited in variety, due to the simplicity and singularity of the ABC algorithm’s updating method;

- According to certain pertinent literature studies [13], ABC has a significant capacity for exploration because of a single search equation in the evolution process. As a result, a popular area of research is how to improve exploitation, while maintaining exploration.

Many ABC variants have been designed to address these deficiencies, and the modified techniques can be divided into three groups: modifying search equations [14], assembling a multi-strategy [15], and hybridizing other metaheuristic search frameworks [16].

In order to resolve these difficult optimization issues, this study suggests a novel variation of ABC called SAABC-CS, which stands for self-adaptive ABC with a candidate strategy pool. Its main characteristics can be summed up as follows:

- Five alternative search methods are combined to generate a candidate strategy pool that improves ABC’s exploitation capability, without sacrificing exploration capability. In addition, we include multi-dimensional updates in each strategy, which considerably increases the frequency of individual updates and boosts the convergence speed. A self-adaptive method is also suggested for choosing the right search technique. The knowledge from the previous information is used to adaptively update the selection probability of each strategy;

- Our approach, in contrast to other algorithms, performs well without adding control parameters when applying each strategy, which is aligned with the original intention of the artificial bee colony algorithm: simplicity and effectiveness;

- By improving the method, we make it more useful for solving practical, real-world problems.

The remaining part of this paper is divided into the following sections: The works pertaining to the fundamental ABC and its variations are detailed in Section 2. In Section 3, the suggested algorithm SAABC-CS is described. The effectiveness of our suggested approach and the analysis of the results of our algorithm in comparison to other algorithms are provided in Section 4. The last Section provides a summary of our work.

2. Related Work

2.1. ABC Algorithm

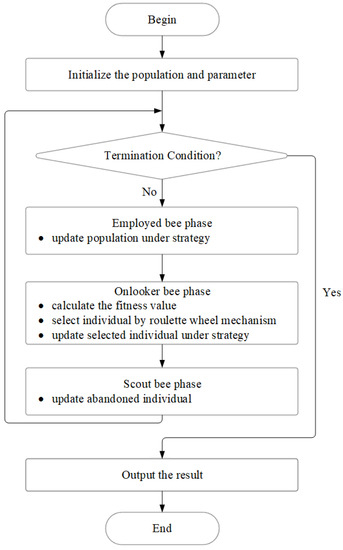

The initialization period, employed bee period, onlooker bee period, and scout bee period make up the primary foundation of the ABC. All bees have different responsibilities at different stages. It should be noted that each bee has its own food resources in each period, and the number of bees in each period is consistent. The food resource in this statement often represents a candidate solution to the optimization problem. The evolution framework of the ABC is seen in Figure 1.

Figure 1.

The ABC framework.

Like all SAs, the ABC needs to go through an initialization stage before performing the other three stages of work cyclically. The following are the relevant contents of each phase:

- (i) Initialization phase

In this phase, the entire population is randomly initialized. Each individual represents a food resource and is generated using Equation (1):

$$x_{i,j} = x_j^{\min} + rand(0,1)\cdot\left(x_j^{\max} - x_j^{\min}\right) \tag{1}$$

where $i = 1, 2, \ldots, SN$ and $j = 1, 2, \ldots, D$. D stands for the dimension of the optimization problem, while SN is the number of solutions in the swarm. $rand(0,1)$ is a random number in (0,1), and $x_{i,j}$ represents the jth-dimension element of the ith individual. $x_j^{\min}$ and $x_j^{\max}$ denote the minimum and maximum values of the jth dimension, respectively.

- (ii) Employed bee phase

The evolution enters the employed bee phase after initialization. According to Equation (2), each employed bee searches the search area for a new food source during this phase:

$$v_{i,j} = x_{i,j} + \phi_{i,j}\left(x_{i,j} - x_{k,j}\right) \tag{2}$$

The random number $\phi_{i,j}$ takes a value in [−1,1]. $x_k$ is a solution chosen at random from the whole population and must differ from $x_i$. The value of $v_{i,j}$ is reinitialized using Equation (1) if it oversteps either the lower or the upper bound. $v_i$ takes the place of $x_i$ if its objective function value is superior to that of $x_i$.

In addition, each solution has an associated counter, the number of updated individuals (NUI), which tracks how many consecutive updates have failed. NUI is recorded in a 1 × SN matrix. At the beginning, every element of NUI is initialized to zero. After that, whenever $x_i$ is successfully replaced by $v_i$, the ith value of NUI is reset to zero. Otherwise, the ith value of NUI is increased by one. The NUI matrix is used in the subsequent scout bee phase.

- (iii) Onlooker bee phase

The bees continue to search for new food sources during the onlooker bee phase. In contrast to the employed bee phase, an onlooker bee only searches in the vicinity of a chosen food resource, which is equivalent to exploiting that food resource further. At this stage, not every food resource is selected for a search in its vicinity; instead, food resources are selected with a probability based on their fitness values. The fitness value is calculated using Equation (3):

$$fit_i = \begin{cases} \dfrac{1}{1 + f_i}, & f_i \ge 0 \\ 1 + \left|f_i\right|, & f_i < 0 \end{cases} \tag{3}$$

where $fit_i$ and $f_i$ are the fitness value and the objective function value of $x_i$, respectively. $f_i$ is calculated in the employed bee phase. Equation (4) determines the selection probability of each food resource:

$$p_i = \frac{fit_i}{\sum_{n=1}^{SN} fit_n} \tag{4}$$

After that, the onlooker bees use the classic roulette wheel selection strategy to select a food resource. The larger the fitness value, the greater the chance that the food resource is selected. Equation (2) is also used as the update equation for the onlooker bees, and a candidate solution is handled in the same way as in the employed bee phase.

- (iv) Scout bee phase

In the scout bee phase, the NUI value associated with each individual is checked. Once an individual’s NUI exceeds a predefined limit, this food resource is considered exhausted, which implies that the individual may be trapped at a local optimum. In this case, the employed bee is transformed into a scout bee, and a new solution is generated using Equation (1).
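The four phases described above can be sketched in a few lines of Python. This is a minimal illustration of the basic ABC for minimization, not the authors’ MATLAB implementation: it assumes the standard forms of Equations (1)–(4), and the function names (`fitness`, `basic_abc`) and default settings (`sn`, `limit`, `max_cycles`) are hypothetical choices made for the example.

```python
import numpy as np

def fitness(f):
    """Equation (3): map objective values (minimization) to fitness values."""
    f = np.asarray(f, dtype=float)
    safe = np.where(f >= 0, f, 0.0)  # keeps the unused branch free of division issues
    return np.where(f >= 0, 1.0 / (1.0 + safe), 1.0 + np.abs(f))

def basic_abc(objective, lower, upper, sn=20, limit=50, max_cycles=200, seed=0):
    """Basic ABC sketch; returns the best solution found and its objective value."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size

    # Initialization phase, Equation (1).
    x = lower + rng.random((sn, dim)) * (upper - lower)
    f = np.array([objective(xi) for xi in x])
    nui = np.zeros(sn, dtype=int)  # consecutive failed updates per solution
    best = int(np.argmin(f))
    best_x, best_f = x[best].copy(), float(f[best])

    def try_update(i):
        """Equation (2) on one random dimension, followed by greedy selection."""
        nonlocal best_x, best_f
        k = int(rng.integers(sn - 1))
        if k >= i:
            k += 1  # guarantees k != i
        j = int(rng.integers(dim))
        v = x[i].copy()
        v[j] = x[i, j] + rng.uniform(-1.0, 1.0) * (x[i, j] - x[k, j])
        if v[j] < lower[j] or v[j] > upper[j]:
            # Out-of-bound elements are reinitialized with Equation (1).
            v[j] = lower[j] + rng.random() * (upper[j] - lower[j])
        fv = objective(v)
        if fv < f[i]:
            x[i], f[i], nui[i] = v, fv, 0
            if fv < best_f:
                best_x, best_f = v.copy(), float(fv)
        else:
            nui[i] += 1

    for _ in range(max_cycles):
        # Employed bee phase: every food resource is searched once.
        for i in range(sn):
            try_update(i)
        # Onlooker bee phase: roulette wheel selection, Equations (3) and (4).
        fit = fitness(f)
        prob = fit / fit.sum()
        for i in rng.choice(sn, size=sn, p=prob):
            try_update(int(i))
        # Scout bee phase: exhausted food resources are regenerated.
        for i in range(sn):
            if nui[i] > limit:
                x[i] = lower + rng.random(dim) * (upper - lower)
                f[i] = objective(x[i])
                nui[i] = 0
                if f[i] < best_f:
                    best_x, best_f = x[i].copy(), float(f[i])
    return best_x, best_f
```

For example, `basic_abc(lambda z: float(np.sum(z**2)), [-5.0] * 5, [5.0] * 5)` minimizes a 5-dimensional sphere function. Note that only one dimension changes per update, which is the source of the slow convergence discussed in the Introduction.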

2.2. ABC Variants

Although the ABC algorithm has a good optimization performance, it also has certain shortcomings, such as a tendency to fall into local optima, an imbalance between exploration and exploitation, and a slow convergence speed. Researchers have proposed many different methods to address these problems. Most solutions can be divided into three distinct categories:

- (1) Modifying the search equation

The performance of the ABC algorithm depends heavily on the solution search equation. In the basic ABC, the solution search equation does well in exploration but poorly in exploitation, since each individual used in Equation (2) is chosen randomly from the overall population. Thus, inspired by [17,18], Wang et al. [19] proposed an ABC variant based on knowledge fusion (KFABC), whose viability was tested on 32 benchmark functions. Lu et al. [20] designed Fast ABC (FABC), which uses two additional search equations for employed bees and onlooker bees, respectively. These two equations also utilize the bees’ individual information and employ a Cauchy operator to balance the global and local search capacities of individuals. To demonstrate its effectiveness, FABC was evaluated on 10 benchmark functions and a real path planning problem. Gao et al. [21], inspired by differential evolution (DE), presented a modified ABC (MABC) with an improved search equation. This variant enables the bees to search around the best solutions found in the previous iteration, to improve exploitation. A total of 28 benchmark functions were used in the comparison experiments. When compared to two ABC-based algorithms, the findings showed that MABC performed well on complicated numerical optimization problems. Guo et al. [22] developed an improved algorithm based on MABC, called the global artificial bee colony search algorithm, which incorporates the historical best positions of all employed bees, based on the information about food sources, into the search equations. Yu et al. [23] proposed another ABC variant called the adaptive ABC (AABC). It adjusts the greedy degree of the original ABC using a novel greedy position update strategy and an adaptive control scheme. On a set of benchmark functions, AABC outperformed the original ABC and later ABC variants in their tests.

- (2) Hybridizing another metaheuristic search framework

Hybrid algorithms combine two or more metaheuristic algorithms, so that the advantages of one algorithm can offset the deficiencies of the others. This approach can improve the optimization performance of an algorithm. The following are some examples of hybrid algorithms that combine the ABC with other heuristic algorithms. Jadon et al. [24] proposed a hybridization of the ABC and DE algorithms (HABCDE), to develop a more efficient algorithm than ABC or DE individually. Over twenty test problems and four real optimization problems were used to evaluate the performance of HABCDE. Alqattan et al. [25] presented a hybrid particle movement ABC algorithm (HPABC). This algorithm adapts the particle moving process to improve the exploitation of the original ABC, and seven benchmark functions were used to validate it. Chen et al. [26], on the other hand, proposed the simulated annealing-based ABC algorithm (SAABC), which adds a simulated annealing algorithm to the employed bee search process to improve exploitation. The experimental results were validated on a collection of numerical benchmark functions of varying size, and showed that the SAABC algorithm outperformed the ABC and global best guided ABC algorithms in the majority of tests.

- (3) Assembling multi-strategy

Multi-strategy search refers to the use of different search strategies in different search stages of the ABC or for different food resources. In recent years, several algorithms that introduce multi-strategy search into ABC have been proposed, but their effectiveness varies. Gao et al. [27] formed a strategy pool using three distinct search strategies and adopted an adaptive selection mechanism to further enhance the performance of the algorithm. It was evaluated using a set of 22 benchmark functions and compared against other ABCs. In almost every case, the comparison revealed that the suggested method provided superior results. Song et al. [28] designed a novel algorithm called MFABC. MFABC improves the search ability of the ABC algorithm with a small population by fusing multiple search strategies for both employed bees and onlooker bees. MFABC’s accuracy, stability, efficiency, and convergence rate were demonstrated experimentally on a set of benchmark functions. Chen et al. [29] proposed a new algorithm called self-adaptive differential artificial bee colony (sdABC), by incorporating multiple diverse search strategies and a self-adaptive mechanism into the original ABC algorithm. The sdABC technique was tested on 28 benchmark functions, including both common separable and difficult non-separable CEC2015 functions. The experimental findings suggested that sdABC obtained substantially better outcomes on both separable and non-separable functions than earlier ABC algorithms. In addition to the above ABC variants, Zhou et al. [30] developed a modified neighborhood search operator that utilizes an elite group, which is called MGABC. Their experiments employed 50 well-known test functions, comprising 22 scalable basic test functions and 28 complicated CEC2013 test functions, and one real-world optimization problem to validate the technique. The comparison included seven distinct and well-established ABC variants, and the findings suggested that the technique achieved at least equivalent performance on most of the test functions.

Assessing these three improvement directions, the first is too simple and the second makes the algorithm extremely complicated. Thus, we choose the third direction as our main interest. We based our research partially on prior work from other researchers. By assembling a multi-strategy search, a wider range of issues can be tackled and the outcomes are better.

3. The Proposed Algorithm SAABC-CS

3.1. Candidate Strategy Pool

In most cases, different problems have different characteristics, and they are hard to describe clearly in advance. Thus, problems are usually black boxes. Moreover, different update strategies for ABC have unique characteristics. It is unrealistic to rely on only one strategy to solve all problems. These observations make us reconsider how to select strategies or construct novel strategies to improve the robustness when facing different problems. Based on the motivations above, we selected five search strategies with different characteristics from the relevant literature [18,31] to construct our candidate strategy pool. In addition, we employed the binomial crossover method to enable the algorithm to find optimal solutions more effectively. Considering both exploration and exploitation during the entire evolution process, five strategies were selected and are described in detail, as follows:

- (i) “rand”

- (ii) “pbest-1”

- (iii) “pbest-2”

- (iv) “current-to-pbest”

- (v) “pbest-to-rand”

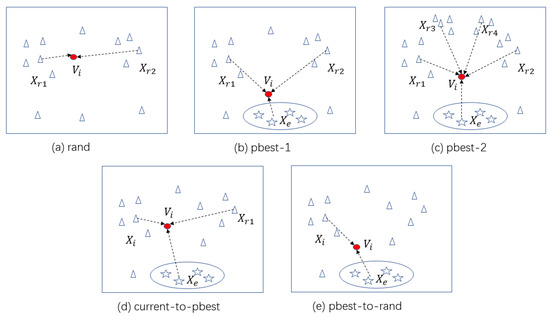

Figure 2 roughly depicts the behavior of each strategy. The individuals inside the ellipse (stars) are the elite individuals, and the remaining triangle icons represent the other, common individuals. The red circle in Figure 2 represents the new individual generated by the corresponding strategy. With the “rand” strategy, the position of the new individual in Figure 2a lies between two different individuals and is close to the first random individual. More precisely, its position falls within a circle with the first random individual as the center and the distance between the two random individuals as the radius. The behavior of the “rand” strategy makes the algorithm focus more on a global search. Similarly, with the “pbest-1” strategy, the position of the new individual in Figure 2b lies between an elite individual and two different common individuals. This strategy leads the algorithm to learn the elite’s information, while still focusing on a global search. With the “pbest-2” strategy, the position of the new individual in Figure 2c is also in the center of the selected individuals, which makes our algorithm utilize more individual sampling information. As shown in Figure 2d, in the “current-to-pbest” strategy, the position of the new individual is affected by the current individual, an elite individual, and a randomly selected individual. The position of the new individual in Figure 2e is based on the elite individual and the current individual, so the “pbest-to-rand” strategy takes both into consideration.

Figure 2.

Schematic diagrams of five different strategies. (Stars represent elite individuals, triangles represent common individuals, red circles represent new individual, dashed arrows represent search direction).

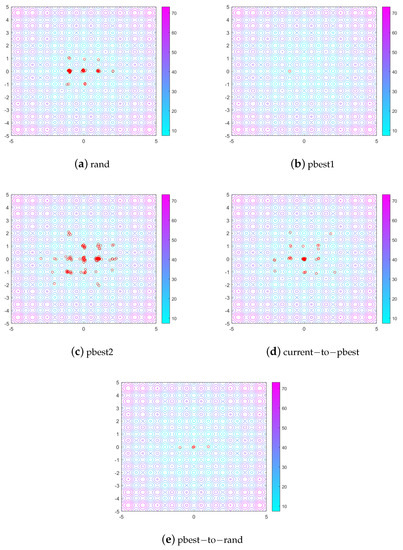

In order to further reflect the different characteristics of the five strategies in seeking optimal solutions, we performed an experiment on the Rastrigin function [32] under the same conditions. The formula of the Rastrigin function is as follows, and its dimension D was set to 2:

$$f(X) = \sum_{j=1}^{D}\left[x_j^2 - 10\cos\left(2\pi x_j\right) + 10\right]$$

where X is a 2-dimensional individual.
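As a quick reference, the standard Rastrigin function can be written as below. The function name `rastrigin` is chosen for this example; the definition is the commonly used one, whose global minimum is 0 at the origin and which has many regularly spaced local minima.

```python
import numpy as np

def rastrigin(x):
    """Standard Rastrigin function; accepts a vector of any dimension."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x**2 - 10.0 * np.cos(2.0 * np.pi * x) + 10.0))
```

At the integer point (1, 1), for instance, each cosine term equals 1, so the value reduces to the sum of squares, which is one of the local minima that can trap a purely exploitative strategy.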

In this experiment, we obtained the two-dimensional individual distribution for the five strategies after 20 generations, and the results are presented in Figure 3. The initial population size of each strategy was 100. In Figure 3a, the individuals are dispersed around several local optima that are still distant from the global optimum. Thus, the “ABC/rand” strategy has a strong exploration ability but a weak exploitation ability. In Figure 3b, all individuals clearly converge around one local optimum, which is not the global optimum. Thus, the “ABC/pbest-1” strategy has a strong exploitation ability but a weak exploration ability. The “ABC/pbest-2” strategy originates from “ABC/pbest-1”, but with increased exploration ability. This modification causes most individuals to be distributed around the global optimum, with some individuals located around other local optima. The results in Figure 3c further demonstrate that the “ABC/pbest-2” strategy increases the exploration ability while keeping the exploitation ability. As for the results in Figure 3d, the “ABC/current-to-pbest” strategy uses the information of the current individual, a different random individual, and a random elite individual. Thus, it has a strong exploration ability in early generations and a strong exploitation ability in late generations. As Figure 3e shows, under “ABC/pbest-to-rand”, the individuals mainly converged around the global optimum, with others located near local optima. This strategy is dominated by the elite individual but also uses the current individual’s information. Thus, it maintains a significant capacity for exploitation, while also being able to leave a local optimum and move to the global optimum or one near it.

Figure 3.

Five strategies’ contour graphs based on the two-dimensional Rastrigin function. (Red dots represent individuals. Subfigures (a–e) represent individual distribution map of the five strategies in the current generation, respectively).

With the exception of the “ABC/rand” technique, the other four search methods all utilize the information of the elite group. The following two benefits result from using an elite group instead of the elite with the best fitness:

(1) In the first place, this allows the entire population to fully utilize the knowledge of the elite solution group during the evolution process and evolve in a better way.

(2) Second, the whole population is prone to becoming locked in local optima if it only uses the current global best solution to guide the search. With an elite group, however, the population can evolve in several promising directions and is provided with better solutions. As a result, it is easier for the population to move away from local optima and reach the global or a near-optimal region.

Additionally, the original ABC search approach performs poorly on problems with non-separable variables, since it updates only one variable at a time. Therefore, to update several dimensions at once, the strategies combine mutation and crossover, as in GA. Using various update strategies inside the adaptive mechanism thus enhances the algorithm’s efficiency, while simultaneously strengthening its robustness. To create a trial vector $u_i$, we apply a binomial crossover operator to $x_i$ and $v_i$:

$$u_{i,j} = \begin{cases} v_{i,j}, & \text{if } rand_j \le M \text{ or } j = k \\ x_{i,j}, & \text{otherwise} \end{cases}$$

where $i = 1, 2, \ldots, SN$ and $j = 1, 2, \ldots, D$. k is an integer chosen at random from [1, D], which ensures that at least one element is updated. $rand_j$ is a uniformly distributed random number between 0 and 1.
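The binomial crossover described above can be illustrated with a short sketch. It assumes the DE-style rule in which a dimension is taken from the mutated vector when a uniform random number does not exceed the dimension-update parameter M, or when the dimension is the randomly forced index k; the function name and argument names are hypothetical, and indices are 0-based here.

```python
import numpy as np

def binomial_crossover(x, v, m=0.5, rng=None):
    """Mix source vector x and mutated vector v into a trial vector.

    Assumed rule: take v[j] when rand_j <= m or j == k, otherwise keep x[j].
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, dtype=float)
    v = np.asarray(v, dtype=float)
    d = x.size
    k = int(rng.integers(d))      # forced dimension, so at least one element changes
    mask = rng.random(d) <= m     # dimensions inherited from the mutated vector
    mask[k] = True
    return np.where(mask, v, x)
```

With m = 0.5, roughly half of the dimensions are updated in one step, which is what allows several coupled variables of a non-separable function to move together.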

In our algorithm, we also apply a boundary correction technique to improve the outcome. If the jth-dimension element of the trial vector is outside the boundary, we make the following revisions:

The better of the source vector and the trial vector then enters the next generation.

The strategy parameters are set as follows: the size of the elite group is q·SN, and the dimension update is controlled by the parameter M, which is set to 0.5.
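The elite group used by the four pbest-based strategies can be sketched as follows. The value q = 0.1 reflects the statement in the Abstract that the top 10% of the bees form the elite group; the function names, the rounding of q·SN, and the uniform sampling of one elite individual are assumptions made for illustration.

```python
import numpy as np

def elite_indices(f, q=0.1):
    """Indices of the best q*SN solutions for a minimization problem."""
    f = np.asarray(f, dtype=float)
    n_elite = max(1, int(round(q * f.size)))  # at least one elite individual
    return np.argsort(f)[:n_elite]

def sample_pbest(x, f, q=0.1, rng=None):
    """Pick one elite individual uniformly at random (assumed sampling rule)."""
    rng = np.random.default_rng() if rng is None else rng
    idx = elite_indices(f, q)
    return np.asarray(x)[int(rng.choice(idx))]
```

Sampling from a group instead of always using the single best solution is what gives the population several promising directions to follow, as argued in points (1) and (2) above.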

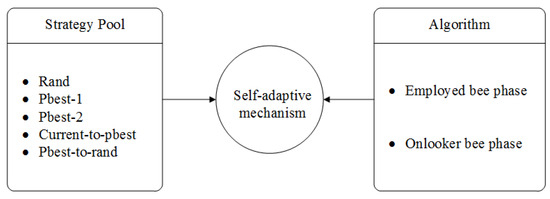

3.2. Self-adaptive Mechanism

Because the five search strategies above have distinctive characteristics, the algorithm must choose the most appropriate one in each phase of the search to maximize its efficiency. We therefore include an adaptive mechanism in our proposed algorithm to choose the best strategy. The fundamental principle of self-adaptation is to dynamically modify the probability of choosing each strategy in accordance with its success in producing superior solutions. The selection probability of a strategy increases when it produces better solutions. Additionally, every strategy has a chance of being picked during the evolution, owing to the roulette wheel selection. Such a self-adaptive system can help the population escape from local optima and move toward the global optimum. The combination of this self-adaptive mechanism with the five strategies is depicted in the flowchart in Figure 4.

Figure 4.

Flowchart of self-adaptive multiple strategies.

In the initialization phase, the variables of the self-adaptive mechanism are initialized. Prob is a 1 × 5 matrix, in which each element is the selection probability of one of the five strategies above, and the sum of all elements is 1. At the beginning, the selection probabilities are equal, to guarantee fairness. Two 1 × SN matrices, sFlag and fFlag, mark whether the candidate solution is better or worse than the original solution when using the corresponding strategy, where SN is the population size. If the newly generated solution is better, the associated sFlag element is set to 1 and the corresponding fFlag element is set to 0, and vice versa. We also use two 5 × LP matrices, sCounter and fCounter, to record the proportion of successes and failures of each strategy in each generation of a learning period of LP generations. LP is a fixed interval, which we set to 10 here. Every LP generations, we use sCounter and fCounter to update the Prob of each strategy; their statistics are the main source for updating the Prob values. Moreover, every time the selection probabilities are updated, every element of sFlag, fFlag, sCounter, and fCounter is reset to 0, to avoid affecting the next LP generations. Prob is updated using Equation (14), and the probabilities are then normalized using Equation (15).
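The bookkeeping described above can be sketched as a small class. Because Equations (14) and (15) are not reproduced in this text, the update rule below is an assumption: each strategy is scored by its success rate over the learning period plus a small constant `eps` (so that no probability drops to zero), and the scores are then normalized. The class name, method names, and `eps` are hypothetical, and the counters store raw counts for simplicity.

```python
import numpy as np

class StrategySelector:
    """Success-rate-based roulette selection over a pool of strategies."""

    def __init__(self, n_strategies=5, lp=10, eps=0.01, seed=0):
        self.rng = np.random.default_rng(seed)
        self.lp = lp
        self.eps = eps
        # Equal probabilities at the start, to guarantee fairness.
        self.prob = np.full(n_strategies, 1.0 / n_strategies)
        self.s_counter = np.zeros((n_strategies, lp))
        self.f_counter = np.zeros((n_strategies, lp))
        self.gen = 0  # position inside the current learning period

    def select(self):
        """Roulette wheel selection of one strategy index."""
        return int(self.rng.choice(self.prob.size, p=self.prob))

    def record(self, strategy, s_flag, f_flag):
        """Accumulate the per-individual success and failure flags."""
        self.s_counter[strategy, self.gen] += np.sum(s_flag)
        self.f_counter[strategy, self.gen] += np.sum(f_flag)

    def end_generation(self):
        """Advance one generation; refresh Prob after every LP generations."""
        self.gen += 1
        if self.gen < self.lp:
            return
        s = self.s_counter.sum(axis=1)
        f = self.f_counter.sum(axis=1)
        total = s + f
        # Assumed stand-in for Equation (14): success rate plus eps.
        rate = np.where(total > 0, s / np.maximum(total, 1.0), 0.0) + self.eps
        # Normalization, playing the role of Equation (15).
        self.prob = rate / rate.sum()
        self.s_counter[:] = 0.0
        self.f_counter[:] = 0.0
        self.gen = 0
```

In a main loop, one would call `select()` once per generation, apply the chosen strategy to the population, pass the resulting sFlag and fFlag vectors to `record()`, and finish with `end_generation()`.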

3.3. Scout Bee and Modified Neighborhood Search Operator

In this stage, we utilize the method proposed by Wang et al. in KFABC [19], adding two operators based on opposition-based learning (OBL) and Cauchy disturbance to generate two additional solutions. Then, we select the best solution among the random solution, the OBL solution, and the Cauchy solution to replace the abandoned solution. The random operator, the OBL operator, and the Cauchy disturbance operator that produce the candidate solutions are described in Equations (1), (16) and (17), respectively.

where Lower and Upper define the boundary of the search space, j = 1, 2,…, D, and the operator is applied to the abandoned solution.

where the Cauchy term returns a value drawn from the Cauchy distribution.
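The three-candidate scout step can be sketched as below. The OBL candidate uses the standard opposition formula (lower bound plus upper bound minus the current value); the Cauchy candidate is assumed to add a standard Cauchy sample to each dimension, and clipping it to the bounds is a further assumption, since Equations (16) and (17) are not reproduced in this text. Function names are hypothetical.

```python
import numpy as np

def scout_candidates(x_abandoned, lower, upper, rng=None):
    """Return the random, OBL, and Cauchy candidates for an abandoned solution."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x_abandoned, dtype=float)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    # Random candidate, Equation (1).
    rand_sol = lower + rng.random(x.size) * (upper - lower)
    # Opposition-based candidate (standard OBL form).
    obl_sol = lower + upper - x
    # Cauchy-disturbed candidate (assumed form), clipped to the search space.
    cauchy_sol = np.clip(x + rng.standard_cauchy(x.size), lower, upper)
    return rand_sol, obl_sol, cauchy_sol

def replace_abandoned(objective, x_abandoned, lower, upper, rng=None):
    """Pick the best of the three candidates (minimization)."""
    candidates = scout_candidates(x_abandoned, lower, upper, rng)
    values = [objective(c) for c in candidates]
    return candidates[int(np.argmin(values))]
```

Compared with the purely random restart of the basic ABC, choosing the best of three candidates costs two extra function evaluations per abandoned solution.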

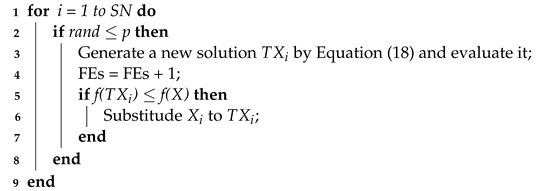

In addition, we use a neighborhood search operator in our method as a supplementary operator, which was suggested by Zhou et al. in MGABC [30]. The operator continues to use the information of the elite group and is applied in a given generation with a certain probability p (p is 0.1, as in MGABC [30]). The operator is shown in Equation (18).

where three solutions are chosen at random from the elite group and must be distinct from the current solution. r1, r2, and r3 are positive numbers drawn at random from (0,1) that must also satisfy the restriction r1 + r2 + r3 = 1. If the new solution is superior to the current solution, it takes its place.

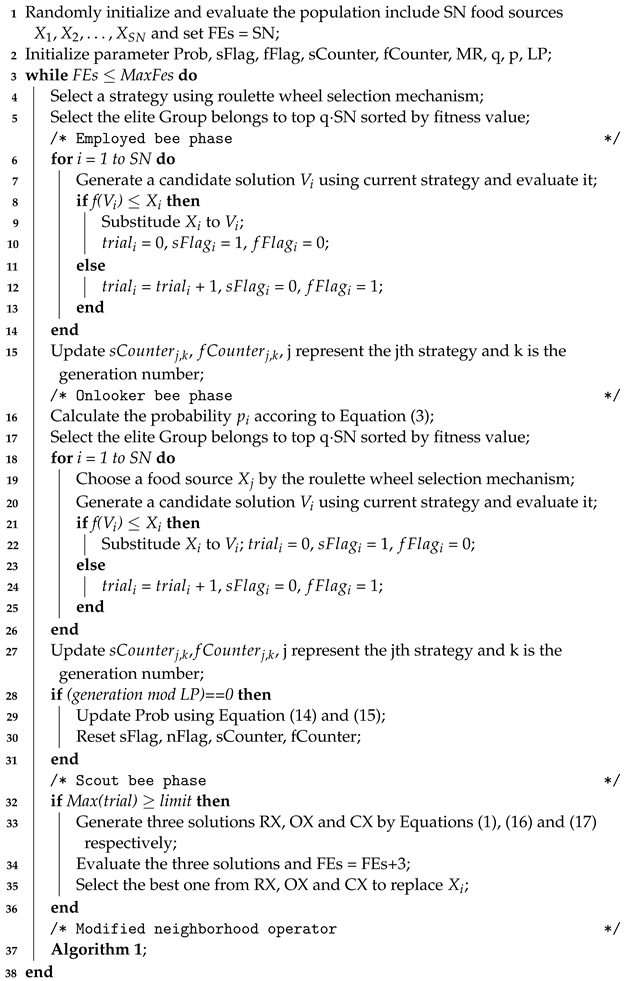

3.4. Framework of SAABC-CS

During the employed and onlooker bee phases, SAABC-CS employs five distinct search strategies, four of which make use of knowledge from the elite group. To make better use of these five strategies, we provide an adaptive mechanism based on prior knowledge to choose the best search strategy. To enhance the algorithm’s efficiency and speed of convergence, we modify the search technique used by the scout bee and add an additional neighborhood search operator. The pseudo-code for SAABC-CS is provided in Algorithms 1 and 2, and its flowchart is shown in Figure 5, which together help to explain the entire process.

| Algorithm 1: The pseudo-code of Modified neighborhood operator |


Figure 5.

Flowchart of SAABC-CS.

| Algorithm 2: The pseudo-code of SAABC-CS. |


4. Experiments

4.1. Test Problems

We ran trials on 50 test problems, separated into two benchmark sets, to demonstrate the efficacy of our proposed algorithm SAABC-CS. The first benchmark set included 22 basic functions, and the second was the CEC2013 set. The dimensions of the CEC2013 benchmarks were set to 30, 50, and 100. We used two values (Mean and Std) as metrics for algorithm comparison. “Mean” represents the average of the best results obtained by the algorithm over the independent runs, and “Std” represents the corresponding standard deviation. Experiment 1 verified the effectiveness of both the strategy pool and the self-adaptive method. Experiment 2 compared the performance of SAABC-CS with that of five other algorithms on the CEC2013 function set. All algorithms in this section were implemented in MATLAB R2020a. Table 1, Table 2, Table 3 and Table 4 report the comparison results, in which the best result for each problem is marked in bold, and summarize the statistical findings. “+/=/−” indicates that SAABC-CS outperformed, was comparable to, or underperformed the compared algorithm on the test problems.
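For reference, the two metrics can be computed from the best objective values of the independent runs as sketched below. The function name is hypothetical, and the use of the sample standard deviation (`ddof=1`, which matches the default of MATLAB’s `std`) is an assumption, since the text does not state which normalization was used.

```python
import numpy as np

def summarize_runs(best_values):
    """Return (Mean, Std) of the best objective values over independent runs."""
    values = np.asarray(best_values, dtype=float)
    return float(values.mean()), float(values.std(ddof=1))
```

A low Mean indicates good average solution quality, while a low Std indicates that the algorithm behaves consistently across runs.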

Table 1.

Results of the five single-strategy ABC algorithms vs. the multi-strategy ABC algorithms with self-adaptive/random selection on the 22 basic functions (D = 30).

Table 2.

Results of SAABC-CS vs. the other five ABC algorithms on CEC2013 function with D = 30.

Table 3.

Results of SAABC-CS vs. the other five ABC algorithms on CEC2013 function with D = 50.

Table 4.

Results of SAABC-CS vs. the other five ABC algorithms on CEC2013 function with D = 100.

4.2. Effectiveness Analysis of the Proposed Strategy Pool and Self-adaptive Mechanism

In experiment 1, we wanted to probe the following two problems:

- Problem 1: Is it necessary to assemble the five different strategies?

- Problem 2: Is the self-adaptive mechanism required and are the results affected when the self-adaptive selection mechanism is replaced by a random selection mechanism?

To solve problem 1, each single strategy was embedded into the original ABC, to make a result comparison between each strategy and SAABC-CS. As for problem 2, we tested two different strategy selections. One was the random strategy selection mechanism, and the other was the self-adaptive selection mechanism. The ABC algorithms including various search methods mentioned below examined the efficacy of the strategy pool and the self-adaptive mechanism.

- ABC-rand: the original ABC with rand strategy;

- ABC-pbest-1: the original ABC with pbest-1 strategy;

- ABC-pbest-2: the original ABC with pbest-2 strategy;

- ABC-current-to-pbest: the original ABC with current-to-pbest strategy;

- ABC-pbest-to-rand: the original ABC with pbest-to-rand strategy;

- SAABC-CS: ABC with self-adaptive selection mechanism in the strategy pool;

- RABC-CS: ABC with random selection mechanism in the strategy pool.

The basic settings for the seven algorithms listed above were as follows: SN, D, limit, MaxFEs, and the number of runs were set to 100, 30, 100, 5000·D, and 30, respectively. Table 1 displays the results of the five single-strategy ABC algorithms, RABC-CS, and SAABC-CS on the 22 basic functions. SAABC-CS outperformed ABC-rand, ABC-pbest-1, ABC-pbest-2, ABC-current-to-pbest, and ABC-pbest-to-rand on 14, 16, 15, 14, and 13 of the 22 test functions, respectively. This demonstrates that combining the five strategies increased the accuracy on the test functions. SAABC-CS outperformed RABC-CS, which chooses strategies at random, on 14 functions, while being comparable on the other 8. In this test suite, the self-adaptive selection mechanism performed better than the random selection mechanism.

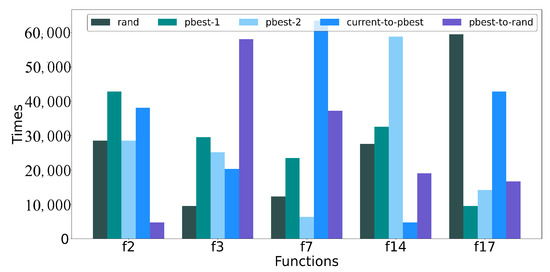

To determine the contribution of each strategy, we counted how many times each strategy was used during the whole process for two multimodal functions (f14, f17) and three unimodal functions (f2, f3, f7). The outcomes are displayed in Figure 6. As shown in the figure, the most frequently used strategies for f2, f3, f7, f14, and f17 were “pbest-1”, “pbest-to-rand”, “current-to-pbest”, “pbest-2”, and “rand”, respectively. Among the five single strategies in Table 1, these same strategies produced the best results for the f2, f3, f7, f14, and f17 functions, respectively. Taking function f7 as an example, “current-to-pbest” had the best performance and “pbest-to-rand” came second among the five single strategies in Table 1. According to Figure 6, the proposed self-adaptive mechanism chose the “current-to-pbest” strategy most often, followed by “pbest-to-rand”. This agreement explains why the adaptive selection approach worked so well: the self-adaptive mechanism was able to choose the best strategy according to the requirements of the problem, which improved the quality of the solutions.

Figure 6.

The frequency of strategies by function.
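The link between success rates and usage counts can be sketched in a few lines. The following is a minimal illustration, not the exact update rule of SAABC-CS: it assumes roulette-wheel selection with probabilities proportional to a Laplace-smoothed success rate, and the names `StrategySelector` and `simulate`, as well as the per-strategy success probabilities in the usage note, are hypothetical.

```python
import random


class StrategySelector:
    """Roulette-wheel selection driven by per-strategy success rates (illustrative)."""

    def __init__(self, names, rng=None):
        self.names = list(names)
        self.success = {n: 0 for n in self.names}  # improvements produced
        self.trials = {n: 0 for n in self.names}   # times the strategy was evaluated
        self.usage = {n: 0 for n in self.names}    # times the strategy was chosen
        self.rng = rng or random.Random()

    def rates(self):
        # Laplace smoothing (an assumption) keeps unused strategies selectable.
        return {n: (self.success[n] + 1) / (self.trials[n] + 2) for n in self.names}

    def choose(self):
        rates = self.rates()
        weights = [rates[n] for n in self.names]
        name = self.rng.choices(self.names, weights=weights, k=1)[0]
        self.usage[name] += 1
        return name

    def report(self, name, improved):
        # Record whether the chosen strategy produced a better candidate.
        self.trials[name] += 1
        if improved:
            self.success[name] += 1


def simulate(true_rates, steps=5000, seed=0):
    """Toy run: each strategy succeeds with a fixed, made-up probability."""
    rng = random.Random(seed)
    selector = StrategySelector(true_rates.keys(), rng)
    for _ in range(steps):
        name = selector.choose()
        selector.report(name, rng.random() < true_rates[name])
    return selector.usage
```

Calling `simulate` with made-up success probabilities in which one strategy clearly dominates should yield usage counts concentrated on that strategy, which mirrors the pattern described for Figure 6.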

4.3. Comparison for CEC2013 Functions

In this section, we used the CEC2013 test suite in experiment 2 to further illustrate the effectiveness of SAABC-CS in handling complicated and high-dimensional functions. Since the CEC2013 test functions are more complicated than the 22 basic benchmark functions, finding the global best solution for CEC2013 is challenging. We compared SAABC-CS with the original ABC [12] and four additional state-of-the-art ABC variants (ABCNG [33], KFABC [19], SABC-GB [15], and MGABC [30]). We measured the outcomes for D = 30, 50, and 100 to examine the overall performance of these algorithms across several dimensions. For fairness, all methods were evaluated using the same parameters: SN = 100, limit = 1000, and MaxFEs = 10,000·D. The final statistical outcomes of the six algorithms, based on 30 independent runs, are summarized in Table 2, Table 3 and Table 4.

For D = 30, the results of the above ABC variants are reported in Table 2. SAABC-CS outperformed or equaled the ABC, ABCNG, KFABC, SABC-GB, and MGABC on 21, 17, 25, 20, and 25 of the 28 test functions, respectively. Additionally, SAABC-CS achieved the best results among all compared algorithms on 17 functions: F1–F5, F7, F8, F10, F12, F13, F15, F18, F20, F23–F25, and F28. The statistical results of all algorithms on CEC2013 with D = 50 are shown in Table 3. SAABC-CS outperformed or equaled the ABC, ABCNG, KFABC, SABC-GB, and MGABC on 21, 17, 25, 20, and 25 out of 28 functions, respectively. The outcomes of all algorithms for D = 100 are displayed in Table 4. SAABC-CS outperformed or equaled the ABC, ABCNG, KFABC, SABC-GB, and MGABC on 24, 20, 25, 19, and 25 out of 28 functions, respectively. Moreover, on F1–F9, F12–F13, F15–F20, F23–F25, and F28, SAABC-CS achieved the best value out of all algorithms. As a result, SAABC-CS had the best overall performance of the six algorithms examined.
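Counts of this kind can be tallied directly from a results table. The sketch below is only an illustration: it compares mean errors per function with an optional tolerance, whereas the comparison rule actually used for Tables 2–4 may rely on a statistical test; the function name `tally` and the numbers in the usage note are hypothetical.

```python
def tally(ours, other, tol=0.0):
    """Count functions where `ours` is better, equal, or worse than `other`.

    Both inputs are sequences of mean errors (lower is better), one entry
    per test function. Values within `tol` of each other count as equal.
    """
    if len(ours) != len(other):
        raise ValueError("both result lists must cover the same functions")
    better = sum(1 for a, b in zip(ours, other) if a < b - tol)
    equal = sum(1 for a, b in zip(ours, other) if abs(a - b) <= tol)
    worse = len(ours) - better - equal
    return better, equal, worse
```

For example, with the made-up errors `ours = [0.0, 1.2e-3, 5.0, 20.1]` and `other = [0.0, 3.4e-2, 4.0, 20.1]`, `tally(ours, other)` returns `(1, 2, 1)`, so the "outperformed or equaled" count would be 3 of 4.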

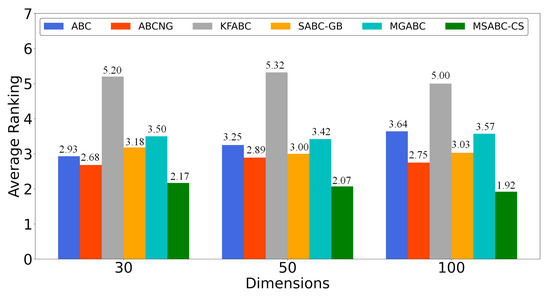

To give a more intuitive comparison, we used the Friedman test [34] to rank all algorithms across all test problems, with the results shown in Table 5 and Figure 7. The Friedman test was applied together with a post hoc procedure [35,36,37,38]; the lower the average rank, the better the overall performance of the algorithm. From the data, we can see that SAABC-CS had the best average rank for 30, 50, and 100 dimensions. Therefore, SAABC-CS ranked first overall among the compared algorithms in all three dimensions.

Table 5.

Average Ranking.

Figure 7.

Illustration of Average Ranking.
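The average rank underlying a Friedman-style comparison can be computed as follows. This is a minimal sketch: algorithms are ranked on each problem (rank 1 is best, ties share the mean rank) and the ranks are averaged over problems. The function name `average_ranks` and the small data set in the usage note are hypothetical and are not taken from Table 5.

```python
def average_ranks(results):
    """Mean rank of each algorithm across problems.

    results[p][a] is the error of algorithm a on problem p (lower is better).
    Tied algorithms on a problem share the mean of the ranks they occupy.
    """
    n_alg = len(results[0])
    totals = [0.0] * n_alg
    for row in results:
        order = sorted(range(n_alg), key=lambda a: row[a])
        i = 0
        while i < n_alg:
            # Extend j to the end of the group tied with position i.
            j = i
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            shared = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
            for k in range(i, j + 1):
                totals[order[k]] += shared
            i = j + 1
    return [t / len(results) for t in totals]
```

With the toy input `[[1.0, 2.0, 3.0], [1.0, 1.0, 2.0], [0.5, 3.0, 2.0]]`, the three algorithms receive average ranks of 3.5/3, 6.5/3, and 8/3, so the first algorithm ranks best overall.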

Based on the comparisons above for the CEC2013 functions, these results further support the efficacy of our approach in locating the optimum of complicated functions.

4.4. SAABC-CS for Practical Engineering Problems

In real-world engineering, parameter estimation of frequency-modulated (FM) sound waves [39] is frequently investigated. In this section, we compared SAABC-CS with other ABC variants on this problem. We ran each algorithm 30 times independently and compared the best outcome obtained by each algorithm.

4.4.1. Parameter Estimation for Frequency-Modulated (FM) Sound Waves

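Frequency-modulated (FM) sound wave synthesis plays an important role in various modern music systems. To estimate the parameters of an FM synthesizer, we solved a six-dimensional optimization problem in which the vector $X = \{a_1, \omega_1, a_2, \omega_2, a_3, \omega_3\}$ of the estimated wave in Equation (19) is optimized. The goal of this optimization problem was to generate a sound (19) similar to the target sound (20). This problem is highly complex and multimodal, with strong epistasis, and has a minimum value $f(X_{sol}) = 0$. The expressions for the estimated sound and the target sound waves are given as

$$y(t) = a_1 \sin\left(\omega_1 t \theta + a_2 \sin\left(\omega_2 t \theta + a_3 \sin\left(\omega_3 t \theta\right)\right)\right) \tag{19}$$

$$y_0(t) = 1.0 \sin\left(5.0\, t \theta - 1.5 \sin\left(4.8\, t \theta + 2.0 \sin\left(4.9\, t \theta\right)\right)\right) \tag{20}$$

where $\theta = 2\pi/100$ and the parameters are defined in the range $[-6.4, 6.35]$. The fitness function is the summation of the square errors between the estimated wave (19) and the target wave (20), as follows:

$$f(X) = \sum_{t=0}^{100} \left(y(t) - y_0(t)\right)^2$$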
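The objective can be written compactly in code. The sketch below assumes the standard formulation of this problem from the CEC 2011 real-world problem set [39]: θ = 2π/100, samples at t = 0, 1, ..., 100, target parameters (1.0, 5.0, −1.5, 4.8, 2.0, 4.9), and bounds [−6.4, 6.35] on every parameter. The names `fm_wave` and `fm_fitness` are illustrative.

```python
import math

THETA = 2.0 * math.pi / 100.0
# Parameters (a1, w1, a2, w2, a3, w3) of the target wave, per the assumed CEC 2011 definition.
TARGET = (1.0, 5.0, -1.5, 4.8, 2.0, 4.9)
BOUNDS = (-6.4, 6.35)  # assumed search range for each of the six parameters


def fm_wave(x, t):
    """Nested FM wave y(t) for the parameter vector x = (a1, w1, a2, w2, a3, w3)."""
    a1, w1, a2, w2, a3, w3 = x
    inner = a3 * math.sin(w3 * t * THETA)
    middle = a2 * math.sin(w2 * t * THETA + inner)
    return a1 * math.sin(w1 * t * THETA + middle)


def fm_fitness(x):
    """Sum of squared errors between the estimated and target waves."""
    return sum((fm_wave(x, t) - fm_wave(TARGET, t)) ** 2 for t in range(101))
```

Under these assumptions, `fm_fitness(TARGET)` evaluates to `0.0`, the global minimum, while any other vector in the search range gives a non-negative cost that an optimizer such as SAABC-CS would try to minimize.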

4.4.2. Results of SAABC-CS Compared with Other Algorithms

Table 6 summarizes the final results of our experiments, including the six estimated parameters and the optimal cost. SAABC-CS obtained the minimum cost among the compared algorithms on the FM sound wave parameter estimation problem.

Table 6.

SAABC-CS vs. other algorithms for parameter estimation of FM sound waves.

5. Conclusions

In this paper, we proposed a new self-adaptive ABC algorithm with candidate strategies (SAABC-CS) to balance exploration and exploitation during the search. Compared with the original ABC, SAABC-CS has three modifications, none of which adds extra parameters: (1) five strategies are selected and assembled in a strategy pool; (2) a self-adaptive mechanism is designed to make the algorithm more general; (3) three neighborhood mutations work together to enhance the scout bee phase. These additions enhance ABC’s overall performance by allowing it to tackle complicated problems with diverse features, while balancing its exploration and exploitation capabilities.

Comprehensive experiments were performed on two groups of functions: 22 basic benchmark functions and the CEC2013 test suite. The experimental results for the 22 basic test functions showed that SAABC-CS performed better than ABC with a single strategy on most functions. Furthermore, the self-adaptive selection mechanism in SAABC-CS was well suited to selecting an appropriate strategy for problems of different natures. For the complex and difficult CEC2013 benchmark suite, SAABC-CS still achieved promising results and surpassed the original ABC and four state-of-the-art ABC variants. With the increasing dimensions of CEC2013, the performance of SAABC-CS did not deteriorate. The method also produced good results on a real-world engineering problem (parameter estimation of FM sound waves), indicating that it can solve practical optimization problems as well as test functions.

Although extensive experiments were conducted to demonstrate the performance of SAABC-CS, we hope to theoretically analyze the algorithm, inspired by the literature [40,41,42]. We also wish to extend the use of SAABC-CS to certain large and expensive problems in the future.

Author Contributions

Y.H.: Conceptualization, Methodology, Software, Validation, Formal analysis, Writing—Original Draft. Y.Y.: Conceptualization, Methodology, Writing—Original Draft, Supervision, Project administration. J.G.: Conceptualization, Methodology, Writing—Original Draft, Supervision, Project administration. Y.W.: Methodology, Supervision, Project administration. All authors have read and agreed to the published version of the manuscript.

Funding

This work was part funded by the National Natural Science Foundation of China (No. 61966019), and the Fundamental Research Funds for the Central Universities (No. CCNU20TS026).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this investigation are accessible upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Whitley, D. A genetic algorithm tutorial. Stat. Comput. 1994, 4, 65–85.

2. Houck, C.R.; Joines, J.; Kay, M.G. A genetic algorithm for function optimization: A Matlab implementation. NCSU-IE TR 1995, 95, 1–10.

3. Wang, H.; Zhou, X.; Sun, H.; Yu, X.; Zhao, J.; Zhang, H.; Cui, L. Firefly algorithm with adaptive control parameters. Soft Comput. 2017, 21, 5091–5102.

4. Dorigo, M.; Birattari, M.; Stutzle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

5. Qin, A.K.; Huang, V.L.; Suganthan, P.N. Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans. Evol. Comput. 2008, 13, 398–417.

6. Qin, A.K.; Suganthan, P.N. Self-adaptive differential evolution algorithm for numerical optimization. In Proceedings of the 2005 IEEE Congress on Evolutionary Computation, Scotland, UK, 2–5 September 2005; Volume 2, pp. 1785–1791.

7. Mallipeddi, R.; Suganthan, P.N.; Pan, Q.K.; Tasgetiren, M.F. Differential evolution algorithm with ensemble of parameters and mutation strategies. Appl. Soft Comput. 2011, 11, 1679–1696.

8. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

9. Shi, Y. Particle swarm optimization: Developments, applications and resources. In Proceedings of the 2001 Congress on Evolutionary Computation (IEEE Cat. No. 01TH8546), Seoul, Republic of Korea, 27–30 May 2001; Volume 1, pp. 81–86.

10. Karaboga, D.; Basturk, B. On the performance of artificial bee colony (ABC) algorithm. Appl. Soft Comput. 2008, 8, 687–697.

11. Karaboga, D.; Akay, B. A comparative study of artificial bee colony algorithm. Appl. Math. Comput. 2009, 214, 108–132.

12. Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report, Technical Report-tr06; Engineering Faculty, Erciyes University: Kayseri, Turkey, 2005.

13. Bajer, D.; Zorić, B. An effective refined artificial bee colony algorithm for numerical optimisation. Inf. Sci. 2019, 504, 221–275.

14. Kumar, D.; Mishra, K. Co-variance guided artificial bee colony. Appl. Soft Comput. 2018, 70, 86–107.

15. Xue, Y.; Jiang, J.; Zhao, B.; Ma, T. A self-adaptive artificial bee colony algorithm based on global best for global optimization. Soft Comput. 2018, 22, 2935–2952.

16. Cui, L.; Li, G.; Zhu, Z.; Lin, Q.; Wen, Z.; Lu, N.; Wong, K.C.; Chen, J. A novel artificial bee colony algorithm with an adaptive population size for numerical function optimization. Inf. Sci. 2017, 414, 53–67.

17. Wang, H.; Wu, Z.; Rahnamayan, S.; Sun, H.; Liu, Y.; Pan, J.S. Multi-strategy ensemble artificial bee colony algorithm. Inf. Sci. 2014, 279, 587–603.

18. Gao, W.; Liu, S.; Huang, L. A novel artificial bee colony algorithm based on modified search equation and orthogonal learning. IEEE Trans. Cybern. 2013, 43, 1011–1024.

19. Wang, H.; Wang, W.; Zhou, X.; Zhao, J.; Wang, Y.; Xiao, S.; Xu, M. Artificial bee colony algorithm based on knowledge fusion. Complex Intell. Syst. 2021, 7, 1139–1152.

20. Lu, R.; Hu, H.; Xi, M.; Gao, H.; Pun, C.M. An improved artificial bee colony algorithm with fast strategy, and its application. Comput. Electr. Eng. 2019, 78, 79–88.

21. Gao, W.; Liu, S. A modified artificial bee colony algorithm. Comput. Oper. Res. 2012, 39, 687–697.

22. Guo, P.; Cheng, W.; Liang, J. Global artificial bee colony search algorithm for numerical function optimization. In Proceedings of the 2011 Seventh International Conference on Natural Computation, Shanghai, China, 26–28 July 2011; Volume 3, pp. 1280–1283.

23. Yu, W.J.; Zhan, Z.H.; Zhang, J. Artificial bee colony algorithm with an adaptive greedy position update strategy. Soft Comput. 2018, 22, 437–451.

24. Jadon, S.S.; Tiwari, R.; Sharma, H.; Bansal, J.C. Hybrid artificial bee colony algorithm with differential evolution. Appl. Soft Comput. 2017, 58, 11–24.

25. Alqattan, Z.N.; Abdullah, R. A hybrid artificial bee colony algorithm for numerical function optimization. Int. J. Mod. Phys. C 2015, 26, 1550109.

26. Chen, S.M.; Sarosh, A.; Dong, Y.F. Simulated annealing based artificial bee colony algorithm for global numerical optimization. Appl. Math. Comput. 2012, 219, 3575–3589.

27. Gao, W.f.; Huang, L.l.; Liu, S.y.; Chan, F.T.; Dai, C.; Shan, X. Artificial bee colony algorithm with multiple search strategies. Appl. Math. Comput. 2015, 271, 269–287.

28. Song, X.; Zhao, M.; Xing, S. A multi-strategy fusion artificial bee colony algorithm with small population. Expert Syst. Appl. 2020, 142, 112921.

29. Chen, X.; Tianfield, H.; Li, K. Self-adaptive differential artificial bee colony algorithm for global optimization problems. Swarm Evol. Comput. 2019, 45, 70–91.

30. Zhou, X.; Lu, J.; Huang, J.; Zhong, M.; Wang, M. Enhancing artificial bee colony algorithm with multi-elite guidance. Inf. Sci. 2021, 543, 242–258.

31. Zhu, G.; Kwong, S. Gbest-guided artificial bee colony algorithm for numerical function optimization. Appl. Math. Comput. 2010, 217, 3166–3173.

32. Mühlenbein, H.; Schomisch, M.; Born, J. The parallel genetic algorithm as function optimizer. Parallel Comput. 1991, 17, 619–632.

33. Xiao, S.; Wang, H.; Wang, W.; Huang, Z.; Zhou, X.; Xu, M. Artificial bee colony algorithm based on adaptive neighborhood search and Gaussian perturbation. Appl. Soft Comput. 2021, 100, 106955.

34. Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

35. Wang, B.C.; Li, H.X.; Li, J.P.; Wang, Y. Composite differential evolution for constrained evolutionary optimization. IEEE Trans. Syst. Man, Cybern. Syst. 2018, 49, 1482–1495.

36. Wang, Y.; Li, J.P.; Xue, X.; Wang, B.C. Utilizing the correlation between constraints and objective function for constrained evolutionary optimization. IEEE Trans. Evol. Comput. 2019, 24, 29–43.

37. Wang, Y.; Wang, B.C.; Li, H.X.; Yen, G.G. Incorporating objective function information into the feasibility rule for constrained evolutionary optimization. IEEE Trans. Cybern. 2015, 46, 2938–2952.

38. Fan, Q.; Jin, Y.; Wang, W.; Yan, X. A performance-driven multi-algorithm selection strategy for energy consumption optimization of sea-rail intermodal transportation. Swarm Evol. Comput. 2019, 44, 1–17.

39. Das, S.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for CEC 2011 Competition on Testing Evolutionary Algorithms on Real World Optimization Problems; Jadavpur University, Nanyang Technological University: Kolkata, India, 2010; pp. 341–359.

40. KhalafAnsar, H.M.; Keighobadi, J. Adaptive Inverse Deep Reinforcement Lyapunov learning control for a floating wind turbine. Sci. Iran. 2023, in press.

41. Keighobadi, J.; KhalafAnsar, H.M.; Naseradinmousavi, P. Adaptive neural dynamic surface control for uniform energy exploitation of floating wind turbine. Appl. Energy 2022, 316, 119132.

42. Keighobadi, J.; Nourmohammadi, H.; Rafatania, S. Design and Implementation of GA Filter Algorithm for Baro-inertial Altitude Error Compensation. In Proceedings of the Conference: ICLTET-2018, Istanbul, Turkey, 21–23 March 2018; pp. 21–23.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).