Abstract

Music serves as a powerful tool for emotion regulation, particularly in adolescents, who experience emotional challenges. Understanding the determinants shaping their perception of musical emotions may help optimize music-based interventions, especially for those with ADHD. This online study examined how familiarity, musical affinity, and ADHD diagnosis influence adolescents’ judgments of musical excerpts in terms of arousal and emotional valence. A total of 138 adolescents (38 ADHD, 100 controls) rated 55 excerpts for arousal, valence, and familiarity using 10-point Likert scales. Musical affinity was conceptualized as a multidimensional construct encompassing musical experience, listening diversity, and receptivity to musical emotions. A cluster analysis identified two affinity profiles (low and high), and ANCOVAs tested the effects of affinity, ADHD, and familiarity on arousal and valence judgments. Familiarity strongly affected both arousal and valence. High-affinity adolescents judged excerpts as more pleasant and familiar, though arousal ratings did not differ between affinity profiles. Familiarity effects on emotional valence were stronger among lower-affinity adolescents. ADHD status did not significantly affect ratings. Overall, the study underscores music’s potential for emotion regulation and its relevance in educational, clinical, and self-care contexts.

Keywords: musical emotions; familiarity; musical affinity; arousal; emotional valence; ADHD; adolescents

1. Introduction

Adolescents place music at the core of their daily lives, making them the most frequent music consumers of any age group (; ; ; ). Beyond its ubiquity, music constitutes a notable tool for emotional regulation and well-being in everyday life (; ; ; ). For adolescents, music may therefore provide vital assistance as they navigate emotional challenges typical of this developmental period, such as identity development and peer acceptance (). This role may assume greater importance for adolescents with attention deficit/hyperactivity disorder (ADHD), who often experience emotion dysregulation. It has been demonstrated that this clinical population often encounters difficulties in regulating their emotional state to adapt to environmental demands or to reach their goals (; ). In clinical contexts, music is increasingly used as a therapeutic tool (see for a review), especially for youth with ADHD, with recent reviews and meta-analyses underscoring potential benefits for mood improvement and emotion regulation (; ; ). Understanding how adolescents perceive emotions in music is therefore key to clarifying the potential role of music as a regulatory tool, especially in adolescents with ADHD. As such, the goal of this study was to improve knowledge regarding the ways in which adolescents with and without ADHD perceive musical emotions. To situate the present research, the next sections successively address four central themes: (1) dimensional approaches to musical emotions, (2) the role of tempo and familiarity in musical emotion perception, (3) individual musical affinity variability, and (4) musical emotion perception in ADHD.

1.1. Dimensional Approach to Musical Emotions

Over the past few decades, researchers have investigated emotional responses to music using two prominent approaches: categorical and dimensional. The categorical approach, based on general theories of basic emotions (), suggests that listeners may recognize and experience different emotions based on musical cues, such as happiness, sadness, or anger (; ). In the context of musical emotions specifically, however, the categorical approach is limited. Indeed, emotions elicited by music are often complex and hard to confine to discrete categories (e.g., “sad” music eliciting positive feelings; ) and are therefore better conceptualized as spectrums (; ; ).

As a result, a complementary approach has emerged in research on musical emotions, prioritizing a dimensional framework to allow for nuances and to better reflect the richness and ambiguity of musical experience (; ). Two dimensions of emotions are particularly relevant: arousal (relaxing-stimulating), defined as a physiological or subjective experience of energy or activity, and emotional valence, defined as the pleasant-unpleasant continuum (; ; ). These dimensions are widely used in musical emotion research and provide a parsimonious way to assess adolescents’ emotional judgments. In this context, both structural features of music (e.g., tempo) and experiential factors (e.g., familiarity) have been shown to modulate perceived arousal and emotional valence, offering complementary insight into how listeners interpret musical emotions.

1.2. The Role of Tempo and Familiarity in Musical Emotion Perception

Among musical parameters, tempo (i.e., the pace of a musical piece; ) has been identified as a key determinant of arousal in adult populations. Music with a faster tempo is typically perceived as more stimulating and increases physiological arousal, whereas slower tempi are perceived as less stimulating (; ; ; ). Studies in adults have also demonstrated that tempo interacts with emotional valence. Faster tempi are generally associated with higher perceived emotional valence (i.e., more pleasant), while slower tempi are often linked to a less pleasant evaluation of the music (; ; ; ; ; ; ).

Familiarity has been consistently identified as another strong predictor of arousal and emotional valence in adults (; ) and, to a lesser extent, in youth samples (). Across these age groups, familiar music elicits greater arousal and pleasure than unfamiliar music. This pattern is thought to arise from mechanisms of predictability and anticipation. Indeed, with repeated exposure to musical pieces, listeners learn the structural features of a piece and form expectations about its unfolding (; ; ). When these expectations are confirmed, particularly during anticipated and rewarding passages (e.g., a favorite moment of a song), dopaminergic reward circuits are engaged, amplifying both arousal and emotional valence (; ; ). In adults, familiar and anticipated music engages reward-related brain regions such as the nucleus accumbens and orbitofrontal cortex (; ). Although this neural mechanism has not yet been directly confirmed in adolescents, preadolescent fMRI and behavioral findings suggest that similar reward mechanisms may already be functional during this developmental stage (; ; ).

Although familiarity generally enhances musical pleasure and arousal, its influence is not unidirectional. Familiarity appears to act as an emotional amplifier, intensifying listeners’ affective responses regardless of their perceived emotional valence, making pleasant excerpts feel more pleasant, but also unpleasant ones more unpleasant (). This suggests that familiarity strengthens the emotional impact of music on individuals rather than determining its pleasantness. At the same time, studies have shown that unfamiliar music can also evoke powerful emotional reactions (), indicating that emotional engagement does not depend solely on prior exposure. Therefore, these findings suggest that familiarity primarily amplifies the intensity of emotional responses rather than the direction of emotional valence, and that its effects likely vary across listeners according to individual traits such as musical affinity (; ; ).

1.3. Musical Affinity Inter-Individual Variability

In adolescence, music plays a key role in emotional expression, regulation, and identity formation (; ; ; ). Although musical training is often used as an index of musical experience and affinity to explain inter-individual variability, it only captures a narrow part of adolescents’ engagement with music. Recent work has therefore proposed moving beyond formal training, and advocates for a multidimensional view of musical engagement (; ; ). These frameworks highlight that musical engagement extends beyond instrumental practice to encompass diverse components, such as perceptual sensitivity and emotional responsiveness. In line with this perspective, the present study operationalizes musical affinity through three complementary components of this engagement: (1) musical experience, reflecting exposure and practice; (2) listening diversity, indexing the breadth of musical genres adolescents engage with; and (3) receptivity to musical emotions, capturing the affective sensitivity experienced when listening to music.

1.3.1. Musical Experience

Traditionally, musical training has been regarded as the primary determinant of music-related abilities (). Such training can enhance perceptual sensitivity to musical structure and emotional expression (; ; ; ). However, growing evidence suggests that untrained individuals may still display strong emotional sensitivity due to environmental and individual differences (; ; ; ). Importantly, adolescents generally have fewer years of accumulated training than adults, simply because of their younger age. Yet, music still plays a central role in their lives (; ). Thus, measuring musical experience can provide useful insights but should not be considered the sole source of musical abilities, including perception of emotions in music.

1.3.2. Listening Diversity

Listening to a broad range of musical genres increases the amount of structural and affective cues to which listeners are accustomed. Such diversity has been linked to stronger preferences and more heterogeneous emotional responses (; ). In adolescents, listening habits have also been associated with emotional well-being () and with coping styles during stress, suggesting that how youth listen (in addition to what they listen to) influences their emotional outcomes (). Recent work suggests that listening to recommendations of less familiar genres can increase listeners’ openness and curiosity, while also helping to deconstruct pre-existing stereotypes and implicit associations (). By expanding the range of musical structures and emotions that feel familiar, listening diversity fosters greater emotional sensitivity and flexibility in music perception, shaping both how individuals respond to music and what kinds of music they prefer.

This relationship between listening diversity, familiarity, and musical preferences is particularly relevant during adolescence, a period when music plays a crucial role in identity formation and emotional expression (). At this developmental stage, preferences are strongly oriented toward popular styles such as pop, hip-hop/rap, electronic dance music (EDM), and contemporary R&B (; ; ; ). Therefore, the diversity and distinctiveness of preferred and familiar genres, particularly when preferences extend beyond those typically shared among adolescents, may provide a meaningful indicator of individual differences in musical engagement (; ; ).

1.3.3. Receptivity to Musical Emotions

Individuals differ in their sensitivity to the affective content of music. Some are more prone to emotional absorption, defined as a disposition to become deeply immersed in music and to experience strong affective reactions such as chills, tears or intense pleasure (; ). Receptivity reflects a combination of dispositional traits (e.g., openness to experience) and situational engagement (e.g., attentive or background listening), which influence how listeners experience music (; ). Greater receptivity has, in turn, been linked to stronger perceived and felt emotions as individuals with higher openness to experience or greater attentiveness to the music tend to report more intense emotional experiences (; ; ).

Together, these three components capture complementary aspects of individual engagement with music and how one responds to it, referred to as musical affinity. Musical affinity encapsulates an individual’s inclination to engage with music through training (e.g., music classes, self-taught), diversity of listening (e.g., listening to multiple musical genres), and receptivity to musical emotion (e.g., propensity to experience emotions during music listening). This conceptualization is especially relevant to adolescence, when everyday musical engagement is both pervasive and emotionally salient (; ).

1.4. ADHD and Perception of Musical Emotions

Beyond musical affinity, ADHD constitutes an important individual characteristic that may modulate emotional responses to music (; ). ADHD is characterized by inattention, hyperactivity, and impulsivity () and often presents with multiple comorbidities, such as psychological or other neurodevelopmental disorders (; ; ; ). Importantly, it is also frequently accompanied by emotion dysregulation, defined as a reduced capacity to effectively modulate emotional states (e.g., rapid mood shifts; ; ; ). Several authors have argued that these emotional difficulties should occupy a more central place in the conceptualization and diagnosis of ADHD (; ; ; ). Given that access to psychological care for youth with ADHD is limited worldwide, identifying accessible strategies for emotional support is crucial (; ; ; ). In this context, engaging with music represents a promising and accessible avenue for supporting the emotional well-being of adolescents with ADHD. Indeed, adolescents with ADHD often exhibit heightened emotional reactivity and may also find in music a resource for emotional and attentional regulation (; ; ). However, a better understanding of how ADHD influences the perception of musical emotions is essential before music-based interventions can be effectively used in this population. Difficulties in emotional regulation can influence listeners’ experience and perception of musical emotions (). Recent research suggests that individuals with poorer emotion-regulation abilities engage with music differently and experience altered emotional responses (; ). Moreover, music-based interventions targeting emotional regulation demonstrate measurable improvements in emotion-regulation skills and affective well-being ().
In parallel, evidence indicates that individuals with ADHD often present timing and rhythm-related impairments, such as difficulties synchronizing to a beat or maintaining a steady tempo (; ; ), which could affect their sensitivity to temporal or arousal-related cues in music, even though improvisational and expressive abilities may remain intact (). Thus, ADHD presents a unique dual profile of emotional dysregulation and atypical temporal processing, making this population particularly relevant for investigating how musical emotions, specifically perceived arousal and emotional valence, are processed and experienced.

Although few studies have examined musical emotion perception specifically in adolescents with ADHD, related findings provide useful insights. Recent systematic reviews converge in showing that music can play multiple roles in ADHD, spanning music-based therapy, music listening, and performance contexts (; ). Furthermore, both active and passive engagement with music appear beneficial for emotional functioning. Active participation (e.g., singing, instrument playing) facilitates emotional expression and self-regulation by engaging rhythmic and motor systems linked to executive control and dopaminergic reward networks (; ; ). These activities help externalize affective states, promote social connectedness, and reduce aggression or impulsivity. In contrast, passive listening, particularly to calming or familiar music, can modulate arousal and mood by providing structure and predictability, decreasing physiological stress markers (e.g., heart rate variability, cortisol levels), and promoting relaxation (; ; ). These findings support the dual role of music as both an expressive and regulatory tool for individuals with ADHD.

Moreover, music-based interventions have shown promising results in reducing core ADHD symptoms, such as hyperactivity and impulsivity, as well as emotion dysregulation by reinforcing attentional focus, promoting rhythmic training, and potentially engaging dopaminergic reward pathways involved in motivation and affective control (; ). However, methodological heterogeneity across studies (e.g., small sample sizes, varied intervention protocols, inconsistent outcome measures) currently limits firm conclusions ().

Taken together, these findings suggest that music may serve both compensatory (by supporting attentional and emotional control) and regulatory (by modulating affective states) functions in ADHD, even in the presence of perceptual challenges (i.e., timing and rhythm difficulties). Nevertheless, the existing literature has largely focused on behavioral and performance outcomes, while the emotional perception of music in ADHD remains insufficiently understood and warrants further investigation. In particular, adolescents’ subjective judgments of musical emotion (i.e., how they perceive arousal and emotional valence in music) remain largely unexplored (), despite their relevance for understanding how music might regulate emotions in this clinical population.

1.5. Present Study

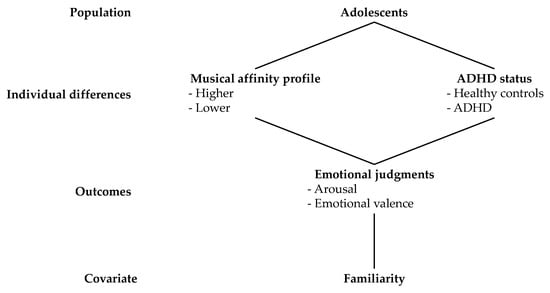

The present online study aimed to better understand the perception of musical emotions in adolescents. More specifically, the study investigated how familiarity, musical affinity, and ADHD diagnosis jointly shape adolescents’ judgments of arousal and emotional valence elicited by music. To this end, adolescents rated 55 musical excerpts spanning diverse genres chosen to reflect adolescents’ musical preferences (e.g., pop, hip-hop/rap, EDM) on two dimensions: arousal and emotional valence. Figure 1 presents the research design of the study. Building on prior evidence that familiarity strongly predicts emotional responses to music (; ), we expected higher familiarity to be associated with greater arousal and with ratings reflecting higher pleasantness on the emotional valence dimension. Based on multidimensional accounts of musical engagement (; ), we expected that higher musical affinity, characterized by greater musical experience, listening diversity, and receptivity to musical emotions, would be associated with more polarized emotional ratings. Finally, given the documented impairments of adolescents with ADHD (i.e., timing, rhythm perception, emotion dysregulation), we hypothesized that they would differ from controls in their judgments of arousal and emotional valence, although the literature does not allow for predictions regarding the direction of the effects.

Figure 1. Research design of the present study.

2. Materials and Methods

2.1. Participants

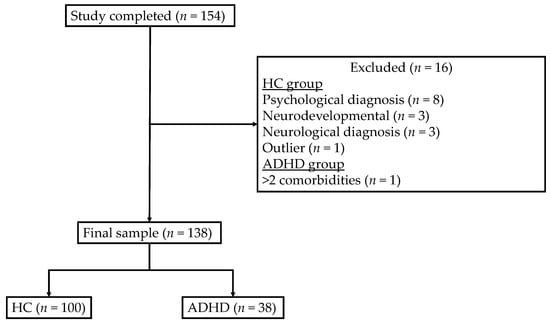

Adolescent participants (12–18 years old) were recruited through social media and recruitment posters distributed in schools and nonprofit organizations in Quebec, Canada. To be included in the study, participants were required to have French as their first or second language and to report no auditory or neurological disorder (see Figure 2). Participants were separated into two groups based on their self-reported ADHD diagnosis received from a qualified professional (e.g., physician, psychologist, neuropsychologist): ADHD and healthy controls (HCs). In the ADHD group, a maximum of two comorbid psychological or neurodevelopmental diagnoses (e.g., generalized anxiety disorder, learning disorder) was allowed. This criterion was established to minimize variability in the data while allowing for generalization of the findings, as ADHD frequently presents with multiple comorbidities (; ; ; ). For HC participants, exclusion criteria included the presence of psychological and neurodevelopmental disorders. The final sample included 138 adolescents aged 12 to 18 years (M = 14.34, SD = 1.45). Participants had an average of 9 years of education (SD = 1.18). Overall, 53.6% identified as girls, 39.9% as boys, and 6.5% as another gender identity. Because the latter proportion was too small for meaningful comparisons, these participants were excluded from analyses involving gender. Most participants reported being North American (76.6%), and the predominant first language was French (84.8%). Among the 38 ADHD participants, 15 (39.5%) reported comorbid anxiety and/or depression, and 6 (15.8%) had a co-occurring learning disorder.

Figure 2. Flowchart of Sample Selection.

All adolescents, and the parents of participants under 14 years old, gave their consent to enroll in the study, in accordance with the Quebec Civil Code provisions on the participation of minors under 14 years old in minimal-risk research. The study was approved by the Comité d’Éthique à la recherche en Éducation et en Psychologie of the University of Montreal.

2.2. Materials

2.2.1. Sociodemographic and Health Information

Sociodemographic characteristics, such as age, gender (boy, girl, or other), first and second language, ethnic group, and education, were collected in the online form. Health information, such as prior diagnoses of auditory, psychological, neurodevelopmental, and neurological problems, was also collected in the online form to verify that participants met the inclusion and exclusion criteria.

2.2.2. Stimuli

Fifty-five instrumental musical pieces were selected from popular music streaming platforms (e.g., Spotify, iTunes, YouTube; see Appendix A for a complete description of musical pieces). Musical pieces were selected to ensure a variety of musical genres adapted to the preferences of adolescents (e.g., electronic dance music, hip-hop, pop; ; ; ; ). For each musical piece, a 15-second excerpt was selected. All musical excerpts were peak-normalized, and logarithmic fade-ins and fade-outs of 500 ms were applied using Reaper software v6.78 (). All the selected excerpts were composed in a major mode and ranged from 40 to 150 beats per minute (BPM; M = 96.55, SD = 27.81). The tempo of each musical piece was first estimated by a trained musician through auditory analysis and subsequently cross-validated with automated values provided by Tunebat (https://tunebat.com/), a Spotify API-based database that provides key and BPM metadata for commercially available recordings. This two-step procedure was intended to ensure the reliability of tempo values, particularly for excerpts with less regular rhythmic structures (e.g., solo piano, ambient, orchestral pieces).

2.2.3. Arousal, Emotional Valence, and Familiarity Scales

Participants were asked to rate how they perceived each musical excerpt for arousal, emotional valence, and familiarity using 10-point Likert scales (from 1 to 10; 1 = Relaxing, 10 = Stimulating; 1 = Unpleasant, 10 = Pleasant; and 1 = Unfamiliar, 10 = Familiar).

2.2.4. Musical Affinity

Musical affinity was assessed using three subscales: (A) Musical experience (e.g., years of formal and informal training), (B) Music listening diversity, and (C) Receptivity to musical emotions (e.g., arousal and mood regulation). The internal consistency and descriptive statistics of the subscales are presented in Table 1. See also Appendix B for detailed psychometric properties.

Table 1. Descriptive Statistics and Internal Consistency of the Musical Affinity Subscales and Composite Score.

- A. Musical Experience

The musical experience subscale was assessed using years of formal and informal musical training as well as the number of instruments played in those two contexts. Response formats were adapted from the Goldsmiths Musical Sophistication Index (Gold-MSI; ) to better reflect adolescent experiences (e.g., the highest response option was lowered from 10+ years to 5+ years). Four items were included: (1) years of formal training, (2) years of informal training (e.g., self-taught, video-based), (3) the number of instruments (including singing) played formally, and (4) the number of instruments played informally. Training duration was rated on a 6-point scale (from 0 = 0 or less than 1 year, to 5 = 5 or more years), and the instrument count used a similar scale (0 = none, 5 = 5 or more).

A composite score was computed by averaging the four items, with higher scores indicating greater and more diverse musical experience. The subscale demonstrated good internal consistency (Cronbach’s α = 0.803), and corrected item-total correlations ranged from 0.719 to 0.792 (p < 0.001), suggesting a balanced contribution across indicators. All inter-item correlations were significant (r = 0.243 to 0.770, p < 0.010), further supporting internal coherence (complete statistical indexes can be found in Appendix B).
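To make the composite score and the reliability coefficient concrete, the following is a minimal sketch of how a four-item mean and Cronbach’s α could be computed. The item scores are invented for illustration and are not the study data; the function and variable names are hypothetical.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items.

    `items` holds one list of scores per item, with participants
    in the same order in every list.
    """
    k = len(items)
    n = len(items[0])
    # Total score of each participant across the k items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    sum_item_var = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - sum_item_var / variance(totals))


# Hypothetical 0-5 responses from five participants (not study data).
formal_years = [0, 1, 3, 5, 2]
informal_years = [1, 1, 2, 4, 2]
formal_instruments = [0, 1, 2, 3, 1]
informal_instruments = [1, 0, 2, 3, 1]
items = [formal_years, informal_years, formal_instruments, informal_instruments]

# Composite score: mean of the four items for each participant.
composite = [sum(scores) / len(scores) for scores in zip(*items)]
alpha = cronbach_alpha(items)
```

With these toy values the first participant’s composite is 0.5; α depends entirely on the data and will differ from the coefficient reported above.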

- B. Music Listening Diversity

Participants reported how frequently they listened to different musical genres (e.g., “How often do you listen to Pop?”) on a 5-point Likert scale (from 1 = Never to 5 = Very often). To obtain a general index of music listening diversity, a two-step procedure was used. First, to isolate musical diversity (i.e., the number of genres) from listening frequency (i.e., how often each genre is listened to), responses were dichotomized: genres rated as 2 (Rarely) or lower were coded as 0 (Not listened to), and genres rated as 3 (Occasionally) or higher were coded as 1 (Listened to). The resulting music listening diversity index, ranging from 0 to 21, reflected the number of genres with which participants reported meaningful engagement.

Using a coefficient suited for dichotomous items (Kuder-Richardson Formula-20), the index demonstrated good internal consistency (KR-20 = 0.801). Corrected item-total correlations ranged from 0.114 to 0.570. As expected in a formative measure, some genres showed lower correlations due to high prevalence (e.g., pop = 83.3%, hip hop/rap = 81.9%) or rarity (e.g., spiritual/religious music = 12.3%; traditional Quebec music = 27.5%; classical = 29.7%). These items were retained to preserve the conceptual scope of the index, as they reflect unique and meaningful aspects of musical taste, despite their lower statistical contribution.
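The dichotomization rule and the KR-20 coefficient described above can be sketched as follows. This is an illustration under stated assumptions: the ratings are invented, only four genres are shown instead of 21, and population variance is used for the total score, which is the usual convention for KR-20 but is not stated in the text.

```python
from statistics import pvariance


def dichotomize(rating, threshold=3):
    """Code a 1-5 frequency rating as 1 (listened to) if >= threshold, else 0."""
    return 1 if rating >= threshold else 0


def diversity_index(ratings):
    """Number of genres a participant listens to at least occasionally."""
    return sum(dichotomize(r) for r in ratings)


def kr20(binary_rows):
    """Kuder-Richardson Formula 20; one list of 0/1 codes per participant."""
    k = len(binary_rows[0])
    n = len(binary_rows)
    totals = [sum(row) for row in binary_rows]
    # Sum of p * (1 - p) over items, where p is the proportion coded 1.
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in binary_rows) / n
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / pvariance(totals))


# Hypothetical ratings: five participants x four genres (not study data).
ratings = [
    [5, 4, 1, 2],
    [3, 3, 3, 1],
    [1, 2, 1, 1],
    [5, 5, 4, 3],
    [2, 1, 1, 1],
]
binary = [[dichotomize(r) for r in row] for row in ratings]
indices = [diversity_index(row) for row in ratings]  # [2, 3, 0, 4, 0]
reliability = kr20(binary)  # 0.875 for this toy matrix
```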

Second, to create five ordinal levels of musical diversity, participants were ranked based on quintiles derived from the observed distribution of the raw diversity index (range: 0–21). This data-driven approach ensured balanced group sizes, given the asymmetric distribution of the data. The resulting categories ranged from 1 (very low diversity) to 5 (very high diversity).
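One way to derive five ordinal levels from the raw index is shown below. It is a sketch only: the cut-point method (the default “exclusive” method of Python’s `statistics.quantiles`) and the handling of ties at a cut point are assumptions, and statistical packages such as SPSS may bin tied values differently.

```python
from bisect import bisect_left
from statistics import quantiles


def quintile_levels(scores):
    """Map raw diversity scores to levels 1 (very low) to 5 (very high)."""
    cuts = quantiles(scores, n=5)  # four cut points from the observed data
    # A score equal to a cut point is placed in the lower level.
    return [bisect_left(cuts, s) + 1 for s in scores]


# Hypothetical raw indices covering the full 0-21 range (not study data).
raw = [0, 2, 3, 5, 6, 7, 8, 9, 10, 11, 12, 14, 15, 17, 21]
levels = quintile_levels(raw)
```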

- C. Receptivity to Musical Emotions

This subscale was inspired by a validated questionnaire by () to measure participants’ receptivity to musical emotions. The subscale initially comprised 10 items (see Appendix B); one item (“Music makes me feel nostalgic”) was excluded from analysis due to its low item-total Pearson correlation (r = 0.297) and weak convergence with the remaining items (r = 0.107 to 0.333). Participants rated each item on a 5-point Likert scale (1 = Never, 5 = Very often), and a mean score across the remaining nine items (e.g., “Music comforts me”) was computed. Higher scores indicate greater emotional receptivity to music. The subscale demonstrated good internal consistency (Cronbach’s α = 0.868), and all retained items showed moderate to strong correlations with the total score (ranging from 0.587 to 0.777), supporting construct validity.

- D. Total Musical Affinity Score

A total score was computed by summing the values of the three subscales. Receptivity to musical emotions and music listening diversity were both scaled from 1 to 5, while musical experience ranged from 0 to 5. Although the subscales were moderately to strongly intercorrelated (r = 0.48 to 0.84), internal consistency for the total score was limited (Cronbach’s α = 0.502), reflecting the conceptual heterogeneity of the subscales. Given these psychometric considerations, the total musical affinity score was retained for descriptive purposes only, rather than for classifying participants by musical affinity.

All subscale distributions showed acceptable levels of skewness and kurtosis (see Table 1), supporting their use as input variables in a cluster analysis to determine meaningful profiles of musical affinity.

2.3. Procedure

The online study was constructed using LimeSurvey v3.28.52 (, Hamburg, Germany) and the International Laboratory for Brain, Music, and Sound Research Online Testing Platform (BRAMS-OTP, https://brams.org/category/online-testing-platform/, accessed on 10 November 2025), and was administered in French. After giving their consent to enroll in the study, participants first completed the general information form and musical affinity questionnaire. Then, they rated 55 musical excerpts on three dimensions (arousal, emotional valence, familiarity). Before the beginning of this part, participants were asked to wear headphones to reduce environmental distractions, to complete the study in a calm environment, and to adjust the volume intensity to a comfortable level. To ensure participants’ comprehension of the task requirements, two practice trials were administered. Participants could replay each musical excerpt as many times as needed. Excerpts were presented in a randomized order for each participant.

2.4. Data Analysis

2.4.1. Musical Affinity Classification

To identify participant profiles based on musical affinity, the three subscales (musical experience, music listening diversity, and receptivity to musical emotions) were entered into a two-step cluster analysis. This approach was selected to empirically distinguish participants with lower and higher musical affinity while accounting for the multidimensional nature of the construct.

While the automatic procedure of the analysis proposed three clusters (silhouette measure ≈ 0.40), a two-cluster solution was retained, as it provided the highest quality (fair-to-good; silhouette measure ≈ 0.50) and offered clearer interpretability. To verify the stability of the classification, a k-means solution was also computed, showing strong convergence (χ2(1) = 96.11, p < 0.001) and excellent agreement (κ = 0.82, p < 0.001) between methods. The resulting profiles were labeled Higher Musical Affinity (HMA) and Lower Musical Affinity (LMA).
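Agreement between two classifications of the same participants, as reported with κ above, can be illustrated with a small Cohen’s kappa sketch. The labels are invented, and the code assumes the cluster labels from both methods have already been aligned (cluster numbering is arbitrary across algorithms).

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two label sequences over the same participants."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    categories = set(count_a) | set(count_b)
    # Agreement expected by chance from the marginal proportions.
    expected = sum(count_a[c] * count_b[c] for c in categories) / n ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical assignments for ten participants (not study data).
two_step = ["LMA"] * 5 + ["HMA"] * 5
k_means = ["LMA"] * 4 + ["HMA"] * 6  # one participant classified differently
kappa = cohens_kappa(two_step, k_means)  # 0.8 for this toy example
```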

2.4.2. Preliminary Analyses

Descriptive statistics and two-way ANOVAs (Musical affinity profile × Diagnostic group) were conducted to compare subgroups on age, years of education, and musical affinity scores (each subscale and total composite score); the gender distribution across subgroups was compared using a chi-square test. These analyses served to verify group equivalence and to ensure that the musical affinity classification was independent of diagnostic status.

2.4.3. Main Analyses

Two separate univariate analyses of covariance (ANCOVAs) were conducted to examine the effects of musical affinity profile (lower vs. higher) and diagnostic group (ADHD vs. HC) on standardized ratings of arousal and emotional valence (z-scores). In each model, standardized familiarity ratings (z-scores) were entered as a covariate to control for the well-established influence of familiarity on emotional responses to music. The assumptions of the ANCOVA were tested (Pearson correlations, Levene’s test, homogeneity of regression slopes) prior to analysis to ensure their applicability. Additional linear regressions were conducted when necessary to further investigate significant interaction effects.
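The standardization step can be sketched as below. The text does not specify the unit of standardization, so this example assumes that each participant’s mean rating across excerpts is converted to a z-score relative to the whole sample; the values are invented.

```python
from statistics import mean, stdev


def z_scores(values):
    """Standardize values to mean 0 and (sample) standard deviation 1."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


# Hypothetical participant-level mean familiarity ratings (not study data).
familiarity_means = [3.2, 5.1, 4.4, 6.8, 2.9, 5.6]
familiarity_z = z_scores(familiarity_means)
```

Standardizing both the outcome and the covariate places them on a common scale, so the covariate’s slope in the ANCOVA can be read in standard-deviation units.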

All analyses were conducted using SPSS Statistics Version 28.0.1.0 (IBM Corp., Armonk, NY, USA), with a two-tailed significance level of α = 0.05 (or adjusted α for multiple comparisons). Post hoc analyses were conducted when significant effects were observed, with Bonferroni-adjusted p-values reported and interpreted against α = 0.05.
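Reporting Bonferroni-adjusted p-values against α = 0.05 amounts to multiplying each raw p-value by the number of comparisons and capping the result at 1. A minimal sketch with invented p-values:

```python
def bonferroni_adjust(p_values):
    """Return Bonferroni-adjusted p-values, capped at 1.0."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw p-values from three post hoc comparisons.
raw_p = [0.01, 0.04, 0.50]
adjusted = bonferroni_adjust(raw_p)
significant = [p < 0.05 for p in adjusted]  # only the first comparison survives
```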

3. Results

3.1. Contrasting ADHD and Healthy Controls Across Musical Affinity Profiles

3.1.1. Sample Characteristics

To examine the effects of musical affinity profile (LMA vs. HMA) and diagnostic group (ADHD vs. HC), two-way ANOVAs were conducted on sociodemographic variables and musical affinity scales (Table 2). No significant effects or interactions emerged for age or education, indicating that the four subgroups were comparable for these characteristics. However, the gender distribution differed across subgroups (χ2(3) = 17.21, p < 0.001, V = 0.37), with girls being overrepresented in the ADHD-HMA group and underrepresented in the HC-LMA group.
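As a consistency check, Cramér’s V can be recovered from the chi-square statistic. The sketch below assumes a 2 × 4 table (girls and boys by four subgroups) and N = 129, that is, the 138 participants minus the 6.5% excluded from gender analyses; both values are inferred from the text rather than stated for this test.

```python
from math import sqrt


def cramers_v(chi2, n, n_rows, n_cols):
    """Cramér's V from a chi-square statistic and the table dimensions."""
    return sqrt(chi2 / (n * (min(n_rows, n_cols) - 1)))


# chi2 is the reported value; n and the table shape are assumptions.
v = cramers_v(chi2=17.21, n=129, n_rows=2, n_cols=4)
```

Under these assumptions the result rounds to 0.37, which agrees with the reported effect size.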

Table 2.

Descriptive Statistics and Two-Way ANOVA Results for Demographic and Musical Affinity Variables by Musical Affinity Profile and Diagnostic Group.

3.1.2. Musical Affinity Profiles

As expected, participants in the HMA profile scored significantly higher than those in the LMA profile on all three musical affinity subscales and on the composite score (all ps < 0.001; see Table 2). No significant main effects of diagnosis or interactions with diagnostic group were observed on any musical affinity measure, suggesting that ADHD status was not associated with musical experience, listening diversity, or receptivity to musical emotions.

In summary, the two-step clustering yielded two robust and interpretable musical affinity profiles that were balanced across diagnostic groups and comparable in age and education, though the gender distribution varied. These profiles served as the basis for the subsequent analyses on emotional judgments (arousal and emotional valence).

3.2. Impact of Individual Differences on Arousal and Emotional Valence

3.2.1. Preliminary Associations Between Arousal, Emotional Valence, Familiarity, and Individual Differences

Given the unequal gender distribution across diagnostic groups, musical affinity profiles, and their crossed subgroups, preliminary analyses were conducted to assess the potential influence of gender on the main analyses. Specifically, a three-factor ANCOVA was performed with diagnostic group, musical affinity profile, and gender as between-subject factors to verify whether gender acted as a confounding variable. No significant interactions involving gender were observed (all ps > 0.05, all ηp² < 0.012). Consequently, gender was not retained as a factor in the main analyses.

Pearson correlations indicated that familiarity was positively associated with arousal ratings (r = 0.27, p = 0.001), emotional valence ratings (r = 0.49, p < 0.001), and musical affinity profile (r = 0.30, p < 0.001), supporting its inclusion as a covariate. Familiarity was not significantly associated with diagnostic group (p = 0.652).

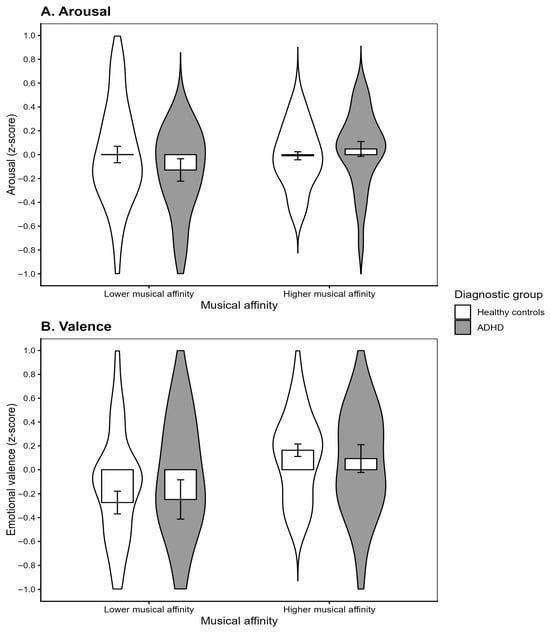

3.2.2. Arousal

A two-way ANCOVA with diagnostic group and musical affinity profile as between-subject factors, and familiarity entered as a covariate (see Table 3), revealed a significant main effect of familiarity on arousal ratings (F(1, 133) = 9.96, p = 0.002, ηp² = 0.070), indicating that more familiar excerpts were generally perceived as more arousing. No main effects of diagnostic group (p = 0.721) or musical affinity (p = 0.764) were found, and their interaction did not reach significance (p > 0.05; see Figure 3A). Thus, arousal ratings were primarily driven by familiarity, with no evidence for moderating effects of diagnostic group or musical affinity.

Table 3.

Univariate ANCOVAs for arousal and emotional valence judgments: Effects of diagnosis and musical affinity group controlling for familiarity.

Figure 3.

Means and standard errors for (A) arousal and (B) emotional valence ratings as a function of musical affinity profile and ADHD diagnosis.

3.2.3. Emotional Valence

The homogeneity of regression slopes assumption was not fully met for emotional valence (see Table 3), as familiarity interacted significantly with musical affinity profile (F(1, 131) = 5.64, p = 0.019, ηp² = 0.041) and marginally with diagnostic group (F(1, 131) = 3.20, p = 0.076, ηp² = 0.024). These interaction terms were therefore retained in the main analysis, both to account for the heterogeneous slopes and because they involved independent variables central to the study. Each interaction was then explored with regression analyses.

The ANCOVA revealed a strong main effect of familiarity (F(1, 131) = 34.46, p < 0.001, ηp² = 0.208), indicating that more familiar excerpts were perceived as more pleasant. In contrast, the main effects of musical affinity profile (F(1, 131) = 1.70, p = 0.195, ηp² = 0.013) and diagnostic group (F(1, 131) = 0.46, p = 0.498, ηp² = 0.004), as well as their interaction (F(1, 131) = 3.00, p = 0.085, ηp² = 0.022), were not significant.
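As a reading aid, the partial eta squared values reported in this section can be recovered from each F statistic and its degrees of freedom using the standard identity: partial eta squared = (F × df_effect) / (F × df_effect + df_error). The sketch below applies this identity to three reported effects. The function name `partial_eta_squared` is illustrative, and the calculation is a consistency check on the published values, not a reanalysis of the data.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared computed from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error) as reported in Sections 3.2.2 and 3.2.3.
reported = {
    "familiarity on arousal": (9.96, 1, 133),
    "familiarity on valence": (34.46, 1, 131),
    "familiarity x affinity on valence": (5.64, 1, 131),
}

for label, (f_value, df_effect, df_error) in reported.items():
    print(f"{label}: {partial_eta_squared(f_value, df_effect, df_error):.3f}")
```

The three results round to 0.070, 0.208, and 0.041, in agreement with the effect sizes reported in the text. Small discrepancies can arise for other effects because the published F values are themselves rounded.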

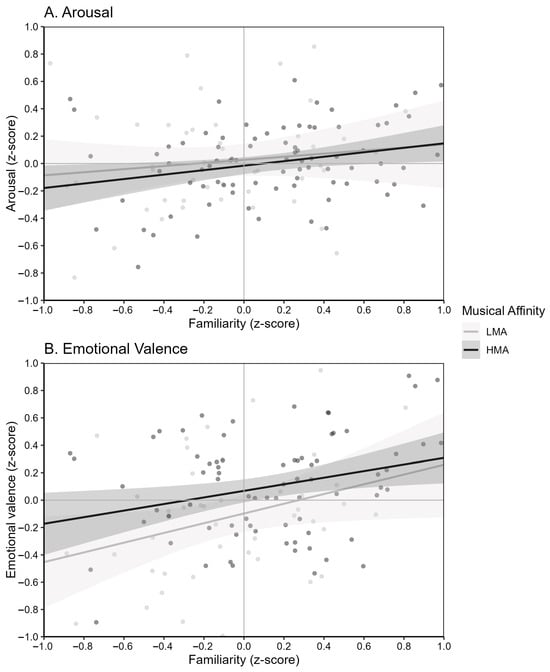

Follow-up regression analyses (see Figure 4) confirmed the significant interaction between familiarity and musical affinity profile (β = −0.27, p = 0.028), suggesting that the positive association between familiarity and emotional valence was more pronounced among participants in the LMA group (β = 0.57, p < 0.001) than among those in the HMA group (β ≈ 0.30, p > 0.05). In contrast, the interaction between diagnostic group and familiarity was only marginal in the ANCOVA and was not supported by the regression (β = 0.10, p = 0.242).

Figure 4.

Effects of familiarity on (A) arousal and (B) emotional valence ratings by musical affinity profile.

4. Discussion

The present study aimed to investigate how diagnostic status (ADHD vs. controls), musical affinity (LMA vs. HMA), and familiarity with musical excerpts shape adolescents’ perception of musical emotions. Participants completed measures of musical affinity (musical experience, music listening diversity, and receptivity to musical emotions) and rated 55 musical excerpts in terms of perceived arousal, emotional valence, and familiarity. Data from 138 adolescents were analyzed, including 38 with ADHD and 100 healthy controls. Based on a cluster analysis of the three musical affinity subscales, 50 participants were classified as having lower musical affinity (LMA) and 88 as having higher musical affinity (HMA), resulting in four diagnostic × affinity subgroups: ADHD-LMA, ADHD-HMA, HC-LMA, and HC-HMA. Overall, the results identified familiarity as the strongest predictor of adolescents’ emotional judgments of music. Furthermore, musical affinity moderated the effect of familiarity on emotional valence judgments, with adolescents in the LMA profile showing a stronger association than those in the HMA profile. However, diagnostic status (ADHD vs. HC) had no significant effect on participants’ emotional judgments.

4.1. Familiarity at the Core of Musical Emotion

Familiarity emerged as the most robust predictor of both arousal and emotional valence, with stronger effects on emotional valence in the LMA profile. This result is partly consistent with our expectations, as prior research has established familiarity as a central determinant of musical liking and emotional response (; ). Our findings refine this association by highlighting that the way familiarity supports emotional judgments varies with individual characteristics.

The results align with the mere exposure effect (), which posits that repeated encounters with a stimulus increase preference, and with Berlyne’s (1971) theory of aesthetic experience, which emphasizes that pleasure arises from a balance between familiarity and novelty (). From these perspectives, LMA adolescents may require higher familiarity to experience music as pleasant, reflecting a narrower optimal zone for novelty. By contrast, HMA adolescents, who exhibit greater listening diversity, appear more open to novelty, enabling them to derive positive affect even from less familiar excerpts. One mechanism through which familiarity may exert its effects is autobiographical memory, as familiar music more readily evokes personal memories and associated responses (; ). This suggests that adolescents with lower musical affinity may have had fewer opportunities to form positive autobiographical associations with a wide range of musical genres and may therefore find it harder to appreciate less familiar music.

Two interaction effects approached significance in the ANCOVA on emotional valence: diagnostic group × familiarity (p = 0.076) and diagnostic group × musical affinity (p = 0.085). Although these trends did not reach statistical significance, they point toward potential group-specific patterns in how music is emotionally processed in ADHD. Given the limited power of the present sample to detect higher-order interactions, these trends should be interpreted cautiously and warrant replication in larger samples.

Finally, arousal ratings were predicted by familiarity but did not vary according to diagnostic status or musical affinity level. Research shows that arousal perception is primarily driven by musical parameters such as tempo (). Familiarity may nonetheless enhance these effects by strengthening prediction and reward processes during listening (; ) independently of diagnostic status or musical affinity profile.

4.2. Rethinking Emotional Responses to Music in ADHD

No significant differences were found between ADHD and HC participants in their ratings of perceived arousal, emotional valence, or familiarity, indicating that adolescents with ADHD perceived musical emotions comparably to controls. This finding contradicts our initial hypothesis that ADHD participants would differ from controls in their emotional judgments.

It is useful to situate this result within existing integrative models conceiving musical emotion processing as a multistage mechanism (; ). The first stage, early acoustic-musical processing, involves the perceptual decoding of musical parameters (e.g., tempo, mode, rhythm, timbre), which provide the foundation for subsequent emotional appraisal. The second stage, perceived emotion, refers to the cognitive evaluation of what the music expresses (e.g., pleasant vs. unpleasant, stimulating vs. relaxing) without necessarily eliciting the corresponding emotion in the listener (; ). The third stage, felt emotion, concerns the listener’s own emotional experience induced by the music, encompassing physiological arousal, chills, pleasure, or mood changes (). Finally, a fourth stage, sensorimotor engagement, involves behavioral synchronization and motor reproduction, such as rhythm reproduction, synchronization, or active engagement, which are known to be affected in ADHD (; ; ). Considering this multistage mechanism, the absence of diagnostic differences in the present study suggests that difficulties commonly reported in emotional regulation and temporal processing among individuals with ADHD (; ; ) were not reflected under the current experimental conditions. This may be because our paradigm focused specifically on stage 2, that is, the cognitive appraisal of perceived emotion, rather than on the induction, regulation, or motor synchronization processes typically associated with later stages of musical emotion processing. This pattern may indicate that, within our sample and the musical pieces selected, the cognitive processes involved in recognizing emotions in music rely on relatively automatic perceptual mechanisms that remain preserved in ADHD, even when difficulties arise in later stages of emotional regulation or motor synchronization.

4.3. Musical Affinity: A Gateway to Adolescents’ Music Experience

Adolescents with higher musical affinity rated the excerpts as more pleasant and more familiar than their lower-affinity peers, while arousal ratings did not differ between profiles. This pattern partially supports our hypothesis, indicating that musical affinity primarily influenced emotional valence rather than perceived arousal.

One plausible explanation for this pattern lies in the role of musical absorption, defined as the tendency to become deeply immersed in and emotionally affected by music (). Adolescents with higher musical affinity likely experience stronger absorption (; ), enabling them to perceive positive affect even with less familiar excerpts. In contrast, lower-affinity adolescents may rely more strongly on familiarity to trigger comparable levels of emotional appreciation (; ; ).

Neurophysiological evidence on music reward provides converging support for the view that familiarity and emotional engagement jointly enhance pleasure during music listening. Familiar and emotionally engaging music has been shown to activate dopaminergic reward circuits in the striatum and medial prefrontal cortex (; ), and meta-analytic evidence confirms that familiarity modulates neural responses in these regions (). Moreover, dopamine release is strongest when listening to familiar and emotionally engaging music (; ). Although the present study did not assess neural activity, the existing evidence suggests that familiarity and emotional engagement jointly recruit reward-related mechanisms. It is therefore plausible that adolescents with higher musical affinity more readily recruit these systems even when listening to unfamiliar music, possibly due to their greater openness to experience, their emotional absorption, and the diversity of their musical habits. In contrast, adolescents with lower musical affinity may depend more on familiarity to engage these same mechanisms and experience similar levels of pleasure.

4.4. Harnessing Familiarity in Educational, Clinical, and Self-Care Contexts

These findings carry practical implications for both musical education and therapeutic contexts. The stronger reliance of LMA adolescents on familiarity suggests that limited exposure to musical diversity constrains the range of positive emotional judgments they derive from music. In contrast, HMA adolescents, who report more varied listening habits, appear better disposed to appreciate and emotionally engage with even unfamiliar excerpts.

In educational settings (e.g., schools) or clinical settings (e.g., music-based interventions), fostering diverse listening experiences could therefore enhance adolescents’ emotional engagement and flexibility in music-based activities (). Exposure to a variety of genres and cultural traditions may expand the emotional spectrum accessible through music while supporting curiosity, openness, and the development of more adaptive regulation strategies (; ). Such exposure may not only enrich musical appreciation but also broaden the emotional benefits of music, supporting adolescents’ capacity to use music as a flexible tool for regulation.

Beyond structured interventions, these insights also highlight the potential of familiarity as a self-regulatory resource. Encouraging adolescents, particularly those with lower musical affinity, to intentionally use familiar and emotionally positive music in everyday life may strengthen their ability to modulate mood, reduce stress, and engage in self-care through music. Over time, combining familiar selections with gradual exploration of new styles could balance comfort and novelty, promoting both emotional stability and personal growth.

4.5. Strengths, Limitations and Future Studies

A core strength of the present study lies in its integration of diagnostic status, musical affinity, and familiarity within the same design, allowing for a comprehensive examination of individual differences in adolescents’ emotional responses to music. Another important strength of the study resides in the control of potential confounding variables, which increases confidence in the validity of the observed effects. In addition, the choice of musical excerpts was tailored to the musical preferences of the adolescent population, including genres such as pop, hip-hop/rap, and EDM, rather than relying on classical music excerpts that are more commonly used in musical emotion research (). This enhances both the ecological validity and the relevance of the findings for the age group in this study. Finally, although the validation of our musical affinity questionnaire was not the focus of the present analyses, it represents an attempt to capture adolescents’ overall engagement with music across three components adapted to their reality. While this composite measure demonstrated acceptable reliability, future work is needed to validate and refine the construct, particularly to determine its specificity and generalizability beyond the present sample.

Several limitations must be noted. First, emotional judgments were measured exclusively through self-report, which may not fully capture the complexity of affective experience. Future work should strengthen this approach by combining self-reports with physiological measures, such as electrodermal conductance or heart rate variability, which provide complementary indices of physiological arousal. Second, the sample was drawn from a relatively homogeneous cultural context, which limits the generalizability of the findings, as cultural familiarity is known to strongly influence emotional responses to music. Third, the sample was unbalanced in terms of gender, with a higher proportion of girls overall and across certain subgroups, which limits the interpretation of gender effects.

Other musical parameters, such as mode, timbre, and harmonic complexity, also contribute to emotional appraisal and should be considered alongside tempo (; ). Additional measures of rhythm and timing performance would also be valuable (e.g., BAASTA; , ) given their relevance to arousal and music perception and their known alteration in ADHD. Expanding recruitment to more culturally diverse populations would be useful to clarify the role of cultural familiarity. In addition, efforts should be made to ensure more representative gender distributions to explore whether gender moderates the relationship between diagnosis, musical affinity, and emotional responses to music (). Including participants’ preferred music alongside standardized excerpts may further enhance ecological validity and provide insight into how familiarity and musical affinity operate in real-world contexts.

5. Conclusions

This study provides novel evidence on how adolescents’ perception of musical emotions is affected by familiarity, musical affinity, and diagnostic status. While ADHD and healthy control participants did not differ in their ratings of arousal or emotional valence, familiarity emerged as a robust predictor across groups, particularly among adolescents with lower musical affinity. These findings suggest that the emotional impact of music in adolescence is shaped less by diagnostic status than by the interplay between familiarity and individual engagement with music. By adopting a multidimensional measure of musical affinity, this study underscores the value of considering diverse forms of musical involvement beyond formal training. Collectively, our results highlight the central role of familiarity in shaping emotional experience during music listening, as well as its potential benefits for music-based interventions and the well-being of adolescents with or without ADHD.

Author Contributions

Conceptualization, A.R. and N.G.; methodology, A.R. and N.G.; software, A.R.; validation, A.R. and N.G.; formal analysis, A.R., E.H. and N.G.; investigation, A.R. and N.G.; resources, A.R. and N.G.; data curation, A.R.; writing—original draft preparation, A.R., E.H. and N.G.; writing—review and editing, A.R., E.H., P.P.-G. and N.G.; visualization, A.R., E.H. and N.G.; supervision, N.G.; project administration, A.R. and N.G.; funding acquisition, A.R. and N.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Canadian Institutes of Health Research (#236-2020-2021-Q2-00370), the Fonds de Recherche du Québec—Santé (FRQS #313322, FRQS #299871), the University of Montreal’s department of psychology, and the non-profit organization TALAN (Bruno Gauthier).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Comité d’éthique de la recherche en Éducation et en Psychologie of the University of Montreal (#CERAS-2015-16-168-D, initial approval on 20 July 2017).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy reasons.

Acknowledgments

We would like to thank Mélissa Romano and Amélie Cloutier for their help in selecting the musical pieces. We would also like to thank our participants, without whom this research would have been impossible. During the preparation of this manuscript, the authors used ChatGPT (OpenAI, GPT-5, 2025) for minor language editing and for translation assistance. All AI-assisted content was reviewed, corrected, and validated by the authors, who take full responsibility for the final manuscript.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ADHD | Attention-Deficit/Hyperactivity Disorder |
| HC | Healthy Controls |
| LMA | Lower Musical Affinity |
| HMA | Higher Musical Affinity |

Appendix A

This appendix lists the 55 musical excerpts used in the study, ordered by tempo (BPM). For each track, we report the Title—Artist (Year), tempo, musical genre (aligned with the 21-genre inventory used in our measures), and the excerpt timing (start–end, mm:ss). Musical genre labels are pragmatic, intended for analytic grouping rather than formal musicological classification.

Table A1.

Musical excerpts with tempo (BPM) and precise/broad genre classification.

| Title—Artist (Year) | Tempo | Musical Genre | Excerpt Timing |

|---|---|---|---|

| Sugarcane—Ana Olgica (2017) | 40 | Hip-hop/Rap | 00:19–00:31 |

| Arrival of the Birds—The Cinematic Orchestra & LMO (2008) | 44 | Film/Video game soundtracks | 00:38–00:52 |

| Her Eyes the Stars—LUCHS, P.B. Almkvisth (2016) | 47 | Classical | 00:19–00:31 |

| Time—Hans Zimmer (2010) | 60 | Film/Video game soundtracks | 00:05–00:16 |

| A Long Way Home—Jack Wall (2013) | 65 | Film/Video game soundtracks | 00:18–00:31 |

| Yes, I Am a Long Way from Home—Mogwai (1997) | 65 | Alternative | 01:09–01:23 |

| Deep Blue Day—Brian Eno (1983) | 70 | Electronica | 00:27–00:41 |

| Eternal Youth—RUDE (2016) | 70 | Hip-hop/Rap | 00:26–00:38 |

| River Flows in You—Yiruma (2001) | 70 | Classical | 00:33–00:47 |

| Front Yard—Trixie Muff (2020) | 75 | Indie | 00:06–00:20 |

| Quilted Hills—Ocha & Laffey (2019) | 75 | Hip-hop/Rap | 00:13–00:26 |

| Ocean—Gradient Islant (2020) | 75 | Hip-hop/Rap | 00:11–00:25 |

| Underground Vibes—DJ Cam (1995) | 75 | Hip-hop/Rap | 00:14–00:28 |

| Daffodils—Guustavv (2018) | 76 | Hip-hop/Rap | 00:11–00:25 |

| Gendèr—Makoto San (2020) | 77 | Other cultural traditions | 00:12–00:25 |

| Nostrand Ave—Tom Doolie (2020) | 80 | Hip-hop/Rap | 00:10–00:24 |

| Spirals—Slowheal (2018) | 80 | Hip-hop/Rap | 00:23–00:37 |

| Saturday Afternoon—Sweet Oscar (2020) | 80 | Hip-hop/Rap | 00:12–00:25 |

| You—Petit Biscuit (2015) | 80 | EDM | 00:11–00:25 |

| Tuesday, July 14—Dogman Chill (2020) | 82 | Hip-hop/Rap | 00:11–00:25 |

| Go to Shanghai—Atrisma (2020) | 83 | Jazz/Blues | 00:08–00:22 |

| Lie Down—Kino B (2020) | 85 | Hip-hop/Rap | 00:22–00:35 |

| Norr—Polaroit (2020) | 85 | Indie | 01:09–01:22 |

| Mixed Feelings—DONTCRY, Glimlip (2019) | 86 | Hip-hop/Rap | 00:12–00:26 |

| Cumbia del Olvido—Nicola Cruz (2015) | 86 | Latin | 00:55–01:04 |

| Phew—Jules Hiero, Antônio Neves, Ed Santana (2020) | 87 | Jazz/Blues | 00:33–00:47 |

| Awake—Tycho (2014) | 88 | Electronica | 00:19–00:33 |

| Bewildered—Hanz (2019) | 90 | Electronica | 00:42–00:55 |

| Smokes—Monkey Larsson (2020) | 90 | Hip-hop/Rap | 00:10–00:24 |

| Plus tôt—Alexandra Stréliski (2018) | 90 | Classical | 00:49–00:53 |

| Golden Crates—Dusty Deck (2020) | 90 | Hip-hop/Rap | 00:03–00:17 |

| Noctuary—Bonobo (2003) | 92 | Electronica | 00:39–00:50 |

| Poetic Wax—Dusty Deck (2020) | 97 | Hip-hop/Rap | 00:09–00:23 |

| Intro—The xx (2009) | 100 | Indie | 00:39–00:53 |

| Invincible—DEAF KEV (2015) | 100 | EDM | 00:10–00:24 |

| Hello—OMG (2014) | 107 | K-pop | 00:00–00:14 |

| Multi—Lstn (2019) | 117 | Electronica | 00:08–00:22 |

| Sumatra—Nora Van Elken (2019) | 118 | EDM | 00:18–00:32 |

| Rest in Peace—Super Duper (2018) | 120 | EDM | 00:12–00:26 |

| Ventura—City of the Sun (2018) | 120 | Alternative | 00:24–00:37 |

| Ether—Mogwai (2016) | 120 | Alternative | 01:10–01:24 |

| The Morning After—Anthony Favier (2019) | 122 | Jazz/Blues | 00:31–00:45 |

| Say Goodbye—Lucas De Mulder & The New Mastersounds (2020) | 128 | Disco/Funk | 00:01–00:15 |

| Bot—Deadmau5 (2009) | 130 | EDM | 00:00–00:14 |

| Candyland—Tobu (2015) | 130 | EDM | 00:54–01:08 |

| Firefly—Jim Yosef (2015) | 130 | EDM | 00:31–00:45 |

| Nekozilla—Different Heaven (2016) | 130 | EDM | 00:13–00:27 |

| Nova—Ahrix (2013) | 130 | EDM | 00:40–00:54 |

| The Last Day—City of the Sun (2020) | 130 | Alternative | 00:59–01:13 |

| Slumber Party—Sonny Side Up (2017) | 132 | Hip-hop/Rap | 00:14–00:28 |

| Silly Boy—Jack Wall (2011) | 135 | Film/Video game soundtracks | 00:28–00:42 |

| Everything—City of the Sun (2016) | 136 | Alternative | 00:52–01:04 |

| I’ll Tell You Someday—Plini (2020) | 140 | Metal | 02:08–02:24 |

| In the House of Tom Bombadil—Nickel Creek (2000) | 150 | Folk | 00:00–00:14 |

| Assassin—The Fearless Flyers (2020) | 150 | Disco/Funk | 00:08–00:22 |

Appendix B

Musical affinity was operationalized through three complementary subscales: Musical Experience, Music Listening Diversity, and Receptivity to Musical Emotions. Each subscale was scored on a 0–5 or 1–5 scale to ensure comparability across indices. Detailed items, sources, and adaptations are presented below.

Appendix B.1. Musical Experience Subscale

The items of the Musical Experience subscale were adapted from the Goldsmiths Musical Sophistication Index (Gold-MSI; ) to better capture adolescent musical engagement and to harmonize the scoring with the other subscales of the musical affinity index. The adapted version also sought to include both formal and informal musical experience. The table below presents the inter-item correlations and the corrected item-total correlations.

Table A2.

Musical Experience: Inter-Item Correlations (Lower Triangle) and Corrected Item–Total Correlations.

| Item | Years of Formal Training | Years of Informal Training | Number of Instruments (Formal) | Number of Instruments (Informal) | Item–Total r (Corrected) |
|---|---|---|---|---|---|
| Years of formal training | – | | | | 0.620 |
| Years of informal training | 0.349 | – | | | 0.607 |
| Number of instruments (formal) | 0.870 | 0.299 | – | | 0.605 |
| Number of instruments (informal) | 0.291 | 0.925 | 0.298 | – | 0.639 |

Appendix B.2. Music Listening Diversity Subscale

The list of genres was adapted from the Cultural Practices in Québec Survey (see table 47 in ) and expanded to include contemporary categories relevant to adolescents (e.g., K-pop, EDM, film soundtracks). Participants rated each genre on a 5-point scale (1 = Never, 2 = Rarely, 3 = Occasionally, 4 = Often, 5 = Very often). Responses ≥ 3 were dichotomized as “listened to” and summed across 21 genres to create a raw diversity index (range: 0–21). To harmonize with the other subscales and avoid overweighting, the raw score was recoded into five levels according to sample distribution: Very low (0–2), Low (3–4), Moderate (5–7), High (8–10), Very high (11+) diversity. The table below presents the range of inter-item correlations and item-total corrected correlations.

Table A3.

Music Listening Diversity: Range of Inter-Item Correlations and Corrected Item–Total Correlations.

| Item | Inter-Item r (Min) | Inter-Item r (Max) | Item–Total r (Corrected) |
|---|---|---|---|
| Classical | −0.065 | 0.272 | 0.197 |
| Punk | −0.170 | 0.346 | 0.416 |
| Electronica | −0.051 | 0.585 | 0.324 |
| EDM (Electronic Dance Music) | 0.038 | 0.585 | 0.413 |
| Pop | −0.060 | 0.248 | 0.321 |
| Rock | −0.056 | 0.538 | 0.443 |
| Indie | −0.163 | 0.447 | 0.389 |
| Metal | −0.211 | 0.538 | 0.358 |
| Jazz/Blues | −0.071 | 0.473 | 0.322 |
| Alternative | −0.146 | 0.447 | 0.474 |
| Hip-hop/Rap | −0.211 | 0.206 | 0.018 |
| R&B | −0.042 | 0.282 | 0.395 |
| Country/Western | −0.060 | 0.473 | 0.344 |
| Disco/Funk | −0.013 | 0.436 | 0.483 |
| K-pop | −0.060 | 0.358 | 0.422 |
| Latin | −0.021 | 0.403 | 0.421 |
| Folk | −0.063 | 0.413 | 0.455 |
| Film/Video game soundtracks | −0.100 | 0.314 | 0.329 |
| Spiritual/Religious | −0.034 | 0.339 | 0.246 |
| Traditional Québec | −0.034 | 0.436 | 0.323 |
| Other cultural traditions | 0.029 | 0.403 | 0.397 |
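The scoring of the Music Listening Diversity subscale described in Appendix B.2 can be sketched as follows. This is a minimal illustration: the function names and the example ratings are hypothetical, and the numeric codes 1 to 5 assigned to the five levels are an assumption, since the text names the levels without stating their codes.

```python
N_GENRES = 21

# (upper bound of raw score, assumed numeric code, label) for each band in Appendix B.2.
LEVELS = [
    (2, 1, "Very low"),
    (4, 2, "Low"),
    (7, 3, "Moderate"),
    (10, 4, "High"),
    (N_GENRES, 5, "Very high"),
]


def diversity_raw(ratings):
    """Count the genres rated 3 (Occasionally) or higher on the 1-5 frequency scale."""
    if len(ratings) != N_GENRES:
        raise ValueError(f"expected {N_GENRES} genre ratings")
    return sum(1 for rating in ratings if rating >= 3)


def diversity_level(raw):
    """Recode a raw 0-21 diversity count into one of the five levels."""
    if not 0 <= raw <= N_GENRES:
        raise ValueError("raw score must be between 0 and 21")
    for upper, code, _label in LEVELS:
        if raw <= upper:
            return code


# Hypothetical participant: six genres rated 3 or higher.
example = [5, 1, 3, 4, 2, 1, 3, 1, 1, 2, 4, 1, 1, 1, 2, 1, 3, 1, 1, 1, 1]
raw_score = diversity_raw(example)
print(raw_score, diversity_level(raw_score))
```

Recoding the raw count into five levels keeps this subscale on a range comparable to the other two, so that no single subscale dominates the composite score.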

Appendix B.3. Receptivity to Musical Emotions

This subscale was inspired by (), who identified three core functions of music listening: arousal and mood regulation, self-awareness, and social relatedness. Most items in our version reflect arousal and mood regulation. Adolescents rated how often music elicited specific emotional experiences on a 5-point scale (1 = Never, 5 = Very often). The table below presents the range of inter-item correlations and the corrected item-total correlations.

Table A4.

Receptivity to Musical Emotions: Inter-Item Correlation Ranges and Corrected Item–Total Correlations.

| Item | Inter-Item r (Min) | Inter-Item r (Max) | Item–Total r (Corrected) |
|---|---|---|---|
| Music puts me in a good mood | 0.170 | 0.669 | 0.609 |
| Music comforts me | 0.293 | 0.704 | 0.703 |
| Music reduces my anger | 0.107 | 0.507 | 0.560 |
| Music makes me feel less lonely | 0.287 | 0.540 | 0.629 |
| Music gives me pleasure | 0.228 | 0.601 | 0.662 |
| Music entertains me | 0.130 | 0.518 | 0.572 |
| Music consoles me | 0.303 | 0.704 | 0.648 |
| Music makes me nostalgic 1 | 0.107 | 0.333 | 0.297 |
| Music makes me feel joyful | 0.145 | 0.669 | 0.573 |
| Music relieves my boredom | 0.112 | 0.457 | 0.465 |

Note. 1 The item “Music makes me nostalgic” was excluded from the final subscale due to weak correlations with the other items and with the total score.

Appendix B.4. Musical Affinity Total Score

The composite musical affinity score was calculated by summing the scores of the three subscales (range: 2–15). Because the composite score (M = 7.84; SD = 2.27) showed limited internal consistency (α = 0.502) and conceptual heterogeneity, it was used for descriptive purposes only. The following table presents the correlations between each subscale and the total musical affinity score.

Table A5.

Intercorrelations Between Subscales and the Musical Affinity Total Score.

| Scale | Musical Experience | Listening Diversity | Emotional Receptivity |
|---|---|---|---|
| Musical Experience | – | | |
| Listening Diversity | 0.200 * | – | |
| Emotional Receptivity | 0.173 * | 0.447 ** | – |
| Musical Affinity Total | 0.635 ** | 0.827 ** | 0.673 ** |

Note. * p < 0.05. ** p < 0.01.
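For readers less familiar with the internal consistency index reported in Appendix B.4, the sketch below computes Cronbach’s alpha from subscale scores using the standard formula α = k / (k − 1) × (1 − Σ item variances / variance of the total score). The function name `cronbach_alpha` and the toy scores are hypothetical and unrelated to the study data, so the resulting value is not expected to match the reported α = 0.502.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for k items, each given as a list of scores from the same n participants."""
    k = len(items)
    if k < 2:
        raise ValueError("at least two items are required")
    n = len(items[0])
    if any(len(scores) != n for scores in items):
        raise ValueError("all items must have the same number of participants")
    # Total score per participant, summed across items.
    totals = [sum(participant) for participant in zip(*items)]
    sum_item_variances = sum(variance(scores) for scores in items)
    return (k / (k - 1)) * (1 - sum_item_variances / variance(totals))


# Toy subscale scores for five hypothetical participants.
experience = [0, 1, 2, 3, 4]
diversity = [1, 3, 2, 4, 5]
receptivity = [2, 3, 3, 5, 4]

alpha = cronbach_alpha([experience, diversity, receptivity])
print(round(alpha, 4))
```

Alpha increases when the components covary strongly relative to their individual variances. The modest correlations between Musical Experience and the other two subscales in Table A5 are therefore consistent with a limited alpha for the composite.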

References

- Akkermans, J., Schapiro, R., Müllensiefen, D., Jakubowski, K., Shanahan, D., Baker, D., Busch, V., Lothwesen, K., Elvers, P., Fischinger, T., Schlemmer, K., & Frieler, K. (2019). Decoding emotions in expressive music performances: A multi-lab replication and extension study. Cognition and Emotion, 33(6), 1099–1118.

- American Psychiatric Association. (2022). Diagnostic and statistical manual of mental disorders (5th ed., text rev.). American Psychiatric Association.

- Ayano, G., Demelash, S., Gizachew, Y., Tsegay, L., & Alati, R. (2023). The global prevalence of attention deficit hyperactivity disorder in children and adolescents: An umbrella review of meta-analyses. Journal of Affective Disorders, 339, 860–866.

- Bailey, J. A., & Penhune, V. (2013). The relationship between the age of onset of musical training and rhythm synchronization performance: Validation of sensitive period effects. Frontiers in Neuroscience, 7, 227.

- Baltazar, M., & Saarikallio, S. (2017). Strategies and mechanisms in musical affect self-regulation: A new model. Musicae Scientiae, 23(2), 177–195.

- Barkley, R. A. (2010). Why emotional impulsiveness should be a central feature of ADHD. The ADHD Report, 18(4), 1–5.

- Baweja, R., Soutullo, C. A., & Waxmonsky, J. G. (2021). Review of barriers and interventions to promote treatment engagement for pediatric attention deficit hyperactivity disorder care. World Journal of Psychiatry, 11(12), 1206–1227.

- Berlyne, D. E. (1971). Aesthetics and psychobiology. Appleton-Century-Crofts.

- Biasutti, M., & Habe, K. (2023). Teachers’ perspectives on dance improvisation and flow. Research in Dance Education, 24(3), 242–261.

- Biederman, J., Faraone, S. V., Keenan, K., Benjamin, J., Krifcher, B., Moore, C., Sprich-Buckminster, S., Ugaglia, K., Jellinek, M. S., & Steingard, R. (1992). Further evidence for family-genetic risk factors in attention deficit hyperactivity disorder: Patterns of comorbidity in probands and relatives in psychiatrically and pediatrically referred samples. Archives of General Psychiatry, 49(9), 728–738.

- Biederman, J., Faraone, S. V., Spencer, T. J., Mick, E., Monuteaux, M. C., & Aleardi, M. (2006). Functional impairments in adults with self-reports of diagnosed ADHD: A controlled study of 1001 adults in the community. Journal of Clinical Psychiatry, 67(4), 524–540.

- Bonneville-Roussy, A., Rentfrow, P. J., Xu, M. K., & Potter, J. (2013). Music through the ages: Trends in musical engagement and preferences from adolescence through middle adulthood. Journal of Personality and Social Psychology, 105(4), 703–717.

- Bramley, S., Dibben, N., & Rowe, R. (2016). Investigating the influence of music tempo on arousal and behaviour in laboratory virtual roulette. Psychology of Music, 44(6), 1389–1403.

- Brattico, E., Brusa, A., Dietz, M., Jacobsen, T., Fernandes, H., Gaggero, G., Toiviainen, P., Vuust, P., & Proverbio, A. (2025). Beauty and the brain–Investigating the neural and musical attributes of beauty during naturalistic music listening. Neuroscience, 567, 308–325. [Google Scholar] [CrossRef] [PubMed]

- Brattico, E., & Pearce, M. (2013). The neuroaesthetics of music. Psychology of Aesthetics, Creativity, and the Arts, 7(1), 48–61. [Google Scholar] [CrossRef]

- Bunford, N., Evans, S. W., & Wymbs, F. (2015). ADHD and emotion dysregulation among children and adolescents. Clinical Child and Family Psychology Review, 18(3), 185–217. [Google Scholar] [CrossRef] [PubMed]

- Carrer, L. R. (2015). Music and sound in time processing of children with ADHD. Frontiers in Psychiatry, 6, 127. [Google Scholar] [CrossRef]

- Castro, S. L., & Lima, C. F. (2014). Age and musical expertise influence emotion recognition in music. Music Perception, 32(2), 125–142. [Google Scholar] [CrossRef]

- Cespedes-Guevara, J., & Eerola, T. (2018). Music communicates affects, not basic emotions—A constructionist account of attribution of emotional meanings to music. Frontier Psychology, 9, 215. [Google Scholar] [CrossRef]

- Chong, H. J., Kim, H. J., & Kim, B. (2024). Scoping review on the use of music for emotion regulation. Behavioral Sciences, 14(9), 793. [Google Scholar] [CrossRef]

- Christiansen, H., Hirsch, O., Albrecht, B., & Chavanon, M. L. (2019). Attention-deficit/hyperactivity disorder (ADHD) and emotion regulation over the life span. Current Psychiatry Reports, 21(3), 17. [Google Scholar] [CrossRef]

- Dalla Bella, S., Farrugia, N., Benoit, C.-E., Begel, V., Verga, L., Harding, E., & Kotz, S. A. (2017). BAASTA: Battery for the assessment of auditory sensorimotor and timing abilities. Behavior Research Methods, 49(3), 1128–1145. [Google Scholar] [CrossRef]

- Dalla Bella, S., Foster, N. E., Laflamme, H., Zagala, A., Melissa, K., Komeilipoor, N., Blais, M., Rigoulot, S., & Kotz, S. A. (2024). Mobile version of the battery for the assessment of auditory sensorimotor and timing abilities (BAASTA): Implementation and adult norms. Behavior Research Methods, 56(4), 3737–3756. [Google Scholar] [CrossRef]

- Demorest, S. M., Morrison, S. J., Jungbluth, D., & Beken, M. N. (2008). Lost in translation: An enculturation effect in music memory performance. Music Perception, 25(3), 213–223. [Google Scholar] [CrossRef]

- de Oliveira Goes, A., Nardi, A. E., & Quagliato, L. A. (2025). Outcomes of music therapy on children and adolescents with attention-deficit/hyperactivity disorder: A systematic review and meta-analysis. Trends in Psychiatry and Psychotherapy. ahead of print. [Google Scholar] [CrossRef]

- Dillman Carpentier, F. R., & Potter, R. F. (2007). Effects of music on physiological arousal: Explorations into tempo and genre. Media Psychology, 10(3), 339–363. [Google Scholar] [CrossRef]

- Eerola, T., Ferrer, R., & Alluri, V. (2012). Timbre and affect dimensions: Evidence from affect and similarity ratings and acoustic correlates of isolated instrument sounds. Music Perception: An Interdisciplinary Journal, 30(1), 49–70. [Google Scholar] [CrossRef]

- Eerola, T., & Vuoskoski, J. K. (2011). A comparison of the discrete and dimensional models of emotion in music. Psychology of Music, 39(1), 18–49. [Google Scholar] [CrossRef]

- Eerola, T., & Vuoskoski, J. K. (2013). A review of music and emotion studies: Approaches, emotion models, and stimuli. Music Perception, 30(3), 307–340. [Google Scholar] [CrossRef]

- Ekman, P. (1992). An argument for basic emotions. Cognition & Emotion, 6(3–4), 169–200. [Google Scholar] [CrossRef]

- Fasano, M. C., Cabral, J., Stevner, A., Vuust, P., Cantou, P., Brattico, E., & Kringelbach, M. L. (2023). The early adolescent brain on music: Analysis of functional dynamics reveals engagement of orbitofrontal cortex reward system. Human Brain Mapping, 44(2), 429–446. [Google Scholar] [CrossRef]

- Fernández-Sotos, A., Fernández-Caballero, A., & Latorre, J. M. (2016). Influence of tempo and rhythmic unit in musical emotion regulation. Frontiers in Computational Neuroscience, 10, 80. [Google Scholar] [CrossRef]

- Ferreri, L., Mas-Herrero, E., Zatorre, R. J., Ripollés, P., Gomez-Andres, A., Alicart, H., Olivé, G., Marco-Pallarés, J., Antonijoan, R. M., & Valle, M. (2019). Dopamine modulates the reward experiences elicited by music. Proceedings of the National Academy of Sciences of the United States of America, 116(9), 3793–3798. [Google Scholar] [CrossRef]

- Frankel, J. (2006). Reaper (Version v6.78/win64) [Computer software]. Cockos Inc. Available online: https://www.reaper.fm/ (accessed on 15 October 2025).

- Freitas, C., Manzato, E., Burini, A., Taylor, M. J., Lerch, J. P., & Anagnostou, E. (2018). Neural correlates of familiarity in music listening: A systematic review and a neuroimaging meta-analysis. Frontiers in Neuroscience, 12, 686. [Google Scholar] [CrossRef]

- Fuentes-Sánchez, N., Pastor, R., Eerola, T., Escrig, M. A., & Pastor, M. C. (2022). Musical preference but not familiarity influences subjective ratings and psychophysiological correlates of music-induced emotions. Personality and Individual Differences, 198, 111828. [Google Scholar] [CrossRef]

- Gabrielsson, A., & Lindström, E. (2010). The role of structure in the musical expression of emotions. In P. N. Juslin, & J. A. Sloboda (Eds.), Handbook of music and emotion: Theory, research, applications (pp. 367–400). Oxford University Press. [Google Scholar]

- Galván, A. (2010). Adolescent development of the reward system. Frontiers in Human Neuroscience, 4, 1018. [Google Scholar] [CrossRef] [PubMed]

- Garrido, S., & Schubert, E. (2011). Individual differences in the enjoyment of negative emotion in music: A literature review and experiment. Music Perception, 28(3), 279–296. [Google Scholar] [CrossRef]

- Ghanizadeh, A. (2011). Sensory processing problems in children with ADHD, a systematic review. Psychiatry Investigation, 8(2), 89–94. [Google Scholar] [CrossRef] [PubMed]

- Graziano, P. A., & Garcia, A. (2016). Attention-deficit hyperactivity disorder and children’s emotion dysregulation: A meta-analysis. Clinical Psychology Review, 46, 106–123. [Google Scholar] [CrossRef]

- Grewe, O., Nagel, F., Kopiez, R., & Altenmüller, E. (2007). Listening to music as a re-creative process: Physiological, psychological, and psychoacoustical correlates of chills and strong emotions. Music Perception, 24(3), 297–314. [Google Scholar] [CrossRef]

- Groarke, J. M., & Hogan, M. J. (2019). Listening to self-chosen music regulates induced negative affect for both younger and older adults. PLoS ONE, 14(6), e0218017. [Google Scholar] [CrossRef]

- Groß, C., Serrallach, B. L., Mohler, E., Pousson, J. E., Schneider, P., Christiner, M., & Bernhofs, V. (2022). Musical performance in adolescents with ADHD, ADD and dyslexia-behavioral and neurophysiological aspects. Brain Sciences, 12(2), 127. [Google Scholar] [CrossRef]

- Hartung, C. M., Lefler, E. K., Abu-Ramadan, T. M., Stevens, A. E., Serrano, J. W., Miller, E. A., & Shelton, C. R. (2025). A Call to Analyze Sex, Gender, and Sexual Orientation in Psychopathology Research: An Illustration with ADHD and Internalizing Symptoms in Emerging Adults. Journal of Psychopathology and Behavioral Assessment, 47(1), 18. [Google Scholar] [CrossRef]

- Hirsch, O., Chavanon, M., Riechmann, E., & Christiansen, H. (2018). Emotional dysregulation is a primary symptom in adult Attention-Deficit/Hyperactivity Disorder (ADHD). Journal of Affective Disorders, 232, 41–47. [Google Scholar] [CrossRef] [PubMed]

- Hofbauer, L. M., & Rodriguez, F. S. (2023). Emotional valence perception in music and subjective arousal: Experimental validation of stimuli. International Journal of Psychology, 58(5), 465–475. [Google Scholar] [CrossRef]

- Hove, M. J., Gravel, N., Spencer, R. M. C., & Valera, E. M. (2017). Finger tapping and pre-attentive sensorimotor timing in adults with ADHD. Experimental Brain Research, 235(12), 3663–3672. [Google Scholar] [CrossRef]

- Husain, G., Thompson, W. F., & Schellenberg, E. G. (2002). Effects of musical tempo and mode on arousal, mood, and spatial abilities. Music Perception, 20(2), 151–171. [Google Scholar] [CrossRef]

- Ipçi, M., Inci IzmIr, S. B., Türkçapar, M. H., Özdel, K., Ardiç, U. A., & Ercan, E. S. (2020). Psychiatric comorbidity in the subtypes of ADHD in children and adolescents with ADHD according to DSM-IV. Noropsikiyatri Arsivi, 57(4), 283–289. [Google Scholar] [CrossRef]

- Jakubowski, K., & Eerola, T. (2022). Music evokes fewer but more positive autobiographical memories than emotionally matched sound and word cues. Journal of Applied Research in Memory and Cognition, 11(2), 272–288. [Google Scholar] [CrossRef]

- Juslin, P. N., & Laukka, P. (2004). Expression, perception, and induction of musical emotions: A review and a questionnaire study of everyday listening. Journal of New Music Research, 33(3), 217–238. [Google Scholar] [CrossRef]

- Juslin, P. N., & Västfjäll, D. (2008). Emotional responses to music: The need to consider underlying mechanisms. Behavioral and Brain Sciences, 31(5), 559–575. [Google Scholar] [CrossRef]

- Kahn, J. H., Enevold, K. C., Feltner-Williams, D., & Ladd, K. (2025). Using music to feel better: Are different emotion-regulation strategies truly distinct? Psychology of Music, 53(4), 535–547. [Google Scholar] [CrossRef]

- Koelsch, S. (2012). Brain and music. Wiley-Blackwell. Available online: https://www.wiley.com/en-us/Brain%2Band%2BMusic-p-x000525866 (accessed on 15 October 2025).

- Korsmit, I. R., Montrey, M., Wong-Min, A. Y. T., & McAdams, S. (2023). A comparison of dimensional and discrete models for the representation of perceived and induced affect in response to short musical sounds. Frontiers in Psychology, 14, 1287334. [Google Scholar] [CrossRef] [PubMed]

- Krause, A. E., North, A. C., & Hewitt, L. Y. (2015). Music-listening in everyday life: Devices and choice. Psychology of Music, 43(2), 155–170. [Google Scholar] [CrossRef]

- Kreutz, G., Ott, U., Teichmann, D., Osawa, P., & Vaitl, D. (2008). Using music to induce emotions: Influences of musical preference and absorption. Psychology of Music, 36(1), 101–126. [Google Scholar] [CrossRef]

- Lachance, K.-A., Pelland-Goulet, P., & Gosselin, N. (2025). Listening habits and subjective effects of background music in young adults with and without ADHD. Frontiers in Psychology, 15, 1508181. [Google Scholar] [CrossRef]

- Lahdelma, I., & Eerola, T. (2020). Cultural familiarity and musical expertise impact the pleasantness of consonance/dissonance but not its perceived tension. Scientific Reports, 10(1), 8693. [Google Scholar] [CrossRef]

- Lamont, A., & Hargreaves, D. (2019). Musical preference and social identity in adolescence. In K. V. MacDonald, D. J. Hargreaves, & D. Miell (Eds.), Handbook of music, adolescents, and wellbeing (pp. 109–118). Oxford University Press. [Google Scholar] [CrossRef]

- Lapointe, M.-C. (2010). L’écoute et la consommation de la musique. In Enquête sur les pratiques culturelles au Québec (pp. 41–66). Gouvernement du Québec, Ministère de la Culture, des Communications et de la Condition féminine. [Google Scholar]

- Larrouy-Maestri, P., Morsomme, D., Magis, D., & Poeppel, D. (2017). Lay listeners can evaluate the pitch accuracy of operatic voices. Music Perception: An Interdisciplinary Journal, 34(4), 489–495. [Google Scholar] [CrossRef]

- LimeSurvey GmbH. (n.d.). LimeSurvey: An open-source survey tool. LimeSurvey GmbH. Available online: https://www.limesurvey.org (accessed on 15 October 2025).