1. Introduction

Hyperspectral image (HSI) data are high-dimensional and contain rich information in both the spatial and spectral dimensions. Because ground objects exhibit diverse characteristics across these dimensions, hyperspectral images are attractive for ground object analysis in applications ranging from agricultural production, geology, and mineral exploration to urban planning and ecological science [1,2,3,4,5,6,7,8,9,10]. Early attempts to exploit HSI mostly employed support vector machines (SVM) [11,12,13], k-nearest neighbors (KNN) [14], and multinomial logistic regression (MLR) [15]. Traditional feature extraction mostly relies on hand-crafted feature extractors designed by human experts [16,17] using domain knowledge and engineering experience. However, these feature extractors are ill-suited to HSI classification because they ignore spatial correlation and local consistency and therefore fail to exploit the spatial information of HSI. Additionally, the redundancy of HSI data makes classification a challenging research problem.

In recent years, deep learning (DL) has been widely used in remote sensing [18]. Because deep learning can extract more abstract image features, several DL-based HSI classification methods have been proposed. Typical examples include the stacked autoencoder (SAE) [19,20,21], deep belief network (DBN) [22], recurrent neural network (RNN) [23,24], and convolutional neural network (CNN) [25,26,27]. For example, Dend et al. [19] use a layered, stacked sparse autoencoder to extract HSI features, while Wan et al. [20] combine a joint bilateral filter with a stacked sparse autoencoder, which can train the network effectively using only a limited number of labeled samples. Zhou et al. [21] employ a semi-supervised stacked autoencoder with co-training: as the training set expands, confident predictions on unlabeled samples are generated to improve the generalization ability of the model. Chen et al. [22] propose a deep architecture that combines spectral–spatial features using an improved DBN to process three-dimensional HSI data. Among these methods [19,20,21,22,23,24], the best results on the Indian Pines (IN), University of Pavia (UP), and Salinas (SA) datasets are 98.39% [21], 99.54% [19], and 98.53% [21], respectively. Zhou et al. [23] extend the long short-term memory (LSTM) network to the spectral–spatial domain and propose an HSI classification scheme that treats HSI pixels as a data sequence to model the correlation of information across the spectrum. Hang et al. [24] use a cascaded RNN model with gated recurrent units to explore the redundant and complementary information of HSI, i.e., to reduce redundant information and learn complementary information, and fuse different, properly weighted feature layers. Zhong et al. [25] designed an end-to-end spectral–spatial residual network (SSRN), which uses consecutive spectral and spatial residual blocks to reduce accuracy loss and achieve better classification performance with uneven training samples. In [26], the authors propose a deep feature fusion network (DFFN), which introduces residual learning to optimize multiple convolutional layers as identity mappings and can extract deeper feature information. Additionally, the work of [27] suggests a five-layer CNN framework that integrates both the spectral information and the spatial context of HSI. Although these methods achieve good overall classification performance, there is still room to improve classification accuracy, reduce the number of network parameters, and ease model training.

Deep neural networks increase classification accuracy; however, as network depth increases, they also suffer from degradation and become harder to train. Following He et al. [28], residual networks (ResNet) have been introduced into HSI classification [29,30,31]. For example, Paoletti et al. [30] design a novel CNN framework based on a feature residual pyramid structure, while Lee et al. [31] propose a residual CNN that exploits the contextual information of adjacent pixel vectors through residual learning. Such residual structures make deep networks easier to train, enhance gradient propagation, and effectively alleviate deep learning problems such as vanishing gradients.
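The scalar toy below gives a rough illustration of why residual connections ease gradient propagation. It is a sketch and does not reproduce the architecture of any cited network. It compares the input gradient of twenty plain layers F(x) = tanh(wx) with that of twenty residual layers x + F(x). The weight value 0.1 and the helper names are hypothetical. The point is only that each residual layer contributes a factor 1 + F'(x), rather than F'(x), to the chain-rule product.

```python
import math


def toy_layer(x: float, w: float) -> float:
    """A scalar stand-in for a network layer: F(x) = tanh(w * x)."""
    return math.tanh(w * x)


def toy_layer_grad(x: float, w: float) -> float:
    """dF/dx for F(x) = tanh(w * x)."""
    t = math.tanh(w * x)
    return w * (1.0 - t * t)


def input_gradient(x: float, weights: list[float], residual: bool) -> float:
    """d(output)/d(input) of a stack of toy layers, accumulated with the chain rule."""
    grad = 1.0
    for w in weights:
        local = toy_layer_grad(x, w)
        if residual:
            grad *= 1.0 + local          # y = x + F(x)  =>  dy/dx = 1 + F'(x)
            x = x + toy_layer(x, w)
        else:
            grad *= local                # y = F(x)      =>  dy/dx = F'(x)
            x = toy_layer(x, w)
    return grad


weights = [0.1] * 20                      # 20 layers with small (hypothetical) weights
plain = input_gradient(0.5, weights, residual=False)
res = input_gradient(0.5, weights, residual=True)
print(f"plain: {plain:.2e}, residual: {res:.2f}")
```

With these settings, the plain product shrinks to the order of 1e-20. The residual product stays above 1 because every factor 1 + F'(x) is at least 1 here.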

Because HSI data are three-dimensional, current methods suffer from a certain degree of spatial or spectral information loss. To address this, 3D-CNNs are widely used for HSI classification [32,33,34,35]. Chen et al. [32] propose a regularized 3D-CNN feature extraction model that uses regularization and virtual sample augmentation to mitigate overfitting and improve classification performance. Seydgar et al. [33] suggest an integrated model that combines a CNN with a convolutional LSTM (CLSTM) module. This module treats adjacent pixels as a sequence for recurrent processing and makes full use of vector-based and sequence-based learning to generate deep semantic spectral–spatial features. Rao et al. [34] develop a 3D adaptive spatial–spectral pyramid layer CNN model (ASSP-SCNN), which fully mines spatial and spectral information; in addition, training the network with variable-sized samples increases scale invariance and reduces overfitting. In [35], the authors suggest a deep CNN (DCNN) scheme that combines an improved cost function with a support vector machine (SVM) during network training. It adds class separation information to the cross-entropy cost function to promote within-class compactness and between-class separability during feature learning. Among these methods [32,33,34,35], the best results on the IN, UP, and SA datasets, 99.19%, 99.87%, and 98.88%, respectively, are achieved by [33]. However, despite their attractive accuracy, CNN-based solutions impose a high computational burden and increase the number of network parameters; the models proposed in [33] and [35] require 50 and 100 epochs to converge, respectively. To solve this problem, quite a few algorithms extract spatial and spectral features separately and introduce the attention mechanism for HSI classification [36,37,38,39,40,41]. For example, Zhu et al. [36] propose an end-to-end residual spectral–spatial attention network (RSSAN), which adaptively selects spatial and spectral information; by learning feature weights, this module enhances the features that are useful for classification. Haut et al. [37] introduce the attention mechanism into the residual network (ResNet), suggesting a new visual attention-driven technique that considers bottom-up and top-down visual factors to improve the feature extraction ability of the network. Wu et al. [38] develop a 3D-CNN-based residual group channel and spatial attention network (RGCSA) for HSI classification. It combines bottom-up and top-down attention structures with residual connections, making full use of context information to optimize features in the spatial dimension and focus on the most informative areas. This method achieved 99.87% and 100% overall classification accuracy on the IN and UP datasets, respectively. Li et al. [39] design a joint spatial–spectral attention network (JSSAN) that simultaneously captures long-range interdependencies of spatial and spectral data through similarity assessment. It also adaptively emphasizes informative land-cover features and spectral bands. Mou et al. [40] introduce a learnable spectral attention module into the network; it uses the global spectral–spatial context to generate a series of spectral gates reflecting the importance of each spectral band. Qing et al. [41] propose a multi-scale residual network with an attention mechanism (MSRN), which uses an improved residual network and a spatial–spectral attention module to extract hyperspectral image information at different scales multiple times. MSRN fully integrates the spatial–spectral features of the image and achieves good HSI classification results. Among these methods [36,37,38,39,40,41], the best result on the SA dataset, 99.85%, is achieved by [37].

Although CNN models achieve good results on the HSI classification problem, they still have several shortcomings. First, HSI classification is a pixel-level task, and because ground objects have irregular shapes, a typical convolution kernel cannot capture all the features [42]. Second, the small size of convolution kernels limits the CNN's receptive field, so it cannot match hyperspectral features over their entire bandwidth. This limits how deeply CNNs can be exploited, and the requirements on convolution kernels vary greatly across classification tasks. Owing to the high spectral dimensionality of HSI, exploiting long-range sequential dependencies between distant spectral bands is not trivial. It is difficult for CNN-based HSI classification to design context-specific convolution kernels that capture all the spectral features.
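The standard receptive-field recurrence for stacked convolutions makes the receptive-field limitation concrete. The sketch below assumes identical 1D kernels sliding along the spectral axis. It uses an illustrative cube of 200 bands, which is not a value taken from this paper, and counts how many stride-1 layers are needed before one output sees every band. By contrast, a single self-attention layer relates every band position to every other one.

```python
def receptive_field(num_layers: int, kernel_size: int, stride: int = 1) -> int:
    """Receptive field (in input positions) of a stack of identical 1D convolutions."""
    rf, jump = 1, 1
    for _ in range(num_layers):
        rf += (kernel_size - 1) * jump   # each layer widens the window by (k - 1) input steps
        jump *= stride                   # spacing between adjacent outputs, in input positions
    return rf


def layers_to_cover(num_bands: int, kernel_size: int) -> int:
    """Smallest number of stride-1 layers whose receptive field spans all bands."""
    layers = 0
    while receptive_field(layers, kernel_size) < num_bands:
        layers += 1
    return layers


bands = 200                              # illustrative band count
print(layers_to_cover(bands, 3))         # 100
```

With kernel size 3 and stride 1, the window grows by only two bands per layer, so 100 layers are needed to span 200 bands.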

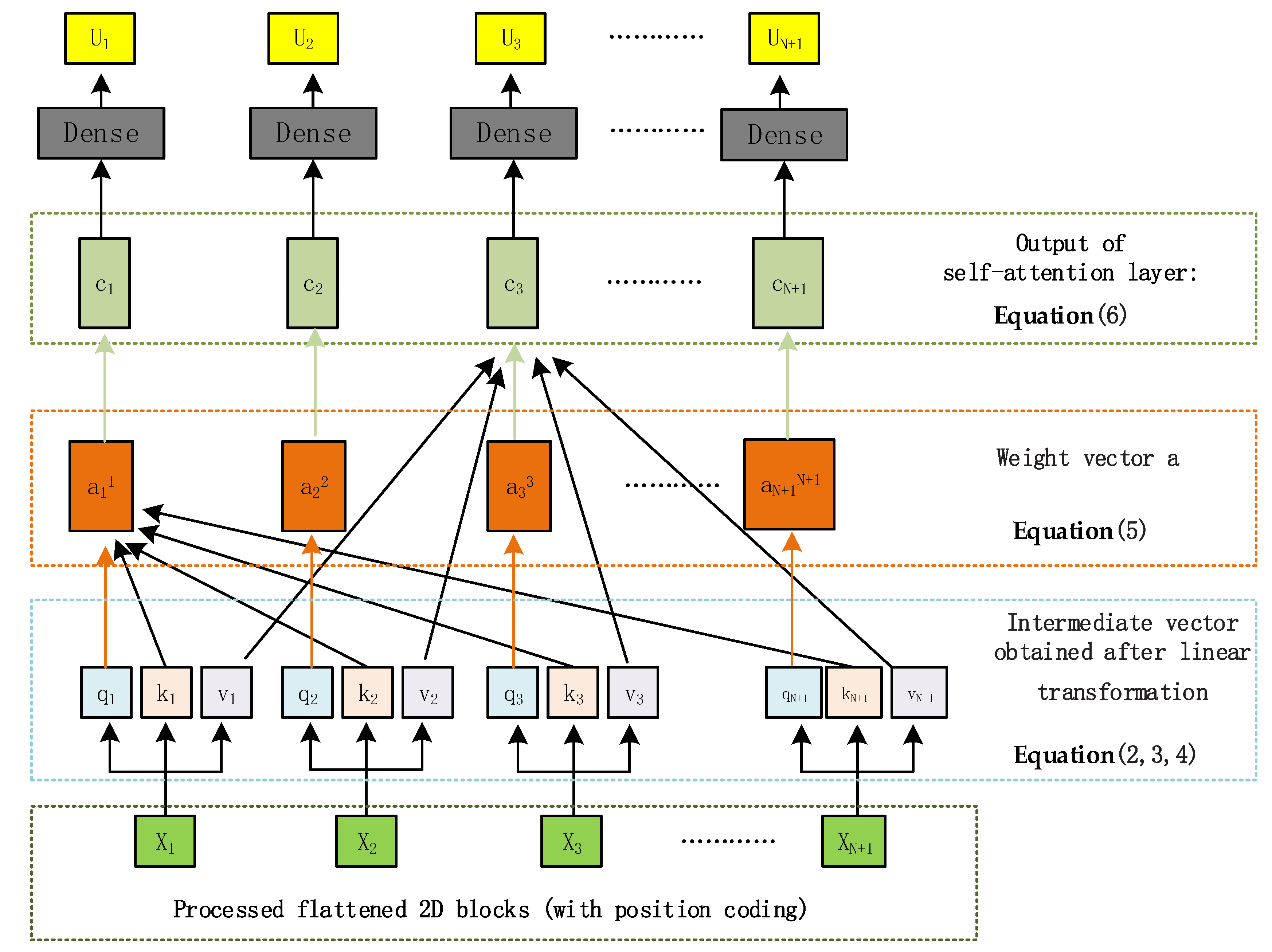

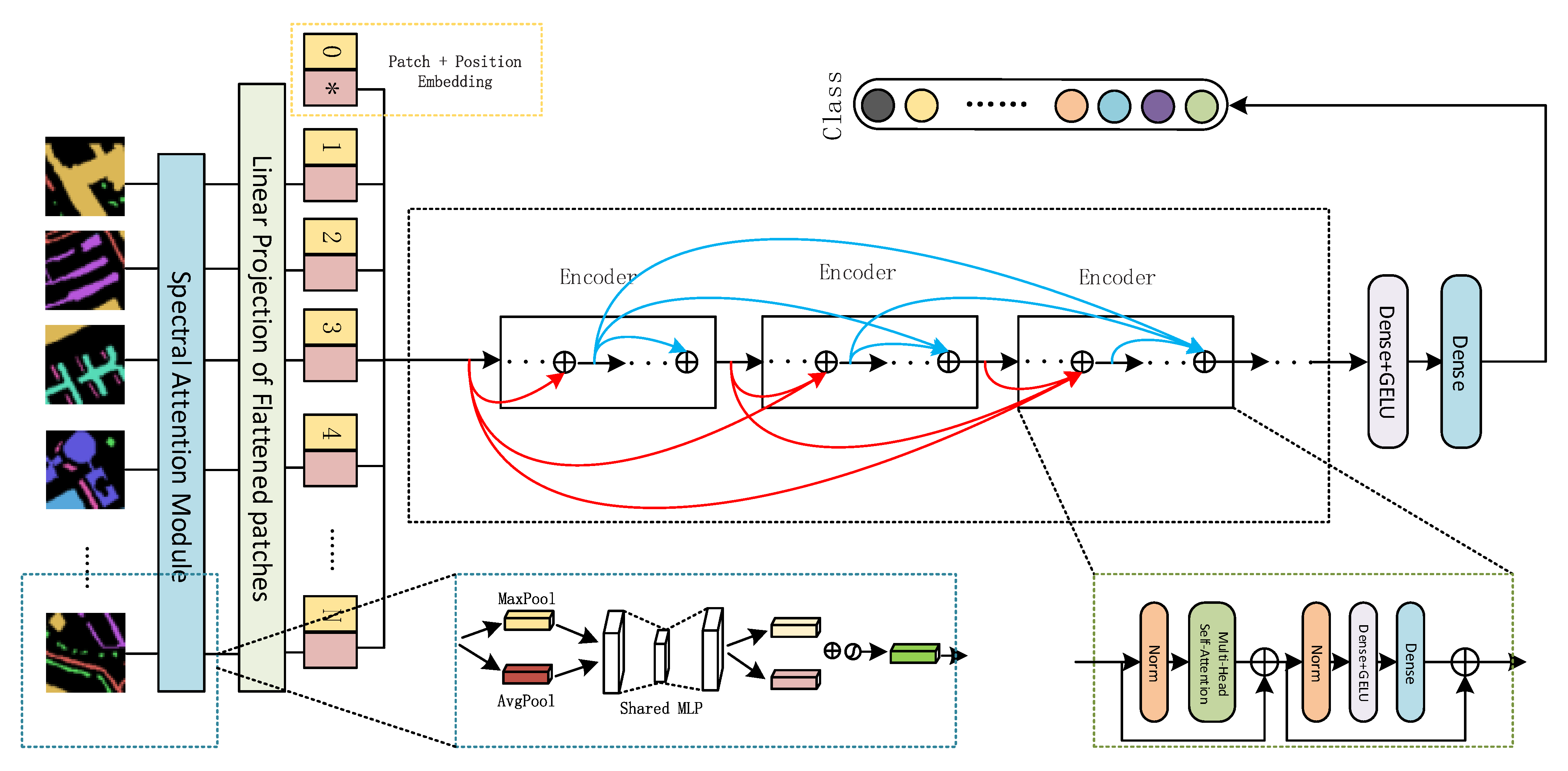

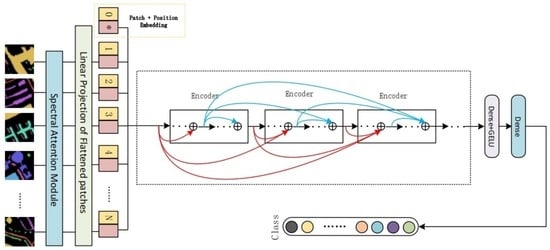

Spurred by the above problems, this paper proposes a self-attention-based transformer (SAT) model for HSI classification. The transformer was originally developed for natural language processing (NLP) [43,44,45,46,47], where it achieved great success and attracted significant attention. Transformer models have since been successfully applied to computer vision tasks such as image recognition [48], object detection [49], image super-resolution [50], and video understanding [51]. In this work, the proposed SAT Net first uses a spectral attention module to process the original HSI data into a sequence of flattened 2D patches, and then feeds the linear embeddings of these patches to the transformer model. Image features are extracted by multi-head self-attention combined with a residual structure. Owing to these core components, our model effectively addresses the gradient explosion problem. Experiments on three public HSI datasets against current methods demonstrate the strong classification performance of SAT Net.
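The input stage described above can be sketched as follows. This is a minimal NumPy sketch under stated assumptions, not the authors' implementation. A squeeze-and-excitation-style band gating stands in for the spectral attention module: global average pooling, a ReLU bottleneck with reduction ratio R, and sigmoid gates. The patches are non-overlapping P x P tiles. All sizes, weight names, and the random positional embedding are hypothetical.

```python
import numpy as np


def spectral_attention(cube: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Reweight the bands of an (H, W, B) cube with squeeze-and-excitation-style gates.

    Assumed stand-in for SAT Net's spectral attention module, not the paper's design.
    """
    squeezed = cube.mean(axis=(0, 1))               # (B,) global average of each band
    hidden = np.maximum(squeezed @ w1, 0.0)         # (B // R,) bottleneck with ReLU
    gates = 1.0 / (1.0 + np.exp(-(hidden @ w2)))    # (B,) sigmoid gates in (0, 1)
    return cube * gates                             # broadcast gates over H and W


def to_patch_sequence(cube: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, B) cube into non-overlapping patch x patch tiles, each flattened."""
    h, w, b = cube.shape
    if h % patch or w % patch:
        raise ValueError("H and W must be divisible by the patch size")
    tiles = cube.reshape(h // patch, patch, w // patch, patch, b)
    tiles = tiles.transpose(0, 2, 1, 3, 4)          # (H/P, W/P, P, P, B)
    return tiles.reshape(-1, patch * patch * b)     # (N, P*P*B), tiles in row-major order


def linear_embedding(seq: np.ndarray, w_embed: np.ndarray, pos: np.ndarray) -> np.ndarray:
    """Project each flattened tile to D dimensions and add a positional embedding."""
    return seq @ w_embed + pos


rng = np.random.default_rng(0)
H, W, B, P, D, R = 8, 8, 30, 4, 64, 4              # hypothetical sizes
cube = rng.normal(size=(H, W, B))
w1 = rng.normal(scale=0.1, size=(B, B // R))
w2 = rng.normal(scale=0.1, size=(B // R, B))
weighted = spectral_attention(cube, w1, w2)
seq = to_patch_sequence(weighted, P)
w_embed = rng.normal(scale=0.02, size=(P * P * B, D))
pos = rng.normal(scale=0.02, size=(seq.shape[0], D))
tokens = linear_embedding(seq, w_embed, pos)
print(seq.shape, tokens.shape)                      # (4, 480) (4, 64)
```

Because the gates lie in (0, 1), the spectral step can only attenuate bands, never amplify them. The token count N equals (H/P)(W/P), so smaller patches trade longer sequences for finer spatial granularity.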

The main contributions of this work are as follows:

Our network uses both a spectral attention module and a self-attention module to extract feature information, avoiding feature information loss.

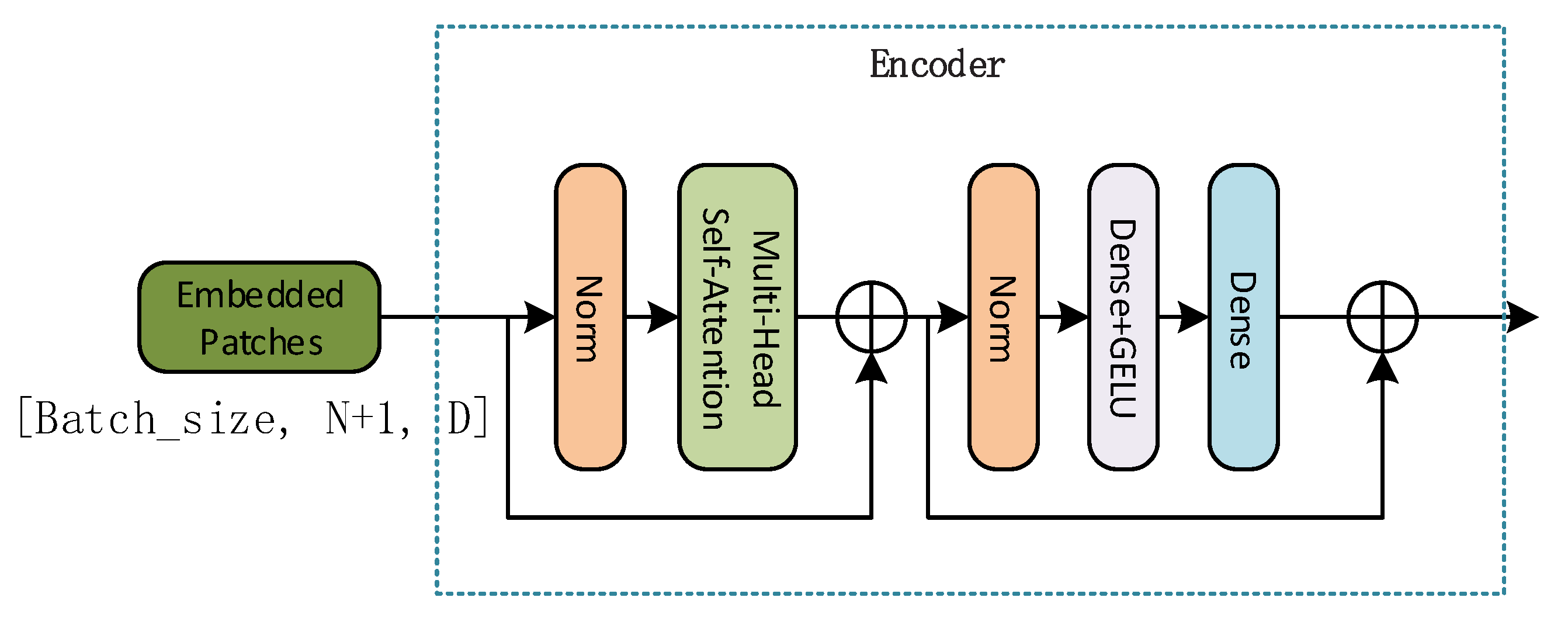

The core of our network is an encoder block with multi-head self-attention, which successfully handles long-distance dependencies among the spectral bands of hyperspectral image data.

In our SAT Net model, multiple encoder blocks are directly connected through a multi-level residual structure, which effectively avoids the information loss caused by stacking multiple sub-modules.

Our proposed SAT Net is interpretable, enhancing its HSI feature extraction capability and increasing its generalization ability.

Experimental evaluation on HSI classification against five current methods highlights the effectiveness of the proposed SAT Net model.
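The encoder block and its stacking can be sketched in NumPy as a standard pre-norm transformer encoder. Each block applies layer normalization, then multi-head scaled dot-product self-attention with a residual connection, then a GELU MLP with a second residual connection. This sketch rests on several assumptions. The layer ordering, the GELU activation, the weight initialization, and all sizes are hypothetical choices. SAT Net's multi-level residual wiring between blocks is not reproduced here.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    # Normalize each token over its feature dimension (no learnable scale/shift here).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def gelu(x):
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)          # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, p, num_heads):
    n, d = x.shape
    dh = d // num_heads

    def split(t):                                     # (N, D) -> (heads, N, D / heads)
        return t.reshape(n, num_heads, dh).transpose(1, 0, 2)

    q, k, v = split(x @ p["wq"]), split(x @ p["wk"]), split(x @ p["wv"])
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(dh)   # (heads, N, N): every token vs every token
    attn = softmax(scores, axis=-1)
    out = (attn @ v).transpose(1, 0, 2).reshape(n, d) # concatenate the heads
    return out @ p["wo"], attn


def encoder_block(x, p, num_heads):
    h, attn = multi_head_self_attention(layer_norm(x), p, num_heads)
    x = x + h                                         # residual around attention
    x = x + gelu(layer_norm(x) @ p["w1"]) @ p["w2"]   # residual around the MLP
    return x, attn


def init_block(d, mlp_dim, rng):
    shapes = {"wq": (d, d), "wk": (d, d), "wv": (d, d), "wo": (d, d),
              "w1": (d, mlp_dim), "w2": (mlp_dim, d)}
    return {name: rng.normal(scale=1.0 / np.sqrt(s[0]), size=s) for name, s in shapes.items()}


def encoder(x, blocks, num_heads):
    attn = None
    for p in blocks:                                  # plain sequential stacking of blocks
        x, attn = encoder_block(x, p, num_heads)
    return x, attn


rng = np.random.default_rng(0)
N, D, HEADS, MLP, DEPTH = 16, 64, 4, 128, 3          # hypothetical sizes
tokens = rng.normal(size=(N, D))
blocks = [init_block(D, MLP, rng) for _ in range(DEPTH)]
features, attn = encoder(tokens, blocks, HEADS)
print(features.shape, attn.shape)                     # (16, 64) (4, 16, 16)
```

Each row of an attention matrix is a softmax over all N tokens. In a single layer, every token can therefore draw on every other token, and the long-range dependency argument relies on this property.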

The remainder of this article is organized as follows.

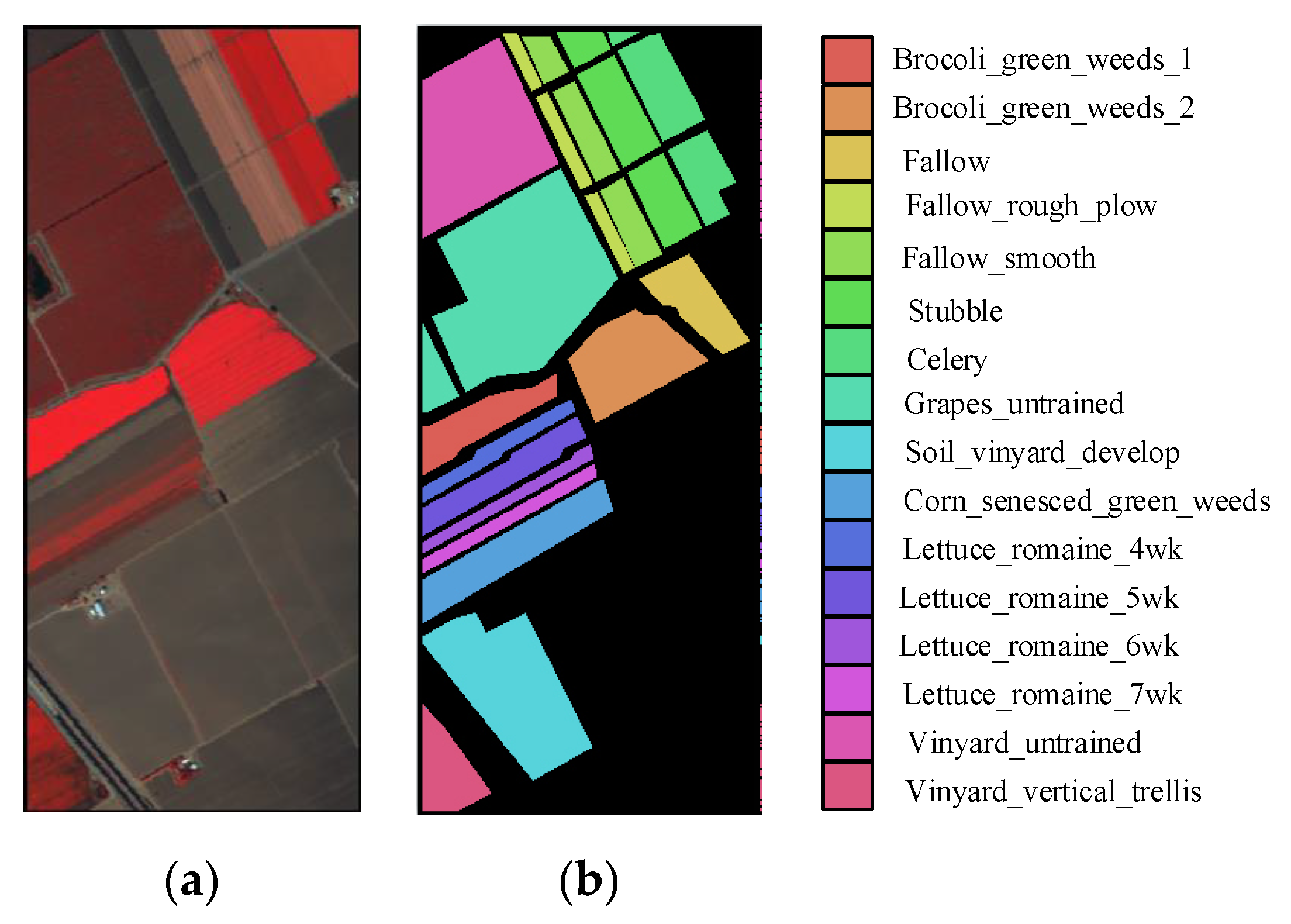

Section 2 introduces in detail the multi-head self-attention, the encoder block, the spectral attention, and the overall architecture of the proposed SAT Net.

Section 3 analyzes the influence of each SAT Net hyperparameter and compares SAT Net against five current methods. Finally, Section 4 summarizes this work.

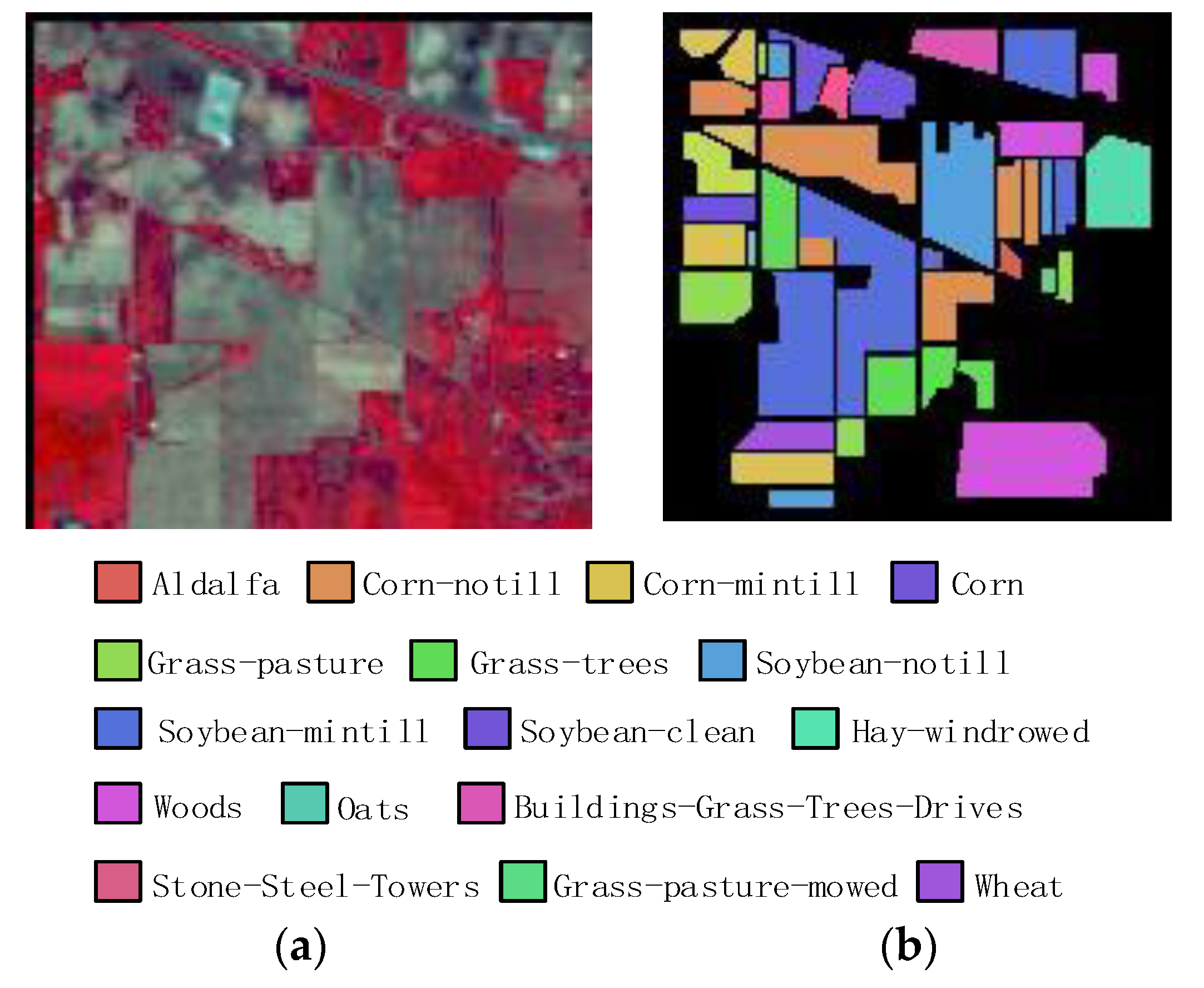

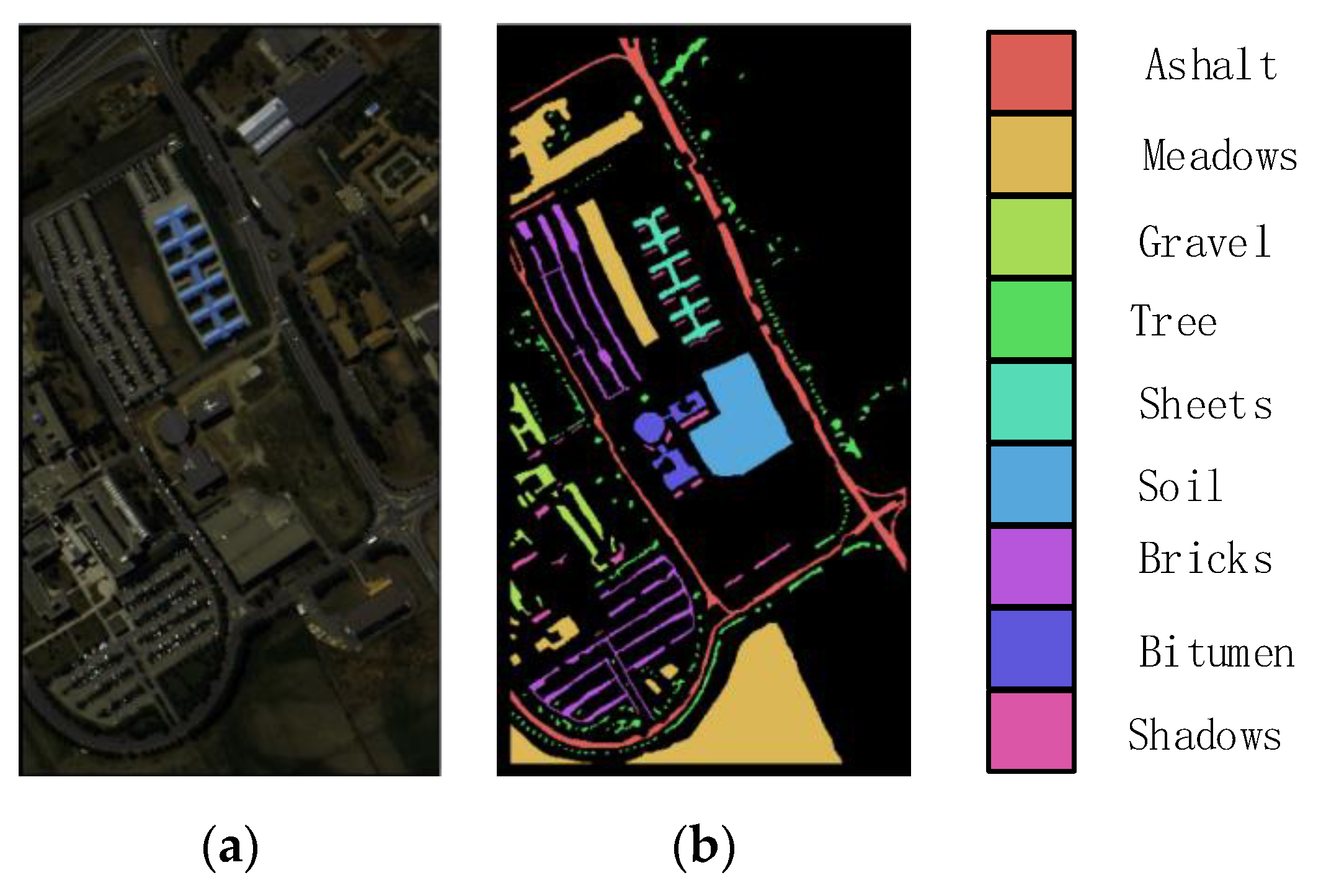

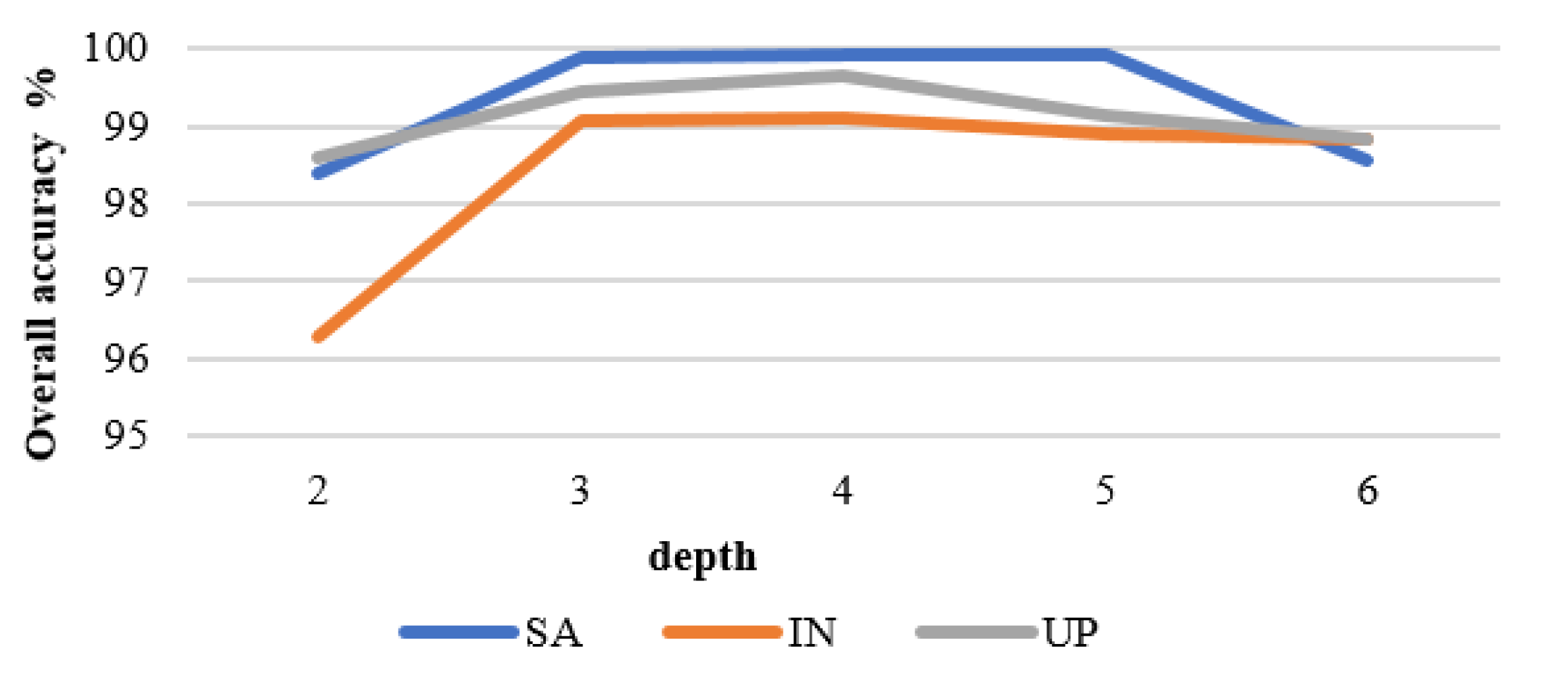

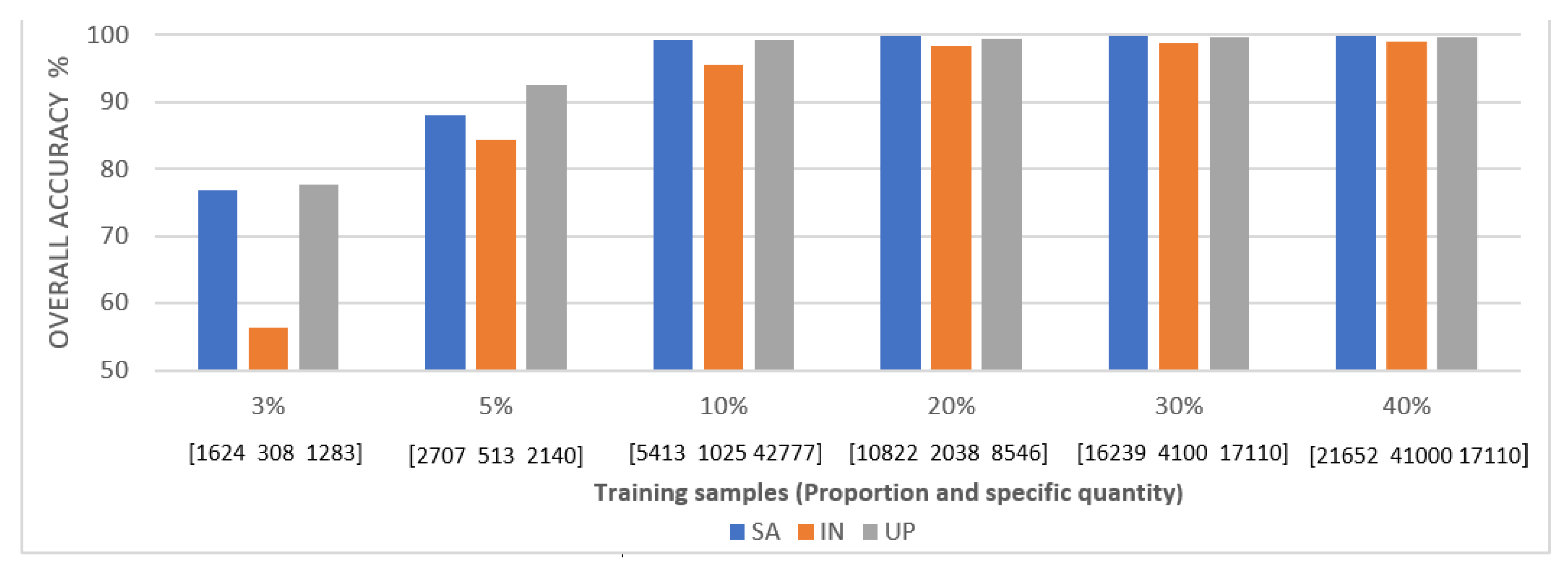

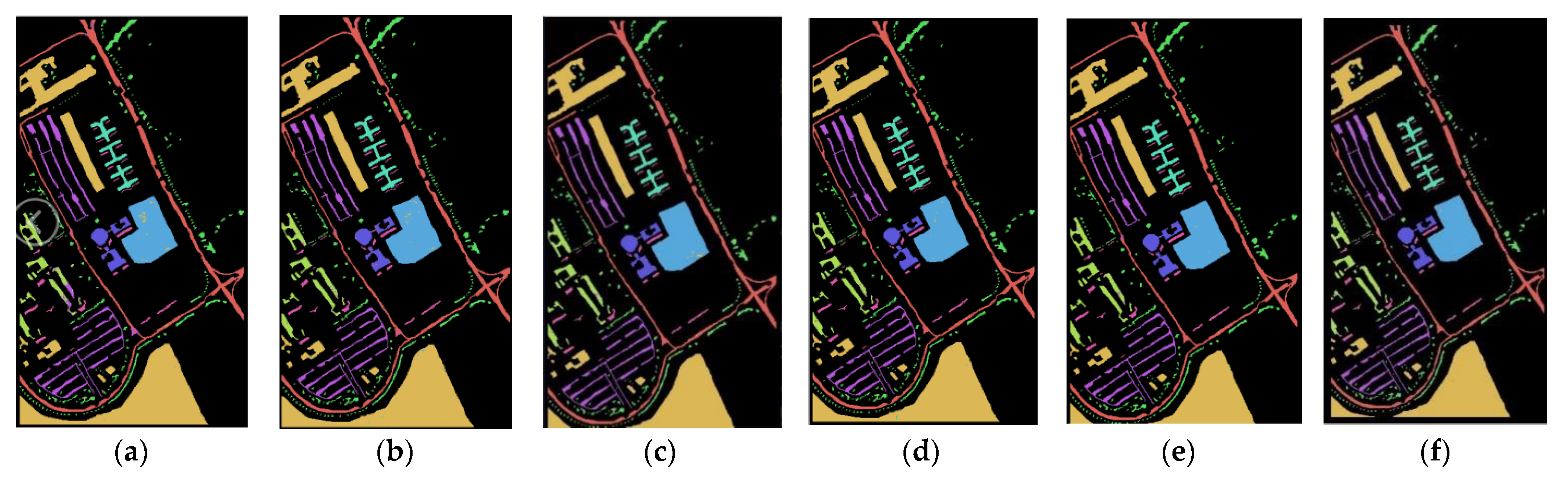

4. Conclusions

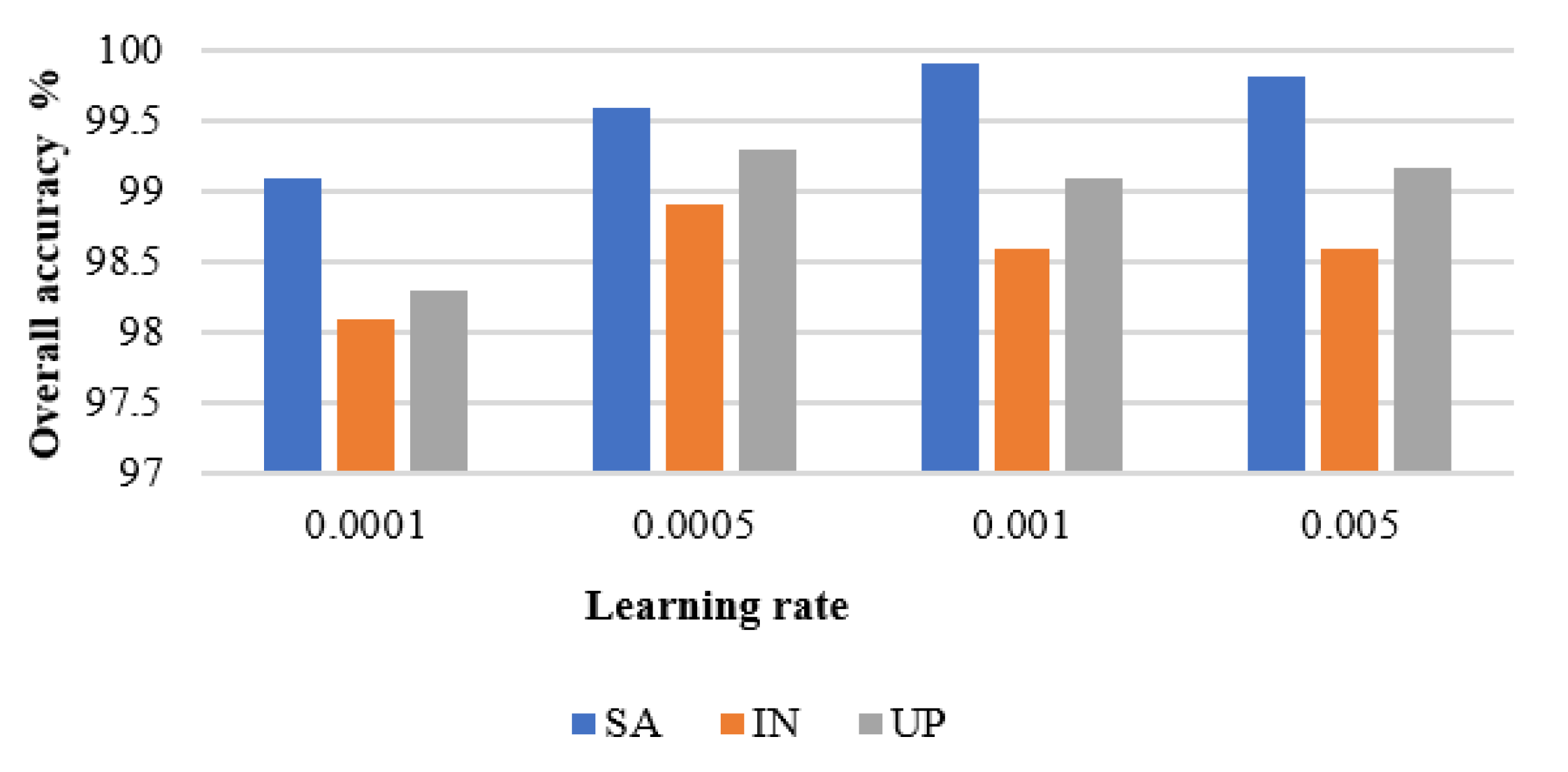

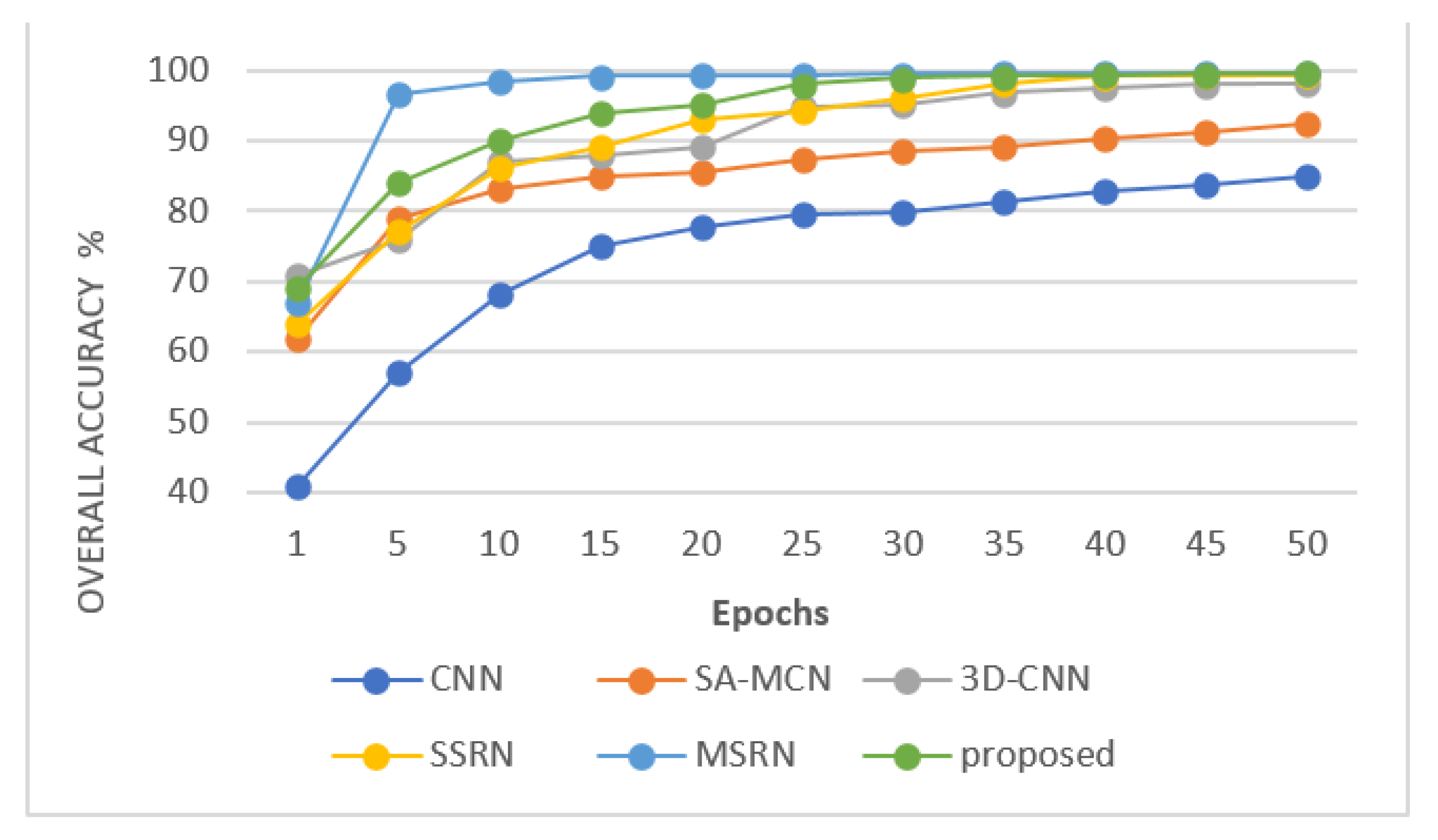

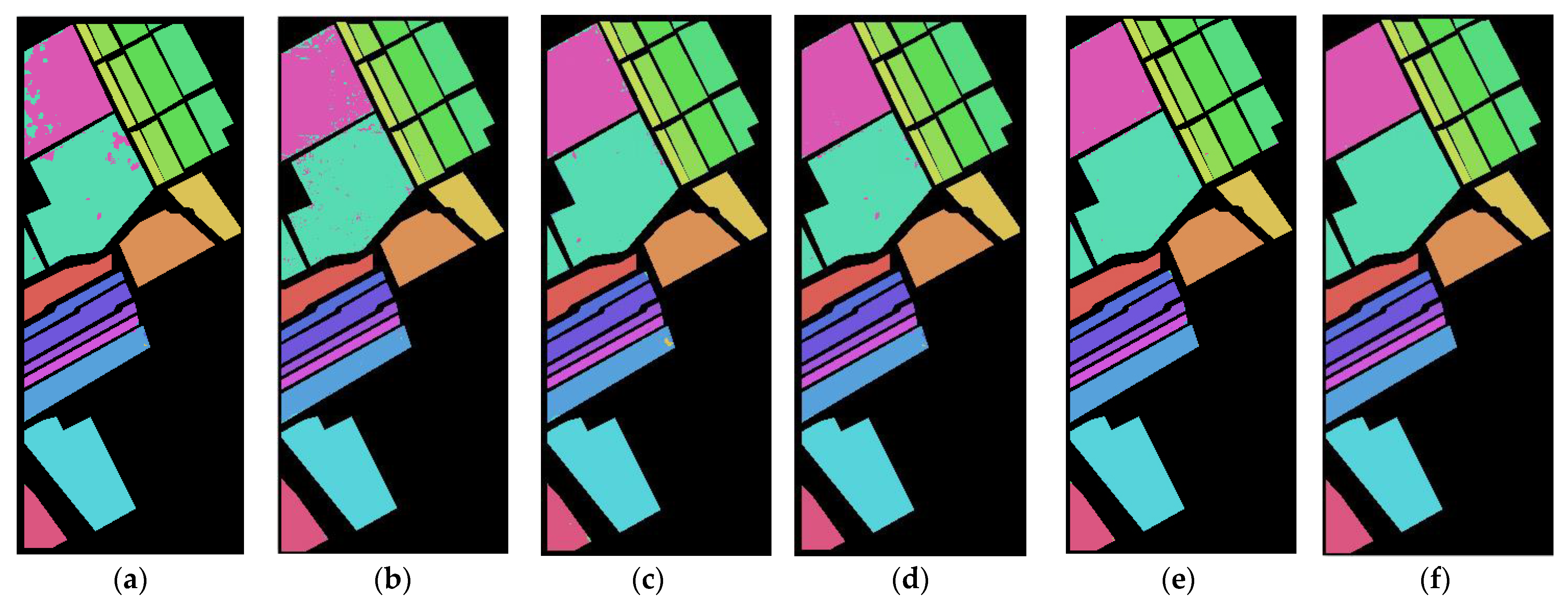

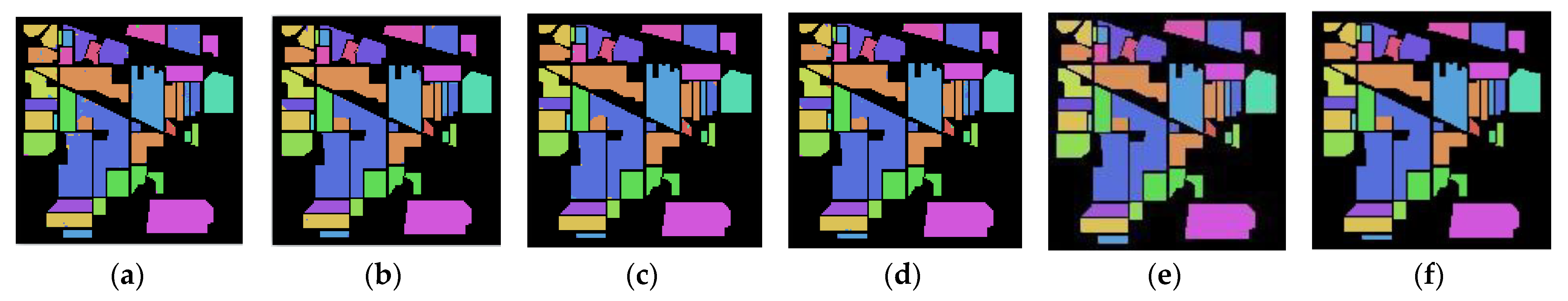

This article proposes SAT Net, a deep learning model for HSI classification. Our technique successfully applies a transformer to HSI processing and offers a new strategy for HSI classification. Specifically, we first process the HSI data into a linear embedding sequence and then use the spectral attention module and the multi-head self-attention module to extract image features. The latter module captures long-distance dependencies across the HSI spectral bands. By dispensing with convolution, it also avoids the information loss that typical convolution kernels cause when processing irregularly shaped objects. Overall, SAT Net combines multi-head self-attention with linear mapping, regularization, activation functions, and other operations to form an encoder block with a residual structure. To improve the performance of SAT Net, we stack multiple encoder blocks to form the main structure of our model. We verified the effectiveness of the proposed model through two experiments on three publicly available datasets. The first experiment analyzes the influence of our model's hyperparameters, such as image size, training set ratio, and learning rate, on the overall classification performance. The second experiment compares the proposed model against current classification methods. Compared with CNN, SA-MCN, 3D-CNN, and SSRN on the three public datasets, SAT Net achieved better overall accuracy (OA), average accuracy (AA), and Kappa coefficient. Compared with MSRN, SAT Net achieved better results on the SA dataset and comparable classification performance on the UP dataset, whereas it is slightly inferior to MSRN on the IN dataset. However, SAT Net uses less convolution (only in the spectral attention module) to reach this performance. Compared with other methods, it provides a novel idea for HSI classification.
In addition, SAT Net better handles the long-distance dependence of HSI spectral information. On the three public datasets, i.e., SA, IN, and UP, the proposed method achieved an overall accuracy of 99.91%, 99.22%, and 99.64% and an average accuracy of 99.63%, 99.08%, and 99.67%, respectively. The IN dataset has a small number of samples and an uneven class distribution, so the classification performance of SAT Net on it still needs improvement. In the future, we will study methods such as data augmentation, weighted loss functions, and model optimization to improve the classification of small-sample hyperspectral data.
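The OA, AA, and Kappa metrics follow their usual definitions. OA is the fraction of correctly classified samples. AA is the mean of the per-class accuracies. Kappa corrects OA for chance agreement, estimated from the confusion matrix marginals. The two-class confusion matrix below is invented for illustration and is not taken from the experiments. It shows how a poorly classified minority class drags AA and Kappa well below OA. This is one reason why uneven class distributions, such as the one noted for IN, are hard.

```python
def classification_metrics(confusion: list[list[int]]) -> tuple[float, float, float]:
    """Return (OA, AA, Kappa); confusion[i][j] counts true class i predicted as class j."""
    k = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(k))
    oa = correct / total                                         # overall accuracy
    per_class = [confusion[i][i] / sum(confusion[i]) for i in range(k)]
    aa = sum(per_class) / k                                      # mean per-class accuracy
    row_totals = [sum(confusion[i]) for i in range(k)]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    pe = sum(r * c for r, c in zip(row_totals, col_totals)) / (total * total)  # chance agreement
    kappa = (oa - pe) / (1.0 - pe)
    return oa, aa, kappa


# Invented example: a large class (100 samples) and a small class (10 samples).
oa, aa, kappa = classification_metrics([[90, 10], [5, 5]])
print(f"OA={oa:.4f} AA={aa:.4f} Kappa={kappa:.4f}")
```

In this example, OA is about 0.864, while AA is 0.7 and Kappa is 16/49 (about 0.327). The errors on the 10-sample class barely move OA but strongly affect the other two metrics.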