Abstract

We propose a new constrained optimization approach to hyperspectral (HS) image restoration. Most existing methods restore a desirable HS image by solving an optimization problem consisting of a regularization term(s) and a data-fidelity term(s). Because these methods handle the regularization and data-fidelity terms simultaneously in one objective function, the hyperparameter(s) balancing these terms must be carefully controlled. However, setting such hyperparameters is often troublesome because their suitable values depend strongly on the regularization terms adopted and the noise intensities in a given observation. Our proposed method is formulated as a convex optimization problem that uses a novel hybrid regularization technique named Hybrid Spatio-Spectral Total Variation (HSSTV) and incorporates data-fidelity as hard constraints. HSSTV has a strong noise and artifact removal ability while avoiding oversmoothing and spectral distortion, without being combined with other regularizations such as low-rank modeling-based ones. In addition, the constraint-type data-fidelity enables us to translate the hyperparameters that balance regularization and data-fidelity into upper bounds on the degree of data-fidelity, which can be set in a much easier manner. We also develop an efficient algorithm based on the alternating direction method of multipliers (ADMM) to solve the optimization problem. Through comprehensive experiments, we illustrate the advantages of the proposed method over various HS image restoration methods, including state-of-the-art ones.

1. Introduction

Hyperspectral (HS) imagery contains 1D spectral information, including invisible light, at narrow wavelength intervals, in addition to 2D spatial information, and can thus visualize unseen intrinsic characteristics of scene objects and environmental lighting. This makes HS imaging a key technique in many applications in various fields, e.g., earth observation, agriculture, and medical and biological imaging [1,2,3].

Observed HS images are often affected by noise because of the small amount of light in each narrow wavelength band and/or sensor failure. In addition, in compressive HS imaging scenarios [4,5], a full HS image has to be estimated from a small number of measurements. Thus, HS applications require methods for restoring desirable HS images from such degraded observations.

Most HS image restoration methods are based on optimization: a desirable HS image is characterized as a solution to an optimization problem consisting of a regularization term and a data-fidelity term. The regularization term evaluates a priori knowledge about the underlying properties of HS images, and the data-fidelity term keeps consistency with a given observation. Thanks to this design, these methods obtain reasonable results in the ill-posed or ill-conditioned scenarios typical of HS image restoration.

Regularization techniques for HS image restoration are roughly classified into two groups: total variation (TV)-based approaches and low-rank modeling (LRM)-based approaches. TV models the total absolute magnitude of local differences to exploit the piecewise-smooth structures of an image. Many TV-based approaches [6,7,8,9] have been proposed for HS image restoration. LRM-based approaches [10,11], in contrast, exploit the underlying low-rank structure in the spectral direction of an HS image. A popular example is low-rank matrix recovery (LRMR) [10].

Many recent methods [9,12,13,14,15,16,17,18,19,20,21,22] combine TV-based and LRM-based approaches and, in general, perform better than approaches using only one of them. This is because TV-based approaches model the spatial structure of an HS image, whereas LRM-based approaches model the spectral structure. Naturally, these methods have to handle multiple regularization terms and a data-fidelity term(s) simultaneously in one objective function, so they must carefully control the hyperparameter(s) balancing these terms. Moreover, such hyperparameters are interdependent: a suitable value of one hyperparameter varies depending on the regularization terms used and the noise intensities in a given observation. Hence, setting the hyperparameters in such combined approaches is often troublesome. Table 1 summarizes the features of the methods reviewed in this section.

Table 1.

Features of existing methods for hyperspectral (HS) image restoration.

Based on the above discussion, we propose a new constrained convex optimization approach to HS image restoration. Our proposed method restores a desirable HS image by solving a convex optimization problem involving a new TV-based regularization and hard constraints on data-fidelity. The regularization, named Hybrid Spatio-Spectral Total Variation (HSSTV), is designed to evaluate two types of local differences, namely direct local spatial differences and local spatio-spectral differences, in a unified manner to effectively exploit both the underlying spatial and spectral structures of an HS image. Thanks to this design, HSSTV has a strong noise and artifact removal ability while avoiding oversmoothing and spectral distortion without combining LRM. Moreover, the constraint-type data-fidelity in the proposed method enables us to translate interdependent hyperparameters into upper bounds on the degree of data-fidelity, which can be determined based only on the noise intensity. As a result, the proposed method has no interdependent hyperparameters. We also develop an efficient algorithm for solving the optimization problem based on the well-known alternating direction method of multipliers (ADMM) [23,24,25].

The remainder of the paper is organized as follows. Section 2 introduces notation and mathematical ingredients. Section 3 reviews existing methods related to our method. In Section 4, we define HSSTV, formulate HS image restoration as a convex optimization problem involving HSSTV and hard constraints on data-fidelity, and present an ADMM-based algorithm. Extensive experiments on denoising and compressed sensing (CS) reconstruction of HS images are given in Section 5, where we illustrate the advantages of our method over several state-of-the-art methods. In Section 6, we discuss the impact of the parameters in our proposed method. Section 7 concludes the paper. Preliminary versions of this work were published in conference proceedings [26,27] with only limited experiments and discussions. In this paper, we additionally conduct real noise removal experiments (see Section 5.2) and provide much deeper discussions of the performance, utility, and parameter settings of the proposed method.

2. Preliminaries

2.1. Notation and Definitions

In this paper, let $\mathbb{R}$ be the set of real numbers. We use boldface lowercase and capital letters to represent vectors and matrices, respectively, and $:=$ to define something. We denote the transpose of a vector/matrix by $(\cdot)^\top$ and the Euclidean norm (the $\ell_2$ norm) of a vector by $\|\cdot\|_2$.

For notational convenience, we treat an HS image as a vector $\mathbf{u} \in \mathbb{R}^{NB}$ ($N$ is the number of pixels in each band, and $B$ is the number of bands) by stacking its columns on top of one another, i.e., the index of the component of the $i$th pixel in the $k$th band is $N(k-1)+i$ (for $i = 1, \dots, N$ and $k = 1, \dots, B$).
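As a quick check of this indexing convention, the following NumPy sketch flattens a small cube column by column within each band and then band by band. It confirms that the $i$th pixel of the $k$th band lands at position $N(k-1)+i$, shifted to 0-based indexing. The toy sizes and the helper `vec_index` are hypothetical.

```python
import numpy as np

# Hypothetical toy sizes: a 3 x 4 pixel image with 2 bands (N = 12, B = 2).
n_rows, n_cols, n_bands = 3, 4, 2
N = n_rows * n_cols
cube = np.arange(N * n_bands, dtype=float).reshape(n_rows, n_cols, n_bands)

# Column-major (Fortran) flattening stacks the columns of each band, then the bands.
u = cube.reshape(-1, order="F")


def vec_index(i, k, N):
    """0-based position in u of the i-th pixel (1-based) of the k-th band (1-based)."""
    return N * (k - 1) + (i - 1)


# Pixels are numbered down the columns, so (row, col) -> i = row + n_rows * col + 1.
row, col, k = 2, 3, 2
i = row + n_rows * col + 1
print(u[vec_index(i, k, N)], cube[row, col, k - 1])
```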

2.2. Proximal Tools

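A function $f: \mathbb{R}^N \to (-\infty, \infty]$ is called proper lower semicontinuous convex if $\mathrm{dom}(f) := \{\mathbf{x} \in \mathbb{R}^N \mid f(\mathbf{x}) < \infty\} \neq \emptyset$, $\mathrm{lev}_{\alpha}(f) := \{\mathbf{x} \in \mathbb{R}^N \mid f(\mathbf{x}) \leq \alpha\}$ is closed for every $\alpha \in \mathbb{R}$, and $f(\lambda\mathbf{x} + (1-\lambda)\mathbf{y}) \leq \lambda f(\mathbf{x}) + (1-\lambda) f(\mathbf{y})$ for every $\mathbf{x}, \mathbf{y} \in \mathbb{R}^N$ and $\lambda \in (0,1)$, respectively. Let $\Gamma_0(\mathbb{R}^N)$ be the set of all proper lower semicontinuous convex functions on $\mathbb{R}^N$.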

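The proximity operator [28] plays a central role in convex optimization based on proximal splitting. The proximity operator of $f \in \Gamma_0(\mathbb{R}^N)$ with an index $\gamma > 0$ is defined by

$$\mathrm{prox}_{\gamma f}(\mathbf{x}) := \operatorname*{argmin}_{\mathbf{y} \in \mathbb{R}^N} f(\mathbf{y}) + \frac{1}{2\gamma}\|\mathbf{y} - \mathbf{x}\|_2^2.$$
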
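We introduce the indicator function of a nonempty closed convex set $C \subset \mathbb{R}^N$, which is defined as follows:

$$\iota_C(\mathbf{x}) := \begin{cases} 0, & \text{if } \mathbf{x} \in C,\\ \infty, & \text{otherwise}.\end{cases}$$

Then, for any $\gamma > 0$, its proximity operator is given by

$$\mathrm{prox}_{\gamma\iota_C}(\mathbf{x}) = P_C(\mathbf{x}) := \operatorname*{argmin}_{\mathbf{y} \in C}\|\mathbf{x} - \mathbf{y}\|_2,$$

where $P_C$ is the metric projection onto $C$.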

2.3. Alternating Direction Method of Multipliers (ADMM)

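ADMM [23,24,25] is a popular proximal splitting method, and it can solve convex optimization problems of the form:

$$\min_{\mathbf{x}, \mathbf{z}} f(\mathbf{x}) + g(\mathbf{z}) \quad \text{s.t.} \quad \mathbf{z} = \mathbf{G}\mathbf{x}, \tag{1}$$

where $f \in \Gamma_0(\mathbb{R}^{N_1})$, $g \in \Gamma_0(\mathbb{R}^{N_2})$, and $\mathbf{G} \in \mathbb{R}^{N_2 \times N_1}$. Here, we assume that $f$ is quadratic, $g$ is proximable, i.e., the proximity operator of $g$ is computable in an efficient manner, and $\mathbf{G}$ is a full-column rank matrix. For arbitrarily chosen $\mathbf{z}^{(0)}$, $\mathbf{d}^{(0)}$ and a step size $\gamma > 0$, ADMM iterates the following steps:

$$\begin{aligned}
\mathbf{x}^{(n+1)} &= \operatorname*{argmin}_{\mathbf{x}} f(\mathbf{x}) + \frac{1}{2\gamma}\|\mathbf{z}^{(n)} - \mathbf{G}\mathbf{x} - \mathbf{d}^{(n)}\|_2^2,\\
\mathbf{z}^{(n+1)} &= \mathrm{prox}_{\gamma g}(\mathbf{G}\mathbf{x}^{(n+1)} + \mathbf{d}^{(n)}),\\
\mathbf{d}^{(n+1)} &= \mathbf{d}^{(n)} + \mathbf{G}\mathbf{x}^{(n+1)} - \mathbf{z}^{(n+1)}.
\end{aligned} \tag{2}$$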
The convergence property of ADMM is given as follows.

Theorem 1

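(Convergence of ADMM [24]). Consider Problem (1), and assume that $\mathbf{G}^\top\mathbf{G}$ is invertible and that a saddle point of its unaugmented Lagrangian $\mathcal{L}_0(\mathbf{x}, \mathbf{z}, \mathbf{y}) := f(\mathbf{x}) + g(\mathbf{z}) - \langle \mathbf{y}, \mathbf{G}\mathbf{x} - \mathbf{z}\rangle$ exists. A triplet $(\hat{\mathbf{x}}, \hat{\mathbf{z}}, \hat{\mathbf{y}})$ is a saddle point of an unaugmented Lagrangian if and only if $\mathcal{L}_0(\hat{\mathbf{x}}, \hat{\mathbf{z}}, \mathbf{y}) \leq \mathcal{L}_0(\hat{\mathbf{x}}, \hat{\mathbf{z}}, \hat{\mathbf{y}}) \leq \mathcal{L}_0(\mathbf{x}, \mathbf{z}, \hat{\mathbf{y}})$ for any $(\mathbf{x}, \mathbf{z}, \mathbf{y})$. Then the sequence $(\mathbf{x}^{(n)})_{n \in \mathbb{N}}$ generated by (2) converges to an optimal solution to Problem (1).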
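To make iteration (2) concrete, here is a minimal NumPy sketch on a toy instance of Problem (1), chosen only because its solution is known in closed form. It takes $f(\mathbf{x}) = \frac{1}{2}\|\mathbf{x} - \mathbf{b}\|_2^2$ (quadratic), $g = \lambda\|\cdot\|_1$ (proximable), and $\mathbf{G} = \mathbf{I}$, whose minimizer is the soft-thresholding of $\mathbf{b}$. The function names and parameter values are illustrative assumptions, not part of the method.

```python
import numpy as np


def soft_threshold(x, t):
    """Proximity operator of t * ||.||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)


def admm_toy(b, lam, gamma=1.0, n_iter=500):
    """Iteration (2) for min 0.5||x - b||^2 + lam * ||z||_1 s.t. z = x (G = I)."""
    z = np.zeros_like(b)
    d = np.zeros_like(b)
    for _ in range(n_iter):
        # x-step: argmin_x 0.5||x - b||^2 + (1 / (2 gamma))||z - x - d||^2 (closed form).
        x = (gamma * b + z - d) / (gamma + 1.0)
        # z-step: prox of gamma * g evaluated at G x + d.
        z = soft_threshold(x + d, gamma * lam)
        # d-step: accumulate the constraint residual G x - z.
        d = d + x - z
    return x


b = np.array([3.0, -0.2, 0.5, -2.5, 1.2])
x = admm_toy(b, lam=1.0)
print(np.round(x, 6))
```

Because $f$ is strongly convex here, the iterates approach $\mathrm{sgn}(b_i)\max\{|b_i| - \lambda, 0\}$ quickly. For general $f$ and $g$, Theorem 1 only guarantees convergence, not a rate.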

3. Related Works

In this section, we elaborate on existing HS image restoration methods based on optimization.

3.1. TV-Based Methods

The methods proposed in [6,7,8] restore a desirable HS image by solving a convex optimization problem involving TV-based regularization. Let $\bar{\mathbf{u}} \in \mathbb{R}^{NB}$ be the desirable HS image. The authors assume that an observation $\mathbf{v} \in \mathbb{R}^{NB}$ is modeled as follows:

$$\mathbf{v} = \bar{\mathbf{u}} + \mathbf{n} + \mathbf{s},$$

where $\mathbf{n}$ and $\mathbf{s}$ are additive white Gaussian noise and sparse noise, respectively. Here, the sparse noise heavily corrupts only a few pixels of the HS image, e.g., impulse noise, salt-and-pepper noise, and line noise. The restoration problem of these methods is given by the following form:

$$\min_{\mathbf{u}, \mathbf{s}} \|\mathbf{u} + \mathbf{s} - \mathbf{v}\|_2^2 + \lambda R(\mathbf{u}) + \mu\|\mathbf{s}\|_1, \tag{3}$$

where $R$ is a regularization function based on TV, and $\lambda > 0$ and $\mu > 0$ are hyperparameters. The first and third terms evaluate data-fidelity with respect to the Gaussian and sparse noise, respectively, and $\lambda$ and $\mu$ represent the priorities of the terms. If suitable values of the hyperparameters are chosen, this formulation yields high-quality restoration. However, the hyperparameters are interdependent: their suitable values vary depending on the TV-based regularization term used and the noise intensities in a given observation. Therefore, setting the hyperparameters is an essential but troublesome task.

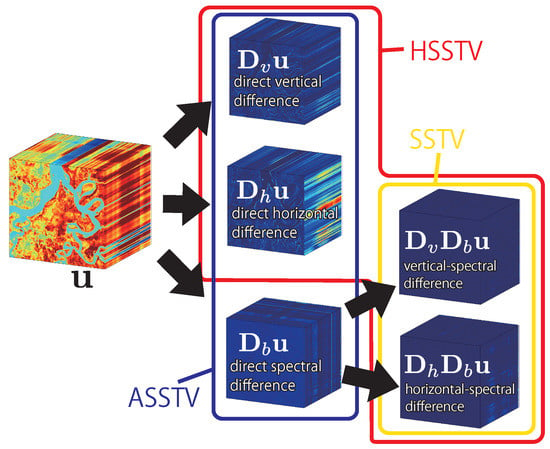

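In the following, we explain each TV. Let $\mathbf{D} := (\mathbf{D}_v^\top\ \mathbf{D}_h^\top)^\top \in \mathbb{R}^{2NB \times NB}$ be the spatial difference operator, with $\mathbf{D}_v$ and $\mathbf{D}_h$ being the vertical and horizontal difference operators, respectively, and let $\mathbf{D}_b \in \mathbb{R}^{NB \times NB}$ be the spectral difference operator. In [6,7,8], HTV, ASSTV, and SSTV are defined as follows:

$$\mathrm{HTV}(\mathbf{u}) := \|\mathbf{D}\mathbf{u}\|_{1,2}, \tag{4}$$

$$\mathrm{ASSTV}(\mathbf{u}) := \tau_v\|\mathbf{D}_v\mathbf{u}\|_1 + \tau_h\|\mathbf{D}_h\mathbf{u}\|_1 + \tau_b\|\mathbf{D}_b\mathbf{u}\|_1, \tag{5}$$

$$\mathrm{SSTV}(\mathbf{u}) := \|\mathbf{D}\mathbf{D}_b\mathbf{u}\|_1, \tag{6}$$
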
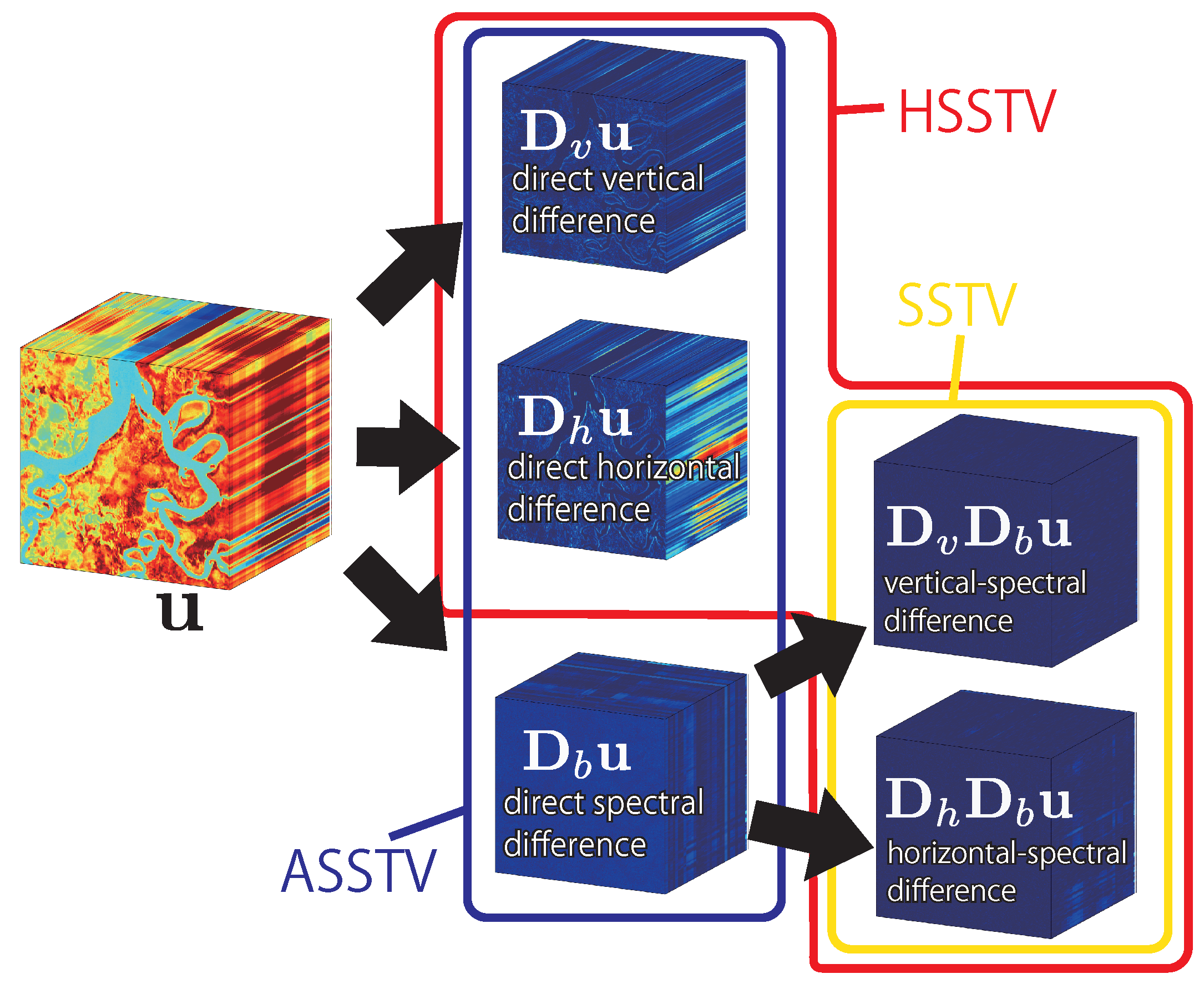

where $\|\cdot\|_{1,2}$ is a TV norm, which takes the $\ell_2$ norm of the spatial difference vectors over all bands at each pixel and then sums them up over all spatial pixels, and $\tau_v$, $\tau_h$, and $\tau_b$ are nonnegative weights. HTV evaluates direct spatial piecewise-smoothness and can be seen as a generalization of the standard color TV [29]. Since HTV does not consider spectral correlation, it causes spatial oversmoothing. To account for spectral correlation, the authors of [6] proposed SSAHTV, a weighted HTV whose weights are determined by spectral information. However, since SSAHTV does not directly evaluate spectral correlation, it still causes spatial oversmoothing. ASSTV evaluates direct spatial and spectral piecewise-smoothness (Figure 1, blue line). The weights $\tau_v$, $\tau_h$, and $\tau_b$ in (5) balance the smoothness of the vertical, horizontal, and spectral differences, respectively. Owing to this definition, ASSTV can evaluate spatial and spectral correlation, but it produces spectral oversmoothing even if $\tau_v$, $\tau_h$, and $\tau_b$ are carefully adjusted. SSTV evaluates a priori knowledge on HS images through spatio-spectral piecewise-smoothness: it calculates spatial differences of the spectral differences (Figure 1, yellow line). SSTV can restore a desirable HS image without any weight, but it produces noise-like artifacts, especially when a given observation is contaminated by heavy noise and/or degradation.
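The three regularizers can be evaluated on a small cube with a few lines of NumPy. This sketch makes two assumptions for illustration: forward differences with a periodic boundary (via `np.roll`), and storage of the cube as a (rows, columns, bands) array rather than the stacked vector. On a vertical edge that is identical in every band, SSTV is zero while HTV and ASSTV are not. This shows that SSTV only measures spatial changes of the spectral differences.

```python
import numpy as np


# Forward differences with a periodic boundary (an assumption for this sketch).
def diff_v(u):  # vertical: axis 0
    return np.roll(u, -1, axis=0) - u


def diff_h(u):  # horizontal: axis 1
    return np.roll(u, -1, axis=1) - u


def diff_b(u):  # spectral: axis 2
    return np.roll(u, -1, axis=2) - u


def htv(u):
    # l2 norm of the vertical/horizontal differences over all bands at each pixel, summed over pixels.
    return np.sqrt((diff_v(u) ** 2 + diff_h(u) ** 2).sum(axis=2)).sum()


def asstv(u, tau_v=1.0, tau_h=1.0, tau_b=1.0):
    return (tau_v * np.abs(diff_v(u)).sum()
            + tau_h * np.abs(diff_h(u)).sum()
            + tau_b * np.abs(diff_b(u)).sum())


def sstv(u):
    # l1 norm of the spatial differences of the spectral differences.
    ub = diff_b(u)
    return np.abs(diff_v(ub)).sum() + np.abs(diff_h(ub)).sum()


# Toy cube (4 x 4 pixels, 3 bands) with a vertical edge identical in every band.
u = np.zeros((4, 4, 3))
u[:, 2:, :] = 1.0
print(htv(u), asstv(u), sstv(u))
```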

Figure 1.

Calculation of local differences in SSTV, ASSTV, and our HSSTV. SSTV evaluates the $\ell_1$ norm of spatio-spectral differences (yellow line). ASSTV evaluates the $\ell_1$ norm of direct spatial and spectral differences (blue line). HSSTV evaluates the $\ell_{1,p}$ mixed norm of direct spatial and spatio-spectral differences (red line).

3.2. LRM-Based Method

LRMR [10] is one of the popular LRM-based methods for HS image restoration, which evaluates the low rankness of an HS image in the spectral direction. To preserve local details, LRMR restores a desirable HS image through patch-wise processing. Each patch is a local cube of size $q \times q \times B$, and LRMR handles it as a matrix of size $q^2 \times B$ obtained by lexicographically arranging the spatial vectors in the patch cube in the row direction. The observation model is the same as in Section 3.1, and the restoration problem is formulated as follows:

$$\min_{\mathbf{U}_{i,j}, \mathbf{S}_{i,j}} \|\mathbf{V}_{i,j} - \mathbf{U}_{i,j} - \mathbf{S}_{i,j}\|_F^2 \quad \text{s.t.} \quad \mathrm{rank}(\mathbf{U}_{i,j}) \leq r, \ \mathrm{card}(\mathbf{S}_{i,j}) \leq k, \tag{7}$$

where $\mathbf{U}_{i,j}$, $\mathbf{V}_{i,j}$, and $\mathbf{S}_{i,j}$ represent the patches of a restored HS image, an observation, and a sparse noise, respectively, centered at the $(i,j)$ pixel; $\|\cdot\|_F$ is the Frobenius norm, $\mathrm{rank}(\cdot)$ is the rank function, and $\mathrm{card}(\cdot)$ is the cardinality function. The method evaluates the low rankness of the estimated HS image and the sparsity of the sparse noise by bounding the rank of $\mathbf{U}_{i,j}$ and the cardinality of $\mathbf{S}_{i,j}$ by $r$ and $k$, respectively. Thanks to this design, LRMR achieves high-quality restoration, especially of spectral information. Meanwhile, since LRMR does not fully consider spatial correlation, its results tend to have spatial artifacts when an observation is corrupted by heavy noise and/or degradation. Besides, the rank and cardinality functions are nonconvex, so finding a globally optimal solution of Problem (7) is a troublesome task.

3.3. Combined Method

The methods [9,12,13,14,15,16,17,18,19,20,21,22,30] combine TV-based and LRM-based approaches. Since they can evaluate multiple types of a priori knowledge, i.e., piecewise-smoothness and low rankness, they can restore more desirable HS images than approaches using only TV-based or LRM-based regularization. Some methods [9,12,14,15,17,18,19,20] approximate the rank and cardinality functions by their convex surrogates. As a result, their restoration problems are convex and can be solved by optimization methods based on proximal splitting.

However, these methods have to handle multiple regularization terms and/or a data-fidelity term(s) simultaneously in one objective function, so the hyperparameters balancing these terms must be carefully controlled. Since suitable hyperparameter values depend on both the regularizations and the noise intensity in an observation, i.e., the hyperparameters are interdependent, setting them is often troublesome.

4. Proposed Method

4.1. Hybrid Spatio-Spectral Total Variation

We propose a new regularization technique for HS image restoration, named HSSTV. HSSTV simultaneously handles both direct local spatial differences and local spatio-spectral differences of an HS image. HSSTV is defined by

$$\mathrm{HSSTV}(\mathbf{u}) := \|\mathbf{A}_\omega\mathbf{u}\|_{1,p}, \quad \text{where} \quad \mathbf{A}_\omega := \begin{pmatrix}\mathbf{D}\mathbf{D}_b\\ \omega\mathbf{D}\end{pmatrix}, \tag{8}$$

$\|\cdot\|_{1,p}$ is the $\ell_{1,p}$ mixed norm, and $\omega \geq 0$. We assume $p = 1$ or $2$, i.e., the $\ell_1$ norm ($p = 1$) or the $\ell_{1,2}$ mixed norm ($p = 2$). We note that $\ell_1$-HSSTV ($p = 1$) can be seen as anisotropic HSSTV and $\ell_{1,2}$-HSSTV ($p = 2$) as isotropic HSSTV.

In (8), $\mathbf{D}\mathbf{D}_b\mathbf{u}$ and $\mathbf{D}\mathbf{u}$ correspond to local spatio-spectral and direct local spatial differences, respectively, as shown in Figure 1 (red lines). The weight $\omega$ adjusts the relative importance of direct spatial piecewise-smoothness to spatio-spectral piecewise-smoothness. HSSTV evaluates these two kinds of smoothness by taking the $\ell_p$ norm ($p = 1$ or $2$) of the differences associated with each pixel and then summing over all pixels, i.e., calculating the $\ell_1$ norm; hence, it can be defined via the $\ell_{1,p}$ mixed norm. When we set $p = 1$ and $\omega = 0$, HSSTV reduces to SSTV in (6), meaning that HSSTV can be seen as a generalization of SSTV.
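Definition (8) can be evaluated directly. The sketch below assumes periodic forward differences and stores $\mathbf{A}_\omega\mathbf{u}$ as a (4, rows, columns, bands) array. For $p = 2$ it groups the four differences of each voxel (pixel in a band). The grouping, the boundary, and the names are our assumptions for illustration. The sketch also makes it easy to check that $\omega = 0$ and $p = 1$ recover SSTV.

```python
import numpy as np


def dv(u): return np.roll(u, -1, axis=0) - u   # vertical forward difference (periodic)
def dh(u): return np.roll(u, -1, axis=1) - u   # horizontal forward difference
def db(u): return np.roll(u, -1, axis=2) - u   # spectral forward difference


def apply_A(u, omega):
    """A_omega u: spatio-spectral differences D D_b u stacked on omega * D u."""
    ub = db(u)
    return np.stack([dv(ub), dh(ub), omega * dv(u), omega * dh(u)])


def hsstv(u, omega, p=1):
    x = apply_A(u, omega)
    if p == 1:
        return np.abs(x).sum()                       # anisotropic: plain l1 norm
    if p == 2:
        return np.sqrt((x ** 2).sum(axis=0)).sum()   # isotropic: l2 over the 4 components per voxel
    raise ValueError("p must be 1 or 2")


rng = np.random.default_rng(1)
u = rng.random((5, 6, 4))
print(hsstv(u, 0.05, p=1), hsstv(u, 0.05, p=2))
```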

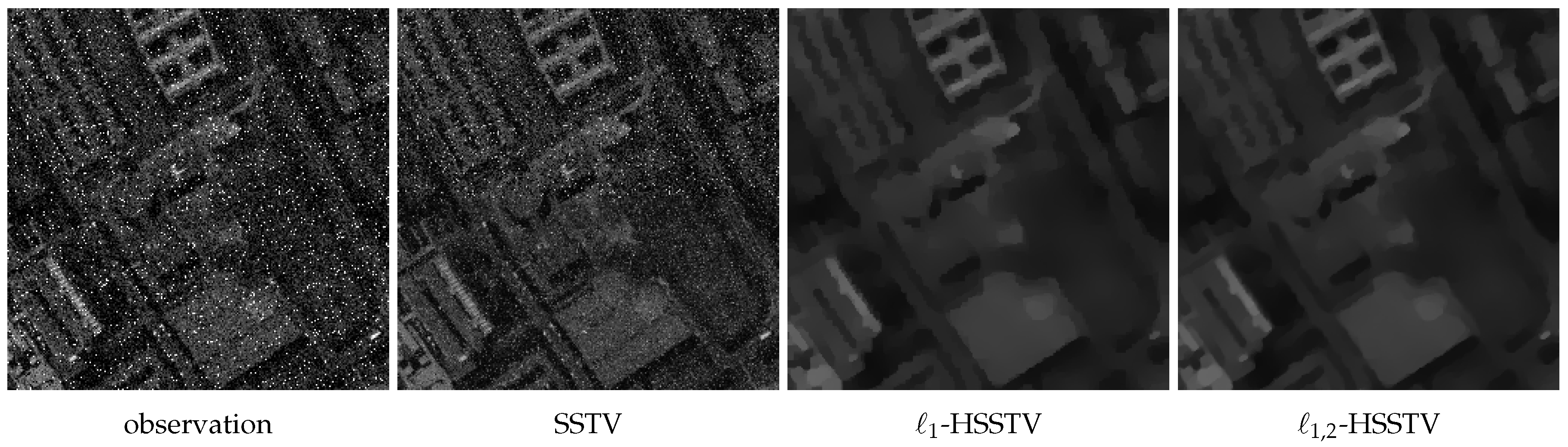

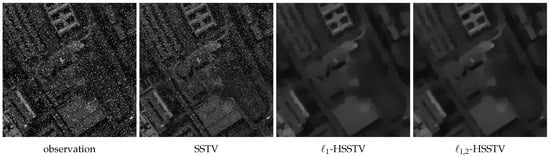

As reviewed in Section 3, SSTV evaluates only spatio-spectral piecewise-smoothness. Since taking spectral differences nearly cancels noise that is similar in adjacent bands, SSTV cannot remove such noise. The direct spatial differences in HSSTV help to remove it. Figure 2 shows HS images restored from an observation contaminated by noise that is similar in adjacent bands (the upper half area) and random noise (the lower half area). One can see that large noise remains in the upper half area of the result by SSTV, whereas HSSTV effectively removes all of the noise. However, since minimizing the direct spatial differences strongly promotes spatial piecewise-smoothness, HSSTV produces spatial oversmoothing when the weight $\omega$ is large. Thus, $\omega$ should be set to less than one, as demonstrated in Section 5.

Figure 2.

Restored HS images from an observation contaminated by similar noise in adjacent bands (the upper half area) and random noise (the lower half).

4.2. HS Image Restoration by HSSTV

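We consider restoring a desirable HS image from an observation contaminated by Gaussian-sparse mixed noise. The observation model is given by the following form:

$$\mathbf{v} = \boldsymbol{\Phi}\bar{\mathbf{u}} + \mathbf{n} + \mathbf{s}, \tag{9}$$

where $\boldsymbol{\Phi} \in \mathbb{R}^{M \times NB}$ is a matrix representing a linear observation process, e.g., random sampling, $\mathbf{n}$ is Gaussian noise with standard deviation $\sigma$, and $\mathbf{s}$ is sparse noise.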

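Based on the above model, we formulate HS image restoration using HSSTV as the following optimization problem:

$$\min_{\mathbf{u}, \mathbf{s}} \mathrm{HSSTV}(\mathbf{u}) \quad \text{s.t.} \quad \begin{cases}\boldsymbol{\Phi}\mathbf{u} + \mathbf{s} \in B_{2,\varepsilon}^{\mathbf{v}},\\ \mathbf{s} \in B_{1,\eta},\\ \mathbf{u} \in [\mu_{\min}, \mu_{\max}]^{NB},\end{cases} \tag{10}$$

where $B_{2,\varepsilon}^{\mathbf{v}} := \{\mathbf{x} \in \mathbb{R}^M \mid \|\mathbf{x} - \mathbf{v}\|_2 \leq \varepsilon\}$ is the $\mathbf{v}$-centered $\ell_2$-norm ball with radius $\varepsilon > 0$, $B_{1,\eta} := \{\mathbf{x} \in \mathbb{R}^M \mid \|\mathbf{x}\|_1 \leq \eta\}$ is the $\mathbf{0}$-centered $\ell_1$-norm ball with radius $\eta > 0$, and $[\mu_{\min}, \mu_{\max}]^{NB}$ is the dynamic range of an HS image ($\mu_{\min} < \mu_{\max}$). This method simultaneously estimates the desirable HS image $\mathbf{u}$ and the sparse noise $\mathbf{s}$ for noise-robust restoration. The first constraint measures data-fidelity to the observation $\mathbf{v}$, and the second one enforces the sparsity of $\mathbf{s}$. As discussed in [13,16,26,31,32,33,34,35,36,37,38,39], such constraint-type data-fidelity enables us to translate the hyperparameter(s) balancing regularization and data-fidelity, like $\lambda$ and $\mu$ in (3), into upper bounds on the degree of data-fidelity, $\varepsilon$ and $\eta$, which can be set in a much easier manner.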

Since all constraints are closed convex sets and HSSTV is a convex function, Problem (10) is a constrained convex optimization problem. In this paper, we adopt ADMM (see Section 2.3) for solving the problem. In what follows, we reformulate Problem (10) into Problem (1).

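By using the indicator functions of the constraints, Problem (10) can be rewritten as

$$\min_{\mathbf{u}, \mathbf{s}, \mathbf{z}_1, \dots, \mathbf{z}_4} \|\mathbf{z}_1\|_{1,p} + \iota_{B_{2,\varepsilon}^{\mathbf{v}}}(\mathbf{z}_2) + \iota_{B_{1,\eta}}(\mathbf{z}_3) + \iota_{[\mu_{\min}, \mu_{\max}]^{NB}}(\mathbf{z}_4) \quad \text{s.t.} \quad \begin{cases}\mathbf{z}_1 = \mathbf{A}_\omega\mathbf{u},\\ \mathbf{z}_2 = \boldsymbol{\Phi}\mathbf{u} + \mathbf{s},\\ \mathbf{z}_3 = \mathbf{s},\\ \mathbf{z}_4 = \mathbf{u}.\end{cases} \tag{11}$$

Note that, by the definition of the indicator function, Problem (11) is equivalent to Problem (10). By letting

$$\mathbf{x} := \begin{pmatrix}\mathbf{u}\\ \mathbf{s}\end{pmatrix}, \quad f(\mathbf{x}) := 0, \quad g(\mathbf{z}) := \|\mathbf{z}_1\|_{1,p} + \iota_{B_{2,\varepsilon}^{\mathbf{v}}}(\mathbf{z}_2) + \iota_{B_{1,\eta}}(\mathbf{z}_3) + \iota_{[\mu_{\min}, \mu_{\max}]^{NB}}(\mathbf{z}_4), \quad \mathbf{G} := \begin{pmatrix}\mathbf{A}_\omega & \mathbf{O}\\ \boldsymbol{\Phi} & \mathbf{I}\\ \mathbf{O} & \mathbf{I}\\ \mathbf{I} & \mathbf{O}\end{pmatrix},$$

with $\mathbf{z} := (\mathbf{z}_1^\top, \mathbf{z}_2^\top, \mathbf{z}_3^\top, \mathbf{z}_4^\top)^\top$,
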
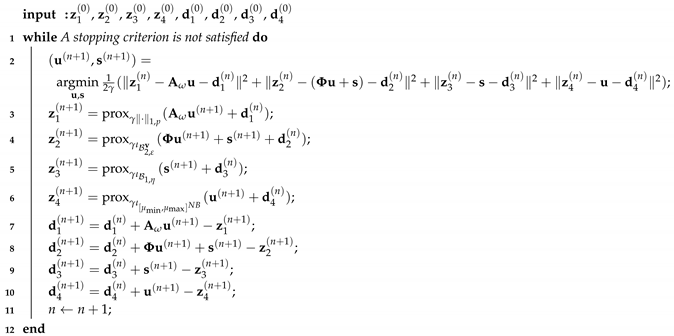

Problem (11) is reduced to Problem (1). The resulting algorithm based on ADMM is summarized in Algorithm 1.

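Algorithm 1: ADMM method for Problem (10)

Input: $\mathbf{z}_i^{(0)}$, $\mathbf{d}_i^{(0)}$ ($i = 1, \dots, 4$), and $\gamma > 0$.

Step 1: Set $n = 0$.

Step 2: $(\mathbf{u}^{(n+1)}, \mathbf{s}^{(n+1)}) = \operatorname*{argmin}_{\mathbf{u}, \mathbf{s}} \|\mathbf{z}_1^{(n)} - \mathbf{A}_\omega\mathbf{u} - \mathbf{d}_1^{(n)}\|_2^2 + \|\mathbf{z}_2^{(n)} - \boldsymbol{\Phi}\mathbf{u} - \mathbf{s} - \mathbf{d}_2^{(n)}\|_2^2 + \|\mathbf{z}_3^{(n)} - \mathbf{s} - \mathbf{d}_3^{(n)}\|_2^2 + \|\mathbf{z}_4^{(n)} - \mathbf{u} - \mathbf{d}_4^{(n)}\|_2^2$.

Step 3: $\mathbf{z}_1^{(n+1)} = \mathrm{prox}_{\gamma\|\cdot\|_{1,p}}(\mathbf{A}_\omega\mathbf{u}^{(n+1)} + \mathbf{d}_1^{(n)})$.

Step 4: $\mathbf{z}_2^{(n+1)} = P_{B_{2,\varepsilon}^{\mathbf{v}}}(\boldsymbol{\Phi}\mathbf{u}^{(n+1)} + \mathbf{s}^{(n+1)} + \mathbf{d}_2^{(n)})$.

Step 5: $\mathbf{z}_3^{(n+1)} = P_{B_{1,\eta}}(\mathbf{s}^{(n+1)} + \mathbf{d}_3^{(n)})$.

Step 6: $\mathbf{z}_4^{(n+1)} = P_{[\mu_{\min}, \mu_{\max}]^{NB}}(\mathbf{u}^{(n+1)} + \mathbf{d}_4^{(n)})$.

Step 7: $\mathbf{d}_1^{(n+1)} = \mathbf{d}_1^{(n)} + \mathbf{A}_\omega\mathbf{u}^{(n+1)} - \mathbf{z}_1^{(n+1)}$, $\mathbf{d}_2^{(n+1)} = \mathbf{d}_2^{(n)} + \boldsymbol{\Phi}\mathbf{u}^{(n+1)} + \mathbf{s}^{(n+1)} - \mathbf{z}_2^{(n+1)}$, $\mathbf{d}_3^{(n+1)} = \mathbf{d}_3^{(n)} + \mathbf{s}^{(n+1)} - \mathbf{z}_3^{(n+1)}$, and $\mathbf{d}_4^{(n+1)} = \mathbf{d}_4^{(n)} + \mathbf{u}^{(n+1)} - \mathbf{z}_4^{(n+1)}$.

Step 8: If a stopping criterion is satisfied, output $\mathbf{u}^{(n+1)}$; otherwise, set $n \leftarrow n + 1$ and go to Step 2.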

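The updates of $\mathbf{u}$ and $\mathbf{s}$ in Algorithm 1 come down to the following forms:

$$\mathbf{u}^{(n+1)} = \Big(\mathbf{A}_\omega^\top\mathbf{A}_\omega + \tfrac{1}{2}\boldsymbol{\Phi}^\top\boldsymbol{\Phi} + \mathbf{I}\Big)^{-1}\Big(\mathbf{A}_\omega^\top(\mathbf{z}_1^{(n)} - \mathbf{d}_1^{(n)}) + \tfrac{1}{2}\boldsymbol{\Phi}^\top(\mathbf{z}_2^{(n)} - \mathbf{d}_2^{(n)} - \mathbf{z}_3^{(n)} + \mathbf{d}_3^{(n)}) + \mathbf{z}_4^{(n)} - \mathbf{d}_4^{(n)}\Big),$$

$$\mathbf{s}^{(n+1)} = \tfrac{1}{2}\Big(\mathbf{z}_2^{(n)} - \mathbf{d}_2^{(n)} + \mathbf{z}_3^{(n)} - \mathbf{d}_3^{(n)} - \boldsymbol{\Phi}\mathbf{u}^{(n+1)}\Big).$$

Since the update of $\mathbf{u}$ and $\mathbf{s}$ in Algorithm 1 is a strictly convex quadratic minimization, these forms are obtained by setting its gradient to zero. Here, we should consider the structure of $\boldsymbol{\Phi}$ because it affects the matrix inversion in (15). If $\boldsymbol{\Phi}$ is a block-circulant-with-circulant-blocks (BCCB) matrix [40], we can leverage the 3D FFT to efficiently solve the inversion in Step 2 with the difference operators having a periodic boundary, i.e., $\mathbf{A}_\omega^\top\mathbf{A}_\omega + \frac{1}{2}\boldsymbol{\Phi}^\top\boldsymbol{\Phi} + \mathbf{I}$ can be diagonalized by the 3D FFT matrix and its inverse. If $\boldsymbol{\Phi}$ is a semiorthogonal matrix, i.e., $\boldsymbol{\Phi}\boldsymbol{\Phi}^\top = \mathbf{I}$, we leave it to the update of $\mathbf{z}_2$. This means that we replace $\boldsymbol{\Phi}$ by $\mathbf{I}$ in (13) and (14) and compute the update of $\mathbf{z}_2$ via the proximity operator of $\iota_{B_{2,\varepsilon}^{\mathbf{v}}} \circ \boldsymbol{\Phi}$. In this case, that proximity operator can be computed by using [41] (Table 1.1-x) as follows:

$$\mathrm{prox}_{\gamma\iota_{B_{2,\varepsilon}^{\mathbf{v}}} \circ \boldsymbol{\Phi}}(\mathbf{x}) = \mathbf{x} + \boldsymbol{\Phi}^\top\big(P_{B_{2,\varepsilon}^{\mathbf{v}}}(\boldsymbol{\Phi}\mathbf{x}) - \boldsymbol{\Phi}\mathbf{x}\big).$$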
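For the denoising case $\boldsymbol{\Phi} = \mathbf{I}$, setting the gradient of the quadratic step to zero leaves the matrix $\mathbf{A}_\omega^\top\mathbf{A}_\omega + \frac{3}{2}\mathbf{I}$ to invert. Under periodic forward differences, each difference operator is diagonalized by the 3D FFT, with $\mathbf{D}_*^\top\mathbf{D}_*$ having eigenvalues $2 - 2\cos(2\pi j/n)$. The inverse therefore reduces to a pointwise division in the Fourier domain. The following NumPy sketch implements this and checks it against a direct evaluation of the operator. The function names are hypothetical.

```python
import numpy as np


def dv(u): return np.roll(u, -1, axis=0) - u
def dh(u): return np.roll(u, -1, axis=1) - u
def db(u): return np.roll(u, -1, axis=2) - u
def dvT(y): return np.roll(y, 1, axis=0) - y   # adjoint of dv under a periodic boundary
def dhT(y): return np.roll(y, 1, axis=1) - y
def dbT(y): return np.roll(y, 1, axis=2) - y


def apply_normal_op(u, omega, c):
    """(A_omega^T A_omega + c I) u, evaluated with explicit differences."""
    ub = db(u)
    spatio_spectral = dbT(dvT(dv(ub)) + dhT(dh(ub)))
    spatial = dvT(dv(u)) + dhT(dh(u))
    return spatio_spectral + omega ** 2 * spatial + c * u


def diff_eigs(n):
    """Eigenvalues of D^T D for a periodic forward difference of length n."""
    return 2.0 - 2.0 * np.cos(2.0 * np.pi * np.arange(n) / n)


def solve_normal_eq(rhs, omega, c):
    """Solve (A_omega^T A_omega + c I) u = rhs by pointwise division in the 3D FFT domain."""
    n1, n2, n3 = rhs.shape
    lv = diff_eigs(n1)[:, None, None]
    lh = diff_eigs(n2)[None, :, None]
    lb = diff_eigs(n3)[None, None, :]
    eig = (lb + omega ** 2) * (lv + lh) + c   # all eigenvalues >= c > 0
    return np.real(np.fft.ifftn(np.fft.fftn(rhs) / eig))


rng = np.random.default_rng(2)
rhs = rng.standard_normal((6, 5, 4))
sol = solve_normal_eq(rhs, omega=0.05, c=1.5)   # c = 1/2 + 1 corresponds to Phi = I
print(np.max(np.abs(apply_normal_op(sol, 0.05, 1.5) - rhs)))
```

The FFT route costs $O(NB\log(NB))$ per iteration and never forms the matrix explicitly, which is what makes the $\mathbf{u}$-update cheap in the BCCB case.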

If $\boldsymbol{\Phi}$ is a sparse matrix, we suggest using a preconditioned conjugate gradient method [42] to approximately solve the inversion or applying primal-dual splitting methods [43,44,45] instead of ADMM (primal-dual splitting methods require no matrix inversion, but in general they converge more slowly than ADMM). Alternatively, randomized image restoration methods using stochastic proximal splitting algorithms [46,47,48,49] might be useful for reducing the computational cost.

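For the update of $\mathbf{z}_1$, the proximity operators reduce to simple soft-thresholding-type operations: for $\gamma > 0$ and $\mathbf{x} \in \mathbb{R}^{4NB}$, (i) in the case of $p = 1$,

$$[\mathrm{prox}_{\gamma\|\cdot\|_1}(\mathbf{x})]_i = \mathrm{sgn}(x_i)\max\{|x_i| - \gamma, 0\} \quad (i = 1, \dots, 4NB),$$

where sgn is the sign function, and (ii) in the case of $p = 2$,

$$[\mathrm{prox}_{\gamma\|\cdot\|_{1,2}}(\mathbf{x})]_{i + lNB} = \max\Big\{1 - \frac{\gamma}{\|\tilde{\mathbf{x}}_i\|_2}, 0\Big\}\, x_{i + lNB} \quad (i = 1, \dots, NB, \ l = 0, 1, 2, 3),$$

where $\tilde{\mathbf{x}}_i := (x_i, x_{i+NB}, x_{i+2NB}, x_{i+3NB})^\top$ collects the four differences associated with the $i$th entry, and the right-hand side is set to zero when $\tilde{\mathbf{x}}_i = \mathbf{0}$.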
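Both proximity operators are one-liners in NumPy. In this sketch the $p = 2$ groups run along the first array axis, for instance the four difference components of each voxel. That layout is an assumption, as are the function names.

```python
import numpy as np


def prox_l1(x, gamma):
    """Soft-thresholding: prox of gamma * ||.||_1 (case p = 1)."""
    return np.sign(x) * np.maximum(np.abs(x) - gamma, 0.0)


def prox_l12(x, gamma):
    """Group soft-thresholding: prox of gamma * ||.||_{1,2} (case p = 2).

    Each group is a slice along axis 0; all-zero groups are mapped to zero.
    """
    norms = np.sqrt((x ** 2).sum(axis=0, keepdims=True))
    scale = np.maximum(1.0 - gamma / np.maximum(norms, 1e-12), 0.0)
    return scale * x


print(prox_l1(np.array([3.0, -0.5, -2.0]), 1.0))
# Three groups of size 2 (columns): norms 5, 0.5, and 0.
print(prox_l12(np.array([[3.0, 0.3, 0.0], [4.0, 0.4, 0.0]]), 1.0))
```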

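The updates of $\mathbf{z}_2$, $\mathbf{z}_3$, and $\mathbf{z}_4$ require the proximity operators of the indicator functions of $B_{2,\varepsilon}^{\mathbf{v}}$, $B_{1,\eta}$, and $[\mu_{\min}, \mu_{\max}]^{NB}$, respectively, which equal the metric projections onto them (see Section 2.2). Specifically, the metric projection onto $B_{2,\varepsilon}^{\mathbf{v}}$ is given by

$$P_{B_{2,\varepsilon}^{\mathbf{v}}}(\mathbf{x}) = \begin{cases}\mathbf{x}, & \text{if } \mathbf{x} \in B_{2,\varepsilon}^{\mathbf{v}},\\ \mathbf{v} + \dfrac{\varepsilon(\mathbf{x} - \mathbf{v})}{\|\mathbf{x} - \mathbf{v}\|_2}, & \text{otherwise},\end{cases}$$

that onto $B_{1,\eta}$ is given by

$$P_{B_{1,\eta}}(\mathbf{x}) = \begin{cases}\mathbf{x}, & \text{if } \mathbf{x} \in B_{1,\eta},\\ \mathrm{prox}_{\beta\|\cdot\|_1}(\mathbf{x}), & \text{otherwise},\end{cases}$$

where $\beta > 0$ is chosen such that $\|\mathrm{prox}_{\beta\|\cdot\|_1}(\mathbf{x})\|_1 = \eta$, and that onto $[\mu_{\min}, \mu_{\max}]^{NB}$ is given, for $i = 1, \dots, NB$, by

$$[P_{[\mu_{\min}, \mu_{\max}]^{NB}}(\mathbf{x})]_i = \min\{\max\{x_i, \mu_{\min}\}, \mu_{\max}\}.$$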
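Putting the pieces together, the following is a minimal NumPy sketch of Algorithm 1 for the denoising case $\boldsymbol{\Phi} = \mathbf{I}$. It rests on several assumptions:

- periodic forward differences;
- the $\ell_1$-ball projection computed by the well-known sort-based method of Duchi et al.;
- a warm start of the $\mathbf{z}$ variables from $\mathbf{v}$;
- a fixed iteration count instead of a stopping criterion;
- illustrative values of $\omega$ and $\gamma$.

It is a small-scale illustration, not the authors' MATLAB implementation.

```python
import numpy as np

# Periodic forward differences (vertical, horizontal, spectral) and their adjoints.
def dv(u): return np.roll(u, -1, axis=0) - u
def dh(u): return np.roll(u, -1, axis=1) - u
def db(u): return np.roll(u, -1, axis=2) - u
def dvT(y): return np.roll(y, 1, axis=0) - y
def dhT(y): return np.roll(y, 1, axis=1) - y
def dbT(y): return np.roll(y, 1, axis=2) - y


def A(u, w):
    """A_omega u, stored as a (4, rows, cols, bands) array."""
    ub = db(u)
    return np.stack([dv(ub), dh(ub), w * dv(u), w * dh(u)])


def AT(y, w):
    """Adjoint of A_omega."""
    return dbT(dvT(y[0]) + dhT(y[1])) + w * (dvT(y[2]) + dhT(y[3]))


def solve(rhs, w, c):
    """(A_omega^T A_omega + c I)^{-1} rhs via the 3D FFT."""
    def lam(n):
        return 2.0 - 2.0 * np.cos(2.0 * np.pi * np.arange(n) / n)
    n1, n2, n3 = rhs.shape
    lv, lh, lb = lam(n1)[:, None, None], lam(n2)[None, :, None], lam(n3)[None, None, :]
    return np.real(np.fft.ifftn(np.fft.fftn(rhs) / ((lb + w ** 2) * (lv + lh) + c)))


def prox_norm(x, t, p):
    """prox of t * ||.||_{1,p}; for p = 2, groups are the 4 components of each voxel."""
    if p == 1:
        return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)
    nrm = np.sqrt((x ** 2).sum(axis=0, keepdims=True))
    return np.maximum(1.0 - t / np.maximum(nrm, 1e-12), 0.0) * x


def proj_l2ball(x, v, eps):
    r = x - v
    nrm = np.linalg.norm(r)
    return x if nrm <= eps else v + (eps / nrm) * r


def proj_l1ball(x, eta):
    """Projection onto {x : ||x||_1 <= eta} (eta > 0) by the sort-based method."""
    a = np.abs(x).ravel()
    if a.sum() <= eta:
        return x
    mu = np.sort(a)[::-1]
    css = np.cumsum(mu)
    rho = np.nonzero(mu - (css - eta) / np.arange(1, a.size + 1) > 0)[0][-1]
    theta = (css[rho] - eta) / (rho + 1.0)
    return np.sign(x) * np.maximum(np.abs(x) - theta, 0.0)


def hsstv_denoise(v, eps, eta, w=0.05, p=1, gamma=0.1, n_iter=2000, lo=0.0, hi=1.0):
    """ADMM for Problem (10) with Phi = I; returns (u, s)."""
    z1, z2, z3, z4 = A(v, w), v.copy(), np.zeros_like(v), np.clip(v, lo, hi)
    d1, d2, d3, d4 = (np.zeros_like(z) for z in (z1, z2, z3, z4))
    for _ in range(n_iter):
        r1, r2, r3, r4 = z1 - d1, z2 - d2, z3 - d3, z4 - d4
        # Closed-form (u, s)-update; A^T A + (1/2) I + I is inverted by the FFT.
        u = solve(AT(r1, w) + 0.5 * (r2 - r3) + r4, w, 1.5)
        s = 0.5 * (r2 + r3 - u)
        Au = A(u, w)
        z1 = prox_norm(Au + d1, gamma, p)        # HSSTV term
        z2 = proj_l2ball(u + s + d2, v, eps)     # data-fidelity ball
        z3 = proj_l1ball(s + d3, eta)            # sparse-noise ball
        z4 = np.clip(u + d4, lo, hi)             # dynamic range
        d1, d2, d3, d4 = d1 + Au - z1, d2 + u + s - z2, d3 + s - z3, d4 + u - z4
    return u, s


# Toy example: a two-level cube with Gaussian noise and a few outliers.
rng = np.random.default_rng(0)
clean = np.zeros((8, 8, 4))
clean[:, 4:, :] = 0.8
sigma = 0.05
s_true = np.zeros_like(clean)
s_true.flat[rng.choice(clean.size, size=5, replace=False)] = 0.7
v = clean + sigma * rng.standard_normal(clean.shape) + s_true
u, s = hsstv_denoise(v, eps=sigma * np.sqrt(v.size), eta=np.abs(s_true).sum())
print(np.linalg.norm(v - clean), np.linalg.norm(u - clean))
```

Here $\varepsilon$ and $\eta$ are set from the known synthetic noise only to keep the toy example short. A practical run would also monitor a stopping criterion rather than a fixed iteration count.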

5. Results

We demonstrate the advantages of the proposed method by applying it to two specific HS image restoration problems: denoising and CS reconstruction. In these experiments, we used 13 HS images taken from SpecTIR [50], MultiSpec [51], and GIC [52], with their dynamic ranges normalized to $[0, 1]$. All experiments were conducted using MATLAB 2018a.

The proposed method was compared with HTV [6], SSAHTV [6], SSTV [7], and ASSTV [38]. For a fair comparison, we replaced HSSTV in Problem (10) with HTV, SSAHTV, SSTV, or ASSTV and solved the resulting problem by ADMM. In the denoising experiments, we also compared our proposed method with LRMR [10], TV-regularized low-rank matrix factorization (LRTV) [13], and local low-rank matrix recovery and global spatial-spectral TV (LLRGTV) [16]. Since LRMR, LRTV, and LLRGTV are tailored to the mixed noise removal problem, they cannot be applied to CS reconstruction. We did not compare our proposed method with recent CNN-based HS image denoising methods [53,54]. The CNN-based methods cannot be represented as explicit regularization functions and are fully tailored to denoising tasks, whereas our proposed method can be used as a building block in various optimization-based HS image restoration methods. Moreover, CNN-based methods strongly depend on the training data used, which can limit their adaptability to a wide range of noise intensities. Thus, the design concepts of these methods differ from those of TV- and LRM-based approaches.

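To quantitatively evaluate restoration performance, we used the peak signal-to-noise ratio (PSNR) [dB] index and the structural similarity (SSIM) [55] index between a groundtruth HS image $\bar{\mathbf{u}}$ and a restored HS image $\mathbf{u}$. PSNR is defined by $10\log_{10}(\mathrm{Max}^2/\mathrm{MSE})$, where Max is the maximum value of the dynamic range of HS images, and MSE is the mean square error between the groundtruth and restored HS images. The higher the PSNR, the more similar the two images are. SSIM is an image quality assessment index based on the human visual system, which is defined as follows:

$$\mathrm{SSIM}(\mathbf{u}, \bar{\mathbf{u}}) := \frac{1}{P}\sum_{i=1}^{P}\frac{(2\mu_{\mathbf{u}_i}\mu_{\bar{\mathbf{u}}_i} + C_1)(2\sigma_{\mathbf{u}_i\bar{\mathbf{u}}_i} + C_2)}{(\mu_{\mathbf{u}_i}^2 + \mu_{\bar{\mathbf{u}}_i}^2 + C_1)(\sigma_{\mathbf{u}_i}^2 + \sigma_{\bar{\mathbf{u}}_i}^2 + C_2)},$$

where $\mathbf{u}_i$ and $\bar{\mathbf{u}}_i$ are the $i$th pixel-centered local patches of a restored HS image and a groundtruth HS image, respectively, and $P$ is the number of patches. $\mu_{\mathbf{u}_i}$ and $\mu_{\bar{\mathbf{u}}_i}$ are the average values of the local patches of the restored and groundtruth HS images, respectively. $\sigma_{\mathbf{u}_i}^2$ and $\sigma_{\bar{\mathbf{u}}_i}^2$ represent the variances of $\mathbf{u}_i$ and $\bar{\mathbf{u}}_i$, respectively, and $\sigma_{\mathbf{u}_i\bar{\mathbf{u}}_i}$ denotes their covariance. Moreover, $C_1$ and $C_2$ are two constants that avoid numerical instability when either $\mu_{\mathbf{u}_i}^2 + \mu_{\bar{\mathbf{u}}_i}^2$ or $\sigma_{\mathbf{u}_i}^2 + \sigma_{\bar{\mathbf{u}}_i}^2$ is very close to zero. SSIM is at most one, and the maximum is attained when $\mathbf{u}$ equals $\bar{\mathbf{u}}$.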
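PSNR and SSIM can be computed as below. This NumPy sketch rests on the following assumptions, so its values may differ slightly from those in the tables:

- the dynamic range is $[0,1]$ (Max = 1);
- the windows are $7 \times 7$ and pixel-centered, taken within each band;
- the constants are $C_1 = (0.01\,\mathrm{Max})^2$ and $C_2 = (0.03\,\mathrm{Max})^2$, as commonly used with SSIM.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def psnr(u, u_ref, max_val=1.0):
    """PSNR [dB] = 10 log10(Max^2 / MSE)."""
    mse = np.mean((u - u_ref) ** 2)
    return np.inf if mse == 0 else 10.0 * np.log10(max_val ** 2 / mse)


def ssim(u, u_ref, win=7, max_val=1.0):
    """Mean SSIM over all win x win windows of every band."""
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2
    scores = []
    for k in range(u.shape[2]):
        x = sliding_window_view(u[:, :, k], (win, win))
        y = sliding_window_view(u_ref[:, :, k], (win, win))
        mx, my = x.mean(axis=(-2, -1)), y.mean(axis=(-2, -1))
        vx, vy = x.var(axis=(-2, -1)), y.var(axis=(-2, -1))
        cxy = ((x - mx[..., None, None]) * (y - my[..., None, None])).mean(axis=(-2, -1))
        scores.append(((2 * mx * my + c1) * (2 * cxy + c2))
                      / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))
    return float(np.mean(scores))


rng = np.random.default_rng(3)
img = rng.random((10, 10, 3))
noisy = np.clip(img + 0.1 * rng.standard_normal(img.shape), 0.0, 1.0)
print(psnr(noisy, img), ssim(noisy, img))
```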

We set the maximum number of iterations, the step size $\gamma$, and the stopping criterion of ADMM to 10,000, 0.05, and , respectively.

5.1. Denoising

First, we conducted experiments on Gaussian-sparse mixed noise removal of HS images. The observed HS images were contaminated by additive white Gaussian noise $\mathbf{n}$ with standard deviation $\sigma$ and sparse noise $\mathbf{s}$. In these experiments, we assumed that the sparse noise consists of salt-and-pepper noise and vertical and horizontal line noise, with their ratios to all pixels being , , and , respectively. We generated noisy HS images by adding two types of mixed noise to groundtruth HS images: (i) , (ii) . In the denoising case, $\boldsymbol{\Phi} = \mathbf{I}$ in (9), and the radii $\varepsilon$ and $\eta$ in Problem (10) were set to and , respectively, where is the average of the observed image. Table 2 shows the parameter settings for ASSTV, LRMR, LRTV, LLRGTV, and the proposed method, which we chose to achieve the best performance for each method.

Table 2.

Parameter settings for ASSTV, LRMR, LRTV, and the proposed method.

In Table 3, we show the PSNR and SSIM of the HS images denoised by each method for the two noise levels and all HS images. The proposed method outperforms HTV, SSAHTV, SSTV, ASSTV, and LRMR. Moreover, the PSNR and SSIM of the results by the proposed method are higher than those by LLRGTV in most situations. Meanwhile, LRTV outperforms the proposed method in some cases, likely because LRTV combines TV-based and LRM-based regularization techniques. Nevertheless, the proposed method outperforms LRTV in over half of the situations, even though it uses only TV-based regularization.

Table 3.

Peak signal-to-noise ratio (PSNR) (top) and structural similarity (SSIM) (bottom) in mixed noise removal experiments.

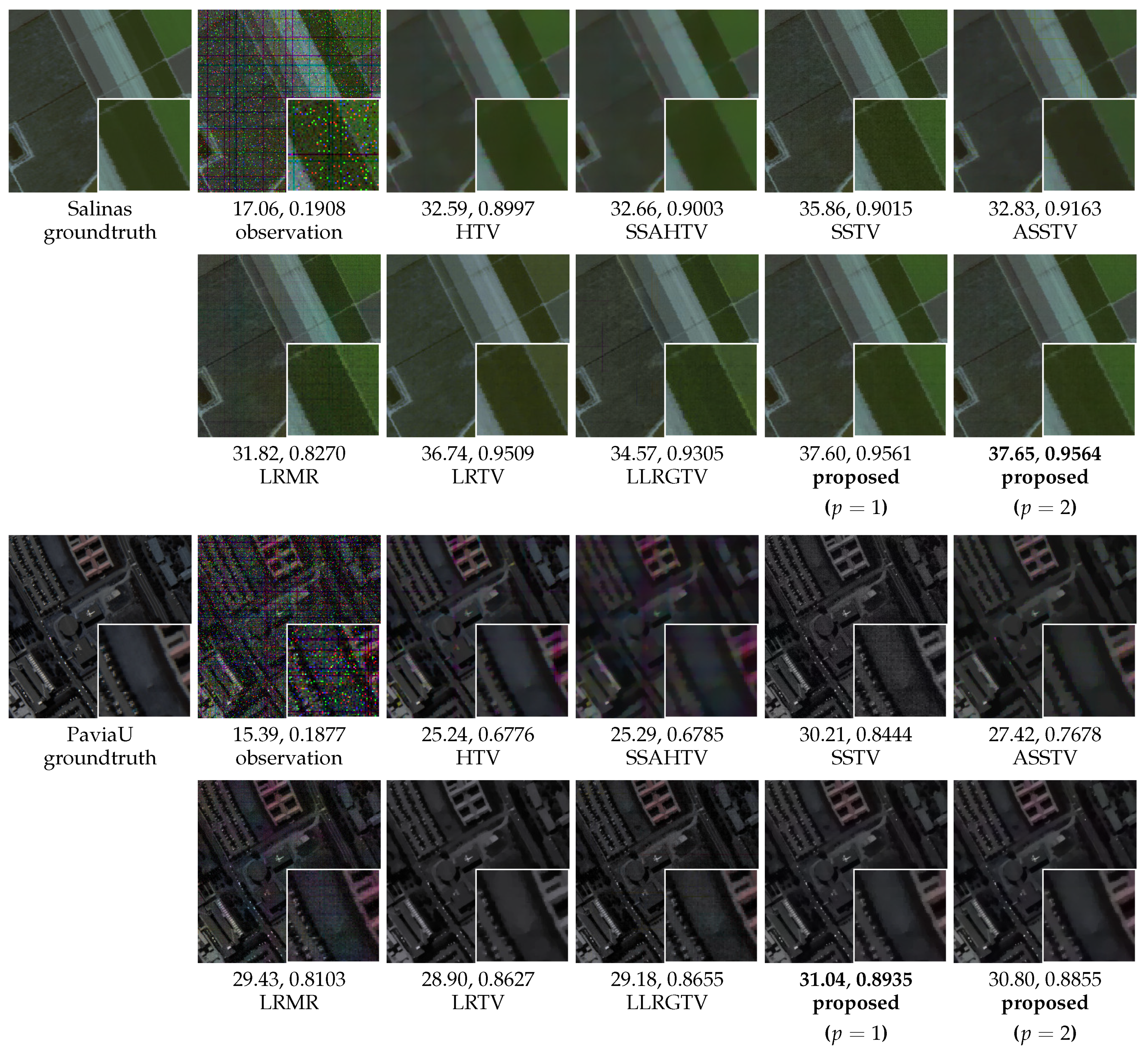

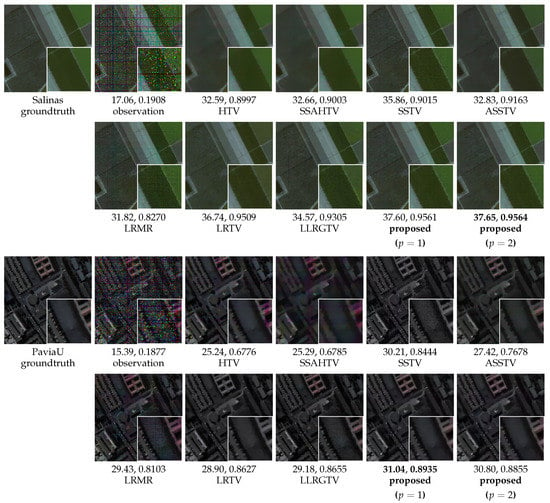

Figure 3 shows the resulting images on Salinas (noise level (i), top) and PaviaU (noise level (ii), bottom), with their PSNR (left) and SSIM (right). Here, we depict these HS images as RGB images (R = 8th, G = 16th, and B = 32nd bands). One can see that the results by HTV, SSAHTV, and ASSTV lose spatial details, and noise remains in the results by SSTV, LRMR, and LLRGTV. In addition, the images restored by SSTV and LRTV lose color under heavy noise, indicating that SSTV and LRTV distort spectral variation. In contrast, the proposed method restores HS images preserving both details and spectral information without artifacts.

Figure 3.

Resulting HS images with their PSNR (left) and SSIM (right) in the mixed noise removal experiment (top: Salinas, the noise level (i), bottom: PaviaU, the noise level (ii)).

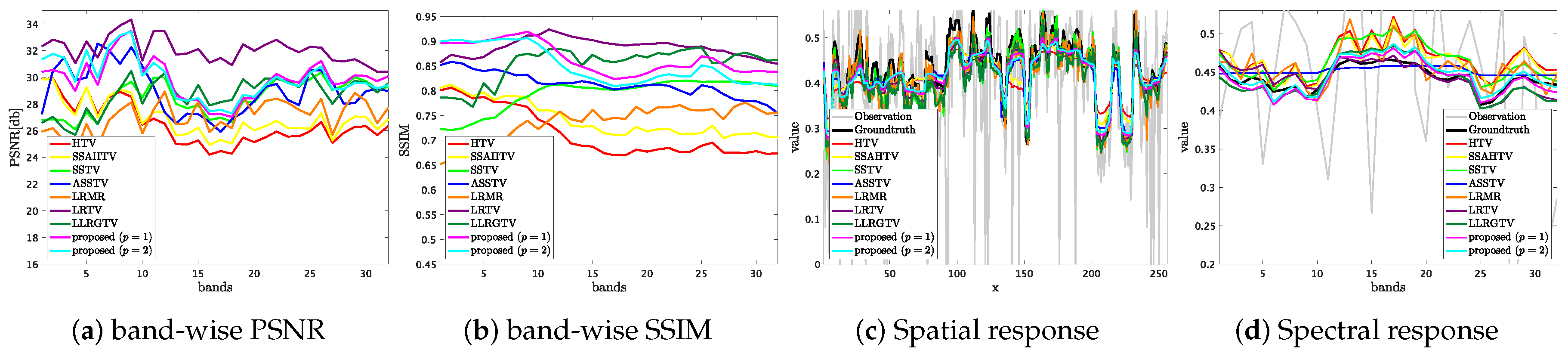

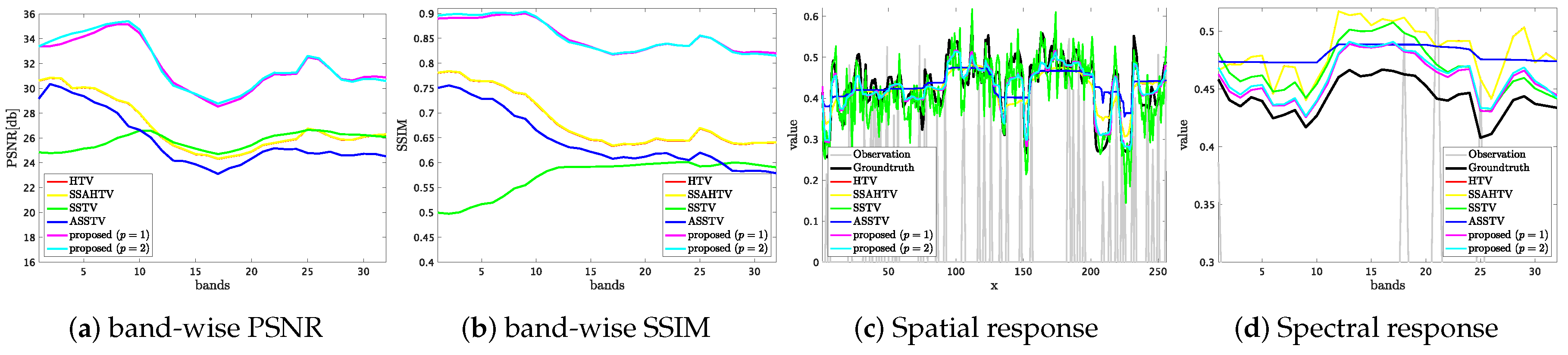

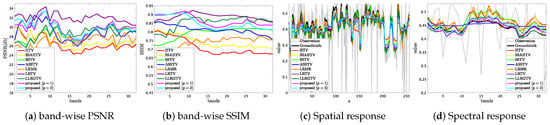

Figure 4 plots band-wise PSNR and SSIM (left) and spatial and spectral responses (right) of the denoised Suwannee HS image for noise level (ii). The band-wise PSNR and SSIM graphs show that the proposed method achieves higher-quality restoration than HTV, SSAHTV, and SSTV for all bands and than ASSTV and LRMR for most bands. Besides, even though the proposed method only utilizes HSSTV, its results outperform those by LRTV for some bands in terms of SSIM and those by LLRGTV for many bands. Graph (c) plots the spatial response of the 243rd row of the 30th band. Similarly, graph (d) plots the spectral response at the 243rd row and 107th column. We can see that the spatial responses of the results by HTV and SSAHTV are too smooth compared with the groundtruth. On the other hand, undesirable variations exist in the spatial responses of the results by SSTV, LRMR, and LLRGTV. In contrast, ASSTV, LRTV, and the proposed method restore responses similar to the groundtruth. In graph (d), one can see that (i) HTV, SSAHTV, and LRMR produce spectral artifacts, (ii) the shape of the spectral response of the result by SSTV is similar to that of the groundtruth, but its mean value is larger, (iii) the spectral response of the result by ASSTV is too smooth and differs from the groundtruth, and (iv) LRTV, LLRGTV, and the proposed method restore spectral responses very similar to the groundtruth.

Figure 4.

Band-wise PSNR and SSIM and spatial and spectral responses in the mixed noise removal experiment (Suwannee).

5.2. Real Noise Removal

We also examined HTV, SSAHTV, SSTV, ASSTV, LRMR, LRTV, LLRGTV, and the proposed method on an HS image with real noise. We selected 16 noisy bands from Suwannee and used them as a real observed HS image $\mathbf{v}$. To maximize the performance of each method, we searched for suitable values of , , , and , and set the other parameters as in Section 5.1. Specifically, we set the parameters and for all TVs. In addition, the parameters $\tau_v$, $\tau_h$, and $\tau_b$ in ASSTV were set to 1, 1, and 3, respectively; r and k in LRMR were set to 3 and , respectively; r and in LRTV were set to 2 and ; and r, , and in LLRGTV were set to 2, , and .

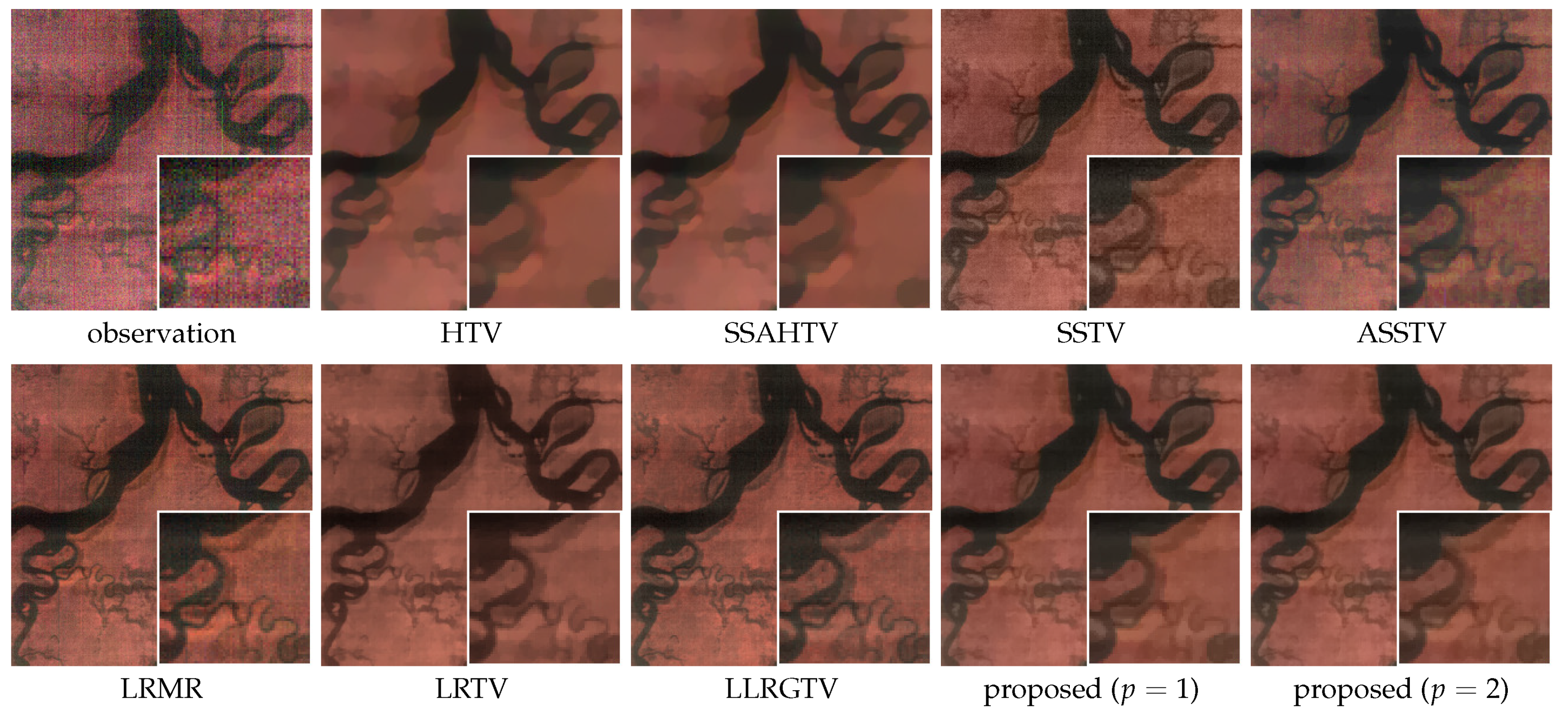

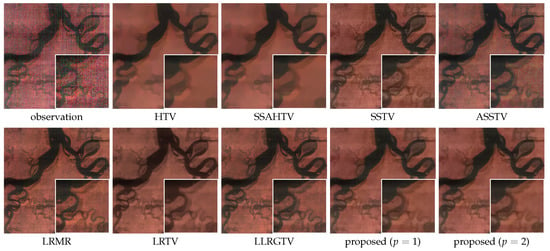

Figure 5 shows the results, where the HS images are depicted as RGB images (R = 2nd, G = 6th, and B = 13th bands). The results by HTV and SSAHTV exhibit spatial oversmoothing, and SSTV, ASSTV, LRMR, and LLRGTV produce spatial artifacts. Besides, the results by LRMR and LRTV have spectral artifacts. On the other hand, the proposed method restores a detail-preserved HS image without artifacts.

Figure 5.

The denoising results on the real noise removal experiments.

5.3. Compressed Sensing Reconstruction

We conducted experiments on compressed sensing (CS) reconstruction [56,57]. CS theory states that a high-dimensional signal can be reconstructed from incomplete random measurements by exploiting its sparsity in some domain, e.g., the gradient domain (TV). In general, HS imaging captures an HS image by scanning 1D spatial and spectral information because it senses spectral information by dispersing the incident light. Therefore, capturing moving objects is very difficult in HS imaging. To overcome this drawback, one-shot HS imaging based on CS has been actively studied [4,5].

In this experiment, we assume that $\boldsymbol{\Phi}$ in (9) is a random sampling matrix with sampling rate $m$ ( and ). Here, since $\boldsymbol{\Phi}$ is a semiorthogonal matrix, we can efficiently solve the problem, as explained in Section 4.2. Moreover, since the main objective of the experiments is to verify the CS reconstruction performance of HSSTV, we assume that the observations are contaminated only by additive white Gaussian noise with standard deviation $\sigma$.
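A random sampling matrix selects a subset of the entries of $\mathbf{u}$, so $\boldsymbol{\Phi}\boldsymbol{\Phi}^\top = \mathbf{I}$. The proximity operator of $\iota_{B_{2,\varepsilon}^{\mathbf{v}}} \circ \boldsymbol{\Phi}$ then has the closed form $\mathbf{x} + \boldsymbol{\Phi}^\top(P_{B_{2,\varepsilon}^{\mathbf{v}}}(\boldsymbol{\Phi}\mathbf{x}) - \boldsymbol{\Phi}\mathbf{x})$. The NumPy sketch below implements $\boldsymbol{\Phi}$ and $\boldsymbol{\Phi}^\top$ as index operations instead of explicit matrices. The helper names, sizes, and sampling rate are hypothetical.

```python
import numpy as np


def make_random_sampling(n, m, rng):
    """Hypothetical helper: keep round(m * n) randomly chosen entries of an n-vector."""
    idx = np.sort(rng.choice(n, size=int(round(m * n)), replace=False))

    def Phi(x):
        return x[idx]

    def PhiT(y):
        out = np.zeros(n)
        out[idx] = y
        return out

    return Phi, PhiT, idx


def proj_l2ball(x, v, eps):
    r = x - v
    nrm = np.linalg.norm(r)
    return x if nrm <= eps else v + (eps / nrm) * r


def prox_ball_after_phi(x, Phi, PhiT, v, eps):
    """prox of iota_{B_{2,eps}^v} o Phi, valid when Phi Phi^T = I."""
    y = Phi(x)
    return x + PhiT(proj_l2ball(y, v, eps) - y)


rng = np.random.default_rng(0)
n, m, eps = 50, 0.4, 0.1
Phi, PhiT, idx = make_random_sampling(n, m, rng)
v = rng.standard_normal(idx.size)
x = rng.standard_normal(n)
p = prox_ball_after_phi(x, Phi, PhiT, v, eps)
print(np.linalg.norm(Phi(p) - v))
```

Only the sampled entries move, and they are pulled onto the data-fidelity ball. The unsampled entries are left unchanged, which is why no matrix inversion involving $\boldsymbol{\Phi}$ is needed.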

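We set the CS reconstruction problem as follows:

$$\min_{\mathbf{u}} \mathrm{HSSTV}(\mathbf{u}) \quad \text{s.t.} \quad \begin{cases}\boldsymbol{\Phi}\mathbf{u} \in B_{2,\varepsilon}^{\mathbf{v}},\\ \mathbf{u} \in [\mu_{\min}, \mu_{\max}]^{NB}.\end{cases}$$

The problem is derived by removing the second constraint and $\mathbf{s}$ from Problem (10). Therefore, we can solve it by removing $\mathbf{s}$, $\mathbf{z}_3$, and $\mathbf{d}_3$ from Algorithm 1 and replacing and with and , respectively. As in Section 4.2, the update of $\mathbf{u}$ is a strictly convex quadratic minimization. With $\boldsymbol{\Phi}$ handled in the update of $\mathbf{z}_2$, it comes down to

$$\mathbf{u}^{(n+1)} = (\mathbf{A}_\omega^\top\mathbf{A}_\omega + 2\mathbf{I})^{-1}\big(\mathbf{A}_\omega^\top(\mathbf{z}_1^{(n)} - \mathbf{d}_1^{(n)}) + \mathbf{z}_2^{(n)} - \mathbf{d}_2^{(n)} + \mathbf{z}_4^{(n)} - \mathbf{d}_4^{(n)}\big).$$
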
We set or and . In the ASSTV case, we set the parameters , which experimentally achieves the best performance.

Table 4 shows the PSNR and SSIM of the reconstructed HS images. For almost all sampling rates $m$ and HS images, the proposed method achieves higher PSNR and SSIM than HTV, SSAHTV, SSTV, and ASSTV.

Table 4.

PSNR (left) and SSIM (right) in the CS reconstruction experiment.

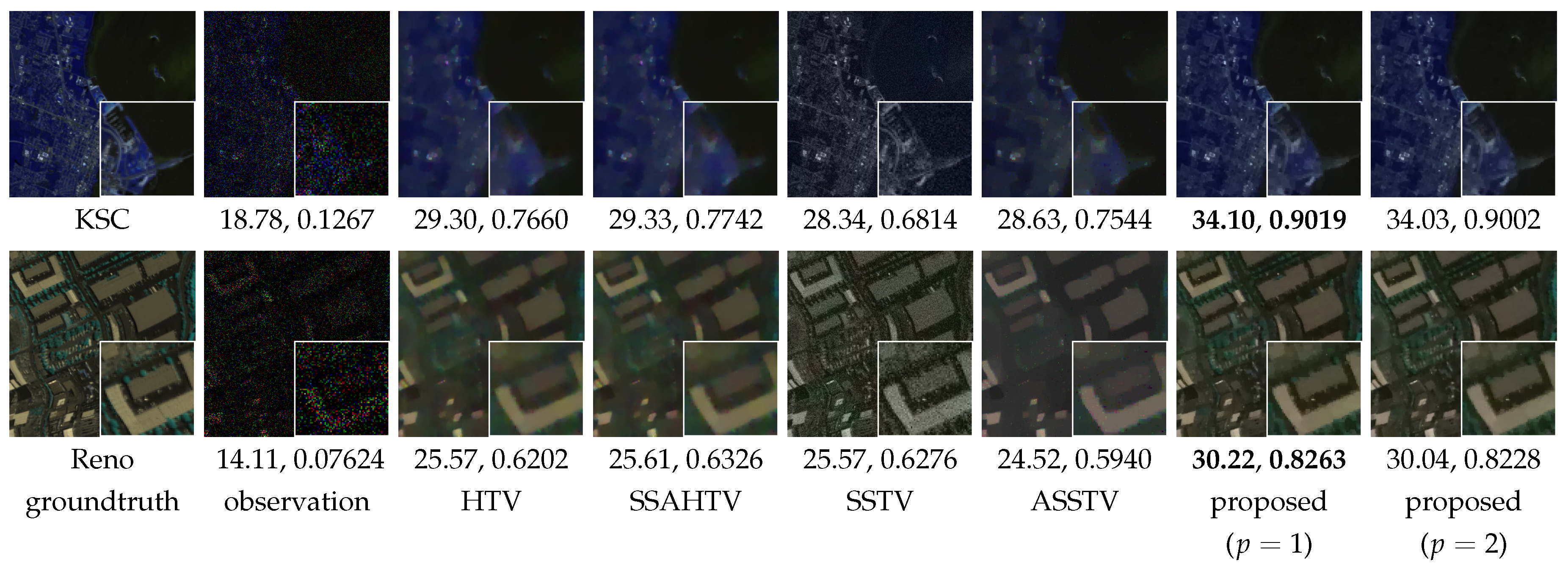

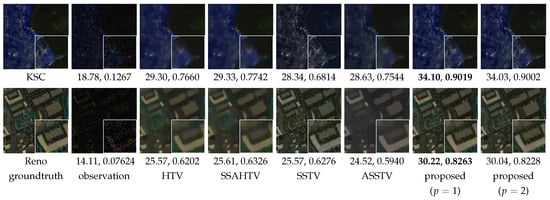

Figure 6 shows the reconstructed results on KSC and Reno with random sampling ratios and , respectively. Here, the HS images are depicted as RGB images (R = 8th, G = 16th, and B = 32nd bands). One can see that (i) HTV and SSAHTV cause spatial oversmoothing, (ii) SSTV produces artifacts and spectral distortion, yielding colors different from those of the groundtruth HS images, and (iii) the results by ASSTV have spatial oversmoothing and spectral distortion. On the other hand, the proposed method reconstructs meaningful details without artifacts or spectral distortion.

Figure 6.

Resulting HS images with their PSNR (left) and SSIM (right) on the compressed sensing (CS) reconstruction experiment (top: KSC, , bottom: Reno, ).

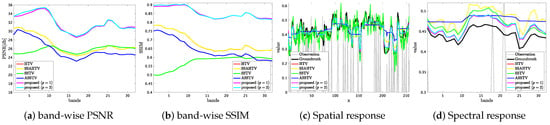

Figure 7 plots band-wise PSNR and SSIM (left) and spatial and spectral responses (right) (Suwannee, ). According to the band-wise PSNR and SSIM, the proposed method achieves higher-quality reconstruction than HTV, SSAHTV, SSTV, and ASSTV for all bands. Graphs (c) and (d) plot the spatial and spectral responses at the same position as in Section 5.1. Graph (c) shows that (i) the spatial responses of the results by HTV, SSAHTV, and ASSTV are oversmoothed, (ii) SSTV produces undesirable variation, and (iii) the spatial response reconstructed by the proposed method is similar to the groundtruth. In graph (d), HTV and SSAHTV generate undesirable variation, and ASSTV causes oversmoothing. Thanks to the evaluation of spatio-spectral piecewise-smoothness, SSTV reconstructs a spectral response similar to the groundtruth; however, its mean value is larger than the groundtruth value. The proposed method achieves the most accurate reconstruction of the spectral response among all the TVs.

Figure 7.

Band-wise PSNR and SSIM and spatial and spectral responses on the CS reconstruction experiment (Suwannee).

6. Discussion

In this section, we discuss the impact of the parameters of our proposed method.

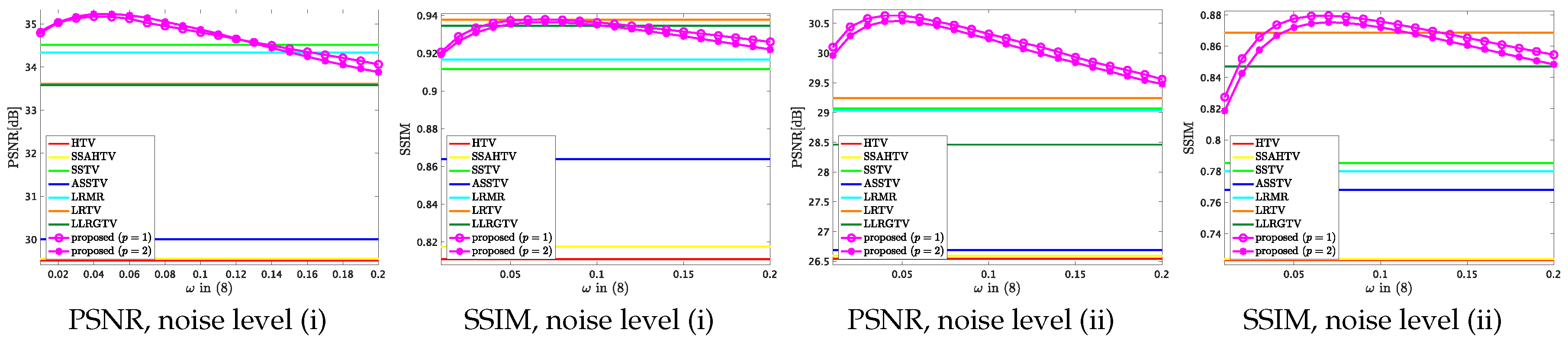

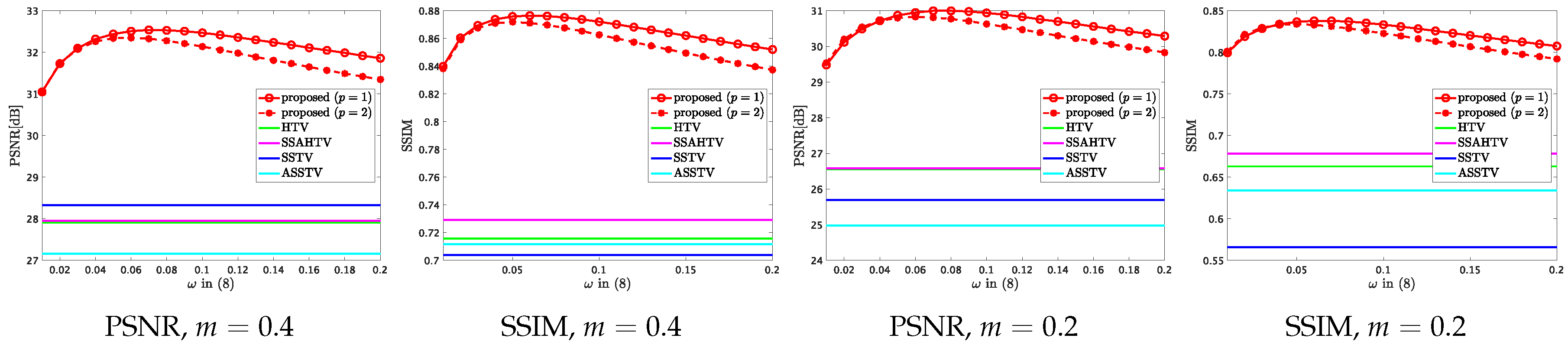

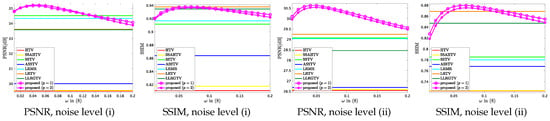

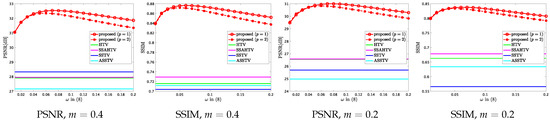

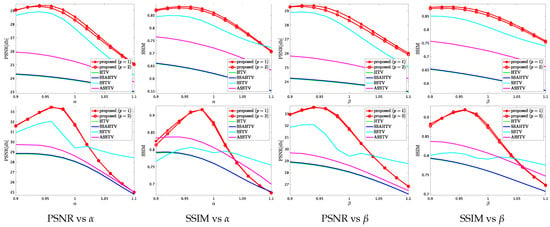

6.1. The Impact of The Weight

The weight in (8) balances spatio-spectral piecewise-smoothness and direct spatial piecewise-smoothness. We evaluated its impact in the mixed noise removal and CS reconstruction experiments, varying the weight from 0.01 to 0.2 in steps of 0.01. The other settings were the same as in Sections 5.1 and 5.3.
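The role of the weight can be illustrated by evaluating the regularizer directly. This sketch assumes that (8) defines HSSTV, in its anisotropic (ℓ1) case, as the norm of the stacked vector [D D_b u; ω D u], where D takes vertical and horizontal forward differences, D_b takes spectral forward differences, and ω denotes the weight. The symbol ω, the Neumann boundary handling, and the function names are our assumptions, not the authors' code. The last part builds the grid of weights examined in this section.

```python
import numpy as np

def forward_diff(x, axis):
    """Forward difference along `axis`; the last entry is 0 (Neumann boundary)."""
    last = x.take([-1], axis=axis)  # replicate the final slice
    return np.diff(x, axis=axis, append=last)

def hsstv(u, omega):
    """Anisotropic HSSTV of a (rows, cols, bands) cube under the assumed form
    ||D D_b u||_1 + omega * ||D u||_1 (D: vertical/horizontal, D_b: spectral)."""
    u = np.asarray(u, dtype=float)
    du_b = forward_diff(u, axis=2)  # spectral difference D_b u
    # spatio-spectral term: spatial differences of the spectral difference
    spatio_spectral = sum(np.abs(forward_diff(du_b, a)).sum() for a in (0, 1))
    # direct spatial term, scaled by the weight omega
    spatial = sum(np.abs(forward_diff(u, a)).sum() for a in (0, 1))
    return spatio_spectral + omega * spatial

# Weight grid examined in Section 6.1: 0.01 to 0.2 in steps of 0.01
omegas = np.round(np.arange(1, 21) * 0.01, 2)

# Toy cube whose intensity grows with row index i and band index b (u = i * b)
i, j, b = np.meshgrid(np.arange(3), np.arange(4), np.arange(5), indexing="ij")
u = (i * b).astype(float)
print(hsstv(u, 0.05))
```

A larger ω penalizes spatial variation within each band more strongly, whereas ω close to 0 leaves mainly the spatio-spectral term, which is the trade-off this section examines.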

Figures 8 and 9 plot the PSNR and SSIM of the results by the proposed method versus various values of the weight in the mixed noise removal and CS reconstruction experiments, respectively, where the PSNR and SSIM values are averaged over the 13 HS images. One can see that is a good choice in the mixed noise removal case, and is a good choice in most cases in the CS case. These results show that the suitable range of the weight for CS reconstruction is almost the same as that for mixed noise removal. Whereas ASSTV and LRTV require readjusting their weight and hyperparameter, respectively, for each noise intensity, the suitable weight in HSSTV is robust to the noise intensity.

Figure 8.

PSNR and SSIM versus the weight in (8) in the mixed noise removal experiment.

Figure 9.

PSNR and SSIM versus the weight in (8) in the CS reconstruction experiment.

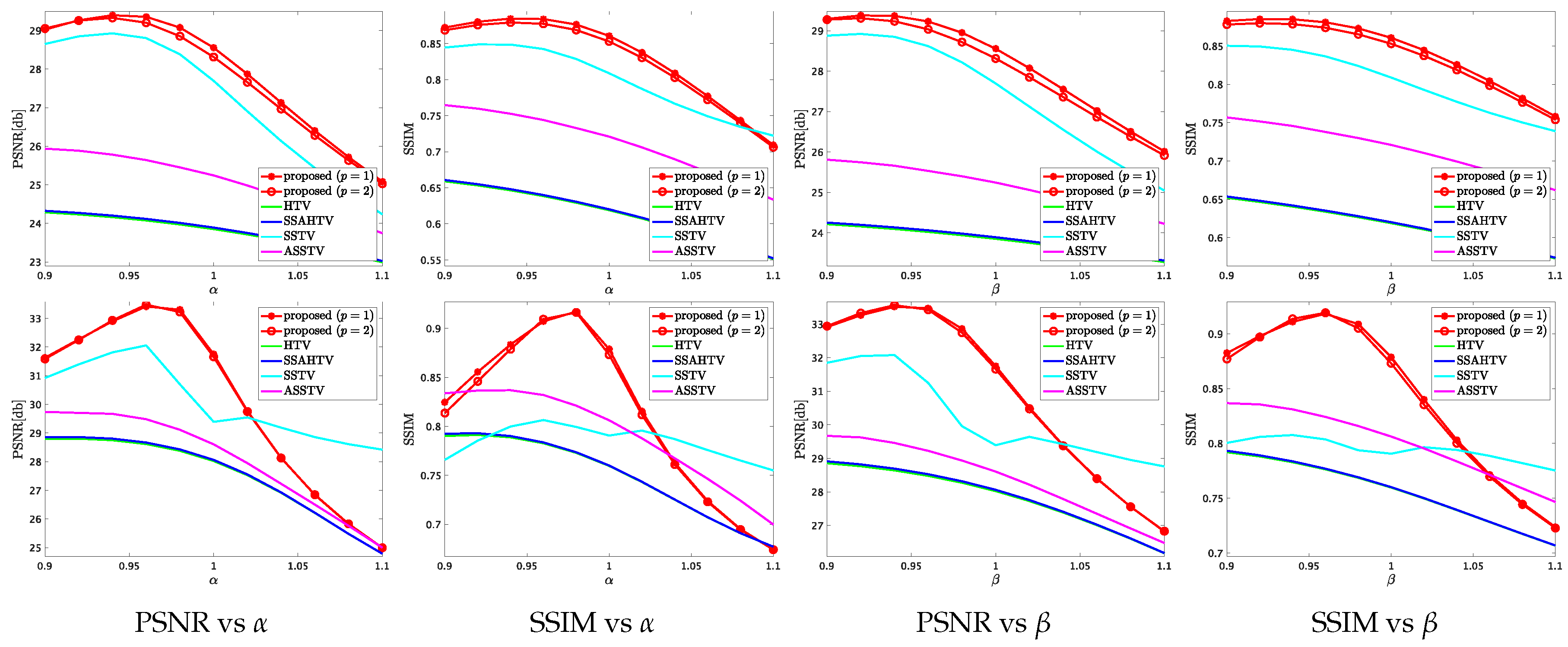

6.2. The Sensitivity of The Parameters and

To examine the sensitivity of the parameters and in (10), we conducted additional mixed noise removal experiments on the DC and KSC images with noise level (ii), examining various values of and . Specifically, we set and , which are hand-optimized values of the parameters, and changed and from to at an interval of . Figure 10 plots the PSNR and SSIM of the results by HTV, SSAHTV, SSTV, ASSTV, and the proposed method versus or . For HTV, SSAHTV, ASSTV, and the proposed method, the graphs show that the suitable values of and do not vary significantly between the two images; that is, suitable values of these parameters depend little on either the regularization technique or the observed image. For SSTV, the shapes of the plots differ between KSC and DC because SSTV converges for all parameter settings on DC, whereas on KSC it does not converge when .
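The protocol above, which perturbs one hand-optimized parameter at a time while holding the other fixed, can be sketched as follows. Here `restore(observed, **params)` is a hypothetical stand-in for any of the compared solvers, and the toy solver, parameter name, and scaling factors in the usage example are illustrative, not the values used in the experiments.

```python
import numpy as np

def psnr(x, ref, peak=1.0):
    """PSNR in dB over the whole cube (peak 1.0 assumes data in [0, 1])."""
    mse = np.mean((np.asarray(x, dtype=float) - np.asarray(ref, dtype=float)) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

def sensitivity_sweep(restore, observed, reference, base_params, scales):
    """Vary one parameter at a time around its hand-optimized value.

    For each parameter name, every other parameter stays at its base value
    while this one is multiplied by each factor in `scales`.
    Returns {name: [(scale, psnr), ...]}.
    """
    results = {}
    for name, base in base_params.items():
        curve = []
        for s in scales:
            params = dict(base_params)  # copy so other parameters keep base values
            params[name] = s * base
            curve.append((s, psnr(restore(observed, **params), reference)))
        results[name] = curve
    return results

# Toy stand-in solver (hypothetical): scales the observation by `gain`
def toy_restore(v, gain):
    return gain * v

ref = np.full((4, 4, 3), 0.5)
res = sensitivity_sweep(toy_restore, ref.copy(), ref, {"gain": 1.0}, [0.8, 0.95, 1.2])
best_scale = max(res["gain"], key=lambda t: t[1])[0]
print(best_scale)  # 0.95
```

A flat curve around the hand-optimized value, as reported for most methods above, indicates that the parameter is easy to set.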

Figure 10.

PSNR and SSIM versus or in the mixed noise removal experiment (noise level (ii); top: DC, bottom: KSC).

7. Conclusions

We have proposed a new constrained optimization approach to HS image restoration. Our proposed method is formulated as a convex optimization problem, where we utilize a novel regularization technique named HSSTV and incorporate data-fidelity as hard constraints. HSSTV evaluates both direct spatial piecewise-smoothness and spatio-spectral piecewise-smoothness, and thus has a strong HS restoration ability. Thanks to the constraint-type data-fidelity, the hyperparameters that would balance regularization and data-fidelity are translated into upper bounds on the degree of data-fidelity, which can be set independently of the regularization. To solve the proposed problem, we developed an efficient algorithm based on ADMM. Experimental results on mixed noise removal, real noise removal, and CS reconstruction demonstrate the advantages of the proposed method over various HS image restoration methods.
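In ADMM-type solvers, a hard data-fidelity constraint is commonly handled through the projection onto the corresponding ℓ2 ball, whose radius is the upper bound that replaces the balancing hyperparameter. The sketch below assumes a constraint of the form ||Φu − v||₂ ≤ ε with Φ a random sampling (entry-selection) operator, as in the CS experiment; the boolean-mask representation and the function names are hypothetical. Because such a Φ satisfies ΦΦᵀ = I, the projection has a closed form in which only the observed entries move.

```python
import numpy as np

def project_l2_ball(z, center, radius):
    """Euclidean projection of z onto {y : ||y - center||_2 <= radius}."""
    r = z - center
    norm = np.linalg.norm(r)
    if norm <= radius:
        return z.copy()                      # already feasible
    return center + (radius / norm) * r      # pull back onto the sphere

def project_sampling_constraint(x, mask, v, eps):
    """Project x onto {u : ||u[mask] - v||_2 <= eps}.

    `mask` is a boolean array selecting the observed entries (Phi) and `v`
    holds the observed values. Since Phi Phi^T = I for entry selection,
    only the observed entries change; unobserved entries are left as-is.
    """
    out = np.array(x, dtype=float, copy=True)
    out[mask] = project_l2_ball(out[mask], v, eps)
    return out

# Toy example: two observed entries with values (3, 4) and radius 1
x = np.zeros(4)
mask = np.array([True, True, False, False])
v = np.array([3.0, 4.0])
print(project_sampling_constraint(x, mask, v, 1.0))  # [2.4 3.2 0.  0. ]
```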

Author Contributions

Conceptualization, S.T., S.O., and I.K.; methodology, S.T. and S.O.; software, S.T.; validation, S.T.; formal analysis, S.T.; investigation, S.T.; writing—original draft, S.T.; writing—review and editing, S.O. and I.K.; supervision, S.O., and I.K.; project administration, S.T., S.O., and I.K.; funding acquisition, S.T., S.O., and I.K. All authors read and agreed to the published version of the manuscript.

Funding

This work was supported in part by JST CREST under Grants JPMJCR1662 and JPMJCR1666, and in part by JSPS KAKENHI under Grants 18J20290, 18H05413, and 20H02145.

Conflicts of Interest

The authors declare that there is no conflict of interest.

References

- Chang, C.I. Hyperspectral Imaging: Techniques for Spectral Detection and Classification; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2003; Volume 1.

- Plaza, A.; Benediktsson, J.A.; Boardman, J.W.; Brazile, J.; Bruzzone, L.; Camps-Valls, G.; Chanussot, J.; Fauvel, M.; Gamba, P.; Gualtieri, A.; et al. Recent advances in techniques for hyperspectral image processing. Remote Sens. Environ. 2009, 113, S110–S122.

- Rasti, B.; Scheunders, P.; Ghamisi, P.; Licciardi, G.; Chanussot, J. Noise reduction in hyperspectral imagery: Overview and application. Remote Sens. 2018, 10, 482.

- Willett, R.M.; Duarte, M.F.; Davenport, M.A.; Baraniuk, R.G. Sparsity and structure in hyperspectral imaging: Sensing, reconstruction, and target detection. IEEE Signal Process. Mag. 2014, 31, 116–126.

- Arce, G.R.; Brady, D.J.; Carin, L.; Arguello, H.; Kittle, D.S. Compressive coded aperture spectral imaging: An introduction. IEEE Signal Process. Mag. 2014, 31, 105–115.

- Yuan, Q.; Zhang, L.; Shen, H. Hyperspectral image denoising employing a spectral–spatial adaptive total variation model. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3660–3677.

- Aggarwal, H.K.; Majumdar, A. Hyperspectral Image Denoising Using Spatio-Spectral Total Variation. IEEE Geosci. Remote Sens. Lett. 2016, 13, 442–446.

- Chang, Y.; Yan, L.; Fang, H.; Luo, C. Anisotropic spectral-spatial total variation model for multispectral remote sensing image destriping. IEEE Trans. Image Process. 2015, 24, 1852–1866.

- Liu, H.; Sun, P.; Du, Q.; Wu, Z.; Wei, Z. Hyperspectral Image Restoration Based on Low-Rank Recovery with a Local Neighborhood Weighted Spectral-Spatial Total Variation Model. IEEE Trans. Geosci. Remote Sens. 2018, 57, 1–14.

- Zhang, H.; He, W.; Zhang, L.; Shen, H.; Yuan, Q. Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4729–4743.

- Sun, L.; Ma, C.; Chen, Y.; Zheng, Y.; Shim, H.J.; Wu, Z.; Jeon, B. Low rank component induced spatial-spectral kernel method for hyperspectral image classification. IEEE Trans. Circuits Syst. Video Technol. 2019, 4133–4148.

- Li, H.; Sun, P.; Liu, H.; Wu, Z.; Wei, Z. Non-Convex Low-Rank Approximation for Hyperspectral Image Recovery with Weighted Total Variation Regularization. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018; pp. 2733–2736.

- He, W.; Zhang, H.; Zhang, L.; Shen, H. Total-variation-regularized low-rank matrix factorization for hyperspectral image restoration. IEEE Trans. Geosci. Remote Sens. 2016, 54, 178–188.

- Aggarwal, H.K.; Majumdar, A. Hyperspectral unmixing in the presence of mixed noise using joint-sparsity and total variation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4257–4266.

- Sun, L.; Wu, F.; Zhan, T.; Liu, W.; Wang, J.; Jeon, B. Weighted Nonlocal Low-Rank Tensor Decomposition Method for Sparse Unmixing of Hyperspectral Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1174–1188.

- He, W.; Zhang, H.; Shen, H.; Zhang, L. Hyperspectral image denoising using local low-rank matrix recovery and global spatial–spectral total variation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 713–729.

- Kong, X.; Zhao, Y.; Xue, J.; Chan, J.C.; Ren, Z.; Huang, H.; Zang, J. Hyperspectral Image Denoising Based on Nonlocal Low-Rank and TV Regularization. Remote Sens. 2020, 12, 1956.

- Cao, W.; Wang, K.; Han, G.; Yao, J.; Cichocki, A. A robust PCA approach with noise structure learning and spatial–spectral low-rank modeling for hyperspectral image restoration. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3863–3879.

- Wang, Y.; Peng, J.; Zhao, Q.; Leung, Y.; Zhao, X.; Meng, D. Hyperspectral image restoration via total variation regularized low-rank tensor decomposition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 11, 1227–1243.

- Wang, Q.; Wu, Z.; Jin, J.; Wang, T.; Shen, Y. Low rank constraint and spatial spectral total variation for hyperspectral image mixed denoising. Signal Process. 2018, 142, 11–26.

- Sun, L.; Zhan, T.; Wu, Z.; Xiao, L.; Jeon, B. Hyperspectral mixed denoising via spectral difference-induced total variation and low-rank approximation. Remote Sens. 2018, 10, 1956.

- Ince, T. Hyperspectral Image Denoising Using Group Low-Rank and Spatial-Spectral Total Variation. IEEE Access 2019, 7, 52095–52109.

- Gabay, D.; Mercier, B. A dual algorithm for the solution of nonlinear variational problems via finite elements approximations. Comput. Math. Appl. 1976, 2, 17–40.

- Eckstein, J.; Bertsekas, D.P. On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 1992, 55, 293–318.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

- Takeyama, S.; Ono, S.; Kumazawa, I. Hyperspectral Image Restoration by Hybrid Spatio-Spectral Total Variation. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 4586–4590.

- Takeyama, S.; Ono, S.; Kumazawa, I. Mixed Noise Removal for Hyperspectral Images Using Hybrid Spatio-Spectral Total Variation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3128–3132.

- Moreau, J.J. Fonctions convexes duales et points proximaux dans un espace hilbertien. C. R. Acad. Sci. Paris Ser. A Math. 1962, 255, 2897–2899.

- Bresson, X.; Chan, T.F. Fast dual minimization of the vectorial total variation norm and applications to color image processing. Inverse Probl. Imag. 2008, 2, 455–484.

- Sun, L.; He, C.; Zheng, Y.; Tang, S. SLRL4D: Joint Restoration of Subspace Low-Rank Learning and Non-Local 4-D Transform Filtering for Hyperspectral Image. Remote Sens. 2020, 12, 2979.

- Afonso, M.; Bioucas-Dias, J.; Figueiredo, M. An augmented Lagrangian approach to the constrained optimization formulation of imaging inverse problems. IEEE Trans. Image Process. 2011, 20, 681–695.

- Chierchia, G.; Pustelnik, N.; Pesquet, J.C.; Pesquet-Popescu, B. Epigraphical projection and proximal tools for solving constrained convex optimization problems. Signal Image Video Process. 2015, 9, 1737–1749.

- Ono, S.; Yamada, I. Signal recovery with certain involved convex data-fidelity constraints. IEEE Trans. Signal Process. 2015, 63, 6149–6163.

- Xie, Y.; Qu, Y.; Tao, D.; Wu, W.; Yuan, Q.; Zhang, W. Hyperspectral image restoration via iteratively regularized weighted schatten p-norm minimization. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4642–4659.

- Ono, S. L0 gradient projection. IEEE Trans. Image Process. 2017, 26, 1554–1564.

- Takeyama, S.; Ono, S.; Kumazawa, I. Robust and effective hyperspectral pansharpening using spatio-spectral total variation. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 1603–1607.

- Takeyama, S.; Ono, S.; Kumazawa, I. Hyperspectral Pansharpening Using Noisy Panchromatic Image. In Proceedings of the Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Honolulu, HI, USA, 12–15 November 2018; pp. 880–885.

- Chan, S.H.; Khoshabeh, R.; Gibson, K.B.; Gill, P.E.; Nguyen, T.Q. An augmented Lagrangian method for total variation video restoration. IEEE Trans. Image Process. 2011, 20, 3097–3111.

- Takeyama, S.; Ono, S.; Kumazawa, I. Hyperspectral and Multispectral Data Fusion by a Regularization Considering. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 2152–2156.

- Hansen, P.C.; Nagy, J.G.; O’Leary, D.P. Deblurring Images: Matrices, Spectra, and Filtering; SIAM: Philadelphia, PA, USA, 2006.

- Combettes, P.L.; Pesquet, J.C. Proximal splitting methods in signal processing. In Fixed-Point Algorithms for Inverse Problems in Science and Engineering; Springer: Berlin/Heidelberg, Germany, 2011; pp. 185–212.

- Golub, G.H.; Loan, C.F.V. Matrix Computations, 4th ed.; Johns Hopkins University Press: Baltimore, MD, USA, 2012.

- Chambolle, A.; Pock, T. A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 2010, 40, 120–145.

- Combettes, P.L.; Pesquet, J.C. Primal-dual splitting algorithm for solving inclusions with mixtures of composite, Lipschitzian, and parallel-sum type monotone operators. Set-Valued Var. Anal. 2012, 20, 307–330.

- Condat, L. A primal-dual splitting method for convex optimization involving Lipschitzian, proximable and linear composite terms. J. Optim. Theory Appl. 2013, 158, 460–479.

- Ono, S.; Yamagishi, M.; Miyata, T.; Kumazawa, I. Image restoration using a stochastic variant of the alternating direction method of multipliers. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016.

- Chambolle, A.; Ehrhardt, M.J.; Richtárik, P.; Schonlieb, C.B. Stochastic primal-dual hybrid gradient algorithm with arbitrary sampling and imaging applications. SIAM J. Optim. 2018, 28, 2783–2808.

- Combettes, P.L.; Pesquet, J.C. Stochastic forward-backward and primal-dual approximation algorithms with application to online image restoration. In Proceedings of the 2016 24th European Signal Processing Conference (EUSIPCO), Budapest, Hungary, 29 August–2 September 2016; pp. 1813–1817.

- Ono, S. Efficient constrained signal reconstruction by randomized epigraphical projection. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 4993–4997.

- SpecTIR. Available online: http://www.spectir.com/free-data-samples/ (accessed on 4 July 2016).

- MultiSpec. Available online: https://engineering.purdue.edu/biehl/MultiSpec (accessed on 4 July 2016).

- GIC. Available online: http://www.ehu.eus/ccwintco/index.php?title=Hyperspectral_Remote_Sensing_Scenes (accessed on 4 July 2016).

- Liu, W.; Lee, J. A 3-D Atrous Convolution Neural Network for Hyperspectral Image Denoising. IEEE Trans. Geosci. Remote Sens. 2019.

- Gou, Y.; Li, B.; Liu, Z.; Yang, S.; Peng, X. CLEARER: Multi-Scale Neural Architecture Search for Image Restoration. In Proceedings of the NeurIPS 2020: Thirty-Fourth Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Baraniuk, R.G. Compressive sensing. IEEE Signal Process. Mag. 2007, 24, 118–121.

- Candès, E.; Wakin, M. An introduction to compressive sampling. IEEE Signal Process. Mag. 2008, 25, 21–30.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).