
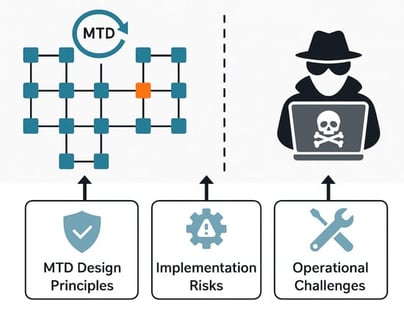

Rethinking the Security Assurances of MTD: A Gap Analysis for Network Defense

- Łukasz Jalowski
- Marek Zmuda
- Paulina Rekosz
- One additional author

Moving Target Defense (MTD) is a paradigm that has the potential to revolutionize the approach to network security. Although a significant number of papers have been published on the topic, there are still no standards and no dominant implementations of the concept. This article identifies, and attempts to bridge, the gaps in understanding various aspects of MTD security, and it defines the research directions needed to implement MTD techniques in real-world scenarios. It examines the security of MTD's key design principles, considers the problems of applying MTD to real networks, and finally addresses threat modeling in the context of MTD. By bringing together a range of MTD-related security aspects, some of which are not typically discussed in the literature, this work aims to assist in designing future MTD schemes, help designers avoid various security pitfalls, and highlight research directions that remain insufficiently explored.

7 February 2026