1. Introduction

The Internet of Vehicles (IoV) and autonomous driving technologies have fundamentally transformed vehicular networks, giving rise to vehicular edge computing (VEC) [1]. VEC deploys computing and storage resources at the network edge, including roadside units (RSUs) and base stations, enabling vehicles to offload computation-intensive tasks locally. This paradigm shift supports latency-sensitive applications such as real-time traffic prediction, autonomous navigation, and collaborative perception [2,3]. These applications rely on sophisticated AI models trained on vehicle-generated data that capture road conditions, traffic patterns, and driving behaviors.

Conventional AI model training aggregates data from all vehicles at a central cloud server [4]. However, this centralized approach faces critical limitations in VEC environments. First, transmitting massive volumes of raw vehicular data incurs prohibitive communication overhead and latency, undermining the requirements of real-time applications. Second, centralized data collection raises severe privacy concerns, as vehicular data often contain sensitive information, including location trajectories and personal driving patterns [5].

Federated learning (FL) offers a compelling alternative by enabling collaborative model training without sharing raw data [6]. In FL, vehicles train models locally and exchange only model parameters (e.g., gradients or weights) with an edge server, which aggregates these updates to refine a global model [7]. This decentralized approach reduces communication overhead while preserving data privacy.

However, deploying FL in dynamic and untrusted VEC environments presents three fundamental challenges [8]:

Challenge 1: Network Dynamics and Heterogeneity: High-speed vehicle mobility causes intermittent connectivity and rapid topology changes. Moreover, vehicles exhibit substantial heterogeneity in computational resources, data distributions (which are typically non-independent and identically distributed, or non-IID), and availability. Under these conditions, random participant selection can severely degrade model convergence and training stability [9].

Challenge 2: Security and Trust: The open nature of VEC environments exposes FL to various attacks. Malicious vehicles may inject poisoned model updates to compromise global model performance or launch Sybil attacks to gain disproportionate influence [10]. Without robust security mechanisms, the integrity of collaboratively trained models remains vulnerable.

Challenge 3: Incentive Mechanisms: FL participation consumes valuable vehicle resources, including computation, energy, and bandwidth. Rational vehicle owners require adequate compensation to contribute their resources; otherwise, insufficient participation and free-riding behaviors emerge, undermining the collaborative ecosystem [11].

To address these interrelated challenges, we propose DTB-FL, a novel framework that synergistically integrates digital twin (DT) and blockchain (BC) technologies with federated learning in VEC. Our framework leverages DT to create high-fidelity virtual replicas of the physical VEC network, capturing real-time vehicle dynamics, network conditions, and resource availability. These digital twins enable predictive analytics for proactive participant selection—the edge server selects vehicles predicted to maintain stable connectivity and sufficient resources throughout training rounds, thereby enhancing efficiency and convergence.

Simultaneously, we employ blockchain technology to establish a decentralized, transparent, and tamper-proof infrastructure for security and incentive management. Our reputation-based smart contract evaluates model update quality and assigns dynamic reputation scores to participants. These scores weight contributions during model aggregation, mitigating the impact of malicious updates. Additionally, the smart contract automates fair and transparent reward distribution, incentivizing honest and reliable participation.

The main contributions of this paper are as follows:

Novel Three-Layer Architecture: We design DTB-FL, the first framework to synergistically integrate digital twin, blockchain, and federated learning for addressing network dynamics, security threats, and incentive challenges in VEC environments.

DT-Driven Participant Selection: We develop a proactive selection algorithm that leverages digital twin predictive analytics to optimize vehicle selection, significantly improving FL convergence speed and stability under high mobility.

Blockchain-Based Security and Incentives: We implement a reputation management system and automated reward mechanism through smart contracts, ensuring model integrity while motivating sustained vehicle participation.

Comprehensive Evaluation: We demonstrate through extensive simulations that DTB-FL achieves superior model accuracy, training efficiency, and attack resilience compared to state-of-the-art baselines.

The remainder of this paper is organized as follows:

Section 2 reviews related work;

Section 3 presents the system model and problem formulation;

Section 4 details the DTB-FL framework design;

Section 5 analyzes convergence and complexity;

Section 6 evaluates performance through simulations;

Section 7 concludes this paper.

DTB-FL is particularly suited for safety-critical vehicular applications such as cooperative perception, traffic prediction, and road hazard detection, where model trustworthiness is paramount. The increasing availability of powerful onboard computing resources and 5G/C-V2X communication infrastructure in modern connected vehicles [12] makes the deployment of DTB-FL increasingly practical.

3. System Model and Problem Formulation

This section presents the DTB-FL system architecture, including the network model, federated learning process, digital twin construction, blockchain integration, and threat model. We then formulate the optimization problem that captures the trade-offs among training efficiency, security, and incentive provision.

Figure 1 illustrates the overall system architecture, while Table 2 summarizes the key notations used throughout this paper.

3.1. Network Model

We consider a vehicular edge computing (VEC) network comprising an edge server (ES), multiple roadside units (RSUs), and $N$ vehicles denoted by $\mathcal{V} = \{1, 2, \ldots, N\}$. The ES orchestrates the federated learning process through RSUs, which provide network connectivity to vehicles. Each vehicle $i \in \mathcal{V}$ possesses the following capabilities:

A local dataset $\mathcal{D}_i$ of size $D_i = |\mathcal{D}_i|$ generated by onboard sensors;

Computational resources characterized by CPU frequency $f_i(t)$ at time $t$;

Communication capability with uplink data rate
$$r_i(t) = B \log_2\left(1 + \frac{p_i h_i(t)}{\sigma^2}\right),$$
where $B$ denotes the allocated bandwidth, $p_i$ is the transmission power, $h_i(t)$ is the time-varying channel gain, and $\sigma^2$ represents the noise power.
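The uplink rate expression can be evaluated directly. The following Python sketch is illustrative only: the function name `uplink_rate` and the example values (1 MHz bandwidth and a received signal-to-noise ratio of 15) are hypothetical and not taken from the paper's experiments.

```python
import math


def uplink_rate(bandwidth_hz: float, tx_power_w: float,
                channel_gain: float, noise_power_w: float) -> float:
    """Shannon-capacity uplink rate B * log2(1 + p * h / sigma^2), in bit/s."""
    snr = tx_power_w * channel_gain / noise_power_w  # received signal-to-noise ratio
    return bandwidth_hz * math.log2(1.0 + snr)


# Hypothetical example: SNR = 0.1 * 1.5e-6 / 1e-8 = 15, so log2(16) = 4 bit/s/Hz.
rate = uplink_rate(bandwidth_hz=1e6, tx_power_w=0.1,
                   channel_gain=1.5e-6, noise_power_w=1e-8)
print(f"{rate / 1e6:.2f} Mbit/s")  # prints "4.00 Mbit/s"
```

Because the channel gain changes as the vehicle moves, evaluating the same expression on a predicted gain yields a forecast rate rather than a snapshot.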

Vehicle mobility causes significant temporal variations in the channel gain $h_i(t)$, leading to highly dynamic network conditions that complicate participant selection and resource allocation.

3.2. Federated Learning Model

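The objective is to collaboratively train a global model parameterized by $\mathbf{w}$ that minimizes the weighted average loss across all vehicles:
$$\min_{\mathbf{w}} F(\mathbf{w}) = \sum_{i=1}^{N} \frac{D_i}{D} F_i(\mathbf{w}), \qquad D = \sum_{i=1}^{N} D_i,$$
where $F_i(\mathbf{w})$ represents vehicle $i$'s local loss function evaluated on its dataset $\mathcal{D}_i$. The training process proceeds iteratively over multiple communication rounds as follows: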

Participant Selection: At round $t$, the ES selects a subset of vehicles $\mathcal{S}_t \subseteq \mathcal{V}$ based on digital twin predictions of their future availability and channel conditions.

Model Distribution: The current global model parameters $\mathbf{w}^t$ are broadcast to all selected vehicles in $\mathcal{S}_t$.

Local Training: Each selected vehicle performs local stochastic gradient descent (SGD) for a fixed number of epochs to compute its model update $\Delta\mathbf{w}_i^t$.

Update Upload: Vehicles transmit their local model updates to the ES via their associated RSUs.

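Secure Aggregation: The ES aggregates the received updates using blockchain-verified reputation-based weights:
$$\mathbf{w}^{t+1} = \mathbf{w}^t + \sum_{i \in \mathcal{S}_t} \alpha_i^t \, \Delta\mathbf{w}_i^t,$$
where $\alpha_i^t$ represents vehicle $i$'s aggregation weight, which is determined by its reputation score maintained on the blockchain. The weights satisfy $\sum_{i \in \mathcal{S}_t} \alpha_i^t = 1$ and $\alpha_i^t \geq 0$.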
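As a minimal sketch of the aggregation step, the Python code below assumes, following the reputation-weighted aggregation phase described in Section 4.1.4, that each weight is proportional to the product of the vehicle's reputation score and its dataset size, normalized over the selected set. The additive-update form and the function names `aggregation_weights` and `aggregate` are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def aggregation_weights(reputations, dataset_sizes):
    """Weights proportional to reputation * dataset size, normalized to sum to 1."""
    raw = np.asarray(reputations, dtype=float) * np.asarray(dataset_sizes, dtype=float)
    total = raw.sum()
    if total <= 0:
        raise ValueError("need at least one participant with positive reputation and data")
    return raw / total


def aggregate(global_w, updates, reputations, dataset_sizes):
    """Return the new global model w + sum_i alpha_i * delta_i and the weights used."""
    alpha = aggregation_weights(reputations, dataset_sizes)
    deltas = np.stack([np.asarray(u, dtype=float) for u in updates])  # shape (K, d)
    return np.asarray(global_w, dtype=float) + alpha @ deltas, alpha


# Hypothetical round: the third update is adversarial and its sender has zero reputation.
new_w, alpha = aggregate(
    global_w=[0.0, 0.0],
    updates=[[1.0, 0.0], [0.0, 1.0], [10.0, 10.0]],
    reputations=[0.9, 0.9, 0.0],
    dataset_sizes=[100, 100, 100],
)
print(new_w, alpha)
```

A zero-reputation sender contributes nothing to the new model, which is how reputation weighting is meant to bound malicious influence.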

3.3. Digital Twin Layer

The digital twin layer maintains real-time virtual replicas of all vehicles to enable predictive participant selection and proactive resource management. Each vehicle's digital twin $DT_i$ continuously tracks a comprehensive state vector:
$$\mathbf{s}_i(t) = \left[\mathbf{p}_i(t), \mathbf{v}_i(t), h_i(t), f_i(t), R_i(t)\right],$$
where $\mathbf{p}_i(t)$ represents the vehicle's 2D coordinate position, $\mathbf{v}_i(t)$ denotes the velocity vector, $h_i(t)$ is the channel gain, $f_i(t)$ represents the computational capacity (CPU frequency), and $R_i(t)$ is the blockchain-maintained reputation score. Crucially, these state parameters are obtained through infrastructure-based measurements conducted by RSUs and the ES, as detailed in Section 4.2.2. This infrastructure-centric approach ensures data trustworthiness despite the potentially untrusted nature of vehicles, preventing malicious vehicles from falsifying their reported states.

3.3.1. Map-Constrained Mobility Modeling

The digital twin layer incorporates a digital road map $\mathcal{G} = (\mathcal{R}, \mathcal{I})$, where $\mathcal{R}$ denotes the set of all valid coordinates on road segments, and $\mathcal{I} \subseteq \mathcal{R}$ represents the set of coordinates at intersections. Vehicle positions are constrained to the road network topology, i.e., $\mathbf{p}_i(t) \in \mathcal{R}$. This map-based constraint enables realistic mobility prediction that conforms to actual road infrastructure rather than unconstrained two-dimensional motion.

3.3.2. Predictive State Estimation

The digital twin employs a combination of model-based and machine learning-based predictors to forecast future vehicle states over a prediction horizon $\tau$, yielding the predicted state $\hat{\mathbf{s}}_i(t+\tau)$. The prediction methodology comprises three complementary components:

Mobility Prediction: For short-term trajectory forecasting (time horizons of 5 to 30 s), an extended Kalman filter (EKF) predicts future positions by integrating current velocity estimates with kinematic models. The EKF prediction incorporates road network constraints through a projection operation: if the unconstrained prediction yields a position outside the valid road network ($\hat{\mathbf{p}}_i(t+\tau) \notin \mathcal{R}$), the predicted position is projected onto the nearest valid road coordinate. For longer time horizons (beyond 30 s), the digital twin employs a map-matching algorithm that considers (i) the vehicle's current road segment, (ii) its heading direction, and (iii) probabilistic turning behavior at intersections derived from historical traffic patterns.

Channel Prediction: An LSTM network trained on historical channel measurements forecasts future channel conditions. The training process leverages the spatial correlation between vehicle positions on specific road segments and observed channel quality. By learning location-dependent propagation patterns, the LSTM enables accurate channel prediction conditioned on the predicted future position $\hat{\mathbf{p}}_i(t+\tau)$.

Computational Resource Prediction: The digital twin employs ARIMA-based time-series analysis to predict computational resource availability patterns. The prediction model accounts for temporal factors such as time of day and contextual factors such as vehicle type (e.g., personal vehicles may have different usage patterns than commercial vehicles). Computational capacity predictions are validated against the resource attestation mechanisms described in Section 4.2.2.
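The short-horizon mobility step can be sketched as follows. Because a constant-velocity motion model is linear, the EKF prediction step reduces to the standard Kalman prediction shown here; a nonlinear kinematic model (e.g., one with a turn rate) would require Jacobians instead. As assumptions for illustration, the road network is represented by a list of straight line segments, the process-noise intensity `q` is a hypothetical tuning parameter, and `cv_predict` and `project_to_road` are hypothetical names.

```python
import numpy as np


def cv_predict(x, P, dt, q=0.5):
    """Kalman prediction for a constant-velocity model.

    x: state [px, py, vx, vy]; P: 4x4 covariance; q: acceleration-noise intensity (hypothetical).
    """
    F = np.array([[1.0, 0.0, dt, 0.0],
                  [0.0, 1.0, 0.0, dt],
                  [0.0, 0.0, 1.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0]])
    # Discrete white-noise-acceleration covariance for one axis (position, velocity).
    g = q * np.array([[dt**4 / 4, dt**3 / 2],
                      [dt**3 / 2, dt**2]])
    Q = np.zeros((4, 4))
    Q[np.ix_([0, 2], [0, 2])] = g  # x-axis block
    Q[np.ix_([1, 3], [1, 3])] = g  # y-axis block
    return F @ x, F @ P @ F.T + Q


def project_to_road(p, segments):
    """Return the closest point to p on any road segment ((ax, ay), (bx, by))."""
    p = np.asarray(p, dtype=float)
    best, best_dist = None, np.inf
    for a, b in segments:
        a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
        ab = b - a
        s = np.clip(np.dot(p - a, ab) / np.dot(ab, ab), 0.0, 1.0)  # position along segment
        foot = a + s * ab
        dist = np.linalg.norm(p - foot)
        if dist < best_dist:
            best, best_dist = foot, dist
    return best


# Hypothetical example: a vehicle drifting off a straight east-west road.
road = [((0.0, 0.0), (100.0, 0.0)), ((100.0, 0.0), (100.0, 100.0))]
x0 = np.array([0.0, 0.0, 10.0, 1.0])
P0 = np.eye(4)
x_pred, P_pred = cv_predict(x0, P0, dt=5.0)
p_road = project_to_road(x_pred[:2], road)  # unconstrained (50, 5) snaps to (50, 0)
```

Projecting a point that already lies on a segment returns the point itself, so the sketch can apply the projection unconditionally instead of first testing road-network membership.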

3.3.3. Prediction-Based Participant Selection

The map-aware predictive approach ensures that forecasted vehicle states remain physically plausible, thereby improving the reliability of proactive participant selection. The selection algorithm assigns lower priority to vehicles predicted to move into road segments with poor RSU coverage or to vehicles approaching intersections where trajectory prediction exhibits high variance (e.g., due to multiple possible turning directions). This prediction-driven strategy enhances training round completion reliability by selecting vehicles that are likely to maintain stable connectivity throughout the training round duration.

3.4. Blockchain Integration

A permissioned blockchain maintains decentralized trust and implements the incentive mechanism, providing tamper-proof record-keeping and automated smart contract execution. The blockchain layer supports three primary functions:

Reputation Management: A reputation smart contract evaluates the quality of each vehicle's submitted model update and updates its reputation score accordingly:
$$R_i^{t+1} = \lambda R_i^t + (1 - \lambda) Q_i^t,$$
where $Q_i^t$ measures the quality of vehicle $i$'s update (detailed in Section 4.3.1), and $\lambda \in [0, 1]$ is a momentum factor that balances historical reputation with recent performance. The reputation score is bounded to $[0, 1]$, with higher values indicating more trustworthy participants.

Incentive Distribution: An incentive smart contract automatically distributes cryptocurrency rewards to participating vehicles based on their contributions:
$$I_i^t = I_{\mathrm{base}} + I_{\mathrm{perf}} \cdot \frac{R_i^t D_i}{\sum_{j \in \mathcal{S}_t} R_j^t D_j},$$
where $I_{\mathrm{base}}$ is a fixed participation reward, and $I_{\mathrm{perf}}$ is an additional performance-based reward distributed proportionally to each vehicle's reputation-weighted data contribution. This mechanism incentivizes both participation and high-quality contributions.

Update Verification: Before aggregation, the blockchain verifies the authenticity and integrity of model updates using cryptographic signatures and hash verification. Each update is cryptographically linked to its submitting vehicle’s identity, preventing impersonation attacks and ensuring non-repudiation.
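The reward rule can be illustrated with a short Python sketch. It assumes that the incentive smart contract pays each participant a fixed participation reward plus a share of a performance pool proportional to the vehicle's reputation times its dataset size; the function name `distribute_rewards` and all numbers are hypothetical.

```python
def distribute_rewards(base_reward, perf_pool, reputations, dataset_sizes):
    """Reward_i = base + pool * (R_i * D_i) / sum_j (R_j * D_j) for each participant i."""
    contrib = {i: reputations[i] * dataset_sizes[i] for i in reputations}
    total = sum(contrib.values())
    return {
        i: base_reward + (perf_pool * c / total if total > 0 else 0.0)
        for i, c in contrib.items()
    }


# Hypothetical round with two participants holding equal data but different reputations.
rewards = distribute_rewards(
    base_reward=1.0,
    perf_pool=10.0,
    reputations={"v1": 0.8, "v2": 0.2},
    dataset_sizes={"v1": 100, "v2": 100},
)
print(rewards)  # {'v1': 9.0, 'v2': 3.0}
```

Whenever at least one participant has positive reputation-weighted data, the round's total payout equals the number of participants times the base reward plus the full performance pool.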

3.5. Threat Model

We consider a realistic threat model that reflects the security challenges in vehicular federated learning:

Trusted Entities: The edge server (ES) and roadside units (RSUs) are assumed to be trusted infrastructure components operated by reliable network providers. These entities correctly execute the protocol specifications and do not collude with adversaries.

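Untrusted Vehicles: We assume that a subset of vehicles $\mathcal{M} \subset \mathcal{V}$ is controlled by adversaries, where $|\mathcal{M}| = \rho N$ for some attack fraction $\rho \in [0, 1)$. Honest vehicles (those in $\mathcal{V} \setminus \mathcal{M}$) follow the protocol correctly.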

Adversarial Capabilities: Malicious vehicles can execute the following attacks:

Model Poisoning: A malicious vehicle can submit a crafted model update designed to degrade global model performance or introduce backdoor triggers.

Sybil Attacks: Adversaries may attempt to create multiple fake vehicle identities to gain disproportionate influence over the aggregation process.

Free-Riding: Malicious vehicles may submit low-quality or random updates to minimize resource expenditure while attempting to claim rewards.

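Adversarial Objective: The adversary seeks to maximize the attack's impact on model performance while evading detection by the reputation system. Formally, the adversary solves the following:
$$\max_{\{\Delta\tilde{\mathbf{w}}_j^t\}_{j \in \mathcal{M} \cap \mathcal{S}_t}} \; F(\tilde{\mathbf{w}}^{t+1}) - F(\mathbf{w}^{t+1}) \quad \text{s.t.} \quad Q_j^t \geq Q_{\mathrm{th}}, \;\; \forall j \in \mathcal{M} \cap \mathcal{S}_t,$$
where $\tilde{\mathbf{w}}^{t+1}$ is the global model resulting from aggregating poisoned updates, $\mathbf{w}^{t+1}$ is the model that would result from honest updates only, and $Q_{\mathrm{th}}$ represents the quality threshold below which updates are flagged as suspicious.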

3.6. Problem Formulation

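Building on the models presented above, we formulate a multi-objective optimization problem that minimizes the system operator's total cost (comprising training time and reward payments) while maintaining model accuracy and security guarantees. The optimization operates over $T$ training rounds:
$$\begin{aligned}
\min_{\{\mathcal{S}_t, \boldsymbol{\alpha}^t\}_{t=1}^{T}} \quad & \sum_{t=1}^{T} \left( \omega_1 T_t + \omega_2 C_t \right) \\
\text{s.t.} \quad \mathrm{C1}: \; & \mathrm{Acc}(\mathbf{w}^{T}) \geq A_{\mathrm{th}}, \\
\mathrm{C2}: \; & |\mathcal{S}_t| = K, \;\; \forall t, \\
\mathrm{C3}: \; & \textstyle\sum_{i \in \mathcal{S}_t \cap \mathcal{M}} \alpha_i^t \leq \epsilon, \;\; \forall t, \\
\mathrm{C4}: \; & R_i^t \geq R_{\min}, \;\; \forall i \in \mathcal{S}_t, \; \forall t, \\
\mathrm{C5}: \; & \textstyle\sum_{i \in \mathcal{S}_t} \alpha_i^t = 1, \;\; \alpha_i^t \geq 0, \;\; \forall t,
\end{aligned}$$
where the objective function components and constraints are defined as follows: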

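Objective Function: The first term $T_t$ represents the duration of training round $t$, given by the following:
$$T_t = \max_{i \in \mathcal{S}_t} \left( T_i^{\mathrm{cmp}} + T_i^{\mathrm{com}} \right),$$
where $T_i^{\mathrm{cmp}} = \frac{c \, D_i}{f_i(t)}$ is vehicle $i$'s computation time ($c$ denotes the CPU cycles required per data sample), and $T_i^{\mathrm{com}} = \frac{S}{r_i(t)}$ is the communication time for transmitting an update of size $S$ bits. The second term $C_t = \sum_{i \in \mathcal{S}_t} I_i^t$ represents the total reward cost in round $t$. The weight parameters $\omega_1, \omega_2 \geq 0$ balance the relative importance of minimizing training time versus operational costs.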

Constraint C1 (Accuracy Requirement): The final trained model must achieve at least the target accuracy threshold $A_{\mathrm{th}}$ on a held-out validation dataset.

Constraint C2 (Participation Level): Exactly $K$ vehicles are selected in each round to maintain consistent aggregation quality and predictable resource consumption.

Constraint C3 (Malicious Influence Bound): The cumulative aggregation weight assigned to malicious vehicles (if any are inadvertently selected) must not exceed $\epsilon$, limiting their potential to corrupt the global model. This constraint is enforced through reputation-based weight assignment.

Constraint C4 (Minimum Reputation): Only vehicles with reputation scores at or above the threshold $R_{\min}$ are eligible for selection, excluding previously identified malicious actors.

Constraint C5 (Valid Aggregation Weights): The aggregation weights must form a valid probability distribution over the selected participants.
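To make the objective concrete, the Python sketch below evaluates one round's duration (the slowest selected vehicle's computation time plus upload time) and its weighted per-round cost. The dictionary layout, the function names `round_duration` and `round_cost`, and all numbers are hypothetical.

```python
def round_duration(selected, cycles_per_sample, update_bits):
    """Round duration: max over selected vehicles of c * D_i / f_i + S / r_i (seconds).

    selected maps vehicle id -> (D_i samples, f_i in Hz, r_i in bit/s).
    """
    return max(
        cycles_per_sample * d / f + update_bits / r
        for d, f, r in selected.values()
    )


def round_cost(duration, rewards, w_time, w_reward):
    """Weighted per-round objective: w_time * T_t + w_reward * total rewards paid."""
    return w_time * duration + w_reward * sum(rewards)


selected = {
    "v1": (1000, 1e9, 4e6),  # 1.0 s compute + 2.0 s upload
    "v2": (500, 2e9, 1e6),   # 0.25 s compute + 8.0 s upload (straggler)
}
T_t = round_duration(selected, cycles_per_sample=1e6, update_bits=8e6)
cost = round_cost(T_t, rewards=[9.0, 3.0], w_time=1.0, w_reward=0.5)
print(T_t, cost)  # 8.25 14.25
```

The example shows why the objective favors vehicles with good predicted links: a single slow uploader sets the round duration for every participant.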

This formulation captures the fundamental trade-offs in vehicular federated learning: The system must balance training efficiency (minimizing time), operational cost (minimizing rewards), model quality (meeting accuracy targets), and security (limiting adversarial influence). The challenge lies in jointly optimizing the participant selection strategy $\{\mathcal{S}_t\}$ and aggregation weights $\{\boldsymbol{\alpha}^t\}$ under dynamic vehicular network conditions, unpredictable mobility patterns, and the presence of strategic adversaries.

Section 4 presents our digital twin-enabled and blockchain-secured solution to this optimization problem.

4. The Proposed DTB-FL Framework

Building on the technical foundations described in Section 3, we now present our digital twin and blockchain-empowered federated learning (DTB-FL) framework. The framework addresses three critical challenges through targeted technology deployment:

Digital Twin Targets Network Dynamics: Predicts vehicle mobility and resource availability to proactively select stable participants.

Blockchain Targets Security and Trust: Provides tamper-proof reputation management and automated incentive distribution.

Integration Targets Efficiency: Synergistic operation in which DT enhances BC's accuracy and BC enhances DT's trustworthiness.

We first detail the framework architecture and integration principles and then elaborate on each component's operation.

4.1. Framework Architecture

The key innovation of DTB-FL lies in the synergistic integration of digital twin, blockchain, and federated learning technologies within a carefully designed three-layer architecture.

4.1.1. System Components and Layers

As illustrated in Figure 1, our architecture comprises three main layers that work in concert to enable secure and efficient vehicular federated learning.

Physical Layer: This layer consists of real-world VEC components, including vehicles equipped with sensors and computational resources, roadside units (RSUs) providing wireless connectivity, and the edge server (ES) coordinating the learning process. Vehicles collect local data (e.g., traffic conditions, road images) and perform distributed model training without sharing raw data, preserving privacy while enabling collaborative intelligence.

Digital Twin Layer: Residing at the ES, this layer maintains a real-time virtual replica of the physical vehicular network. It captures dynamic vehicle states, including position, velocity, channel quality, and computational capacity through continuous monitoring. Predictive models forecast future states over the training round duration, enabling proactive rather than reactive decision-making. This foresight is critical in highly dynamic VEC environments where vehicle mobility causes rapid topology changes.

Blockchain and Control Layer: This decentralized layer serves as the trust anchor for the entire system. A permissioned blockchain network, maintained collaboratively by the ES and RSUs, records all critical FL interactions in an immutable ledger. Smart contracts automate reputation score updates based on contribution quality and execute cryptocurrency-based reward distribution transparently. The ES acts as the central orchestrator, synthesizing information from both the DT and blockchain layers to manage participant selection, model aggregation, and security enforcement.

4.1.2. Component Roles and Interactions

Each technology serves a distinct role while contributing synergistically to system objectives.

Digital Twin as Predictive Intelligence: The DT layer maintains a synchronized virtual representation of the vehicular network, continuously monitoring vehicle states (position, velocity, channel quality, and computational capacity). Predictive models forecast future conditions over the training round horizon. Before each FL round, the DT evaluates which vehicles will likely maintain stable connectivity and sufficient resources throughout training. This predictive capability transforms reactive participant selection into proactive optimization, dramatically reducing training interruptions from vehicle mobility.

Blockchain as Trust Infrastructure: The blockchain layer provides decentralized trust through two key functions. First, it maintains an immutable record of all FL interactions (model submissions, quality evaluations, and reputation histories), creating an auditable trail that prevents retroactive tampering. Second, smart contracts automatically execute reputation updates and reward distribution based on predefined rules, eliminating the need for a trusted central authority. This creates a transparent, tamper-proof system for managing trust in an inherently untrusted vehicular environment.

Federated Learning as Collaborative Intelligence: The FL layer orchestrates distributed training, leveraging insights from both DT and blockchain components. DT predictions enable participant selection optimizing for training completion probability, while blockchain reputation scores weight model aggregation to suppress malicious contributions. Critically, FL provides the feedback loop—actual training performance refines DT predictions, and gradient quality scores update blockchain reputations. This bidirectional flow enables FL to operate efficiently despite network dynamics and securely despite adversaries, achieving both performance and robustness.

The synergy emerges because each technology addresses a gap in the others: DT handles dynamic uncertainty (mobility and resources), BC handles trust uncertainty (malicious actors and incentives), and FL provides a collaborative learning substrate that benefits from both while feeding back performance data to improve their accuracy.

4.1.3. Bidirectional Integration

The three components interact through bidirectional flows that create a self-improving system through two mechanisms:

Feedforward Integration (DT/BC → FL): The DT provides predictive guidance by identifying vehicles predicted to maintain stable connectivity and adequate resources throughout training. The blockchain provides trust weights via reputation scores reflecting historical contribution quality. These inputs combine via the synergy utility function (Equation (10)), which weights DT-predicted performance by blockchain-verified trust, optimizing both efficiency and security.

Feedback Integration (FL → DT/BC): FL performance metrics—actual completion times and quality scores—enable the DT to self-correct its predictions through online learning. Simultaneously, FL gradient analysis provides quality scores to blockchain smart contracts, updating reputations to reflect actual contribution value rather than claimed capabilities. The blockchain’s accumulated reputation history enhances DT predictions by identifying consistently reliable vehicles, creating a virtuous cycle where past behavior informs future selection.

This bidirectional reinforcement creates two critical synergies.

DT Enhances Blockchain Security: Proactive DT-based selection creates a stable, reliable cohort each round. This stability acts as a noise filter for the reputation system: by minimizing dropouts and selecting high-performing participants, the DT ensures that honest gradients are more consistent in direction and magnitude. This homogeneity makes cosine similarity-based quality evaluation more effective at identifying malicious updates, which stand out as directional anomalies. Without DT guidance, random sampling would include unstable vehicles whose dropouts or degraded performance create noise that masks adversarial behavior.

Blockchain Enhances DT Selection: The blockchain provides a critical trust metric feeding back to the digital twin. Historical trustworthiness is as important as predicted performance. Therefore, we incorporate the reputation score $R_i^t$ into the DT utility function:
$$U_i^t = R_i^t \left( \beta \frac{\hat{r}_i^t}{r_{\max}} + (1 - \beta) \frac{\hat{f}_i^t}{f_{\max}} \right), \tag{10}$$
where $\hat{r}_i^t$ and $\hat{f}_i^t$ are the DT-predicted data rate and CPU frequency, $r_{\max}$ and $f_{\max}$ are normalization constants, and $\beta \in [0, 1]$ balances communication versus computation. This multiplicative integration ensures that low-reputation vehicles receive proportionally reduced utility scores regardless of predicted capability, preventing repeated selection of high-performing but malicious vehicles and hardening FL against persistent adversaries.

4.1.4. High-Level Workflow

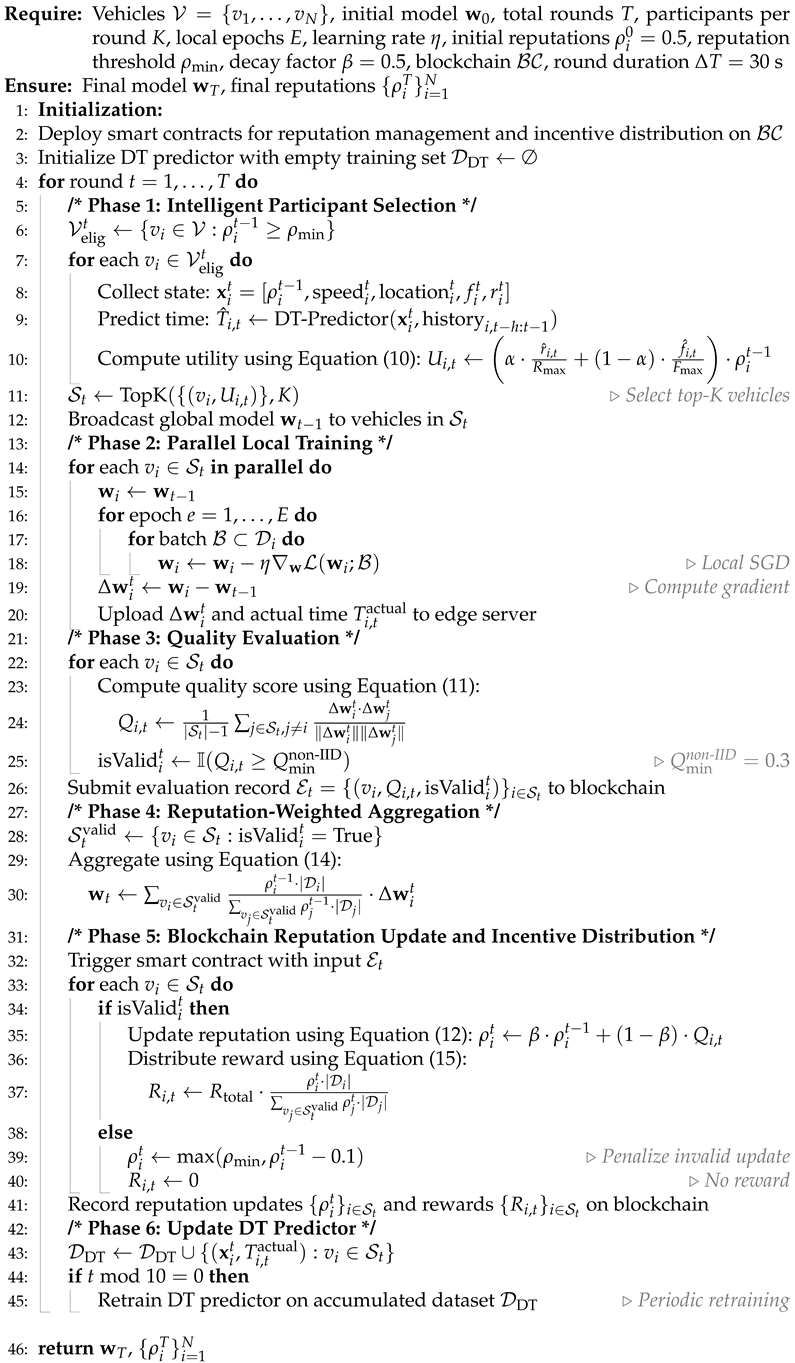

The integrated system operates through six coordinated phases in each federated learning round (see Algorithm 2):

Intelligent Participant Selection: The ES combines blockchain reputation scores and DT-predicted vehicle states to select the top-K participants via the synergy utility function.

Parallel Local Training: Selected vehicles train on private data and upload model updates along with actual training times to the ES.

Quality Evaluation: The ES evaluates update quality using cosine similarity analysis, classifying contributions as valid or invalid based on the threshold $Q_{\mathrm{th}}$.

Reputation-Weighted Aggregation: Valid updates are aggregated with weights proportional to reputation scores and dataset sizes, producing the new global model.

Blockchain Updates and Incentives: Smart contracts automatically update reputation scores based on quality evaluations and distribute cryptocurrency rewards accordingly.

DT Predictor Refinement: Actual training times are used to retrain the DT predictor periodically, improving prediction accuracy for future rounds.

This workflow creates a self-improving cycle: Accurate DT predictions enable better participant selection, leading to higher-quality updates, which refine reputation scores and enhance future predictions. The detailed technical implementation of each phase is presented in Section 4.4.

4.2. Digital Twin-Based Participant Selection

To mitigate the effects of high mobility and resource heterogeneity in vehicular edge computing, we design a proactive participant selection mechanism that leverages the predictive capabilities of the digital twin. Rather than selecting vehicles based solely on current status snapshots, our approach identifies those predicted to remain suitable throughout the upcoming training round.

4.2.1. Utility-Based Selection Strategy

The selection is guided by the synergy utility function defined in Equation (10), which quantifies the suitability of vehicle $i$ for round $t$. This function prioritizes vehicles with higher predicted communication rates and computational capabilities while incorporating trust via reputation scores, thereby minimizing the expected round completion time (i.e., the round duration $T_t$ formulated in Section 3).

Using the forecasted state $\hat{\mathbf{s}}_i(t+\tau)$ from the DT, we estimate the future data rate $\hat{r}_i^t$ and CPU frequency $\hat{f}_i^t$ that the vehicle is expected to maintain during training. The parameters $r_{\max}$ and $f_{\max}$ represent the maximum achievable data rate and CPU frequency in the network for normalization, and $\beta$ balances communication and computation priorities based on whether the FL task is model-size-limited or computation-limited.

At the start of round $t$, the ES first filters vehicles by a minimum reputation threshold $R_{\min}$ to exclude consistently poor performers or adversaries. For the remaining candidates, it computes $U_i^t$ and selects the top-$K$ vehicles to form the participant set $\mathcal{S}_t$. This proactive strategy reduces training interruptions from vehicle dropouts and resource bottlenecks, enhancing both FL efficiency and stability. The procedure is formalized in Algorithm 1.

Algorithm 1. DT-Based Proactive Participant Selection.
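A minimal Python sketch of the selection procedure described above (reputation filtering followed by top-K ranking by the synergy utility) is shown below. It is not the paper's Algorithm 1 listing: it assumes the DT has already produced predicted data rates and CPU frequencies for each candidate, treats the reputation threshold as inclusive, and computes the normalization constants as maxima over all candidates. These choices, the function names `synergy_utility` and `select_participants`, and the example values are assumptions.

```python
def synergy_utility(rep, r_hat, f_hat, r_max, f_max, beta):
    """U_i = R_i * (beta * r_hat / r_max + (1 - beta) * f_hat / f_max)."""
    return rep * (beta * r_hat / r_max + (1.0 - beta) * f_hat / f_max)


def select_participants(candidates, k, r_min, beta=0.5):
    """Return up to k vehicle ids with the highest utility among those with R_i >= r_min.

    candidates maps vehicle id -> (reputation, predicted rate in bit/s, predicted CPU Hz).
    """
    r_max = max(c[1] for c in candidates.values())  # network-wide normalization
    f_max = max(c[2] for c in candidates.values())
    eligible = [i for i, c in candidates.items() if c[0] >= r_min]
    eligible.sort(key=lambda i: synergy_utility(*candidates[i], r_max, f_max, beta),
                  reverse=True)
    return eligible[:k]


candidates = {
    "a": (0.9, 8e6, 2e9),
    "b": (0.9, 2e6, 1e9),
    "c": (0.2, 10e6, 3e9),  # most capable, but low reputation
    "d": (0.6, 10e6, 3e9),
}
print(select_participants(candidates, k=2, r_min=0.3))  # ['a', 'd']
```

The multiplicative reputation term matters here: with `r_min=0.3`, the most capable vehicle `c` is filtered out, and even without the filter its low reputation ranks it last.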

Map-Aware Utility Computation: The utility function in Equation (10) leverages map-constrained predictions from the digital twin. For a vehicle $i$ traveling on a given road segment, the DT predicts not only future channel quality but also the likelihood of maintaining RSU coverage based on the road trajectory and network topology. This coverage consideration is implicitly captured through the predicted channel quality $\hat{h}_i^t$: vehicles on road segments with poor RSU coverage or approaching coverage boundaries exhibit degraded channel predictions, resulting in lower predicted data rates $\hat{r}_i^t$ and, consequently, lower utility scores. This geographic awareness prevents the selection of participants likely to experience disconnection mid-training, even if their current channel conditions appear favorable.

4.2.2. Infrastructure-Based State Estimation

A critical consideration in our framework is obtaining reliable state information from potentially untrusted vehicles—a fundamental trust paradox in security-critical systems. To resolve this, the digital twin layer relies primarily on infrastructure-based measurements performed by trusted RSUs and the edge server, rather than vehicle self-reports. This ensures the integrity of the state vector even in the presence of malicious participants who might falsify their capabilities to gain selection.

Channel State Estimation ($h_i$ and $\hat{r}_i^t$): RSUs continuously monitor the wireless channel quality of connected vehicles through standard physical layer measurements that cannot be manipulated by vehicles:

Reference Signal Received Power (RSRP) and Signal-to-Interference-Plus-Noise Ratio (SINR) are measured directly at the RSU receiver based on uplink transmissions.

The Channel Quality Indicator (CQI) is computed based on observed signal characteristics and channel reciprocity.

The predicted data rate $\hat{r}_i^t$ is calculated using the Shannon capacity formula with infrastructure-measured parameters:
$$\hat{r}_i^t = B \log_2\left(1 + \frac{p_i \hat{h}_i^t}{\sigma^2}\right),$$
where $B$ is the allocated bandwidth.

Computational Capacity Verification ($f_i$): While vehicles initially report their computational capabilities during registration, the ES employs a computational capacity attestation mechanism before each training round:

The ES sends a calibrated computational task with a known complexity of $C_{\mathrm{test}}$ CPU cycles (e.g., a standardized matrix multiplication benchmark).

Vehicle $i$ must return the correct result within the deadline $T_{\mathrm{dl}}$.

The verified computational frequency is estimated as follows:
$$\hat{f}_i^t = \frac{C_{\mathrm{test}}}{T_i^{\mathrm{test}}},$$
where $T_i^{\mathrm{test}}$ is the actual completion time measured by the ES.

Vehicles failing to meet the deadline or returning incorrect results are excluded from selection.
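The attestation rule reduces to a check followed by a division, sketched below in Python. The function names `attest_cpu` and `attested_pool` are hypothetical, and the ES is assumed to have already compared the returned result with its own reference answer (the `result_ok` flag). Because the measured completion time also includes any communication delay, this estimate is a lower bound on the vehicle's true CPU frequency.

```python
def attest_cpu(test_cycles, completion_time, deadline, result_ok):
    """Return the verified frequency C_test / T_test in Hz, or None if attestation fails."""
    if not result_ok or completion_time > deadline:
        return None  # wrong answer or missed deadline: exclude from selection
    return test_cycles / completion_time


def attested_pool(reports, test_cycles, deadline):
    """Map vehicle id -> verified frequency, keeping only vehicles that pass."""
    verified = {}
    for vid, (elapsed, ok) in reports.items():
        f_hat = attest_cpu(test_cycles, elapsed, deadline, ok)
        if f_hat is not None:
            verified[vid] = f_hat
    return verified


# Hypothetical challenge of 2e9 cycles with a 1.5 s deadline.
reports = {"v1": (1.0, True), "v2": (2.0, True), "v3": (0.5, False)}
print(attested_pool(reports, test_cycles=2e9, deadline=1.5))  # {'v1': 2000000000.0}
```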

Mobility Tracking ($\mathbf{p}_i$ and $\mathbf{v}_i$): Vehicle positions are determined through infrastructure-based localization, assuming that RSUs are time-synchronized via GPS or the network time protocol:

Multiple RSUs perform time difference of arrival (TDoA) measurements using vehicle uplink signals.

Position is estimated by multilateration from at least three RSU measurements, with sub-meter accuracy.

Velocity is derived from consecutive position estimates: $\mathbf{v}_i(t) = \frac{\mathbf{p}_i(t) - \mathbf{p}_i(t - \Delta t)}{\Delta t}$.
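The localization steps can be sketched with a Gauss-Newton solver for noise-free 2D TDoA measurements, followed by the finite-difference velocity estimate. The solver choice, the four-RSU layout, and the function names are illustrative assumptions: the text does not specify which multilateration algorithm is used, and a real deployment would also need to handle measurement noise and residual clock offsets.

```python
import numpy as np

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tdoa_locate(anchors, tdoas, x0=None, iters=50, tol=1e-9):
    """Estimate a 2D position from TDoA measurements with Gauss-Newton.

    anchors: (M, 2) RSU positions in metres, M >= 3.
    tdoas:   (M - 1,) arrival-time differences t_k - t_0 in seconds, k = 1..M-1.
    """
    anchors = np.asarray(anchors, dtype=float)
    range_diff = SPEED_OF_LIGHT * np.asarray(tdoas, dtype=float)  # metres
    p = anchors.mean(axis=0) if x0 is None else np.asarray(x0, dtype=float)
    for _ in range(iters):
        dist = np.linalg.norm(anchors - p, axis=1)    # distance to every RSU
        resid = (dist[1:] - dist[0]) - range_diff     # model minus measurement
        unit = (p - anchors) / dist[:, None]          # d(dist_k)/dp for each RSU
        J = unit[1:] - unit[0]                        # Jacobian of the range differences
        step, *_ = np.linalg.lstsq(J, -resid, rcond=None)
        p = p + step
        if np.linalg.norm(step) < tol:
            break
    return p


def finite_difference_velocity(p_now, p_prev, dt):
    """v(t) = (p(t) - p(t - dt)) / dt."""
    return (np.asarray(p_now, dtype=float) - np.asarray(p_prev, dtype=float)) / dt


# Hypothetical layout: four RSUs at the corners of a 200 m x 200 m area.
rsus = np.array([[0.0, 0.0], [200.0, 0.0], [0.0, 200.0], [200.0, 200.0]])
true_p = np.array([60.0, 30.0])
d = np.linalg.norm(rsus - true_p, axis=1)
tdoas = (d[1:] - d[0]) / SPEED_OF_LIGHT
est = tdoa_locate(rsus, tdoas)
vel = finite_difference_velocity([70.0, 30.0], est, dt=1.0)
```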

This infrastructure-centric approach ensures that the digital twin operates on trustworthy data, maintaining the integrity of the proactive selection mechanism even when vehicles are potentially malicious. The utility function in Equation (10) thus uses only verified values $\hat{r}_i^t$ and $\hat{f}_i^t$, not self-reported claims, preventing adversaries from gaming the selection process through capability inflation.

4.3. Blockchain-Based Security and Incentives

To counter security threats from malicious participants and address incentive deficiencies in voluntary collaboration, we implement a comprehensive mechanism using a permissioned blockchain and smart contracts.

4.3.1. Reputation Management

We maintain a dynamic reputation score $R_i^t$ for each vehicle $i$, reflecting its trustworthiness based on historical contribution quality. This score is stored on the blockchain and updated after each round via a smart contract. For an update $\Delta\mathbf{w}_i^t$ from vehicle $i \in \mathcal{S}_t$, the ES evaluates quality using the cosine similarity of gradients. Let $\mathbf{g}_i^t$ denote the gradient (model update). The quality score $Q_i^t$ is computed as follows:

Under this metric, honest updates from vehicles with similar optimization objectives align closely in direction, yielding high

values (typically

), while malicious updates that inject adversarial perturbations diverge significantly, resulting in low scores. The smart contract updates reputation using an exponential moving average:

where

is the decay factor that balances historical behavior with recent performance. We set

by default to provide sufficient inertia against temporary fluctuations while remaining responsive to sustained behavioral changes.
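Equations (11) and (12) can be sketched in a few lines of plain Python: the quality score is the mean cosine similarity between a vehicle's update and every other update in the round, and reputation is an exponential moving average of that score. This is an illustrative sketch under stated assumptions: updates are flat lists of floats, an all-zero update is treated as having zero similarity (the text does not specify this case), and `beta=0.8` in the usage lines is an illustrative value, not the paper's default.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity <a, b> / (||a|| ||b||); zero-norm inputs score 0 (assumption)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def quality_scores(updates: Dict[str, List[float]]) -> Dict[str, float]:
    """Quality score of each update: mean cosine similarity with every other update."""
    scores = {}
    for i, g_i in updates.items():
        sims = [cosine(g_i, g_j) for j, g_j in updates.items() if j != i]
        scores[i] = sum(sims) / len(sims) if sims else 0.0
    return scores


def update_reputation(r_prev: float, q: float, beta: float) -> float:
    """Exponential moving average: beta * old reputation + (1 - beta) * new quality score."""
    return beta * r_prev + (1.0 - beta) * q


if __name__ == "__main__":
    round_updates = {"h1": [1.0, 0.0], "h2": [2.0, 0.0], "h3": [3.0, 0.0], "mal": [-1.0, 0.0]}
    q = quality_scores(round_updates)
    print({k: round(v, 3) for k, v in q.items()})  # {'h1': 0.333, 'h2': 0.333, 'h3': 0.333, 'mal': -1.0}
    print(round(update_reputation(0.5, q["mal"], beta=0.8), 3))  # 0.2
```

Because quality scores can be negative, repeated low scores can push a plain moving average below zero; if reputations must stay in [0, 1], an explicit clip would be needed, which the text does not specify.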

4.3.2. Cross-Edge Reputation Portability

To address vehicle mobility across edge server boundaries—a common occurrence in vehicular networks where vehicles may traverse multiple infrastructure coverage zones during their journeys—we implement a federated reputation management system that maintains reputation continuity across geographic regions.

Reputation Blockchain Federation: Multiple edge servers in a geographic region (e.g., a metropolitan area or highway corridor) form a consortium blockchain network with shared ledger access. When vehicle $i$ moves from the coverage area of edge server $\mathrm{ES}_a$ to that of $\mathrm{ES}_b$, the following occurs:

$\mathrm{ES}_a$ records a reputation certificate for vehicle $i$, containing its current reputation $R_i^t$, on the shared blockchain before handover.

$\mathrm{ES}_b$ retrieves this certificate from the blockchain and initializes the vehicle with the transferred reputation $R_i^t$.

The certificate includes a cryptographic signature by $\mathrm{ES}_a$, preventing reputation tampering or forgery.
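A minimal sketch of issuing and checking a reputation certificate is shown below. It is a stand-in built on assumptions: HMAC-SHA256 from the Python standard library is used only because the standard library has no public-key signatures, so the sketch presumes a key shared within the consortium. A deployment would more plausibly use a public-key signature scheme (for example, ECDSA), so that the receiving edge server can verify with the sender's public key. The certificate fields and function names are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Any, Dict


def _canonical(payload: Dict[str, Any]) -> bytes:
    # Deterministic serialization so that signer and verifier authenticate identical bytes.
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def issue_certificate(vehicle_id: str, reputation: float, round_t: int, key: bytes) -> Dict[str, Any]:
    """Departing ES: bind the vehicle's current reputation to an authentication tag."""
    payload = {"vehicle": vehicle_id, "reputation": reputation, "round": round_t}
    tag = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_certificate(cert: Dict[str, Any], key: bytes) -> bool:
    """Receiving ES: accept the transferred reputation only if the tag matches the payload."""
    expected = hmac.new(key, _canonical(cert["payload"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, cert["tag"])


if __name__ == "__main__":
    k = b"consortium-demo-key"  # illustrative only
    cert = issue_certificate("veh-17", 0.82, 41, k)
    forged = {"payload": dict(cert["payload"], reputation=0.99), "tag": cert["tag"]}
    print(verify_certificate(cert, k), verify_certificate(forged, k))  # True False
```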

Hybrid Trust Initialization: For vehicles with limited interaction history at a particular edge server, we employ a hybrid model that combines the transferred reputation, the verification results, and conservative defaults. In this model, $R_0$ is the default neutral initialization for completely new vehicles, and $b_{\text{ver}}$ is a bonus for successfully passing computational verification (Section 4.2.2), which provides initial trust to demonstrably capable vehicles.
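The hybrid model combines three ingredients; the sketch below shows one plausible way to combine them and should be read as a hypothetical illustration, not the paper's rule. It assumes that a verified transferred reputation takes precedence, that the verification bonus applies only to vehicles without transferred history, and that scores are clipped to [0, 1]. The defaults `r0=0.5` and `bonus=0.1` are placeholders, not values from the paper.

```python
from typing import Optional


def init_reputation(
    transferred: Optional[float],  # reputation from a verified cross-edge certificate, or None
    passed_verification: bool,     # outcome of the computational capacity attestation
    r0: float = 0.5,               # placeholder for the neutral default R_0
    bonus: float = 0.1,            # placeholder for the verification bonus b_ver
) -> float:
    """Hypothetical hybrid trust initialization for a vehicle entering an edge server's area."""
    if transferred is not None:
        score = transferred            # continuity: carry over trust earned at the previous ES
    elif passed_verification:
        score = r0 + bonus             # new but demonstrably capable vehicle
    else:
        score = r0                     # completely new, unverified vehicle
    return min(1.0, max(0.0, score))   # keep the score in [0, 1]


if __name__ == "__main__":
    print(init_reputation(None, False), init_reputation(0.8, True),
          init_reputation(None, True, r0=0.95, bonus=0.2))  # 0.5 0.8 1.0
```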

Minimum Interaction Threshold: Our system is designed to provide security benefits even with limited interactions within a single edge server's coverage. Assuming 30 s training rounds and a typical RSU coverage of 2–3 km in urban environments, vehicles traveling at 40–60 km/h remain within an edge server's coverage for approximately 2–4.5 min, enabling roughly 4–9 training rounds during a typical commute segment. The reputation mechanism operates effectively within this time frame:

Rounds 1–3: Vehicle participates with basic verification (computational attestation) and neutral reputation.

Rounds 4+: Reputation begins to differentiate honest from malicious behavior with statistical significance, as malicious vehicles consistently receive low quality scores.

This design ensures meaningful reputation establishment within typical vehicle dwell times, while the blockchain federation enables long-term trust accumulation across the vehicle’s entire journey.
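The dwell-time figures follow from simple arithmetic. The short sketch below (the helper `rounds_in_coverage` is hypothetical) computes the time a vehicle spends crossing a coverage segment at constant speed and the number of complete rounds that fit in it, for the extreme combinations of the stated coverage (2–3 km) and speed (40–60 km/h).

```python
def rounds_in_coverage(coverage_km: float, speed_kmh: float, round_s: float = 30.0):
    """Return (dwell time in seconds, number of complete training rounds) at constant speed."""
    dwell_s = coverage_km * 3600.0 / speed_kmh  # km / (km/h) gives hours; x3600 gives seconds
    return dwell_s, int(dwell_s // round_s)


if __name__ == "__main__":
    for km, kmh in [(2.0, 60.0), (3.0, 40.0)]:
        dwell, n = rounds_in_coverage(km, kmh)
        print(f"{km} km at {kmh} km/h: {dwell / 60:.1f} min, {n} rounds")
    # 2.0 km at 60.0 km/h: 2.0 min, 4 rounds
    # 3.0 km at 40.0 km/h: 4.5 min, 9 rounds
```

With the ∼8.6 s per-round duration estimated in Section 6.1.2 (`round_s=8.6`), the same two cases accommodate 13 and 31 complete rounds, respectively.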

4.3.3. Secure Aggregation and Incentive Mechanism

Reputation scores enable secure model aggregation that mitigates the influence of malicious participants. We replace standard FedAvg with a trust-weighted approach:

$$w^{t+1} = \sum_{i \in \mathcal{V}_t} p_i^t\, w_i^{t+1}, \qquad p_i^t = \frac{R_i^t\,|D_i|}{\sum_{j \in \mathcal{V}_t} R_j^t\,|D_j|}, \tag{14}$$

where $\mathcal{V}_t$ is the set of participants whose updates are deemed valid in round $t$ (Section 4.3.4), $w_i^{t+1}$ is the local model of vehicle $i$, and $|D_i|$ is its dataset size. This weights contributions by both data quantity and reputation quality, amplifying reliable inputs and marginalizing potential threats. Vehicles with low reputation contribute negligibly even if they possess large datasets, thereby limiting the influence of malicious actors on the global model without requiring explicit Byzantine detection.

The smart contract also distributes cryptocurrency rewards (e.g., tokens on the blockchain) after each round to incentivize sustained high-quality participation. The reward $\Psi_i^t$ of vehicle $i$ is calculated as follows:

$$\Psi_i^t = \Psi^t\, \frac{R_i^t\,|D_i|}{\sum_{j \in \mathcal{V}_t} R_j^t\,|D_j|}, \tag{15}$$

where $\Psi^t$ is the total reward pool for round $t$. This mechanism creates a virtuous cycle: vehicles that maintain high reputation scores through consistent honest behavior earn proportionally larger rewards, incentivizing long-term cooperation. The transparency of the blockchain allows all participants to verify the fairness of reward distribution by auditing the smart contract's execution, preventing disputes and promoting long-term engagement in the federated learning ecosystem.
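Equations (14) and (15) share the same normalized weight, so aggregation and reward distribution can be computed together. The sketch below is illustrative: models are flat lists of floats, only valid participants are passed in, and negative reputations are clamped to zero before weighting (an assumption, since the text does not state how negative scores are handled). Function names are hypothetical.

```python
from typing import Dict, List


def trust_weights(reputation: Dict[str, float], n_samples: Dict[str, int]) -> Dict[str, float]:
    """Weight of vehicle i: R_i |D_i| divided by the sum of R_j |D_j| over valid participants."""
    raw = {i: max(reputation[i], 0.0) * n_samples[i] for i in reputation}
    total = sum(raw.values())
    if total <= 0.0:
        raise ValueError("no participant has positive weight")
    return {i: w / total for i, w in raw.items()}


def aggregate(local_models: Dict[str, List[float]], weights: Dict[str, float]) -> List[float]:
    """Trust-weighted average of the local models (weights sum to one)."""
    dim = len(next(iter(local_models.values())))
    return [sum(weights[i] * local_models[i][k] for i in local_models) for k in range(dim)]


def distribute_rewards(weights: Dict[str, float], pool: float) -> Dict[str, float]:
    """Each vehicle's share of the round's reward pool equals its aggregation weight."""
    return {i: pool * p for i, p in weights.items()}


if __name__ == "__main__":
    rep = {"a": 1.0, "b": 0.5, "c": 0.01}  # "c" has a very low reputation
    size = {"a": 100, "b": 200, "c": 300}  # ... but the largest dataset
    p = trust_weights(rep, size)
    print({i: round(v, 3) for i, v in p.items()})  # {'a': 0.493, 'b': 0.493, 'c': 0.015}
```

Using one weight for both steps means the reward shares automatically sum to the pool, and any vehicle that gains influence over the model is paid in the same proportion.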

4.3.4. Design Rationale and Limitations

Rationale for Cosine Similarity: We employ cosine similarity for quality assessment due to its distinct advantages in the federated learning context:

Scale Invariance: Cosine similarity measures the angular alignment between gradient vectors independent of their magnitudes. This property is critical because vehicles possess datasets of varying sizes ($|D_i|$), leading to natural variations in gradient magnitudes even among honest participants. By focusing on direction rather than magnitude, cosine similarity avoids penalizing vehicles simply because they hold smaller datasets, while still detecting malicious gradients that point in adversarial directions [40].

Interpretability: The bounded range $[-1, 1]$ provides an intuitive interpretation: values near 1 indicate alignment (likely honest), values near 0 suggest independence, and negative values indicate opposition (potentially malicious). This facilitates straightforward threshold-based detection.

Robustness to Data Heterogeneity: In non-IID settings, honest gradients computed on different data distributions may have different magnitudes but should still point toward reducing the global loss. Cosine similarity captures this directional consistency while remaining robust to magnitude variations caused by statistical heterogeneity [41].

Computational Efficiency: The metric requires only inner products and norms, with complexity $O(d)$ per pair of updates, where $d$ is the model dimension, making it scalable for large neural networks in resource-constrained VEC environments.

Established Effectiveness: Geometric comparisons between gradient vectors, such as the Euclidean-distance criteria used by the Byzantine-robust aggregation methods Krum [40] and FABA [42], have been successfully employed to identify outlier gradients in adversarial FL settings; cosine similarity applies the same principle to gradient direction.
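The scale-invariance and bounded-range arguments can be checked numerically. In the hypothetical example below, an update computed on a larger dataset is modelled simply as a scaled copy of a smaller vehicle's update, and an opposing update as its negation; real gradients are not exact multiples of one another, so this only isolates the magnitude effect.

```python
import math
from typing import List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: one pass over both vectors, so O(d) for dimension d."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def euclidean(a: List[float], b: List[float]) -> float:
    """Euclidean distance, which is sensitive to magnitude differences."""
    return math.dist(a, b)


if __name__ == "__main__":
    small = [0.3, -0.4]              # update from a vehicle with little data
    large = [10 * x for x in small]  # same direction, 10x the magnitude
    flipped = [-x for x in small]    # opposing direction
    print(round(cosine(small, large), 6), round(euclidean(small, large), 6))  # 1.0 4.5
    print(round(cosine(small, flipped), 6))                                    # -1.0
```

The two honest updates are maximally similar by cosine similarity even though they are far apart in Euclidean distance, while the opposing update reaches the lower bound of the range.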

Robustness to Non-IID Data: A critical consideration is distinguishing between legitimate gradient divergence due to non-IID data and malicious poisoning. Our reputation mechanism addresses this through two key insights:

Statistical Differentiation: While non-IID data cause gradient variance, malicious updates exhibit systematically different patterns. Honest gradients, despite data heterogeneity, maintain a positive correlation with the true optimization direction, whereas poisoned gradients from label-flipping attacks point in adversarial directions. Our experiments (detailed in Section 6) demonstrate that honest vehicles maintain consistently positive quality scores even under high non-IID conditions, while malicious vehicles executing label-flipping attacks consistently score near or below zero.

Adaptive Normalization: Rather than using fixed reputation penalties, we implement an adaptive mechanism that accounts for expected non-IID variance by establishing a minimum quality threshold $q_{\min}$, such that an update is considered valid only if

$$q_i^t \ge q_{\min}.$$

This threshold represents the minimum similarity expected for honest vehicles under non-IID conditions, determined empirically in our experiments. Updates scoring below this threshold are flagged as potentially malicious and receive reputation penalties. This prevents unfair penalization of vehicles with rare data distributions while maintaining security.

Additionally, the reputation update in Equation (12) uses the decay factor $\beta$, whose default value provides inertia that prevents temporary divergence (due to local data batches or transient network conditions) from drastically affecting long-term reputation. This temporal smoothing is crucial for maintaining fairness under non-IID conditions.

Limitations Against Sophisticated Collusion: While the cosine similarity metric in Equation (11) is effective for identifying and isolating individual malicious actors who submit divergent updates, we acknowledge its limitations against more sophisticated, coordinated attacks. The primary vulnerability is the collusion attack, in which a group of malicious vehicles (potentially Sybil identities controlled by a single adversary) submits carefully crafted malicious updates that are highly similar to each other. In such a scenario, the colluding attackers would achieve high pairwise similarity scores among themselves, artificially inflating their reputations and potentially penalizing honest participants whose updates differ due to non-IID data.

However, the proposed DTB-FL framework is well positioned to mitigate such advanced threats by leveraging the synergy between the digital and physical layers. Unlike purely software-based defense mechanisms, our framework can correlate digital behavior (gradient submissions) with the physical-world context provided by the digital twin layer. The DT maintains real-time, high-fidelity data on vehicle states, including precise location, velocity, and trajectory. This enables a second layer of defense against collusion:

Physical Plausibility Check: The edge server can implement an advanced heuristic that flags a potential collusion ring if a cluster of vehicles simultaneously exhibits (1) geographic co-location or anomalous, coordinated mobility patterns (e.g., traveling in a tight, unlikely convoy) and (2) submitted gradients with high intra-cluster similarity that deviate significantly from the broader consensus of the other participants. An honest, diverse set of vehicles is unlikely to produce such a strong spatio–digital correlation.

This cross-layer validation turns a critical vulnerability into a detectable anomaly. Although a sophisticated attacker can forge digital gradients, faking coordinated physical locations and trajectories is substantially more difficult and costly, because positions are measured by the infrastructure (Section 4.2.2) rather than self-reported. By incorporating this physical context into the trust evaluation, the ES can proactively penalize the reputation of a suspected colluding cluster and help preserve the integrity of the global model. Cosine similarity thus provides a strong defense against individual Byzantine attackers, while DT-based physical plausibility checks offer a path to address the remaining collusion threat; fully integrating this capability into the reputation algorithm is a promising direction for future enhancements within the DTB-FL architecture.
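A rough sketch of the physical plausibility check follows. It is a hypothetical heuristic, not the authors' algorithm: spatial co-location (vehicles within `radius_m` of one another, grouped by single linkage with a union-find) stands in for the richer mobility-pattern analysis described above, and the thresholds `intra_min` and `gap_min` are illustrative placeholders.

```python
import math
from itertools import combinations
from statistics import fmean
from typing import Dict, List, Set, Tuple

Vec = List[float]


def cosine(a: Vec, b: Vec) -> float:
    na, nb = math.hypot(*a), math.hypot(*b)
    return 0.0 if na == 0.0 or nb == 0.0 else sum(x * y for x, y in zip(a, b)) / (na * nb)


def co_located_groups(pos: Dict[str, Tuple[float, float]], radius_m: float) -> List[Set[str]]:
    """Single-linkage groups of vehicles whose DT positions lie within radius_m (union-find)."""
    parent = {v: v for v in pos}

    def find(v: str) -> str:
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving keeps the trees shallow
            v = parent[v]
        return v

    for a, b in combinations(pos, 2):
        if math.dist(pos[a], pos[b]) <= radius_m:
            parent[find(a)] = find(b)
    groups: Dict[str, Set[str]] = {}
    for v in pos:
        groups.setdefault(find(v), set()).add(v)
    return [g for g in groups.values() if len(g) > 1]


def flag_collusion(
    pos: Dict[str, Tuple[float, float]],
    updates: Dict[str, Vec],
    radius_m: float = 50.0,   # placeholder co-location radius
    intra_min: float = 0.9,   # placeholder: minimum mean similarity inside a group
    gap_min: float = 0.5,     # placeholder: minimum gap between inside and outside similarity
) -> List[Set[str]]:
    """Flag co-located groups whose updates agree with each other but diverge from everyone else."""
    flagged = []
    for group in co_located_groups(pos, radius_m):
        members = sorted(group)
        outsiders = [v for v in updates if v not in group]
        if not outsiders:
            continue  # no broader consensus to compare against
        intra = fmean(cosine(updates[a], updates[b]) for a, b in combinations(members, 2))
        cross = fmean(cosine(updates[a], updates[o]) for a in members for o in outsiders)
        if intra >= intra_min and intra - cross >= gap_min:
            flagged.append(group)
    return flagged


if __name__ == "__main__":
    pos = {"s1": (0.0, 0.0), "s2": (10.0, 0.0), "s3": (20.0, 0.0),
           "h1": (1000.0, 0.0), "h2": (0.0, 1000.0), "h3": (1000.0, 1000.0)}
    upd = {"s1": [-1.0, 0.1], "s2": [-1.0, 0.12], "s3": [-1.0, 0.09],
           "h1": [1.0, 0.5], "h2": [1.0, -0.2], "h3": [0.8, 0.3]}
    print([sorted(g) for g in flag_collusion(pos, upd)])  # [['s1', 's2', 's3']]
```

Requiring both conditions mirrors the two-part test above: co-located honest vehicles with diverse data are not flagged, because their updates do not agree strongly with one another.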

4.4. Complete System Algorithm

Building on the participant selection strategy and security mechanisms, Algorithm 2 presents our complete DTB-FL training protocol that integrates DT-based prediction, blockchain verification, and adaptive reputation management. The algorithm operates in six coordinated phases per communication round: (1) intelligent participant selection using DT predictions and reputation scores, (2) parallel local training on selected vehicles, (3) blockchain-based quality evaluation of model updates, (4) reputation-weighted global aggregation, (5) smart contract-driven reputation updates with tokenized incentives, and (6) adaptive refinement of the DT predictor using actual training times. This design ensures that only high-quality contributions influence the global model while maintaining transparency and accountability through blockchain records.

[Algorithm 2: DTB-FL Training Protocol]

4.4.1. Algorithm Walkthrough

Initialization Phase: The edge server initializes the blockchain network by deploying smart contracts for reputation management and reward distribution. The DT predictor begins with an empty training dataset $\mathcal{H}$ that will accumulate historical training records over time. All vehicles start with neutral reputation scores ($R_i^0 = R_0$) to ensure fair initial participation opportunities.

Phase 1: Intelligent Participant Selection: At each round $t$, the edge server retains only vehicles whose reputation meets the minimum threshold ($R_i^t \ge R_{\min}$), excluding consistently poor performers. For each eligible vehicle $i$, the server collects current state information, including reputation, mobility status, and device capabilities (verified through infrastructure-based measurements, as described in Section 4.2.2), and then uses the DT predictor to estimate its training time $\hat{T}_i^t$. The utility score $U_i^t$, computed via Equation (10), balances contribution quality (via reputation) against training efficiency (via predicted communication and computation capabilities), with the hyperparameter $\lambda$ controlling the communication–computation trade-off. The top-$K$ vehicles with the highest utility scores are selected, and the current global model $w^t$ is broadcast to them.
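Phase 1 can be sketched as a filter, score, and sort pipeline. The utility used here is a hypothetical stand-in for Equation (10), whose exact form is not reproduced in this sketch: reputation divided by a λ-weighted mix of the DT-predicted communication and computation times. The field names and the threshold value in the usage lines are also hypothetical. Sorting all eligible candidates mirrors the O(N log N) selection cost discussed in Section 5.2.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    vehicle_id: str
    reputation: float   # reputation read from the ledger
    t_comp_pred: float  # DT-predicted local computation time (s)
    t_comm_pred: float  # DT-predicted upload time (s)


def utility(c: Candidate, lam: float) -> float:
    """Hypothetical utility: reputation per unit of lambda-weighted predicted training time."""
    predicted_time = lam * c.t_comm_pred + (1.0 - lam) * c.t_comp_pred
    return c.reputation / predicted_time


def select_participants(cands: List[Candidate], k: int, r_min: float, lam: float = 0.5) -> List[str]:
    eligible = [c for c in cands if c.reputation >= r_min]                   # reputation filter
    ranked = sorted(eligible, key=lambda c: utility(c, lam), reverse=True)  # O(N log N) sort
    return [c.vehicle_id for c in ranked[:k]]                               # top-K by utility


if __name__ == "__main__":
    pool = [
        Candidate("A", 0.9, 1.0, 1.0),
        Candidate("B", 0.8, 2.0, 2.0),
        Candidate("C", 0.3, 0.5, 0.5),  # fast, but below the reputation threshold
        Candidate("D", 0.6, 1.0, 1.0),
    ]
    print(select_participants(pool, k=2, r_min=0.4))  # ['A', 'D']
```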

Phase 2: Parallel Local Training: Selected vehicles independently train on their local datasets for E epochs using mini-batch stochastic gradient descent. Each vehicle records its actual training and communication duration $T_i^t$ and computes the model update $g_i^t$, which represents the gradient direction. Both the gradient update and the timing information are uploaded to the edge server, with the latter serving as ground-truth labels for refining the DT predictor.

Phase 3: Quality Evaluation: The edge server evaluates each received update by computing its cosine similarity with all other updates using Equation (11), which measures how well the update aligns with the consensus direction. Updates are marked as valid if their quality score $q_i^t$ meets the minimum threshold $q_{\min}$, which accounts for expected variance in non-IID settings, as discussed in Section 4.3.4. The evaluation record $\mathcal{E}^t$, containing all quality scores and validity flags, is submitted to the blockchain as a transaction, ensuring tamper-proof documentation of each vehicle's contribution quality.

Phase 4: Reputation-Weighted Aggregation: Only valid updates participate in global aggregation according to Equation (14), each weighted in proportion to the product of the vehicle's reputation score and dataset size. The normalization ensures that the weights sum to one. This reputation-based weighting amplifies contributions from consistently reliable vehicles while reducing the influence of those with lower trust scores, thereby improving the robustness of the global model against low-quality or malicious updates.

Phase 5: Blockchain Reputation Update and Incentive Distribution: A smart contract automatically executes upon receiving the evaluation record $\mathcal{E}^t$. For vehicles with valid updates, reputation is updated using the exponential moving average in Equation (12) with decay factor $\beta$, providing stability while adapting to recent performance. Rewards are distributed according to Equation (15), in proportion to reputation-weighted data contributions. Invalid updates trigger a reputation penalty and zero reward to discourage malicious behavior. All reputation updates and reward distributions are recorded on the blockchain, providing a transparent, verifiable history that participants can audit.

Phase 6: Adaptive DT Update: The actual training times $T_i^t$ collected in this round are paired with the corresponding state vectors and added to the DT training dataset $\mathcal{H}$. Every 10 rounds, the DT predictor is retrained on the accumulated dataset, allowing it to adapt to changing network conditions, vehicle mobility patterns, and workload distributions. This continuous learning is intended to improve prediction accuracy over time, leading to better participant selection decisions in future rounds.

The algorithm completes after $T$ communication rounds, returning the final global model $w^T$ and the mature reputation scores $\{R_i^T\}$, which reflect each vehicle's long-term contribution quality.
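Phases 3 to 5 of a round can be tied together in one function. This is a simplified sketch, not Algorithm 2 itself: updates are flat lists using the sign convention update = global model minus local model (so subtracting the weighted mean of the updates reproduces the weighted average of the local models), on-chain recording is reduced to returning the evaluation record, Phase 6 is omitted, and the subtractive penalty for invalid updates is a hypothetical placeholder because the text does not give its form.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    na, nb = math.hypot(*a), math.hypot(*b)
    return 0.0 if na == 0.0 or nb == 0.0 else sum(x * y for x, y in zip(a, b)) / (na * nb)


def run_round(
    w: List[float],                   # current global model
    updates: Dict[str, List[float]],  # update of each selected vehicle (global minus local model)
    n_samples: Dict[str, int],        # local dataset sizes
    rep: Dict[str, float],            # current reputations
    q_min: float, beta: float, penalty: float, pool: float,
):
    ids = list(updates)
    # Phase 3: quality scores (mean pairwise cosine) and validity flags form the evaluation record.
    q = {i: sum(cosine(updates[i], updates[j]) for j in ids if j != i) / max(len(ids) - 1, 1)
         for i in ids}
    valid = [i for i in ids if q[i] >= q_min]
    record = {i: (q[i], i in valid) for i in ids}
    # Phase 4: reputation-weighted aggregation over valid updates only.
    raw = {i: max(rep[i], 0.0) * n_samples[i] for i in valid}
    total = sum(raw.values())
    p = {i: raw[i] / total for i in valid} if total > 0 else {}
    w_next = [wk - sum(p[i] * updates[i][k] for i in p) for k, wk in enumerate(w)]
    # Phase 5: moving-average reputation and rewards for valid updates;
    # hypothetical subtractive penalty and zero reward for invalid ones.
    new_rep, reward = {}, {}
    for i in ids:
        if record[i][1]:
            new_rep[i] = beta * rep[i] + (1.0 - beta) * q[i]
            reward[i] = pool * p.get(i, 0.0)
        else:
            new_rep[i] = max(0.0, rep[i] - penalty)
            reward[i] = 0.0
    return w_next, new_rep, reward, record


if __name__ == "__main__":
    g = {"h1": [1.0, 0.0], "h2": [2.0, 0.0], "h3": [3.0, 0.0], "mal": [-1.0, 0.0]}
    w1, r1, rew, rec = run_round([0.0, 0.0], g, {i: 100 for i in g}, {i: 0.5 for i in g},
                                 q_min=0.0, beta=0.8, penalty=0.2, pool=9.0)
    print([round(x, 6) for x in w1], round(r1["mal"], 6), rew["mal"], rec["mal"])
    # [-2.0, 0.0] 0.3 0.0 (-1.0, False)
```

The reputations used for weighting in Phase 4 are those from before the update, so a vehicle's score for this round only affects its influence from the next round onward.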

4.4.2. Implementation Considerations

DTB-FL deployment is feasible given current infrastructure trends. The blockchain uses permissioned PBFT consensus providing sub-second finality, with hybrid on-chain/off-chain storage: only reputation scores and evaluations are stored on-chain, while gradients use conventional channels. The DT maintains lightweight state representations for candidate pools (50–100 vehicles) rather than full simulations, making computation manageable. Modern 5G networks (100+ Mbps peak rates) and MEC infrastructure co-located with base stations [43] provide sufficient bandwidth and acceptable latency (sub-10 ms).

Industry initiatives demonstrate readiness: the MOBI consortium [44] brings together major automakers and blockchain providers for standardized vehicular blockchain applications, while 3GPP V2X standards [12] provide protocols supporting DTB-FL data exchange requirements.

5. Convergence and Complexity Analysis

To formally ground the performance of DTB-FL, this section provides a theoretical analysis of its convergence guarantees and computational complexity. We demonstrate that despite the presence of malicious actors and a dynamic network, DTB-FL converges to a near-optimal solution, and we quantify the overhead associated with its advanced features.

5.1. Convergence Analysis

Our convergence analysis builds upon the foundational proofs for FedAvg, but extends them to account for the two core mechanisms of DTB-FL: reputation-weighted aggregation and proactive participant selection.

5.1.1. Assumptions

We make the following standard assumptions, which are common in the FL convergence literature:

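Assumption 1 (L-Smoothness). The global loss function $F$ and all local loss functions $F_i$ are $L$-smooth. That is, for any $w, w' \in \mathbb{R}^d$ and any $i$,

$$\|\nabla F(w) - \nabla F(w')\| \le L\,\|w - w'\|, \qquad \|\nabla F_i(w) - \nabla F_i(w')\| \le L\,\|w - w'\|.$$

Assumption 2 ($\mu$-Strong Convexity). The global loss function is $\mu$-strongly convex. For any $w, w' \in \mathbb{R}^d$,

$$F(w') \ge F(w) + \langle \nabla F(w), w' - w \rangle + \frac{\mu}{2}\,\|w' - w\|^2.$$

Assumption 3 (Bounded Gradient Variance). The variance of the stochastic gradients for any local model is bounded:

$$\mathbb{E}_{\xi \sim D_i}\,\big\|\nabla F_i(w; \xi) - \nabla F_i(w)\big\|^2 \le \sigma^2,$$

where $\xi$ is a data sample drawn from $D_i$. For simplicity in this sketch, we assume one full local epoch, so the local update is based on the full local gradient $\nabla F_i(w^t)$.

Assumption 4 (Bounded Malicious Influence). Our blockchain-based reputation system ensures that the total aggregation weight assigned to malicious participants is bounded. Let $\mathcal{M}$ be the set of malicious vehicles and $\mathcal{V}_t$ the set of participants aggregated in round $t$. The reputation mechanism guarantees that for any round $t$,

$$\sum_{i \in \mathcal{M} \cap \mathcal{V}_t} p_i^t \le \epsilon,$$

where $p_i^t$ is the aggregation weight from Equation (14), and $\epsilon$ is a small constant representing the maximum tolerable malicious influence. The system is designed to drive $R_i^t \to 0$ for malicious nodes, thus ensuring that this condition holds.

Assumption 5 (Bounded Adversarial Perturbation). A malicious vehicle $i \in \mathcal{M}$ submits a poisoned update $\tilde{w}_i^{t+1}$. The gradient derived from this update can be modeled as $g_i^t = \nabla F_i(w^t) + \delta_i^t$, where the adversarial perturbation is bounded, i.e., $\|\delta_i^t\| \le \Delta$.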

The proactive participant selection enabled by the digital twin does not alter the mathematical formulation of the aggregation step itself, but it critically reinforces these assumptions. By selecting vehicles predicted to have stable connectivity, it minimizes participant dropouts. This keeps the effective number of participants close to K in every round, reducing variance and preventing the destabilizing effects of stragglers, which standard FL analyses often have to ignore or simplify.

5.1.2. Proof Sketch

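Our goal is to bound the optimality gap $\mathbb{E}[F(w^T)] - F(w^*)$. We start by analyzing the progress in a single round $t$. Let $\mathcal{V}_t$ denote the set of participants whose updates are aggregated in round $t$. The global model update is as follows:

$$w^{t+1} = w^t - \eta \sum_{i \in \mathcal{V}_t} p_i^t\, g_i^t,$$

where $g_i^t = \nabla F_i(w^t)$ for honest clients, and $g_i^t = \nabla F_i(w^t) + \delta_i^t$ for malicious clients.

Let us analyze the expected distance to the optimal model $w^*$:

$$\mathbb{E}\|w^{t+1} - w^*\|^2 = \mathbb{E}\|w^t - w^*\|^2 - 2\eta\, \mathbb{E}\Big\langle w^t - w^*, \sum_{i \in \mathcal{V}_t} p_i^t g_i^t \Big\rangle + \eta^2\, \mathbb{E}\Big\|\sum_{i \in \mathcal{V}_t} p_i^t g_i^t\Big\|^2. \tag{17}$$

Let us decompose the aggregated gradient term. Let $\mathcal{H}_t$ be the set of honest participants and $\mathcal{M}_t$ be the malicious ones, so that $\mathcal{V}_t = \mathcal{H}_t \cup \mathcal{M}_t$:

$$\sum_{i \in \mathcal{V}_t} p_i^t g_i^t = \underbrace{\sum_{i \in \mathcal{V}_t} p_i^t \nabla F_i(w^t)}_{\text{Gradient Term}} + \underbrace{\sum_{i \in \mathcal{M}_t} p_i^t \delta_i^t}_{\text{Attack Term}}. \tag{18}$$

By substituting (18) into (17) and applying standard techniques (L-smoothness, strong convexity, and bounding the variance from non-IID data), we can bound the terms. The key difference from a standard FedAvg proof is bounding the inner product involving the Attack Term.

Let us focus on the cross-term from (17):

$$-2\eta \Big\langle w^t - w^*, \sum_{i \in \mathcal{V}_t} p_i^t g_i^t \Big\rangle = -2\eta \Big\langle w^t - w^*, \sum_{i \in \mathcal{V}_t} p_i^t \nabla F_i(w^t) \Big\rangle - 2\eta \Big\langle w^t - w^*, \sum_{i \in \mathcal{M}_t} p_i^t \delta_i^t \Big\rangle.$$

The first part leads to convergence. The second part is the error introduced by the attack. Using Young's inequality ($2\langle a, b \rangle \le \gamma\|a\|^2 + \gamma^{-1}\|b\|^2$ for any $\gamma > 0$) and our assumptions,

$$-2\eta \Big\langle w^t - w^*, \sum_{i \in \mathcal{M}_t} p_i^t \delta_i^t \Big\rangle \le \eta\gamma\, \|w^t - w^*\|^2 + \frac{\eta}{\gamma} \Big\|\sum_{i \in \mathcal{M}_t} p_i^t \delta_i^t\Big\|^2.$$

By Assumptions 4 and 5 and the triangle inequality, we can bound the norm of the Attack Term:

$$\Big\|\sum_{i \in \mathcal{M}_t} p_i^t \delta_i^t\Big\| \le \sum_{i \in \mathcal{M}_t} p_i^t\, \|\delta_i^t\| \le \epsilon\,\Delta.$$

After combining all terms and applying several algebraic steps (similar to those in standard FL proofs), we arrive at a recursive expression for $\mathbb{E}\|w^t - w^*\|^2$ that contains two constants and a term capturing the bounded variance from non-IID data, in addition to the attack error. By unrolling this recursion over $T$ rounds with a suitable learning rate, we arrive at the final bound on the expected suboptimality after $T$ rounds.

This result shows that DTB-FL converges to a neighborhood of the global optimum. The size of this neighborhood is determined by the data heterogeneity and, crucially, by the attack error governed by $\epsilon\Delta$, which our reputation system is designed to minimize.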
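To make the unrolling step concrete, suppose, as an illustrative assumption rather than the paper's exact recursion, that the combined per-round inequality takes the common contraction form in the first line below, with a constant learning rate $\eta$ satisfying $0 < \mu\eta \le 1$, a non-IID variance term $\Gamma$, and placeholder constants $C_1, C_2$. Unrolling and bounding the geometric series by $1/(\mu\eta)$ then gives:

```latex
\begin{aligned}
a_{t+1} &\le (1-\mu\eta)\,a_t + \eta^2 (C_1 + \Gamma) + \eta\, C_2\, \epsilon^2 \Delta^2,
  \qquad a_t := \mathbb{E}\|w^t - w^*\|^2, \\
a_T &\le (1-\mu\eta)^T a_0
  + \big(\eta^2 (C_1 + \Gamma) + \eta\, C_2\, \epsilon^2 \Delta^2\big) \sum_{k=0}^{T-1} (1-\mu\eta)^k \\
    &\le (1-\mu\eta)^T a_0 + \frac{\eta\,(C_1 + \Gamma)}{\mu} + \frac{C_2\, \epsilon^2 \Delta^2}{\mu}.
\end{aligned}
```

Under this form, the first term vanishes as $T$ grows, and the remaining two terms define the neighborhood. The attack contribution $C_2 \epsilon^2 \Delta^2 / \mu$ does not shrink with $\eta$ or $T$, which is why driving the malicious aggregation weight $\epsilon$ toward zero through reputation matters.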

5.2. Complexity Analysis

The primary computational overhead of DTB-FL arises at the edge server (ES), which manages the digital twin layer and participant selection:

Digital Twin Management: In each round, the ES updates and runs predictive models for all $N$ vehicles, incurring a cost of $O(N)$.

Proactive Participant Selection: As detailed in Algorithm 1, scoring $N$ vehicles is $O(N)$, while sorting them dominates the process with a complexity of $O(N \log N)$.

Blockchain Interaction: Cryptographic hashing of updates and smart contract execution for $K$ participants yield a complexity of $O(K)$.

Overall, the per-round computational complexity at the ES is dominated by the selection algorithm, resulting in $O(N \log N)$. This overhead is justified by the significant reduction in the total number of rounds required for convergence.

For communication, DTB-FL introduces marginal overhead compared to standard FL. In addition to model exchanges ($O(Kd)$ for $K$ participants and model dimension $d$), vehicles must transmit small state updates ($O(N)$), and the ES records blockchain transactions ($O(K)$). Since $d \gg N$, the additional cost is minimal.

Table 3 summarizes this trade-off.

6. Performance Evaluation

In this section, we conduct extensive simulations to evaluate the performance of our proposed DTB-FL framework. Specifically, we address the following key questions: (1) How does DTB-FL perform in terms of model accuracy and convergence speed compared to state-of-the-art methods? (2) How robust is DTB-FL against model poisoning attacks and vehicle mobility challenges? (3) How does the proactive participant selection strategy enhance system efficiency? (4) How well does the system scale to large vehicular networks? (5) What are the individual contributions of the digital twin and blockchain components?

6.1. Experimental Setup

6.1.1. Environment and Implementation

Simulation Environment: We develop a simulation platform by integrating multiple tools. Vehicle mobility is simulated using SUMO (Simulation of Urban MObility) on a 5 km × 5 km Manhattan grid road network with 21 horizontal and 21 vertical streets spaced 250 m apart, forming a 20 × 20 grid of blocks (21 × 21 intersections) [45]. SUMO provides realistic vehicle movement constrained to the road topology, including traffic lights, lane changes, and speed limits (30–60 km/h on different road segments). The road network information is exported from SUMO and imported into the digital twin layer as its digital map. The network environment is modeled with NS-3, which simulates vehicle-to-infrastructure (V2I) wireless channels based on vehicle locations from SUMO. The federated learning process and AI models are implemented in PyTorch 2.3.0. The blockchain is simulated as an event-driven system that captures key properties such as transaction latency and throughput, without a full-stack implementation.

System Parameters: Unless otherwise specified, simulations run for 100 communication rounds with a total of 200 vehicles. We deploy 10 RSUs, each managed by an independent edge server. Vehicle speeds range from 30 to 60 km/h, and the channel bandwidth is set to 20 MHz. For the FL process, we select K = 10 participants per round. The utility function weight $\lambda$ and the reputation decay factor $\beta$ take their default values. The reputation threshold for participation, $R_{\min}$, is chosen to balance security (excluding persistent malicious actors, whose reputation decays below 0.4 within 5–10 rounds) and inclusiveness (retaining honest participants with non-IID data, whose reputation stabilizes above 0.5 despite occasional lower-quality updates).

Mobility Pattern: To reflect realistic handover scenarios, vehicles follow routes that may span multiple RSU coverage areas. Each RSU coverage radius is 1 km, and vehicles may traverse 2–4 RSUs during the simulation. When a vehicle hands over to a new RSU managed by a different edge server, its reputation is transferred via the blockchain federation mechanism, simulating real-world edge server coordination.

6.1.2. Round Duration Analysis

To address practical feasibility, we provide an explicit characterization of round duration in DTB-FL. This analysis demonstrates that our framework operates on timescales suitable for real-world vehicular applications. The duration of one communication round consists of two components:

(1) Participant Bottleneck Time ($T^{\text{bn}}_t$): As defined in Equation (7), this represents the time for the slowest selected vehicle to complete local training and upload its model update:

$$T^{\text{bn}}_t = \max_{i \in \mathcal{S}_t} \big(T_i^{\text{cmp}} + T_i^{\text{com}}\big).$$

(2) System Overhead ($T^{\text{ovh}}_t$): This includes (i) model distribution from the ES to the selected vehicles, (ii) blockchain operations for quality evaluation and smart contract execution (reputation updates and reward distribution), and (iii) global model aggregation at the ES.

The total wall-clock duration of one round is as follows:

$$T^{\text{round}}_t = T^{\text{bn}}_t + T^{\text{ovh}}_t.$$

Experimental Parameters and Feasibility Justification:

In our simulations with 200 vehicles, each vehicle is equipped with the following:

Computing Resources: CPU frequency $f_i$ varies across vehicles, with an average of 2.0 GHz.

Computational Cost: $c$ CPU cycles per training sample.

Local Training: three epochs per round with a batch size of 32.

Dataset: Approximately 300 samples per vehicle (non-IID, two classes each).

Model: CNN with a parameter size of ∼1.2 MB.

Communication: V2I uplink rate $r_i$ varies across vehicles, with an average of 15 Mbps.

Based on these settings, we calculate the expected per-round duration to establish feasibility. The typical breakdown is as follows:

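Model Distribution (ES → 10 vehicles): ∼1.0 s.

Local Training and Upload ($T^{\text{bn}}_t$, bottleneck): ∼5.5 s, comprising:

– Computation: $E\,|D_i|\,c/f_i$, with $E = 3$ epochs and $|D_i| \approx 300$ samples.

– Communication: ∼0.64 s (a 1.2 MB, i.e., 9.6 Mb, model at the average 15 Mbps uplink rate).

– Wait Time for Bottleneck Vehicle: ∼4.4 s (accounting for variance).

Blockchain Operations (Evaluation + Smart Contracts): ∼1.5 s.

Global Aggregation: ∼0.6 s.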

This yields an expected per-round duration of approximately 8.6 s, which our empirical results confirm (see Section 6.4). This duration is practical for VEC applications: most vehicular AI tasks (traffic prediction, route optimization, and collaborative perception) operate on timescales of tens of seconds to minutes, so model updates every ∼8–9 s provide sufficient real-time responsiveness. Moreover, as we show in Section 6.2, DTB-FL reaches the 85% target accuracy in only 50 rounds (∼430 s in total), demonstrating efficient convergence suitable for dynamic vehicular environments.
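The per-round and end-to-end figures can be reproduced with a few lines of arithmetic. The sketch assumes decimal units (1 MB = 8 Mb, 1 Mbps = 10^6 bit/s) and simply sums the component estimates listed above; the function names are hypothetical.

```python
def upload_time_s(model_mb: float, uplink_mbps: float) -> float:
    """Time to upload a model of model_mb megabytes at uplink_mbps megabits per second."""
    return model_mb * 8.0 / uplink_mbps


def round_duration_s(distribution: float, bottleneck: float, blockchain: float, aggregation: float) -> float:
    """Round time = bottleneck time + system overhead (distribution + blockchain + aggregation)."""
    overhead = distribution + blockchain + aggregation
    return bottleneck + overhead


if __name__ == "__main__":
    t_up = upload_time_s(1.2, 15.0)                 # upload at the average 15 Mbps
    t_round = round_duration_s(1.0, 5.5, 1.5, 0.6)  # per-round estimate
    t_total = 50 * t_round                          # 50 rounds to reach 85% accuracy
    saving = 1.0 - t_total / 750.0                  # relative to FedAvg's ~750 s
    print(round(t_up, 2), round(t_round, 1), round(t_total), round(100 * saving, 1))
    # 0.64 8.6 430 42.7
```

The 42.7% reduction relative to FedAvg is consistent with the "over 40% faster" figure reported in Section 6.2.2.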

6.1.3. Dataset and Model

We use the CIFAR-10 dataset, a standard benchmark for computer vision tasks, consisting of 60,000 32 × 32 color images across 10 classes. To simulate real-world vehicular scenarios, data are distributed among vehicles in a non-IID manner, with each vehicle assigned samples from only two classes. The model is a convolutional neural network (CNN) with two convolutional layers and two fully connected layers.

Attack Model: To evaluate robustness, we simulate model poisoning attacks in which a percentage of vehicles (ranging from 0% to 40% in our experiments) act maliciously. Malicious vehicles are randomly selected at the beginning of each simulation and remain malicious throughout all rounds. These vehicles perform label-flipping attacks [46], intentionally corrupting their local training data by randomly flipping labels to incorrect classes before training. Specifically, for each sample $(x, y)$ in a malicious vehicle's dataset, the label is independently changed to a random incorrect class:

$$\tilde{y} \sim \mathrm{Uniform}\big(\{0, 1, \dots, 9\} \setminus \{y\}\big),$$

that is, each incorrect class is chosen with uniform probability. This simulates realistic Byzantine attacks in which compromised vehicles attempt to degrade global model performance. The corrupted local models are then submitted as if they were legitimate updates, making detection non-trivial without reputation mechanisms. In the "30% malicious attack" scenario referenced throughout our experiments, 60 of the 200 vehicles are designated as malicious attackers.
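The label-flipping attack described above can be simulated as follows. This is an illustrative sketch with a hypothetical function name; the `fraction` parameter is an addition for illustration: with its default of 1.0 every label is flipped, matching the attack evaluated here, while smaller values model the partial poisoning discussed in Section 6.3.1.

```python
import random
from typing import List, Optional, Sequence


def flip_labels(
    labels: Sequence[int],
    num_classes: int = 10,
    fraction: float = 1.0,
    rng: Optional[random.Random] = None,
) -> List[int]:
    """Replace each label, with probability `fraction`, by a uniformly random incorrect class."""
    rng = rng or random.Random()
    poisoned = []
    for y in labels:
        if rng.random() < fraction:
            wrong = [c for c in range(num_classes) if c != y]  # every class except the true one
            poisoned.append(rng.choice(wrong))
        else:
            poisoned.append(y)
    return poisoned


if __name__ == "__main__":
    clean = [3, 7] * 150  # 300 samples from two classes, as on one non-IID vehicle
    dirty = flip_labels(clean, rng=random.Random(0))
    print(sum(a != b for a, b in zip(clean, dirty)))  # 300
```

Since `rng.random()` always returns a value below 1.0, the default setting changes every label, and each replacement is drawn only from the incorrect classes.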

6.1.4. Comparison Baselines

To demonstrate the superiority of DTB-FL, we compare it against the following baselines:

FedAvg [47]: The standard FL algorithm, in which the server randomly selects participants and performs simple weighted averaging.

VEC-FL: A representative FL scheme for VEC that accounts for mobility by selecting participants based on current channel quality (reactive strategy), without blockchain or reputation mechanisms.

BC-FL: Integrates blockchain with FedAvg for security, using a reputation mechanism and secure aggregation similar to ours, but with random participant selection (lacking DT optimization).

DT-FL: Employs our DT-based proactive selection for efficiency but uses standard FedAvg aggregation, making it vulnerable to attacks.

6.1.5. Performance Metrics

We evaluate all schemes using the following metrics:

Test Accuracy: The global model’s accuracy on a held-out test set, measured after each round, reflecting learning quality and convergence.

Training Time: The total wall-clock time to reach a target accuracy (e.g., 85%), assessing overall efficiency.

Robustness Against Attacks: Final model accuracy under varying malicious vehicle percentages (0% to 40%) performing poisoning attacks.

6.2. Overall Performance Analysis

In this section, we evaluate the fundamental performance characteristics of our DTB-FL framework, focusing on convergence behavior and training efficiency. These metrics establish the baseline effectiveness of our approach compared to state-of-the-art federated learning methods in vehicular environments.

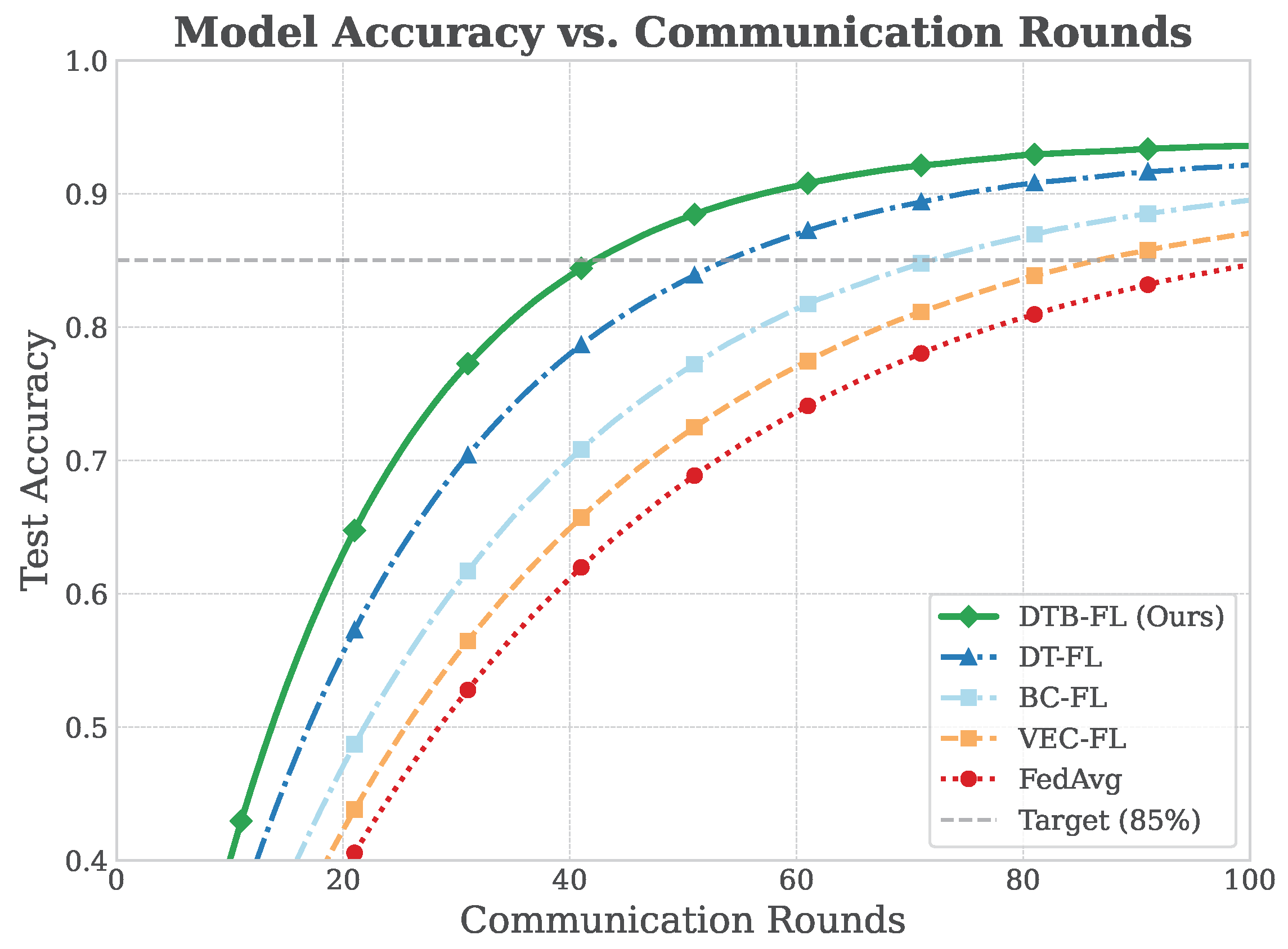

6.2.1. Convergence Performance

Figure 2 shows the test accuracy of the global model versus communication rounds. Frameworks with digital twin integration (DTB-FL and DT-FL) converge significantly faster than others. DTB-FL achieves 85% accuracy in about 50 rounds compared to 60 for DT-FL and 90–100 for FedAvg, VEC-FL, and BC-FL. This highlights the DT-based proactive selection’s effectiveness: by prioritizing vehicles with predicted stable connectivity and resources, it maximizes each round’s impact, accelerating convergence and addressing efficiency concerns in dynamic VEC environments.

6.2.2. Training Time Efficiency

For a practical efficiency measure, Figure 3 depicts the wall-clock time to reach 85% accuracy. DTB-FL completes training in approximately 430 s, over 40% faster than FedAvg (750 s) and faster than all other baselines. Although DTB-FL incurs a slight per-round overhead due to DT prediction and blockchain operations (as analyzed in Section 6.4), the significantly reduced number of rounds yields substantial overall time savings, making it well suited to latency-sensitive VEC applications.

6.3. Robustness Evaluation

Vehicular environments present unique challenges to federated learning systems, including malicious participants attempting to compromise model integrity and highly dynamic network conditions due to vehicle mobility. In this section, we evaluate DTB-FL’s resilience against these threats, demonstrating that our integrated DT-blockchain architecture maintains stable performance under both security attacks and realistic mobility scenarios.

6.3.1. Defense Against Model Poisoning Attacks

Figure 4 assesses security under varying malicious vehicle ratios (up to 40%) performing poisoning attacks. Non-secure baselines (FedAvg, VEC-FL, and DT-FL) experience a drop in accuracy below 45%, while DTB-FL maintains over 75% and BC-FL achieves 65%. The blockchain-based reputation system effectively detects and downweights malicious updates, preserving model integrity and demonstrating DTB-FL’s robustness in untrusted VEC settings.

Reputation System Performance under Non-IID Data: To validate that our reputation mechanism correctly distinguishes malicious behavior from non-IID variance, we analyze the reputation score distributions for honest and malicious vehicles.

Figure 5 shows the reputation score distributions after 50 training rounds. Despite significant data heterogeneity (each vehicle possesses only 2 of 10 classes), the system successfully separates honest from malicious participants:

Honest vehicles maintain reputation scores , with mean (standard deviation).

Malicious vehicles converge to , with most below 0.2 and mean .

The clear separation (a gap from 0.3 to 0.55) indicates that non-IID variance alone does not cause persistent false positives (see the false positive analysis below).

This separation occurs because label-flipping attacks create gradients that consistently oppose the optimization direction, producing negative or near-zero cosine similarities with honest updates. In contrast, honest vehicles with different data distributions still share the common goal of minimizing the loss function, maintaining positive correlations despite heterogeneity.
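The following minimal sketch makes the separation argument concrete. It scores each update by its cosine similarity to the coordinate-wise median of all updates in a round. Several elements are illustrative assumptions rather than the exact quality metric used by DTB-FL:

- the median reference;
- the toy gradient model, in which a shared descent direction plus vehicle-specific noise stands in for non-IID variance;
- all function names.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two flattened update vectors (0.0 if either is zero)."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


def similarity_to_reference(updates: list) -> list:
    """Score every update against the coordinate-wise median of the round's updates.

    The median is used as a robust reference so that a minority of poisoned
    updates cannot pull the reference toward themselves.
    """
    reference = np.median(np.stack(updates), axis=0)
    return [cosine_similarity(u, reference) for u in updates]


# Toy round: honest updates share a common descent direction plus
# vehicle-specific noise (a stand-in for non-IID variance); label-flipped
# updates point roughly the opposite way.
rng = np.random.default_rng(0)
descent = rng.normal(size=100)
honest = [descent + 0.8 * rng.normal(size=100) for _ in range(7)]
flipped = [-descent + 0.8 * rng.normal(size=100) for _ in range(3)]
scores = similarity_to_reference(honest + flipped)
print([round(s, 2) for s in scores])
```

In this toy setting, the honest updates differ from one another but keep clearly positive similarity to the reference, while the flipped updates score negative. This mirrors the mechanism described above.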

False Positive Analysis: Among 140 honest vehicles across all experiments, only 2 (1.4%) temporarily dropped below due to extreme data imbalance, and both recovered within 10 rounds thanks to the temporal smoothing factor . No honest vehicle was permanently misclassified as malicious.
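The recovery behavior described above is what exponential smoothing of per-round quality scores produces. The sketch below assumes an update of the form R_t = β·R_{t−1} + (1 − β)·q_t. It uses a hypothetical β = 0.8, an initial reputation of 0.5, and made-up quality scores. The actual smoothing factor and scores of DTB-FL are not reproduced here.

```python
def update_reputation(prev: float, quality: float, beta: float = 0.8) -> float:
    """Exponential moving average of per-round quality scores.

    `beta` is a hypothetical smoothing factor: the larger it is, the less a
    single bad round moves the reputation.
    """
    return beta * prev + (1.0 - beta) * quality


def reputation_trace(qualities, initial: float = 0.5, beta: float = 0.8) -> list:
    """Reputation after each round for a given sequence of quality scores."""
    trace, rep = [], initial
    for q in qualities:
        rep = update_reputation(rep, q, beta)
        trace.append(rep)
    return trace


# An honest vehicle with consistently good updates, except for three rounds
# of poor quality caused by an unusually skewed local data batch.
qualities = [0.8] * 20 + [0.1] * 3 + [0.8] * 10
smoothed = reputation_trace(qualities)
unsmoothed = reputation_trace(qualities, beta=0.0)  # no memory at all
print(round(min(smoothed), 3), round(smoothed[-1], 3), min(unsmoothed))
```

With these illustrative values, the three bad rounds pull the smoothed reputation down to about 0.46. It then climbs back above 0.76 within ten rounds. Without smoothing (`beta=0.0`), the reputation drops straight to 0.1.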

Robustness to Partial Poisoning: Our experiments evaluate standard label-flipping attacks where malicious vehicles flip all local labels. In practice, sophisticated attackers may employ partial poisoning (flipping only a fraction of labels) to evade detection. Our reputation mechanism remains effective against such attacks because quality evaluation is based on validation loss: any gradient deviation—whether from 100% or 10% label flipping—degrades update quality relative to honest participants. Partial attackers receive consistently lower quality scores (e.g., vs. – for honest nodes), causing cumulative reputation decay over multiple rounds. While detection is slower than for full poisoning, the reduced per-round damage from partial attacks maintains acceptable system performance during the detection period.
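Assume an exponential-smoothing reputation update R_t = β·R_{t−1} + (1 − β)·q_t, and a partial attacker that receives a roughly constant quality score q. After n rounds, its reputation is R_n = q + (R_0 − q)·β^n. The number of rounds before it crosses a detection threshold τ therefore has a closed form. The sketch below uses hypothetical values (R_0 = 0.5, τ = 0.3, β = 0.8). It shows why detection of partial poisoning is slower but still happens whenever q < τ.

```python
import math


def rounds_until_flagged(r0: float, quality: float, tau: float, beta: float = 0.8):
    """Rounds before an EMA reputation starting at r0 drops strictly below tau.

    Assumes a constant per-round quality score, so that
    R_n = quality + (r0 - quality) * beta**n. Returns math.inf when the
    steady-state reputation (= quality) never falls below tau.
    """
    if r0 < tau:
        return 0
    if quality >= tau:
        return math.inf
    # beta**n < (tau - q) / (r0 - q)  <=>  n > log(ratio) / log(beta)
    n_real = math.log((tau - quality) / (r0 - quality)) / math.log(beta)
    return math.floor(n_real) + 1  # first integer strictly above n_real


print(rounds_until_flagged(0.5, 0.0, 0.3))   # full label flipping
print(rounds_until_flagged(0.5, 0.2, 0.3))   # partial poisoning
print(rounds_until_flagged(0.5, 0.35, 0.3))  # too mild for this rule alone
```

Under these assumptions, a full attacker with q = 0.0 is flagged after 3 rounds and a partial attacker with q = 0.2 after 5 rounds. An attacker whose quality score stays at or above τ is never flagged by the threshold rule alone.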

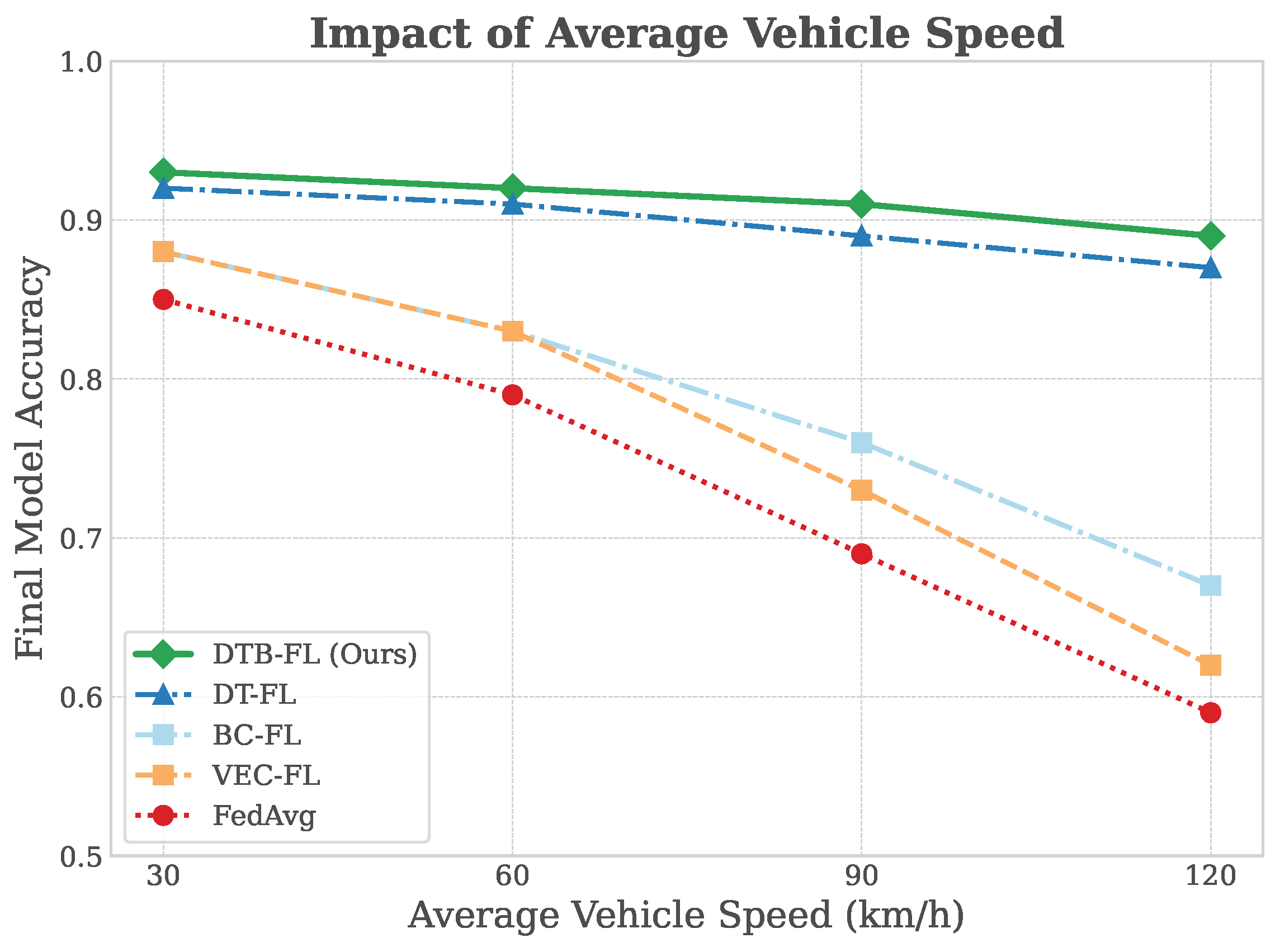

6.3.2. Performance Under Vehicle Mobility

Vehicle mobility introduces two major challenges: (1) rapid changes in channel conditions that make participant selection difficult and (2) frequent handovers between edge servers that can disrupt training continuity. We evaluate DTB-FL’s resilience to both scenarios.

Impact of Vehicle Speed: To isolate the DT’s role in handling dynamics, Figure 6 plots final accuracy versus average vehicle speed (30–120 km/h). Baselines without DT (FedAvg, VEC-FL, and BC-FL) degrade by over 20% at higher speeds due to dropouts and instability. In contrast, DTB-FL and DT-FL show minimal decline (less than 5%). This confirms that predictive selection mitigates mobility effects by choosing vehicles likely to remain connected throughout the training round.
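The DT in DTB-FL predicts connectivity from richer state than shown here. As a simplified stand-in, the sketch below estimates how long each vehicle will stay inside a one-dimensional RSU coverage interval, using its position, speed, and heading. It discards vehicles that would leave before the round ends and keeps the K with the longest predicted dwell time. The straight-road geometry, the 500 m coverage radius, the vehicle states, and the function names are hypothetical. The 8.6 s round time is the DTB-FL per-round duration reported in Section 6.4.

```python
from dataclasses import dataclass


@dataclass
class VehicleState:
    vid: int
    position_m: float  # signed offset from the RSU along a straight road (m), within coverage
    speed_kmh: float
    heading: int       # +1 or -1: direction of travel along the road axis


def predicted_dwell_time(v: VehicleState, coverage_radius_m: float) -> float:
    """Seconds until the vehicle exits the RSU's coverage interval [-R, R]."""
    remaining_m = coverage_radius_m - v.heading * v.position_m
    if remaining_m <= 0:
        return 0.0
    speed_ms = v.speed_kmh / 3.6
    return remaining_m / speed_ms if speed_ms > 0 else float("inf")


def select_stable(vehicles, k, round_time_s, coverage_radius_m=500.0):
    """Drop vehicles predicted to leave before the round ends; keep the k
    with the longest predicted dwell time."""
    scored = [(predicted_dwell_time(v, coverage_radius_m), v.vid) for v in vehicles]
    eligible = sorted((s for s in scored if s[0] >= round_time_s), reverse=True)
    return [vid for _, vid in eligible[:k]]


fleet = [
    VehicleState(0, 400.0, 120.0, +1),   # 100 m from the edge at 120 km/h
    VehicleState(1, -300.0, 60.0, +1),
    VehicleState(2, 0.0, 90.0, -1),
    VehicleState(3, 200.0, 30.0, -1),
]
chosen = select_stable(fleet, k=2, round_time_s=8.6)
print(chosen)
```

Vehicle 0 is only 100 m from the coverage edge at 120 km/h, so it would leave after about 3 s. It is excluded even though it is currently connected. Raising speeds shortens every dwell time, which is the effect the speed sweep probes.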

Performance Under Vehicle Handover Scenarios: To evaluate the impact of edge server handovers, we conduct experiments in which vehicles interact with each edge server for a limited number of rounds before moving into another server’s coverage. We control the handover frequency by adjusting vehicle speeds and route patterns. As a result, vehicles remain connected to a single edge server for an average of 5 to 20 rounds before handover.

Figure 7 shows the final model accuracy as a function of average interaction duration (rounds per edge server) before handover. The key observations are as follows:

With Reputation Transfer (DTB-FL): Maintains 85% accuracy even when vehicles interact for only five rounds per server, thanks to blockchain-enabled reputation portability across edge servers.

Without Reputation Transfer: Performance degrades to 72% at five rounds, as reputation must be rebuilt from scratch after each handover.

Baseline Methods: Show similar degradation because they lack reputation memory and cross-server coordination.

The results confirm that our federated reputation system effectively handles vehicle mobility, preserving trust assessment across edge server boundaries and enabling seamless learning continuity despite frequent handovers.
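A toy model can illustrate the difference between the two handover configurations. In it, a shared key-value store stands in for the blockchain ledger. This is a hypothetical sketch that assumes an exponential-smoothing reputation update (β = 0.8, default reputation 0.5) and a constant honest quality score of 0.9. It is not the smart-contract logic of DTB-FL.

```python
from typing import Optional


class SharedReputationLedger:
    """Hypothetical stand-in for the blockchain: one reputation record per
    vehicle, readable and writable by every edge server."""

    def __init__(self, default: float = 0.5):
        self.default = default
        self._records: dict = {}

    def get(self, vid: int) -> float:
        return self._records.get(vid, self.default)

    def put(self, vid: int, rep: float) -> None:
        self._records[vid] = rep


class EdgeServer:
    """Edge server that scores vehicles with an EMA reputation update.

    If `ledger` is None, reputations live only in this server's memory and
    are lost when the vehicle hands over to another server."""

    def __init__(self, ledger: Optional[SharedReputationLedger] = None,
                 default: float = 0.5, beta: float = 0.8):
        self.ledger = ledger
        self.local: dict = {}
        self.default = default
        self.beta = beta

    def reputation(self, vid: int) -> float:
        if self.ledger is not None:
            return self.ledger.get(vid)
        return self.local.get(vid, self.default)

    def record_round(self, vid: int, quality: float) -> float:
        rep = self.beta * self.reputation(vid) + (1 - self.beta) * quality
        if self.ledger is not None:
            self.ledger.put(vid, rep)
        else:
            self.local[vid] = rep
        return rep


def reputation_after_handovers(shared: bool, servers: int = 4,
                               rounds_per_server: int = 5,
                               quality: float = 0.9) -> float:
    """Reputation of one honest vehicle after visiting `servers` edge servers."""
    ledger = SharedReputationLedger() if shared else None
    rep = 0.5
    for _ in range(servers):
        server = EdgeServer(ledger)  # a fresh server after each handover
        for _ in range(rounds_per_server):
            rep = server.record_round(vid=7, quality=quality)
    return rep


print(round(reputation_after_handovers(True), 3), round(reputation_after_handovers(False), 3))
```

Take five rounds per server across four servers. When the record travels with the vehicle, its reputation reaches about 0.90. When each new server starts from the default, it never exceeds about 0.77, mirroring the rebuild-from-scratch effect reported above.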

6.4. Scalability Analysis

A critical requirement for practical deployment in large-scale vehicular networks is the ability to maintain performance as the number of participants grows. In this section, we analyze how DTB-FL scales with increasing network size, examining both computational overhead and system efficiency.

Figure 8 illustrates the average per-round time as the number of vehicles in the network increases from 50 to 800. All methods exhibit approximately linear growth in per-round duration. This is expected, since the complexity of participant selection and aggregation scales with network size. DTB-FL’s per-round time is higher than that of simpler baselines because of two additional components: (1) DT-based prediction for intelligent participant selection (the gap from VEC-FL to DT-FL) and (2) blockchain operations for reputation management and secure aggregation (the gap from DT-FL to DTB-FL).

At our primary experimental setting of 200 vehicles, DTB-FL’s average round duration is 8.6 s compared to 7.5 s for FedAvg and 6.9 s for DT-FL. This represents a per-round overhead of approximately 1.1 s (15%) over FedAvg. Even at 800 vehicles, DTB-FL’s per-round time remains manageable at 12.4 s, demonstrating that the overhead scales gracefully.

Crucially, DTB-FL’s higher per-round cost is more than compensated by faster convergence. As shown in Figure 2, DTB-FL requires only 50 rounds to reach 85% accuracy, compared to 100 rounds for FedAvg. The total training times compare as follows:

DTB-FL: 50 rounds × 8.6 s = 430 s;

FedAvg: 100 rounds × 7.5 s = 750 s;

DT-FL: 60 rounds × 6.9 s = 414 s (vulnerable to attacks).

Thus, DTB-FL reduces total training time by 43% compared to FedAvg while providing superior security. Compared to DT-FL, DTB-FL incurs a modest 16 s overhead (a 3.9% increase). This overhead is well justified by the substantial improvement in robustness against attacks: DTB-FL maintains 75% accuracy vs. 40% under 40% malicious vehicles, as shown in Figure 4. This analysis validates DTB-FL’s scalability for large-scale VEC deployments, where the combination of faster convergence and enhanced security outweighs the marginal per-round overhead.
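The total-time comparison is simple arithmetic over the reported round counts and per-round durations. The snippet below reproduces it from the figures stated in this section. The function and variable names are illustrative.

```python
def total_training_time(rounds: int, per_round_s: float) -> float:
    """Wall-clock time to the target accuracy = rounds x mean per-round time."""
    return rounds * per_round_s


# (rounds to 85% accuracy, mean per-round time in s), as reported in this section
reported = {"DTB-FL": (50, 8.6), "FedAvg": (100, 7.5), "DT-FL": (60, 6.9)}
totals = {name: total_training_time(*cfg) for name, cfg in reported.items()}

reduction_vs_fedavg = 1 - totals["DTB-FL"] / totals["FedAvg"]  # fraction of FedAvg time saved
extra_vs_dtfl = totals["DTB-FL"] - totals["DT-FL"]             # seconds
extra_vs_dtfl_pct = extra_vs_dtfl / totals["DT-FL"]
per_round_overhead_pct = (8.6 - 7.5) / 7.5                     # DTB-FL vs FedAvg, per round

for name, t in totals.items():
    print(f"{name}: {t:.0f} s")
print(f"reduction vs FedAvg: {reduction_vs_fedavg:.1%}")
print(f"extra time vs DT-FL: {extra_vs_dtfl:.0f} s ({extra_vs_dtfl_pct:.1%})")
print(f"per-round overhead vs FedAvg: {per_round_overhead_pct:.1%}")
```

The script prints totals of 430 s, 750 s, and 414 s. It also reports a 42.7% reduction relative to FedAvg, 16 s (3.9%) of extra time relative to DT-FL, and a 14.7% per-round overhead relative to FedAvg.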

6.5. Component-Wise Analysis

Our DTB-FL framework integrates multiple components—digital twin-based prediction, blockchain-based reputation, and intelligent participant selection. In this section, we conduct detailed analyses to understand the individual and synergistic contributions of these components, as well as the system’s sensitivity to key parameters.

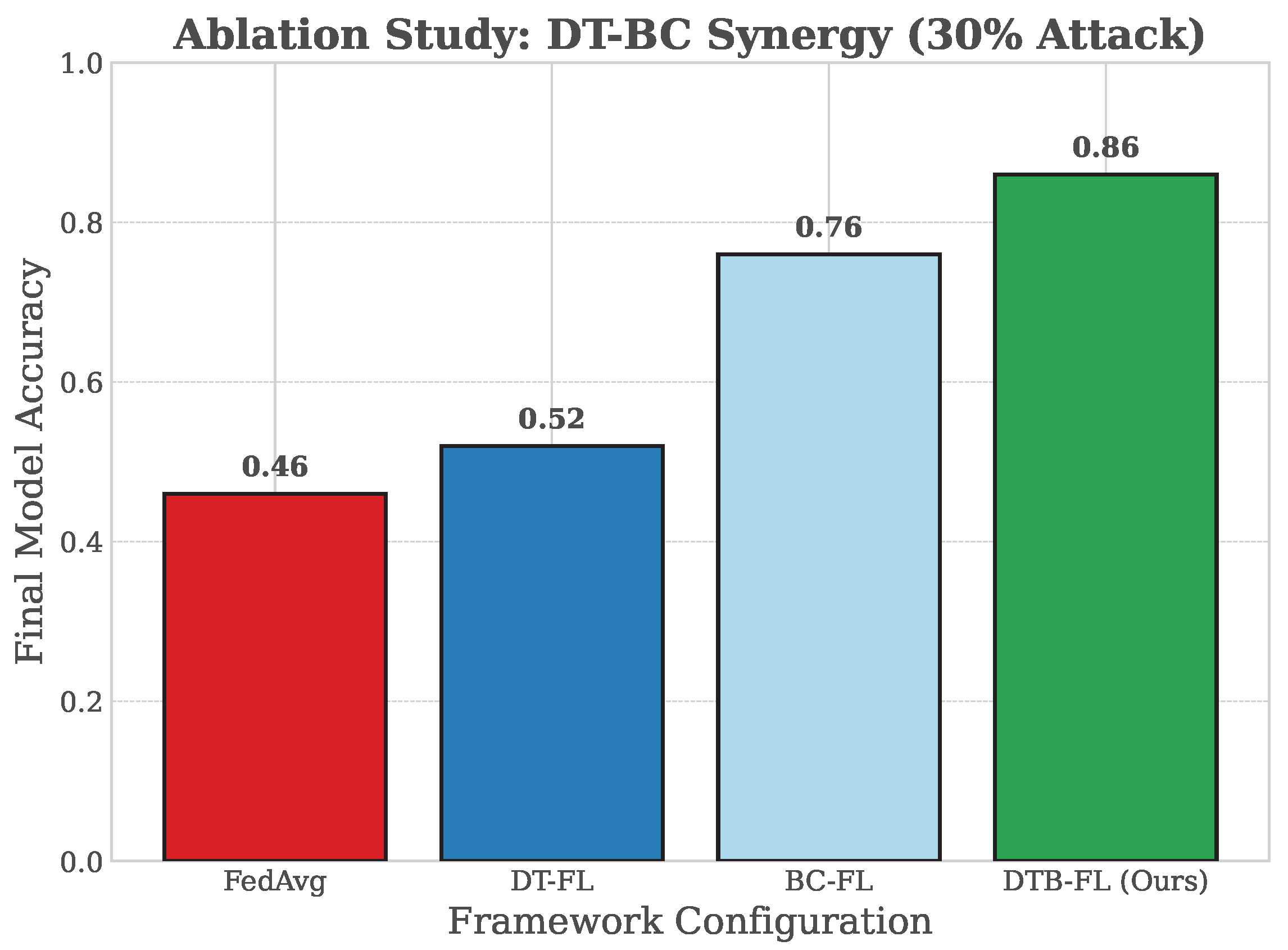

6.5.1. Digital Twin and Blockchain Synergy

To explicitly demonstrate the synergistic benefits of integrating DT and BC, we conduct an ablation study that isolates their individual and combined contributions. We evaluate the final model’s accuracy under a significant security threat (30% malicious participants) across four configurations:

FedAvg: The baseline with no advanced features.

DT-FL: Uses only DT-based selection for efficiency but is vulnerable to attacks.

BC-FL: Uses only the BC-based reputation system for security with random participant selection.

DTB-FL (Full Synergy): Our complete proposed framework, using the reputation-aware utility function from Equation (10) for participant selection.

The results are presented in Figure 9. As expected, both FedAvg and DT-FL fail catastrophically under attack, achieving only 42% and 45% accuracy, respectively, as they lack any security mechanism. BC-FL shows significant robustness, maintaining an accuracy of around 68%, which confirms the effectiveness of the reputation system in isolation. However, our fully integrated DTB-FL framework achieves the highest accuracy of 82%, surpassing BC-FL by 14 percentage points.

Why does DTB-FL achieve better accuracy than the baselines when the core FL algorithm (local SGD and FedAvg aggregation) remains unchanged? The improvement comes from two factors that enhance the quality of inputs to the learning algorithm:

(1) Participant Quality: DT-based selection chooses vehicles with stable connectivity and sufficient computational resources. This reduces training interruptions, communication failures, and straggler effects that would otherwise introduce noise and instability into aggregation, resulting in more consistent and higher-quality local updates.

(2) Security Filtering: The reputation-weighted aggregation (Equation (9)) systematically downweights or excludes malicious updates, resulting in a cleaner global model that converges to better optima.

While the optimization algorithm itself is the standard FedAvg, intelligent selection and reputation-based filtering substantially improve the quality and trustworthiness of the aggregated updates. This leads to superior convergence speed and final accuracy.
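As a hedged illustration of the security-filtering factor, the sketch below implements one plausible reputation-weighted average. Each update is weighted by the vehicle's reputation times its local sample count. Updates below a hypothetical reputation threshold of 0.3 get zero weight. This weighting rule, the threshold, the example data, and the names are assumptions and may differ from Equation (9).

```python
import numpy as np


def reputation_weighted_average(updates, reputations, num_samples, threshold=0.3):
    """Average model updates with weights proportional to reputation x sample count.

    Updates from vehicles whose reputation is below `threshold` get weight 0.
    The weighting rule and threshold are illustrative assumptions.
    """
    updates = np.asarray(updates, dtype=float)      # shape (m, d)
    rep = np.asarray(reputations, dtype=float)      # shape (m,)
    n = np.asarray(num_samples, dtype=float)        # shape (m,)
    weights = np.where(rep >= threshold, rep * n, 0.0)
    total = weights.sum()
    if total == 0:
        raise ValueError("no update passed the reputation threshold")
    return (weights / total) @ updates              # shape (d,)


updates = [[1.0, 1.0], [1.2, 0.8], [-5.0, -5.0]]   # third vehicle is poisoned
reputations = [0.8, 0.6, 0.1]
samples = [500, 500, 500]
aggregated = reputation_weighted_average(updates, reputations, samples)
plain_mean = np.mean(updates, axis=0)               # unweighted FedAvg-style mean
print(aggregated, plain_mean)
```

In the example, the low-reputation third update is excluded and the result is about [1.086, 0.914]. An unweighted mean of all three updates would instead be pulled to roughly [−0.93, −1.07].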

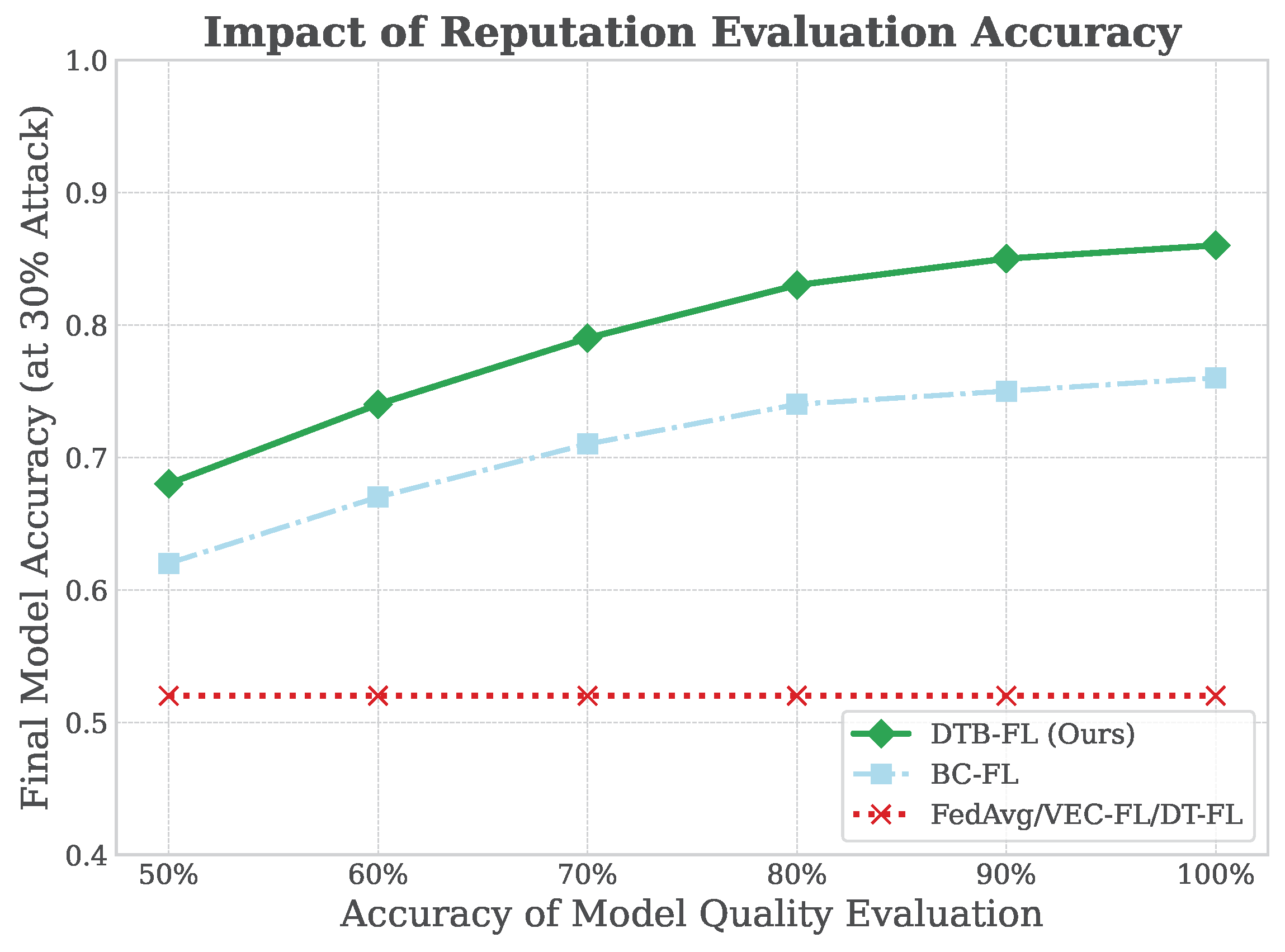

6.5.2. Impact of Reputation System Accuracy

A sensitivity analysis in Figure 10 examines final accuracy under 30% malicious attacks while varying the quality evaluation accuracy, that is, the ability of the blockchain evaluator to correctly assess update quality. Non-reputation baselines (FedAvg, VEC-FL, and DT-FL) remain vulnerable regardless of evaluation accuracy. In contrast, DTB-FL and BC-FL show strong positive correlations with evaluation accuracy. Even at 70–80% evaluation accuracy, DTB-FL boosts final accuracy from ∼50% to over 75%. This demonstrates that the system remains robust and delivers substantial security gains despite imperfect quality assessments. At 95% evaluation accuracy, DTB-FL reaches 85% final accuracy, nearly matching the benign scenario performance.

6.5.3. Data Distribution Coverage Analysis

A critical consideration in non-IID federated learning is ensuring that the participant selection mechanism does not inadvertently create class imbalance or coverage gaps. Our DT-based selection uses a utility function (Equation (8)) that prioritizes communication and computational efficiency without explicitly considering data distribution. One might therefore be concerned that vehicles holding certain classes could be systematically excluded if they happen to have poor connectivity or limited resources.

To address this concern, we analyze class coverage across training rounds. Figure 11 shows the number of unique CIFAR-10 classes represented among selected participants in each round for DTB-FL. Despite utility-based selection, all 10 classes are consistently represented in nearly every round, with an average of 9.6 classes covered per round.

This coverage arises for three reasons:

(1) With K = 10 participants selected per round from 200 vehicles and each vehicle holding 2 classes, the selected set includes 20 class instances (counting multiplicities). Due to overlap, this typically covers 8–10 of the 10 unique classes in a single round, with full coverage achieved across consecutive rounds.

(2) Vehicle mobility causes dynamic changes in channel conditions. Vehicles with temporarily poor connectivity in one round may have good connectivity in subsequent rounds, which provides a natural rotation.

(3) The vehicle population is diverse, so multiple vehicles hold each class (approximately 200 × 2 / 10 = 40 vehicles per class). This reduces the risk that poor connectivity of specific vehicles leads to class exclusion.
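A short calculation can check the coverage argument in point (1). Suppose each of K = 10 selected vehicles holds 2 distinct classes drawn uniformly from 10. A given class is then missed by one vehicle with probability C(9,2)/C(10,2) = 0.8, so the expected number of covered classes is 10·(1 − 0.8^10) ≈ 8.9. The sketch computes this value under an independence assumption. It also simulates uniformly random selection of K = 10 vehicles from 200 vehicles with randomly assigned class pairs. Both are illustrative baselines, not the utility-based selection of DTB-FL, and the function names are hypothetical.

```python
import random
from math import comb


def expected_coverage(num_classes: int = 10, classes_per_vehicle: int = 2, k: int = 10) -> float:
    """Expected distinct classes covered by k vehicles, assuming each vehicle's
    classes are an independent uniform draw of distinct classes."""
    p_miss_one = comb(num_classes - 1, classes_per_vehicle) / comb(num_classes, classes_per_vehicle)
    return num_classes * (1 - p_miss_one ** k)


def simulated_coverage(num_vehicles: int = 200, num_classes: int = 10,
                       classes_per_vehicle: int = 2, k: int = 10,
                       rounds: int = 2000, seed: int = 0) -> float:
    """Average distinct classes per round under uniformly random selection."""
    rng = random.Random(seed)
    holdings = [rng.sample(range(num_classes), classes_per_vehicle) for _ in range(num_vehicles)]
    total = 0
    for _ in range(rounds):
        selected = rng.sample(range(num_vehicles), k)
        total += len({c for vid in selected for c in holdings[vid]})
    return total / rounds


print(round(expected_coverage(), 3), round(simulated_coverage(), 2))
```

Both numbers land at roughly 8.9 classes per round, consistent with the 8–10 range stated in point (1).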

Table 4 further quantifies this by showing the average number of rounds (out of 100) in which each class appears in the selected participant set. The distribution is relatively uniform, with each class appearing in 46–53 rounds on average, confirming that no class is systematically neglected.

Our current utility function does not explicitly optimize for data diversity. Even so, the empirical results show that natural diversity is maintained in practice, owing to the dynamic nature of vehicular environments and the relatively large selection size (K = 10) compared to the number of classes. However, we acknowledge that in extreme scenarios (e.g., very small K or a highly skewed resource distribution correlated with the data distribution), purely efficiency-based selection could introduce bias. An interesting direction for future work is data-aware selection, in which the utility function includes a term that promotes diversity based on estimated or reported class distributions, subject to privacy constraints. This could further improve convergence in highly heterogeneous settings.
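The hypothetical greedy sketch below shows one possible shape for the data-aware extension mentioned above. It adds a bonus of λ for each not-yet-covered class to each vehicle's efficiency utility. The utility values, class sets, λ, and the function name are illustrative assumptions. In practice, the class sets would have to be estimated or reported under privacy constraints.

```python
def diversity_aware_selection(utilities: dict, class_sets: dict, k: int, lam: float = 0.3):
    """Greedy selection: each step picks the vehicle maximizing
    utility + lam * (number of classes it adds to the covered set).

    Ties are broken in favor of the lower vehicle id.
    """
    selected, covered = [], set()
    candidates = set(utilities)
    for _ in range(min(k, len(candidates))):
        best = max(
            candidates,
            key=lambda v: (utilities[v] + lam * len(class_sets[v] - covered), -v),
        )
        selected.append(best)
        covered |= class_sets[best]
        candidates.remove(best)
    return selected, covered


utilities = {0: 0.9, 1: 0.85, 2: 0.8, 3: 0.4}          # hypothetical efficiency utilities
class_sets = {0: {0, 1}, 1: {0, 1}, 2: {0, 2}, 3: {3, 4}}
print(diversity_aware_selection(utilities, class_sets, k=3, lam=0.3))
print(diversity_aware_selection(utilities, class_sets, k=3, lam=0.0))
```

With λ = 0.3, the procedure replaces vehicle 1 (high utility but redundant classes) with vehicle 3, raising coverage from 3 to 5 classes. With λ = 0, it reduces to pure top-K utility selection.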

6.6. Discussion

Synergistic Design Validation: Our experimental results provide strong empirical validation for the integrated DT-blockchain architecture. The ablation study reveals that neither component alone achieves the full benefits: DT-FL degrades to 45% accuracy under attack, while BC-FL requires 75% more training time. The synergy emerges because DT predictions enable intelligent participant selection, while blockchain-enforced reputation ensures that the selected vehicles are trustworthy. This tight coupling improves three traditionally conflicting objectives (model performance, security, and efficiency) simultaneously, contrary to the conventional wisdom that they require trade-offs.