The last decades have witnessed growing attention to the definition of common strategies for dealing with climate change. In this respect, the United Nations (UN) and the European Union (EU) have defined medium- and long-term strategies with challenging goals, including a 55% reduction in net greenhouse gas (GHG) emissions by 2030 [1] and making Europe a climate-neutral continent by 2050 [2], respectively. To achieve these objectives, it is crucial to tackle the emissions of residential and commercial buildings, which account for 35% of the EU’s emissions related to energy production [3]. The diffusion of distributed renewable energy sources (RESs) and energy storage systems (ESSs) and their integration with the traditional electric grid and/or microgrids, peer-to-peer (P2P) energy trading, and the creation of energy communities (ECs) have been identified as crucial tools for reducing buildings’ emissions [4,5]. With respect to ECs, several EU directives have been published to regulate their implementation rules, principles and setup criteria and to specify the roles of all the involved actors [6,7,8].

Energy communities are defined as citizen-driven local communities that cooperate for more efficient and cleaner energy production and consumption. Beyond technical research on efficient materials, ECs require intensive research on energy management policies that meet the EU-specified criteria while maximizing customer satisfaction. These policies may include one or more of the following aspects: accurate energy production forecasts, intelligent ESS charging and discharging strategies, cooperative satisfaction of EC members’ load demands, and smart exchange of surplus energy. Energy forecasting is not addressed in this article. The proposed control framework determines, in real time, the best energy management strategy with respect to EC members’ load demand satisfaction, ESS charging and energy surplus management. The next subsection presents a literature review of these aspects. Since the most recent EU policy updates were made in 2022 and 2023, the review focuses on recent articles on ECs that consider the principles of the new directives.

1.1. Related Works

Energy communities are characterized by many members, each of whom may have a local ESS and/or RES, as well as different comfort levels. As already mentioned, beyond load satisfaction, other issues must be addressed, such as ESS charging and discharging, the maximization of self-consumption, the promotion of collaborative behaviours and the management of energy surplus.

In the literature, several articles analyse the environmental and economic benefits of real-world use cases. In [9], for example, the authors investigate the economic benefits of jointly using multiple energy sources such as photovoltaic (PV) systems, ESSs and hydrogen sources. The case study is the EC campus of the Marche Polytechnic University (UNIVPM) in Ancona, Italy. The authors propose a mixed-integer linear programming (MILP) optimization model to reduce GHG emissions, taking into account energy source installation, operation and maintenance costs. In [10], the environmental and economic benefits of a renewable energy community (REC) with a shared PV system located in Rome, Italy, are discussed. In [11], the authors investigate the impact of distributed renewable energy systems in a real-world scenario in Italy. To evaluate the benefits of distributed RESs, they consider not only economic aspects but also socio-economic aspects such as unemployment rates and population structure. The distinctive feature of this article is its application domain: an area undergoing reconstruction after the 2009 and 2016 earthquakes. In [12], the authors investigate the impact of climate change on PV energy production, analysing three representative concentration pathway scenarios ranging from pessimistic to optimistic. The results confirm that the increase in average temperature caused by climate change lowers the efficiency of RES production. Nevertheless, the article points out that this loss is compensated by increased solar radiation, leading to an overall improvement in the annual energy production of PV systems, even in the pessimistic scenarios. In [13,14], the authors provide a state-of-the-art analysis of European and Italian ECs, with a focus on the city of Naples, Italy. Energy communities in Naples are also the focus of [15], in which several optimization methods are adopted to foster the transition of buildings towards a nearly zero-energy community by evaluating multiple possible EC features; the authors use a brute-force algorithm to select the most appropriate ones. In [16], the authors analyse a REC located in Magliano Alpi, Italy, considering load shifting, and introduce several key performance indicators (KPIs) for evaluating the performance of Italian ECs. In [17], the authors analyse the performance of a REC in Austria under forecast and price uncertainties by developing a MILP-based model predictive control (MPC) framework. In [18], the authors analyse the impact of different ESS management strategies on a REC located in Florence, Italy, and propose a smart ESS management method for collective self-consumption, which outperforms the method for individual self-consumption. In [19], the authors describe a Deming Cycle-based approach for managing Italian ECs that considers both physical and virtual self-consumption in order to reduce GHG emissions, prevent energy poverty and optimize the profits of RECs and their customers. The approach has been validated on an EC located in Sicily, Italy, with good results both in facilitating the setup of such communities and with respect to the considered objectives. Energy poverty reduction is also considered in [20], in which the authors present an optimization model to size energy source capacity and to manage shared energy under the Italian regulatory framework. The model considers both physical and virtual sharing schemes in centralised and decentralised configurations, as well as the influence of different ESS configurations. The results confirm economic, environmental and social benefits for both the EC members and the EC itself. In [21], a three-stage MILP-based optimization method for managing EC shared energy under the Portuguese regulatory framework is presented. The algorithm minimizes individual and collective energy costs and manages the flexible resources of both the EC members and the EC itself. The authors also consider grid constraints, exploiting the degrees of freedom provided by the available energy resources and, possibly, by local energy generation and/or consumption.

A similar multi-stage, optimal control-based approach is presented in [22], where the authors propose a two-stage optimization algorithm to manage an EC with shiftable ESS and electric vehicles (EVs), satisfying EC members’ load demands and improving EC flexibility. The model also takes into account customers’ preferences and their interaction with the community manager, who acts as a local market operator in the energy sharing process. EVs are also considered in [23], together with heat pumps and thermal ESSs. The objective of this approach is to minimize customers’ electricity bills and EV battery degradation through a two-stage MPC-based method that takes into account customers’ thermal preferences.

In [24], the authors propose a decentralised autonomous organization (DAO) model for managing RECs, in order to improve members’ participation and cooperation in sustainable projects and to cope with scalability and flexibility issues. In [25], the integration of distributed energy resources (DERs) in microgrids is discussed, together with the use of blockchain technologies to enable EC setup while preserving the microgrid’s reliability, stability and flexibility. To support investment decisions in ECs, Ref. [26] presents an optimisation model focusing on RECs for the generation of green energy and the promotion of internal energy sharing. Ref. [27] analyses the economic benefits of participating in P2P energy trading among different energy communities, formulating the problem as a constrained game involving multiple leaders, a distribution system operator (DSO) and a market operator. In [28], an economic analysis of ECs is proposed: the authors describe an optimization model based on self- and third-party investments, considering different pricing schemes for determining energy costs. The smart definition of energy costs is also addressed in [29], in which the authors propose a real-time optimization method based on a linear quasi-relaxation approach and a dynamic partitioning technique. Ref. [30] performs a variance-based sensitivity analysis of the influence of (i) forecasting uncertainties and (ii) resource availability and flexibility on REC management. The authors state that this analysis can serve as a decision support tool for evaluating energy performance in uncertain environments.

An interesting solution is proposed in [31], in which the authors combine an optimal control-based approach (a MILP-based algorithm) with a deep reinforcement learning (DRL) algorithm. The proposed solution maximizes social and economic benefits while taking into account the role of ESSs in balancing REC demand. In [32], the influence of EC customers’ preferences in a decentralised EC framework is analysed, with a focus on the allocation of collective renewable energy under different fairness policies. DRL is also adopted in [33], in which the authors develop energy-aware distributed cyber-physical systems (DCPSs) exploiting the cloud and edge computing paradigms. The proposed approach considers the presence of RESs and exploits DRL algorithms to forecast their energy production in order to optimize energy usage. In [34], the authors address the energy sharing problem by means of a DRL algorithm. More specifically, the Deep Q-Network (DQN) algorithm is used to learn the energy consumption behaviour of a household, while a centralized agent computes the energy status of the EC. The individual DRL agents receive data on this energy status and obtain a reward designed to induce cooperative behaviour. In [35], instead, the authors focus on community energy storage systems (CESSs), which allow flexible energy sharing among EC members. Deep learning techniques are used to forecast RES energy production, whereas hybrid optimization techniques are used to cluster EC members and to optimally manage RESs and the shared ESSs. In [36], the authors investigate the benefits of P2P energy trading in the presence of distributed ESSs and RESs across multiple application scenarios. The proposed control framework for P2P energy trading is based on a multi-agent DRL algorithm trained in a decentralized environment.

Table 1 summarizes the findings of the literature review. The articles are compared on the basis of nine main features. F1 captures the nature (decentralized/distributed versus centralized) of the proposed control framework. F2 and F3 indicate whether distributed RESs and ESSs, respectively, have been considered. F4 specifies whether the adopted EC model includes a shared ESS. F5 and F6 specify whether the energy surplus management and ESS charging management problems, respectively, have been considered. F7 indicates whether load demand and RES energy production forecasts are embedded in the proposed control framework. F8 captures the inclusion of fairness principles in the energy management control problem. In the context of energy communities, fairness is defined with respect to (i) decision-making processes and (ii) the sharing of benefits and costs [37]. The former aspect has been tackled in the literature from the design and investment point of view [38,39,40], whereas the latter has been addressed by developing fair P2P energy trading strategies [41,42,43] or fair transactive energy market policies [44,45].

For F8, Table 1 indicates whether at least one of these two aspects has been considered. Finally, F9 specifies whether EC members’ personalized comfort levels have been considered. Based on these features, the following observations can be made. Most of the articles based on real-world use cases focus on analysing the economic and environmental benefits of specific ECs rather than on the control problems related to EC energy management. Some articles propose control algorithms for the energy management problem, and these mostly adopt optimal control-based approaches (e.g., MILP and MPC). Such approaches provide optimal solutions, but at a high computational cost, which limits their real-time applicability. In this respect, AI-based solutions can reduce both the computational burden and the need for a precise mathematical model of the considered system. However, AI has mostly been used to forecast the energy production of RESs or to model the energy consumption of consumers/households. Furthermore, DRL algorithms are characterized by several hyperparameters, whose tuning is a complex trial-and-error task. More generally, the problems addressed in the literature with respect to ECs include (i) P2P energy trading, (ii) economic and environmental analyses, (iii) management of ESSs and RESs for maximizing self-consumption, (iv) satisfaction of grid constraints and (v) sizing of EC elements.

The authors believe that the literature lacks an integrated, scalable approach that jointly tackles EC members’ load demand, the optimization of members’ RES energy production and ESS usage, shared ESSs, energy trading with the EC and the grid, and personalized criteria based on EC members’ preferences. Motivated by these considerations, the proposed control framework adopts a hierarchical multi-agent reinforcement learning (RL) approach, in which each EC member is associated with an RL agent in charge of learning the best energy management strategy with respect to all the above-mentioned problems.

1.2. Article’s Contributions

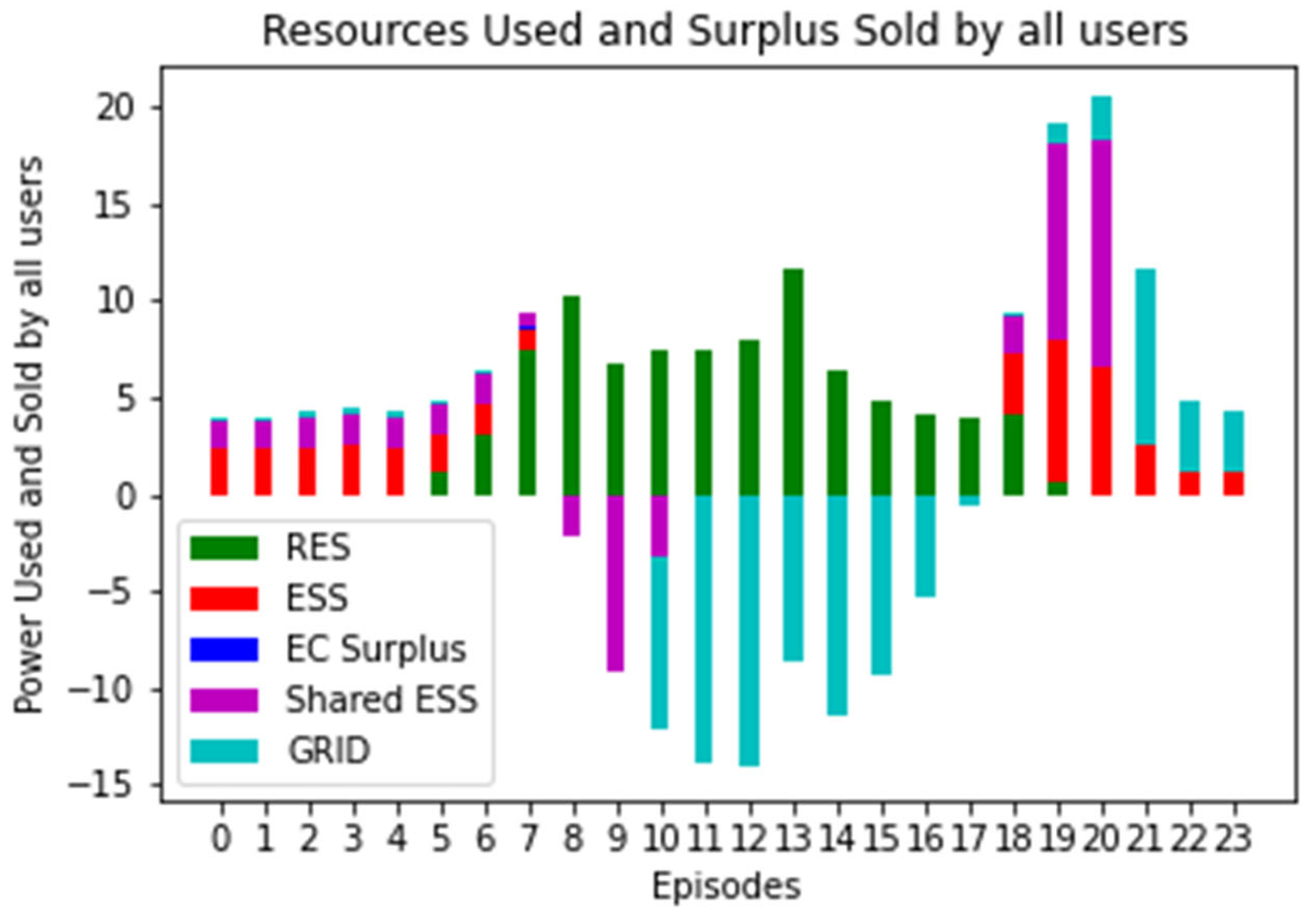

The main contribution of this paper is the development of a cooperative multi-agent AI-based control framework able to learn the optimal energy management strategy for a given EC. The proposed solution can be used to solve the EC energy management problem as well as to evaluate EC performance under different configurations and thus to size the EC elements (e.g., ESSs, RESs, community members, member profiles, etc.). The EC energy management problem consists of determining the best way to exploit the available energy sources. The load demand of a given EC member can be satisfied through (i) the energy produced by the RES, (ii) the energy stored in the ESS, (iii) the energy stored in the shared ESS, (iv) the EC’s energy surplus and (v) the electric grid. Furthermore, it is necessary to determine the best control strategy for charging the shared ESS and managing the EC’s energy surplus. With the proposed solution, each building in the EC can be equipped with local energy management software that runs the trained RL agents, which decide the energy exchanges between the building and (i) the distributed RES and ESS, (ii) the EC and (iii) the electric grid.
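To make the five supply options concrete, the following Python sketch covers a member’s load with a fixed priority order: own RES, own ESS, shared ESS, EC surplus, and finally the grid. The priority order, the `Availability` class and the `dispatch_load` function are hypothetical illustrations, not the authors’ method; in the proposed framework this decision is learned by the RL agents rather than hard-coded.

```python
from dataclasses import dataclass


@dataclass
class Availability:
    """Energy (kWh) each non-grid source can supply in the current time step (hypothetical)."""
    res: float          # (i) energy produced by the member's RES
    ess: float          # (ii) energy stored in the member's ESS
    shared_ess: float   # (iii) energy stored in the shared ESS
    ec_surplus: float   # (iv) EC energy surplus available to this member


def dispatch_load(load: float, avail: Availability) -> dict:
    """Cover `load` using a fixed, hypothetical priority order; the grid covers the rest."""
    remaining = load
    allocation = {}
    for source in ("res", "ess", "shared_ess", "ec_surplus"):
        used = min(remaining, getattr(avail, source))  # never draw more than is still needed
        allocation[source] = used
        remaining -= used
    allocation["grid"] = remaining  # (v) the electric grid supplies any residual demand
    return allocation


print(dispatch_load(5.0, Availability(res=2.0, ess=1.0, shared_ess=0.5, ec_surplus=0.0)))
```

For example, a 5 kWh demand with 2 kWh of RES production, 1 kWh in the local ESS and 0.5 kWh in the shared ESS leaves 1.5 kWh to be drawn from the grid. A fixed rule of this kind could serve as a simple baseline against which a learned policy is compared.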

To the best of the authors’ knowledge, a multi-agent AI-based control framework that simultaneously tackles load demand satisfaction, individual and shared ESS and RES management, and EC energy surplus management has not yet been proposed in the literature. From a technical perspective, the proposed multi-agent solution is based on the well-known Q-Learning algorithm.
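For readers unfamiliar with Q-Learning, the sketch below shows the standard tabular update, Q(s,a) ← Q(s,a) + α[r + γ max_a′ Q(s′,a′) − Q(s,a)], with ε-greedy exploration. It is trained on a hypothetical four-state toy chain rather than on an EC model; the class and function names and all hyperparameter values are assumptions. In a multi-agent setting, one such agent could be instantiated per EC member.

```python
import random
from collections import defaultdict


class QLearningAgent:
    """Tabular Q-Learning agent (sketch): states must be hashable, actions are 0..n_actions-1."""

    def __init__(self, n_actions, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
        self.n_actions = n_actions
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration probability
        self.rng = random.Random(seed)
        # Q-table: each discrete state maps to a list of action values, initialised to 0.
        self.q = defaultdict(lambda: [0.0] * n_actions)

    def act(self, state):
        """Epsilon-greedy action selection with random tie-breaking."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        values = self.q[state]
        best = max(values)
        return self.rng.choice([a for a, v in enumerate(values) if v == best])

    def update(self, state, action, reward, next_state, done):
        """Apply Q(s,a) <- Q(s,a) + alpha * (target - Q(s,a))."""
        # Terminal transitions have no future value to bootstrap from.
        target = reward if done else reward + self.gamma * max(self.q[next_state])
        self.q[state][action] += self.alpha * (target - self.q[state][action])


def chain_step(state, action, goal=3):
    """Hypothetical toy chain: action 1 moves right, action 0 moves left; reward 1 at the goal."""
    next_state = min(state + 1, goal) if action == 1 else max(state - 1, 0)
    done = next_state == goal
    return next_state, (1.0 if done else 0.0), done


agent = QLearningAgent(n_actions=2, alpha=0.5, gamma=0.9, epsilon=0.2)
for _ in range(500):
    state, done = 0, False
    while not done:
        action = agent.act(state)
        next_state, reward, done = chain_step(state, action)
        agent.update(state, action, reward, next_state, done)
        state = next_state

# The greedy policy should move right (action 1) in every non-terminal state.
print([max(range(2), key=agent.q[s].__getitem__) for s in range(3)])
```

Note that `alpha` is held constant here for simplicity; the classical convergence guarantee for Q-Learning assumes a suitably decaying learning rate.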

Under specific conditions (every state-action pair visited infinitely often and a suitably decaying learning rate), tabular Q-Learning is guaranteed to converge to the optimal policy, a property that Deep Reinforcement Learning algorithms do not guarantee in general. However, this convergence property comes at the cost of limited scalability, since the size of the Q-table grows with the number of state-action pairs. To address this aspect, the considered control problems were decoupled into three sequential stages. In addition, an ad hoc state aggregation procedure was developed to map the RL agents’ continuous state spaces into discrete state spaces of limited size, further reducing the algorithms’ computational complexity. Finally, the proposed solution captures the most recent EU directive principles regarding EC structures, member cooperation, and privacy-preserving and fair energy management.
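The general idea of state aggregation can be illustrated with a simple binning scheme that maps continuous features to a tuple of interval indices. The chosen features (ESS state of charge and net load) and their bin edges are hypothetical assumptions; the paper’s ad hoc aggregation procedure is not reproduced here.

```python
from bisect import bisect_right
from math import prod

# Hypothetical bin edges (assumptions, not the authors' values).
SOC_EDGES = (0.2, 0.4, 0.6, 0.8)    # ESS state of charge as a fraction of capacity -> 5 levels
NET_LOAD_EDGES = (-1.0, 0.0, 1.0)   # member load minus RES production, kWh -> 4 levels
EDGES = (SOC_EDGES, NET_LOAD_EDGES)


def aggregate_state(features, edges=EDGES):
    """Map continuous features to a tuple of bin indices, one index per feature."""
    if len(features) != len(edges):
        raise ValueError("one edge list per feature is required")
    # bisect_right counts the edges <= x, which is the index of the interval containing x.
    return tuple(bisect_right(e, x) for x, e in zip(features, edges))


def n_discrete_states(edges=EDGES):
    """Size of the aggregated state space: product of (number of edges + 1) over features."""
    return prod(len(e) + 1 for e in edges)


print(aggregate_state((0.5, 0.3)), n_discrete_states())
```

With these edges, the continuous state space collapses to 5 × 4 = 20 discrete states, and the resulting tuple can be used directly as a hashable key of a tabular Q-function.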