Abstract

Achieving attention tracking as easily as recording eye movements is still beyond reach. However, by exploiting Steady-State Visual Evoked Potentials (SSVEPs) we recently succeeded in recording, in single trials, the horizontal trajectory of covert visuospatial attention, both when observers attended to target motion and during mental motion extrapolation. Here we show that, despite the different cortical functional architecture for horizontal and vertical motion processing, the same result is obtained for vertical attention tracking. Thus, trustworthy real-time two-dimensional attention tracking, with both physical and imagined target motion, does not seem to be a distant goal.

Introduction

Eye movements are often considered a proxy for overt visuospatial attention. Indeed, attention shifts customarily accompany saccades and smooth pursuit eye movements (Groner & Groner, 1989; Deubel & Schneider, 1996; Hoffman & Subramaniam, 1995; Lovejoy, Fowler, & Krauzlis, 2009; Van Donkelaar & Drew, 2002). Because recording eye movements is easy and fast, eye tracking is commonly used as a means to assess the movements of visuospatial attention in real time (Hyönä, Radach, & Deubel, 2003). However, covertly shifting attention does not involve eye movements, except microsaccades (Corneil & Munoz, 2014; Laubrock, Engbert, & Kliegl, 2005).

Since the pioneering studies of Hermann von Helmholtz, several behavioral methods have been developed to assess covert attention shifts (Deubel & Schneider, 1996; Posner, 1980), but none affords continuous measurement of covert attention movements in the same way eye tracking affords eye movement recording. This stems from the simple fact that these methods require single responses (e.g., a speeded response or a detection response) that need to be averaged in order to document an attention shift (Deubel & Schneider, 1996; Posner, 1980; Shioiri, Cavanagh, Miyamoto, & Yaguchi, 2000; Shioiri, Yamamoto, & Yaguchi, 2000). This also holds for ERP-based studies (Drew, Mance, Horowitz, Wolfe, & Vogel, 2014; Drew & Vogel, 2008; Mangun, Hillyard, & Luck, 1993). When the trajectory of covert attention movements is at stake (i.e., not simply distinguishing attended from non-attended stimuli), the problem is even more severe. Several hundred trials may be required in order to reconstruct an attention movement a posteriori, and necessarily in a coarse way (e.g., de’Sperati & Deubel, 2006).

To overcome these difficulties, we recently described a method to track the continuous horizontal movements of covert visuospatial attention by means of Steady-State Visual Evoked Potentials (SSVEPs; Gregori Grgič, Calore, & de’Sperati, 2016). SSVEPs are a characteristic cortical response evoked by repetitive visual stimulation, consisting of a sinusoidal waveform that oscillates at the same frequency as the stimulus and originates from early visual cortex (Di Russo et al., 2007; Pastor, Artieda, Arbizu, Valencia, & Masdeu, 2003). SSVEP amplitude was found to be strongly modulated by spatial selective attention, being larger in response to a flickering stimulus at an attended versus an unattended location (Morgan, Hansen, & Hillyard, 1996). Thus, SSVEPs provide an efficient method to study covert visuospatial attention.

To achieve continuous attention tracking, we used a background double-flickering technique, where the right and left halves of the display flickered at distinct frequencies. This produced two distinct SSVEPs in posterior cortical regions whose amplitudes varied reciprocally as a function of horizontal eye position. When observers followed with their eyes a target oscillating sinusoidally in the horizontal plane, the ensuing SSVEP modulation reflected rather faithfully the smooth pursuit ocular traces (Figure 1A). In most cases, the SSVEP modulation was clearly visible even in single trials. Importantly, this held true also when observers were asked to covertly attend to the moving target without moving the eyes, although in that case the SSVEP modulation was about one quarter of that obtained during overt tracking (Figure 1B). That is, we achieved covert attention tracking in a way operationally similar to eye tracking.

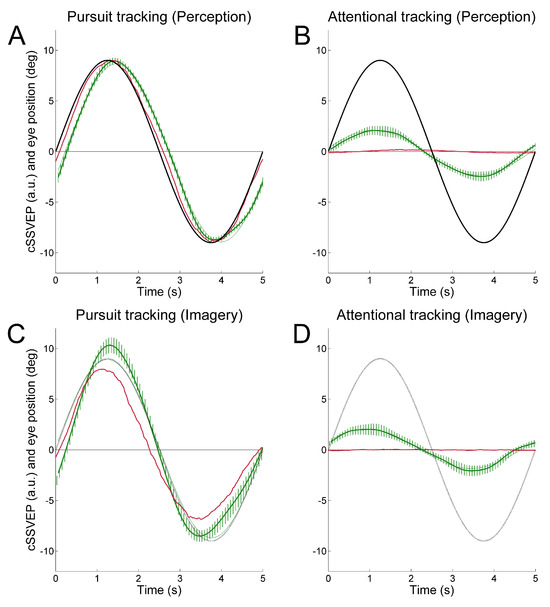

Figure 1.

Average cSSVEP modulation (dark green traces) during horizontal sinusoidal tracking in Pursuit (A, C) and Attentional (B, D) tracking. Only the second (perception: A, B) and third (imagery: C, D) tracking cycles are shown. The thickness of the vertical lines represents the instantaneous 99% confidence interval around the mean. The thin dark sinusoidal curves superimposed on the cSSVEP traces are the best fitting curves. Target position and average eye position are illustrated in black and dark red.

We found identical results when observers mentally extrapolated in imagery the trajectory of a moving target (Figure 1C, D), thus providing strong evidence that dynamic mental imagery, even when unaccompanied by overt eye movements, is associated with sensory modulation in visual cortical areas, at least in a simple motion extrapolation task (de’Sperati, 1999, 2003; Jonikaitis, Deubel, & de’Sperati, 2009; see also Makin & Bertamini, 2014).

In this preceding study, we tested only horizontal movements of visuospatial attention. Clearly, it is desirable to be able to achieve two-dimensional attention tracking. This could in principle be possible by using four flickering frequencies, two for each tracking dimension. As a preliminary step, here we examined whether the same experimental setup previously used for horizontal movements also works with purely vertical tracking. In principle, in fact, the SSVEP modulation produced by vertical attention shifts might not be equivalent to that produced by comparable horizontal shifts, as interhemispheric mechanisms may affect horizontal but not vertical shifts (Drew et al., 2014; Stormer, Alvarez, & Cavanagh, 2014). We found that, similarly to horizontal attention tracking, vertical attention tracking also induced quasi-sinusoidal, target-related SSVEP modulations.

Methods

Participants

Eight participants were recruited (1 male and 7 females, right-handed, with normal or corrected-to-normal vision, aged between 20 and 22). All subjects had no or very limited prior experience with eye movements, visuospatial attention or mental imagery experiments. None of the participants or their first-degree relatives had a history of neurological diseases. The study was conducted in accordance with the recommendations of the Declaration of Helsinki and the local Ethical Committee (“Comitato Etico”, San Raffaele). Before the experiment, participants signed the informed consent.

Stimuli and tasks

Observers were seated in a moderately darkened room in front of a computer screen (Dell P991 Trinitron, 19 inches; frame rate: 60 Hz; resolution: 1600 x 1200 pixels; viewing distance: ~57 cm), with their heads resting on a forehead support. The stimulus presentation software was developed in C++, using the OpenGL library, with a triggering mechanism tightly synchronized with the acquisition software. For the entire duration of each trial, the screen was split into two halves, an upper half and a lower half, flickering at two different frequencies, 15 and 20 Hz respectively or vice-versa. The flickering frequencies had to be sufficiently high to apply a short moving window (1 s) to the EEG signal and to avoid excessive visual discomfort (Wang, Wang, Gao, Hong, & Gao, 2006). Flickering was obtained by alternating a white and a black half-screen patch (luminance: 113 and 0.8 cd/m², respectively). Superimposed on the flickering background, a target (a circular dark gray spot, not flickering; diameter: 0.5 deg; luminance: 11 cd/m²) oscillated vertically with sinusoidal motion (0.2 Hz, ±9 deg). The target moved for two cycles (Perception cycles: motion observation), disappeared for the third cycle (Imagery cycle: motion imagery), and became visible again for the fourth (last) cycle. Participants had to attend to the target motion for the entire duration of the trial (4 cycles, 20 s). During the third cycle, however, the target was invisible and observers had to mentally imagine its motion “as if the target were still present”. At the end of each trial participants performed a temporal discrimination task in which they reported whether they had the impression of leading or lagging behind the target during the imagery cycle, based on the re-appearance of the visible target at the beginning of the fourth cycle. Because their heads were restrained, observers gave their responses through a pre-determined code (one-finger tap = lead, two-finger tap = lag).
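The timing of this stimulus can be made concrete with a short sketch. It is only an illustration under stated assumptions: the text does not say how the 20 Hz flicker was distributed over the 3 frames available per cycle at 60 Hz, nor the exact starting phase of the target, so the duty cycle and the zero-phase start used below are hypothetical, as are all function names.

```python
import math

FRAME_RATE_HZ = 60        # monitor frame rate reported in the text
TARGET_FREQ_HZ = 0.2      # target oscillation frequency
TARGET_PERIOD_S = 5.0     # 1 / 0.2 Hz
AMPLITUDE_DEG = 9.0       # target excursion (+/- 9 deg)
IMAGERY_CYCLE = 2         # zero-based index of the third (invisible) cycle


def frames_per_flicker_cycle(flicker_hz, frame_rate_hz=FRAME_RATE_HZ):
    """Number of video frames in one flicker cycle (must be an integer)."""
    if frame_rate_hz % flicker_hz != 0:
        raise ValueError("flicker frequency must divide the frame rate")
    return frame_rate_hz // flicker_hz


def patch_is_white(frame_index, flicker_hz):
    """Assumed duty cycle: white for the first ceil(n/2) frames of each cycle."""
    n = frames_per_flicker_cycle(flicker_hz)
    return (frame_index % n) < math.ceil(n / 2)


def target_state(t_s, start_upward=True):
    """Vertical target position (deg) and visibility at time t_s.

    Assumption: the target starts at screen centre (zero phase) and
    moves upward or downward first, depending on start_upward.
    """
    cycle = int(t_s // TARGET_PERIOD_S)
    direction = 1.0 if start_upward else -1.0
    y_deg = direction * AMPLITUDE_DEG * math.sin(2 * math.pi * TARGET_FREQ_HZ * t_s)
    visible = cycle != IMAGERY_CYCLE
    return y_deg, visible
```

With a 60 Hz display, 15 Hz corresponds to 4 frames per cycle and 20 Hz to 3 frames per cycle, so the 20 Hz flicker cannot have an exactly symmetric white/black duty cycle; the split chosen here is one possible option.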

The experiment consisted of 2 tasks: Pursuit tracking and Attentional tracking. During the Pursuit tracking task, participants attended to the target by following it with smooth pursuit eye movements (overt visuospatial attention), and were free to move their eyes in the third cycle (imagery condition). The Attentional tracking task was identical to the Pursuit tracking task, except that participants fixated a central fixation dot (covert visuospatial attention). A few familiarization trials were provided before the beginning of the experiment.

Eye tracking and EEG recordings

Eye movements and EEG were recorded simultaneously for each participant. Vertical eye movements were measured monocularly through infrared oculometry (Dr. Bouis Oculometer, nominal accuracy: <0.3 deg) in a head-restrained condition. The analog eye position signal was calibrated, visualized in real time on an oscilloscope, sampled through an A/D converter (resolution: 12 bit; sampling frequency: 512 Hz), low-pass filtered (cut-off: 256 Hz), and stored for subsequent analysis. Eye blinks were removed and replaced by linear interpolation.

EEG traces were recorded using the g.tec g.MOBIlab+ (resolution: 16 bit; sampling frequency: 256 Hz). The EEG traces were recorded using 4 electrodes positioned on scalp locations over the visual cortex: PO7-PO8-Oz-Pz, referenced to the right ear lobe and grounded to Fpz, according to the extended 10-20 system. Electrodes were gel-based passive gold plates, and were placed on the scalp by means of an EEG cap. The impedance was kept under 5 kΩ (measured at 10 Hz). Data were acquired using the OpenVibe software framework (Renard et al., 2010) and saved for off-line analysis.

EEG signal processing

The SSVEP response was computed using the Minimum Energy Combination method, extracting the T index (Friman, Volosyak, & Graser, 2007) from the EEG signals of all electrodes (PO7, PO8, Oz, Pz) in overlapping moving windows (length of windows: 1 s; temporal gap between two subsequent moving windows: 62.5 ms). The Minimum Energy Combination method combines electrode signals into ‘channel’ signals in order to amplify the SSVEP response and to reduce the impact of unrelated brain activity and noise. A channel signal is a linear combination of electrode signals that spatially filters the original multielectrode recording.
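As a worked illustration of the windowing arithmetic, the sketch below converts the reported window length and step into samples at the 256 Hz EEG sampling rate. The function name is hypothetical, and how the original software handled the edges of a trial is not stated, so the sketch simply keeps every window that fits entirely inside the recording.

```python
FS_EEG_HZ = 256     # EEG sampling frequency reported in the text
WINDOW_S = 1.0      # moving-window length
STEP_S = 0.0625     # gap between subsequent windows (62.5 ms)


def window_starts(n_samples, fs_hz=FS_EEG_HZ, window_s=WINDOW_S, step_s=STEP_S):
    """Return (start indices, window length in samples) of all full windows."""
    window_len = int(round(window_s * fs_hz))   # 256 samples
    step_len = int(round(step_s * fs_hz))       # 16 samples
    starts = list(range(0, n_samples - window_len + 1, step_len))
    return starts, window_len


# A 20 s trial sampled at 256 Hz contains 5120 samples.
starts, window_len = window_starts(20 * FS_EEG_HZ)
```

Under these assumptions each window holds 256 samples, consecutive windows are 16 samples apart, and the resulting T-index series is sampled at 16 values per second.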

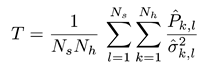

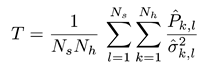

Equation (1) shows how the T index is defined:

$$T = \frac{1}{N_s N_h} \sum_{l=1}^{N_s} \sum_{k=1}^{N_h} \frac{\hat{P}_{k,l}}{\hat{\sigma}^2_{k,l}} \qquad (1)$$

where $\hat{P}_{k,l}$ is the estimated SSVEP power for the k-th harmonic frequency in channel signal $s_l$ and $\hat{\sigma}^2_{k,l}$ is an estimate of the noise and unrelated brain activity at the same frequency. Thus, the T index evaluates the SSVEP response intensity with respect to the noise, by averaging the signal-to-noise ratios (SNRs) across $N_h$ harmonics and $N_s$ channel signals. In this study, as in our previous work, we considered the first harmonic for the 20 Hz frequency and the first 2 harmonics for the 15 Hz frequency. See our previous research for more details about EEG signal processing (Gregori Grgič et al., 2016).
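To make the structure of the T index more tangible, here is a minimal NumPy sketch of a Minimum-Energy-Combination-style computation on one EEG window. It is not the implementation used in the study. In particular, the noise term is estimated here under a plain white-noise assumption (residual variance scaled by the window length), which is a simplification introduced for illustration, and the `energy_fraction` parameter, function names and synthetic data generator are all hypothetical.

```python
import numpy as np


def harmonic_matrix(freq_hz, n_harmonics, n_samples, fs_hz):
    """Sine/cosine regressors for the first n_harmonics of freq_hz."""
    t = np.arange(n_samples) / fs_hz
    columns = []
    for k in range(1, n_harmonics + 1):
        columns.append(np.sin(2 * np.pi * k * freq_hz * t))
        columns.append(np.cos(2 * np.pi * k * freq_hz * t))
    return np.column_stack(columns)


def t_index(eeg, freq_hz, n_harmonics, fs_hz, energy_fraction=0.1):
    """Simplified T index for one window; eeg has shape (samples, electrodes)."""
    n_samples = eeg.shape[0]
    x = harmonic_matrix(freq_hz, n_harmonics, n_samples, fs_hz)

    # Remove the SSVEP components to obtain the nuisance (residual) signal.
    beta = np.linalg.lstsq(x, eeg, rcond=None)[0]
    residual = eeg - x @ beta

    # Spatial filters: eigenvectors with the smallest nuisance energy.
    eigvals, eigvecs = np.linalg.eigh(residual.T @ residual)  # ascending order
    cumulative = np.cumsum(eigvals) / np.sum(eigvals)
    n_s = int(np.searchsorted(cumulative, energy_fraction, side="right")) + 1
    n_s = min(n_s, len(eigvals))
    weights = eigvecs[:, :n_s] / np.sqrt(eigvals[:n_s])

    channels = eeg @ weights
    # Assumption: white noise, so the expected noise power at each harmonic
    # equals n_samples times the residual variance of the channel signal.
    noise = n_samples * np.var(residual @ weights, axis=0)

    ratios = []
    for k in range(n_harmonics):
        x_k = x[:, 2 * k:2 * k + 2]
        power = np.sum((x_k.T @ channels) ** 2, axis=0)
        ratios.append(power / noise)
    # Average of the SNRs over harmonics and channel signals, as in Equation (1).
    return float(np.mean(ratios))


def synthetic_eeg(freq_hz, amplitude=3.0, noise_sd=0.5,
                  n_samples=256, fs_hz=256.0, seed=0):
    """Hypothetical 4-electrode test signal: one sinusoid plus white noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(n_samples) / fs_hz
    mixing = np.array([1.0, 0.8, 1.2, 0.5])
    signal = amplitude * np.sin(2 * np.pi * freq_hz * t)[:, None] * mixing
    return signal + noise_sd * rng.standard_normal((n_samples, 4))
```

Applied to a synthetic window that contains only a 15 Hz component, the index computed at 15 Hz (two harmonics) should come out much larger than the index computed at 20 Hz (one harmonic), which is the contrast the double-flickering technique relies on.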

Experimental design and statistical analyses

The task sequence was fixed and presented in the order described above. The Pursuit task was run first because it was used to calibrate the SSVEP signal.

Flickering side and motion direction were balanced within each task, so that the Pursuit and Attentional tracking tasks consisted of 8 trials each: 2 motion directions (target starting upward or downward) x 2 flickering sides (upper screen side at 15 Hz and bottom screen side at 20 Hz, or vice-versa) x 2 repetitions. The entire experiment, including subject preparation, lasted less than half an hour. Participants were invited to take breaks whenever they wished.

For the statistical analyses, repeated-measures ANOVA, paired-samples t-tests, and one-sample, one-tailed Student’s t-tests (with Bonferroni correction) were used. Except for single-trial analyses, data were collapsed subject-wise. The distributions conformed to the normality assumption (Kolmogorov-Smirnov Z test).

Results

Each flickering half-screen (15 and 20 Hz) elicited a corresponding oscillation in the EEG. We computed the instantaneous profile of the T index (obtained through the Minimum Energy Combination algorithm; Friman et al., 2007) for the 20 Hz component, the instantaneous profile of the T index for the 15 Hz component, and the instantaneous profile for the combined T index. The combined T index was computed as the average of the two individual T values, one inverted in sign. In this way the evolution of two different signals was condensed into a single quantity: a prevalence of the 20 Hz component produced a positive inflection of the combined T index, while a prevalence of the 15 Hz component produced a negative inflection. The combined T index was zero if the two components were equal.

The combined T index was then normalized, for each subject, to the second cycle of pursuit tracking, when the SSVEP signal is more stable. We called this new quantity ‘combined SSVEP’ (cSSVEP). When the gaze was in the centre of the screen the cSSVEP value assigned was zero, whereas when the gaze was directed at the maximal target distance from the centre (±9 deg) the cSSVEP was set to ±9 (arbitrary units). The cSSVEP signal was then low-pass filtered (cutoff frequency: 0.25 Hz), and subjected to drift removal (moving average, span=79 points). Due to the smooth nature of sinusoidal target motion, we used a moving window of 1 s.
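The chain from the two T-index series to the cSSVEP trace can be sketched as follows. This is a hedged illustration, not the original pipeline: the text does not specify the filter type, so a simple FFT-based low-pass is assumed; the calibration is modelled as a linear least-squares map onto target position during the second pursuit cycle; and the span of 79 points is assumed to be counted in T-index samples (one every 62.5 ms), which would make it about 4.9 s, close to one 5 s target cycle. All function names are hypothetical.

```python
import numpy as np

T_RATE_HZ = 16.0   # one T-index value every 62.5 ms (assumed sample spacing)


def combined_t(t20, t15):
    """Average of the two T values with the 15 Hz one inverted in sign."""
    return 0.5 * (np.asarray(t20, dtype=float) - np.asarray(t15, dtype=float))


def calibrate(combined_cal, position_cal_deg):
    """Assumed calibration: linear fit of position (deg) on the combined T index."""
    slope, offset = np.polyfit(combined_cal, position_cal_deg, 1)
    return slope, offset


def lowpass_fft(x, cutoff_hz, rate_hz=T_RATE_HZ):
    """Assumed filter: zero all Fourier components above cutoff_hz."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / rate_hz)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def remove_drift(x, span=79):
    """Subtract a centred moving average (edge samples are less reliable)."""
    kernel = np.ones(span) / span
    trend = np.convolve(x, kernel, mode="same")
    return x - trend


def cssvep(t20, t15, slope, offset, cutoff_hz=0.25, span=79):
    """Combine, calibrate, low-pass filter and detrend the T-index series."""
    trace = slope * combined_t(t20, t15) + offset
    return remove_drift(lowpass_fft(trace, cutoff_hz), span)
```

A moving average that spans roughly one full target cycle leaves a 0.2 Hz sinusoid almost untouched while following slow drifts, which is one plausible reason for choosing a span of this size.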

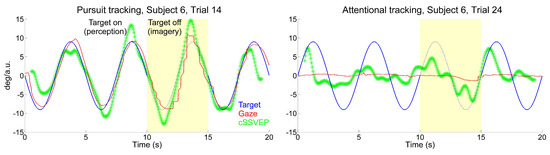

When observers followed the target with their eyes moving upward and downward across the double-flickering background, the cSSVEP signal presented a clear target-contingent, sinusoidal-like modulation. This systematic modulation was present in many single-trial recordings, even when subjects had to keep their gaze on the central fixation dot, although with some irregular variations. Examples of eye movements and cSSVEP recordings during one overt and one covert tracking trial are given in Figure 2. In the covert tracking trials, the cSSVEP signal was noisy, but almost regular target-contingent cSSVEP modulations were nonetheless often visible, both during perception (white background) and imagery (yellow background).

Figure 2.

Examples of single-trial cSSVEP recordings (green traces). Data are taken from one representative observer during overt (left panel) and covert (right panel) attentional tracking. Target position and eye position are shown in blue and red, respectively. The yellow transparent patch indicates the imagery cycle.

Despite the high variability present in various trials, the average cSSVEP traces showed a clear systematic modulation paralleling the target motion (Figure 3), both when the target was visible and when it was invisible. Before averaging, the individual traces underwent phase-compensation because averaging non-phased signals may erase systematic modulation, especially during covert tracking of the invisible target. We took the phase shift of the sinusoidal modulation of the cSSVEP signal relative to the target motion resulting from a sinusoidal fitting, and shifted the entire cSSVEP trace in time by the opposite amount. The mean phase shift across trials and subjects was then re-introduced in the averaged cSSVEP trace by shifting it in time by the corresponding amount. As in our previous study, we dropped the trials with a significant correlation between cSSVEP modulation and residual eye movements in the Attentional tracking task (56% of the covert attention tracking trials, 28% overall). Thus, our results could not be explained by miniature fixational eye movements directed towards the target position.
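The phase-compensation step described above can be sketched as follows. This is a minimal illustration rather than the original code: it assumes a linear least-squares sinusoidal fit at the target frequency, time shifts implemented by linear interpolation (which clamps values at the edges of the trace), and a circular mean of the single-trial phases. Function names are hypothetical.

```python
import numpy as np

TARGET_FREQ_HZ = 0.2


def fit_sinusoid(t, y, freq_hz=TARGET_FREQ_HZ):
    """Least-squares fit y ~ A*sin(2*pi*f*t + phi) + c.

    Returns the amplitude A and the lag in seconds relative to sin(2*pi*f*t);
    a positive lag means the trace is delayed with respect to the target.
    """
    w = 2 * np.pi * freq_hz
    design = np.column_stack([np.sin(w * t), np.cos(w * t), np.ones_like(t)])
    coeffs = np.linalg.lstsq(design, y, rcond=None)[0]
    a, b = coeffs[0], coeffs[1]
    amplitude = float(np.hypot(a, b))
    phase = float(np.arctan2(b, a))
    return amplitude, -phase / w


def phase_compensated_average(t, traces, freq_hz=TARGET_FREQ_HZ):
    """Align traces in phase, average them, then restore the mean lag."""
    w = 2 * np.pi * freq_hz
    lags = np.array([fit_sinusoid(t, y, freq_hz)[1] for y in traces])
    # Shift each trace in time by the opposite of its own lag.
    aligned = [np.interp(t + lag, t, y) for y, lag in zip(traces, lags)]
    mean_trace = np.mean(aligned, axis=0)
    # Circular mean of the single-trial phases, converted back to a lag.
    mean_lag = -float(np.angle(np.mean(np.exp(-1j * w * lags)))) / w
    # Re-introduce the mean lag in the averaged trace.
    return np.interp(t - mean_lag, t, mean_trace), mean_lag
```

Without the alignment step, traces with scattered phases would partly cancel each other, so the average would underestimate the modulation present in the individual trials.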

Figure 3.

Average cSSVEP modulation (green traces) during vertical sinusoidal tracking in the 2 tasks. Only the second (perception) and third (imagery) tracking cycles are illustrated. The thickness of the trace represents the instantaneous 99% confidence interval around the mean. The thin dark sinusoidal curves superimposed on the cSSVEP traces are the best fitting curves for the perception and imagery cycles. Target position and average eye position are shown in blue and red, respectively. The yellow transparent patch indicates the imagery cycle.

These findings showed that vertical movements of covert visuospatial attention were accompanied by a clear systematic modulation of cSSVEPs.

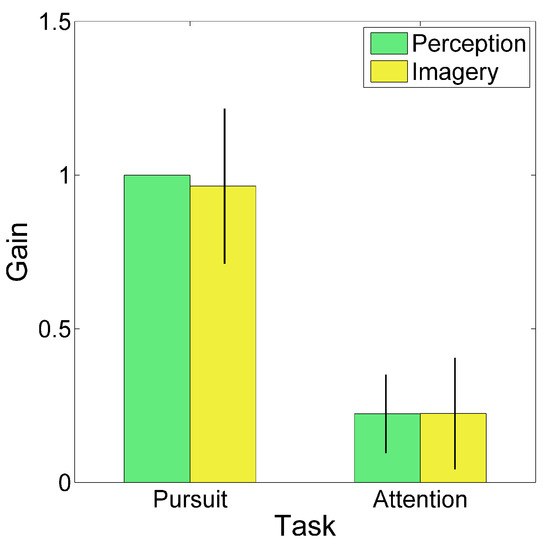

To quantify this cSSVEP modulation, the average traces were fitted with a sinusoidal function (thin dark curves in Figure 3). We computed the gain of the cSSVEP modulation in single trials, separately for the perception (second cycle) and imagery (third cycle) conditions. Gain was defined as the ratio between the cSSVEP peak-to-peak fitted sinusoidal amplitude and the peak-to-peak target sinusoidal amplitude. Then, given the applied normalization, the mean cSSVEP gain was 1 in the perception cycles during overt tracking. The mean cSSVEP gain, averaged across trials and subjects, is illustrated in Figure 4, separately for the different tasks and conditions (perception and imagery). The gain values in the covert attention task (Attentional tracking) confirmed that a clear cSSVEP modulation was present even when central fixation was required, both when the target was visible and when it was invisible. No gain differences emerged between perception and imagery (main effect of Condition: F(1,7)=0.238, p=0.640; interaction Task x Condition: F(1,7)=0.106, p=0.755). The main effect of Task was statistically highly significant (F(1,7)=116.364, p<0.001), because of the large difference between overt and covert tracking. All gain values were significantly larger than 0 (imagery during pursuit task: t(7)=11.437, p<0.001, Effect Size Cohen’s d=4.043; perception during attentional task: t(7)=5.207, p=0.001, Effect Size d=1.841; imagery during attentional task: t(7)=3.705, p=0.004, Effect Size d=1.310; note that the critical value to reach significant results is 0.0125 due to Bonferroni correction). Thus, the main findings from the gain analysis suggested the presence of a clear cSSVEP modulation in the covert attention task in the vertical plane, amounting to about 22% of the modulation amplitude found during overt tracking.
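The quantities used in this paragraph follow from simple formulas, sketched below as a consistency check. The assumptions are labelled in the comments: the Bonferroni threshold of 0.0125 is read here as 0.05 divided by 4 comparisons, and the reported effect sizes are taken to be the one-sample Cohen's d, which equals t divided by the square root of the sample size. Function names are hypothetical.

```python
import math


def modulation_gain(fitted_amplitude, target_amplitude_deg=9.0):
    """Ratio of fitted peak-to-peak cSSVEP amplitude to target peak-to-peak amplitude."""
    return (2.0 * fitted_amplitude) / (2.0 * target_amplitude_deg)


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance threshold after Bonferroni correction."""
    return alpha / n_tests


def cohens_d_one_sample(t_value, n):
    """One-sample Cohen's d recovered from a t statistic (assumed definition)."""
    return t_value / math.sqrt(n)


# Assumption: 4 comparisons, which reproduces the reported threshold of 0.0125.
threshold = bonferroni_alpha(0.05, 4)

# t(7) values reported in the text, with n = 8 participants.
effect_sizes = [cohens_d_one_sample(t_value, 8) for t_value in (11.437, 5.207, 3.705)]
```

Under these assumptions the recomputed effect sizes agree with the reported values of 4.043, 1.841 and 1.310 to within rounding of the t statistics.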

Figure 4.

Gain of cSSVEP modulation. Perception and Imagery are the second and third cycle, respectively. Bars represent the 99% confidence interval (in the perception cycle of Pursuit tracking there is no bar because the gain is 1 by definition).

As for the phase shift, we computed means and confidence intervals through circular statistics. When the observers tracked the target with smooth pursuit eye movements, in the perception condition there was a mean cSSVEP phase lag of 143 ms. Eye movements were lagging on average by 85 ms behind the target position, thus the net lag of cSSVEP behind eye position was 58 ms. This indicates that also in this vertical setup, once the steady-state is reached, SSVEPs reflect the changes of cortical visual responsiveness almost in real-time. In the imagery condition, attentional tracking was associated with a cSSVEP phase lead (38 ms), but neither the ANOVAs nor the paired samples t-tests gave statistically significant results (always p>0.1), likely because of the large variability in the covert condition, possibly due to the unnatural fixation effort.
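For readers less familiar with circular statistics, the sketch below shows the circular mean and the conversion between time lags and phase angles at the 0.2 Hz target frequency. It is a generic illustration, not the analysis code of the study, and the function names are hypothetical.

```python
import math

TARGET_FREQ_HZ = 0.2


def lag_ms_to_phase_rad(lag_ms, freq_hz=TARGET_FREQ_HZ):
    """Phase angle (rad) corresponding to a time lag at the target frequency."""
    return 2 * math.pi * freq_hz * lag_ms / 1000.0


def circular_mean(angles_rad):
    """Mean direction of a set of angles, robust to wrap-around at +/- pi."""
    s = sum(math.sin(a) for a in angles_rad)
    c = sum(math.cos(a) for a in angles_rad)
    return math.atan2(s, c)


# Lags reported in the text for the perception condition of pursuit tracking.
cssvep_lag_ms = 143
eye_lag_ms = 85
net_lag_ms = cssvep_lag_ms - eye_lag_ms   # lag of cSSVEP behind eye position
```

At 0.2 Hz one full cycle lasts 5 s, so the 143 ms lag corresponds to a phase angle of roughly 10 degrees. The circular mean matters when phases scatter around the wrap-around point: the arithmetic mean of +179 and -179 degrees is 0, whereas their circular mean is 180 degrees.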

Identical results were found when all trials were included in the analyses (Gain: main effect of Task: F(1,7)=255.478, p<0.001; main effect of Condition: F(1,7)=0.268, p=0.620; interaction Task x Condition: F(1,7)=0.109, p=0.751; Student’s t tests always p<0.001; Phase: always p>0.05).

Next, we analyzed the relationship between cSSVEP modulation and subjective timing in imagery. We did not find a significant association between the subjects’ response and the cSSVEP phase (trial-wise point-biserial correlation, R=0.061, p>0.05). The phase difference between the two average cSSVEP traces in the two subjective conditions was 76 ms, as computed through sinusoidal fitting, which suggests a general tendency of cSSVEP modulation to be delayed when observers subjectively reported a delay in imagery. It is likely that the low number of trials was responsible for the above-mentioned lack of correlation.

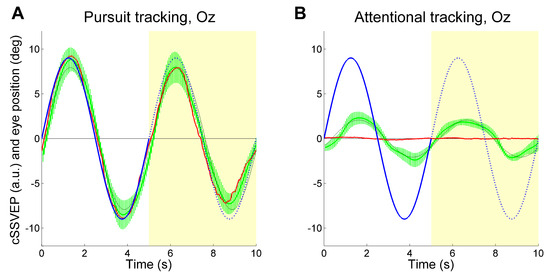

For the above analyses, we used the signals from all electrodes (PO7, PO8, Oz, Pz), combined through the Minimum Energy Combination algorithm (see Gregori Grgič et al., 2016 for details). To assess the contribution of the more occipital cortical regions to SSVEP modulation, we analyzed the results by extracting the T index derived only from electrode Oz. This was done separately for each flickering frequency before combining them into the cSSVEP. Again, we found a clear target-related cSSVEP modulation (see Figure 5), indicating that attention tracking is feasible by using a single electrode at Oz also during vertical tracking.

Figure 5.

Average cSSVEP modulation (green traces) during Vertical tracking (Pursuit tracking and Attentional tracking), using the signal from the electrode at Oz. Conventions as in Figure 3.

Discussion

Our results showed that the continuous movements of covert visuospatial attention can be measured through SSVEPs also along the vertical axis. In fact, we replicated our previous results obtained with horizontal attention movements (Gregori Grgič et al., 2016). Also in this vertical setup, there were clear cSSVEP sinusoidal-like modulations contingent on target motion, both when the target was visible (perception condition) and when it was invisible (imagery condition), observable even in single trials, although not in all trials and with high variability.

At variance with our previous study, here we recorded only 8 subjects. Although this implies a somewhat reduced generalization of the results at the population level, we assume that generalization is the same that we found with horizontal attention tracking, where all individual observers showed a target-contingent SSVEP modulation (Gregori Grgič et al., 2016). Moreover, the fact that SSVEP modulation gain and phase did not change when all trials were included, as compared to the condition in which trials with a significant correlation between SSVEP modulation and residual eye movements were excluded, indicates that the results were not biased by low statistical power. The similarity of results when all trials were included also argues in favor of the lack of important effects of residual eye movements on SSVEP modulation, at least in our experimental conditions. The only finding that may have been influenced by the low trial number is the correlation with the behavioral reports, which showed the same effect direction as in our previous study, but without reaching statistical significance.

The fact that we independently achieved horizontal and vertical covert attention tracking suggests that true two-dimensional tracking is feasible. To this end, four flickering frequencies, one pair for each dimension, should be used. In that case, a possible issue may arise, namely, that non-linear interactions between the horizontal and the vertical dimensions could determine a complex behavior in SSVEP responsiveness, such as a mutual dependency between the two dimensions. However, it is expected that, similarly to what has become common practice in eye movement recording, calibration in the 2D space should solve this problem. This would imply a different way to calibrate the SSVEP signal, as compared to the simple calibration that we adopted in the present study, for example one based on a virtual fixation matrix, where observers covertly attend to a series of targets in the 2D space one after the other, coupled to a suitable surface fitting algorithm. Alternatively, given that keeping a constant level of attention on a stationary target may not be easy, a moving target that samples the entire 2D space may be better suited to the job.

In both the current and previous studies we could capture the movement of covert attention even in single trials, and even with only one electrode at Oz, though clearly the single-trial cSSVEP signal was noisier and less stable than the averaged data. These features are of a certain importance in the context of independent BCI (Brain Computer Interface) research. Indeed, the signal processing techniques that we have used in this study can be easily implemented in a real-time mode (Calore, 2014).

Previous BCI research allowed subjects to control the movement of a cursor (Allison et al., 2012; Marchetti, Piccione, Silvoni, & Priftis, 2012; McFarland & Wolpaw, 2008; Trejo, Rosipal, & Matthews, 2006; Wolpaw & McFarland, 1994, 2004) or to perform other mental navigations, by decoding certain features extracted from the EEG that corresponded to specific device commands; for example, it was possible to drive a wheelchair (Cao, Li, Ji, & Jiang, 2014), a virtual helicopter, or a real quadcopter drone (Doud, Lucas, Pisansky, & He, 2011; LaFleur et al., 2013). In those studies, subjects never explicitly imagined the vehicle trajectory, but controlled discrete commands instead. By contrast, in our studies, observers attended to the moving target or imagined its motion, and the resulting cSSVEP modulation represented attention movements directly.

Visuospatial attention tracking may thus become an important tool to communicate with completely locked-in patients or minimally conscious patients. It would be possible to let them operate attention-contingent devices. This could go beyond ad-hoc imagery-based procedures (Monti et al., 2010; Owen et al., 2006), which could be very complex in terms of the subject’s mental workload. By contrast, voluntarily shifting visuospatial attention or allocating it to a moving target are very natural acts, and could be recorded and exploited with our method in a similar way to eye tracking. Indeed, the eye muscles were shown to be the last muscle group under voluntary control before ALS patients enter a completely locked-in state (Murguialday et al., 2011). Attention tracking could replace eye tracking to maintain assistive communication in these patients. Also, patients may replace gaze gestures (Rozado, Agustin, Rodriguez, & Varona, 2012) with visuospatial attention gestures. They may learn to make simple attentional motions with certain characteristics (e.g., fast or slow, wide or narrow), or even to draw simple 2D figures.

Conclusions

We tracked visuospatial attention movements through SSVEPs along the vertical axis, replicating our previous results on horizontal tracking. Therefore, two-dimensional tracking of covert attention movements should be achievable, and consequently the possibilities of independent BCI would be expanded.

Acknowledgments

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

References

- Allison, B. Z., C. Brunner, C. Altstätter, I. C. Wagner, S. Grissmann, and N. Neuper. 2012. A hybrid ERD/SSVEP BCI for continuous simultaneous two dimensional cursor control. Journal of Neuroscience Methods 209, 2: 299–307. [Google Scholar] [PubMed]

- Calore, E. 2014. Towards Steady-State Visually Evoked Potentials Brain-Computer Interfaces for Virtual Reality environments explicit and implicit interaction. PhD, Università degli Studi di Milano. [Google Scholar]

- Cao, L., J. Li, H. Ji, and C. Jiang. 2014. A hybrid brain computer interface system based on the neurophysiological protocol and brain-actuated switch for wheelchair control. Journal of Neuroscience Methods 229, 33–43. [Google Scholar]

- Corneil, B. D., and D. P. Munoz. 2014. Overt responses during covert orienting. Neuron 82, 6: 1230–1243. [Google Scholar] [CrossRef] [PubMed]

- de’Sperati, C. 1999. Saccades to mentally rotated targets. Experimental Brain Research 126, 4: 563–577. [Google Scholar] [PubMed]

- de’Sperati, C. 2003. Precise oculomotor correlates of visuospatial mental rotation and circular motion imagery. Journal of Cognitive Neuroscience 15, 8: 1244–1259. [Google Scholar]

- de’Sperati, C., and H. Deubel. 2006. Mental extrapolation of motion modulates responsiveness to visual stimuli. Vision Research 46, 16: 25932601. [Google Scholar]

- Deubel, H., and W. X. Schneider. 1996. Saccade target selection and object recognition: Evidence for a common attentional mechanism. Vision Research 36, 12: 1827–1837. [Google Scholar] [CrossRef]

- Di Russo, F., S. Pitzalis, T. Aprile, G. Spitoni, F. Patria, A. Stella, and S. A. Hillyard. 2007. Spatiotemporal analysis of the cortical sources of the steady-state visual evoked potential. Human Brain Mapping 28, 4: 323–334. [Google Scholar]

- Doud, A. J., J. P. Lucas, M. T. Pisansky, and B. He. 2011. Continuous three-dimensional control of a virtual helicopter using a motor imagery based brain-computer interface. PLoS One 6, 10: e26322. [Google Scholar] [CrossRef]

- Drew, T., I. Mance, T. S. Horowitz, J. M. Wolfe, and E. K. Vogel. 2014. A soft handoff of attention between cerebral hemispheres. Current Biology 24, 10: 1133–1137. [Google Scholar]

- Drew, T., and E. K. Vogel. 2008. Neural measures of individual differences in selecting and tracking multiple moving objects. The Journal of Neuroscience 28, 16: 4183–4191. [Google Scholar] [CrossRef] [PubMed]

- Friman, O., I. Volosyak, and A. Graser. 2007. Multiple channel detection of steady-state visual evoked potentials for brain-computer interfaces. IEEE Transactions on Biomedical Engineering 54, 4: 742–750. [Google Scholar] [CrossRef] [PubMed]

- Gregori Grgič, R., E. Calore, and C. de’Sperati. 2016. Covert enaction at work: Recording the continuous movements of visuospatial attention to visible or imagined targets by means of Steady-State Visual Evoked Potentials (SSVEPs). Cortex 74: 31–52.

- Groner, R., and M. Groner. 1989. Attention and eye movement control: An overview. European Archives of Psychiatry and Neurological Sciences 239: 9–16.

- Hoffman, J. E., and B. Subramaniam. 1995. The role of visual attention in saccadic eye movements. Perception & Psychophysics 57, 6: 787–795.

- Hyönä, J., R. Radach, and H. Deubel. 2003. The mind’s eye: Cognitive and applied aspects of eye movement research. North-Holland, Elsevier.

- Jonikaitis, D., H. Deubel, and C. de’Sperati. 2009. Time gaps in mental imagery introduced by competing saccadic tasks. Vision Research 49, 17: 2164–2175.

- LaFleur, K., K. Cassady, A. Doud, K. Shades, E. Rogin, and B. He. 2013. Quadcopter control in three-dimensional space using a noninvasive motor imagery-based brain-computer interface. Journal of Neural Engineering 10, 4: 046003.

- Laubrock, J., R. Engbert, and R. Kliegl. 2005. Microsaccade dynamics during covert attention. Vision Research 45, 6: 721–730.

- Lovejoy, L. P., G. A. Fowler, and R. J. Krauzlis. 2009. Spatial allocation of attention during smooth pursuit eye movements. Vision Research 49, 10: 1275–1285.

- Makin, A. D. J., and M. Bertamini. 2014. Do different types of dynamic extrapolation rely on the same mechanism? Journal of Experimental Psychology: Human Perception & Performance 40, 4: 1566–1579.

- Mangun, G. R., S. A. Hillyard, and S. J. Luck. 1993. Electrocortical substrates of visual selective attention. In Attention and performance XIV. Edited by D. E. K. Meyer. MIT Press: pp. 219–243.

- Marchetti, M., F. Piccione, S. Silvoni, and K. Priftis. 2012. Exogenous and endogenous orienting of visuospatial attention in P300-guided brain computer interfaces: A pilot study on healthy participants. Clinical Neurophysiology 123, 4: 774–779.

- McFarland, D. J., and J. R. Wolpaw. 2008. Sensorimotor rhythm-based brain-computer interface (BCI): Model order selection for autoregressive spectral analysis. Journal of Neural Engineering 5, 2: 155–162.

- Monti, M. M., A. Vanhaudenhuyse, M. R. Coleman, M. Boly, J. D. Pickard, L. Tshibanda, and S. Laureys. 2010. Willful modulation of brain activity in disorders of consciousness. The New England Journal of Medicine 362, 7: 579–589.

- Morgan, S. T., J. C. Hansen, and S. A. Hillyard. 1996. Selective attention to stimulus location modulates the steady-state visual evoked potential. Proceedings of the National Academy of Sciences of the United States of America 93, 10: 4770–4774.

- Murguialday, A. R., J. Hill, M. Bensch, S. Martens, S. Halder, F. Nijboer, and A. Gharabaghi. 2011. Transition from the locked in to the completely locked-in state: A physiological analysis. Clinical Neurophysiology 122, 5: 925–933.

- Owen, A. M., M. R. Coleman, M. Boly, M. H. Davis, S. Laureys, and J. D. Pickard. 2006. Detecting awareness in the vegetative state. Science 313, 5792: 1402.

- Pastor, M. A., J. Artieda, J. Arbizu, M. Valencia, and J. C. Masdeu. 2003. Human Cerebral Activation during Steady-State Visual-Evoked Responses. The Journal of Neuroscience 23, 37: 11621–11627.

- Posner, M. I. 1980. Orienting of attention. Quarterly Journal of Experimental Psychology 32, 1: 3–25.

- Renard, Y., F. Lotte, G. Gibert, M. Congedo, E. Maby, V. Delannoy, and A. Lécuyer. 2010. OpenViBE: An Open-Source Software Platform to Design, Test and Use Brain-Computer Interfaces in Real and Virtual Environments. Presence: Teleoperators and Virtual Environments 19, 1: 35–53.

- Rozado, D., J. S. Agustin, F. B. Rodriguez, and P. Varona. 2012. Gliding and saccadic gaze gesture recognition in real time. ACM Transactions on Interactive Intelligent Systems (TiiS) 1, 2: 1–27.

- Shioiri, S., P. Cavanagh, T. Miyamoto, and H. Yaguchi. 2000. Tracking the apparent location of targets in interpolated motion. Vision Research 40, 10-12: 1365–1376.

- Shioiri, S., K. Yamamoto, and H. Yaguchi. 2000. Movement of visual attention while tracking objects. Paper presented at the 7th International Conference on Neural Information Processing (ICONIP).

- Störmer, V. S., G. A. Alvarez, and P. Cavanagh. 2014. Within-Hemifield Competition in Early Visual Areas Limits the Ability to Track Multiple Objects with Attention. The Journal of Neuroscience 34, 35: 11526–11533.

- Trejo, L. J., R. Rosipal, and B. Matthews. 2006. Brain-computer interfaces for 1-D and 2-D cursor control: Designs using volitional control of the EEG spectrum or steady-state visual evoked potentials. IEEE Transactions on Neural Systems and Rehabilitation Engineering 14, 2: 225–229.

- Van Donkelaar, P., and A. S. Drew. 2002. The allocation of attention during smooth pursuit eye movements. Progress in Brain Research 140: 267–277.

- Wang, Y., R. Wang, X. Gao, B. Hong, and S. Gao. 2006. A practical VEP-based brain-computer interface. IEEE Transactions on Neural Systems and Rehabilitation Engineering 14, 2: 234–240.

- Wolpaw, J. R., and D. J. McFarland. 1994. Multichannel EEG-based brain-computer communication. Electroencephalography and Clinical Neurophysiology 90, 6: 444–449.

- Wolpaw, J. R., and D. J. McFarland. 2004. Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proceedings of the National Academy of Sciences of the United States of America 101, 51: 17849–17854.

Copyright © 2016. This article is licensed under a Creative Commons Attribution 4.0 International License.