Abstract

In scenarios such as field exploration, disaster relief, and agricultural automation, LIDAR-based reconstructed terrain models can largely contribute to robot activities such as passable area identification and path planning optimization. However, unstructured terrain environments are typically absent and poorly characterized by artificially labeled features, which makes it difficult to find reliable feature correspondences in the point cloud between two consecutive LiDAR scans. Meanwhile, the persistence of noise accompanying unstructured terrain environments also causes certain difficulties in finding reliable feature correspondences between two consecutively scanned point clouds, which in turn leads to lower matching accuracy and larger offsets between neighboring frames. Therefore, this paper proposes an unstructured terrain construction algorithm combined with graph optimization theory based on LOAM algorithm further introducing the robots motion information provided by IMU. Experimental results show that the method proposed in this paper can achieve accurate and effective reconstruction in unstructured terrain environments.

1. Introduction

With the rapid development of unmanned robots, geohazard monitoring, and field exploration, the demand for high-precision 3D reconstruction of complex unstructured terrain is becoming increasingly urgent []. Unstructured terrain, such as mountains, ruins, forests, etc., is characterized by irregular surface morphology, severe occlusion, and sparse texture features []. Traditional 3D reconstruction methods based on GPS or preset markers have significant limitations in complex environments, resulting in sparse reconstructed point clouds, much noise interference, and low efficiency []. How to achieve efficient and robust 3D point cloud reconstruction of unstructured terrain has become a research hotspot in the fields of computer vision, autonomous robot navigation, and environment perception [].

Three-dimensional reconstruction can be mainly divided into two research directions, LiDAR map construction [] and visual map construction [], depending on the different types of sensors. Visual sensor-based map construction has been widely utilized due to its low energy consumption and light weight []. Boston Dynamics employs a combination of binocular vision and sensors such as IMUs to achieve autonomous exploration of unknown environments in the wild by robot dogs. Cheng Y et al. investigated visual sensor-based environment modeling to provide critical Mars surface terrain information for motion control as well as path planning of the rover []. However, the accuracy of visual sensor modeling is relatively low, in contrast to LiDAR sensor-based map construction that can reach millimeter-level accuracy and is more widely adopted in applied research on environmental reconstruction [].

The crucial factor in LIDAR-based environment modeling is the process of data association and matching between neighboring point cloud frames []. A typical method to identify the corresponding transformation relationship between two neighboring frames of LiDAR scanned point cloud is the ICP algorithm []. The algorithm iteratively aligns the two point clouds by finding the correspondence of each point between the two frames until a set threshold is reached and then outputs the transformation parameters between the two point clouds. However, when the scanned point cloud is of a large order of magnitude, the algorithm employed results in excessive computational cost and poor timeliness [].

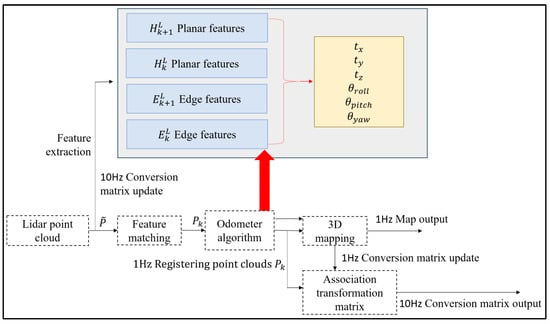

Zhang et al. proposed the LOAM real-time laser odometry and construction algorithm [], whose algorithm focuses on matching the estimated state information in order to further realize the real-time construction of maps. When employing LiDAR sensing sensors for mapping research and motion estimation, the LOAM algorithm offers several benefits, including high accuracy and strong real-time performance. The map construction problem is split into two distinct processes by this algorithm, which runs concurrently. A range algorithm is implemented at a higher frequency by an algorithm to estimate the single line LiDAR’s motion state. As illustrated in Figure 1, an alternative technique matches point clouds at lower frequencies with accuracy to produce real-time environmental mapping.

Figure 1.

LOAM mapping framework with laser odometer.

In recent years, with the development of multi-sensor fusion applications and deep learning, LOAM algorithms have evolved a variety of optimization algorithms. LeGO-LOAM splits a single-frame point cloud into subframes for parallel matching and shortens the processing delay []. LO-Net convolutional network jointly learns inter-frame matching with position and attitude estimation, and implicitly handles dynamic objects through mask-weighted geometrically constrained loss with accuracy comparable to LOAM and better generalization performance []. CSS-LOAM replaces traditional curvature computation with curvature scale space algorithms to analyze multi-scale geometric properties of point clouds with 40% computational efficiency improvement []. KDD-LOAM constructs keyframe KD trees for millisecond feature retrieval in loopback detection []. However, the majority of such algorithms show better performance and accuracy in structured environments, which are distorted to a certain extent in the face of unclear environmental features of unstructured terrain and large fluctuations in robot positional attitude caused by terrain excitation. Therefore, in this paper, we take the algorithm LOAM as the basis to explore the accurate environment modeling method for unstructured terrain.

However, in order to gather more precise and thorough three-dimensional environmental information, the LOAM algorithm often adopts a stop-scan-forward motion using a single line LiDAR. This results in subpar real-time performance across the system. Additionally, robots operating in complicated unstructured terrain conditions frequently experience unstable motion because of the nonlinear stimulation of the ground on the tires []. This will result in the distortion of LiDAR-scanned point cloud data, which will have a significant impact on the subsequent data correlation. Finding trustworthy feature correspondences in the point cloud between two consecutive LiDAR scans is further complicated by the absence of manually labeled features and ambiguous features in unstructured terrain environments []. This paper presents IMU motion information in response to the aforementioned features, with the aim of mitigating the distortion impact of scanned point clouds. In addition, we present graph optimization theory to enhance and optimize the conventional LOAM algorithm, accomplishing an accurate reconstruction of unstructured terrain.

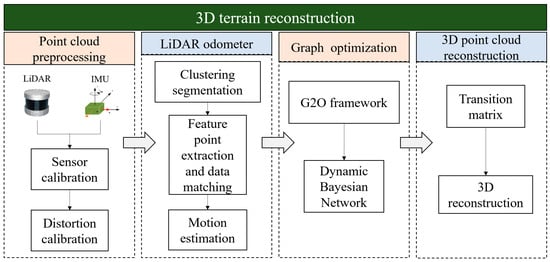

Figure 2 displays the foundation for 3D terrain reconstruction using LiDAR data. First, calibrate the LiDAR and IMU. Then, use the motion information from the IMU to lessen the distortion effect of the scanned point cloud. To perform LiDAR motion estimation, next processes include clustering and segmenting the scanned point cloud, extracting feature points, and finishing data matching. In order to optimize the mapping technique and accomplish the reconstruction of unstructured terrain environments, the graph optimization theory is finally introduced under the G2O framework.

Figure 2.

LiDAR based 3D unstructured terrain reconstruction framework.

2. Preprocessing of Point Clouds Scanned by LiDAR

LiDAR is relatively insensitive to light compared to visual sensors that can be used stably even at night when there is insufficient light []. In addition, the high resolution of LiDAR permits it to capture details of the environment at remote distances over a wide field of view. The error of LiDAR is relatively constant whether the distance measured is remote or not []. Therefore, robot-mounted LiDAR is used as an environment-aware sensor for reconstruction in this paper. However, the LiDAR is in motion along with the movement of the robots. The movement-induced distortion of the ambient 3D point cloud data may usually be disregarded if the LiDAR scan speed is significantly faster than the speed of the moving robots []. In this case, the standard ICP algorithm can be utilized for matching between different scanned frames to achieve better matching performance. Meanwhile, compensation following velocity estimation based on ICP can reduce LiDAR motion-induced distortion. However, if the scanning motion of LiDAR is relatively slow, especially in unstructured terrain environment where the robots are often accompanied by unsteady motion, it will further result in the distortion of the scanned point cloud affecting the accuracy of the algorithms. Therefore, the pre-processing of the LiDAR scanned point cloud is required. In this case, this paper introduces the IMU motion information for aberration calibration of the acquired raw point clouds.

2.1. Calibration of LiDAR Distortion

One potential source of distortion in a single-frame point cloud obtained through LiDAR scanning is the robot-mounted LiDAR, which tracks the motion of the robots. Unpredictable and variable terrain, especially in unstructured terrain conditions, results in sudden shifts in robots’ motion, which worsens the distortion of the point cloud data.

Independent position estimation is a commonly used approach to address LiDAR distortion. The odometer is another kind of measurement technique. For instance, we calibrate it by using an encoder mounted on the wheel for motion estimation. Wheel encoders are inappropriate in unstructured terrain due to the propensity of wheels to slip. Therefore, in this paper, the robots motion data provided by the IMU is introduced in the initial stage of the point cloud processing to eliminate the point cloud distortion caused by nonlinear motion.

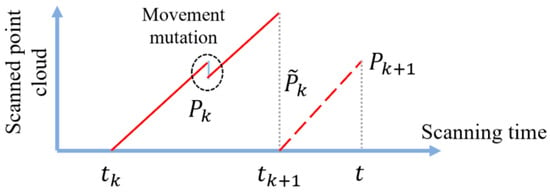

Motion compensation is another way of explaining the elimination of distortion. Concurrently map every point in the constantly scanned point cloud to the same time . As illustrated in Figure 3, calibrate the posture increment during this scanning phase during [, ] between the gradually detected point cloud and the final scanned point cloud .

Figure 3.

Two consecutive frames of point cloud scanned by LiDAR.

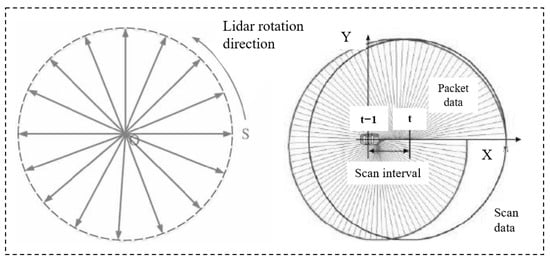

The data acquisition frequency of the LiDAR is set to 10 Hz to maintain the data density of the acquisition and the effective operation of real-time data processing. A single frame of LiDAR data acquisition is separated by 100 milliseconds between the beginning and the end of the frame. In the same point cloud frame, the attitude of the body will be changed. Therefore, as the LiDAR is moved with the robots, each scan line will no longer be a traditional circle, but a spiral line as shown in Figure 4. Segmentation may occur to varying degrees when there is a sudden change in robots’ motion, and therefore it is necessary to compensate for this in conjunction with the attitude information of the robots’ motion.

Figure 4.

LiDAR data calibration following robots’ movement.

Compared with 10 Hz LiDAR data, the IMU’s acquisition frequency is 100 Hz, which can effectively eliminate the data distortion caused by LiDAR’s movement with the robots, and better compensate for the loss of LiDAR information during the scanning process. It is important to note that all IMU information throughout the scanning period is collected during the point cloud preprocessing phase of the point cloud data motion distortion calibration procedure.

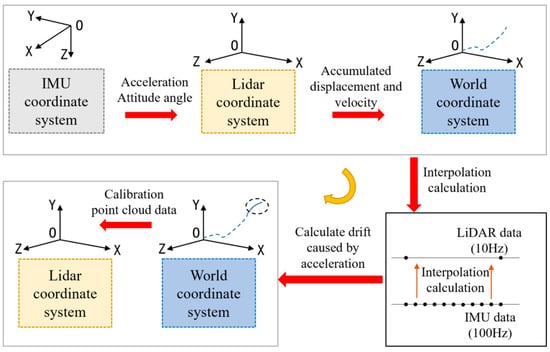

Figure 5 illustrates the method of calibrating LiDAR point cloud distortion. Initially, the acceleration and Euler angles measured by the IMU were converted to the LiDAR coordinate system using the transformation relations identified during the calibration process. Then, the acceleration from the LiDAR coordinate system is converted to the world coordinate system utilizing the Euler Angle Transform, and the acceleration data from the IMU is utilized to calculate the cumulative displacement and velocity.

Figure 5.

Calibrating Lidar point cloud distortion procedure.

The main contributor to the distortion is the drift caused by the unstable motion of the LiDAR. Attitude measurements during LiDAR accelerated motion are calculated by interpolating the IMU data in this paper.

The sampling frequencies of the LiDAR and IMU are 10 Hz and 100 Hz, respectively. This determines the timestamps of the two IMU data points corresponding to the start and end of the scan, as well as the timestamp of the current LiDAR scan frame. The displacement produced by the accelerated motion from the current frame of the LiDAR point cloud to the current scan time is:

In the formula above, the drift displacement is denoted by , and the total displacement is denoted by .

The calculated values are referenced to the world coordinate system and require an additional conversion to the LiDAR coordinate system in order to compensate for the corresponding LiDAR point cloud data to achieve an aberration calibration of the point cloud.

2.2. Three-Dimensional Point Cloud Feature Extraction

Since unstructured terrain environments contain common and relatively smooth terrain, planar segmentation of the original point cloud is performed to speed up the computational efficiency of the algorithm. The original point cloud is initially downsampled by the VoxelGrid to reduce the amount of data and maintain the overall geometric and topological properties of the point cloud. The normal is then utilized as a local descriptor of the point cloud to seed a number of points with minimal curvature for growth clustering in order to cluster points that satisfy a curvature smoothing threshold to form planar blocks. Finally, the plane is fitted utilizing a random sampling consistent iterative algorithm.

For the dense point cloud generated, it is essential to ensure that enough stable feature points can be efficiently extracted to fulfill the alignment requirements. The parameter settings of the feature point detection algorithm are shown in Table 1, and all the parameters are determined and approved through a large number of experiments to guarantee the high efficiency and stability of the algorithm.

Table 1.

Parameters of feature point detection algorithm.

The point cloud is further segmented in order to improve processing efficiency and feature extraction accuracy. Suppose is the original single frame point cloud acquired at time . is a point in the single-frame point cloud . is initially projected onto the distance image, and associated with represents the Euclidean distance from the corresponding point . Then, the points are clustered utilizing a distance-based clustering segmentation method and independent labels are assigned to the points in the same class. The original point cloud contains numerous independent noisy points at the same time, which may produce unreliable features, in order to perform fast and reliable feature extraction in this paper clustering with less than a specific number of thresholds is ignored. Once planar segmentation has been performed, only points that may represent large objects are retained for further subsequent processing.

To create a single frame point cloud , LiDAR scans the surrounding at a frequency of 10 Hz. Edge and planar points are chosen to be feature points in the laser point cloud . Let represent a point in , . Considering is the collection of continuous points that the same scanning line’s LiDAR collected. will comprise half of the points on either side of when a complete point cloud is obtained, with an angle separation of 0.2° between neighboring points. Define an expression for evaluating the smoothness of a localized surface as follows:

The coordinates of the -th point in the point cloud in the LiDAR origin coordinate system are represented by in the formula above.

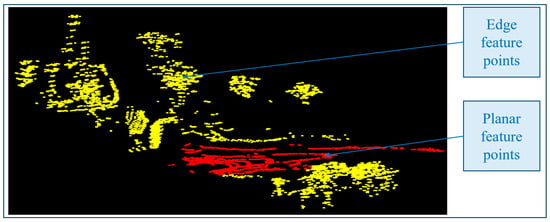

Sort the points in the same scan line based on the smoothness value of the local surface and select feature points. The threshold is used to distinguish different types of feature points. Points with smaller values are chosen as planar feature points, whereas ones with larger values are chosen as edge feature points. Figure 6 displays the feature point extraction results from the point cloud. The red and yellow spots correspond to the planar feature points and edge feature points, respectively.

Figure 6.

Planar and edge feature points were taken from a single frame point cloud.

2.3. Matching of Point Cloud Feature Points

The parameter settings of the feature point alignment algorithm are shown in Table 2, and all the parameters are determined through a large number of experiments to ensure the high efficiency and stability of the algorithm.

Table 2.

Parameters of the feature point alignment algorithm.

Meanwhile, assuming is the start scanning time of the current point cloud frame . LiDAR scanned a single frame point cloud, which is represented as . Additionally, represents the point cloud at time where it is reprojected. Combine the recently obtained point cloud and inside the next scanning period to estimate the LiDAR’s motion status. Moreover, the set of planar feature points as and the set of edge feature points as from the using the previously mentioned technique. Determine which edge lines in match the points in , and which planar blocks in match the points in .

Assuming that is the six degrees of freedom position and that attitude transformation corresponds to the LiDAR in time :

where the translation distance along the X, Y, and Z axes is represented by the values , , and . The X, Y, and Z axes’ rotation angle information for , , and .

Being calibrated using the IMU, LiDAR motion can be roughly assumed to remain constant for a certain amount of time. The calibration to be performed above is as expressed in Section 2.1. In this paper, the LiDAR is initially calibrated with the IMU. Then, we refer to the six degrees of freedom information of vehicle motion provided by the IMU and combine it with the LiDAR point cloud to eliminate the point cloud distortion due to nonlinear motion. Prior to the point cloud data calibration process, all six degrees of freedom information of the IMU for that scan time was already available. Finally, the accumulated displacements and velocities are calculated according to the information of the six degrees of freedom of the IMU to form a complete point cloud which is closer to the real unstructured environment.

The position and attitude transformation between and [, ], for a given point and time , can then be used to determine using linear interpolation of :

The collection of edge points and plane points that extracted, and the set of points that and reprojected at time , are denoted by and , respectively. Between and or and , the conversion connection is:

The coordinates of and midpoint in the LiDAR origin coordinate system are represented by and . R is the rotation matrix, and stands for the first to third rows of :

The rotation angle is denoted by in the equation above,

It can be concluded that:

Next, for every point in and , find the closest neighboring point in . If and are the respective edge lines for the edge point in the point cloud , then the equivalent distance is:

The coordinates of points , , and are denoted by the symbols , , and in the formula above.

For the planar point , if , , and are the corresponding planar blocks in the point cloud , the corresponding distances are:

The coordinates of , , , and are represented by the letters , , , and in the formula above.

3. Algorithm for 3D Reconstruction

3.1. Point Cloud Feature Matching for 3D Reconstruction

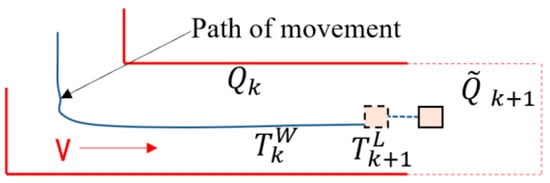

Figure 7 displays the schematic diagram for 3D reconstruction. The LiDAR posture produced by the algorithm at time is represented by the blue curve. The LiDAR motion data computed with the previously described laser mileage calculation method is represented by the blue dotted line. Project the calibrated point cloud onto the red area of that is depicted on the current map after obtaining and . On the map, the calibrated point cloud is shown as .

Figure 7.

An illustration of the 3D reconstruction schema.

The detailed procedure is as follows:

Firstly, convert the global coordinate system from the calibrated point cloud, and then match features with the local map that was created. Point clouds outside of the range will be eliminated upon surpassing the predetermined threshold range. Secondly, based on the smoothness S of the nearby surface, ascertain if the point is a plane feature point or an edge feature point. Finally, locate the feature points’ matching points on the created local map . Determine the plane block of the matching plane formed by the three nearest neighbor plane points, or the edge line of the corresponding edge formed by the two nearest neighbor edge points. Accurately match newly contributed point clouds to the locally created map. Simultaneously, the attitude determined by the laser mileage computation method was slightly modified.

When compared to the laser mileage calculation approach, the reconstruction algorithm in this paper operates at a very low frequency. The laser odometer operates at a frequency of 10 Hz, whereas the reconstruction process operates at 1 Hz. When , LiDAR scanning produces an undistorted point cloud frame and concurrently produces motion pose change information from the LiDAR. At the end of the scanning time , the attitude of the LiDAR on the local map is denoted by , and the generated map point cloud gathered to that point is specified as . The point cloud of represented by , and it is projected onto the global coordinate system. Next, use the LiDAR’s attitude to optimize the match between and .

Determine the matching relationship between feature points first. To facilitate point retrieval, store the point cloud in a designated cubic region with a predetermined edge length. Then, extract the points that correspond to the feature point and store them in an octree. The covariance matrix of is , assuming that is a collection of surrounding points inside the area surrounding the feature points identified in . has an eigenvalue of and an eigenvector of . has two larger and one smaller eigenvalue if is on a plane block. The minimal eigenvalue of a feature vector corresponds to the direction of a planar block. has one larger and two smaller eigenvalues if is on the edge. The feature vector that corresponds to the largest eigenvalue represents the edge line’s orientation. Three points are chosen on the plane block, or two points are chosen on the edge line and fitted using the L-M method, then we add to the locally created map. This process is performed in order to further calculate the distance between feature points and their corresponding points.

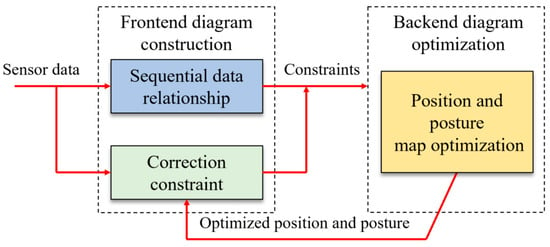

3.2. An Algorithm for Modeling Optimization Derived from Graph Optimization Theory

The accuracy of reconstruction cannot be guaranteed when using data association matching between two point clouds, as this might easily result in inaccurate matching and error accumulation []. Graph optimization can solve drift and erroneous matching while reducing cumulative errors even further, increasing the algorithm’s adaptability []. In order to achieve complete motion trajectory estimation of robots while preserving global consistency in map construction, this algorithm determines the relationship between the historical pose state of the robots and the current observation information, and forms correction constraints between the current pose and the historical pose. A backend graph optimization processing approach is presented in this article with the ultimate goal of improving local map creation outcomes. Figure 8 shows the process of graph optimization.

Figure 8.

Diagrammatic representation of the optimization process.

It can be broken down into the subsequent steps:

3.2.1. Converting the Real-Time Reconstruction Issue into a Description-Based Least Squares Problem

The fundamental task of real-time reconstruction is to solve the maximum posterior probability estimation of the position of feature points and the pose of moving robots on the map based on the observer’s output and the motion state data analyzed by the LiDAR mileage calculation method, as indicated by the following equation:

in the formula above stands for the estimated pose information of moving robots and the position information of feature points on the map. The motion control input and the corrective constraint result are included in the parameter U. To solve the joint probability distribution on the right side of the equation above, break it down as follows:

Determine the logarithm of the two sides of the preceding equation by breaking down the posterior probability estimation into closed-loop constraints and motion information model constraints:

At this stage, the least squares optimization problem will be solved in place of the real-time reconstruction.

3.2.2. Fundamental Structure Derived from Graph Optimization Theory

Real-time reconstruction is modeled using graph models algorithm based on graph optimization theory. It utilizes observed data to maximize the estimation of a moving robot’s whole motion trajectory and produces mapping results that are globally consistent. In the graph model, the edges stand in for constraint connections, and each node represents the system state of moving robots and the environment at various moments in time.

Graph optimization theory separates the real-time reconstruction problem into two parts: the back-end, which further optimizes the graph model created by the front-end, and the front-end, which creates graph models based on observation and system constraints. The front-end data association pertains to the assessment of the relative attitude of robots and the inter-frame matching of neighboring data. From the standpoint of data processing, the main goal of this process is to address the problem of data correlation. Graph optimization takes into account global data correlation without directly processing observed data. From the optimization process’s point of view, it is dependent on the observed data to determine the ideal relationship between local graphs and finish the construction of the graph.

The front-end’s graph will not match the global graph because of mistakes and noise in observations. In an ideal state, all of the following criteria must be satisfied for there to be a fully closed loop:

The identity matrix is denoted by I in the formula above, and the relative transformation matrix between the observed data frames is denoted by .

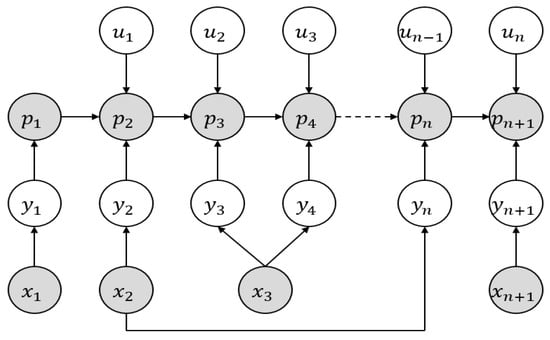

3.2.3. Building a Dynamic Bayesian Network-Based Graph Model

Dynamic Bayesian networks are currently widely used in research domains like pattern recognition, computer vision, and signal processing. The time-varying random variables and their interrelation can be expressed simply by using dynamic Bayesian networks. Typically, the graph model represented by dynamic Bayesian networks consists of two kinds of nodes: implicit nodes that correspond to unobservable parameter variables and explicit nodes that match observable parameter variables. Figure 9 depicts the graph model structure for real-time reconstruction based on dynamic Bayesian network expression, with white nodes standing in for visible explicit parameter variables. As an illustration, consider the observation output information (i = 1, …, n + 1) of feature points and the control input information (i = 1, …, n) of mobile robots. Gray nodes stand in for the unobservable implicit parameter variables. Edges in the graph, such as the pose information (i = 1, …, n + 1) of mobile robots and the position information (i = 1, …, n + 1) of feature points, describe the conditional dependency relationship between nodes.

Figure 9.

Dynamic Bayesian network representation-based graph structure.

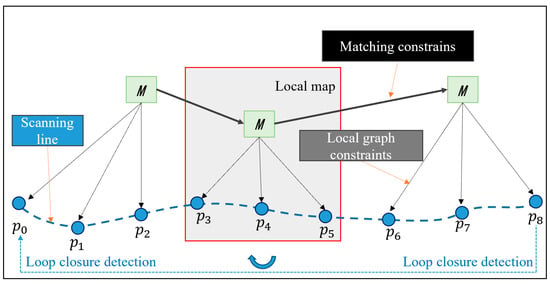

The moving robots current pose is represented by the constraint equation nodes that were built in order to optimize the structure in this paper. The point cloud matching result parameters and pose state information are represented by the edge. Using the nonlinear least squares approach to solve the optimization result that minimizes the error and creates an objective function of error through the establishment of a covariance matrix. Figure 10 displays the structure of the established graph optimization model. stands for the local map among them, and for the LiDAR pose parameter data, respectively.

Figure 10.

Structure of the Optimization Framework.

By incorporating node operators and edge error functions, parameter analysis is carried out by further utilizing the G2O framework to address local and global graph optimization issues. The purposeful function is:

represents a set of points in the point cloud. indicates neighboring nodes. indicates the point cloud’s coordinate value. indicates the rotation angle of each axis. indicates the covariance matrix. indicates the edge of the observation constraint, and indicates the edge that has to be estimated.

An unbiased estimate of the error variance is represented by in the formula above. depicts the matrix of correlation. The mistake at the corresponding location is denoted by , and the concatenated matrix made up of is represented by . The discrepancy between the point cloud’s actual position and its predicted optimal position is denoted by . The computed decomposition matrix is represented by . The decomposition matrix is used to determine the covariance of the point cloud, and the concatenated matrix is made up of .

Further linearize in the form of correlation matrix: . and are, respectively, composed of and in series. The objective function is expressed as:

The block array in the formula above is made up of , and the block array is made up of connected in series.

Supposing , then the sub items of and can be expressed as:

Lastly, a system of equations solved in order to define the optimization problem as . The optimum posture information is as follows:

The current pose is denoted by in the formula above. The difference between the current and estimated poses is represented by , and the optimized position is denoted by .

While the local maps are being constructed, the algorithm calculates a fast point feature histogram for each frame of the point clouds. In this paper, loop closure detection is executed with this histogram to improve the efficiency of the algorithm. The new constraints gained from loop closure detection will be added into the graph as new edges, which can effectively reduce the cumulative error during the robot movement. As shown in Figure 10, the robot travels sequentially from P0 to P8 and detects a marked similarity with P0 at point P8. Therefore, the P0–P8 position and attitudes constraints are established, and this constraint is added into the graph as a new edge. Finally, the local map positions and attitudes are optimized according to the graph optimization framework, and the local maps are stitched together to form a global map.

4. Experimentation

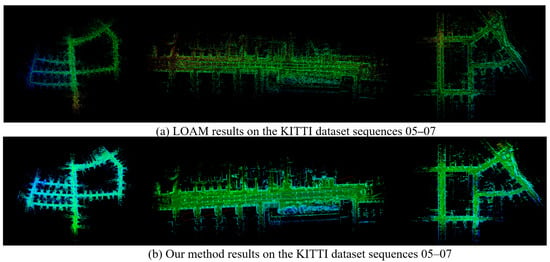

4.1. Experimental Performance in KITTI Dataset

This article validates the feasibility of our method on the 05–07 sequence of the KITTI dataset, and the results are shown in Figure 11. It can be seen that the 3D point cloud constructed by the algorithm proposed in this article has better performance. Especially at corners and loop nodes, the point clouds of each frame have better overlap.

Figure 11.

Experimental results on the KITTI dataset sequences 05–07.

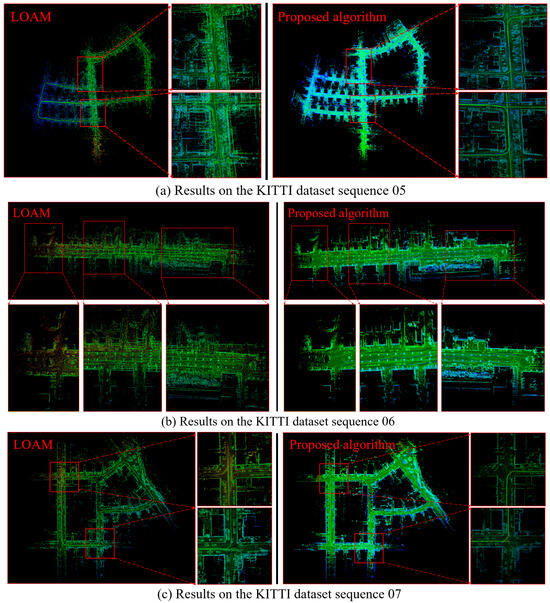

The specific details are enlarged, as shown in Figure 12. It can be seen that the algorithm proposed in this article has a better reconstruction effect, and the trajectory is more in line with the actual running state.

Figure 12.

Enlarged details on the KITTI dataset sequences 05–07.

The comparison of APE (Absolute Percentage Error) and RPE (Relative Percentage Error) in the KITTI dataset experimental results is shown in Table 3.

Table 3.

The comparison of APE and RPE results.

4.2. Unstructured Terrain Environment Reconstruction Experimentation

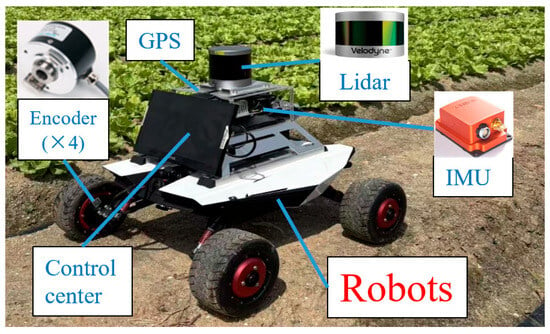

Figure 13 displays the experimental platform that was used to further validate the advantages of the algorithm proposed.

Figure 13.

Experimental platform.

The primary sensors mounted on the robots are LiDAR, IMU, and Encoders. Table 4 displays the sensor’s precise parameters.

Table 4.

Experimental platform sensor parameters.

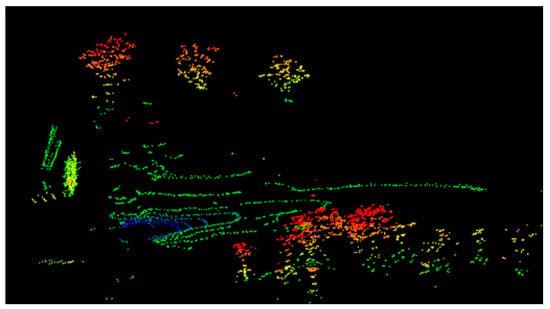

The algorithm for processing LiDAR data was running on a portable computer with 32 GB of RAM, eight cores, and 2.3 GHz throughout the experiment. The mileage calculation method and the reconstruction algorithm operate on two different kernels simultaneously during the algorithm’s execution. Figure 14 displays the created local terrain point cloud. The reconstruction algorithm in this article has a calculation frequency of 1 Hz.

Figure 14.

Construction effect of terrain point cloud.

The aforementioned graphic illustrates how well the local terrain point cloud created by the technique suggested in this article can capture the features of the ground and trees in unstructured terrain conditions. It is challenging to assess the algorithm’s efficacy because of the limited quantity of point clouds in the created single frame local terrain point cloud. Longer reconstruction tests were therefore carried out in outdoor settings.

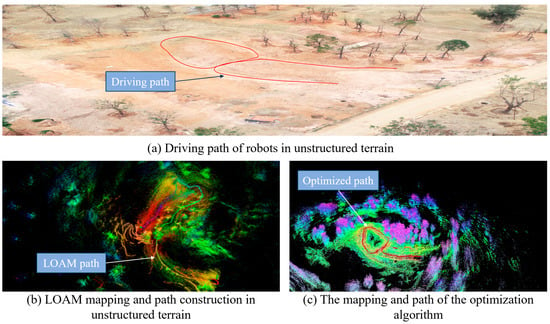

The LiDAR was mounted on a robot for the outdoor unstructured terrain environment experiment.

Figure 15a depicts the robots driving path in an unstructured terrain environment; Figure 15b depicts the reconstruction effect and path of the standard reconstruction algorithm, LOAM, for a single LiDAR in the same environment. It is evident that the LOAM algorithm has exhibited considerable distortion and has not created a fully circular junction path because of the intricacy of the unstructured terrain environment and the ambiguous elements. Figure 15c illustrates the reconstruction impact and path of the optimization procedure suggested in this paper in an unstructured terrain environment. As can be observed, the reconstruction effect is more in line with the actual unstructured terrain environment, and the algorithm suggested in this article is more consistent with the driving trajectory of the robots.

Figure 15.

Comparison of reconstruction results in unstructured terrain.

Further ablation experiments of the proposed algorithm for module gain and runtime are conducted, and the results are shown in Table 5. The LOAM base model performed consistently over the course of the experiment. By adding the position and attitude optimization module alone, the accuracy is improved significantly. It can be observed that the unstructured environment has a greater influence on the robot position and attitude, which affects the accuracy of terrain modeling. With the addition of the graph optimization module alone, the improvement in accuracy is less significant, while the runtime is longer. The highest accuracy is achieved when both modules are added at the same time, although the runtime is consumed longer.

Table 5.

Ablation performance of optimization algorithms.

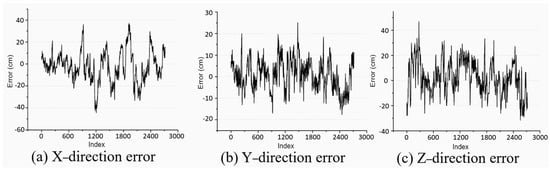

The specific error analysis on the unstructured terrain environment is shown in Figure 16. From the figure, it can be seen that the method proposed in this article has high accuracy in pose estimation. The average value of position estimation error is 9.16 cm.

Figure 16.

Analysis of position estimation error.

5. Discussion

The experimental results in Section 4 demonstrate several important findings: Firstly, with the introduction of IMU information and the graph optimization algorithm, the algorithm proposed in this paper can achieve better 3D reconstruction performance. We believe that this performance improvement is not only attributed to the integration of IMU information, but also benefits from the fact that the graph optimization algorithm can optimize the one-to-one correspondence between point clouds. Secondly, we conducted comprehensive comparative experiments on the structured dataset, which effectively verified the effectiveness of the method we proposed. In addition, especially in experiments involving unstructured terrain environments, traditional algorithms have shown signs of failure, and the robots’ trajectory has significantly deviated from the true value. However, the algorithm proposed in this paper can not only effectively achieve the three-dimensional reconstruction of unstructured terrain point clouds but also maintain a high level of accuracy.

6. Conclusions

In order to calibrate the distortion of LiDAR and successfully mitigate the effects of nonlinear robots’ motion, this article first employs IMU to give robots motion information. Lastly, a thorough investigation of the reconstruction of the terrain in outdoor unstructured environment was carried out. The results of the experiments demonstrate that the algorithm presented in this research is able to reconstruct the unstructured terrain environment with more accuracy.

Author Contributions

Conceptualization, W.C.; data curation, X.Z.; investigation, X.L.; formal analysis, W.C. and X.Z.; funding acquisition, W.C., X.Z. and X.L.; methodology, W.C.; resources, X.L. and X.Z.; software, W.C.; supervision, W.C.; validation, X.L.; visualization, W.C. and X.Z.; writing, W.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by the Natural Science Foundation of Fujian Province (Grant No. 2022J05235); Science and Technology Department of Fujian Province (Grant No. 2023I0039); Minjiang University (Grant Nos. MJY22022 and ZD202303).

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chen, W.; Liu, Q.J.; Hu, H.S.; Liu, J.; Wang, S.J.; Zhu, Q.Y. Novel laser-based obstacle detection for autonomous robots on unstructured terrain. Sensors 2020, 20, 5048. [Google Scholar] [CrossRef] [PubMed]

- Chen, W.; Zhu, Q.Y.; Zheng, X.P.; Liu, D.; Guo, H.F.; Song, S.; Tang, X.T. A feature fusion-based optimization approach for unstructured terrain modeling in agriculture. Comput. Electron. Agric. 2024, 225, 109276. [Google Scholar] [CrossRef]

- Fu, J.L.; Jenelius, E.; Koutsopoulos, H.N. Identification of workstations in earthwork operations from vehicle GPS data. Autom. Constr. 2017, 83, 237–246. [Google Scholar] [CrossRef]

- Gholami, A.; Ramirez-Serrano, A. Terrain traversability via sensed data for robots operating inside heterogeneous, highly unstructured spaces. Sensors 2025, 25, 439. [Google Scholar] [CrossRef] [PubMed]

- Ahmed, M.F.; Masood, K.; Fremont, V.; Fantoni, I. Active SLAM: A review on last decade. Sensors 2023, 23, 8097. [Google Scholar] [CrossRef] [PubMed]

- Han, S.R.; Li, M.C.; Tang, H.Y.; Song, Y.Z.; Tong, G.J. UVMO: Deep unsupervised visual reconstruction-based multimodal-assisted odometry. Pattern Recognit. 2024, 153, 110573. [Google Scholar] [CrossRef]

- Li, C.D.; Yu, L.; Fei, S.M. Large-scale, real-time 3D scene reconstruction using visual and IMU sensors. IEEE Sens. J. 2020, 20, 5597–5605. [Google Scholar] [CrossRef]

- Cheng, Y.; Maimone, M.; Matthies, L. Visual odometry on the Mars Exploration Rovers. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics, Waikoloa, HI, USA, 10–12 October 2005; pp. 903–910. [Google Scholar]

- Shao, J.; Yao, W.; Wang, P.Z.; He, Z.Y.; Luo, L. Urban GeoBIM construction by integrating semantic LiDAR point clouds with as-designed BIM models. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5701712. [Google Scholar] [CrossRef]

- Hao, X.S.; Diao, Y.F.; Wei, M.C.; Yang, Y.F.; Hao, P.; Yin, R.; Zhang, H.; Li, W.M.; Zhao, S.; Liu, Y. MapFusion: A novel BEV feature fusion network for multi-modal map construction. Inf. Fusion 2025, 119, 103018. [Google Scholar] [CrossRef]

- Sang, M.T.; Wang, W.; Pan, Y.N. RGB-ICP Method to calculate ground three-dimensional deformation based on point cloud from airborne LiDAR. Remote Sens. 2022, 14, 4851. [Google Scholar] [CrossRef]

- Tuna, T.; Nubert, J.; Nava, Y.; Khattak, S.; Hutter, M. X-ICP: Localizability-aware LiDAR registration for robust localization in extreme environments. IEEE Trans. Rob. 2024, 40, 452–471. [Google Scholar] [CrossRef]

- Zhang, J.; Singh, S. Low-drift and real-time lidar odometry and mapping. Auton. Rob. 2017, 41, 401–416. [Google Scholar] [CrossRef]

- Shan, T.X.; Englot, B. LeGO-LOAM: Lightweight and ground-optimized lidar odometry and mapping on variable terrain. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 4758–4765. [Google Scholar]

- Li, Q.; Chen, S.Y.; Wang, C.; Li, X.; Wen, C.L.; Cheng, M.; Li, J. LO-Net: Deep real-time lidar odometry. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8465–8474. [Google Scholar]

- Gonzalez, C.; Adams, M. Curvature scale space LiDAR odometry and mapping (LOAM). J. Intell. Rob. Syst. 2024, 110, 67. [Google Scholar] [CrossRef]

- Huang, R.L.; Zhao, M.L.; Chen, J.M.; Li, L. KDD-LOAM: Jointly learned keypoint detector and descriptors assisted LiDAR odometry and mapping. In Proceedings of the IEEE International Conference on Robotics and Automation, Yokohama, Japan, 13–17 May 2024; pp. 8559–8565. [Google Scholar]

- Zhang, W.; Gong, Q.S.; Yang, H.Y.; Tang, Y.J. CPG Modulates the omnidirectional motion of a hexapod robot in unstructured terrain. J. Bionic Eng. 2023, 20, 558–567. [Google Scholar] [CrossRef]

- Siva, S.; Wigness, M.; Rogers, J.G.; Quang, L.; Zhang, H. Self-reflective terrain-aware robot adaptation for consistent off-road ground navigation. Int. J. Rob. Res. 2024, 43, 1003–1023. [Google Scholar] [CrossRef]

- Park, E.S.; Arshad, S.; Park, T.H. Initial pose estimation method for robust LiDAR-inertial calibration and mapping. Sens. 2024, 24, 8199. [Google Scholar] [CrossRef] [PubMed]

- An, P.; Gao, Y.S.; Wang, L.H.; Chen, Y.F.; Ma, J. Online extrinsic calibration on LiDAR-camera system with lidar intensity attention and structural consistency loss. Remote Sens. 2022, 14, 2525. [Google Scholar] [CrossRef]

- Kim, T.H.; Park, T.H. Placement optimization of multiple Lidar sensors for autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2020, 21, 2139–2145. [Google Scholar] [CrossRef]

- Ji, J.S.; Wang, W.W.; Ning, Y.P.; Bo, H.W.; Ren, Y.F. Research on a matching method for vehicle-borne laser point cloud and panoramic images based on occlusion removal. Remote Sens. 2024, 16, 2531. [Google Scholar] [CrossRef]

- Li, C.H.; Guo, G.H.; Yi, P.; Hong, Y.G. Distributed pose-graph optimization with multi-level partitioning for multi-robot SLAM. IEEE Rob. Autom. Lett. 2024, 9, 4926–4933. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).