EdgeVidCap: A Channel-Spatial Dual-Branch Lightweight Video Captioning Model for IoT Edge Cameras

Abstract

1. Introduction

- To propose a keyframe guidance mechanism that achieves precise localization and extraction of critical information in video sequences through cross-attention computation.

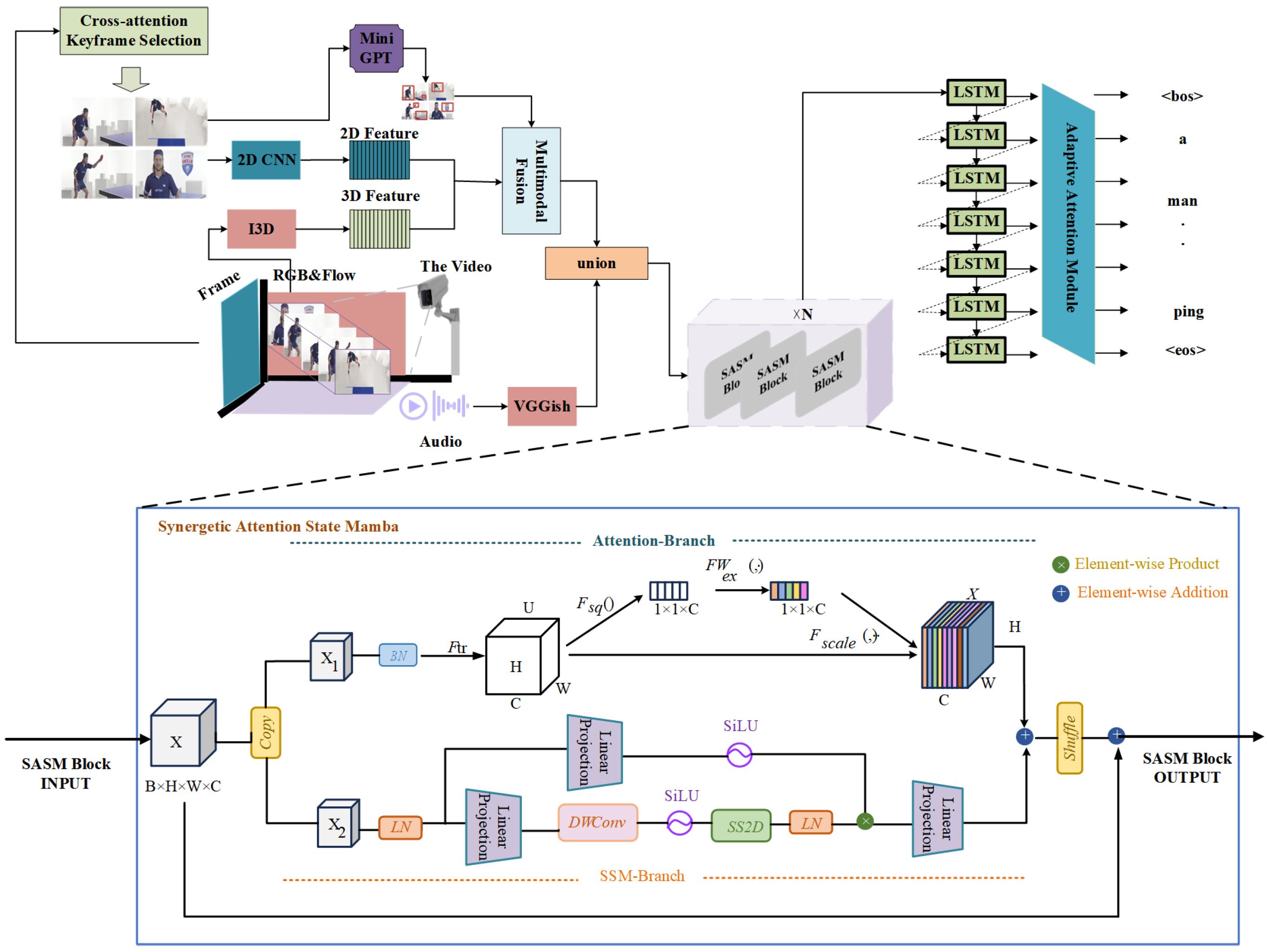

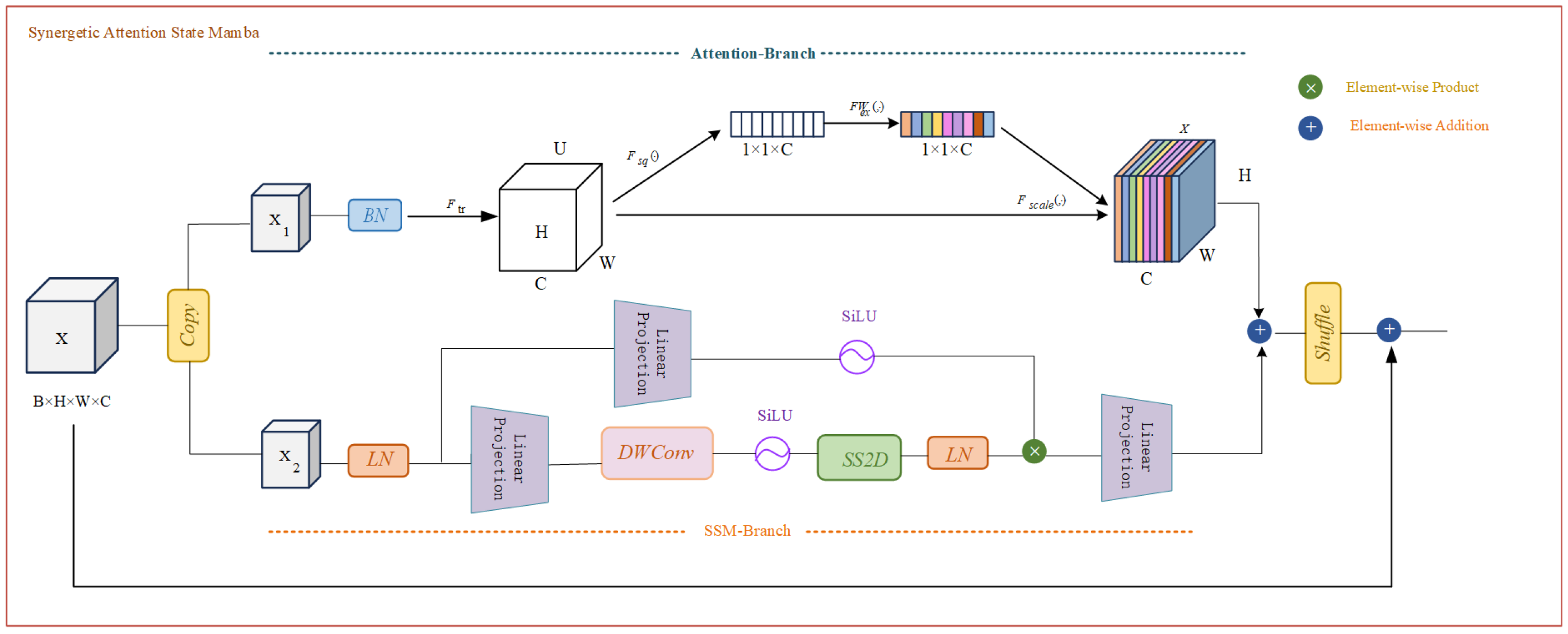

- To design a hybrid module, Synergetic Attention State Mamba (SASM), which combines channel attention for stronger feature selection with State Space Models (SSMs) that capture long-range spatial dependencies at low cost, enabling spatiotemporal modeling of multimodal video features.

- To introduce an adaptive attention mechanism for building a sentence decoder that selectively focuses on the fused features by dynamically adjusting feature weights at each time step.

2. Related Work

3. Problem Definition

3.1. Task Overview

3.2. System Pipeline Overview

4. Model Construction

4.1. Model Architecture

4.2. Visual Span Video Keyframe Selection

| Algorithm 1 Cross-attention keyframe selection |
|---|
| Input: |
| Output: Keyframe set K |
| Steps: |
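
As a rough illustration of cross-attention keyframe selection, the following minimal sketch scores each frame against a single guidance query with scaled dot-product attention and keeps the top-k frames as the keyframe set K. The function name `select_keyframes`, the single query vector, and the top-k rule are assumptions made for illustration; the inputs and steps of Algorithm 1 are not reproduced here.

```python
import math

def select_keyframes(frame_feats, query, k):
    """Return the indices of the k frames with the highest attention weight.

    frame_feats: list of T frame feature vectors (the keys), each of length d
    query: one guidance vector of length d (hypothetical, not from the source)
    """
    d = len(query)
    # Scaled dot-product score between the query and every frame key.
    scores = [sum(q * f for q, f in zip(query, feat)) / math.sqrt(d)
              for feat in frame_feats]
    # Numerically stable softmax over the temporal axis.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Keep the top-k frames, then restore temporal order.
    top = sorted(range(len(weights)), key=lambda t: weights[t], reverse=True)[:k]
    return sorted(top), weights

frames = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.2, 0.8]]
keyframes, attn = select_keyframes(frames, query=[1.0, 0.0], k=2)
print(keyframes)  # [0, 2]
```

Returning the indices in temporal order keeps the selected frames usable by a downstream sequence model without re-sorting.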

4.3. Multimodal Attention Fusion

4.4. Synergetic Attention State Mamba

4.4.1. Preliminary
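
Assuming this subsection follows the standard formulation of the cited Mamba paper (Gu and Dao), a state space model maps an input $x(t)$ to an output $y(t)$ through a hidden state $h(t)$, and is discretized with a step size $\Delta$ by the zero-order hold rule. The symbols $A$, $B$, $C$, and $\Delta$ below are taken from that formulation and are assumptions with respect to this paper's own notation.

```latex
\begin{aligned}
h'(t) &= A\,h(t) + B\,x(t), & y(t) &= C\,h(t), \\
\bar{A} &= \exp(\Delta A), & \bar{B} &= (\Delta A)^{-1}\bigl(\exp(\Delta A) - I\bigr)\,\Delta B, \\
h_t &= \bar{A}\,h_{t-1} + \bar{B}\,x_t, & y_t &= C\,h_t .
\end{aligned}
```

The discrete recurrence in the last line is evaluated once per time step, which is why the cost grows linearly with sequence length.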

4.4.2. SASM Block

| Algorithm 2 Synergetic Attention State Mamba Block |
|---|
| Input: |
| Output: Output tensor |
| Steps: |
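
To make the dual-branch idea concrete, here is a minimal sketch that pairs a channel-attention branch with a state-space scan branch and fuses them by addition. Everything specific is an assumption for illustration: the parameter-free sigmoid gate stands in for a learned gating network, the fixed scalars `a`, `b`, `c` stand in for Mamba's input-dependent parameters, and additive fusion is only one possible choice. It is not the SASM block as defined in Algorithm 2.

```python
import math

def channel_gate(x):
    """Channel branch: x is a list of T steps, each a list of C channel values."""
    T, C = len(x), len(x[0])
    # Squeeze: average every channel over the sequence.
    means = [sum(x[t][ch] for t in range(T)) / T for ch in range(C)]
    # Excite: a sigmoid of the mean replaces the learned gating MLP (assumption).
    gates = [1.0 / (1.0 + math.exp(-m)) for m in means]
    return [[x[t][ch] * gates[ch] for ch in range(C)] for t in range(T)]

def ssm_scan(x, a=0.9, b=0.1, c=1.0):
    """State-space branch: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t, per channel, O(T)."""
    h = [0.0] * len(x[0])
    ys = []
    for step in x:
        h = [a * h_prev + b * x_val for h_prev, x_val in zip(h, step)]
        ys.append([c * h_val for h_val in h])
    return ys

def dual_branch_block(x):
    """Run both branches on the same input and fuse by addition (assumed)."""
    channel_out = channel_gate(x)
    state_out = ssm_scan(x)
    return [[u + v for u, v in zip(cs, ss)] for cs, ss in zip(channel_out, state_out)]

seq = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
out = dual_branch_block(seq)
```

On this constant input the channel branch contributes the same value at every step, while the scan branch accumulates state, so the first channel of `out` grows over time and the all-zero second channel stays at zero.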

4.5. Adaptive Attention Module
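
A decoder that re-weights fused features at every time step can be pictured with the minimal sketch below. It uses plain dot-product soft attention between the current decoder state and each fused feature. The names `attend`, `decoder_state`, and `fused_feats` are hypothetical, and the scoring function is an assumption; the module described in this section may gate or score features differently.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_state, fused_feats):
    """Weight each fused feature by its relevance to the current decoder state."""
    scores = [sum(h * f for h, f in zip(decoder_state, feat)) for feat in fused_feats]
    weights = softmax(scores)
    dim = len(fused_feats[0])
    # Context vector: attention-weighted sum of the fused features.
    context = [sum(w * feat[i] for w, feat in zip(weights, fused_feats))
               for i in range(dim)]
    return context, weights

fused = [[2.0, 0.0], [0.0, 2.0]]
ctx1, w_step1 = attend([1.0, 0.0], fused)  # decoder state at one time step
ctx2, w_step2 = attend([0.0, 1.0], fused)  # decoder state at a later time step
```

The two calls show the weights shifting from the first feature to the second as the decoder state changes, which is the selective focus the section title refers to.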

5. Experimental Analysis

5.1. Experimental Platform

5.2. Data Preprocessing

5.3. Experimental Parameter Settings

5.4. Analysis of Experimental Results

6. Concluding Remarks

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

References

1. Aafaq, N.; Mian, A.; Liu, W.; Gilani, S.Z.; Shah, M. Video description: A survey of methods, datasets, and evaluation metrics. ACM Comput. Surv. 2019, 52, 1–37.

2. Han, D.; Shi, J.; Zhao, J.; Wu, H.; Zhou, Y.; Li, L.-H.; Khan, M.K.; Li, K.-C. LRCN: Layer-residual Co-Attention Networks for visual question answering. Expert Syst. Appl. 2025, 263, 125658.

3. Chen, S.; Jin, Q. Multi-modal conditional attention fusion for dimensional emotion prediction. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 571–575.

4. Huang, S.-H.; Lu, C.-H. Sequence-Aware Learnable Sparse Mask for Frame-Selectable End-to-End Dense Video Captioning for IoT Smart Cameras. IEEE Internet Things J. 2023, 11, 13039–13050.

5. Lu, C.-H.; Fan, G.-Y. Environment-aware dense video captioning for IoT-enabled edge cameras. IEEE Internet Things J. 2021, 9, 4554–4564.

6. Venugopalan, S.; Rohrbach, M.; Donahue, J.; Mooney, R.; Darrell, T.; Saenko, K. Sequence to sequence-video to text. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4534–4542.

7. Zhao, B.; Gong, M.; Li, X. Hierarchical multimodal transformer to summarize videos. Neurocomputing 2022, 468, 360–369.

8. Pan, Y.; Yao, T.; Li, Y.; Mei, T. X-linear attention networks for image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10971–10980.

9. Zhu, D.; Chen, J.; Shen, X.; Li, X.; Elhoseiny, M. MiniGPT-4: Enhancing vision-language understanding with advanced large language models. arXiv 2023, arXiv:2304.10592.

10. Gemmeke, J.F.; Ellis, D.P.W.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. Audio Set: An ontology and human-labeled dataset for audio events. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 776–780.

11. Hou, Y.; Zhou, Q.; Lv, H.; Guo, L.; Li, Y.; Duo, L.; He, Z. VLSG-net: Vision-Language Scene Graphs network for Paragraph Video Captioning. Neurocomputing 2025, 636, 129976.

12. Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

13. Liu, Y.; Sun, Y.; Chen, Z.; Feng, C.; Zhu, K. Global spatial-temporal information encoder-decoder based action segmentation in untrimmed video. Tsinghua Sci. Technol. 2024, 30, 290–302.

14. Zhang, B.; Gao, J.; Yuan, Y. Memory-enhanced hierarchical transformer for video paragraph captioning. Neurocomputing 2025, 615, 128835.

15. Jiang, K.; Wang, Z.; Chen, C.; Wang, Z.; Cui, L.; Lin, C.-W. Magic ELF: Image deraining meets association learning and transformer. arXiv 2022, arXiv:2207.10455.

16. Yao, L.; Torabi, A.; Cho, K.; Ballas, N.; Pal, C.; Larochelle, H.; Courville, A. Describing videos by exploiting temporal structure. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4507–4515.

17. Pan, P.; Xu, Z.; Yang, Y.; Wu, F.; Zhuang, Y. Hierarchical recurrent neural encoder for video representation with application to captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1029–1038.

18. Krishna, R.; Hata, K.; Ren, F.; Fei-Fei, L.; Niebles, J.C. Dense-captioning events in videos. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 706–715.

19. Wang, X.; Chen, W.; Wu, J.; Wang, Y.-F.; Wang, W.Y. Video captioning via hierarchical reinforcement learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4213–4222.

20. Yu, H.; Wang, J.; Huang, Z.; Yang, Y.; Xu, W. Video paragraph captioning using hierarchical recurrent neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4584–4593.

21. Zhang, J.; Peng, Y. Object-aware aggregation with bidirectional temporal graph for video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8327–8336.

22. Wang, Y.; Li, K.; Li, Y.; He, Y.; Huang, B.; Zhao, Z.; Zhang, H.; Xu, J.; Liu, Y.; Wang, Z.; et al. InternVideo: General video foundation models via generative and discriminative learning. arXiv 2022, arXiv:2212.03191.

23. Guo, L.; Zhao, H.; Chen, Z.; Han, Z. Semantic guidance network for video captioning. Sci. Rep. 2023, 13, 16076.

24. Li, K.; He, Y.; Wang, Y.; Li, Y.; Wang, W.; Luo, P.; Wang, Y.; Wang, L.; Qiao, Y. VideoChat: Chat-centric video understanding. arXiv 2023, arXiv:2305.06355.

25. Zhang, H.; Li, X.; Bing, L. Video-LLaMA: An instruction-tuned audio-visual language model for video understanding. arXiv 2023, arXiv:2306.02858.

26. Xu, J.; Mei, T.; Yao, T.; Rui, Y. MSR-VTT: A large video description dataset for bridging video and language. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5288–5296.

27. Hung, Y.-N.; Lerch, A. Feature-informed Latent Space Regularization for Music Source Separation. arXiv 2022, arXiv:2203.09132.

28. Guo, K.; Zhang, Z.; Guo, H.; Ren, S.; Wang, L.; Zhou, X.; Liu, C. Video super-resolution based on inter-frame information utilization for intelligent transportation. IEEE Trans. Intell. Transp. Syst. 2023, 24, 13409–13421.

29. Liao, J.; Yu, C.; Jiang, L.; Guo, L.; Liang, W.; Li, K.; Pathan, A.-S.K. A method for composite activation functions in deep learning for object detection. Signal Image Video Process. 2025, 19, 362.

30. Xiao, Y.; Yuan, Q.; Jiang, K.; Chen, Y.; Wang, S.; Lin, C. Multi-Axis Feature Diversity Enhancement for Remote Sensing Video Super-Resolution. IEEE Trans. Image Process. 2025, 34, 1766–1778.

31. Zhang, S.; Liu, J.; Jiao, Y.; Zhang, Y.; Chen, L.; Li, K. A Multimodal Semantic Fusion Network with Cross-Modal Alignment for Multimodal Sentiment Analysis. In ACM Transactions on Multimedia Computing, Communications and Applications; ACM: New York, NY, USA, 2025.

32. Liu, Z.; Dang, F.; Liu, X.; Tong, X.; Zhao, H.; Liu, K.; Li, K. A Multimodal Fusion Framework for Enhanced Exercise Quantification Integrating RFID and Computer Vision. Tsinghua Sci. Technol. 2025.

33. Xia, C.; Sun, Y.; Li, K.-C.; Ge, B.; Zhang, H.; Jiang, B.; Zhang, J. Rcnet: Related context-driven network with hierarchical attention for salient object detection. Expert Syst. Appl. 2024, 237, 121441.

34. Xu, G.; Deng, X.; Zhou, X.; Pedersen, M.; Cimmino, L.; Wang, H. Fcfusion: Fractal componentwise modeling with group sparsity for medical image fusion. IEEE Trans. Ind. Inform. 2022, 18, 9141–9150.

35. Zhao, H.; Guo, L.; Chen, Z.; Zheng, H. Research on video captioning based on multifeature fusion. Comput. Intell. Neurosci. 2022, 2022, 1204909.

36. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

37. Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. VMamba: Visual state space model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.

38. Wang, F.; Wang, J.; Ren, S.; Wei, G.; Mei, J.; Shao, W.; Zhou, Y.; Yuille, A.; Xie, C. Mamba-Reg: Vision Mamba Also Needs Registers. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 14944–14953.

39. Yang, N.; Wang, Y.; Liu, Z.; Li, M.; An, Y.; Zhao, X. SMamba: Sparse Mamba for Event-based Object Detection. Proc. AAAI Conf. Artif. Intell. 2025, 39, 9229–9237.

40. Kannan, A.; Lindquist, M.A.; Caffo, B. BrainMT: A Hybrid Mamba-Transformer Architecture for Modeling Long-Range Dependencies in Functional MRI Data. arXiv 2025, arXiv:2506.22591.

41. Xiao, Y.; Yuan, Q.; Jiang, K.; Chen, Y.; Zhang, Q.; Lin, C. Frequency-Assisted Mamba for Remote Sensing Image Super-Resolution. IEEE Trans. Multimed. 2025, 27, 1783–1796.

42. Cui, J.; Li, Y.; Shen, D.; Wang, Y. MGCM: Multi-modal Graph Convolutional Mamba for Cancer Survival Prediction. Pattern Recognit. 2025, 169, 111991.

43. Yan, C.; Hao, Y.; Li, L.; Yin, J.; Liu, A.; Mao, Z. Task-adaptive attention for image captioning. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 43–51.

44. Jin, N.; Yang, F.; Mo, Y.; Zeng, Y.; Zhou, X.; Yan, K.; Ma, X. Highly accurate energy consumption forecasting model based on parallel LSTM neural networks. Adv. Eng. Inform. 2022, 51, 101442.

45. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002.

46. Lin, C.-Y. ROUGE: A package for automatic evaluation of summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Barcelona, Spain, 2004; pp. 74–81.

47. Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 25–30 June 2005; pp. 65–72.

48. Vedantam, R.; Zitnick, C.L.; Parikh, D. CIDEr: Consensus-based image description evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.

49. Chen, D.; Dolan, W.B. Collecting highly parallel data for paraphrase evaluation. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011.

50. Hou, J.; Wu, X.; Zhao, W.; Luo, J.; Jia, Y. Joint Syntax Representation Learning and Visual Cue Translation for Video Captioning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

51. Zhang, Z.; Shi, Y.; Yuan, C.; Li, B.; Wang, P.; Hu, W.; Zha, Z.J. Object Relational Graph with Teacher-Recommended Learning for Video Captioning. Computer Vision and Pattern Recognition. arXiv 2020, arXiv:2002.11566.

52. Ryu, H.; Kang, S.; Kang, H.; Yoo, C.D. Semantic Grouping Network for Video Captioning. In National Conference on Artificial Intelligence. arXiv 2021, arXiv:2102.00831.

53. Gu, X.; Chen, G.; Wang, Y.; Zhang, L.; Luo, T.; Wen, L. Text with knowledge graph augmented transformer for video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 18941–18951.

54. Jing, S.; Zhang, H.; Zeng, P.; Gao, L.; Song, J.; Shen, H.T. Memory-based augmentation network for video captioning. IEEE Trans. Multimed. 2023, 26, 2367–2379.

55. Shen, Y.; Gu, X.; Xu, K.; Fan, H.; Wen, L.; Zhang, L. Accurate and fast compressed video captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 15558–15567.

56. Luo, X.; Luo, X.; Wang, D.; Liu, J.; Wan, B.; Zhao, L. Global semantic enhancement network for video captioning. Pattern Recognit. 2024, 145, 109906.

57. Ma, Y.; Zhu, Z.; Qi, Y.; Beheshti, A.; Li, Y.; Qing, L.; Li, G. Style-aware two-stage learning framework for video captioning. Knowl.-Based Syst. 2024, 301, 112258.

| Experimental Platform | Environment Configuration |
|---|---|
| RAM | 128 GB |
| CPU | Intel(R) Xeon(R) Gold 5118 CPU @ 2.30 GHz (Intel Corporation, Santa Clara, CA, USA) |
| GPU Memory | 32 GB |
| GPU | NVIDIA Tesla V100 (NVIDIA Corporation, Santa Clara, CA, USA) |
| Driver | NVIDIA CUDA 11.0 |
| Deep Learning Acceleration Library | cuDNN v8.0.5 |
| Deep Learning Framework | PyTorch 1.6 |
| Inference Device | NVIDIA Jetson TX2 (NVIDIA Corporation, Santa Clara, CA, USA) |

| Model | BLEU-4 | METEOR | ROUGE-L | CIDEr |
|---|---|---|---|---|
| OA-BTG [21] | 45.8 | 33.3 | - | 73.0 |
| POS+VCT [50] | 52.8 | 36.1 | 71.8 | 87.8 |
| ORG-TRL (IRV2 + C3D) [51] | 54.3 | 36.4 | 73.9 | 95.2 |
| SGN [52] | 52.8 | 35.5 | 72.9 | 94.3 |
| TextKG [53] | 60.8 | 38.5 | 75.1 | 105.2 |
| MAN [54] | 60.1 | 37.1 | 74.6 | 101.9 |
| VIT/L14 [55] | 60.1 | 41.4 | 78.2 | 121.5 |
| GSEN [56] | 58.8 | 37.6 | 75.2 | 102.5 |
| STLF [57] | 60.9 | 40.8 | 77.5 | 110.9 |
| Ours | 61.6 | 42.7 | 96.1 | 123.9 |

| Model | BLEU-4 | METEOR | ROUGE-L | CIDEr |
|---|---|---|---|---|
| OA-BTG [21] | 41.4 | 28.2 | - | 46.9 |
| POS+VCT [50] | 42.3 | 29.7 | 62.0 | 49.1 |
| ORG-TRL (IRV2 + C3D) [51] | 43.6 | 28.8 | 62.1 | 50.9 |
| SGN [52] | 40.8 | 28.3 | 60.8 | 49.5 |
| TextKG [53] | 43.7 | 29.6 | 62.4 | 52.4 |
| MAN [54] | 42.5 | 28.6 | 62.2 | 50.4 |
| VIT/L14 [55] | 44.4 | 30.3 | 63.4 | 57.2 |
| GSEN [56] | 42.9 | 28.4 | 61.7 | 51.0 |
| STLF [57] | 47.5 | 30.3 | 63.8 | 54.1 |
| Ours | 45.1 | 30.6 | 63.8 | 58.6 |

| Model | Memory Usage | Inference Time (s) |
|---|---|---|
| Gated-ViGAT | 3.2 GB | 0.3483 |
| SLSMedvc | 3.1 GB | 0.3090 |
| Ours | 2.97 GB | 0.3000 |
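
To put the efficiency figures in relative terms, the short sketch below converts the table's memory and inference-time values into percentage reductions against each baseline. The helper name `relative_reduction` and the dictionary layout are illustrative assumptions; the numbers themselves are copied from the table.

```python
# (memory in GB, inference time in seconds), copied from the table above.
baselines = {"Gated-ViGAT": (3.2, 0.3483), "SLSMedvc": (3.1, 0.3090)}
ours = (2.97, 0.3000)

def relative_reduction(baseline, value):
    """Percentage by which `value` is lower than `baseline`."""
    return 100.0 * (baseline - value) / baseline

# For each baseline: (memory reduction %, inference-time reduction %).
savings = {
    name: (round(relative_reduction(mem, ours[0]), 1),
           round(relative_reduction(sec, ours[1]), 1))
    for name, (mem, sec) in baselines.items()
}
print(savings)
```

Derived this way, the proposed model uses about 7.2% less memory and 13.9% less inference time than Gated-ViGAT, and about 4.2% less memory and 2.9% less inference time than SLSMedvc.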

| Multimodal Features | Uniform Frame Sampling | Cross-Attention Frame Selection | miniGPT Visual Localization | Adaptive Attention | SASM | BLEU-4 | METEOR | ROUGE-L | CIDEr |

|---|---|---|---|---|---|---|---|---|---|

| ✔ | ✔ | 58.1 | 40.3 | 93.7 | 115.4 | ||||

| ✔ | ✔ | 59.2 | 41.0 | 94.1 | 118.3 | ||||

| ✔ | ✔ | ✔ | 58.6 | 41.4 | 93.8 | 118.6 | |||

| ✔ | ✔ | ✔ | 60.2 | 41.7 | 95.2 | 120.8 | |||

| ✔ | ✔ | ✔ | 59.9 | 41.2 | 95.1 | 120.5 | |||

| ✔ | ✔ | ✔ | 60.5 | 41.8 | 95.4 | 121.3 | |||

| ✔ | ✔ | ✔ | ✔ | 61.1 | 42.2 | 95.7 | 121.6 | ||

| ✔ | ✔ | ✔ | 60.0 | 41.6 | 94.8 | 120.2 | |||

| ✔ | ✔ | ✔ | ✔ | ✔ | 60.9 | 42.1 | 95.5 | 122.4 | |

| ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | 61.6 | 42.7 | 96.1 | 123.9 |

| Multimodal Features | Uniform Frame Sampling | Cross-Attention Frame Selection | miniGPT Visual Localization | Adaptive Attention | SASM | BLEU-4 | METEOR | ROUGE-L | CIDEr |

|---|---|---|---|---|---|---|---|---|---|

| ✔ | ✔ | 39.2 | 27.8 | 60.1 | 52.7 | ||||

| ✔ | ✔ | 40.3 | 28.1 | 60.7 | 53.5 | ||||

| ✔ | ✔ | ✔ | 39.5 | 27.9 | 60.0 | 54.3 | |||

| ✔ | ✔ | ✔ | 42.3 | 28.7 | 61.2 | 55.1 | |||

| ✔ | ✔ | ✔ | 42.0 | 29.1 | 60.4 | 55.8 | |||

| ✔ | ✔ | ✔ | 44.1 | 29.8 | 63.1 | 57.4 | |||

| ✔ | ✔ | ✔ | ✔ | 44.6 | 30.1 | 63.0 | 58.2 | ||

| ✔ | ✔ | ✔ | 41.8 | 29.0 | 61.8 | 55.7 | |||

| ✔ | ✔ | ✔ | ✔ | ✔ | 43.9 | 29.8 | 62.9 | 57.3 | |

| ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | 45.1 | 30.6 | 63.8 | 58.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, L.; Li, X.; Wang, J.; Xiao, J.; Hou, Y.; Zhi, P.; Yong, B.; Li, L.; Zhou, Q.; Li, K. EdgeVidCap: A Channel-Spatial Dual-Branch Lightweight Video Captioning Model for IoT Edge Cameras. Sensors 2025, 25, 4897. https://doi.org/10.3390/s25164897
