Distributed Non-Communicating Multi-Robot Collision Avoidance via Map-Based Deep Reinforcement Learning

Abstract

1. Introduction

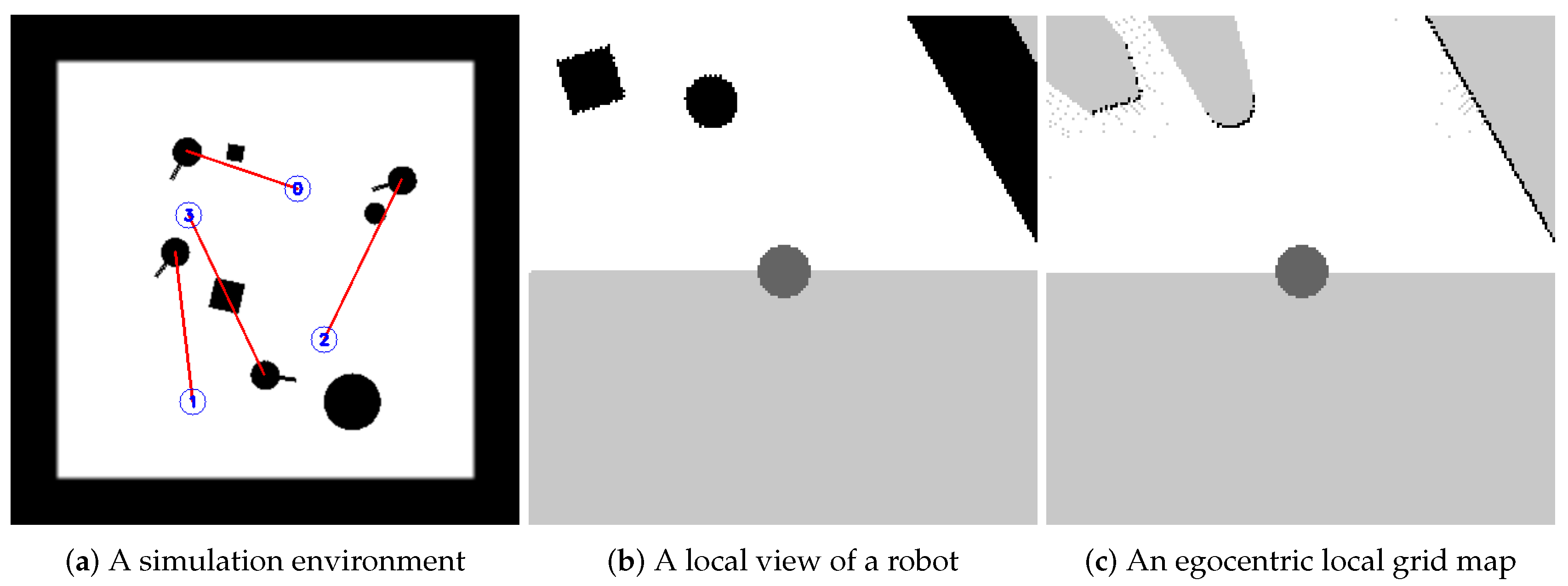

- We propose a map-based DRL approach to multi-robot collision avoidance in communication-free environments. Egocentric local grid maps represent the environment around each robot and can be generated easily from multiple sensors or through sensor fusion.

- We train the collision avoidance policy in multiple simulation environments using distributed proximal policy optimization (DPPO). The network takes egocentric local grid maps as input and directly outputs low-level robot control commands, and the trained policy can be deployed to real robots without tedious parameter tuning.

- We evaluate our approach in multiple scenarios, both in the simulator and on differential-drive mobile robots in the real world. Qualitative and quantitative experiments show that our approach is efficient and outperforms existing related approaches on many metrics.

- We conduct ablation studies that confirm the positive effects of using egocentric grid maps and multi-stage curriculum learning.

2. Problem Formulation

3. Approach

3.1. Reinforcement Learning Components

3.1.1. Observation Space

3.1.2. Action Space

3.1.3. Reward Function

3.2. Distributed Proximal Policy Optimization

3.2.1. Training Process

Algorithm 1: Distributed Proximal Policy Optimization

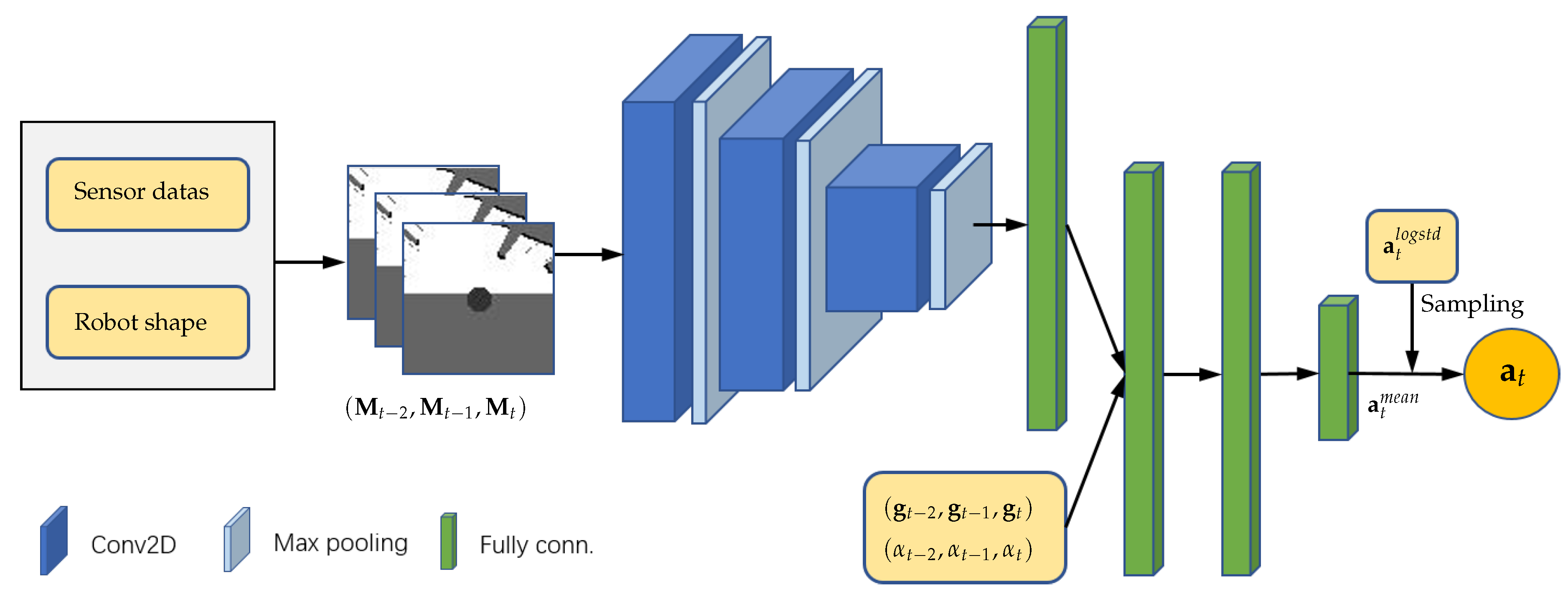

3.2.2. Network Architecture

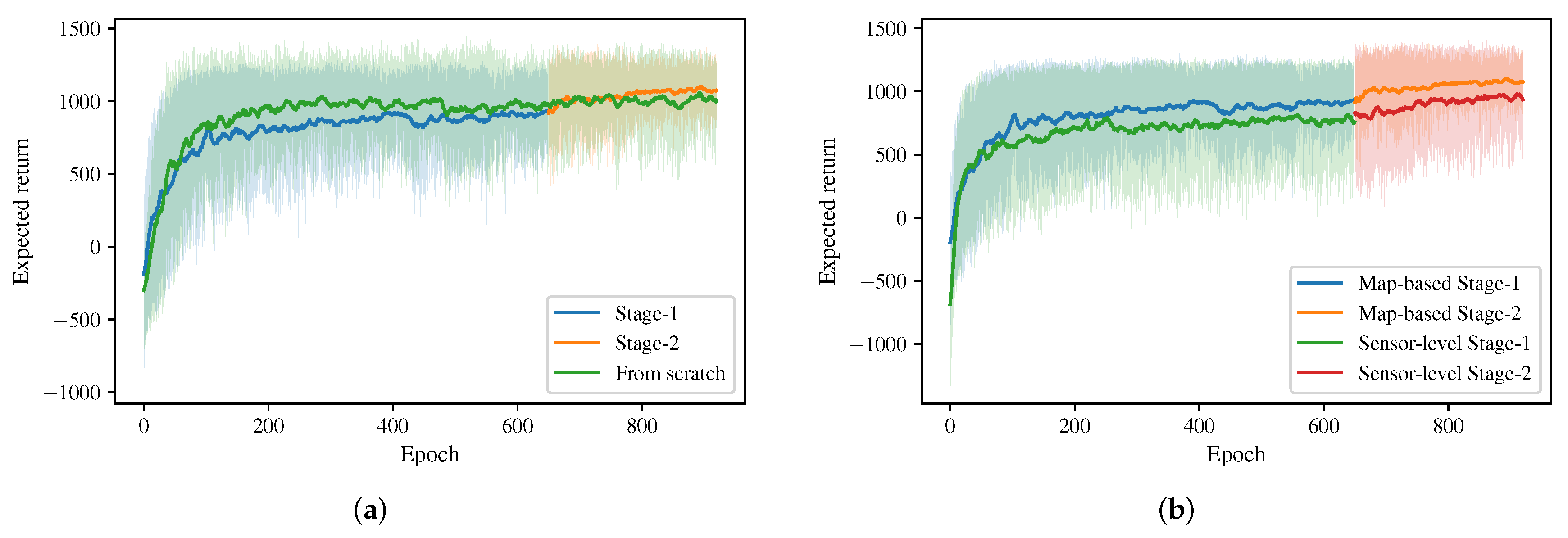

3.2.3. Multi-Stage Curriculum Learning

4. Simulation Experiments

- NH-ORCA policy: the policy generated by the state-of-the-art rule-based, agent-level multi-robot collision avoidance approach proposed by Alonso-Mora et al. [18,19]. The hyper-parameters of the NH-ORCA algorithm used in our comparison experiments are listed in Table 1. For the definition of each parameter, please refer to [14].

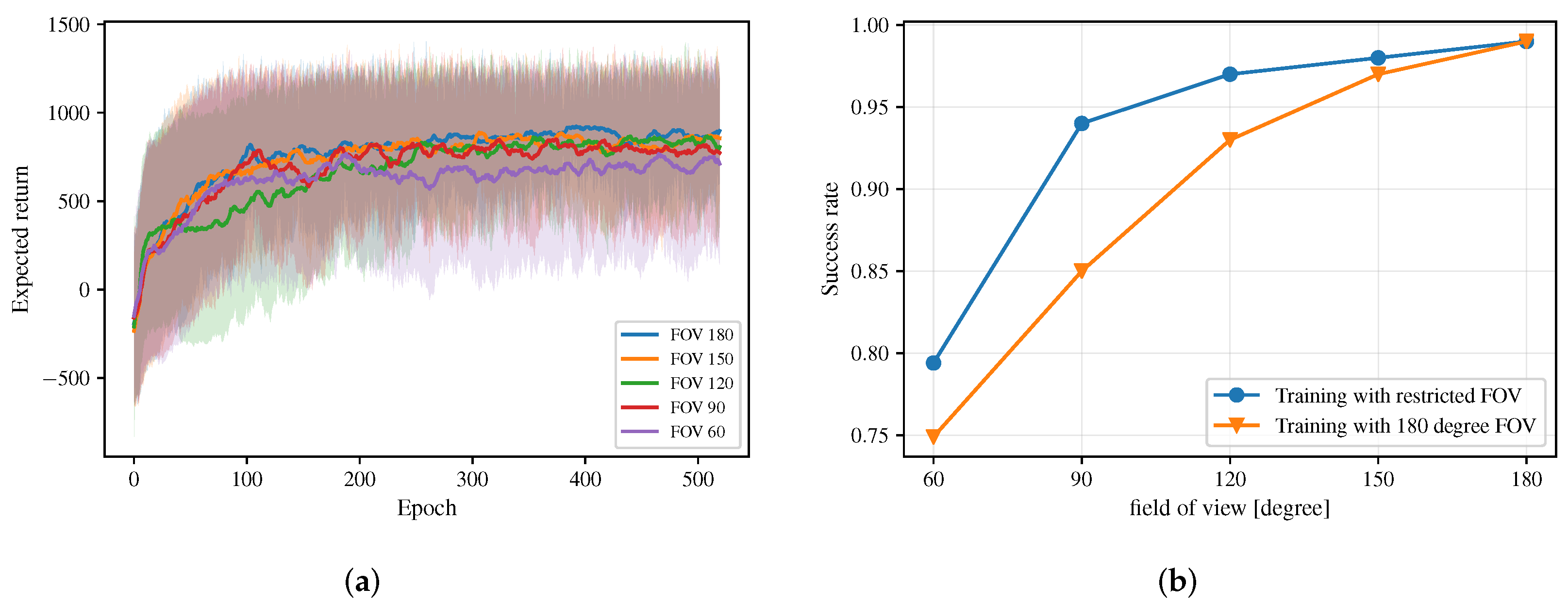

- Sensor-level policy: the policy generated by the DRL-based approach proposed by Long et al. [42] and Fan et al. [43]. Unlike our approach, their network takes the raw 2D laser data (in 1D form) as input and processes it with 1D convolutions. For a fair comparison, we trained this DRL-based approach with the same two-stage training process as our approach. The learning curve of its training process is shown in Figure 4b, where we denote their approach as “Sensor-level” and our approach as “Map-based”. Note that our approach converges to a higher expected return during training.

- Map-based policy: the policy generated by our approach in this article, which considers egocentric local grid maps as inputs.
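The contrast between the sensor-level input (a 1D array of laser ranges) and the map-based input (an egocentric local grid map) can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `scan_to_egocentric_grid`, the map size, the resolution, and the choice to mark only beam endpoints as occupied are all assumptions made for illustration.

```python
import numpy as np


def scan_to_egocentric_grid(ranges, angles, map_size_m=6.0, resolution=0.1):
    """Rasterize one 2D laser scan into a robot-centred occupancy grid.

    ranges, angles: 1D sequences of beam lengths (m) and beam angles (rad),
    both expressed in the robot frame (robot at the grid centre).
    map_size_m and resolution are illustrative defaults, not values from the paper.
    Returns an (N, N) uint8 array: 1 where a beam endpoint falls, 0 elsewhere.
    """
    ranges = np.asarray(ranges, dtype=float)
    angles = np.asarray(angles, dtype=float)
    n_cells = int(round(map_size_m / resolution))
    grid = np.zeros((n_cells, n_cells), dtype=np.uint8)

    # Discard invalid returns (inf, NaN, non-positive) before projecting.
    valid = np.isfinite(ranges) & (ranges > 0.0)
    x = ranges[valid] * np.cos(angles[valid])
    y = ranges[valid] * np.sin(angles[valid])

    # Shift so the robot sits at the map centre, then convert metres to cells.
    half = map_size_m / 2.0
    cols = np.floor((x + half) / resolution).astype(int)
    rows = np.floor((y + half) / resolution).astype(int)

    # Keep only endpoints that land inside the local map.
    inside = (rows >= 0) & (rows < n_cells) & (cols >= 0) & (cols < n_cells)
    grid[rows[inside], cols[inside]] = 1
    return grid
```

Because the grid is indexed by position rather than by beam number, scans from sensors with different fields of view or beam counts, or several scans fused together, produce an input of the same shape.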

4.1. Reinforcement Learning Setup

4.2. Implementation Details

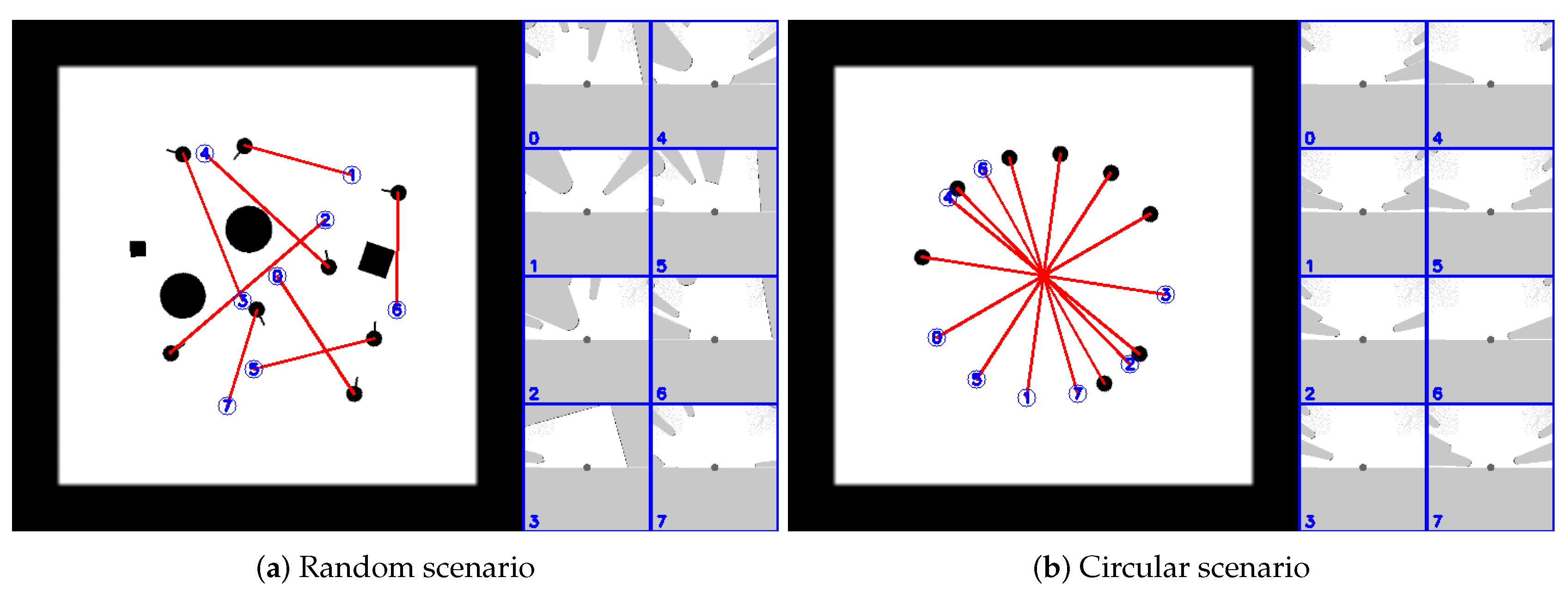

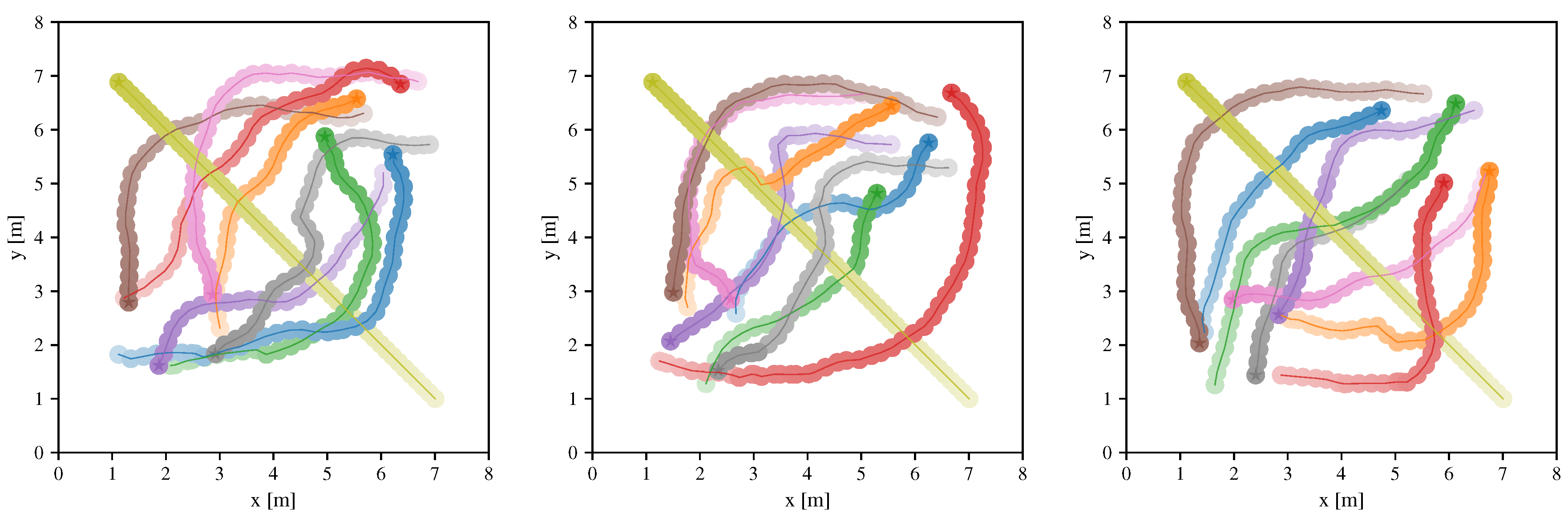

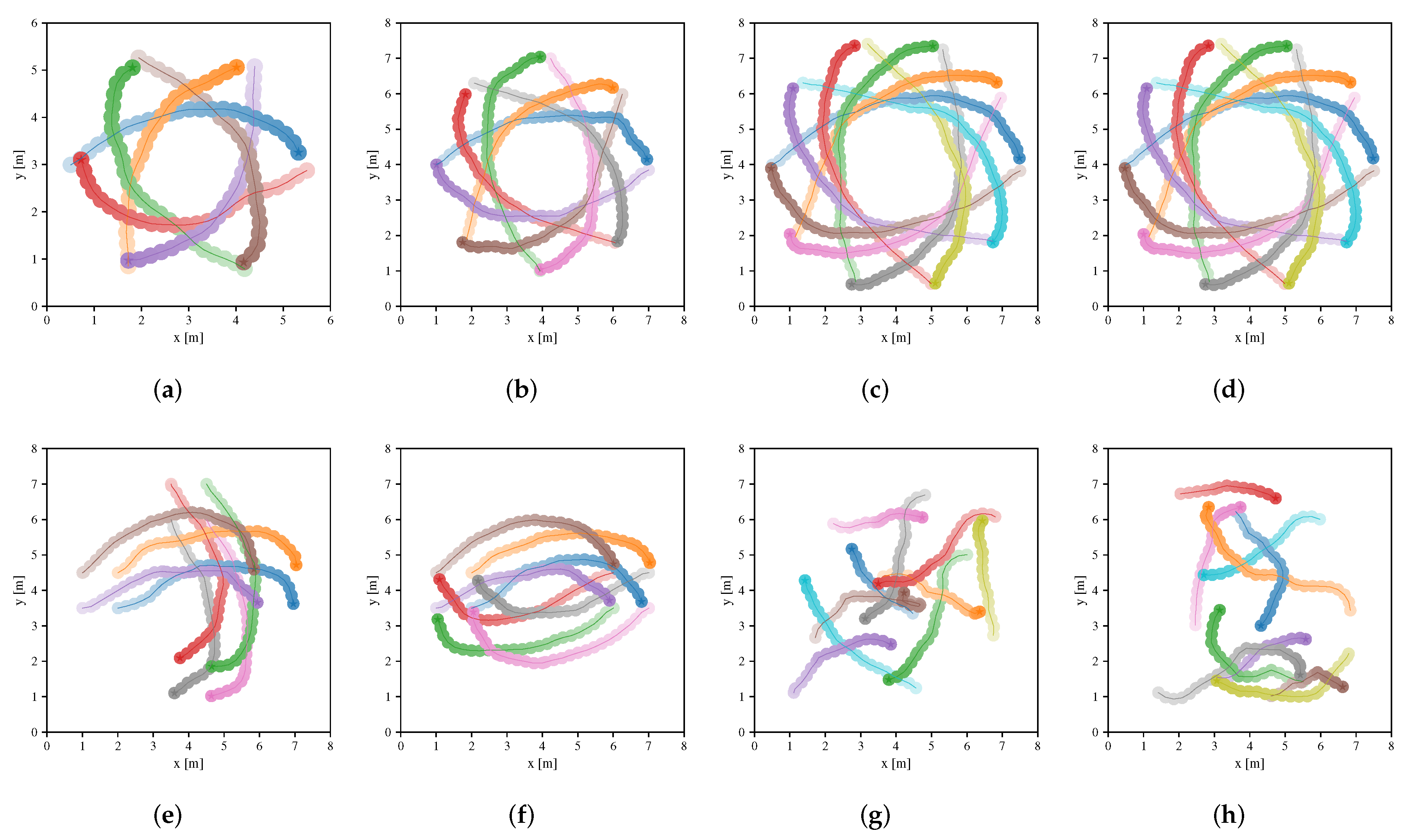

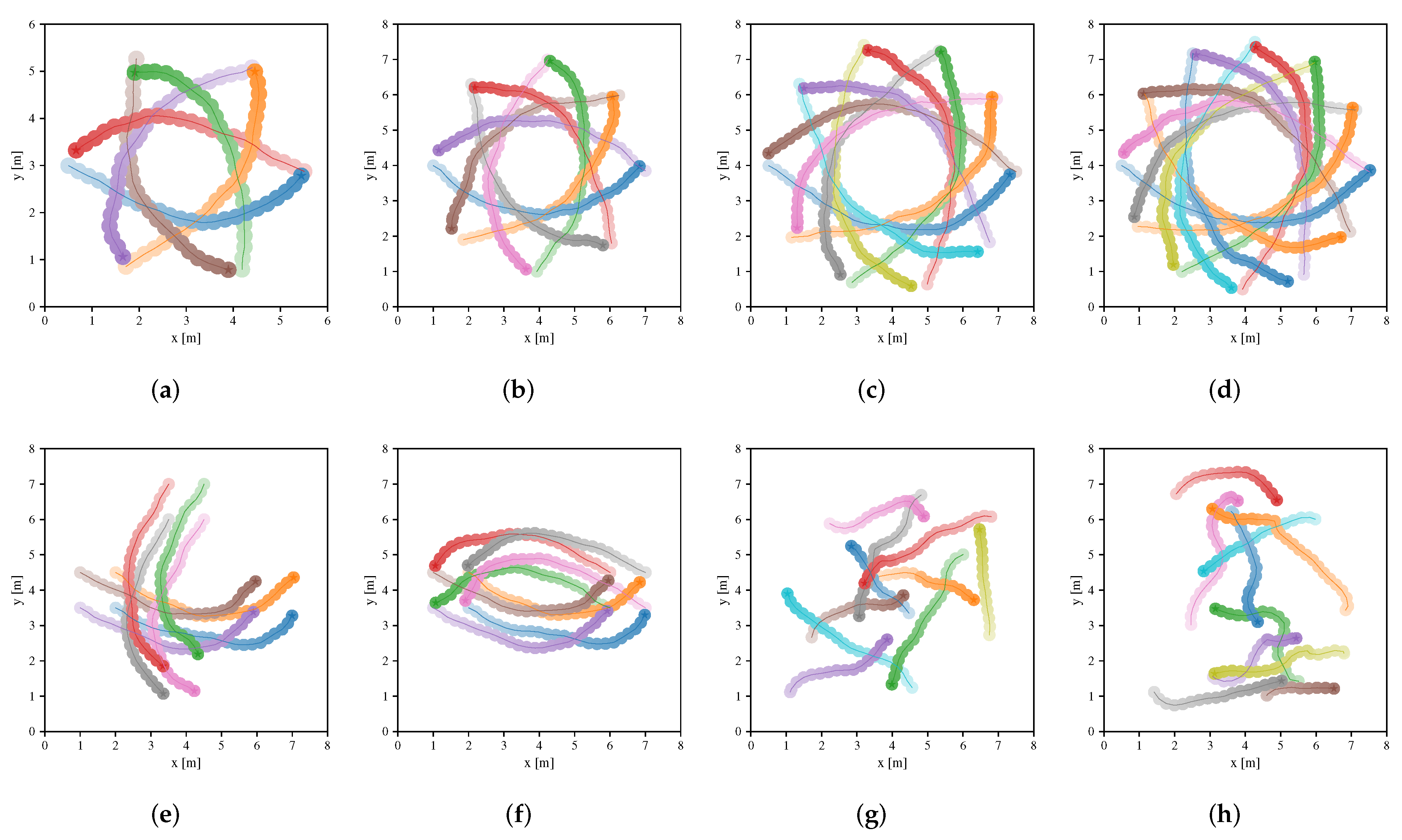

4.3. Generalization Capability

4.3.1. Non-Cooperative Robots

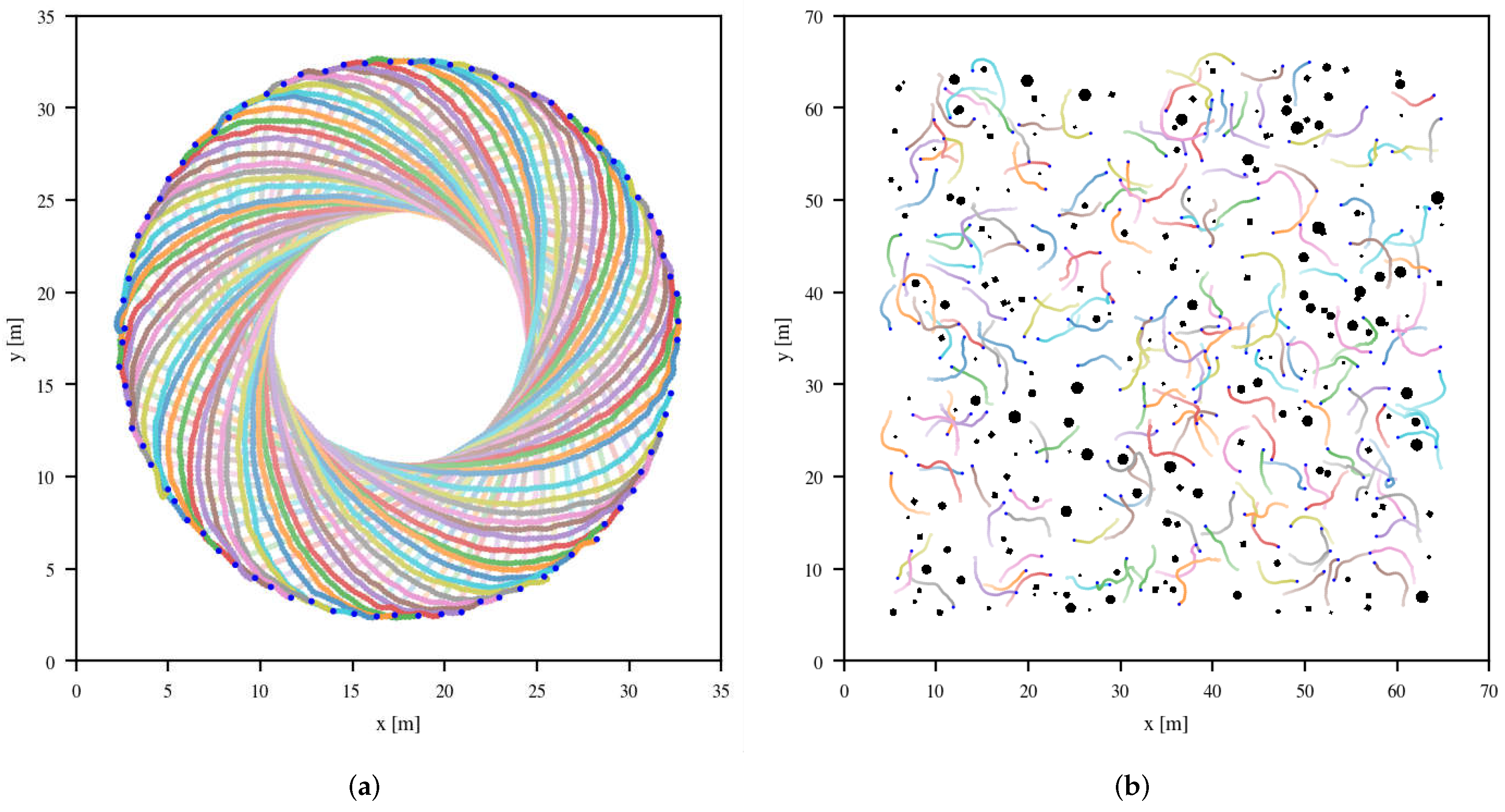

4.3.2. Large-Scale Scenarios

4.3.3. Heterogeneous Robots

4.4. Efficiency Evaluation

4.4.1. Metrics and Scenarios

- Success rate: the ratio of episodes that end with the robot reaching its target without any collision.

- Extra time: the average time the robots need to reach their targets without any collision, minus the average time they would need to drive straight to their targets at maximum speed.

- Extra distance: the average distance the robots travel to reach their targets without any collision, minus the average distance they would travel driving straight to their targets.

- Average linear velocity: the average linear velocity of the robots during navigation.
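The four metrics above can be computed from per-robot episode logs roughly as follows. This is a sketch under stated assumptions: the function name `navigation_metrics` and its argument layout are hypothetical, each array entry is treated as one robot in one episode, and the average linear velocity is taken as the mean of travelled distance over elapsed time across all robots (the text does not say whether failed episodes are included).

```python
import numpy as np


def navigation_metrics(reached, travel_time, travel_dist, straight_dist, v_max):
    """Compute success rate, extra time, extra distance and average velocity.

    reached:       bool per robot, True if it reached its target without collision
    travel_time:   time each robot spent navigating (s)
    travel_dist:   distance each robot actually travelled (m)
    straight_dist: straight-line distance from start to target (m)
    v_max:         maximum linear speed (m/s)
    """
    reached = np.asarray(reached, dtype=bool)
    travel_time = np.asarray(travel_time, dtype=float)
    travel_dist = np.asarray(travel_dist, dtype=float)
    straight_dist = np.asarray(straight_dist, dtype=float)

    success_rate = float(reached.mean())

    if reached.any():
        # Extra time and distance are averaged over successful robots only.
        ideal_time = straight_dist[reached] / v_max
        extra_time = float(travel_time[reached].mean() - ideal_time.mean())
        extra_distance = float(travel_dist[reached].mean() - straight_dist[reached].mean())
    else:
        extra_time = float("nan")
        extra_distance = float("nan")

    # Assumption: velocity is averaged over every robot, successful or not.
    avg_linear_velocity = float((travel_dist / travel_time).mean())

    return {
        "success_rate": success_rate,
        "extra_time": extra_time,
        "extra_distance": extra_distance,
        "avg_linear_velocity": avg_linear_velocity,
    }
```

Subtracting the straight-line baseline makes the time and distance figures comparable across scenarios with different start-to-target distances.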

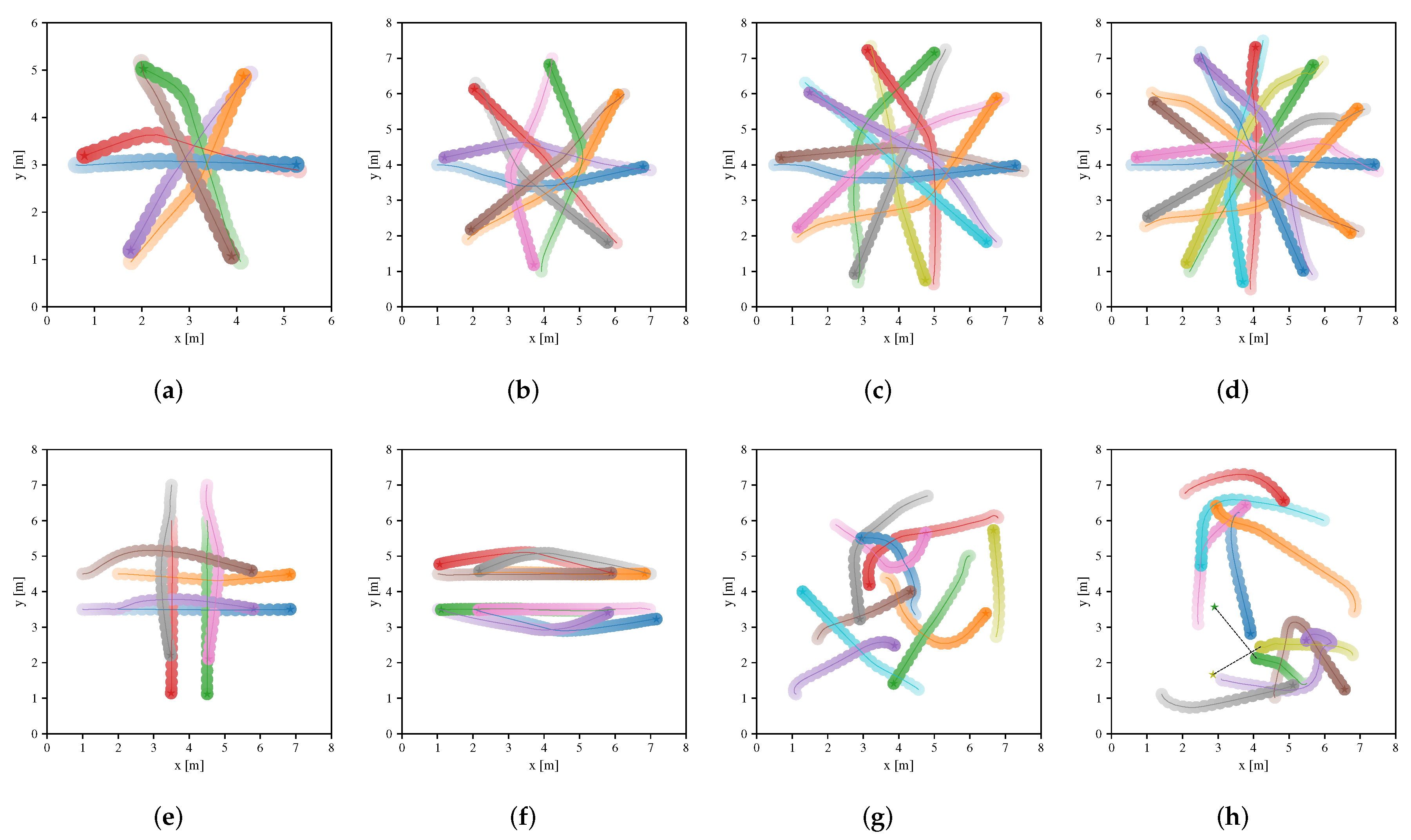

- Circle scenario: the scenario that is similar to the circular scenario, except that the initial positions of all robots are uniformly placed on the circle. It comes in four configurations that differ in the number of robots and the radius of the circle: 6 robots with radius 2.5 m, 8 robots with radius 3 m, 10 robots with radius 3.5 m and 12 robots with radius 3.5 m.

- Cross scenario: the scenario that requires two groups of robots (four robots in each group) to move across each other's paths.

- Swap scenario: the scenario that requires two groups of robots (four robots in each group) to move towards each other and swap their positions.

- New random scenario: the scenario that is similar to the random scenario, except that 10 robots are considered in the scenario.
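As an illustration of the circle scenario described in the list above, the start positions for the four configurations can be generated as in the sketch below. The function name `circle_scenario` is hypothetical, and placing each target at the point diametrically opposite the start is an assumption; the text only states that the initial positions are uniformly placed on the circle.

```python
import math

# (number of robots, circle radius in metres) for the four configurations.
CIRCLE_CONFIGS = [(6, 2.5), (8, 3.0), (10, 3.5), (12, 3.5)]


def circle_scenario(num_robots, radius):
    """Return (starts, goals) as lists of (x, y) tuples.

    Starts are spaced uniformly on a circle centred at the origin.
    Assumption: each goal is the antipodal point of the corresponding start.
    """
    starts, goals = [], []
    for i in range(num_robots):
        theta = 2.0 * math.pi * i / num_robots
        x = radius * math.cos(theta)
        y = radius * math.sin(theta)
        starts.append((x, y))
        goals.append((-x, -y))
    return starts, goals


if __name__ == "__main__":
    for n, r in CIRCLE_CONFIGS:
        starts, _ = circle_scenario(n, r)
        print(n, r, starts[0])
```

With antipodal targets every straight-line path passes through the circle centre, which is what makes this layout a demanding test of reciprocal avoidance.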

4.4.2. Quantitative Results

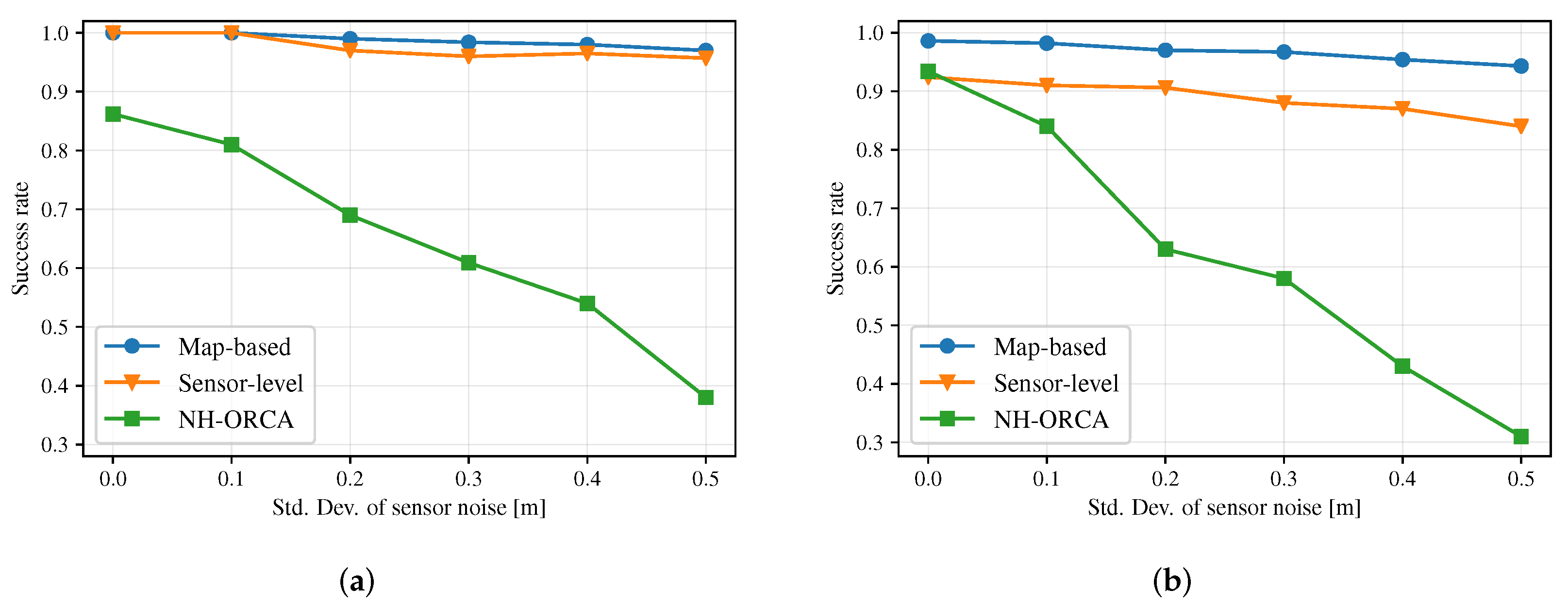

4.5. Robustness Evaluation

4.5.1. Different Sensor Noise

4.5.2. Different FOV Limits

4.5.3. Different Sensor Types

5. Real-World Experiments

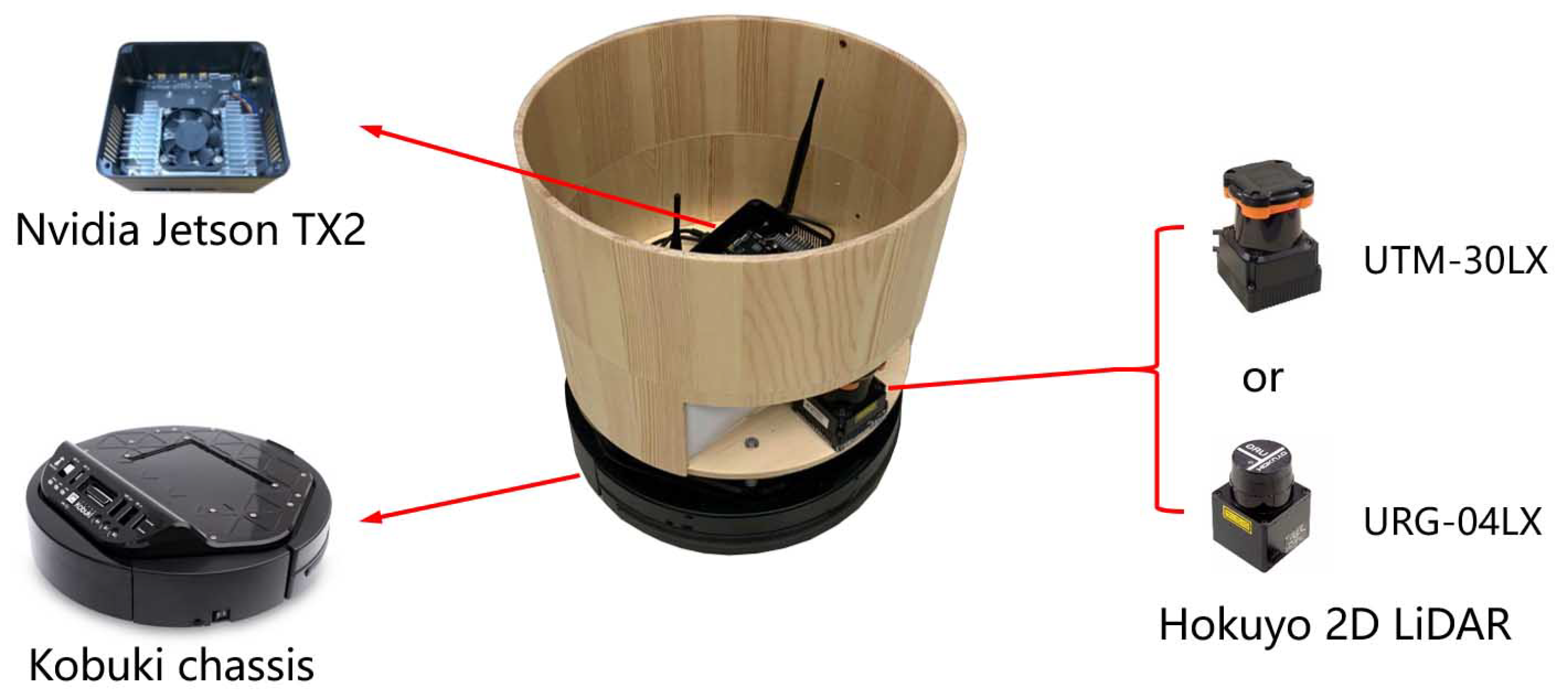

5.1. Hardware Setup

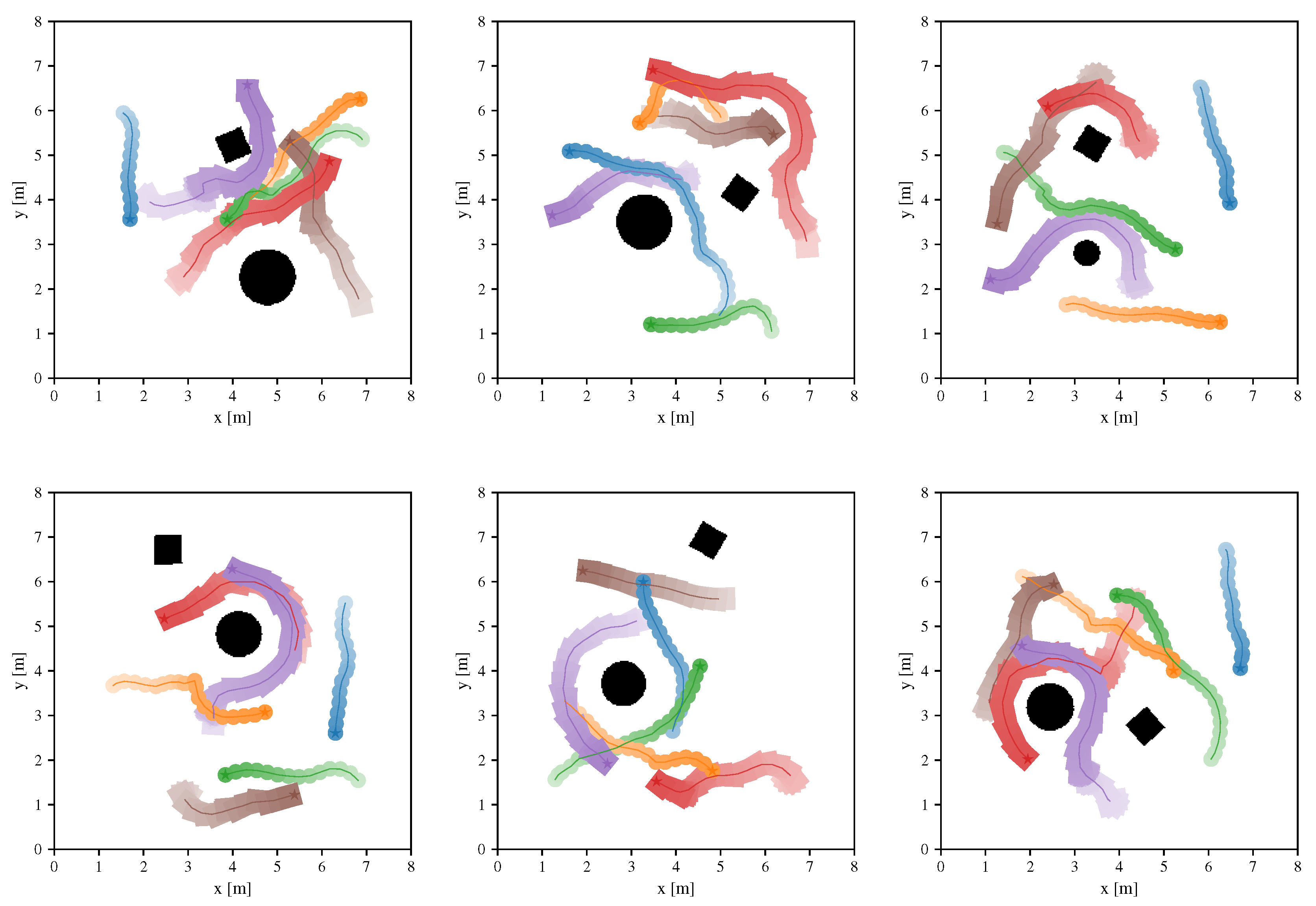

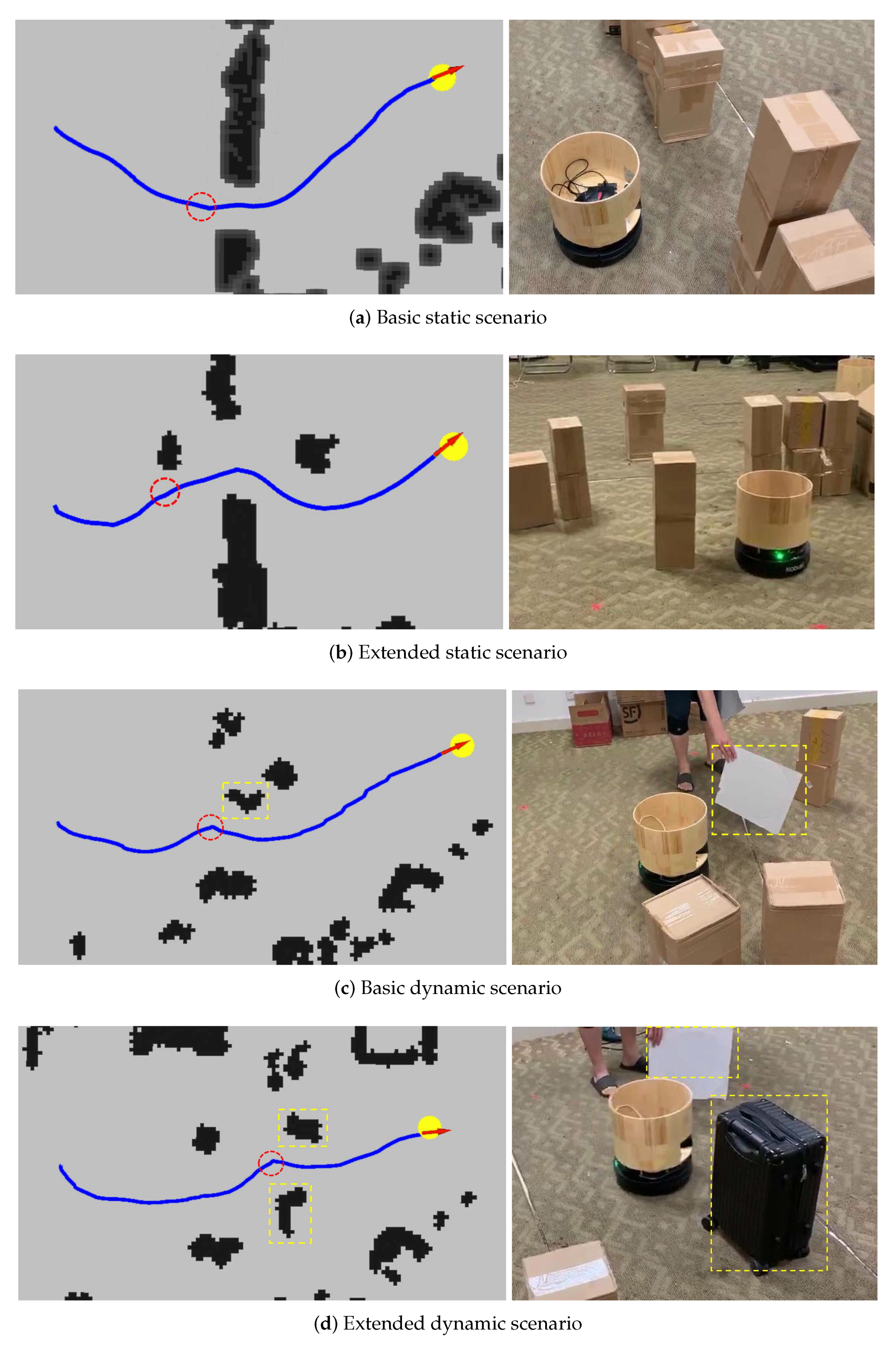

5.2. Static and Dynamic Scenarios

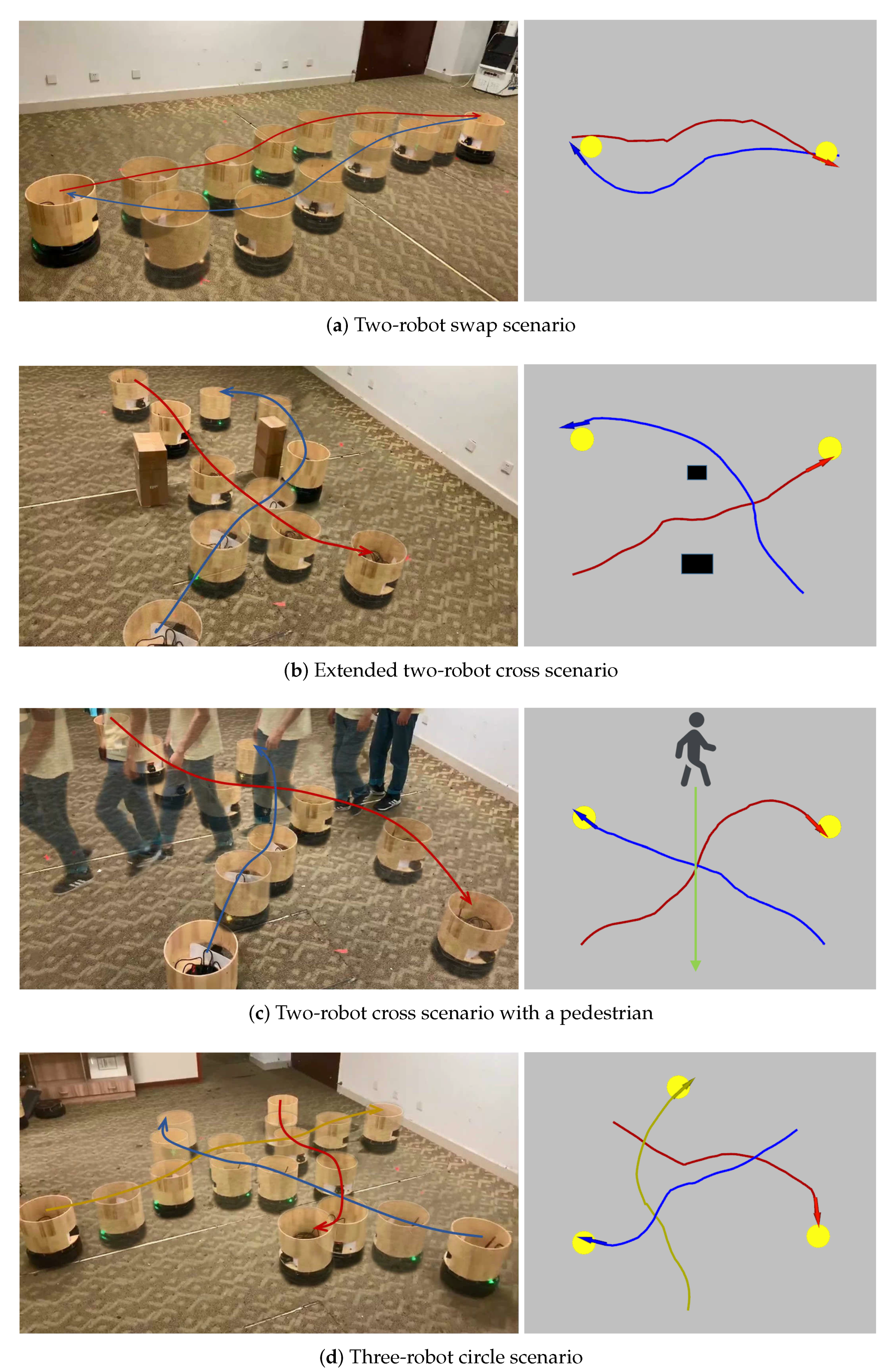

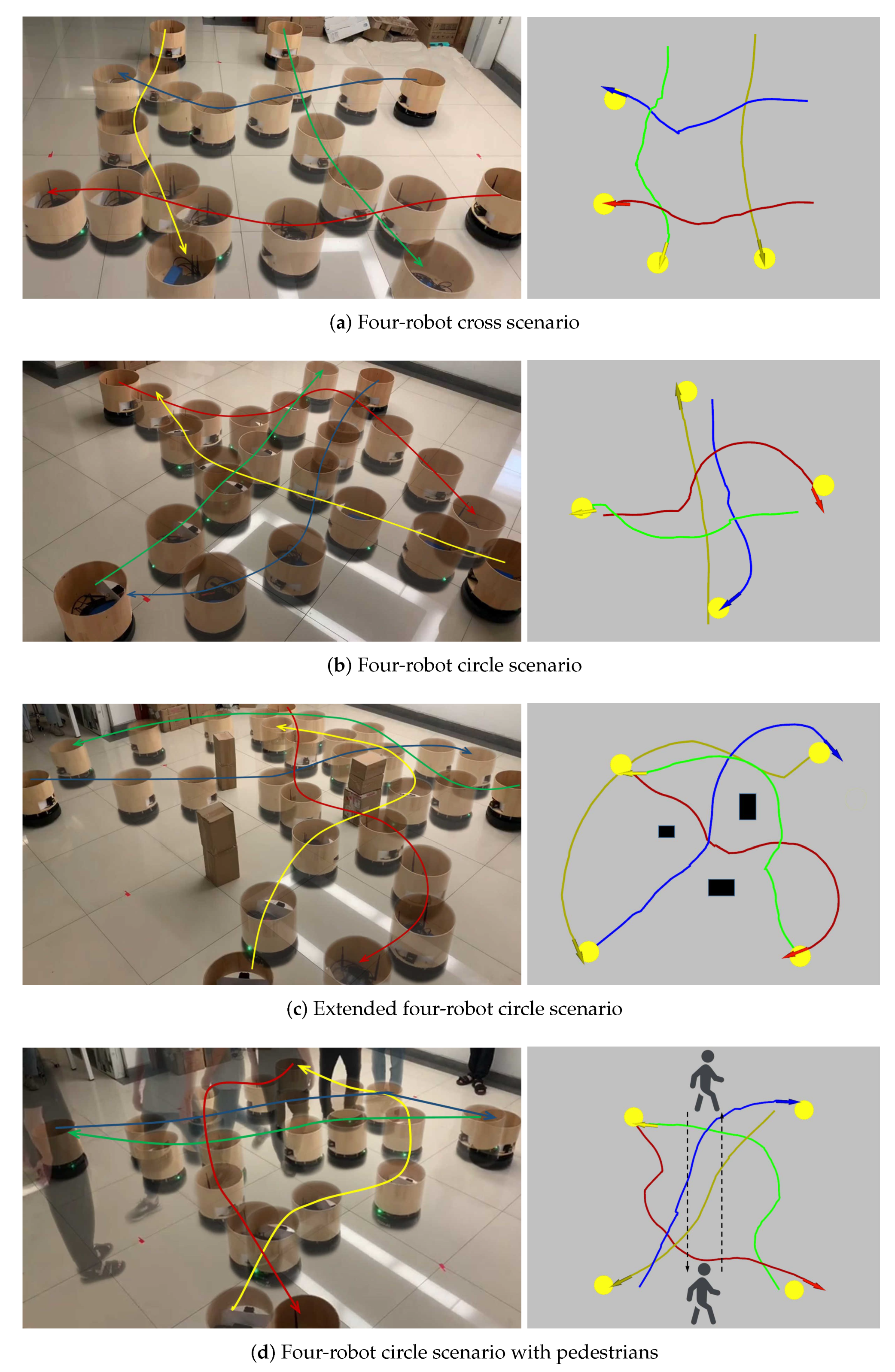

5.3. Multi-Robot Scenarios

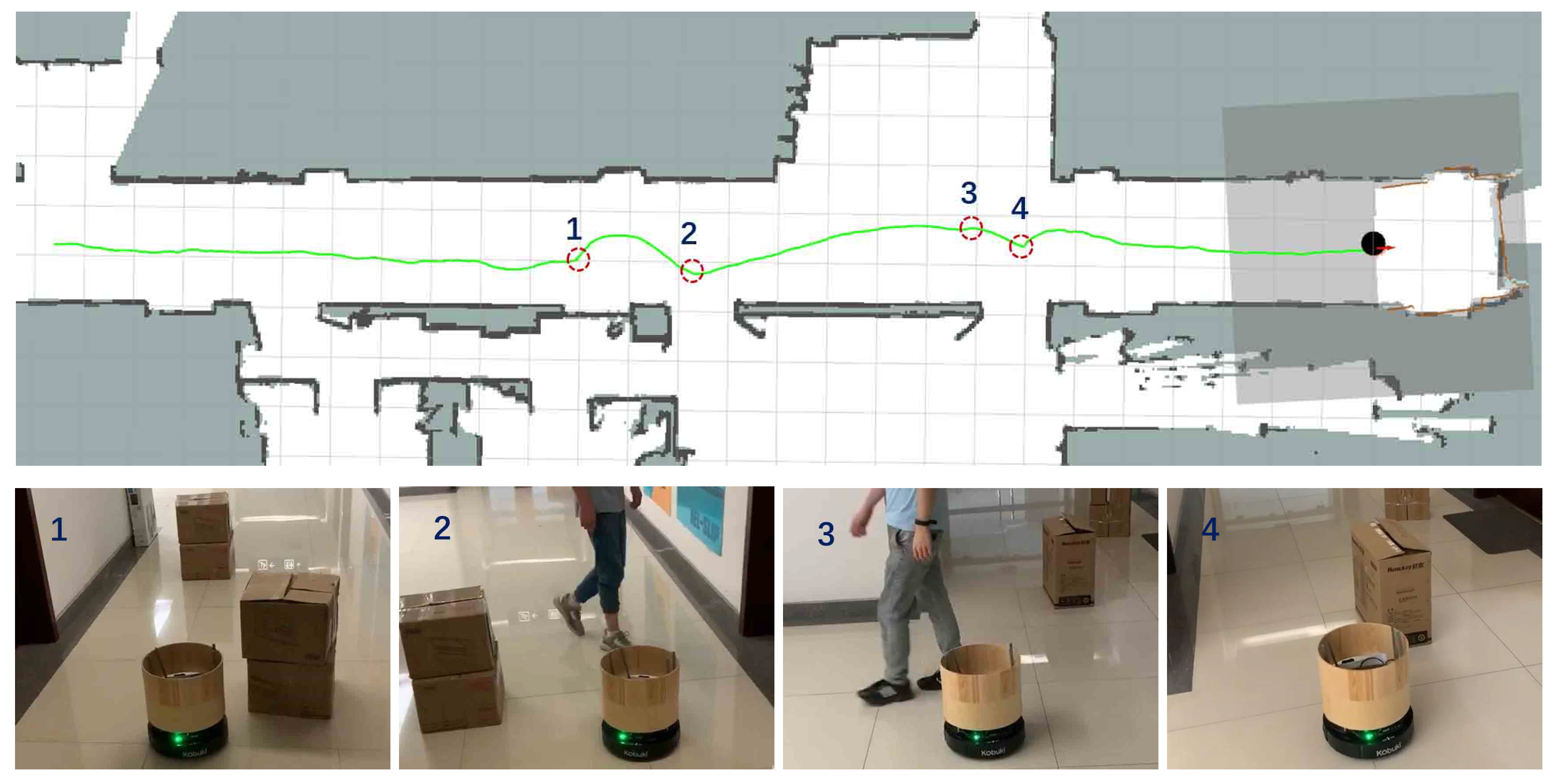

5.4. Long-Range Navigation

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Wang, H.; Zhang, C.; Song, Y.; Pang, B. Master-followed multiple robots cooperation SLAM adapted to search and rescue environment. Int. J. Control. Autom. Syst. 2018, 16, 2593–2608.

2. Li, Z.; Barenji, A.V.; Jiang, J.; Zhong, R.Y.; Xu, G. A mechanism for scheduling multi robot intelligent warehouse system face with dynamic demand. J. Intell. Manuf. 2020, 31, 469–480.

3. Truong, X.T.; Ngo, T.D. Toward socially aware robot navigation in dynamic and crowded environments: A proactive social motion model. IEEE Trans. Autom. Sci. Eng. 2017, 14, 1743–1760.

4. Shalev-Shwartz, S.; Shammah, S.; Shashua, A. Safe, multi-agent, reinforcement learning for autonomous driving. arXiv 2016, arXiv:1610.03295.

5. Wang, Z.; Hu, J.; Lv, R.; Wei, J.; Wang, Q.; Yang, D.; Qi, H. Personalized privacy-preserving task allocation for mobile crowdsensing. IEEE Trans. Mob. Comput. 2018, 18, 1330–1341.

6. Ge, X.; Han, Q.L. Distributed formation control of networked multi-agent systems using a dynamic event-triggered communication mechanism. IEEE Trans. Ind. Electron. 2017, 64, 8118–8127.

7. Michael, N.; Fink, J.; Kumar, V. Cooperative manipulation and transportation with aerial robots. Auton. Robot. 2011, 30, 73–86.

8. Alonso-Mora, J.; Baker, S.; Rus, D. Multi-robot formation control and object transport in dynamic environments via constrained optimization. Int. J. Robot. Res. 2017, 36, 1000–1021.

9. Fiorini, P.; Shiller, Z. Motion planning in dynamic environments using velocity obstacles. Int. J. Robot. Res. 1998, 17, 760–772.

10. Van Den Berg, J.; Guy, S.J.; Lin, M.; Manocha, D. Reciprocal n-body collision avoidance. In Robotics Research; Springer: Berlin/Heidelberg, Germany, 2011; pp. 3–19.

11. Snape, J.; Van Den Berg, J.; Guy, S.J.; Manocha, D. The hybrid reciprocal velocity obstacle. IEEE Trans. Robot. 2011, 27, 696–706.

12. Bareiss, D.; van den Berg, J. Generalized reciprocal collision avoidance. Int. J. Robot. Res. 2015, 34, 1501–1514.

13. Hennes, D.; Claes, D.; Meeussen, W.; Tuyls, K. Multi-robot collision avoidance with localization uncertainty. In Proceedings of the 11th International Conference on Autonomous Agents and Multiagent Systems (AAMAS-12), Valencia, Spain, 4–8 June 2012; pp. 147–154.

14. Claes, D.; Hennes, D.; Tuyls, K.; Meeussen, W. Collision avoidance under bounded localization uncertainty. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-12), Algarve, Portugal, 7–12 October 2012; pp. 1192–1198.

15. Godoy, J.E.; Karamouzas, I.; Guy, S.J.; Gini, M. Implicit coordination in crowded multi-agent navigation. In Proceedings of the 30th AAAI Conference on Artificial Intelligence (AAAI-16), Phoenix, AZ, USA, 12–17 February 2016; pp. 2487–2493.

16. Zhou, D.; Wang, Z.; Bandyopadhyay, S.; Schwager, M. Fast, on-line collision avoidance for dynamic vehicles using buffered Voronoi cells. IEEE Robot. Autom. Lett. 2017, 2, 1047–1054.

17. Snape, J.; Van Den Berg, J.; Guy, S.J.; Manocha, D. Smooth and collision-free navigation for multiple robots under differential-drive constraints. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-10), Taipei, Taiwan, 18–22 October 2010; pp. 4584–4589.

18. Alonso-Mora, J.; Beardsley, P.; Siegwart, R. Cooperative collision avoidance for nonholonomic robots. IEEE Trans. Robot. 2018, 34, 404–420.

19. Alonso-Mora, J.; Breitenmoser, A.; Rufli, M.; Beardsley, P.; Siegwart, R. Optimal reciprocal collision avoidance for multiple non-holonomic robots. In Distributed Autonomous Robotic Systems; Springer: Berlin/Heidelberg, Germany, 2013; pp. 203–216.

20. Phillips, M.; Likhachev, M. SIPP: Safe interval path planning for dynamic environments. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA-11), Shanghai, China, 9–13 May 2011; pp. 5628–5635.

21. Aoude, G.S.; Luders, B.D.; Joseph, J.M.; Roy, N.; How, J.P. Probabilistically safe motion planning to avoid dynamic obstacles with uncertain motion patterns. Auton. Robot. 2013, 35, 51–76.

22. Trautman, P.; Krause, A. Unfreezing the robot: Navigation in dense, interacting crowds. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-10), Taipei, Taiwan, 18–22 October 2010; pp. 797–803.

23. Kretzschmar, H.; Spies, M.; Sprunk, C.; Burgard, W. Socially compliant mobile robot navigation via inverse reinforcement learning. Int. J. Robot. Res. 2016, 35, 1289–1307.

24. Trautman, P.; Ma, J.; Murray, R.M.; Krause, A. Robot navigation in dense human crowds: The case for cooperation. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation (ICRA-13), Karlsruhe, Germany, 6–10 May 2013; pp. 2153–2160.

25. Kuderer, M.; Kretzschmar, H.; Sprunk, C.; Burgard, W. Feature-based prediction of trajectories for socially compliant navigation. In Proceedings of the 2012 Robotics: Science and Systems (RSS-12), Sydney, NSW, Australia, 9–13 July 2012.

26. Trautman, P.; Ma, J.; Murray, R.M.; Krause, A. Robot navigation in dense human crowds: Statistical models and experimental studies of human–robot cooperation. Int. J. Robot. Res. 2015, 34, 335–356.

27. Aquino, G.; Rubio, J.D.J.; Pacheco, J.; Gutierrez, G.J.; Ochoa, G.; Balcazar, R.; Cruz, D.R.; Garcia, E.; Novoa, J.F.; Zacarias, A. Novel nonlinear hypothesis for the delta parallel robot modeling. IEEE Access 2020, 8, 46324–46334.

28. de Jesús Rubio, J. SOFMLS: Online self-organizing fuzzy modified least-squares network. IEEE Trans. Fuzzy Syst. 2009, 17, 1296–1309.

29. Giusti, A.; Guzzi, J.; Cireşan, D.C.; He, F.L.; Rodríguez, J.P.; Fontana, F.; Faessler, M.; Forster, C.; Schmidhuber, J.; Di Caro, G.; et al. A machine learning approach to visual perception of forest trails for mobile robots. IEEE Robot. Autom. Lett. 2015, 1, 661–667.

30. Gandhi, D.; Pinto, L.; Gupta, A. Learning to fly by crashing. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-17), Vancouver, BC, Canada, 24–28 September 2017; pp. 3948–3955.

31. Tai, L.; Li, S.; Liu, M. A deep-network solution towards model-less obstacle avoidance. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-16), Daejeon, Korea, 9–14 October 2016; pp. 2759–2764.

32. Pfeiffer, M.; Schaeuble, M.; Nieto, J.; Siegwart, R.; Cadena, C. From perception to decision: A data-driven approach to end-to-end motion planning for autonomous ground robots. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA-17), Singapore, 29 May–3 June 2017; pp. 1527–1533.

33. Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the game of Go without human knowledge. Nature 2017, 550, 354.

34. Silver, D.; Hubert, T.; Schrittwieser, J.; Antonoglou, I.; Lai, M.; Guez, A.; Lanctot, M.; Sifre, L.; Kumaran, D.; Graepel, T.; et al. A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science 2018, 362, 1140–1144.

35. Vinyals, O.; Babuschkin, I.; Czarnecki, W.M.; Mathieu, M.; Dudzik, A.; Chung, J.; Choi, D.H.; Powell, R.; Ewalds, T.; Georgiev, P.; et al. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature 2019, 575, 350–354.

36. OpenAI; Berner, C.; Brockman, G.; Chan, B.; Cheung, V.; Dębiak, P.; Dennison, C.; Farhi, D.; Fischer, Q.; Hashme, S.; et al. Dota 2 with Large Scale Deep Reinforcement Learning. arXiv 2019, arXiv:1912.06680.

37. Gu, S.; Holly, E.; Lillicrap, T.; Levine, S. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3389–3396.

38. Levine, S.; Pastor, P.; Krizhevsky, A.; Ibarz, J.; Quillen, D. Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection. Int. J. Robot. Res. 2018, 37, 421–436.

39. Xie, L.; Wang, S.; Rosa, S.; Markham, A.; Trigoni, N. Learning with training wheels: Speeding up training with a simple controller for deep reinforcement learning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA-18), Brisbane, Australia, 21–25 May 2018; pp. 6276–6283.

40. Chen, Y.F.; Liu, M.; Everett, M.; How, J.P. Decentralized non-communicating multiagent collision avoidance with deep reinforcement learning. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA-17), Singapore, 29 May–3 June 2017; pp. 285–292.

41. Chen, Y.F.; Everett, M.; Liu, M.; How, J.P. Socially aware motion planning with deep reinforcement learning. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-17), Vancouver, BC, Canada, 24–28 September 2017; pp. 1343–1350.

42. Long, P.; Fan, T.; Liao, X.; Liu, W.; Zhang, H.; Pan, J. Towards optimally decentralized multi-robot collision avoidance via deep reinforcement learning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA-18), Brisbane, Australia, 21–25 May 2018; pp. 6252–6259.

43. Fan, T.; Long, P.; Liu, W.; Pan, J. Distributed multi-robot collision avoidance via deep reinforcement learning for navigation in complex scenarios. Int. J. Robot. Res. 2020, 0278364920916531.

44. Chen, G.; Cui, G.; Jin, Z.; Wu, F.; Chen, X. Accurate Intrinsic and Extrinsic Calibration of RGB-D Cameras With GP-Based Depth Correction. IEEE Sens. J. 2018, 19, 2685–2694.

45. Kaelbling, L.P.; Littman, M.L.; Cassandra, A.R. Planning and acting in partially observable stochastic domains. Artif. Intell. 1998, 101, 99–134.

46. Moravec, H.P. Sensor fusion in certainty grids for mobile robots. AI Mag. 1988, 9, 61–74.

47. Lu, D.V.; Hershberger, D.; Smart, W.D. Layered costmaps for context-sensitive navigation. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-14), Chicago, IL, USA, 14–18 September 2014; pp. 709–715.

48. Ng, A.Y.; Harada, D.; Russell, S. Policy invariance under reward transformations: Theory and application to reward shaping. In Proceedings of the 16th International Conference on Machine Learning (ICML-99), Bled, Slovenia, 27–30 June 1999; Volume 99, pp. 278–287.

49. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

50. Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

51. Schulman, J.; Moritz, P.; Levine, S.; Jordan, M.; Abbeel, P. High-dimensional continuous control using generalized advantage estimation. arXiv 2015, arXiv:1506.02438.

52. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

53. Nair, V.; Hinton, G.E. Rectified Linear Units Improve Restricted Boltzmann Machines. In Proceedings of the 27th International Conference on Machine Learning (ICML 2010), Haifa, Israel, 21–24 June 2010; pp. 807–814.

54. Bengio, Y.; Louradour, J.; Collobert, R.; Weston, J. Curriculum learning. In Proceedings of the 26th Annual International Conference on Machine Learning (ICML-09), Montreal, QC, Canada, 14–18 June 2009; pp. 41–48.

55. Elman, J.L. Learning and development in neural networks: The importance of starting small. Cognition 1993, 48, 71–99.

56. Chen, G.; Pan, L.; Chen, Y.; Xu, P.; Wang, Z.; Wu, P.; Ji, J.; Chen, X. Robot Navigation with Map-Based Deep Reinforcement Learning. arXiv 2020, arXiv:2002.04349.

57. Koenig, N.; Howard, A. Design and use paradigms for Gazebo, an open-source multi-robot simulator. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-04), Sendai, Japan, 28 September–2 October 2004; Volume 3, pp. 2149–2154.

58. Vaughan, R. Massively multi-robot simulation in Stage. Swarm Intell. 2008, 2, 189–208.

59. Bresenham, J.E. Algorithm for computer control of a digital plotter. IBM Syst. J. 1965, 4, 25–30.

60. Duchoň, F.; Babinec, A.; Kajan, M.; Beňo, P.; Florek, M.; Fico, T.; Jurišica, L. Path planning with modified A star algorithm for a mobile robot. Procedia Eng. 2014, 96, 59–69.

61. Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA-16), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

Table 1. Hyper-parameters of the NH-ORCA algorithm used in the comparison experiments.

| Parameter | Value |
|---|---|
| VO type | HRVO |
| Use ORCA | True |
| Use clearpath | True |
| Epsilon | 0.1 |
| Time horizon | 10 |
| Time to holonomic | 0.4 |
| Minimum tracking error | 0.02 |
| Maximum tracking error | 0.1 |

| Parameter (line of Algorithm 1 where it is used) | Value |
|---|---|
| Line 5 | 2000 |
| Line 12 | 200 |
| Line 14 | 0.2 |
| Line 19 | 0.95 |
| Lines 19 and 35 | 0.99 |
| Line 26 | 80 |
| Line 27 | 0.2 |
| Line 28 | 0.01 |
| Line 31 | (Stage 1), (Stage 2) |
| Line 34 | 80 |
| Line 36 | |

| Scenarios (Agents, Range) | Method | Success rate | Extra time (mean/std) | Extra distance (mean/std) | Average linear velocity (mean/std) |
|---|---|---|---|---|---|
| Circle scenario (6, radius 2.5 m) | NH-ORCA | 0.969 | 2.6676/1.3981 | 0.2004/0.1160 | 0.4490/0.1537 |
| | Sensor-level | 1.000 | 2.0620/0.5576 | 0.8773/0.2269 | 0.5636/0.1328 |
| | Map-based Stage-1 | 0.937 | 8.2528/6.4266 | 0.7861/0.4763 | 0.3328/0.2881 |
| | Map-based Stage-2 | 1.000 | 2.0000/0.3502 | 0.8648/0.1447 | 0.5659/0.1283 |
| Circle scenario (8, radius 3 m) | NH-ORCA | 0.950 | 3.4988/1.9744 | 0.2057/0.1299 | 0.4479/0.1520 |
| | Sensor-level | 1.000 | 2.5400/0.5084 | 1.1992/0.1918 | 0.5687/0.1233 |
| | Map-based Stage-1 | 0.914 | 10.3488/6.3236 | 0.9185/0.5446 | 0.3218/0.2880 |
| | Map-based Stage-2 | 1.000 | 2.3170/0.2577 | 1.0204/0.1513 | 0.5730/0.1146 |
| Circle scenario (10, radius 3.5 m) | NH-ORCA | 0.892 | 4.2930/2.6132 | 0.2486/0.1983 | 0.4366/0.1546 |
| | Sensor-level | 1.000 | 3.3045/0.4784 | 1.5991/0.2145 | 0.5734/0.1142 |
| | Map-based Stage-1 | 0.903 | 11.9304/9.2772 | 1.0635/0.6968 | 0.3212/0.2867 |
| | Map-based Stage-2 | 1.000 | 2.5881/0.4650 | 1.1870/0.1710 | 0.5735/0.1114 |
| Circle scenario (12, radius 3.5 m) | NH-ORCA | 0.862 | 5.2137/3.4742 | 0.2817/0.2599 | 0.4078/0.1711 |
| | Sensor-level | 1.000 | 3.7290/0.5355 | 1.7884/0.2525 | 0.5699/0.1170 |
| | Map-based Stage-1 | 0.873 | 15.7697/11.7475 | 1.0773/0.7475 | 0.2698/0.2871 |
| | Map-based Stage-2 | 1.000 | 2.6133/0.4527 | 1.2170/0.1769 | 0.5745/0.1120 |
| Cross scenario (8, m) | NH-ORCA | 0.958 | 2.1283/1.5166 | 0.1883/0.2081 | 0.4851/0.1430 |
| | Sensor-level | 0.995 | 2.8238/1.2894 | 1.1174/0.5214 | 0.5419/0.1588 |
| | Map-based Stage-1 | 0.950 | 4.0802/3.4952 | 1.0158/0.7322 | 0.4764/0.2278 |
| | Map-based Stage-2 | 1.000 | 1.8315/1.2333 | 0.7873/0.4912 | 0.5608/0.1384 |
| Swap scenario (8, m) | NH-ORCA | 0.906 | 2.2174/2.1307 | 0.2651/0.2228 | 0.4845/0.1648 |
| | Sensor-level | 1.000 | 2.7357/0.9494 | 1.1498/0.3479 | 0.5535/0.1419 |
| | Map-based Stage-1 | 0.994 | 2.7272/2.2479 | 0.8017/0.5761 | 0.5206/0.1874 |
| | Map-based Stage-2 | 1.000 | 2.0201/1.0430 | 0.9816/0.3660 | 0.5584/0.1424 |
| New random scenario (10, m) | NH-ORCA | 0.934 | 4.3181/3.1353 | 0.5697/0.6412 | 0.3760/0.1890 |
| | Sensor-level | 0.924 | 3.4519/3.4162 | 0.5417/0.5048 | 0.4017/0.2687 |
| | Map-based Stage-1 | 0.955 | 3.1650/2.5632 | 0.5514/0.4643 | 0.4202/0.2590 |
| | Map-based Stage-2 | 0.986 | 2.9009/2.4523 | 0.4531/0.3610 | 0.4460/0.2497 |

| Model No. | UTM-30LX | URG-04LX |
|---|---|---|
| Measuring area | 0.1 to 30 m, | 0.02 to 5.6 m, |
| Accuracy | 0.1 to 10 m: ±30 mm, 10 to 30 m: ±50 mm | 0.06 to 1 m: ±30 mm, 1 to 4.095 m: |
| Angular resolution | | |
| Scanning time | 25 ms/scan | 100 ms/scan |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, G.; Yao, S.; Ma, J.; Pan, L.; Chen, Y.; Xu, P.; Ji, J.; Chen, X. Distributed Non-Communicating Multi-Robot Collision Avoidance via Map-Based Deep Reinforcement Learning. Sensors 2020, 20, 4836. https://doi.org/10.3390/s20174836
