Abstract

For the letters of an alphabet, entropy is the average number of binary digits required to transmit one character. Checking tables of statistical data, one finds that the digits 1 to 9 occur in the first position of numbers with different frequencies. From these probabilities, a value for the Shannon entropy H can be determined as well. Although the Newcomb–Benford (NB) Law applies in many cases, distributions have been found in which 1 occurs as the first digit more than 40 times as often as 9. In such cases, the probability of a particular first digit can be derived from a power-law density function $x^{-p}$ with exponent p > 1. Whereas the entropy of first digits following an NB distribution amounts to H = 2.88 bits per digit, entropy values of 2.76 and 2.04 bits per digit have been found for other data distributions (diameters of craters on Venus and weights of fragments of crushed marble, respectively).

Keywords:

alphabet; Boltzmann; calcite; Clausius; craters; density function; entropy; exoplanets; first-digit phenomenon; fragment; granite; information; marble; mineral; Newcomb–Benford law; populations; probability; scale invariance; Shannon entropy; space probes; statistical thermodynamics; stock prices; street addresses; Venus; wages

1. Introduction

According to information theory, the Shannon entropy H of a variable is the average level of information inherent in the variable’s possible outcomes [1]. The term entropy was originally coined by Clausius [2] for a state variable in thermodynamics. Boltzmann [3] related the entropy of an ideal gas to its multiplicity, i.e., the number of microstates corresponding to the gas’s macrostate. From the probabilities of occurrence of letters, Shannon calculated the entropy H of an alphabet [4,5]. This is the minimum number of binary digits needed to transmit one character and, at the same time, the average information per character. In a very similar way, a value of H can be obtained from the probabilities of occurrence of first digits in tables of numbers; it gives the average information of a first digit as well as the number of binary digits required to transmit it. According to Kwiatkowski [6], entropy is a measure of uncertainty.

One goal of this manuscript is to apply Shannon’s method to the first digits of data collections. The entropy of the first digits, i.e., the minimum number of binary digits needed to characterize their distribution, may be of some value when the signal-to-noise ratio is extremely low, e.g., when transmitting data from space probes.

Another goal is to find first-digit distributions that, like data obeying the Newcomb–Benford Law, follow a continuous function but exhibit clearly different first-digit frequencies. Such examples seem to be rare.

The question of why some first-digit distributions differ strongly from the NB Law is also discussed, and a possible explanation is presented for fractured minerals.

For an alphabet, the entropy H is determined as follows [7]: if the letters occur with different probabilities $p_i$, one takes the binary logarithm (logarithmus dualis, ld) of the probability of each character, $\mathrm{ld}\,p_i$, and multiplies it by that probability, giving $p_i\,\mathrm{ld}\,p_i$. Summing over all letters and changing the sign results in

$$H = -\sum_{i=1}^{n} p_i\,\mathrm{ld}\,p_i \tag{1}$$

with n being the total number of letters in the alphabet [8,9]. The average information of a single character reaches its maximum, H = ld n, when all letters are equally likely. In the same way, a value for the entropy H can be calculated from the probabilities of occurrence of first digits in data collections.
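As an illustration only (not code from the paper), Equation (1) can be evaluated in a few lines of Python. The helper name `shannon_entropy` is hypothetical. Zero probabilities are skipped because $p\,\mathrm{ld}\,p \to 0$ as $p \to 0$.

```python
import math

def shannon_entropy(probabilities):
    """Return H = -sum(p_i * ld p_i) in bits, with ld = log base 2 (Equation (1))."""
    # Terms with p_i == 0 are skipped: p * ld p tends to 0 as p -> 0.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

# n equally likely characters give the maximum entropy, H = ld n.
n = 26
print(shannon_entropy([1 / n] * n), math.log2(n))

# A skewed three-letter alphabet carries less information per character.
print(shannon_entropy([0.5, 0.25, 0.25]))  # 1.5
```

The uniform case reproduces the maximum H = ld n stated above. Any non-uniform distribution over the same symbols gives a smaller value.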

2. The Newcomb–Benford Distribution

Checking tables of statistical data (e.g., atomic weights, masses of exoplanets, but also wages and prices, stock prices, populations of communities, street addresses, lengths of rivers, page numbers in literature citations, development aid), one quite often finds that 1 occurs more frequently than 9 in the first position of numbers. The best-known example is the Newcomb–Benford distribution, according to which 1 occurs 6.58 times as often as 9 as the leading digit [10,11,12,13]. Some continuous processes satisfy this law exactly (in the asymptotic limit as the process continues through time). Examples are exponential growth or decay processes [12]: if a quantity increases or decreases exponentially in time, then the fraction of time during which it has each first digit satisfies Newcomb–Benford’s Law. An example is given in Appendix A. Many, though not all, distributions follow the NB Law more or less closely (the population of municipalities, microorganisms in a culture, spread of internet contents, capital growth, wages and prices, loss of value over time, street addresses [12], page numbers in literature citations [14]). Biau [15] analyses the discrepancies between the NB Law and the first-digit frequencies in data of turbulent flows by means of Shannon’s entropy.

For a more general approach to the problem of first-digit probabilities, one starts from the density function

$$D(x) = x^{-p}$$

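The probability W(a,b) that the object has a size between a and b is then

$$W(a,b) = \frac{\int_{a}^{b} D(x)\,dx}{\int_{1}^{10} D(x)\,dx}$$

where the denominator normalizes the distribution over one decade.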

In the case of exponential growth of an object, x is the size of the object and p equals 1 (see Appendix A).

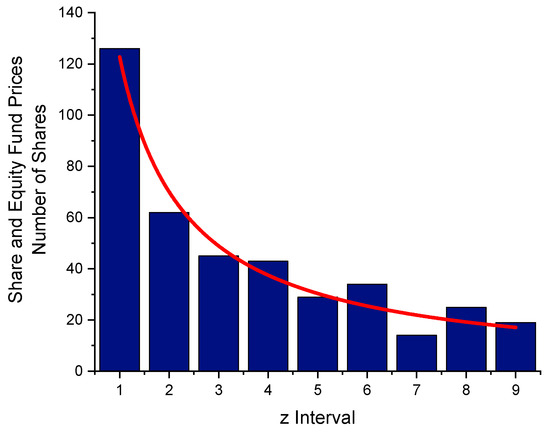

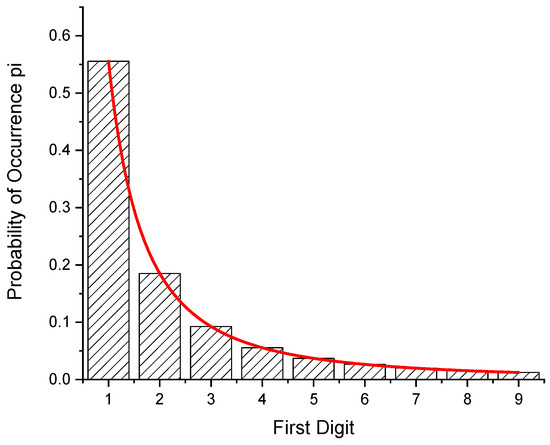

The bars in Figure 1 refer to consecutive integers a, b, each pair defining a “z-interval”.

Figure 1.

The Newcomb–Benford probability distribution of first digits. The probability of a 1 being the leading digit is 6.58 times that of a 9. The red curve shows W(z), Equation (4).
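As an illustration (not code from the paper), the first-digit probabilities in Figure 1 can be computed from W(a, b). The sketch assumes a density $D(x) \propto x^{-p}$ normalized over the decade [1, 10). For p = 1, both integrals are logarithms, so W(a, b) reduces to $\log_{10}(b/a)$. The function name `interval_probability` is hypothetical.

```python
import math

def interval_probability(a, b, p):
    """W(a, b) for a density D(x) ~ x**(-p), normalised over the decade [1, 10)."""
    if math.isclose(p, 1.0):
        # p = 1: the integral of 1/x is ln x, so W(a, b) = ln(b/a) / ln 10.
        return math.log10(b / a)
    # p != 1: the integral of x**(-p) is x**(1 - p) / (1 - p); the (1 - p) factors cancel.
    return (a ** (1 - p) - b ** (1 - p)) / (1 - 10 ** (1 - p))

# First-digit probabilities W(z) = W(z, z + 1), z = 1..9, under the NB Law (p = 1).
nb = [interval_probability(z, z + 1, 1.0) for z in range(1, 10)]
print([round(w, 4) for w in nb])
print(round(nb[0] / nb[8], 2))  # ratio of leading 1s to leading 9s: 6.58
```

With p = 2, the same function gives a ratio of leading 1s to leading 9s of 45, up to floating-point rounding.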

Equations (3) and (4) are scale invariant. That is, if other units were used to measure the objects’ properties, one would obtain the same power law and the same ratio of 1s to 9s in the first digit position, provided comparable intervals (e.g., one decade) are chosen.
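The scale-invariance statement can be checked numerically. This sketch illustrates the idea under stated assumptions and is not the paper's procedure. A deterministic log-uniform grid over one decade stands in for NB-distributed data. The conversion factors 2.54 (inches to centimetres) and 0.035274 (grams to ounces) are arbitrary examples of a change of units.

```python
import math

def leading_digit(x):
    """First significant digit of a positive number."""
    m = x / 10 ** math.floor(math.log10(x))
    # Guard against floating-point rounding near exact powers of ten.
    if m >= 10:
        m /= 10
    elif m < 1:
        m *= 10
    return int(m)

def digit_frequencies(values):
    """Relative frequencies of the leading digits 1..9."""
    counts = [0] * 10
    for x in values:
        counts[leading_digit(x)] += 1
    return [counts[d] / len(values) for d in range(1, 10)]

N = 100_000
# Log-uniform values over one decade: their leading digits follow the NB Law.
data = [10 ** ((k + 0.5) / N) for k in range(N)]
nb = [math.log10(1 + 1 / d) for d in range(1, 10)]

for factor in (1.0, 2.54, 0.035274):  # the same data expressed in different units
    freqs = digit_frequencies([factor * x for x in data])
    worst = max(abs(f - q) for f, q in zip(freqs, nb))
    print(f"unit factor {factor}: largest deviation from NB = {worst:.1e}")
```

Multiplying by a constant only rotates the log-uniform mantissas around the decade. The deviation therefore stays at the grid resolution of about 1/N for every factor.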

2.1. Entropy of the Newcomb–Benford Distribution

As with the letters of an alphabet, probabilities of occurrence can be determined for the first digits of a data set that follows the Newcomb–Benford Law. Table 1 lists the values of W(z), 1 ≤ z ≤ 9, i.e., the probabilities that a particular digit occurs in the first position of numbers. From these probabilities, Equation (1) gives the entropy of the first-digit distribution as H (NB Law) = 2.8762 bits per digit (bpd). This is the average information of a single first digit. If the leading digits were evenly distributed (all $p_i = 1/9$), the entropy would amount to H = −ld(1/9) = ld 9 = 3.1699 bpd.
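For illustration, both entropy values in this paragraph can be recomputed from the exact NB probabilities $\log_{10}(1 + 1/z)$. This sketch is not the paper's code. Without rounding the probabilities it gives H ≈ 2.876 bpd for the NB Law, and ld 9 ≈ 3.1699 bpd for evenly distributed digits. The last decimal of the NB value may differ slightly from Table 1, depending on how the tabulated probabilities are rounded.

```python
import math

def entropy_bits(probs):
    """Equation (1): H = -sum(p_i * ld p_i), in bits per digit."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# NB first-digit probabilities p_z = log10(1 + 1/z), z = 1..9 (cf. Table 1).
nb = [math.log10(1 + 1 / z) for z in range(1, 10)]
# Evenly distributed first digits, p_z = 1/9.
uniform = [1 / 9] * 9

print(f"H(NB Law)  = {entropy_bits(nb):.3f} bpd")
print(f"H(uniform) = {entropy_bits(uniform):.4f} bpd (= ld 9 = {math.log2(9):.4f})")
```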

Table 1.

Entropy H of the first-digit distribution according to the Newcomb–Benford Law.

2.2. Non-NB Distributions

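If the first-digit distribution does not follow the NB Law (Figure 2), it can be derived from the density function $D(x) = x^{-p}$ with p ≠ 1. The proportion of numbers falling into the range between $x_1$ and $x_2$ is then calculated from

$$W(x_1,x_2) = \frac{\int_{x_1}^{x_2} x^{-p}\,dx}{\int_{1}^{10} x^{-p}\,dx} = \frac{x_1^{1-p} - x_2^{1-p}}{1 - 10^{1-p}}$$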

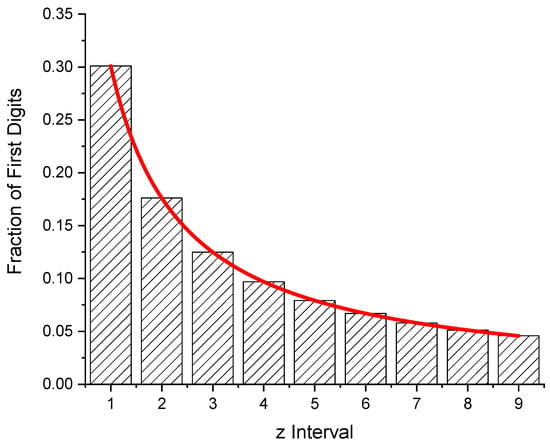

Figure 2.

Density function of a first-digit distribution obeying the NB Law ($D(x) \propto x^{-1}$; red) and of a distribution deviating from it ($D(x) \propto x^{-2.003}$; black). The areas between the dashed lines correspond to the fractions of data starting with a 1 or a 9, respectively. The black curve is the density function of the first-digit distribution of the weights of crushed marble fragments shown in Figure 3.

This law is scale invariant. If $x_1$ and $x_2$ are chosen as consecutive integers, $W(x_1, x_2) = W(z)$ is the proportion of numbers starting with the digit $z = x_1$. From the probabilities of the first digits, the average entropy of a digit can then be determined (Equation (1)).
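One way to see how the exponent controls the entropy is to evaluate $W(z, z+1)$ for the density $x^{-p}$ in closed form and insert the result into Equation (1). This is an illustrative sketch with hypothetical helper names. The values describe the idealized power laws for the exponents quoted in this paper, and they need not coincide with entropies computed from counted data.

```python
import math

def first_digit_probs(p):
    """W(z) = W(z, z + 1), z = 1..9, for a density D(x) ~ x**(-p) on [1, 10)."""
    if math.isclose(p, 1.0):
        return [math.log10((z + 1) / z) for z in range(1, 10)]
    norm = 1 - 10 ** (1 - p)
    return [(z ** (1 - p) - (z + 1) ** (1 - p)) / norm for z in range(1, 10)]

def entropy_bits(probs):
    """Equation (1) in bits per digit."""
    return -sum(q * math.log2(q) for q in probs if q > 0)

# A steeper density (larger p) concentrates the leading digits on 1, so H drops.
for p in (1.0, 1.238, 1.748, 1.966, 2.003):
    print(f"p = {p:5.3f}: H = {entropy_bits(first_digit_probs(p)):.3f} bits per digit")
```

For p = 2, the idealized distribution has an entropy of about 2.07 bits per digit.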

3. Mass Distributions of Minerals

3.1. Fragments of Marble

The first-digit distribution of the weights of mineral fragments occasionally deviates strongly from the NB Law [16]. Six samples of marble (irregularly shaped plates 10 mm thick, weighing between 4.70 and 51.69 g) were crushed in a hydraulic press, and the weights of 1052 fragments between 10 and 99 mg were measured. Fragments below 10 mg and above 99 mg were omitted. Table 2 lists the numbers of fragments sharing the same first digit. From the probabilities $p_i$, an entropy of H = 2.0370 bits per digit has been found.
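The counting step can be sketched as follows. This is a hypothetical helper, not the procedure used to build Table 2. It assumes weights are given in milligrams and that only the window [10 mg, 100 mg) is kept, so the leading digit of a kept weight is its tens digit. The weights in the usage line are invented for illustration.

```python
import math
from collections import Counter

LOW_MG, HIGH_MG = 10.0, 100.0  # assumed weighing window [10 mg, 100 mg)

def first_digit_entropy(weights_mg):
    """Tally leading digits of weights inside the window and return (counts, H in bits)."""
    kept = [w for w in weights_mg if LOW_MG <= w < HIGH_MG]
    if not kept:
        raise ValueError("no weights inside the selected window")
    # Inside [10, 100) mg the leading digit is simply the tens digit.
    counts = Counter(int(w // 10) for w in kept)
    n = len(kept)
    h = -sum(c / n * math.log2(c / n) for c in counts.values())
    return counts, h

# Invented example weights in mg; 4.2 and 120.5 lie outside the window and are dropped.
counts, h = first_digit_entropy([4.2, 12.7, 15.1, 18.0, 23.4, 31.9, 57.0, 120.5])
print(dict(sorted(counts.items())), round(h, 4))  # {1: 3, 2: 1, 3: 1, 5: 1} 1.7925
```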

Table 2.

Probabilities of fragments of marble.

From a fit of Equation (5) (red curve in Figure 3), the value p = 2.003 (25) has been determined. Assuming the distribution follows this function exactly, the statistical weights and the first-digit probabilities can be obtained from

$$W(a,b) = \frac{a^{-1.003} - b^{-1.003}}{1 - 10^{-1.003}}$$

where a and b are the integers defining the z-intervals.
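The text does not state how the fit of Equation (5) was performed. As one possible approach, and purely as an assumption, the exponent p can be estimated by an unweighted least-squares fit of W(z) to the observed relative frequencies. The sketch uses a golden-section search over an assumed bracket 0.5 ≤ p ≤ 4, and the function names are hypothetical. A real analysis might weight the residuals or fit the counts directly instead.

```python
import math

def w(z, p):
    """W(z) for a density D(x) ~ x**(-p), normalised over [1, 10)."""
    if math.isclose(p, 1.0):
        return math.log10((z + 1) / z)
    return (z ** (1 - p) - (z + 1) ** (1 - p)) / (1 - 10 ** (1 - p))

def squared_error(p, freqs):
    """Sum of squared differences between W(z) and observed frequencies, z = 1..9."""
    return sum((w(z, p) - f) ** 2 for z, f in zip(range(1, 10), freqs))

def fit_exponent(freqs, lo=0.5, hi=4.0, tol=1e-8):
    """Golden-section search for the p that minimises squared_error on [lo, hi]."""
    g = (math.sqrt(5) - 1) / 2  # inverse golden ratio, about 0.618
    a, b = lo, hi
    while b - a > tol:
        c = b - g * (b - a)
        d = a + g * (b - a)
        if squared_error(c, freqs) < squared_error(d, freqs):
            b = d  # the minimum lies in [a, d]
        else:
            a = c  # the minimum lies in [c, b]
    return (a + b) / 2

# Self-check: frequencies generated with p = 2.003 are fitted back to that exponent.
synthetic = [w(z, 2.003) for z in range(1, 10)]
print(round(fit_exponent(synthetic), 4))
```

Golden-section search assumes the error has a single minimum inside the bracket. This holds for the self-check, but it should be verified for noisy measured frequencies.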

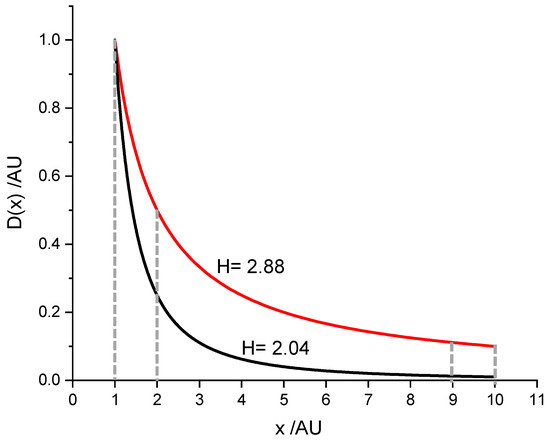

Figure 3.

Fragments of six samples of marble. Fit of the function W(z) to a bar graph giving the probabilities of first digits of the weights of 1052 fragments between 10 and 99 mg. From the probabilities of occurrence of the first digits, a value of the entropy H = 2.0370 bits per digit has been found. The function fitted corresponds to a density function with p = 2.003 (25).

In this case, a 1 (a = 1, b = 2) is expected to occur as a leading digit 45.27 times as often as a 9 (a = 9, b = 10). Owing to statistical fluctuations, the observed ratio differs (58.8). The agreement is closer, e.g., for the ratio of 1s to 7s (calculated: 29.8; observed: 30.9).
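The expected ratio of leading 1s to leading 9s follows from the closed form $W(a,b) = (a^{1-p} - b^{1-p})/(1 - 10^{1-p})$, in which the normalization cancels. This sketch uses a hypothetical function name and evaluates the ratio for the exponents reported in this paper. Because the published p values are rounded, the second decimal of the printed ratios can differ slightly from the quoted ones.

```python
import math

def ones_to_nines(p):
    """W(1, 2) / W(9, 10) for a density D(x) ~ x**(-p)."""
    if math.isclose(p, 1.0):
        return math.log10(2) / math.log10(10 / 9)
    return (1 - 2 ** (1 - p)) / (9 ** (1 - p) - 10 ** (1 - p))

exponents = {
    "NB Law": 1.0,
    "marble": 2.003,
    "calcite": 1.966,
    "granite": 1.748,
    "Venus craters": 1.238,
}
for label, p in exponents.items():
    print(f"{label:14s} p = {p:5.3f}  W(1)/W(9) = {ones_to_nines(p):6.2f}")
```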

Why Is the Number 1 So Strong in the Majority of Cases?

It is surprising that, for the weights of the fragments, the digit 1 occurs as a leading digit even more often than expected from the NB Law. To explain this, one has to determine the probability that the weight of a fragment has a certain first digit.

Table 3 shows the first digits obtained when the sample is divided into the number of equal-sized fragments given in the left column. For example, if a mass of 81,475 g (an arbitrarily chosen weight) is cut into 815 pieces of equal size, the weight of each piece starts with a 9. This holds up to 905 fragments. Dividing the sample into smaller parts (between 906 and 1018 fragments) results in an 8 in the first position. For between 4074 and 8147 fragments of equal size, the leading digit is a 1. Although the sample will hardly ever break into pieces of equal size, it is highly probable that the weights of the majority of the fragments start with a 1, whereas a 9 will be rare. Table 4 gives the probabilities of occurrence of the first digits.
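The thought experiment behind Tables 3 to 5 can be reproduced with integer arithmetic. In this sketch (hypothetical function name), the mass is an integer number of grams. The number of pieces n runs from 1 to the mass itself, so every piece weighs at least 1 g. For a quotient of at least 1, the leading digit of mass/n equals that of the integer part mass // n, which avoids floating-point rounding at digit boundaries.

```python
import math
from collections import Counter

def equal_piece_digits(mass, max_pieces=None):
    """Count the leading digits of mass / n for n = 1..max_pieces equal pieces."""
    max_pieces = mass if max_pieces is None else max_pieces
    if max_pieces > mass:
        raise ValueError("pieces lighter than 1 unit are not handled here")
    counts = Counter()
    for n in range(1, max_pieces + 1):
        counts[int(str(mass // n)[0])] += 1  # integer part keeps the leading digit
    return counts

counts = equal_piece_digits(81475)
total = sum(counts.values())
probs = [counts[d] / total for d in range(1, 10)]
entropy = -sum(q * math.log2(q) for q in probs if q > 0)
print(counts[1], counts[9], round(counts[1] / counts[9], 2), round(entropy, 4))
```

With n running up to the full mass, this sketch counts 1006 leading 9s, matching the figure quoted with Table 5. It counts 45,264 leading 1s, one fewer than the 45,265 quoted in the text. The cause of this one-count difference is not clear from the text, and it does not change the ratio of about 45.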

Table 3.

Number of fragments of a mass of 81,475 g with identical first digits.

Table 4.

Probabilities of occurrence of first digits.

Table 5 shows that, in this example, the ratio of 1s to 9s is 45265/1006 = 44.995. Starting from samples with different weights gives quite similar results.

Table 5.

Ratio 1 vs. 9 and entropy H.

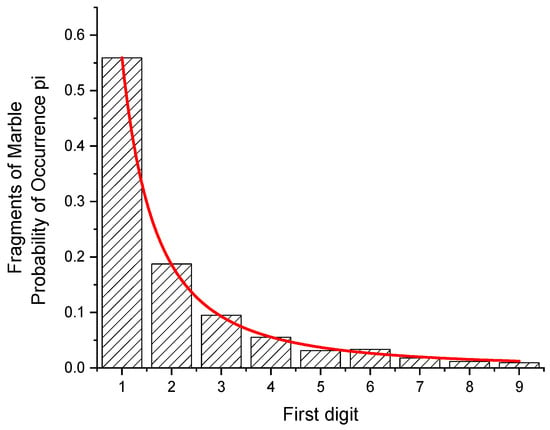

Figure 4 shows how the probabilities are distributed among the z-intervals. A fit (Equation (5); Figure 4) gives p = 2.0 for the exponent of the density function. For this value, the ratio of 1s to 9s occurring as a first digit should be exactly 45. For the experimental data, the fitted functions give ratios of 42.12 (calcite) and 27.62 (granite) (Section 3.2 and Section 3.3).

Figure 4.

Probability of first digits when the sample is hypothetically divided into fragments of equal size, as shown in Table 3. Fitting the probability function W(z) gives a density-function exponent of p = 2.00003 (4).

Table 3 also shows a kind of periodicity in the occurrence of a given first digit as the sample is divided into more and more pieces. Reading the table from top to bottom, the number of divisions yielding a particular digit increases by approximately a factor of 10 from one decade of piece weight to the next.
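The approximate tenfold periodicity becomes visible when the divisions of the 81,475 g example are grouped by the number of integer digits of the piece weight, giving one group per decade of weight. This sketch is illustrative. It assumes pieces of at least 1 g and takes the leading digit from the integer part of the weight, and the grouping key is a choice made here.

```python
from collections import defaultdict

MASS = 81475  # grams, the example mass used in Table 3

# For each decade of piece weight (keyed by its number of integer digits),
# count how many divisions n give each leading digit.
per_decade = defaultdict(lambda: [0] * 10)
for n in range(1, MASS + 1):
    weight = str(MASS // n)  # integer part keeps the leading digit (weight >= 1 g)
    per_decade[len(weight)][int(weight[0])] += 1

for ndigits in sorted(per_decade, reverse=True):
    row = per_decade[ndigits]
    print(f"weights with {ndigits} integer digit(s): 1s = {row[1]:6d}, 9s = {row[9]:4d}")
```

From the heaviest to the lightest pieces, the counts of leading 1s are 4, 41, 407, 4074 and 40738, and those of leading 9s are 0, 1, 9, 91 and 905. Each step is roughly a factor of 10.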

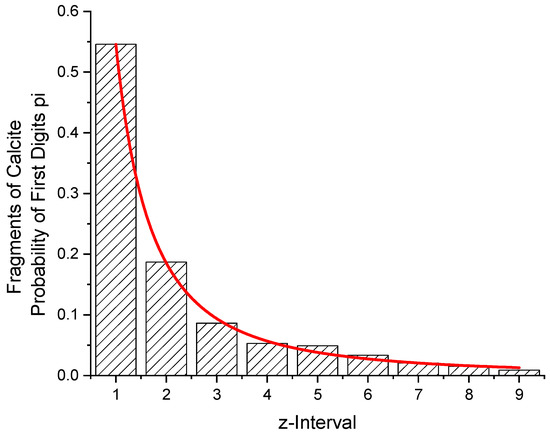

3.2. Fragments of Calcite

From two samples of calcite, 775 fragments between 10 and 99 mg were obtained. Table 6 and Figure 5 give the distribution of the first digits of their weights. The entropy amounts to H = 2.1064 bits per digit, and the exponent of the density function is p = 1.966 (36).

Table 6.

First digits´ entropy of samples of calcite.

Figure 5.

Fit of the function W(z) to a bar graph giving the probabilities of first digits of the weight of 775 fragments of calcite between 10 and 99 mg. p = 1.966 (36). From the function, the probabilities for W(z = 1) and W(z = 9) have been calculated. Their ratio amounts to 42.12.

The ratio of W(z = 1) to W(z = 9), calculated from the fitted function, is 42.12, whereas the counted numbers of fragments give a ratio of 423/7 = 60.43.

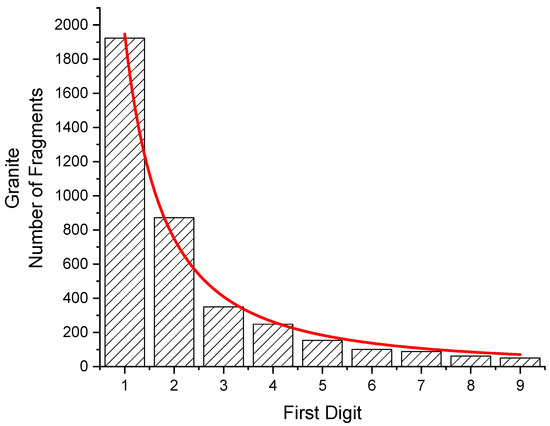

3.3. Fragments of Granite

Samples of granite (13 square discs, 34 × 34 × 9.5 mm³, 28 g each, plus 3 slightly larger samples) were crushed in a hydraulic press. The weights of 3849 fragments between 10 mg and 99 mg were measured. The distribution is given in Figure 6 and in Table 7. From it, a value of H = 2.1817 bits per digit has been found.

Figure 6.

Fit of the function W(z) to a bar graph giving the probabilities of first digits of the weights of 3849 fragments of granite between 10 and 99 mg (obtained from 15 samples). p = 1.748 (81). From the function, the probabilities W(z = 1) and W(z = 9) have been calculated. Their ratio is 27.62. From the counted numbers of fragments, the ratio is 37.71.

Table 7.

First digits´ entropy of samples of granite.

From a fit of Equation (5) (red curve in Figure 6), the value p = 1.748 (81) has been determined. Assuming the distribution follows this function exactly, the statistical weights and the first-digit probabilities can be obtained from

$$W(a,b) = \frac{a^{-0.748} - b^{-0.748}}{1 - 10^{-0.748}}$$

From this, a 1 is expected to occur as a leading digit 27.62 times as often as a 9. The counted numbers of fragments give a larger ratio (1923/51 = 37.71).

The first-digit distribution would follow approximately the same power law if fragments were selected in the comparable tenfold range from $1.0 \times 10^{-4}$ to $9.9 \times 10^{-4}$ ounces.
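A short derivation, given here as a sketch, shows why a change of unit leaves the first-digit distribution unchanged. It assumes that the weights follow $D(x) \propto x^{-p}$ with p ≠ 1, and that the selected range covers exactly one decade $[10^k, 10^{k+1})$ in the new unit. A change of unit x = c y only rescales the density, and the factor $10^{k(1-p)}$ cancels between numerator and denominator:

$$
\tilde D(y) = c\,D(c\,y) \propto y^{-p},
\qquad
W_k(a,b) = \frac{\int_{a\,10^{k}}^{b\,10^{k}} y^{-p}\,dy}{\int_{10^{k}}^{10^{k+1}} y^{-p}\,dy}
= \frac{10^{k(1-p)}\left(a^{1-p}-b^{1-p}\right)}{10^{k(1-p)}\left(1-10^{1-p}\right)}
= \frac{a^{1-p}-b^{1-p}}{1-10^{1-p}} .
$$

The result depends only on a, b and p, not on the unit factor c or the decade index k.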

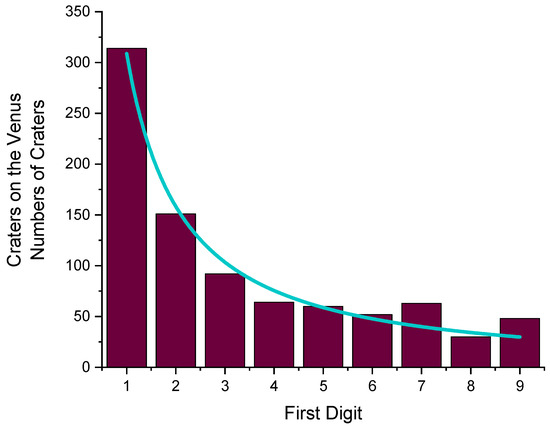

5. Craters on Venus

The first digits of the diameters of 874 craters on Venus [18] give an entropy of H = 2.764 bits per digit (Table 9). Figure 8 shows the distribution. According to the fitted function (p = 1.238 (73)), a 1 should be 10.36 times as frequent as a 9.

Table 9.

First digits´ distribution of the diameters of 874 craters on Venus.

Figure 8.

Distribution of the first digits of the diameters of 874 craters on Venus. H = 2.764 bits per digit. From a fit (blue curve), p = 1.238 (73) has been derived.

6. Results

For most first-digit distributions in listings encountered in daily life, the entropy is around H = 2.9 bits per digit, while considerably lower values have been found in some cases (diameters of craters on Venus, weights of mineral fragments). Table 10 gives the results.

Table 10.

Values for first digit entropy for different distributions.

The largest deviation of the entropy from the NB distribution was observed for the weight of fragments of some minerals. Whether this is due to material constants or whether it can be attributed to the experimental conditions has to be left to future investigations.

Funding

This research received no external funding.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. First Digits’ Distribution of Exponentially Growing Objects

Suppose an object starts growing from size S = 1 at t = 0 according to the function

S = exp [0.057565 t].

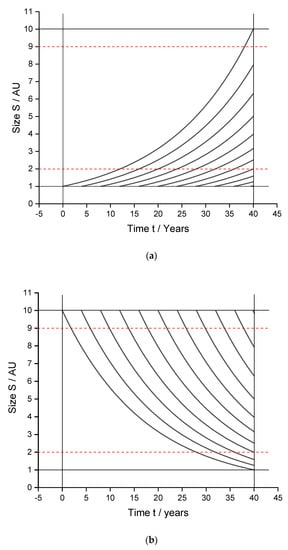

The number 0.057565 (≈ ln 10/40) has been chosen such that the first object reaches size S = 10 after 40 years. Figure A1 shows the sizes of objects starting to grow at different times: the first at t = 0, the others following at intervals of four years. After 40 years, one finds more objects whose size starts with a 1 than with a 9.

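This can be shown as follows. From the functions n = 0.25 t + 1 (n = number of objects; t = time in years) and S = exp [0.057565 t], one obtains dn = 0.25 dt and dS = 0.057565 exp [0.057565 t] dt. From

$$\frac{dn}{dS} = \frac{0.25}{0.057565\,\exp[0.057565\,t]} = \frac{0.25}{0.057565\,S}$$

one gets the number n of objects within a certain size range between a and b:

$$n(a,b) = \int_{a}^{b} \frac{0.25}{0.057565\,S}\,dS = \frac{0.25}{0.057565}\,\ln\frac{b}{a}$$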

After 40 years, in the limiting case of an infinite number of objects, 30.103 % of them will have a size between 1 and 2 units, but only 4.576 % a size between 9 and 10 units. Their ratio of 6.58 corresponds to the NB Law (Figure 1).
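The limiting case can also be checked numerically. Beyond the text, this sketch assumes a large number of objects started at evenly spaced times within the 40 years; the text uses one object every four years. It uses the exact rate ln 10/40 ≈ 0.057565 per year and counts the leading digits of all sizes at t = 40 years.

```python
import math

RATE = math.log(10) / 40  # per year; rounds to the 0.057565 used in the text
T_OBS = 40.0              # observation time in years

def leading_digit(x):
    """First significant digit of a positive number."""
    m = x / 10 ** math.floor(math.log10(x))
    if m >= 10:  # guard against rounding near powers of ten
        m /= 10
    elif m < 1:
        m *= 10
    return int(m)

n_objects = 100_000
# Start times spread evenly over [0, 40) years (midpoints of equal sub-intervals).
starts = [T_OBS * (j + 0.5) / n_objects for j in range(n_objects)]
sizes = [math.exp(RATE * (T_OBS - t0)) for t0 in starts]  # all between 1 and 10

counts = [0] * 10
for s in sizes:
    counts[leading_digit(s)] += 1
share_1 = counts[1] / n_objects
share_9 = counts[9] / n_objects
print(f"{100 * share_1:.3f} %  {100 * share_9:.3f} %  ratio {share_1 / share_9:.2f}")
```

With many objects, the shares approach log10 2 and log10(10/9), i.e., about 30.103 % and 4.576 %. With only the eleven objects of Figure A1, the counts are much coarser.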

Figure A1.

Size of a group of objects as a function of time when (a) growing or (b) decaying exponentially.

References

1. Available online: https://en.wikipedia.org/wiki/Entropy_(information_theory) (accessed on 20 June 2022).

2. Clausius, J.E.C. Available online: https://www.britannica.com/biography/Rudolf-Clausius (accessed on 17 August 2022).

3. Available online: https://en.wikipedia.org/wiki/Boltzmann%27s_entropy_formula (accessed on 1 September 2022).

4. Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423.

5. Shannon, C.E. Prediction and Entropy of Printed English. Bell Syst. Tech. J. 1951, 30, 50–64.

6. Kwiatkowski, S. Entropy Is a Measure of Uncertainty. Available online: https://towardsdatascience.com/entropy-is-a-measure-of-uncertainty-e2c000301c2c (accessed on 20 June 2022).

7. Available online: https://www.tf.uni-kiel.de/matwis/amat/mw1_ge/kap_5/advanced/t5_3_2.html (accessed on 13 June 2022).

8. Schöning, U. Algorithmik; Springer Spektrum: Berlin/Heidelberg, Germany, 2011; ISBN 9783827427991.

9. Schöning, U. Mathe-Toolbox, 3rd revised ed.; Lehmanns Media: Berlin/Heidelberg, Germany, 2011.

10. Newcomb, S. Note on the Frequency of Use of the Different Digits in Natural Numbers. Am. J. Math. 1881, 4, 39–40.

11. Benford, F. The Law of Anomalous Numbers. Proc. Am. Philos. Soc. 1938, 78, 551–572.

12. Available online: https://en.wikipedia.org/wiki/Benford%27s_law (accessed on 21 July 2022).

13. Hill, T.P. A Statistical Derivation of the Significant-Digit Law. Statist. Sci. 1995, 10, 354–363.

14. Stylometry and Numerals Usage: Benford’s Law and Beyond. Available online: https://encyclopedia.pub/entry/17716 (accessed on 17 August 2022).

15. Biau, D. The First-Digit Frequencies in Data of Turbulent Flows. Phys. A Stat. Mech. Its Appl. 2015, 440, 147–154.

16. Kreiner, W.A. On the Newcomb-Benford Law. Z. Nat. 2003, 58a, 618–622.

17. Posch, P.N.; Kreiner, W.A. Analysing digits for portfolio formation and index tracking. J. Asset Manag. 2006, 7, 69–80.

- Available online: https://en.wikipedia.org/wiki/List_of_craters_on_Venus (accessed on 20 June 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).