Abstract

This research investigates how hybrid review systems that integrate human-generated reviews and AI-generated summaries shape consumer trust and decision confidence. Across three controlled experiments conducted in simulated e-commerce environments, we examine when and how hybrid reviews enhance consumer evaluations. Study 1 demonstrates that hybrid reviews, which combine the emotional authenticity of human input with the analytical objectivity of AI, elicit greater levels of review trust and decision confidence than single-source reviews. Study 2 manipulates presentation order and shows that decision confidence increases when human reviews are presented before AI summaries, because this sequencing facilitates more effective cognitive integration. Finally, Study 3 shows that AI literacy strengthens the positive effect of perceived diagnosticity on confidence, whereas information overload weakens it. By explicitly testing these processes across three experiments, this research clarifies the mechanisms through which hybrid reviews operate, identifying authenticity and objectivity as dual mediators, and sequencing, literacy, and cognitive load as critical contextual moderators. This research advances current theories on human–AI complementarity, information diagnosticity, and dual-process cognition by demonstrating that emotional and analytical cues can jointly foster trust in AI-mediated communications. This integrative evidence contributes to a nuanced understanding of how hybrid intelligence systems shape consumer decision-making within digital marketplaces.

1. Introduction

Online reviews have become a dominant form of electronic word-of-mouth (eWOM), profoundly shaping consumer decision-making in digital marketplaces [1,2]. However, review credibility has become more uncertain as platforms increasingly incentivize consumers to post short reviews, often by offering discounts, loyalty points, or small rewards. As a result, many consumers have begun to doubt whether photo-based or text reviews genuinely reflect authentic experiences or are simply responses to these incentives [3]. At the same time, the explosion of review volume has intensified information overload, leading users to rely on aggregated metrics or algorithmic summaries rather than detailed human narratives [4]. Consequently, the traditional assumption that user-generated reviews inherently signal trustworthiness no longer holds consistently.

Recent advances in generative artificial intelligence (AI) have further transformed the creation and presentation of reviews. Large language models, such as ChatGPT and Gemini, can summarize thousands of reviews into concise, fact-based overviews and even generate synthetic AI reviews. These AI reviews promise efficiency and objectivity [5]; however, they also raise concerns about authenticity, transparency, and emotional depth [6]. Thus, rather than eliminating human reviews, many platforms now integrate human-generated reviews with AI-generated summaries; for example, user photos and comments are accompanied by algorithmic summaries or “key insights” sections. This hybrid review environment, in which human and AI reviews coexist, represents a new frontier for understanding how consumers form trust and develop confidence in online decisions.

Although research on AI communication has been rapidly expanding, most studies have adopted a competitive framing that contrasts human and machine reviewers [7]. These works implicitly assume that AI and human reviews are mutual substitutes, and that one will outperform or undermine the other. Yet, in real-world commerce, consumers increasingly encounter both simultaneously. The potential complementarity between human and AI reviews, in which each fulfills different psychological functions, remains underexplored. Moreover, the cognitive processes underlying how such hybrid review systems affect trust and decision confidence have yet to be clearly identified.

To address these gaps, this research proposes that human-generated reviews and AI-generated summaries jointly influence consumer trust and decision confidence through the perceptual mechanisms of perceived authenticity and perceived objectivity. Drawing on human–AI complementarity theory [8], we argue that human reviews provide emotional richness and experiential cues, while AI reviews offer structured and balanced information. Together, they form a more diagnostic and trustworthy information environment. From an information diagnosticity perspective [2], the coexistence of authentic and concise cues can enhance consumer confidence in reviews’ informational quality. Finally, grounded in dual-process and cognitive load theory [9], we suggest that the presentation sequence of information and perceived information overload can affect how consumers process hybrid reviews and form trust-based judgments.

Based on this theoretical integration, this study addresses three central research questions: (1) How does a hybrid (human + AI) review format affect consumer trust and decision confidence compared with a single-source review format? (2) Do perceived authenticity and objectivity jointly mediate the effect of review type on trust and confidence? And (3) How do individual and contextual factors, specifically AI literacy and the presentation sequence of information, moderate these relationships?

To examine these questions, we conduct three controlled online experiments across distinct service contexts: food delivery, travel bookings, and home services. These experiments test the main effects of hybrid reviews (Study 1), the moderating role of presentation sequence (Study 2), and the effects of AI literacy and information overload (Study 3).

This research makes several contributions to the literature. First, it reframes the study of algorithmic reviews as one of coexistence rather than competition, proposing a complementary human–AI perspective that better reflects contemporary review environments. Second, it identifies authenticity and objectivity as dual mediators, advancing theory on how consumers form trust in mixed human–machine information systems. Third, it introduces AI literacy and presentation design as critical boundary conditions, clarifying when and how hybrid review systems enhance consumer decision confidence. Together, these insights extend the existing research on eWOM, AI communication, and trust formation in digital marketplaces, providing theoretical and managerial implications for the design of transparent and credible review systems.

2. Theoretical Framework and Hypotheses Development

This study develops an integrated theoretical framework that explains how consumers form trust and decision confidence when exposed to hybrid review environments that combine human-generated reviews and AI-generated summaries. Drawing on human–AI complementarity theory, the information diagnosticity perspective, and the dual-process and cognitive load theory, the framework posits that human reviews provide emotionally rich and experiential cues that enhance perceived authenticity, whereas AI-generated summaries offer structured and balanced information that contributes to perceived objectivity. In this study, AI-generated summaries refer specifically to data-driven algorithmic summaries that aggregate existing customer feedback, rather than synthetic or fabricated narratives. This operationalization reflects the formats currently utilized by commercial review platforms [10].

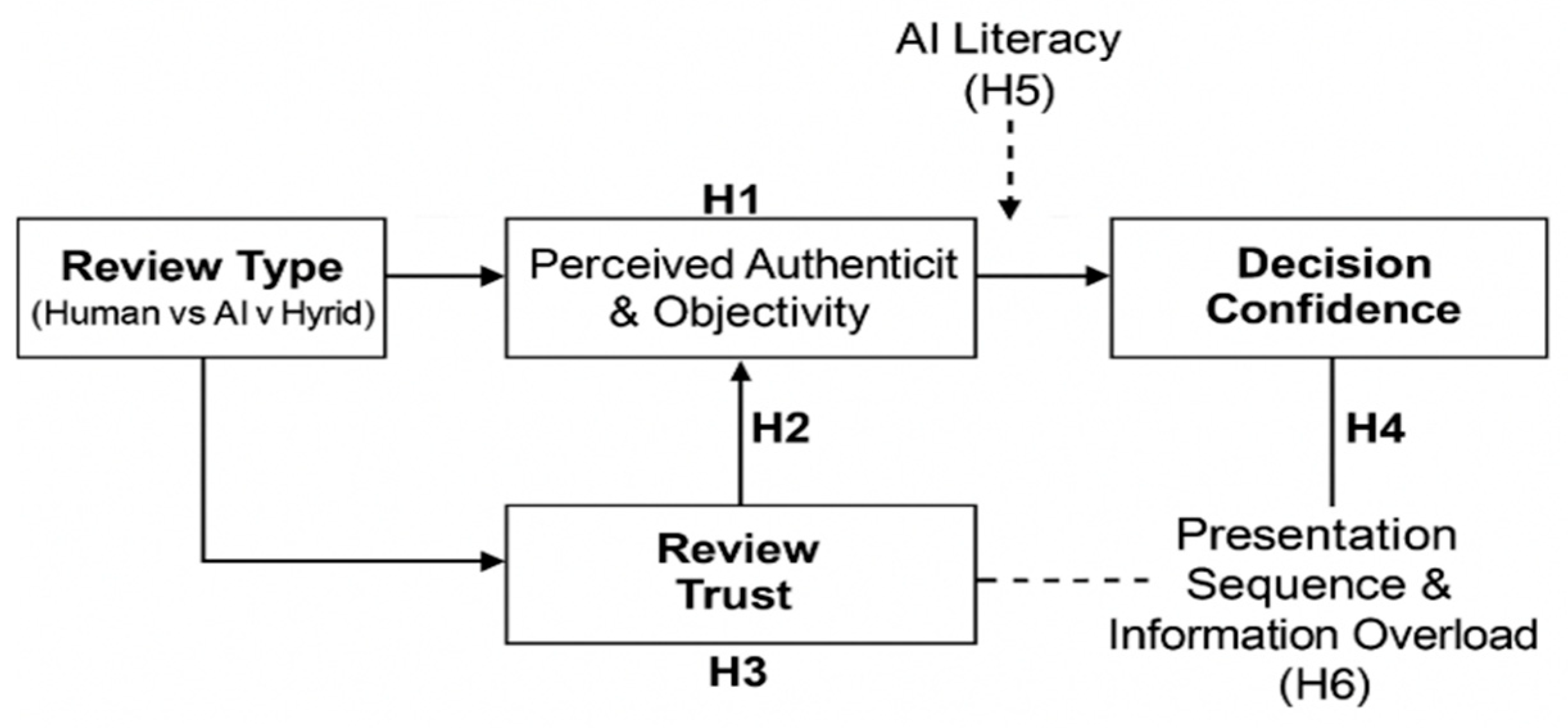

Together, these dual cues improve the diagnosticity of review information, leading to higher levels of review trust and decision confidence. To maintain conceptual clarity, it is important to distinguish between the theoretical and operational uses of diagnosticity within this research. Conceptually, diagnosticity refers to the extent to which information enables accurate and confident judgments by integrating authenticity- and objectivity-based cues [11]. However, in Study 3, diagnosticity is operationalized as participants’ perceived usefulness and decision relevance of the review information. Clarifying this distinction ensures that the overarching construct and its empirical measurement are not conflated throughout the analyses. Furthermore, the effects of review type are contingent on individual and contextual moderators, such as AI literacy, presentation sequence, and perceived information overload. These factors shape how consumers cognitively process hybrid reviews. Figure 1 presents the proposed conceptual model.

Figure 1. Conceptual Model.

2.1. Human–AI Complementarity Theory

The concept of human–AI complementarity emphasizes that humans and AI systems can jointly create superior outcomes by leveraging their distinct yet synergistic capabilities rather than functioning as substitutes for one another. While humans possess affective sensitivity, contextual reasoning, and social intuition, AI systems excel in analytical precision, consistency, and scalability [12]. In service and consumption contexts, this complementarity enables the co-creation of value, with human judgment adding emotional depth and contextual nuance, and AI contributing efficiency and objectivity [5,13].

Recent studies have further highlighted that consumer acceptance of AI is dependent not only on its analytical capabilities but also on how effectively it complements, rather than replaces, human input [3,6]. When human and AI agents collaborate to deliver information or recommendations, consumers may perceive the resulting output as more comprehensive, balanced, and trustworthy. Integrating emotional and analytical inputs can enhance the perceived quality of information, thereby increasing consumers’ decision-making confidence and trust in hybrid information sources.

In the context of online reviews, human-generated reviews typically convey subjective, experiential cues, such as emotions, empathy, and authenticity, while AI-generated summaries contribute objective, structured, and balanced overviews of product or service attributes. Drawing on human–AI complementarity theory, this study argues that combining these two information sources creates a richer and more diagnostic review environment. Consumers exposed to hybrid reviews are expected to perceive them as more authentic and objective, which, in turn, strengthens their trust in the review and their confidence in subsequent decisions. Thus, hypothesis H1 is proposed:

H1. Hybrid reviews (human and AI-generated) lead to higher levels of review trust and decision confidence than single-source reviews (human-only or AI-only).

2.2. Information Diagnosticity Perspective

The information diagnosticity perspective explains how consumers evaluate the usefulness and credibility of information for making judgments and decisions [2,3]. Diagnostic information can help individuals reduce uncertainty and increase confidence in their evaluations. In the online review context, diagnosticity refers to the degree to which consumers perceive reviews as authentic, balanced, and relevant for assessing products or services [1,4]. When authenticity- and objectivity-related cues coexist, consumers are exposed to richer sets of informational cues that enhance their overall diagnostic capabilities. Thus, high diagnosticity increases review trust because consumers perceive information as credible and comprehensive [3].

From this perspective, perceived authenticity and perceived objectivity serve as dual pathways linking review type to trust and decision confidence. Authentic cues build affective trust by signaling honesty and personal experience, while objective cues develop cognitive trust by conveying analytical rigor and neutrality. Drawing on dual-process theories of cognition, these two cues correspond to distinct but complementary processing systems: authenticity primarily operates through affective and heuristic processing, whereas objectivity supports deliberative and analytic evaluation [9].

Importantly, these cues are not independent or substitutable. Authenticity without objectivity may generate emotional reassurance but fail to support diagnostic judgment, whereas objectivity without authenticity may enhance perceived accuracy while lacking relational credibility. Trust and decision confidence therefore emerge most strongly when affective authenticity and analytic objectivity are jointly activated, creating a synergistic diagnostic signal rather than isolated effects. Thus, hypotheses H2 and H3 are proposed:

H2. Perceived authenticity and perceived objectivity mediate the effect of review type on review trust.

H3. Review trust mediates the effect of perceived authenticity and perceived objectivity on decision confidence.

2.3. Dual-Process and Cognitive Load Theory

According to the dual-process theory, individuals process information through two cognitive systems: a fast, intuitive, affect-driven system, and a slow, deliberate, analytical system [9]. When cognitive resources are limited or information is presented in an excessively complex format, consumers tend to rely more on heuristic cues, such as perceived authenticity or source familiarity, to form their judgments. Conversely, when cognitive capacity is available, they engage in systematic processing, deeply evaluating diagnostic cues such as balance, structure, and objectivity [7].

Within hybrid review environments, heuristic and systematic cues are simultaneously available. Therefore, the order and volume of information presented are critical in shaping how consumers integrate these cues. When AI-generated summaries are presented first, they may establish an analytical framework that prompts more systematic evaluation; however, when human reviews appear first, emotional or social cues may dominate, anchoring perceptions of authenticity. Thus, the presentation sequence moderates the pathway from perceived authenticity and objectivity to decision-related confidence.

Cognitive load theory [14] further suggests that excessive information volume, commonly experienced as information overload, can deplete cognitive resources and impair systematic processing. In such situations, consumers may rely on simple judgments and trust rather than engaging in thorough analysis [4]. Accordingly, when information overload is significant, the positive effects of diagnostic cues on decision confidence likely weaken as consumers shift from rational to intuitive processing modes. Thus, hypotheses H4, H5, and H6 are proposed:

H4. Presentation sequence moderates the effect of perceived authenticity and objectivity on decision confidence.

H5. AI literacy moderates the strength of the relationship between perceived diagnosticity and decision confidence, such that the relationship is stronger among consumers with greater AI literacy.

H6. Information overload attenuates the positive effects of perceived authenticity and objectivity on decision confidence.

3. Study 1: Complementarity Between Human-Generated Reviews and AI-Generated Summaries

3.1. Purpose and Overview

Study 1 presents the first empirical test of our conceptual model and examines how various types of online reviews (i.e., human-written, AI-generated, and hybrid) affect consumers’ perceptions of authenticity, objectivity, review trust, and decision confidence. While much of the existing research treats human and algorithmic reviews as rival sources of persuasion, we instead focus on their potential complementarity. Drawing on human–AI complementarity theory [5,8] and information diagnosticity [2], we propose that human reviews convey emotional and experiential cues that enhance perceived authenticity, whereas AI reviews deliver analytical and balanced content that strengthens perceived objectivity. When presented together, these cues jointly form a more diagnostic information environment that fosters greater trust and confidence. Accordingly, Study 1 tests H1–H3, focusing on the main and mediating effects of review type on review trust and decision-related confidence.

3.2. Participants and Design

Data were collected from 204 adult consumers recruited from a professional online research panel company in South Korea. Among them, 54.2% were female, with a mean age of 31.5 years (SD = 8.7). All participants had purchased services online and voluntarily participated in the study in exchange for a small monetary incentive in accordance with the company’s policy. Participants were randomly assigned to one of the three review-type conditions using Qualtrics’ built-in simple randomization algorithm, which ensured equal assignment probability across conditions. The experiment utilized a single-factor, between-subjects design with three conditions: human-only, AI-only, and hybrid. The independent variable was review type, and the dependent variables were perceived authenticity, perceived objectivity, review trust, and decision confidence. Demographic variables (gender, age, AI familiarity) were collected as controls.
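The simple randomization described above can be illustrated with a minimal sketch. This is not the Qualtrics algorithm itself; the condition labels, the function name `assign_conditions`, and the seed are assumptions introduced purely for illustration.

```python
import random
from collections import Counter

# Hypothetical labels for the three review-type conditions.
CONDITIONS = ("human_only", "ai_only", "hybrid")


def assign_conditions(n_participants, conditions=CONDITIONS, seed=None):
    """Simple randomization: each participant is assigned independently,
    with the same probability for every condition."""
    rng = random.Random(seed)
    return [rng.choice(conditions) for _ in range(n_participants)]


# 204 participants, as in Study 1; the seed only makes the demo repeatable.
assignments = assign_conditions(204, seed=42)
cell_sizes = Counter(assignments)
print(cell_sizes)
```

Note that simple randomization equalizes assignment probability, not cell sizes, so the resulting groups will typically differ slightly in size.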

The final sample size exceeded the minimum requirement for detecting a medium effect in a one-way ANOVA (f = 0.25, α = 0.05, 1 − β = 0.95), as determined by G*Power. In line with institutional policy, online survey studies involving minimal risk are exempt from formal ethical review.

3.3. Stimuli and Procedure

The experimental stimuli were designed to closely resemble a realistic online service booking environment. Participants were instructed to imagine selecting a home service (e.g., a beauty salon or home cleaning) through an e-commerce platform. They viewed a simulated service page containing identical descriptions, prices, and overall ratings across all conditions, with the only variability being the type of customer reviews presented.

In this study, AI-generated summaries were operationalized as data-driven algorithmic summaries that aggregate existing customer ratings and textual feedback. These summaries were explicitly distinguished from synthetic or fabricated narrative reviews that AI systems can autonomously produce. This operationalization reflects the summary-style AI review features commonly utilized by contemporary e-commerce platforms and ensures that participants interpreted the AI content as legitimate aggregated information rather than inauthentic reviews.

In the human-only condition, the page displayed three reviews written in a natural, experiential style emphasizing personal emotions and firsthand narratives. For example, one review stated, “The stylist was kind and made me feel comfortable throughout the session. The atmosphere was very relaxing.” These reviews convey affective and authentic cues consistent with typical human-generated reviews.

In the AI-only condition, the same service page featured three reviews identified as “AI-generated summaries,” which were presented in a neutral, analytical tone that focused on factual and structured information. For example, a representative review read, “The service quality was consistent across visits, with an average satisfaction score of 4.6 out of 5.” Such reviews were explicitly described as being generated by an AI system that aggregated and summarized multiple consumer opinions.

In the hybrid condition, both review types were presented together to simulate a mixed human–AI information environment. Participants first read the human-written reviews, followed by the AI-generated summary, which was labeled as “AI Summary.” This sequence was designed to mirror actual e-commerce interfaces, in which consumers evaluating services often encounter both personal reviews and algorithmic summaries.

All visual components, including layout, imagery, ratings, and typography, were held constant across conditions to ensure that the review type served as the sole manipulated variable. After reviewing the information, participants completed measures assessing perceived authenticity, perceived objectivity, review trust, and decision confidence. They then responded to manipulation-check questions confirming whether they recognized the review type (i.e., human, AI, or hybrid).

The manipulation check results confirmed that participants successfully distinguished between the three review conditions, with over 90% correctly identifying their assigned condition. A one-way ANOVA revealed significant differences in perceived authenticity (F(2, 201) = 11.42, p < 0.001) and perceived objectivity (F(2, 201) = 9.85, p < 0.001). Post hoc comparisons (Tukey HSD) indicated that human-written reviews were rated as more authentic (M = 5.83) than AI-generated ones (M = 4.92, p < 0.001). In contrast, AI-generated summaries were perceived as more objective (M = 5.78) than human-written ones (M = 4.96, p < 0.001). The hybrid condition yielded intermediate scores for both authenticity (M = 5.41) and objectivity (M = 5.52), supporting the theoretical expectation of human–AI complementarity.
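For readers less familiar with the test, the one-way ANOVA reported above can be sketched as follows. With three conditions and 204 participants, the degrees of freedom are 3 − 1 = 2 and 204 − 3 = 201, matching the reported F(2, 201). The ratings below are hypothetical seven-point scores, not the study data, and the function name is an assumption.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]

    # Between-groups sum of squares: group means around the grand mean.
    ss_between = sum(
        len(g) * (gm - grand_mean) ** 2 for g, gm in zip(groups, group_means)
    )
    # Within-groups sum of squares: scores around their own group mean.
    ss_within = sum(
        (x - gm) ** 2 for g, gm in zip(groups, group_means) for x in g
    )

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical authenticity ratings for the three conditions.
human_only = [6, 5, 6, 7, 5]
ai_only = [5, 4, 5, 5, 4]
hybrid = [6, 5, 5, 6, 5]

f_stat, df1, df2 = one_way_anova([human_only, ai_only, hybrid])
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```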

3.4. Measures

All focal constructs were measured using multi-item scales adapted from prior research to align with this study’s online service review context. Unless otherwise noted, all items were assessed using seven-point Likert scales (1 = strongly disagree, 7 = strongly agree).

To capture perceived authenticity, participants evaluated how genuine, believable, and reflective of real consumer experiences the reviews appeared to be, based on three items adapted from Filieri et al. [3] and Park and Lee [4], including “These reviews reflect actual consumer experiences.” These items were designed to represent the affective and experiential richness of human communication, which prior research has identified as a critical determinant of review credibility. Reliability analysis indicated good internal consistency (α = 0.82).

Perceived objectivity was measured to reflect the extent to which participants regarded the reviews as neutral, balanced, and fact-based. Drawing on Mudambi and Schuff’s [2] operationalization of informational neutrality, participants rated items such as “The reviews are objective and unbiased.” These items reflect the analytical and structured nature of algorithmically generated information. The internal reliability was satisfactory (α = 0.84).

To assess review trust, participants were asked to indicate how credible, reliable, and trustworthy they perceived the content to be. Three items adapted from Flavián et al. [7] were used, including “I trust the information provided in these reviews.” These items capture the cognitive evaluation that integrates perceptions of authenticity and objectivity into an overall sense of informational reliability. The Cronbach’s alpha for this scale was 0.87.

Decision confidence was measured to assess how confident and assured participants felt about their hypothetical purchase decision after reading the reviews. Three items were adapted from Chevalier and Mayzlin [1], including “I am confident in my decision after reading these reviews.” This construct represents a downstream evaluative outcome reflecting the perceived diagnosticity of the review environment. The reliability coefficient for this scale was α = 0.85.

In addition to the focal variables, AI familiarity was included as a control measure to account for individual differences in exposure to AI technologies. Participants responded to a single item, “How familiar are you with AI technologies?”, which was measured using a seven-point scale. This variable was later entered as a covariate in the main analyses to rule out alternative explanations linked to technological experience.
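The reliability coefficients reported for the focal scales are Cronbach’s alpha values. As a minimal sketch of how such a coefficient is computed, the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores) is applied below to hypothetical responses from five respondents on a three-item scale; the data and the function name are illustrative assumptions, not the study’s data or analysis script.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k ratings."""
    k = len(item_scores[0])
    # Transpose so that each tuple holds all responses to one item.
    items = list(zip(*item_scores))
    item_variances = [variance(col) for col in items]
    # Variance of each respondent's summed scale score.
    total_variance = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical seven-point responses (rows = respondents, columns = items).
responses = [
    [5, 6, 5],
    [4, 4, 5],
    [6, 7, 6],
    [3, 3, 4],
    [5, 5, 5],
]

alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.2f}")
```

Sample variances (n − 1 denominator) are used for both the items and the total score, so the ratio is unaffected by that choice as long as it is applied consistently.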

3.5. Results

First, the analyses examined how different types of reviews affected consumers’ evaluations of information quality and decision confidence. As predicted in H1, review type significantly affected both review trust and decision confidence. Participants exposed to hybrid reviews combining human-generated reviews and AI-generated summaries reported significantly higher trust in the information (M = 5.82, SD = 0.84) than those viewing human-only (M = 5.34, SD = 0.93, p = 0.014) or AI-only reviews (M = 5.21, SD = 0.91, p = 0.006). A similar pattern emerged for decision confidence, with hybrid reviews (M = 5.87, SD = 0.86) producing higher confidence than human-only (M = 5.39, SD = 0.88, p = 0.021) or AI-only conditions (M = 5.12, SD = 0.94, p = 0.003). These results confirm that consumers perceive hybrid review environments as the most trustworthy and confidence-enhancing. Therefore, H1 was supported.

Next, H2 proposed that perceived authenticity and objectivity would mediate the relationship between review type and trust. Mediation analyses using PROCESS Model 4 [15] with 5000 bootstrapped samples showed that both perceptual factors acted as significant indirect routes. Notably, human-written reviews increased trust primarily through perceived authenticity (indirect effect = 0.21, 95% CI [0.09, 0.37]), while AI-generated summaries did so through perceived objectivity (indirect effect = 0.18, 95% CI [0.06, 0.32]). The hybrid condition activated both mechanisms simultaneously, yielding the strongest total indirect effect (0.39, 95% CI [0.22, 0.57]).

To verify that this effect was statistically stronger than those of the single-source conditions, pairwise bootstrapped contrasts were conducted. The total indirect effect in the hybrid condition was significantly greater than that of the human-only condition (Δindirect = 0.17, 95% CI [0.05, 0.32]) or the AI-only condition (Δindirect = 0.14, 95% CI [0.03, 0.29]). Both confidence intervals excluded zero, confirming that the hybrid format activates authenticity- and objectivity-based pathways more strongly than either single-source review type. This pattern supports the notion that human and AI reviews complementarily enhance informational trustworthiness by jointly activating authenticity and objectivity cues. Thus, H2 was supported.

Finally, H3 predicted that review trust would mediate the joint effects of perceived authenticity and objectivity on consumers’ decision confidence. The sequential mediation analysis using PROCESS Model 6 confirmed this expectation, as both authenticity (β = 0.36, p < 0.001) and objectivity (β = 0.28, p = 0.002) positively predicted review trust, which in turn significantly predicted decision confidence (β = 0.42, p < 0.001). Furthermore, the indirect pathway via review trust was significant (indirect effect = 0.27, 95% CI [0.12, 0.48]). When review trust was included in the model, the direct effects of authenticity and objectivity on decision confidence became nonsignificant, indicating complete mediation. This result demonstrates that confidence in decision-making arises indirectly through trust in the review information rather than directly from perceptions of authenticity or objectivity. Therefore, H3 was supported. Table 1 summarizes the hypothesis testing results of Study 1.

Table 1. Summary of Hypothesis Testing: Study 1.

3.6. Discussion

The findings from Study 1 confirm that hybrid reviews significantly increase consumers’ review trust and decision confidence compared to single-source reviews, thereby supporting H1. Furthermore, perceived authenticity and objectivity function as dual mediators between review type and trust (H2), and review trust fully mediates the effect of authenticity/objectivity on decision confidence (H3). Importantly, these mediating effects are not independent but complementary: authenticity primarily supports affective trust formation, whereas objectivity facilitates analytic evaluation and epistemic confidence.

Consistent with dual-process perspectives, trust and decision confidence emerge most strongly when these affective and analytic processes operate in tandem, rather than in isolation. These results extend the existing literature on informational diagnosticity by illustrating how affective (authenticity) and analytical (objectivity) cues operate together to foster trust and confidence in online review contexts. This aligns with recent evidence that AI systems may be perceived as more transparent and credible in recommendation settings [16], while human-like AI anthropomorphism affects trust dynamics in human–AI collaboration [17].

4. Study 2: The Moderating Role of Presentation Sequence

4.1. Purpose and Overview

Building on the findings of Study 1, Study 2 examines how the presentation sequence of human-generated reviews and AI-generated summaries influences consumers’ evaluations of informational quality and their resulting decision confidence. While Study 1 demonstrated that hybrid reviews elicit greater trust and confidence, Study 2 explores whether the order of exposure—human-first versus AI-first—modulates these perceptions.

Drawing on the dual-process theory and cognitive load perspectives, Study 2 proposes that the review presentation sequence affects how consumers allocate attention and integrate affective and analytical cues. When consumers encounter human reviews first, affective authenticity likely anchors their interpretation of subsequent AI information, enhancing trust and confidence. Conversely, when AI reviews are presented first, they may establish a more analytical framing that heightens perceived objectivity but attenuates emotional engagement, potentially reducing decision confidence.

Study 2 specifically tests H4, which predicts that presentation sequence moderates the effect of perceived authenticity and objectivity on decision confidence. This experiment extends the conceptual model by identifying the temporal dynamics of hybrid review processing, illustrating how the presentation order of hybrid review information shapes consumer judgment in online service contexts. Unlike Study 1, which included a hybrid control condition to establish baseline differences across review systems, Study 2 deliberately focuses on human-first and AI-first sequences to isolate the temporal effects of review ordering. Including a hybrid control condition in this study would have introduced additional structural variation and limited the precision with which sequencing-driven cognitive effects could be identified.

4.2. Participants and Design

Study 2 included 218 adult participants recruited from a professional online research panel company in South Korea. Among the respondents, 52.8% were female, with a mean age of 32.1 years (SD = 9.2). All participants had experience purchasing or reserving services on online platforms and voluntarily participated in exchange for a small monetary incentive in accordance with panel policy.

The experiment utilized a 2 × 2 between-subjects factorial design, manipulating review type (human-only vs. AI-only) and presentation sequence (human-first vs. AI-first). This design enabled the examination of how the temporal order of human-generated reviews and AI-generated summaries affects perceived authenticity, objectivity, review trust, and decision confidence.

Participants were randomly assigned to one of four experimental cells using a computer-based randomization procedure on the Qualtrics platform. In the human-first condition, three consumer-written reviews emphasizing emotional and experiential cues were presented, followed by three AI-generated summaries labeled “AI Summary.” In the AI-first condition, the order was reversed. All other content, including the service description, images, layout, and ratings, was identical across conditions.

A manipulation check confirmed that participants correctly recognized the review source (human vs. AI) and the presentation order. The findings demonstrate that participants in the human-first condition were significantly more likely to identify human reviews as appearing first than those in the AI-first condition (χ²(1) = 27.48, p < 0.001). Moreover, perceived authenticity was rated higher in the human-first condition (M = 5.61) than in the AI-first condition (M = 4.89, t(216) = 3.42, p < 0.001). However, perceived objectivity was higher in the AI-first condition (M = 5.73) than in the human-first condition (M = 5.04, t(216) = 3.17, p < 0.01). These results indicate that the manipulation successfully induced the intended perceptual distinctions.

The sample size exceeded the minimum requirement for detecting a medium-sized interaction effect (f = 0.25, α = 0.05, 1 − β = 0.95), as calculated using G*Power 3.1 [18]. Following institutional policy, this online survey involved minimal risk and was therefore exempt from formal ethical review.
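The power requirement stated above can be approximated in a few lines. The following is a minimal sketch, not the G*Power procedure itself: it assumes a single-degree-of-freedom interaction test and replaces the exact noncentral F distribution with a normal approximation, so the result may differ by a few participants from what dedicated software reports. The function names are illustrative.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(n_total, f=0.25, alpha=0.05):
    """Approximate power of a 1-df F test (normal approximation, an assumption)."""
    z = NormalDist()
    # Noncentrality parameter is lambda = f^2 * N; its square root acts like a z shift.
    delta = sqrt(f ** 2 * n_total)
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Two-sided rejection probability under the alternative.
    return z.cdf(delta - z_crit) + z.cdf(-delta - z_crit)


def min_sample_size(target=0.95, f=0.25, alpha=0.05):
    """Smallest total N whose approximate power reaches the target."""
    n = 8
    while approx_power(n, f=f, alpha=alpha) < target:
        n += 1
    return n


n_required = min_sample_size()
print(n_required, round(approx_power(218), 3))
```

Under these assumptions, the required total sample falls slightly below the 218 participants recruited for Study 2.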

4.3. Stimuli and Procedure

The experimental materials were designed to replicate a realistic online service-booking context, similar to those found on major e-commerce or reservation platforms (e.g., beauty salons or home cleaning services). Participants were instructed to imagine they were booking a service online and to carefully read the product page presented on their screens.

Each participant viewed a mock-up webpage displaying the same service description, image, price, and overall rating (4.6/5). The only variation was in the type and order of customer reviews. In the human-first condition, participants read three human-written reviews, followed by three AI-generated summaries. In the AI-first condition, participants read three AI-generated summaries, followed by three human-written reviews. The review content was identical across conditions; human reviews and AI summaries differed only in linguistic tone and source framing. Human reviews contained affective and experiential language (e.g., “The stylist was friendly and made me feel comfortable”), while AI-generated summaries adopted a structured and analytic tone (e.g., “The average satisfaction score was 4.4 out of 5”).

To enhance realism, each review set was displayed in a scrollable layout, consistent with real-world review interfaces. Additionally, participants could not skip forward until all reviews were displayed for at least ten seconds, ensuring full exposure to both review types. The experimental order was implemented within Qualtrics, and participants’ response times were recorded to confirm sufficient engagement.

After exposure to the stimuli, participants completed a post-task questionnaire to measure the key dependent variables: perceived authenticity, perceived objectivity, review trust, and decision confidence. Each construct was measured using multi-item Likert-type scales adapted from existing literature, as described in Study 1. Participants also completed manipulation-check items to verify that they correctly perceived the sequence of review presentation (human-first vs. AI-first) and recognized review sources.

All materials were translated and back-translated in accordance with standard cross-cultural procedures [19]. A short debriefing statement was provided at the end, explaining that the AI-generated summaries were fictitious and created solely for research purposes.

4.4. Measures

All focal constructs were measured using the same validated scales utilized in Study 1 to ensure conceptual comparability across experiments. Specifically, perceived authenticity, perceived objectivity, review trust, and decision confidence were assessed using multi-item measures identical to those used in Study 1, which were themselves adapted from prior studies [1,2,3,7]. Reliability coefficients for all scales exceeded α = 0.80, indicating satisfactory internal consistency.

As Study 2 examined the moderating role of presentation sequence, two additional constructs were measured to capture the underlying cognitive mechanisms. Specifically, processing fluency was assessed using three items adapted from Lee and Labroo [20], capturing the ease with which participants could interpret and integrate review information (e.g., “It was easy for me to understand the review content”; α = 0.81). Additionally, perceived sequence clarity was measured using two items (e.g., “I could easily recognize which type of review appeared first”), demonstrating acceptable reliability (α = 0.79).

All scale items were presented in Korean, translated, and back-translated according to established cross-cultural translation guidelines [19]. Composite means were computed for each construct after confirming unidimensionality via exploratory factor analysis. To reduce multicollinearity in interaction analyses, continuous variables were mean-centered before computing interaction terms.
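The mean-centering step described above can be illustrated with a small, self-contained sketch. The data are synthetic and noise-free, the 0/1 coding of presentation sequence is an assumption (the coding is not stated here), and the plain normal-equations solver merely stands in for whatever routine PROCESS uses internally.

```python
from statistics import mean


def center(values):
    """Subtract the sample mean so that zero corresponds to the average score."""
    m = mean(values)
    return [v - m for v in values]


def ols(columns, y):
    """Least-squares coefficients (intercept first) via the normal equations."""
    rows = [[1.0, *vals] for vals in zip(*columns)]
    k = len(rows[0])
    # Augmented matrix [X'X | X'y].
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * p for x, p in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


# Synthetic illustration only: these are not data from the study.
authenticity = [3, 4, 5, 6, 7, 3, 4, 5, 6, 7]
human_first = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]  # assumed dummy coding

auth_c = center(authenticity)
interaction = [x * w for x, w in zip(auth_c, human_first)]
confidence = [
    4.0 + 0.1 * x + 0.5 * w + 0.3 * xw
    for x, w, xw in zip(auth_c, human_first, interaction)
]

b0, b1, b2, b3 = ols([auth_c, human_first, interaction], confidence)
print(round(b1, 3), round(b3, 3))
```

Because the predictor is centered before the product term is formed, the coefficient on the dummy variable is interpretable as the group difference at the average level of authenticity rather than at a scale value of zero.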

4.5. Results

To confirm that the presentation sequence manipulation operated as intended, participants were asked to indicate which type of review appeared first (i.e., human-written vs. AI-generated). Recognition accuracy was significantly above chance (χ²(1) = 29.14, p < 0.001), confirming that participants clearly recognized the review order.

Additionally, perceptions of authenticity and objectivity varied across sequence conditions, indicating that the presentation order influenced how participants interpreted the reviews. Specifically, those in the human-first condition perceived the reviews as more authentic (M = 5.62, SD = 1.01) than those in the AI-first condition (M = 4.91, SD = 1.09; t(216) = 3.46, p < 0.001). Conversely, perceived objectivity was rated higher when AI-generated summaries appeared first (M = 5.74, SD = 1.04) than when human reviews appeared first (M = 5.07, SD = 1.08; t(216) = 3.18, p < 0.01). These findings validate the effectiveness of the presentation sequence manipulation.

A moderation analysis using Hayes’ PROCESS Model 1 [14] examined whether the presentation sequence moderated the effects of perceived authenticity and objectivity on decision confidence, with review trust included as a covariate. The overall model was significant (F(4, 213) = 19.62, p < 0.001, R² = 0.27). Furthermore, significant interaction effects emerged between perceived authenticity and presentation sequence (β = 0.18, SE = 0.07, t = 2.57, p = 0.011), and between perceived objectivity and presentation sequence (β = −0.15, SE = 0.06, t = −2.43, p = 0.016).
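The reported overall F statistic and the explained variance are linked by a standard identity, which the short sketch below works through using the degrees of freedom given above. The function name is illustrative.

```python
def r_squared_from_f(f_value, df_model, df_resid):
    """Recover R^2 from an overall F test: R^2 = df1*F / (df1*F + df2)."""
    return (df_model * f_value) / (df_model * f_value + df_resid)


r2 = r_squared_from_f(19.62, 4, 213)
print(round(r2, 3))  # 0.269, which rounds to the reported 0.27
```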

Simple slope analyses revealed that when human reviews appeared first, perceived authenticity strongly predicted decision confidence (β = 0.42, p < 0.001), while objectivity did not (β = 0.08, p = 0.21). In contrast, when AI-generated summaries appeared first, objectivity became a strong predictor of confidence (β = 0.39, p < 0.001), and the effect of authenticity weakened to non-significance (β = 0.12, p = 0.18). These findings support H4, confirming that the presentation order of human reviews and AI-generated summaries moderates how authenticity and objectivity translate into consumer confidence.
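For readers less familiar with simple slope analysis, the general form of a moderated regression with one focal predictor is sketched below. The notation is an assumption introduced for illustration: X is the mean-centered focal predictor (authenticity or objectivity), W is presentation sequence under an assumed 0/1 dummy coding, and C is the review-trust covariate.

```latex
\begin{align}
Y &= b_0 + b_1 X + b_2 W + b_3 (X \times W) + b_4 C + \varepsilon \\
\left.\frac{\partial Y}{\partial X}\right|_{W = w} &= b_1 + b_3 w
\end{align}
```

Under this coding, b_1 is the slope of X in the condition coded 0, b_1 + b_3 is the slope in the condition coded 1, and a significant b_3 indicates that the two slopes differ.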

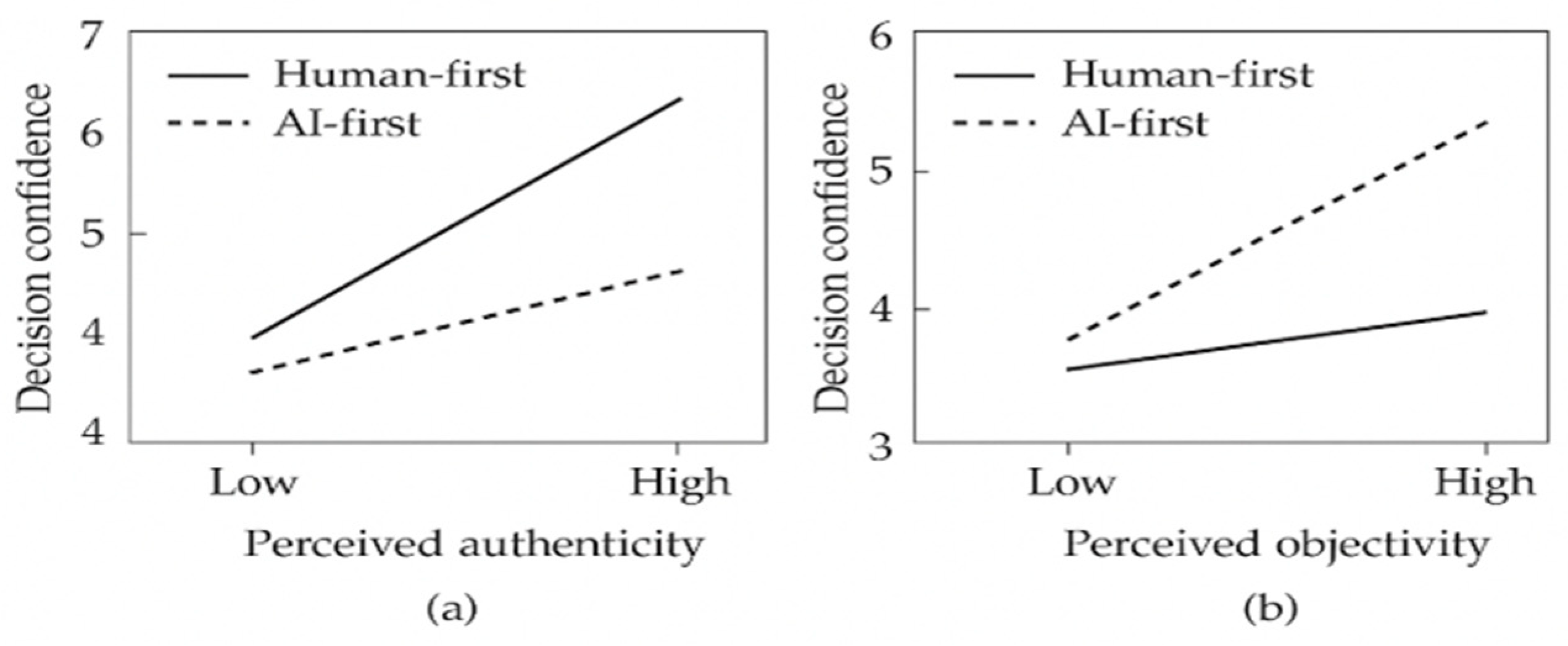

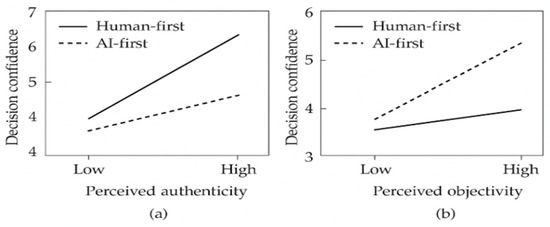

Figure 2 illustrates these moderating effects. Figure 2a shows that when human reviews were presented first, decision confidence increased sharply with higher perceived authenticity, whereas this effect was weaker in the AI-first condition. Conversely, as shown in Figure 2b, objectivity had a stronger positive effect on confidence when AI-generated summaries appeared first, reflecting a shift toward analytical rather than experiential evaluation.

Figure 2.

The moderating effects of presentation sequence on the relationships between (a) perceived authenticity and decision confidence, and (b) perceived objectivity and decision confidence.

4.6. Discussion

Study 2 examined how presentation sequence moderates the effects of perceived authenticity and perceived objectivity on decision confidence. The results demonstrated that when human-generated reviews preceded AI-generated summaries (human-first condition), perceived authenticity exerted a stronger positive effect on decision confidence. However, when AI-generated summaries appeared first, perceived objectivity became the dominant driver of confidence. This asymmetrical pattern suggests that the cognitive primacy of emotional versus analytical cues depends on initial information framing, consistent with dual-process perspectives [9]. Moreover, these findings extend the notion of human–AI complementarity by showing that temporal sequencing can structure the balance between affective and cognitive processing in hybrid information contexts [21]. This finding also aligns with recent studies that emphasize the importance of the exposure order of human versus algorithmic inputs in shaping trust calibration and cognitive load during decision-making [16,17]. Collectively, Study 2 provides evidence that consumers adaptively integrate human and AI information depending on how these sources are temporally structured, highlighting the managerial importance of deliberate design in AI-mediated review platforms. Although the observed interaction effects are moderate in magnitude, their practical significance should not be underestimated, as even modest shifts in decision confidence can accumulate into meaningful behavioral differences at scale on digital platforms.

From a managerial perspective, these findings suggest that review order should be treated as an algorithmic design variable rather than a neutral display choice. Platforms can implement default sequencing rules that present human experiential reviews first for novice users or exploratory browsing contexts, while foregrounding AI-generated summaries for experienced users seeking efficiency. Such sequencing strategies can be dynamically personalized based on user signals such as prior purchase history, interaction depth, or time spent on review pages, thereby aligning review presentation with users’ cognitive processing modes and enhancing decision confidence.
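A default sequencing rule of the kind described above might be sketched as follows. Everything in this example is hypothetical: the signal names, the thresholds, and the use of dwell time as a proxy for exploratory browsing are illustrative assumptions that were not tested in this research.

```python
from dataclasses import dataclass


@dataclass
class UserSignals:
    prior_purchases: int        # hypothetical proxy for user experience
    seconds_on_reviews: float   # hypothetical proxy for exploratory browsing


def review_order(user, purchase_threshold=3, dwell_threshold=60.0):
    """Return the display order of review blocks for one user.

    Thresholds are placeholders; a platform would need to calibrate them.
    """
    experienced = user.prior_purchases >= purchase_threshold
    exploring = user.seconds_on_reviews >= dwell_threshold
    # Experienced users who are not browsing at length see the AI summary first.
    if experienced and not exploring:
        return ["ai_summary", "human_reviews"]
    # Novice users and exploratory sessions default to human reviews first.
    return ["human_reviews", "ai_summary"]


novice = UserSignals(prior_purchases=0, seconds_on_reviews=20.0)
expert = UserSignals(prior_purchases=12, seconds_on_reviews=15.0)
print(review_order(novice), review_order(expert))
```

Keeping the rule as a pure function of observable signals makes it straightforward to evaluate alternative thresholds in an A/B test before adopting any of them.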

5. Study 3: AI Literacy and Information Overload

5.1. Purpose and Overview

Building on the findings of Studies 1 and 2, which established the main and sequencing effects of hybrid reviews, Study 3 investigates two boundary conditions that determine when consumers rely on hybrid review information. Prior research has suggested that users’ cognitive capacity and technological literacy critically shape how they interpret and trust AI-generated information [5,7]. However, few studies have examined how these individual differences interact with perceived informational quality in hybrid human–AI review contexts.

This study focuses on two moderating mechanisms: AI literacy and information overload. AI literacy is proposed to strengthen consumers’ ability to interpret AI-generated cues, thereby amplifying the positive effect of perceived diagnosticity on decision confidence (H5). Conversely, information overload is expected to hinder the cognitive integration of authentic and objective cues, attenuating their effect on confidence (H6). Together, these moderators provide a more nuanced understanding of when human–AI review synergy enhances, or fails to enhance, consumer decision assurance.

5.2. Participants and Design

Data were collected from 232 adult consumers recruited through a professional online research panel company in South Korea. Participants (52.6% female; mean age = 32.1 years, SD = 7.9) all had experience using online booking platforms.

The experiment adopted a 2 × 2 between-subjects design, manipulating AI literacy (high vs. low) and information overload (low vs. high). Random assignment occurred automatically within Qualtrics. AI literacy was manipulated through a brief instructional text. Participants in the high-literacy condition read an educational primer explaining how AI summarization models process multiple reviews, whereas those in the low-literacy condition read a neutral platform policy text of equal length. The information-overload manipulation varied the number and density of reviews, with four concise hybrid reviews (two human + two AI) in the low-load condition and twelve mixed, densely formatted reviews with additional icons in the high-load condition. Following exposure, participants rated their levels of perceived authenticity, objectivity, trust, decision confidence, diagnosticity, AI literacy, and information overload.

Manipulation checks confirmed that both experimental treatments were successful. Participants in the high-literacy condition reported a significantly greater understanding of AI-generated summaries (M = 5.87 vs. 4.26; F(1, 228) = 41.32, p < 0.001). Similarly, those in the high-load condition perceived greater information overload (M = 5.43 vs. 3.92; F(1, 228) = 54.07, p < 0.001). Furthermore, a G*Power 3.1 analysis confirmed that the sample size provided sufficient power (1 − β = 0.95) to detect medium interaction effects (f = 0.25, α = 0.05). According to institutional policy, minimal-risk online survey experiments are exempt from formal ethics review.

5.3. Stimuli and Procedure

The experimental stimuli were designed to reflect a realistic online service booking environment. Participants were asked to imagine they were selecting a beauty or home service (e.g., a spa, salon, or cleaning service) through an e-commerce platform that displayed both human-written and AI-generated summaries. The layout and visual design closely resembled actual online review pages to ensure ecological validity.

Each participant was randomly assigned to one of four experimental conditions formed by crossing AI literacy (high vs. low) with information overload (low vs. high). In the high AI literacy condition, participants were first presented with a brief infographic that described how AI systems summarize multiple customer reviews using sentiment analysis and topic modeling, with an emphasis placed on transparency and interpretability. In the low AI literacy condition, this explanation was omitted, and participants were only informed that the system used “AI to analyze customer opinions.”

To manipulate information overload, participants were shown either three concise reviews (low overload) or twelve mixed-length reviews (high overload). Both sets included a combination of human-generated reviews and AI-generated summaries, maintaining equal proportions of positive and neutral sentiments to control for valence effects. All reviews were pretested to ensure comparable readability and relevance.

After reading the reviews, participants completed a questionnaire using established multi-item scales adapted from prior research to measure perceived diagnosticity, perceived authenticity, objectivity, review trust, and decision confidence. Manipulation-check items verified whether participants perceived the content as human- or AI-generated and whether they considered the page to be information-heavy. Participants also answered questions regarding their familiarity with AI and online review systems, which were used as control variables.

All procedures were conducted online using a professional research panel platform. On average, participants required approximately eight minutes to complete the survey. The stimulus examples used in the study are provided in Appendix A for reference.

5.4. Measures

All main constructs were measured using seven-point Likert-type scales (1 = strongly disagree, 7 = strongly agree) and were adapted from prior research to ensure conceptual equivalence and internal consistency.

Perceived diagnosticity was assessed using three items adapted from Mudambi and Schuff [2] and Filieri et al. [3], capturing the extent to which participants considered the reviews to be helpful, informative, and useful for decision-making (e.g., “These reviews provide enough information to make an informed decision”; α = 0.79). Consistent with our theoretical distinction, this scale reflects the operational form of diagnosticity—participants’ perceived usefulness and the decision relevance of the provided information.

AI literacy, the focal moderator, was measured using four items adapted from Flavián et al. [7] to evaluate individuals’ understanding and perceived competence with AI-driven systems (e.g., “I understand how AI analyzes customer feedback and generates summaries”; α = 0.81). Information overload was measured using four items from Park and Lee [4], reflecting the levels of perceived information saturation and cognitive strain (e.g., “There was too much information to process easily”; α = 0.77).

Review trust [7] (α = 0.84) and decision confidence [1] (α = 0.85) were measured using the same items as in Studies 1 and 2 to maintain comparability across experiments. Manipulation checks confirmed that participants accurately recognized the review types (human, AI, or hybrid) and perceived information load as intended. Over 92% correctly identified their condition, indicating that the manipulations were valid.

5.5. Results

Before presenting the moderation results, it is important to clarify that diagnosticity in the following analyses refers to participants’ perceived diagnosticity, that is, the operationalized assessment of the usefulness and decision relevance of the review information, rather than diagnosticity as a broader conceptual mechanism.

Manipulation checks confirmed that participants accurately perceived both experimental manipulations. Those in the high AI literacy condition reported a greater understanding of AI-generated summaries (M = 5.72) than those in the low literacy condition (M = 4.31), t(198) = 6.84, p < 0.001. Additionally, participants in the high information overload condition perceived the review set as more demanding (M = 5.81) than those in the low overload condition (M = 3.92), t(198) = 9.12, p < 0.001, confirming successful manipulations.

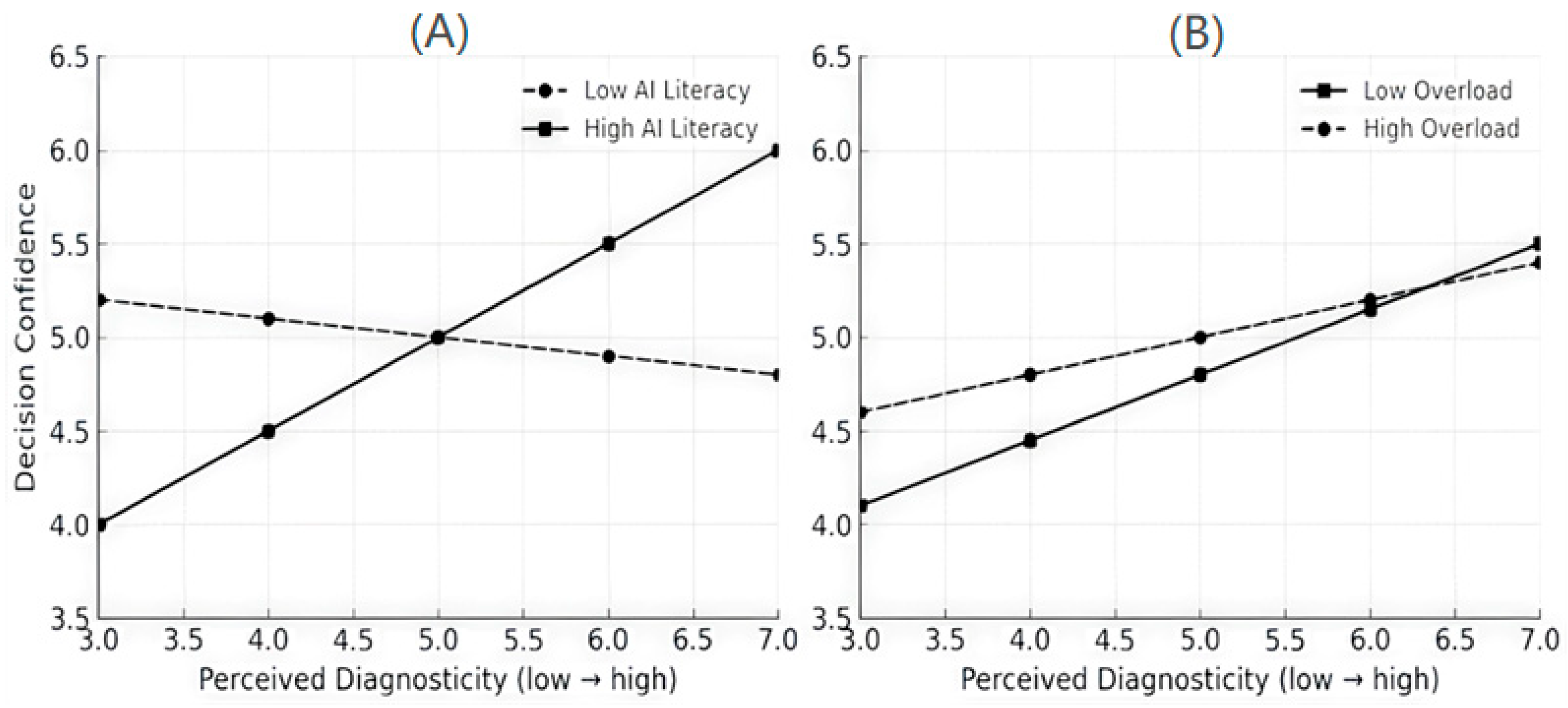

Moderated regression analyses were conducted using PROCESS Model 1, with decision confidence as the dependent variable. Predictors included perceived diagnosticity, AI literacy, information overload, and their interaction terms. For H5, the interaction between perceived diagnosticity and AI literacy was significant (β = 0.19, SE = 0.08, t = 2.41, p = 0.017). Simple slope tests revealed that perceived diagnosticity had a stronger positive effect on decision confidence among individuals with high AI literacy (β = 0.46, t = 5.12, p < 0.001) than among those with low literacy (β = 0.20, t = 1.97, p = 0.051). Thus, H5 was supported, indicating that a better understanding of AI strengthens the confidence consumers derive from diagnostic review information.

For H6, the interaction between perceived diagnosticity and information overload was marginally significant (β = −0.13, SE = 0.07, t = −1.89, p = 0.061). When the information load was low, diagnosticity positively predicted decision confidence (β = 0.41, t = 4.86, p < 0.001). Under high load, however, the relationship was weaker and only marginally significant (β = 0.18, t = 1.82, p = 0.071). Therefore, H6 was partially supported, suggesting that although overload reduces the benefit of diagnostic information, it does not entirely eliminate its positive effect.

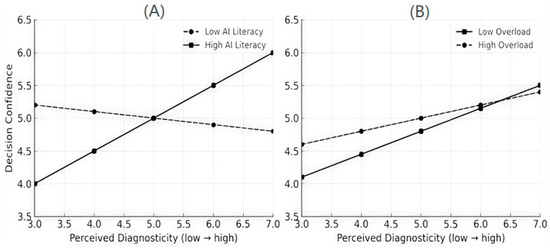

Figure 3A shows a clear crossover interaction in which decision confidence increases sharply with diagnosticity under high AI literacy but remains flat in low literacy conditions, consistent with a strong moderation effect (H5). Figure 3B shows parallel lines indicating attenuation, rather than reversal, of the effect under high information overload, consistent with partial moderation (H6).

Figure 3.

The moderating effects of AI literacy and information overload on the relationship between perceived diagnosticity and decision confidence. (A) H5: AI literacy × diagnosticity; (B) H6: overload × diagnosticity (partial).

To further examine the moderating mechanisms proposed in H5 and H6, additional PROCESS Model 1 analyses were conducted using AI literacy and information overload as moderators of the relationship between perceived diagnosticity and decision confidence. As shown in Table 2, the interaction between diagnosticity and AI literacy was significant (ΔR² = 0.020, F = 5.81, p < 0.05), indicating that individuals with higher AI literacy showed a stronger positive link between diagnosticity and confidence (β = 0.46, p < 0.001) than those with low literacy (β = 0.20, p = 0.051). This finding supports H5 and confirms that AI literacy increases users’ reliance on diagnostic information when forming confident evaluations. Conversely, the interaction with information overload was marginally significant (ΔR² = 0.015, F = 3.57, p = 0.061). Specifically, under low overload conditions, diagnosticity strongly enhanced confidence (β = 0.41, p < 0.001), whereas under high overload, this effect weakened (β = 0.18, p = 0.071). This pattern partially supports H6, suggesting that cognitive strain attenuates the benefits of diagnostic review information in hybrid human–AI review contexts.
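The F values for the interaction terms correspond to the t statistics reported earlier for the same terms, because a test of a single coefficient satisfies F(1, df) = t². The following brief sketch works through that arithmetic with the values from the text. The function name is illustrative.

```python
def f_from_t(t_value):
    """For a single-coefficient test, the F statistic equals t squared."""
    return t_value ** 2


f_literacy = round(f_from_t(2.41), 2)    # diagnosticity x AI literacy
f_overload = round(f_from_t(-1.89), 2)   # diagnosticity x information overload
print(f_literacy, f_overload)  # 5.81 3.57
```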

Table 2.

Additional moderation diagnostics (Study 3).

5.6. Discussion

Study 3 extends the previous findings by demonstrating that the influence of perceived diagnosticity on decision confidence is not uniform; rather, it varies depending on consumers’ AI literacy and cognitive capacity. These findings should be interpreted as specifying the conditions under which the diagnosticity mechanism becomes more or less influential, rather than as redefining diagnosticity itself as a causal process. Consistent with dual-process reasoning, those with greater AI literacy were more capable of interpreting AI-generated summaries analytically, integrating both human and algorithmic cues into coherent information. This pattern corresponds with recent evidence that domain-specific AI competence enhances users’ epistemic trust and adaptive reliance on algorithmic outputs [22]. Conversely, when consumers experienced significant information overload, they exhibited diminished sensitivity to diagnostic information, indicating a shift toward heuristic rather than systematic processing. This finding is consistent with emerging research suggesting that excessive review information or complex AI interfaces can suppress evaluative accuracy and induce cognitive fatigue [23]. Although these moderation effects are modest in statistical magnitude, they are substantively meaningful in applied contexts, where even small shifts in decision confidence can influence reliance on reviews, choice persistence, and platform engagement.

Importantly, however, information overload attenuated—rather than eliminated—the effects of diagnosticity. One plausible explanation is that AI-generated summaries provide a structurally efficient cue that reduces redundancy and helps consumers extract the essential meaning from dispersed review content, even under high-load conditions. Rather than adding to the burden, the algorithmic summary may counteract some of the cognitive strain by organizing information into a more comprehensible form [24]. Additionally, consumers in technologically advanced markets such as South Korea generally display strong information-seeking motivation and higher digital literacy, which may help buffer them against overload-induced heuristic processing. As a result, hybrid human–AI cues lose some of their potency under cognitive strain but still retain a meaningful impact—a pattern suggesting that the complementarity effect is robust even within demanding informational environments.

Together, these results refine the theoretical boundaries of human–AI complementarity by revealing its dependence on users’ technological literacy and cognitive constraints. While prior studies have often treated AI literacy as a stable individual difference [25], the present findings highlight its contextual nature since it strengthens trust when information is clear and digestible but loses influence under conditions of cognitive overload. Moreover, by integrating diagnosticity and overload dynamics, this study responds to recent calls for a more nuanced understanding of cognitive resource allocation in hybrid human–AI decision environments [26]. Thus, Study 3 underscores that technological fluency facilitates (but does not guarantee) optimal decision confidence within complex review ecosystems.

6. General Discussion

This study’s findings collectively demonstrate how hybrid review systems that combine human-generated reviews and AI-generated summaries shape consumer trust and decision confidence, rather than merely answering the three research questions proposed in the introduction. Across the three studies, hybrid reviews significantly enhanced perceived trust and confidence relative to human- or AI-only reviews, supporting the theoretical notion of human–AI complementarity. Specifically, affective cues derived from human content and the analytical precision provided by AI jointly create a more credible and diagnostically rich information environment, such that neither source alone is sufficient. This synergy between emotional authenticity and cognitive objectivity aligns with prior evidence that hybrid human–machine systems may outperform individual human or algorithmic sources in evaluative contexts [16,26].

Beyond these main effects, the findings clarify the psychological mechanisms through which hybrid reviews operate. Across the first two studies, perceived authenticity and perceived objectivity jointly mediated the effect of review type on trust and decision confidence, indicating that trust in hybrid information environments emerges from the integration of affective and analytical cues rather than from either dimension independently. Together, these dual pathways strengthen the perceived reliability of hybrid information environments. The findings also resonate with recent discussions emphasizing that trust in AI-assisted communication depends on balanced transparency and equitable human–machine contributions [17,21].

The results further show that the effectiveness of hybrid reviews is contingent on contextual and individual-level factors. Study 2 demonstrated that the order in which human-generated reviews and AI-generated summaries appeared influenced decision confidence, with human-first sequences facilitating an affective-to-cognitive information processing flow. Study 3 further revealed that greater AI literacy amplified the positive effect of diagnostic information on confidence, whereas information overload attenuated this effect. Together, these findings highlight that cognitive load and technological familiarity systematically shape how consumers interpret and rely on hybrid review information, extending prior examinations of cognitive fatigue and adaptive trust calibration in AI-based decision environments [22,23].

6.1. Theoretical Implications

This research advances the existing theory in three significant ways. First, it sharpens the theoretical boundaries of human–AI complementarity by specifying the psychological mechanisms through which hybrid human–AI review cues operate. While prior studies have largely portrayed human and AI sources as substitutes or competitors [12], our findings demonstrate that emotional authenticity and analytical objectivity serve as distinct yet synergistic pathways. By identifying these dual mechanisms, the present research extends complementarity theory beyond performance- or task-efficiency contexts into the domain of consumer trust formation. This theoretical refinement clarifies not only that hybrid cues work, but why and through which processes they produce greater trust and confidence [27,28].

Second, this study contributes to information diagnosticity theory by conceptualizing diagnosticity as a dual-source construct jointly shaped by affective and analytical cues. Previous research has emphasized either content richness or source credibility [29], but has lacked an integrated account of how emotional and factual dimensions interact to produce diagnostic value. Our results show that informational value is contingent upon the simultaneous activation of authenticity and objectivity cues, thereby extending diagnosticity theory into hybrid information environments where mixed-source cues coexist. These findings also align with evidence that cognitive effort and multimodal inputs shape perceived helpfulness [30], indicating that hybrid reviews enhance diagnostic quality through both emotional resonance and factual precision.

Third, this research refines both dual-process and cognitive load frameworks by introducing presentation sequence, AI literacy, and information overload as theoretically meaningful boundary conditions in hybrid communication. These results show that the sequencing of human and AI cues systematically shifts the balance between experiential and analytical processing, while AI literacy shapes consumers’ capacity to interpret algorithmic summaries. Moreover, cognitive overload attenuates diagnosticity effects without eliminating them, suggesting a nuanced boundary condition for hybrid information processing within high-density review environments. These insights broaden cognitive load theory to include AI-mediated judgment contexts and align with recent work on adaptive trust calibration and mental-effort regulation [31].

Therefore, these contributions offer a more bounded and theoretically precise account of hybrid human–AI communication by clarifying (a) the dual psychological mechanisms that underpin complementarity, (b) the integrated structure of diagnosticity in mixed-source environments, and (c) the contextual conditions under which hybrid cues retain or lose their effectiveness. This multi-level integration paves the way for further theoretical developments in trust, diagnosticity, and human–AI collaboration research [32,33].

6.2. Managerial Implications

This study provides two key managerial insights for e-commerce platforms and digital marketers operating in AI-mediated review environments. First, the findings suggest that hybrid review formats that integrate human-generated reviews and AI-generated summaries can strategically enhance consumer trust and decision confidence. Thus, e-commerce managers should consider designing review interfaces that deliberately combine the authentic, experiential tone of human reviews with the objective, data-driven precision of AI-generated insights. For instance, platforms can present concise AI-generated summaries, fact-checking annotations, or attribute-based comparisons alongside selected customer reviews that emphasize lived experience and emotional authenticity. Such designs may foster affective credibility and analytical reassurance, which can, in turn, reduce consumer skepticism toward AI involvement. In practice, platforms such as Amazon, Trip.com, and Alibaba could implement dual-source review panels or split-screen layouts that simultaneously highlight experiential narratives and AI-curated diagnostic information, thereby optimizing user confidence and conversion rates.

Second, the moderating roles of AI literacy and information overload imply that personalization and adaptive presentation mechanisms are essential for optimizing user experience. For consumers with greater AI literacy, providing diagnostic metrics (e.g., AI credibility scores or reasoning explanations) may further strengthen decision confidence [17]. However, under conditions of significant information overload, excessive AI input may reduce cognitive clarity and trust [34]. Accordingly, platforms could deploy adaptive interface algorithms that infer users’ engagement levels or browsing patterns and dynamically adjust review presentation. For example, users experiencing high cognitive load could initially be shown brief AI summaries followed by optional human reviews, whereas highly involved or AI-literate users could be offered expandable AI explanations or customizable levels of review detail. These insights emphasize that effective hybrid review management is a matter not only of content generation but also of context-sensitive and user-adaptive presentation strategies that align with consumers’ cognitive states.

6.3. Limitations and Future Research Directions

This research has several methodological limitations that offer promising directions for future work. First, the experiments utilized controlled, scenario-based designs to ensure internal validity; however, such designs may not fully capture the complexity of real-world decision-making in e-commerce environments. Actual consumers often encounter dynamic cues, such as fluctuating source credibility, review updates, and interactive recommendation systems, which may alter trust formation over time. Therefore, future research should employ field experiments or longitudinal platform-based studies (e.g., on Amazon, Booking.com, or Coupang) to test whether the effects of hybrid reviews persist under ecologically valid conditions. These studies could strengthen the findings of the current research and offer deeper insights into the timing of trust calibration in hybrid human–AI communication.

Second, while the present data were derived from self-reported survey measures, integrating multi-source data could provide a richer and more objective understanding of consumer trust and decision confidence. Therefore, future work should merge experimental responses with clickstream behavior, purchase records, or sentiment analysis of actual review content to triangulate perceptual and behavioral dimensions of information processing. This data fusion approach could strengthen causal inference and illuminate how diagnosticity perceptions translate into actual marketplace behaviors.

Third, because all three studies relied on samples drawn from South Korean online consumers, the generalizability of the findings may be culturally bounded. Notably, South Korea is characterized by high levels of technology adoption, strong platform-based trust norms, and an established familiarity with algorithmic recommendation systems. These socio-technical characteristics may have amplified the study participants’ receptiveness to AI-generated summaries and hybrid human–AI reviews. In cultural contexts with lower institutional trust or weaker digital literacy, the relative influence of authenticity and objectivity cues may unfold differently. Future research should therefore examine whether the complementarity effects observed here replicate across diverse cultural settings and socio-technical environments.

Beyond serving as a methodological limitation, cultural context may function as a theoretically meaningful boundary condition for hybrid human–AI review effects. Cultural dimensions such as uncertainty avoidance, institutional trust, and technology acceptance norms are likely to systematically shape how consumers interpret AI-generated summaries and balance authenticity and objectivity cues. For instance, in cultures characterized by high uncertainty avoidance, AI-generated summaries may strengthen perceived objectivity while simultaneously heightening skepticism toward authenticity, thereby attenuating the joint mediation observed in the present study [35]. Likewise, in contexts marked by lower institutional trust or weaker norms of algorithmic transparency, consumers may be less inclined to rely on AI-generated summaries, which could alter the relative contribution of human and AI cues to trust formation. Examining these culturally contingent pathways would provide a stronger theoretical foundation for future cross-cultural research on hybrid review systems.

Finally, future studies could explore cross-cultural variations in hybrid human–AI trust dynamics. As perceptions of authenticity, objectivity, and AI credibility are often shaped by cultural values and technological norms, examining consumers from diverse socio-technical contexts would broaden the theoretical and practical generalizability of hybrid review effects.

Author Contributions

Conceptualization, Y.L. and H.-Y.H.; methodology, H.-Y.H.; software, Y.L.; validation, Y.L.; formal analysis, H.-Y.H.; investigation, Y.L.; resources, Y.L.; data curation, Y.L.; writing—original draft preparation, Y.L.; writing—review and editing, H.-Y.H.; visualization, Y.L.; supervision, H.-Y.H.; project administration, H.-Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This research did not require ethics approval. According to the policies of Dongguk University (https://rnd.dongguk.edu/ko/page/sub/sub0601_02.do, accessed on 30 September 2025), research of this type (a non-clinical, non-sensitive, anonymized survey of adult participants without personally identifiable information) does not require Institutional Review Board (IRB) or Ethics Committee approval. All participants were fully informed about the purpose of the study and the voluntary nature of their participation, and informed consent was obtained prior to data collection.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author; they are not publicly available due to restrictions on external disclosure of the data.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Experimental Stimuli (Study 3)

Appendix A.1. AI Literacy Manipulation Text

Participants were randomly assigned to one of two AI literacy conditions. In the high-literacy condition, they read a short educational passage describing how AI systems generate reviews using advanced natural language processing and sentiment analysis techniques, emphasizing the reliability and data-driven nature of AI-generated content. In the low-literacy condition, they read a neutral description that briefly defined AI-generated reviews as “automatically produced texts based on online information,” without mentioning analytical processes or reliability.

Appendix A.2. Review Stimuli (Low Information Load Condition)

Participants viewed four customer reviews for a home-cleaning service. Two reviews were written by human users highlighting emotional and experiential aspects (e.g., “The cleaner was very polite and thorough, leaving my house spotless!”), while two reviews were AI-generated and emphasized structured and analytical information (e.g., “Service quality maintained consistent 4.7/5 satisfaction across visits.”). The balance between human and AI cues was designed to represent a moderate cognitive load.

Appendix A.3. Review Stimuli (High Information Load Condition)

Participants in the high-load condition viewed twelve reviews: six human-written and six AI-generated ones. The human reviews conveyed diverse emotions, while the AI-generated reviews presented aggregated summaries and factual statements. The information density and number of distinct viewpoints were intended to induce a higher level of perceived information overload, consistent with cognitive load theory.
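The stimulus composition in Appendix A.2 and A.3 can be summarized in a short Python sketch. The review counts come from the appendix text. The condition labels, the function names, and the full crossing of AI literacy with information load as a 2 × 2 between-subjects design are assumptions made for illustration.

```python
import random
from typing import Dict, Tuple

# Review counts per information-load condition (Appendix A.2 and A.3).
STIMULI: Dict[str, Dict[str, int]] = {
    "low_load": {"human": 2, "ai": 2},
    "high_load": {"human": 6, "ai": 6},
}
# Labels for the two AI literacy conditions (Appendix A.1); names are hypothetical.
LITERACY = ("high_literacy", "low_literacy")


def assign_condition(rng: random.Random) -> Tuple[str, str]:
    """Draw one cell of the assumed 2 x 2 between-subjects design."""
    return rng.choice(LITERACY), rng.choice(tuple(STIMULI))


def total_reviews(load: str) -> int:
    """Total number of reviews shown in a given load condition."""
    return sum(STIMULI[load].values())


if __name__ == "__main__":
    rng = random.Random(2025)  # fixed seed only to make the demo repeatable
    literacy, load = assign_condition(rng)
    print(literacy, load, total_reviews(load))
```

Simple random assignment as sketched here does not guarantee equal cell sizes; a blocked or quota-based scheme would be needed for that.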

References

1. Chevalier, J.A.; Mayzlin, D. The Effect of Word of Mouth on Sales: Online Book Reviews. J. Mark. Res. 2006, 43, 345–354.

2. Mudambi, S.M.; Schuff, D. What Makes a Helpful Online Review? A Study of Customer Reviews on Amazon.com. MIS Q. 2010, 34, 185–200.

3. Filieri, R.; Raguseo, E.; Vitari, C. What Moderates the Influence of Extremely Negative Ratings? The Role of Review and Reviewer Characteristics. Int. J. Hosp. Manag. 2019, 77, 333–341.

4. Park, D.-H.; Lee, J. eWOM Overload and Its Effect on Consumer Behavioral Intention Depending on Consumer Involvement. Electron. Commer. Res. Appl. 2008, 7, 386–398.

5. Dogru, T.; Line, N.; Zhang, T.; Altin, M.; Olya, H.; Zhang, Y.; Ye, B.H.; Wang, C.; Law, R.; Guillet, B.D.; et al. Generative Artificial Intelligence in the Hospitality and Tourism Industry: Developing a Framework for Future Research. J. Hosp. Tour. Res. 2025, 49, 235–253.

6. Longoni, C.; Bonezzi, A.; Morewedge, C.K. Resistance to Medical Artificial Intelligence. J. Consum. Res. 2019, 46, 629–650.

7. Flavián, C.; Pérez-Rueda, A.; Belanche, D.; Casaló, L.V. Intention to Use Analytical Artificial Intelligence (AI) in Services—The Effect of Technology Readiness and Awareness. J. Serv. Manag. 2022, 33, 293–320.

8. Brynjolfsson, E.; McAfee, A. Machine, Platform, Crowd: Harnessing Our Digital Future; W.W. Norton & Company: New York, NY, USA, 2017.

9. Petty, R.E.; Cacioppo, J.T. Communication and Persuasion: Central and Peripheral Routes to Attitude Change; Springer: New York, NY, USA, 2012.

10. Carichon, F.; Ngouma, C.; Liu, B.; Caporossi, G. Objective and Neutral Summarization of Customer Reviews. Expert Syst. Appl. 2024, 255, 124449.

11. Kempf, D.S.; Smith, R.E. Consumer Processing of Product Trial and the Influence of Prior Advertising: A Structural Modeling Approach. J. Mark. Res. 1998, 35, 325–338.

12. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

13. Gursoy, D.; Cai, R. Artificial Intelligence: An Overview of Research Trends and Future Directions. Int. J. Contemp. Hosp. Manag. 2025, 37, 1–17.

14. Sweller, J. Cognitive Load During Problem Solving: Effects on Learning. Cogn. Sci. 1988, 12, 257–285.

15. Hayes, A.F. Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach, 2nd ed.; Guilford Press: New York, NY, USA, 2017.

16. Schmidt, P.; Biessmann, F.; Teubner, T. Transparency and Trust in Artificial Intelligence Systems. J. Decis. Syst. 2020, 29, 260–278.

17. McGrath, M.J.; Duensen, A.; Lacey, J.; Paris, C. Collaborative Human–AI Trust (CHAI-T): A Process Framework for Active Management of Trust in Human–AI Collaboration. Comput. Hum. Behav. Artif. Hum. 2025, 6, 100200.

18. Faul, F.; Erdfelder, E.; Buchner, A.; Lang, A.-G. Statistical Power Analyses Using G*Power 3.1: Tests for Correlation and Regression Analyses. Behav. Res. Methods 2009, 41, 1149–1160.

19. Sousa, V.D.; Rojjanasrirat, W. Translation, Adaptation and Validation of Instruments or Scales for Use in Cross-Cultural Health Care Research: A Clear and User-Friendly Guideline. J. Eval. Clin. Pract. 2011, 17, 268–274.

20. Lee, A.Y.; Labroo, A.A. The Effect of Conceptual and Perceptual Fluency on Brand Evaluation. J. Mark. Res. 2004, 41, 151–165.

21. Kong, X.; Fang, H.; Chen, W.; Xiao, J.; Zhang, M. Examining Human–AI Collaboration in Hybrid Intelligence Learning Environments: Insight from the Synergy Degree Model. Humanit. Soc. Sci. Commun. 2025, 12, 821.

22. Kulal, A. Cognitive Risks of AI: Literacy, Trust, and Critical Thinking. J. Comput. Inf. Syst. 2025, online ahead of print.

23. Fu, S.; Li, H.; Liu, Y.; Pirkkalainen, H.; Salo, M. Social Media Overload, Exhaustion, and Use Discontinuance: Examining the Effects of Information Overload, System Feature Overload, and Social Overload. Inf. Process. Manag. 2020, 57, 102307.

24. Wang, L.; Che, G.; Hu, J.; Chen, L. Online Review Helpfulness and Information Overload: The Roles of Text, Image, and Video Elements. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 1243–1266.

25. Almatrafi, O.; Johri, A.; Lee, H. A Systematic Review of AI Literacy Conceptualization, Constructs, and Implementation and Assessment Efforts (2019–2023). Comput. Educ. Open 2024, 6, 100173.

26. Hemmer, P.; Schemmer, M.; Kühl, N.; Vössing, M.; Satzger, G. Complementarity in Human–AI Collaboration: Concept, Sources, and Evidence. Eur. J. Inf. Syst. 2025, 34, 979–1002.

27. Rahwan, I.; Cebrian, M.; Obradovich, N.; Bongard, J.; Bonnefon, J.-F.; Breazeal, C.; Crandall, J.W.; Christakis, N.A.; Couzin, I.D.; Jackson, M.O.; et al. Machine Behaviour. Nature 2019, 568, 477–486.

28. Dellermann, D.; Ebel, P.; Söllner, M.; Leimeister, J.M. Hybrid Intelligence. Bus. Inf. Syst. Eng. 2019, 61, 637–643.

29. Cheung, M.Y.; Luo, C.; Sia, C.L.; Chen, H. Credibility of Electronic Word-of-Mouth: Informational and Normative Determinants of On-line Consumer Recommendations. Int. J. Electron. Commer. 2009, 13, 9–38.

30. Zhang, Y.; Norman, D.A. Representations in Distributed Cognitive Tasks. Cogn. Sci. 1994, 18, 87–122.

31. Marusich, L.R.; Files, B.T.; Bancilhon, M.; Rawal, J.C.; Raglin, A. Trust Calibration for Joint Human/AI Decision-Making in Dynamic and Uncertain Contexts. In Artificial Intelligence in HCI; Degen, H., Ntoa, S., Eds.; Lecture Notes in Computer Science, Volume 15819; Springer: Cham, Switzerland, 2025.

32. Ulfert, A.-S.; Georganta, E.; Centeio Jorge, C.; Mehrotra, S.; Tielman, M. Shaping a Multidisciplinary Understanding of Team Trust in Human–AI Teams: A Theoretical Framework. Eur. J. Work Organ. Psychol. 2024, 33, 158–171.

33. Lukyanenko, R.; Maass, W.; Storey, V.C. Trust in Artificial Intelligence: From a Foundational Trust Framework to Emerging Research Opportunities. Electron. Mark. 2022, 32, 1993–2020.

34. Eppler, M.J.; Mengis, J. The Concept of Information Overload: A Review of Literature from Organization Science, Accounting, Marketing, MIS, and Related Disciplines. Inf. Soc. 2004, 20, 325–344.

35. Hofstede, G. Culture’s Consequences: Comparing Values, Behaviors, Institutions, and Organizations Across Nations; Sage Publications: Thousand Oaks, CA, USA, 2001.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.