- Proceeding Paper

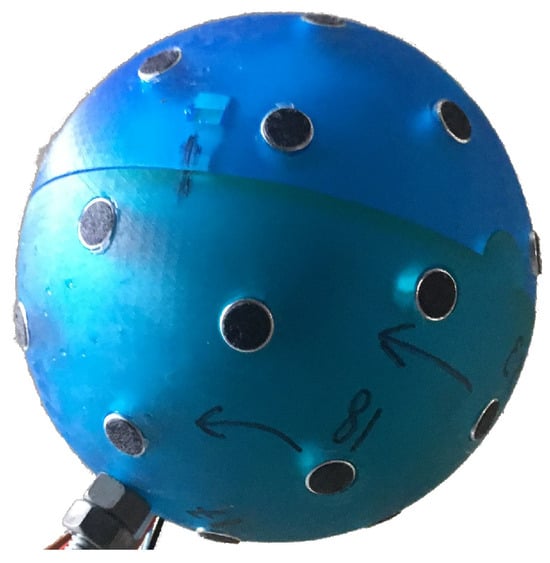

Nested Sampling for Detection and Localization of Sound Sources Using a Spherical Microphone Array

- Ning Xiang

- Tomislav Jasa

Since its inception in 2004, nested sampling has been used in acoustics applications. This work applies nested sampling within a Bayesian framework to the detection and localization of sound sources using a spherical microphone array. Going beyond existing work, this source localization task relies on spherical harmonics to establish parametric models that distinguish the background sound environment from the presence of sound sources. Upon a positive detection, the parametric models are also used to estimate an unknown number of potentially multiple sound sources. For the purpose of source detection, a no-source scenario needs to be considered in addition to the presence of at least one sound source. Specifically, the spherical microphone array senses the sound environment, and the acoustic data are analyzed via spherical Fourier transforms. Source detection uses a Bayesian model comparison between two models, one accounting for the absence and the other for the presence of sound sources. Upon a positive detection, potentially multiple source models are used to analyze the directions of arrival (DoAs), with Bayesian model selection for sound source enumeration and parameter estimation for localization. These two levels of inference (enumeration and localization) are necessary to correctly localize potentially multiple sound sources. This paper discusses an efficient implementation of the nested sampling algorithm applied to sound source detection and localization within the Bayesian framework.
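The detection step described above, comparing the evidence of a no-source model against that of a source-present model, can be illustrated with a minimal nested sampling sketch. This is a toy example under stated assumptions, not the authors' implementation: the scalar amplitude model, the uniform prior on [0, 5], the synthetic data, and all names (`nested_sampling`, `log_like_source`, `DATA`, `SIGMA`) are hypothetical stand-ins for the spherical-harmonic models of the paper, and new live points are drawn by plain rejection sampling from the prior, which is only practical for low-dimensional toy problems.

```python
import math
import random


def log_add(a, b):
    """Return log(exp(a) + exp(b)) without overflow."""
    if a == -math.inf:
        return b
    if b == -math.inf:
        return a
    hi, lo = max(a, b), min(a, b)
    return hi + math.log1p(math.exp(lo - hi))


def nested_sampling(log_like, sample_prior, n_live=200, n_iter=1200, seed=0):
    """Estimate the log-evidence log Z for one model (toy version)."""
    rng = random.Random(seed)
    live = [sample_prior(rng) for _ in range(n_live)]
    live_logl = [log_like(p) for p in live]
    log_z = -math.inf
    log_x_prev = 0.0  # log of the enclosed prior mass, X_0 = 1
    for i in range(1, n_iter + 1):
        worst = min(range(n_live), key=live_logl.__getitem__)
        threshold = live_logl[worst]
        log_x = -i / n_live  # expected shrinkage: X_i = exp(-i / n_live)
        # Weight of the discarded point: L_i * (X_{i-1} - X_i).
        log_w = log_x_prev + math.log1p(-math.exp(log_x - log_x_prev))
        log_z = log_add(log_z, threshold + log_w)
        # Replace the worst point by a prior draw with L > threshold.
        # Assumption: plain rejection sampling, fine only for toy problems.
        while True:
            cand = sample_prior(rng)
            cand_logl = log_like(cand)
            if cand_logl > threshold:
                break
        live[worst], live_logl[worst] = cand, cand_logl
        log_x_prev = log_x
    # Remaining live points share the final prior mass equally.
    for logl in live_logl:
        log_z = log_add(log_z, logl + log_x_prev - math.log(n_live))
    return log_z


# Hypothetical data: 20 noisy readings with a true amplitude of 1.5.
SIGMA = 1.0
_data_rng = random.Random(1)
DATA = [1.5 + _data_rng.gauss(0.0, SIGMA) for _ in range(20)]


def log_like_source(amplitude):
    """Gaussian log-likelihood of DATA for a given source amplitude."""
    norm = -math.log(SIGMA * math.sqrt(2.0 * math.pi))
    return sum(norm - 0.5 * ((d - amplitude) / SIGMA) ** 2 for d in DATA)


# "No source": amplitude fixed at 0, no free parameter, so Z is the likelihood.
log_z0 = log_like_source(0.0)
# "Source present": amplitude has a uniform prior on [0, 5].
log_z1 = nested_sampling(log_like_source, lambda rng: rng.uniform(0.0, 5.0))
print(f"log Z0 = {log_z0:.2f}, log Z1 = {log_z1:.2f}, "
      f"log Bayes factor = {log_z1 - log_z0:.2f}")
```

A positive log Bayes factor favors the source-present model, which corresponds to a positive detection in this toy setting. The same evidence estimates could then be compared across models with one, two, or more sources for enumeration, with the posterior samples providing the parameter estimates.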

20 May 2024