Abstract

The evolution of space technology in recent years, fueled by advancements in computing such as Artificial Intelligence (AI) and machine learning (ML), has profoundly transformed our capacity to explore the cosmos. Missions like the James Webb Space Telescope (JWST) have made information about distant objects more easily accessible, resulting in extensive amounts of valuable data. As part of this work-in-progress study, we are working to create an atmospheric absorption spectrum prediction model for exoplanets. The eventual model will be based on both collected observational spectra and synthetic spectral data generated by the ROCKE-3D general circulation model (GCM) developed by the climate modeling program at NASA’s Goddard Institute for Space Studies (GISS). In this initial study, spline curves are used to describe the bin heights of simulated atmospheric absorption spectra as a function of one of the values of the planetary parameters. Bayesian Adaptive Exploration is then employed to identify areas of the planetary parameter space for which more data are needed to improve the model. The resulting system will be used as a forward model so that planetary parameters can be inferred given a planet’s atmospheric absorption spectrum. This work is expected to contribute to a better understanding of exoplanetary properties and general exoplanet climates and habitability.

1. Introduction

In the expanding field of exoplanet studies, we aim to develop a machine learning system that will predict exoplanetary atmospheric absorption spectra from a set of approximately 30 planetary parameters (PPs). This system will be trained on both real and synthetic data: spectra recorded from terrestrial planets within our solar system, along with synthetic spectra modeled using the ROCKE-3D [1] general circulation model (GCM) at NASA’s Goddard Institute for Space Studies (GISS). In this paper, as an early step towards building the final framework, we present a proof-of-concept implementation of a simplified version of it. The full system promises to enhance our understanding of exoplanetary properties, as well as general planetary habitability.

By predicting atmospheric absorption spectra from given planetary parameters, this machine learning system will act as a predictive forward model. It can then be used to evaluate likelihood functions for a Bayesian inference engine that infers probable exoplanet parameters from observed atmospheric absorption spectra. Much of the initial training data will come from recorded spectra of Earth, Venus, Mars, and Titan, together with synthetic spectra generated by simulation studies using 3D climate models of the Archean and Proterozoic Earth and similar past and present climate models of Venus and Mars [2,3,4].

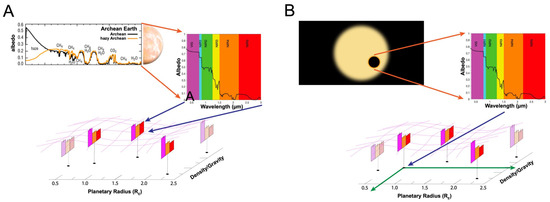

The machine learning system will be trained (Figure 1A) so that it will be able to predict atmospheric absorption spectra from planetary parameters. Once this has been accomplished, the system’s ability to predict spectra will make it useful for evaluating likelihood functions in a Bayesian inference engine that will then estimate planetary parameters based on atmospheric spectra, as illustrated in Figure 1B.

Figure 1.

(A) The machine learning system will be initially trained on recorded present spectra and historic synthetic spectra generated by ROCKE-3D GCM simulations at NASA’s GISS. (B) Once trained, the system can serve as a predictive forward model that will enable a Bayesian inference engine to estimate planetary parameters from recorded exoplanetary spectra.
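The division of labor in Figure 1B can be illustrated with a toy example. The following is a minimal sketch, not the system described here: `toy_forward_model`, the grid of candidate parameter values, and the noise level `sigma` are hypothetical stand-ins, and a flat prior is assumed. It only shows how a forward model that maps a parameter to bin heights allows a Gaussian likelihood to be evaluated for each candidate parameter value.

```python
import math

def toy_forward_model(x):
    """Hypothetical forward model: maps one parameter x to three bin heights."""
    return [1.0 + x, 2.0 - x, 0.5 + x * x]

def log_likelihood(observed, predicted, sigma):
    """Gaussian log-likelihood, up to an additive constant."""
    sq = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return -0.5 * sq / sigma ** 2

def grid_posterior(observed, forward_model, grid, sigma):
    """Normalized posterior over a grid of candidate values (flat prior assumed)."""
    log_l = [log_likelihood(observed, forward_model(x), sigma) for x in grid]
    peak = max(log_l)  # subtract the peak for numerical stability
    weights = [math.exp(v - peak) for v in log_l]
    total = sum(weights)
    return [w / total for w in weights]

grid = [i / 100 for i in range(101)]
observed = toy_forward_model(0.3)  # noise-free synthetic "observation"
posterior = grid_posterior(observed, toy_forward_model, grid, sigma=0.05)
best = grid[posterior.index(max(posterior))]
print("most probable parameter value on the grid:", best)
```

A grid is workable only for one or two parameters; for a high-dimensional parameter space, a sampling method would take its place.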

The atmospheric spectra will be modeled as approximately 20 discrete bins spanning the visible (VIS) to near-infrared (NIR) (Figure 2). Each planet is characterized by approximately 30 parameters, such as planetary radius, orbital radius, stellar classification, day-side temperature, and oxygen content. The modeling task therefore involves predicting multiple (20) scalar values (bin values of spectral intensity) over a 30-dimensional planetary parameter space.

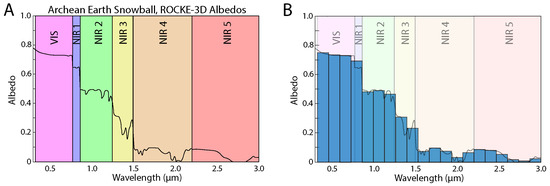

Figure 2.

(A) A synthetic atmospheric absorption spectrum, ranging from the visible (VIS) range through to near-infrared (NIR), of Archean snowball Earth generated using ROCKE-3D [5]. (B) A summary of the spectrum as a set of 20 discrete bins.

As a proof-of-concept, we work with one planetary parameter, limiting the problem to one dimension, and focus on just six spectral bins. At this stage, we employ spline models for the prototype, while being aware that we will need to adopt a model that scales to 30 dimensions. Our current focus is to demonstrate the use of spline interpolation and Bayesian Adaptive Exploration in a low-dimensional setting before scaling to a higher-dimensional model. As the dimensionality increases, so will the complexity; hence, designing an efficient model and establishing a viable methodology are of prime importance.

This paper discusses methodologies that could be used to design the model, along with their limitations and possible improvements for further research. It is organized as follows: Section 2 reviews related work and provides contextual background. Section 3 addresses the problem of modeling spectra. Section 4 discusses other potential approaches. Section 5 describes the methodology, explaining the mathematical formulation and approach. Section 6 presents the results and limitations of the current methodology, and Section 7 offers concluding statements.

2. Related Work and Context

The launch of advanced space-based observatories, such as the James Webb Space Telescope (JWST), has enabled the acquisition of medium-resolution atmospheric spectral data for exoplanets. This influx of detailed observational data has accelerated progress in atmospheric characterization and highlighted the need for scalable and computationally efficient analysis frameworks. MacDonald and Batalha [6] provide a comprehensive overview of current atmospheric retrieval techniques, many of which combine Bayesian inference with machine learning to enhance parameter estimation and spectral fitting. Alternatively, detailed examinations with forward modeling approaches, which help us understand how light interacts with planetary atmospheres to reveal their chemical composition, can be carried out using tools like Tau-REx [7], NEMESIS [8], and CHIMERA [9,10]. These frameworks treat atmospheric retrieval as an inverse problem, using computationally intensive, precomputed forward models to infer atmospheric properties.

Recent efforts have focused on the integration of general circulation models (GCMs) [11] to improve both scalability and realism. For example, Aura-3D [12] performs transmission spectrum retrieval using full 3D atmospheric simulations. Machine learning has been used to enhance the OASIS framework [13] by reducing the number of parameters. Although GCMs like MITgcm [14] are being adapted for exoplanet studies [15], their use in surrogate modeling remains limited due to the complexity and high computational cost of simulating exoplanet spectra. Most current approaches tend to focus on isolated parts of the retrieval pipeline, either forward modeling or parameter inference, rather than offering a fully integrated solution.

To address these limitations and gaps, our work aims to develop a scalable forward surrogate model capable of predicting atmospheric absorption spectra from approximately 30 planetary parameters. The present work constitutes a generic proof-of-concept involving a single planetary parameter and spline interpolation. By combining a spline model (which works well with a single parameter) with machine learning, we have developed a predictive system that fits seamlessly into a Bayesian inference framework. This enables parameter estimation and supports active data acquisition via Bayesian Adaptive Exploration [16]. Unlike traditional inverse methods, our approach generates complete spectral outputs while also quantifying uncertainty. A key strength of our model lies in its use of ROCKE-3D, a terrestrial GCM originally designed for Earth climate studies and later adapted for exoplanet research [1], to produce physically consistent training data. This hybrid strategy enables a more holistic and efficient exploration of exoplanetary atmospheres, moving beyond the limitations of conventional retrieval frameworks.

3. Modeling Spectra

The long-term goal of this project revolves around characterizing a planet using approximately 30 parameters and relating these parameters to the planet’s atmospheric absorption spectra. Each spectrum will be described using a set of approximately 20 discrete bins, like a histogram [17]. Figure 2A provides an example of an expected spectrum. This spectrum is derived from a ROCKE-3D simulation of an Archean Snowball Earth [5], exhibiting six distinct albedo bands used in the GCM: the visible (VIS) range, near-infrared (NIR), and subsequent bands up to a wavelength of . A detailed synthetic spectrum will be summarized as a set of 20 bins (Figure 2B).
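As an illustration of summarizing a detailed spectrum as a histogram-like set of bins, the following minimal sketch averages a finely sampled spectrum into equal-width wavelength bins. The equal-width binning, the averaging rule, the function name `bin_spectrum`, and the toy spectrum are all assumptions made for illustration; the source does not specify how the bins are constructed.

```python
def bin_spectrum(wavelengths, intensities, n_bins):
    """Average intensities within n_bins equal-width wavelength bins."""
    lo, hi = min(wavelengths), max(wavelengths)
    width = (hi - lo) / n_bins
    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for w, s in zip(wavelengths, intensities):
        # Clamp so that the largest wavelength falls into the last bin.
        idx = min(int((w - lo) / width), n_bins - 1)
        sums[idx] += s
        counts[idx] += 1
    # Empty bins are reported as None rather than guessed.
    return [s / c if c else None for s, c in zip(sums, counts)]

# Hypothetical finely sampled spectrum: 200 points with a toy step profile.
wavelengths = [0.4 + 0.01 * i for i in range(200)]
intensities = [1.0 if i < 100 else 3.0 for i in range(200)]
heights = bin_spectrum(wavelengths, intensities, n_bins=20)
print(heights)
```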

The objective of the model is to predict the heights of these 20 bins on a bar graph (Figure 2B) for a given planet from its planetary parameter values, where the height of each bin is expected to be a function of up to 30 planetary parameters. Treating each parameter as its own dimension yields a multidimensional model. The heights, h, of the 20 bins can be expressed as follows:

$$h_b = f_b(x_1, x_2, \ldots, x_{30}), \qquad b = 1, 2, \ldots, 20,$$

where $h_b$ signifies the height of the b-th bin, $x_1$ through $x_{30}$ denote the 30 planetary parameters, and $f_b$ represents the functional dependence of the b-th bin’s height on the planetary parameters.

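However, the full task presents challenges, so in this paper we simplify the problem rather than consider all 30 planetary parameters. We consider a simple model of 6 bins instead of 20 and treat the problem in one dimension with a single planetary parameter. We model each of the six functions, $f_b(x)$, as a Piecewise Cubic Hermite Interpolating Polynomial (PCHIP) [18], which is a specific kind of spline curve [19]. The PCHIP is characterized by a set of K spline knots, each defined by the planetary parameter value x and the spectral bin height f at that planetary parameter value, $(x_k, f_k)$, such that [18]

$$p(x_k) = f_k$$

for all spline knots indexed by $k = 1, \ldots, K$, where in each subinterval $[x_k, x_{k+1}]$, the function $p(x)$ is a monotonic cubic polynomial defined by

$$p(x) = f_k H_1(x) + f_{k+1} H_2(x) + d_k H_3(x) + d_{k+1} H_4(x),$$

where $d_j = p'(x_j)$ for $j = k$ and $j = k+1$, and $H_i(x)$ are the usual cubic Hermite basis functions for the interval $[x_k, x_{k+1}]$:

$$H_1(x) = \phi\!\left(\frac{x_{k+1} - x}{\Delta_k}\right), \quad H_2(x) = \phi\!\left(\frac{x - x_k}{\Delta_k}\right), \quad H_3(x) = -\Delta_k\,\psi\!\left(\frac{x_{k+1} - x}{\Delta_k}\right), \quad H_4(x) = \Delta_k\,\psi\!\left(\frac{x - x_k}{\Delta_k}\right),$$

where

$$\Delta_k = x_{k+1} - x_k, \qquad \phi(t) = 3t^2 - 2t^3, \qquad \psi(t) = t^3 - t^2.$$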

The advantage of a spline model is the flexibility to model a potentially complicated function with a finite number of spline knots. Because the spline curves are fully defined by their knot positions and heights, the modeling process is streamlined considerably. The PCHIP model was chosen for this study because it is shape-preserving, in the sense that it preserves monotonicity, which controls overshoots and oscillations better when the data are not smooth. However, it should be noted that cubic splines, while more prone to oscillations, tend to produce more accurate results if the data are smooth (continuous first derivative).
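The shape-preserving behavior can be seen in a small pure-Python sketch of a piecewise cubic Hermite interpolant built from the basis functions $\phi(t) = 3t^2 - 2t^3$ and $\psi(t) = t^3 - t^2$. The knot slopes here are chosen with a weighted harmonic mean of neighboring secant slopes and simple one-sided end slopes. This is one common shape-preserving choice and is an assumption, since the slope rule used in this study is not stated; the function names and the step-like test data are likewise hypothetical.

```python
import bisect

def phi(t):
    return 3 * t**2 - 2 * t**3

def psi(t):
    return t**3 - t**2

def pchip_slopes(x, f):
    """Shape-preserving knot slopes (weighted harmonic mean in the interior)."""
    n = len(x)
    h = [x[k + 1] - x[k] for k in range(n - 1)]
    delta = [(f[k + 1] - f[k]) / h[k] for k in range(n - 1)]
    d = [0.0] * n
    d[0], d[-1] = delta[0], delta[-1]  # one-sided end slopes (assumption)
    for k in range(1, n - 1):
        if delta[k - 1] * delta[k] > 0:  # neighboring secants share a sign
            w1 = 2 * h[k] + h[k - 1]
            w2 = h[k] + 2 * h[k - 1]
            d[k] = (w1 + w2) / (w1 / delta[k - 1] + w2 / delta[k])
        # Otherwise the slope stays 0 (local extremum or flat segment).
    return d

def pchip_eval(x, f, xq):
    """Evaluate the piecewise cubic Hermite interpolant at xq."""
    d = pchip_slopes(x, f)
    # Locate the interval [x[k], x[k+1]] that contains xq.
    k = min(max(bisect.bisect_right(x, xq) - 1, 0), len(x) - 2)
    hk = x[k + 1] - x[k]
    t1 = (x[k + 1] - xq) / hk
    t2 = (xq - x[k]) / hk
    return (f[k] * phi(t1) + f[k + 1] * phi(t2)
            - d[k] * hk * psi(t1) + d[k + 1] * hk * psi(t2))

# Step-like data: a shape-preserving interpolant should stay within [0, 1].
knots_x = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
knots_f = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
curve = [pchip_eval(knots_x, knots_f, i / 200) for i in range(201)]
print("min:", min(curve), "max:", max(curve))
```

With these slopes the interpolant never leaves the range of the data between knots, whereas a standard cubic spline through the same step-like data would generally overshoot on either side of the jump.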

The primary benefit of using spline interpolation is that it allows for the modeling of complicated continuous and smooth functions with a finite number of points [19]. However, while spline curves are straightforward for one-dimensional data, the required number of spline curves increases exponentially with the dimensionality of the parameter space, making it essential to validate this approach in one dimension before generalizing it further.
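The exponential growth can be made concrete with a short calculation. This sketch assumes, purely for illustration, a full tensor-product grid with the same number of knots along every parameter axis; the helper name `grid_knot_count` is hypothetical.

```python
def grid_knot_count(knots_per_axis, n_dims):
    """Number of knots on a full tensor-product grid."""
    return knots_per_axis ** n_dims

for n_dims in (1, 2, 3, 10, 30):
    print(n_dims, grid_knot_count(6, n_dims))
```

Under this assumption, six knots per axis already require more than $10^{23}$ knots in 30 dimensions, which is why a different model class is needed for the full problem.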

4. Other Potential Approaches

Given the challenges of dimensionality, it is tempting to consider simpler approaches. Interpolation techniques such as linear interpolation and logarithmic interpolation offer alternative ways to estimate values between known data points. Linear interpolation connects adjacent data points with straight lines, providing a simple and computationally efficient method represented by the formula [20]

$$y = y_1 + (x - x_1)\,\frac{y_2 - y_1}{x_2 - x_1},$$

where $(x_1, y_1)$ and $(x_2, y_2)$ are the coordinates of the known data points, and x is the point of interest between $x_1$ and $x_2$ [21].

Conversely, logarithmic interpolation assumes a logarithmic relationship between data points, which is useful when the dependent variable changes exponentially relative to the independent variable [20]. Logarithmic interpolation is represented by [21]

$$\log y = \log y_1 + (x - x_1)\,\frac{\log y_2 - \log y_1}{x_2 - x_1},$$

where $(x_1, y_1)$ and $(x_2, y_2)$ are the coordinates of the known data points, and x is the point of interest between $x_1$ and $x_2$ [19,20]. Neither of these models is as flexible as the cubic spline model.
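The difference between the two schemes is easiest to see on exponentially varying data. The sketch below assumes the common form of logarithmic interpolation in which the logarithm of the dependent variable is interpolated linearly (so both y-values must be positive); the function names and the test points are illustrative.

```python
import math

def linear_interp(x1, y1, x2, y2, x):
    """Straight-line interpolation between (x1, y1) and (x2, y2)."""
    return y1 + (x - x1) * (y2 - y1) / (x2 - x1)

def log_interp(x1, y1, x2, y2, x):
    """Interpolate linearly in log(y); requires y1 > 0 and y2 > 0."""
    log_y = linear_interp(x1, math.log(y1), x2, math.log(y2), x)
    return math.exp(log_y)

# Two samples of y = 10**x, at x = 0 and x = 2, queried at x = 1.
print(linear_interp(0, 1, 2, 100, 1))  # midpoint of the straight line
print(log_interp(0, 1, 2, 100, 1))     # follows the exponential trend
```

For this example the true value at x = 1 is 10; the logarithmic form recovers it, while the linear form returns the arithmetic midpoint of the two samples.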

We examined other techniques, including multivariate regression models [22], multivariate interpolation [23,24], and the linear Shepard method of interpolation [25], and found that they are not as convenient for this problem. Below, we discuss their limitations.

Multivariate regression models the relationship between multiple dependent and independent variables by fitting a linear equation to the observed data [22]. With many predictors, there is a risk of overfitting, which reduces the model’s generalizability to new data. In addition, a linear model will fail to capture the non-linear relationships that are common in such data [22].

Multivariate interpolation [23,24] is a method used to estimate unknown values at specific points based on known values at surrounding points in multiple dimensions. It creates a smooth surface that passes through the given data points, making it useful for filling in gaps in spatial or temporal datasets [23,24]. It also ensures an exact fit to the observed data points and can be applied to both regular and irregular grids of data. However, the method can suffer from overfitting to the noise in the observations, leading to a poor performance on new data, and it can become computationally intensive and less effective with very large datasets [23,24]. Moreover, it may experience boundary issues, where the accuracy of interpolation decreases near the edges of the dataset [23,24].

The linear Shepard method of interpolation [25] is a technique that estimates unknown values by taking a weighted average of nearby known values, where the weights are inversely proportional to the distance from the unknown point to the known points. This makes it a simple and efficient method for local interpolation in multiple dimensions. However, as a linear method, it may not capture the non-linear relationships that are often present in such data, and it can suffer from boundary effects. Additionally, the interpolation accuracy may not be as high as that of other, more sophisticated techniques, especially in regions with sparse data [25].
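The weighted-average idea can be sketched in a few lines. This is the basic inverse-distance-weighting form only, not the modified Shepard algorithm of SHEPPACK [25]; the function name, the `power` parameter, and the sample points are assumptions for illustration. The default `power=1` matches weights inversely proportional to distance, while a value of 2 is another common choice.

```python
import math

def shepard_interpolate(points, values, query, power=1):
    """Inverse-distance-weighted average of known values (basic Shepard form)."""
    num = 0.0
    den = 0.0
    for p, v in zip(points, values):
        dist = math.dist(p, query)
        if dist == 0.0:
            return v  # the query coincides with a data point
        w = 1.0 / dist ** power
        num += w * v
        den += w
    return num / den

# Four known values at the corners of the unit square (hypothetical data).
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
values = [0.0, 1.0, 1.0, 2.0]
print(shepard_interpolate(points, values, (0.5, 0.5)))
```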

5. Methods

Ideally, a planet and its atmospheric absorption spectrum are defined by at least 30 parameters. Furthermore, predicting the climate (the ultimate goal) depends not only on these parameters but also on properly accounting for the correlations among them [2]. Hence, the ultimate aim of this research is to develop a model that can describe each of the 20 spectral bins in a 30-dimensional space. As a demonstration of concept in the present work, we focus on a much simpler problem using synthetic data. Here, we create synthetic spectra with six spectral bins, each of which has a height, $h_b(x)$, that varies with respect to a single planetary parameter, x, according to the following functions:

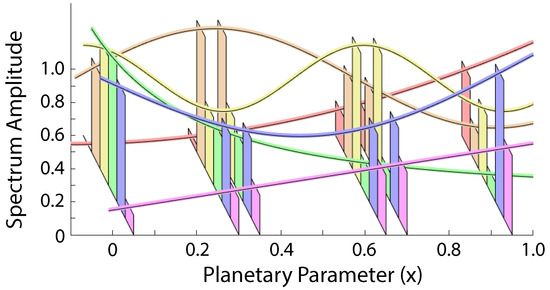

These six spectral bins are generated as data at a planetary parameter value that we simply call x. The x-values of the spectral data are given by , as illustrated in Figure 3. No noise was introduced into the synthetic data in this initial study.

Figure 3.

An illustration of the synthetic spectrum. There are six synthetic spectral bins at x-values: . The spectrum is represented by six spectral bins with amplitudes defined by the functions illustrated by the corresponding curves.

Modeling these spectral bins in one dimension is essentially a problem of fitting six curves to the data (one curve per spectral bin). Each curve was modeled as a Piecewise Cubic Hermite Interpolating Polynomial (PCHIP) [18] defined by six spline knots at positions , for which both the x and y values were determined using the data.

The PCHIP model for each bin is defined by six to-be-determined PCHIP spline knots. The x-positions of two of the spline knots are held at the extreme points, and , to limit the polynomial divergences to infinity, whereas the x-positions of the other four PCHIP spline knots, and , are allowed to vary between 0 and 1 with the restriction that they are not allowed to be closer to one another than . In addition, the resulting PCHIP curves are all constrained to have for all . The x-positions of the four internal PCHIP knots and the y-positions of the six PCHIP knots are found separately for each bin by sampling from the posterior probability using the nested sampling algorithm [26,27]. The resulting solutions are found by computing the mean of the sampled PCHIP functions.
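The constraints on the knot x-positions amount to a restricted prior, which can be sampled by simple rejection. In the sketch below, the domain end points 0 and 1, the minimum separation `min_sep=0.05`, the choice to apply the separation to the end knots as well, and the function name are all hypothetical; the actual values used in this study are not reproduced here.

```python
import random

def sample_knot_positions(n_interior=4, min_sep=0.05, rng=None, max_tries=10_000):
    """Draw sorted knot x-positions with fixed end knots and a minimum spacing."""
    rng = rng or random.Random()
    for _ in range(max_tries):
        interior = sorted(rng.uniform(0.0, 1.0) for _ in range(n_interior))
        knots = [0.0] + interior + [1.0]  # end knots held fixed (assumed 0 and 1)
        gaps = [b - a for a, b in zip(knots, knots[1:])]
        if min(gaps) >= min_sep:  # reject draws with knots that are too close
            return knots
    raise RuntimeError("no valid knot configuration found")

knots = sample_knot_positions(rng=random.Random(0))
print(knots)
```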

To perform the posterior sampling using nested sampling, a Gaussian likelihood is employed, which depends on the sum of the square differences between the synthetic spectral bin heights, , at positions , and the value of the PCHIP curve for that bin at , , which is defined by the set of spline knots , where indexes the bin number, and indexes the specific spline knot (with the values and , fixed for all bins b). The log likelihood is then given by

where the standard deviation is set to .
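To show how a Gaussian likelihood and nested sampling fit together, the following is a deliberately small sketch rather than the implementation used in this study. It replaces the PCHIP curve with a flat curve of unknown height `theta`, uses a uniform prior on [0, 1], draws replacement points by plain rejection from the prior, and estimates only the log-evidence. The data values, `sigma = 0.1`, the number of live points, and all function names are hypothetical.

```python
import math
import random

def gaussian_log_likelihood(theta, data, sigma):
    """Log-likelihood of a flat curve of height theta given bin heights `data`."""
    n = len(data)
    sq = sum((h - theta) ** 2 for h in data)
    return -0.5 * sq / sigma**2 - n * math.log(sigma * math.sqrt(2 * math.pi))

def log_add(a, b):
    """Return log(exp(a) + exp(b)) without overflow."""
    if a == -math.inf:
        return b
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))

def nested_sampling(log_like, sample_prior, n_live=100, n_iter=600, rng=None):
    """Minimal nested sampling; returns an estimate of the log-evidence."""
    rng = rng or random.Random()
    live = [sample_prior(rng) for _ in range(n_live)]
    live_logl = [log_like(t) for t in live]
    log_z = -math.inf
    log_x_prev = 0.0  # log of the enclosed prior mass, starts at log(1)
    for i in range(1, n_iter + 1):
        worst = min(range(n_live), key=live_logl.__getitem__)
        log_x = -i / n_live  # expected shrinkage of the prior mass
        log_w = math.log(math.exp(log_x_prev) - math.exp(log_x))
        log_z = log_add(log_z, live_logl[worst] + log_w)
        log_x_prev = log_x
        threshold = live_logl[worst]
        while True:  # rejection-sample the prior above the threshold
            cand = sample_prior(rng)
            cand_logl = log_like(cand)
            if cand_logl > threshold:
                break
        live[worst], live_logl[worst] = cand, cand_logl
    # Add the contribution of the remaining live points.
    log_w_live = log_x_prev - math.log(n_live)
    for ll in live_logl:
        log_z = log_add(log_z, ll + log_w_live)
    return log_z

data = [0.5] * 5  # hypothetical noise-free bin heights
sigma = 0.1
log_z = nested_sampling(lambda t: gaussian_log_likelihood(t, data, sigma),
                        lambda r: r.uniform(0.0, 1.0), rng=random.Random(1))
print("estimated log-evidence:", log_z)
```

Rejection sampling from the prior becomes inefficient as the likelihood threshold rises, so practical implementations use more elaborate moves to replace the discarded point; the structure of the loop is otherwise the same.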

6. Results

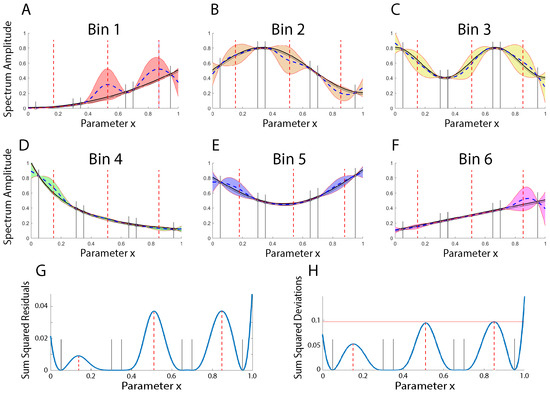

Figure 4A–F depict the mean estimated spectral functions (dashed curves) for each bin, estimated using the six synthetic spectra illustrated in Figure 3. The mean interpolants lie close to the true curves used to generate the data (Figure 3). The standard deviations of the estimated interpolants are illustrated by the colored/shaded regions, which indicate the predictive distribution. That is, the shaded regions, together with the mean denoted by the dashed curve, summarize what is known about the functional relationship between the planetary parameter x and the planetary spectra. The most informative regions for sampling data are those for which the entropy of the predictive distribution is maximum. Such informed sampling is known as Bayesian Adaptive Exploration [16,28,29]. What is inferred are the posterior probabilities of the spline knot positions in x and y, and these posterior distributions are Gaussian. The predictive distribution of the spline curve is not Gaussian. However, for a Gaussian distribution, the entropy increases monotonically with the variance (it equals half the logarithm of the variance plus a constant), so the variance of the predictive distribution, which measures its spread, is often a relatively good approximate measure of the entropy. As a result, the location of the greatest entropy in the interior of the domain of x is given by the peak at x = 0.85 (Figure 4H). It will therefore be most informative to collect another data sample at x = 0.85.

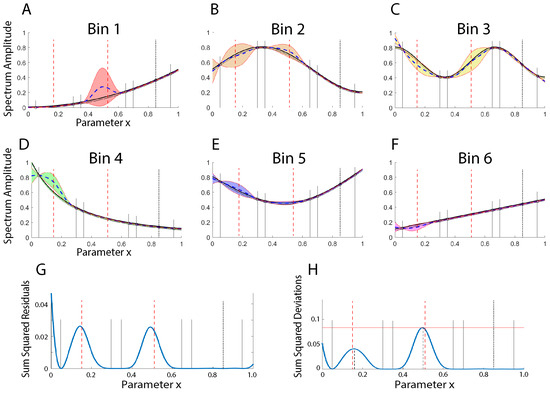

Figure 4.

(A–F) An illustration of the estimated mean functions (dashed curves) and standard deviations (colored/shaded regions) for the six spectral bins. The true function from which the data were generated is shown as the solid curve (see Figure 3). The solid vertical lines indicate the positions of the data. The red vertical dashed lines illustrate the locations of greatest uncertainty, which indicate where further spectral measurements would be most informative. (G) An illustration of the sum of the squared residuals (summed over bins) between the estimated and true functional relationships. (H) An illustration of the sum of the squared standard deviations (summed over bins) of the estimated functions. Larger values indicate regions of (overall) greater uncertainty, where more information is to be gained by obtaining data.

Figure 4G illustrates the sum of the squared residuals, which represents the overall difference between the predicted spectra and the correct solution. Figure 4H illustrates the combined uncertainties in the predictive distribution, as a function of the parameter x, found by summing the squared standard deviations (variances) of the interpolants over the bins. This plot summarizes where information is most needed. While the greatest uncertainty remains at the end point, there are three regions between the data points (at x = 0.15, 0.51, and 0.85) at which the uncertainties are high and where the most information is to be gained.
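The selection of the next sampling location from the summed variances can be sketched as follows. The nested-list layout of the sampled curves, the tiny made-up samples, the grid, and the function names are assumptions for illustration; in practice the samples would be curves evaluated from posterior draws of the spline knots.

```python
from statistics import pvariance

def summed_variance(curve_samples):
    """curve_samples[b][s][j]: bin b, posterior sample s, grid point j."""
    n_grid = len(curve_samples[0][0])
    total = [0.0] * n_grid
    for bin_samples in curve_samples:
        for j in range(n_grid):
            total[j] += pvariance([sample[j] for sample in bin_samples])
    return total

def most_informative_index(total_variance):
    """Index of the largest summed variance, excluding the two end points."""
    interior = range(1, len(total_variance) - 1)
    return max(interior, key=total_variance.__getitem__)

grid = [0.0, 0.25, 0.5, 0.75, 1.0]
curve_samples = [
    # Bin 1: three sampled curves that disagree only at x = 0.5.
    [[0.0, 1.0, 2.0, 1.0, 0.0], [0.0, 1.0, 1.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0, 0.0]],
    # Bin 2: three sampled curves that disagree only at x = 0.75.
    [[1.0, 1.0, 1.0, 4.0, 1.0], [1.0, 1.0, 1.0, 0.0, 1.0], [1.0, 1.0, 1.0, 2.0, 1.0]],
]
total = summed_variance(curve_samples)
next_x = grid[most_informative_index(total)]
print("collect the next spectrum at x =", next_x)
```

Here the variance serves as a proxy for the entropy of the predictive distribution, so the chosen point is only approximately the maximum-entropy location when that distribution is not Gaussian.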

Figure 5 illustrates the improvements made to the model by including another data spectrum at x = 0.85. Note that the uncertainty has been brought under control not only on the right-hand side of the domain but also at the boundary. It is important to note that the shapes and positions of the remaining peaks in Figure 5G,H have shifted slightly. This highlights the fact that the new information gained by sampling at x = 0.85 has changed the overall landscape. Despite this, sampling data at the original positions of x = 0.15 and 0.51 will still be useful.

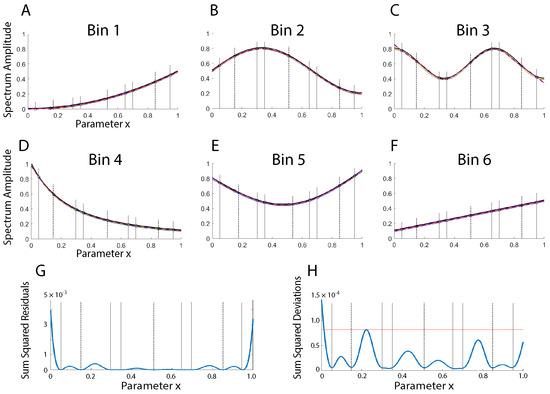

Figure 5.

(A–F) An illustration of the estimated mean functions (dashed lines) and standard deviations (colored/shaded regions) for the six spectral bins with an additional data spectrum at the location x = 0.85, indicated by the black vertical dotted line. (G) illustrates that the fit has been improved in the region where . (H) The sum of the squared deviations shows that collecting data near x = 0.15 and x = 0.51 would still be most informative, even though the shapes and positions of these peaks have shifted slightly: the red vertical dashed lines (indicating the initial positions expected to be most informative) now deviate slightly from the peaks of the summed squared deviations.

Figure 6 illustrates the utility of including data at all three parameter values of x = 0.15, 0.51, and 0.85. Figure 6G shows that the function is rather well modeled, with the sum of the squared residuals being below everywhere. The peaks in the uncertainties in the predictive distribution (Figure 6H) indicate where collecting more data could improve the model further.

Figure 6.

(A–F) An illustration of the estimated mean functions (dashed curves, which lie under the solid curves) and standard deviations (colored/shaded regions) for the six spectral bins including additional data at all three parameter values of x = 0.15, 0.51, and 0.85, illustrated by the black vertical dotted lines. (G) This illustrates that the fit is very good, with the sum of the squared residuals well below . (H) The sum of the squared deviations (solid blue curve) shows where collecting additional measurements, if desired, will be most informative.

7. Discussion

We have demonstrated the basic ideas behind the project to develop a machine-learning-based model of exoplanetary atmospheric absorption spectra based on planetary parameters. This paper presents a proof-of-concept focused on modeling a small number of spectral bins for one planetary parameter in one dimension. Although a PCHIP spline model was convenient to use in this simplified case, it is clear that representing the model as a collection of spline knots in 30 dimensions will be impractical. This compels us to consider more sophisticated models in future work on the full problem. By considering a simplified problem, we were able to demonstrate the utility of Bayesian Adaptive Exploration: sampling solutions from the posterior and identifying where the predictive distribution is broadest reveals the planetary parameter values for which further sampling would be most informative. This alone is a significant advantage over more simplistic averaging or modeling techniques.

Author Contributions

Conceptualization: K.H.K. and M.J.W.; methodology: V.T., K.H.K., and M.J.W.; software: V.T. and K.H.K.; writing—original draft preparation: V.T.; writing—review and editing: V.T., K.H.K., and M.J.W.; supervision: K.H.K. and M.J.W.; project administration: K.H.K. and M.J.W.; funding acquisition: K.H.K. and M.J.W. All authors have read and agreed to the published version of the manuscript.

Funding

The authors V.T. and K.H.K. were supported by the NASA Interdisciplinary Consortia for Astrobiology Research (ICAR) grant 22-ICAR22_2-0015. M.J.W. was supported by NASA’s Nexus for Exoplanet System Science (NExSS) and the NASA Interdisciplinary Consortia for Astrobiology Research (ICAR). M.J.W. also acknowledges support from the GSFC Sellers Exoplanet Environments Collaboration (SEEC), which is funded by the NASA Planetary Science Divisions Internal Scientist Funding Model, and ROCKE-3D, which is funded by the NASA Planetary and Earth Science Divisions Internal Scientist Funding Model.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This study used synthetic data generated using the mathematical functions described in Section 5 of this paper.

Acknowledgments

The authors V.T., K.H.K. and M.J.W. thank the anonymous referee for their detailed review and constructive suggestions, which greatly improved this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Way, M.J.; Aleinov, I.; Amundsen, D.S.; Chandler, M.A.; Clune, T.L.; Del Genio, A.D.; Fujii, Y.; Kelley, M.; Kiang, N.Y.; Sohl, L.; et al. Resolving orbital and climate keys of Earth and extraterrestrial environments with dynamics (ROCKE-3D) 1.0: A general circulation model for simulating the climates of rocky planets. Astrophys. J. Suppl. Ser. 2017, 231, 12.

2. The LUVOIR Team. The LUVOIR mission concept study final report. arXiv 2019, arXiv:1912.06219.

3. Schmid, P.; Grenfell, J.L.; Godolt, M.; von Paris, P.; Stock, J.W.; Lehmann, R.; Gebauer, S.; Rauer, H. Habitability of tidally locked M-dwarf planets is sensitive to atmospheric CO2 concentration. Proc. Natl. Acad. Sci. USA 2022, 119, e2112930118.

4. Way, M.J.; Del Genio, A.D.; Kiang, N.Y.; Sohl, L.E.; Grinspoon, D.H.; Aleinov, I.; Kelley, M.; Clune, T.L. Climates of coupled land–ocean worlds with carbon dioxide. J. Geophys. Res. Planets 2020, 125, e2019JE006276.

5. Way, M.; Ackerman, A.; Aleinov, I.; Barnes, R.; Chandler, M.; Colose, C.; Fauchez, T.; Guzewich, S.; Harman, C.; Kiang, N.; et al. Habitability Space: Exploring a New Frontier via Climate Models and Planetary Statistics; NASA Goddard Institute for Space Studies: New York, NY, USA, 2023; Unpublished Grant Proposal.

6. MacDonald, R.J.; Batalha, N.E. A catalog of exoplanet atmospheric retrieval codes. Res. Notes AAS 2023, 7, 54.

7. Waldmann, I.P.; Tinetti, G.; Rocchetto, M.; Barton, E.J.; Yurchenko, S.N.; Tennyson, J. TAU-REX I: A Next Generation Retrieval Code for Exoplanetary Atmospheres. Astrophys. J. 2015, 802, 107.

8. Irwin, P.; Teanby, N.; de Kok, R.; Fletcher, L.; Howett, C.; Tsang, C.; Wilson, C.; Calcutt, S.; Nixon, C.; Parrish, P. The NEMESIS planetary atmosphere radiative transfer and retrieval tool. J. Quant. Spectrosc. Radiat. Transf. 2008, 109, 1136–1150.

9. Line, M.R.; Wolf, A.S.; Zhang, X.; Knutson, H.; Kammer, J.A.; Ellison, E.; Deroo, P.; Crisp, D.; Yung, Y.L. A systematic retrieval analysis of secondary eclipse spectra. I. A comparison of atmospheric retrieval techniques. Astrophys. J. 2013, 775, 137.

10. Rengel, M.; Adamczewski, J. Radiative transfer and inversion codes for characterizing planetary atmospheres: An overview. Front. Astron. Space Sci. 2023, 10, 1176740.

11. NASA Earth Observatory. General Circulation Models (GCMs). 2025. Available online: https://earthobservatory.nasa.gov/features/ClimateModeling (accessed on 27 July 2025).

12. Nixon, M.C.; Madhusudhan, N. Aura-3D: A three-dimensional atmospheric retrieval framework for exoplanet transmission spectra. Astrophys. J. 2022, 935, 73.

13. Tahseen, T.P.A.; Mendonça, J.M.; Yip, K.H.; Waldmann, I.P. Enhancing 3D planetary atmosphere simulations with a surrogate radiative transfer model. Mon. Not. R. Astron. Soc. 2024, 535, 2210–2227.

14. MITgcm Group. MITgcm User Manual. 2025. Available online: https://mitgcm.readthedocs.io/en/latest/ (accessed on 27 July 2025).

15. Ranftl, R.; von der Linden, W. Bayesian surrogate analysis and uncertainty propagation. Math. Comput. Appl. 2021, 26, 6.

16. Loredo, T.J. Bayesian adaptive exploration. AIP Conf. Proc. 2004, 707, 330–346.

17. Knuth, K.H. Optimal data-based binning for histograms and histogram-based probability density models. Digit. Signal Process. 2019, 95, 102581.

18. Fritsch, F.N.; Carlson, R.E. Monotone piecewise cubic interpolation. SIAM J. Numer. Anal. 1980, 17, 238–246.

19. Ahlberg, J.H.; Nilson, E.; Walsh, J.L. The Theory of Splines and Their Applications; Academic: Cambridge, MA, USA, 1967.

20. Burden, R.; Faires, J. Numerical Analysis; Brooks/Cole: Pacific Grove, CA, USA, 2004.

21. Dorn, W.S.; McCracken, D.D.; Gass, S.I. Modeling and Analysis of Manufacturing Systems; John Wiley & Sons: Hoboken, NJ, USA, 2006.

22. Lux, T.C.H.; Watson, L.T.; Chang, T.H.; Hong, Y.; Cameron, K. Interpolation of sparse high-dimensional data. Numer. Algorithms 2021, 88, 281–313.

23. Lux, T.C.H.; Watson, L.T.; Chang, T.H.; Bernard, J.; Li, B.; Yu, X.; Xu, L.; Back, G.; Butt, A.R.; Cameron, K.W.; et al. Novel meshes for multivariate interpolation and approximation. In Proceedings of the ACMSE 2018 Conference, Richmond, KY, USA, 29–31 March 2018; pp. 1–7.

24. Schaback, R. Multivariate interpolation by polynomials and radial basis functions. Constr. Approx. 2005, 21, 293–317.

25. Thacker, W.I.; Zhang, J.; Watson, L.T.; Birch, J.B.; Iyer, M.A.; Berry, M.W. Algorithm 905: SHEPPACK: Modified Shepard algorithm for interpolation of scattered multivariate data. ACM Trans. Math. Softw. (TOMS) 2010, 37, 1–20.

26. Skilling, J. Nested sampling for general Bayesian computation. Bayesian Anal. 2006, 1, 833–859.

27. Skilling, J. Bayesian computation in big spaces-nested sampling and Galilean Monte Carlo. AIP Conf. Proc. 2012, 1443, 145–156.

28. Knuth, K.H. Intelligent machines in the twenty-first century: Foundations of inference and inquiry. Philos. Trans. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 2003, 361, 2859–2873.

29. Malakar, N.K.; Knuth, K.H. Entropy-Based Search Algorithm for Experimental Design. AIP Conf. Proc. 2011, 1305, 157–164.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).