Abstract

Identifying knowledge gaps and predictors of performance is an established way to inform curricular change. This cross-sectional study investigates the subjective and objective competency of 52 medical students at McGill University in musculoskeletal (MSK) medicine, with a focus on orthopaedic surgery. We surveyed medical students to assess their confidence in orthopaedic surgery and their perceptions of its teaching. The students then completed a 25-question orthopaedics-focused exam as an objective assessment of their knowledge. We calculated descriptive statistics, compared exam performance across academic years, analyzed predictors of exam scores, and evaluated the accuracy of student self-assessment. Students reported lower confidence in orthopaedic surgery than in many other specialties, exam scores varied significantly across academic years (p = 0.007), and predicted exam performance was the only significant predictor of test score in multiple linear regression (R² = 0.313, p = 0.025). Calibration analysis revealed substantial miscalibration: students with higher predicted scores tended to overestimate their performance, while those with lower predictions tended to underestimate it (intercept = 27.2, slope = 0.54). A Bland–Altman plot demonstrated wide limits of agreement (LoA) between predicted and actual scores (mean bias −1.2%, 95% LoA −35.0% to +32.6%). These findings highlight meaningful orthopaedic knowledge gaps and miscalibrated self-assessment, emphasizing the need for targeted, structured educational interventions in the MSK curriculum.

1. Introduction

Musculoskeletal complaints are among the most common reasons for physician visits, accounting for approximately 15–30% of primary care consultations [1,2]. Despite this, medical education often lacks sufficient MSK training, leaving graduates underprepared [2,3]. In fact, the Association of American Medical Colleges has highlighted that medical schools may be placing their students at a disadvantage when they encounter MSK-related conditions [1,4]. This concern has been explored across several educational parameters in North American programs, with many institutions found to lack fundamental teaching of MSK basic science and MSK-focused clinical rotations, leading to inadequate student competency upon entering residency [5,6]. A recent systematic review also found that multiple programs dedicate less than 3% of their total curriculum to MSK content, indicating substantial underrepresentation [7]. This issue is especially pressing because general physicians, not just specialists, are often the first-line healthcare providers for orthopaedic complaints [2,6].

The issue of inadequate MSK training in medical curricula has been documented internationally, with studies conducted in Europe and Australia also indicating similar gaps in MSK knowledge among medical graduates [8,9]. Curriculum evaluations have revealed that limited exposure to MSK topics during undergraduate medical training negatively affects student confidence and competence, which can subsequently affect patient care as well as clinical outcomes [4,8,9]. Moreover, a 2025 modified Delphi consensus study on fourth-year MSK courses in the United States found that even programs designed to improve clinical exposure failed to resolve student confidence deficits without concrete curricular interventions, such as longitudinal review of physical exam skills and relevant anatomy [10]. These observations highlight the importance of international collaboration in identifying specific deficiencies in MSK curricula and the need to address them systematically.

Previous research has highlighted mismatches between medical students’ self-perceived competence (subjective confidence) and their actual MSK knowledge (performance on a standardized test) [1,3,5]. This aligns with cognitive biases described in educational theory, most notably the Dunning–Kruger effect, which posits that learners with limited knowledge often overestimate their capabilities, impeding their ability to assess themselves accurately and to engage in self-directed learning [11]. Addressing this mismatch between confidence and competence is vital for effective education, since accurate self-assessment underpins lifelong professional development through self-regulated learning [12].

These educational challenges are important to consider in the context of established learning theories. Kolb’s experiential learning theory underscores the value of learning through active experimentation, reflective observation and abstract conceptualization to develop real-world competence [13,14]. Theories of situated learning and constructivism reinforce that MSK skills are best acquired in authentic clinical contexts, supported by methods such as simulation and debriefing [15,16]. Furthermore, self-determination theory suggests that students’ intrinsic motivation stems from their perceived competence [17].

Established in 1829, McGill University’s Faculty of Medicine and Health Sciences is the oldest medical school in Canada [18]. The program was most recently revised in 2013 and is divided into three curricular components over four years. In the first 18 months, students complete the Fundamentals of Medicine and Dentistry, covering basic and clinical science through systems-based blocks. For example, the “Movement” block covers MSK medicine over four weeks, or approximately 7% of the pre-clerkship curriculum. While increasing MSK content may help address existing gaps, doing so would require reallocating time from other subjects, a contentious process beyond the scope of this study. Pre-clerkship teaching methods include lectures, histology and cadaveric anatomy laboratories, and small-group discussions. Students then progress to the six-month Transition to Clinical Practice component, before entering the two-year clerkship component of the curriculum, which culminates in a Doctor of Medicine and Master of Surgery degree [19]. To our knowledge, medical students’ competency in MSK medicine at McGill University has not been previously assessed.

The objectives of this study were to assess senior medical students’ self-reported confidence in orthopaedics, objectively measure their knowledge in the field, and identify perceived barriers to orthopaedic education. By examining these factors, we aimed to find specific curricular gaps and suggest targeted improvements to strengthen MSK education and better prepare medical students for clinical practice.

2. Materials and Methods

2.1. Study Design and Participants

We conducted this cross-sectional study at McGill University’s Faculty of Medicine, where we employed a voluntary convenience sampling strategy, inviting second-, third- and fourth-year medical students to complete a two-part survey on Microsoft Forms. We recruited participants via social media announcements on class forums in order to maximize outreach.

Survey A (Document S1) aimed to assess the subjective competence of participants in orthopaedic surgery by examining the following aspects:

- (a) Students’ self-reported confidence in orthopaedic surgery compared to 19 other medical specialties using a five-point Likert scale (ranging from 1 = very unconfident to 5 = very confident).
- (b) Students’ self-reported clinical knowledge in orthopaedic surgery (i.e., estimating the percentage of orthopaedic knowledge retained from previous coursework).
- (c) Students’ perceived educational gaps/barriers in MSK teaching (participants could select multiple specific content areas such as oncology, sports medicine, trauma, etc.).
- (d) Students’ predicted performance on the exam.

We developed Survey A based on previously published research highlighting important educational gaps and commonly encountered MSK clinical scenarios [3,20]. An experienced faculty member, a senior orthopaedic surgery resident and two senior medical students independently reviewed the questions to ensure clarity, comprehensiveness and relevance to the content in the curriculum.

Survey B (Document S2) aimed to evaluate the performance of medical students in orthopaedic surgery topics using questions from the MSK30 exam, a validated tool to assess musculoskeletal knowledge, focusing on clinically relevant MSK conditions encountered by both primary care practitioners and specialists [6,20]. An orthopaedic surgery senior resident carefully selected the 25 multiple-choice questions included in Survey B based on their relevance to frequently encountered conditions in clinical practice and alignment with learning objectives in the curriculum. Topics ranged from nerve compression syndromes and common fractures to MSK oncology and paediatric conditions. The difficulty and clinical pertinence of these questions were judged to reflect the expected competencies of graduating medical students.

Although Surveys A and B were not formally validated as standardized testing instruments, they were developed from previously published research and peer-reviewed question banks. They were subsequently reviewed by a faculty-affiliated orthopaedic surgeon for accuracy and curricular alignment.

2.2. Ethical Review

The McGill University Institutional Review Board exempted this study from ethical review, under Article 2.5 of the Tri-Council Policy Statement (TCPS) on quality assurance and quality improvement studies. We obtained written informed consent electronically from participants prior to participation.

2.3. Data Analysis

We summarized subjective competence, perceived knowledge retention, and exam performance using descriptive statistics. We used paired t-tests to compare students’ self-reported confidence in orthopaedic surgery with their confidence in other medical and surgical specialties. Because exam scores were not normally distributed, as confirmed by visual inspection and Shapiro–Wilk tests, we compared performance across academic years with a Kruskal–Wallis test, followed by pairwise Mann–Whitney U tests with Holm-adjusted p values and Cliff’s δ effect sizes. Multiple linear regression analysis explored potential predictors of exam performance, namely self-reported Likert-scale confidence in orthopaedics, confidence in answering questions and encountering scenarios related to orthopaedics, perceived knowledge gaps in the topic, estimated retained knowledge, and predicted exam performance. Normality of residuals, multicollinearity, and homoscedasticity were checked before model interpretation. We used Spearman’s correlation tests to assess the association between clinical exposure and confidence. We used three complementary approaches to evaluate mismatches between predicted and actual exam performance: Wilcoxon signed-rank tests, calibration curve analysis (linear regression with confidence intervals), and Bland–Altman plots to visualize bias and limits of agreement. Statistical significance was set at an alpha of 0.05.

We conducted statistical analysis using JASP (version 0.17.2.0; JASP Team, Amsterdam, The Netherlands). The Google Colab platform was used for custom code execution, and the figures were generated using the Matplotlib plotting library, version 3.6.3. The dataset was managed securely and was anonymized prior to its analysis, with all identifying information removed as per ethical standards for educational research.
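The custom Colab code is not included with the article. The following is a hedged, standard-library-only sketch of what such code might compute for the calibration and Bland–Altman analyses. It fits an ordinary least-squares calibration line (actual regressed on predicted). It then computes the Bland–Altman bias and 95% limits of agreement, with difference = predicted − actual and limits = bias ± 1.96 SD. The function names are hypothetical, and plotting (for example with Matplotlib) is omitted.

```python
from statistics import mean, stdev


def calibration_fit(predicted, actual):
    """Ordinary least-squares fit of actual on predicted; returns (intercept, slope)."""
    mx, my = mean(predicted), mean(actual)
    sxy = sum((x - mx) * (y - my) for x, y in zip(predicted, actual))
    sxx = sum((x - mx) ** 2 for x in predicted)
    slope = sxy / sxx
    return my - slope * mx, slope


def bland_altman(predicted, actual, z=1.96):
    """Mean bias and limits of agreement, with difference = predicted - actual."""
    diffs = [p - a for p, a in zip(predicted, actual)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample SD (n - 1 denominator)
    return bias, bias - z * sd, bias + z * sd
```

Perfect calibration corresponds to an intercept of 0 and a slope of 1. The 1.96 multiplier assumes the differences are approximately normally distributed.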

3. Results

3.1. Student Demographics

The study cohort consisted of medical students at McGill University, with 20 (38.5%) in their second year, 12 (23.1%) in their third year, and 20 (38.5%) in their fourth year of medical school. Among the participants, approximately one quarter (26.9%, n = 14) had either completed an elective in orthopaedic surgery or expressed the intention to do so, while the majority (73.1%, n = 38) had not pursued or planned to pursue an orthopaedic surgery elective.

3.2. Survey A

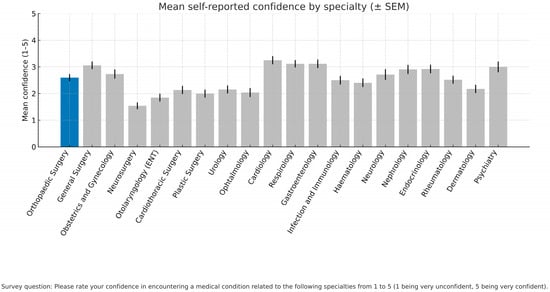

The mean confidence levels of students in various medical and surgical specialties were measured on a Likert scale ranging from 1 to 5, as shown in Figure 1. The mean subjective confidence score in orthopaedic surgery (mean = 2.60, SD = 0.98) was significantly lower than in many other specialties, including cardiology (mean = 3.25, SD = 1.10, t = −3.68, p = 0.001), general surgery (mean = 3.06, SD = 1.06, t = −3.33, p = 0.002), and respirology (mean = 3.12, SD = 1.00, t = −3.01, p = 0.004). However, some surgical specialties had even lower mean confidence levels among students, notably neurosurgery (mean = 1.54, SD = 0.94, t = 6.38, p < 0.001) and otolaryngology (mean = 1.85, SD = 1.07, t = 4.38, p < 0.001).

Figure 1.

Mean self-reported confidence levels in various medical and surgical specialties. Students rated their confidence in encountering a condition in 20 medical specialties on a Likert scale from 1 to 5 (1 = very unconfident, 5 = very confident). Bars show the mean Likert score for each specialty, and error bars indicate 95% confidence intervals.
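The reported t statistics are negative against cardiology and positive against neurosurgery. Those signs are consistent with paired differences computed as orthopaedic confidence minus confidence in the comparison specialty. Below is a minimal standard-library sketch of that statistic, assuming this sign convention and using hypothetical ratings. The p value would then come from the t distribution with n − 1 degrees of freedom, as provided by JASP or, for example, `scipy.stats.ttest_rel`.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(ortho, other):
    """Paired t statistic for the differences ortho - other; returns (t, df)."""
    diffs = [a - b for a, b in zip(ortho, other)]
    n = len(diffs)
    # Mean difference divided by its standard error (sample SD / sqrt(n)).
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Hypothetical Likert ratings from five students (not study data).
t, df = paired_t([2, 3, 2, 1, 3], [3, 4, 4, 2, 3])
```

A negative t therefore means lower confidence in orthopaedics than in the comparison specialty.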

The numbers and percentages of students who selected each possible answer to the qualitative questions in Survey A are summarized in Table 1. Most participants felt “mildly confident” when asked about their confidence in encountering orthopaedic clinical scenarios (53.8%, n = 28), while only 9.6% (n = 5) felt “confident”, and over one third reported feeling “unconfident” (36.5%, n = 19). For confidence in answering theoretical orthopaedic questions, the answer distribution was similar: 57.7% (n = 30) felt “mildly confident”, 9.6% (n = 5) felt “confident”, and 32.7% (n = 17) felt “unconfident”. Moreover, the majority of students (61.5%, n = 32) estimated that they retained 30 to 59% of their orthopaedic knowledge from prior coursework, while approximately one quarter (23.1%, n = 12) estimated a retention of 60 to 89%. Of note, no students reported retaining more than 90% of their orthopaedic knowledge, and a small group of students (15.4%, n = 8) reported retaining less than 30%. The most frequently selected gaps in orthopaedic knowledge were related to the spine and to MSK oncology, each identified by approximately two thirds of students (67.3%, n = 35). Other commonly reported gaps included paediatrics (53.8%, n = 28), hand and wrist (53.8%, n = 28), and sports medicine (50.0%, n = 26). When students were asked about potential areas for curricular improvement, more than four out of five (82.7%, n = 43) identified hospital-based teaching, followed by clinical orientation in lectures and coursework (57.7%, n = 30).

Table 1.

Responses to qualitative orthopaedic surgery questions.

3.3. Survey B

The average exam score across all participants was 14.5 out of 25 (58.0%). Performance varied considerably between questions, with the most challenging question, “A patient has a disc herniation pressing on the 5th lumbar nerve root. How is motor function of 5th nerve root tested?”, receiving only 12 correct answers from the 52 participants (23.1%), and the easiest question, “Which nerve is compressed in carpal tunnel syndrome?”, receiving 46 correct answers (88.5%).

Exam performance was categorized by academic year, as displayed in Table 2. Fourth-year medical students obtained the highest scores, with a mean of 65.3% (SD = 14.5%) and a median of 70.5%. Third-year students earned a mean of 63.1% (SD = 17.5%) and a median of 59.0%, while second-year students had the lowest scores, with a mean of 47.5% (SD = 17.0%) and a median of 50.5%.

Table 2.

Exam performance and normality testing by academic year.

The Kruskal–Wallis test revealed significant differences in exam performance across academic years (H = 10.02, p = 0.007), as shown in Table 3. Pairwise Mann–Whitney U tests indicated that fourth-year medical students scored significantly higher than second-year medical students after Holm adjustment (U = 86.5, δ = −0.568, p = 0.007). Third-year students also tended to outperform second-year students, with a medium effect size, although the difference only approached significance after adjustment (U = 67.5, δ = −0.438, p = 0.086). No significant difference was found between third- and fourth-year students (U = 108.5, δ = −0.096, p = 0.668).

Table 3.

Pairwise comparisons of exam scores across academic years.
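As a consistency check, Cliff's δ for groups of sizes n₁ and n₂ relates to the Mann–Whitney statistic through the identity below. Tied pairs count as one half in U. The check assumes that each U in Table 3 is the statistic for the lower academic year. Substituting the reported values reproduces the effect sizes given in the text to three decimals.

```latex
\begin{aligned}
\delta &= \frac{2U}{n_1 n_2} - 1\\
\delta_{\text{year 2 vs. year 4}} &= \frac{2(86.5)}{20 \times 20} - 1 = 0.4325 - 1 = -0.5675 \approx -0.568\\
\delta_{\text{year 2 vs. year 3}} &= \frac{2(67.5)}{20 \times 12} - 1 = 0.5625 - 1 = -0.4375 \approx -0.438\\
\delta_{\text{year 3 vs. year 4}} &= \frac{2(108.5)}{12 \times 20} - 1 \approx 0.9042 - 1 = -0.0958 \approx -0.096
\end{aligned}
```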

3.4. Predicted vs. Actual Exam Performance

To further examine predictors of exam performance, we performed a multiple linear regression analysis with the following variables: Likert-scale confidence in orthopaedic surgery, perceived knowledge gaps, retained knowledge, predicted exam performance, confidence in encountering scenarios related to orthopaedics, and confidence in answering questions related to orthopaedics. As summarized in Table 4, predicted exam performance was the only statistically significant predictor of test scores (p = 0.025). The model’s R² of 0.313 indicates that these predictors together explained 31.3% of the variance in total exam scores. Of note, none of the variables directly related to confidence in orthopaedic surgery were significant predictors of exam performance, suggesting that self-reported confidence was not aligned with exam outcomes. However, we found a moderate positive correlation between Likert-scale confidence in orthopaedic surgery and having either previously completed an elective in the specialty or intending to do so (ρ = 0.47, p < 0.001).

Table 4.

Multiple linear regression analysis of potential predictors of exam score.

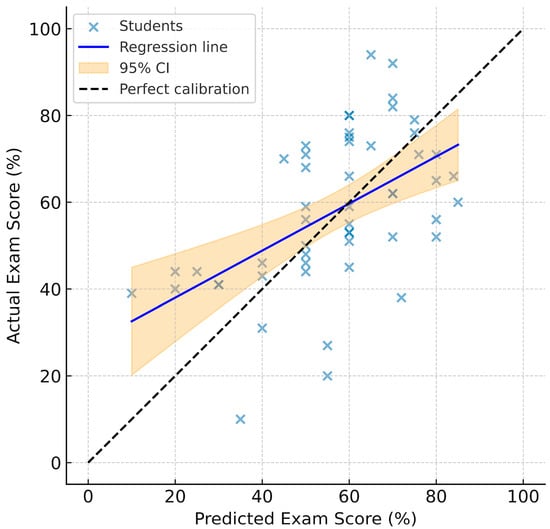

A Wilcoxon signed-rank test revealed no significant difference between predicted and actual exam scores (W = 596.5, p = 0.533; median difference +2.5%). However, further analysis demonstrated substantial miscalibration at the individual level. The calibration curve, shown in Figure 2, displayed wide scatter between predicted and actual scores, with a sizeable departure from the line of perfect calibration (intercept = 27.2, p < 0.001; slope = 0.54, p < 0.001; R² = 0.277). With a slope significantly less than 1, students at the high end of the curve frequently overestimated their results, while students at the low end frequently underestimated them; for every 10-percentage-point increase in predicted score, actual performance rose by only 5.4 percentage points. To quantify how far students were from their predicted exam scores, we performed a Bland–Altman analysis, with the difference defined as predicted minus actual score (Figure S1). The mean bias was −1.2%, and the 95% limits of agreement were wide, from −35.0% to +32.6%. This indicates that while the overall mean error was small, individual errors were often large; 32 of 52 students mispredicted their scores by 10 to 30 percentage points.

Figure 2.

Calibration curve comparing predicted exam performance to achieved exam performance. The 45° dashed line represents perfect calibration (predicted performance = actual performance), while the solid line is the fitted regression line (Actual = 27.2 + 0.54 × Predicted; R² = 0.277), and the shaded area indicates the 95% confidence interval for the mean regression line.
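Two quantities implied by the reported figures may help in reading these results. Because the coefficients and limits are rounded, both are approximate:

- The fitted line crosses the line of perfect calibration at the predicted score P* where fitted actual equals predicted.
- Since the Bland–Altman limits equal the bias ± 1.96 SD of the differences, they imply the SD of individual prediction errors.

```latex
\begin{aligned}
P^{*} &= 27.2 + 0.54\,P^{*} \;\Rightarrow\; P^{*} = \frac{27.2}{1 - 0.54} = \frac{27.2}{0.46} \approx 59.1\%\\
\mathrm{SD}_{d} &= \frac{32.6 - (-35.0)}{2 \times 1.96} = \frac{67.6}{3.92} \approx 17.2 \text{ percentage points}\\
\bar{d} &= \frac{32.6 + (-35.0)}{2} = -1.2\%
\end{aligned}
```

On the fitted line, predictions above roughly 59% exceed the expected actual score and predictions below it fall short. This threshold is close to the cohort's mean score of 58.0%.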

4. Discussion

This study highlights gaps in orthopaedic education at McGill University. An important finding was the significantly lower confidence among students in orthopaedic surgery compared to other specialties, such as general surgery and cardiology. This aligns with prior research indicating that MSK education is often insufficiently addressed in medical school curricula despite the high clinical prevalence of MSK issues [3,5,6,21]. This underrepresentation can leave students feeling underprepared for clerkship, potentially influencing their career decisions and discouraging them from pursuing related electives [2]. For example, the most challenging question in our study pertained to disc herniation compressing the fifth lumbar nerve root, an MSK issue frequently encountered by family physicians, underscoring the disconnect between student preparedness and real-world clinical demands [6,22]. Although concerning, these findings echo reports from across Canada, where most medical students perceive their MSK training as inadequate and up to 50% of practicing physicians fail basic MSK competency exams [7]. This gap between education and clinical needs has also been observed elsewhere, with a systematic review finding that only around 15% of US medical schools require an MSK clinical rotation, and that UK medical graduates report low confidence in their ability to manage orthopaedic problems [23].

As expected, our findings show significant improvement in exam scores between second- and fourth-year medical students, which most likely reflects the educational impact of clinical rotations. Despite this improvement, the median performance of third-year students was still below the provincial passing grade of 60% [24], and the means of third- and fourth-year students were only a few percentage points above it, possibly highlighting not only suboptimal pre-clerkship MSK education, but also insufficient exposure throughout clerkship [25]. From a theoretical perspective, these findings reflect Kolb’s experiential learning theory, which emphasizes the crucial role of clinical exposure and active experimentation in developing student competency [13,26]. Indeed, Kolb’s framework for learning is a cyclical process whereby concrete experiences stimulate reflective observation, conceptualization and active experimentation, which strengthen competency and confidence [13,26]. Our data suggest that limited MSK clinical exposure, especially prior to clerkship, likely limits students’ experiential learning cycle, impeding knowledge integration. Consistent with this finding, educational experts have called for longitudinal reinforcement of MSK learning by introducing core physical exam skills early on and reinforcing them during clerkship [10].

In the regression model, students’ predicted exam performance was the only significant predictor of their actual scores, whereas self-reported confidence was not. This reinforces the value of early clinical exposure and targeted educational support to address gaps in both competence and confidence before clinical electives [27]. In addition, our analysis showed a positive correlation between having taken or intending to take an orthopaedic surgery elective and students’ confidence in the specialty. This aligns with self-determination theory, which posits that student motivation, confidence and performance improve when educational environments are designed to support student autonomy and competence [28]. Structured clinical exposure with supportive feedback could nurture these psychological needs, bolstering motivation and perceived competence.

Additionally, the discrepancy between students’ self-reported and actual orthopaedic knowledge highlights the need for improved self-assessment training and targeted feedback in the curriculum [29,30]. This finding aligns strongly with the literature on self-regulated learning, which suggests that structured training in self-assessment techniques can strengthen learners’ judgement of their own learning needs, leading to more effective learning behavior [31]. It is further supported by constructivist learning theory, which holds that learning is most effective when students actively construct their knowledge through critical reflection and self-assessment [32]. Although the cohort shows minimal average bias, calibration is suboptimal. As predicted exam performance rises, actual performance rises only about half as steeply, so students with high predictions tend to overestimate their score, while those with low predictions tend to underestimate it. A misalignment between perceived competence and actual performance can lead to suboptimal learning outcomes, student frustration, and, ultimately, suboptimal patient care [30]. When students are grouped by actual rather than predicted performance, the same imperfect agreement means that the least proficient students were often overconfident, whereas the most proficient students often assessed their abilities conservatively. This pattern is consistent with the classic Dunning–Kruger paradigm [29,33]. Such miscalibration can be problematic: overconfident learners may not seek the learning they need, while underconfident learners may limit their own learning opportunities.

To address these deficiencies, we propose implementing strategies such as enhanced MSK clinical skills training, earlier exposure to orthopaedic cases, simulation-based learning, and integrated anatomy teaching [5]. These educational interventions align with situated learning theory, where knowledge acquisition occurs through authentic contexts and practical engagement, potentially bridging the gap between theoretical knowledge and confidence in its clinical application [34,35]. Such simulated learning environments could enable learners to shift from superficial participation to active involvement, thus facilitating orthopaedic knowledge acquisition, understanding, and transfer to real-world settings [34,35]. For example, simulation-based learning has been shown to not only boost learner confidence but also improve clinical skills by allowing for repetitive practice in a low-risk environment with immediate feedback [36,37]. More ambitious educational interventions could employ artificial intelligence for uses ranging from virtual patients to intelligent tutoring systems, but educators must remain vigilant that reliance on such systems does not diminish critical thinking in trainees [38,39]. In practice, we advocate for the addition of a structured MSK rotation during the final year of medical school to serve as a capstone for consolidation of orthopaedic knowledge through supervised clinical experience [40,41]. Given the finite amount of time in the medical curriculum, some reallocation may be required, such as trimming less clinically relevant content in other domains.

This mismatch between confidence and competence, further emphasized by the wide limits of agreement on the Bland–Altman plot, may also push students to avoid electives in disciplines where they perceive knowledge gaps. Instead, they may opt for electives aimed at enhancing their residency applications, possibly in fields where their knowledge is already strong. It is therefore crucial for students and physicians to develop self-assessment strategies, as these are essential for identifying personal knowledge gaps and maintaining competency throughout their careers [12]. Effective self-assessment interventions could be informed by formative feedback approaches such as reflection exercises, peer assessment, and guidance from appropriate educators [42,43]. These formative assessment practices follow the principles of Kirkpatrick’s hierarchy of educational outcomes, under which medical education programs should evaluate learner reactions, learning, behavior, and ultimately patient outcomes [44]. Our study mainly assessed student reactions and learning outcomes, which correspond to levels 1 and 2 of Kirkpatrick’s hierarchy. Future curricular interventions should also aim to measure higher-level outcomes, such as behavioral changes in clinical practice and impacts on patient care, which correspond to levels 3 and 4.

This study’s modest sample size (n = 52), voluntary participation, lack of formal survey validation, and potential selection bias from social media engagement or interest in orthopaedics limit its generalizability. Furthermore, third-year students are underrepresented in our study cohort compared to second- and fourth-year students, likely due to the increased clinical demands of the clerkship year. The perceptions of second-year medical students might reflect recent exam performance, while responses from third- and fourth-year students may show recall bias. Self-reported confidence measures in Survey A are subjective and may be inaccurate, influenced by factors such as the Dunning–Kruger effect and survey fatigue [29]. Additionally, Survey B only assessed theoretical knowledge, not clinical competency, and it may not comprehensively cover all clinically relevant orthopaedic topics. We also did not directly evaluate the adequacy of the curriculum or the extent of teaching efforts in orthopaedics. Finally, this study may not be generalizable to other institutions with different curricula. To address these limitations, future research could be conducted longitudinally at multiple institutions to comprehensively assess the effectiveness of proposed educational interventions.

5. Conclusions

This study offers insight into the knowledge proficiency of medical students at McGill University in MSK medicine and orthopaedic surgery. Most importantly, it identified a significant discrepancy between students’ subjective confidence and objective competence in orthopaedic surgery, underlining the need for curricular adjustments. Although some students showed partial accuracy in self-assessment, our findings revealed substantial miscalibration, where lower-performing students tended to overestimate their knowledge, while higher-performing students tended to underestimate it. We recommend implementing targeted educational interventions earlier in the curriculum, such as simulation-based learning and guided self-assessment training, to improve both confidence and competence. In the future, addressing these gaps in calibration and knowledge may better equip physicians to manage the high prevalence of MSK complaints that they are likely to encounter in clinical practice.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/ime4030031/s1, Document S1: Survey A; Document S2: Survey B; Figure S1: Bland-Altman Plot of Predicted vs Actual Exam Performance.

Author Contributions

Conceptualization, M.B., L.G., K.A. and A.A.; methodology, K.A.; software, M.B.; validation, M.B., K.A. and A.A.; formal analysis, M.B.; investigation, M.B.; resources, K.A.; data curation, M.B.; writing—original draft preparation, M.B.; writing—review and editing, M.B., L.G., K.A. and A.A.; visualization, M.B.; supervision, A.A.; project administration, K.A.; funding acquisition, N/A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study following Article 2.5 of the Tri-Council Policy Statement (TCPS) on quality assurance and quality improvement studies, as confirmed by the McGill University Institutional Review Board. The procedures used in this study adhere to the tenets of the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The original contributions presented in this study are included in this article/Supplementary Material. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| MSK | Musculoskeletal |

References

- Monrad, S.U.; Zeller, J.L.; Craig, C.L.; Diponia, L.A. Musculoskeletal education in US medical schools: Lessons from the past and suggestions for the future. Curr. Rev. Musculoskelet. Med. 2011, 4, 91–98.

- Pinney, S.J.; Regan, W.D. Educating medical students about musculoskeletal problems. J. Bone Joint Surg. Am. 2001, 83, 1317–1320.

- Bernstein, J.; Alonso, D.R.; DiCaprio, M.; Friedlander, G.E.; Heckman, J.D.; Ludmerer, K.M. Curricular reform in musculoskeletal medicine: Needs, opportunities and solutions. Clin. Orthop. Relat. Res. 2003, 415, 302–308.

- DiGiovanni, B.F.; Chu, J.Y.; Mooney, C.J.; Lambert, D.R. Maturation of medical student musculoskeletal medicine knowledge and clinical confidence. Med. Educ. Online 2012, 17, 17092.

- DiCaprio, M.R.; Covey, A.; Bernstein, J. Curricular requirements for musculoskeletal medicine in American medical schools. J. Bone Joint Surg. Am. 2003, 85, 565–567.

- Freedman, K.B.; Bernstein, J. Educational deficiencies in musculoskeletal medicine. J. Bone Joint Surg. Am. 2002, 84, 604–608.

- Peeler, J. Addressing musculoskeletal curricular inadequacies within undergraduate medical education. BMC Med. Educ. 2024, 24, 845.

- Al-Nammari, S.S.; James, B.K.; Ramachandran, M. The inadequacy of musculoskeletal knowledge after foundation training in the United Kingdom. J. Bone Joint Surg. Br. 2009, 91, 1413–1418.

- Queally, J.M.; Cummins, F.; Brennan, S.A.; Shelly, M.J.; O’Byrne, J.M. Assessment of a new undergraduate module in musculoskeletal medicine. J. Bone Joint Surg. Am. 2011, 93, e9.

- Knox, J.; Carek, S.M.; Cheerla, R.; Cochella, S.; DeCastro, A.O.; Deck, J.W.; DeStefano, S.; Hartmark-Hill, J.; Petrizzi, M.; Sepdham, D.; et al. Recommended Elements of a Musculoskeletal Course for Fourth-Year Medical Students: A Modified Delphi Consensus. Fam. Med. 2025, 57, 48–54.

- Kruger, J.; Dunning, D. Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments. J. Pers. Soc. Psychol. 1999, 77, 1121–1134.

- Eva, K.W.; Regehr, G. Self-assessment in the health professions: A reformulation and research agenda. Acad. Med. 2005, 80, S46–S54.

- Wijnen-Meijer, M.; Brandhuber, T.; Schneider, A.; Berberat, P.O. Implementing Kolb’s Experiential Learning Cycle by Linking Real Experience, Case-Based Discussion and Simulation. J. Med. Educ. Curric. Dev. 2022, 9, 23821205221091511.

- Marlow, N.J. SP1.2.8 Applying Kolb’s Experiential Learning Theory Improves Medical Student Confidence in Reviewing Post-Operative Patients. Br. J. Surg. 2022, 109, S5.

- Mukhalalati, B.A.; Taylor, A. Adult Learning Theories in Context: A Quick Guide for Healthcare Professional Educators. J. Med. Educ. Curric. Dev. 2019, 6, 2382120519840332.

- Cheng, A.; Eppich, W.; Grant, V.; Sherbino, J.; Zendejas, B.; Cook, D.A. Debriefing for technology-enhanced simulation: A systematic review and meta-analysis. Med. Educ. 2014, 48, 657–666.

- Ten Cate, T.J.; Kusurkar, R.A.; Williams, G.C. How self-determination theory can assist our understanding of the teaching and learning processes in medical education. AMEE guide No. 59. Med. Teach. 2011, 33, 961–973.

- Hanaway, J.; Cruess, R. McGill Medicine: The First Half Century, 1829–1885; McGill-Queen’s University Press: Montreal, QC, Canada, 1996.

- Faculty of Medicine and Health Sciences. Curriculum Components. Available online: https://www.mcgill.ca/ugme/mdcm-program/curriculum-structure/curriculum-components (accessed on 10 June 2025).

- Cummings, D.L.; Smith, M.; Merrigan, B.; Leggit, J. MSK30: A validated tool to assess clinical musculoskeletal knowledge. BMJ Open Sport. Exerc. Med. 2019, 5, e000495.

- Day, C.S.; Yeh, A.C.; Franko, O.; Ramirez, M.; Krupat, E. Musculoskeletal medicine: An assessment of the attitudes and knowledge of medical students at Harvard Medical School. Acad. Med. 2007, 82, 452–457.

- Leutritz, T.; Krauthausen, M.; Simmenroth, A.; Konig, S. Factors associated with medical students’ career choice in different specialties: A multiple cross-sectional questionnaire study at a German medical school. BMC Med. Educ. 2024, 24, 798.

- Jin, Y.; Ma, L.; Zhou, J.; Xiong, B.; Fernando, A.; Snelgrove, H. A call for improving of musculoskeletal education on physical medicine and rehabilitation studies: A systematic review with meta-analysis. BMC Med. Educ. 2024, 24, 1163.

- Taylor, H. Differences in grading systems among Canadian universities. Can. J. High. Educ. 1977, 7, 47–54.

- Yu, J.H.; Lee, M.J.; Ss, K. Assessment of medical students’ clinical performance using high-fidelity simulation: Comparison of peer and instructor assessment. BMC Med. Educ. 2021, 21, 506.

- Kolb, D.A. Experiential Learning: Experience as the Source of Learning and Development, 2nd ed.; Pearson Education: Upper Saddle River, NJ, USA, 2015.

- Maksoud, A.; AlHadeed, F. Self-directed learning in orthopaedic trainees and contextualisation of knowledge gaps, an exploratory study. BMC Med. Educ. 2024, 24, 1321.

- Deci, E.L.; Ryan, R.M. Self-determination theory in health care and its relations to motivational interviewing: A few comments. Int. J. Behav. Nutr. Phys. Act. 2012, 9, 24.

- Knof, H.; Berndt, M.; Shiozawa, T. Prevalence of Dunning-Kruger effect in first semester medical students: A correlational study of self-assessment and actual academic performance. BMC Med. Educ. 2024, 24, 1210.

- Eva, K.W.; Cunnington, J.P.; Reiter, H.I.; Keane, D.R.; Norman, G.R. How can I know what I don’t know? Poor self-assessment in a well-defined domain. Adv. Health Sci. Educ. Theory Pract. 2004, 9, 211–224.

- Zimmerman, B.J. Self-regulated learning and academic achievement: An overview. Educ. Psychol. 1990, 25, 3–17.

- Thomas, A.; Menon, A.; Boruff, J.; Rodriguez, A.M.; Ahmed, S. Applications of social constructivist learning theories in knowledge translation for healthcare professionals: A scoping review. Implement. Sci. 2014, 9, 54.

- Rahmani, M. Medical Trainees and the Dunning-Kruger Effect: When They Don’t Know What They Don’t Know. J. Grad. Med. Educ. 2020, 12, 532–534.

- Lave, J.; Wenger, E. Situated Learning: Legitimate Peripheral Participation; Cambridge University Press: Cambridge, UK, 1991.

- Yardley, S.; Teunissen, P.W.; Dornan, T. Experiential learning: AMEE Guide No. 63. Med. Teach. 2012, 34, e102–e115.

- Elendu, C.; Amaechi, D.C.; Okatta, A.U.; Amaechi, E.C.; Elendu, T.C.; Ezeh, C.P.; Elendu, I.D. The impact of simulation-based training in medical education: A review. Medicine 2024, 103, e38813.

- Murphy, R.F.; LaPorte, D.M.; Wadey, V.M. Musculoskeletal education in medical school: Deficits in knowledge and strategies for improvement. J. Bone Joint Surg. Am. 2014, 96, 2009–2014.

- Gordon, M.; Daniel, M.; Ajiboye, A.; Uraiby, H.; Xu, N.Y.; Bartlett, R.; Hanson, J.; Haas, M.; Spadafore, M.; Grafton-Clarke, C.; et al. A scoping review of artificial intelligence in medical education: BEME Guide No. 84. Med. Teach. 2024, 46, 446–470.

- Narayanan, S.; Ramakrishnan, R.; Durairaj, E.; Das, A. Artificial Intelligence Revolutionizing the Field of Medical Education. Cureus 2023, 15, e49604.

- Thompson, A.E. Improving Undergraduate Musculoskeletal Education: A Continuing Challenge. J. Rheumatol. 2008, 35, 2298–2299.

- Harkins, P.; Burke, E.; Conway, R. Musculoskeletal education in undergraduate medical curricula—A systematic review. Int. J. Rheum. Dis. 2023, 26, 210–224.

- Nicol, D.J.; Macfarlane-Dick, D. Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Stud. Higher Educ. 2006, 31, 199–218.

- Epstein, R.M. Assessment in medical education. N. Engl. J. Med. 2007, 356, 387–396.

- Johnston, S.; Coyer, F.M.; Nash, R. Kirkpatrick’s Evaluation of Simulation and Debriefing in Health Care Education: A Systematic Review. J. Nurs. Educ. 2018, 57, 393–398.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the Academic Society for International Medical Education. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).