Abstract

Currently, for Pinus pinea L., a valuable Mediterranean forest species in Catalonia, Spain, pinecone production is quantified visually before harvest with a manual count of the number of pinecones of the third year in a selection of trees and then extrapolated to estimate forest productivity. To increase the efficiency and objectivity of this process, we propose the use of remote sensing to estimate the pinecone productivity for every tree in a whole forest (complete coverage vs. subsampling). The use of unmanned aerial vehicle (UAV) flights with high-spatial-resolution imaging sensors is hypothesized to offer the most suitable platform with the most relevant image data collection from a mobile and aerial perspective. UAV flights and supplemental field data collections were carried out in several locations across Catalonia using sensors with different coverages of the visible (RGB) and near-infrared (NIR) spectrum. Spectral analyses of pinecones, needles, and woody branches using a field spectrometer indicated better spectral separation when using near-infrared sensors. The aerial perspective of the UAV was anticipated to reduce the percentage of hidden pinecones from a one-sided lateral perspective when conducting manual pinecone counts in the field. The fastRandomForest WEKA segmentation plugin in FIJI (Fiji is just ImageJ) was used to segment and quantify pinecones from the NIR UAV flights. The regression of manual image-based pinecone counts to field counts was R2 = 0.24; however, the comparison of manual image-based counts to automatic image-based counts reached R2 = 0.73. This research suggests pinecone counts were mostly limited by the perspective of the UAV, while the automatic image-based counting algorithm performed relatively well. In further field tests with RGB color images from the ground level, the WEKA fastRandomForest demonstrated an out-of-bag error of just 0.415%, further supporting the automatic counting machine learning algorithm capacities.

1. Introduction

Currently, pinecone production in pine forests is quantified visually before collection begins, using a subjective estimate of the number of pinecones in a selection of trees that is then extrapolated to the full productivity of the forest [1,2,3]. To increase the efficiency and objectivity of the process, one option is to use remote sensors to estimate pinecone productivity systematically for a sufficient number of trees for the same extrapolation, or even to count the pinecones directly and estimate the productivity for every tree in a whole forest (complete coverage vs. subsampling) [4]. Ideally, the sensor should be able to differentiate pinecones from the rest of the tree and individual trees from their surrounding environment [5]. Unmanned aerial vehicle (UAV) flights at close range with specific sensors might offer the most suitable platform, since they enable data collection at a resolution high enough for pinecones to be detected. Previous undocumented tests have attempted to use aerial color photographs captured from UAVs to quantify pinecone production directly before harvest, but these concluded without reaching satisfactory results (pers. comm.). Thus, improvement hinges on both capturing high-resolution images and using sensors that offer more spectral differentiation than RGB (red, green, blue) color sensors, which operate only in the visible (VIS) spectral bands. Therefore, this study was planned to harness the full potential of UAV platforms to quantify Pinus pinea L. pinecone production directly, improving the differentiation of pinecones by adding measurements in the near-infrared (NIR) region of the electromagnetic spectrum and deploying more powerful machine learning techniques for both tree and pinecone differentiation.
The overall objective is directly quantifying pinecone production with UAV remote imaging sensors to develop faster, more efficient, and more precise forest productivity evaluations than the current manually intensive visual pinecone counting protocols.

2. Materials and Methods

2.1. Spectroscopy Study of Pinecones

On 28 January 2019, we collected samples of pinecones, needles, and wood and took them to the University of Barcelona laboratory for spectral measurements with an OceanOptics FLAME (Ocean Optics Inc., Dunedin, FL, USA) VIS-NIR spectrophotometer with a spectral coverage of 350 to 1000 nm and a spectral resolution of 0.375 nm in 2048 spectral bands. A total of 100 spectral measurements were made with the spectrophotometer, including 40 pinecone measurements, 25 of pine needles, and 35 measurements of wood on a different sample.

2.2. UAV Flights and Tree Delineation

To best test the potential of UAV-captured images for quantifying pinecones using RGB or infrared imaging, we used images and data from flights of the DJI Mavic 2 Pro UAV (DJI Inc., Shenzhen, China) carrying a 12 MP G-R-NIR modified-RGB camera (modified to capture green, red, and near-infrared light by maxmax.com, accessed on 10 June 2022). Images at all sites were captured every 2 s during flight to ensure a minimum of 80% overlap and were processed into orthomosaics using Agisoft Photoscan (AgiSoft LLC, St. Petersburg, Russia). Each orthomosaic included >100 images taken from both 30 m and 15 m; the 15 m data produced quality mosaics with a ground sampling distance (GSD) of approximately 0.3 cm/pixel.
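The relationship between the reported GSD, the 2 s capture interval, and the 80% overlap target can be checked with simple arithmetic. The following is only a minimal sketch: the 4000 × 3000 pixel frame size and the orientation of the short image side along the flight line are assumptions (the text states only 12 MP), and the function names are hypothetical.

```python
def footprint_m(pixels: int, gsd_cm: float) -> float:
    """Ground distance (m) covered by `pixels` image pixels at a given GSD."""
    return pixels * gsd_cm / 100.0


def max_speed_for_overlap(along_track_px: int, gsd_cm: float,
                          overlap: float, interval_s: float) -> float:
    """Highest ground speed (m/s) that still yields `overlap` forward overlap."""
    footprint = footprint_m(along_track_px, gsd_cm)
    spacing = footprint * (1.0 - overlap)  # allowed distance between exposures
    return spacing / interval_s


# ASSUMPTION: 4000 x 3000 px frame (12 MP), short side along the flight line.
GSD_CM = 0.3  # approximate value reported for the 15 m flights
footprint = footprint_m(3000, GSD_CM)
speed = max_speed_for_overlap(3000, GSD_CM, overlap=0.80, interval_s=2.0)
print(f"along-track footprint: {footprint:.1f} m")
print(f"max speed for 80% forward overlap: {speed:.2f} m/s")
```

Under these assumptions, the calculation indicates how slowly the UAV would need to fly at 15 m for a fixed 2 s interval to maintain the overlap target; a different frame geometry would change the result proportionally.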

Here, we aimed to test if these sensors provide sufficiently high spatial resolution and spectral differentiation in UAV flights at different stages of pinecone maturity with flights in May, July, and November of 2020. Three different stands of P. pinea were selected for the test flights: one experimental stand at IRTA in Caldes de Montbui and two others in Girona province. Only preliminary analyses of a selection of the data are presented here.

2.3. Pinecone Quantification

To further test the parameters used in the automated image-based pinecone counting process, additional images were captured from ground level to provide data for training and testing the different input parameters of the fastRandomForest image classification algorithm, as implemented in FIJI through the Trainable WEKA Segmentation plugin [6].

3. Results

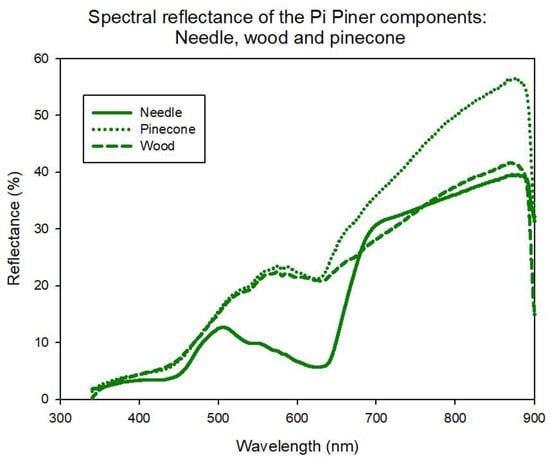

In Figure 1, we can observe clear differences between the spectral reflectance of the cones, needles, and wood of P. pinea. Above all, the spectral study carried out in the laboratory at the UB with the OceanOptics FLAME VIS-NIR spectrophotometer indicates that three of the four bands of the multispectral sensor (red, red-edge, and near-infrared) would be able to distinguish between pinecones, needles, and wood in a VIS-NIR multispectral image, whether captured in discrete bands or with an NDVI-modified RGB camera.

Figure 1.

The spectral reflectance of the three main components of the P. pinea L. pines: needle (dotted line), pinecone (semi-solid line), and wood (solid line). The most marked differences are at 550–630 nm (red) and 750–850 nm (red-edge and near-infrared).
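To illustrate how band-level separability of the three components might be summarized, the sketch below averages a spectrum within the two wavelength windows named in the Figure 1 caption and forms a generic normalized difference between them. The spectra are placeholder values invented for illustration, not the measurements from this study, and the function names are hypothetical.

```python
def band_mean(wavelengths_nm, reflectance, lo_nm, hi_nm):
    """Mean reflectance of the samples whose wavelength lies in [lo_nm, hi_nm]."""
    values = [r for w, r in zip(wavelengths_nm, reflectance) if lo_nm <= w <= hi_nm]
    if not values:
        raise ValueError("no samples inside the requested band")
    return sum(values) / len(values)


def normalized_difference(a, b):
    """Generic normalized difference index, (a - b) / (a + b)."""
    return (a - b) / (a + b)


# PLACEHOLDER spectra (NOT measurements): reflectance sampled every 50 nm.
wavelengths = list(range(500, 901, 50))  # 500, 550, ..., 900 nm
spectra = {
    "needle":   [0.05, 0.10, 0.06, 0.05, 0.20, 0.45, 0.50, 0.50, 0.49],
    "pinecone": [0.06, 0.09, 0.12, 0.15, 0.22, 0.30, 0.33, 0.34, 0.34],
    "wood":     [0.10, 0.14, 0.18, 0.21, 0.25, 0.29, 0.31, 0.32, 0.33],
}

for name, refl in spectra.items():
    vis = band_mean(wavelengths, refl, 550, 630)  # first window in the caption
    nir = band_mean(wavelengths, refl, 750, 850)  # second window in the caption
    nd = normalized_difference(nir, vis)
    print(f"{name:9s} VIS={vis:.3f} NIR={nir:.3f} ND={nd:.3f}")
```

With real spectrometer output, the same two functions could be applied to each of the measured spectra to compare the spread of index values between pinecones, needles, and wood.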

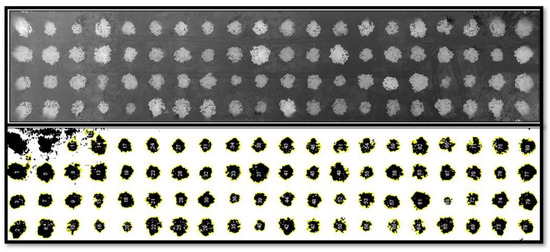

Tree crown delineation was achieved within FIJI using simple thresholding to remove low vegetation after terrain correction, that is, subtracting the Agisoft Photoscan Structure from Motion (SfM) digital elevation model (DEM) from the full digital surface model (DSM) to obtain the digital vegetation model (DVM), such that DVM = (DSM − DEM) > 0.5. In the example shown in Figure 2, with evenly spaced trees in a forest plantation plot, the result was easily converted to a tree segmentation and numbering with a few lines of code in a FIJI macro. The eventual goal of the study is to assemble a plugin of forest productivity routines that may be of use for forest management using UAV SfM outputs and the original RGB and NIR image data.

Figure 2.

An example of the delineation of tree crowns using the integrated GPS of the RGB camera to produce digital vegetation models (DVMs). In this case, the trees are delineated and segmented by solely using the 3D information provided by the Structure from Motion (SfM) workflow and the GPS data integrated with the digital camera as part of the UAV.
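The terrain-corrected thresholding and tree numbering described for Figure 2 can be sketched as follows. This is not the FIJI macro used in the study; it is a plain Python illustration that assumes the DSM and DEM are co-registered grids in the same height units and that the 0.5 threshold is expressed in those units. The toy elevation values and function names are hypothetical.

```python
from collections import deque


def vegetation_mask(dsm, dem, min_height=0.5):
    """True where height above terrain (DSM - DEM) exceeds min_height."""
    return [[(s - e) > min_height for s, e in zip(srow, erow)]
            for srow, erow in zip(dsm, dem)]


def label_crowns(mask):
    """4-connected component labelling; returns (label grid, number of crowns)."""
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or labels[r][c]:
                continue
            count += 1                      # start a new crown
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:                    # breadth-first flood fill
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not labels[ny][nx]):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


# TOY 4 x 6 grids: flat terrain at 100 with two raised crowns.
dem = [[100.0] * 6 for _ in range(4)]
dsm = [
    [100.1, 103.0, 103.2, 100.0, 100.2, 100.1],
    [100.0, 103.1, 102.9, 100.3, 104.0, 104.2],
    [100.2, 100.1, 100.0, 100.1, 104.1, 103.8],
    [100.0, 100.4, 100.1, 100.0, 100.2, 100.1],
]
labels, n_crowns = label_crowns(vegetation_mask(dsm, dem))
print("crowns found:", n_crowns)
```

A simple flood fill of this kind is only expected to work where crowns are separated by gaps, as in the evenly spaced plantation plot; overlapping canopies would merge into a single label.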

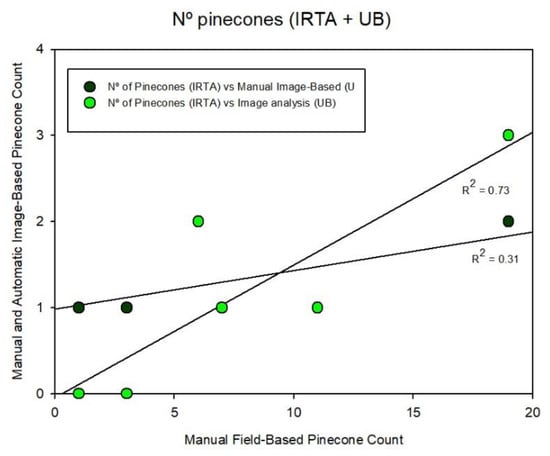

The manual image-based counts were conducted from tree cutouts at full resolution using a selection of the best images for each tree. As in other segmentation and quantification work, this is a critical step to check whether the “perspective of observation” is adequate for achieving realistic results. From the slope of the correlation shown in Figure 3, we may surmise that 1/10th or more of the pinecones were not observed from a nadir perspective. The R2 for the manual image-based pinecone count did increase slightly to 0.35 when excluding the observations with manual image-based values of 0, but note that only 7/33 trees had any visible pinecones in the zenithal UAV images taken from 15 m a.g.l. In the follow-up analysis pipeline, 6/7 tree images in which pinecones had been visually observed were combined into an image montage and passed together through an image-based automated classification and pinecone-counting process. For the comparison of manual image-based and automatic image-based counting, the R2 was 0.73.

Figure 3.

The correlation between manual image-based counts (a best-case scenario) and manual field-based counts (real ground truthing) for 12 MP modified-RGB (G-red-near infrared) images collected at the IRTA plantation site taken 15 m above ground level. The R2 = 0.24 here is based on all 33 trees for which the pinecones were counted in the field (R2 = 0.35 excluding the image-based zeros). For the automatic image-based pinecone count, R2 increases to 0.725, but note that this case includes only six trees, as detailed in the text.
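For reference, the slope and R2 of a count comparison such as the one in Figure 3 can be obtained from an ordinary least-squares fit. The sketch below is a generic implementation with made-up per-tree counts, not the study data; the function name and the choice of field counts as the independent variable are assumptions.

```python
def linear_fit(x, y):
    """Least-squares line y = intercept + slope * x and its R^2."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r2 = (sxy * sxy) / (sxx * syy)  # squared Pearson correlation
    return slope, intercept, r2


# HYPOTHETICAL per-tree counts (not the study data).
field_counts = [12, 30, 8, 45, 20, 16]
image_counts = [0, 3, 0, 4, 1, 0]

slope, intercept, r2 = linear_fit(field_counts, image_counts)
print(f"all trees:       slope={slope:.3f}, R2={r2:.3f}")

# Repeat the fit after dropping trees with no pinecones visible in the images.
pairs = [(f, i) for f, i in zip(field_counts, image_counts) if i > 0]
slope_nz, _, r2_nz = linear_fit([f for f, _ in pairs], [i for _, i in pairs])
print(f"excluding zeros: slope={slope_nz:.3f}, R2={r2_nz:.3f}")
```

A slope well below 1 in such a fit corresponds to the fraction of field-counted pinecones that remain visible in the images, which is how the slope is interpreted in the text.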

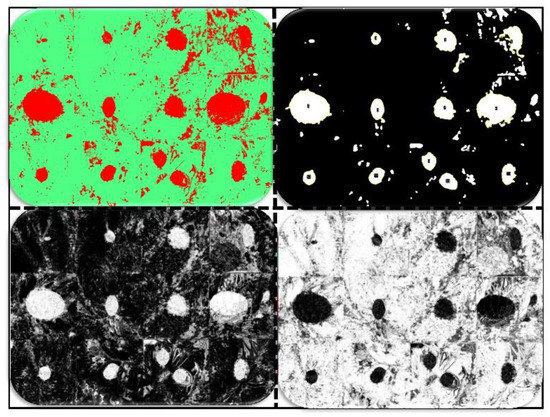

In general, the WEKA fastRandomForest classification results for the modified NDVI and RGB images taken at ground level, shown in detail in Figure 4, were excellent but quite computationally expensive (heavy on processing and memory). The algorithm can, however, be trimmed to use only the basic input image as an original JPEG file with a limited number of texture analyses; part of its success lies in the textural information extracted from a moving-window analysis of image variance, in addition to the spectral separation of the image pixels (information based on a single pixel). In this instance, false positives vs. false negatives are shown with bootstrapping, giving an out-of-bag error of 0.415% for the image pixel-based classification, although the quantification of pinecones may itself introduce additional errors, as the filtering and counting need to account for the approximate pinecone size in pixels. Similar results were obtained for RGB images taken from the field and the UAV.

Figure 4.

Top left, a modified-RGB image montage fastRandomForest classification map; top right, a pinecone count using the FIJI macro code for counting pinecones from the trees from above (the pinecone size filter should be adjusted); bottom left, the “pinecone” probability map with high pinecone probabilities in white; and bottom right, the “other” probability map with a low probability of being in the “other” class in black (indicating pinecones).
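The counting step with a pinecone size filter, as referred to in the Figure 4 caption, can be sketched as follows. This is not the FIJI macro used in the study; it is a plain Python illustration that thresholds a "pinecone" probability map, groups the remaining pixels into 8-connected blobs, and counts only blobs whose pixel area falls within an assumed size range. The probability values, the 0.5 threshold, and the area limits are all hypothetical.

```python
def blob_areas(prob_map, threshold=0.5):
    """Pixel areas of 8-connected blobs where probability exceeds threshold."""
    rows, cols = len(prob_map), len(prob_map[0])
    seen = [[False] * cols for _ in range(rows)]
    areas = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or prob_map[r][c] <= threshold:
                continue
            seen[r][c] = True
            stack, area = [(r, c)], 0
            while stack:                      # depth-first flood fill
                y, x = stack.pop()
                area += 1
                for ny in range(max(y - 1, 0), min(y + 2, rows)):
                    for nx in range(max(x - 1, 0), min(x + 2, cols)):
                        if not seen[ny][nx] and prob_map[ny][nx] > threshold:
                            seen[ny][nx] = True
                            stack.append((ny, nx))
            areas.append(area)
    return areas


def count_cones(prob_map, min_area, max_area, threshold=0.5):
    """Number of blobs whose area lies within [min_area, max_area] pixels."""
    return sum(min_area <= a <= max_area
               for a in blob_areas(prob_map, threshold))


# TOY probability map: a 2 x 2 blob, a single noisy pixel, and a 3 x 3 blob.
prob = [
    [0.9, 0.8, 0.1, 0.0, 0.0, 0.0, 0.7],
    [0.9, 0.9, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.6, 0.8, 0.9, 0.0],
    [0.0, 0.0, 0.0, 0.7, 0.9, 0.9, 0.0],
    [0.2, 0.0, 0.0, 0.8, 0.7, 0.6, 0.0],
]
print("blob areas:", sorted(blob_areas(prob)))
print("cones with 3-10 px area:", count_cones(prob, min_area=3, max_area=10))
```

The area limits would need to be tuned to the image resolution and viewing distance, since the apparent pinecone size in pixels differs between ground-level and UAV images.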

4. Discussion

This study represents the first known documented attempt at automatic pinecone quantification using UAV imaging techniques, as far as the authors were aware at the time of submission. We have attempted a systematic approach to this challenge and have met with mixed success. While large spectral libraries exist, we still felt it best to start with a preliminary study of the spectral separability of the tree components for the specific case of segmenting Pinus pinea pinecones, as shown in Figure 1. This helped us to identify the need for separate red and infrared light coverage for the optimal spectral differentiation of pinecones, needles, and woody branches. As modified RGB cameras often combine red and near-infrared light through filter modifications, they did not necessarily represent an improvement over the generally high quality of images from RGB cameras themselves with regard to the extraction of both color and texture features. Figure 2 demonstrates the ease of segmenting the pine tree canopies in this case; this is much easier in the earlier growth stages, as shown in this study, but rapidly becomes complicated once the canopies overlap. Figure 3 and Figure 4 show our limited results at the tree level alongside more promising visual results from images taken at ground level, suggesting that image analyses could have some potential for pinecone productivity assessments from a profile perspective, depending on the tree-canopy density.

Numerous studies have addressed tree crown delineation at different spatial scales, including with UAVs [7,8], yet work at the very fine detail required for the segmentation of pinecones and other fruits using UAVs is relatively recent [9,10,11]. Malik et al. [9] were successful with RGB images from UAVs but had the benefit of segmenting bright orange fruit from mostly green leaves and used a simpler k-means segmentation approach. Likewise, Qureshi et al. [10] achieved good results using combined k-nearest neighbor pixel classification, contour segmentation, and support vector approaches, but on more colorfully distinct mango tree canopies. Sethy et al. [11] present a thoughtful review of several statistical advancements focused on more colorful fruits rather than pinecones. In many cases, counting easily distinguished fruits on expertly pruned commercial fruit trees has proven to be less of a challenge than counting pinecones in forest plantations. Furthermore, the Pinus pinea pinecones that are ready for harvest and of the most interest mature in their third year, which leaves them largely masked from view and makes the issue more a matter of perspective of observation than of algorithm or spectral separability capacities. In this case, it may make sense to use either aerial off-nadir views or terrestrial platforms for image-data capture.

5. Conclusions

Firstly, we tentatively conclude that there may be some options for the automated or semi-automatic estimation of pinecones in trees of Pinus pinea. This may be considered somewhat different from the original goal or challenge of counting pinecones using UAVs. Based on the comparisons of manual field-based pinecone counting with manual image-based pinecone counting, very few pinecones are visible in the tree crowns when observed directly from above using a UAV. On the other hand, the manual image-based pinecone counts correlated well with the automatic image-based pinecone counts at both the UAV and field levels for the modified-RGB camera. Similar results were observed from the RGB images. Finally, there is a chance that combining some additional tree-level data with the pinecone estimation from UAVs may help to improve the results (a multivariate model with tree allometry). To this end, for future work we may propose an improved modified-RGB camera system with integrated UAV GPS for the optimal combination of GPS integration with NIR spectral coverage.

Author Contributions

Conceptualization, S.C.K., M.B., X.L. and M.P.; methodology, S.C.K., M.L.B. and J.S.; software, S.C.K. and J.A.F.G.; validation, S.C.K., M.L.B. and J.S.; formal analysis, S.C.K., M.L.B. and J.S.; investigation, S.C.K., M.L.B. and J.S.; resources, X.L., M.P.; data curation, S.C.K., M.L.B., J.S., X.L. and M.P.; writing—original draft preparation, S.C.K.; writing—review and editing, S.C.K., M.B. and M.P.; visualization, S.C.K.; supervision, S.C.K., J.L.A. and M.P.; project administration, S.C.K., J.L.A. and M.P.; and funding acquisition, S.C.K., M.B. and M.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been supported by the Project POCTEFA Quality Pinea and the Centre National de la Propriété Forestière (CNPF). Support was also provided by GO Pinea (operating group), co-financed by the European Agricultural Fund for Rural Development (FEADER) and the General State Administration (AGE). S.C. Kefauver is wholly supported by the MINECO Ramon y Cajal Fellowship RYC-2019-027818-I.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No data reported.

Acknowledgments

We would also like to thank and acknowledge the field trial management, ancillary data, and access contributions of Neus Aletà (IRTA Torre Marimon), Carles Vaello, and Jaime Coello (CTFC).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Loewe-Muñoz, V.; Balzarini, M.; Álvarez-Contreras, A.; Delard-Rodríguez, C.; Navarro-Cerrillo, R.M. Fruit productivity of stone pine (Pinus pinea L.) along a climatic gradient in Chile. Agric. For. Meteorol. 2016, 223, 203–216.

2. Aguirre, A.; del Río, M.; Condés, S. Productivity estimations for monospecific and mixed pine forests along the Iberian Peninsula aridity gradient. Forests 2019, 10, 430.

3. Loewe-Muñoz, V.; Balzarini, M.; Delard, C.; del Río, R.; Álvarez, A. Inter-annual variability of Pinus pinea L. cone productivity in a non-native habitat. New For. 2020, 51, 1055–1068.

4. Belmonte, A.; Sankey, T.; Biederman, J.A.; Bradford, J.; Goetz, S.J.; Kolb, T.; Woolley, T. UAV-Derived estimates of forest structure to inform ponderosa pine forest restoration. Remote Sens. Ecol. Conserv. 2020, 6, 181–197.

5. Surový, P.; Almeida Ribeiro, N.; Panagiotidis, D. Estimation of positions and heights from UAV-sensed imagery in tree plantations in agrosilvopastoral systems. Int. J. Remote Sens. 2018, 39, 4786–4800.

6. Arganda-Carreras, I.; Kaynig, V.; Rueden, C.; Eliceiri, K.W.; Schindelin, J.; Cardona, A.; Seung, H.S. Trainable Weka Segmentation: A machine learning tool for microscopy pixel classification. Bioinformatics 2017, 33, 2424–2426.

7. Weinstein, B.G.; Marconi, S.; Aubry-Kientz, M.; Vincent, G.; Senyondo, H.; White, E.P. DeepForest: A Python package for RGB deep learning tree crown delineation. Methods Ecol. Evol. 2020, 11, 1743–1751.

8. Gu, J.; Grybas, H.; Congalton, R.G. A comparison of forest tree crown delineation from unmanned aerial imagery using canopy height models vs. spectral lightness. Forests 2020, 11, 605.

9. Malik, Z.; Ziauddin, S.; Shahid, A.R.; Safi, A. Detection and counting of on-tree citrus fruit for crop yield estimation. Int. J. Adv. Comput. Sci. Appl. 2016, 7, 519–523.

10. Qureshi, W.S.; Payne, A.; Walsh, K.B.; Linker, R.; Cohen, O.; Dailey, M.N. Machine vision for counting fruit on mango tree canopies. Precis. Agric. 2017, 18, 224–244.

11. Sethy, P.K.; Panda, S.; Behera, S.K.; Rath, A.K. On tree detection, counting & post-harvest grading of fruits based on image processing and machine learning approach-a review. Int. J. Eng. Technol. 2017, 9, 649–663.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).