Abstract

Class imbalance is a common issue in machine learning: it often biases learning models toward the majority class and leads to poor predictive performance on minority class data. To address this problem, this paper presents the autoencoder-based generative adversarial network-synthetic minority over-sampling technique-particle swarm optimization (AEGAN-SMOTE-PSO) model. We compared the AEGAN-SMOTE-PSO model with three other oversampling techniques (SMOTE, SMOTE-PSO, and AEGAN). The experimental results demonstrate that the AEGAN-SMOTE-PSO model improves the classification performance of two support vector machine prediction models on two imbalanced medical datasets. Compared with the other three oversampling methods, the AEGAN-SMOTE-PSO model achieves better predictive performance in terms of recall, precision, and F1-score.

1. Introduction

Machine learning and deep learning have been widely applied in medicine, where they have proven effective in disease classification and medical diagnosis and help doctors make correct clinical decisions [1]. However, real-world medical data are frequently class-imbalanced. In breast cancer datasets, for example, benign tumor samples often outnumber malignant ones [2]. Because the minority class is underrepresented, a classification model trained on such data tends to favor the majority class [3]. If the model cannot accurately identify malignant tumor samples, treatment may be delayed, reducing its effectiveness.

Various data-driven solutions have been proposed for the class imbalance problem, including under-sampling, over-sampling, and hybrid sampling. Under-sampling balances the classes by randomly removing majority class samples from the original dataset, which can improve classifier accuracy; however, it may discard key information in the majority class and thereby weaken the model's ability to recognize that class [4]. Among over-sampling methods, the synthetic minority over-sampling technique (SMOTE) is one of the most representative. It balances the class distribution by generating synthetic minority class samples through linear interpolation between existing minority class samples [6], and it is less prone to overfitting than other oversampling techniques [5]. However, when the minority class is sparsely distributed, SMOTE may produce unrepresentative samples (i.e., noise) that degrade the performance of traditional learning models [7]. Alternatively, classical generative adversarial networks (GANs) are often used to learn the data distribution of the minority class and produce new samples that resemble the original minority class data [8]. However, GANs are prone to mode collapse during training: they generate high-quality but low-diversity synthetic samples, which may do little to improve the performance of predictive models.

To address these limitations, we developed a novel hybrid oversampling model, termed AEGAN-SMOTE-PSO, which integrates an autoencoder GAN (AEGAN) model and SMOTE with a particle swarm optimization (PSO) algorithm. The generator of AEGAN contains an autoencoder network, which enables it to extract detailed deep features and generate high-quality samples. Unlike a traditional GAN, AEGAN alleviates the mode collapse problem during training [9,10]. Combining SMOTE with a GAN further increases the diversity of the generated samples [11], and PSO can filter out potential noise and low-quality samples [12], further enhancing the classification performance of the predictive model on minority class data [13].

To evaluate the effectiveness of the AEGAN-SMOTE-PSO model, we compared it with three oversampling methods: SMOTE, SMOTE-PSO, and AEGAN (without SMOTE-PSO). Classification results were measured with three evaluation metrics: recall, precision, and F1-score. The experiments used two breast cancer datasets from the UCI repository [14]: the Breast Cancer Wisconsin (Original) and Breast Cancer Wisconsin (Diagnostic) datasets. To verify the efficacy of these oversampling methods, we trained support vector machine (SVM) classifiers with two kernel functions: polynomial (poly) and radial basis function (rbf). The experimental results show that the AEGAN-SMOTE-PSO model outperforms the other oversampling methods on all three metrics for both SVM prediction models.

2. Related Works

2.1. GAN

In the GAN architecture, a random latent vector $z$ is fed into the generator $G$ to produce synthetic samples $G(z)$. The discriminator $D$ determines whether an input sample comes from the real data distribution $p_{\text{data}}$. GAN training is carried out as a minimax adversarial game between the two networks. The objective function is expressed as Equation (1).
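For reference, the standard minimax objective introduced by Goodfellow et al. [8] can be written as follows. The symbols ($G$, $D$, $z$, $p_z$, $p_{\text{data}}$) follow the common convention and are assumed here; they may not match the exact notation of Equation (1).

$$
\min_{G}\max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$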

The training objective of the generator is to produce artificial samples that can fool the discriminator. Its loss function is expressed as Equation (2).

where D(.) represents the probability that the input sample is from the real data. By minimizing this function, the generator aims to increase the probability that the artificial samples are judged as real samples. Conversely, the training objective of the discriminator is to correctly classify the input samples as real or artificial samples. Its loss function is expressed as Equation (3).
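The exact forms of Equations (2) and (3) are not spelled out in the text, so the following sketch assumes a common choice: the non-saturating generator loss $-\mathbb{E}[\log D(G(z))]$ and the binary cross-entropy discriminator loss. It operates on plain lists of discriminator output probabilities and is illustrative only; the paper's loss definitions may differ.

```python
import math

EPS = 1e-12  # keeps log() finite when D outputs exactly 0 or 1


def _mean_log(probs):
    """Average of log(p), with each p clipped to [EPS, 1 - EPS]."""
    return sum(math.log(min(max(p, EPS), 1.0 - EPS)) for p in probs) / len(probs)


def generator_loss(d_fake):
    """Non-saturating generator loss: -mean(log D(G(z))).

    d_fake holds discriminator probabilities for synthetic samples G(z).
    Minimizing this loss pushes D(G(z)) toward 1, i.e. "judged as real".
    """
    return -_mean_log(d_fake)


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: -mean(log D(x)) - mean(log(1 - D(G(z))))."""
    return -_mean_log(d_real) - _mean_log([1.0 - p for p in d_fake])
```

For example, `generator_loss([0.9, 0.8])` is smaller than `generator_loss([0.1, 0.2])`, reflecting that the generator is better off when the discriminator believes its samples are real, and a maximally confused discriminator (`discriminator_loss([0.5], [0.5])`) has loss $2\log 2$.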

2.2. PSO

To select the most representative synthetic samples, we adopted a PSO algorithm. PSO searches for the optimal artificial samples by simulating the movement of particles in the feature space. The velocity and position of each particle are updated based on its personal best solution and the global best solution, as expressed in Equations (4) and (5).

where $x_i^t$ and $v_i^t$ represent the current position and velocity of the $i$-th particle at iteration $t$, respectively; $p_i$ is the personal best position of the $i$-th particle, and $g$ is the global best position. The parameters $w$, $c_1$, and $c_2$ denote the inertia weight and the acceleration coefficients, while $r_1$ and $r_2$ are random variables uniformly distributed in the range [0, 1].
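The update rules can be sketched as a minimal global-best PSO loop. This assumes the standard forms $v_i^{t+1} = w v_i^t + c_1 r_1 (p_i - x_i^t) + c_2 r_2 (g - x_i^t)$ and $x_i^{t+1} = x_i^t + v_i^{t+1}$, consistent with the roles described above. The default values of $w$, $c_1$, and $c_2$ are common choices from the PSO literature, not settings reported in this paper, and the `fitness` argument is a hypothetical stand-in for whatever criterion is used to rank synthetic samples.

```python
import random


def pso_minimize(fitness, dim, n_particles=20, iters=100,
                 w=0.7298, c1=1.49618, c2=1.49618,
                 bounds=(-5.0, 5.0), seed=0):
    """Minimize `fitness` over R^dim with a basic global-best PSO."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Random initial positions x_i and zero initial velocities v_i.
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    # Personal bests p_i and the global best g.
    pbest = [x[:] for x in xs]
    pbest_val = [fitness(x) for x in xs]
    best = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[best][:], pbest_val[best]

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()  # uniform draws in [0, 1)
                # Velocity update: inertia + cognitive (p_i) + social (g) terms.
                vs[i][d] = (w * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])
                            + c2 * r2 * (gbest[d] - xs[i][d]))
                # Position update: move by the new velocity.
                xs[i][d] += vs[i][d]
            val = fitness(xs[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = xs[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = xs[i][:], val
    return gbest, gbest_val
```

On a toy objective such as the sphere function, `pso_minimize(lambda x: sum(v * v for v in x), dim=2)` returns a point close to the origin. The global best is updated as soon as any particle improves it (asynchronous updates), which is one of several common PSO variants.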

3. Method

3.1. Procedure

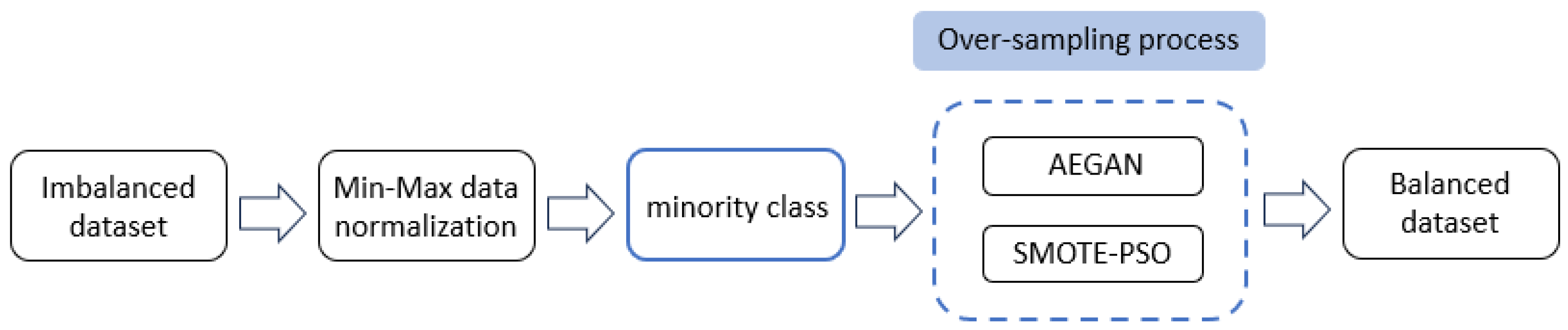

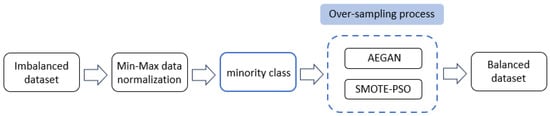

The AEGAN-SMOTE-PSO method generates synthetic samples for the minority class in two parts. AEGAN first generates new minority class samples; SMOTE then generates additional synthetic minority class samples, and PSO selects the best of these SMOTE samples. Figure 1 shows the implementation procedure of the proposed AEGAN-SMOTE-PSO method.

Figure 1.

Implementation of the AEGAN-SMOTE-PSO model.

Before applying AEGAN-SMOTE-PSO, we used min-max normalization to scale each feature of the original imbalanced dataset to the range [0, 1]. The normalization is defined in Equation (6).

where $x$ represents the original feature value, and $x_{\min}$ and $x_{\max}$ denote the minimum and maximum values of that feature in the dataset, respectively.
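Equation (6) corresponds to the usual per-feature min-max scaling $(x - x_{\min})/(x_{\max} - x_{\min})$. A minimal sketch in plain Python follows; mapping a constant feature to 0 is an assumption, since the paper does not address that case.

```python
def min_max_normalize(rows):
    """Scale every feature (column) of `rows` to [0, 1].

    Returns the scaled rows together with the per-feature minima and maxima,
    so the same transformation can be reapplied to other data.
    """
    columns = list(zip(*rows))
    mins = [min(col) for col in columns]
    maxs = [max(col) for col in columns]
    scaled = [
        [(x - lo) / (hi - lo) if hi > lo else 0.0  # constant feature -> 0.0 (assumption)
         for x, lo, hi in zip(row, mins, maxs)]
        for row in rows
    ]
    return scaled, mins, maxs
```

Returning the minima and maxima makes it possible to fit the scaling on a training split and reuse it on held-out data; whether the paper scaled the full dataset or only the training portion is not stated.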

3.2. Autoencoder GAN

The generator is composed of an autoencoder neural network, where the number of neurons in the hidden layers is set to 64, 32, and 64. Both the hidden and output layers employ the sigmoid activation function to ensure the features of the generated sample are constrained within the range [0, 1]. The sigmoid function is defined as Equation (7).
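The layer layout can be illustrated with an untrained forward pass: a 64-dimensional noise vector (the latent size given in the training setup) passes through sigmoid layers of 64, 32, and 64 neurons to an output layer with one neuron per feature, so every generated value lies in (0, 1). This is a hypothetical plain-Python sketch; the weight initialization and the standard normal noise are assumptions, and training with Adam, including how the autoencoder component is trained, is not shown.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function (Equation (7)), output in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def init_layer(n_in, n_out, rng):
    """Uniform weights in [-1/sqrt(n_in), 1/sqrt(n_in)] and zero biases (assumed)."""
    limit = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def make_generator(n_features, latent_dim=64, hidden=(64, 32, 64), seed=0):
    """Layers latent_dim -> 64 -> 32 -> 64 -> n_features, all with sigmoid."""
    rng = random.Random(seed)
    sizes = [latent_dim, *hidden, n_features]
    return [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def generate(layers, z):
    """Forward pass of one noise vector z through the (untrained) generator."""
    h = z
    for weights, biases in layers:
        h = [sigmoid(sum(w * x for w, x in zip(row, h)) + b)
             for row, b in zip(weights, biases)]
    return h
```

For the Wisconsin original data (9 features), `generate(make_generator(9), z)` with a 64-element noise vector `z` yields one synthetic sample of 9 values in (0, 1), matching the min-max-scaled feature range.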

The discriminator consists of a single hidden layer with 64 neurons and is trained with a batch size of 1. AEGAN is trained for 50 iterations; in each iteration, all weights of AEGAN are updated using noise vectors from a 64-dimensional space. The weights of both the generator and the discriminator are optimized with the Adam optimizer. In the proposed method, the number of samples generated by AEGAN is set to half the difference between the numbers of majority and minority class samples, as expressed in Equation (8).

where $N_{\text{gen}}$ denotes the number of samples generated by AEGAN, and $N_{\text{maj}}$ ($N_{\text{min}}$) represents the number of samples in the majority (minority) class.

In the feature space, SMOTE identifies the nearest neighbors of each minority class sample and generates new synthetic samples through linear interpolation. The SMOTE parameters are set to k_nei = 1 and n_nei = 1, meaning that each minority class sample is interpolated with only one nearest neighbor. The interpolation formula is defined in Equation (9):

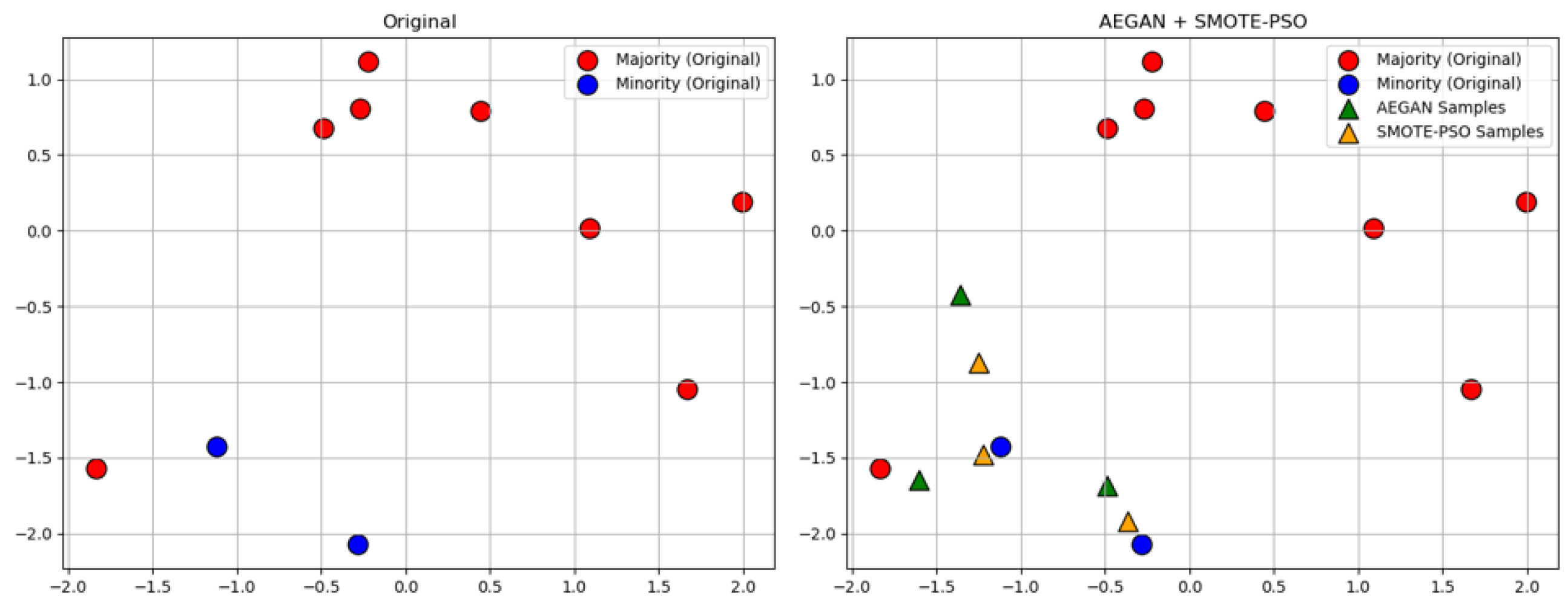

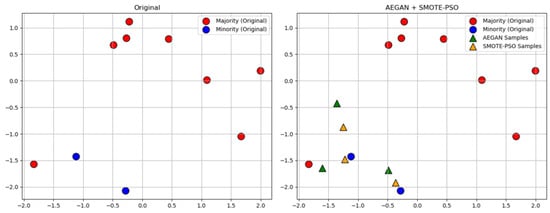

where $x_i$ represents the $i$-th original minority class sample, $\hat{x}_i$ is a neighboring sample selected from its nearest neighbors, and $\lambda$ is a real number randomly chosen from the interval [0, 1]. In total, half of the synthetic minority class samples are generated by AEGAN and the other half by SMOTE-PSO, as shown in Figure 2.

Figure 2.

Generate synthetic samples using AEGAN-SMOTE-PSO.
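The sample-count split and the SMOTE interpolation can be sketched as follows. The sketch assumes that Equation (8) is $N_{\text{gen}} = (N_{\text{maj}} - N_{\text{min}})/2$ rounded down, with SMOTE-PSO covering the remainder, and uses the usual SMOTE interpolation $x_i + \lambda(\hat{x}_i - x_i)$ with a single nearest neighbor (k_nei = 1). The PSO filtering step is omitted because its selection criterion is not described here, and all function names are hypothetical.

```python
import random


def split_generation_counts(n_majority, n_minority):
    """Samples to create with AEGAN (Eq. (8), rounded down) and with SMOTE-PSO (rest)."""
    gap = n_majority - n_minority          # synthetic samples needed for balance
    n_aegan = gap // 2                     # rounding down is an assumption
    return n_aegan, gap - n_aegan


def nearest_neighbor(i, samples):
    """Index of the minority sample closest to samples[i] (squared Euclidean)."""
    xi = samples[i]
    return min((j for j in range(len(samples)) if j != i),
               key=lambda j: sum((a - b) ** 2 for a, b in zip(xi, samples[j])))


def smote_one_neighbor(minority, n_new, seed=0):
    """Generate n_new points as x_i + lam * (x_nn - x_i) using one nearest neighbor."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))             # base minority sample x_i
        x_i = minority[i]
        x_nn = minority[nearest_neighbor(i, minority)]
        lam = rng.random()                           # uniform draw in [0, 1)
        synthetic.append([a + lam * (b - a) for a, b in zip(x_i, x_nn)])
    return synthetic
```

For instance, with 450 majority and 50 minority samples, `split_generation_counts(450, 50)` gives 200 samples for each generator. Each SMOTE point lies on the segment between a minority sample and its nearest neighbor, which is why SMOTE alone cannot leave the region spanned by existing minority samples.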

4. Results

After balancing the imbalanced training data with AEGAN-SMOTE-PSO, we built two SVM classification models on the balanced training dataset: one with a polynomial kernel (SVM_poly) and one with a radial basis function kernel (SVM_rbf).

4.1. Case Description

We utilized two breast cancer datasets: the Wisconsin original and the Wisconsin diagnostic data. The Wisconsin original data and Wisconsin diagnostic data contain 699 and 569 samples with 9 and 30 features, respectively. To ensure the stability and reliability of the predictive results, the experimental process was repeated 30 times.

4.2. Experiment

For the SVMs with polynomial and radial basis function kernels, the cost parameter was set to 1 and the polynomial degree to 3. The gamma parameter was set to “scale”, which is calculated by Equation (10).

where $n$ is the number of features and Var(X) is the variance of X.

In our experiments, we set the imbalance ratio to 9, meaning that the majority class contained nine times as many samples as the minority class; this setting was used to evaluate the effectiveness of our method under imbalanced conditions. After balancing the imbalanced data, we built the SVM models on the balanced training dataset. We evaluated model performance with the confusion matrix shown in Table 1, whose four entries (true positives, false positives, true negatives, and false negatives) form the basis for assessing classification performance on imbalanced data.
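The SVM settings above match the parameter names of scikit-learn's `SVC` (`C=1.0`, `kernel="poly"` or `kernel="rbf"`, `degree=3`, `gamma="scale"`), which the paper appears to use although it does not name the library. In scikit-learn, `gamma="scale"` is computed as `1 / (n_features * X.var())`, with the variance taken over all entries of the training matrix. The sketch below reproduces that value in plain Python, together with a hypothetical helper that subsamples the minority class to reach an imbalance ratio of 9; how the paper actually built its imbalanced subsets is not stated.

```python
import random
from statistics import pvariance


def gamma_scale(X):
    """gamma = 1 / (n_features * Var(X)), with the variance over every entry of X."""
    n_features = len(X[0])
    variance = pvariance([value for row in X for value in row])  # population variance
    return 1.0 / (n_features * variance)


def subsample_to_ratio(majority, minority, ratio=9, seed=0):
    """Hypothetical helper: keep len(majority) // ratio random minority samples."""
    n_keep = max(1, len(majority) // ratio)
    if n_keep > len(minority):
        raise ValueError("not enough minority samples for this ratio")
    return random.Random(seed).sample(minority, n_keep)
```

Population variance is used because NumPy's `var()` defaults to `ddof=0`. Repeating the subsampling with a different `seed` in each of the 30 runs is one plausible way to obtain the run-to-run variation summarized in the results tables.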

Table 1.

Confusion matrix.

In this study, we evaluated classification performance using recall, precision, and F1-score, as expressed in Equations (11)–(13).

where recall measures the proportion of actual positive instances that are correctly identified by the classification model, precision indicates the proportion of predicted positive instances that are truly positive, and F1-score is the harmonic mean of recall and precision.
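These definitions follow directly from the confusion-matrix counts: recall = TP / (TP + FN), precision = TP / (TP + FP), and F1 = 2 · precision · recall / (precision + recall). A minimal sketch, assuming the minority (malignant) class is encoded as the positive label 1 and that an undefined ratio is reported as 0.0:

```python
def recall_precision_f1(y_true, y_pred, positive=1):
    """Recall, precision and F1-score for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    recall = tp / (tp + fn) if tp + fn else 0.0        # share of actual positives found
    precision = tp / (tp + fp) if tp + fp else 0.0     # share of predicted positives that are correct
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)              # harmonic mean of the two
    return recall, precision, f1
```

For example, with three actual positives of which two are detected and one false alarm, recall, precision, and F1-score are all 2/3. True negatives do not enter any of the three metrics, which is why they are preferred over accuracy when the negative (majority) class dominates.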

4.3. Experimental Results

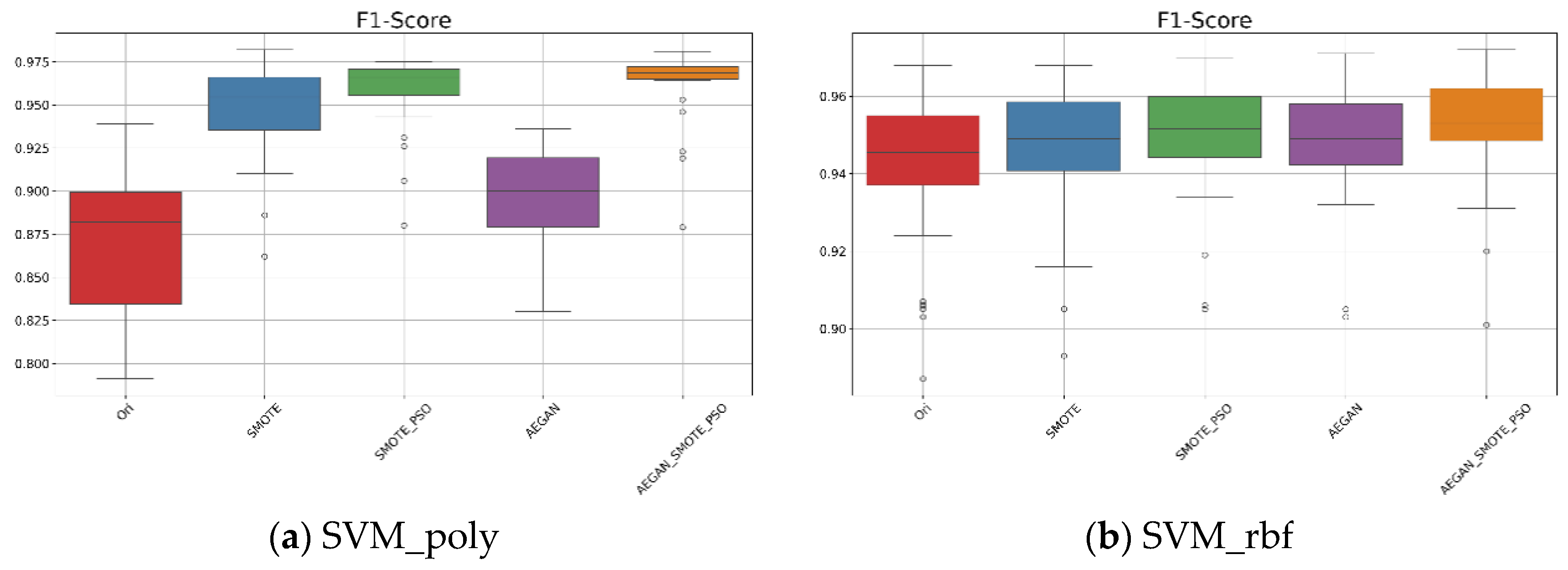

4.3.1. Wisconsin Original Data

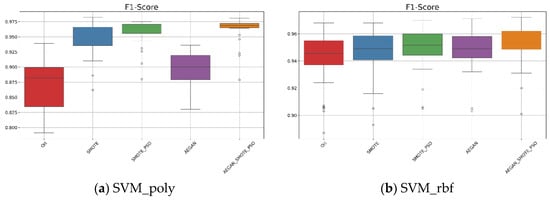

According to Table 2, with the polynomial kernel, the AEGAN-SMOTE-PSO model achieved an average F1-score of 0.962 (standard deviation 0.021) for the SVM_poly model. Figure 3 shows that the proposed AEGAN-SMOTE-PSO method (orange box plot) outperforms training on the original dataset (Ori, red box plot), SMOTE (blue box plot), SMOTE-PSO (green box plot), and AEGAN (purple box plot). In addition, as illustrated in Figure 3a, the F1-scores of the AEGAN-SMOTE-PSO model for SVM_poly are more concentrated, with a narrower interquartile range, which further indicates the robustness of the model and its ability to enhance classification performance. With the RBF kernel, AEGAN-SMOTE-PSO still yields the best results: as shown in Figure 3b and Table 2, its average F1-score was 0.952, slightly higher than the 0.949 of SMOTE-PSO.

Table 2.

Average and standard deviation of the two SVM models using the techniques on the Wisconsin original data.

Figure 3.

Boxplots for the SVM results on the Wisconsin original data.

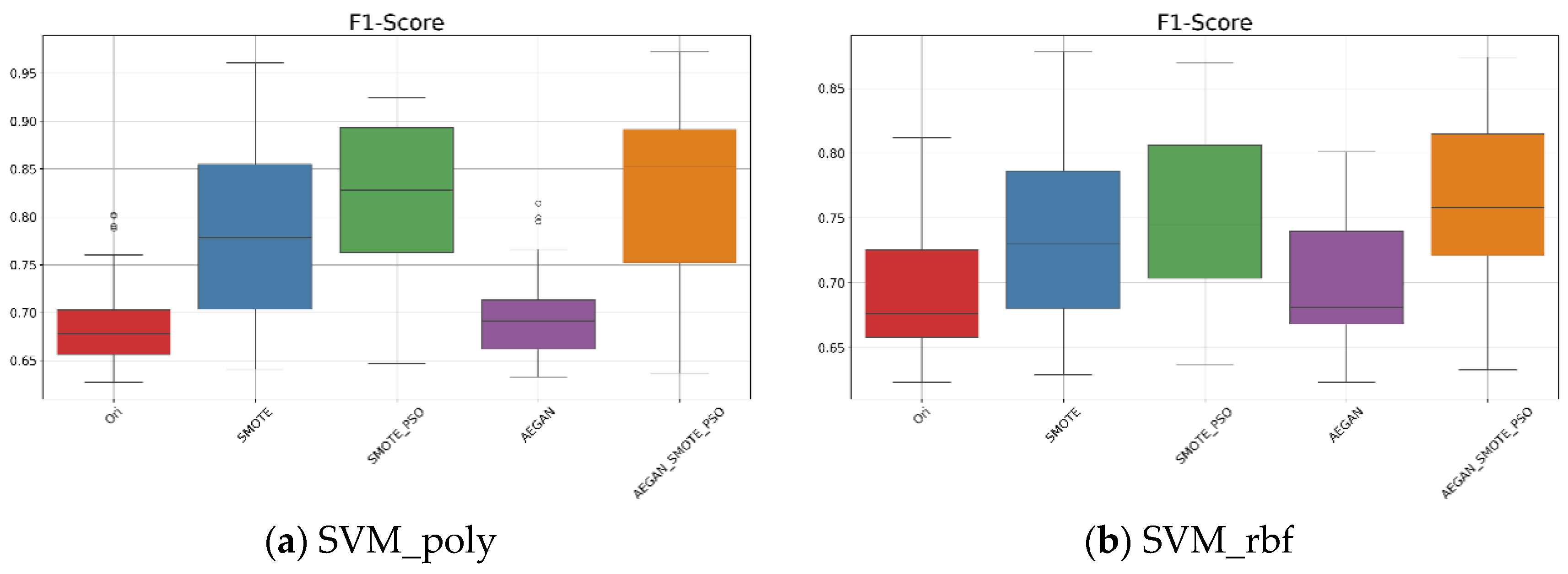

4.3.2. Wisconsin Diagnostic Data

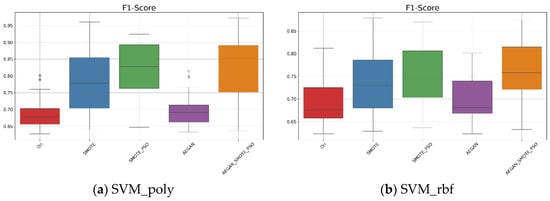

As shown in Table 3 and Figure 4a, with the polynomial kernel, the average F1-score on the original dataset was 0.691. After applying the AEGAN-SMOTE-PSO model, the average F1-score increased to 0.823, suggesting that the model generated representative synthetic samples. The standard deviation of the F1-score was 0.094, indicating that classification results with the AEGAN-SMOTE-PSO model are relatively stable. With the RBF kernel, as illustrated in Figure 4b, the AEGAN-SMOTE-PSO model achieved the best predictive performance among all compared methods, with an average F1-score of 0.762.

Table 3.

Average and standard deviation of the two SVM models using the techniques on the Wisconsin diagnostic data.

Figure 4.

Boxplots for the SVM results on the Wisconsin diagnostic data.

5. Conclusions

In medicine, class imbalance may lead to incorrect disease classification and misdiagnosis. To tackle this problem, we developed the AEGAN-SMOTE-PSO model. By combining AEGAN with SMOTE-PSO, the model increases the diversity of minority class samples and thereby improves predictive performance on imbalanced data. A comparative analysis was performed against three oversampling methods (SMOTE, SMOTE-PSO, and AEGAN) and the original data. The experimental results indicate that, under an imbalance ratio of 9, the AEGAN-SMOTE-PSO model consistently outperforms the other four approaches for both SVM models across all three evaluation metrics, demonstrating its superior classification performance and its effectiveness in addressing class imbalance. Future work should extend the model to other imbalanced medical datasets.

Author Contributions

Software, writing—original draft preparation, S.-E.J.; visualization, writing—original draft preparation, Y.-Y.L.; methodology, conceptualization, writing—review and editing, L.-S.L.; formal analysis, C.-H.L.; formal analysis, H.-Y.C.; software, J.-S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science and Technology Council grant contract NSTC 113-2221-E-227-004-MY2.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The experimental dataset is openly available at the UCI machine learning repository.

Acknowledgments

This study was supported by the National Science and Technology Council, Taiwan, and the National Taipei University of Nursing and Health Sciences, Taiwan.

Conflicts of Interest

The authors declare no conflicts of interest.

References

[1] Bhavsar, K.A.; Singla, J.; Al-Otaibi, Y.D.; Song, O.-Y.; Zikria, Y.B.; Bashir, A.K. Medical diagnosis using machine learning: A statistical review. CMC-Comput. Mater. Contin. 2021, 67, 107–125.

[2] Subasree, S.; Sakthivel, N.; Shobana, M.; Tyagi, A.K. Deep learning based improved generative adversarial network for addressing class imbalance classification problem in breast cancer dataset. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 2023, 31, 387–412.

[3] Walsh, R.; Tardy, M. A comparison of techniques for class imbalance in deep learning classification of breast cancer. Diagnostics 2022, 13, 67.

[4] Mishra, S. Handling imbalanced data: SMOTE vs. random undersampling. Int. Res. J. Eng. Technol. 2017, 4, 317–320.

[5] Van den Goorbergh, R.; van Smeden, M.; Timmerman, D.; Van Calster, B. The harm of class imbalance corrections for risk prediction models: Illustration and simulation using logistic regression. J. Am. Med. Inf. Assoc. 2022, 29, 1525–1534.

[6] Elreedy, D.; Atiya, A.F. A comprehensive analysis of synthetic minority oversampling technique (SMOTE) for handling class imbalance. Inf. Sci. 2019, 505, 32–64.

[7] Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

[8] Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 139–144.

[9] Lazarou, C. Autoencoding generative adversarial networks. arXiv 2020, arXiv:2004.05472.

[10] Zou, Y.; Wang, Y.; Lu, X. Auto-encoding generative adversarial networks towards mode collapse reduction and feature representation enhancement. Entropy 2023, 25, 1657.

[11] Sharma, A.; Singh, P.K.; Chandra, R. SMOTified-GAN for class imbalanced pattern classification problems. IEEE Access 2022, 10, 30655–30665.

[12] Cervantes, J.; Garcia-Lamont, F.; Rodriguez, L.; López, A.; Castilla, J.R.; Trueba, A. PSO-based method for SVM classification on skewed data sets. Neurocomputing 2017, 228, 187–197.

[13] Lu, J.; Zhang, C.; Shi, F. A classification method of imbalanced data base on PSO algorithm. In Proceedings of the Social Computing: Second International Conference of Young Computer Scientists, Engineers and Educators, ICYCSEE 2016, Harbin, China, 20–22 August 2016; Proceedings, Part II; pp. 121–134.

[14] Asuncion, A.; Newman, D. UCI Machine Learning Repository; Donald Bren Hall: Irvine, CA, USA, 2007.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.