Abstract

With the implementation of the 2022 revised curriculum in South Korea, significant changes are taking place in education as of 2025. This curriculum emphasizes self-directed learning and a proactive attitude toward life, which are essential in the era of digital transformation. Accordingly, there is a growing need to establish systematic environments that support self-directed learning. The number of instructional hours for information education has been doubled to 34 h in elementary school and 68 h in middle school, highlighting the increased importance of the subject. In addition, 2025 marked the official introduction of AI-based digital textbooks. However, their diversity and functionality as effective learning resources remain limited. In programming education, most studies on learning difficulty have focused on basic concepts, while research on more advanced topics, such as object-oriented programming, has been insufficient. Therefore, this research aims to develop a digital learning tool that supports high school students in engaging in self-directed learning. Specifically, it focuses on the “Implementing Classes for Objects” unit from the MiraeN textbook and provides customized support within a tablet-based learning environment. The tool also includes chatbot-based problem generation and feedback functions. To assess the accuracy and difficulty level of the problems generated by the chatbot, the problems were evaluated in parallel by both the researcher and ChatGPT.

1. Introduction

With the implementation of the 2022 Revised National Curriculum, significant changes are taking place in the Korean education landscape as of 2025. The curriculum, announced by the Ministry of Education, is designed to cultivate learners’ ability to proactively respond to the uncertainties of the digital transformation era and to foster self-directed agency in managing their own lives and learning. Notably, the vision of an ideal learner includes individuals who actively shape their career paths and lives, thereby emphasizing the importance of self-management competencies [1]. This focus on learner autonomy underscores the need to build educational environments that systematically support students’ self-directed learning.

In line with this curricular shift, the increased instructional hours allocated to computer science education are also noteworthy. Under the 2022 Revised Curriculum, students are now required to complete at least 34 h of information education in elementary school and 68 h in middle school—double the hours mandated by the 2015 curriculum (17 h in elementary school and 34 h in middle school). This change reflects a growing recognition of the importance of computer science education.

The year 2025 also marked the introduction of AI-based digital textbooks, which represent a major turning point in the classroom [2]. However, according to press releases from the Ministry of Education, only a limited number of publishers—namely, Chunjae Textbook and Geumseong Publishing—have passed the official screening process for AI digital textbooks. This limitation presents challenges in providing diverse and effective digital textbook options [3]. Furthermore, the current digital textbooks fail to fully leverage the benefits of digital environments and fall short of serving as effective resources for supporting students’ self-directed learning.

Meanwhile, in the domain of programming education, most studies on learning difficulty levels have been limited to basic functions, with relatively little research on the systematic analysis of more advanced topics such as object-oriented programming. This research gap hinders the development of effective learning support tools for advanced programming concepts covered in high school computer science curricula.

In response to these challenges, this research aims to develop a digital learning tool that enables high school students to engage in self-directed learning of computer science content anytime and anywhere using tablet devices. Specifically, the tool focuses on the “Implementing Classes for Objects” chapter in Unit 3, “Algorithms and Programming,” of the MiraeN textbook. The goal is to create a learning support environment for object-oriented programming concepts that have not been sufficiently addressed in previous research.

The remainder of this article is organized as follows. Section 2 reviews the theoretical background and related studies on self-directed learning, tablet-based learning, and the use of generative AI in education, and provides a rationale for platform and textbook selection. Section 3 outlines the design principles and implementation features of the learning support tool and defines the criteria for problem generation and validation. Section 4 evaluates the performance of the developed system by analyzing the quality of generated questions, the appropriateness of feedback provided to learners, and the quality of chatbot responses. Section 5 concludes the research by summarizing the findings and discussing limitations and future research directions.

2. Background Knowledge

2.1. Self-Directed Learning

Self-directed learning competency refers to the abilities and characteristics necessary for individuals to independently plan, manage, and execute their own learning, as well as to acquire and develop the required knowledge and skills [4]. It includes the capacity and attitude to independently plan, organize, implement, and evaluate learning activities. The 2022 Revised National Curriculum emphasizes self-directedness not merely as a learning methodology but as a core competency essential for navigating the uncertainties of a future society. Accordingly, the establishment of digital learning environments that support self-directed learning has become crucial.

2.2. Programming Education Tools Based on Language Models

2.2.1. Trends in Personalized Learning Using Language Models

Personalized learning methods using various language models have garnered significant attention. It has been shown that ChatGPT-4o can tailor educational content and styles according to individual needs and preferences, thereby overcoming limitations of traditional classroom-based learning [5]. In fact, Korean universities are actively promoting personalized learning with ChatGPT. For example, in 2024, Inha University’s Software-Centered University Project hosted a competition for learning strategies using ChatGPT. Likewise, Sungkyunkwan University established a ChatGPT Research Committee and Task Force, and launched an official website offering comprehensive guidance, usage examples by students and faculty, and educational models integrating AI.

2.2.2. Utilization of Large Language Models (LLMs)

LLMs are foundational models trained on massive text datasets, equipped with the ability to understand and generate natural language [6]. With the advancement of LLMs and their integration with natural language processing (NLP) technologies, a wide range of AI-powered applications have emerged. Representative LLMs include OpenAI’s GPT series, Google’s Gemini, and Anthropic’s Claude. These models utilize deep learning-based Transformer architectures to contextually understand user input and generate coherent text. In particular, OpenAI’s ChatGPT demonstrates high performance across tasks such as Q&A, text summarization, content editing, and even educational problem generation. LLM-based problem generation technologies enable the delivery of personalized learning content tailored to individual learners’ levels and needs. Additionally, they automate the traditional manual process of developing educational problems, greatly improving the efficiency and scalability of content creation.

2.3. Image-Based Learning and Handwriting Recognition Technologies

2.3.1. Educational Effects of Visual Materials and Note-Taking

The core educational functions of visual materials can be categorized into four roles [3]. First, they directly or indirectly explain the objectives or content of a subject. Second, they help clarify abstract or inferential textual content through visual representation. Third, they stimulate learners’ curiosity, motivation, imagination, and creativity. Fourth, they serve as effective tools for delivering textbook content in a clearer and more accessible manner [3,6,7]. In the case of note-taking, structured organization techniques such as visual mapping and marking may significantly influence learning outcomes [8]. Therefore, the educational effects of visual learning and note-taking must also be considered within digital learning environments.

2.3.2. Optical Character Recognition (OCR)

OCR is a computer vision technology that extracts textual information from unstructured documents, such as images or portable document formats, into digital form. Modern OCR systems employ deep learning models like convolutional neural networks or vision transformers (ViTs) to extract visual features from images and combine them with language models to recognize text. Recent multimodal models, such as those combining GPT with ViT-based visual encoders, possess the capability to accurately recognize and interpret various forms of text by leveraging both visual features and contextual language inference. These technological advancements suggest the potential to digitize printed textbooks and handwritten notes, making them usable as input data for AI-based problem generation systems.

3. Methodology

3.1. Platform Selection

With increased access to digital devices, there is a wider range of platforms available for implementing learning support tools. To determine the most suitable platform, a survey was conducted among 42 middle and high school students. Results showed that 71.4% of respondents owned tablet PCs and frequently used them for academic purposes. Utilizing widely available devices minimizes the need for additional infrastructure. Moreover, tablet PCs support both keyboard typing and stylus-based handwriting, allowing for a balanced integration of digital and analog learning interactions.

The educational effectiveness of tablets has been supported by prior research. Studies indicate that, although there is no statistically significant difference in pre-existing academic conditions between groups using tablets and those using handwritten notes, the tablet group showed higher engagement, academic enthusiasm, and achievement. From a technical standpoint, this research selected Android-based tablets due to their superior API compatibility and open development environment. The Android platform facilitates seamless integration with external services and offers a favorable ecosystem for educational application development [9].

3.2. System Design

The main features of the proposed system are shown in Table 1. The system developed in this study was designed based on a client-server architecture, in which the Android application (client) is seamlessly integrated with cloud services such as Firebase Realtime Database and the OpenAI API.

Table 1. Design of the main functions of the proposed system.

3.3. Conditions for Programming Problem Generation and Criteria for Difficulty Classification

We identified the conditions for generating programming problems and the criteria for difficulty classification based on the previous research proposed by Park and Hyun [10]. The problem types adopted in this research are presented in Table 2.

Table 2. Problem type classification.

Drawing on the linguistic nature of programming, the classification of programming problem types was informed by the problem-type structure of the Test of English as a Foreign Language (TOEFL). Of the listening and speaking categories, the speaking type was reclassified as a written response to better align with the digital handwriting-based learning environment used in this research. The code refactoring type was excluded due to its relatively high difficulty level, while a new category, debugging, was added; it goes beyond simple error detection to include the actual debugging process. Answer formats were categorized into multiple-choice, short-answer, and descriptive types.

The programming elements selected in the referenced research [10] include identifiers such as variables, constants, and literals, as well as data types, operators, expressions, control structures, and functions. However, object-oriented concepts such as instances and classes were not included. Therefore, this research referred to the 2022 revised MiraeN High School Computer Science textbook of South Korea [11] and selected operators, statements, functions, and classes as problem components. The difficulty classification criteria were divided into quantitative and qualitative components and are defined in Table 3, based on the same referenced research [10].

Table 3. Difficulty classification criteria.

3.4. System Implementation

The implementation environment of the developed system is as follows. The system is a mobile application designed to support high school students’ self-directed learning in computer science education. For the database, Google’s Firebase Realtime Database is used to ensure high compatibility with Android applications and to enable real-time storage and synchronization of user input. This facilitates immediate interaction among learners and allows effective tracking of problem-solving history. The system also integrates OpenAI’s GPT-4o and Assistants API to implement AI-powered features such as personalized problem generation, handwriting recognition-based grading, and real-time conversational learning support. Additionally, the networking libraries Retrofit and OkHttp are used to ensure stable HTTP communication, while the PhotoView library enables intuitive zooming of textbook images, enhancing learner engagement. Other libraries were incorporated as needed during development.

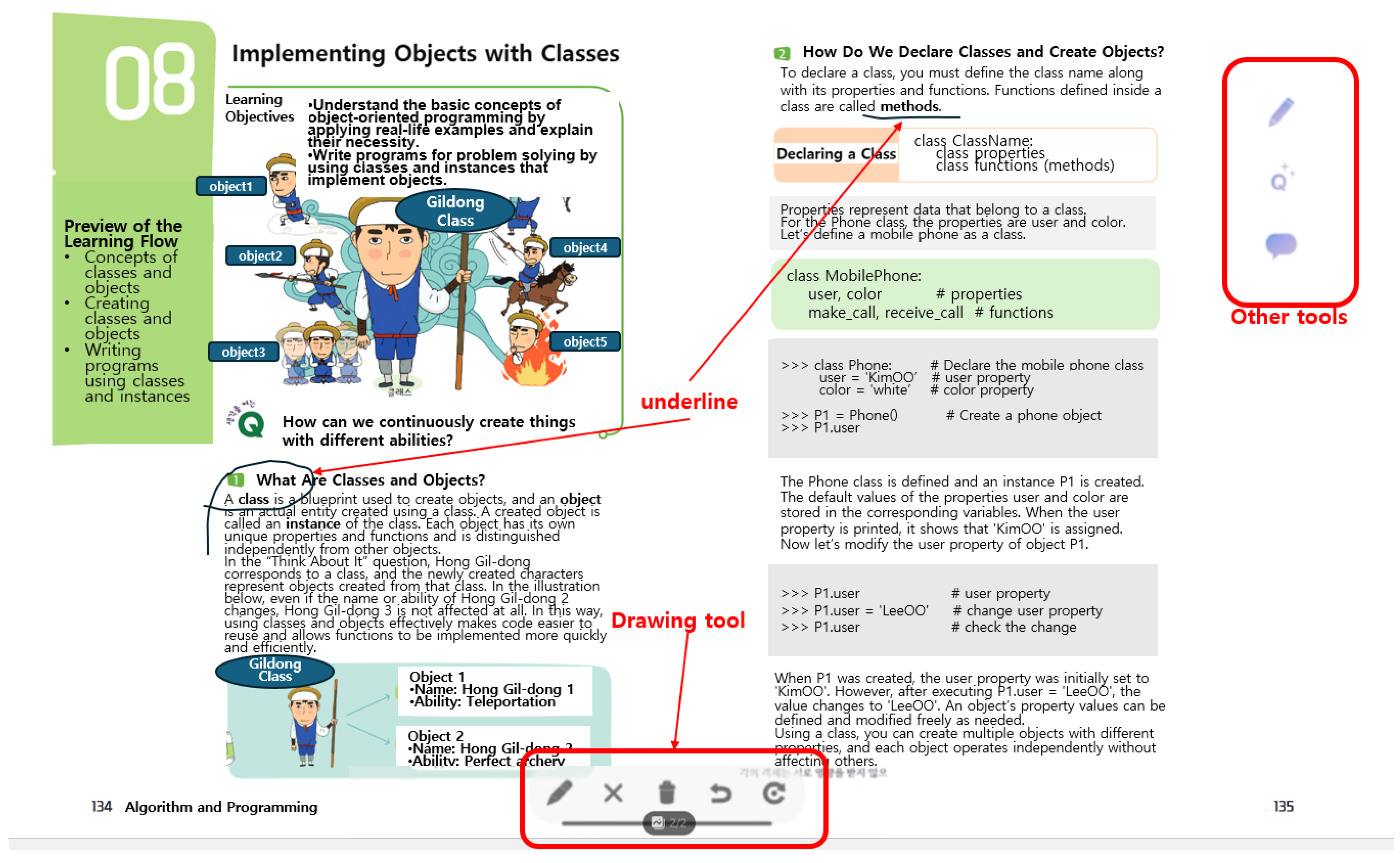

The developed system integrates the PhotoView component to enable pinch-to-zoom and panning functionality for textbook pages, with swipe navigation disabled during zoom/pan states for stability. Real-time handwriting is implemented via DrawingView, supporting pen and eraser tools, undo/redo features, and button-based interactions. Z-order values are assigned to UI components to manage display layers, ensuring drawing tools appear on top when active and are hidden when deactivated. An example implementation screen of the interactive image-based learning environment is shown in Figure 1.

Figure 1. Interactive image-based learning environment.
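The undo/redo behaviour of the handwriting layer can be illustrated with a two-stack history. The following is a minimal Python sketch of that logic only, not the app's Android code; `Stroke` and `DrawingHistory` are hypothetical names introduced for illustration, and the eraser tool is not modelled.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Stroke:
    """One pen stroke: the points recorded between pen-down and pen-up."""
    points: List[Point]


@dataclass
class DrawingHistory:
    """Holds the visible strokes plus a stack of strokes that were undone."""
    strokes: List[Stroke] = field(default_factory=list)
    undone: List[Stroke] = field(default_factory=list)

    def add_stroke(self, stroke: Stroke) -> None:
        self.strokes.append(stroke)
        # Drawing something new discards whatever could have been redone.
        self.undone.clear()

    def undo(self) -> bool:
        """Hide the most recent stroke; return False if there is none."""
        if not self.strokes:
            return False
        self.undone.append(self.strokes.pop())
        return True

    def redo(self) -> bool:
        """Restore the most recently undone stroke; return False if none."""
        if not self.undone:
            return False
        self.strokes.append(self.undone.pop())
        return True
```

Clearing the redo stack when a new stroke is added is one common design choice; it keeps the history linear so that a redo never reintroduces a stroke the learner has already drawn over.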

4. Results and Discussion

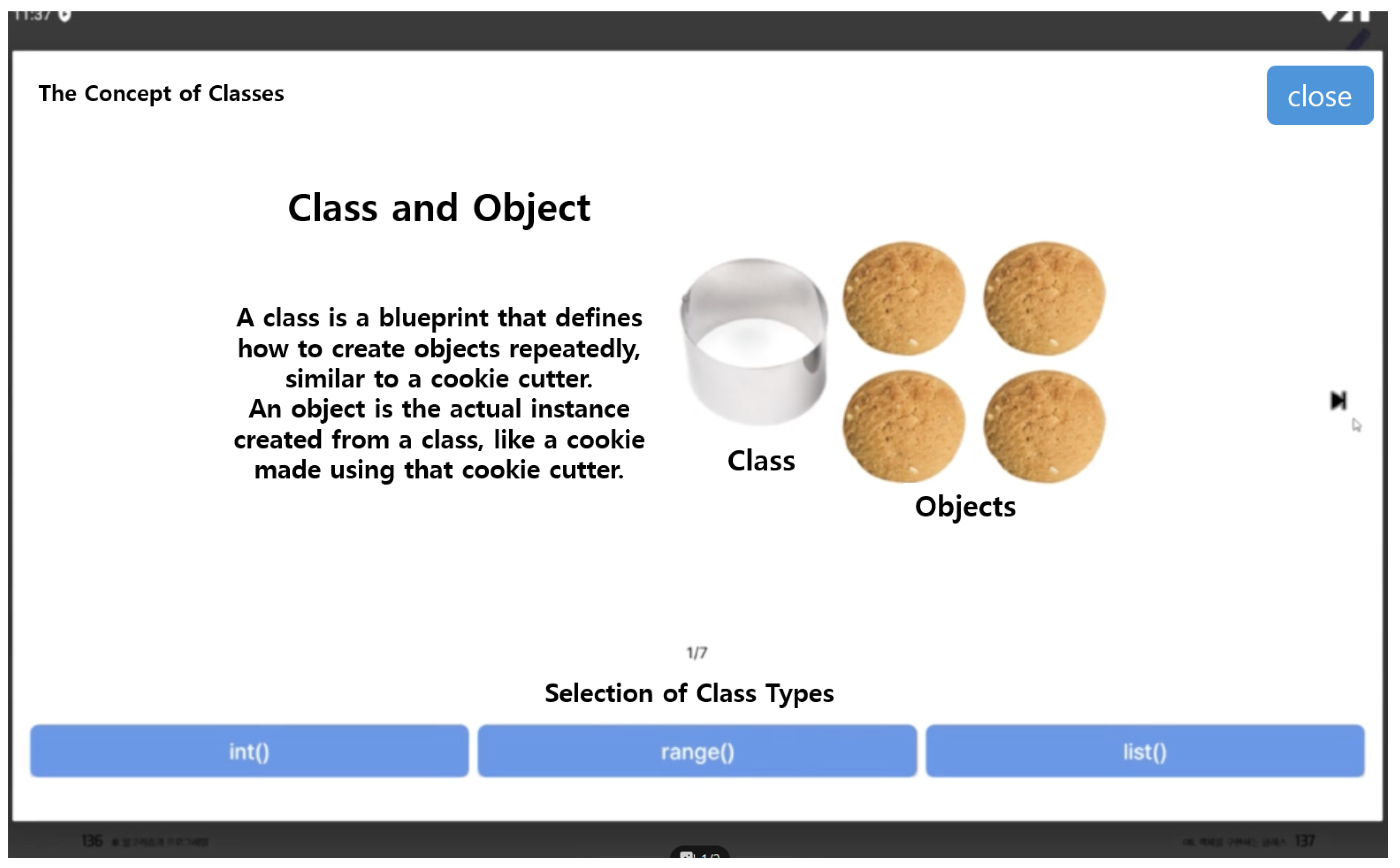

The system helps learners effectively understand complex concepts in object-oriented programming by providing hierarchical explanations integrated with visual materials. These features are implemented through ConceptExplanationDialogFragment.kt. The unit selected for this implementation is [III. Algorithms and Programming - 08. Implementing Classes for Objects] from the MiraeN high school computer science textbook, and the key concepts are elaborated in detail. For example, modal dialogs explain “Class” and “Object” with real-world analogies. Sub-concepts like int(), range(), and list() are accessible through interactive buttons, each linked to explanations and related practice problems, as shown in Figure 2. AI-generated problems are created using OpenAI Assistants and stored in Firebase, with learners selecting difficulty levels to receive customized problems. A handwriting input view allows responses via stylus, which are processed using the OpenAI Vision and Chat APIs for grading and feedback. Additionally, real-time Q&A is supported through a chatbot integrated via Retrofit, offering contextual answers aligned with textbook content.

Figure 2. Explanation of the class concept.

The system automatically generates 50 Python 3.9.x problems using a preconfigured OpenAI Assistant on the Python backend and stores them in Firebase Realtime Database. In the app, when a learner taps the AI-generated problem icon, a difficulty selection window appears. Based on the selected difficulty level, the system filters the problems in real time and randomly presents one that matches the chosen level. This allows learners to receive personalized problem sets tailored to their proficiency. A handwriting input view was implemented to allow learners to write their answers to AI-generated problems by hand. This feature supports a variety of question types, including multiple choice, short answer, and descriptive responses, providing a convenient way to input answers.
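The filter-then-randomize step can be sketched as follows. This is an illustrative Python version under stated assumptions: the record fields (`id`, `difficulty`, `type`) and the level names `low` and `medium` are hypothetical, since the stored schema is not given here, and the problems are held in a plain list rather than read from Firebase.

```python
import random
from typing import Any, Dict, List, Optional

Problem = Dict[str, Any]


def pick_problem(
    problems: List[Problem],
    difficulty: str,
    rng: Optional[random.Random] = None,
) -> Optional[Problem]:
    """Return one random problem at the requested difficulty, or None."""
    # Keep only the problems whose label matches the learner's selection.
    candidates = [p for p in problems if p.get("difficulty") == difficulty]
    if not candidates:
        return None
    # An injected Random instance makes the choice reproducible in tests.
    chooser = rng if rng is not None else random
    return chooser.choice(candidates)


# Hypothetical records standing in for the stored problem set.
sample_problems = [
    {"id": "p01", "difficulty": "low", "type": "multiple_choice"},
    {"id": "p02", "difficulty": "medium", "type": "short_answer"},
    {"id": "p03", "difficulty": "medium", "type": "descriptive"},
    {"id": "p04", "difficulty": "high", "type": "debugging"},
]

chosen = pick_problem(sample_problems, "medium", rng=random.Random(0))
```

Returning `None` for an empty candidate list lets the caller show a message instead of failing when no problem exists at the selected level.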

The workflow is as follows: the learner writes their response using digital handwriting. The system uses the OpenAI Vision API to recognize the handwriting and displays the extracted text in a dialog for the learner to review. Due to the unstructured nature of handwritten and descriptive responses, the system leverages the OpenAI Chat API to compare the learner’s answer with the correct one, assess the response, and provide personalized feedback, highlighting both strengths and areas for improvement.
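The comparison step in this workflow can be sketched as the construction of a chat-style request. The Python example below only builds the message list and makes no network call; the function name and the prompt wording are hypothetical and are not the prompts used in the app. The `role`/`content` message layout is the one commonly used by chat completion APIs.

```python
from typing import Dict, List


def build_grading_messages(
    problem_text: str,
    correct_answer: str,
    learner_answer: str,
) -> List[Dict[str, str]]:
    """Build chat-style messages that ask a model to grade one answer."""
    # Hypothetical instructions; the real system prompt is not published.
    system_prompt = (
        "You are grading a high school Python exercise. "
        "Compare the learner's answer with the reference answer, "
        "state whether it is correct, and list strengths and "
        "areas for improvement."
    )
    # The learner answer is the handwriting-recognition output after the
    # learner has reviewed it, so stray whitespace is trimmed first.
    user_prompt = (
        f"Problem:\n{problem_text}\n\n"
        f"Reference answer:\n{correct_answer}\n\n"
        "Learner answer (recognized from handwriting):\n"
        f"{learner_answer.strip()}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


messages = build_grading_messages(
    "Which keyword defines a class in Python?",
    "class",
    "  class \n",
)
```

Keeping the reference answer in the request, rather than asking the model to solve the problem again, means the feedback is anchored to the answer stored with the problem.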

To enable real-time Q&A with the OpenAI Assistant, the system uses a RESTful API communication structure based on the Retrofit library. When a learner taps the chat icon within the app and asks a question in natural language, the system sends the query to the assistant, receives a response, and displays it on the screen. The assistant’s responses are designed to reflect the content of the textbook, providing contextually relevant information.

A responsive UI was implemented using resource qualifiers that automatically adjust the layout based on screen size and orientation. This prevents issues such as oversized text or undersized buttons and enhances the overall user experience. A 2-pane layout optimized for tablet environments was applied, where problems appear on the left and answer inputs and explanations are displayed on the right. In addition, ViewPager2 was implemented in MainActivity.kt to allow textbook page transitions, enhanced by DepthPageTransformer to simulate the feel of turning a physical page. Concepts such as “class” and “method” are also presented as image slides, allowing smooth transitions via swiping or arrow buttons.

5. Conclusions

The developed system contributes to the field of computer science education by providing a systematic analysis of problem types and difficulty levels in object-oriented programming—a topic that has not been thoroughly addressed in prior research. Furthermore, it presents a methodological framework for designing and implementing a personalized learning support system using generative AI, thus advancing research in AI-based educational technologies.

In this study, we established eight evaluation criteria: (1) alignment with the curriculum and accuracy of object-oriented programming (OOP) concepts, (2) appropriateness and balance of difficulty, (3) diversity and originality of question types, (4) compliance with problem composition guidelines, (5) technical accuracy and code quality, (6) appropriateness of answer and distractor design, (7) quality and completeness of explanations, and (8) learner-friendliness and practical relevance. We used ChatGPT to analyze 50 automatically generated Python problems and 20 randomly selected ones.

The analysis results revealed that most problems were well-aligned with curriculum achievement standards and accurately reflected key OOP concepts such as classes, objects, and methods. Moreover, in terms of technical accuracy and code quality, the problems were highly satisfactory, with most adhering to Python’s PEP 8 coding conventions and containing no syntax errors.

However, areas for improvement were also identified. The distribution of difficulty levels was unbalanced, with only 8% of problems classified as high-difficulty, and some problems were miscategorized. The types of questions were heavily skewed toward reading and multiple-choice formats, while debugging and descriptive questions were relatively underrepresented. Many scenarios were repeated, indicating a lack of creativity. In several problems, output examples were missing or the blank formatting was inconsistent. While most answer choices were well-structured, some incorrect options lacked plausibility, and certain questions with multiple correct answers did not provide appropriate guidance. The explanations were concise, logical, and effectively conveyed key concepts, especially those related to OOP. Real-world examples were naturally integrated, enhancing both learning effectiveness and practical relevance.
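A balance check of this kind can be expressed as a short calculation. The Python sketch below computes the percentage share of each difficulty label; with 50 problems, an 8% share corresponds to 4 high-difficulty problems. The label `other` is a placeholder for the remaining 46 problems, because their split across lower levels is not reported here.

```python
from collections import Counter
from typing import Dict, List


def difficulty_shares(labels: List[str]) -> Dict[str, float]:
    """Return the percentage of problems carrying each difficulty label."""
    total = len(labels)
    if total == 0:
        return {}
    counts = Counter(labels)
    return {level: 100.0 * n / total for level, n in counts.items()}


# 4 of 50 problems labelled high; the rest grouped under a placeholder.
labels = ["high"] * 4 + ["other"] * 46
shares = difficulty_shares(labels)
```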

Based on the results of this study, key improvement areas include diversifying problem types, rebalancing difficulty levels, and expanding the variety of real-life scenarios. Practically, the results can be used to foster self-directed learning competencies emphasized in the 2022 revised national curriculum by establishing a digital learning environment that supports active, learner-driven engagement. In particular, by providing a tablet-based mobile learning environment, the system enables learning beyond time and space constraints, thereby enhancing educational accessibility and equity.

Author Contributions

Conceptualization, H.E.K. and H.M.K.; methodology, H.E.K., H.M.K., C.J.P., and C.M.K.; software, H.E.K. and H.M.K.; validation, H.E.K., H.M.K., and C.J.P.; writing—original draft preparation, H.E.K. and H.M.K.; writing—review and editing, C.J.P. and C.M.K.; project administration, C.J.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the research grant of Jeju National University in 2025.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ministry of Education. 2022 Revised National Curriculum General Guidelines; Ministry of Education: Sejong, Republic of Korea, 2022.

- Ha, H.-Y. Misconceptions and Truths About AI Digital Textbooks Explored Through AI TalkTalk; Field Insight: Melbourne, Australia; Korea Foundation for the Advancement of Science and Creativity: Seoul, Republic of Korea, 2023.

- Ministry of Education. Announcement of Final Review Results for AI Digital Textbook Screening; Press Release; Ministry of Education: Sejong, Republic of Korea, 2024.

- Lee, E.-C. Development of a self-directed learning measurement tool for university students based on a complex structure model. J. Korea Contents Assoc. 2016, 16, 382–392.

- Lee, G.-R. University students’ change of awareness and self-directed learning competencies after experience using and applying Chat GPT. J. Teach. Learn. Res. 2023, 16, 71–94.

- IBM. Large Language Models. Available online: https://www.ibm.com/kr-ko/think/topics/large-language-models (accessed on 1 July 2025).

- Seo, H.-J. Analysis of Visual Materials of a High School Science Textbook Developed Under The 7th Version of The National Curriculum. Master’s Thesis, Graduate School of Education, Chosun University, Gwangju, Republic of Korea, 2007.

- Jeon, S.-J.; Wi, J.-E.; Park, I.-Y. A study on the learning effects of different note-taking methods by media type. J. Integr. Des. Res. 2020, 19, 9–26.

- Cho, H.-Y.; Kwon, H.-K. Analysis of learning effects between groups using tablet PCs and handwritten notes. J. Learn.-Centered Curric. Instr. 2024, 24, 209–222.

- Park, C.-J.; Hyun, J.-S. Development of programming question types and difficulty measurement methods based on programming elements in high school computer science. J. Korean Assoc. Comput. Educ. 2024, 27, 25–34.

- Informatics. MiraeN. Available online: https://22txbook.m-teacher.co.kr/book/view.mrn?id=107 (accessed on 1 May 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.