1. Introduction

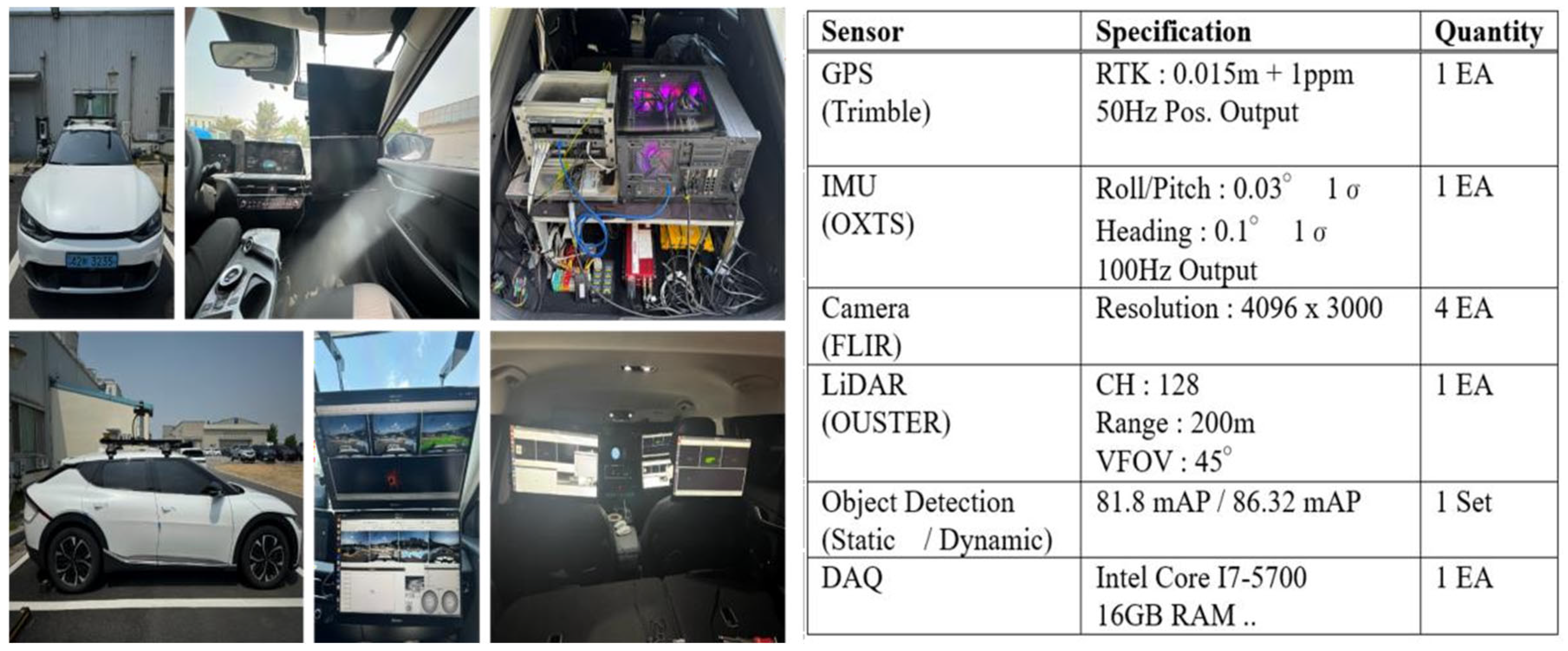

Performance experiments on navigation technology were conducted with an autonomous driving simulation vehicle in the Suri Tunnel (approximately 1.8 km) and the Suam Tunnel (approximately 1.2 km) on the Seoul Metropolitan Area 1st Ring Expressway. The test vehicle was an EV6 (KIA, Seoul, Republic of Korea) configured with a hybrid navigation system that integrates GPS-based absolute positioning with relative positioning using an IMU (Inertial Measurement Unit), the IVN (In-Vehicle Network), a camera, and LiDAR. The detailed specifications of the system are shown in Figure 1 below.

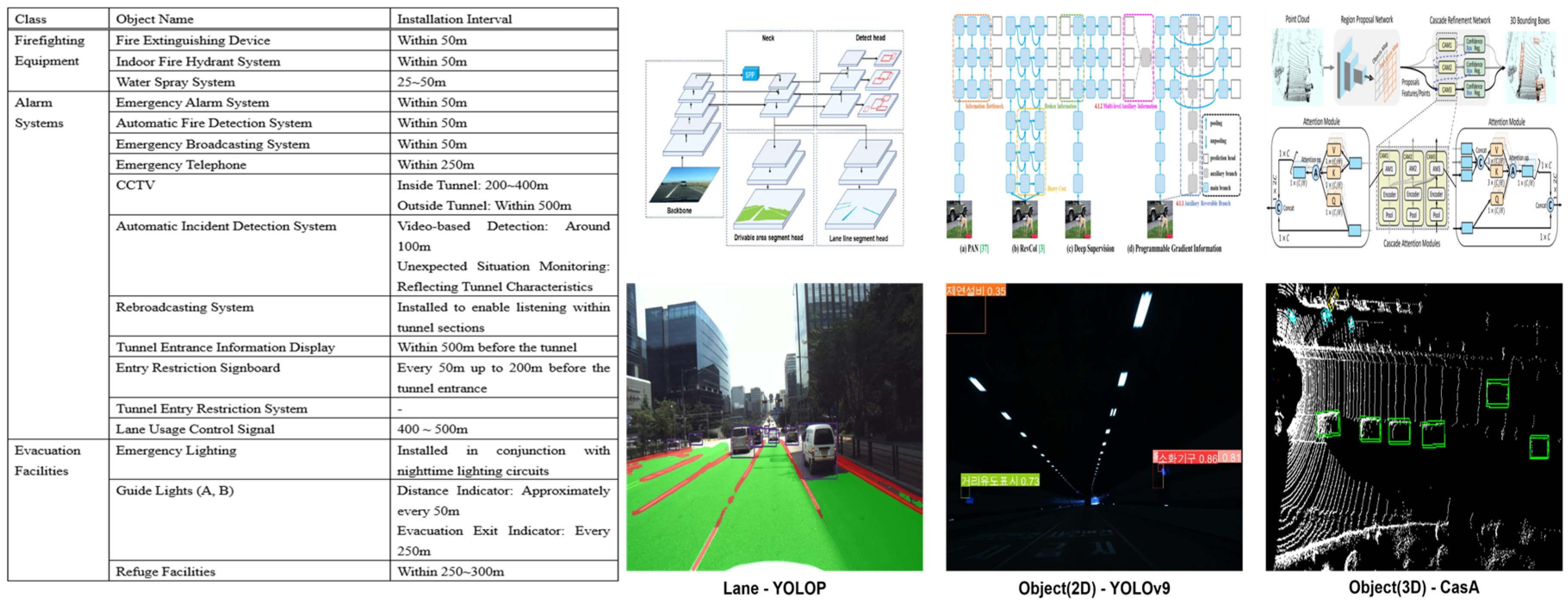

To enable object recognition inside tunnels, data collection, refinement, and preprocessing were conducted for tunnels and underground roads in the Seoul and Gyeonggi regions, covering a total length of approximately 30 km. For relative position correction, a total of 18 types of tunnel interior objects were selected, including firefighting equipment (3 types), alarm systems (11 types), and evacuation facilities (4 types).
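The source does not spell out how a recognized tunnel object is turned into a position correction. As a minimal sketch of one common approach, assume each object has a surveyed map position and that the perception stack reports the object's offset in the vehicle frame (forward, left). The function name, frame conventions, and numbers below are illustrative assumptions, not the authors' implementation.

```python
import math


def vehicle_position_from_landmark(landmark_xy, offset_vehicle_xy, heading_rad):
    """Estimate the vehicle's map position from one recognized landmark.

    landmark_xy       -- surveyed (east, north) map position of the object, in metres
    offset_vehicle_xy -- object position measured in the vehicle frame (forward, left)
    heading_rad       -- vehicle heading, counterclockwise from east (assumed convention)
    """
    forward, left = offset_vehicle_xy
    c, s = math.cos(heading_rad), math.sin(heading_rad)
    # Rotate the vehicle-frame offset into the map frame.
    d_east = c * forward - s * left
    d_north = s * forward + c * left
    # The vehicle sits at the landmark position minus the rotated offset.
    return (landmark_xy[0] - d_east, landmark_xy[1] - d_north)


# Hypothetical example: a fire hydrant cabinet surveyed at (100 m, 5 m),
# seen 20 m ahead and 3 m to the left while the vehicle heads due east.
position = vehicle_position_from_landmark((100.0, 5.0), (20.0, 3.0), 0.0)
```

In practice such a fix would be fed to the navigation filter as a measurement with its own uncertainty rather than overwriting the estimate outright.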

For the implementation of Lane/Object (2D/3D) Detection [1,2,3], the following models were used: YOLOP for Lane Detection, YOLOv9 for 2D Object Detection [2], and CasA for 3D Object Detection [1]. The objects and models used for training, as well as their results, are shown in Figure 2 below.

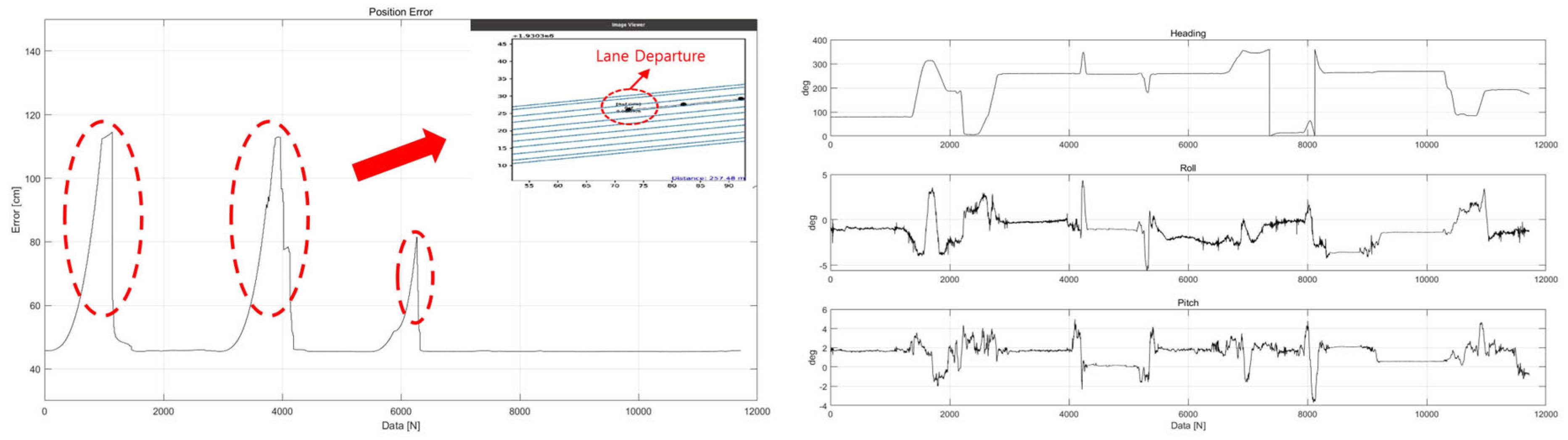

To integrate GPS, IMU, and IVN, a loosely coupled Kalman filter was designed and applied [4,5]. An error model was formulated and incorporated, considering factors such as DR (Dead Reckoning) attitude angles, speedometer conversion coefficients, and gyroscope bias. The detailed implementation is shown in Figure 3 below.

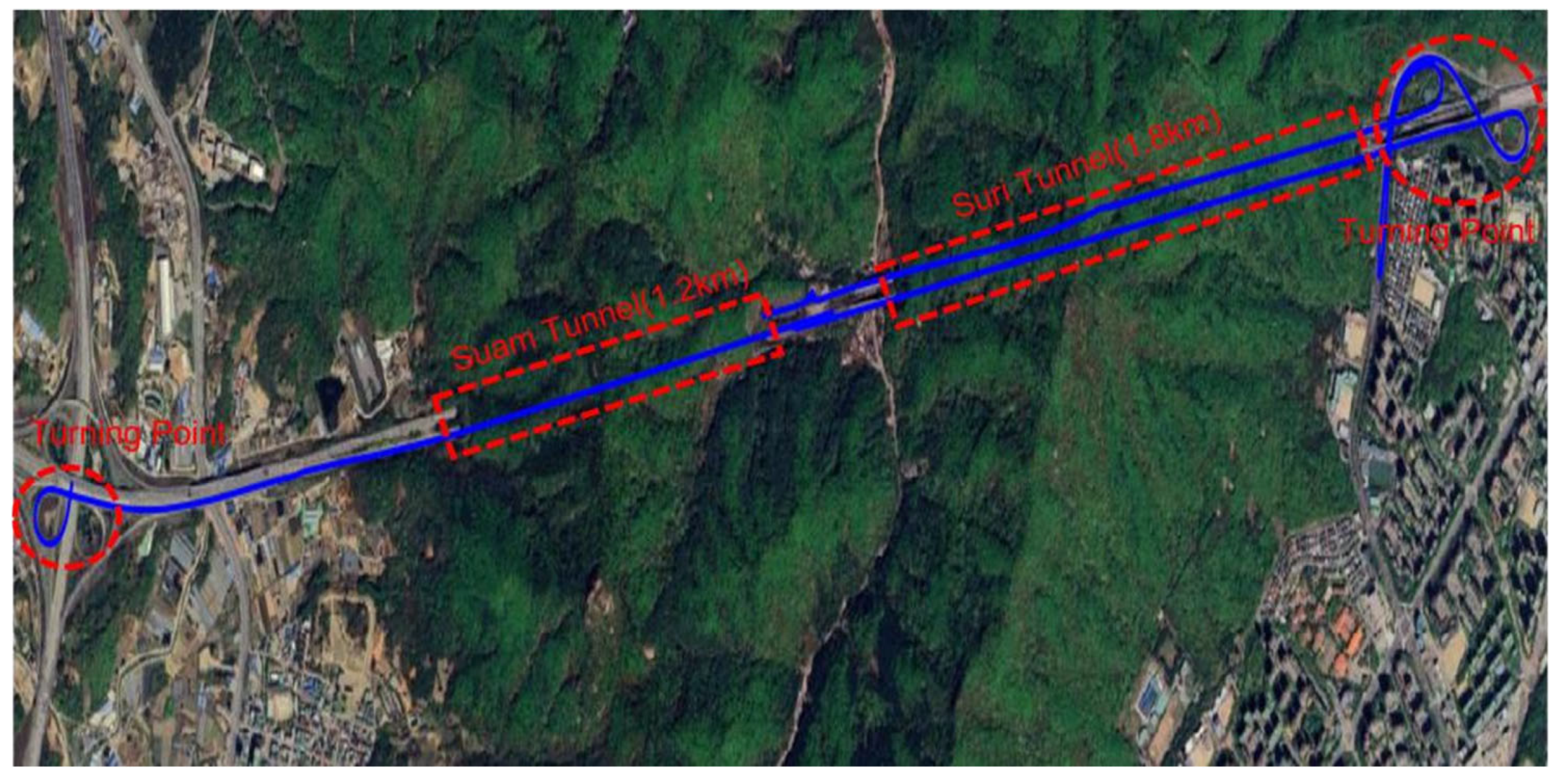
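To illustrate the idea behind the loosely coupled filter, the sketch below reduces the problem to one dimension: the state holds only the along-track position and the speedometer conversion (scale) factor, and GPS position fixes are used as measurements while they are available. The attitude-angle and gyroscope-bias states of the filter described above are omitted, and every name and number here (`AlongTrackKF`, the noise values, the 2% scale error) is a hypothetical choice for the example, not taken from the experiment.

```python
from dataclasses import dataclass, field


@dataclass
class AlongTrackKF:
    """Two-state Kalman filter: along-track position s (m) and speed scale factor k."""
    s: float = 0.0
    k: float = 1.0
    P: list = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1e-2]])
    q_s: float = 0.01  # assumed process noise on position (m^2 per step)
    q_k: float = 1e-8  # assumed process noise on the scale factor (per step)

    def predict(self, v_meas: float, dt: float) -> None:
        """Dead-reckoning step: s <- s + k * v_meas * dt, k unchanged."""
        a = v_meas * dt  # sensitivity of s to k, so F = [[1, a], [0, 1]]
        self.s += self.k * a
        (p00, p01), (p10, p11) = self.P
        # P <- F P F^T + Q
        self.P = [
            [p00 + a * (p01 + p10) + a * a * p11 + self.q_s, p01 + a * p11],
            [p10 + a * p11, p11 + self.q_k],
        ]

    def update(self, z_gps: float, r: float) -> None:
        """Loosely coupled update with a GPS position fix (H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        innovation = z_gps - self.s
        s_var = p00 + r
        g0, g1 = p00 / s_var, p10 / s_var  # Kalman gain
        self.s += g0 * innovation
        self.k += g1 * innovation
        # P <- (I - K H) P
        self.P = [
            [(1.0 - g0) * p00, (1.0 - g0) * p01],
            [p10 - g1 * p00, p11 - g1 * p01],
        ]


def simulate(open_sky_steps: int = 60, tunnel_steps: int = 71, dt: float = 1.0):
    """Drive with GPS, then through a GPS-denied tunnel; return (k, filter error, raw DR error)."""
    true_speed, measured_speed = 25.5, 25.0  # hypothetical: true scale factor is 1.02
    kf = AlongTrackKF()
    true_s = raw_s = 0.0
    for step in range(open_sky_steps + tunnel_steps):
        true_s += true_speed * dt
        raw_s += measured_speed * dt  # dead reckoning without scale correction
        kf.predict(measured_speed, dt)
        if step < open_sky_steps:
            kf.update(true_s, r=1.0)  # noise-free fix, assumed variance 1 m^2
            raw_s = true_s            # uncorrected DR is re-anchored while GPS exists
    return kf.k, abs(kf.s - true_s), abs(raw_s - true_s)


k_est, filter_error_m, raw_error_m = simulate()
```

Because the scale factor is learned while GPS is available, the filter's dead-reckoning drift inside the simulated tunnel stays far below that of the uncorrected speedometer integration. A full implementation would carry the additional error states in the same predict/update structure.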

Based on the configured system, an experiment was conducted by driving a round trip from Anyang–Pyeongchon to Pangyo, passing through the Suam Tunnel and the Suri Tunnel in sequence (Figure 4). The drive complied with expressway speed regulations and was made in the fourth lane after 4:00 p.m. The driving results are presented in Figure 5 below.

2. Results

In this study, a positioning accuracy experiment was conducted on vehicle navigation technology for ultra-long underground expressways using an autonomous driving simulation vehicle. In occluded sections where GPS signals are interrupted, inertial navigation positioning was performed by fusing IMU and IVN data, and vision-based navigation positioning was performed by integrating the camera and LiDAR. Owing to the challenging tunnel environment (e.g., signal obstruction by moving objects and multipath fading), fluctuations in absolute positioning were observed in certain sections, resulting in a maximum error of 113.62 cm. While this level of accuracy is acceptable for non-research vehicles using commercial navigation systems, it was deemed insufficient for autonomous driving systems. The experimental results indicate that accurate positioning in GPS-denied areas, for both autonomous and non-research vehicles, requires a continuous absolute positioning system that integrates infrastructure-based technologies such as virtual GPS signals, BLE (Bluetooth Low Energy), and UWB (Ultra-Wideband). Future research will focus on integrating these technologies with autonomous driving systems and commercial navigation systems to develop a stable vehicle navigation system for tunnel environments.
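The text does not state how the maximum error was computed. One common way to summarize positioning accuracy is to compare time-aligned estimated and reference positions and report the maximum and root-mean-square horizontal error. The sketch below shows that calculation; the function names and sample coordinates are hypothetical and are not data from the experiment.

```python
import math


def horizontal_errors(estimated, reference):
    """Per-sample Euclidean error (m) between time-aligned (east, north) tracks."""
    if len(estimated) != len(reference):
        raise ValueError("tracks must have the same number of samples")
    return [
        math.hypot(ex - rx, ey - ry)
        for (ex, ey), (rx, ry) in zip(estimated, reference)
    ]


def summarize_cm(errors_m):
    """Maximum and RMS error, converted from metres to centimetres."""
    rmse_m = math.sqrt(sum(e * e for e in errors_m) / len(errors_m))
    return {"max_cm": max(errors_m) * 100.0, "rmse_cm": rmse_m * 100.0}


# Hypothetical three-sample tracks, in metres.
estimated_track = [(0.0, 0.0), (10.0, 0.3), (20.0, 0.4)]
reference_track = [(0.0, 0.0), (10.0, 0.0), (20.3, 0.0)]
summary = summarize_cm(horizontal_errors(estimated_track, reference_track))
```

Reporting the RMS value alongside the maximum helps distinguish a single outlier section from a consistently degraded solution.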

Author Contributions

Conceptualization, K.-S.C. and S.-J.K.; methodology, K.-S.C.; software, Y.-H.S. and M.-G.C.; validation, K.-S.C. and Y.-H.S.; formal analysis, M.-G.C.; investigation, K.-S.C. and M.-G.C.; resources, S.-J.K. and W.-W.L.; data curation, K.-S.C., Y.-H.S. and M.-G.C.; writing—original draft preparation, K.-S.C. and Y.-H.S.; writing—review and editing, K.-S.C. and Y.-H.S.; visualization, M.-G.C.; supervision, S.-J.K. and W.-W.L.; project administration, S.-J.K. and W.-W.L.; funding acquisition, S.-J.K. and W.-W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Land, Infrastructure and Transport/Korea Agency for Infrastructure Technology Advancement under the project titled “Development of Technology to Enhance Safety and Efficiency of Ultra-Long K-Underground Expressway Infrastructure” [Project No. RS-2024-00416524]. The APC was funded by the same project.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available in this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wu, H.; Deng, J.; Wen, C.; Li, X.; Wang, C.; Li, J. CasA: A Cascade Attention Network for 3-D Object Detection from LiDAR Point Clouds. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5704511.

- Wang, C.Y.; Yeh, I.H.; Liao, M. Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the Computer Vision—ECCV, Milan, Italy, 29 September–4 October 2024.

- Wu, D.; Liao, M.; Zhang, W.; Wang, X.; Bai, X.; Cheng, W.; Liu, W.Y. A simple feature augmentation for domain generalization. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Montreal, QC, Canada, 1 October 2021.

- Groves, P.D. Principles of GNSS, Inertial, and Multi-Sensor Integrated Navigation Systems, 2nd ed.; Artech House: Norwood, MA, USA, 2008.

- Titterton, D.H.; Weston, J.L. Strapdown Inertial Navigation Technology, 2nd ed.; The Institution of Engineering and Technology: London, UK, 2004.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).