Abstract

Semantic segmentation is a vast field with many contributions, which can be difficult to organize and comprehend due to the amount of research available. Advancements in technology and processing power over the past decade have led to a significant increase in the number of developed models and architectures. This paper provides a brief perspective on 2D segmentation by summarizing the mechanisms of various neural network models and the tools and datasets used for their training, testing, and evaluation. Additionally, this paper discusses methods for identifying new architectures, such as Neural Architecture Search, and explores the emerging research field of continuous learning, which aims to develop models capable of learning continuously from new data.

1. Introduction

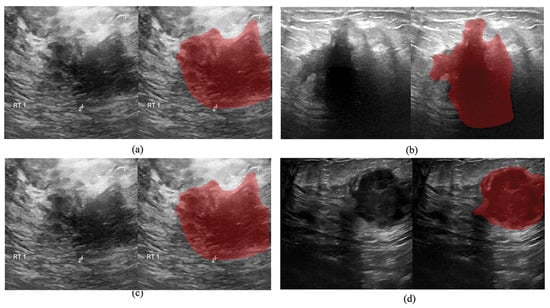

Semantic segmentation is an image analysis technique that assigns a label to every pixel in an image to obtain pixel-accurate masks of all represented objects [1]. This approach improves on object detection methods, which frame objects within a rectangle and therefore have larger localization errors, and it finds applications in fields such as medicine, autonomous driving, and video surveillance. For instance, medical imaging serves as a non-invasive diagnostic technique that produces images of the internal structures of a patient’s body. These images can be analyzed using semantic segmentation to aid medical professionals in diagnosing conditions such as cancer [2], as illustrated in Figure 1.

Autonomous driving, on the other hand, involves the integration of sensors and software, including segmentation networks, into a vehicle to actively identify and segment objects, pedestrians, traffic signs, and other relevant elements. The ultimate objective is to achieve fully autonomous vehicles [3]. Similarly, video surveillance, commonly referred to as Closed-Circuit Television (CCTV), involves the real-time recording of individuals, locations, and events. The use of artificial intelligence in these systems improves the detection of individuals and suspicious activities. However, it also raises concerns regarding privacy, civil liberties, and the potential misuse of this technology [4,5].

Figure 1.

Example of a breast cancer segmentation result, using DeepLabV3+ with (a) DarkNet53, (b) SqueezeNet, (c) EfficientNet-b0, (d) DarkNet19. Reproduced from Deepak and Bhat [6], licensed under CC BY-NC 4.0.

Currently, machine learning algorithms and techniques form the basis of most semantic image segmentation solutions. Traditional methods, which include thresholding [7], region-growing [8], watershed segmentation [9], Conditional Random Fields (CRFs) and Markov Random Fields [10], active contours [11], and edge detection methods [12], are sometimes incorporated into artificial intelligence solutions but are typically less accurate and less adaptable to lighting changes and intraclass differences than deep learning methods [13]. Intraclass differences are particularly important for object identification, as objects can vary widely in shape, size, and texture.

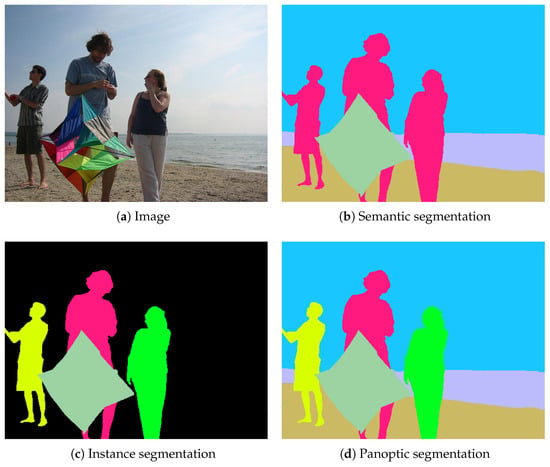

Semantic segmentation can be improved by including instance segmentation, which differentiates multiple instances of an object in an image [14]. This technique is known as panoptic segmentation and combines semantic and instance segmentation to obtain a more comprehensive description of an image. Panoptic segmentation presents additional challenges since it requires accurate semantic and instance segmentation simultaneously [15,16], as exemplified in Figure 2.

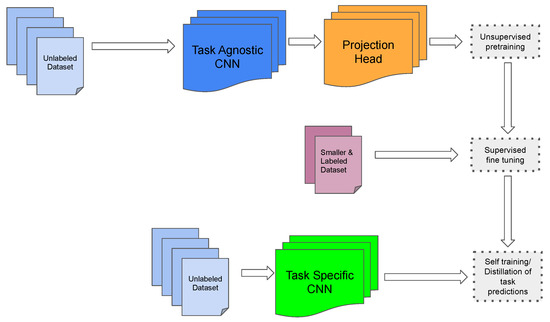

Object detection using semantic segmentation can be computationally intensive, making it challenging to use in real-time or in video applications. Video Semantic Segmentation (VSS) introduces additional challenges because it needs to produce consistent results over time while dealing with changing lighting conditions, possible occlusions, and object movement. Furthermore, semantic segmentation requires significant time to label each image, adding to the computation time [17]. To address these challenges, researchers have been exploring semi-supervised annotation methods to train their networks more efficiently [18]. One such model is SimCLRv2 [19], which pre-trains a ResNet model with unlabeled data in a task-agnostic way, followed by training with a small amount of labeled data, and finally training with unlabeled data in a task-specific way, as depicted in Figure 3. In addition to SimCLRv2, other techniques such as pseudo-labeling [20], consistency regularization [21], and teacher–student networks [22] have been proposed for semi-supervised semantic segmentation, each aiming to reduce the need for labeled data while preserving model performance.

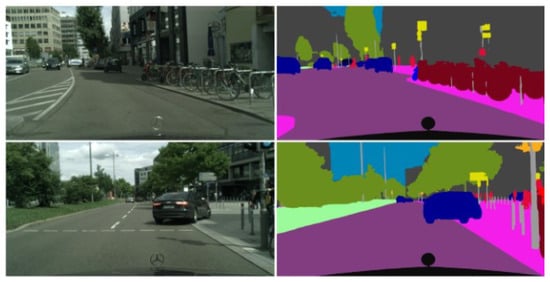

Despite the abundance of research on semantic segmentation and panoptic segmentation for images, there are fewer examples of networks designed for their video equivalents: VSS [23] and Video Panoptic Segmentation (VPS) [15]. VPS has been less popular, likely due to the scarcity of appropriately labeled datasets for this task, which are not as readily available as image segmentation datasets. An example of video semantic segmentation is shown in Figure 4.

Figure 2.

Example of semantic, instance, and panoptic image segmentation: (a) the original image; (b) semantic segmentation, with no distinction between each individual; (c) instance segmentation, distinguishing between individuals, but no segmentation of the background; and (d) panoptic segmentation, distinguishing between individuals while performing background segmentation. Reproduced from Jung et al. [24], licensed under CC BY 4.0.

Figure 3.

SimCLRv2, a model where deep semi-supervised learning is applied. The ResNet model is pre-trained with unlabeled data, then trained with a small amount of labeled data, and finally trained for a more specific task with unlabeled data.

Figure 4.

Ground-truth labels of objects in different video frames. Adapted from Portillo-Portillo et al. [25], licensed under CC BY 4.0.

This paper presents a brief perspective of deep learning 2D semantic segmentation techniques, discussing diverse approaches critical to this field of research. Although many recent models integrate components from multiple architectural paradigms (such as encoder–decoder structures enhanced with attention mechanisms or hybrid convolutional-transformer designs), we adopt a modular organization centered on primary architectural families for simplicity and historical continuity. In Section 2, we start by highlighting the fundamentals of Fully Convolutional Neural Networks (FCNs), introducing core concepts and analyzing various convolution types such as atrous and transposed convolution [26,27,28]. In Section 3, we introduce Graph Convolutional Networks (GCNs), examining their functionalities and inherent limitations. In Section 4, we introduce fundamental encoder–decoder models, offering insights into their structures, with special emphasis on U-Nets [29]; this is followed by Section 5, where Feature Pyramid Networks (FPNs) are discussed. Recurrent Neural Networks (RNNs) are introduced in Section 6. Section 7 describes Generative Adversarial Networks (GANs), Section 8 introduces Attention-Based Networks (ABNs), and Section 9 covers Spatial Transformer Networks (STNs). We focus on Neural Architecture Search (NAS) in Section 10, followed by a detailed discussion on continuous learning in Section 11. Section 12 presents several important datasets used with the different deep learning architectures, covering both 2D and 2.5D cases. Section 13 addresses key implementation considerations, including software frameworks, hardware constraints, and optimization techniques relevant to training segmentation models. Metrics for assessing algorithm accuracy and their respective drawbacks are discussed in Section 14. Section 15 looks into the accuracy of several state-of-the-art models on various datasets, discussing architectural reasons for performance increases over time and highlighting possible paths for additional improvement. In Section 16, several very recent trends in semantic segmentation are analyzed, hinting at potential future paths in this field. This paper closes with Section 17, in which we offer some conclusions.

2. Fully Convolutional Neural Networks

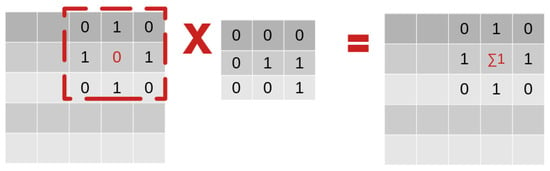

Convolutional Neural Networks (CNNs) [30] are a popular type of neural network architecture, especially for tasks related to image processing. CNNs use convolutional layers to apply convolutions to input images, as demonstrated in Figure 5.

Figure 5.

Example of a convolutional layer operation, where a convolutional 3 × 3 filter is applied to the center pixel highlighted in red. The filter sums the multiplications between the area lined in red (on the left) and the filter (center), which results in the center pixel changing value from 0 to 1.

The convolutional layer operation is defined by Equation (1), where $x$ is the input feature map, $w$ is the convolutional kernel, $y$ is the output feature map, $f$ is the activation function (such as Rectified Linear Unit, or ReLU), $b$ is the bias term, and $M$ and $N$ are the dimensions of the convolution kernel (indices start at zero).

$$y_{i,j} = f\left(\sum_{m=0}^{M-1}\sum_{n=0}^{N-1} w_{m,n}\, x_{i+m,\,j+n} + b\right) \tag{1}$$

Note that Equation (1) assumes that the input feature map and the convolutional kernel have the same number of channels. When input and output channel dimensions differ, convolutional layers apply multiple filters, where each filter spans all input channels and produces one output channel, enabling the network to learn diverse feature representations [31].
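As an illustration, the single-channel operation that Equation (1) describes can be sketched in a few lines of NumPy. This is a minimal sketch rather than a reference implementation: it assumes no padding and a stride of 1 (neither is stated in the text), uses the cross-correlation form common in deep learning libraries, and the names `conv2d_single`, `x`, `w`, and `b` are chosen here for illustration.

```python
import numpy as np

def conv2d_single(x, w, b=0.0, activation=lambda v: np.maximum(v, 0.0)):
    """Single-channel convolutional layer (assumed: no padding, stride 1).

    Computes y[i, j] = f(sum_m sum_n w[m, n] * x[i + m, j + n] + b),
    with ReLU as the default activation f.
    """
    M, N = w.shape
    H, W = x.shape
    y = np.empty((H - M + 1, W - N + 1))
    for i in range(y.shape[0]):
        for j in range(y.shape[1]):
            # Multiply the kernel with the region it covers and sum the products.
            y[i, j] = np.sum(w * x[i:i + M, j:j + N]) + b
    return activation(y)

x = np.arange(16, dtype=float).reshape(4, 4)   # toy 4 x 4 input feature map
w = np.ones((3, 3)) / 9.0                      # 3 x 3 mean filter
y = conv2d_single(x, w)
print(y.shape)                                 # 2 x 2 output feature map
```

With several input channels, the same sum would additionally run over the channel axis, and one such filter would be applied per output channel.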

Apart from convolutional layers, CNNs often include non-convolutional layers such as pooling layers, which simplify the feature maps, and activation functions, which allow the network to model non-linear functions. The most commonly used types of pooling layer are max and average [31,32]. Equations (2) and (3) describe the operation of max and average pooling layers, respectively, where $x$ is the input feature map, $y$ is the output feature map, $M$ and $N$ are the dimensions of the pooling kernel, and $s$ is the stride (i.e., the distance between adjacent pooling operations). Each pooling kernel slides over the input feature map, replacing each region with its maximum or average value [33].

$$y_{i,j} = \max_{0 \le m < M,\; 0 \le n < N} x_{i s + m,\; j s + n} \tag{2}$$

$$y_{i,j} = \frac{1}{MN}\sum_{m=0}^{M-1}\sum_{n=0}^{N-1} x_{i s + m,\; j s + n} \tag{3}$$

There are other types of pooling layers. For example, in L2 pooling [34], each patch of the input feature map is replaced with the L2 norm of the values in that patch. Similarly, a stochastic pooling layer [35] replaces each patch of the input feature map with a randomly selected value from that patch, with the probability of each value being selected proportional to its magnitude. Finally, global pooling layers [36], such as global max or global average, operate on the entire feature map and produce a single output value, which creates lighter architectures. All of these components enable CNNs to achieve state-of-the-art performance on a variety of computer vision tasks.
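The pooling variants described above differ only in how each region is reduced to a single value. The following NumPy sketch makes this explicit; the function name `pool2d` and its `reduce` argument are illustrative choices, and the toy input is hypothetical.

```python
import numpy as np

def pool2d(x, M, N, s, reduce=np.max):
    """Slide an M x N window over x with stride s and reduce each region."""
    H, W = x.shape
    out_h = (H - M) // s + 1
    out_w = (W - N) // s + 1
    y = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            y[i, j] = reduce(x[i * s:i * s + M, j * s:j * s + N])
    return y

x = np.array([[1., 2., 5., 6.],
              [3., 4., 7., 8.],
              [0., 0., 1., 1.],
              [0., 4., 1., 1.]])

max_pooled = pool2d(x, 2, 2, 2, reduce=np.max)    # max pooling
avg_pooled = pool2d(x, 2, 2, 2, reduce=np.mean)   # average pooling
l2_pooled = pool2d(x, 2, 2, 2, reduce=lambda r: np.sqrt(np.sum(r ** 2)))  # L2 pooling
global_avg = x.mean()                             # global average pooling: one value per map
```

Here a 4 × 4 map is reduced to 2 × 2 by the local pooling operations and to a single value by global average pooling.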

FCNs [1] are a variation of CNNs that consist solely of convolutional layers. This architecture preserves spatial information by allowing the output to be the same size as the input image. However, FCNs typically have significantly more weight parameters than traditional CNNs, since the convolutional layers in FCNs have to learn more complex and diverse features from the input data. Despite this drawback, FCNs are powerful tools for various image segmentation and dense prediction tasks.

2.1. Atrous Convolution

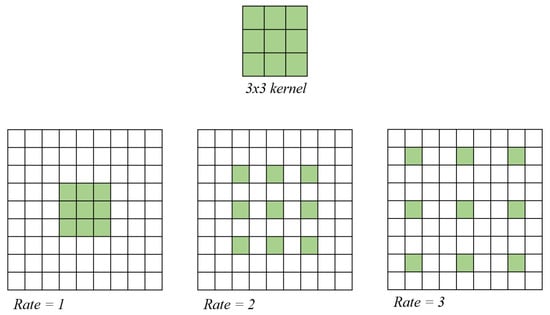

In addition to the standard convolutions, another technique called atrous or dilated convolution has gained popularity [26,27]. The purpose of atrous convolution is to address the issue of losing spatial information during pooling stages in a traditional convolutional network. By introducing “holes” into the convolutional kernel, as shown in Figure 6, atrous convolutions upsample the filter kernels, allowing for the recycling of pre-trained models to extract more detailed feature maps [27]. Specifically, the last pooling layers in a model can be replaced with atrous convolutional layers [37]. Dilated convolutions also increase the network’s receptive field, enabling it to learn more detailed features in the inputs without adding extra learnable parameters [38]. Dilated convolutions are commonly used in various applications, such as image segmentation [26], object detection [38], and video processing [39].

Figure 6.

Atrous convolutional layer. The kernel operates like a standard convolutional kernel but includes an additional rate parameter, which determines how far apart the kernel cells are from each other. The convolution is then computed over the pixels shown in green, whose positions depend on the rate value.
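The effect of the rate parameter can be illustrated with a short NumPy sketch. It assumes a single channel, no padding, and stride 1; the helper names `dilate_kernel` and `atrous_conv2d` are hypothetical. The sketch shows that a 3 × 3 kernel with rate 2 covers a 5 × 5 region while still holding only nine weights.

```python
import numpy as np

def dilate_kernel(w, rate):
    """Insert (rate - 1) zeros ("holes") between neighbouring kernel cells."""
    M, N = w.shape
    out = np.zeros(((M - 1) * rate + 1, (N - 1) * rate + 1), dtype=w.dtype)
    out[::rate, ::rate] = w
    return out

def atrous_conv2d(x, w, rate=1):
    """Atrous convolution (assumed: single channel, no padding, stride 1)."""
    M, N = w.shape
    span_h, span_w = (M - 1) * rate + 1, (N - 1) * rate + 1
    H, W = x.shape
    y = np.empty((H - span_h + 1, W - span_w + 1))
    for i in range(y.shape[0]):
        for j in range(y.shape[1]):
            # Sample the input every `rate` pixels inside the enlarged window.
            patch = x[i:i + span_h:rate, j:j + span_w:rate]
            y[i, j] = np.sum(w * patch)
    return y

w = np.ones((3, 3))
x = np.arange(49, dtype=float).reshape(7, 7)
print(dilate_kernel(w, 2).shape)            # footprint grows from 3 x 3 to 5 x 5
print(atrous_conv2d(x, w, rate=2).shape)    # output size on a 7 x 7 input
```

Setting `rate=1` recovers the standard convolution, which is why atrous layers can reuse weights from pre-trained models.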

2.2. Transposed Convolution

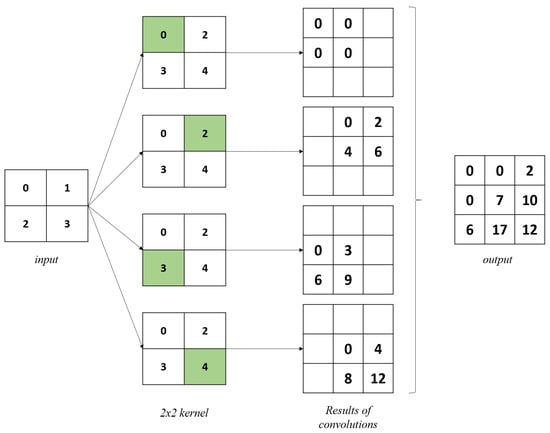

Transposed Convolution [28], also known as fractionally strided convolution or deconvolution, operates in the opposite direction of regular convolution and is typically used in the decoder layers of autoencoders or segmentation networks to increase the spatial resolution of feature maps. The purpose of transposed convolution is to recover spatial resolution by upsampling feature maps, helping to reverse the downsampling effects of pooling layers. While it can help control model complexity, the number of learnable parameters depends on how it is implemented within the network architecture [40]. This process is highlighted in Figure 7.

Figure 7.

Transposed convolutional layer. As pictured, a transposed convolution operation multiplies each pixel value in the input by every kernel cell, places the products in the output space, and sums whichever values occupy the same output position.

Despite its many advantages, one of the main problems with transposed convolution is the checkerboard problem. This arises from uneven overlap patterns during the operation, which can occur due to the interaction between kernel size, stride, and padding—even when the kernel size is divisible by the stride—leading to some output pixels being updated more frequently than others. As a result, some pixels may be updated multiple times while others are not, creating a checkerboard pattern in the output feature map [41]. This can limit the network’s capacity to recreate photo-realistic images. Several techniques have been proposed to solve this problem. One solution is to use the pixel transpose convolutional layer proposed by Gao et al. [42], which creates intermediate feature maps of the input feature maps in sequence. This adds dependencies between adjacent pixels in the final output feature map, which helps avoid the creation of checkerboard artifacts.
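The uneven overlap behind checkerboard artifacts can be made visible with a small NumPy sketch. It assumes a single channel and no padding, and the function name `transposed_conv2d` is illustrative. Feeding an all-ones input through an all-ones kernel makes each output value equal to the number of kernel placements that cover that pixel.

```python
import numpy as np

def transposed_conv2d(x, w, stride):
    """Scatter each input pixel, scaled by the kernel, into the output and sum overlaps."""
    H, W = x.shape
    K, L = w.shape
    y = np.zeros(((H - 1) * stride + K, (W - 1) * stride + L))
    for i in range(H):
        for j in range(W):
            y[i * stride:i * stride + K, j * stride:j * stride + L] += x[i, j] * w
    return y

ones_in = np.ones((4, 4))
# With an all-ones input and kernel, each output value counts how many
# kernel placements overlap at that pixel.
overlap_k3 = transposed_conv2d(ones_in, np.ones((3, 3)), stride=2)
overlap_k2 = transposed_conv2d(ones_in, np.ones((2, 2)), stride=2)
print(overlap_k3[1:-1, 1:-1])   # interior coverage alternates between 1, 2 and 4
print(overlap_k2)               # every pixel is covered exactly once
```

In this unpadded setting, the 3 × 3 kernel with stride 2 produces the alternating coverage pattern, whereas the 2 × 2 kernel with stride 2 covers the output uniformly. Other combinations of kernel size, stride, and padding may behave differently.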

3. Graph Convolutional Networks

GCNs [43] are a type of CNN used for analyzing data represented in graphs. Graph data structures can capture complex relationships present in the data. However, the non-Euclidean geometry of graph-structured data presents a significant challenge for obtaining deep insights about the information it contains. Traditional graph [44,45,46] and network embedding methods [47,48] attempt to address this issue by studying the low-dimensional characteristics of graphs. However, these methods can suffer from shallow learning and may not capture the complex nature of the data [49].

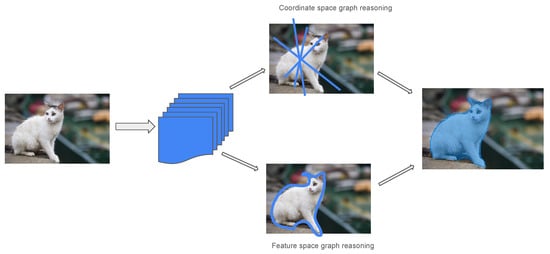

To overcome these limitations, deep learning approaches like GCNs have been developed to enable a better understanding of graph-structured data. GCNs extend CNNs to non-Euclidean geometry, which is necessary for analyzing data represented in graphs that lack a regular structure [50]. GCNs work by applying convolutional filters to node features through message passing. In this process, each node in the graph aggregates information from its neighbors, transforms the information, and then sends it back to its neighbors [49]. This enables GCNs to learn representations that capture the underlying patterns and relationships within the graphs. One such GCN for semantic segmentation is DGCNet [51], as represented in Figure 8.

Figure 8.

DGCNet, an example of a GCN created for semantic segmentation. The model consists of two GCN branches that propagate information in both spatial and channel dimensions of a convolutional feature map.

By leveraging these representations, GCNs can solve a wide range of problems in various domains, including social networks [52], biology [53], recommender systems [54], drug development [55], and text classification [56].
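The message-passing step described above can be sketched as a single graph convolution layer. The sketch below uses one common formulation, the symmetrically normalized propagation rule with self-loops; this specific rule is an assumption, since the text does not fix a formulation. The toy graph, the features, and the name `gcn_layer` are hypothetical.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One graph convolution: aggregate neighbour features, then transform them."""
    A_hat = A + np.eye(A.shape[0])               # add self-loops
    deg = A_hat.sum(axis=1)                      # node degrees (including self-loop)
    D_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    A_norm = D_inv_sqrt @ A_hat @ D_inv_sqrt     # symmetric normalisation
    return np.maximum(A_norm @ H @ W, 0.0)       # linear transform followed by ReLU

# Hypothetical 3-node path graph 0 - 1 - 2 with two features per node.
A = np.array([[0., 1., 0.],
              [1., 0., 1.],
              [0., 1., 0.]])
H = np.array([[1., 0.],
              [0., 1.],
              [1., 1.]])
W = np.eye(2)   # identity weights so that the aggregation is easy to inspect
H_next = gcn_layer(A, H, W)
```

After one layer, each node's features are a weighted mixture of its own features and those of its direct neighbors; stacking layers propagates information further across the graph.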

4. Encoder–Decoder Models and U-Nets

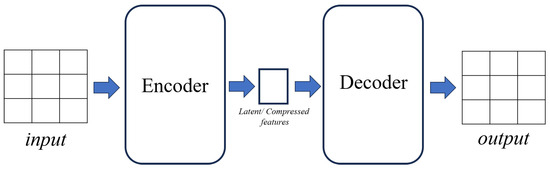

Encoder–decoder models (Figure 9) consist of two key components: an encoder, which applies a function z = f(x) to the input x, and a decoder, which predicts the output y from z. The encoder extracts semantic information from the input, which is useful for predicting the output, such as pixel-wise masks. Auto-encoders are a special type of encoder–decoder model, where the decoder attempts to reconstruct the original input from the lower-dimensional representation given by the encoder.

Figure 9.

Encoder–decoder network architecture. The specific layers and the functions applied to the input vary between models. In this figure, an input image passes through an encoder, then through other possible feature-compression operations, before passing through the decoder network.

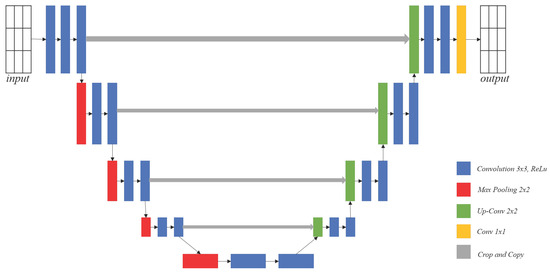

U-Nets, illustrated in Figure 10, are a type of encoder–decoder architecture characterized by an encoder for feature extraction and a decoder that mirrors the encoder’s structure. The decoder consists of transposed convolutions and skip connections, which link corresponding layers in the encoder and decoder to preserve spatial information.

Figure 10.

The U-Net architecture is composed of an encoder and a decoder that together behave similarly to an auto-encoder. The input passes through several convolutional and pooling layers, and each level in the architecture sends its feature maps to the symmetrically opposed layer, which, through a series of up-convolutions, tries to recreate the input using both of the available feature maps.
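The role of a skip connection can be illustrated at the level of tensor shapes. The NumPy sketch below is a deliberately simplified assumption: it omits all learned convolutions and replaces the transposed convolution with nearest-neighbor upsampling, so it only shows how encoder features are concatenated with upsampled decoder features. The names `max_pool_2x2` and `upsample_2x` are illustrative.

```python
import numpy as np

def max_pool_2x2(x):
    """2 x 2 max pooling on a (C, H, W) feature map with even H and W."""
    C, H, W = x.shape
    return x.reshape(C, H // 2, 2, W // 2, 2).max(axis=(2, 4))

def upsample_2x(x):
    """Nearest-neighbour 2x upsampling (stand-in for a learned up-convolution)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

rng = np.random.default_rng(0)
enc1 = rng.standard_normal((8, 32, 32))          # encoder features at full resolution
enc2 = max_pool_2x2(enc1)                        # downsampled features, (8, 16, 16)

dec1 = upsample_2x(enc2)                         # decoder restores the resolution, (8, 32, 32)
merged = np.concatenate([enc1, dec1], axis=0)    # skip connection, (16, 32, 32)
```

The concatenated map carries both the fine spatial detail from the encoder and the coarser, more semantic features from the decoder path.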

U-Nets are commonly used in medical imaging [29], displaying exceptional efficiency in segmentation and classification tasks (including cell tracking and radiography [57]) and high precision when distinguishing between lesions and organs. Figure 11 shows the use of a U-Net-based architecture in retinal blood vessel segmentation tasks. In addition, U-Nets have been studied in combination with other methods, such as attention modules and residual structures, to further enhance their performance. Attention U-Net [58], for instance, has been successful in accurately detecting smaller organs like the pancreas, while RU-Net [59] has fewer parameters, improving performance. TransUNet [60], on the other hand, integrates transformers and CNNs into the encoder to utilize medium and high-resolution feature maps, which help to maintain more fine-grained information about the images.

Figure 11.

Examples of different output images from the R2U-Net model for retinal blood vessel segmentation (RBS) in three datasets: (a) DRIVE dataset; (b) STARE dataset; and (c) CHASE_DB1 dataset. For all examples, the first row displays the input images, the second row depicts the ground-truth images, and the third row shows the results. Adapted from Alom et al. [61], licensed under CC BY 4.0.

5. Feature Pyramid Networks

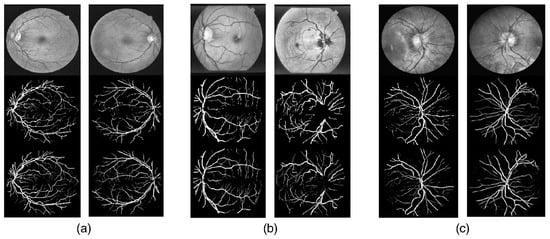

FPNs, as shown in Figure 12, were developed to reduce the computational costs associated with pyramid representations in object detectors [62]. FPN leverages the inherent multi-scale and pyramidal hierarchy found in deep convolutional networks, enabling the creation of a feature pyramid with significantly reduced computational demands. This approach also facilitates object recognition across a wide range of scales, thanks to the pyramid-scaled feature maps. FPN comprises the following key components [62]:

Figure 12.

Feature Pyramid Network architecture. The input passes through each layer from #1 to #6 consecutively through the bottom-up pathways, which are represented horizontally, creating the respective feature maps. In this image, the stronger the features in each map, the bolder the borders of the squares. Besides this pathway, the input also travels laterally, as represented by the vertical arrows between each layer. In the final layers, the input is fed through the top-down pathway, going right to left in this image; in addition to feeding into the next layer, each stage also receives the feature maps from the lateral connections that send the superficial feature maps.

- The bottom-up pathway, responsible for the feed-forward computation of the convolutional network’s backbone. This pathway aggregates feature maps from multiple scales, scaling them by a factor of 2 to establish the feature hierarchy.

- The top-down pathway, which generates higher-resolution features by upsampling feature maps with lower spatial resolutions but richer semantic content from higher pyramid levels.

- Lateral connections, which merge maps from both the bottom-up and top-down pathways, ensuring that maps with matching spatial sizes are combined. The bottom-up pathway provides detailed information about lower-level semantics and precise object locations, while the top-down pathway contributes to higher-level semantic context. This merging process involves element-wise addition, followed by a convolution to reduce aliasing effects resulting from upsampling.

By employing these techniques, the network creates a feature pyramid with rich semantics at all levels. This feature pyramid finds applications across various domains and serves as a versatile network that can replace traditional featurized image pyramids without introducing additional computational burdens, compromising speed, or increasing memory usage [63,64].
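The interaction between the three components listed above can be sketched as follows. This is a simplified NumPy illustration under stated assumptions: the bottom-up feature maps are given (random stand-ins here), lateral connections use 1 × 1 convolutions to bring all levels to a common channel count, upsampling is nearest-neighbor, and the final smoothing convolution that reduces aliasing is omitted. All names and sizes are hypothetical.

```python
import numpy as np

def upsample_2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def conv1x1(x, w):
    """1 x 1 convolution: mixes channels only; w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def fpn_top_down(bottom_up, lateral_weights):
    """bottom_up: list of (C_i, H_i, W_i) maps, finest first; each level halves H and W."""
    laterals = [conv1x1(c, w) for c, w in zip(bottom_up, lateral_weights)]
    pyramid = [laterals[-1]]                      # start from the coarsest level
    for lat in reversed(laterals[:-1]):
        # Merge by element-wise addition with the upsampled coarser level.
        pyramid.insert(0, lat + upsample_2x(pyramid[0]))
    return pyramid

rng = np.random.default_rng(0)
C2 = rng.standard_normal((16, 32, 32))   # stand-ins for backbone feature maps
C3 = rng.standard_normal((32, 16, 16))
C4 = rng.standard_normal((64, 8, 8))
weights = [rng.standard_normal((8, c.shape[0])) for c in (C2, C3, C4)]
P2, P3, P4 = fpn_top_down([C2, C3, C4], weights)
```

Every pyramid level ends up with the same number of channels, so a single prediction head could be shared across scales.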

6. Recurrent Neural Networks

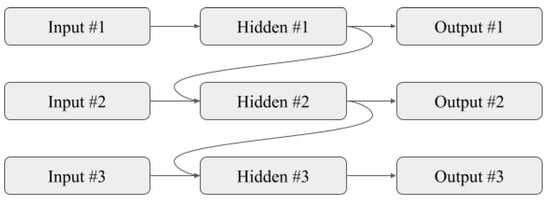

Processing sequential data, such as speech, videos, or text, requires the network to remember previous data. This allows the network to maintain an understanding of the context and relationships between the inputs and classify the action or intent behind the data. RNNs [65] are commonly used when the data need this type of analysis, as they can process sequential data with a variable length. Figure 13 depicts a simplified representation of an unfolded RNN.

Figure 13.

Simplified representation of an unfolded RNN. Each hidden layer has n hidden units that are connected through time recurrently.

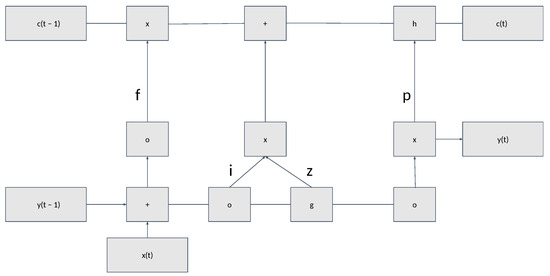

RNNs work by recurrently feeding information regarding the previous input states into the following processing states, which allows the end nodes to analyze data regarding all previous inputs and their relationships with minimal loss of context. However, simple RNNs have some limitations. For example, vanishing gradients can make it difficult to learn long-term dependencies, and short-term memory makes them less effective for longer chains of data that need to be analyzed together [66]. To overcome these limitations, two specialized architectures were created: Long Short-Term Memory (LSTM) [67] and Gated Recurrent Unit (GRU) [68]. An LSTM block is shown in Figure 14.

Figure 14.

LSTM block, where σ represents the sigmoid activation function and g and h represent input activation functions, usually tanh.

LSTMs and GRUs can learn the relationships between different inputs of sequential data while solving the short-term memory problem using gates. These gates can learn which information to add or remove from the hidden state, allowing them to selectively keep or discard relevant information.
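To make the gating mechanism concrete, the following NumPy sketch implements one time step of a standard LSTM cell without peephole connections. The stacked parameter layout (`W`, `U`, `b`) and the name `lstm_step` are assumptions made for this illustration, and the weights are random rather than trained.

```python
import numpy as np

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4n, d), U: (4n, n), b: (4n,) stack the parameters of the input gate,
    forget gate, output gate, and candidate state, in that order.
    """
    n = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:n])            # input gate: what to add to the cell state
    f = sigmoid(z[n:2 * n])        # forget gate: what to remove from the cell state
    o = sigmoid(z[2 * n:3 * n])    # output gate: what to expose as hidden state
    g = np.tanh(z[3 * n:4 * n])    # candidate values
    c = f * c_prev + i * g         # updated cell state
    h = o * np.tanh(c)             # updated hidden state
    return h, c

rng = np.random.default_rng(0)
d, n = 4, 3                                      # input size and number of hidden units
W = rng.standard_normal((4 * n, d))
U = rng.standard_normal((4 * n, n))
b = np.zeros(4 * n)
h, c = np.zeros(n), np.zeros(n)
for x_t in rng.standard_normal((5, d)):          # a sequence of five inputs
    h, c = lstm_step(x_t, h, c, W, U, b)
```

The same parameters are reused at every time step, which is what allows the cell to process sequences of variable length.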

RNNs have been successfully used in many applications, including speech recognition [69], language modeling [70], machine translation [68], and sentiment analysis [71], to name a few. Their ability to process sequential data with a variable length makes them a powerful tool for many real-world problems.

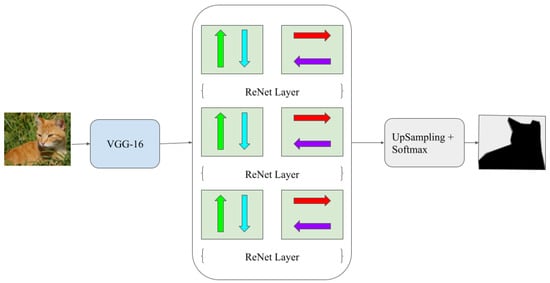

The ReSeg model is an illustrative application of RNNs in semantic segmentation [72]. This approach incorporates ReNet layers, each consisting of four RNN modules that analyze the image from different directions (up-down, left-right, and vice-versa). The overall network architecture combines a VGG-16 network (a CNN typically used for image recognition and classification tasks) [73], which takes input images, with ReNet layers. Subsequently, the processed information passes through upsampling and softmax layers to generate the final object mask, as depicted in Figure 15.

Figure 15.

ReSeg network. The input image is preprocessed through a pre-trained VGG-16 network and fed through consecutive ReNet layers, followed by upsampling and non-linear softmax. Each ReNet layer sweeps the image with RNNs in two passes. The first pass combs through the image pixel values in an up-down pattern, and the second does the same but reads the image sideways. Both feature maps are then fed through two more ReNet layers which behave in the same way, followed by the upsampling and softmax layers.

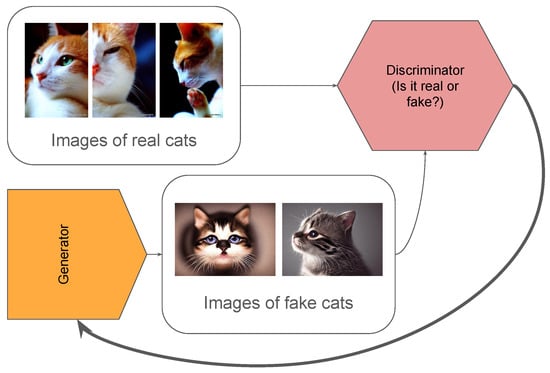

7. Generative Adversarial Networks

GANs [74] are a type of neural network composed of two components: a generator network G and a discriminator network D, as represented in Figure 16. The generator network creates new data samples, such as images, while the discriminator network learns to distinguish between real data and data created by the generator. The two networks are trained in a minimax game, where the generator learns to produce samples realistic enough to fool the discriminator, while the discriminator maximizes its ability to distinguish real from fake data.

Figure 16.

GAN network simplified. The network is made of two components: the discriminator and the generator. The discriminator receives two sets of inputs: real images and fake images generated by the generator, and tries to differentiate one type from the other. After this, the generator receives the results of the discriminator’s test and tries to create better fake images.
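The minimax game can be illustrated numerically with the standard GAN value function, V(D, G) = E[log D(x)] + E[log(1 − D(G(z)))], which the discriminator tries to maximize and the generator tries to minimize. The sketch below only evaluates this quantity for hypothetical discriminator outputs; it trains nothing, and the name `gan_value` is illustrative.

```python
import numpy as np

def gan_value(d_real, d_fake):
    """V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))] for discriminator outputs in (0, 1)."""
    return np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake))

# Hypothetical discriminator outputs on four real and four generated samples.
confident = gan_value(np.full(4, 0.9), np.full(4, 0.1))   # D separates real from fake
fooled = gan_value(np.full(4, 0.5), np.full(4, 0.5))      # D cannot tell them apart
print(confident > fooled)
```

When the discriminator outputs 0.5 for every sample, the value equals −log 4, which corresponds to the point where the generator's samples are indistinguishable from real data.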

GANs have shown great promise in various machine learning tasks, including unsupervised learning [75], semi-supervised learning [76], and reinforcement learning [77]. For example, in unsupervised learning, GANs have been used for image synthesis [78], data augmentation [79], and video prediction [80]. However, GANs also have some limitations, such as instability during training [81], mode collapse [82], and difficulty in controlling the output [83].

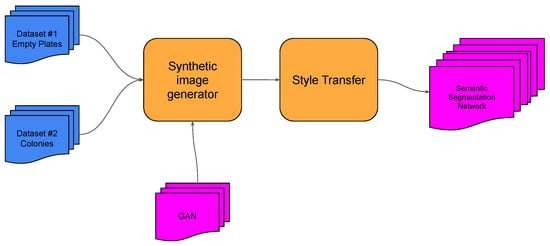

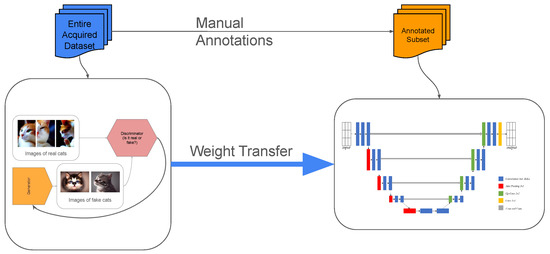

GANs have been applied to the problem of insufficient data for certain image segmentation problems. For example, Andreini et al. [84] created a GAN model trained to generate synthetic data of bacterial colonies in agar plates to train a CNN to segment the bacteria from the background, as illustrated in Figure 17. Besides being useful for the creation of new data from smaller datasets, GANs can also be used for optimizing the initial weights for certain networks. As a case in point, Majurski et al. [85] used a GAN trained on unlabeled data, transferred the values of the resulting weights into a U-Net, and finally re-trained the U-Net with a small amount of labeled data, thus improving the overall performance compared to a baseline U-Net. This overall methodology is summarized in Figure 18.

Figure 17.

GAN network trained with images of bacterial colonies in agar plates to create synthetic data for a CNN to segment the bacteria from the rest of the image. This diagram refers to the method described by Andreini et al. [84].

Figure 18.

GAN-based transfer learning for U-Net segmentation. The GAN learns without supervision from unlabeled data, capturing different patterns and relationships. The resulting weights are then transferred to a U-Net that is trained using a small labeled dataset. This diagram demonstrates the method described by Majurski et al. [85].

8. Attention-Based Networks

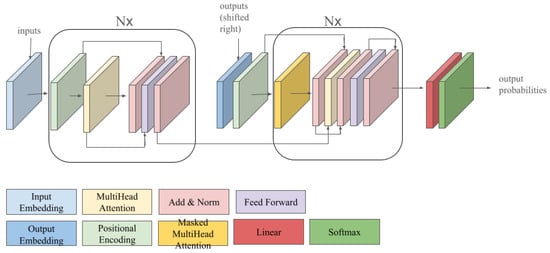

Attention-based networks, or transformer networks [86], use the concept of self-attention from Natural Language Processing (NLP) [87], which assigns importance scores to each token in a sentence. This mechanism enables the transformer, as illustrated in Figure 19, to capture long-range dependencies more effectively than RNNs, which process inputs sequentially and may forget earlier inputs.

Figure 19.

A simplified diagram of a transformer model architecture. This model uses a pair of encoder–decoder blocks with fully connected layers, in addition to attention layers.

Recently, researchers have applied the same concepts and structures to computer vision tasks [88], including semantic segmentation [89]. A transformer model can process multiple inputs at once by dividing the image into smaller patches [90], which are loaded and processed simultaneously. The network receives each patch and creates several attention vectors that describe the contextual relationship between the different regions in the image [90]. Attention allows the model to selectively focus on important parts of the input, improving its ability to capture long-range dependencies and relationships [91].
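The patch-based attention described above can be sketched with single-head scaled dot-product self-attention in NumPy. This is a minimal illustration under several assumptions: the projection matrices are random rather than learned, positional embeddings are omitted, and the names `patchify` and `self_attention`, as well as the image and patch sizes, are hypothetical.

```python
import numpy as np

def patchify(img, p):
    """Split an (H, W, C) image into non-overlapping p x p patches, flattened to vectors."""
    H, W, C = img.shape
    patches = img.reshape(H // p, p, W // p, p, C).transpose(0, 2, 1, 3, 4)
    return patches.reshape(-1, p * p * C)

def softmax(v, axis=-1):
    e = np.exp(v - v.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over patch tokens X of shape (T, d)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[1])
    A = softmax(scores, axis=-1)      # A[i, j]: how strongly patch i attends to patch j
    return A @ V, A

rng = np.random.default_rng(0)
img = rng.standard_normal((8, 8, 3))
tokens = patchify(img, 4)                                   # four patches of 48 values each
Wq, Wk, Wv = (rng.standard_normal((48, 16)) for _ in range(3))
out, attn = self_attention(tokens, Wq, Wk, Wv)
```

Each row of `attn` sums to one and weighs every patch in the image, including distant ones, which is how the mechanism relates regions regardless of their spatial separation.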

Compared to convolutional architectures, transformers have several advantages for computer vision tasks, including the ability to train on larger datasets [92], better inductive bias [93], generalization to other fields, and better modeling of long-range interactions between inputs [94]. In semantic segmentation, transformers have shown promising results and may offer an alternative to traditional CNNs [88]. However, due to the large size of these architectures, the network requires more data for training and may struggle to learn effectively with smaller datasets. Additionally, these architectures consume more memory.

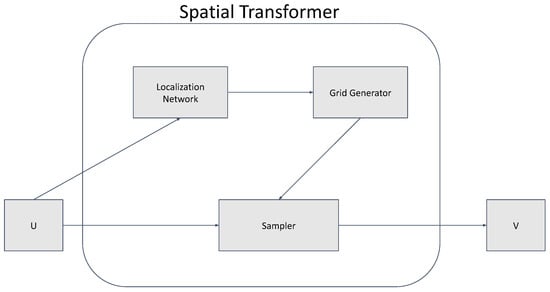

9. Spatial Transformer Networks

Spatial Transformer Networks (STNs) enable the dynamic manipulation of spatial data within images. They consist of three main components: the localization network, the grid generator, and the sampler, as depicted in Figure 20.

Figure 20.

Architecture of a spatial transformer network. Input U passes through a localization net that performs a regression operation. The grid generator creates a sampling grid that is applied over U, producing the feature map V. The central block represents the spatial transformer network, which consists of a localization net, a grid generator, and a sampler.

The localization network predicts transformation parameters based on the input feature map. The grid generator creates a coordinate grid based on the predicted parameters. The sampler uses this grid to sample the input feature map and generates a transformed feature map [86]. STNs can be plugged as learnable modules into CNN architectures, allowing the latter to actively transform feature maps based on the feature maps themselves, and do not require additional training supervision or modifications to the optimization process. STNs enable models to learn invariant transformations such as translation, scaling, rotation, and more general distortions, resulting in state-of-the-art performance across various benchmarks and transformation types [86].
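The grid generator and the sampler can be sketched in NumPy as follows. The sketch assumes a 2 × 3 affine transformation, normalized coordinates in [−1, 1], bilinear interpolation, and border clamping for out-of-range coordinates. In a real STN the parameters `theta` would be predicted by the localization network; here they are set by hand, and all names are illustrative.

```python
import numpy as np

def affine_grid(theta, out_h, out_w):
    """Grid generator: map normalised output coordinates through a 2 x 3 affine matrix."""
    ys, xs = np.meshgrid(np.linspace(-1, 1, out_h), np.linspace(-1, 1, out_w), indexing="ij")
    target = np.stack([xs.ravel(), ys.ravel(), np.ones(out_h * out_w)])   # (3, H*W)
    src = theta @ target                                                   # (2, H*W)
    return src[0].reshape(out_h, out_w), src[1].reshape(out_h, out_w)

def bilinear_sample(U, x_s, y_s):
    """Sampler: bilinearly interpolate U (H, W) at normalised source coordinates."""
    H, W = U.shape
    x = (x_s + 1) * (W - 1) / 2            # convert to pixel coordinates
    y = (y_s + 1) * (H - 1) / 2
    x0 = np.clip(np.floor(x).astype(int), 0, W - 2)
    y0 = np.clip(np.floor(y).astype(int), 0, H - 2)
    wx = np.clip(x - x0, 0.0, 1.0)         # clamping keeps out-of-range points on the border
    wy = np.clip(y - y0, 0.0, 1.0)
    return ((1 - wy) * (1 - wx) * U[y0, x0] + (1 - wy) * wx * U[y0, x0 + 1]
            + wy * (1 - wx) * U[y0 + 1, x0] + wy * wx * U[y0 + 1, x0 + 1])

U = np.arange(25, dtype=float).reshape(5, 5)
identity = np.array([[1., 0., 0.], [0., 1., 0.]])
flip_x = np.array([[-1., 0., 0.], [0., 1., 0.]])      # mirror along the horizontal axis
V_same = bilinear_sample(U, *affine_grid(identity, 5, 5))
V_flip = bilinear_sample(U, *affine_grid(flip_x, 5, 5))
```

Because both steps are differentiable with respect to `theta`, gradients can flow back into whatever network predicts the parameters, which is why no extra supervision is needed.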

10. Neural Architecture Search

The preceding sections delineated solutions that address the challenges inherent to semantic segmentation [95]. Nonetheless, these solutions present a high barrier to entry, as they require the involvement of experts well-versed in the details and operations of neural networks for their creation and/or adaptation to specific problems [96]. Neural Architecture Search (NAS) aims to design architectures primarily through algorithmic processes, with minimal human intervention or oversight. Within this domain, two notable pioneers are NAS-RL and MetaQNN [97]. The use of NAS has been successful at creating new architectures that outperform human-designed ones in image classification. This relatively new approach to creating networks has already been applied to semantic segmentation with promising results.

These architectures can be derived using techniques such as Reinforcement Learning (RL) [98], heuristic algorithms [99,100] (such as evolutionary algorithms [101,102]), Bayesian optimization [103], and gradient-based search [97]. Initially, several studies focused on creating the architecture from scratch; however, the large computational overhead was a major drawback to this approach [96]. Recent works deal with this issue by keeping the backbone static and using NAS to optimize the repeatable cell patterns. Weng et al. [104] designed two cell types using NAS, which were repeatedly stacked to build a U-Net-like architecture for medical semantic segmentation while requiring fewer parameters than U-Net. Liu et al. [105] used a hierarchical search space that included both cell-based and architecture search to create Auto-DeepLab. Fan et al. [106] created a search space to find a self-attention unit that can capture relations in all dimensions (height, width, and channels). Despite the vast body of work within NAS research, replication proves challenging due to the variability across experiments in terms of search space, hyperparameters, strategies, and other factors.

The exploration of the search space in NAS is crucial for refining existing architectures, with many efforts dedicated to enhancing both its efficiency and speed. NAS is also able to optimize the parameters for a specific module, replicating it to make improved models [104,105,107,108,109]. Additionally, NAS can replicate the macrostructure of well-established architectures while searching for improved architectural components, such as block types or layer connections, rather than directly optimizing hyperparameters.
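As a rough illustration of cell-level search with a fixed macrostructure, the sketch below runs plain random search over a toy cell search space. Random search is a stand-in chosen for brevity, not one of the RL, evolutionary, Bayesian, or gradient-based strategies cited above. The names `OPS`, `sample_cell`, and `proxy_score` are hypothetical, and the per-operation numbers are made up; in particular, `proxy_score` replaces the expensive step of training and validating each candidate network.

```python
import random

# Hypothetical search space: each node of the cell picks one operation.
OPS = ["conv3x3", "conv5x5", "dilated_conv3x3", "max_pool", "skip"]
NODES_PER_CELL = 4

# Made-up per-operation numbers, present only to make the sketch runnable.
TOY_GAIN = {"conv3x3": 1.0, "conv5x5": 1.3, "dilated_conv3x3": 1.4,
            "max_pool": 0.3, "skip": 0.1}
TOY_COST = {"conv3x3": 1.0, "conv5x5": 2.5, "dilated_conv3x3": 1.2,
            "max_pool": 0.2, "skip": 0.0}


def sample_cell(rng):
    """Draw one candidate cell: a tuple with one operation per node."""
    return tuple(rng.choice(OPS) for _ in range(NODES_PER_CELL))


def proxy_score(cell, cost_weight=0.3):
    """Stand-in for validation accuracy minus a model-size penalty.

    A real NAS run would train (or partially train) the network obtained by
    stacking this cell inside the fixed backbone and evaluate it on held-out data.
    """
    gain = sum(TOY_GAIN[op] for op in cell)
    cost = sum(TOY_COST[op] for op in cell)
    return gain - cost_weight * cost


def random_search(num_trials=200, seed=0):
    """Keep the best-scoring cell among `num_trials` random samples."""
    rng = random.Random(seed)
    best_cell, best_score = None, float("-inf")
    for _ in range(num_trials):
        cell = sample_cell(rng)
        score = proxy_score(cell)
        if score > best_score:
            best_cell, best_score = cell, score
    return best_cell, best_score


best_cell, best_score = random_search()
print(best_cell, round(best_score, 3))
```

Only the cell is searched here; the number of stacked cells and the surrounding backbone stay fixed, which is what keeps the search space small compared with designing the whole network from scratch.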

11. Continual Semantic Segmentation

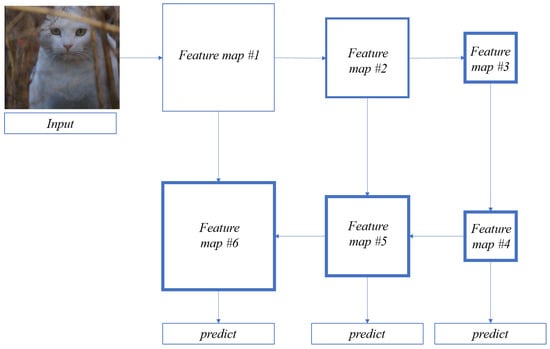

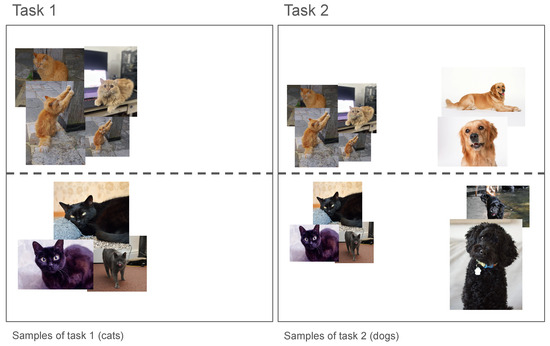

The previous sections addressed several network architectures for 2D segmentation, along with their primary features, weighing their respective advantages and disadvantages; they also discussed algorithmic network construction using NAS. However, once a network is established, a key question remains: how to train it effectively [110]. Traditionally, networks are trained using self-contained labeled image datasets. Yet, contemporary approaches require continuous learning and adaptation to newly available data while also maintaining previous knowledge [110]. This previous knowledge can take several forms: the tasks learned by the network, domain knowledge tied to the dataset on which the network was trained, class knowledge covering all learned classes, and the data modalities included during training, such as text present in the image data. Since a network can hold these different types of knowledge, continuous learning can be divided into four categories: (1) task-incremental, which retrains the model to learn new tasks; (2) domain-incremental, which introduces data from new domains to the model; (3) class-incremental, which adds new classes to the network’s knowledge and can introduce issues such as confusion between similar classes, especially when they are not presented simultaneously during training, as illustrated in Figure 21; and (4) modality-incremental, which adds new kinds of input data, such as text within an image, sensor information, and other information [110].

Figure 21.

Visualisation of class-incremental learning with errors. The network never learns to differentiate between cats and dogs since it was never trained at the same time with both.

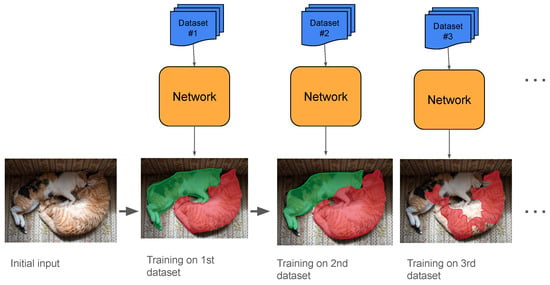

Retraining a model with new information may lead to a phenomenon known as catastrophic forgetting, in which the model loses previously acquired knowledge while learning from new data. Semantic drift is another challenge. It is caused by the addition of new background information in the data, which can lead previously learned classes to be misclassified as background, as shown in Figure 22. This contributes to the loss of previously learned knowledge, harming the model’s overall performance [111].

Figure 22.

Example of what catastrophic forgetting can look like after several rounds of retraining with new data. The previously learned objects can begin to be forgotten and classified as background.

Moreover, this type of continual learning is resource-intensive, incurring significant costs. Obtaining and managing the data also poses a challenge, as it may be inaccessible due to privacy concerns or constrained by storage limitations [112]. Therefore, the issues associated with continuous learning in neural networks require balancing improved performance with the practical constraints of data availability and storage. While multiple approaches exist, two predominant methods stand out: data-free and data-replay [110,111].

Data-free methods include all techniques that do not store data and aim to keep the existing knowledge in the model while teaching it new information. There are three well-known techniques to achieve this: self-supervision, regularization, and dynamic architecture. Self-supervision derives its training signal from the data itself rather than from manual labels, using pretext tasks built on image manipulation, such as rotation prediction and context reconstruction. Regularization limits how far the parameters that encode old knowledge can move; for example, elastic weight consolidation [113] penalizes changes to the weights that were most important for previously learned tasks. Another related method, learning without forgetting [114], preserves prior outputs as soft targets to prevent forgetting during new task training. Finally, dynamic architecture restructures the network’s design for each continual learning task, for example, by adding new modules. A significant advantage of data-free methods lies in not needing to store any historical data, which may accumulate and significantly increase storage and processing costs. Additionally, this approach reduces concerns related to the storage of potentially sensitive or private information. Data-free methods are appealing in scenarios where resource efficiency and privacy are important, for example, medical image segmentation [115,116].
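The regularization idea can be made concrete with the quadratic penalty commonly associated with elastic weight consolidation: the new-task loss is augmented with a term that grows when weights judged important for old tasks move away from their previous values. The sketch below is a minimal illustration on plain lists, not the implementation of [113]; the function names and the importance values in `fisher` are made up for the example.

```python
def ewc_penalty(params, old_params, fisher, lam=1.0):
    """Quadratic penalty (lam / 2) * sum_i F_i * (theta_i - theta_old_i) ** 2.

    `fisher` holds one non-negative importance value per parameter; in
    practice it is estimated from the old task (e.g. diagonal Fisher information).
    """
    return 0.5 * lam * sum(
        f * (p - p_old) ** 2 for p, p_old, f in zip(params, old_params, fisher)
    )


def total_loss(new_task_loss, params, old_params, fisher, lam=1.0):
    """Loss minimized while learning the new task."""
    return new_task_loss + ewc_penalty(params, old_params, fisher, lam)


old_params = [0.5, -1.0, 2.0]
fisher = [10.0, 0.0, 1.0]  # made-up importances: weight 0 matters, weight 1 does not

moved_important = [1.5, -1.0, 2.0]    # shifts the important weight by 1
moved_unimportant = [0.5, 0.0, 2.0]   # shifts the unimportant weight by 1

print(ewc_penalty(moved_important, old_params, fisher))    # 5.0
print(ewc_penalty(moved_unimportant, old_params, fisher))  # 0.0
```

The same shift costs 5.0 on the important weight and nothing on the unimportant one, so the network stays free to adapt the parameters that old tasks do not depend on.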

Conversely, data-replay methods involve storing a certain amount of older data or using a GAN to present the model with generated images that mimic the old data. However, the effectiveness of GAN-based data-replay hinges on the performance of the GAN. This approach provides a mechanism to reinforce the model’s memory of past experiences but introduces the challenge of maintaining the quality and fidelity of the replayed data [117,118].
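For the stored-data variant, one simple way to keep "a certain amount of older data" within a fixed storage budget is a bounded buffer filled by reservoir sampling, with replayed samples mixed into every new training batch. This is a sketch under that assumption; the class name `ReplayBuffer`, its methods, and the string placeholders standing in for images and masks are hypothetical and do not come from the cited works.

```python
import random


class ReplayBuffer:
    """Fixed-capacity store of past samples, filled by reservoir sampling."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.samples = []
        self.num_seen = 0
        self._rng = random.Random(seed)

    def add(self, sample):
        """Offer one sample; each sample seen so far is retained with equal probability."""
        self.num_seen += 1
        if len(self.samples) < self.capacity:
            self.samples.append(sample)
        else:
            slot = self._rng.randrange(self.num_seen)
            if slot < self.capacity:
                self.samples[slot] = sample

    def mixed_batch(self, new_samples, num_replayed):
        """Return the new samples followed by up to `num_replayed` stored old ones."""
        k = min(num_replayed, len(self.samples))
        return list(new_samples) + self._rng.sample(self.samples, k)


buffer = ReplayBuffer(capacity=4)
for i in range(100):
    buffer.add(("old_image_%d" % i, "old_mask_%d" % i))

batch = buffer.mixed_batch([("new_image", "new_mask")], num_replayed=2)
print(len(buffer.samples), len(batch))  # 4 3
```

The buffer never grows beyond `capacity`, which addresses the storage constraint, but it still holds real samples, so the privacy concerns discussed above continue to apply.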

In summary, choosing between data-free and data-replay methods involves a trade-off between resource efficiency, privacy concerns, and the fidelity of retained information. Striking a balance between these considerations is crucial for designing a continuous learning solution that is both effective and ethically sound [110,111]. By mimicking the stability and plasticity of the human brain with networks and algorithms, research in continuous learning can contribute to better solutions for problems that require flexible models, especially where learning new knowledge is critical, as in self-driving cars.

12. Datasets

This section describes several 2D and 2.5D datasets used in image segmentation tasks (2.5D datasets include image depth information). The selected datasets and their main characteristics are listed in Table 1. These datasets were selected based on their diversity, data volume, and frequent use in benchmarking within related literature.

Among the most commonly used datasets are PASCAL VOC 2012 [119] and Cityscapes [17]. PASCAL VOC 2012 offers a diverse collection of real-world images labeled for 20 object classes. In contrast, PASCAL Context [120], derived from the same dataset family, provides denser semantic annotations with a significantly larger set of classes, including both objects and background elements.

These datasets are ideal for testing neural networks’ capabilities in recognizing varied object types and for supporting transfer learning due to their diversity. However, larger datasets often require greater memory and computational resources during training. When training across all available classes is unnecessary, using a subset of the dataset (in terms of images or class labels) can be a more efficient alternative.
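Restricting training to a subset of class labels usually amounts to relabeling the ground-truth masks. The sketch below keeps a chosen set of classes, renumbers them contiguously, and sends every other class to an ignore label; it also shows a filter for dropping images that contain none of the kept classes. The value 255 for the ignore label is an assumed convention, and the class ids and function names are hypothetical.

```python
IGNORE_INDEX = 255  # assumed "ignore" label; a common convention, not a dataset requirement


def build_label_map(kept_classes):
    """Map each kept original class id to a new contiguous id (0, 1, 2, ...)."""
    return {old_id: new_id for new_id, old_id in enumerate(kept_classes)}


def remap_mask(mask, label_map, ignore_index=IGNORE_INDEX):
    """Relabel a 2D mask (list of rows); classes outside the subset become `ignore_index`."""
    return [[label_map.get(label, ignore_index) for label in row] for row in mask]


def contains_kept_class(mask, label_map):
    """True if at least one pixel belongs to a kept class, so the image is worth keeping."""
    return any(label in label_map for row in mask for label in row)


# Toy 3x4 mask with hypothetical class ids 0 (background), 7, 12, and 15.
mask = [
    [0, 0, 7, 7],
    [0, 15, 15, 7],
    [12, 12, 0, 0],
]
label_map = build_label_map([0, 7, 15])
print(remap_mask(mask, label_map))
# [[0, 0, 1, 1], [0, 2, 2, 1], [255, 255, 0, 0]]
```

Pixels marked with the ignore label are typically excluded from the loss, so the reduced label set does not penalize the network for classes it was never asked to learn.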

Some datasets are created with specific application domains in mind. For example, Cityscapes, CamVid [121], and KITTI [122,123,124,125] are designed for urban scene understanding and are widely used in research on autonomous driving. For tasks involving weakly supervised object detection and object tracking in videos, the YouTube-Objects dataset [126] offers short video clips centered around 10 object categories from PASCAL VOC, though it does not include full-frame annotations or support real-time processing out of the box. The PASCAL Part dataset [127] builds on PASCAL VOC by adding part-level annotations for several object classes, providing finer-grained information such as heads, wings, wheels, or legs, depending on the object category.

Table 1.

Common datasets for semantic segmentation, most of which are used in the works discussed in this paper.

| Type | Dataset | Use Cases | Size | Classes | Notes |
|---|---|---|---|---|---|
| 2D | PASCAL VOC 2012 [119] | People, animals, vehicles, objects | 11,530 | 20 | 27,450 ROI and 6929 segmentations |
| 2D | PASCAL Context [120] | Objects, stuff, and hybrids | 10,103 | 400 | 9637 testing images |
| 2D | MSCOCO [128] | Objects and stuff | 330K | 171 | 80 object classes and 91 stuff categories |
| 2D | Cityscapes [17] | Street scenes and objects | 25K | 30 | 5K fine annotated and 20K coarsely annotated images |
| 2D | ADE20K [129] | Scene images | 25,574 | 150 | Validation set of 2K images |
| 2D | BSDS500 [130] | Contour and edge detection | 300 | | Training set of 200 and test set of 100 images; 12K hand labelled ROIs |
| 2D | YouTube-Objects [126] | YouTube videos | | 10 | Each class has 9–24 videos of 3 s to 3 min length |
| 2D | CamVid [121] | Road driving scenes | 701 | 32 | Samples taken at 1 fps and 15 fps and manually annotated |
| 2D | SBD [131] | Contour and edge detection | 11,355 | 20 | Images taken from PASCAL VOC 2011 |
| 2D | PASCAL Part [127] | Body parts of each object | 10K+ | | Testing set of 9637 images |
| 2D | OpenEarthMap [132] | Aerial images | 5000 | 8 | Over 64 regions, across 6 continents |
| 2D | SIFTFlow [133] | Scene images | | 33 | Two types of labelling: semantic and geometrical; has unannotated images |
| 2D | Stanford Background [134] | Scene images | 715 | 11 | 8 object classes and 3 geometric classes |
| 2D | KITTI [122,123,124,125] | Road driving scenes | | | Pixel-level and instance-level segmentation images and videos |
| 2D | BraTS [135] | Brain tumor segmentation | 775K+ | 4 | Multimodal MRI Dataset |
| 2D | CHAOS [136] | Abdominal organ segmentation | 4.3K+ | 3 | CT and Multimodal MRI (T1-Dual, T2-SPIR) |
| 2D | ISIC [137] | Skin lesion segmentation | 25K+ | 1 | Dermoscopic RGB Images |
| 2D | DeepGlobe [138] | Satellite image segmentation | 24K+ | 7 | High-resolution Satellite RGB Imagery (0.5 m/pixel) |
| 2D | SpaceNet [139] | Building footprint segmentation | 40K+ | 1 | Satellite RGB and Multispectral Imagery |
| 2.5D | NYU-Depth V2 [140] | Indoor scenes | | | Video sequences of indoor scenes |
| 2.5D | SUN RGB-D [141] | RGB-D indoor scenes | 10,335 | | Includes depth and segmentation masks |
| 2.5D | ScanNet [142] | Indoor scenes | 1513 | | Instance level with 2D and 3D data |
| 2.5D | Stanford 2D-3D [143] | Indoor scenes | 70K+ | | Includes raw sensor data, depth, surface normals, and semantic annotations |

Beyond the datasets discussed thus far, domain-specific segmentation datasets—such as those used in medical imaging, remote sensing, and off-road environments—introduce additional challenges, including class imbalance, sensor-induced noise, and complex object geometries. For instance, medical datasets such as BraTS [135], CHAOS [136], and ISIC [137] focus on segmenting tumors, organs, and skin lesions from modalities such as CT, MRI, and dermoscopy. These datasets are commonly used to evaluate model performance under conditions of high class imbalance, limited labeled data, and modality-specific artifacts. In the field of remote sensing, high-resolution aerial or satellite datasets such as DeepGlobe [138] and SpaceNet [139] are designed for the segmentation of land use, buildings, and road networks. These datasets challenge models with scale variation, visual similarity between classes, and geometric complexity. Including such domain-specific datasets in benchmarking enables a more comprehensive evaluation of model generalization and real-world applicability.

13. Implementation Considerations

When implementing semantic segmentation models, it is important to consider the software frameworks and hardware limitations that could affect the development and training process.

Most of the architectures discussed in this paper are implemented using popular deep learning frameworks such as PyTorch [144] and TensorFlow [145], which provide modular APIs, pretrained models, and active open-source communities. These frameworks support GPU acceleration and allow fine-grained customization of model architecture, training procedures, and loss functions. PyTorch, in particular, is widely adopted in research and industry due to its dynamic computation graph and broad community support. It is often used for rapid prototyping and experimentation with the most recent state-of-the-art models such as transformers and NAS-based models. TensorFlow is commonly used in production environments due to its robust deployment tools (e.g., TensorFlow Lite, TensorFlow Serving).

Training deep neural networks for semantic segmentation presents several practical challenges. One significant issue is high memory consumption, particularly when processing high-resolution images. This problem becomes more pronounced with larger batch sizes or architectures that generate numerous intermediate feature maps, often exceeding the memory limits of standard GPUs. To address this, various optimization strategies are employed, including gradient checkpointing, mixed precision training (as described by Micikevicius et al. [146]), and patch-based training approaches. Another major challenge is the extended training time required by deep models—especially transformer-based architectures—when applied to large-scale datasets. In such cases, distributed training methods, such as data or model parallelism across multiple GPUs, are commonly used to reduce convergence time. Additionally, semantic segmentation tasks frequently suffer from class imbalance, wherein certain classes (e.g., background) dominate the dataset, adversely affecting model convergence and generalization. To mitigate this, specialized loss functions such as Dice loss, focal loss [147], or class-weighted cross-entropy are often utilized. Finally, effective preprocessing and data augmentation techniques—including random cropping, flipping, rotation, and color jittering—are crucial for improving model generalization and robustness during training.
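To make the class-imbalance remedies more tangible, the sketch below evaluates the focal loss for a single pixel next to plain cross-entropy and derives simple inverse-frequency class weights. It follows the usual focal loss form, FL = -alpha * (1 - p_t)^gamma * log(p_t), where p_t is the probability the model assigns to the true class. The default `gamma=2.0`, the normalization of the class weights, and the pixel counts are illustrative assumptions.

```python
import math


def cross_entropy(p_true):
    """Per-pixel cross-entropy given the predicted probability of the true class."""
    return -math.log(p_true)


def focal_loss(p_true, gamma=2.0, alpha=1.0):
    """Focal loss -alpha * (1 - p_t) ** gamma * log(p_t); gamma = 0 gives alpha-weighted CE."""
    return -alpha * (1.0 - p_true) ** gamma * math.log(p_true)


def inverse_frequency_weights(pixel_counts):
    """Class weights proportional to 1 / frequency, scaled to average 1.

    Assumes every class has at least one pixel.
    """
    total = sum(pixel_counts)
    raw = [total / count for count in pixel_counts]
    mean_raw = sum(raw) / len(raw)
    return [w / mean_raw for w in raw]


# Easy (0.95), moderate (0.6), and hard (0.1) pixels.
for p in (0.95, 0.6, 0.1):
    print(p, round(cross_entropy(p), 4), round(focal_loss(p), 4))

# Hypothetical pixel counts for background and two rarer classes.
print(inverse_frequency_weights([9000, 900, 100]))
```

The factor (1 - p_t)^gamma shrinks the loss of well-classified pixels much more than that of hard ones, so abundant easy background pixels contribute less to the gradient, while the class weights raise the contribution of rare classes in a weighted cross-entropy.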

Considering these training challenges, high-performance GPUs with large memory are recommended for training state-of-the-art models. In low-resource scenarios, transfer learning or training on lower-resolution images are alternative options to run deep models. To reduce the model size while preserving performance, deployment on edge devices may require model pruning, quantization, or knowledge distillation techniques [148,149].

14. Metrics

The performance of neural networks for 2D image segmentation tasks can be determined using various metrics. These metrics allow for comparing different networks, architectures, and techniques, offering some insights into how well a given configuration will perform and its ability to provide consistent results. In the following subsections, we discuss five performance metrics widely used in 2D image segmentation.

14.1. Pixel Accuracy

Pixel Accuracy (PA), given by Equation (4), calculates the percentage of correctly classified pixels in the output image [150]. In Equation (4), $K$ is the number of classes, $p_{ii}$ is the number of correctly identified pixels from class $i$, and $p_{ij}$ is the number of pixels belonging to class $i$ that were predicted as class $j$. When an object occupies only a small region of the image, the classifier can still classify most pixels correctly even if it misses every single object pixel. This metric is therefore very vulnerable to class imbalance.
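Assuming the usual confusion-matrix notation, with $p_{ij}$ the number of pixels of class $i$ predicted as class $j$ and $K$ classes, Pixel Accuracy takes the standard form below. It is given as a sketch consistent with the definitions above; numbering the classes from $1$ to $K$ is an assumption.

```latex
\mathrm{PA} = \frac{\sum_{i=1}^{K} p_{ii}}{\sum_{i=1}^{K} \sum_{j=1}^{K} p_{ij}}
```

For example, if 95% of the pixels are background and the network predicts background everywhere, then PA = 0.95 even though no object pixel is found.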

14.2. Mean Pixel Accuracy
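Under the same confusion-matrix notation as in Section 14.1 ($p_{ij}$ pixels of class $i$ predicted as class $j$, $K$ classes), Mean Pixel Accuracy (mPA) is conventionally defined as the per-class accuracy averaged over all classes. The expression below is that standard definition, offered as a sketch with classes assumed to be numbered from $1$ to $K$.

```latex
\mathrm{mPA} = \frac{1}{K} \sum_{i=1}^{K} \frac{p_{ii}}{\sum_{j=1}^{K} p_{ij}}
```

Because every class contributes equally regardless of its size, a two-class image that is 95% background and predicted entirely as background scores mPA = (1 + 0)/2 = 0.5, compared with a pixel accuracy of 0.95.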

14.3. Intersection over Union

Intersection Over Union (IoU) is the most commonly used method for measuring performance. As highlighted in Equation (6), IoU determines the overlap coefficient between the ground-truth labels and predicted labels, which makes it more consistent in detecting smaller objects in an image. Unlike PA or mPA, IoU does not compare all categorized pixels in an image, just the ones in the predicted and ground truth areas [152].
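In set notation, with $A$ the ground-truth region and $B$ the predicted region of a class, the overlap described above is conventionally written as below. The per-class form uses $p_{ij}$ for the number of pixels of class $i$ predicted as class $j$, with $K$ classes. This is the standard definition, given as a sketch with classes assumed to be numbered from $1$ to $K$.

```latex
\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|} = \frac{TP}{TP + FP + FN},
\qquad
\mathrm{IoU}_i = \frac{p_{ii}}{\sum_{j=1}^{K} p_{ij} + \sum_{j=1}^{K} p_{ji} - p_{ii}}
```

True negatives appear in neither form, which is why a large, correctly labeled background does not inflate the score of a small object class.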

14.4. Mean IoU

The Mean IoU (mIoU) is given by Equation (7), where $\mathrm{IoU}_i$ is the IoU for a certain class $i$. This metric scores how well the network performs while taking into account precision (how many predictions are true positives) and recall (how many true positives were identified as such) [153].
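Writing $\mathrm{IoU}_i$ for the IoU of class $i$ and $K$ for the number of classes, the averaging described above has the standard form below, shown as a sketch with classes assumed to be numbered from $1$ to $K$.

```latex
\mathrm{mIoU} = \frac{1}{K} \sum_{i=1}^{K} \mathrm{IoU}_i
```

As with mPA, each class carries the same weight, so a poorly segmented rare class lowers the score as much as a poorly segmented frequent one.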

14.5. Dice Coefficient

The Dice coefficient, as expressed in Equations (8) and (9), quantifies the similarity between two images by calculating twice the area of their overlap divided by the sum of the total number of pixels in both images. The first equation expresses the Dice coefficient in terms of set overlap, while the second uses confusion matrix components (true positives, false positives, and false negatives) commonly found in classification tasks. This metric is closely related to the IoU method. Both metrics typically provide consistent evaluations, such that if the IoU indicates that one model’s output is superior to another, the Dice coefficient will likely concur [154]. As highlighted in Equation (9), the Dice coefficient is also mathematically equivalent to the F1-score in the binary classification setting, though differences may arise in multi-class or multi-label segmentation tasks.
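The five metrics of this section can all be read off a single confusion matrix. The sketch below does so for flattened label maps, using the standard forms Dice = 2|A ∩ B| / (|A| + |B|) = 2TP / (2TP + FP + FN) and IoU = TP / (TP + FP + FN). The function names and the toy labels are hypothetical, and scoring a class that is absent from both maps as 0 is an assumed convention.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[i][j] = number of pixels of class i predicted as class j (flattened inputs)."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def segmentation_metrics(cm):
    """Return PA, mPA, per-class IoU, mIoU, and per-class Dice from a confusion matrix."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    tp = [cm[i][i] for i in range(k)]
    fn = [sum(cm[i]) - tp[i] for i in range(k)]
    fp = [sum(cm[j][i] for j in range(k)) - tp[i] for i in range(k)]

    def ratio(num, den):
        # Assumed convention: an empty denominator scores 0.
        return num / den if den else 0.0

    iou = [ratio(tp[i], tp[i] + fp[i] + fn[i]) for i in range(k)]
    dice = [ratio(2 * tp[i], 2 * tp[i] + fp[i] + fn[i]) for i in range(k)]
    return {
        "PA": ratio(sum(tp), total),
        "mPA": sum(ratio(tp[i], tp[i] + fn[i]) for i in range(k)) / k,
        "IoU": iou,
        "mIoU": sum(iou) / k,
        "Dice": dice,
    }


# Toy example: 10 pixels, class 0 = background, class 1 = object.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]
metrics = segmentation_metrics(confusion_matrix(y_true, y_pred, num_classes=2))
print(metrics)
```

In this toy case PA is 0.8 while the object class reaches an IoU of only 1/3 and a Dice of 0.5, which illustrates both the sensitivity of PA to class imbalance and the monotonic relation Dice = 2·IoU / (1 + IoU) that makes the two overlap metrics rank models consistently.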

15. Discussion

This section discusses various models and their performance on the datasets they were trained on. Table 2 reports the performance of each network, measured with the mIoU metric, on the more commonly used datasets. Due to differences in evaluation settings and incomplete reporting across studies, some entries are missing. This table is provided on a best-effort basis to illustrate general performance trends rather than to serve as a unified benchmark.

Table 2.

The mean Intersection over Union (mIoU) of different networks, from 2014 to 2023, in more common datasets.

The results presented in Table 2, highlighting a selection of network architectures spanning from 2014 to 2023, offer some insights into the progression of state-of-the-art semantic segmentation [96]. NAS research is more common in image classification and object detection, probably because image segmentation is a harder task that relies on more complex architectures. The increased use of transformer architectures may lead to a corresponding rise in NAS-derived architectures since the modular nature of the former simplifies the latter’s search space [105].

Although Table 2 suggests a general trend of improved performance over time, inconsistencies in evaluation protocols and dataset usage across studies make precise comparisons difficult. Research in this field started with a focus on CNNs but found greater success with later architectures and techniques, such as RNNs and transformers [66,94]. RNNs, however, have since been dropped in favor of transformers because they retain context over shorter ranges.

Although these results outline improvements in mIoU, such gains can also be attributed to key architectural shifts. Early CNN-based models relied heavily on local receptive fields and deep hierarchical representations, both of which struggled with context-aware segmentation. Techniques such as pyramid pooling and dilated convolutions were developed to address this issue, but long-range spatial dependencies remained a limitation.

Transformer-based models introduced a paradigm shift by using self-attention mechanisms, which directly model the relationships between any two points in an image—regardless of their distance. This technique for capturing global context represents an improvement in the field of semantic segmentation, where semantically related regions may be spatially distant.
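The distance-independence of self-attention can be seen in a few lines. The sketch below implements scaled dot-product self-attention over a short sequence of patch features in plain Python. To stay short, it omits the learned query, key, and value projections (it sets Q = K = V to the input), and the feature values are made up; it illustrates the mechanism and is not the layer used by any specific model discussed here.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections.

    `tokens` is a list of feature vectors, one per pixel or patch.
    Returns the attended features and the attention weights.
    """
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    weights = [softmax([dot(q, k) / scale for k in tokens]) for q in tokens]
    outputs = [
        [sum(w * v[d] for w, v in zip(row, tokens)) for d in range(dim)]
        for row in weights
    ]
    return outputs, weights


# Four patches in a row; patches 0 and 3 look alike although they are far apart.
tokens = [[4.0, 0.0], [0.0, 4.0], [0.0, 3.0], [4.0, 0.1]]
outputs, weights = self_attention(tokens)
print([round(w, 3) for w in weights[0]])
```

Patch 0 attends to patch 3 as strongly as to itself and almost ignores its immediate neighbor, because the weights depend only on feature similarity and not on position. The price is a weight matrix with one entry per pair of patches, which is the source of the computational cost of transformers on high-resolution images.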

Hybrid approaches, such as TransUNet [60], combine the spatial precision of convolutional encoders with the contextual modeling of transformers to achieve a better balance between accuracy and hardware costs. The scalability of transformer-based models is also a significant advantage as datasets grow larger and more complex.

Despite these performance improvements, transformer-based models require substantially more computational resources and are susceptible to overfitting when working with small datasets. Thus, techniques such as pretraining, transfer learning, and data augmentation are often necessary to ensure generalization.

Although this paper organizes models by their dominant architectural paradigms (such as FCNs, GCNs, encoder–decoders, and attention-based networks), it is important to note that many modern semantic segmentation models span multiple categories. For example, transformer-based models often adopt encoder–decoder structures, and hybrid approaches like TransUNet combine convolutional backbones with attention mechanisms. These overlapping characteristics reflect the modular and compositional nature of recent models, which increasingly draw from multiple architectural ideas to improve performance and adaptability.

Orthogonal to these architectural advances, continuous learning in image segmentation remains uncommon, with most networks being trained using closed datasets. Training on closed datasets means that networks learn their tasks once and cannot be updated with new or improved data. Different methods, or the optimization of existing methods, in continual semantic segmentation could result in networks that can learn and improve continuously. Further research could lead to networks that can learn new tasks or correct previous knowledge [110].

16. Current and Future Directions in Semantic Segmentation

Recent developments in computer vision have introduced a new class of models that significantly enhance the capabilities of semantic segmentation by moving beyond fixed-class labels and closed-world assumptions. One major advancement is the integration of Large Language Models (LLMs) into vision systems, leading to the emergence of multimodal approaches. Architectures such as CLIP [172] and LLaVA [173] combine visual encoders with language representations, allowing segmentation models to generalize to previously unseen object categories through text prompts. This enables open-vocabulary or zero-shot segmentation [174], where target classes are defined dynamically rather than being constrained to a predefined label set.

Another notable contribution is the Segment Anything Model (SAM) [175], which has been trained on over one billion masks spanning eleven million images. SAM supports the segmentation of arbitrary objects using point, box, or mask prompts and can generalize to new tasks and domains without the need for retraining or fine-tuning. In the realm of open-vocabulary and zero-shot segmentation, models such as MaskCLIP [176] and ViL-Seg [177] extend CLIP-based visual-language embeddings to pixel-level tasks, enabling segmentation of categories that were not present during training.
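One common way to picture open-vocabulary segmentation is as a nearest-prompt lookup: each pixel has an embedding in the same space as the text prompts, and it receives the label of the most similar prompt. The sketch below illustrates only that final matching step. The three-dimensional vectors are made-up placeholders for the outputs of a visual encoder and a text encoder, and the function names are hypothetical; no real CLIP, MaskCLIP, or ViL-Seg API is used.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm


def label_pixels(pixel_embeddings, text_embeddings):
    """Assign each pixel the prompt whose embedding is most similar to it."""
    labels = []
    for row in pixel_embeddings:
        labels.append([
            max(text_embeddings, key=lambda name: cosine(pixel, text_embeddings[name]))
            for pixel in row
        ])
    return labels


# Placeholder embeddings (assumed, not produced by any real encoder).
text_embeddings = {"cat": [1.0, 0.0, 0.0], "road": [0.0, 1.0, 0.0]}
pixel_embeddings = [
    [[0.9, 0.1, 0.0], [0.1, 0.8, 0.1]],
    [[0.0, 0.2, 0.9], [0.2, 0.9, 0.0]],
]
print(label_pixels(pixel_embeddings, text_embeddings))
# [['cat', 'road'], ['road', 'road']]

# A new category is added at inference time by adding a prompt, with no retraining.
text_embeddings["zebra"] = [0.0, 0.0, 1.0]
print(label_pixels(pixel_embeddings, text_embeddings))
# [['cat', 'road'], ['zebra', 'road']]
```

The label set is just the dictionary of prompts, so changing the target classes changes the segmentation without touching the network weights, which is the sense in which classes are defined dynamically.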

Furthermore, the diffusion paradigm—originally developed for image generation—has recently been adapted for semantic segmentation. Methods like Efficient Semantic Segmentation with Diffusion Models [178] and SEEM [179] leverage denoising diffusion processes to produce high-resolution segmentation masks, offering a new generative approach to this task.

17. Conclusions

The field of semantic segmentation includes a wide range of techniques and tools, many of which are still under active research and development. Starting with early solutions based on CNNs, the field has since evolved to include more advanced architectures that offer improved accuracy and efficiency. While this paper primarily focuses on image semantic segmentation, many of these methods are also being adapted for video semantic segmentation, which requires real-time detection and segmentation. The techniques and datasets reviewed in this work provide a foundational understanding of the various subdomains within this research area.

In addition to the techniques developed in recent years, newer methods such as NAS have also been analyzed. NAS, in particular, still faces challenges in reducing computational costs. However, being a relatively recent approach, NAS may benefit significantly from future research in the optimization techniques themselves, as well as from the introduction of newer architectures, which could offer more robust baseline networks to optimize, combine, and enhance further.

The availability of diverse datasets is also of great importance for testing and deploying these networks, as they must meet the varying requirements of researchers. Despite the existing datasets, networks may need to continuously learn from new data as they become available, introducing a unique set of challenges. These challenges are actively being addressed within the field of continual semantic segmentation.

In summary, despite the challenges that lie ahead, semantic segmentation has made significant strides in the last few years, particularly with the development of advanced neural network architectures and techniques, supported by the growing availability of diverse datasets. The future of semantic segmentation therefore appears to lie in more adaptive, efficient models capable of real-time processing and continual learning from diverse data sources.

Author Contributions

Conceptualization, S.S. and J.P.M.-C.; methodology, S.S.; software, S.S.; validation, S.S., N.F. and J.P.M.-C.; formal analysis, N.F. and J.P.M.-C.; investigation, S.S.; resources, S.S.; data curation, S.S., N.F. and J.P.M.-C.; writing—original draft preparation, S.S.; writing—review and editing, S.S., N.F. and J.P.M.-C.; visualization, N.F. and J.P.M.-C.; supervision, N.F. and J.P.M.-C.; project administration, N.F. and J.P.M.-C.; funding acquisition, N.F. and J.P.M.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by Fundação para a Ciência e a Tecnologia (FCT) under Grants Copelabs ref. UIDB/04111/2020, Centro de Tecnologias e Sistemas (CTS) ref. UIDB/00066/2020, LASIGE Research Unit, ref. UID/00408/2025, and COFAC ref. CEECINST/00002/2021/CP2788/CT0001; and, Instituto Lusófono de Investigação e Desenvolvimento (ILIND) under Project COFAC/ILIND/COPELABS/1/2024.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| CCTV | Closed Circuit Television |
| CRF | Conditional Random Fields |
| VSS | Video Semantic Segmentation |
| VPS | Video Panoptic Segmentation |
| FCN | Fully Convolutional Network |
| FPN | Feature Pyramid Network |
| NAS | Neural Architecture Search |
| GCN | Graph Convolutional Networks |
| CT | Computed Tomography |
| MRI | Magnetic Resonance Imaging |
| RNN | Recurrent Neural Network |
| GAN | Generative Adversarial Network |
| NLP | Natural Language Processing |
| STN | Spatial Transformer Network |
| RL | Reinforcement Learning |
| GPT | Generative Pre-training Transformer |
| PA | Pixel Accuracy |
| mPA | Mean Pixel Accuracy |
| IoU | Intersection Over Union |
| mIoU | Mean Intersection Over Union |

References

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Xian, M.; Zhang, Y.; Cheng, H.; Xu, F.; Zhang, B.; Ding, J. Automatic Breast Ultrasound Image Segmentation: A Survey. arXiv 2017, arXiv:1704.01472.

- Li, B.; Liu, S.; Xu, W.; Qiu, W. Real-time object detection and semantic segmentation for autonomous driving. In Proceedings of the MIPPR 2017: Automatic Target Recognition and Navigation, Xiangyang, China, 28–29 October 2017; Liu, J., Udupa, J.K., Hong, H., Eds.; International Society for Optics and Photonics, SPIE: California, CA, USA, 2018; Volume 10608, pp. 167–174.

- Yasuno, M.; Yasuda, N.; Aoki, M. Pedestrian detection and tracking in far infrared images. In Proceedings of the 2004 Conference on Computer Vision and Pattern Recognition Workshop, Washington, DC, USA, 27 June–2 July 2004; p. 125.

- Victoria Priscilla, C.; Agnes Sheila, S.P. Pedestrian Detection—A Survey. In Proceedings of the First International Conference on Innovative Computing and Cutting-Edge Technologies (ICICCT 2019), Istanbul, Turkey, 30–31 October 2019; Jain, L.C., Peng, S.L., Alhadidi, B., Pal, S., Eds.; Springer Nature: Cham, Switzerland, 2020; pp. 349–358.

- Deepak, G.D.; Bhat, S.K. A comparative study of breast tumour detection using a semantic segmentation network coupled with different pretrained CNNs. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2024, 12, 2373996.

- Kohler, R. A segmentation system based on thresholding. Comput. Graph. Image Process. 1981, 15, 319–338.

- Gómez, O.; González, J.A.; Morales, E.F. Image Segmentation Using Automatic Seeded Region Growing and Instance-Based Learning. In Progress in Pattern Recognition, Image Analysis and Applications; Rueda, L., Mery, D., Kittler, J., Eds.; Springer Nature: Berlin/Heidelberg, Germany, 2007; pp. 192–201.

- Roslin, A.; Marsh, M.; Provencher, B.; Mitchell, T.; Onederra, I.; Leonardi, C. Processing of micro-CT images of granodiorite rock samples using convolutional neural networks (CNN), Part II: Semantic segmentation using a 2.5D CNN. Miner. Eng. 2023, 195, 108027.

- Lapa, P.A.F. Conditional Random Fields Improve the CNN-Based Prostate Cancer Classification Performance. Master’s Thesis, NOVA Information Management School, Lisbon, Portugal, 2019.

- Zhang, M.; Dong, B.; Li, Q. Deep active contour network for medical image segmentation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, 4–8 October 2020; Proceedings, Part IV 23. Springer: Berlin/Heidelberg, Germany, 2020; pp. 321–331.

- Li, P.; Xia, H.; Zhou, B.; Yan, F.; Guo, R. A Method to Improve the Accuracy of Pavement Crack Identification by Combining a Semantic Segmentation and Edge Detection Model. Appl. Sci. 2022, 12, 4714.

- Yuheng, S.; Hao, Y. Image Segmentation Algorithms Overview. arXiv 2017, arXiv:1707.02051.

- Tian, D.; Han, Y.; Wang, B.; Guan, T.; Gu, H.; Wei, W. Review of object instance segmentation based on deep learning. J. Electron. Imaging 2021, 31, 041205.

- Kim, D.; Woo, S.; Lee, J.; Kweon, I.S. Video Panoptic Segmentation. arXiv 2020, arXiv:2006.11339.

- Kirillov, A.; He, K.; Girshick, R.; Rother, C.; Dollar, P. Panoptic Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9396–9405.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Prakash, V.J.; Nithya, L.M. A Survey on Semi-Supervised Learning Techniques. arXiv 2014, arXiv:1402.4645.

- Chen, T.; Kornblith, S.; Swersky, K.; Norouzi, M.; Hinton, G.E. Big Self-Supervised Models are Strong Semi-Supervised Learners. In Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 22243–22255.

- Ran, L.; Li, Y.; Liang, G.; Zhang, Y. Pseudo Labeling Methods for Semi-Supervised Semantic Segmentation: A Review and Future Perspectives. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 3054–3080.

- Zhang, B.; Zhang, Y.; Li, Y.; Wan, Y.; Guo, H.; Zheng, Z.; Yang, K. Semi-supervised deep learning via transformation consistency regularization for remote sensing image semantic segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 5782–5796.

- Xie, J.; Shuai, B.; Hu, J.F.; Lin, J.; Zheng, W.S. Improving fast segmentation with teacher-student learning. arXiv 2018, arXiv:1810.08476.

- Wang, W.; Zhou, T.; Porikli, F.; Crandall, D.J.; Gool, L.V. A Survey on Deep Learning Technique for Video Segmentation. arXiv 2021, arXiv:2107.01153.

- Jung, S.; Heo, H.; Park, S.; Jung, S.U.; Lee, K. Benchmarking Deep Learning Models for Instance Segmentation. Appl. Sci. 2022, 12, 8856.

- Portillo-Portillo, J.; Sanchez-Perez, G.; Toscano-Medina, L.K.; Hernandez-Suarez, A.; Olivares-Mercado, J.; Perez-Meana, H.; Velarde-Alvarado, P.; Orozco, A.L.S.; García Villalba, L.J. FASSVid: Fast and Accurate Semantic Segmentation for Video Sequences. Entropy 2022, 24, 942.

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017, arXiv:1706.05587.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Dumoulin, V.; Visin, F. A guide to convolution arithmetic for deep learning. arXiv 2018, arXiv:1603.07285. [Google Scholar] [CrossRef]

- Minaee, S.; Boykov, Y.; Porikli, F.; Plaza, A.; Kehtarnavaz, N.; Terzopoulos, D. Image Segmentation Using Deep Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3523–3542. [Google Scholar] [CrossRef] [PubMed]

- Fukushima, K. Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol. Cybern. 1980, 36, 193–202. [Google Scholar] [CrossRef] [PubMed]

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Scherer, D.; Müller, A.; Behnke, S. Evaluation of Pooling Operations in Convolutional Architectures for Object Recognition. In Proceedings of the Artificial Neural Networks—ICANN 2010, Thessaloniki, Greece, 15–18 September 2010; Diamantaras, K., Duch, W., Iliadis, L.S., Eds.; Springer Nature: Cham, Switzerland, 2010; pp. 92–101. [Google Scholar] [CrossRef]

- O’Shea, K.; Nash, R. An Introduction to Convolutional Neural Networks. arXiv 2015, arXiv:1511.08458. [Google Scholar] [CrossRef]

- Estrach, J.B.; Szlam, A.; LeCun, Y. Signal recovery from Pooling Representations. In Proceedings of the 31st International Conference on Machine Learning, Beijing, China, 22–24 June 2014; Xing, E.P., Jebara, T., Eds.; Proceedings of Machine Learning Research. Volume 32, pp. 307–315. [Google Scholar]

- Wang, S.H.; Lv, Y.D.; Sui, Y.; Liu, S.; Wang, S.J.; Zhang, Y.D. Alcoholism detection by data augmentation and Convolutional Neural Network with stochastic pooling. J. Med. Syst. 2017, 42, 2. [Google Scholar] [CrossRef] [PubMed]

- Kassani, S.H.; Kassani, P.H.; Wesolowski, M.J.; Schneider, K.A.; Deters, R. Breast Cancer Diagnosis with Transfer Learning and Global Pooling. In Proceedings of the 2019 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 16–18 October 2019; pp. 519–524. [Google Scholar] [CrossRef]

- Lv, Y.; Ma, H.; Li, J.; Liu, S. Attention guided u-net with atrous convolution for accurate retinal vessels segmentation. IEEE Access 2020, 8, 32826–32839. [Google Scholar] [CrossRef]

- Qiao, S.; Chen, L.C.; Yuille, A. DetectoRS: Detecting objects with recursive feature pyramid and switchable atrous convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10208–10219. [Google Scholar] [CrossRef]

- Kyrkou, C.; Theocharides, T. EmergencyNet: Efficient aerial image classification for drone-based emergency monitoring using atrous convolutional feature fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1687–1699. [Google Scholar] [CrossRef]

- Zhou, Y.; Chang, H.; Lu, Y.; Lu, X. CDTNet: Improved image classification method using standard, Dilated and Transposed Convolutions. Appl. Sci. 2022, 12, 5984. [Google Scholar] [CrossRef]

- Odena, A.; Dumoulin, V.; Olah, C. Deconvolution and Checkerboard Artifacts. Distill 2016. [Google Scholar] [CrossRef]

- Gao, H.; Yuan, H.; Wang, Z.; Ji, S. Pixel transposed convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 1218–1227. [Google Scholar] [CrossRef] [PubMed]

- Zhang, S.; Tong, H.; Xu, J.; Maciejewski, R. Graph convolutional networks: A comprehensive review. Comput. Soc. Netw. 2019, 6, 11. [Google Scholar] [CrossRef] [PubMed]

- Tenenbaum, J.B.; de Silva, V.; Langford, J.C. A Global Geometric Framework for Nonlinear Dimensionality Reduction. Science 2000, 290, 2319–2323. [Google Scholar] [CrossRef] [PubMed]

- Roweis, S.T.; Saul, L.K. Nonlinear Dimensionality Reduction by Locally Linear Embedding. Science 2000, 290, 2323–2326. [Google Scholar] [CrossRef] [PubMed]

- Belkin, M.; Niyogi, P. Laplacian Eigenmaps and Spectral Techniques for Embedding and Clustering. In Advances in Neural Information Processing Systems; Dietterich, T., Becker, S., Ghahramani, Z., Eds.; MIT Press: Cambridge, MA, USA, 2001; Volume 14. [Google Scholar]

- Perozzi, B.; Al-Rfou, R.; Skiena, S. DeepWalk: Online Learning of Social Representations. arXiv 2014, arXiv:1403.6652. [Google Scholar] [CrossRef]

- Grover, A.; Leskovec, J. node2vec: Scalable Feature Learning for Networks. arXiv 2016, arXiv:1607.00653. [Google Scholar] [CrossRef]

- Wu, F.; Souza, A.; Zhang, T.; Fifty, C.; Yu, T.; Weinberger, K. Simplifying Graph Convolutional Networks. In Proceedings of the 36th International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; Chaudhuri, K., Salakhutdinov, R., Eds.; Proceedings of Machine Learning Research. Volume 97, pp. 6861–6871. [Google Scholar]

- Chen, M.; Wei, Z.; Huang, Z.; Ding, B.; Li, Y. Simple and Deep Graph Convolutional Networks. In Proceedings of the 37th International Conference on Machine Learning, PMLR, Online, 13–18 July 2020; Daumé, H., Singh, A., Eds.; Proceedings of Machine Learning Research. Volume 119, pp. 1725–1735. [Google Scholar]

- Zhang, L.; Li, X.; Arnab, A.; Yang, K.; Tong, Y.; Torr, P.H.S. Dual Graph Convolutional Network for Semantic Segmentation. arXiv 2019, arXiv:1909.06121. [Google Scholar] [CrossRef]

- Bian, T.; Xiao, X.; Xu, T.; Zhao, P.; Huang, W.; Rong, Y.; Huang, J. Rumor Detection on Social Media with Bi-Directional Graph Convolutional Networks. Proc. AAAI Conf. Artif. Intell. 2020, 34, 549–556. [Google Scholar] [CrossRef]

- Schulte-Sasse, R.; Budach, S.; Hnisz, D.; Marsico, A. Graph Convolutional Networks Improve the Prediction of Cancer Driver Genes. In Proceedings of the Artificial Neural Networks and Machine Learning—ICANN 2019: Workshop and Special Sessions, Munich, Germany, 17–19 September 2019; Tetko, I.V., Kůrková, V., Karpov, P., Theis, F., Eds.; Springer Nature: Cham, Switzerland, 2019; pp. 658–668. [Google Scholar] [CrossRef]

- Wang, H.; Zhao, M.; Xie, X.; Li, W.; Guo, M. Knowledge Graph Convolutional Networks for Recommender Systems. In Proceedings of The World Wide Web Conference (WWW ’19), San Francisco, CA, USA, 13–17 May 2019; pp. 3307–3313. [Google Scholar] [CrossRef]

- Sun, M.; Zhao, S.; Gilvary, C.; Elemento, O.; Zhou, J.; Wang, F. Graph convolutional networks for computational drug development and discovery. Briefings Bioinform. 2019, 21, 919–935. [Google Scholar] [CrossRef] [PubMed]

- Yao, L.; Mao, C.; Luo, Y. Graph Convolutional Networks for Text Classification. Proc. AAAI Conf. Artif. Intell. 2019, 33, 7370–7377. [Google Scholar] [CrossRef]

- Ghosh, S.; Das, N.; Das, I.; Maulik, U. Understanding Deep Learning Techniques for Image Segmentation. arXiv 2019, arXiv:1907.06119. [Google Scholar] [CrossRef]

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.C.H.; Heinrich, M.P.; Misawa, K.; Mori, K.; McDonagh, S.G.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning Where to Look for the Pancreas. arXiv 2018, arXiv:1804.03999. [Google Scholar] [CrossRef]

- Alom, M.Z.; Yakopcic, C.; Hasan, M.; Taha, T.M.; Asari, V.K. Recurrent residual U-Net for medical image segmentation. J. Med. Imaging 2019, 6, 014006. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306. [Google Scholar] [CrossRef]

- Alom, M.Z.; Hasan, M.; Yakopcic, C.; Taha, T.M.; Asari, V.K. Recurrent Residual Convolutional Neural Network based on U-Net (R2U-Net) for Medical Image Segmentation. arXiv 2018, arXiv:1802.06955. [Google Scholar] [CrossRef]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. arXiv 2017, arXiv:1612.03144. [Google Scholar] [CrossRef]

- Hu, M.; Li, Y.; Fang, L.; Wang, S. A2-FPN: Attention Aggregation Based Feature Pyramid Network for Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15338–15347. [Google Scholar] [CrossRef]

- Kirillov, A.; Girshick, R.; He, K.; Dollar, P. Panoptic Feature Pyramid Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6392–6401. [Google Scholar] [CrossRef]

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536. [Google Scholar] [CrossRef]

- Ribeiro, A.H.; Tiels, K.; Aguirre, L.A.; Schön, T. Beyond exploding and vanishing gradients: Analysing RNN training using attractors and smoothness. In Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics, PMLR, Online, 26–28 August 2020; Chiappa, S., Calandra, R., Eds.; Proceedings of Machine Learning Research. Volume 108, pp. 2370–2380. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef] [PubMed]

- Cho, K.; van Merrienboer, B.; Gülçehre, Ç.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. arXiv 2014, arXiv:1406.1078. [Google Scholar] [CrossRef]

- Miao, Y.; Gowayyed, M.; Metze, F. EESEN: End-to-end speech recognition using deep RNN models and WFST-based decoding. In Proceedings of the 2015 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), Scottsdale, AZ, USA, 13–17 December 2015; pp. 167–174. [Google Scholar] [CrossRef]

- Mikolov, T.; Kombrink, S.; Burget, L.; Černocký, J.; Khudanpur, S. Extensions of recurrent neural network language model. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 5528–5531. [Google Scholar] [CrossRef]

- Li, D.; Qian, J. Text sentiment analysis based on long short-term memory. In Proceedings of the 2016 First IEEE International Conference on Computer Communication and the Internet (ICCCI), Wuhan, China, 13–15 October 2016; pp. 471–475. [Google Scholar] [CrossRef]

- Visin, F.; Romero, A.; Cho, K.; Matteucci, M.; Ciccone, M.; Kastner, K.; Bengio, Y.; Courville, A. ReSeg: A Recurrent Neural Network-Based Model for Semantic Segmentation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 426–433. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015; Bengio, Y., LeCun, Y., Eds.; ICLR: Appleton, WI, USA, 2015. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Advances in Neural Information Processing Systems; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2014; Volume 27. [Google Scholar]

- Zhang, X.; Jian, W.; Chen, Y.; Yang, S. Deform-GAN: An Unsupervised Learning Model for Deformable Registration. arXiv 2020, arXiv:2002.11430. [Google Scholar] [CrossRef]

- Dai, Z.; Yang, Z.; Yang, F.; Cohen, W.W.; Salakhutdinov, R.R. Good Semi-supervised Learning That Requires a Bad GAN. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30. [Google Scholar]