Abstract

A networked system consists of a collection of interconnected autonomous agents that communicate and interact through a shared communication infrastructure. These agents collaborate to pursue common objectives or exhibit coordinated behaviors that would be difficult or impossible for a single agent to achieve alone. With widespread applications in domains such as robotics, smart grids, and communication networks, the coordination and control of networked systems have become a vital research focus—driven by the complexity of distributed interactions and decision-making processes. Graph-based reinforcement learning (GRL) has emerged as a powerful paradigm that combines reinforcement learning with graph signal processing and graph neural networks (GNNs) to develop policies that are relationally aware, scalable, and adaptable to diverse network topologies. This survey aims to advance research in this evolving area by providing a comprehensive overview of GRL in the context of networked coordination and control. It covers the fundamental principles of reinforcement learning and graph neural networks, examines state-of-the-art GRL models and algorithms, reviews training methodologies, discusses key challenges, and highlights real-world applications. By synthesizing theoretical foundations, empirical insights, and open research questions, this survey serves as a cohesive and structured resource for the study and advancement of GRL-enabled networked systems.

1. Introduction

The rapid emergence of large-scale, networked systems, such as multi-robot teams, autonomous vehicle fleets, wireless sensor networks, and smart grids, has brought renewed attention to the challenges of coordination and control in complex, distributed environments [1,2,3]. These systems consist of multiple autonomous agents that interact with one another over structured topologies, exchanging information and making decisions to achieve global objectives. The inherent graph-structured nature of these systems, characterized by dynamic inter-agent relationships, spatial constraints, and localized communication, presents unique opportunities and challenges for learning-based control [4].

Reinforcement learning (RL) has gained popularity as a powerful framework for sequential decision making under uncertainty [5]. It enables agents to learn optimal control policies through trial-and-error interactions with their environment. However, conventional RL methods typically assume flat or unstructured observation spaces, making them ill-suited for multi-agent systems with relational dependencies. In contrast, graph neural networks (GNNs) offer a principled way to model such relational structures by leveraging the underlying graph topology to encode spatial or communication dependencies among agents [6,7].

The integration of GNNs with multi-agent reinforcement learning (MARL) has emerged as an effective approach for scalable and generalizable coordination in networked systems. GNN-enhanced MARL methods enable agents to learn policies that are permutation-invariant, localized, and transferable across varying graph topologies and scales. By embedding the message-passing principles of GNNs into policy learning, these approaches can exploit both structural and dynamic regularities to enhance learning outcomes and coordination effectiveness.

This survey provides a comprehensive overview of recent advances in GNN-MARL for networked coordination and control. We examine how GNNs enhance fundamental RL elements, including state representation, action selection, and reward design; analyze the architectural principles underlying message-passing and agent coordination mechanisms; and evaluate different training frameworks from centralized approaches to distributed coordination strategies. We discuss representative applications spanning autonomous systems, traffic networks, wireless communications, smart energy systems, and supply chain management, and identify open research challenges, including computational complexity, convergence properties, and cross-domain generalization. By synthesizing theoretical foundations, architectural innovations, and practical insights, this survey aims to provide a structured guide for researchers and practitioners seeking to leverage graph-enhanced learning approaches for coordination and control in complex networked environments.

The remainder of this survey is organized as follows. Section 2 reviews related surveys and positions our contribution. Section 3 provides background on RL and GNNs. Section 4, the core technical contribution, examines how GNNs enhance MARL systems from several perspectives: fundamental RL elements (state representation, action selection, reward design), multi-agent communication and coordination mechanisms, training methods, and performance evaluation criteria. Section 5 presents real-world applications in robotics, transportation, wireless networks, and manufacturing. Section 6 identifies research challenges and future directions on scalability, communication efficiency, and theoretical foundations. Finally, Section 7 concludes the survey. Throughout, we emphasize both theoretical advances and empirical validations to provide a comprehensive resource for researchers and practitioners.

2. Related Work

In recent years, the integration of graph neural networks (GNNs) with reinforcement learning (RL), especially multi-agent reinforcement learning (MARL), has emerged as a rapidly growing research area. Along with this development, several surveys have been published to provide overviews of the field. These works are valuable for positioning current progress, yet they differ in scope, focus, and level of detail. In this section, we briefly review representative surveys and outline how our work builds upon and complements them.

The survey Challenges and Opportunities in Deep Reinforcement Learning with Graph Neural Networks: A Comprehensive Review of Algorithms and Applications [8] provides a broad overview of how GNNs can be combined with deep RL. It systematically discusses algorithmic advances and a wide range of applications, but its emphasis is largely on single-agent RL. As a result, MARL-specific challenges such as inter-agent communication, coordination, and credit assignment are not addressed in depth.

Another early effort, Graph Neural Networks and Reinforcement Learning: A Survey [9], offers wide coverage, from theoretical foundations to practical algorithms. While the work carefully categorizes existing approaches, MARL is not treated as a central analytical focus. Moreover, the survey does not provide a systematic discussion of learning and training frameworks, which are critical for scaling GNN-enhanced MARL to practical systems.

Closer to our topic, Graph Neural Network Meets Multi-Agent Reinforcement Learning: Fundamentals, Applications, and Future Directions [10] specifically addresses the intersection of GNNs and MARL. It clearly presents the fundamentals of GNNs in MARL and organizes existing applications around functional roles such as communication and representation learning. However, given its time of publication, it does not capture several recent algorithmic directions such as symmetry-aware architectures (e.g., PEnGUiN), hypergraph-based coordination (e.g., HYGMA), or advanced decomposition methods (e.g., QMIX-GNN, ExpoComm). Furthermore, its perspective is primarily function-oriented, whereas our work takes a more fundamental view by aligning GNN methods with the core components of RL.

Compared with these surveys, our contribution is threefold. First, we adopt an inside-out organization that begins with the essential building blocks of RL—state, action, and reward—and we systematically analyze how GNNs enhance each component. Second, we highlight MARL-specific mechanisms by dedicating separate sections to message passing and multi-agent coordination, showing how GNNs reshape interaction and cooperation. Finally, we provide a structured overview of learning and training frameworks, including centralized training, decentralized execution, and heterogeneous-agent settings, which are essential for bridging theoretical advances with real-world deployment. Therefore, our survey not only complements prior reviews but also provides methodological guidance for future research in GNN-enhanced MARL.

3. Background: Concepts and Principles

In this section, fundamental concepts and principles of RL and GNNs are presented, serving as a basis for detailed discussion on GRL in later sections.

3.1. Reinforcement Learning

This subsection is focused on fundamental RL algorithms, starting with single-agent RL, followed by multi-agent RL.

3.1.1. Single-Agent Reinforcement Learning (RL)

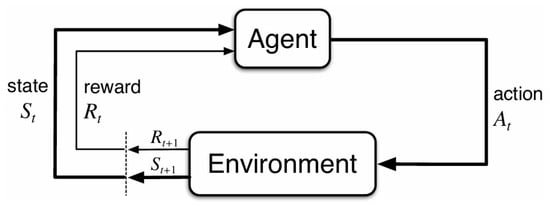

Single-agent reinforcement learning (RL) [11] is a computational framework for learning goal-oriented behavior through trial and error. The core of RL lies in the continuous interaction loop between an agent and its environment, as depicted in Figure 1 and formalized as a Markov decision process (MDP). This interaction unfolds sequentially over discrete timesteps. At each timestep $t$, the process begins with the agent observing the environment’s current state, $s_t$, which provides a complete description of the situation. Based on this state, the agent consults its policy, $\pi$, to select an action, $a_t \sim \pi(\cdot \mid s_t)$. This action is then executed, causing the environment to change. In the subsequent timestep, $t+1$, the environment responds with two crucial pieces of information: a new state, $s_{t+1}$, and a scalar reward, $r_{t+1}$. The reward signal provides evaluative feedback, indicating how beneficial the action $a_t$ was in state $s_t$. The agent’s objective is to learn a policy that refines its decision making over time by leveraging this reward feedback to maximize the expected cumulative discounted reward, known as the return, $G_t = \sum_{k=0}^{\infty} \gamma^{k} r_{t+k+1}$, where the discount factor $\gamma \in [0, 1)$ determines the present value of future rewards.

Figure 1.

The agent–environment interaction in a Markov decision process [11].
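As a small illustration of the return, the following Python sketch computes $G_t$ for every timestep of a finite episode using the backward recursion $G_t = r_{t+1} + \gamma G_{t+1}$. The function name and the convention that `rewards[k]` holds $r_{k+1}$ are our own assumptions for illustration.

```python
def discounted_returns(rewards, gamma):
    """Return [G_0, G_1, ...] for a finite episode.

    Assumed convention: rewards[k] holds r_{k+1}, the reward received after action a_k.
    Uses the backward recursion G_t = r_{t+1} + gamma * G_{t+1}, with G_T = 0.
    """
    returns = [0.0] * len(rewards)
    running = 0.0
    for k in reversed(range(len(rewards))):
        running = rewards[k] + gamma * running
        returns[k] = running
    return returns


# Three unit rewards with gamma = 0.5: G_0 = 1 + 0.5 + 0.25 = 1.75
print(discounted_returns([1.0, 1.0, 1.0], gamma=0.5))
```

Computing all returns in one backward pass costs time linear in the episode length, which is why Monte Carlo methods such as REINFORCE typically process episodes this way.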

Typical single-agent RL algorithms and their advantages and disadvantages are briefly explained below.

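Q-learning [12] is a model-free, off-policy algorithm that learns an action-value function through temporal-difference updates:

$$Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[ r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \right] \tag{1}$$

where $\alpha \in (0, 1]$ is the learning rate.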

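Because its update target uses the greedy (maximizing) action in the next state, the algorithm learns the optimal action values independently of the behavior policy that generates the data, and the maximization over actions makes it a natural fit for discrete action spaces. However, its tabular form becomes impractical for large state spaces, motivating the development of function approximation methods.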
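The sketch below implements the tabular Q-learning update on a hypothetical five-state chain task of our own (not from [12]): the agent starts at the left end, can step left or right, and receives a reward of 1 on reaching the right end. An epsilon-greedy behavior policy collects the data, while the update bootstraps from the greedy action, which is what makes Q-learning off-policy. The hyperparameters are illustrative assumptions.

```python
import random
from collections import defaultdict


def q_learning_update(Q, s, a, r, s_next, actions, alpha=0.5, gamma=0.9, terminal=False):
    """One tabular Q-learning update, applied to the dict Q in place."""
    # Off-policy target: bootstrap from the greedy action in s_next,
    # regardless of which action the behavior policy will actually take.
    best_next = 0.0 if terminal else max(Q[(s_next, b)] for b in actions)
    td_error = r + gamma * best_next - Q[(s, a)]
    Q[(s, a)] += alpha * td_error
    return td_error


def train_chain(n_states=5, episodes=200, epsilon=0.2, seed=0):
    """Hypothetical toy task: reward 1 for reaching the right end of a chain."""
    rng = random.Random(seed)
    actions = (-1, +1)  # step left or step right
    Q = defaultdict(float)
    for _ in range(episodes):
        s = 0
        while s != n_states - 1:
            # Epsilon-greedy behavior policy with random tie-breaking.
            if rng.random() < epsilon:
                a = rng.choice(actions)
            else:
                a = max(actions, key=lambda b: (Q[(s, b)], rng.random()))
            s_next = min(max(s + a, 0), n_states - 1)
            done = s_next == n_states - 1
            r = 1.0 if done else 0.0
            q_learning_update(Q, s, a, r, s_next, actions, terminal=done)
            s = s_next
    return Q


Q = train_chain()
greedy = [max((-1, +1), key=lambda b: Q[(s, b)]) for s in range(4)]
print(greedy)  # [1, 1, 1, 1]: the learned greedy policy always steps right
```

The table `Q` grows with the number of state-action pairs, which illustrates why this tabular form does not scale to large state spaces.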

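Policy gradient methods [13] directly optimize parameterized policies $\pi_\theta$ by ascending the gradient of the expected return $J(\theta)$:

$$\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta}\left[ \nabla_\theta \log \pi_\theta(a_t \mid s_t)\, G_t \right] \tag{2}$$

where $G_t$ is the return from timestep $t$.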

The REINFORCE algorithm [13] implements this using Monte Carlo estimates. While these methods naturally support continuous action spaces and stochastic policies, they suffer from high variance in gradient estimates, motivating the development of variance reduction techniques such as baselines or advantage functions.

Actor–critic methods [14,15] combine value-based and policy-based approaches to reduce variance. The actor learns the policy $\pi_\theta(a \mid s)$, while the critic learns the value function $V_\phi(s)$, and the policy update uses the advantage $A(s_t, a_t) = Q(s_t, a_t) - V(s_t)$. The advantage serves as a lower-variance replacement for the full return used in the vanilla policy gradient update, Equation (2), so this hybrid architecture learns more stably than pure policy gradient methods while retaining their flexibility.

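Advantage actor–critic (A2C) is a synchronous variant derived from A3C [16] that uses the advantage function to compute policy gradients as

$$\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta}\left[ \nabla_\theta \log \pi_\theta(a_t \mid s_t)\, A(s_t, a_t) \right] \tag{3}$$

where $A(s_t, a_t)$ is typically estimated with the temporal-difference error $\delta_t = r_{t+1} + \gamma V_\phi(s_{t+1}) - V_\phi(s_t)$.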

Asynchronous advantage actor–critic (A3C) [16] introduced asynchronous parallel training to actor–critic methods, significantly improving their scalability and stability. Multiple workers independently interact with separate environment instances and compute policy updates using $n$-step advantage estimates:

$$\hat{A}_t = \sum_{i=0}^{n-1} \gamma^{i} r_{t+i+1} + \gamma^{n} V_\phi(s_{t+n}) - V_\phi(s_t) \tag{4}$$

These workers asynchronously update shared global parameters, eliminating the need for experience replay. The diversity of parallel trajectories improves exploration and leads to more stable and efficient learning.
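To make the advantage estimates concrete, the sketch below computes the $n$-step advantage used by A3C workers and, as its one-step special case, the temporal-difference error commonly used by A2C. It assumes scalar critic outputs supplied by the caller; the function names are our own.

```python
def n_step_advantage(rewards, v_start, v_bootstrap, gamma):
    """n-step advantage estimate of the kind computed by A3C-style workers.

    rewards     -- [r_{t+1}, ..., r_{t+n}] collected by one worker
    v_start     -- critic estimate V(s_t)
    v_bootstrap -- critic estimate V(s_{t+n}) (pass 0.0 if s_{t+n} is terminal)
    """
    n = len(rewards)
    n_step_return = sum(gamma ** i * r for i, r in enumerate(rewards))
    n_step_return += gamma ** n * v_bootstrap
    return n_step_return - v_start


def td_error(r, v_s, v_next, gamma):
    """One-step special case (n = 1), the usual A2C advantage estimate."""
    return n_step_advantage([r], v_s, v_next, gamma)


# Three rewards of 1, V(s_t) = 1, V(s_{t+3}) = 2, gamma = 0.5:
# n-step return = 1 + 0.5 + 0.25 + 0.125 * 2 = 2.0, so the advantage is 1.0
print(n_step_advantage([1.0, 1.0, 1.0], v_start=1.0, v_bootstrap=2.0, gamma=0.5))
```

Larger $n$ propagates reward information faster but relies less on the critic, which trades lower bias for higher variance.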

Deep Q-network (DQN) [17] extends Q-learning to high-dimensional state spaces by approximating the action-value function with a deep neural network $Q(s, a; \theta)$. Two key innovations help stabilize training: experience replay mitigates temporal correlations by sampling transitions from a replay buffer $\mathcal{D}$, and a target network with parameters $\theta^-$, updated periodically, provides more stable learning targets. The loss function

$$L(\theta) = \mathbb{E}_{(s, a, r, s') \sim \mathcal{D}}\left[ \left( r + \gamma \max_{a'} Q(s', a'; \theta^-) - Q(s, a; \theta) \right)^2 \right] \tag{5}$$

allows learning directly from raw pixel inputs and enabled DQN to reach human-level performance on many Atari 2600 games.

Double deep Q-network (DDQN) [18] addresses DQN’s overestimation bias by decoupling action selection and evaluation:

$$y^{\text{DDQN}} = r + \gamma\, Q\left(s', \arg\max_{a'} Q(s', a'; \theta); \theta^-\right) \tag{6}$$

Unlike the standard DQN target in Equation (5), which uses the target network for both selecting the best next action and evaluating its value, the DDQN target in Equation (6) uses the online network ($\theta$) to select the action and the target network ($\theta^-$) to evaluate it. This decoupling significantly reduces overestimation and improves learning stability across various domains.
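The difference between the two targets can be seen on a single transition. The sketch below uses hypothetical Q-values for two actions in the next state; the lists stand in for the outputs of the online and target networks, and the function names are our own.

```python
def dqn_target(r, q_target_next, gamma, done=False):
    """Standard DQN target: the target network both selects and evaluates a'."""
    if done:
        return r
    return r + gamma * max(q_target_next)


def ddqn_target(r, q_online_next, q_target_next, gamma, done=False):
    """Double DQN target: the online network selects a', the target network evaluates it."""
    if done:
        return r
    best = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return r + gamma * q_target_next[best]


# Hypothetical Q-values for the two actions in the next state s'
q_online_next = [1.0, 3.0]  # Q(s', . ; theta)
q_target_next = [2.0, 0.5]  # Q(s', . ; theta^-)
print(dqn_target(1.0, q_target_next, gamma=0.9))                  # bootstraps from 2.0
print(ddqn_target(1.0, q_online_next, q_target_next, gamma=0.9))  # bootstraps from 0.5
```

Because the target network’s value at the online network’s chosen action can never exceed the target network’s maximum, the DDQN target is never larger than the DQN target computed from the same target network, which is the mechanism behind the reduced overestimation.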

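Deep deterministic policy gradient (DDPG) [19,20] extends actor–critic methods to continuous control using deterministic policies $a = \mu_\theta(s)$. The algorithm employs experience replay from a buffer $\mathcal{D}$, separate target networks with soft updates ($\theta' \leftarrow \tau \theta + (1 - \tau)\theta'$, with $\tau \ll 1$), and exploration noise (typically an Ornstein–Uhlenbeck process). The critic $Q_\phi$ is updated by minimizing the loss function

$$L(\phi) = \mathbb{E}_{(s, a, r, s') \sim \mathcal{D}}\left[ \left( r + \gamma Q_{\phi'}\left(s', \mu_{\theta'}(s')\right) - Q_\phi(s, a) \right)^2 \right] \tag{7}$$

while the actor is updated to maximize the expected Q-value $\mathbb{E}_{s \sim \mathcal{D}}\left[ Q_\phi(s, \mu_\theta(s)) \right]$.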

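Twin delayed deep deterministic policy gradient (TD3) [21] improves DDPG through three modifications. First, clipped double Q-learning trains twin Q-functions and uses the minimum of their target values to reduce overestimation:

$$y = r + \gamma \min_{j = 1, 2} Q_{\phi'_j}\left(s', \tilde{a}'\right) \tag{8}$$

Second, policy updates are delayed relative to value updates. Third, target policy smoothing forms the target action $\tilde{a}' = \mu_{\theta'}(s') + \epsilon$ with clipped noise $\epsilon \sim \operatorname{clip}(\mathcal{N}(0, \sigma^2), -c, c)$. These changes significantly enhance stability and performance in continuous control tasks.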
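The sketch below illustrates two of these ingredients on scalar toy inputs: the soft (Polyak) target-network update shared by DDPG and TD3, and the TD3 critic target with target policy smoothing and clipped double Q-learning. Representing network parameters as flat lists and the networks as plain Python functions are simplifying assumptions; the toy actor and critics are hypothetical.

```python
import random


def soft_update(target_params, online_params, tau=0.005):
    """Polyak averaging for target networks: theta' <- tau * theta + (1 - tau) * theta'."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(online_params, target_params)]


def td3_target(r, s_next, actor_target, critic1_target, critic2_target, gamma=0.99,
               sigma=0.2, noise_clip=0.5, a_low=-1.0, a_high=1.0, done=False, rng=random):
    """TD3 critic target with target policy smoothing and clipped double Q-learning."""
    if done:
        return r
    # Target policy smoothing: perturb the target action with clipped Gaussian noise,
    # then keep the result inside the valid action range.
    eps = max(-noise_clip, min(noise_clip, rng.gauss(0.0, sigma)))
    a_tilde = max(a_low, min(a_high, actor_target(s_next) + eps))
    # Clipped double Q-learning: use the smaller of the two target critics.
    q_min = min(critic1_target(s_next, a_tilde), critic2_target(s_next, a_tilde))
    return r + gamma * q_min


# Hypothetical stand-ins for the target networks, for illustration only.
def actor(s):
    return 0.3


def critic1(s, a):
    return 2.0


def critic2(s, a):
    return 1.0


y = td3_target(1.0, None, actor, critic1, critic2, gamma=0.9)
print(y)  # 1 + 0.9 * min(2.0, 1.0)
print(soft_update([0.0, 0.0], [1.0, 1.0], tau=0.5))  # [0.5, 0.5]
```

The remaining modification, delayed policy updates, is a scheduling choice rather than a formula: the actor and target networks are updated only once every few critic updates (every second update in the original TD3 setup).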

Soft actor–critic (SAC) [22] incorporates maximum entropy reinforcement learning to balance exploration and exploitation:

$$J(\pi) = \mathbb{E}_{\pi}\left[ \sum_{t} \left( r_{t+1} + \alpha\, \mathcal{H}\left(\pi(\cdot \mid s_t)\right) \right) \right] \tag{9}$$

This objective augments the standard expected return with an entropy term weighted by the temperature (entropy coefficient) $\alpha$, encouraging the policy to act as randomly as possible while still maximizing rewards. The algorithm uses stochastic policies, twin soft Q-functions, and automatic tuning of $\alpha$. SAC’s robustness to hyperparameters and its sample efficiency make it highly effective for continuous control.
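For intuition about the entropy bonus, the sketch below computes the soft state value $V(s) = \sum_a \pi(a \mid s)\,[Q(s, a) - \alpha \log \pi(a \mid s)]$ for a discrete action set. The discrete form is a simplification we adopt for illustration; SAC as published targets continuous actions and estimates this expectation by sampling from the policy.

```python
import math


def soft_value(q_values, probs, alpha):
    """Soft state value: E_{a~pi}[Q(s, a) - alpha * log pi(a | s)] over discrete actions."""
    return sum(p * (q - alpha * math.log(p)) for q, p in zip(q_values, probs) if p > 0.0)


def entropy(probs):
    """Shannon entropy of a discrete policy distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


q = [1.0, 1.0]
uniform, deterministic = [0.5, 0.5], [1.0, 0.0]
# Same expected Q-value, but the uniform policy earns an extra bonus of alpha * log 2.
print(soft_value(q, uniform, alpha=1.0), soft_value(q, deterministic, alpha=1.0))
```

Raising $\alpha$ increases the value of keeping several actions likely, which is the exploration pressure that SAC’s automatic temperature tuning adjusts.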

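Proximal policy optimization (PPO) [23] simplifies trust region methods using a clipped surrogate objective

$$L^{\text{CLIP}}(\theta) = \mathbb{E}_t\left[ \min\left( \rho_t(\theta) \hat{A}_t,\ \operatorname{clip}\left(\rho_t(\theta), 1 - \epsilon, 1 + \epsilon\right) \hat{A}_t \right) \right] \tag{10}$$

where $\rho_t(\theta) = \pi_\theta(a_t \mid s_t) / \pi_{\theta_{\text{old}}}(a_t \mid s_t)$ is the probability ratio between the new and old policies and $\epsilon$ is the clipping range. This prevents destructively large policy updates while maintaining simplicity. PPO is typically combined with generalized advantage estimation (GAE) and has become a widely used default for policy optimization due to its stability and performance.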
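A minimal, framework-free sketch of the two computations named above follows: the clipped surrogate objective averaged over a batch, and generalized advantage estimation over one trajectory segment. Passing precomputed probability ratios and ignoring episode terminations inside the segment are simplifying assumptions of this sketch.

```python
def ppo_clip_objective(ratios, advantages, clip_eps=0.2):
    """Average clipped surrogate objective (to be maximized) over a batch."""
    total = 0.0
    for rho, adv in zip(ratios, advantages):
        clipped = min(max(rho, 1.0 - clip_eps), 1.0 + clip_eps)
        # Pessimistic bound: take the smaller of the unclipped and clipped terms.
        total += min(rho * adv, clipped * adv)
    return total / len(ratios)


def gae(rewards, values, last_value, gamma=0.99, lam=0.95):
    """Generalized advantage estimation for one segment (assumes no terminal states)."""
    advantages = [0.0] * len(rewards)
    next_value, running = last_value, 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_value - values[t]  # one-step TD error
        running = delta + gamma * lam * running
        advantages[t] = running
        next_value = values[t]
    return advantages


# A ratio of 1.5 with positive advantage is clipped to 1.2; a ratio of 0.5 with
# negative advantage is clipped to 0.8, so the objective is approximately 0.2.
print(ppo_clip_objective([1.5, 0.5], [1.0, -1.0]))
print(gae([1.0, 1.0], [0.0, 0.0], last_value=0.0, gamma=1.0, lam=1.0))  # [2.0, 1.0]
```

With $\lambda = 1$ GAE reduces to the Monte Carlo advantage, and with $\lambda = 0$ it reduces to the one-step TD error, so $\lambda$ interpolates between high-variance and high-bias estimates.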

3.1.2. Multi-Agent Reinforcement Learning (MARL)

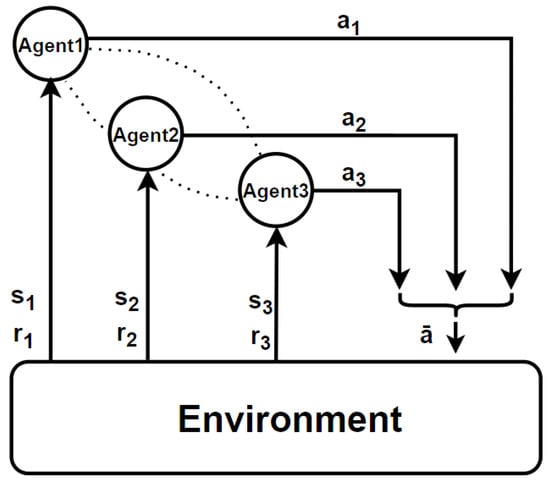

MARL [24,25] extends the reinforcement learning framework to environments with multiple simultaneously learning agents. Figure 2 illustrates this setting: each agent (e.g., Agent1, Agent2, Agent3) receives its own local observation and reward from the environment, while the agents’ actions are combined into a joint action that drives the next state transition. This joint dependence means that each agent’s learning process is coupled with those of the others, unlike in the single-agent case. Additionally, agents often need to collaborate to achieve a common goal, as indicated by the dashed lines in the figure representing their interactions. These interaction relationships define a graph on which a graph neural network (GNN) can operate, enabling GNN-MARL models that effectively capture inter-agent dependencies.

Figure 2.

The diagram of multi-agent reinforcement learning.

By contrast, Figure 1 shows the classical single-agent RL setup, where the agent interacts with the environment in isolation, receiving a state and reward and producing an action that alone determines the next state. MARL generalizes this to Markov games (also known as stochastic games), formalized as $\langle N, S, \{A_i\}_{i=1}^{N}, T, \{R_i\}_{i=1}^{N}, \gamma \rangle$. Here, $N$ agents share a global state space $S$, each with its own action space $A_i$ and reward function $R_i$. The transition function $T(s' \mid s, \mathbf{a})$ depends on the joint action $\mathbf{a} = (a_1, \dots, a_N) \in A_1 \times \cdots \times A_N$ of all agents, distinguishing it from the single-agent setting where transitions depend only on one agent’s action. Similarly, each agent’s reward $R_i(s, \mathbf{a})$ is a function of the global state and the joint action, a significant departure from the single-agent reward $R(s, a)$, which results from an individual agent’s action $a$. Because the other agents’ policies change as they learn, this coupling makes the environment non-stationary from each agent’s perspective, and it is the primary source of non-stationarity in MARL. When $N = 1$, the Markov game reduces to the standard MDP.

This multi-agent extension introduces new challenges [24], such as non-stationarity, partial observability, and coordination complexity. Consequently, specialized MARL algorithms are required to address cooperation, competition, or mixed interactions among agents.
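The non-stationarity caused by joint-action coupling can be illustrated with a hypothetical two-agent coordination game (our own toy example): agent 1’s reward $R_1(a_1, a_2)$ depends on agent 2’s action, so agent 1’s best action changes whenever agent 2’s policy drifts during learning.

```python
def expected_reward(payoff, my_action, other_policy):
    """Agent 1's expected reward R_1(a_1, a_2) when agent 2 plays a mixed policy."""
    return sum(p * payoff[my_action][a2] for a2, p in enumerate(other_policy))


def best_response(payoff, other_policy):
    """Agent 1's best action given agent 2's current (possibly changing) policy."""
    n_actions = len(payoff)
    return max(range(n_actions), key=lambda a: expected_reward(payoff, a, other_policy))


# Hypothetical 2x2 coordination game: agents are rewarded only for matching actions.
payoff_agent1 = [[1.0, 0.0],
                 [0.0, 1.0]]
# As agent 2's policy drifts during learning, agent 1's best action flips.
print(best_response(payoff_agent1, [0.8, 0.2]))  # 0: agent 2 mostly plays action 0
print(best_response(payoff_agent1, [0.2, 0.8]))  # 1: agent 2 mostly plays action 1
```

From agent 1’s point of view, the same state and action yield different expected rewards at different stages of training, which violates the stationarity assumption underlying single-agent RL.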

Multi-agent policy optimization. Policy-based optimization is widely used in MARL because it offers several practical advantages: it handles high-dimensional and continuous action spaces, fits the centralized-training, decentralized-execution paradigm, often converges stably in cooperative and mixed environments, supports stochastic policies for exploration and robustness, allows either shared or individual policies, and is compatible with actor–critic methods. Typical policy-based MARL methods include:

- Multi-agent deep deterministic policy gradient (MADDPG) [26] addresses non-stationarity through centralized training with decentralized execution (CTDE). Each agent i maintains a decentralized actor using only local observations, while training employs centralized critics accessing all agents’ information. This design stabilizes learning while maintaining practical deployability.

- Multi-agent proximal policy optimization (MAPPO) [27] demonstrates that properly configured PPO achieves strong multi-agent performance. Key modifications include centralized value functions conditioned on global state, PopArt value normalization, and careful hyperparameter tuning. This adapts the single-agent PPO framework, whose objective is shown in Equation (10), to the multi-agent domain by ensuring the advantage estimates used for the clipped objective are derived from a centralized critic aware of all agents’ states. MAPPO’s simplicity and effectiveness have established it as a standard baseline for cooperative multi-agent tasks.

- Counterfactual multi-agent policy gradients (COMA) [28] addresses the credit assignment problem in cooperative multi-agent settings by using counterfactual baselines. Each agent’s advantage compares the value of the joint action with a counterfactual baseline computed by marginalizing over that agent’s own actions while keeping the other agents’ actions fixed. This generalizes the advantage term used in single-agent actor–critic updates such as Equation (3) to the multi-agent case and yields a more accurate assessment of each agent’s individual contribution.

- Mean field actor–critic (MFAC) [29] tackles large-scale multi-agent systems by approximating the complex many-agent interactions through mean field theory. Instead of modeling all pairwise interactions, each agent considers the mean effect of neighboring agents, significantly reducing computational complexity while maintaining effective coordination in dense multi-agent environments.
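COMA’s counterfactual baseline and MFAC’s mean-field summary are both short computations once a critic is available. The sketch below assumes discrete actions and a centralized critic that has already produced a Q-value for each alternative action of agent $i$ (with the other agents’ actions held fixed); the function names are hypothetical.

```python
def coma_advantage(q_values_i, policy_i, taken_action):
    """Counterfactual advantage for agent i (discrete actions).

    q_values_i[b] -- centralized critic Q(s, (a_{-i}, b)): agent i's action is
                     replaced by b while the other agents' actions a_{-i} stay fixed
    policy_i[b]   -- agent i's current probability of choosing b
    """
    baseline = sum(p * q for p, q in zip(policy_i, q_values_i))  # marginalize over agent i
    return q_values_i[taken_action] - baseline


def mean_neighbor_action(neighbor_actions, n_actions):
    """Mean-field summary: the average one-hot action of an agent's neighbors."""
    counts = [0] * n_actions
    for a in neighbor_actions:
        counts[a] += 1
    return [c / len(neighbor_actions) for c in counts]


print(coma_advantage([1.0, 3.0], [0.5, 0.5], taken_action=1))  # 3.0 - 2.0 = 1.0
print(mean_neighbor_action([0, 1, 1], n_actions=2))            # about [0.333, 0.667]
```

The mean-field summary has a fixed size regardless of how many neighbors an agent has, which is what lets MFAC avoid modeling every pairwise interaction.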

3.2. Graph Neural Networks

Graph neural networks (GNNs) [30] generalize neural network models to graph-structured data, capturing complex relational patterns. A graph is defined as $G = (V, E)$, where $V$ is the set of nodes and $E$ is the set of edges. The original GNN model uses an information diffusion mechanism in which nodes iteratively update their states until a stable equilibrium is reached. For a node $v$, the state update is

$$h_v = f\left(l_v, l_{co[v]}, h_{ne[v]}, l_{ne[v]}\right) \tag{11}$$

where $h_v$ is the state of node $v$, $l_v$ is its label, $l_{co[v]}$ are the labels of edges connected to $v$, $h_{ne[v]}$ are the states of neighboring nodes, and $l_{ne[v]}$ are their labels. The output is computed as $o_v = g(h_v, l_v)$. The model ensures convergence by requiring the transition function $f$ to be a contraction mapping, with states iteratively updated until a fixed point is reached.
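The fixed-point view can be sketched in a few lines. The transition function below is a deliberately simple linear map chosen by us so that the contraction condition holds (node labels only, no edge labels); it is a hypothetical stand-in, not the parameterized $f$ of [30].

```python
def diffuse_to_fixed_point(neighbors, labels, beta=0.5, tol=1e-12, max_iters=1000):
    """Iterate h_v = labels[v] + beta * mean(h_u for u in ne[v]) until convergence.

    This linear transition function is a hypothetical stand-in for f; with
    beta < 1 it is a contraction in the max norm, so the iteration converges
    to a unique fixed point from any starting state.
    """
    h = [0.0] * len(labels)
    for _ in range(max_iters):
        new_h = [
            labels[v] + beta * (sum(h[u] for u in neighbors[v]) / len(neighbors[v])
                                if neighbors[v] else 0.0)
            for v in range(len(labels))
        ]
        delta = max(abs(a - b) for a, b in zip(new_h, h))
        h = new_h
        if delta < tol:
            break
    return h


# Path graph 0 - 1 - 2 with a nonzero scalar label on node 0 only.
nbrs = {0: [1], 1: [0, 2], 2: [1]}
h = diffuse_to_fixed_point(nbrs, labels=[1.0, 0.0, 0.0])
print([round(x, 6) for x in h])  # [1.166667, 0.333333, 0.166667]
```

The contraction requirement is what guarantees a unique equilibrium, but it also limits how strongly distant information can influence a node, one motivation for the layer-wise variants discussed next.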

The foundational GNN framework has inspired numerous variants that simplify the computational requirements while maintaining the core message-passing paradigm. These modern approaches have led to the widespread adoption of graph neural networks across diverse domains:

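Graph convolutional networks (GCN) [31] extend convolution to graphs by efficiently aggregating neighbor features, with a design grounded in spectral graph theory. The model is motivated by a first-order approximation of spectral graph convolutions, leading to the layer-wise propagation rule:

$$H^{(l+1)} = \sigma\left( \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)} \right) \tag{12}$$

where $H^{(l)}$ contains the node features at layer $l$, $\tilde{A} = A + I_N$ adds self-loops so that each node retains its own features, $\tilde{D}$ is the degree matrix of $\tilde{A}$ (with $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$), $W^{(l)}$ is a trainable weight matrix, and $\sigma$ is an activation function. Together, the self-loops and the symmetric normalization (the "renormalization trick") keep the eigenvalues of the propagation operator in a bounded range, which avoids numerical instability and exploding or vanishing gradients when layers are stacked. This propagation rule scales linearly with the number of edges and enables semi-supervised node classification by conditioning on both node features and graph structure. GCNs have proven effective for citation networks, knowledge graphs, and social network analysis.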
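As a minimal sketch of the propagation rule, the following pure-Python function applies one GCN layer using dense adjacency matrices. Dense lists are chosen only for readability; practical implementations use sparse operations to obtain the linear-in-edges cost mentioned above.

```python
import math


def gcn_layer(adj, H, W, activation=lambda x: max(x, 0.0)):
    """One GCN layer: sigma(D~^{-1/2} (A + I) D~^{-1/2} H W) with dense Python lists.

    adj -- n x n 0/1 adjacency matrix (undirected, no self-loops)
    H   -- n x d node features; W -- d x d_out weight matrix; default activation is ReLU
    """
    n = len(adj)
    # Add self-loops: A~ = A + I
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_tilde]  # degrees of A~ (always >= 1)
    # Symmetric normalization: A_hat[i][j] = A~[i][j] / sqrt(d_i * d_j)
    a_hat = [[a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    HW = [[sum(H[i][k] * W[k][c] for k in range(len(W))) for c in range(len(W[0]))]
          for i in range(n)]
    out = [[sum(a_hat[i][j] * HW[j][c] for j in range(n)) for c in range(len(W[0]))]
           for i in range(n)]
    return [[activation(x) for x in row] for row in out]


# Two connected nodes with scalar features 1 and 3 and an identity weight.
print(gcn_layer([[0, 1], [1, 0]], H=[[1.0], [3.0]], W=[[1.0]]))  # [[2.0], [2.0]]
```

Each output row mixes a node’s own features with its neighbors’ features using the fixed weights $1/\sqrt{\tilde d_i \tilde d_j}$; only $W^{(l)}$ is learned.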

Graph sample and aggregate (GraphSAGE) [32] addresses the limitation of transductive GCNs by enabling inductive learning on unseen nodes. Instead of training individual embeddings for each node, GraphSAGE learns aggregator functions that generate embeddings by sampling and aggregating features from a node’s local neighborhood:

$$h_{\mathcal{N}(v)}^{k} = \text{AGGREGATE}_k\left(\{ h_u^{k-1} : u \in \mathcal{N}(v) \}\right), \qquad h_v^{k} = \sigma\left( W^{k} \cdot \text{CONCAT}\left( h_v^{k-1}, h_{\mathcal{N}(v)}^{k} \right) \right) \tag{13}$$

where $\mathcal{N}(v)$ represents a fixed-size uniform sample of node $v$’s neighbors, which keeps the per-node computational cost constant. The concatenation operation acts as a skip connection, preserving the node’s representation while incorporating neighborhood information. GraphSAGE explores multiple aggregator architectures: the mean aggregator (closely related to GCN’s convolutional propagation rule), LSTM aggregators for higher expressive capacity, and pooling aggregators that apply element-wise max-pooling after transforming each neighbor through a neural network. The framework can be trained with a task-specific supervised loss or with an unsupervised loss based on random walks that encourages nearby nodes to have similar representations. This inductive capability enables GraphSAGE to generalize to entirely unseen graphs and dynamically handle evolving networks, making it particularly valuable for production systems processing streaming graph data.
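The sketch below shows one GraphSAGE-style layer with a mean aggregator and fixed-size neighbor sampling, followed by an inductive use on a node that did not exist when the weights were chosen. It omits the per-layer embedding normalization used in [32], and the dict-based graph format and weights are illustrative assumptions.

```python
import random


def sage_mean_layer(h, neighbors, W, sample_size=2, rng=None):
    """One GraphSAGE-style layer with a mean aggregator and neighbor sampling.

    h         -- dict node -> feature list (length d)
    neighbors -- dict node -> list of neighbor ids
    W         -- d_out x (2 * d) weight matrix applied to CONCAT(h_v, mean of sampled neighbors)
    """
    rng = rng or random.Random(0)
    out = {}
    for v, h_v in h.items():
        nbrs = neighbors.get(v, [])
        # Fixed-size uniform sample (all neighbors if there are at most sample_size).
        sampled = nbrs if len(nbrs) <= sample_size else rng.sample(nbrs, sample_size)
        d = len(h_v)
        agg = [sum(h[u][k] for u in sampled) / len(sampled) if sampled else 0.0
               for k in range(d)]
        z = h_v + agg  # list concatenation: CONCAT acts as a skip connection
        out[v] = [max(0.0, sum(w * x for w, x in zip(row, z))) for row in W]  # ReLU
    return out


h = {0: [1.0], 1: [2.0], 2: [4.0]}
nbrs = {0: [1, 2], 1: [0], 2: [0]}
W = [[1.0, 1.0]]
print(sage_mean_layer(h, nbrs, W))  # {0: [4.0], 1: [3.0], 2: [5.0]}
# Inductive use: a new node 3 is embedded with the same W, no retraining needed.
h[3], nbrs[3] = [0.5], [0]
print(sage_mean_layer(h, nbrs, W)[3])  # [1.5]
```

Because `W` is shared across nodes and never indexed by node identity, the same parameters apply to nodes and graphs unseen during training, which is the inductive property described above.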

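Graph attention networks (GAT) [33] introduce learnable attention mechanisms to selectively weight neighbor contributions, addressing GraphSAGE’s uniform treatment of neighbors. GAT computes attention coefficients through a shared neural network that evaluates pairwise node interactions:

$$e_{ij} = \text{LeakyReLU}\left( \mathbf{a}^{\top} \left[ W h_i \,\|\, W h_j \right] \right) \tag{14}$$

where $W$ transforms node features, $\mathbf{a}$ is a learnable attention vector, and $\|$ denotes concatenation. These raw attention scores are normalized with a softmax over the neighborhood $\mathcal{N}(i)$ to obtain attention weights:

$$\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k \in \mathcal{N}(i)} \exp(e_{ik})} \tag{15}$$

The final node representation aggregates neighbor features weighted by attention:

$$h_i' = \sigma\left( \sum_{j \in \mathcal{N}(i)} \alpha_{ij} W h_j \right) \tag{16}$$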

This attention-based aggregation, defined in Equation (16), allows the model to learn the importance of different neighbors dynamically. This contrasts sharply with the graph convolutional network’s propagation rule, Equation (12), where neighbor contributions are weighted by a fixed, pre-computed normalization constant derived from the graph structure itself. GAT employs multi-head attention for stability, computing K independent attention mechanisms and concatenating (or averaging) their outputs. This design enables the model to focus on different aspects of neighborhood relationships simultaneously. Unlike spectral methods, GAT operates locally without requiring global graph structure knowledge, making it naturally applicable to both transductive and inductive settings. The attention mechanism provides interpretability by revealing which neighbors contribute most to each node’s representation, though careful regularization is needed to prevent attention collapse on small neighborhoods.
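A single attention head can be sketched directly from the three steps above: score each neighbor, normalize with a softmax, and take the weighted sum. The sketch omits the output nonlinearity and multi-head concatenation, and the toy features, weight matrix, and attention vector are hypothetical.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def gat_layer(h, neighbors, W, a):
    """Single-head GAT layer (output activation omitted for clarity).

    h -- list of node feature vectors; neighbors[i] -- nodes attended by i (include i
    for a self-loop); W -- d_out x d matrix; a -- attention vector of length 2 * d_out.
    Returns the new features and the attention weights alpha[i][j].
    """
    Wh = [[sum(w * x for w, x in zip(row, hi)) for row in W] for hi in h]
    new_h, alpha = [], []
    for i, nbrs in enumerate(neighbors):
        # e_ij = LeakyReLU(a^T [W h_i || W h_j])
        scores = [leaky_relu(sum(ak * zk for ak, zk in zip(a, Wh[i] + Wh[j]))) for j in nbrs]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = {j: e / total for j, e in zip(nbrs, exps)}
        alpha.append(weights)
        new_h.append([sum(weights[j] * Wh[j][c] for j in nbrs) for c in range(len(W))])
    return new_h, alpha


h = [[1.0], [2.0], [0.0]]
neighbors = [[0, 1, 2], [1, 0], [2, 0]]  # self-loops included
new_h, alpha = gat_layer(h, neighbors, W=[[1.0]], a=[0.0, 1.0])
print(alpha[0])  # node 1 (largest transformed feature) receives the largest weight
```

Setting the attention vector to zero makes every score equal, so the weights become uniform and the layer reduces to mean aggregation; the learned vector is what lets GAT depart from the fixed weighting of Equation (12).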

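Relational graph convolutional networks (R-GCN) [34] extend standard GCNs to handle multi-relational graphs where edges have different semantic meanings. R-GCNs modify the aggregation mechanism to use relation-specific transformations:

$$h_i^{(l+1)} = \sigma\left( \sum_{r \in \mathcal{R}} \sum_{j \in \mathcal{N}_i^{r}} \frac{1}{c_{i,r}} W_r^{(l)} h_j^{(l)} + W_0^{(l)} h_i^{(l)} \right) \tag{17}$$

where $\mathcal{R}$ denotes the set of relation types, $\mathcal{N}_i^{r}$ represents the neighbors of node $i$ under relation $r$, $W_r^{(l)}$ is a relation-specific weight matrix, and $c_{i,r}$ is a normalization constant (typically $c_{i,r} = |\mathcal{N}_i^{r}|$). The self-loop term $W_0^{(l)} h_i^{(l)}$ preserves the node’s own information across layers.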

To address the parameter explosion problem when dealing with many relation types, R-GCNs employ two regularization techniques: basis decomposition, $W_r^{(l)} = \sum_{b=1}^{B} a_{rb}^{(l)} V_b^{(l)}$, which shares basis matrices $V_b^{(l)}$ across relations with learned coefficients $a_{rb}^{(l)}$; and block-diagonal decomposition, which constrains the weight matrices to a block-diagonal structure. These techniques enable R-GCNs to scale to knowledge graphs with hundreds of relation types while maintaining expressive power. R-GCNs have proven particularly effective for knowledge base completion tasks, where understanding the semantic differences between relations is crucial for accurate link prediction and entity classification.
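The sketch below combines the relation-specific aggregation with basis decomposition: each relation’s weight matrix is assembled from shared bases and per-relation coefficients. The edge-triple format, relation names, and toy values are illustrative assumptions.

```python
def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def basis_weight(coeffs, bases):
    """Basis decomposition: W_r = sum_b a_rb * V_b (all V_b share one shape)."""
    rows, cols = len(bases[0]), len(bases[0][0])
    return [[sum(c * V[i][j] for c, V in zip(coeffs, bases)) for j in range(cols)]
            for i in range(rows)]


def rgcn_layer(h, edges, W0, coeffs, bases):
    """One R-GCN layer with c_{i,r} = |N_i^r| and a ReLU output.

    h      -- list of node feature vectors
    edges  -- list of (src, relation, dst) triples; messages flow src -> dst
    W0     -- self-loop weight matrix
    coeffs -- dict relation -> list of basis coefficients a_rb
    """
    W = {r: basis_weight(c, bases) for r, c in coeffs.items()}
    out = []
    for i, h_i in enumerate(h):
        acc = matvec(W0, h_i)  # self-loop term W_0 h_i
        for r in W:
            nbrs = [s for s, rel, d in edges if rel == r and d == i]  # N_i^r
            for j in nbrs:
                msg = matvec(W[r], h[j])
                acc = [a + m / len(nbrs) for a, m in zip(acc, msg)]
        out.append([max(0.0, x) for x in acc])
    return out


I = [[1.0, 0.0], [0.0, 1.0]]
h = [[1.0, 0.0], [0.0, 2.0]]
edges = [(1, "cites", 0), (1, "likes", 0)]  # hypothetical relation types
coeffs = {"cites": [1.0], "likes": [0.5]}   # one shared basis (the identity)
print(rgcn_layer(h, edges, W0=I, coeffs=coeffs, bases=[I]))  # [[1.0, 3.0], [0.0, 2.0]]
```

With $B$ shared bases, adding a new relation costs only $B$ coefficients instead of a full weight matrix, which is how the decomposition curbs parameter growth.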

Graph isomorphism networks (GIN). Many GNNs struggle to distinguish certain graph structures. Graph isomorphism networks [35] address this by matching the discriminative power of the Weisfeiler–Lehman (WL) graph isomorphism test. GIN uses:

$$h_v^{(k)} = \text{MLP}^{(k)}\left( \left(1 + \epsilon^{(k)}\right) h_v^{(k-1)} + \sum_{u \in \mathcal{N}(v)} h_u^{(k-1)} \right) \tag{18}$$

where $\text{MLP}^{(k)}$ is a learnable multi-layer perceptron and $\epsilon^{(k)}$ is a learned (or fixed) scalar parameter. The use of an MLP within the update rule, Equation (18), is a key distinction from models like GCN and GAT, which typically use a single linear transformation (e.g., $W^{(l)}$ in Equation (12)). This allows GIN to learn more complex, non-linear aggregation functions, granting it greater expressive power. By combining a tunable self-loop weight with sum aggregation of neighbors, GIN can produce distinct embeddings for different multisets of neighbor features.

The key theoretical insight is that GIN attains the maximal representational power achievable by message-passing GNNs: its aggregation is injective, so it maps any two different multisets of node features to different representations. Specifically, sum pooling over multisets of (countable) features can realize injective multiset functions, and the universal approximation property of MLPs allows GIN to learn such functions.

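For graph-level tasks, GIN employs a readout that concatenates graph-level summaries from all layers:

$$h_G = \text{CONCAT}\left( \text{READOUT}\left( \{ h_v^{(k)} : v \in G \} \right) \,\big|\, k = 0, 1, \dots, K \right) \tag{19}$$

Combined with the injective update rule, this design makes GIN provably as powerful as the one-dimensional Weisfeiler–Lehman test in distinguishing graph structures. The enhanced expressiveness benefits applications like molecular property prediction, where distinguishing subtle structural differences is crucial for accurate predictions.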
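The role of sum aggregation can be checked on scalars. In the sketch below, two neighborhoods contain the same feature value with different multiplicities: a GIN-style sum update tells them apart, whereas a mean-based update maps both to the same output. The small MLP has hand-picked weights and is a hypothetical stand-in for a learned one.

```python
def mlp(x):
    """Hypothetical fixed 2-layer MLP on scalars (weights chosen by hand, not learned)."""
    hidden = [max(0.0, 1.0 * x - 0.5), max(0.0, -1.0 * x + 2.0)]
    return 2.0 * hidden[0] - 1.0 * hidden[1] + 0.1


def gin_update(h_v, neighbor_feats, eps=0.0):
    """GIN update: MLP((1 + eps) * h_v + sum of neighbor features)."""
    return mlp((1.0 + eps) * h_v + sum(neighbor_feats))


def mean_update(h_v, neighbor_feats):
    """A mean-aggregation update for comparison (GCN/GraphSAGE-mean style)."""
    return mlp(h_v + sum(neighbor_feats) / len(neighbor_feats))


# Two neighborhoods with the same feature value but different multiplicities.
small, large = [1.0], [1.0, 1.0]
print(gin_update(0.0, small), gin_update(0.0, large))    # differ: sum sees multiplicity
print(mean_update(0.0, small), mean_update(0.0, large))  # identical: mean does not
```

No choice of MLP can repair the mean-based update here, because the collision happens before the MLP is applied; this is why the aggregator, not the MLP alone, determines expressive power.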

Graph Transformers. Graph Transformers [36] generalize the Transformer architecture to handle graph-structured data by adapting self-attention mechanisms to respect graph topology. Unlike traditional GNNs that aggregate information only from local neighbors, graph Transformers can model long-range dependencies by allowing attention across the entire graph or within a defined neighborhood. To preserve structural information, they incorporate positional encodings derived from Laplacian eigenvectors, which generalize the sinusoidal encodings used in NLP Transformers. These encodings help the model distinguish node positions and capture distance-aware patterns in the graph.

To improve training efficiency and stability, graph Transformers replace LayerNorm with BatchNorm, which the authors report yields faster convergence and better generalization on graph tasks. Additionally, for graphs with edge attributes, such as molecules or knowledge graphs, the model integrates edge features directly into the attention mechanism. By modifying attention scores based on edge embeddings, the model enhances its ability to leverage pairwise relationships. Although computationally demanding, graph Transformers are particularly effective when the sparse graph structure must be respected while long-range semantic interactions also matter, as in document graphs or molecular property prediction.

4. GNN-RL Methods and Algorithms

Building on the introduction of GNNs and RL, this section focuses on a review of the state-of-the-art GNN-RL methods and algorithms. First, we explore how GNNs enhance key elements of RL such as state representation, action selection, and reward design. Then, we examine their role in enabling inter-agent communication and coordination, followed by system-level training and evaluation strategies.

4.1. MARL Elements Enhanced by GNNs

This section examines how GNNs transform the core elements of MARL: state representation, action selection, and reward design. For each element, we show how GNN integration addresses fundamental multi-agent coordination challenges while maintaining computational tractability. Table 1 summarizes these GNN-enhanced MARL methods.

Table 1.

Summary of the methods for the enhanced MARL elements by GNNs.

4.1.1. State Representation in GNN-Enhanced MARL

State representation in MARL faces the challenge of capturing both individual agent states and complex interactions within dynamic environments [26]. GNN integration introduces novel approaches leveraging inherent graph structures in multi-agent systems, moving beyond traditional vector representations to enable richer coordination modeling [39,55].

The state representation methods can be systematically classified across multiple dimensions based on their underlying network architectures, learning paradigms, and coordination mechanisms. These approaches span from fundamental graph convolutional networks (GCNs) [31] to advanced architectures including hierarchical attention networks [38], partially equivariant systems [39], and hypergraph neural networks [41]. The classification reveals three primary architectural categories: spectral-based methods that provide Laplacian-based neighbor aggregation [31], attention-based approaches that enable selective information processing through learnable attention mechanisms [33,38,42], and advanced specialized architectures including inductive learning methods [37], symmetry-aware networks [39], and hypergraph-based systems [41]. Table 2 provides a comprehensive comparison of these methods across network architectures, learning paradigms, coordination mechanisms, theoretical foundations, and scalability characteristics.

Table 2.

State representation methods in GNN-enhanced MARL.

The evolution from traditional vector representations to graph-based encoding represents a paradigm shift in MARL. Early approaches struggled with exponential state space growth, while modern GNN-based methods naturally model spatial-temporal relationships through dynamic graph structures, enabling efficient neighbor aggregation without full enumeration of the global state space.

Graph-based encoding transforms environments into structured representations in which agents are represented as nodes and their interactions as edges. The environment can be modeled as a graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$, where $\mathcal{V}$ denotes the set of nodes (agents) and $\mathcal{E}$ the set of edges (interactions), enabling localized and topology-aware feature learning.
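One common way to obtain $\mathcal{E}$ in spatial multi-agent tasks is to connect agents that lie within a communication range of each other. The sketch below assumes 2-D positions and a fixed range; both the rule and the parameter name `comm_range` are illustrative assumptions rather than a construction prescribed by the surveyed methods, which may instead use k-nearest neighbors or task-specific relations.

```python
import math


def radius_graph(positions, comm_range):
    """Connect agents whose Euclidean distance is at most comm_range (undirected)."""
    n = len(positions)
    neighbors = {i: [] for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(positions[i], positions[j]) <= comm_range:
                neighbors[i].append(j)
                neighbors[j].append(i)
    return neighbors


def edge_list(neighbors):
    """Edge set E as sorted (i, j) pairs with i < j."""
    return sorted((i, j) for i, nbrs in neighbors.items() for j in nbrs if i < j)


# Three agents: two within communication range of each other, one isolated.
agents = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)]
g = radius_graph(agents, comm_range=1.5)
print(g)             # {0: [1], 1: [0], 2: []}
print(edge_list(g))  # [(0, 1)]
```

Recomputing the graph at every timestep as agents move yields the dynamic topologies discussed above; the all-pairs loop is quadratic in the number of agents, so large teams typically use spatial indexing instead.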

Graph convolutional network (GCN). A standard graph convolutional network (GCN) [31] performs layer-wise propagation using a message aggregation scheme based on the graph Laplacian. Specifically, the node representations are updated according to:

$$H^{(l+1)} = \sigma\left( \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)} \right) \tag{20}$$

where $\tilde{A} = A + I$ is the adjacency matrix with added self-connections, $\tilde{D}$ is the degree matrix of $\tilde{A}$, $H^{(l)}$ is the node feature matrix at layer $l$, $W^{(l)}$ is the trainable weight matrix, and $\sigma$ is an activation function such as ReLU. This update rule is identical to the foundational GCN propagation mechanism previously introduced in Equation (12), which forms the basis for many advanced graph-based MARL models. A well-documented issue with GCNs is the tendency towards over-smoothing, where repeated neighborhood averaging can make node representations overly similar after several layers. For state representation, this may cause distinct agent states to become indistinguishable, potentially hindering fine-grained policy learning.
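Over-smoothing is easy to reproduce. The sketch below repeatedly applies the weight-free normalized propagation to a hypothetical ring of four agents in which only agent 0 starts with a distinctive state; after a few dozen layers every agent holds essentially the same value. Dropping the weights and nonlinearity is a simplification chosen to isolate the averaging effect.

```python
import math


def normalized_propagate(adj, x):
    """One weight-free GCN propagation step: D~^{-1/2} (A + I) D~^{-1/2} x."""
    n = len(adj)
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_tilde]
    return [sum(a_tilde[i][j] * x[j] / math.sqrt(deg[i] * deg[j]) for j in range(n))
            for i in range(n)]


# A 4-agent ring: every agent has two neighbors (degree 3 after self-loops).
ring = [[0, 1, 0, 1],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [1, 0, 1, 0]]
x = [1.0, 0.0, 0.0, 0.0]  # only agent 0 starts with a distinctive state
for layer in range(1, 31):
    x = normalized_propagate(ring, x)
    if layer in (1, 5, 30):
        print(layer, [round(v, 4) for v in x])  # values approach 0.25 for every agent
```

On this regular graph the features converge to a constant; on irregular graphs they converge instead to values proportional to $\sqrt{\tilde{d}_i}$, so agent identity is still largely washed out. Common mitigations include using few layers, residual connections, or attention-based weighting.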

Multi-agent graph embedding-based coordination (MAGEC). Multi-agent graph embedding methods enhance continuous-space representation by constructing spatial graphs that encode agent–obstacle–target relations [37]. In such spatial graphs, nodes represent agents and relevant environmental entities. To learn coordination policies over this representation, GNNs such as GraphSAGE [32] are applied:

$$h_i^{(l+1)} = \sigma\left(W^{(l)} \cdot \left[\, h_i^{(l)} \,\Vert\, \mathrm{AGG}^{(l)}\left(\left\{ h_j^{(l)} : j \in \mathcal{N}(i) \right\}\right) \right]\right).$$

Here, $\mathrm{AGG}^{(l)}(\cdot)$ denotes a neighborhood aggregation function (e.g., a mean, LSTM, or pooling aggregator), $\mathcal{N}(i)$ is the sampled neighborhood of node $i$, and $\Vert$ denotes concatenation. This formulation directly applies the inductive GraphSAGE framework from Equation (13), where the aggregator function learns to generate embeddings from sampled local neighborhoods. This spatial-graph plus GraphSAGE pipeline improves coordination robustness by leveraging spatial locality and inductive generalization.
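The GraphSAGE step can be sketched as follows, assuming the mean aggregator and the full neighborhood in place of the fixed-size neighbor sampling used in [32]; the entity names and weights are hypothetical. The second call illustrates the inductive property: a newly added agent is embedded with the same shared weights, and an agent whose neighborhood did not change keeps its embedding.

```python
import math


def mean_aggregate(features, neighbors):
    """GraphSAGE-mean AGG: element-wise average of the neighbors' feature vectors."""
    dim = len(next(iter(features.values())))
    if not neighbors:
        return [0.0] * dim
    return [sum(features[j][k] for j in neighbors) / len(neighbors) for k in range(dim)]


def sage_layer(features, adjacency, W):
    """h_i' = l2_normalize(ReLU(W [h_i || AGG({h_j : j in N(i)})])) for every node i."""
    out = {}
    for i, h_i in features.items():
        z = h_i + mean_aggregate(features, adjacency[i])  # list '+' concatenates
        h = [max(0.0, sum(w * x for w, x in zip(row, z))) for row in W]
        norm = math.sqrt(sum(v * v for v in h)) or 1.0    # avoid division by zero
        out[i] = [v / norm for v in h]
    return out


# Hypothetical spatial graph: one agent linked to an obstacle and a target.
feats = {"agent": [1.0, 0.0], "obstacle": [0.0, 1.0], "target": [0.5, 0.5]}
adj = {"agent": ["obstacle", "target"], "obstacle": ["agent"], "target": ["agent"]}
W = [[0.5, 0.1, 0.3, 0.2], [0.1, 0.4, 0.2, 0.6]]   # shared weights, shape (2, 2 * 2)
emb = sage_layer(feats, adj, W)

# Inductive use: a new agent joins and is embedded with the same W, without retraining.
feats_new = dict(feats, agent2=[0.2, 0.8])
adj_new = {k: list(v) for k, v in adj.items()}
adj_new["agent2"] = ["target"]
adj_new["target"].append("agent2")
emb_new = sage_layer(feats_new, adj_new, W)
```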

Multi-agent state aggregation enhancement. Traditional state aggregation in multi-agent systems suffers from the curse of dimensionality. GNN-based models address this by enabling structured and localized message passing. In particular, GCNs enhance state aggregation by embedding agent states within relational graphs, allowing each agent to integrate neighborhood information without full enumeration of the global state space. To extract compact global features, a permutation-invariant pooling function is applied over the final node embeddings:

$$h_{\mathcal{G}} = \mathrm{POOL}\left(\left\{ z_i : i \in \mathcal{V} \right\}\right),$$

yielding a fixed-size global state descriptor, where $z_i$ is the final embedding of node $i$ and $\mathrm{POOL}(\cdot)$ is, for example, a sum, mean, or max operator. To further improve adaptivity, graph attention networks (GAT) employ learnable attention coefficients $\alpha_{ij}$ to prioritize important neighbors [33]:

$$h_i' = \sigma\left(\sum_{j \in \mathcal{N}(i)} \alpha_{ij} W h_j\right),$$

which allows agents to adaptively focus on the most relevant peers under dynamic conditions. The calculation of these attention weights is the core mechanism of GATs, as detailed earlier in Equations (14) and (15). While GAT’s attention offers expressive weighting, its performance can be sensitive to the graph topology. In sparse neighborhoods, attention weights may become concentrated or noisy, leading to state representations that are unstable or disproportionately influenced by a small subset of neighbors.
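A minimal single-head sketch of the GAT coefficients, followed by a mean-pooling readout, is given below. It assumes LeakyReLU scoring with slope 0.2 as in the original GAT formulation, omits the outer nonlinearity σ, and uses arbitrary illustrative parameters. Shuffling the node order leaves the pooled descriptor unchanged, which is the permutation invariance required of the pooling function.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def gat_layer(H, neighbors, W, a):
    """Single-head GAT layer (outer nonlinearity omitted).

    e_ij = LeakyReLU(a^T [W h_i || W h_j]); alpha_ij = softmax over j in N(i); h_i' = sum_j alpha_ij W h_j.
    """
    Wh = [[sum(w * x for w, x in zip(row, h)) for row in W] for h in H]
    out, alphas = [], []
    for i, nbrs in enumerate(neighbors):
        scores = [leaky_relu(sum(p * q for p, q in zip(a, Wh[i] + Wh[j]))) for j in nbrs]
        m = max(scores)                                   # shift for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alpha = [e / total for e in exps]
        alphas.append(dict(zip(nbrs, alpha)))
        out.append([sum(al * Wh[j][k] for al, j in zip(alpha, nbrs)) for k in range(len(W))])
    return out, alphas


def mean_pool(H):
    """Permutation-invariant readout: average of the node embeddings."""
    return [sum(col) / len(H) for col in zip(*H)]


H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
neighbors = [[0, 1, 2], [1, 0], [2, 0]]           # each list includes the node itself
W = [[1.0, 0.5], [-0.5, 1.0]]
a = [0.3, -0.2, 0.5, 0.1]                         # length 2 * output dimension
out, alphas = gat_layer(H, neighbors, W, a)
g = mean_pool(out)                                # fixed-size global descriptor
g_shuffled = mean_pool([out[2], out[0], out[1]])  # same nodes, different order
```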

Hierarchical graph attention network (HGAT). To address scalability and relational complexity, Ryu et al. [38] propose a Hierarchical Graph Attention Network (HGAT) with a two-level attention architecture. At the lower level, node-level attention models fine-grained interactions. At the higher level, hyper-node attention aggregates representations of predefined agent groups. The local embedding of an agent $i$ is computed using attention-weighted message passing:

$$h_i = \sigma\left(\sum_{j \in \mathcal{N}(i)} \alpha_{ij} W h_j\right),$$

where $\alpha_{ij}$ is the attention coefficient between agents $i$ and $j$. This local update rule directly mirrors the GAT aggregation mechanism previously shown in Equation (16). Combined with hyper-node attention over groups, this structure enables HGAT to generate context-aware agent embeddings that are invariant to the number of agents. The effectiveness of HGAT’s hierarchical representation, however, is heavily dependent on the predefined agent grouping strategy. An improperly designed hierarchy can create information bottlenecks or misrepresent the true coordination structure, potentially leading to suboptimal state abstractions.

Equivariant and symmetry-aware state representations (PEnGUiN). Recent advances leverage structural symmetries to enhance sample efficiency. McClellan et al. [39] introduce partially equivariant graph neural networks (PEnGUiN), which selectively enforce equivariance to agent permutations. Each agent’s representation is decomposed into equivariant and invariant components: $h_i = \left(h_i^{\mathrm{eq}}, h_i^{\mathrm{inv}}\right)$. The framework introduces a learnable symmetry score $\beta \in [0, 1]$ to blend fully equivariant and non-equivariant updates:

$$h_i^{(l+1)} = \beta\, \phi_{\mathrm{eq}}\left(h^{(l)}\right)_i + (1 - \beta)\, \phi_{\mathrm{neq}}\left(h^{(l)}\right)_i,$$

where $\phi_{\mathrm{eq}}$ and $\phi_{\mathrm{neq}}$ denote the equivariant and non-equivariant message-passing updates, respectively. This architecture generalizes both equivariant GNNs ($\beta = 1$) and standard GNNs ($\beta = 0$), adapting dynamically to varying degrees of environmental symmetry. While partial equivariance enhances sample efficiency in symmetric tasks, it remains a strong inductive bias. In environments with subtle but critical asymmetries, the equivariant component of the representation might overly constrain the policy space, making it difficult for agents to learn specialized, non-symmetric behaviors.
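The role of the symmetry score can be illustrated with a deliberately simplified sketch. It does not reproduce the PEnGUiN architecture of [39]; instead it assumes a hypothetical linear blend of a shared-weight, permutation-equivariant update and an agent-indexed (non-equivariant) update, with a fixed score `beta` standing in for the learnable one. The check compares f(PH) with Pf(H) for a permutation P: the gap vanishes at `beta = 1` and grows linearly as `beta` decreases.

```python
def equivariant_update(H, w_self, w_agg):
    """Shared weights for every agent plus a mean over all agents: permutation-equivariant."""
    n = len(H)
    mean = [sum(col) / n for col in zip(*H)]
    return [[w_self * x + w_agg * m for x, m in zip(h, mean)] for h in H]


def agent_specific_update(H, scales):
    """One weight per agent slot: deliberately not permutation-equivariant."""
    return [[scales[i] * x for x in h] for i, h in enumerate(H)]


def blended_update(H, beta, w_self=0.8, w_agg=0.5, scales=(1.0, 2.0, 3.0)):
    """Hypothetical blend beta * equivariant + (1 - beta) * non-equivariant (3 agents here)."""
    eq = equivariant_update(H, w_self, w_agg)
    neq = agent_specific_update(H, scales)
    return [[beta * e + (1.0 - beta) * q for e, q in zip(re, rq)] for re, rq in zip(eq, neq)]


def permute(H, perm):
    return [H[p] for p in perm]


def max_gap(A, B):
    return max(abs(x - y) for ra, rb in zip(A, B) for x, y in zip(ra, rb))


H = [[1.0, 2.0], [3.0, 0.0], [0.5, 1.5]]
perm = [2, 0, 1]
for beta in (1.0, 0.5, 0.0):
    # Equivariance test: f(P H) should equal P f(H).
    gap = max_gap(blended_update(permute(H, perm), beta), permute(blended_update(H, beta), perm))
    print(f"beta={beta}: equivariance gap {gap:.3f}")
```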

Predictive and hypergraph state representations. Graph-assisted predictive state representations (GAPSR) extend single-agent PSR to multi-agent partially observable systems by leveraging agent connectivity graphs [40]. The framework constructs primitive predictive states $q_{ij}$ between agent pairs $(i, j)$. The final predictive representation of agent $i$ is obtained through graph-based aggregation over its neighbors: $q_i = \mathrm{AGG}\left(\left\{ q_{ij} : j \in \mathcal{N}(i) \right\}\right)$. This approach enables decentralized agents to capture interactions while avoiding the complexity of joint state estimation.

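Hypergraph coordination networks (HYGMA). HYGMA addresses multi-agent coordination through dynamic spectral clustering and hypergraph neural networks to capture higher-order agent relationships [41]. The framework constructs hypergraphs by solving a normalized cut minimization problem, which in its standard spectral relaxation reads:

$$\min_{U \in \mathbb{R}^{n \times k}} \operatorname{Tr}\left(U^\top L U\right) \quad \text{s.t.} \quad U^\top D U = I,$$

where $L = D - A$ is the graph Laplacian of the agent affinity graph, $D$ is its degree matrix, and $k$ is the number of groups (hyperedges). The resulting hypergraph enables attention-enhanced information processing through hypergraph convolution:

$$X^{(l+1)} = \sigma\left(D_v^{-1/2}\, \tilde{H}\, W_e\, D_e^{-1}\, \tilde{H}^\top D_v^{-1/2} X^{(l)} \Theta^{(l)}\right),$$

where the entries of $\tilde{H}$ represent learned attention coefficients between agents and hyperedges, $W_e$ is the diagonal hyperedge weight matrix, $D_v$ and $D_e$ are the vertex and hyperedge degree matrices, and $\Theta^{(l)}$ is a trainable weight matrix. Representing states with hypergraphs allows for capturing higher-order relationships, but this comes at the cost of increased computational complexity. The performance is critically dependent on the hyperedge construction step (e.g., spectral clustering), which is often a non-trivial and computationally intensive preprocessing task.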
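The hypergraph convolution can be sketched in pure Python as below. The group assignment is hard-coded in place of the spectral clustering step, the incidence entries are plain 0/1 values (learned attention coefficients would replace them in an attention-enhanced variant such as HYGMA), and the nonlinearity is omitted; all values are illustrative. The checks show that agents with identical memberships and features receive identical outputs, and that an agent's output is unaffected by agents outside its hyperedges.

```python
import math


def hypergraph_conv(X, H, edge_w, Theta):
    """HGNN-style step X' = Dv^{-1/2} H W_e De^{-1} H^T Dv^{-1/2} X Theta (nonlinearity omitted).

    H[v][e] is the incidence (or attention weight) of node v in hyperedge e.
    """
    n, m = len(H), len(H[0])
    dv = [sum(edge_w[e] * H[v][e] for e in range(m)) for v in range(n)]   # vertex degrees
    de = [sum(H[v][e] for v in range(n)) for e in range(m)]               # hyperedge degrees
    P = [[sum(H[u][e] * edge_w[e] * H[v][e] / de[e] for e in range(m)) / math.sqrt(dv[u] * dv[v])
          for v in range(n)] for u in range(n)]
    XT = [[sum(x * t for x, t in zip(row, col)) for col in zip(*Theta)] for row in X]
    return [[sum(P[u][v] * XT[v][k] for v in range(n)) for k in range(len(Theta[0]))]
            for u in range(n)]


# Four agents in two hypothetical groups (hyperedges): {0, 1, 2} and {2, 3}.
H = [[1.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
edge_w = [1.0, 1.0]
Theta = [[1.0, 0.0], [0.0, 1.0]]
X = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
out = hypergraph_conv(X, H, edge_w, Theta)

X_changed = [row[:] for row in X]
X_changed[3] = [9.0, -9.0]                     # agent 3 shares no hyperedge with agent 0
out_changed = hypergraph_conv(X_changed, H, edge_w, Theta)
```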

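Relationship modeling enhancement via graph edges. Liu et al. [42] propose a two-stage graph attention network (G2ANet) for automatic game abstraction. G2ANet constructs an agent-coordination graph where agents are initially fully connected, then uses hard attention to identify and remove unrelated edges, followed by soft attention to learn importance weights. Hard attention employs a Bi-LSTM, and its weight is computed as $W_h^{i,j} = \mathrm{gum}\left(f\left(\mathrm{LSTM}(h_i, h_j)\right)\right)$, where $f(\cdot)$ is a fully connected layer and $\mathrm{gum}(\cdot)$ denotes the Gumbel-softmax function, which yields a near-binary edge decision while remaining differentiable. Soft attention then uses query-key mechanisms: $W_s^{i,j} \propto \exp\left(h_j^\top W_k^\top W_q h_i\, W_h^{i,j}\right)$. The resulting sub-graph for each agent is processed by GNNs to obtain joint encodings $x_i = \sum_{j \neq i} W_h^{i,j} W_s^{i,j} h_j$.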

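Relational state abstraction [43] transforms observations into spatial graphs where entities are connected through directional spatial relations. The method employs R-GCNs to aggregate information, $h_i^{(l+1)} = \sigma\left(W_0^{(l)} h_i^{(l)} + \sum_{r \in \mathcal{R}} \sum_{j \in \mathcal{N}_i^r} \frac{1}{c_{i,r}} W_r^{(l)} h_j^{(l)}\right)$, where $\mathcal{N}_i^r$ denotes the neighbors of entity $i$ under spatial relation $r \in \mathcal{R}$ and $c_{i,r}$ is a normalization constant; because the relations are relative rather than absolute, the resulting representation is translation invariant. This update rule is a direct application of the R-GCN framework described in Equation (17), using relation-specific weight matrices to process different spatial relationships. A max-pooling operation generates fixed-size representations for the critic network, demonstrating significant sample efficiency improvements.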
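The relation-specific aggregation and max-pooling readout can be sketched as follows. The entities, the two spatial relations (`left_of`, `above`), and all weights are hypothetical, and the normalization constant is taken to be c_{i,r} = |N_i^r|. Swapping the relation labels on the same edges changes the agent's embedding, which is what a single shared weight matrix could not express.

```python
def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rgcn_layer(H, edges, W_rel, W_self):
    """h_i' = ReLU(W_0 h_i + sum_r sum_{j in N_i^r} W_r h_j / c_{i,r}), with c_{i,r} = |N_i^r|.

    edges: (source j, relation r, target i) triples.
    """
    counts = {}
    for _, r, i in edges:
        counts[(i, r)] = counts.get((i, r), 0) + 1
    out = [matvec(W_self, h) for h in H]
    for j, r, i in edges:
        msg = matvec(W_rel[r], H[j])          # relation-specific transformation
        out[i] = [o + m / counts[(i, r)] for o, m in zip(out[i], msg)]
    return [[max(0.0, v) for v in row] for row in out]


def max_pool(H):
    """Fixed-size readout, independent of the number of entities."""
    return [max(col) for col in zip(*H)]


# Hypothetical entities: 0 = agent, 1 = key, 2 = door.
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_rel = {"left_of": [[1.0, 0.0], [0.0, 0.0]], "above": [[0.0, 0.0], [0.0, 1.0]]}
W_self = [[0.5, 0.0], [0.0, 0.5]]
out = rgcn_layer(H, [(1, "left_of", 0), (2, "above", 0)], W_rel, W_self)
out_swapped = rgcn_layer(H, [(1, "above", 0), (2, "left_of", 0)], W_rel, W_self)
summary = max_pool(out)
```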

Multimodal graph fusion. Graph-based multimodal fusion demonstrates superior integration capabilities by explicitly modeling structural relationships between modalities [44]. D’Souza et al. propose a multiplexed GNN approach that creates targeted encodings for each modality through autoencoders, with multiplexed graph layers representing different relationships. This framework processes different data types and addresses challenges where not all modalities are present for all samples.

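Generalized graph drawing for Laplacian state representations. Wang et al. [45] develop an improved Laplacian state representation learning method by modeling states and transitions as graph structures. The approach views the MDP as a graph $\mathcal{G} = (\mathcal{S}, W)$, whose vertices are states and whose symmetric, non-negative weight matrix $W$ encodes transitions. The graph Laplacian is defined as $L = D - W$, where $D$ is the diagonal degree matrix with $D_{ss} = \sum_{s'} W_{ss'}$. Notably, this is the unnormalized graph Laplacian, whereas the propagation in GCNs, as seen in Equation (12), typically utilizes a symmetrically normalized version for stable feature propagation. The $d$-dimensional Laplacian representation of a state $s$ is $\phi(s) = \left(e_1(s), \ldots, e_d(s)\right)$, constructed from the $d$ smallest eigenvectors $e_1, \ldots, e_d$ of $L$. To address the non-uniqueness of the standard spectral graph drawing objective

$$\min_{u_1, \ldots, u_d} \sum_{i=1}^{d} u_i^\top L u_i \quad \text{s.t.} \quad u_i^\top u_j = \delta_{ij},$$

whose minimizers are determined only up to an arbitrary rotation of the eigenvector basis, the authors propose a generalized objective with decreasing coefficients:

$$\min_{u_1, \ldots, u_d} \sum_{i=1}^{d} c_i\, u_i^\top L u_i \quad \text{s.t.} \quad u_i^\top u_j = \delta_{ij},$$

where $c_1 > c_2 > \cdots > c_d > 0$. This generalized objective has the $d$ smallest eigenvectors as its unique global minimizer (up to sign), ensuring a faithful approximation to the ground truth Laplacian representation.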
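The non-uniqueness issue and its resolution can be checked numerically on a path graph, whose Laplacian eigenvectors have a closed form (a cosine basis), so no eigen-solver is needed. The sketch rotates the two smallest eigenvectors by 45 degrees: the standard objective (all coefficients equal to 1) cannot distinguish the rotated pair from the eigenvectors, whereas decreasing coefficients, here c = (2, 1) chosen purely for illustration, assign the eigenvectors a strictly lower value.

```python
import math


def path_laplacian(n):
    """Unnormalized Laplacian L = D - A of the path graph 0 - 1 - ... - (n - 1)."""
    L = [[0.0] * n for _ in range(n)]
    for i in range(n - 1):
        L[i][i] += 1.0
        L[i + 1][i + 1] += 1.0
        L[i][i + 1] -= 1.0
        L[i + 1][i] -= 1.0
    return L


def path_eigenvector(n, k):
    """Unit-norm eigenvector k of the path Laplacian; its eigenvalue is 2 - 2 cos(pi k / n)."""
    v = [math.cos(math.pi * k * (i + 0.5) / n) for i in range(n)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def quad(L, u):
    """Quadratic form u^T L u."""
    n = len(u)
    return sum(u[i] * L[i][j] * u[j] for i in range(n) for j in range(n))


def drawing_objective(L, us, coeffs):
    """sum_i c_i u_i^T L u_i; coeffs of all ones give the standard spectral drawing objective."""
    return sum(c * quad(L, u) for c, u in zip(coeffs, us))


def rotate(u1, u2, theta):
    """Rotate an orthonormal pair within its span; the result is still orthonormal."""
    c, s = math.cos(theta), math.sin(theta)
    return ([c * a + s * b for a, b in zip(u1, u2)],
            [-s * a + c * b for a, b in zip(u1, u2)])


n = 6
L = path_laplacian(n)
e1, e2 = path_eigenvector(n, 0), path_eigenvector(n, 1)   # the two smallest eigenvectors
r1, r2 = rotate(e1, e2, math.pi / 4)

standard = (drawing_objective(L, [e1, e2], [1, 1]), drawing_objective(L, [r1, r2], [1, 1]))
generalized = (drawing_objective(L, [e1, e2], [2, 1]), drawing_objective(L, [r1, r2], [2, 1]))
print("standard   :", [round(v, 4) for v in standard])     # equal: rotation is invisible
print("generalized:", [round(v, 4) for v in generalized])  # eigenvectors strictly lower
```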

Summary

The evolution of state representation architectures reflects a consistent shift toward capturing richer structural information in multi-agent environments. GCN-based models rely on fixed, degree-normalized aggregation across local neighborhoods; GAT replaces this with learned, selective neighbor weighting; and hypergraph neural networks and hierarchical models such as HGAT go further by modeling higher-order relations and multi-scale dependencies. GraphSAGE, by contrast, emphasizes inductive, sampling-based aggregation that generalizes to unseen agents and graphs rather than selective attention. Alongside architectural advancements, the theoretical foundations behind these methods, ranging from spectral graph theory to equivariant representation learning, remain heterogeneous and often incomplete. This inconsistency highlights the need for more unified and interpretable frameworks to support generalization across diverse multi-agent structures. Table 3 provides a summary of the advantages and limitations of different categories of state representation methods.

Table 3.

Advantages and limitations of key state representation methods.

In practice, the selection of state representation methods is highly task-dependent. GCN-based methods are appealing in medium-scale systems with relatively stable interaction topologies, but their uniform aggregation limits performance when neighbor importance is uneven. Attention-based approaches (e.g., GAT, G2ANet) provide stronger adaptability to dynamic or heterogeneous environments by selectively weighting neighbors, though at the cost of increased computation. Hypergraph-based models (e.g., HYGMA) naturally capture higher-order or group-level interactions, making them suitable for large-scale systems, yet they require careful hyperedge construction. Symmetry-aware networks (e.g., PEnGUiN) are particularly effective when environments exhibit agent interchangeability or partial symmetry, improving sample efficiency and generalization but offering less benefit in asymmetric domains. Hierarchical models (e.g., HGAT) strike a balance between scalability and structure by integrating both local and group-level interactions, though they depend on meaningful group partitioning for effectiveness.

4.1.2. Action Selection in GNN-Enhanced MARL

GNN-enhanced MARL frameworks fundamentally transform action selection by leveraging graph structures to process agent interactions and environmental information. Based on the core mechanisms that differentiate action generation approaches, we identify three primary categories of GNN-enhanced action selection strategies:

- Information-Aggregation-Based Action Selection: Methods that focus on how agents collect and process neighborhood information during action generation, including attention-based critic mechanisms [46], graph-based attention networks [42], and scalable exponential topology communication [47].

- Decomposition-Based Action Selection: Approaches that address exponential joint action spaces by factorizing complex multi-agent decisions, including heterogeneous value decomposition with graph neural networks [48] and effect-based role decomposition [49].

- Hierarchical and Heterogeneous Decision Making: Systems that structure action selection across multiple organizational levels and adapt to different agent capabilities, including hypergraph-based group coordination [41] and class-specific relational learning for heterogeneous agents [50].

Table 4 categorizes representative methods according to these action selection paradigms, highlighting their core innovations and scalability characteristics.

Table 4.

Representative GNN-enhanced action selection methods.

Information-Aggregation-Based Action Selection

Information-aggregation-based methods focus on how agents collect and process information from their neighborhood to generate actions. The core innovation lies in developing sophisticated mechanisms for weighting and combining neighbor information.

Multi-agent actor–attention-critic (MAAC). MAAC [46] enhances action selection through an attention-based critic design. The critic for each agent learns by selectively attending to information from other agents. For agent $i$, the Q-value function is computed as:

$$Q_i^{\psi}(o, a) = f_i\left(g_i(o_i, a_i),\, x_i\right),$$

where $g_i$ is an embedding function, $f_i$ is a multi-layer perceptron, and $x_i$ represents the contribution from other agents through the attention mechanism:

$$x_i = \sum_{j \neq i} \alpha_j v_j = \sum_{j \neq i} \alpha_j\, h\left(V g_j(o_j, a_j)\right),$$

with $V$ a shared value matrix and $h$ an element-wise nonlinearity. The attention weight $\alpha_j$ is calculated using a query-key system:

$$\alpha_j \propto \exp\left(e_j^\top W_k^\top W_q e_i\right),$$

where $e_i = g_i(o_i, a_i)$ is agent $i$’s embedding. This query-key mechanism shares the same principle as the attention score calculation in GAT, shown in Equation (14), by learning pairwise importance scores. This graph-inspired information aggregation provides more accurate value estimates and leads to improved action selection compared to methods that simply concatenate all agents’ information. While the attention-based critic is expressive, its requirement to process information from all other agents can present a scalability challenge, as the critic’s input and the attention computation grow with the number of agents.
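A minimal sketch of the attention context x_i for one agent is shown below, following the form α_j ∝ exp(e_j^T W_k^T W_q e_i) over all j ≠ i. It uses a single head, takes ReLU as the value nonlinearity h, and uses identity matrices for W_q, W_k, and V purely for illustration; MAAC itself uses multiple heads and learned parameters. Each call scores all N − 1 other agents, so computing contexts for every agent costs O(N^2) score evaluations, which is the scalability concern raised above.

```python
import math


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def attention_context(embeddings, i, W_q, W_k, V):
    """Single-head MAAC-style context for agent i.

    alpha_j is proportional to exp((W_k e_j) . (W_q e_i)) = exp(e_j^T W_k^T W_q e_i) over j != i,
    and x_i = sum_j alpha_j * ReLU(V e_j).
    """
    query = matvec(W_q, embeddings[i])
    others = [j for j in range(len(embeddings)) if j != i]
    scores = [sum(k * q for k, q in zip(matvec(W_k, embeddings[j]), query)) for j in others]
    m = max(scores)                                   # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alphas = {j: e / total for j, e in zip(others, exps)}
    x_i = [0.0] * len(V)
    for j in others:
        value = [max(0.0, z) for z in matvec(V, embeddings[j])]   # v_j = h(V e_j), h = ReLU
        x_i = [acc + alphas[j] * z for acc, z in zip(x_i, value)]
    return x_i, alphas


# Hypothetical embeddings e_j = g_j(o_j, a_j) for three agents; identity parameters for clarity.
E = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
I2 = [[1.0, 0.0], [0.0, 1.0]]
x0, alphas0 = attention_context(E, 0, I2, I2, I2)
```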

Graph-based multi-agent actor–critic network (G2ANet). G2ANet [42] addresses the scalability challenge through a two-stage attention mechanism. It utilizes hard attention to identify which agents need to interact and soft attention to learn the importance weights of these interactions. This graph-based game abstraction achieves significantly higher success rates than baselines in complex coordination tasks.

Exponential communication (ExpoComm). Exponential topology methods [47] enable scalable communication through rule-based graph structures. In the static exponential graph with $N$ agents, agent $i$ communicates with

$$\mathcal{N}_i = \left\{ (i + 2^k) \bmod N \;:\; k = 0, 1, \ldots, \lceil \log_2 N \rceil - 1 \right\}.$$

This topology ensures that a message can propagate between any pair of agents within $\lceil \log_2 N \rceil$ timesteps while each agent maintains only $\lceil \log_2 N \rceil$ communication links, so communication overhead grows only logarithmically with the number of agents, enabling coordination in systems with 100+ agents. This rule-based approach for defining a static communication topology contrasts with the data-driven, dynamic weighting found in attention-based models like GAT (Equation (16)), offering a trade-off between communication efficiency and adaptive interaction modeling. The primary trade-off for its high scalability is a lack of adaptivity; the static topology does not adjust to the dynamic state of the environment, which may be suboptimal in tasks where coordination needs are highly context-dependent.
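The logarithmic bound can be checked with a short sketch, assuming the static exponential rule in which agent i sends messages to agents (i + 2^k) mod N for k = 0, ..., ⌈log2 N⌉ − 1. A breadth-first search from one agent counts the communication rounds needed for its message to reach everyone; since any offset below N is a sum of distinct powers of two smaller than N, the count never exceeds ⌈log2 N⌉.

```python
from collections import deque


def exponential_neighbors(i, n):
    """Static exponential graph: agent i sends to (i + 2^k) mod n for k = 0, ..., ceil(log2 n) - 1."""
    num_links = (n - 1).bit_length()          # equals ceil(log2 n) for n >= 1
    return sorted({(i + 2 ** k) % n for k in range(num_links)})


def rounds_to_reach_all(n, source=0):
    """Communication rounds until a message from `source` has reached every agent (BFS depth)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in exponential_neighbors(u, n):
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return max(dist.values())


for n in (8, 100, 1000):
    print(n, "agents:", len(exponential_neighbors(0, n)), "links per agent,",
          rounds_to_reach_all(n), "rounds to reach all")
```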

Decomposition-Based Action Selection

Decomposition-based methods address the exponential growth of joint action spaces by breaking down complex multi-agent decisions into manageable components.

QMIX with graph neural networks (QMIX-GNN). QMIX-GNN [48] is a GNN-based heterogeneous MARL algorithm built on the QMIX framework. It inherits monotonic value factorization to enable decentralized execution while learning a centralized value function. To enable information sharing, the method introduces a GAT that aggregates observations from each agent’s k-nearest neighbors. This aggregation employs the same principles of learnable, input-dependent weights as described in the foundational GAT model (Equation (16)). This produces a fused team-level representation $\mathbf{z}$. Each agent then computes its individual Q-value by combining its projected local observation with the global team context:

$$Q_i(o_i, \cdot) = f\left(P_i\, o_i,\ \mathbf{z}\right),$$

where the agent-specific projection matrices $P_i$ handle heterogeneous feature spaces and $f$ denotes the combination network. During decentralized execution, agents select actions via $a_i = \arg\max_{a} Q_i(o_i, a)$. While inheriting the benefits of QMIX, this approach is also subject to its primary structural limitation: the monotonicity constraint $\partial Q_{\mathrm{tot}} / \partial Q_i \geq 0$. This assumption limits the class of joint action-value functions that can be represented, which may be insufficient for tasks with non-monotonic payoffs, where an agent’s best action depends on the actions chosen by others.
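The monotonicity constraint inherited from QMIX, and why it keeps decentralized greedy execution consistent with the centralized value, can be illustrated with a toy mixing network. The sketch assumes linear hypernetworks, fixed (not state-conditioned) biases, and arbitrary numbers, and it omits the GAT-based observation sharing of QMIX-GNN entirely. Because the absolute value keeps every mixing weight non-negative, each agent's independent argmax also maximizes Q_tot.

```python
import math


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def elu(x):
    return x if x > 0 else math.exp(x) - 1.0


def monotonic_mix(q, state, hyper_w1, hyper_w2, b1, b2):
    """Q_tot = w2 . ELU(W1 q + b1) + b2 with state-conditioned, non-negative W1 and w2.

    hyper_w1[h][a] and hyper_w2[h] act as toy linear hypernetworks on the global state;
    abs() enforces dQ_tot / dQ_a >= 0, i.e., the QMIX monotonicity constraint.
    """
    hidden_units = range(len(b1))
    W1 = [[abs(dot(hyper_w1[h][a], state)) for a in range(len(q))] for h in hidden_units]
    w2 = [abs(dot(hyper_w2[h], state)) for h in hidden_units]
    hidden = [elu(dot(W1[h], q) + b1[h]) for h in hidden_units]
    return dot(w2, hidden) + b2


state = [1.0, -0.5]
hyper_w1 = [[[0.3, 0.2], [-0.4, 0.1]], [[0.5, -0.6], [0.2, 0.2]]]   # 2 hidden units x 2 agents
hyper_w2 = [[0.7, 0.1], [-0.2, 0.9]]
b1, b2 = [0.1, -0.2], 0.05
agent_q = [[1.0, 2.5, 0.3], [0.2, -1.0, 0.9]]                       # per-agent Q over 3 actions


def q_tot(joint_action):
    return monotonic_mix([agent_q[i][a] for i, a in enumerate(joint_action)],
                         state, hyper_w1, hyper_w2, b1, b2)


# Centralized search over all 9 joint actions vs. decentralized per-agent greedy choice.
best_joint = max(((a0, a1) for a0 in range(3) for a1 in range(3)), key=q_tot)
greedy = tuple(row.index(max(row)) for row in agent_q)
```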

Role-based multi-agent deep reinforcement learning (RODE). RODE [49] decomposes the action selection problem by first learning effect-based action representations, then clustering actions into role-specific action spaces. The framework employs a bi-level hierarchical structure in which a role selector assigns roles and role policies operate within restricted action spaces, reducing policy search complexity. The performance of this framework is critically dependent on the quality of the learned action-effect representations and the resulting role clustering. In environments with highly stochastic action effects, the learned roles may not be distinct or meaningful.
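Role discovery by clustering action representations can be sketched with plain k-means, the clustering step RODE reports applying to its learned action representations. Here the effect vectors are hand-made placeholders rather than representations learned from predicted observations and rewards, and the initial centers are fixed for reproducibility; each resulting cluster defines one role's restricted action space.

```python
def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, init_centers, iters=20):
    """Plain k-means: assign points to the nearest center, then move each center to its cluster mean."""
    centers = [list(c) for c in init_centers]
    labels = []
    for _ in range(iters):
        labels = [min(range(len(centers)), key=lambda c: sq_dist(pt, centers[c])) for pt in points]
        for c in range(len(centers)):
            members = [pt for pt, lab in zip(points, labels) if lab == c]
            if members:                               # leave an empty cluster's center in place
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers


# Hypothetical 2-D action-effect vectors (placeholders for learned representations).
effects = {
    "move_north": [0.9, 0.1], "move_south": [1.0, 0.0], "move_east": [0.95, 0.05],
    "attack_1": [0.1, 0.9], "attack_2": [0.0, 1.0],
}
actions = list(effects)
labels, _ = kmeans([effects[a] for a in actions], [effects["move_north"], effects["attack_1"]])
roles = {}
for action, lab in zip(actions, labels):
    roles.setdefault(lab, []).append(action)   # each cluster = one role's restricted action space
```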

Hierarchical and Heterogeneous Decision Making

Hierarchical and heterogeneous methods structure action selection across multiple organizational levels, adapting to different agent capabilities and enabling decisions that consider both multi-scale coordination and agent diversity.

Hypergraph-based multi-agent coordination (HYGMA). HYGMA [41] enhances action selection through hypergraph-based group-aware representations. The method integrates hypergraph convolution features into both value-based and policy-based frameworks, $Q_i(o_i, a_i; \mathbf{g}_i)$ and $\pi_i(a_i \mid o_i, \mathbf{g}_i)$, where $\mathbf{g}_i$ is the group-aware representation generated by the hypergraph network and $o_i$ is agent $i$’s local observation. This approach achieves faster convergence and high win rates in challenging MARL benchmarks. Although hypergraphs capture higher-order team structures, the dynamic construction of hyperedges via spectral clustering is a computationally expensive step that must be performed regularly, which can pose a challenge for real-time applications.

Heterogeneous multi-agent graph Q-network (HMAGQ-Net). HMAGQ-Net [50] addresses action selection when agents have different capabilities. It employs relational graph convolutional networks (RGCN) to establish class-specific communication channels between different agent types. Through hierarchical feature aggregation across K-layer graph convolutions, agents aggregate neighbor information:

$$h_i^{(k+1)} = \sigma\left(W_0^{(k)} h_i^{(k)} + \sum_{r \in \mathcal{R}} \sum_{j \in \mathcal{N}_i^r} \frac{1}{c_{i,r}} W_r^{(k)} h_j^{(k)}\right),$$

where $r \in \mathcal{R}$ represents inter-class relations, $\mathcal{N}_i^r$ is the set of neighbors connected to agent $i$ via relation $r$, and $c_{i,r}$ is a normalization constant. This update mechanism is identical to the one introduced for relational graph convolutional networks in Equation (17), demonstrating its effectiveness in handling heterogeneous relationships. For class-specific policy learning, each agent class $c$ learns a specialized Q-function $Q_c\left(h_i^{(K)}, a_i\right)$, enabling personalized policies. A key requirement of this approach is the pre-specification of agent classes. In scenarios where agent roles are fluid or not easily categorized, this fixed class-based structure may be too rigid and limit the model’s adaptive capacity.

Summary

In GNN-enhanced MARL, action selection has gradually developed along four main directions: information aggregation, decomposition, hierarchical reasoning, and heterogeneity-aware strategies. Information aggregation methods work well when agent interactions are sparse or change over time, since attention can highlight the most relevant neighbors. Decomposition methods are designed for very large action spaces, breaking them into smaller factors or roles to make decision making more manageable. Hierarchical or hypergraph-based approaches are suited for tasks with clear group structures or multiple scales, where agents need to balance local and global objectives. Heterogeneity-aware approaches are essential in realistic settings where agents differ in sensing, actuation, or goals, helping to keep coordination effective despite these differences. In practice, combining these ideas—such as using decomposition together with attention or hierarchy—has shown strong potential in applications like autonomous driving fleets, distributed energy systems, and mixed-agent robotics.

4.1.3. GNN-Enhanced Reward Design in MARL

Graph neural networks (GNNs) have been increasingly adopted for reward modeling in reinforcement learning due to their ability to capture structural dependencies among agents, states, and actions. Existing methods span a variety of strategies, including potential-based reward shaping [51,56,57], representation-based shaping using Laplacian embeddings [45], value function decomposition via graph-based architectures [53], and decentralized intrinsic reward modeling based on local message passing [52,58]. Some works also explore relational reward sharing grounded in graph-structured inter-agent preferences [59]. These approaches collectively demonstrate how GNNs enable more structured and adaptive reward signals, particularly in sparse or multi-agent environments.

We summarize representative methods and their characteristics in Table 5.

Table 5.

Representative GNN-enhanced reward modeling methods.

Reward shaping methods. Reward shaping methods tackle the challenge of sparse reward environments by intelligently designing auxiliary reward signals. Reward propagation [51] employs graph convolutional networks (GCNs) to propagate reward information from rewarding states through message-passing mechanisms, learning potential functions for potential-based reward shaping that preserve the optimal policy. This propagation is a direct application of the GCN update rule shown in Equation (12), where reward signals are treated as node features that are diffused across the state graph. By approximating the underlying state transition graph through sampled trajectories and using the graph Laplacian as a surrogate for the true transition matrix, this method draws connections to the proto-value functions framework. Extensive validation across tabular domains (FourRooms), vision-based navigation tasks (MiniWorld), Atari 2600 games, and continuous control problems (MuJoCo) reveals significant performance improvements over actor–critic baselines while maintaining computational efficiency.
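The two ingredients of reward propagation, diffusing reward over a state graph to obtain a potential Φ and then shaping with F(s, s') = γΦ(s') − Φ(s), can be sketched as follows. As a simplifying assumption, a fixed K-step random-walk diffusion replaces the trained GCN of [51], and the five-state chain is hypothetical. The final check confirms the telescoping property behind policy invariance: the discounted sum of shaping terms along any trajectory equals γ^T Φ(s_T) − Φ(s_0), so it depends only on the endpoints.

```python
def random_walk_matrix(adj):
    """P = D~^{-1} (A + I): each state averages over itself and its neighbors."""
    n = len(adj)
    A = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    P = []
    for row in A:
        total = sum(row)
        P.append([a / total for a in row])
    return P


def propagate_rewards(adj, rewards, num_steps=4):
    """Potential Phi = sum_{k=0}^{K} P^k r: reward diffuses outward from rewarding states."""
    P = random_walk_matrix(adj)
    phi, current = list(rewards), list(rewards)
    for _ in range(num_steps):
        current = [sum(p * c for p, c in zip(row, current)) for row in P]
        phi = [a + b for a, b in zip(phi, current)]
    return phi


def shaping_reward(phi, s, s_next, gamma):
    """Potential-based shaping term F(s, s') = gamma * Phi(s') - Phi(s)."""
    return gamma * phi[s_next] - phi[s]


# Hypothetical 5-state chain 0 - 1 - 2 - 3 - 4 with a sparse reward only in state 4.
adj = [[1 if abs(i - j) == 1 else 0 for j in range(5)] for i in range(5)]
phi = propagate_rewards(adj, [0.0, 0.0, 0.0, 0.0, 1.0])
gamma = 0.99
trajectory = [0, 1, 2, 1, 2, 3, 4]
shaped_return = sum(gamma ** t * shaping_reward(phi, trajectory[t], trajectory[t + 1], gamma)
                    for t in range(len(trajectory) - 1))
```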

Hierarchical graph topology (HGT). Building upon potential-based reward shaping principles, hierarchical graph topology (HGT) [56] constructs an underlying graph where states serve as nodes and edges represent transition probabilities within the Markov decision process (MDP). Rather than operating on flat graph structures, HGT decomposes complex probability graphs into interpretable subgraphs and aggregates messages from these components for enhanced reward shaping effectiveness. The hierarchical architecture proves especially valuable in environments with sparse and delayed rewards by enabling agents to capture long-range dependencies and structured transition patterns.

Reward shaping with Laplacian representations. Wang et al. [45] explore a geometric approach to reward design by leveraging learned Laplacian representations for pseudo-reward signal generation in goal-achieving tasks. Their strategy centers on Euclidean distance computation within the representation space, where the pseudo-reward corresponds to the negative L2 distance between the current and goal states in the Laplacian representation space:

$$r_t = -\left\lVert \phi(s_{t+1}) - \phi(z_g) \right\rVert_2,$$

where $s_{t+1}$ is the state reached after taking an action and $z_g$ is the goal state. Given that Laplacian representations can effectively capture the geometric properties of environment dynamics, this representation-space distance metric provides meaningful guidance signals for agent learning. Further analysis reveals that different dimensions of the Laplacian representation differ in their effectiveness for reward shaping, with lower dimensions (corresponding to smaller eigenvalues) yielding greater learning acceleration. This observation provides practical guidelines for dimension-selective reward shaping, where pseudo-rewards can be computed using individual dimensions as

$$r_t^{(i)} = -\left\lvert \phi_i(s_{t+1}) - \phi_i(z_g) \right\rvert$$

for the $i$-th dimension of the learned representation.
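The dimension-selective pseudo-reward can be illustrated on a hypothetical path of eight states, again using closed-form path-graph Laplacian eigenvectors as a stand-in for a learned representation (dimension 0 is the constant eigenvector and carries no distance information). In this toy case the lowest non-constant dimension yields a reward that rises steadily as the agent approaches the goal, while the next dimension does not; this is consistent with, but far from a proof of, the observation that lower dimensions are more useful for shaping.

```python
import math


def laplacian_embedding(n, d):
    """phi(s) = (e_0(s), ..., e_{d-1}(s)) from the closed-form path-graph Laplacian eigenvectors."""
    basis = []
    for k in range(d):
        v = [math.cos(math.pi * k * (i + 0.5) / n) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in v))
        basis.append([x / norm for x in v])
    return [[vec[s] for vec in basis] for s in range(n)]


def pseudo_reward(phi, s_next, goal):
    """r = -|| phi(s') - phi(goal) ||_2 over all embedding dimensions."""
    return -math.sqrt(sum((a - b) ** 2 for a, b in zip(phi[s_next], phi[goal])))


def pseudo_reward_dim(phi, s_next, goal, i):
    """Dimension-selective variant r^(i) = -| phi_i(s') - phi_i(goal) |."""
    return -abs(phi[s_next][i] - phi[goal][i])


phi = laplacian_embedding(n=8, d=3)
goal = 7
for i in (1, 2):
    print(f"dim {i}:", [round(pseudo_reward_dim(phi, s, goal, i), 2) for s in range(8)])
```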

Graph convolutional recurrent networks for reward shaping. Addressing fundamental limitations in existing potential-based reward shaping methods, the graph convolutional recurrent network (GCRN) algorithm [57] introduces three complementary innovations for enhanced reward shaping optimization.

Spatio-Temporal Dependency Modeling: By integrating graph convolutional networks (GCN) with bi-directional gated recurrent units (Bi-GRUs), GCRN simultaneously captures both spatial and temporal dependencies within the state transition graph. The forward computation proceeds as:

$$\Phi = \mathrm{BiGRU}\left(\mathrm{GCN}(K, X)\right), \qquad X = S \oplus A,$$

where $K$ represents the Krylov basis, $X$ combines state and action information, and $\oplus$ denotes concatenation.

Augmented Krylov Basis for Transition Matrix Approximation: While traditional approaches rely on the graph Laplacian as the GCN filter under value function smoothness assumptions, GCRN develops an augmented Krylov algorithm for more precise transition matrix P approximation. This reliance on the Laplacian connects to the core mechanism of standard GCNs, as its structure is fundamentally related to the symmetrically normalized adjacency matrix used in Equation (12). The resulting Krylov basis K combines top eigenvectors from the sampled transition matrix with Neumann series vectors, yielding superior short-term and long-term behavior modeling compared to standard graph Laplacian approaches.

Look-Ahead Advice Mechanism: Departing from conventional state-only potential functions, GCRN incorporates a look-ahead advice mechanism that exploits both state and action information. The enhanced shaping function becomes:

$$F(s, a, s', a') = \gamma\, \Phi(s', a') - \Phi(s, a),$$

where the potential $\Phi$ now operates on state–action pairs $(s, a)$ drawn from $S$ and $A$, facilitating more precise action-level guidance.

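Training combines base and recursive loss components derived from hidden Markov model message-passing techniques:

$$\mathcal{L} = \mathcal{L}_{\mathrm{base}} + \eta\, \mathcal{L}_{\mathrm{rec}},$$

where $\eta$ weights the recursive term and both terms are computed from $p(\mathcal{O} \mid s, a)$, the optimality probability calculated through forward and backward message passing.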

By maintaining the policy invariance guarantee of potential-based reward shaping, GCRN achieves substantial improvements in both convergence speed and final performance, as validated through comprehensive experiments on Atari 2600 and MuJoCo environments.
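The connection between Krylov vectors and a Neumann series can be sketched as follows. This is not the augmented Krylov algorithm of [57], which additionally incorporates top eigenvectors; it only estimates P from sampled transitions, builds the vectors v, Pv, P^2 v, ..., and sums them as γ^k P^k v, a truncated Neumann series for (I − γP)^{-1} v. With v set to a reward vector, this is the value of the induced Markov chain, which is why such vectors capture long-horizon behavior. The transitions and γ = 0.9 are illustrative.

```python
def estimate_transition_matrix(transitions, n):
    """Row-normalized visit counts from sampled (s, s') pairs: an empirical estimate of P."""
    counts = [[0.0] * n for _ in range(n)]
    for s, s_next in transitions:
        counts[s][s_next] += 1.0
    P = []
    for row in counts:
        total = sum(row)
        P.append([c / total for c in row] if total else [1.0 / n] * n)  # uniform if unvisited
    return P


def krylov_vectors(P, v, m):
    """[v, Pv, P^2 v, ..., P^{m-1} v]: spanning vectors of the order-m Krylov subspace K_m(P, v)."""
    basis = [list(v)]
    for _ in range(m - 1):
        basis.append([sum(p * x for p, x in zip(row, basis[-1])) for row in P])
    return basis


def neumann_approximation(P, v, gamma, m):
    """Truncated Neumann series sum_{k<m} gamma^k P^k v, approximating (I - gamma P)^{-1} v."""
    result = [0.0] * len(v)
    for k, vec in enumerate(krylov_vectors(P, v, m)):
        result = [r + gamma ** k * x for r, x in zip(result, vec)]
    return result


trans = [(0, 1), (1, 2), (2, 2), (0, 0), (1, 0), (2, 1)]   # hypothetical sampled (s, s') pairs
P = estimate_transition_matrix(trans, 3)
reward = [0.0, 0.0, 1.0]
values = neumann_approximation(P, reward, gamma=0.9, m=300)
```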

Graph convolutional value decomposition. GraphMIX [53] proposes a GNN-based framework for value function factorization in MARL, enabling fine-grained credit assignment through graph attention mechanisms. It models agents as nodes in a fully connected directed graph, where edge weights, computed via attention, capture dynamic inter-agent influence. This approach leverages the expressive power of architectures like GCN (Equation (12)) or the more powerful GIN (Equation (18)) to process the graph structure and inform the value decomposition. The initial assumption of a fully connected graph, however, introduces a significant scalability bottleneck, as its computational complexity grows quadratically ($O(N^2)$ for $N$ agents) with the number of agents, making it less suitable for systems with very large agent populations.

To optimize reward allocation, GraphMIX introduces a dual-objective loss structure. A global loss encourages accurate estimation of team-level returns by evaluating joint actions over the full state, while a local loss guides individual agents based on their assigned share of the global reward, computed from learned node-level embeddings. This design ensures that agents not only cooperate effectively but also learn to attribute outcomes to their actions.

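The overall training objective combines both components:

$$\mathcal{L} = \mathcal{L}_{\mathrm{global}} + \lambda\, \mathcal{L}_{\mathrm{local}},$$

where $\lambda$ balances the two terms. This integrated loss promotes coordinated learning by balancing team performance with personalized reward feedback, thereby improving both training stability and credit assignment precision.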

Decentralized graph-based multi-agent reinforcement learning using reward machines (DGRM). DGRM [58] integrates GNNs with formal reward machine representations to support decentralized reward optimization in multi-agent settings. The method encodes complex, non-Markovian reward structures using reward machines, while leveraging truncated Q-functions that rely only on information from each agent’s local $\kappa$-hop neighborhood.

By exploiting the structure of agent interaction graphs, DGRM significantly reduces computational overhead, limiting the scope of each agent’s decision making to a small, relevant subgraph. This reliance on local $\kappa$-hop neighborhoods is a principle central to inductive GNNs like GraphSAGE (Equation (13)), enabling scalability by restricting message passing to a bounded neighborhood. The authors theoretically show that the influence of distant agents on local policy gradients diminishes exponentially with distance, ensuring that this approximation remains accurate. This decentralized design allows agents to coordinate effectively without requiring full access to global information, achieving scalable learning in large systems. While this design promotes scalability, the truncation to a local neighborhood represents a fundamental trade-off; the model may fail to learn globally optimal strategies in tasks where critical long-range dependencies exist beyond the $\kappa$-hop communication radius.
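The κ-hop truncation can be sketched with a breadth-first search that stops expanding at depth κ; the returned set is the only part of the global state on which an agent's truncated Q-function may depend. The line topology and state labels below are hypothetical.

```python
from collections import deque


def k_hop_neighborhood(adjacency, agent, kappa):
    """All agents within kappa hops of `agent` (itself included), found by breadth-first search."""
    dist = {agent: 0}
    queue = deque([agent])
    while queue:
        u = queue.popleft()
        if dist[u] == kappa:
            continue                       # stop expanding at the truncation radius
        for v in adjacency[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return set(dist)


def truncated_input(states, adjacency, agent, kappa):
    """The portion of the global state visible to the truncated local Q-function of `agent`."""
    return {j: states[j] for j in sorted(k_hop_neighborhood(adjacency, agent, kappa))}


# A line of six agents: 0 - 1 - 2 - 3 - 4 - 5.
adjacency = {i: [j for j in (i - 1, i + 1) if 0 <= j < 6] for i in range(6)}
states = {i: f"s{i}" for i in range(6)}
local_view = truncated_input(states, adjacency, agent=2, kappa=1)
```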

Reward-sharing relational networks. While Haeri et al. [59] do not directly implement graph neural network architectures, their reward-sharing relational networks (RSRN) framework establishes crucial theoretical foundations and design insights for GNN applications in multi-agent reward optimization. RSRN conceptualizes multi-agent systems as directed graphs $\mathcal{G} = (\mathcal{V}, \mathcal{E}, W)$, where $\mathcal{V}$ denotes the agent set, $\mathcal{E}$ represents inter-agent relational edges, and the elements $w_{ij}$ of the weight matrix $W$ quantify how much agent $i$ “cares about” agent $j$’s success. Relational rewards emerge through scalarization functions $\tilde{r}_i = \psi_i(r_1, \ldots, r_N; W)$, with the weighted product model specified as $\tilde{r}_i = \prod_{j \in \mathcal{V}} r_j^{\,w_{ij}}$.

Policy learning occurs through long-term shared return maximization:

$$\max_{\pi_i}\ \mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^t\, \tilde{r}_{i,t}\right].$$

The relational graph structure naturally accommodates GNN processing, enabling graph convolutional operations to learn and optimize agent reward propagation patterns. Specifically, architectures like R-GCNs (Equation (17)) are well suited to process such explicitly relational graphs, where each edge type (or weight) can be modeled with a distinct transformation. Different network topologies (survivalist, communitarian, authoritarian, tribal) generate distinct emergent behaviors, suggesting that GNN-based approaches could exploit similar relational inductive biases to enhance multi-agent coordination and reward optimization. A primary challenge for this framework is its dependence on a pre-defined relational graph. The design of an effective weight matrix requires significant, often unavailable, domain knowledge about agent social dynamics, and a misspecified structure can lead to unintended behaviors.
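The weighted product scalarization is easy to evaluate directly. The sketch below assumes strictly positive individual rewards (the product model is undefined or degenerate otherwise) and uses illustrative weight matrices whose names loosely echo the topologies studied in [59]; they are not the matrices used in that work.

```python
import math


def relational_rewards(rewards, W):
    """Weighted product scalarization: r~_i = prod_j r_j ** W[i][j] (rewards must be positive)."""
    return [math.prod(r ** w for r, w in zip(rewards, row)) for row in W]


rewards = [2.0, 1.0, 4.0]
topologies = {                                               # illustrative weight matrices only
    "survivalist": [[1, 0, 0], [0, 1, 0], [0, 0, 1]],        # each agent cares only about itself
    "communitarian": [[1 / 3] * 3 for _ in range(3)],        # everyone cares equally about all
    "authoritarian": [[1, 0, 0] for _ in range(3)],          # everyone cares only about agent 0
    "tribal": [[0.5, 0.5, 0], [0.5, 0.5, 0], [0, 0, 1]],     # agents 0 and 1 form a tribe
}
shared = {name: relational_rewards(rewards, W) for name, W in topologies.items()}
```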

GNN-driven intrinsic rewards for heterogeneous MARL. The CoHet algorithm [52] develops a graph-neural-network-based intrinsic reward mechanism for cooperative challenges in decentralized heterogeneous multi-agent reinforcement learning. CoHet optimizes reward design through GNN message-passing mechanisms, specifically employing local neighborhood observation predictions for intrinsic reward calculation. Coordinated behavior emerges by minimizing deviations between agents’ actual observations and their neighbors’ predictions. The intrinsic reward of agent $i$ is calculated as

$$r_i^{\mathrm{int}} = -\sum_{j \in \mathcal{N}(i)} w_{ij} \left\lVert \hat{o}_{i,t+1}^{(j)} - o_{i,t+1} \right\rVert_2,$$

where $w_{ij}$ represents the Euclidean-distance-based weight, and $\hat{o}_{i,t+1}^{(j)}$ corresponds to neighbor $j$’s prediction of agent $i$’s next observation $o_{i,t+1}$. Local information processing occurs through the GNN architecture $h_i = \phi\left(o_i, \bigoplus_{j \in \mathcal{N}(i)} \psi(o_i, o_j)\right)$, where $\psi$ computes messages, $\bigoplus$ is a permutation-invariant aggregation, and $\phi$ updates the node state. This update function embodies the general message passing paradigm central to GNNs, where a node’s representation is updated by aggregating features from its neighbors.

By enabling decentralized training based exclusively on local neighborhood information, this design promotes effective coordination among heterogeneous agents through intrinsic reward signals.
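A sketch of the prediction-based intrinsic reward follows. The exact distance-to-weight mapping used by CoHet is not given here, so the sketch assumes a hypothetical normalized inverse-distance weighting, and the predictions are supplied directly rather than produced by a trained GNN. Under this weighting, a wrong prediction from a nearby neighbor is penalized more than the same error from a distant one.

```python
import math


def distance_weights(positions, i, neighbors, eps=1e-6):
    """Hypothetical weighting: inverse Euclidean distance, normalized to sum to 1."""
    raw = {j: 1.0 / (math.dist(positions[i], positions[j]) + eps) for j in neighbors}
    total = sum(raw.values())
    return {j: w / total for j, w in raw.items()}


def intrinsic_reward(i, next_obs, predictions, positions, neighbors):
    """r_i = -sum_j w_ij * || o_hat_{i,t+1}^(j) - o_{i,t+1} ||_2 over agent i's neighbors.

    predictions[j][i] is neighbor j's prediction of agent i's next observation.
    """
    w = distance_weights(positions, i, neighbors)
    return -sum(w[j] * math.dist(predictions[j][i], next_obs[i]) for j in neighbors)


positions = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (4.0, 0.0)}
next_obs = {0: [1.0, 1.0]}
near_wrong = {1: {0: [2.0, 1.0]}, 2: {0: [1.0, 1.0]}}   # the close neighbor mispredicts
far_wrong = {1: {0: [1.0, 1.0]}, 2: {0: [2.0, 1.0]}}    # the distant neighbor mispredicts
r_near = intrinsic_reward(0, next_obs, near_wrong, positions, [1, 2])
r_far = intrinsic_reward(0, next_obs, far_wrong, positions, [1, 2])
```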

Summary

GNN-enhanced reward design methods in MARL can be broadly grouped into several categories, each addressing different challenges. Potential-based reward shaping methods (e.g., reward propagation, HGT, GCRN) are effective in sparse-reward settings, as they propagate or decompose reward signals to provide denser guidance, though they may introduce training overhead or require accurate graph approximations. Representation-based shaping with Laplacian embeddings offers geometric insights into environment dynamics and goal distances, making it useful for navigation and goal-reaching tasks, but performance depends heavily on representation quality. Value decomposition methods such as GraphMIX leverage graph attention to balance global team rewards and individual credit assignment, excelling in cooperative domains but requiring careful loss balancing. Decentralized or relational reward designs (e.g., DGRM, RSRN) scale well to large multi-agent systems by exploiting local neighborhoods or inter-agent preference structures, though they may lose global optimality. Finally, intrinsic reward modeling (e.g., CoHet) is particularly suited for heterogeneous teams, as it encourages coordination from local prediction errors, but the design of intrinsic signals can be task-dependent. In practice, potential-based and representation-based shaping are preferable in structured single-task domains, while decentralized and intrinsic reward strategies are more practical in large-scale or heterogeneous environments. Hybrid designs that combine decomposition with relational or intrinsic signals are promising directions for real-world systems such as UAV coordination, mixed-robot teams, and resource allocation networks. Table 6 provides a summary of the applicable scenarios and limitations of different categories of GNN-enhanced reward design methods in MARL.

Table 6.

Reward design paradigms and their applicability.

4.2. GNN-Based Multi-Agent Communication and Coordination

Communication and coordination among agents are central to networked system control. For GNN-MARL approaches, communication and coordination mainly involve three aspects: graph construction that encodes multi-agent environments, distributed information processing for coordination signal flow, and integrated decision-making frameworks that translate graph representations into collective actions.

4.2.1. Graph Construction Strategies

The effectiveness of GNN-based MARL critically depends on how the interaction graph is constructed, as this determines the structure of information flow among agents and with the environment. In practice, the choice of construction strategy should be guided by the characteristics of the target domain. Agent-centric graphs are suitable when modeling direct agent-to-agent interactions is essential, such as in swarm coordination or cooperative control. Environment-centric graphs are preferable when the spatial or topological structure of the environment dominates, e.g., navigation, patrolling, or coverage. Dynamic graph strategies are necessary in scenarios where communication patterns or environment states evolve, enabling agents to adapt to real-time changes. Finally, heterogeneous graph constructions are indispensable when multiple types of agents or entities interact through diverse relations, as in mixed-agent systems or hierarchical control. As summarized in Table 7, each strategy offers distinct advantages for different multi-agent scenarios.

Agent-Centric Graph Construction

Agent-centric graph construction is a fundamental approach in GNN-MARL, where each agent is modeled as a node, and edges represent interactions like proximity or communication. This design captures local dependencies and is widely used due to its simplicity and effectiveness. A representative example is the graph convolutional reinforcement learning presented by Jiang et al. (2018) [55], which models agents’ local interactions via graph convolutions. The information flow in such models typically follows the neighborhood aggregation principle defined in the GCN propagation rule (Equation (12)), where each agent updates its state based on its immediate neighbors. Later works, such as Huang et al. (2021) [60] and Zhao et al. (2024) [61], follow similar agent-centric constructions in process control and UAV swarm coordination.
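As a minimal sketch of agent-centric construction, the snippet below links agents whose pairwise distance is within a fixed radius and then applies one neighborhood-aggregation step. It assumes that Equation (12) is the standard GCN rule with self-loops and symmetric normalization, $H' = \sigma(\tilde{D}^{-1/2}(A+I)\tilde{D}^{-1/2} H W)$ with ReLU as $\sigma$. The function names, the proximity radius, and the random features are hypothetical choices for illustration, not the setup of [55], [60], or [61].

```python
import numpy as np

def agent_adjacency(positions: np.ndarray, radius: float) -> np.ndarray:
    """Symmetric 0/1 adjacency: agents i != j are linked if within `radius`."""
    diff = positions[:, None, :] - positions[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    adj = (dist <= radius).astype(float)
    np.fill_diagonal(adj, 0.0)  # self-loops are added inside the GCN step
    return adj

def gcn_layer(adj: np.ndarray, h: np.ndarray, w: np.ndarray) -> np.ndarray:
    """One GCN step: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = np.diag(1.0 / np.sqrt(a_hat.sum(axis=1)))
    return np.maximum(0.0, d_inv_sqrt @ a_hat @ d_inv_sqrt @ h @ w)

rng = np.random.default_rng(0)
positions = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0]])  # agent 2 is isolated
h = rng.normal(size=(3, 4))  # per-agent observation features
w = rng.normal(size=(4, 8))  # weight matrix shared by all agents
adj = agent_adjacency(positions, radius=1.5)
h_next = gcn_layer(adj, h, w)  # each agent mixes only its own and its neighbors' features
```

Because the weight matrix is shared, the same layer applies unchanged to any number of agents. An isolated agent simply keeps a transformed copy of its own features.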

Environment-Centric Graph Construction

In GNN-MARL, the environment-centric modeling approach represents key environmental locations or waypoints as graph nodes, with edges denoting feasible connections or relationships between them. Robots (agents) are typically modeled as dynamic nodes attached to the graph according to their positions, while edge attributes (e.g., distances or weights) capture interaction or path costs. GNNs use message passing to aggregate node and edge features, producing environment-aware embeddings for distributed decision making. For example, waypoints are modeled as grid points for path planning in [62], as patrol nodes for optimizing patrolling tasks in [37], and as spatial nodes for coverage and exploration in [63]. This approach effectively captures environmental topology, enhancing coordination and generalization in multi-agent systems. However, it often abstracts away direct agent-to-agent interactions in favor of agent–environment relationships, making it less suitable for tasks where complex, dynamic inter-agent negotiation is the core challenge.
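The following sketch illustrates an environment-centric graph under simple assumptions: waypoints lie on a 4-connected grid, each edge weight is the path cost (grid spacing), each agent attaches to its nearest waypoint, and one message-passing step averages neighboring waypoint features (here, agent occupancy counts). The helper names and the occupancy feature are hypothetical, chosen only to show that the graph size is fixed by the environment rather than by the number of agents.

```python
import numpy as np

def grid_waypoint_graph(rows: int, cols: int, spacing: float = 1.0):
    """Waypoints on a rows x cols grid; 4-connected edges weighted by path cost."""
    coords = np.array([[r * spacing, c * spacing] for r in range(rows) for c in range(cols)])
    weights = np.zeros((rows * cols, rows * cols))
    for r in range(rows):
        for c in range(cols):
            i = r * cols + c
            for dr, dc in ((0, 1), (1, 0)):  # right and down neighbors
                rr, cc = r + dr, c + dc
                if rr < rows and cc < cols:
                    j = rr * cols + cc
                    weights[i, j] = weights[j, i] = spacing
    return coords, weights

def attach_agents(coords: np.ndarray, agent_positions: np.ndarray) -> np.ndarray:
    """Index of the nearest waypoint node for each agent."""
    d = np.linalg.norm(agent_positions[:, None, :] - coords[None, :, :], axis=-1)
    return d.argmin(axis=1)

def aggregate(weights: np.ndarray, node_feats: np.ndarray) -> np.ndarray:
    """One message-passing step: mean of neighboring waypoint features."""
    adj = (weights > 0).astype(float)
    deg = adj.sum(axis=1, keepdims=True)
    return adj @ node_feats / np.maximum(deg, 1.0)

coords, weights = grid_waypoint_graph(3, 3)
idx = attach_agents(coords, np.array([[0.1, 0.2], [1.9, 2.2]]))
occupancy = np.bincount(idx, minlength=coords.shape[0]).astype(float)[:, None]
msg = aggregate(weights, occupancy)  # each waypoint learns how crowded its neighbors are
```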

Dynamic Graph Construction

Dynamic graph construction aims to reflect the evolving communication topology and environment state in multi-robot systems. Both Li et al. [62] and Tolstaya et al. [63] emphasize that dynamically updating the graph structure allows agents to make decentralized decisions based on real-time information.

In Li et al. [62], each agent is modeled as a graph node, and edges are defined by proximity under a communication radius $r_c$. The graph is updated at every timestep $t$: an edge $(i, j)$ exists if agents $i$ and $j$ are within range, i.e., $\lVert p_i(t) - p_j(t) \rVert \le r_c$, where $p_i(t)$ is the position of agent $i$. This time-varying graph captures changes in local connectivity due to agent movement, and processing remains decentralized through the dynamic adjacency matrix $\mathbf{A}_t$.
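A minimal sketch of this per-timestep rebuild, assuming agents move with constant (hypothetical) velocities and that an edge exists whenever two agents are within a fixed communication radius (named `r_comm` here for illustration), recomputes the adjacency matrix from current positions at every step:

```python
import numpy as np

def adjacency_at(positions: np.ndarray, r_comm: float) -> np.ndarray:
    """0/1 adjacency for one timestep: link agents within the communication radius."""
    dist = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    a = (dist <= r_comm).astype(int)
    np.fill_diagonal(a, 0)
    return a

def rollout_graphs(p0: np.ndarray, velocities: np.ndarray, steps: int, r_comm: float):
    """Rebuild A_t at every timestep t as the agents move."""
    graphs, p = [], p0.copy()
    for _ in range(steps):
        graphs.append(adjacency_at(p, r_comm))
        p = p + velocities  # simple constant-velocity motion model (assumption)
    return graphs

p0 = np.array([[0.0, 0.0], [3.0, 0.0]])
vel = np.array([[0.5, 0.0], [-0.5, 0.0]])  # the two agents approach each other
graphs = rollout_graphs(p0, vel, steps=3, r_comm=1.5)
```

In a decentralized deployment each agent only needs its own row of $\mathbf{A}_t$, e.g. `np.flatnonzero(graphs[t][i])`, which is the set of peers it can currently hear.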

In contrast, Tolstaya et al. [63] introduce a spatial graph structure in which both agents and waypoints (environmental points of interest) form the graph's nodes. Edges represent reachable paths or spatial proximity. As robots explore and discover new regions, nodes and edges are added dynamically, forming a growing graph that captures both inter-agent connectivity and environment-aware exploration.
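The growing spatial graph can be illustrated with a small, hypothetical data structure in which agents and discovered waypoints share one node set and an edge joins any two nodes within a fixed link radius. The class name, the link and sensing radii, and the distance-based linking rule are assumptions for illustration, not the construction used in [63]; agent nodes are also kept static here for brevity.

```python
import math

class GrowingSpatialGraph:
    """Agents and discovered waypoints share one node set; edges join nodes within link_radius."""

    def __init__(self, link_radius: float):
        self.link_radius = link_radius
        self.nodes = {}     # name -> (kind, (x, y))
        self.edges = set()  # undirected edges stored as frozenset({a, b})

    def add_node(self, name, kind, pos):
        # Connect the new node to every existing node within the link radius.
        for other, (_, other_pos) in self.nodes.items():
            if math.dist(pos, other_pos) <= self.link_radius:
                self.edges.add(frozenset((name, other)))
        self.nodes[name] = (kind, pos)

    def discover(self, robot_pos, candidate_waypoints, sensing_radius):
        """Add not-yet-known waypoints that lie within the robot's sensing range."""
        for name, pos in candidate_waypoints.items():
            if name not in self.nodes and math.dist(robot_pos, pos) <= sensing_radius:
                self.add_node(name, "waypoint", pos)

world = {"w1": (1.0, 0.0), "w2": (2.0, 0.0), "w3": (6.0, 0.0)}  # hypothetical map
g = GrowingSpatialGraph(link_radius=1.5)
g.add_node("robot0", "agent", (0.0, 0.0))
g.discover((0.0, 0.0), world, sensing_radius=2.5)  # finds w1 and w2
g.discover((5.0, 0.0), world, sensing_radius=2.5)  # later finds w3
```

The graph only ever grows as exploration proceeds, so a GNN run on it sees a larger, more informative structure over time.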

These dynamic constructions allow the GNN to operate in partially observable and communication-limited scenarios, since each agent reasons over the neighborhood it can currently observe or reach. This adaptivity comes at a cost, however: the graph structure may need to be reconstructed or updated at every timestep, which can become a performance bottleneck in scenarios with many agents or high-frequency state changes.

Heterogeneous Graph Structure

In many MARL scenarios, agents and environments exhibit heterogeneity—different types of entities with distinct characteristics interact through various relations. Heterogeneous graph neural networks have emerged to model such diverse multi-agent systems.

The heterogeneous graph attention network (HAN) employs a hierarchical attention mechanism with two levels: node-level attention that learns the importance between a node and its meta-path-based neighbors, and semantic-level attention that automatically selects important meta-paths for specific tasks [64]. This enables HAN to capture different semantic information in heterogeneous graphs through meta-path-based neighbor aggregation.
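The two attention levels can be sketched in NumPy as follows. This is a simplified, single-head illustration assuming node features are already projected into a shared space: node-level attention scores each meta-path neighbor with $\mathrm{LeakyReLU}(a_\Phi^\top [h_i \Vert h_j])$, and semantic-level attention weights each meta-path by the averaged score $q^\top \tanh(W z_i^\Phi + b)$. The meta-paths, dimensions, and function names are hypothetical.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def node_level(h, neighbors, a):
    """Node-level attention for one meta-path; neighbors[i] lists i's meta-path neighbors (incl. i)."""
    z = np.zeros_like(h)
    for i, nbrs in enumerate(neighbors):
        scores = np.array([a @ np.concatenate([h[i], h[j]]) for j in nbrs])
        alpha = softmax(leaky_relu(scores))
        z[i] = np.tanh(alpha @ h[nbrs])  # weighted neighbor aggregation
    return z

def semantic_level(z_per_path, w, b, q):
    """Semantic-level attention: score each meta-path, then fuse its embeddings."""
    scores = np.array([np.mean(np.tanh(z @ w.T + b) @ q) for z in z_per_path])
    beta = softmax(scores)
    fused = sum(bt * z for bt, z in zip(beta, z_per_path))
    return fused, beta

rng = np.random.default_rng(0)
h = rng.normal(size=(4, 3))
path_a = [[0, 1], [1, 0], [2, 3], [3, 2]]  # hypothetical meta-path A neighborhoods
path_b = [[0, 2], [1, 3], [2, 0], [3, 1]]  # hypothetical meta-path B neighborhoods
z_a = node_level(h, path_a, rng.normal(size=6))
z_b = node_level(h, path_b, rng.normal(size=6))
fused, beta = semantic_level([z_a, z_b], rng.normal(size=(5, 3)), rng.normal(size=5), rng.normal(size=5))
```

The learned weights `beta` indicate which meta-path the model currently finds most useful for the task.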

To handle larger-scale heterogeneous graphs, the heterogeneous graph Transformer (HGT) introduces meta-relation-based attention, decomposing transformations based on node-type and edge-type triplets [65]. This concept of handling diverse, explicit relations is an advanced evolution of the principles seen in R-GCNs (Equation (17)), which also use relation-specific transformations. This design achieves better parameter efficiency while maintaining dedicated representations for different entity types. HGT also incorporates relative temporal encoding (RTE) to handle dynamic heterogeneous graphs, enabling the model to capture temporal dependencies with arbitrary durations.
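A single-head sketch of HGT-style meta-relation attention is shown below. Key, query, and message projections depend on the node type, while the attention and message matrices depend on the edge type, and an optional prior is indexed by the (source type, edge type, target type) triplet. Multi-head attention, the target-specific residual update, and relative temporal encoding are omitted, and all type names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 4
node_types = ["uav", "ground"]
edge_types = ["observes", "relays"]
# Node-type-specific projections and edge-type-specific matrices.
K_lin = {t: rng.normal(size=(d, d)) for t in node_types}
Q_lin = {t: rng.normal(size=(d, d)) for t in node_types}
M_lin = {t: rng.normal(size=(d, d)) for t in node_types}
W_att = {e: rng.normal(size=(d, d)) for e in edge_types}
W_msg = {e: rng.normal(size=(d, d)) for e in edge_types}
mu = {}  # optional prior per (src_type, edge_type, tgt_type) triplet; defaults to 1.0

def hgt_aggregate(target, incoming, h, ntype):
    """incoming: list of (source_node, edge_type). Returns (aggregated message, attention)."""
    q = h[target] @ Q_lin[ntype[target]]
    scores, msgs = [], []
    for s, e in incoming:
        k = h[s] @ K_lin[ntype[s]]
        prior = mu.get((ntype[s], e, ntype[target]), 1.0)
        scores.append((k @ W_att[e] @ q) * prior / np.sqrt(d))
        msgs.append(h[s] @ M_lin[ntype[s]] @ W_msg[e])
    scores = np.array(scores)
    attn = np.exp(scores - scores.max())
    attn /= attn.sum()  # softmax over the target's incoming edges
    return attn @ np.array(msgs), attn

h = rng.normal(size=(3, d))
ntype = {0: "uav", 1: "ground", 2: "uav"}
out, attn = hgt_aggregate(0, [(1, "observes"), (2, "relays")], h, ntype)
```

Because parameters are shared per type and per relation rather than per triplet, the parameter count grows with the number of types, not with the number of node-edge-node combinations.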

The power of heterogeneous GNNs comes at the cost of increased design complexity. Defining a meaningful graph schema, including node types and meta-paths or meta-relations, requires substantial domain knowledge and can be a critical point of failure if specified incorrectly. In GNN-MARL applications, these heterogeneous graph methods effectively handle complex interactions between different types of agents (such as UAVs, ground robots, and sensor nodes), capturing diverse semantic relationships through meta-path and meta-relation mechanisms and thereby enhancing coordination and decision quality in multi-agent systems.

Table 7 summarizes the graph construction strategies in GNN-MARL, grouped into static (agent-centric and environment-centric), dynamic, and heterogeneous approaches, and compares them in terms of primary focus, scalability, and typical application domains.

Table 7.

Comparison of graph construction strategies in GNN-MARL.


| Strategy | Primary Focus | Scalability | Application Domain |
|---|---|---|---|
| Agent-centric [55,60,61] | Local interaction modeling via agent nodes | Moderate (with sparse edges) | UAV swarm, process control |
| Environment-centric [37,62,63] | Modeling environment topology via waypoint or spatial nodes | High (fixed graph size, independent of agent count) | Multi-robot path planning, patrolling, coverage |
| Dynamic graph [62,63] | Time-varying topology reflecting agent movement and environment discovery | High (localized updates) | Coverage, path planning in unknown or dynamic environments |
| Heterogeneous graph [64,65] | Multi-type entities with semantic relations | High (with parameter sharing) | Mixed autonomous systems, hierarchical control |

4.2.2. Message Passing and Information Sharing

Message-passing mechanisms in GNN-based multi-agent reinforcement learning have evolved through distinct phases of development, each addressing specific challenges in multi-agent coordination. We group these approaches into five categories based on their core design principles and evolutionary progression: (1) fundamental message-passing frameworks establish the basic principles of learnable communication and graph-based information aggregation; (2) attention-based message passing introduces selective communication through attention mechanisms that let agents focus on relevant neighbors; (3) adaptive communication architectures move beyond fixed topologies by learning communication structures dynamically; (4) heterogeneous and hierarchical communication addresses complex systems with diverse agent types and multi-level coordination; and (5) robust and efficient communication maintains reliable performance under adversarial conditions while preserving computational efficiency. This categorization reflects the field's progression from simple broadcast mechanisms to context-aware communication protocols that adapt to varying environmental demands.

Fundamental Message Passing Frameworks

The development of effective message-passing mechanisms in GNN-based multi-agent reinforcement learning builds upon fundamental approaches that establish core principles for learnable communication and graph-based information aggregation. These seminal works provide the theoretical and architectural foundations that enable agents to exchange information effectively in distributed environments.

Communication neural network (CommNet). CommNet [66] introduces a foundational approach to learnable communication in multi-agent reinforcement learning through continuous message-passing mechanisms. The architecture enables agents to communicate through a broadcast channel where each agent receives the averaged transmissions from all other agents. The message-passing mechanism is formalized through two key equations:

$$h_j^{i+1} = f^{i}\left(h_j^{i}, c_j^{i}\right) \qquad (48)$$

$$c_j^{i+1} = \frac{1}{J-1} \sum_{j' \neq j} h_{j'}^{i+1} \qquad (49)$$

where $h_j^{i}$ represents the hidden state of agent $j$ at communication step $i$, $c_j^{i}$ denotes the communication vector, $f^{i}$ is the learned module applied at step $i$, and $J$ is the number of agents. The averaging operation in Equation (49) is an early instance of a permutation-invariant mean aggregator, a concept later generalized in frameworks like GraphSAGE. This framework enables agents to exchange information iteratively across K communication steps before taking actions. While effective for broadcasting, the uniform averaging of all messages can become an information bottleneck, as it prevents agents from engaging in specialized or targeted communication with specific peers. The model's strength lies in its ability to handle dynamic agent compositions while maintaining permutation invariance, making it particularly suitable for scenarios where the number of agents may vary. The continuous nature of communication enables end-to-end learning through standard backpropagation.
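The CommNet update described above can be sketched in a few lines of NumPy. This assumes $f^i$ is a single tanh layer, $\tanh(H^i h + C^i c)$, that the initial communication vector is zero, and that the weights are shared across agents; the function and variable names are hypothetical. The usage shows the two properties highlighted in the text: permuting the agents permutes the outputs, and the same weights run unchanged for a different number of agents.

```python
import numpy as np

def commnet_forward(h0: np.ndarray, H_mats, C_mats) -> np.ndarray:
    """Run K = len(H_mats) communication steps.

    h0: (J, d) initial hidden states, one row per agent (requires J >= 2).
    H_mats, C_mats: per-step (d, d) weight matrices shared by all agents.
    """
    J = h0.shape[0]
    h = h0
    c = np.zeros_like(h0)  # no messages before the first step
    for H, C in zip(H_mats, C_mats):
        # h^{i+1} = f^i(h^i, c^i) with f^i(h, c) = tanh(H^i h + C^i c)
        h = np.tanh(h @ H.T + c @ C.T)
        # c^{i+1}: average of the other agents' new hidden states (excludes agent j)
        c = (h.sum(axis=0, keepdims=True) - h) / (J - 1)
    return h

rng = np.random.default_rng(1)
d, K = 4, 2
H_mats = [rng.normal(size=(d, d)) for _ in range(K)]
C_mats = [rng.normal(size=(d, d)) for _ in range(K)]
h0 = rng.normal(size=(3, d))
out = commnet_forward(h0, H_mats, C_mats)
perm = [2, 0, 1]
out_perm = commnet_forward(h0[perm], H_mats, C_mats)           # reordered agents
out5 = commnet_forward(rng.normal(size=(5, d)), H_mats, C_mats)  # five agents, same weights
```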

GraphSAGE (graph sample and aggregate). GraphSAGE [32] introduces an inductive framework for generating node embeddings in large graphs, enabling generalization to unseen nodes at test time. Rather than learning a unique embedding for each node, GraphSAGE learns a set of aggregation functions that combine feature information from a node's local neighborhood. This approach operationalizes the general aggregation scheme of Equation (13) by providing concrete aggregator designs (mean, LSTM, and pooling).

The method employs a hierarchical message-passing process, where node representations are updated layer by layer by aggregating and transforming features from neighboring nodes. The performance of this framework is highly dependent on the availability of rich initial node features, as the aggregation process primarily refines and propagates existing information rather than creating it anew. To scale to large graphs, GraphSAGE uses fixed-size neighborhood sampling, which controls memory usage and computational cost by limiting the number of neighbors sampled per layer. This makes the method highly efficient and well suited for inductive learning on dynamic or partially observed graphs.
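A minimal sketch of one GraphSAGE-style layer with fixed-size neighbor sampling is given below. It follows the generic concatenate-then-transform update, $h_v' = \mathrm{ReLU}(W[h_v \Vert \mathrm{AGG}(\{h_u\})])$ followed by L2 normalization, and assumes an element-wise mean as the aggregator; the sample size `k`, the adjacency-list format, and the function names are illustrative choices. Because only the shared matrix `W` is learned, a node added after training is embedded with the same parameters.

```python
import numpy as np

def sample_neighbors(adj_list, node, k, rng):
    """Fixed-size sample of k neighbors (with replacement only if fewer than k exist)."""
    nbrs = adj_list.get(node, [])
    if not nbrs:
        return []
    return list(rng.choice(nbrs, size=k, replace=len(nbrs) < k))

def sage_layer(h, adj_list, W, k, rng):
    """h_v' = ReLU(W [h_v || mean(sampled neighbors)]), then L2-normalized."""
    out = []
    for v in range(h.shape[0]):
        sampled = sample_neighbors(adj_list, v, k, rng)
        agg = h[sampled].mean(axis=0) if sampled else np.zeros(h.shape[1])
        z = np.maximum(0.0, W @ np.concatenate([h[v], agg]))
        norm = np.linalg.norm(z)
        out.append(z / norm if norm > 0 else z)
    return np.stack(out)

rng = np.random.default_rng(0)
adj_list = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0], 4: []}  # node 4 has no neighbors
h = rng.normal(size=(5, 4))   # rich initial node features matter: they are all the layer sees
W = rng.normal(size=(6, 8))   # maps concat(4 + 4) -> 6
out = sage_layer(h, adj_list, W, k=2, rng=rng)
```

Sampling caps the per-node cost at `k` neighbors per layer regardless of true degree, which is what keeps memory bounded on large graphs.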

The fundamental message passing frameworks are summarized in Table 8.

Table 8.

Fundamental message passing frameworks.

Attention-Based Message Passing

Building upon foundational approaches like CommNet and GraphSAGE, attention-driven methods [42,55,67] introduce sophisticated mechanisms that enable agents to selectively focus on relevant neighbors and adaptively weight communication importance, moving beyond uniform information aggregation towards more targeted coordination strategies.

Graph convolutional reinforcement learning framework. The graph convolutional reinforcement learning approach [55] constructs the multi-agent environment as a dynamic graph where agents are represented as nodes and neighboring relationships define edges that change over time as agents move. The message-passing mechanism employs multi-head attention as relation kernels to compute interactions between agents. For each agent $i$ with neighbors $\mathbb{B}_i$, let $\mathbb{B}_{+i}$ denote the set including both $\mathbb{B}_i$ and agent $i$ itself. The relation between agent $i$ and agent $j \in \mathbb{B}_{+i}$ is computed using attention weights

$$\alpha_{ij}^{m} = \frac{\exp\left(\tau \cdot \left[W_Q^{m} h_i\right]^{\top} \left[W_K^{m} h_j\right]\right)}{\sum_{k \in \mathbb{B}_{+i}} \exp\left(\tau \cdot \left[W_Q^{m} h_i\right]^{\top} \left[W_K^{m} h_k\right]\right)}$$

where $\tau$ is a scaling factor, and $W_Q^{m}$, $W_K^{m}$ are learned query and key transformation matrices for attention head $m$. The output of each convolutional layer integrates information from neighboring agents through weighted aggregation