Abstract

In this paper, we consider the problem of estimating the drift parameters in the mixed fractional Vasicek model, which extends the traditional Vasicek model. Using the fundamental martingale and the Laplace transform, we study both the strong consistency and the asymptotic normality of the maximum likelihood estimators (MLEs) for all $H \in (0,1)$, $H \neq 1/2$. In addition, we show that the MLE can be simulated when the Hurst parameter $H > 1/2$.

1. Introduction

The standard Vasicek models, including the diffusion models based on Brownian motion and the jump-diffusion models driven by Lévy processes, perform well when the data exhibit the Markov property and a lack of memory. However, over the past few decades, numerous empirical studies have found that long-range dependence may be observed in data from hydrology, geophysics, climatology, telecommunications, economics, and finance. Consequently, several time series models and stochastic processes have been proposed to capture long-range dependence, both in discrete time and in continuous time. In continuous time, the best-known and most widely used stochastic process that exhibits long-range or short-range dependence is the fractional Brownian motion (fBm), which describes the degree of dependence through the Hurst parameter. This naturally explains the appearance of the fBm in the modeling of some properties of “real-world” data. In addition to the dependence structure provided by the fBm, the mean-reverting property is very attractive for volatility modeling in finance. Hence, the fractional Vasicek model (fVm) has become a standard candidate for capturing some features of the volatility of financial assets (see, for example, [1,2,3]). More precisely, the fVm can be described by the following Langevin equation:

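where , , the initial condition is set at , and $B^H = (B^H_t)_{t \ge 0}$, an fBm with Hurst parameter $H \in (0,1)$, is a zero-mean Gaussian process with the covariance:

$$E\big[B^H_t B^H_s\big] = \frac{1}{2}\left(|t|^{2H} + |s|^{2H} - |t-s|^{2H}\right). \tag{2}$$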

The process $B^H$ is self-similar in the sense that, for every $a > 0$, the processes $(B^H_{at})_{t \ge 0}$ and $(a^H B^H_t)_{t \ge 0}$ have the same law. It becomes the standard Brownian motion when $H = 1/2$ and can be represented as a stochastic integral with respect to the standard Brownian motion. When $H > 1/2$, it has long-range dependence in the sense that $\sum_{n=1}^{\infty} E\big[B^H_1 (B^H_{n+1} - B^H_n)\big] = \infty$. In this case, positive (negative) increments are likely to be followed by positive (negative) increments. The parameter $H$, which is also called the self-similarity parameter, measures the intensity of the long-range dependence. Recently, borrowing the idea of [4], the authors of [5,6] used the mixed fractional Vasicek model (mfVm) to describe some features of the volatility of financial assets; it can be expressed as:

where , , and the initial condition is set at . Here, the process of the so-called mixed fractional Brownian motion is defined by where W and are independent standard and fractional Brownian motions.
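The statements above about the sign of consecutive fBm increments and about long-range dependence can be checked numerically. The following is a minimal sketch, not part of the original analysis: it evaluates the covariance between unit increments of an fBm that are a given lag apart, which follows directly from the fBm covariance function. The function name `fgn_autocovariance` is hypothetical and chosen only for this illustration.

```python
import numpy as np


def fgn_autocovariance(lag, hurst):
    """Covariance E[B_1 (B_{lag+1} - B_lag)] of unit fBm increments.

    Derived from the fBm covariance 0.5 * (t^{2H} + s^{2H} - |t - s|^{2H}).
    """
    k = np.abs(np.asarray(lag, dtype=float))
    two_h = 2.0 * hurst
    return 0.5 * ((k + 1.0) ** two_h - 2.0 * k ** two_h + np.abs(k - 1.0) ** two_h)


lags = np.arange(1, 10001)
for hurst in (0.25, 0.5, 0.75):
    rho = fgn_autocovariance(lags, hurst)
    # Lag-one covariance: negative for H < 1/2, zero for H = 1/2, positive for H > 1/2.
    # The partial sum keeps growing with the number of lags only when H > 1/2.
    print(f"H={hurst}: lag-1 covariance={rho[0]:+.4f}, partial sum={rho.sum():+.4f}")
```

The partial sums telescope to $\frac{1}{2}\big((N+1)^{2H} - N^{2H} - 1\big)$, which diverges as $N \to \infty$ exactly when $H > 1/2$.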

When the long-term mean in (3) is known (without loss of generality, it is assumed to be zero), (3) becomes the mixed fractional Ornstein–Uhlenbeck process (mfOUp). Using the canonical representation and spectral structure of the mfBm, the authors of [7] proposed the maximum likelihood estimator (MLE) of in (3) and developed the asymptotic theory for this estimator by means of the Laplace transform and the limiting behavior of the eigenvalues of the covariance operator of the fBm (see [8]). Using an asymptotic approximation for the eigenvalues of its covariance operator, the authors of [9] studied the mfBm from the viewpoint of spectral theory. Surveys and a comprehensive bibliography on parametric and other inference procedures for stochastic models driven by the mfBm can be found in [10,11].
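To make the reduction from the Vasicek form to the Ornstein–Uhlenbeck form concrete, the following worked derivation may help. It is only a sketch under stated assumptions: the model is assumed to have the linear form written in the first line, and the symbols $\alpha$, $\beta > 0$, $\gamma$, and $\xi$ (for the mfBm) are notation chosen for this illustration rather than taken from the displayed equation (3).

```latex
\begin{align*}
  % Assumed form of the model, started at zero
  dX_t &= (\alpha - \beta X_t)\,dt + \gamma\,d\xi_t, \qquad X_0 = 0, \\
  % Multiply by the integrating factor e^{\beta t}
  d\!\left(e^{\beta t} X_t\right) &= e^{\beta t}\left(\alpha\,dt + \gamma\,d\xi_t\right), \\
  % Integrate over [0, t] and divide by e^{\beta t}
  X_t &= \frac{\alpha}{\beta}\left(1 - e^{-\beta t}\right)
         + \gamma \int_0^t e^{-\beta (t-s)}\,d\xi_s .
\end{align*}
```

Under these assumptions, setting $\alpha = 0$ removes the deterministic term and leaves $U_t = \gamma \int_0^t e^{-\beta (t-s)}\,d\xi_s$, which solves $dU_t = -\beta U_t\,dt + \gamma\,d\xi_t$; the two processes then differ only by the deterministic function $\frac{\alpha}{\beta}(1 - e^{-\beta t})$.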

In many situations, however, the long-term mean in (3) is unknown. Thus, it is important to estimate all the drift parameters, and , in the mfVm. To the best of our knowledge, the asymptotic theory of the MLE of and has not yet been developed, although some methods for fractional diffusion models can be applied in this situation (see, e.g., [12]). This paper fills this gap. Using the Girsanov formula for the mfBm, we introduce the MLE for both and . When a continuous record of observations of is available, both the strong consistency and the asymptotic laws of the MLE are established in the stationary case for the Hurst parameter .

Regarding simulation, as far as we know, few works have carried out exact numerical experiments for the MLE, even for the fractional O-U process. The difficulty comes from the process defined in (9), which is hard to simulate and computationally expensive. Here, we illustrate that the MLE of the drift parameter in the mixed fractional O-U process (and likewise in the Vasicek process) can be computed when , even if it is not practical.

The rest of the paper is organized as follows. Section 2 introduces some preliminaries on the mfBm. Section 3 proposes the MLE for the drift parameters in the mfVm and studies the asymptotic properties of the MLE for the Hurst parameter range in the stationary case. Section 4 provides the proofs of the main results. Section 5 presents a simulation study for the drift parameter, and Section 6 gathers some technical lemmas. We use the following notation throughout the paper: , , , and ∼ denote convergence almost surely, convergence in probability, convergence in distribution, and asymptotic equivalence, respectively, as .

2. Preliminaries

This section is dedicated to some notions that are used in our paper, related mainly to the integro-differential equation and the Radon–Nikodym derivative of the mfBm. In fact, mixtures of stochastic processes can have properties quite different from the individual components. The mfBm drew considerable attention since some of its properties were discovered in [4,11,13]. Moreover, the mfBm has been proven useful in mathematical finance (see, for example, [14]). We start by recalling the definition of the main process of our work, which is the mfBm. For more details about this process and its properties, the interested reader can refer to [4,11,13].

Definition 1.

An mfBm of the Hurst parameter is a process defined on a probability space by:

where is the standard Brownian motion and is the independent fBm with the Hurst exponent and the covariance function:

Let us observe that the increments of the mfBm are stationary and is a centered Gaussian process with the covariance function:
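Since the Brownian and fractional components are independent, the covariance of their sum is the sum of the two covariances, $\min(s,t) + \frac{1}{2}\big(t^{2H} + s^{2H} - |t-s|^{2H}\big)$. The sketch below builds this matrix on a time grid and draws one path by a Cholesky factorization. It is an illustration only; the function names and the grid are assumptions of this example, and the Cholesky approach is a generic technique for Gaussian vectors rather than the method used later in the paper.

```python
import numpy as np


def mfbm_covariance(times, hurst):
    """Covariance matrix of W + B^H on a grid of strictly positive times."""
    t = np.asarray(times, dtype=float)
    s, u = np.meshgrid(t, t, indexing="ij")
    brownian_part = np.minimum(s, u)
    fractional_part = 0.5 * (
        s ** (2 * hurst) + u ** (2 * hurst) - np.abs(s - u) ** (2 * hurst)
    )
    return brownian_part + fractional_part


def sample_mfbm(times, hurst, rng):
    """Draw one mfBm path on `times` as L @ z with cov = L @ L.T."""
    chol = np.linalg.cholesky(mfbm_covariance(times, hurst))
    return chol @ rng.standard_normal(len(times))


rng = np.random.default_rng(0)
grid = np.linspace(0.01, 1.0, 100)  # time 0 is excluded to keep the matrix non-singular
path = sample_mfbm(grid, hurst=0.7, rng=rng)
print(path[:5])
```

The cost of the factorization grows cubically with the number of grid points, so this is suitable only for short grids.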

In particular, for , the increments of the mfBm exhibit long-range dependence, which makes it important in modeling volatility in finance. Let . We use the canonical representation suggested in [13], based on the martingale:

To this end, let us consider the integro-differential equation:

By Theorem 5.1 in [13], this equation has a unique solution for any . It is continuous on , and the -martingale defined in (4) and its quadratic variation satisfy:

where the stochastic integral is defined for deterministic integrands in the usual way. By Corollary 2.9 in [13], the process admits canonical representation:

with:

Remark 1.

For $H > 1/2$, the equation is a Wiener–Hopf equation:

and the quadratic variation is:

Let us mention that the canonical representation (6) and (7) can also be used to derive an analogue of Girsanov’s theorem, which will be the key tool for constructing the MLE.

Corollary 1.

Consider a process defined by:

where is a process with a continuous path and , adapted to a filtration with respect to a martingale M. Then, Y admits the following representation:

with defined in (8), and the process can be written as:

Let us mention that is a -martingale with the Doob–Meyer decomposition:

where:

In particular, for all . Moreover, if:

then the measures and are equivalent and the corresponding Radon–Nikodym derivative is given by:

where .

3. Estimators and Asymptotic Behaviors

Now, we return to the model (3); similar to the previous corollary, we define:

From Lemma 10 below and Equation (44), we know:

where is when . By Theorem 2.4 of [13] and Lemma 2.1 of [7], the derivative of the martingale bracket exists and is continuous, and the process admits a representation as a stochastic integral with respect to the auxiliary observation process . That is to say, the process is well defined.

Then, using the quadratic variation of Z on [0, T], we can estimate almost surely from any small interval, as long as we have a continuous observation of the process. Moreover, the estimation of H in the mfBm was studied in [15]. Consequently, for further statistical analysis, we assume that H and are known and, without loss of generality, from now on we suppose that is equal to one. For , our observation will be , where satisfies the following equation:

Applying the analog of the Girsanov formula for an mfBm, we can obtain the following likelihood ratio and the explicit expression of the likelihood function:

3.1. Only One Parameter Is Unknown

Denote the log-likelihood function by . First, if we suppose that is known and is the unknown parameter, then the MLE is defined by:

then using (10) for all , the estimator error can be presented by:

We have the following results:

Theorem 2.

For ,

and for ,

Now, we suppose is known and is the parameter to be estimated. Then, the MLE is:

Still with (10), the estimator error will be:

The asymptotic behavior is the same as in the linear case treated in [7]. That is, for , where is a constant defined in Theorem 3 and for , .

3.2. Two Parameters Unknown

Then, taking the derivatives of the log-likelihood function, , with respect to and and setting them to zero, we can obtain the following results:

The MLE and solves the system (16); that it is indeed a maximum can be confirmed by checking the second partial derivatives of with the Cauchy–Schwarz inequality. The solution of (16) gives:

and:

From the expression of , we obtain that the error term of the MLE can be written as:

and:

We can now describe the asymptotic laws of and for , but .

Theorem 3.

For and as , we have:

and:

where

Theorem 4.

In the case of , the maximum likelihood estimator of has the same asymptotic normality as in (19), and for , we have:

Remark 2.

From the previous theorem, we can see that when , the asymptotic normality of the estimator error is the same whether one parameter or both parameters are unknown; it also coincides with the result for the linear case and for the Ornstein–Uhlenbeck process driven by the pure fBm with Hurst parameter . However, for , the situation changes, and the differences come from the limit representation of the quadratic variation of the martingale .

Now, we consider the joint distribution of the estimator error. For , if we consider as the two-dimensional unknown parameter, then the following theorem gives us the joint distribution of the estimator error of :

Theorem 5.

The maximum likelihood estimator is asymptotically normal:

where and is the matrix of the Fisher information.

Remark 3.

From Theorem 4, we can see that the convergence rates of and are the same, so we can use the central limit theorem for martingales in the proof. On the contrary, for , since the function defined in (5) has no explicit formula, we cannot use the method in [16] to obtain the joint distribution of . In fact, the convergence rates of and are then different, which causes many difficulties, and we leave this for further study.

In the above discussion, we were concerned with the asymptotic laws of the estimators; however, even in [7] with , the authors did not consider the strong consistency of . In what follows, we show that converges to almost surely.

Theorem 6.

For , the estimators of have strong consistency, that is, as ,

Remark 4.

For the estimator , the strong consistency is clear when and the same proof for β is unknown, and that is why we do not write this conclusion.

4. Proofs of the Main Results

4.1. Proof of Theorem 2

From (13), we have:

In fact, the process is a martingale. From Lemma 13, when ,

and from Equation (38) in Proof of Theorem 5, when :

The central limit theorem for martingales (see [17]) completes the proof.

4.2. Proof of Theorem 3

First, we consider the asymptotical normality of . Using (18), we have:

where is a centered Gaussian random variable with variance . Using Lemmas 11 and 12, we can obtain:

and:

Now, we deal with the convergence of . From (17), we can easily have:

It is worth noting that:

and:

4.3. Proof of Theorem 4

For , let us revisit Equation (24) with . First, let us expand the denominator,

where and are defined in Lemma 10. On the other hand:

Consequently, we have:

We study this one by one. From Lemmas 9, 11, and 14, we have:

From [7], we can easily obtain:

Using Lemma 15, we have:

Now, we consider the numerator,

From the previous proof, it is not difficult to show that:

With the fact in [7]:

we have:

Now, we look at . In fact:

We observe that the denominator is the same formula in and it adapts to Equation (31), so we only need to consider the numerator. From Lemmas 11 and 15, it is easy to know:

For the numerator,

With Lemmas 9, 11, and 14:

On the other hand:

4.4. Proof of Theorem 5

We can see that is a martingale and is its quadratic variation. Strictly speaking, in order to use the central limit theorem for martingales (see [17]), we should compute the Laplace transform of to complete the proof; however, since the quadratic formula of was verified in [7], here we only study the asymptotic properties of each component of .

First of all, from [7], we know:

On the other hand, from Lemmas 10, 11, and 15, we have:

Finally, from Lemmas 10, 11, and 15 and [7], we have:

Remark 5.

In fact, it is easy to calculate:

which indicates Theorem 4.

4.5. Proof of Theorem 6

First of all, from Lemma 2.2 of [7], for every fixed and fixed T, the Laplace transform:

We prove the strong consistency of . For , the proof is similar. To simplify the notation, we first assumed . Then, using the fact , we can write:

With (39) and, as in Proposition 2.5 of [18], by the strong law of large numbers, to obtain almost sure convergence it suffices to prove:

From the Appendix of [19], if we define:

then:

for all . When , the limit of the Laplace transform can be written as:

which yields (40).

Now, we turn to the case of : in this situation, using (18), we have:

For the first term of the above equation, we can write:

From the proof of Lemma 13 and Equation (40), we see immediately that:

With the previous proofs, we obtain that:

is bounded. This shows that the first term tends to zero almost surely, as well as the second term. Hence, , and we have the strong consistency. The result for can be proven with the same method, so we do not present it again.

5. Simulation Study

5.1. Numerical Solution of

From the construction of the MLE of the two parameters and , the simulation procedure is the same for both. To reduce the simulation time, in this part we only consider the mixed fractional O-U case, that is and defined in (43):

Now:

where is defined in (45):

a direct computation leads to:

and now, the only new ingredient is the numerical solution of . In fact, there are two ways to find the numerical solution of . In the first, we find the solutions of and , where is a sufficiently small positive constant, and then calculate:

However, this method requires two different partitions, and the choice of is also a problem. Therefore, we chose the second method, which uses the explicit formula of from Equation (5). However, when , the integral and the difference are not interchangeable, so we only consider and:

The following is the procedure of the numerical solution of . For every t fixed, we divide the interval into n equal parts, and we denote every point , then the distance will be . With Equation (41), for every , we have:

From the definition of the Riemann integral, for when , the term is negligible. Therefore, we have the following relationship for the vector .

Lemma 7.

With the previous definition, when ,

where is an matrix with and and the vector is defined by .

From this lemma, we have the numerical solution of the function for t fixed with the formula of :

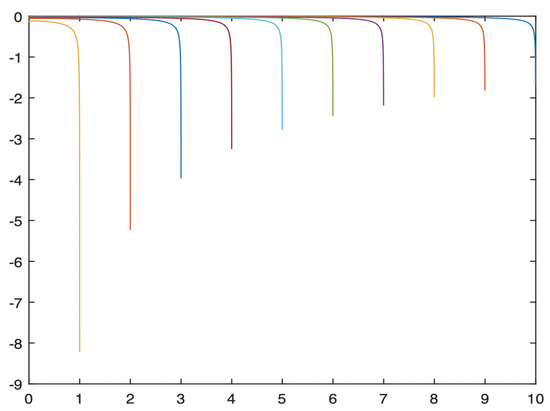
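As a complementary illustration of how an integral equation of this kind can be reduced to a linear system, here is a minimal sketch for the function $g(\cdot,t)$ itself rather than for the quantity treated in Lemma 7. It assumes that, for $H > 1/2$, the Wiener–Hopf equation takes the form $g(s,t) + H(2H-1)\int_0^t g(r,t)\,|s-r|^{2H-2}\,dr = 1$ for $0 \le s \le t$. The scheme (piecewise-constant $g$ on $n$ cells, with the weakly singular kernel integrated exactly over each cell) and the name `solve_wiener_hopf` are choices made for this example, not the authors' method.

```python
import numpy as np


def solve_wiener_hopf(t, hurst, n):
    """Approximate g(., t) at the n cell midpoints of [0, t] (requires 1/2 < H < 1)."""
    if not 0.5 < hurst < 1.0:
        raise ValueError("this sketch only covers 1/2 < H < 1")
    h = t / n
    midpoints = (np.arange(n) + 0.5) * h
    edges = np.arange(n + 1) * h
    # Antiderivative in r of H(2H-1)|s - r|^{2H-2} is H * sign(r - s) * |r - s|^{2H-1}.
    diff = edges[None, :] - midpoints[:, None]
    antiderivative = hurst * np.sign(diff) * np.abs(diff) ** (2 * hurst - 1)
    # kernel[i, j] = exact integral of the kernel over cell j, seen from midpoint i.
    kernel = antiderivative[:, 1:] - antiderivative[:, :-1]
    g = np.linalg.solve(np.eye(n) + kernel, np.ones(n))
    return midpoints, g


s_grid, g_values = solve_wiener_hopf(t=1.0, hurst=0.75, n=200)
# Midpoint-rule approximation of the integral of g(., t) over [0, t].
integral_of_g = g_values.sum() * (1.0 / 200)
print(g_values[:3], integral_of_g)
```

Integrating the kernel in closed form avoids evaluating $|s-r|^{2H-2}$ at $r = s$, where it is unbounded. No convergence rate is claimed for this discretization.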

Even if we do not have the explicit solution of , we can use its numerical result with the probability method from [20]. In Figure 1, we simulate the results for and of .

Figure 1.

The solutions of for when .

Then, one may ask: Is our simulation reasonable or not? Of course, we can verify this with the method of the derivative directly, but as we mentioned before, this is very complicated. Notice that when :

From (42), we can compare the two numerical results: and the integral ; if they are close, we can say that our simulation is reasonable. We divide all the intervals with n = 5000 for every fixed t, and the following are the numerical results:

(Numerical values for the six test cases are not available.)

We can see that the left side and the right side of (42) are almost the same, and we can say our simulation is reasonable.

Remark 6.

Since the convergence rate of the numerical solution of obtained with the probabilistic method of [20] is not known, the same problem exists for .

5.2. Procedure of the Simulation of

In this part, we present the simulation of the MLE step by step:

- To obtain our estimator, we first need to simulate the path of the mixed fractional Ornstein–Uhlenbeck process. Unlike for a general stochastic differential equation, we have the explicit formula of defined in (43). Then, with the numerical result of , we can easily obtain the path of the process ;

- In the second step, we need the fundamental martingale and the important process ;

- With all these prepared, we use the exact formula:

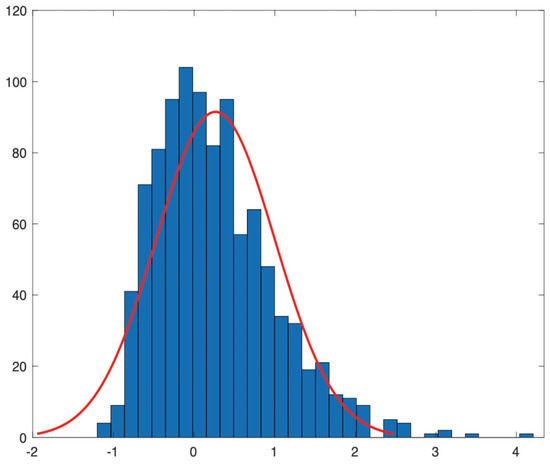
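The first step of the procedure above, generating a path of the mixed fractional Ornstein–Uhlenbeck process, can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the process is assumed to solve $dX_t = -\beta X_t\,dt + d\xi_t$ with $X_0 = 0$ and unit noise coefficient, the fBm increments are drawn by a Cholesky factorization of their covariance matrix, and the stochastic integral over each step is approximated by the increment of the mfBm. All function names are hypothetical.

```python
import numpy as np


def fgn_covariance_matrix(n, h, hurst):
    """Covariance matrix of n consecutive fBm increments over steps of length h."""
    k = np.abs(np.subtract.outer(np.arange(n), np.arange(n))).astype(float)
    two_h = 2.0 * hurst
    rho = 0.5 * ((k + 1.0) ** two_h - 2.0 * k ** two_h + np.abs(k - 1.0) ** two_h)
    return h ** two_h * rho


def simulate_mfou(beta, hurst, horizon, n, rng):
    """Approximate path of dX = -beta * X dt + d(W + B^H), X_0 = 0, on n steps."""
    h = horizon / n
    brownian_increments = np.sqrt(h) * rng.standard_normal(n)
    chol = np.linalg.cholesky(fgn_covariance_matrix(n, h, hurst))
    fractional_increments = chol @ rng.standard_normal(n)
    noise = brownian_increments + fractional_increments
    x = np.zeros(n + 1)
    decay = np.exp(-beta * h)
    for k in range(n):
        # Exact exponential decay of the state; the noise integral over the
        # step is approximated by the mfBm increment (first-order in h).
        x[k + 1] = decay * x[k] + noise[k]
    return np.linspace(0.0, horizon, n + 1), x


times, x_path = simulate_mfou(beta=1.0, hurst=0.7, horizon=10.0, n=500,
                              rng=np.random.default_rng(1))
print(x_path[:5])
```

The later steps (the fundamental martingale and the estimator itself) depend on the numerical solution of the integral equation and are not covered by this sketch.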

The asymptotic normality of the estimator is presented in Figure 2 for , , and . Although the figure shows that still has a bias, it approximately satisfies the property:

Figure 2.

Asymptotical normality of when , , and .

Remark 7.

Compared with the previous estimator, the MLE is of course a good estimator, so why do we not recommend it? First, with the observation horizon T = 10, the simulated result is still far from the theoretical one. To obtain a reasonable result, we need at least T = 100, which is very time consuming. In contrast, the practical estimator presented in [21] requires only a small T and little computing time. In general, to estimate the drift, we recommend that practical estimator rather than the MLE.

6. Auxiliary Results

This section contains some technical results needed in the proofs of the main theorems of the paper. First, we introduce two important results from [7]:

Lemma 8.

For , we have:

This is Lemma 2.5 from [7].

Lemma 9.

For , we have:

This is Lemma 2.6 from [7].

The following lemma shows the relationship between the mfOUp and mfVm.

Lemma 10.

Let be an mfOUp with the drift parameter β:

Then, we have:

Moreover, we have the development of with:

where:

Proof.

In fact, the mixed fractional Vasicek process has a unique solution with the initial value :

On the other hand, the mixed O-U process with is defined by:

Next, we present some limit results.

Lemma 11.

Proof.

From the definition of , we have:

The condition completes the proof. □

Lemma 12.

For , as , we have:

Proof.

A standard calculation yields:

From Lemma 8,

Now, with the Chebyshev inequality, :

which implies the desired result. □

Lemma 13.

Let ; as , we have:

Moreover, from the martingale convergence theorem, we have:

Proof.

From the definition of , we can write as:

Using (48), we can write our target quantity as:

We consider the above six integrals separately. First, as , from Lemma 8,

From [7], as , we have

Next, from the proof of Lemma 12 and the Borel–Cantelli theorem, as , we obtain:

The following are the results for . When Lemma 11 is also available for all , then:

Lemma 14.

For , we have:

Proof.

The result is clear with Lemma 9. □

Now, we deal with the difficulty of the integral of :

Lemma 15.

For we have:

and:

Proof.

We still consider the integral:

as presented in Lemma 12. When ,

The first part of this expectation, which comes from the Brownian motion of course, admits the result. We develop the second part:

With this development and the same calculation in [22], we have:

and the second converges with the inequality of Cauchy–Schwarz. □

7. Conclusions

In this paper, we considered the maximum likelihood estimator for the drift parameters and in the mixed fractional Vasicek model:

with the continuous observation path . We presented the strong consistency and the asymptotic normality of the MLE and for the two unknown parameters for , as well as the joint distribution when . We also simulated the MLE when using the numerical solution of the derivative of the Wiener–Hopf equation, even though this is time consuming. Two problems remain open: the joint distribution of the MLE when and the simulation of the MLE when . They will be the subject of future study.

Author Contributions

Conceptualization, C.-H.C. and W.-L.X.; methodology, all the authors; software, Y.-Z.H. and L.S.; validation, C.-H.C. and W.-L.X.; writing—original draft preparation, Y.-Z.H. and L.S.; writing—review and editing, C.-H.C.; visualization, W.-L.X.; funding acquisition, W.-L.X. and L.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Humanities and Social Sciences Research and Planning fund of the Ministry of Education of China Grant Number 20YJA630053 (L.S.) and the National Nature Science Foundation of China Grant Number 71871202 (W.-L.X.). The APC was funded by the Humanities and Social Sciences Research and Planning fund of the Ministry of Education of China No. 20YJA630053.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Our deepest gratitude goes to the anonymous reviewers for their careful work and thoughtful suggestions that have helped improve this paper substantially.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Aït-Sahalia, Y.; Mancini, T.S. Out of sample forecasts of quadratic variation. J. Econom. 2008, 147, 17–33.

2. Comte, F.; Renault, E. Long memory continuous-time stochastic volatility models. Math. Financ. 1998, 8, 291–323.

3. Gatheral, J.; Jaisson, T.; Rosenbaum, M. Volatility is rough. Quant. Financ. 2018, 18, 933–949.

4. Cheridito, P. Mixed fractional Brownian motion. Bernoulli 2001, 7, 913–934.

5. Jacod, J.; Todorov, V. Limit theorems for integrated local empirical characteristic exponents from noisy high-frequency data with application to volatility and jump activity estimation. Ann. Appl. Probab. 2018, 28, 511–576.

6. Li, J.; Liu, Y.X. Efficient estimation of integrated volatility functionals via multiscale jackknife. Ann. Stat. 2019, 47, 156–176.

7. Chigansky, P.; Kleptsyna, M. Statistical analysis of the mixed fractional Ornstein–Uhlenbeck process. Theory Probab. Its Appl. 2019, 63, 408–425.

8. Chigansky, P.; Kleptsyna, M. Exact asymptotics in eigenproblems for fractional Brownian covariance operators. Stoch. Process. Appl. 2018, 128, 2007–2059.

9. Chigansky, P.; Kleptsyna, M.; Marushkevych, D. Mixed fractional Brownian motion: A spectral take. J. Math. Anal. Appl. 2020, 482, 123558.

10. Kukush, A.; Lohvinenko, S.; Mishura, Y.; Ralchenko, K. Two approaches to consistent estimation of parameters of mixed fractional Brownian motion with trend. Stat. Inference Stoch. Process. 2021.

11. Mishura, Y.; Zili, M. Stochastic Analysis of Mixed Fractional Gaussian Processes; Elsevier: Amsterdam, The Netherlands, 2018.

12. Kubilius, K.; Mishura, Y.; Ralchenko, K. Parameter Estimation in Fractional Diffusion Models; BS Book Series; Springer: Berlin/Heidelberg, Germany, 2017; Volume 8.

13. Cai, C.; Chigansky, P.; Kleptsyna, M. Mixed Gaussian processes: A filtering approach. Ann. Probab. 2016, 44, 3032–3075.

14. Cheridito, P. Arbitrage in fractional Brownian motion models. Financ. Stoch. 2003, 7, 533–553.

15. Dozzi, M.; Mishura, Y.; Shevchenko, G. Asymptotic behavior of mixed power variations and statistical estimation in mixed models. Stat. Inference Stoch. Process. 2015, 18, 151–175.

16. Lohvinenko, S.; Ralchenko, K. Maximum Likelihood estimation in the non ergodic fractional Vasicek model. Mod. Stoch. Theory Appl. 2019, 6, 377–395.

17. Hall, P.; Heyde, C.C. Martingale Limit Theory and Its Application; Academic Press: Cambridge, MA, USA, 1980.

18. Kozachenko, Y.; Melnikov, A.; Mishura, Y. On drift parameter estimation in models with fractional Brownian motion. Statistics 2015, 49, 35–62.

19. Marushkevych, D. Large deviations for drift parameter estimator of mixed fractional Ornstein–Uhlenbeck process. Mod. Stoch. Theory Appl. 2016, 3, 107–117.

20. Cai, C.; Xiao, W. Simulation of integro-differential equation and application in estimation ruin probability with mixed fractional Brownian motion. J. Integral Equ. Appl. 2021, 33, 1–17.

21. Cai, C.; Wang, Q.; Xiao, W. Mixed Sub-fractional Brownian Motion and Drift Estimation of Related Ornstein–Uhlenbeck Process. arXiv 2018, arXiv:1809.02038.

22. Hu, Y.; Nualart, D. Parameter estimation for fractional Ornstein–Uhlenbeck processes. Stat. Probab. Lett. 2010, 80, 1030–1038.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).