Abstract

Unmanned Aerial Vehicle (UAV)-based photogrammetric reconstruction is a key step in geometric digital twinning of bridges, but ensuring the quality of the reconstruction data through the planning of measurement configurations is not straightforward. This research investigates an approach for quantitatively evaluating the impact of different methodologies and configurations of UAV-based image collection on the quality of the collected images and 3D reconstruction data in the bridge inspection context. For an industry-grade UAV and a consumer-grade UAV, image-collection paths with different Ground Sampling Distances (GSDs) and image overlap ratios are considered, followed by 3D reconstruction with different algorithm configurations. Then, an approach for evaluating these data collection methodologies and configurations is discussed, focusing on trajectory accuracy, point-cloud reconstruction quality, and accuracy of geometric measurements relevant to inspection tasks. Through a case study on short-span road bridges, errors in different steps of the photogrammetric 3D reconstruction workflow are characterized. The results indicate that, for global dimensional measurements, the consumer-grade UAV performs comparably to the industry-grade UAV across the GSDs considered. In contrast, the local measurement accuracy changes significantly depending on the selected hardware and path-planning parameters. This research provides practical insights into controlling 3D reconstruction data quality in the context of bridge inspection and geometric digital twinning.

1. Introduction

Bridges are critical links in modern transportation networks, requiring effective operation and maintenance based on an accurate understanding of current conditions. During bridge condition assessment, various structural components are identified and assessed, and the results should be recorded in an organized manner so that experts can refer to them later [1]. To support this process, the concept of geometric digital twinning is often employed, where accurate as-built digital representations of the bridge structure are developed as a platform for assessment and data management [2,3,4,5,6]. Effective integration of such geometric digital twins into the bridge condition assessment workflow is desired to enhance the efficiency and reliability of the life-cycle management of bridge assets.

Photogrammetric 3D reconstruction is a key step in geometric digital twinning [6,7,8]. During the process, UAVs equipped with cameras are often employed to collect images of the target structure and its structural components with an appropriate distance and overlap ratio, followed by the application of a Structure-from-Motion (SfM) algorithm to reconstruct the 3D shapes and their textures. The reconstructed model contains rich geometric and semantic information, and can be used to assess the as-built structural dimensions compared with as-designed dimensions [9], develop and update Building Information Modeling (BIM) or structural analysis models [8,10,11,12], register observed damage and other findings from visual inspections to specific structural components [13], etc. The data collection process is known to be efficient, with many well-proven software applications to define and execute missions using Global Navigation Satellite System (GNSS) coordinates. Those hardware and software platforms enable the automation of major parts of the data collection process, including mission planning and basic verification, UAV flight and image capture, image post-processing (3D reconstruction), and extraction and registration of damage information [14].

Despite the efficiency of 3D reconstruction by UAV-based photogrammetry, the data collection step often faces challenges when the technique is applied to tasks requiring high accuracy and level of detail. Compared to data collection using a terrestrial laser scanner (TLS), where the accuracy of the data can be directly controlled by device selection and measurement planning, understanding and incorporating the level of detail and geometric accuracy of the reconstructed models within the inspection context is not straightforward. The operational limits of the UAVs, such as limits in controlling the UAV and camera poses under the environmental conditions of the bridge site, may cause the captured views to deviate from the desired views. Errors in the SfM process may also pose a challenge when highly accurate geometric measurements need to be performed. The quality of the data may need to be assessed depending on the types of measurements performed (e.g., 3D reconstruction data that is accurate enough for the measurement of global dimensions may not have enough detail to measure the extent of local structural damage). Practical insights into the effects of different methodologies and configurations of UAV-based image collection and photogrammetric 3D reconstruction on the resulting quality of the reconstructed data need to be established.

Existing research has attempted to relate data-collection methodologies and configurations to the resulting quality of 3D reconstruction and geometric measurements. For example, data quality at various stages of UAV-based photogrammetry has been investigated in the remote-sensing context [15]. In many such studies, errors from different sources, such as navigational sensor measurements, feature detection and matching, and SfM-based 3D reconstruction, are analyzed in isolation (often as a function of GSD) to enable rigorous statistical characterization. Ref. [16] employed these insights to design and develop a UAV-based aerial photogrammetry-aided workflow for monitoring the subsidence of structures, including bridges. Ref. [17] compared different GNSS-based point georeferencing approaches in UAV aerial photogrammetry, presenting centimeter-level accuracy compared with traditional indirect georeferencing with ground control points (GCPs) and aided indirect georeferencing. In the context of construction reality capture, Ref. [18] classified the major sources of errors into (1) the systematic error due to camera factors, and (2) the systematic error due to poor planning of camera network geometry. Based on the classification, experiments were designed for a sidewalk slab (flat surface), a concrete retaining wall (moderately curved surface), and a storage building (flat side surfaces of a cubic shape), each with different manual image capturing configurations (equipment, number of photos, and scale bar placements). Accuracy of distance measurements using the reconstructed model is then discussed, clarifying millimeter-level accuracy (less than 1% errors in most of the distance measurements performed), as well as special patterns of distance measurement accuracy for different scale bar placements. Ref. [19] focused on the point cloud registration step and discussed the impact of algorithmic configurations on the resulting accuracy of aligning bridge point-cloud data (a consistent TLS-based data collection was performed to isolate the error arising from point cloud alignment). These quality assessments provide fundamental insight into the individual technical components of bridge reality capture using UAV-based photogrammetry. However, synthesizing these component-level insights to estimate and control the quality of the final outcomes of bridge reality capture is not straightforward; the overall quality depends on many interacting factors, including the geometric and textural characteristics of the target structures, the operational and environmental conditions during field data collection, and the intended application of the reality capture data (e.g., what kinds of information will be extracted from the resulting model).

Existing research has also investigated more direct relationships between data collection methodologies and the quality of 3D reconstruction at the workflow level. Ref. [20] compared point-to-point distances between point clouds obtained from UAV-based photogrammetry and TLS as part of a bridge case study. Ref. [21] proposed an optimal path-planning approach based on a geometric model of the target structure and, as part of its validation, compared UAV-based photogrammetric point clouds acquired with the optimized path against TLS data in terms of point-to-point distances and deck length measurements. Ref. [22] assessed the quality of bridge point-cloud data obtained by UAV-based photogrammetry from multiple aspects, including data coverage, point distribution, outlier patterns, and measurement accuracy of arch dimensions. Assessments include qualitative assessments, as well as quantitative assessments using TLS data as a reference. During the assessments, point clouds of a single arch span are reconstructed using subsets of collected images with two different distance levels. Ref. [23] evaluated the accuracy of 3D reconstruction by comparing the coordinates of the GCPs with corresponding points on the reconstructed model. They showed the reconstruction accuracy for different software and different numbers of GCPs for seismically damaged buildings, demonstrating the potential of using the reconstructed data for the observation of key dimensions relevant to structural deformation. In the context of building reality capture combining TLS data and UAV-based photogrammetry data, Ref. [24] investigated the registration error, scan overlap ratio, point reconstruction error, and coverage rate, wherein methods for combining these two modes of measurements were the parameters for comparisons (a single UAV measurement case was considered, which was combined with other data sources). 
To enhance the flexibility of such workflow-level assessments, some researchers simulated the data collection plans in photo-realistic synthetic environments and compared the measurement results with the ground truth data available in the simulation environments [25,26,27,28]. These evaluations offer comprehensive views of data quality for various collection plans, but the findings may not carry over to real-world settings as is. Overall, the relationships between the steps of the bridge reality capture workflow in the field and the data quality aspects relevant to inspection tasks are still not fully understood.

In practice, UAV-based image collection is typically planned using simplified metrics that are known to correlate with overall point-cloud quality. Ref. [29] proposed to use redundancy of the observation as a quality metric to guide the measurement planning. Other metrics, such as visibility, GSD, and view angle have also been proposed to indicate the resulting quality of the reconstruction data [14]. These evaluation metrics provide useful insights into the validity of the mission during the preplanning stage, describing the minimum requirement for data collection. However, such approaches face the following challenges when the required accuracy and level of detail are relatively high: (1) these minimum requirements are generally insufficient to achieve the desired data quality (e.g., errors other than pixel discretization are often significant, and the planned and actual GSDs may differ), and (2) these metrics are most closely related to the coordinate-estimation accuracy of individual points and to local point density, which may not directly align with the objectives of reality capture or geometric digital-twin modeling (e.g., capturing images of a certain part of the structure from specific viewpoints may be critically important in applications like [30]).

This research investigates an approach for quantitatively evaluating the impact of different methodologies and configurations of UAV-based image collection on the quality of the resulting data (collected images and 3D reconstructions). Data quality assessments in three key aspects relevant to bridge inspection are developed and combined: trajectory accuracy, point-cloud reconstruction quality, and accuracy of geometric measurements relevant to inspection tasks.

- Trajectory accuracy evaluates how accurately the UAV camera follows the plan of pointing to a specific part of the structure. The assessment does not isolate, for example, errors in UAV flight control or camera gimbal control under ideal conditions. Instead, it evaluates the combined error arising from a commonly adopted practical image-acquisition workflow, including equipment selection, path-planning, GPS localization, and flight control in typical bridge inspection environments.

- Point-cloud reconstruction quality evaluates characteristic error patterns for typical short-span bridges, arising from different image-acquisition methodologies and configurations commonly used in bridge inspection. These error patterns are visualized at the global structure scale and also analyzed statistically using probability density function (PDF) and cumulative distribution function (CDF). The error patterns can provide insights into which geometric dimensions can be measured reliably and which cannot.

- Geometric measurements relevant to inspection tasks are incorporated into the quality assessment process to align the assessment results with many bridge inspection scenarios. In the context of bridge inspection, raw reconstruction results may not be the final product, but the accuracy of specific measurements performed using the reconstructed model is of interest. This assessment can provide insights into how the accuracy of those scenario-specific measurement outcomes changes and can possibly be controlled.

Combining these three evaluations, the proposed approach can characterize data quality aspects relevant to bridge inspection scenarios in the field at the workflow level. The approach is demonstrated through a case study of typical short-span road bridges in Shanghai. For two UAV platforms, an industry-grade UAV and a consumer-grade UAV, image-collection paths with different Ground Sampling Distances (GSDs) and image overlap ratios are considered, yielding insights into the change in data quality caused by different measurement methodologies and configurations. Overall, this research develops a pipeline to assess measurement methodologies and to provide practical insights into controlling 3D reconstruction data quality in the context of bridge inspection and geometric digital twinning.

Compared with existing workflow-level studies that compare or combine point clouds from UAV-based photogrammetry and TLS (e.g., [20,21,31,32]), this study (1) examines the effects of multiple aspects of the reality-capture workflow (e.g., equipment, path-planning, and SfM settings) on the overall quality of the reality-capture results, (2) develops a multidimensional quality assessment scheme (trajectory accuracy, point-cloud reconstruction quality, and geometric measurements relevant to inspection tasks) aligned with common needs of bridge inspection, and (3) reports detailed data and insights on these quality metrics obtained from typical short-span road bridges in the field.

This article is organized as follows: Section 2 discusses the methodologies of this research, including design and implementation of different measurement configurations, as well as the methodology for quantifying errors in different steps of photogrammetric 3D reconstruction. Section 3 provides a case study, demonstrating the data quality evaluation and discussing insights obtained from the evaluation. Section 4 concludes this research and discusses future work.

2. Methodologies

2.1. Overview

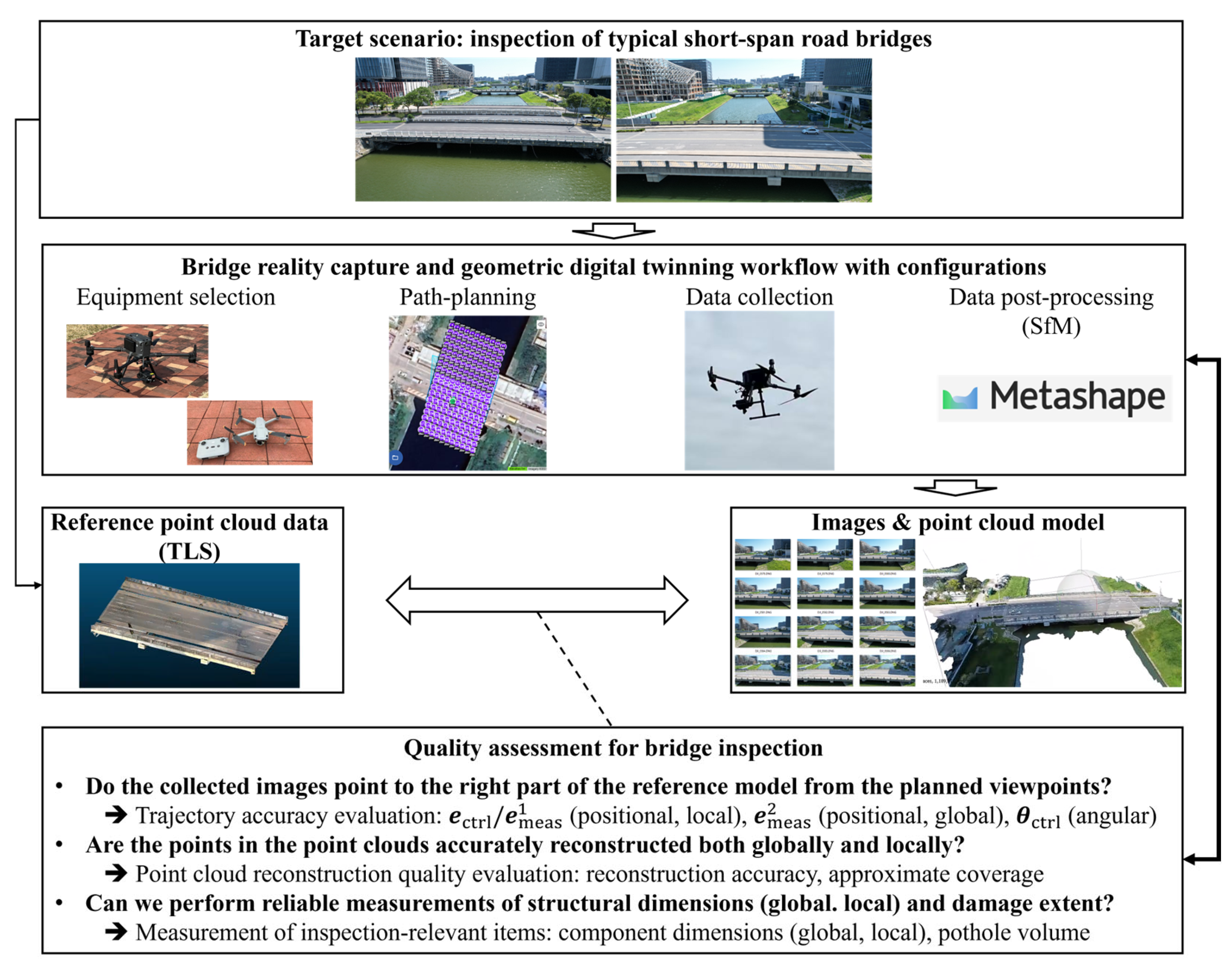

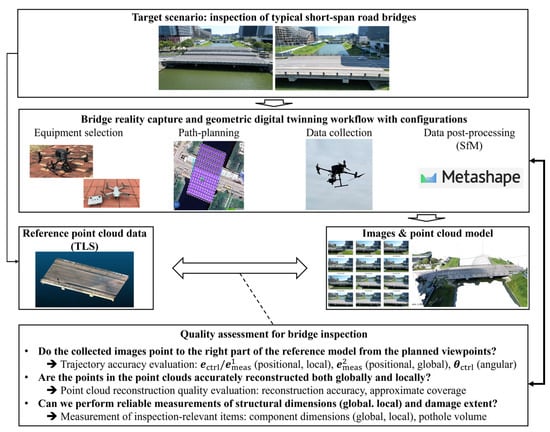

This research investigates the influence of measurement methodologies and configurations, including equipment selection, path-planning parameters, and data post-processing parameters, on the resulting quality of the bridge reality capture data. The overview of the investigation is shown in Figure 1. Focusing on the inspection of short-span road bridges as the target application, this study first designs reality-capture plans with different configurations. These plans follow a commonly adopted workflow, with varying parameters as listed in Table 1, so that the study reflects typical data-collection scenarios in practice. Regarding the equipment, this research selected two UAV platforms representative of an industry-grade UAV and a consumer-grade one, as well as a terrestrial laser scanner (TLS) providing accurate reference measurements. In the UAV path-planning step, this research designs multiple paths using Ground Sampling Distance (GSD) and overlap ratio as the key parameters. The collected images are processed by the Structure-from-Motion (SfM) algorithm to perform 3D reconstruction, followed by the evaluation of trajectory accuracy, point-cloud reconstruction quality (accuracy and approximate coverage), and measurement accuracy for inspection-relevant items. By analyzing the evaluation metrics, the practical influence of measurement configurations on the reconstruction quality is clarified.

Figure 1.

Framework of quality assessment of bridge reality capture and geometric digital twinning data under different measurement configurations.

Table 1.

Overview of the measurement configurations.

2.2. Equipment Selection

This research selects two UAV platforms, one representing large industry-grade UAVs and the other representing small consumer-grade UAVs, to clarify the influence of raw data quality on the resulting 3D reality capture quality. Moreover, a terrestrial laser scanner (TLS) is used to provide highly accurate reference data for evaluation purposes.

2.2.1. DJI Matrice 300 RTK (M300)—An Industry-Grade UAV

The DJI Matrice 300 RTK (M300) (DJI, Shenzhen, Guangdong, China [33]) is an industry-grade drone with Real-Time Kinematic (RTK) positioning for centimeter-level precision (Figure 2). This research mounts the DJI Zenmuse H20T as a payload, which integrates a 20-MP zoom camera with equivalent focal length ranging from 31.7 mm to 556.2 mm, a 12-MP wide-angle camera (equivalent focal length: 24 mm), a thermal camera (resolution: 640 × 512 pixels), and a laser rangefinder. This research uses data from the wide-angle camera, considering the importance of coverage and image overlaps in reality capture applications. The platform is remotely operated using the DJI Pilot 2 application (version 10.1.8.14). This research develops a Python (version 3.12) helper function to define and format waypoint-based missions, which are uploaded to the application and executed.

Figure 2.

DJI M300 UAV.

2.2.2. DJI Air 2S—A Consumer-Grade UAV

The DJI Air 2S (DJI, Shenzhen, Guangdong, China [33]) is a compact consumer-grade drone designed for aerial imaging applications (Figure 3). The Air 2S is equipped with a 1-inch CMOS sensor camera capable of capturing 20-MP still images, with an equivalent focal length of 22 mm. The UAV is remotely operated using the designated controller connected to a smartphone running a compatible application; this research used Litchi (version 2.15.7) [35], considering the ease and flexibility of performing missions defined outside the application (e.g., ones generated using a Python program).

Figure 3.

DJI Air 2S UAV.

2.2.3. FARO Focus S350 Laser Scanner

The FARO Focus S350 (FARO, Lake Mary, FL, USA [34]) is a high-precision terrestrial laser scanner (TLS) designed for detailed 3D data acquisition (Figure 4). The platform offers a maximum range of 350 m with a systematic measurement error of ±1 mm (evaluated at around 10 m and 25 m) and a measurement rate of up to 976,000 points per second. The raw scanning data collected in the field is processed by the FARO SCENE software to obtain a high-precision point cloud model of the entire bridge, serving as the reference for Structure-from-Motion-based point-cloud data quality assessments.

Figure 4.

FARO Focus S350 TLS.

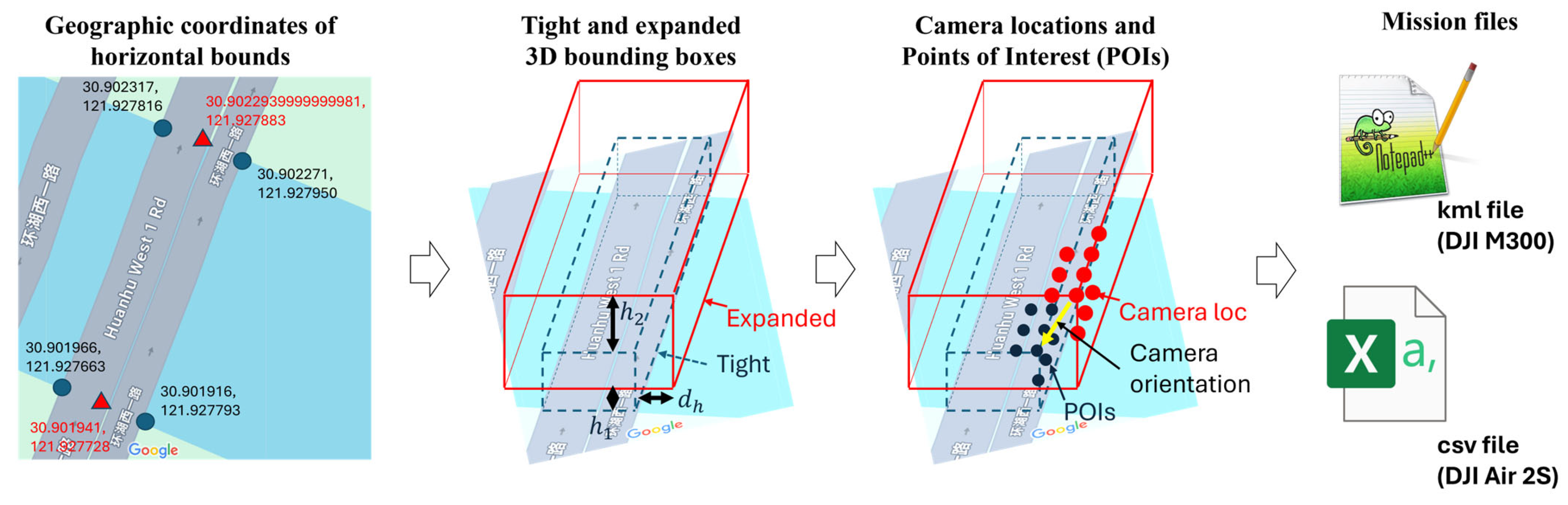

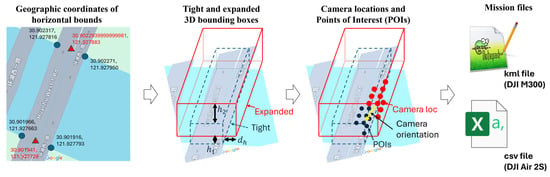

2.3. UAV Path-Planning

This research defines the UAV paths based on the Ground Sampling Distance (GSD) and image overlap ratio as primary parameters, following the steps illustrated in Figure 5. First, the four corners of the bridge footprint are read on the map, and their geographic coordinates are recorded. The horizontal bounding box is extruded into a tight 3D bounding box using the estimated height of the bridge (above ground). This tight bounding box is shifted upward and expanded in both the vertical and lateral directions to define the planes in which the UAVs maneuver. The vertical expansion is defined by the offset of the bottom plane and that of the top plane, ensuring sufficient vertical clearance from the ground and from the top surface of the bridge (a larger clearance is typically needed above the bridge to ensure the safety of traffic using it). The horizontal offset shown in the figure was determined using the GSD for the bridge sides [m/pixel] as the parameter: this GSD fixes the distance from the bottom corners of the expanded bounding box (red box in the figure) to the corresponding bottom corners of the tight bounding box, and the horizontal offset is the horizontal component of that distance. For the top surface, the vertical clearance is set externally considering the safety of the mission; therefore, the GSD for the top surface [m/pixel] was calculated from that clearance rather than specified as an input.
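The relation between GSD and camera standoff described above can be sketched as follows. This is a minimal illustration that assumes the standard pinhole-camera relation (GSD = distance × pixel pitch / focal length) and assumes that the vertical component of the corner-to-corner distance equals the bottom-plane offset; the function and parameter names are hypothetical and are not taken from the original helper program.

```python
import math


def distance_from_gsd(gsd_m_per_px, focal_length_mm, sensor_width_mm, image_width_px):
    """Camera-to-surface distance [m] that yields the requested GSD (pinhole assumption)."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return gsd_m_per_px * focal_length_mm / pixel_pitch_mm


def gsd_from_distance(distance_m, focal_length_mm, sensor_width_mm, image_width_px):
    """GSD [m/pixel] obtained at a given distance, e.g., the top-surface clearance."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return distance_m * pixel_pitch_mm / focal_length_mm


def horizontal_offset(distance_m, bottom_offset_m):
    """Horizontal component of the corner-to-corner distance.

    Assumption: the vertical component equals the offset of the bottom plane.
    """
    if distance_m < abs(bottom_offset_m):
        raise ValueError("Requested GSD is finer than the vertical offset allows.")
    return math.sqrt(distance_m**2 - bottom_offset_m**2)
```

For the side faces, the GSD is the input and the offset is the output; for the top face, the clearance is the input and the GSD is the output, which is why both directions of the relation are included.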

Figure 5.

Steps of UAV path-planning adopted in this research.

The camera locations were then sampled on the side faces and top face of the expanded bounding box in a grid pattern. Corresponding to those camera locations, camera Points of Interest (POIs) were sampled on the surface of the tight inner bounding box, with the numbers of grid lines in the bridge longitudinal, lateral, and vertical directions equal to those defined for the expanded bounding box. The intervals of the grid lines on the tight bounding box were first determined from the image resolution (width and height in pixels) and the overlap ratio at the specified GSD (e.g., 0.9). Then, the numbers of grid lines on the tight bounding box in the longitudinal, lateral, and vertical directions were determined by dividing the corresponding dimensions of the tight bounding box by the interval and rounding up (ceiling operation). The same numbers of grid lines are also placed with uniform intervals on the expanded bounding box, defining the camera locations. The camera poses defined by those locations and POIs are connected in a lawn-mowing pattern.
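The grid construction can be illustrated with a short sketch. It assumes that the grid interval equals the image footprint at the specified GSD (GSD × pixel count) multiplied by one minus the overlap ratio, which is a common convention but is an assumption here; all names are hypothetical.

```python
import math


def grid_interval(gsd_m_per_px, pixels, overlap_ratio):
    """Spacing between adjacent grid lines [m] (assumed: footprint * (1 - overlap))."""
    return gsd_m_per_px * pixels * (1.0 - overlap_ratio)


def grid_count(dimension_m, interval_m):
    """Number of grid lines: bounding-box dimension divided by interval, rounded up."""
    return math.ceil(dimension_m / interval_m)


def lawnmower_order(n_rows, n_cols):
    """Visit grid cells row by row, reversing direction on every other row."""
    order = []
    for row in range(n_rows):
        cols = range(n_cols) if row % 2 == 0 else range(n_cols - 1, -1, -1)
        order.extend((row, col) for col in cols)
    return order
```

Reversing every other row keeps consecutive waypoints adjacent, which avoids long repositioning legs between rows.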

The missions for the M300 UAV are formatted as KML files that can be uploaded to the DJI Pilot 2 application, while missions for the Air 2S UAV are formatted as CSV files that can be uploaded to the Litchi application. At each waypoint, the UAV stops for 1 s and takes a picture, reducing the impact of UAV motion and ensuring sufficient time to collect and store data.

2.4. Structure-from-Motion-Based 3D Reconstruction and Post-Processing

This research applies the Structure-from-Motion (SfM) method to the collected images to obtain a dense 3D reconstruction of bridges. The processing of different cases is automated using Agisoft Metashape software (version 2.2.1) with its Python Application Programming Interface (API) [36]. After loading images and aligning cameras (sparse reconstruction), the region of interest (ROI) for dense 3D reconstruction is set by computing a tight axis-aligned bounding box (AABB) in the East–North–Up (ENU) coordinate system and expanding that AABB by 50% on each side along each axis. The dense reconstruction in that ROI is then performed, followed by the export of dense point-cloud data and estimated camera poses.
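The ROI expansion step can be sketched with NumPy alone. This is an illustration rather than the Metashape API: the input points (for example, sparse tie points or camera centers in ENU) and the reading of "50%" as half of the box extent added on each side are both assumptions.

```python
import numpy as np


def expanded_aabb(points_enu, margin_ratio=0.5):
    """Tight axis-aligned bounding box of the points, grown on every side.

    margin_ratio=0.5 adds 50% of the box extent on each side along each axis
    (assumed interpretation), so the expanded box is twice as large per axis.
    """
    pts = np.asarray(points_enu, dtype=float)
    lower, upper = pts.min(axis=0), pts.max(axis=0)
    margin = margin_ratio * (upper - lower)
    return lower - margin, upper + margin
```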

During this process, Metashape exposes two parameters (among others) that directly control the accuracy of the SfM results: alignment accuracy and depth-map quality. The alignment accuracy parameter controls the up-/downsampling applied to the images before feature detection and matching. By default, it is set to “High accuracy”, meaning that images are processed at their original resolution. When set to “Highest accuracy”, the images are upsampled by a factor of four before processing. The depth-map quality parameter specifies the resolution at which depth-maps are computed. By default, it is set to “High quality”, meaning that depth-maps are downsampled by a factor of four relative to the original image resolution. When set to “Ultra High quality”, depth-maps are computed at the original image resolution. In all analyses of this research, cameras were self-calibrated, and mild depth filtering was applied (keeping relatively fine details).

The raw dense point-cloud data obtained from UAV imagery and TLS (reference data) is post-processed to enable subsequent data quality evaluations. The processing includes (1) extracting only the bridge part, (2) removing noisy points, including vegetation and other irrelevant objects on and in the immediate vicinity of the bridge, (3) rough manual alignment of the SfM point-cloud data to the reference point-cloud data, and (4) fine alignment and scale adjustment using the Iterative Closest Point (ICP) algorithm [37]. These steps are applied manually or semi-automatically using CloudCompare software (version 2.13.2) [38].
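To illustrate what "fine alignment and scale adjustment" computes, the sketch below gives the closed-form similarity fit (rotation, translation, isotropic scale) for a set of already matched point pairs, following the well-known Umeyama least-squares solution. It is not CloudCompare's implementation: an ICP procedure would repeat a fit of this kind after re-estimating nearest-neighbor correspondences, and the function name is hypothetical.

```python
import numpy as np


def similarity_from_correspondences(source, target):
    """Least-squares scale, rotation, translation with target ~ scale * R @ source + t."""
    src = np.asarray(source, dtype=float)
    dst = np.asarray(target, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # Cross-covariance between the centered target and source points.
    covariance = dst_c.T @ src_c / len(src)
    u, singular_values, vt = np.linalg.svd(covariance)

    # Guard against a reflection so that R is a proper rotation.
    sign = np.eye(3)
    if np.linalg.det(u) * np.linalg.det(vt) < 0:
        sign[2, 2] = -1.0

    rotation = u @ sign @ vt
    source_variance = (src_c**2).sum() / len(src)
    scale = np.trace(np.diag(singular_values) @ sign) / source_variance
    translation = mu_dst - scale * rotation @ mu_src
    return scale, rotation, translation
```

Estimating the scale jointly with the rotation and translation matters here because the SfM scale is inferred from GNSS data and may deviate slightly from the metric scale of the TLS reference.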

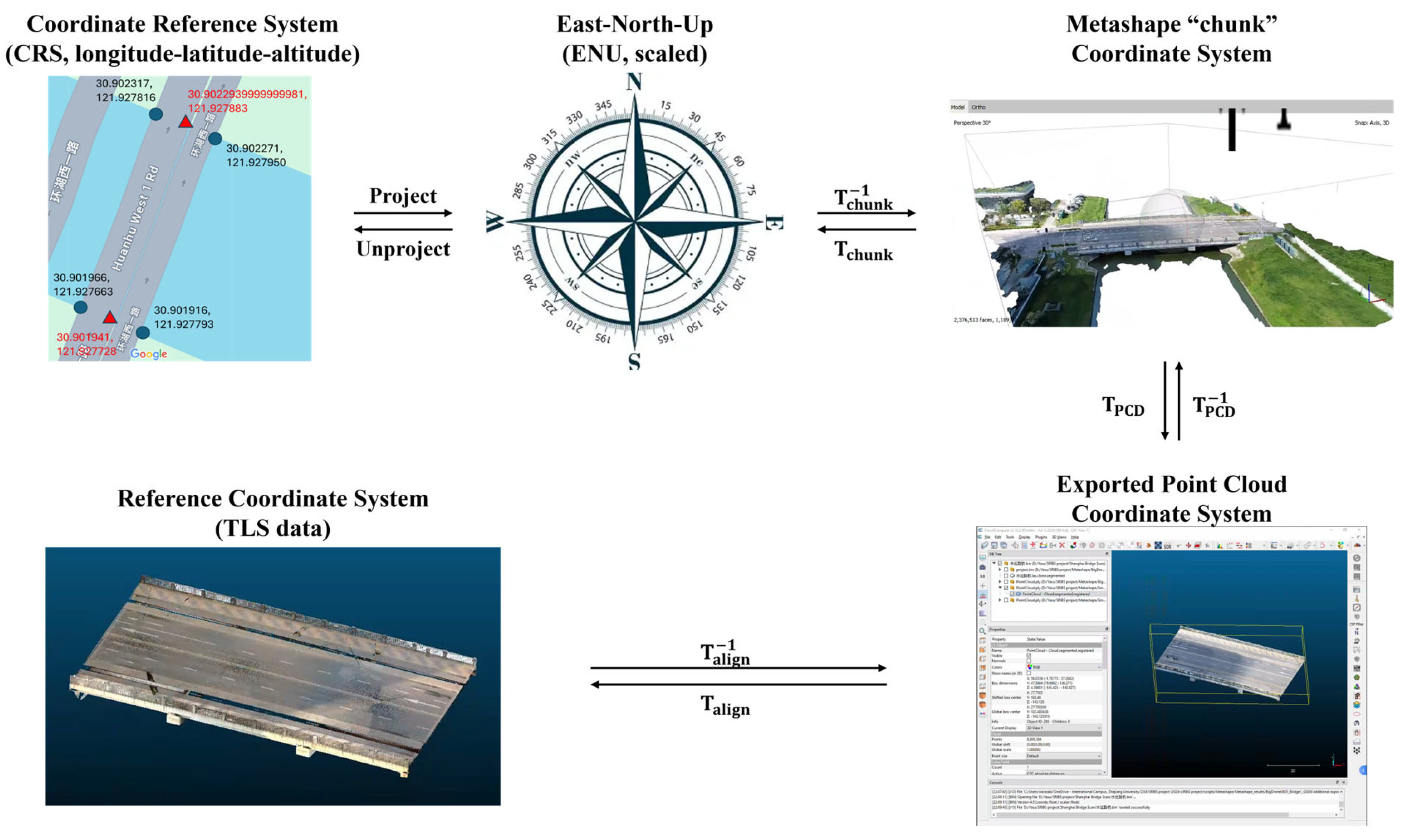

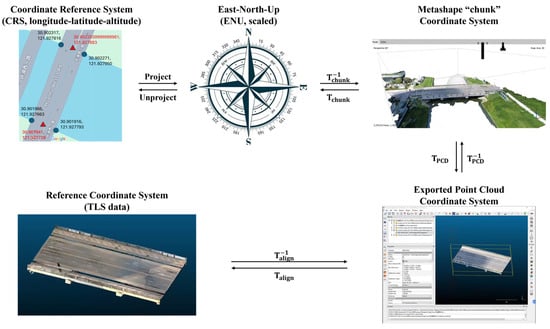

To prepare for the comparative evaluations of different measurement configurations, data should be transformed into appropriate coordinate systems. The coordinate systems and transformation matrices discussed in this research are summarized in Figure 6. Data collection plans (UAV flight paths) are originally expressed in a coordinate reference system (CRS) consisting of longitude, latitude, and altitude (altitude is expressed either above sea level or above ground level, depending on the context). The coordinates in the CRS are projected to the East–North–Up (ENU) coordinate system and then transformed to the coordinate system used internally by the SfM algorithm (this research uses the term “chunk”, following the terminology of the Metashape software). When the point cloud is exported, a further coordinate transform is applied to express the data in meters (the scale is inferred from the GPS data embedded in the image EXIF data). This exported point-cloud coordinate system is also determined in the SfM process; therefore, the exported point cloud should be further converted to a reference coordinate system to enable comparative evaluation. This research uses the coordinate system of the accurate TLS data (Section 2.2.3) as the reference coordinate system, and identifies the similarity transform (rotation, translation, and isotropic scale adjustment) from the exported point-cloud coordinate system to the reference coordinate system through the alignment of the exported and reference point-cloud data.
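The first step of this chain, projecting geographic coordinates to a local ENU frame, can be sketched with the standard WGS 84 formulas. The sketch assumes that latitude and longitude are WGS 84 values in degrees and that the height is ellipsoidal; altitudes given above sea level or above ground level would need to be converted first. The function names are hypothetical.

```python
import math

WGS84_A = 6378137.0                    # semi-major axis [m]
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, height_m):
    """WGS 84 geodetic coordinates to Earth-centered, Earth-fixed (ECEF) [m]."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + height_m) * math.cos(lat) * math.cos(lon)
    y = (n + height_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + height_m) * math.sin(lat)
    return x, y, z


def geodetic_to_enu(lat_deg, lon_deg, height_m, ref_lat_deg, ref_lon_deg, ref_height_m):
    """East, North, Up coordinates [m] relative to a reference geodetic point."""
    x, y, z = geodetic_to_ecef(lat_deg, lon_deg, height_m)
    x0, y0, z0 = geodetic_to_ecef(ref_lat_deg, ref_lon_deg, ref_height_m)
    dx, dy, dz = x - x0, y - y0, z - z0
    lat0, lon0 = math.radians(ref_lat_deg), math.radians(ref_lon_deg)
    east = -math.sin(lon0) * dx + math.cos(lon0) * dy
    north = (-math.sin(lat0) * math.cos(lon0) * dx
             - math.sin(lat0) * math.sin(lon0) * dy
             + math.cos(lat0) * dz)
    up = (math.cos(lat0) * math.cos(lon0) * dx
          + math.cos(lat0) * math.sin(lon0) * dy
          + math.sin(lat0) * dz)
    return east, north, up
```

Choosing a reference point near the bridge keeps the ENU coordinates small, which is convenient for the subsequent similarity transforms.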

Figure 6.

Coordinate systems and transformations discussed in this research.

2.5. Evaluation Methodologies

This research performed evaluations of the bridge reality capture quality in three aspects: trajectory accuracy, point-cloud reconstruction accuracy, and measurement accuracy for specific items relevant to inspection. Evaluations of these aspects are discussed in the following subsections.

2.5.1. Trajectory Accuracy

The evaluation of trajectory accuracy clarifies how accurately UAV image collection plans are executed. The plans (planned camera poses or planned camera locations) are expressed in the CRS and can be converted to the reference coordinate system by tracing the transformations shown in Figure 6. When a plan is executed using a UAV platform, the UAV uses its positioning functionality, based on which the UAV and camera poses are controlled to reach the designated camera pose as accurately as possible. The measured camera coordinates are recorded in the image EXIF data and are also converted to the reference coordinate system. The difference between the planned and measured camera locations primarily indicates the accuracy of UAV and camera pose control during the mission.

The two horizontal components of this difference indicate how well the planned GNSS coordinates are followed by the UAV camera. Interpretation of the vertical component is less straightforward, because missions are defined using height above ground, while the altitude data recorded in EXIF typically contains a large offset and is less accurate. For the altitude data, this research therefore primarily interprets the standard deviation of the error.
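A minimal sketch of these statistics is given below. It assumes that the planned and measured camera locations are already expressed in the same metric frame with the third axis pointing up, and it takes the error as measured minus planned; the sign convention and all names are assumptions.

```python
import numpy as np


def location_error_stats(planned_xyz, measured_xyz):
    """Per-waypoint location errors and summary statistics (measured minus planned)."""
    planned = np.asarray(planned_xyz, dtype=float)
    measured = np.asarray(measured_xyz, dtype=float)
    error = measured - planned
    horizontal = np.linalg.norm(error[:, :2], axis=1)
    return {
        "error": error,
        "horizontal_mean": float(horizontal.mean()),
        "horizontal_max": float(horizontal.max()),
        # The vertical channel may carry a large constant offset, so only its
        # spread (sample standard deviation) is summarized.
        "vertical_std": float(error[:, 2].std(ddof=1)),
    }
```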

The UAV and camera trajectories are controlled so that the measured locations recorded in the EXIF data match the planned locations (defined by GNSS coordinates), but the measured locations are themselves susceptible to error [39]. This research analyzes this GNSS measurement error using the following two methods. The first method analyzes the random error, defined as the difference between the measured coordinates read from the EXIF data and the camera locations estimated by the SfM algorithm; both are converted to the reference coordinate system by tracing the transforms defined in Figure 6.

This random error represents the correction made by the SfM algorithm to make the camera locations relative to the bridge accurate. It can capture the local random variability of the location measurements, but not the systematic error (bias): if all measurements are offset by a constant amount, SfM will simply reconstruct the model in a slightly shifted location, without correcting camera poses locally. To observe the systematic error, the second method takes two datasets of the same bridge collected under different measurement configurations (e.g., date and time, GNSS receivers, and other algorithmic factors), performs SfM and model alignment for each, and forms the composite transform from the CRS to the reference coordinate system for each dataset by chaining the transforms in Figure 6.

Each composite transform defines how the GNSS coordinates measured in the corresponding dataset are mapped to the reference coordinate system. Therefore, the actual difference in location expressed by the same GNSS coordinates at two different times can be observed by transforming the same coordinates with these two transforms and taking the difference.

This error can be regarded as a composite workflow-level systematic error that arises when the recorded GNSS coordinates are transformed into the reference coordinate system of the structure (it does not represent purely the GNSS positioning error).
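The systematic-error comparison can be sketched with 4 × 4 homogeneous matrices. The sketch assumes that each step of the chain (for example, ENU to chunk, chunk to exported cloud, exported cloud to reference) is available as a 4 × 4 matrix acting on ENU coordinates; the names are hypothetical.

```python
import numpy as np


def compose(*steps):
    """Chain 4x4 transforms; the first argument is applied to the points first."""
    total = np.eye(4)
    for step in steps:
        total = np.asarray(step, dtype=float) @ total
    return total


def apply_transform(transform, points):
    """Apply a 4x4 homogeneous transform to an (N, 3) array of points."""
    pts = np.asarray(points, dtype=float)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])
    return (homogeneous @ np.asarray(transform, dtype=float).T)[:, :3]


def systematic_offset(transform_a, transform_b, points_enu):
    """Difference in reference-frame location of the same coordinates under two datasets."""
    return apply_transform(transform_b, points_enu) - apply_transform(transform_a, points_enu)
```

If the two datasets were perfectly consistent, the two composite transforms would be identical and the offset would vanish for every input coordinate.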

Besides camera locations, camera angles also affect the area captured by each image. The planned camera directions can be extracted from the camera pose matrices expressed in the reference coordinate system, while the corresponding actual camera directions can be estimated by transforming the SfM results (camera poses) to the reference coordinate system. The angular error can then be estimated as the angle between the planned and estimated camera directions.

These errors and their basic statistics characterize the accuracy of performing data collection missions on different platforms.
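One common way to quantify the angular error is the angle between the two viewing-direction vectors, obtained from their normalized dot product. The sketch below uses that definition as an assumption; the function name is hypothetical.

```python
import numpy as np


def angular_error_deg(planned_directions, estimated_directions):
    """Angle [deg] between paired viewing-direction vectors, one pair per row."""
    planned = np.asarray(planned_directions, dtype=float)
    estimated = np.asarray(estimated_directions, dtype=float)
    planned = planned / np.linalg.norm(planned, axis=1, keepdims=True)
    estimated = estimated / np.linalg.norm(estimated, axis=1, keepdims=True)
    # Clip guards against round-off pushing the cosine slightly outside [-1, 1].
    cosine = np.clip(np.sum(planned * estimated, axis=1), -1.0, 1.0)
    return np.degrees(np.arccos(cosine))
```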

2.5.2. Point-Cloud Reconstruction Quality

The overall quality of the reconstructed point-cloud data is evaluated by comparing it with the reference point-cloud data collected by the TLS. The shortest distance from each point in the reconstructed point cloud to the reference point-cloud data is computed to quantify how accurately the geometry of each part is reconstructed (referred to as reconstruction accuracy hereafter). At the same time, the shortest distance from each point in the reference point-cloud data to the reconstructed point-cloud data is evaluated. The set of points in the reference point-cloud data for which this shortest distance is less than a threshold value indicates the parts of the structure covered by the reconstructed point-cloud data. This research uses the proportion of this set of points to the entire set of reference points as an approximate metric of the coverage of the reconstructed model.
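The two directional distance evaluations can be sketched as follows. The brute-force nearest-neighbor search is used only to keep the example self-contained; for dense clouds a spatial index such as a k-d tree would be needed. The threshold value and all names are assumptions.

```python
import numpy as np


def nearest_distances(query, target, chunk_size=2048):
    """Distance from each query point to its nearest target point (brute force)."""
    query = np.asarray(query, dtype=float)
    target = np.asarray(target, dtype=float)
    distances = np.empty(len(query))
    for start in range(0, len(query), chunk_size):
        block = query[start:start + chunk_size]
        squared = ((block[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        distances[start:start + chunk_size] = np.sqrt(squared.min(axis=1))
    return distances


def coverage_ratio(reference, reconstructed, threshold):
    """Fraction of reference points with a reconstructed point closer than threshold."""
    return float(np.mean(nearest_distances(reference, reconstructed) < threshold))


def empirical_cdf(values):
    """Sorted values and their cumulative probabilities, for CDF plots."""
    ordered = np.sort(np.asarray(values, dtype=float))
    return ordered, np.arange(1, len(ordered) + 1) / len(ordered)
```

Reconstruction accuracy uses `nearest_distances(reconstructed, reference)`, while coverage swaps the roles of the two clouds, so the two metrics are not symmetric.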

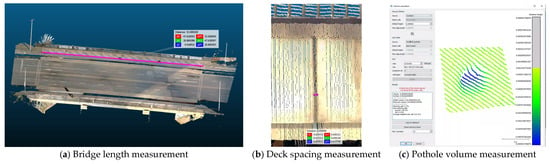

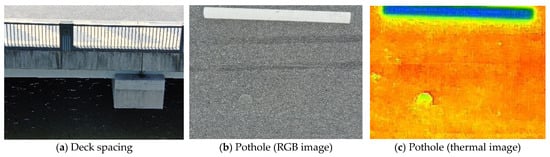

2.5.3. Measurement of Component Dimensions and Damage Extent

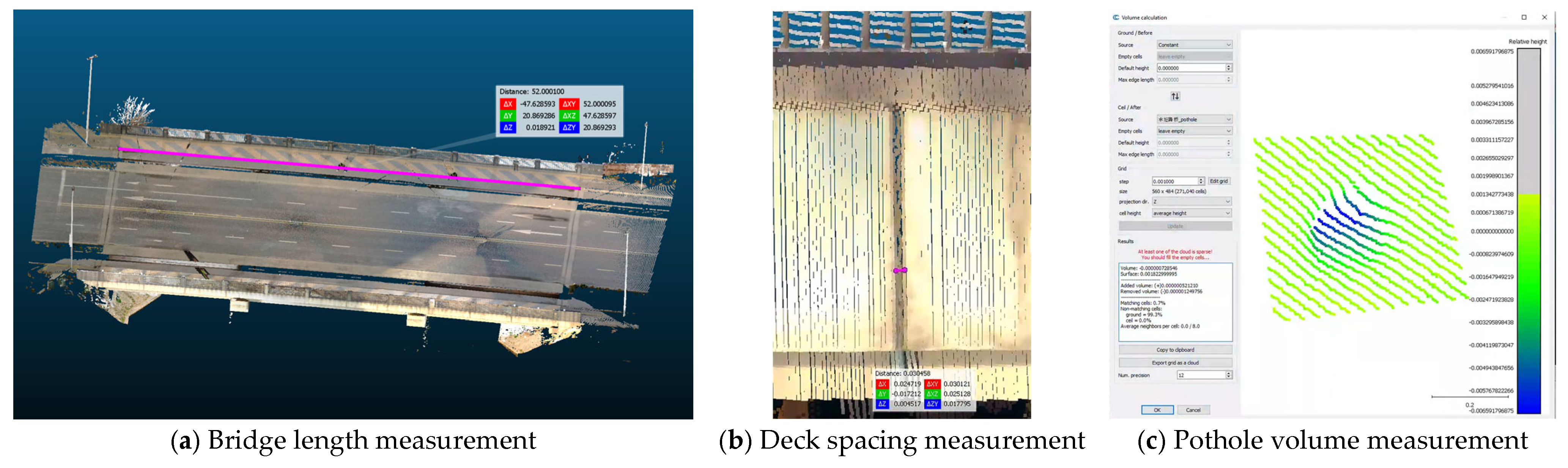

While the point-cloud reconstruction quality metrics discussed in Section 2.5.2 represent global data quality and sometimes local patterns of the quality, a more direct assessment of data quality in the context of bridge inspection is also needed. This research measures key dimensions and damage extent commonly discussed in inspection reports, such as deck length, deck spacing, and pothole volume. The measurements were performed with the help of CloudCompare software (Figure 7). Length measurements were performed by selecting two points that accurately define the target length (e.g., corner points) and calculating the distance between those two points (Figure 7a,b). For the volume measurement, a plane is fitted to the local point-cloud data, followed by the coordinate transformation to align the point cloud and the plane to the plane z = 0. The local point cloud is further segmented to extract the area near the pothole only, and its z coordinates are integrated within the segmented region (Figure 7c). These measurements across different configurations, as well as the reference configuration leveraging the TLS, are analyzed to clarify the influence of measurement configurations on these global and local structural measurements.

Figure 7.

Point cloud–based measurement process. The scale bar follows the units of the point cloud’s coordinate system (e.g., meters when the Reference Coordinate System in Figure 6 is used).
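
The volume integration step can be sketched as below, assuming the local point cloud has already been aligned so that the fitted pavement plane is z = 0 and segmented to the pothole region. The grid-cell averaging, the sign convention (depth taken as −z), and the function name are assumptions for illustration, not the exact procedure used in CloudCompare.

```python
import math
from collections import defaultdict


def integrate_volume(points, cell_size):
    """Approximate the volume below the plane z = 0 for plane-aligned points.

    Points are binned into square cells in the x-y plane; the mean z of each
    cell is multiplied by the cell area and summed with a negative sign, so
    that depressions below the plane give a positive volume. The integral is
    signed: parts above the plane reduce the total.
    """
    cells = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        cells[key].append(z)
    cell_area = cell_size * cell_size
    return sum(-sum(zs) / len(zs) * cell_area for zs in cells.values())
```

The result is expressed in the cubed unit of the point-cloud coordinates, and it depends on the chosen cell size relative to the point density.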

3. Case Study

3.1. Bridge Descriptions

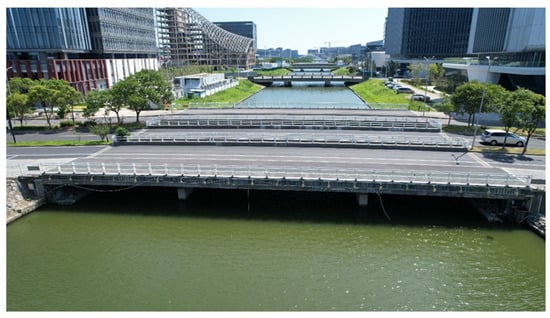

This research selected two short-span road bridges as the testbed for the case study. The first Chenghegang Bridge is on Huanhu South first Road in Pudong New Area, Shanghai, spanning Chenghe Port. The bridge is a three-span, cast-in-place reinforced concrete continuous box girder bridge with a total length of 45.0 m (18 m main span and 13.5 m side span on each side). The total width of the bridge deck is 43.0 m. An overview of the first Chenghegang Bridge is shown in Figure 8.

Figure 8.

The first Chenghegang Bridge, Pudong New Area, Shanghai.

The second Chenghegang Bridge is located on Shuiyun Road in Pudong New Area, Shanghai. It is a three-span, cast-in-place reinforced concrete beam bridge with a total length of 52.0 m (20.0 m main span and 16.0 m side span on each side). The total width of the bridge deck is 28.8 m. An overview of the second Chenghegang Bridge is shown in Figure 9.

Figure 9.

The second Chenghegang Bridge, Pudong New Area, Shanghai.

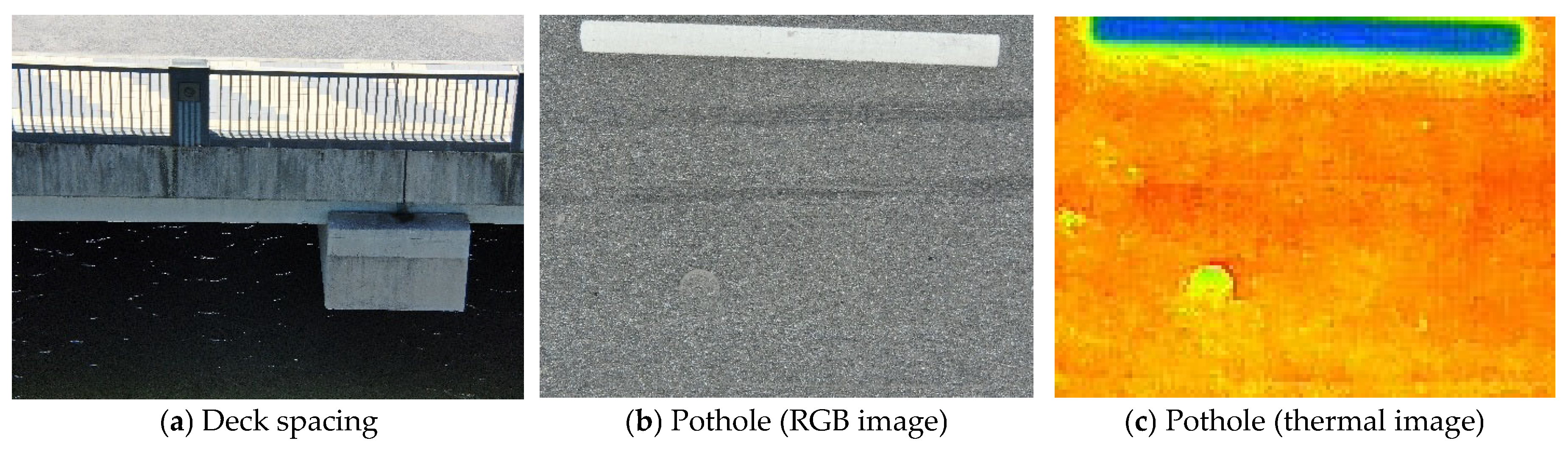

Both bridges were in good condition, and no significant structural damage was observed. Therefore, in the later quality evaluation, we considered measurements of deck spacing (local dimensions, Figure 10a) and minor potholes on the pavement (local volume, Figure 10b,c), as well as bridge global dimensions, as geometric measurements similar to those performed during actual inspection activities.

Figure 10.

Point cloud-based local measurement targets for the second Chenghegang Bridge. Colors of the thermal image indicate relative surface temperature, with blue representing lower temperatures and yellow–red representing higher temperatures.

3.2. UAV-Based Image Collection and 3D Reconstruction

Following the steps discussed in Section 2, two UAVs, DJI M300 and DJI Air 2S, are used to collect images of the two bridges. The values of key parameters discussed in Section 2.3, height offset and , GSD for the side , and overlap ratio , are shown in Table 2. For both bridges, was fixed to 15 [m], considering the need for ensuring sufficient vertical clearance from the bridge top surface. Because was fixed, GSD of the top was determined by

Table 2.

Path-planning parameters considered in the case study. First: The first Chenghegang Bridge, Second: The second Chenghegang Bridge. Numbers of images shown in parentheses indicate those after removing noisy images.

For the equipment selected for this research, is 5.17 mm/pixel for DJI M300 and 4.30 mm/pixel for DJI Air 2S. For the scanning of the sides of the bridges, GSD was controlled and set to 5 [mm] and 8 [mm] (corresponding to the horizontal distance of 14.38 [m] and 23.14 [m] for M300, 17.34 [m] and 27.86 [m] for Air 2S). We also planned to perform the missions with ; however, we did not end up executing the mission because we assessed that the UAVs (particularly M300 collecting images with its wide-angle camera) go too close to the bridge. Finally, image overlap ratio, , is generally set to 0.9, considering that lower overlap cases can be obtained by sampling images from the set of images collected with . For the top side of the first Chenghegang Bridge, the overlap ratio in the bridge lateral direction was set to 0.8, because the number of images for reached about 500 images for the top side only. For both bridges, and are fixed due to the operational constraint, and therefore, the data for the top side was collected once for the case with , and reused for cases with other values of (UAV paths for different values of are similar for the top surface, according to the process described in Section 2.3). In total, missions are planned for two UAVs and two values of , with cases augmented for different values of by sampling collected images. We performed data collection on 5–6 August 2025. Both days were sunny days with moderate winds.

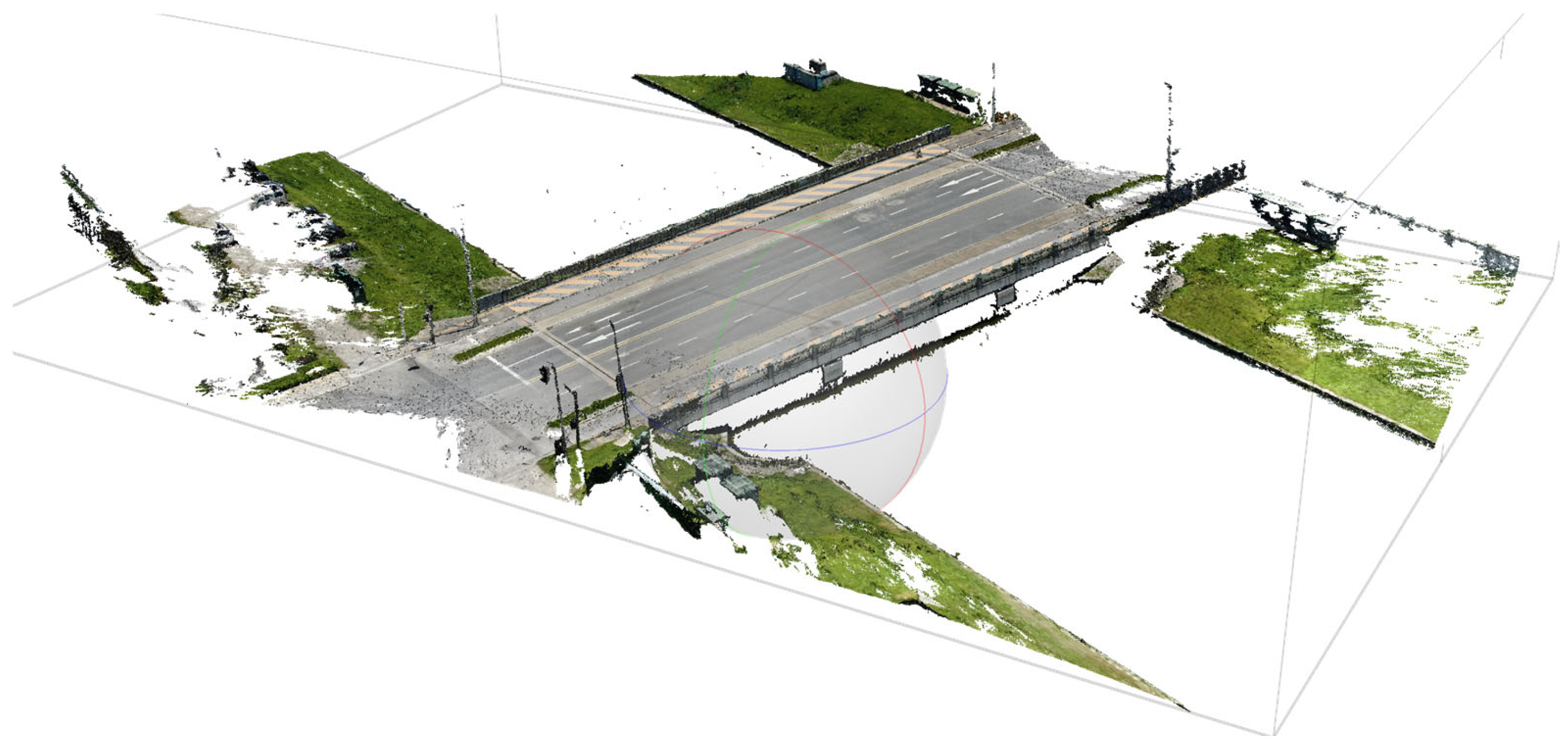

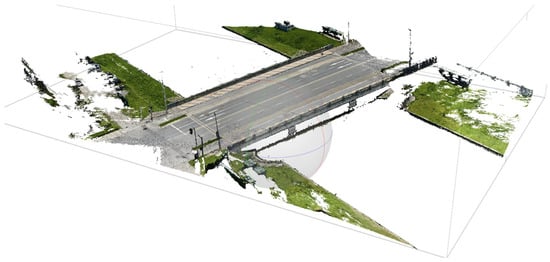

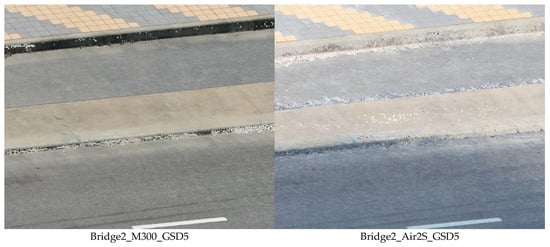

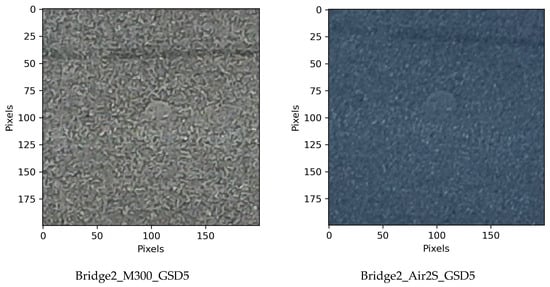

The datasets are referred to with the bridge name, UAV name, , and spatial downsampling ratio that controls image overlaps. For example, Bridge1_M300_GSD5 refers to the dataset of the first Chenghegang Bridge, collected with the DJI M300 UAV with and overlap ratio listed in Table 2. Bridge2_Air2S_GSD8_DS2 refers to the dataset of the second Chenghegang Bridge, collected with the DJI Air 2S UAV with and with spatial downsampling in the bridge longitudinal direction by a factor of 2 (the effective overlap in the longitudinal direction reduces from 0.9 to 0.8, or from 0.8 to 0.6). In this paper, we also call these effective overlaps “overlaps”, and use to denote the parameter. Representative raw point-cloud data obtained as a result of SfM are shown in Figure 11.

Figure 11.

Representative raw point-cloud data (Bridge2_M300_GSD5). The raw point-cloud data comprises 20,573,375 points. See Table 2 for the details of data collection parameters.
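
The relation between the spatial downsampling factor and the effective overlap used in the dataset names can be sketched as below. The sketch assumes equally spaced images along a straight path, so that the spacing between consecutive images is proportional to one minus the overlap ratio; the function names are hypothetical.

```python
def effective_overlap(overlap, factor):
    """Overlap between consecutive kept images when every `factor`-th image is kept."""
    if factor < 1:
        raise ValueError("downsampling factor must be at least 1")
    # Keeping every `factor`-th image multiplies the image spacing by `factor`.
    return 1.0 - factor * (1.0 - overlap)


def downsample(images, factor):
    """Keep every `factor`-th image of an ordered image sequence."""
    return images[::factor]
```

With this relation, a factor of 2 reduces an overlap of 0.9 to 0.8 and an overlap of 0.8 to 0.6, matching the values stated above.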

Based on the collected images, 3D reconstruction was performed using Metashape Software, followed by post-processing discussed in Section 2.4. A total of 6 reconstructions listed in Table 2, as well as 12 additional reconstructions with reduced longitudinal image overlap ratios (DS2 and DS3), were performed. Moreover, two more reconstructions were performed with different configurations of the SfM algorithm (Metashape software in this research), as discussed in Section 2.4. Choosing Bridge2_M300_GSD5 as a representative case, the alignment accuracy was changed from “High accuracy” to “Highest accuracy” (Bridge2_M300_GSD5_HighestAlign), or the depth-map quality was changed from “High quality” to “Ultra High quality” (Bridge2_M300_GSD5_UHDepth). These algorithm configurations change the computational load of the workflow significantly. For example, using a computer with Intel(R) Core(TM) i9-10900K CPU @ 3.70 GHz, 64.0 GB RAM, NVIDIA GeForce RTX 3090 (24 GB), and NVIDIA GeForce RTX 4090 (24 GB), Bridge2_M300_GSD5, Bridge2_M300_GSD5_HighestAlign, and Bridge2_M300_GSD5_UHDepth took 1.80 h, 1.87 h, and 26.0 h, respectively (bottleneck was the step to build a dense point cloud from depth-maps). Increasing the depth-map resolution by a factor of two in both horizontal and vertical directions resulted in a computation time increase much greater than the corresponding fourfold increase in pixel count, limiting the practicality of that configuration or increasing the need for smart selection of reconstruction regions.

3.3. Data Quality Evaluation

In this section, data quality of the 18 reconstruction results is evaluated by the steps discussed in Section 2.5 to clarify and quantify the influence of different measurement configurations.

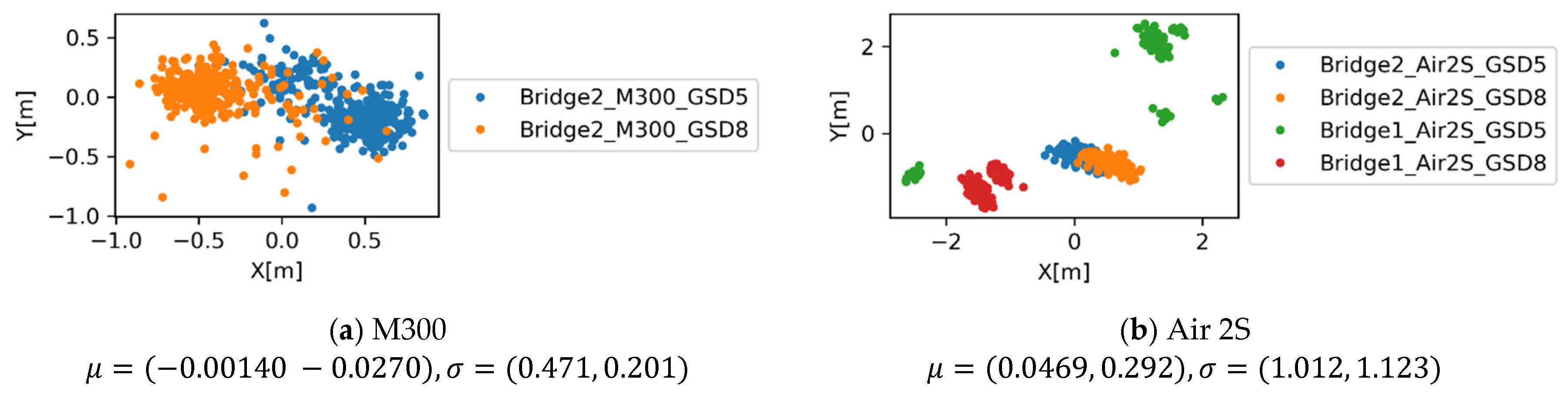

3.3.1. Trajectory Accuracy Evaluation Results

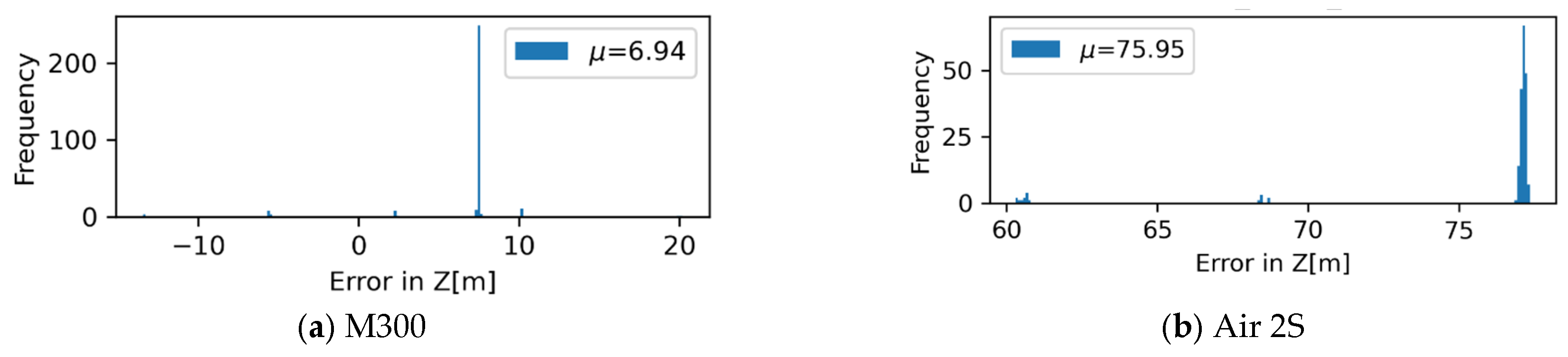

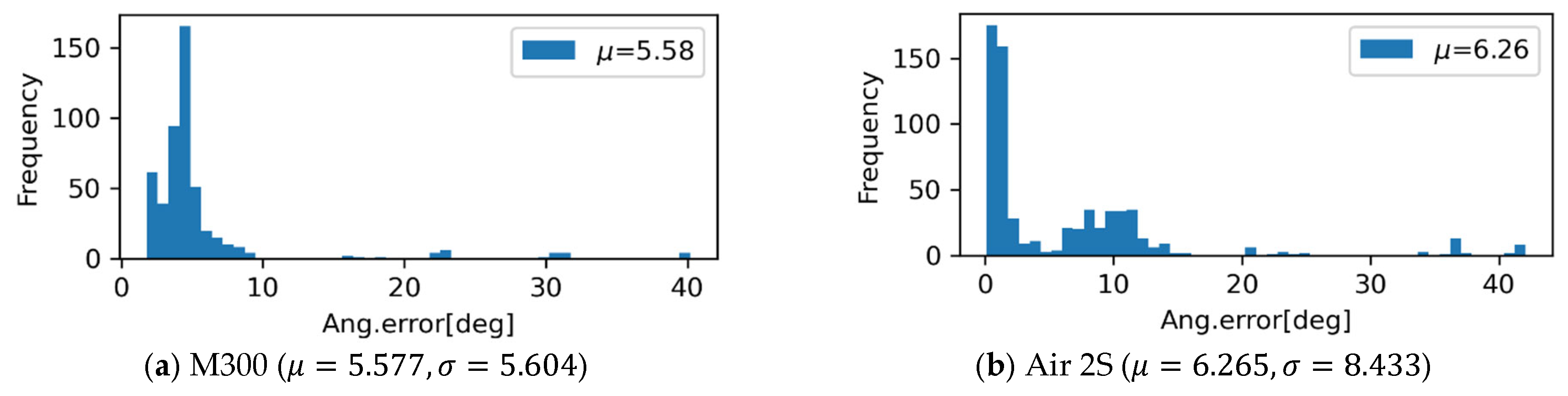

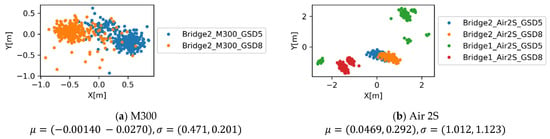

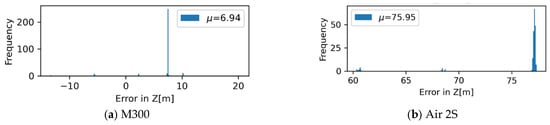

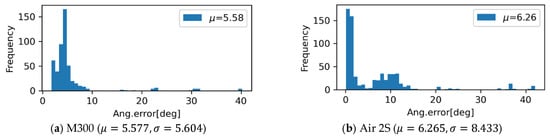

The errors for UAV and camera pose control, , are shown in Figure 12 and Figure 13. For each data collection case, the measured GNSS coordinates differ by up to about , with systematic errors particularly evident for the small consumer-grade UAV. Because the mission can only be conveniently defined in terms of above-ground-level height, and because the altitude measurement is inherently uncertain, the error in the height direction shows larger variation. The histograms of the angular error for UAV and camera control, , aggregated over all cases for the industry-grade and consumer-grade UAVs, are shown in Figure 14. The industry-grade UAV, M300, almost always achieves errors of less than 10 degrees, while a larger proportion of errors of 10 degrees or higher was observed for the Air 2S UAV. This level of error should be accommodated when the data collection plan is made.

Figure 12.

Error metrics for UAV and camera pose control, .

Figure 13.

Error metrics for UAV and camera pose control, . Note that above-ground level height is compared with the altitude measurement in exif data, causing large bias between those two quantities.

Figure 14.

Angular error metrics for UAV and camera pose control, .

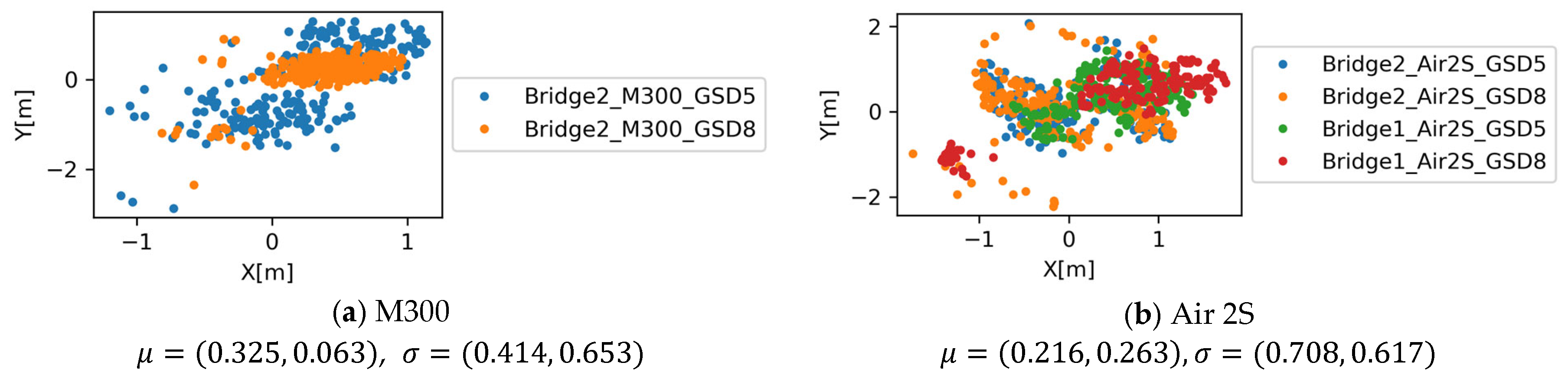

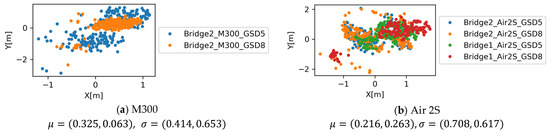

The first GNSS error metric, , is evaluated for each case and shown in Figure 15. For all cases, the SfM process applied positional corrections of up to m, indicating the accuracy of the GPS measurement relative to the reconstructed model (note that geometric reconstruction error and other sources of error from the data recording process also propagate to this error). This error indicates that, even when the plan is perfectly executed in terms of flight and camera pose control, the SfM process shifts the camera locations by this amount because of either measurement error or SfM reconstruction error. This level of error should be taken into account when observations in images are registered to the reconstructed model.

Figure 15.

The first GNSS error metric, for different equipment and GSD configurations.

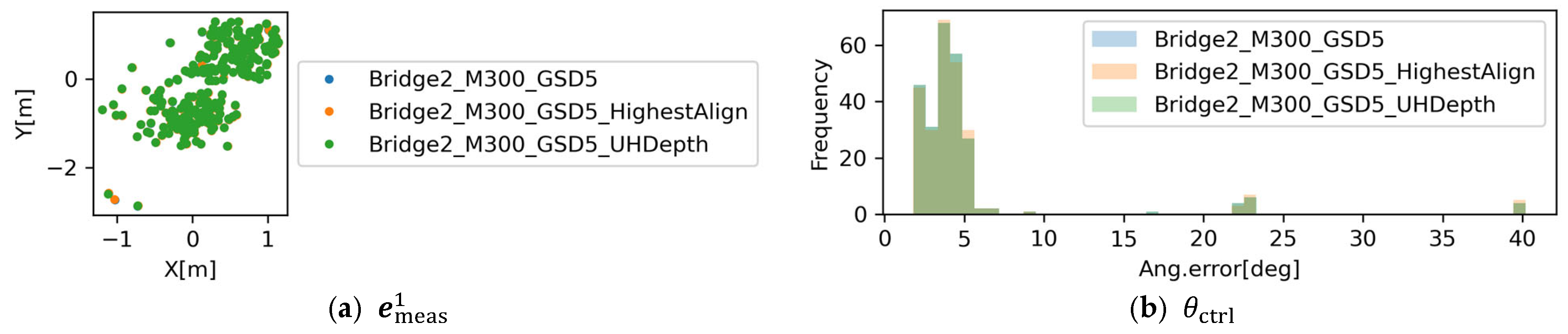

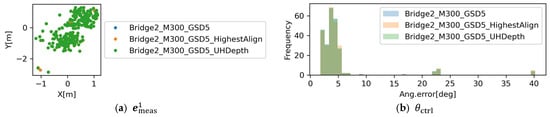

These error metrics, and , are also evaluated for different configurations of the SfM algorithm (Bridge2_M300_GSD5_HighestAlign and Bridge2_M300_GSD5_UHDepth). Figure 16 shows comparisons of those error metrics related to planned vs. actual camera poses. Shapes of distributions do not show significant difference. The mean and standard deviation of were −0.889 and 2.169 for Bridge2_M300_GSD5, −0.888 and 2.167 for Bridge2_M300_GSD5_HighestAlign, and −0.910 and 1.998 for Bridge2_M300_GSD5_UHDepth. For , the mean and standard deviation were 5.193 and 5.926 for Bridge2_M300_GSD5, 5.290 and 6.279 for Bridge2_M300_GSD5_HighestAlign, and 5.193 and 5.926 for Bridge2_M300_GSD5_UHDepth. The results indicate that the major factors affecting these errors are not in the SfM algorithm but in other components of the workflow (e.g., equipment). However, these algorithm factors do matter if the target application requires the images to be taken at precise poses (e.g., detailed inspection of a small component with limited visibility).

Figure 16.

The first GNSS error metric, , for different SfM algorithm configurations.

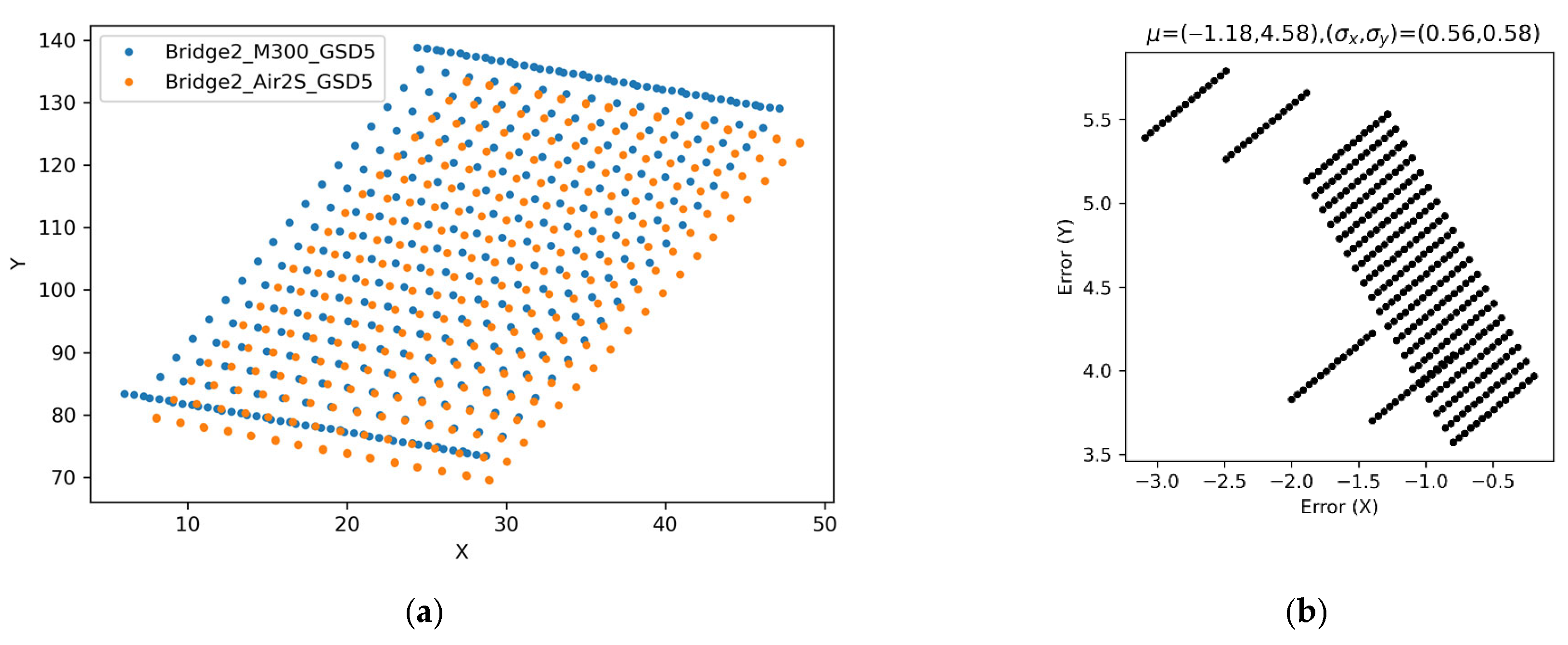

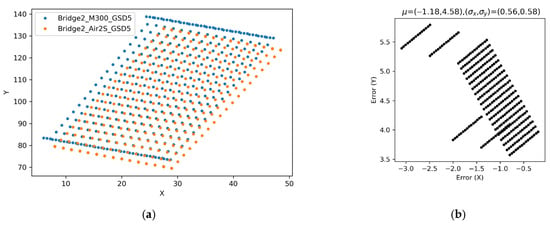

The systematic errors for transforming the recorded GNSS coordinates into the reference coordinate system are investigated using the metric discussed in Section 2.5.1. In this analysis, two cases were selected: Bridge2_M300_GSD5 (data collected on 5 August 2025) and Bridge2_Air2S_GSD5 (data collected on 6 August 2025). For each case, the original GNSS coordinates, the planned waypoint coordinates for Bridge2_M300_GSD5, are transformed by and . The resulting waypoint coordinates (horizontal components) expressed in the reference coordinate system are shown in Figure 17a, and the difference between those two sets of transformed coordinates is shown in Figure 17b. The two transforms, and , do not match exactly, leading to the systematic error observed in Figure 17. This error indicates the effect of the variability of the measured GNSS coordinates at two different times (with two devices, RTK-GPS and regular GPS). The effect propagates to the relative locations of those waypoints in the reference coordinates, in which an error of up to about 5.5 m may be possible. Note again that this error is the composition of pure GPS measurement error and additional error caused by equipment factors (e.g., GNSS receivers), 3D reconstruction quality, and the model alignment algorithm. Further extensive tests that isolate these factors would allow this metric to support comprehensive characterization of systematic error patterns from GNSS and from other sources in bridge inspection environments.

Figure 17.

Systematic GNSS measurement error metric (Bridge2_M300_GSD5, Bridge2_Air2S_GSD5). (a) Comparisons of the same waypoint coordinates transformed using two datasets. (b) Difference in those two transformed coordinates ().

The statistical distributions of these error metrics, such as , , and , can be propagated (either analytically or computationally) to obtain the distribution of the image-plane offset of an object that is planned to be captured at the center of the image. When the distance to the target object is small, errors in camera location cause larger offsets in images. The negative impact of , decreases as the distance to the target object increases, but the impact of remains. The direct way to apply this statistical characterization is to sample camera projection matrices using the error statistics and project a certain point in a plan using these matrices to observe the distribution of the projected point. When the impact of the error is large, this evaluation provides the probability of capturing the target object in a certain frame in the data collection plan.
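
The sampling-based propagation described above can be sketched as follows. The sketch uses a simplified planar pinhole model in which only a lateral camera position error and a yaw error are sampled from Gaussian distributions. The model, the function names, and all parameters are assumptions for illustration; in practice the error statistics would come from the evaluated metrics and a full camera projection matrix would be used.

```python
import math
import random


def sample_pixel_offsets(distance_m, focal_px, pos_mean_m, pos_std_m,
                         ang_mean_deg, ang_std_deg, n, seed=0):
    """Sample horizontal image-plane offsets (pixels) of a target planned at the image center."""
    rng = random.Random(seed)
    offsets = []
    for _ in range(n):
        dx = rng.gauss(pos_mean_m, pos_std_m)  # lateral camera position error
        yaw = math.radians(rng.gauss(ang_mean_deg, ang_std_deg))  # angular error
        # Target position relative to the displaced camera (lateral, depth).
        x_rel, z_rel = -dx, distance_m
        # Express the target in the rotated camera frame.
        x_cam = math.cos(yaw) * x_rel - math.sin(yaw) * z_rel
        z_cam = math.sin(yaw) * x_rel + math.cos(yaw) * z_rel
        # Targets behind the camera are treated as infinitely far off-center.
        offsets.append(focal_px * x_cam / z_cam if z_cam > 0 else math.inf)
    return offsets


def capture_probability(offsets, image_width_px):
    """Fraction of samples in which the target stays within the image width."""
    half_width = image_width_px / 2
    return sum(1 for u in offsets if abs(u) <= half_width) / len(offsets)
```

In this model, the offset caused by a fixed position error is inversely proportional to the target distance, while the offset caused by a fixed angular error is approximately the focal length times the tangent of the error and does not depend on the distance.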

3.3.2. Point-Cloud Reconstruction Quality Evaluation Results

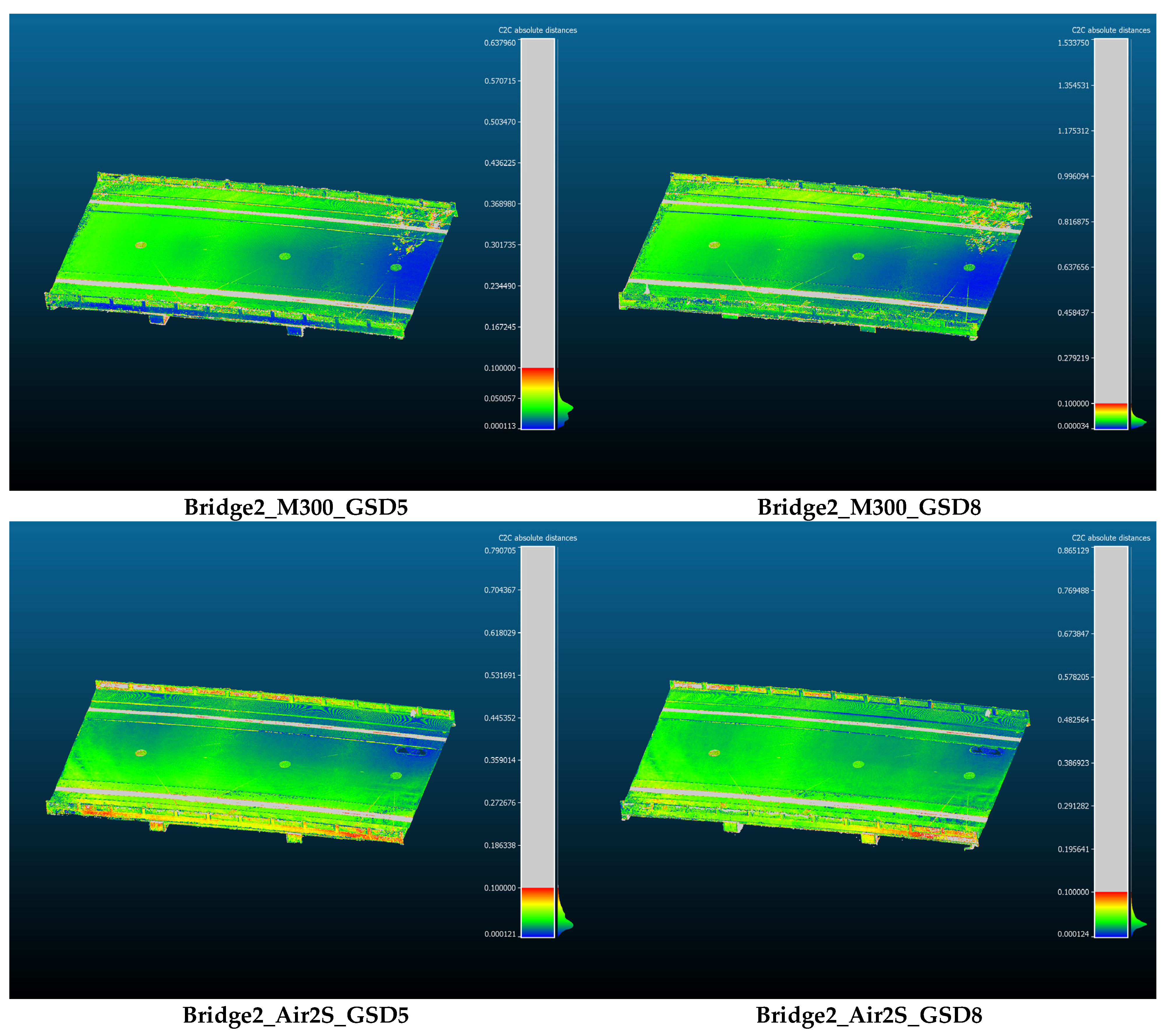

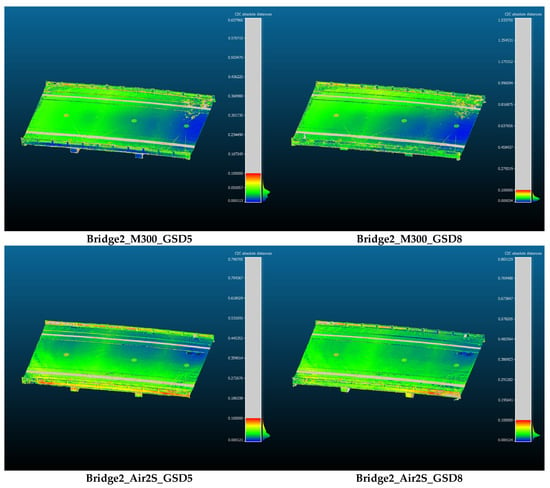

This section discusses the geometric reconstruction quality of point-cloud data following the steps discussed in Section 2.5.2. An overview of reconstruction accuracy for different measurement configurations is shown in Figure 18. For the M300 UAV, the part where the affects directly (side faces) has better reconstruction quality for the cases with , and the error reduces to approximately 1 mm for such a part. The global shape of the deck was captured with the shortest distance ranging from approximately 1 mm to 5 cm. The Air 2S results show a similar tendency, although the impact of GSD on the side is less clear. Error patterns at the global structural scale also exist with similar ranges. These global patterns are consistent across cases for each UAV, while the parts closely related to the change in the measurement configuration improve locally.

Figure 18.

Overview of reconstruction accuracy for different measurement configurations. The color scale is set to red at an error of 10 cm and to blue at the minimum error within the point cloud (software specification, indicated in the colorbars). Both the scale bar and the error values are expressed in meters.

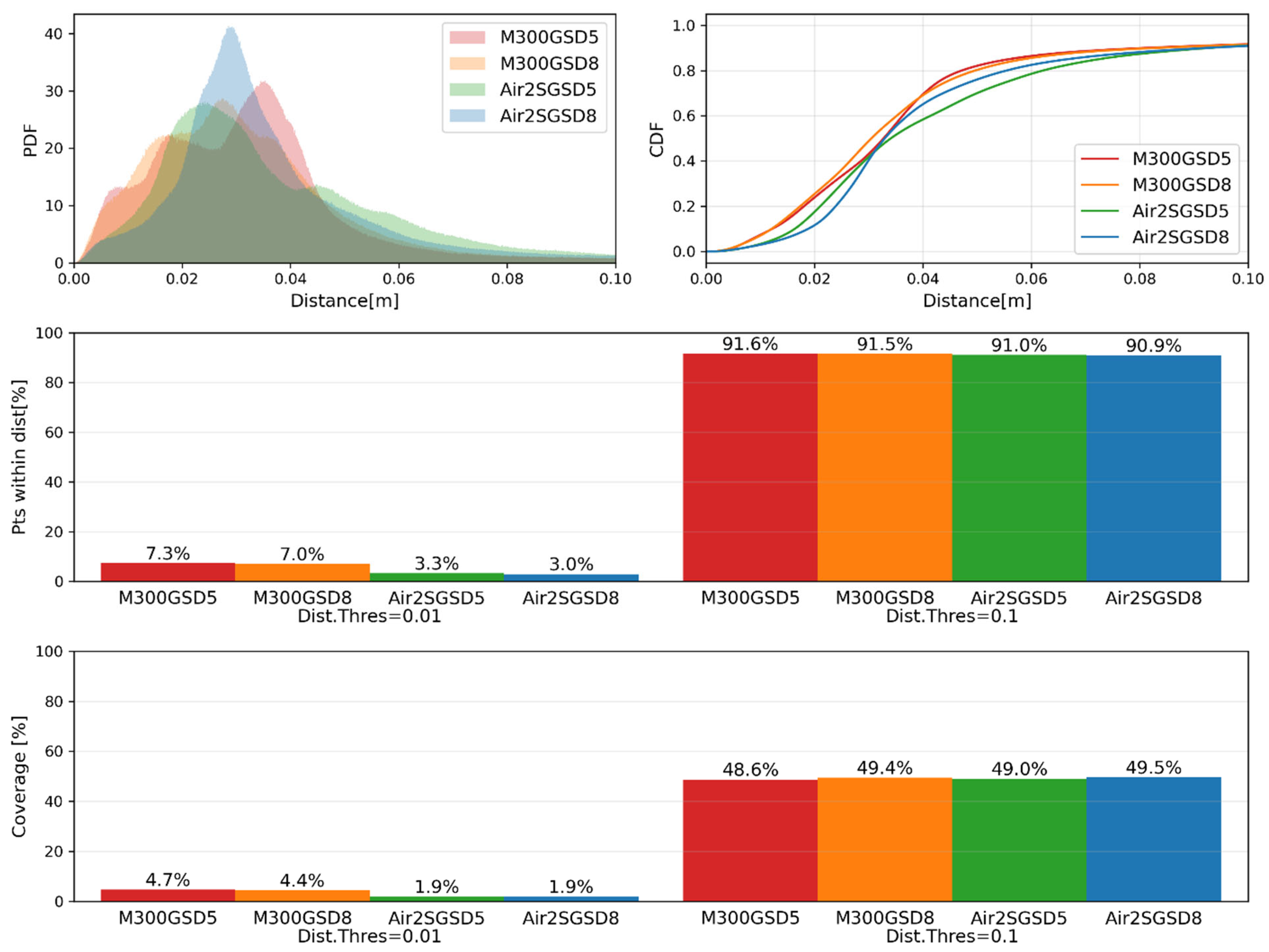

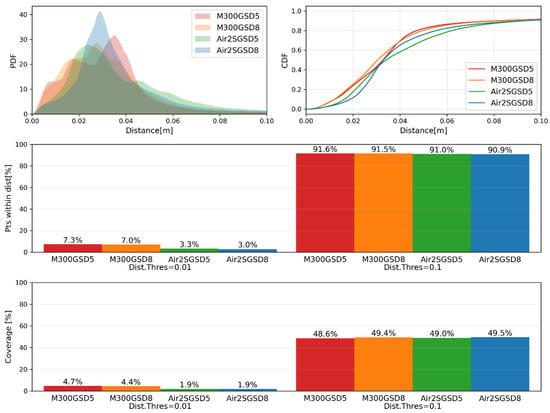

The PDF and CDF of the error are shown in the first row of Figure 19, and the percentage of points in the reconstructed model within threshold values (1 cm and 10 cm) is shown in the second row of the figure. At the global level, the accuracy showed incremental improvements as the GSD decreases and when we used a professional camera in M300 UAV. At the same time, the shapes of the CDFs and PDFs change significantly; for the M300_GSD5 case, as an example, there are multiple peaks in the small distance regions of the PDF, indicating the existence of local parts with high accuracy. Compared with M300_GSD8, the M300_GSD5 case shows more pronounced and more widely separated local peaks in the PDF. This indicates that points with small errors cluster closer to zero, while points with large errors deviate further (i.e., accurate points are more accurate, whereas inaccurate points are less accurate). For the Air 2S cases, a similar tendency can be observed, but the distribution is considerably smoother, with much less pronounced local peaks. From the visualization of reconstruction accuracy in Figure 18, we can observe accurately reconstructed local regions (blue) in the small-GSD cases (e.g., the side surfaces in the M300-based models and parts of the pavement in the Air 2S-based models). However, errors at larger scales sometimes increase in these small-GSD cases, as indicated by more green points on the deck of the M300-based models and more yellow than red points on the sides of the Air 2S-based models. These observations indicate that a lower GSD improves reconstruction accuracy at the local scale, but does not necessarily enhance global shape reconstruction accuracy; the average accuracy over the entire point cloud changes only marginally (4.75 cm for M300_GSD5, 4.74 cm for M300_GSD8, 5.31 cm for Air2S_GSD5, 5.20 cm for Air2S_GSD8).
The choice of equipment (camera) also has a strong influence at the local scale, whereas consumer-grade equipment can still achieve comparable accuracy in terms of the global average and percentage of points within distance thresholds (second row of Figure 19). The approximate coverage metric follows a similar trend, where M300 can achieve highly accurate local parts, while at the global level, there are slight (marginal) improvements as the GSD gets smaller (third row of Figure 19). The percentage of approximate coverage is significantly smaller than the percentage satisfying the same reconstruction-accuracy threshold (second row of Figure 19). This reduction is mainly due to the limited space beneath the deck, which makes UAV-based data collection extremely challenging. Overall, both UAV platforms can achieve comparable accuracy levels at the global level, and major differences exist at the local level.

Figure 19.

Statistics of the reconstruction error and coverage metrics.

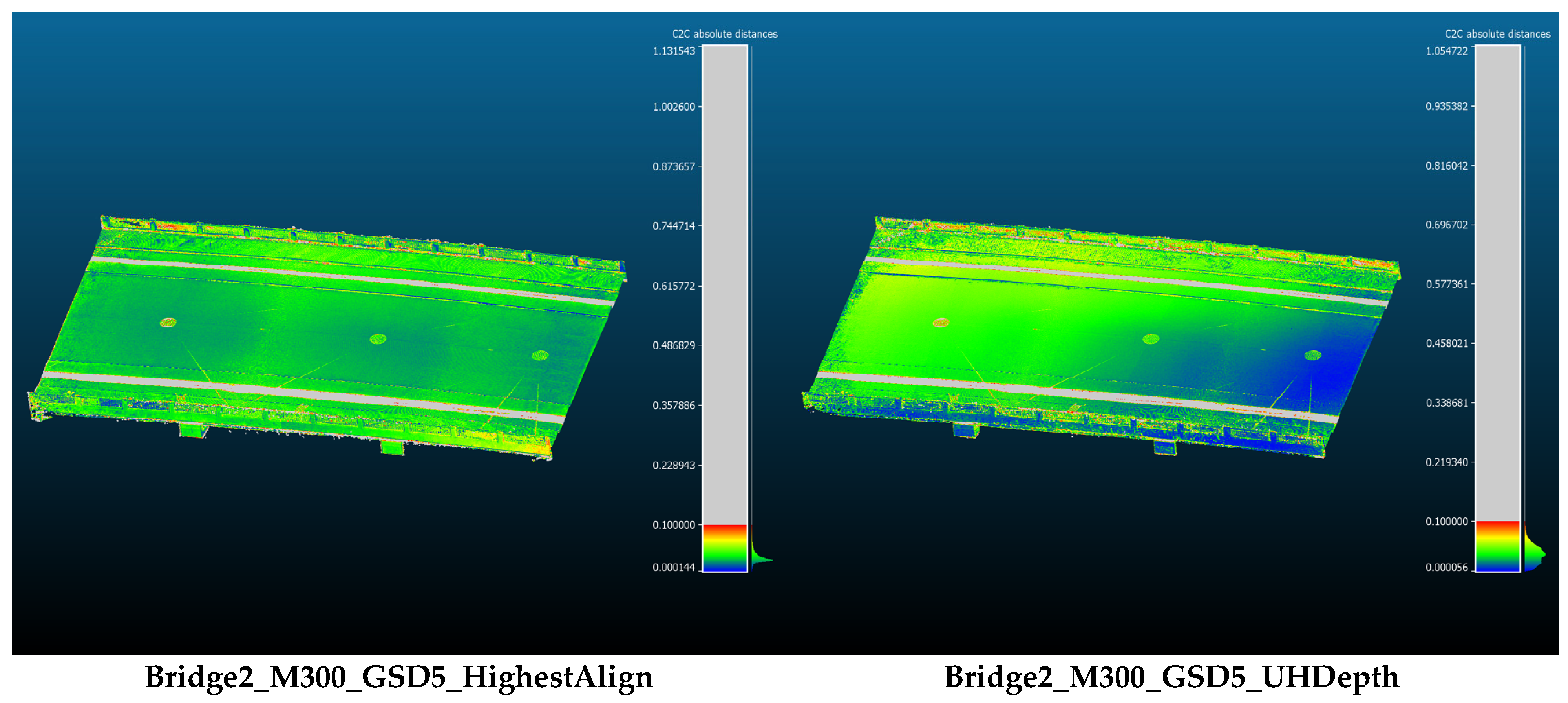

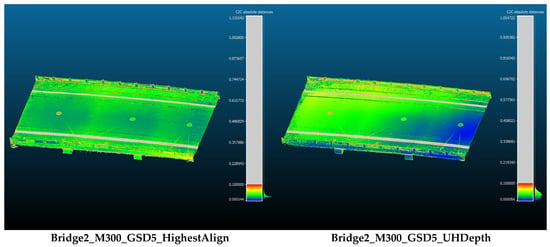

The assessment of geometric reconstruction quality can also be extended to different SfM algorithm configurations (Bridge2_M300_GSD5_HighestAlign and Bridge2_M300_GSD5_UHDepth). An overview of the reconstruction accuracy for these additional cases is shown in Figure 20. For Bridge2_M300_GSD5_HighestAlign, the most noticeable improvement is in the global accuracy pattern, where accurate (blue) parts are distributed more uniformly than in the original Bridge2_M300_GSD5. Improved point determination accuracy during feature detection and matching has a positive impact on global optimization (bundle adjustment), while the local accuracy patterns may be controlled more by image quality itself. For Bridge2_M300_GSD5_UHDepth, the global pattern of the geometric reconstruction accuracy is similar to that of Bridge2_M300_GSD5 shown in Figure 18. The global average of the reconstruction error (shortest distance to the reference point cloud) is 0.0475 m for Bridge2_M300_GSD5, 0.0480 m for Bridge2_M300_GSD5_HighestAlign, and 0.0488 m for Bridge2_M300_GSD5_UHDepth. In this respect, the “highest accuracy” alignment and “ultra high quality” depth-map computation do not (necessarily) improve the quality, even with the extensive computation. This observation could be explained by the fact that the alignment error cannot be easily reduced below this level; if noisy or low-quality parts exist in the reconstructed model and are not eliminated perfectly, such local inaccuracy propagates to the global alignment error, because the ICP relies on an error metric affected by those noisy parts.

Figure 20.

Overview of reconstruction accuracy for different SfM algorithm configurations. Both the scale bar and the error values are expressed in meters.

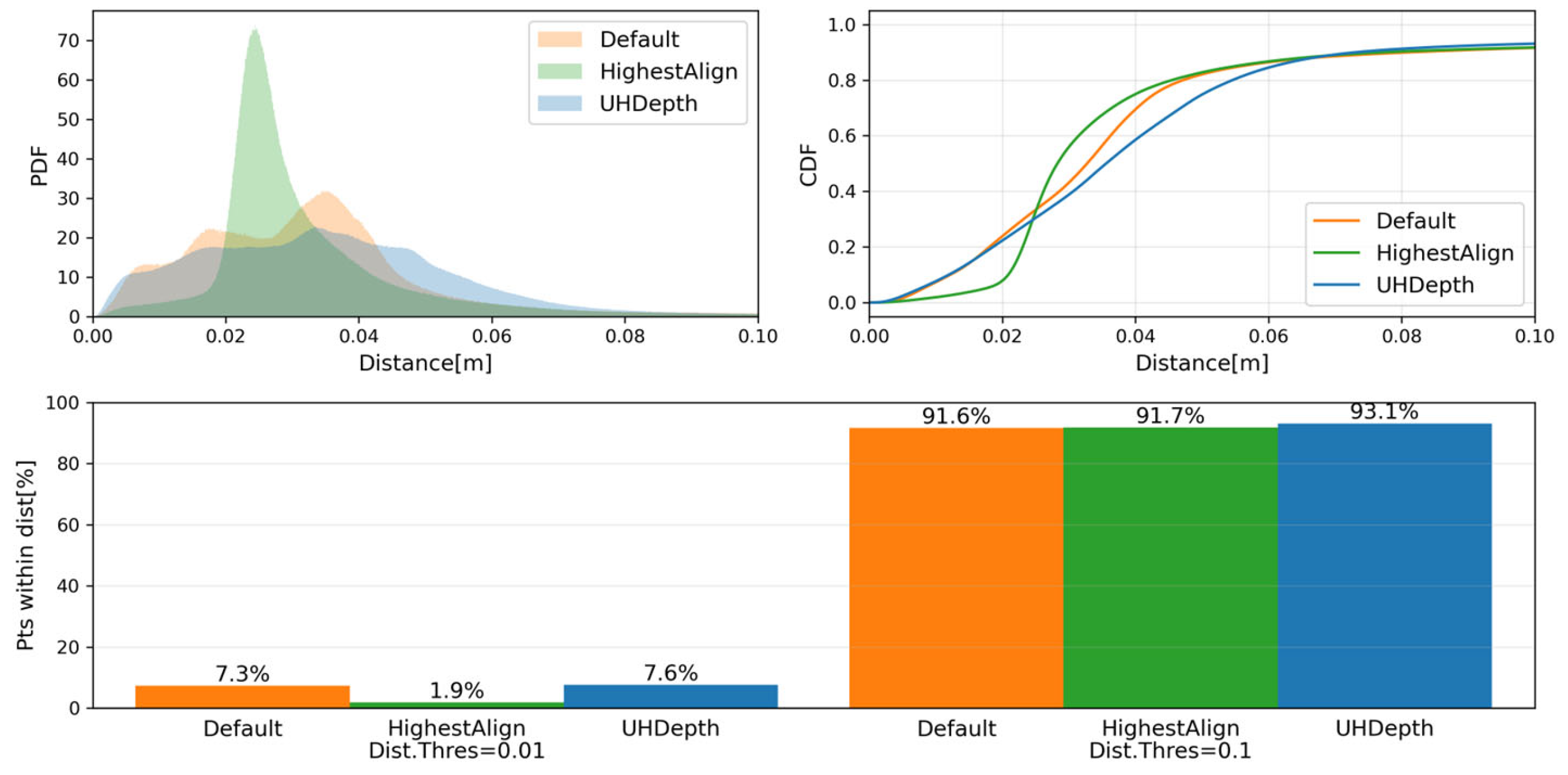

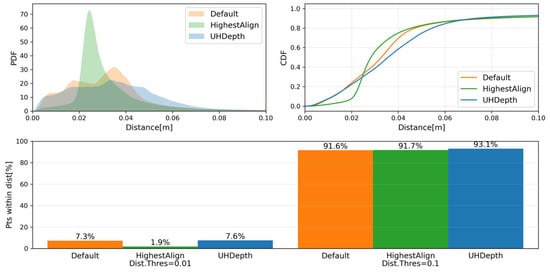

The statistics of the geometric reconstruction error for different SfM algorithm configurations are shown in Figure 21. Compared to the default configuration (Bridge2_M300_GSD5), Bridge2_M300_GSD5_HighestAlign distributes the error more uniformly across the structure (single high narrow peak of the PDF). On the other hand, as the depth-maps are computed in more detail, the PDF becomes more prominently separated, indicating that accurate parts become even more accurate, while error also becomes prominent in some parts. Because the input data is the same, the error levels are similar in the global average sense (as discussed previously), but the local characteristics of the error change significantly, motivating hybrid use of different SfM configurations for tasks requiring high accuracy and level of detail.

Figure 21.

Statistics of the reconstruction error and coverage metrics for different SfM algorithm configurations.

These results can be contextualized by referring to the Level of Accuracy (LOA) defined by the U.S. Institute of Building Documentation (USIBD) [40]. In the context of building documentation, LOA20 and LOA30 are defined by upper error bounds of 50 [mm] and 15 [mm], respectively. Comparing these values with the error statistics obtained from the bridges in Shanghai, we can consider applying the UAV photogrammetry data to scenarios with LOA20 requirements and potentially target scenarios with LOA30 requirements.

3.3.3. Measurement Results of Component Dimensions and Damage Extent

Bridge global dimensions (deck length and deck width), local dimensions (deck spacing), and local volume (pothole volume) are measured following the steps described in Section 2.5.3, and the results are shown in Table 3. The table shows the values measured from the point-cloud data exported from Metashape Software (scale was inferred based on the GNSS data), as well as the results of adjusting the point cloud scale using the identified transform from raw exported data to the reference coordinate system (). For the global dimension (deck length), the SfM measurement shows errors of more than 2 m (3.85%) in some cases, while the measurement accuracy was comparable after applying the scale adjustment (the error is less than 20 cm, or 0.385%, for five out of eight cases considered, including downsampled and high-GSD cases). This confirms the observation from Figure 18 and Figure 19 that the reconstruction quality is less affected at the global level than at the local level. For the local dimension (deck spacing), the accuracy decreases significantly as the overlap ratio is reduced. This was caused by the loss of detailed structure in the point cloud for lower overlap cases. Finally, the advantage of the M300, a professional UAV and camera platform, is evident for the localized volume measurement (pothole volume). The point cloud obtained by Air 2S-based image collection had less accurate surface geometric patterns than those obtained by the M300 platform. The results indicate that high-quality texture imaging enabled by the better camera sensor is useful in reconstructing detailed surface geometric patterns, even though the image resolution is not larger than that of the camera on Air 2S.

Table 3.

Measurement results for different equipment and GSD configurations. Values in parentheses indicate the results after applying scale correction by ICP algorithm.

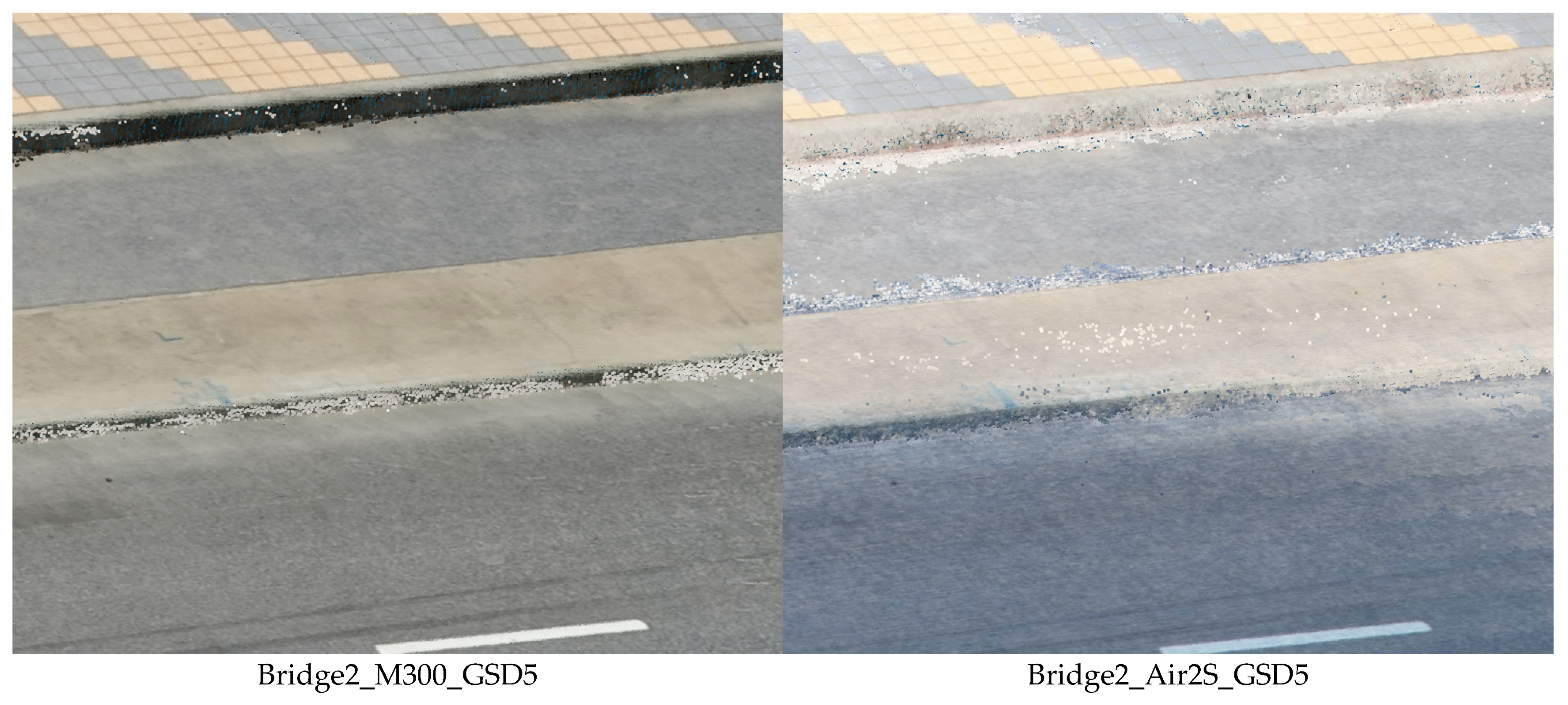

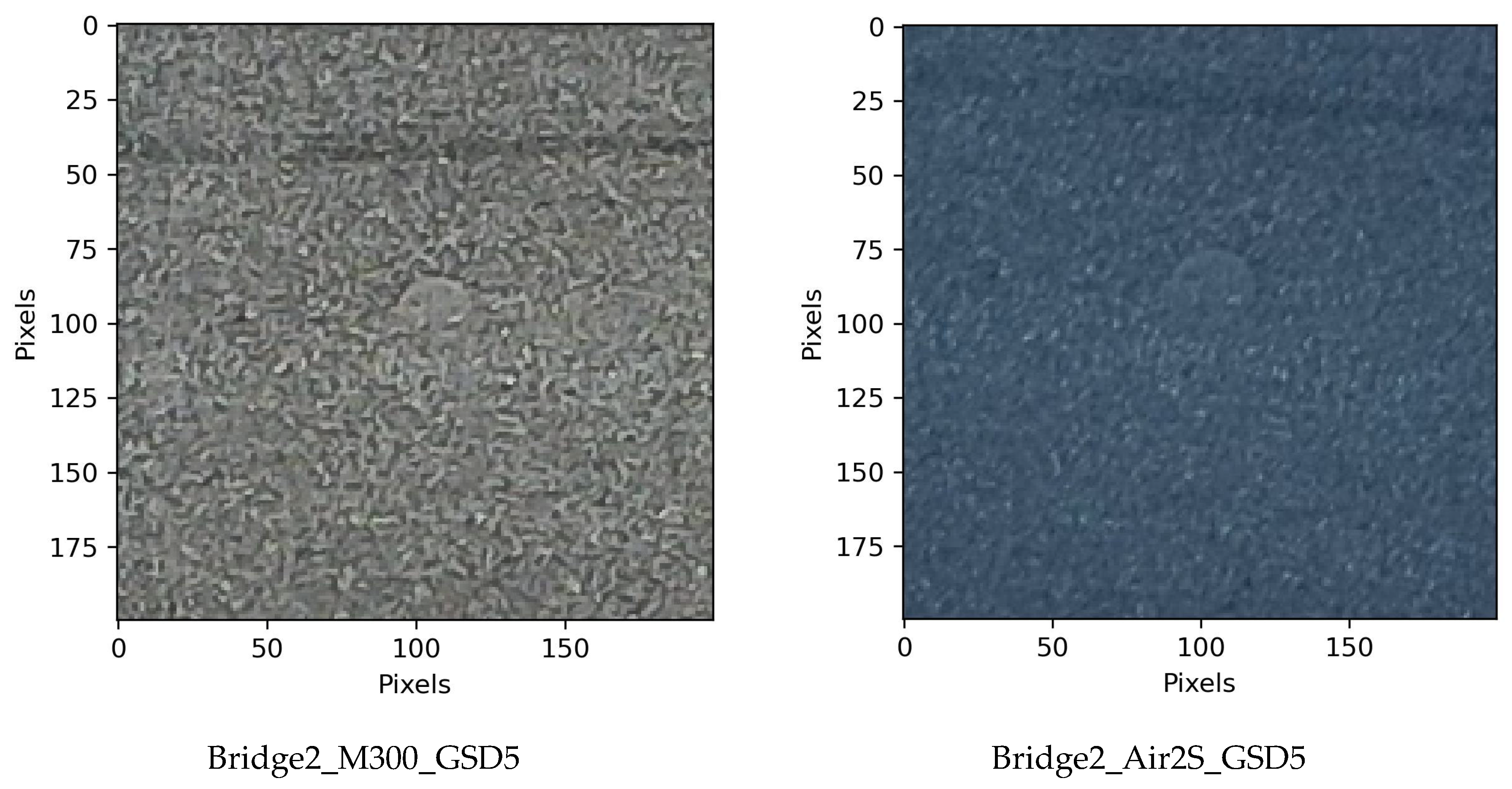

To gain further insight into the differences in pothole volume measurement accuracy, Figure 22 compares close-up views of the point clouds for Bridge2_M300_GSD5 and Bridge2_Air2S_GSD5. Bridge2_M300_GSD5 shows clearer geometric patterns, particularly near the edges. Bridge2_Air2S_GSD5, on the other hand, shows smoother local geometric patterns with less color contrast. The reason can be partially observed from the comparisons of images from similar viewpoints shown in Figure 23. While resolutions of raw images are higher in Bridge2_Air2S_GSD5 (5472 × 3078) than Bridge2_M300_GSD5 (4056 × 3040), Bridge2_M300_GSD5 captures fine patterns better with higher color contrast. While this comparison is local and specific to this part of the structure (e.g., Table 3 shows that the Air 2S results are not always as problematic as in this case), the risk of smoothed reconstructed geometry suggests that the M300 should be chosen for this type of local volumetric measurement.

Figure 22.

Close-up view of point clouds near the pothole location (the pothole is located near the bottom of this view).

Figure 23.

Close-up view of UAV images at the pothole location from similar viewpoints.

The assessment of component dimensions and damage extent was also extended to different SfM algorithm configurations (Bridge2_M300_GSD5_HighestAlign and Bridge2_M300_GSD5_UHDepth discussed in Section 3.3.1). Table 4 shows that the global measurement maintains high accuracy, exploiting the full potential of the data input. Local measurement maintains the accuracy level but does not show noticeable improvement. This is because the measurement of this scale is strongly influenced by the point density (which is set to 0.1 for all cases). Increasing the value may lead to better local measurements but at the expense of significantly increased computational resources. Pothole volume was similarly estimated with HighestAlign configuration. In contrast, for UHDepth configuration, the pothole was clearly visible in the point-cloud data and the volume was also identified, but its accuracy was not (necessarily) higher. Algorithm factors tend to change the shape patterns and distributions of errors, but overall accuracy is strongly dependent on the data given to the algorithm.

Table 4.

Measurement results for different SfM algorithm configurations. Values in parentheses indicate the results after applying scale correction by ICP algorithm.

These results are interpreted by reference to established bridge inspection and inventory guidelines. For example, during inspection and cost estimation, deck lengths need to be estimated within the tolerance of 0.1 [m] [41]. For deck spacing, TM 5-600 “Bridge Inspection, Maintenance, and Repair” by US Army Corps [42] specifies that local bearing configuration should be measured to the accuracy of 3 [mm], which can be targeted by the measurement configurations considered in this case study. For potholes, U.S. Federal Highway Administration (FHWA) defines that the potholes with diameter larger than 150 [mm] can be classified into low severity (less than 25 [mm] deep), medium severity (25–50 [mm] deep), and high severity (more than 50 [mm] deep). The pothole with 728.55 [] volume can be categorized into low severity. The industry-grade UAV with the professional inspection camera has good potential to monitor potholes from their early stages.
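
The pothole severity thresholds quoted above can be expressed as a small helper, sketched below. The function name and the handling of the boundary values (exactly 25 [mm] and 50 [mm] are treated as medium severity) are assumptions for illustration; depth and diameter estimates would have to be extracted from the point cloud separately.

```python
def pothole_severity(depth_mm, diameter_mm):
    """Classify a pothole by depth using the thresholds quoted in the text.

    Returns None when the diameter does not exceed 150 mm, because the
    classification is stated only for larger potholes.
    """
    if diameter_mm <= 150:
        return None
    if depth_mm < 25:
        return "low"
    if depth_mm <= 50:
        return "medium"
    return "high"
```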

3.4. Practical and Educational Lessons Learned

In this research, multiple field tests using UAV platforms were conducted by a group of students at Zhejiang University/University of Illinois Urbana-Champaign Institute (ZJU-UIUC Institute), Zhejiang University. During the mission planning and execution, the group encountered various practical and operational challenges, from which lessons (including educational ones) were learned. This section summarizes those lessons.

Safety of initial navigation (M300): For the DJI M300 RTK drone, the flight speed during the initial travel phase (from takeoff to the first waypoint) is fixed at 5 m/s. This default speed may pose a risk when flying in areas with obstacles such as streetlights or flagpoles. To address this issue, this research also planned the path to reach the first waypoint at which an image of the bridge is collected. In our approach, the first waypoint was set directly above the takeoff position, from which the UAV can navigate safely along a straight path to the first image collection point. In this way, the UAV only needs to ascend vertically before entering the mission flight path, where we can specify the desired flight speed.

Need for pausing at each waypoint (M300): In data collection plans with dense waypoints (like the ones used in this research), images should be taken after pausing at each waypoint (e.g., for 1 s). The DJI M300 RTK with the Zenmuse H20T camera requires sufficient time to capture and store multiple images (high-resolution wide-angle image, high-resolution zoom image, and thermal image). When the mission was run without pausing at each waypoint, images were not captured at many waypoints, or the images were captured at intervals very different from the planned intervals, compromising the data collection requirements.

Map accuracy issue and need for on-site path re-planning: Our mission planner generates flight paths based on geographic coordinates (latitude and longitude). However, coordinates from online maps often differ from real-world positions, and real-world environments may also change over time (e.g., flight safety issues arose due to the vegetation growth). We should establish a workflow to enable flexible on-site path re-planning and adjustment (useful features of existing software can be actively explored prior to the data collection, assuming the need).

Issue in flying over water surfaces: Since many bridges span rivers, it is often unavoidable for the drone to fly over water during data collection. The M300’s vision-based obstacle avoidance system can sometimes be triggered by water surface reflections. Our team encountered this issue while collecting data at the bridge site around 3–4 p.m., where the light reflection is strong. These times may be better avoided if possible.

Wind conditions: We should choose clear and windless days to ensure high-quality data acquisition. In windy conditions, M300 automatically stabilizes itself, which may cause the captured images to deviate slightly from the intended shooting angles.

High temperature: In Zhejiang Province, the temperature is high during the daytime in summer. When the temperature approached 40 degrees Celsius, we started encountering heat issues, causing sudden interruption of the mission (including malfunction of smartphone devices controlling the UAV). We should pay attention to avoid putting devices (UAV, controller, etc.) under the direct sunlight as much as possible, and also develop flexible time plans to accommodate occasional halt of the mission due to this problem.

While these lessons might be well-known by experts in operating UAVs, these issues were actually encountered by the team of students, leading to mission failure (including UAV crash) and data re-collections. From an educational perspective, these points were identified and reiterated as critical points that need to be taught carefully before going to the site.

4. Conclusions

This research investigated an approach for quantitatively evaluating the impact of different methodologies and configurations of UAV-based image collection on the quality of the collected images and 3D reconstruction data. This research considered the combinations of two UAV platforms, an industry-grade UAV and a consumer-grade UAV, key parameters for UAV path-planning, Ground Sampling Distance (GSD) and image overlap ratios, and SfM algorithm configurations, alignment accuracy and depth-map quality. This research developed an approach for evaluating these measurement methodologies and configurations quantitatively, focusing on trajectory accuracy, point-cloud reconstruction quality, and accuracy of different kinds of geometric measurements relevant to inspection tasks. The trajectory accuracy assessment includes the evaluation of UAV and camera pose control (location and angle), as well as random and systematic errors of GNSS-based camera localization relative to the fixed reference model. Reconstruction quality assessment is based on the shortest distance between the reconstructed and reference point-cloud data, providing insights into the geometric reconstruction accuracy, degree of coverage, and their spatial patterns. For geometric measurements related to inspection tasks, global dimensional measurement, local dimensional measurement, and local volumetric measurement are selected as benchmarks to provide insights into inspection applications. Combining these quality assessments, the proposed approach can provide direct insights into the effects of different UAV-based image collection methodologies on the bridge reality capture process.

The proposed approach was demonstrated through a case study on short-span road bridges in Shanghai. The results indicate that, for the global dimensional measurements (PDF/CDF of reconstruction accuracy and deck length measurement), a small consumer-grade UAV can work nearly as well as a large industry-grade UAV with different GSDs when the scales are adjusted appropriately. However, the geometric reconstruction accuracy and local measurement accuracy change significantly depending on the selected hardware and path-planning parameters. For example, the M300 UAV was particularly advantageous for tasks requiring accurate and detailed 3D reconstruction of local patterns, such as pothole volume measurement. These insights into data quality and error assessment at different stages of photogrammetric 3D reconstruction will guide future planning of reality capture to satisfy data quality requirements.

Future work includes establishing methodologies to estimate and control the quality metrics discussed in this research, so that feasible plans leading to 3D reconstruction data of sufficient quality can be produced. Moreover, the assessment should be extended to account for different environmental conditions, tighter flight constraints, more measurement planning parameters (e.g., the zoom camera of the M300 was not considered in this research), and more data to ensure the generalizability of the findings. Major directions of future investigation along this line include (1) comprehensive characterization of GNSS errors in bridge inspection environments (e.g., their dependence on the geometry of and proximity to the structure), and (2) establishment of measurement planning or data post-processing methods that minimize the effect of occlusions and thereby maximize coverage. The proposed approach can also be extended to other types of infrastructure, such as tunnels and dams, to clarify the reality capture quality patterns unique to those domains. These extensions will enhance the applicability and practicality of UAV-based as-built geometric reconstruction as a key step in a broad range of digital-twinning workflows for bridge inspection.

Author Contributions

Conceptualization, R.Z., H.W., F.W., M.S. and Y.N.; methodology, H.X., S.W. and Y.N.; software, H.X. and S.W.; validation, H.X., S.W. and Y.N.; formal analysis, Y.N.; investigation, H.X., S.W., Y.L., T.X. and Y.N.; resources, R.Z., H.W. and F.W.; data curation, H.X., S.W., Y.L. and Y.N.; writing—original draft preparation, Y.N.; writing—review and editing, Y.N.; visualization, Y.N.; supervision, R.Z., H.W., F.W., M.S. and Y.N.; project administration, H.W.; funding acquisition, H.W. and Y.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (Grant Nos. 2023YFC3805700 and 2023YFC3805701), the Shanghai Urban Digital Transformation Special Fund Project (Grant No. 202401069), the Housing & Urban-Rural Construction Commission of Shanghai Municipality (Grant No. 2024-Z02-003), Shanghai Research Institute of Building Sciences Group Co., Ltd. (Grant No. KY10000038.20240011), and the Shanghai Sailing Program (Grant No. 23YF1437700).

Data Availability Statement

Dataset available on request from the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- FHWA. National Bridge Inspection Standards Regulations (NBIS). Fed. Regist. 2004, 69, 15–35.

- Shu, J.; Zeng, Z.; Li, W.; Zhou, S.; Zhang, C.; Xu, C.; Zhang, H. Automatic geometric digital twin of box girder bridge using a laser-scanned point cloud. Autom. Constr. 2024, 168, 105781.

- Lu, R.; Brilakis, I. Digital twinning of existing reinforced concrete bridges from labelled point clusters. Autom. Constr. 2019, 105, 102837.

- Rakoczy, A.M.; Ribeiro, D.; Hoskere, V.; Narazaki, Y.; Olaszek, P.; Karwowski, W.; Cabral, R.; Guo, Y.; Futai, M.M.; Milillo, P.; et al. Technologies and Platforms for Remote and Autonomous Bridge Inspection—Review. Struct. Eng. Int. 2024, 35, 354–376.

- Spencer, B.F.; Hoskere, V.; Narazaki, Y. Advances in Computer Vision-Based Civil Infrastructure Inspection and Monitoring. Engineering 2019, 5, 199–222.

- Hoskere, V.; Hassanlou, D.; Rahman, A.U.; Bazrgary, R.; Ali, M.T. Unified framework for digital twins of bridges. Autom. Constr. 2025, 175, 106214.

- Ribeiro, D.; Rakoczy, A.M.; Cabral, R.; Hoskere, V.; Narazaki, Y.; Santos, R.; Tondo, G.; Gonzalez, L.; Matos, J.C.; Futai, M.M.; et al. Methodologies for Remote Bridge Inspection—Review. Sensors 2025, 25, 5708.

- Wang, X.; Demartino, C.; Narazaki, Y.; Monti, G.; Spencer, B.F. Rapid seismic risk assessment of bridges using UAV aerial photogrammetry. Eng. Struct. 2023, 279, 115589.

- Cabral, R.; Oliveira, R.; Ribeiro, D.; Rakoczy, A.M.; Santos, R.; Azenha, M.; Correia, J. Railway Bridge Geometry Assessment Supported by Cutting-Edge Reality Capture Technologies and 3D As-Designed Models. Infrastructures 2023, 8, 114.

- Lai, Y.; Chen, J.; Hong, Q.; Li, Z.; Liu, H.; Lu, B.; Ma, R.; Yu, C.; Sun, R.; Demartino, C.; et al. Framework for long-term structural health monitoring by computer vision and vibration-based model updating. Case Stud. Constr. Mater. 2022, 16, e01020.

- Lee, Y.; Lee, J.H.; Byun, N.; Yoon, H. Vision-based geometric finite element model updating for cable suspension bridges. Adv. Eng. Inform. 2025, 68, 103714.

- Marra, A.; Trizio, I.; Fabbrocino, G. An Automated Information Modeling Workflow for Existing Bridge Inspection Management. Infrastructures 2025, 10, 312.

- Pantoja-Rosero, B.G.; Achanta, R.; Beyer, K. Damage-augmented digital twins towards the automated inspection of buildings. Autom. Constr. 2023, 150, 104842.

- Lin, J.J.; Ibrahim, A.; Sarwade, S.; Golparvar-Fard, M. Bridge Inspection with Aerial Robots: Automating the Entire Pipeline of Visual Data Capture, 3D Mapping, Defect Detection, Analysis, and Reporting. J. Comput. Civ. Eng. 2021, 35, 04020064.

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

- Calò, M.; Ruggieri, S.; Doglioni, A.; Morga, M.; Nettis, A.; Simeone, V.; Uva, G. Probabilistic-based assessment of subsidence phenomena on the existing built heritage by combining MTInSAR data and UAV photogrammetry. Struct. Infrastruct. Eng. 2024, 1–16.

- Erol, B.; Turan, E.; Erol, S.; Alper Kuçak, R. Comparative performance analysis of precise point positioning technique in the UAV-based mapping. Measurement 2024, 233, 114768.

- Dai, F.; Feng, Y.; Hough, R. Photogrammetric error sources and impacts on modeling and surveying in construction engineering applications. Vis. Eng. 2014, 2, 2.

- Zhu, Z.; Rowlinson, S.; Chen, T.; Patching, A. Exploring the Impact of Different Registration Methods and Noise Removal on the Registration Quality of Point Cloud Models in the Built Environment: A Case Study on Dickabram Bridge. Buildings 2023, 13, 2365.

- Castellani, M.; Meoni, A.; Garcia-Macias, E.; Antonini, F.; Ubertini, F. UAV photogrammetry and laser scanning of bridges: A new methodology and its application to a case study. Procedia Struct. Integr. 2024, 62, 193–200.

- Wang, F.; Zou, Y.; Castillo, E.D.R.; Ding, Y.; Xu, Z.; Zhao, H.; Lim, J.B. Automated UAV path-planning for high-quality photogrammetric 3D bridge reconstruction. Struct. Infrastruct. Eng. 2024, 20, 1595–1614.

- Chen, S.; Laefer, D.F.; Mangina, E.; Zolanvari, S.M.I.; Byrne, J. UAV Bridge Inspection through Evaluated 3D Reconstructions. J. Bridge Eng. 2019, 24, 05019001.

- Shan, J.; Zhu, H.; Yu, R. Feasibility of Accurate Point Cloud Model Reconstruction for Earthquake-Damaged Structures Using UAV-Based Photogrammetry. Struct. Control Health Monit. 2023, 2023, 7743762.

- Zhu, Z.; Chen, T.; Rowlinson, S.; Rusch, R.; Ruan, X. A Quantitative Investigation of the Effect of Scan Planning and Multi-Technology Fusion for Point-cloud data Collection on Registration and Data Quality: A Case Study of Bond University’s Sustainable Building. Buildings 2023, 13, 1473.

- Wang, S.; Rodgers, C.; Zhai, G.; Matiki, T.N.; Welsh, B.; Najafi, A.; Wang, J.; Narazaki, Y.; Hoskere, V.; Spencer, B.F. A graphics-based digital twin framework for computer vision-based post-earthquake structural inspection and evaluation using unmanned aerial vehicles. J. Infrastruct. Intell. Resil. 2022, 1, 100003.

- Lindner, S.; Garbe, C.; Mombaur, K. Optimization based multi-view coverage path-planning for autonomous structure from motion recordings. IEEE Robot. Autom. Lett. 2019, 4, 3278–3285.

- Narazaki, Y.; Gomez, F.; Hoskere, V.; Smith, M.D.; Spencer, B.F. Efficient development of vision-based dense three-dimensional displacement measurement algorithms using physics-based graphics models. Struct. Health Monit. 2021, 20, 1841–1863.

- Narazaki, Y.; Hoskere, V.; Eick, B.A.; Smith, M.D.; Spencer, B.F. Vision-based dense displacement and strain estimation of miter gates with the performance evaluation using physics-based graphics models. Smart Struct. Syst. 2019, 24, 709–721.

- Ibrahim, A.; Golparvar-Fard, M. 4D BIM Based Optimal Flight Planning for Construction Monitoring Applications Using Camera-Equipped UAVs. In Proceedings of the ASCE International Conference on Computing in Civil Engineering, Atlanta, GA, USA, 17–19 June 2019; pp. 217–224.

- Vincke, S.; Vergauwen, M. Vision based metric for quality control by comparing built reality to BIM. Autom. Constr. 2022, 144, 104581.

- de Assis, L.S.; Poncetti, B.L.; Machado, L.B.; Futai, M.M. Drone-based photogrammetry and virtual reality: Technological alternatives for tunnel inspection. Tunn. Undergr. Space Technol. 2025, 168, 107094.

- Cabral, R.; Santos, R.; Correia, J.; Ribeiro, D. Optimal reconstruction of railway bridges using a machine learning framework based on UAV photogrammetry and LiDAR. Struct. Infrastruct. Eng. 2025, 1–21.

- DJI Store—Official Store for DJI Drones, Gimbals and Accessories (United States). Available online: https://store.dji.com/?gclid=Cj0KCQiAovfvBRCRARIsADEmbRKsel0rIqlIxral4w9PAld3Dxj5mmW4sjDldoeYPNPwn0RP1Yb-5nsaAtDVEALw_wcB (accessed on 21 December 2019).

- FARO. Focus Laser Scanning Solution|Hardware|FARO. Available online: https://www.faro.com/en/Products/Hardware/Focus-Laser-Scanners (accessed on 23 October 2025).

- Litchi for DJI Drones. Available online: https://flylitchi.com/ (accessed on 11 October 2025).

- Agisoft Metashape. Available online: https://www.agisoft.com/ (accessed on 30 August 2020).

- Chen, Y.; Medioni, G. Object modeling by registration of multiple range images. In Proceedings of the IEEE International Conference on Robotics and Automation, Sacramento, CA, USA, 9–11 April 1991; pp. 2724–2729.

- CloudCompare. Available online: https://www.cloudcompare.org/ (accessed on 1 December 2025).

- Kaplan, E.D.; Hegarty, C. Understanding GPS/GNSS: Principles and Applications; Artech House: Norwood, MA, USA, 2017; p. 993. Available online: https://books.google.com/books/about/Understanding_GPS_GNSS_Principles_and_Ap.html?id=y4Q0DwAAQBAJ (accessed on 23 October 2025).

- Level of Accuracy (LOA)|USIBD. Available online: https://usibd.org/level-of-accuracy/ (accessed on 24 November 2025).

- Bridge Inspection and Maintenance System: BIM Level 2 Inspection Manual. Version 2-Open Government. Available online: https://open.alberta.ca/publications/bim-level-2-inspection-manual-version-2 (accessed on 24 November 2025).

- WBDG Home|WBDG—Whole Building Design Guide. Available online: https://www.wbdg.org/ (accessed on 24 November 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).