Abstract

The Secretary Bird Optimization Algorithm (SBOA) is a novel swarm-based meta-heuristic that formulates an optimization model by mimicking the secretary bird’s hunting and predator-evasion behaviors, and thus possesses appreciable application potential. Nevertheless, it suffers from an unbalanced exploration–exploitation ratio, difficulty in maintaining population diversity, and a tendency to be trapped in local optima. To address these drawbacks, this paper proposes an SBOA variant (MESBOA) that integrates a multi-population management strategy with an experience-trend guidance strategy. The proposed method is compared with eight basic and enhanced algorithms of different categories on both the CEC2017 and CEC2022 test suites. Experimental results demonstrate that MESBOA delivers faster convergence, greater robustness and higher accuracy, achieving mean rankings of 2.500 (CEC2022 10-D), 2.333 (CEC2022 20-D), 1.828 (CEC2017 50-D) and 1.931 (CEC2017 100-D). Moreover, experiments on constrained engineering optimization problems further verify its applicability to real-world optimization tasks.

1. Introduction

Global optimization problems are ubiquitous in modern complex systems and engineering design [1,2]. They are typically characterized by high non-linearity, multimodality, multiple constraints and large dimensionality, posing significant challenges to traditional optimization techniques [3]. Classical deterministic approaches such as gradient-based methods and dynamic programming perform well on simple tasks like convex optimization, but often fail to provide satisfactory solutions when the problem is non-convex or non-differentiable [4]. In contrast, meta-heuristic methods, which operate without gradient information, can effectively explore the search space and have been widely applied to complex global optimization and engineering design tasks [5]. Although these algorithms do not guarantee global optimality, they are highly valuable because they consistently deliver high-quality solutions for large-scale and intricate problems [6]. Numerous studies have demonstrated their superior performance in practical engineering challenges ranging from mechanical structure design and parameter estimation to mission planning [7,8,9,10,11]. However, as problem size and complexity grow, the performance of meta-heuristics may degrade markedly, making the continuous development and refinement of new algorithms an urgent necessity [12].

The central idea of meta-heuristic algorithms is to design adaptive and robust search strategies by emulating natural phenomena, biological behaviors, or physical laws. Representative categories and algorithms are briefly exemplified below [13].

Evolutionary-based algorithms realize optimization by replicating the “selection–crossover–mutation” cycle observed in biological evolution. For example, the canonical genetic algorithm (GA) [14] iteratively refines a population through encoded individuals, fitness evaluation, and the genetic operators of selection, crossover, and mutation. Differential evolution (DE) [15] creates candidate solutions by adding scaled difference vectors between existing individuals, a mechanism that is particularly effective in continuous search spaces. Other representatives of this family include Evolution Strategies (ES) [16] and the recently proposed Alpha Evolution (AE) [17], all of which follow the same evolutionary paradigm while introducing distinct variation and selection schemes.

Physics-based algorithms abstract optimization rules from the laws of physics or chemical reactions observed in nature. Inspired by the annealing of metals, Simulated Annealing (SA) [18] employs a temperature schedule to probabilistically accept inferior solutions, thereby escaping local optima. The Gravitational Search Algorithm (GSA) [19] models candidate solutions as masses that interact through Newtonian gravity; heavier masses (better solutions) attract others, guiding the population toward high-fitness regions. Other representatives of this category include Polar Lights Optimization (PLO) [20], Fick’s Law Algorithm (FLA) [21], Light Spectrum Optimizer (LSO) [22] and Fata Morgana Algorithm (FMA) [23].

Human-based algorithms distill optimization logic from human activities and social collaboration. The Teaching–Learning-Based Optimization (TLBO) [24], for instance, mimics the knowledge-transfer process between an instructor and learners in a classroom, whereas the Social Group Optimization (SGO) [25] exploits the cooperative mechanisms of information sharing and collective decision-making observed in human social networks. Other algorithms that belong to this category include the Political Optimizer (PO) [26], Football Team Training Algorithm (FTTA) [27], Escape Optimization Algorithm (EOA) [28], Catch Fish Optimization Algorithm (CFOA) [29], and Student Psychology Based Optimization (STBO) [30].

Swarm-based algorithms mimic the cooperative behavior of biological swarms and achieve global optimization through information sharing among simple agents. Particle Swarm Optimization (PSO) [31], for instance, emulates the foraging of bird flocks: each particle adjusts its position and velocity at every iteration by simultaneously learning from its personal best experience and the swarm’s global best, rapidly converging toward promising regions. Ant Colony Optimization (ACO) [32] replicates the pheromone trail communication of foraging ants; artificial ants probabilistically construct paths and reinforce shorter ones through pheromone updates, eventually converging to minimal routes. Grey Wolf Optimizer (GWO) [33] abstracts the strict social hierarchy and collective hunting tactics of grey wolves—tracking, encircling, and attacking prey—to balance exploration and exploitation in multimodal landscapes. Beyond these mainstream methods, a growing family of swarm intelligence techniques—such as Greylag Goose Optimization (GGO) [34], Tuna Swarm Optimization (TSO) [35], Sled Dog Optimizer (SDO) [36], and Crayfish Optimization Algorithm (COA) [37]—continues to emerge, offering alternative neighborhood topologies and communication rules for diverse optimization scenarios.

For meta-heuristic algorithms, exploration and exploitation constitute the two core phases that directly govern global search capacity and local refinement efficiency; balancing them is therefore pivotal to overall performance [38]. Exploration refers to the extensive sampling of the search space to identify promising regions, thereby reducing the risk of premature entrapment in local optima and safeguarding the discovery of the global solution. Exploitation, in contrast, concentrates the search within these promising regions to refine solutions, improving accuracy and accelerating convergence. Unfortunately, the foundational variants of most meta-heuristics suffer from inherent bottlenecks—premature convergence, local-optimum stagnation, and slow convergence—when confronted with complex problems. To alleviate these drawbacks, researchers have proposed novel operators or refined existing ones to strengthen both global exploration and local exploitation capabilities. In parallel, hybrid algorithms that synergize operators drawn from different meta-heuristics have been developed to achieve an adaptive equilibrium between the two search modes. Reinforcing this trend, the No-Free-Lunch theorem [39] asserts that no single algorithm can outperform all others across every optimization problem, continuously motivating the emergence of new intelligent optimization paradigms.

The SBOA is a novel swarm-based meta-heuristic that emulates the secretary bird’s hunting and escape tactics. The optimization process is explicitly divided into an exploration phase and an exploitation phase, each governed by distinct search behaviors executed in sequence. Owing to these diversified strategies, SBOA delivers competitive performance on a variety of benchmark functions and engineering cases. In the original study by Fu et al., SBOA outperformed basic algorithms such as WOA, GWO, COA and DBO, and achieved results comparable with the advanced LSHADE-SPACMA [40]. Although the original SBOA has shown certain merits, follow-up studies reveal that it still suffers from several drawbacks when confronted with complex optimization scenarios: (i) local optimum stagnation: on high-dimensional, multimodal or irregular landscapes the population often stalls around sub-optimal regions and is unable to jump out [41]; (ii) limited global exploration: in the early search stage the diversity of the swarm is frequently insufficient, so the algorithm cannot thoroughly cover the solution space [42]; (iii) premature convergence: blind attraction toward the current global best easily misguides the whole population into a local optimum, after which further improvement becomes difficult [43]. Consequently, enhancing the global exploration ability and the exploitation accuracy, while simultaneously avoiding local optima, has become the focus of recent research.

To mitigate these drawbacks, several SBOA variants have recently been proposed. Xu et al. enriched population diversity through an adaptive learning strategy and balanced exploration and exploitation with a multi-population evolution scheme [44]. Zhu et al. embedded a Student’s t-distribution mutation derived from quantum computing to help the algorithm escape local optima [45]. Song et al. accelerated exploitation by introducing a golden-sine guidance operator while preserving diversity with a cooperative camouflage mechanism [46]. Meng et al. reduced the risk of stagnation via a differential cooperative search and sped up convergence with an information-retention control strategy [47]. Although these variants improve performance, most of them retain the original SBOA framework, in which exploration and exploitation are both applied to every individual within a single iteration. This coupled update can still lead to incomplete search and premature convergence. Moreover, the extra operators often raise algorithmic complexity and computational cost, creating new challenges that remain to be addressed.

To mitigate the inherent limitations of the original SBOA, this paper proposes an enhanced variant, MESBOA, which incorporates a multi-population management strategy and an experience-trend guidance strategy. The main contributions are summarized as follows:

- (1) Dual-mechanism bottleneck relief. A new multi-population management protocol restructures the SBOA framework so that exploration and exploitation are executed in separate subpopulations, eliminating mutual interference and guaranteeing a balanced search. An experience-trend guidance operator dynamically extracts historical information from elite groups and uses it to steer the entire swarm along the most promising evolutionary direction. Acting synergistically, these two mechanisms enlarge global exploration breadth, refine local exploitation accuracy, and reinforce convergence robustness;

- (2) Comprehensive benchmark validation. MESBOA is systematically compared with several state-of-the-art basic and improved algorithms on both the 10/20-D CEC-2022 and the 50/100-D CEC-2017 test suites. Statistical analyses, including the Friedman, Wilcoxon rank-sum and Nemenyi tests, consistently rank MESBOA among the top performers;

- (3) Superior performance on real-world engineering tasks. When applied to a variety of constrained mechanical-design problems, MESBOA reliably obtains better feasible solutions than its competitors while exhibiting markedly lower variance, offering practitioners an efficient and stable optimization tool, especially for high-dimensional complex systems.

This paper is organized as follows: Section 2 presents preliminary knowledge of the Secretary Bird Optimization Algorithm. The proposed MESBOA, with its exploration and exploitation strategies, is explained in Section 3. Experimental studies and results are presented in Section 4 and Section 5. Finally, the conclusion is given in Section 6.

2. Secretary Bird Optimization Algorithm

The Secretary Bird Optimization Algorithm (SBOA) translates the bird’s survival strategies—predation and predator avoidance—into a three-stage search template, with initialization, exploration, and exploitation. The exploration phase models hunting behavior and is subdivided into (i) prey search, (ii) prey exhausting, and (iii) prey attack. The exploitation phase mirrors anti-predator behavior, i.e., camouflage or flight. Detailed mathematical models of each stage are presented below.

2.1. Initialization Phase

In SBOA, each secretary bird corresponds to one member of the swarm; its position vector in the search space encodes the decision variables and therefore represents a single candidate solution. As a swarm-based algorithm, SBOA adopts the conventional initialization, where every bird is randomly placed inside the variable bounds using Equation (1).

$$X_i = lb + r \odot (ub - lb), \quad i = 1, 2, \ldots, N \tag{1}$$

where $X_i$ is the initial value of the ith candidate solution, $lb$ and $ub$ are the lower and upper bound vectors of the search space, respectively, and $r$ is a random vector with elements uniformly distributed in [0, 1]. By applying the above formula to all individuals in the population, the initialized population can be represented as

$$X = \begin{bmatrix} x_{1,1} & \cdots & x_{1,D} \\ \vdots & \ddots & \vdots \\ x_{N,1} & \cdots & x_{N,D} \end{bmatrix}_{N \times D} \tag{2}$$

where $N$ denotes the population size and $D$ denotes the dimension of the problem. After initialization, the fitness value of each individual is evaluated, and the one with the best fitness is selected as the current global best solution.
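The initialization step can be illustrated with a minimal Python sketch. It assumes the usual uniform sampling between the bounds; the function names, the `sphere` objective and the minimization convention are illustrative assumptions, not part of the original algorithm description.

```python
import random


def init_population(lb, ub, n, rng=None):
    """Place n birds uniformly at random inside the box [lb, ub]."""
    rng = rng or random.Random()
    dim = len(lb)
    return [
        [lb[j] + rng.random() * (ub[j] - lb[j]) for j in range(dim)]
        for _ in range(n)
    ]


def sphere(x):
    # Hypothetical objective (minimization), used only for illustration.
    return sum(v * v for v in x)


lb, ub = [-5.0, -5.0, 0.0], [5.0, 5.0, 1.0]
population = init_population(lb, ub, n=6, rng=random.Random(0))
fitness = [sphere(x) for x in population]
# Under minimization, the "best" bird is the one with the lowest objective value.
best = population[fitness.index(min(fitness))]
```

Passing an explicit `random.Random` instance keeps runs reproducible, which matters when results are averaged over independent trials.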

2.2. Exploration Phase

The exploration phase of SBOA consists of three sequential search processes—prey searching, prey exhausting, and prey attacking—which are activated in strict order as the run proceeds.

During the first third of the iterations only the prey-search model is executed; it is then replaced by the prey-exhaust model in the middle third, and finally the prey-attack model is applied during the last third of the iterations. The three exploration behaviors are detailed next.

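During the prey-search phase ($t < T/3$), SBOA adjusts the position of every secretary bird by exploiting inter-individual differences, thereby enhancing population diversity and global exploration. Following the original SBOA formulation [40], the corresponding mathematical model is given by Equation (3).

$$X_i^{new} = X_i + r \odot (X_{r1} - X_{r2}) \tag{3}$$

where $X_i^{new}$ is the updated position of the ith secretary bird agent, and $X_{r1}$ and $X_{r2}$ are two distinct individuals randomly selected from the population. During the prey-exhaust phase ($T/3 < t < 2T/3$), SBOA models the random walk of the secretary bird by Brownian motion, as expressed in Equation (4).

$$X_i^{new} = X_b + e^{(t/T)^4} \times (RB - 0.5) \odot (X_b - X_i) \tag{4}$$

where $t$ and $T$ denote the current iteration number and the maximum iteration number, respectively, $X_b$ is the global best individual, $e$ is the natural constant, and $RB$ is a random vector drawn from the standard normal distribution. During the final prey-attack phase ($t > 2T/3$), the secretary bird employs Lévy flight to mimic its striking mobility, as described by Equation (5).

$$X_i^{new} = X_b + \left(1 - \frac{t}{T}\right)^{2t/T} \times X_i \odot RL \tag{5}$$

where $RL$ is a random vector drawn from the Lévy distribution, as given by Equation (6).

$$RL = 0.5 \times Levy(D), \quad Levy(D) = 0.01 \times \frac{u \times \sigma}{|v|^{1/\eta}} \tag{6}$$

Here, $\eta$ is a fixed constant of 1.5, and $u$ and $v$ are random numbers in the interval [0, 1]. The formula for σ is as follows:

$$\sigma = \left( \frac{\Gamma(1+\eta) \times \sin(\pi\eta/2)}{\Gamma\left(\frac{1+\eta}{2}\right) \times \eta \times 2^{(\eta-1)/2}} \right)^{1/\eta} \tag{7}$$

where $\Gamma$ denotes the gamma function.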
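The three exploration behaviors and their schedule can be sketched in Python as follows. This is a hedged illustration only: the update forms (the factor exp((t/T)^4), the offset 0.5, the shrink factor (1 - t/T)^(2t/T), and the scales 0.01 and 0.5 on the Lévy step) are taken from the commonly cited SBOA formulation and are assumptions here, as are all function names.

```python
import math
import random

ETA = 1.5  # fixed constant stated in the text


def levy_sigma(eta=ETA):
    """Scale factor of the Levy step, built from the gamma function."""
    num = math.gamma(1 + eta) * math.sin(math.pi * eta / 2)
    den = math.gamma((1 + eta) / 2) * eta * 2 ** ((eta - 1) / 2)
    return (num / den) ** (1 / eta)


def levy_vector(dim, rng, eta=ETA, scale=0.01):
    """Levy-like step; u and v are uniform random numbers, as stated in the text."""
    sigma = levy_sigma(eta)
    steps = []
    for _ in range(dim):
        u = rng.random()
        v = 1.0 - rng.random()  # lies in (0, 1], which avoids division by zero
        steps.append(scale * u * sigma / abs(v) ** (1 / eta))
    return steps


def explore(i, pop, best, t, T, rng):
    """Return a candidate position for bird i at iteration t (1 <= t <= T)."""
    x, dim = pop[i], len(pop[i])
    if t < T / 3:  # prey search: difference of two distinct random birds
        r1, r2 = rng.sample(range(len(pop)), 2)
        return [x[j] + rng.random() * (pop[r1][j] - pop[r2][j]) for j in range(dim)]
    if t < 2 * T / 3:  # prey exhausting: Brownian-style walk around the best
        grow = math.exp((t / T) ** 4)
        return [best[j] + grow * (rng.gauss(0, 1) - 0.5) * (best[j] - x[j])
                for j in range(dim)]
    # prey attack: Levy-flight step that shrinks as t approaches T
    shrink = (1 - t / T) ** (2 * t / T)
    step = levy_vector(dim, rng)
    return [best[j] + shrink * x[j] * 0.5 * step[j] for j in range(dim)]
```

The text uses strict inequalities at the phase boundaries; the sketch assigns the boundary iterations t = T/3 and t = 2T/3 to the later phase, which is an arbitrary choice.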

2.3. Exploitation Phase

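When evading predators, the secretary bird adopts two tactics: camouflage or flight. SBOA translates these tactics into the exploitation-phase model; each behavior is executed with equal probability, as expressed in Equations (9) and (10), which follow the original SBOA formulation [40].

$$X_i^{new} = X_b + (2 \times RB - 1) \times \left(1 - \frac{t}{T}\right)^2 \odot X_i \tag{9}$$

$$X_i^{new} = X_i + r \odot (X_{rand} - K \times X_i) \tag{10}$$

where $RB$ is a random vector drawn from the standard normal distribution, $X_{rand}$ is an individual randomly selected from the secretary bird population, and $K$ is a random integer equal to either 1 or 2.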
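A minimal Python sketch of the two exploitation tactics follows. The update forms are assumptions borrowed from the commonly cited SBOA formulation (a perturbation around the best position that shrinks with (1 - t/T)^2, and a move relative to a random bird scaled by an integer in {1, 2}); the function name and argument layout are illustrative.

```python
import random


def exploit(i, pop, best, t, T, rng):
    """Return a candidate position for bird i using one of two tactics."""
    x, dim = pop[i], len(pop[i])
    if rng.random() < 0.5:
        # Camouflage: perturb around the best position; the step vanishes at t = T.
        shrink = (1 - t / T) ** 2
        return [best[j] + (2 * rng.gauss(0, 1) - 1) * shrink * x[j]
                for j in range(dim)]
    # Flight: move relative to a randomly selected bird; k is 1 or 2.
    other = pop[rng.randrange(len(pop))]
    k = rng.choice((1, 2))
    return [x[j] + rng.random() * (other[j] - k * x[j]) for j in range(dim)]
```

Each tactic is chosen with probability 0.5, matching the equal-probability rule stated above.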

3. The Proposed MESBOA

This section presents MESBOA and devises two enhancement strategies to overcome the original SBOA’s imbalance between exploitation and exploration and its tendency to fall into local optima.

3.1. Multi Population Management Strategy

For meta-heuristics, exploration features wide search coverage, high diversity and strong randomness, and is therefore preferred in early iterations, multimodal landscapes or dynamic environments. Exploitation, by contrast, is characterized by narrow search range, increased determinism and rapid convergence, so it is better suited to later iterations, unimodal problems or static environments.

The basic SBOA, however, executes the exploration and exploitation behaviors in sequence within every single iteration: each bird first performs an exploratory move and then immediately applies an exploitative update to the same individual. This updating pattern has two defects. Excessive exploitation in early stages wastes evaluations on unpromising regions and restricts the search breadth. Excessive exploration in later stages prevents the swarm from focusing on the most promising areas and slows convergence. The multi-population (or sub-population) strategy is a well-established and widely adopted enhancement technique in the field of meta-heuristics. By dividing the entire search workforce into several mutually interacting sub-groups and equipping each sub-group with its own search operator, the algorithm is able to maintain higher diversity, explore different regions of the search space in parallel, and thus achieve a better balance between exploration and exploitation. This idea has been successfully integrated into numerous optimization algorithms—ranging from differential evolution and particle swarm optimization to artificial bee colony and genetic algorithms—yielding consistent performance improvements across a broad set of benchmark and real-world problems [48,49,50]. To overcome these drawbacks, we propose a multi-population management strategy (MMS) that restructures the SBOA search framework and coordinates exploration and exploitation instead of running them back-to-back. Specifically, MMS splits the population into three sub-groups—dominant, balanced and inferior—according to fitness.

The dominant group acts as the leader. In early stages it enlarges the search scope to guarantee strong global exploration, while in later stages it retains the ability to jump out of local optima and thus avoids premature convergence.

The balanced group is responsible for a smooth transition between exploration and exploitation, ensuring the algorithm keeps refining the solution without oscillation.

Regarding the inferior group, although they are far from the optimum, they are not discarded. Opposition-based learning suggests that the opposite of a poor solution may be promising; hence these individuals continue to broaden the search and, in later stages, can accelerate convergence by learning from the best.

Based on the above analysis, MMS reallocates the original SBOA operators so that exploration and exploitation are executed by different sub-populations at the same time, eliminating mutual interference and achieving a cooperative balance.

MMS assigns Equations (3) and (5) to the dominant group. When the switching condition of the dominant group is satisfied, Equation (5) is executed for position updating; otherwise, Equation (3) is applied. This switching guarantees intensive global exploration at the beginning while retaining the ability to jump out of local optima, and shifts the emphasis to deep exploitation in later stages while still preserving diversity. The balanced group is updated by the secretary bird’s escape and camouflage behaviors. If the switching condition of the balanced group is satisfied, Equation (10) is used; otherwise, Equation (9) is performed.

This mechanism keeps the balanced individuals moving toward the current best while periodically enlarging their search radius, thereby sustaining an equilibrium between exploration and exploitation. For the inferior group, the prey-search and prey-exhaust operators are adopted. When the switching condition of the inferior group is met, Equation (3) is executed; otherwise, Equation (4) is employed. Consequently, the inferior individuals continuously broaden the search scope and, by learning from the best in the final phase, accelerate convergence without misleading the rest of the swarm. The pseudo-code of MMS is given in Algorithm 1.

| Algorithm 1: Pseudocode of multi-population management strategy (MMS) |
| --- |
| 1: Input: lb, ub, D, N, T |
| 2: Initialize population randomly according to Equation (1) |
| 3: While (t < T) do |
| 4: Calculate the fitness of each secretary bird individual |
| 5: For i = 1: N |
| 6: If Xi belongs to dominant group |
| 7: If the dominant-group switching condition is satisfied |
| 8: Update the position of secretary bird individual using Equation (5) |
| 9: Else |
| 10: Update the position of secretary bird individual using Equation (3) |
| 11: End if |
| 12: Else if Xi belongs to balanced group |
| 13: If the balanced-group switching condition is satisfied |
| 14: Update the position of secretary bird individual using Equation (10) |
| 15: Else |
| 16: Update the position of secretary bird individual using Equation (9) |
| 17: End if |
| 18: Else |
| 19: If the inferior-group switching condition is satisfied |
| 20: Update the position of secretary bird individual using Equation (3) |
| 21: Else |
| 22: Update the position of secretary bird individual using Equation (4) |
| 23: End if |
| 24: End if |
| 25: End for |
| 26: t = t + 1 |
| 27: End while |
| 28: Output: The best solution Xb |
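The grouping and operator assignment of MMS can be sketched in Python as follows. Several points are assumptions rather than the authors' specification: the problem is treated as minimization, the dominant group is taken as the best a·N birds and the inferior group as the birds ranked after b·N (using the ratios a and b from the parameter study), and the switching conditions are passed in as a plain boolean because their exact form is not recoverable from the text.

```python
def split_groups(fitness, a=0.4, b=0.8):
    """Return index lists (dominant, balanced, inferior), best fitness first.

    Assumed layout: dominant = best a*N birds, inferior = birds ranked
    after b*N, balanced = everything in between (minimization).
    """
    order = sorted(range(len(fitness)), key=fitness.__getitem__)
    n = len(order)
    cut_a, cut_b = round(a * n), round(b * n)
    return order[:cut_a], order[cut_a:cut_b], order[cut_b:]


# Operator chosen when the group's switching condition is (True, False).
OPERATORS = {
    "dominant": ("prey attack, Equation (5)", "prey search"),
    "balanced": ("Equation (10)", "Equation (9)"),
    "inferior": ("prey search", "prey exhausting, Equation (4)"),
}


def choose_operator(group, switch):
    """Map a group name and a boolean switch to the operator used in MMS."""
    return OPERATORS[group][0 if switch else 1]


fitness = [5, 3, 9, 1, 7, 2, 8, 4, 6, 0]
dominant, balanced, inferior = split_groups(fitness)
```

Because every bird falls into exactly one group, each individual is still moved once per iteration, which is the property the complexity analysis relies on.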

3.2. Experience-Trend Guidance Strategy

SBOA neglects inter-individual information exchange; consequently, population diversity collapses in the later search period. Moreover, its limited global exploration capacity and insufficient local refinement ability restrict overall search performance. The algorithm also discards the historical data accumulated during iterations, so it cannot fully capture latent search dynamics or trends. The guided learning strategy is an experience-driven enhancement technique that evaluates the instantaneous exploration–exploitation demand from the collective search history of all individuals and then adaptively selects the most suitable search mode. However, because its decision is based on the averaged past experience of the whole swarm, it may mistake a temporary local aggregation for a genuine convergence signal and wrongly trigger excessive exploitation, leading to erroneous guidance and degraded global exploration capability [51]. To remedy these weaknesses, we propose an experience-trend guidance strategy (EGS) that exploits the historical information of dominant individuals to steer the optimization process.

Specifically, EGS computes the standard deviation of recent positions to measure population dispersion and infers the type of guidance currently required. When the algorithm is biased toward exploration, EGS switches the search to exploitation; otherwise, it drives the swarm back toward exploration. Meanwhile, by analyzing the positional records of dominant individuals over previous iterations, EGS extracts the evolutionary trend and locates regions that are likely to contain the global optimum. In this way, EGS dynamically alternates between guiding exploitation and exploration according to the instantaneous search state, achieving an effective exploration–exploitation balance. The mathematical model of EGS is given below.

First, every individual of each iteration is stored in a historical memory pool P whose maximum capacity is Pmax. When the number of stored individuals exceeds Pmax, the standard deviation of these archived individuals is computed with Equation (11), and the resulting value is normalized by the factor obtained from Equation (12) to eliminate sensitivity to variable-bound changes.

where std(·) is the function that calculates the standard deviation. After obtaining the normalized standard deviation, EGS updates individuals through one of two adaptive schemes. In the exploration-oriented update, if the normalized standard deviation indicates low population diversity (i.e., the swarm is highly clustered), EGS relocates individuals toward under-explored regions via Equation (14) to prevent premature convergence and sustain exploration momentum. In the exploitation-oriented update, if the normalized standard deviation exceeds the threshold (i.e., the swarm is overly dispersed), EGS triggers an exploitation operator via Equation (13) that intensifies the search around promising areas through the combined influence of the elite group, the global best agent and a randomly chosen agent to accelerate convergence. The switch between these two modes is self-adaptive, which maintains a dynamic balance between exploration and exploitation according to the evolutionary state inferred from the normalized standard deviation.

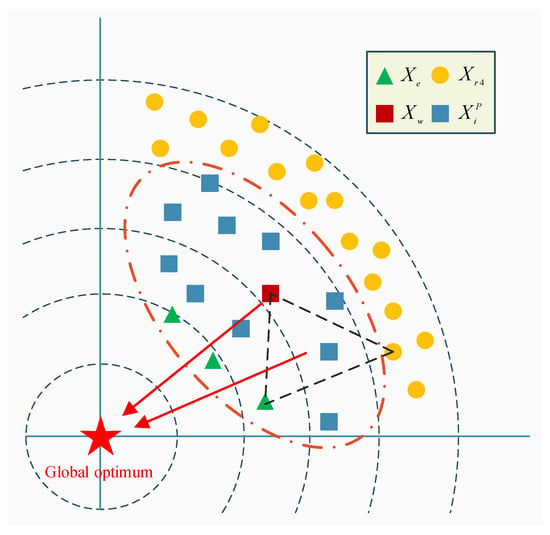

In Equations (13) and (14), the random agent is a secretary bird individual randomly selected from the current population; the elite mean denotes the weighted average position of the dominant individuals stored in the historical memory pool P; the top-three agent is a randomly chosen individual among the top three fittest birds in the current swarm; and the covariance term is the covariance matrix of the dominant population, computed from the dominant individuals preserved in P and their number. As illustrated in Figure 1, the dominant group drives the population toward promising regions, the individual randomly chosen from the top three supplies alternative directions while accelerating convergence, and the random agent enlarges the set of possible search orientations; consequently Equation (14) markedly improves individual quality and strengthens global exploration, whereas Equation (13) speeds up convergence yet still preserves the possibility of correcting the search direction.

Figure 1. The schematic of EGS.

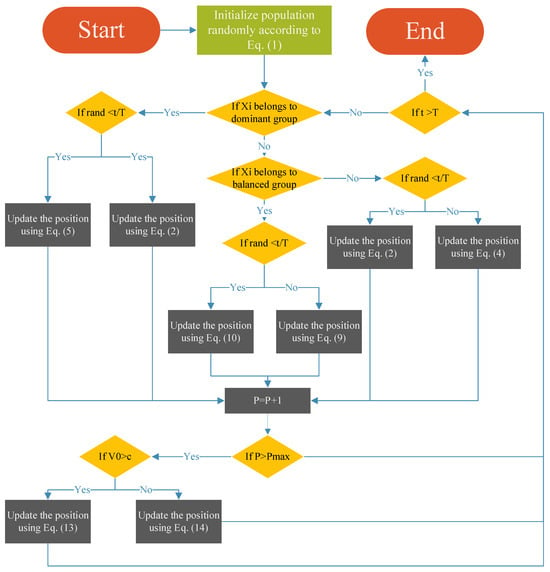
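The mode selection in EGS can be illustrated with a short Python sketch. The exact forms of Equations (11) and (12) are not reproduced here; the sketch assumes that dispersion is measured as the per-dimension standard deviation of the archived positions, divided by the bound width and averaged over dimensions, and that a single hypothetical `threshold` separates the two modes. All names are illustrative.

```python
import statistics


def normalized_dispersion(archive, lb, ub):
    """Mean per-dimension standard deviation of archived positions,
    scaled by the bound width so the value is insensitive to the bounds."""
    dim = len(lb)
    ratios = []
    for j in range(dim):
        column = [x[j] for x in archive]
        ratios.append(statistics.pstdev(column) / (ub[j] - lb[j]))
    return sum(ratios) / dim


def guidance_mode(archive, lb, ub, threshold):
    """Dispersed swarm -> guide exploitation; clustered swarm -> guide exploration."""
    d = normalized_dispersion(archive, lb, ub)
    return "exploitation" if d > threshold else "exploration"
```

Dividing by the bound width means that rescaling the whole problem leaves the measured dispersion, and therefore the selected mode, unchanged.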

3.3. Implementation Steps of MESBOA

In summary, the proposed MESBOA first generates an initial set of solutions within the search bounds via Equation (1). During each iteration, every individual is updated by the search operator designated by MMS; the renewed individuals are then saved in pool P. Once the EGS activation condition is met, each dimension of every individual is revised by either Equation (13) or Equation (14) according to the current demand. This loop repeats until the stopping criterion is satisfied. The pseudo-code of MESBOA is given in Algorithm 2 and its flowchart is illustrated in Figure 2.

| Algorithm 2: Pseudocode of MESBOA |
| --- |
| 1: Input: lb, ub, D, N, T |
| 2: Initialize population randomly according to Equation (1) |
| 3: While (t < T) do |
| 4: Calculate the fitness of each secretary bird individual |
| 5: For i = 1: N |
| 6: If Xi belongs to dominant group |
| 7: If the dominant-group switching condition is satisfied |
| 8: Update the position of secretary bird individual using Equation (5) |
| 9: Else |
| 10: Update the position of secretary bird individual using Equation (3) |
| 11: End if |
| 12: Else if Xi belongs to balanced group |
| 13: If the balanced-group switching condition is satisfied |
| 14: Update the position of secretary bird individual using Equation (10) |
| 15: Else |
| 16: Update the position of secretary bird individual using Equation (9) |
| 17: End if |
| 18: Else |
| 19: If the inferior-group switching condition is satisfied |
| 20: Update the position of secretary bird individual using Equation (3) |
| 21: Else |
| 22: Update the position of secretary bird individual using Equation (4) |
| 23: End if |
| 24: End if |
| 25: Store the updated individual in the historical memory pool P |
| 26: If the number of individuals stored in P exceeds Pmax |
| 27: If the normalized standard deviation exceeds the threshold |
| 28: Update the position of secretary bird individual using Equation (13) |
| 29: Else |
| 30: Update the position of secretary bird individual using Equation (14) |
| 31: End if |
| 32: End if |
| 33: End for |
| 34: t = t + 1 |
| 35: End while |
| 36: Output: The best solution Xb |

Figure 2. Flowchart of the proposed MESBOA.

3.4. The Computational Complexity of MESBOA

Time complexity is a critical metric for evaluating the performance of optimization algorithms. The computational cost of both SBOA and MESBOA is dominated by population initialization and iterative updating, with the key factors being the maximum number of iterations (T), the problem dimension (D), and the population size (N). The time complexity of the original SBOA is reported in its source paper [40]; the complexity of MESBOA is derived as follows. Initializing the secretary bird population costs O(N × D). In the position-update stage, MMS only reassigns the existing search operators, and each individual is still moved once per iteration; hence, the time complexity of MMS is O(T × N × D). As an extra position-update module, EGS adds its own cost. If EGS is invoked T1 times, its time complexity is O(T1 × N × D). Therefore, the overall time complexity of MESBOA is O(N × D + T × N × D + T1 × N × D). Since the EGS procedure is triggered only after the archive has reached its maximum capacity Pmax, and Pmax is assumed to be a multiple of N, the archive fills only after several iterations, so it follows that T1 < T. Thus, in terms of the number of position updates, the overall cost of MESBOA is slightly lower than that of the original SBOA, which applies both an exploratory and an exploitative update to every individual in each of the T iterations.
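The comparison can be made concrete by counting position updates, each of which costs O(D). The sketch below is illustrative and rests on two assumptions: the original SBOA performs two updates per bird per iteration (exploration followed by exploitation, as described in Section 3.1), and EGS revises every bird in each of the iterations in which it is invoked. The numbers are arbitrary examples, not experimental settings.

```python
def update_counts(n, t_max, t_egs):
    """Count position updates per run for SBOA and MESBOA."""
    sboa = 2 * n * t_max            # exploration + exploitation, every bird, every iteration
    mesboa = n * t_max + n * t_egs  # one MMS update, plus EGS in t_egs iterations
    return sboa, mesboa


# Example: 30 birds, 1000 iterations, archive full after the first 10 iterations.
sboa_updates, mesboa_updates = update_counts(n=30, t_max=1000, t_egs=990)
```

With these example values MESBOA performs 59,700 updates against 60,000 for SBOA, and the gap grows with the number of iterations needed to fill the archive.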

4. Benchmark Test Results and Analysis

To comprehensively evaluate the performance of the proposed MESBOA, a total of 41 functions from the CEC2017 and CEC2022 test suites are employed. In this section, we conduct parameter sensitivity analysis, ablation experiments, convergence analysis, robustness analysis and statistical tests to demonstrate the superiority of MESBOA, and compare its results with various baseline and improved algorithms of different types. The section is organized into six parts: benchmark test function, experimental setup and competitor algorithms, parameter sensitivity analysis, an ablation study, low-dimensional experiments and high-dimensional experiments, which will be presented in sequence.

4.1. Review of the Benchmark Test Suites

Two benchmark suites are employed in the experiments. The CEC 2017 set consists of unimodal (UM—F1, F3; F2 was officially removed), multimodal (MM—F4–F10), hybrid (H—F11–F20), and composite (C—F21–F30) functions. The CEC 2022 suite contains unimodal (UM—F1), multimodal (MM—F2–F5), hybrid (H—F6–F8), and composite (C—F9–F12) functions. While CEC 2017 can be evaluated at 10 D, 30 D, 50 D and 100 D, and CEC 2022 at 10 D and 20 D, we selected 50 D and 100 D cases from CEC 2017 together with 10 D and 20 D cases from CEC 2022 to examine MESBOA across a broad dimensional range. Detailed specifications for both suites are summarized in Table A1 and Table A2 of Appendix A.

4.2. Experimental Environment and Configuration

The experiments are implemented in MATLAB 2021b in a Windows 11 environment. The platform has 32 GB RAM and an AMD R9 7945HX processor. To ensure fairness, all algorithms compared in the performance tests were run on identical benchmark functions, the maximum number of function evaluations was fixed at 1000 D, and every algorithm was executed over 30 independent trials. To reduce the effect of randomness, the minimum value (Min), average value (Avg), and standard deviation (Std) of the results across the 30 runs were used for statistical analysis. Statistical significance was examined via the Wilcoxon rank-sum test, the Friedman test and the Nemenyi test.
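The per-function summary over independent runs can be computed as in the following Python sketch. It assumes the sample standard deviation (divisor n − 1); the paper does not state which estimator is used, and the function name is illustrative.

```python
import statistics


def summarize(run_results):
    """Min, Avg and Std of the final objective values from independent runs."""
    return {
        "Min": min(run_results),
        "Avg": statistics.fmean(run_results),
        "Std": statistics.stdev(run_results),  # sample standard deviation (n - 1)
    }


summary = summarize([1.0, 2.0, 3.0, 4.0])
```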

To highlight the superiority of the proposed MESBOA, eight basic and enhanced algorithms of distinct categories are selected for comparison. These include evolution-based AE [17] and LSHADE-SPACMA [52], physics-based EO [53] and GLS-RIME [51], human-based CFOA [29] and ISGTOA [54], and swarm-based RBMO [55] and ESLPSO [56]. The AE, CFOA and RBMO are recently published high-performance basic algorithms, EO is a widely cited method, LSHADE-SPACMA is an advanced differential-evolution variant, ESLPSO represents the latest upgrade of the classical PSO, while GLS-RIME and ISGTOA have demonstrated strong efficacy in their respective studies. Overall, this diverse set of competitors provides a solid basis for verifying the exceptional performance of MESBOA. Parameter values for all contenders are taken from their original papers; only the common termination criterion (maximum function evaluations) is imposed to ensure a fair comparison. Table 1 outlines the specific parameter settings.

Table 1. Parameter setting of each algorithm.

4.3. Parameter-Sensitivity Analysis

For meta-heuristic algorithms, appropriate parameter values are essential to fully exploit their potential. The proposed MESBOA integrates MMS and EGS, each of which introduces its own tunable parameters; therefore, this subsection is devoted to identifying the best settings for both modules.

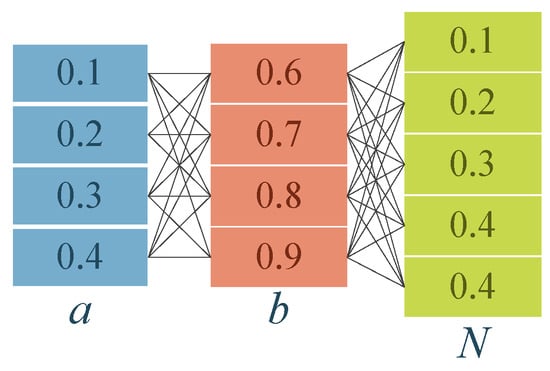

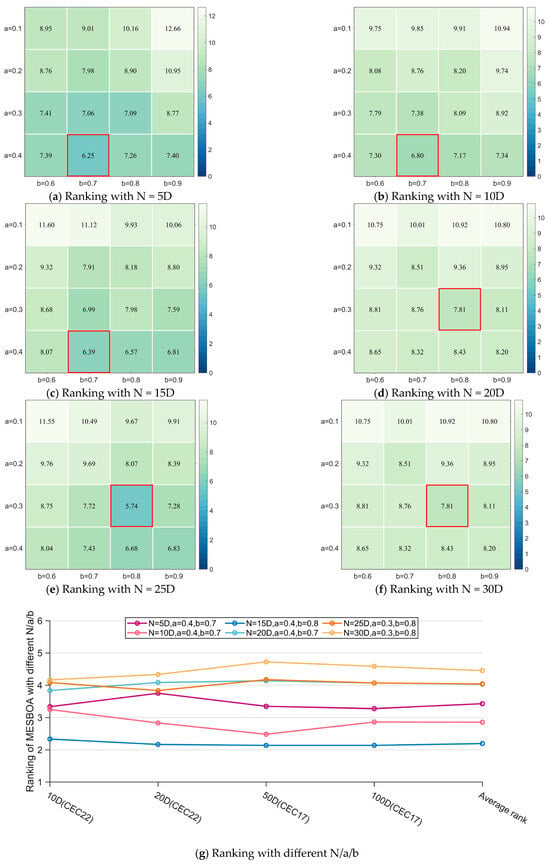

The MMS partitions the population into three groups to boost SBOA, so fixing the sizes of these groups is critical. A grid search is therefore adopted over three parameters: a, which controls the size of the dominant group; b, which controls the size of the inferior group; and N, the total population size. Here a is swept from 0.1 to 0.4 in steps of 0.1, b from 0.6 to 0.9 in steps of 0.1, and N from 5D to 30D in steps of 5D, yielding 96 combinations (Figure 3). The version of MESBOA that employs only MMS is run 30 times on selected low- and high-dimensional functions for every parameter triple, and the results are analyzed with the Friedman test to identify the best configuration.

Figure 3.

Combination of three fine-tuning parameters.
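The size of this search grid can be checked with a short enumeration. The sketch below only lists the parameter triples (a, b, N/D) from the stated ranges; the function name `mms_grid` is hypothetical and no optimizer is run.

```python
from itertools import product


def mms_grid():
    """Enumerate the (a, b, N/D) triples of the MMS grid search."""
    a_values = [round(0.1 * i, 1) for i in range(1, 5)]    # 0.1, 0.2, 0.3, 0.4
    b_values = [round(0.1 * i, 1) for i in range(6, 10)]   # 0.6, 0.7, 0.8, 0.9
    n_multipliers = list(range(5, 31, 5))                  # N = 5D, 10D, ..., 30D
    return list(product(a_values, b_values, n_multipliers))


grid = mms_grid()
print(len(grid))  # 4 * 4 * 6 = 96 parameter triples
```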

Figure 4 presents the Friedman mean ranks of MESBOA under different parameter settings for the four dimensional settings of the two test suites. Figure 4a–f shows that the algorithm achieves the top rank when a is 0.3 or 0.4 and b is 0.7 or 0.8, indicating that these parameters are insensitive to the overall population size. A small a (dominant group too small) degrades performance, whereas b (inferior group) must be neither too large nor too small, confirming its constructive role. Figure 4g further shows that the best overall rank occurs at N = 15 D with a = 0.4 and b = 0.8, so this parameter set is adopted in all subsequent experiments.

Figure 4.

The Friedman test results of MESBOA with different N/a/b.

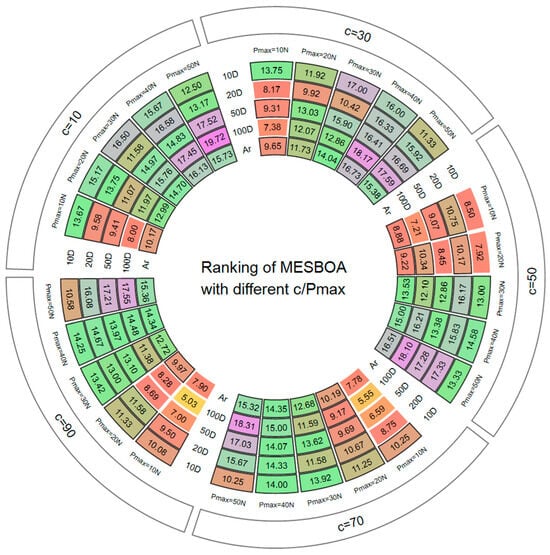

The EGS is effective only when enough historical experience is stored and the correct exploration–exploitation bias is chosen; too few records fail to reveal the evolutionary trend, whereas too many may mislead and slow the search. Because different algorithms struggle to balance exploration and exploitation, the strategy must also decide which behavior is currently required. Hence the best values of Pmax (history capacity) and c (bias control) are investigated. A grid search is again employed: Pmax is swept from 10 N to 50 N in steps of 10 N and c from 10 to 90 in steps of 20. Figure 5 reports the Friedman ranks of MESBOA with different parameter pairs across all tested dimensions, where a lower average rank (Ar) indicates better overall performance. In Figure 5, the boxed numbers indicate the Friedman ranks obtained under each parameter configuration; the 10-D and 20-D results refer to the CEC 2022 suite, whereas the 50-D and 100-D results correspond to the CEC 2017 suite. Taking c = 10 as an example, the cell aggregates the ranks (and their average) across the five Pmax settings for every dimensionality: specifically, when c = 10 and Pmax = 10 N, the algorithm achieves a rank of 13.67 on the 10-D CEC 2022 functions.

Figure 5.

The Friedman ranking of MESBOA with different c/Pmax.

At any fixed value of c, increasing Pmax consistently worsens the rank, implying that excessive historical information obscures rather than reveals the population’s evolutionary trend, and thereby misguides the search. Conversely, for a fixed Pmax, a larger c yields better ranks, showing that the original SBOA is exploration-deficient and benefits from stronger exploration bias introduced by EGS. The best compromise is obtained with c = 70 and Pmax = 10 N; this combination achieves the lowest Ar on both low- and high-dimensional functions, and is therefore adopted in all subsequent experiments.

4.4. Strategy Effectiveness Analysis

In this section, we examine the individual contributions of the proposed enhancement strategies to the performance gains observed in MESBOA. Two reduced variants, each incorporating only one of the two mechanisms, are evaluated: MSBOA, obtained by removing the EGS module from MESBOA, and ESBOA, derived by excluding the MMS component. The experimental results for MESBOA, SBOA, MSBOA and ESBOA on both test suites are compiled in Tables A1–A4 of Appendix A, and the Friedman and Wilcoxon rank-sum tests are employed to analyze the outcomes.

Table 2 summarizes the Friedman results for MESBOA and its two derived variants; the obtained p-value < 0.05 confirms significant differences among the four configurations. MESBOA, equipped with both enhancement modules, ranks first under all four dimensional settings, whereas the original SBOA always places last. MSBOA consistently outperforms ESBOA, indicating that the MMS component contributes more to the overall improvement than the EGS component, yet each single strategy still yields a clear gain over the baseline SBOA. Table 3 quantifies the win/tie/loss counts of MESBOA and its partial variants against SBOA: all three improved versions achieve significant superiority on more than half of the functions, again underlining the effectiveness of the proposed modifications. The number of wins for MSBOA exceeds that for ESBOA, further evidencing that MMS provides a larger performance boost than EGS. Overall, both proposed enhancement strategies are statistically validated as distinctly beneficial.

Table 2.

Friedman test results of MESBOA and its derived algorithms.

Table 3.

Wilcoxon rank sum test results of MESBOA and its derived algorithms.
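How Friedman mean ranks of this kind are obtained can be sketched as follows. The example ranks the four variants on each function using the rounded Avg values of Table A1 (CEC2022, 10-D) and averages the ranks per algorithm. It is an illustrative assumption that the ranking is performed on these averages; the resulting numbers are therefore not claimed to reproduce Table 2. The helper names are hypothetical.

```python
ALGORITHMS = ["SBOA", "MSBOA", "ESBOA", "MESBOA"]

# Avg rows of Table A1 (CEC2022, 10-D), one row per function F1..F12.
AVG_10D = [
    [1.4071e3, 4.1585e2, 3.8985e2, 3.1454e2],
    [4.1381e2, 4.1732e2, 4.0923e2, 4.1401e2],
    [6.0350e2, 6.0067e2, 6.0078e2, 6.0021e2],
    [8.2306e2, 8.1874e2, 8.1413e2, 8.1592e2],
    [9.0615e2, 9.0311e2, 9.0027e2, 9.0009e2],
    [5.3346e4, 5.2305e3, 4.7676e3, 4.0973e3],
    [2.0346e3, 2.0231e3, 2.0280e3, 2.0237e3],
    [2.2280e3, 2.2233e3, 2.2263e3, 2.2213e3],
    [2.5300e3, 2.5293e3, 2.5294e3, 2.5293e3],
    [2.5217e3, 2.5083e3, 2.5235e3, 2.5155e3],
    [2.8522e3, 2.7853e3, 2.7897e3, 2.7765e3],
    [2.8641e3, 2.8629e3, 2.8636e3, 2.8632e3],
]


def average_ranks(values):
    """Rank values in ascending order (1 = best); ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2.0 + 1.0
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def friedman_mean_ranks(table):
    """Average the per-function ranks of each algorithm (column)."""
    per_function = [average_ranks(row) for row in table]
    n_funcs = len(per_function)
    n_algs = len(table[0])
    return [sum(r[a] for r in per_function) / n_funcs for a in range(n_algs)]


mean_ranks = dict(zip(ALGORITHMS, friedman_mean_ranks(AVG_10D)))
print(mean_ranks)
```

On these rounded values MESBOA obtains the lowest mean rank and SBOA the highest, in line with the ordering discussed above.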

4.5. Low-Dimensional Function Experiments

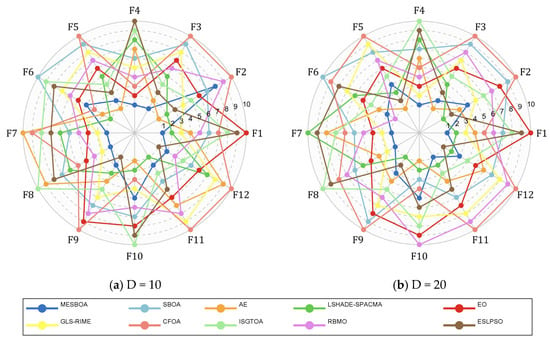

To comprehensively evaluate the performance of the proposed MESBOA on low-dimensional problems, the 12 functions of the CEC2022 test suite are adopted as the benchmark. Figure 6 shows the average rankings of MESBOA, AE, LSHADE-SPACMA, EO, GLS-RIME, CFOA, ISGTOA, RBMO and ESLPSO when solving the 10-D/20-D functions. The complete data of the best, mean and standard deviation values are provided in Table A1 and Table A2 of Appendix A. In the radar chart of rankings, each algorithm connects its ranks on the different functions into one surface; the smaller the enclosed surface, the better the overall performance. As can be seen, the surface produced by the proposed MESBOA is the smallest, demonstrating its superior overall performance. The surfaces of ESLPSO and ISGTOA are similar in size but exhibit poorer smoothness, indicating relatively weak stability and search efficiency. Although SBOA shows small fluctuations, its area is still large, confirming its poor overall performance.

Figure 6.

Radar diagram of MESBOA and comparison algorithms on CEC2022.

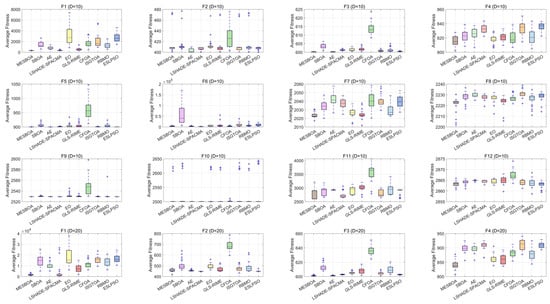

Figure 7 presents boxplots of MESBOA, AE, LSHADE-SPACMA, EO, GLS-RIME, CFOA, ISGTOA, RBMO and ESLPSO on the CEC2022 functions to assess robustness. It is evident that MESBOA exhibits the smallest box ranges on the majority of problems—specifically F1, F3–F4 and F7–F8 for 10-D, and F1, F4, F7–F8 and F11 for 20-D, ten functions in total—while also showing fewer outliers (“+”), indicating high stability. The plots further reveal that MESBOA consistently attains the lowest median on almost half of the functions, underscoring its superior accuracy. Overall, the narrow and low-lying boxes demonstrate that MESBOA delivers stable distributions and strong robustness across the test suite.

Figure 7.

Box plots of MESBOA and comparison algorithms on CEC2022.

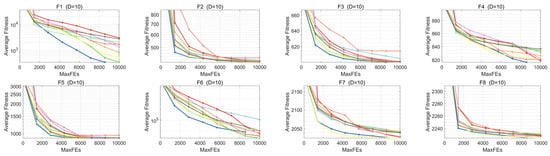

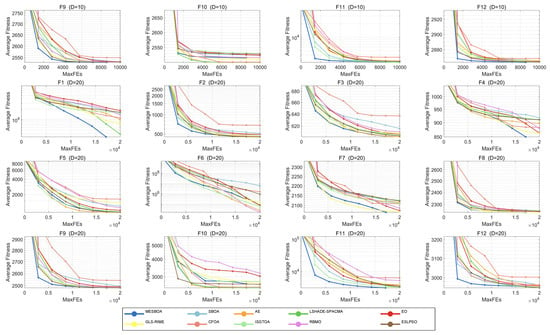

Based on the convergence curves collected on the CEC2022 test set, we further examined MESBOA’s convergence behavior when tackling low-dimensional tasks. As Figure 8 shows, MESBOA delivers excellent convergence. For the unimodal F1, all algorithms continue to converge, yet MESBOA exhibits the fastest speed and the highest accuracy, whereas the original SBOA descends much more slowly and attains a poorer final value. This superior performance on unimodal landscapes is attributed to MMS, which reconstructs the search framework so that dominant birds keep exploiting promising regions, and to EGS, which intensifies exploitation exactly when the population needs it; the synergy of the two mechanisms markedly strengthens MESBOA’s local search capability.

On multimodal functions F2–F5, MESBOA is not always the most accurate on F2–F3 and F5, yet it converges faster and consistently yields higher-quality solutions than the basic SBOA. On F4 its convergence is slower, but the algorithm keeps escaping local optima and continues to discover better points. Compared with the original SBOA, MESBOA maintains a steady convergence curve, rarely stalls, and even accelerates in the later search phase. These improvements are credited to the supplementary role of the inferior group defined by MMS; by enlarging the search scope it helps the swarm jump out of local traps. In addition, EGS preserves population diversity through multiple guiding points, which further protects MESBOA from premature convergence.

On the more challenging F6–F12, MESBOA attains the highest convergence accuracy on 10-D F7–F8, 20-D F7–F8 and F11. For the remaining functions except F10, it may not deliver the best final value, yet it always exhibits a rapid convergence rate; on F10 its speed and accuracy are not the best, but they still surpass those of the basic SBOA. These results confirm that MESBOA possesses a well-balanced exploration–exploitation capability, owing to the fact that EGS dynamically detects the swarm’s current demand and drives each dimension to search accordingly. Overall, MESBOA demonstrates the best convergence behavior among all contenders on low-dimensional optimization problems.

Figure 8.

Convergence curves of MESBOA and comparison algorithms on CEC2022.

In addition to convergence and robustness analyses, several statistical tests are employed to examine the differences between MESBOA and the compared algorithms. Table 4 summarizes the Wilcoxon rank-sum test results between MESBOA and AE, LSHADE-SPACMA, EO, GLS-RIME, CFOA, ISGTOA, RBMO, and ESLPSO, where the symbols “+/=/−” indicate that the proposed MESBOA is superior, similar, or inferior to the compared algorithm, respectively. The Wilcoxon rank-sum test is a non-parametric pairwise comparison method that checks whether two algorithms exhibit significant differences on each function. All subsequent statistical tests are conducted at a significance level of 0.05. Table 4 shows that, against every competitor, MESBOA obtains more “+” than “−”; for most algorithms the count of “+” even exceeds the sum of “−” and “=”, evidencing a clear superiority. Versus the basic algorithms the advantage is larger in 20-D than in 10-D, whereas versus the enhanced variants the advantage is larger in 10-D than in 20-D. These patterns not only confirm the overall competitiveness of MESBOA but are also consistent with the No Free Lunch (NFL) theorem, which states that no single algorithm is best for all problems.

Table 4.

Wilcoxon rank sum test results of MESBOA and comparison algorithms on CEC2022.
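The “+/=/−” labelling can be illustrated with a small, self-contained sketch. It assumes a minimization problem, a two-sided rank-sum test with the normal approximation (no tie or continuity correction), and the 0.05 significance level stated above; the function names are hypothetical and the authors' MATLAB routine may differ in these details.

```python
import math


def average_ranks(values):
    """Ascending ranks starting at 1; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2.0 + 1.0
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Return (z, p) of the two-sided rank-sum test, normal approximation."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])                       # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2.0
    std_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean_w) / std_w
    p = math.erfc(abs(z) / math.sqrt(2.0))    # two-sided p-value
    return z, p


def label(proposed_runs, rival_runs, alpha=0.05):
    """'+' if the proposed method is significantly better (smaller values),
    '-' if significantly worse, '=' if no significant difference."""
    z, p = rank_sum_test(proposed_runs, rival_runs)
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"
```

Applying `label` to the 30 final values of MESBOA and of one competitor on a single function gives one entry; counting the entries over all functions gives one “+/=/−” triple of the kind reported in Table 4.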

Beyond pairwise comparisons, an overall analysis is conducted using the Friedman test, and the results are reported in Table 5. The obtained p-values confirm a significant global performance difference between MESBOA and all contenders. Specifically, MESBOA ranks first on both 10-D and 20-D problems, achieving mean ranks of 2.500 and 2.333, respectively, followed immediately by LSHADE-SPACMA and AE, while the original SBOA places second-to-last, outperforming only CFOA. Notably, MESBOA’s mean rank is better in 20-D than in 10-D, yet its margin over the other enhanced variants is smaller, because the ranks of nearly all improved algorithms improve with dimension while those of the baseline methods deteriorate. Nevertheless, MESBOA delivers the best overall performance among all algorithms examined.

Table 5.

Friedman test results of MESBOA and comparison algorithms on CEC2022.

The Friedman test only indicates whether significant differences exist among all algorithms; it does not quantify the magnitude of the gap between MESBOA and any specific competitor. Therefore, the Nemenyi post-hoc test is applied to obtain a finer-grained analysis. Based on the Friedman rankings, Nemenyi’s procedure computes a critical difference value (CDV) with which algorithm pairs can be judged equivalent; if the difference between the mean ranks of MESBOA and another algorithm is smaller than CDV, the two methods are deemed statistically indistinguishable. CDV is calculated with Equation (18):

$$CDV = q_{\alpha}\sqrt{\frac{k(k+1)}{6n}} \qquad (18)$$

where $k$ represents the number of algorithms and $n$ represents the number of functions tested. $q_{\alpha}$ is obtained from the critical-value table and equals 3.1640 in this paper. Figure 9 illustrates the Nemenyi post-hoc test results for MESBOA and the competing algorithms. It can be observed that MESBOA exhibits significant differences with CFOA, SBOA, and ISGTOA on 10-D functions, while no significant differences are found with the remaining algorithms. On 20-D functions, MESBOA shows significant differences with CFOA, SBOA, RBMO, ISGTOA, and EO, but not with the other algorithms. Therefore, it can be concluded that MESBOA is a competitive algorithm on the CEC2022 test suite.

Figure 9.

Nemenyi post-hoc test results of MESBOA and comparison algorithms on CEC2022.
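As a worked illustration, the critical difference can be evaluated numerically. The sketch below assumes the standard Nemenyi expression, CDV = q_α · sqrt(k(k+1)/(6n)), with q_α = 3.1640 as stated in the text, k = 10 algorithms (MESBOA, SBOA and the eight competitors; this count is an assumption) and n = 12 functions for CEC2022. The function names are hypothetical.

```python
import math


def nemenyi_cd(q_alpha, k, n):
    """Critical difference of mean ranks for k algorithms on n functions."""
    return q_alpha * math.sqrt(k * (k + 1) / (6.0 * n))


def significantly_different(rank_a, rank_b, cd):
    """Two algorithms differ if their mean ranks are at least cd apart."""
    return abs(rank_a - rank_b) >= cd


cd_cec2022 = nemenyi_cd(3.1640, k=10, n=12)
print(round(cd_cec2022, 4))
```

Under these assumptions the critical difference is roughly 3.91, so an algorithm whose mean rank lies within about 3.91 of MESBOA's would be judged statistically indistinguishable from it on CEC2022.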

4.6. High-Dimensional Function Experiments

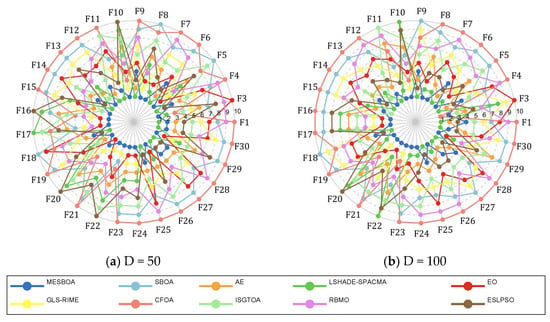

Although Section 4.5 has verified the effectiveness of MESBOA on low-dimensional tasks, modern applications increasingly involve high-dimensional and complex landscapes; hence the performance of MESBOA under such conditions must be assessed. The 50-D and 100-D instances of the CEC2017 test set are therefore employed. Figure 10 gives a first overview of the comparative behavior through a ranking radar chart, where the area enclosed by each algorithm indicates its overall standing on the CEC2017 benchmark. MESBOA never drops out of the top four ranks on any function, demonstrating consistently superior and stable performance, while SBOA and CFOA remain firmly in the bottom two positions across the entire benchmark.

Figure 10.

Radar diagram of MESBOA and comparison algorithms on CEC2017.

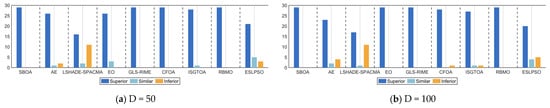

Table 6 summarizes the Wilcoxon rank-sum results between MESBOA and the competitors on high-dimensional functions, with a visual depiction in Figure 11. MESBOA registers significant superiority on at least 16 functions against every rival. Specifically, the counts of superior/(inferior) functions are 58(0) vs. SBOA, 49(6) vs. AE, 33(22) vs. LSHADE-SPACMA, 55(0) vs. EO, 88(0) vs. GLS-RIME, 57(1) vs. CFOA, 55(1) vs. ISGTOA, 58(0) vs. RBMO, and 41(8) vs. ESLPSO. Overall, MESBOA exhibits clear advantages on the majority of 50-D and 100-D functions.

Table 6.

Wilcoxon rank sum test results of MESBOA and comparison algorithms on CEC2017.

Figure 11.

The number of “+/=/−” obtained by MESBOA and comparison algorithms on CEC2017.

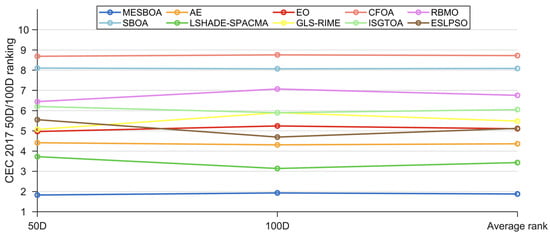

The Friedman test results for MESBOA and the competing algorithms on the 50-D/100-D functions of the CEC2017 suite are reported in Table 7 and visualized in Figure 12; the p-values confirm a significant overall difference, which is further quantified by the Nemenyi post-hoc analysis shown in Figure 13. MESBOA ranks first on both dimensionalities with mean ranks of 1.828 (50-D) and 1.931 (100-D), whereas the original SBOA places second-to-last at 8.103 and 8.069, respectively, and LSHADE-SPACMA and AE occupy the second and third positions. The Nemenyi post-hoc test reveals that, on both 50-D and 100-D CEC2017 functions, MESBOA is not statistically distinguishable from LSHADE-SPACMA, yet it exhibits significant differences from all other contenders. This outcome contrasts with the CEC2022 low-dimensional results and indicates that the proposed MESBOA possesses a stronger edge when tackling high-dimensional problems. Moreover, performing on par with the advanced differential evolution variant LSHADE-SPACMA further highlights the competitiveness of the proposed approach.

Table 7.

Friedman test results of MESBOA and comparison algorithms on CEC2017.

Figure 12.

The Friedman ranking of MESBOA and comparison algorithms on CEC2017.

Figure 13.

Nemenyi post-hoc test results of MESBOA and comparison algorithms on CEC2017.

5. Engineering Optimization Problems Results and Analysis

To verify the effectiveness and robustness of MESBOA in solving real-world engineering optimization problems, three typical engineering design problems with multiple inequality constraints are selected. When constraints are violated, the penalty function method is adopted: any infeasible solution receives a large penalty value and is thus eliminated during iterations. To guarantee fairness and reproducibility, all tests are conducted under identical settings and each problem is run 30 independent times.
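The penalty handling described above can be sketched as a wrapper around the objective. This is a minimal illustration under stated assumptions: constraints are written as g(x) ≤ 0, the penalty magnitude `1e10` is an arbitrary choice, and the toy problem and the name `with_penalty` are hypothetical, not taken from the paper.

```python
def with_penalty(objective, constraints, penalty=1e10):
    """Wrap an objective so that any constraint violation adds a large penalty.

    Each constraint is a function g with the convention g(x) <= 0 when feasible.
    """
    def penalized(x):
        value = objective(x)
        if any(g(x) > 0.0 for g in constraints):
            return value + penalty  # infeasible: pushed out of contention
        return value
    return penalized


# Hypothetical toy problem: minimize x0 + x1 subject to x0 * x1 >= 1,
# rewritten as g(x) = 1 - x0 * x1 <= 0.
toy = with_penalty(
    lambda x: x[0] + x[1],
    [lambda x: 1.0 - x[0] * x[1]],
)

print(toy([1.0, 1.0]))   # feasible point, plain objective value
print(toy([0.5, 0.5]))   # infeasible point, objective plus penalty
```

Because every infeasible point receives a value far above any feasible one, a minimizer discards such points through ordinary fitness comparison, without a separate feasibility rule.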

5.1. Pressure Vessel Design Problem

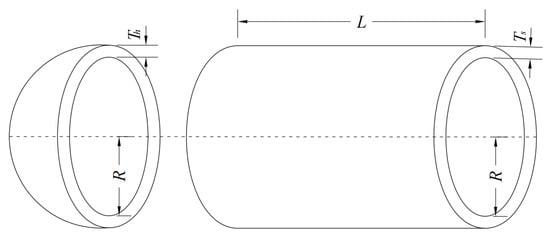

The pressure vessel design optimization problem aims to minimize the structural weight of the material employed. It involves four design variables: shell thickness, head thickness, inner radius and cylindrical shell length. The corresponding structural sketch is shown in Figure 14. The mathematical model is formulated as follows.

Figure 14.

Schematic of pressure vessel.

Consider variable

Minimize

Subject to

The proposed MESBOA algorithm achieves the minimum weight of 5734.9131570 in the pressure vessel design problem, with the corresponding design variables shown in Table 8. This result is the best among all competitors, and the standard deviation over 30 runs is significantly lower than that of the other methods.

Table 8.

Results of MESBOA and competitors on the pressure vessel design problem.
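For orientation, the pressure vessel benchmark as it is commonly stated in the literature can be written down in a few lines. The coefficients and constraints below are those of the widely used textbook formulation and are an assumption here: the authors' exact model, variable bounds and objective scaling may differ, so the sketch is not expected to reproduce the values in Table 8. The variable order (shell thickness, head thickness, inner radius, shell length) and all function names are likewise assumptions.

```python
import math


def pressure_vessel_objective(x):
    """Objective of the commonly used pressure vessel benchmark."""
    x1, x2, x3, x4 = x  # shell thickness, head thickness, inner radius, length
    return (0.6224 * x1 * x3 * x4
            + 1.7781 * x2 * x3 ** 2
            + 3.1661 * x1 ** 2 * x4
            + 19.84 * x1 ** 2 * x3)


def pressure_vessel_constraints(x):
    """Inequality constraints, each feasible when its value is <= 0."""
    x1, x2, x3, x4 = x
    return [
        -x1 + 0.0193 * x3,
        -x2 + 0.00954 * x3,
        -math.pi * x3 ** 2 * x4 - (4.0 / 3.0) * math.pi * x3 ** 3 + 1296000.0,
        x4 - 240.0,
    ]


def is_feasible(x):
    """True if every inequality constraint is satisfied."""
    return all(g <= 0.0 for g in pressure_vessel_constraints(x))


design = [1.0, 0.5, 45.0, 180.0]  # hypothetical design point, not an optimum
print(pressure_vessel_objective(design), is_feasible(design))
```

An optimizer would minimize `pressure_vessel_objective` while rejecting or penalizing any design for which `is_feasible` returns `False`.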

5.2. Welded Beam Design Problem

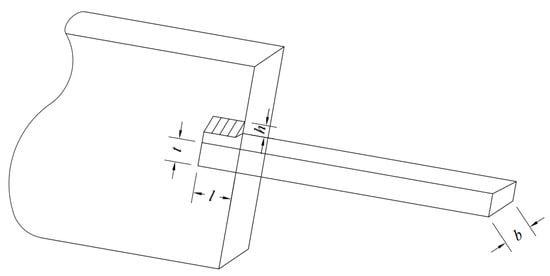

The welded beam design problem is a constrained optimization task whose objective is to minimize the weight of the beam. The design variables are four geometric parameters: weld thickness h, beam length l, beam thickness t and weld width b. The objective function computes the beam weight from these geometric quantities, while a set of constraints guarantees structural safety and feasibility. The corresponding structural sketch is shown in Figure 15. The mathematical model of the welded beam design problem is formulated as follows.

Figure 15.

Schematic of welded beam design.

Consider variable

Minimize

Subject to

As shown in Table 9, the proposed MESBOA demonstrates superior performance on this problem, achieving the best fitness value of 1.724528 with the corresponding design vector [0.187155, 3.470487, 9.036624, 0.205730].

Table 9.

Results of MESBOA and competitors on the welded beam design problem.

5.3. Three-Bar Truss Design Problem

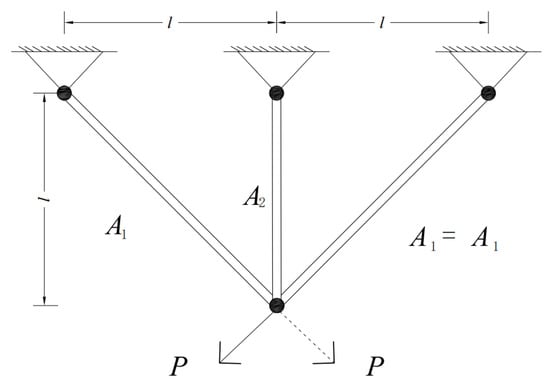

The three-bar truss design aims to produce a truss with the lowest possible weight while satisfying prescribed limits on deflection, buckling, and stress. This optimization problem involves two design variables, as shown in Figure 16, and its mathematical formulation is given below.

Figure 16.

Schematic of three-bar truss design.

Consider variable

Minimize

Subject to

As shown in Table 10, although the differences between MESBOA and the competing algorithms are small, MESBOA still delivers the best solution, with a corresponding fitness value of 263.8523464.

Table 10.

Results of MESBOA and competitors on the three-bar truss design problem.

6. Conclusions

This paper presents MESBOA, an SBOA variant that addresses the original algorithm’s imbalance between exploitation and exploration and its tendency to become trapped in local optima. A multi-population management strategy (MMS) restructures the SBOA search framework to coordinate exploitation and exploration, while an experience-trend guidance strategy (EGS) dynamically captures the evolutionary trend of the population and regulates exploration/exploitation on demand. Leveraging the guiding role of dominant swarms, MESBOA enhances global exploration, maintains diversity and accelerates convergence. Simulation experiments on the CEC2017 and CEC2022 test suites demonstrate that the proposed MESBOA outperforms eight state-of-the-art algorithms in terms of optimization capability and convergence performance. In addition, experiments on engineering constrained optimization problems show its strong advantages in real-world optimization tasks.

Admittedly, MESBOA still has limitations that warrant further attention. First, the newly introduced parameters of EGS and MMS must be manually tuned, restricting its broader applicability. Second, low-dimensional benchmarks reveal only marginal advantages, indicating that its performance on small-scale problems needs improvement. Finally, although EGS is invoked sparingly, the embedded covariance matrix computations are time-consuming, hindering deployment in real-time optimization. Future work will therefore focus on three directions: (1) adopting reinforcement learning or other adaptive parameter control techniques to eliminate manual tuning and widen the application domain; (2) embedding search operators specifically designed for low-dimensional landscapes and equipping the algorithm with a landscape-detection module to select operators dynamically; and (3) accelerating the covariance update via parallel architectures or matrix-skeletonization so that the method can tackle dynamic optimization tasks with tight timing constraints.

Author Contributions

J.Z. (Jin Zhu)—conceptualization/methodology/software/resources/writing—original draft/validation/visualization; B.L.—software/validation/formal analysis/investigation/data curation/resources; J.Z. (Jun Zheng)—conceptualization/resources/writing—review and editing/supervision/project administration/funding acquisition; S.Y.—writing—review and editing/data curation/resources/supervision; M.W.—writing—review and editing/supervision/project administration/funding acquisition/methodology. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Taizhou Science and Technology Plan Project, grant number 25gyb45.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data supporting the findings of this study are available within the paper.

Acknowledgments

The authors would like to thank the editors and anonymous reviewers who have helped to improve the paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

The statistical results of MESBOA and its derived algorithms on the CEC 2022 in dimensions of 10.

| No. | Index | SBOA | MSBOA | ESBOA | MESBOA |
|---|---|---|---|---|---|
| F1 | Min | 5.5352E+02 | 3.1561E+02 | 3.2611E+02 | 3.0008E+02 |
| | Avg | 1.4071E+03 | 4.1585E+02 | 3.8985E+02 | 3.1454E+02 |
| | Std | 6.0831E+02 | 1.2941E+02 | 3.7755E+01 | 1.3722E+01 |
| F2 | Min | 4.0601E+02 | 4.0035E+02 | 4.0024E+02 | 4.0086E+02 |
| | Avg | 4.1381E+02 | 4.1732E+02 | 4.0923E+02 | 4.1401E+02 |
| | Std | 1.5470E+01 | 2.5167E+01 | 1.2085E+01 | 1.9802E+01 |
| F3 | Min | 6.0092E+02 | 6.0005E+02 | 6.0023E+02 | 6.0009E+02 |
| | Avg | 6.0350E+02 | 6.0067E+02 | 6.0078E+02 | 6.0021E+02 |
| | Std | 1.3381E+00 | 1.1736E+00 | 4.0234E−01 | 7.1382E−02 |
| F4 | Min | 8.0797E+02 | 8.0703E+02 | 8.0748E+02 | 8.0200E+02 |
| | Avg | 8.2306E+02 | 8.1874E+02 | 8.1413E+02 | 8.1592E+02 |
| | Std | 7.5038E+00 | 8.2939E+00 | 5.0872E+00 | 6.6586E+00 |
| F5 | Min | 9.0120E+02 | 9.0016E+02 | 9.0002E+02 | 9.0000E+02 |
| | Avg | 9.0615E+02 | 9.0311E+02 | 9.0027E+02 | 9.0009E+02 |
| | Std | 5.0025E+00 | 4.8743E+00 | 1.7386E−01 | 1.0028E−01 |
| F6 | Min | 6.5012E+03 | 1.9935E+03 | 2.2026E+03 | 1.9415E+03 |
| | Avg | 5.3346E+04 | 5.2305E+03 | 4.7676E+03 | 4.0973E+03 |
| | Std | 4.1672E+04 | 2.0695E+03 | 1.8256E+03 | 1.9009E+03 |
| F7 | Min | 2.0198E+03 | 2.0212E+03 | 2.0221E+03 | 2.0173E+03 |
| | Avg | 2.0346E+03 | 2.0231E+03 | 2.0280E+03 | 2.0237E+03 |
| | Std | 6.9307E+00 | 2.1432E+00 | 3.5513E+00 | 2.9332E+00 |
| F8 | Min | 2.2170E+03 | 2.2064E+03 | 2.2156E+03 | 2.2020E+03 |
| | Avg | 2.2280E+03 | 2.2233E+03 | 2.2263E+03 | 2.2213E+03 |
| | Std | 3.9364E+00 | 3.5381E+00 | 3.7912E+00 | 6.3528E+00 |
| F9 | Min | 2.5294E+03 | 2.5293E+03 | 2.5293E+03 | 2.5293E+03 |
| | Avg | 2.5300E+03 | 2.5293E+03 | 2.5294E+03 | 2.5293E+03 |
| | Std | 5.5915E−01 | 7.4202E−02 | 1.5615E−01 | 2.8182E−02 |
| F10 | Min | 2.5004E+03 | 2.5003E+03 | 2.5003E+03 | 2.5003E+03 |
| | Avg | 2.5217E+03 | 2.5083E+03 | 2.5235E+03 | 2.5155E+03 |
| | Std | 4.7554E+01 | 2.9716E+01 | 4.6946E+01 | 3.9134E+01 |
| F11 | Min | 2.6578E+03 | 2.6005E+03 | 2.6115E+03 | 2.6004E+03 |
| | Avg | 2.8522E+03 | 2.7853E+03 | 2.7897E+03 | 2.7765E+03 |
| | Std | 1.4014E+02 | 1.6902E+02 | 1.5570E+02 | 1.7604E+02 |
| F12 | Min | 2.8611E+03 | 2.8596E+03 | 2.8596E+03 | 2.8607E+03 |
| | Avg | 2.8641E+03 | 2.8629E+03 | 2.8636E+03 | 2.8632E+03 |
| | Std | 9.7370E−01 | 1.2616E+00 | 1.4768E+00 | 1.1067E+00 |

Table A2.

The statistical results of MESBOA and its derived algorithms on the CEC 2022 in dimensions of 20.

| No. | Index | SBOA | MSBOA | ESBOA | MESBOA |
|---|---|---|---|---|---|
| F1 | Min | 5.0359E+03 | 1.0833E+03 | 1.7139E+03 | 8.3858E+02 |
| | Avg | 1.4221E+04 | 5.1301E+03 | 3.3450E+03 | 1.6891E+03 |
| | Std | 5.1642E+03 | 2.9662E+03 | 9.0029E+02 | 6.9714E+02 |
| F2 | Min | 4.5978E+02 | 4.4510E+02 | 4.4085E+02 | 4.1915E+02 |
| | Avg | 5.0292E+02 | 4.6441E+02 | 4.5981E+02 | 4.5938E+02 |
| | Std | 4.3153E+01 | 1.2992E+01 | 1.4308E+01 | 1.3063E+01 |
| F3 | Min | 6.0755E+02 | 6.0062E+02 | 6.0216E+02 | 6.0053E+02 |
| | Avg | 6.1309E+02 | 6.0293E+02 | 6.0474E+02 | 6.0128E+02 |
| | Std | 4.4226E+00 | 1.5536E+00 | 1.5033E+00 | 5.9607E−01 |
| F4 | Min | 8.5622E+02 | 8.2256E+02 | 8.4880E+02 | 8.0729E+02 |
| | Avg | 8.9906E+02 | 8.5261E+02 | 8.7853E+02 | 8.4025E+02 |
| | Std | 1.3812E+01 | 1.7090E+01 | 1.4215E+01 | 1.4961E+01 |
| F5 | Min | 9.7435E+02 | 9.1135E+02 | 9.0822E+02 | 9.0177E+02 |
| | Avg | 1.1351E+03 | 1.0540E+03 | 9.4429E+02 | 9.2697E+02 |
| | Std | 1.5742E+02 | 1.1423E+02 | 4.0574E+01 | 2.7455E+01 |
| F6 | Min | 5.6861E+05 | 2.1926E+03 | 1.0887E+05 | 2.4415E+03 |
| | Avg | 3.2073E+06 | 1.8696E+04 | 4.4962E+05 | 1.6519E+04 |
| | Std | 2.7864E+06 | 1.3436E+04 | 2.3261E+05 | 1.4728E+04 |
| F7 | Min | 2.0573E+03 | 2.0273E+03 | 2.0483E+03 | 2.0314E+03 |
| | Avg | 2.0973E+03 | 2.0630E+03 | 2.0829E+03 | 2.0525E+03 |
| | Std | 1.7843E+01 | 2.3158E+01 | 1.9506E+01 | 1.5269E+01 |
| F8 | Min | 2.2315E+03 | 2.2239E+03 | 2.2297E+03 | 2.2239E+03 |
| | Avg | 2.2426E+03 | 2.2308E+03 | 2.2385E+03 | 2.2297E+03 |
| | Std | 6.2336E+00 | 5.4370E+00 | 3.9057E+00 | 4.0834E+00 |
| F9 | Min | 2.4844E+03 | 2.4808E+03 | 2.4816E+03 | 2.4809E+03 |
| | Avg | 2.4926E+03 | 2.4820E+03 | 2.4836E+03 | 2.4820E+03 |
| | Std | 7.4879E+00 | 1.4145E+00 | 1.5079E+00 | 1.2667E+00 |
| F10 | Min | 2.5006E+03 | 2.5004E+03 | 2.5005E+03 | 2.5005E+03 |
| | Avg | 2.5296E+03 | 2.6710E+03 | 2.5468E+03 | 2.6017E+03 |
| | Std | 7.3987E+01 | 4.6806E+02 | 8.4983E+01 | 3.1332E+02 |
| F11 | Min | 3.2248E+03 | 2.9032E+03 | 3.0228E+03 | 2.9085E+03 |
| | Avg | 3.4671E+03 | 3.0928E+03 | 3.0923E+03 | 2.9622E+03 |
| | Std | 1.4417E+02 | 1.5658E+02 | 4.9011E+01 | 5.4120E+01 |
| F12 | Min | 2.9452E+03 | 2.9365E+03 | 2.9406E+03 | 2.9370E+03 |
| | Avg | 2.9608E+03 | 2.9504E+03 | 2.9524E+03 | 2.9526E+03 |
| | Std | 1.4357E+01 | 1.1311E+01 | 7.4899E+00 | 1.2159E+01 |

Table A3.

The statistical results of MESBOA and its derived algorithms on the CEC 2017 in dimensions of 50.

| No. | Index | SBOA | MSBOA | ESBOA | MESBOA |
|---|---|---|---|---|---|
| F1 | Min | 5.3234E+09 | 7.6065E+07 | 1.3221E+09 | 1.4560E+07 |
| | Avg | 6.7391E+09 | 5.1368E+08 | 2.7944E+09 | 2.3648E+08 |
| | Std | 1.0966E+09 | 3.4326E+08 | 1.3177E+09 | 2.3129E+08 |
| F3 | Min | 1.1419E+05 | 7.0729E+04 | 6.9889E+04 | 6.5945E+04 |
| | Avg | 1.5577E+05 | 1.0090E+05 | 1.0036E+05 | 8.8189E+04 |
| | Std | 2.4727E+04 | 1.5933E+04 | 2.3988E+04 | 1.4670E+04 |
| F4 | Min | 9.2567E+02 | 5.5087E+02 | 7.3922E+02 | 5.5091E+02 |
| | Avg | 1.2961E+03 | 6.9143E+02 | 8.4063E+02 | 6.3903E+02 |
| | Std | 2.5555E+02 | 9.4532E+01 | 9.3296E+01 | 6.3674E+01 |
| F5 | Min | 8.7112E+02 | 6.4803E+02 | 8.6313E+02 | 6.0263E+02 |
| | Avg | 9.4864E+02 | 7.0429E+02 | 8.9166E+02 | 6.5239E+02 |
| | Std | 4.5724E+01 | 4.9281E+01 | 3.4187E+01 | 3.5460E+01 |
| F6 | Min | 6.3411E+02 | 6.1355E+02 | 6.1731E+02 | 6.0746E+02 |
| | Avg | 6.4201E+02 | 6.2025E+02 | 6.2652E+02 | 6.1328E+02 |
| | Std | 5.2349E+00 | 3.3361E+00 | 6.3280E+00 | 2.9741E+00 |
| F7 | Min | 1.2417E+03 | 9.7991E+02 | 1.1605E+03 | 9.2451E+02 |
| | Avg | 1.3345E+03 | 1.0961E+03 | 1.2401E+03 | 9.8456E+02 |
| | Std | 6.5223E+01 | 6.9965E+01 | 4.5451E+01 | 4.6264E+01 |
| F8 | Min | 1.2189E+03 | 9.6391E+02 | 1.1566E+03 | 9.4115E+02 |
| | Avg | 1.2652E+03 | 1.0069E+03 | 1.2087E+03 | 9.7088E+02 |
| | Std | 2.5458E+01 | 3.4370E+01 | 3.4889E+01 | 2.0418E+01 |
| F9 | Min | 6.6165E+03 | 1.9385E+03 | 5.0748E+03 | 1.8049E+03 |
| | Avg | 1.5164E+04 | 4.5938E+03 | 8.9684E+03 | 2.5104E+03 |
| | Std | 7.0642E+03 | 1.8993E+03 | 2.0750E+03 | 5.8870E+02 |
| F10 | Min | 1.1968E+04 | 5.9223E+03 | 1.1760E+04 | 6.8605E+03 |
| | Avg | 1.3420E+04 | 7.4356E+03 | 1.3253E+04 | 7.7645E+03 |
| | Std | 8.6255E+02 | 1.2173E+03 | 1.0574E+03 | 8.6262E+02 |
| F11 | Min | 2.3350E+03 | 1.5130E+03 | 1.7148E+03 | 1.3359E+03 |
| | Avg | 2.9779E+03 | 1.5781E+03 | 1.8813E+03 | 1.4879E+03 |
| | Std | 5.3114E+02 | 7.4236E+01 | 1.0822E+02 | 1.3059E+02 |
| F12 | Min | 6.4145E+08 | 2.6219E+07 | 6.3167E+07 | 1.3154E+07 |
| | Avg | 1.0885E+09 | 4.7882E+07 | 2.0069E+08 | 3.3967E+07 |
| | Std | 2.6347E+08 | 2.1145E+07 | 7.0311E+07 | 1.4797E+07 |
| F13 | Min | 3.8512E+07 | 2.2719E+05 | 1.0132E+06 | 6.0886E+04 |
| | Avg | 1.0187E+08 | 8.3743E+05 | 6.2779E+06 | 2.1344E+05 |
| | Std | 5.7538E+07 | 6.5209E+05 | 7.5739E+06 | 2.2413E+05 |
| F14 | Min | 2.2823E+05 | 8.5110E+04 | 4.0584E+04 | 1.6070E+04 |
| | Avg | 8.7263E+05 | 5.0388E+05 | 1.3693E+05 | 1.3413E+05 |
| | Std | 4.7572E+05 | 2.9648E+05 | 5.4611E+04 | 8.5056E+04 |
| F15 | Min | 1.2095E+06 | 5.0314E+04 | 6.0063E+04 | 1.8875E+04 |
| | Avg | 5.9296E+06 | 2.2444E+05 | 2.6860E+05 | 4.8664E+04 |
| | Std | 4.8641E+06 | 2.4641E+05 | 2.5129E+05 | 2.1482E+04 |
| F16 | Min | 3.5814E+03 | 2.4757E+03 | 2.9499E+03 | 2.3811E+03 |
| | Avg | 4.6814E+03 | 3.2775E+03 | 3.7877E+03 | 3.0954E+03 |
| | Std | 4.9232E+02 | 3.6609E+02 | 5.6897E+02 | 4.1030E+02 |
| F17 | Min | 3.3792E+03 | 2.6216E+03 | 3.0215E+03 | 2.7166E+03 |
| | Avg | 3.7703E+03 | 2.9528E+03 | 3.4059E+03 | 2.9606E+03 |
| | Std | 2.5021E+02 | 2.6651E+02 | 3.1684E+02 | 1.9923E+02 |
| F18 | Min | 3.2424E+06 | 8.1453E+05 | 8.1007E+05 | 1.5310E+05 |
| | Avg | 7.0514E+06 | 4.6193E+06 | 1.5656E+06 | 1.3970E+06 |
| | Std | 2.9777E+06 | 3.3259E+06 | 9.5504E+05 | 1.1457E+06 |
| F19 | Min | 2.0696E+06 | 1.1930E+04 | 2.4517E+04 | 4.4091E+03 |
| | Avg | 4.0114E+06 | 8.6761E+04 | 2.7574E+05 | 2.5381E+04 |
| | Std | 2.5494E+06 | 7.4527E+04 | 3.3317E+05 | 1.9620E+04 |
| F20 | Min | 3.2181E+03 | 2.6229E+03 | 2.6406E+03 | 2.4998E+03 |
| | Avg | 3.4996E+03 | 3.1417E+03 | 3.2717E+03 | 3.0325E+03 |
| | Std | 2.2515E+02 | 3.3210E+02 | 3.4044E+02 | 2.6853E+02 |
| F21 | Min | 2.6926E+03 | 2.4298E+03 | 2.6541E+03 | 2.3792E+03 |
| | Avg | 2.7567E+03 | 2.5113E+03 | 2.6903E+03 | 2.4503E+03 |
| | Std | 4.3726E+01 | 5.7936E+01 | 2.5168E+01 | 3.5139E+01 |
| F22 | Min | 3.2955E+03 | 8.6472E+03 | 1.3496E+04 | 7.8579E+03 |
| | Avg | 1.3487E+04 | 1.0069E+04 | 1.5109E+04 | 9.2700E+03 |
| | Std | 5.0832E+03 | 8.8145E+02 | 1.0637E+03 | 8.5983E+02 |
| F23 | Min | 3.1260E+03 | 2.8921E+03 | 3.0990E+03 | 2.8408E+03 |
| | Avg | 3.1992E+03 | 2.9279E+03 | 3.1465E+03 | 2.8862E+03 |
| | Std | 4.4414E+01 | 3.0486E+01 | 3.6159E+01 | 3.3102E+01 |
| F24 | Min | 3.3135E+03 | 3.0563E+03 | 3.2794E+03 | 3.0159E+03 |
| | Avg | 3.3580E+03 | 3.0961E+03 | 3.3223E+03 | 3.0519E+03 |
| | Std | 2.9522E+01 | 3.3142E+01 | 3.2887E+01 | 3.7292E+01 |
| F25 | Min | 3.4077E+03 | 3.0950E+03 | 3.3067E+03 | 3.0471E+03 |
| | Avg | 3.5863E+03 | 3.2276E+03 | 3.4021E+03 | 3.1440E+03 |
| | Std | 1.2552E+02 | 8.9607E+01 | 7.8371E+01 | 6.2034E+01 |
| F26 | Min | 8.1073E+03 | 5.5070E+03 | 7.4362E+03 | 4.7936E+03 |
| | Avg | 8.5829E+03 | 5.9440E+03 | 8.0917E+03 | 5.5319E+03 |
| | Std | 3.8883E+02 | 3.1599E+02 | 4.3166E+02 | 5.2125E+02 |
| F27 | Min | 3.5133E+03 | 3.3665E+03 | 3.4427E+03 | 3.3330E+03 |
| | Avg | 3.6260E+03 | 3.4337E+03 | 3.5473E+03 | 3.4423E+03 |
| | Std | 1.1981E+02 | 6.3436E+01 | 6.0396E+01 | 6.2900E+01 |
| F28 | Min | 3.8280E+03 | 3.4352E+03 | 3.5027E+03 | 3.3546E+03 |
| | Avg | 4.3097E+03 | 3.6739E+03 | 3.7625E+03 | 3.5227E+03 |
| | Std | 5.0512E+02 | 1.6768E+02 | 1.5463E+02 | 1.1589E+02 |
| F29 | Min | 4.9456E+03 | 4.0244E+03 | 4.4871E+03 | 4.0078E+03 |
| | Avg | 5.6957E+03 | 4.5094E+03 | 5.0447E+03 | 4.3459E+03 |
| | Std | 6.5240E+02 | 3.2636E+02 | 3.5367E+02 | 2.6737E+02 |
| F30 | Min | 4.7537E+07 | 7.2379E+06 | 1.1101E+07 | 4.2982E+06 |
| | Avg | 1.0429E+08 | 1.2192E+07 | 3.7042E+07 | 9.7981E+06 |
| | Std | 4.1100E+07 | 3.9284E+06 | 1.7023E+07 | 3.5740E+06 |

Table A4.

Statistical results of MESBOA and its derived algorithms on the CEC 2017 test suite with 100 dimensions.

| No. | Index | SBOA | MSBOA | ESBOA | MESBOA |
|---|---|---|---|---|---|
| F1 | Best | 3.2980E+10 | 5.3269E+09 | 1.3690E+10 | 2.4318E+09 |
| | Avg | 4.2816E+10 | 1.3065E+10 | 2.4107E+10 | 5.3457E+09 |
| | Std | 5.8280E+09 | 5.6498E+09 | 5.2249E+09 | 2.6161E+09 |
| F3 | Best | 3.3304E+05 | 2.7628E+05 | 2.7540E+05 | 2.4978E+05 |
| | Avg | 4.2239E+05 | 3.1456E+05 | 3.6654E+05 | 3.0379E+05 |
| | Std | 6.7452E+04 | 2.9059E+04 | 5.0303E+04 | 3.2432E+04 |
| F4 | Best | 3.1605E+03 | 1.2686E+03 | 2.3453E+03 | 1.0421E+03 |
| | Avg | 4.9843E+03 | 1.7801E+03 | 2.8980E+03 | 1.2898E+03 |
| | Std | 1.2147E+03 | 3.1101E+02 | 3.9162E+02 | 1.8028E+02 |
| F5 | Best | 1.5227E+03 | 9.0219E+02 | 1.3225E+03 | 8.5788E+02 |
| | Avg | 1.6376E+03 | 1.0772E+03 | 1.5029E+03 | 9.5578E+02 |
| | Std | 9.0749E+01 | 1.3021E+02 | 7.9265E+01 | 7.7632E+01 |
| F6 | Best | 6.5335E+02 | 6.3164E+02 | 6.4098E+02 | 6.2682E+02 |
| | Avg | 6.6957E+02 | 6.4013E+02 | 6.5683E+02 | 6.3255E+02 |
| | Std | 8.6382E+00 | 4.6371E+00 | 7.9537E+00 | 3.5151E+00 |
| F7 | Best | 2.2647E+03 | 1.7474E+03 | 1.9981E+03 | 1.3484E+03 |
| | Avg | 2.5539E+03 | 2.0686E+03 | 2.2654E+03 | 1.5934E+03 |
| | Std | 1.2597E+02 | 2.0011E+02 | 1.4981E+02 | 1.0417E+02 |
| F8 | Best | 1.8248E+03 | 1.2802E+03 | 1.7357E+03 | 1.1585E+03 |
| | Avg | 1.9418E+03 | 1.4022E+03 | 1.8512E+03 | 1.2386E+03 |
| | Std | 7.6470E+01 | 1.0474E+02 | 6.7080E+01 | 4.7990E+01 |
| F9 | Best | 4.5050E+04 | 1.4034E+04 | 2.3691E+04 | 8.3892E+03 |
| | Avg | 5.6746E+04 | 2.0006E+04 | 4.0845E+04 | 1.3159E+04 |
| | Std | 6.4116E+03 | 3.3595E+03 | 7.1214E+03 | 1.9466E+03 |
| F10 | Best | 2.8668E+04 | 1.4079E+04 | 2.7618E+04 | 1.4870E+04 |
| | Avg | 3.0919E+04 | 1.6575E+04 | 2.9801E+04 | 1.7204E+04 |
| | Std | 1.0165E+03 | 1.6416E+03 | 1.0419E+03 | 1.1994E+03 |
| F11 | Best | 3.1428E+04 | 6.1680E+03 | 1.5576E+04 | 3.9943E+03 |
| | Avg | 5.2745E+04 | 9.4430E+03 | 2.6609E+04 | 6.3384E+03 |
| | Std | 1.1647E+04 | 2.9734E+03 | 7.1705E+03 | 1.6039E+03 |
| F12 | Best | 5.7051E+09 | 1.9982E+08 | 1.7899E+09 | 1.4451E+08 |
| | Avg | 8.9670E+09 | 5.9354E+08 | 2.6942E+09 | 4.3576E+08 |
| | Std | 1.7426E+09 | 5.4524E+08 | 6.4773E+08 | 2.5353E+08 |
| F13 | Best | 2.6843E+08 | 1.1919E+05 | 1.1260E+07 | 1.3883E+05 |
| | Avg | 5.5023E+08 | 3.2892E+06 | 4.4907E+07 | 2.7317E+06 |
| | Std | 1.6736E+08 | 3.3607E+06 | 2.1978E+07 | 2.6033E+06 |
| F14 | Best | 2.8949E+06 | 8.8110E+05 | 1.0196E+06 | 4.8300E+05 |
| | Avg | 9.2366E+06 | 3.9241E+06 | 2.7268E+06 | 1.4325E+06 |
| | Std | 4.8510E+06 | 2.4508E+06 | 1.1503E+06 | 5.8828E+05 |
| F15 | Best | 3.3748E+07 | 1.0741E+05 | 8.8774E+05 | 3.8257E+04 |
| | Avg | 9.9888E+07 | 1.2207E+06 | 3.8167E+06 | 4.2459E+05 |
| | Std | 4.1774E+07 | 1.5002E+06 | 2.8864E+06 | 1.1653E+06 |
| F16 | Best | 9.3623E+03 | 4.8568E+03 | 8.3591E+03 | 4.7741E+03 |
| | Avg | 1.0123E+04 | 6.0638E+03 | 9.2434E+03 | 5.6919E+03 |
| | Std | 5.8862E+02 | 6.6720E+02 | 4.9920E+02 | 6.0055E+02 |
| F17 | Best | 6.9158E+03 | 4.5616E+03 | 4.9505E+03 | 3.7788E+03 |
| | Avg | 7.7357E+03 | 5.0284E+03 | 6.6494E+03 | 4.4434E+03 |
| | Std | 4.4106E+02 | 3.3799E+02 | 6.0683E+02 | 4.2674E+02 |
| F18 | Best | 6.1337E+06 | 1.2209E+06 | 1.8866E+06 | 8.1386E+05 |
| | Avg | 1.2822E+07 | 4.6714E+06 | 4.3101E+06 | 2.8206E+06 |
| | Std | 5.2998E+06 | 1.7391E+06 | 2.0198E+06 | 1.4860E+06 |
| F19 | Best | 6.0881E+07 | 7.7707E+05 | 2.3783E+06 | 2.5206E+05 |
| | Avg | 1.1988E+08 | 2.6216E+06 | 6.5689E+06 | 8.8844E+05 |
| | Std | 4.5012E+07 | 1.4133E+06 | 2.9252E+06 | 5.1209E+05 |
| F20 | Best | 6.2813E+03 | 3.7534E+03 | 5.3885E+03 | 3.8666E+03 |
| | Avg | 6.8889E+03 | 4.8917E+03 | 6.3452E+03 | 4.7465E+03 |
| | Std | 3.9301E+02 | 6.4881E+02 | 5.9066E+02 | 5.2602E+02 |
| F21 | Best | 3.3687E+03 | 2.7580E+03 | 3.2624E+03 | 2.6685E+03 |
| | Avg | 3.4851E+03 | 2.8750E+03 | 3.3326E+03 | 2.7679E+03 |
| | Std | 6.5771E+01 | 5.5995E+01 | 3.3046E+01 | 6.3311E+01 |
| F22 | Best | 3.1014E+04 | 1.5486E+04 | 2.9022E+04 | 1.7083E+04 |
| | Avg | 3.3321E+04 | 1.9207E+04 | 3.2578E+04 | 1.9356E+04 |
| | Std | 1.0935E+03 | 1.8889E+03 | 1.2791E+03 | 1.7042E+03 |
| F23 | Best | 3.8458E+03 | 3.2380E+03 | 3.7296E+03 | 3.1954E+03 |
| | Avg | 3.9921E+03 | 3.3419E+03 | 3.8750E+03 | 3.3033E+03 |
| | Std | 8.6555E+01 | 4.2269E+01 | 6.7595E+01 | 6.0247E+01 |
| F24 | Best | 4.4612E+03 | 3.6671E+03 | 4.3180E+03 | 3.6717E+03 |
| | Avg | 4.5970E+03 | 3.8102E+03 | 4.4934E+03 | 3.7765E+03 |
| | Std | 9.2196E+01 | 7.8570E+01 | 9.0759E+01 | 6.9660E+01 |
| F25 | Best | 5.5350E+03 | 3.8894E+03 | 4.6325E+03 | 3.8613E+03 |
| | Avg | 6.4021E+03 | 4.5753E+03 | 5.4308E+03 | 4.1119E+03 |
| | Std | 5.9407E+02 | 3.9784E+02 | 5.0231E+02 | 2.3349E+02 |
| F26 | Best | 1.7376E+04 | 9.6698E+03 | 1.6441E+04 | 9.5383E+03 |
| | Avg | 1.8534E+04 | 1.1703E+04 | 1.7623E+04 | 1.0801E+04 |
| | Std | 8.0071E+02 | 1.2552E+03 | 6.6396E+02 | 7.3785E+02 |
| F27 | Best | 4.1218E+03 | 3.5785E+03 | 3.8921E+03 | 3.5825E+03 |
| | Avg | 4.3584E+03 | 3.7467E+03 | 4.0526E+03 | 3.7640E+03 |
| | Std | 1.5682E+02 | 1.1397E+02 | 1.1497E+02 | 1.4854E+02 |
| F28 | Best | 7.4043E+03 | 4.2100E+03 | 5.4099E+03 | 4.2733E+03 |
| | Avg | 8.6495E+03 | 5.5227E+03 | 6.9081E+03 | 5.0133E+03 |
| | Std | 7.2869E+02 | 9.2520E+02 | 1.0707E+03 | 6.2226E+02 |
| F29 | Best | 9.6161E+03 | 6.8033E+03 | 8.7405E+03 | 6.6645E+03 |
| | Avg | 1.1001E+04 | 7.7497E+03 | 9.7084E+03 | 7.4722E+03 |
| | Std | 8.5091E+02 | 3.8334E+02 | 4.2583E+02 | 4.8964E+02 |
| F30 | Best | 1.9568E+08 | 9.2708E+06 | 3.4986E+07 | 5.9545E+06 |
| | Avg | 3.6528E+08 | 2.4810E+07 | 6.9686E+07 | 1.6935E+07 |
| | Std | 1.4347E+08 | 1.0887E+07 | 3.0132E+07 | 9.2897E+06 |

Table A5.

Statistical results of MESBOA and the comparison algorithms on the CEC 2022 test suite with 10 dimensions.

| No. | Index | MESBOA | SBOA | AE | LSHADE-SPACMA | EO | GLS-RIME | CFOA | ISGTOA | RBMO | ESLPSO |
|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | Min | 3.0008E+02 | 5.5352E+02 | 5.1390E+02 | 3.0422E+02 | 8.3144E+02 | 3.2002E+02 | 4.2691E+02 | 8.9581E+02 | 5.3312E+02 | 1.4101E+03 |
| | Avg | 3.1454E+02 | 1.4071E+03 | 8.0759E+02 | 3.3737E+02 | 2.9846E+03 | 5.1977E+02 | 1.7090E+03 | 2.0241E+03 | 1.3024E+03 | 2.7115E+03 |
| | Std | 1.3722E+01 | 6.0831E+02 | 2.2829E+02 | 5.0808E+01 | 1.6190E+03 | 2.0288E+02 | 7.7035E+02 | 1.1310E+03 | 5.5710E+02 | 8.2496E+02 |
| F2 | Min | 4.0086E+02 | 4.0601E+02 | 4.0003E+02 | 4.0049E+02 | 4.0762E+02 | 4.0054E+02 | 4.0266E+02 | 4.0006E+02 | 4.0055E+02 | 4.0026E+02 |
| | Avg | 4.1401E+02 | 4.1381E+02 | 4.0334E+02 | 4.0680E+02 | 4.1322E+02 | 4.1070E+02 | 4.2983E+02 | 4.1291E+02 | 4.1438E+02 | 4.0746E+02 |
| | Std | 1.9802E+01 | 1.5470E+01 | 3.6336E+00 | 2.0036E+00 | 1.1389E+01 | 1.6317E+01 | 2.2947E+01 | 1.9173E+01 | 1.9443E+01 | 2.4406E+00 |
| F3 | Min | 6.0009E+02 | 6.0092E+02 | 6.0010E+02 | 6.0079E+02 | 6.0047E+02 | 6.0029E+02 | 6.0831E+02 | 6.0023E+02 | 6.0029E+02 | 6.0027E+02 |
| | Avg | 6.0021E+02 | 6.0350E+02 | 6.0022E+02 | 6.0131E+02 | 6.0149E+02 | 6.0168E+02 | 6.1368E+02 | 6.0083E+02 | 6.0133E+02 | 6.0052E+02 |
| | Std | 7.1382E−02 | 1.3381E+00 | 7.7883E−02 | 3.1576E−01 | 7.5906E−01 | 8.3931E−01 | 3.2778E+00 | 4.1407E−01 | 1.1305E+00 | 1.2418E−01 |
| F4 | Min | 8.0200E+02 | 8.0797E+02 | 8.0629E+02 | 8.2205E+02 | 8.0617E+02 | 8.0931E+02 | 8.0659E+02 | 8.1427E+02 | 8.0465E+02 | 8.2239E+02 |
| | Avg | 8.1592E+02 | 8.2306E+02 | 8.2573E+02 | 8.3256E+02 | 8.1799E+02 | 8.2125E+02 | 8.1999E+02 | 8.3406E+02 | 8.2102E+02 | 8.3557E+02 |
| | Std | 6.6586E+00 | 7.5038E+00 | 7.9831E+00 | 5.3833E+00 | 5.9919E+00 | 5.8839E+00 | 7.9805E+00 | 8.3771E+00 | 9.6320E+00 | 5.5557E+00 |
| F5 | Min | 9.0000E+02 | 9.0120E+02 | 9.0001E+02 | 9.0017E+02 | 9.0025E+02 | 9.0037E+02 | 9.1103E+02 | 9.0009E+02 | 9.0009E+02 | 9.0009E+02 |
| | Avg | 9.0009E+02 | 9.0615E+02 | 9.0004E+02 | 9.0095E+02 | 9.0394E+02 | 9.0513E+02 | 9.6060E+02 | 9.0055E+02 | 9.0440E+02 | 9.0016E+02 |
| | Std | 1.0028E−01 | 5.0025E+00 | 5.0214E−02 | 6.4519E−01 | 6.5938E+00 | 6.6883E+00 | 3.3360E+01 | 4.7008E−01 | 4.9037E+00 | 5.0318E−02 |
| F6 | Min | 1.9415E+03 | 6.5012E+03 | 1.9615E+03 | 1.8758E+03 | 1.9105E+03 | 1.9400E+03 | 1.8877E+03 | 2.2054E+03 | 2.3819E+03 | 3.0471E+03 |
| | Avg | 4.0973E+03 | 5.3346E+04 | 3.1572E+03 | 2.0856E+03 | 5.3879E+03 | 7.6145E+03 | 3.5093E+03 | 1.0229E+04 | 6.4031E+03 | 9.9574E+03 |
| | Std | 1.9009E+03 | 4.1672E+04 | 1.0221E+03 | 1.3273E+02 | 2.4792E+03 | 1.0724E+04 | 1.6457E+03 | 7.5371E+03 | 4.2630E+03 | 5.0076E+03 |
| F7 | Min | 2.0173E+03 | 2.0198E+03 | 2.0263E+03 | 2.0245E+03 | 2.0138E+03 | 2.0119E+03 | 2.0202E+03 | 2.0291E+03 | 2.0224E+03 | 2.0270E+03 |
| | Avg | 2.0237E+03 | 2.0346E+03 | 2.0424E+03 | 2.0376E+03 | 2.0266E+03 | 2.0245E+03 | 2.0410E+03 | 2.0405E+03 | 2.0291E+03 | 2.0395E+03 |
| | Std | 2.9332E+00 | 6.9307E+00 | 7.0220E+00 | 5.4251E+00 | 4.9229E+00 | 4.5714E+00 | 9.4837E+00 | 6.4143E+00 | 6.0680E+00 | 6.3562E+00 |
| F8 | Min | 2.2020E+03 | 2.2170E+03 | 2.2247E+03 | 2.2193E+03 | 2.2122E+03 | 2.2058E+03 | 2.2215E+03 | 2.2219E+03 | 2.2109E+03 | 2.2143E+03 |
| | Avg | 2.2213E+03 | 2.2280E+03 | 2.2298E+03 | 2.2276E+03 | 2.2269E+03 | 2.2238E+03 | 2.2269E+03 | 2.2310E+03 | 2.2260E+03 | 2.2285E+03 |
| | Std | 6.3528E+00 | 3.9364E+00 | 1.9205E+00 | 2.1586E+00 | 3.7518E+00 | 4.9446E+00 | 3.1650E+00 | 3.1939E+00 | 5.0430E+00 | 3.8392E+00 |
| F9 | Min | 2.5293E+03 | 2.5294E+03 | 2.5293E+03 | 2.5293E+03 | 2.5293E+03 | 2.5293E+03 | 2.5300E+03 | 2.5293E+03 | 2.5293E+03 | 2.5293E+03 |
| | Avg | 2.5293E+03 | 2.5300E+03 | 2.5294E+03 | 2.5293E+03 | 2.5313E+03 | 2.5299E+03 | 2.5485E+03 | 2.5295E+03 | 2.5306E+03 | 2.5293E+03 |
| | Std | 2.8182E−02 | 5.5915E−01 | 7.6917E−02 | 2.2856E−02 | 3.4659E+00 | 1.0896E+00 | 1.6445E+01 | 2.7927E−01 | 6.8452E+00 | 1.5645E−03 |
| F10 | Min | 2.5003E+03 | 2.5004E+03 | 2.5004E+03 | 2.5004E+03 | 2.5002E+03 | 2.5003E+03 | 2.5004E+03 | 2.5004E+03 | 2.5003E+03 | 2.5003E+03 |
| | Avg | 2.5155E+03 | 2.5217E+03 | 2.5005E+03 | 2.5005E+03 | 2.5239E+03 | 2.5121E+03 | 2.5014E+03 | 2.5284E+03 | 2.5162E+03 | 2.5279E+03 |
| | Std | 3.9134E+01 | 4.7554E+01 | 7.8479E−02 | 7.9151E−02 | 4.7604E+01 | 3.5340E+01 | 2.0170E+00 | 5.1316E+01 | 4.0826E+01 | 5.5774E+01 |
| F11 | Min | 2.6004E+03 | 2.6578E+03 | 2.9200E+03 | 2.6417E+03 | 2.7296E+03 | 2.6739E+03 | 2.7942E+03 | 2.6411E+03 | 2.7474E+03 | 2.6188E+03 |
| | Avg | 2.7765E+03 | 2.8522E+03 | 2.9269E+03 | 2.7351E+03 | 2.9018E+03 | 2.9883E+03 | 3.5609E+03 | 2.8197E+03 | 2.9310E+03 | 2.8669E+03 |
| | Std | 1.7604E+02 | 1.4014E+02 | 5.1499E+00 | 1.1136E+02 | 1.3348E+02 | 1.3768E+02 | 2.8950E+02 | 1.1526E+02 | 1.7076E+02 | 1.1558E+02 |
| F12 | Min | 2.8607E+03 | 2.8611E+03 | 2.8642E+03 | 2.8628E+03 | 2.8626E+03 | 2.8597E+03 | 2.8634E+03 | 2.8611E+03 | 2.8586E+03 | 2.8594E+03 |
| | Avg | 2.8632E+03 | 2.8641E+03 | 2.8649E+03 | 2.8645E+03 | 2.8645E+03 | 2.8649E+03 | 2.8673E+03 | 2.8638E+03 | 2.8635E+03 | 2.8631E+03 |
| | Std | 1.1067E+00 | 9.7370E−01 | 1.7525E−01 | 6.9615E−01 | 1.0259E+00 | 1.7099E+00 | 2.1460E+00 | 1.1296E+00 | 1.9248E+00 | 1.5283E+00 |

Table A6.

Statistical results of MESBOA and the comparison algorithms on the CEC 2022 test suite with 20 dimensions.

| No. | Index | MESBOA | SBOA | AE | LSHADE-SPACMA | EO | GLS-RIME | CFOA | ISGTOA | RBMO | ESLPSO |
|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | Min | 8.3858E+02 | 5.0359E+03 | 5.0137E+03 | 4.8345E+02 | 6.0819E+03 | 1.8946E+03 | 6.0716E+03 | 9.4921E+03 | 6.1834E+03 | 1.0408E+04 |
| | Avg | 1.6891E+03 | 1.4221E+04 | 1.0012E+04 | 3.7849E+03 | 1.8233E+04 | 7.5399E+03 | 1.1330E+04 | 1.5542E+04 | 1.3538E+04 | 1.6375E+04 |