1. Introduction

Genetic algorithms (GAs) are versatile metaheuristics widely employed to solve complex optimization problems across domains such as engineering design, logistics, and hyperparameter tuning [1,2]. Their effectiveness relies on balancing exploration and exploitation through genetic operators like mutation and crossover. However, GA performance is highly sensitive to the manual configuration of these operators’ rates, which often leads to suboptimal solutions in dynamic or high-dimensional search spaces [3]. Traditional static parameter settings fail to adapt to evolving problem landscapes, necessitating labor-intensive expert intervention. This limitation underscores the need for autonomous strategies that dynamically tune parameters during runtime.

Online parameter tuning has emerged as a promising paradigm to reduce reliance on manual expertise. By leveraging real-time data from the optimization process, these strategies adjust the mutation and crossover rates to enhance solution quality [4]. Recent studies integrate machine learning (ML) components, such as shallow neural networks (SNNs) and support vector regression, to predict optimal parameters iteratively [5,6]. While these approaches improve adaptability, they often incur high computational costs or lack scalability in large-scale scenarios [7]. Furthermore, existing methods struggle to maintain efficiency in dynamic environments where rapid parameter adaptation is critical [8].

The dynamic adjustment of mutation and crossover rates is critical for GAs to achieve robust optimization. Four key reasons justify this necessity:

Population Diversity Maintenance: Crossover combines genetic material from parents, preserving diversity to avoid homogeneity [9,10]. Without effective crossover, exploration stagnates, trapping solutions in local optima [2].

Search Space Exploration: Mutation introduces random perturbations, enabling the discovery of unexplored regions [11]. This helps prevent premature convergence and mitigates the risk of entrapment in local optima [12].

Premature Convergence Prevention: Rapid population convergence to similar solutions necessitates dynamic rates to reintroduce diversity [13]. Adaptive mutation and crossover rates counteract stagnation by balancing exploitation and exploration [14].

Adaptation to Complex Fitness Landscapes: Non-convex, multimodal landscapes require continuous parameter adaptation to navigate shifting optima [9]. Studies advocate adaptive probabilities to enhance GA resilience in such scenarios [10].

This dynamic equilibrium ensures effective exploration–exploitation trade-offs, particularly in applications like hyperparameter optimization [2] and real-time systems [15].

Despite these benefits, dynamic optimization environments pose significant challenges. High-dimensional and non-linear landscapes create vast search spaces that complicate the identification of global optima [16]. Furthermore, the dynamic nature of these problems, where constraints or objectives vary over time, demands real-time adaptability [17]. Machine learning-based tuning often introduces additional computational overhead, increasing runtime costs and impeding real-time deployment [6]. Another major concern lies in ensuring generalization and robustness, as noisy data and operational variability can degrade model reliability [18]. Moreover, the sensitivity of hyperparameters makes manual tuning of machine learning components impractical, particularly in large-scale scenarios [19]. Finally, maintaining an effective balance between exploration and exploitation remains a persistent issue, often leading to premature convergence or insufficient exploitation in dynamic regimes [20]. These interconnected challenges highlight the need for efficient and scalable machine learning-integrated strategies that can ensure robust genetic algorithm performance [21].

While machine learning techniques offer promising avenues for dynamic parameter tuning, their integration into GAs introduces notable challenges. Many existing ML components, particularly deep neural networks, incur substantial computational overhead due to complex architectures and high-precision computations [7,22]. Shallow learning alternatives, though more efficient, often struggle to generalize across diverse fitness landscapes or require frequent retraining, undermining scalability in large-scale optimization [6,23]. For instance, support vector regression (SVR) and basic SNNs exhibit degraded performance in high-dimensional spaces due to their sensitivity to hyperparameter settings [10,12]. Additionally, real-time deployment in dynamic environments demands not only accuracy but also rapid inference, a requirement that conventional floating-point models often fail to meet [8]. These limitations highlight the need for lightweight, adaptive ML frameworks that harmonize efficiency with robustness.

To address these challenges, this work introduces quantized shallow neural networks (SNNs) for online parameter tuning in GAs. By integrating Quantization-aware Training (QAT) and Post-training Quantization (PTQ), the proposed SNNs reduce memory usage by 75% and inference latency by 40% compared to traditional 32-bit models [22,24]. Unlike deep learning frameworks, SNNs rely on streamlined architectures with fewer layers, enabling faster retraining cycles without sacrificing predictive accuracy [6]. This approach dynamically adjusts mutation and crossover rates using runtime-generated data, ensuring adaptability to evolving search landscapes while minimizing computational costs [4]. The quantization process further enhances hardware compatibility, making the framework suitable for resource-constrained environments such as edge devices [14]. By bridging metaheuristics with efficient ML, this study advances hybrid optimization methodologies, offering a scalable solution for real-world applications like logistics and energy systems [16,21].

This paper evaluates the proposed framework on 15 continuous benchmark functions, including multimodal and non-convex landscapes such as Rosenbrock, Schwefel, and Ackley. The results demonstrate superior performance in solution quality, stability, and efficiency compared to SVR and non-quantized SNNs. The remainder of the paper is structured as follows: Section 2 details the methodology, including the GA workflow, SNN architecture, and quantization techniques; Section 3 presents experimental results and comparative analyses; Section 4 discusses their implications; and Section 5 concludes and outlines future research directions. By prioritizing computational efficiency without compromising adaptability, this work expands the applicability of shallow learning in evolutionary computation, fostering robust optimization systems for dynamic and large-scale problems [6,7].

2. Methodology

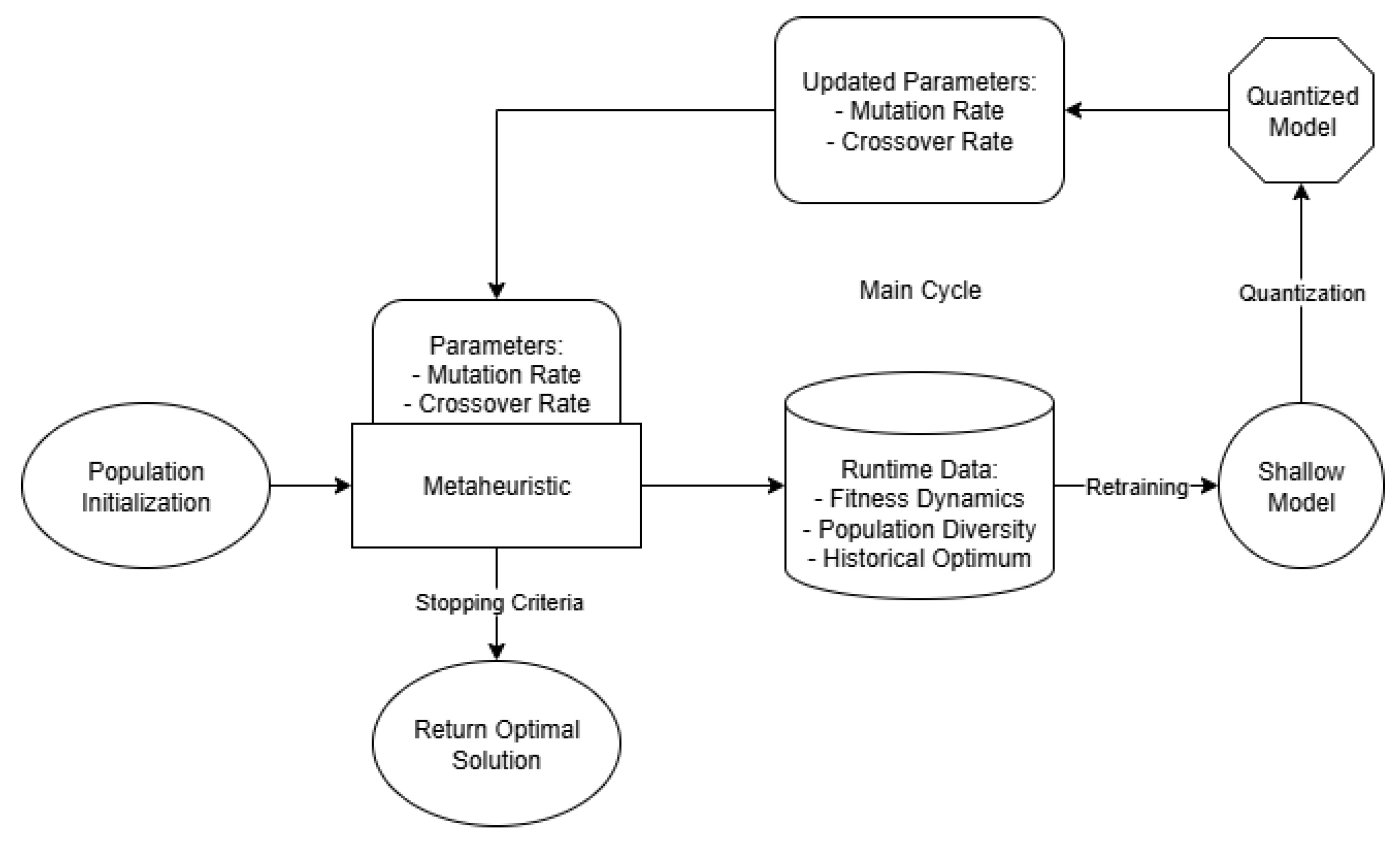

The proposed methodology integrates a quantized shallow neural network (SNN) into a genetic algorithm (GA) in order to dynamically adjust mutation and crossover rates during optimization. Unlike traditional GAs with static or heuristically decayed parameters, this approach introduces an adaptive control mechanism, where the SNN acts as a surrogate decision-maker trained online. The framework operates cyclically, alternating between GA execution, runtime data collection, periodic SNN retraining, and adaptive parameter prediction.

The workflow is evaluated on a set of 15 continuous benchmark functions of varying modality, separability, and conditioning, including well-established cases such as Sphere, Rosenbrock, Schwefel, and Ackley (see Section 2.2). This diverse set of test functions allows us to demonstrate the generalizability of the adaptive parameter control strategy.

2.1. Algorithm Workflow

The algorithm consists of four interconnected stages, each contributing to the balance between exploration and exploitation. An overview of the proposed workflow is depicted in Figure 1, which outlines the interaction between the four stages.

2.1.1. Genetic Algorithm Execution

The GA initializes a population of size N within predefined search bounds. Each individual is evaluated with the objective function to be minimized.

Parent selection is performed via tournament selection of size k, a choice justified by its balance between selective pressure and preservation of population diversity. For sufficiently large N, the expected takeover time of the fittest individual under tournament selection is approximately $t^{*} \approx \frac{1}{\ln k}\left(\ln N + \ln \ln N\right)$ generations. Takeover time therefore grows only logarithmically with the population size and shrinks as k increases, so a moderate tournament size balances convergence speed against premature stagnation.
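For reference, the classical takeover-time approximation for tournament selection with tournament size k and population size N is t* ≈ (ln N + ln ln N) / ln k. The minimal sketch below evaluates it and implements one tournament; it is an illustration, not the authors' code. It assumes minimization and draws contestants without replacement, and `tournament_select` and `takeover_time` are hypothetical helper names.

```python
import math
import random


def tournament_select(fitness, k, rng):
    """Return the index of the winner of one size-k tournament (minimization).

    Contestants are drawn without replacement, so k must not exceed len(fitness).
    """
    contestants = rng.sample(range(len(fitness)), k)
    return min(contestants, key=lambda i: fitness[i])


def takeover_time(n_pop, k):
    """Approximate generations until the best individual fills the population
    under tournament selection alone: (ln N + ln ln N) / ln k."""
    return (math.log(n_pop) + math.log(math.log(n_pop))) / math.log(k)


rng = random.Random(0)
# With k equal to the population size, every individual competes and the best (index 3) wins
winner = tournament_select([5.0, 3.0, 9.0, 1.0], k=4, rng=rng)
for k in (2, 4, 8):
    print(k, round(takeover_time(100, k), 2))
```

Doubling the tournament size divides the estimate by a growing ln k, which is the sense in which larger k raises selective pressure.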

Recombination is carried out using arithmetic crossover, while Gaussian mutation perturbs individual genes; both the mutation and the crossover rates are adjusted dynamically. The mutation operator guarantees ergodicity of the search process: because Gaussian noise has unbounded support, repeated mutations have a nonzero probability of reaching any neighborhood of any feasible solution.

Fitness values, population diversity metrics, and applied parameter rates are logged at each generation, producing a rich temporal dataset for subsequent learning.
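The per-generation loop described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes minimization, applies crossover to a parent pair with probability p_c and mutation gene-wise with probability p_m, uses no elitism, and measures diversity as the mean per-gene standard deviation. That last metric is a hypothetical choice, since the text does not define its diversity measure; `ga_generation` is likewise a hypothetical name.

```python
import random
import statistics


def sphere(x):
    return sum(v * v for v in x)


def ga_generation(pop, objective, p_m, p_c, sigma, bounds, k, rng):
    """One generation: tournament selection, arithmetic crossover, Gaussian mutation.

    Returns the offspring population and a log record describing the parent population.
    """
    lo, hi = bounds
    fit = [objective(ind) for ind in pop]

    def select():
        contestants = rng.sample(range(len(pop)), k)
        return pop[min(contestants, key=lambda i: fit[i])]

    new_pop = []
    while len(new_pop) < len(pop):
        a, b = select(), select()
        # Arithmetic crossover (parent averaging) with probability p_c
        child = [0.5 * (x + y) for x, y in zip(a, b)] if rng.random() < p_c else list(a)
        # Gene-wise Gaussian mutation with probability p_m, clipped to the bounds
        child = [min(hi, max(lo, g + rng.gauss(0.0, sigma))) if rng.random() < p_m else g
                 for g in child]
        new_pop.append(child)

    # Hypothetical diversity metric: mean per-gene standard deviation
    diversity = statistics.mean(statistics.pstdev(col) for col in zip(*pop))
    record = {"best": min(fit), "diversity": diversity, "p_m": p_m, "p_c": p_c}
    return new_pop, record


rng = random.Random(42)
bounds = (-5.12, 5.12)
pop = [[rng.uniform(*bounds) for _ in range(5)] for _ in range(20)]
log = []  # temporal dataset later used to train the SNN
for gen in range(30):
    pop, rec = ga_generation(pop, sphere, p_m=0.1, p_c=0.8,
                             sigma=0.1 * (bounds[1] - bounds[0]), bounds=bounds, k=3, rng=rng)
    log.append(rec)
```

In the adaptive framework, the fixed `p_m` and `p_c` arguments would be replaced by the SNN's smoothed predictions at each retraining point.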

2.1.2. Runtime Data Collection

2.1.3. Periodic SNN Retraining

At a fixed retraining interval, measured in generations (see Section 2.3), the SNN is retrained on the accumulated dataset. The network architecture comprises the following:

- Input layer: 3 nodes (current mutation rate, current crossover rate, and normalized best fitness).
- Hidden layer: 32 neurons with ReLU activation, with the width chosen via grid search. ReLU is preferred over sigmoid/tanh because it mitigates vanishing gradients and naturally represents piecewise-linear mappings in dynamic systems.
- Output layer: 2 neurons for the mutation and crossover rates, passed through sigmoid activations so that the outputs are bounded in (0, 1).
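The 3-32-2 architecture above can be made concrete with a dependency-free forward pass. This is a minimal sketch, not the authors' model. The weight initialisation (uniform, scaled by fan-in) and the class name `ShallowNet` are assumptions, and training is omitted.

```python
import math
import random


def _sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class ShallowNet:
    """3-32-2 perceptron: ReLU hidden layer, sigmoid outputs bounded in (0, 1)."""

    def __init__(self, n_in=3, n_hidden=32, n_out=2, seed=0):
        rng = random.Random(seed)
        # Uniform initialisation scaled by fan-in (an assumption, not from the paper)
        lim1, lim2 = math.sqrt(6.0 / n_in), math.sqrt(6.0 / n_hidden)
        self.w1 = [[rng.uniform(-lim1, lim1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-lim2, lim2) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def num_params(self):
        return (sum(len(r) for r in self.w1) + len(self.b1)
                + sum(len(r) for r in self.w2) + len(self.b2))

    def forward(self, x):
        # Hidden layer: ReLU(W1 x + b1)
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # Output layer: sigmoid(W2 h + b2) -> (mutation rate, crossover rate)
        return [_sigmoid(sum(w * hj for w, hj in zip(row, h)) + b)
                for row, b in zip(self.w2, self.b2)]


net = ShallowNet()
# Inputs: current mutation rate, current crossover rate, normalized best fitness (illustrative)
p_m_next, p_c_next = net.forward([0.05, 0.8, 0.3])
```

The network has 3·32 + 32 + 32·2 + 2 = 194 parameters, which is what makes frequent retraining inside the GA loop affordable.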

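The training objective is to minimize the mean squared error over the M samples in the current training window:

$$\mathcal{L}(\theta) = \frac{1}{M}\sum_{i=1}^{M} \left\lVert \mathbf{y}_i - \hat{\mathbf{y}}_i(\theta) \right\rVert_2^2,$$

where $\hat{\mathbf{y}}_i(\theta)$ is the predicted (mutation rate, crossover rate) pair for sample i, $\mathbf{y}_i$ is its training target, and θ denotes the network weights. This corresponds to a least-squares regression on the parameter landscape. Under standard assumptions (a smooth loss and a sufficiently small or suitably decaying learning rate), stochastic gradient descent converges to a stationary point of $\mathcal{L}$; because the network is non-convex, this point need not be a global minimum.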

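To reduce overhead, two quantization schemes are considered:

- Quantization-aware Training (QAT) simulates 8-bit operations during training, so that the learned representations are robust to reduced precision.
- Post-training Quantization (PTQ) compresses an already trained model, reducing storage requirements by 75% (8-bit instead of 32-bit weights) while preserving predictive accuracy within 1–2%.

Quantization does not change the number of multiply-accumulate operations in a matrix multiplication, but each 8-bit operand occupies one quarter of the memory of a 32-bit one. This reduces memory traffic and, on hardware with 8-bit integer vector support, can lower the cost of matrix multiplication by up to a factor of 4, which is critical for frequent retraining within the GA loop.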
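The two quantization ideas can be illustrated with symmetric per-tensor int8 quantization. This is a minimal sketch under assumptions: the text does not state whether its scheme is symmetric or asymmetric, per-tensor or per-channel. `fake_quantize` shows the forward-pass rounding that QAT simulates; the straight-through gradient used in QAT backpropagation is not shown. The helper names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~ scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [scale * qi for qi in q]


def fake_quantize(weights):
    """Quantize and immediately dequantize, as simulated in the QAT forward pass."""
    q, scale = quantize_int8(weights)
    return dequantize(q, scale)


weights = [0.31, -1.2, 0.004, 0.9, -0.45]
q, scale = quantize_int8(weights)
# Round-to-nearest keeps each weight within half a quantization step of its original value
max_err = max(abs(w - wq) for w, wq in zip(weights, fake_quantize(weights)))

# Storage for a 3-32-2 network (194 parameters): 4 bytes each in float32, 1 byte in int8
n_params = 194
fp32_bytes, int8_bytes = 4 * n_params, 1 * n_params
reduction = 1 - int8_bytes / fp32_bytes  # 0.75, ignoring the single per-tensor scale
```

The 75% storage figure follows directly from the 32-to-8-bit ratio; latency gains, by contrast, depend on hardware support for integer arithmetic.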

2.1.4. Parameter Integration

The retrained SNN predicts parameter values for subsequent generations, which are clipped to feasible ranges for the mutation and crossover rates. These intervals are consistent with theoretical findings that mutation probabilities that are too small relative to the genome length n fail to maintain sufficient diversity, while crossover probabilities above 0.9 increase destructive disruption of building blocks (schemata). To prevent instability, a momentum term is applied to the clipped predictions, and the resulting smoothed parameters are the rates actually used by the GA. Smoothing bounds the change in each rate between consecutive updates, avoiding abrupt oscillations that could destabilize convergence. Runtime analyses based on drift arguments have shown that dynamic parameter control can reduce the expected runtime of evolutionary algorithms relative to static settings on some problem classes. By aligning mutation and crossover rates with the local fitness landscape, the system aims to increase the probability of escaping local optima and thereby accelerate convergence toward the global optimum.
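The clipping-plus-momentum step can be sketched as follows. This sketch makes several assumptions. The momentum term is taken to be exponential smoothing, p̃_t = β·p̃_{t−1} + (1 − β)·clip(p̂_t). The feasible ranges in `BOUNDS` and the value of β are hypothetical placeholders, not the paper's settings.

```python
def clip(value, lo, hi):
    return max(lo, min(hi, value))


def smooth_rates(prev, predicted, beta, bounds):
    """Clip SNN predictions to feasible ranges, then apply exponential smoothing.

    prev, predicted: dicts with keys "p_m" and "p_c"; bounds: dict of (lo, hi) pairs.
    Smoothed rate = beta * previous + (1 - beta) * clipped prediction.
    """
    out = {}
    for name in ("p_m", "p_c"):
        lo, hi = bounds[name]
        target = clip(predicted[name], lo, hi)
        out[name] = beta * prev[name] + (1.0 - beta) * target
    return out


# Hypothetical feasible ranges and smoothing factor, for illustration only
BOUNDS = {"p_m": (0.01, 0.3), "p_c": (0.5, 0.9)}
rates = {"p_m": 0.05, "p_c": 0.8}
# An extreme prediction is first clipped, then only partially applied
rates_next = smooth_rates(rates, {"p_m": 0.9, "p_c": 0.2}, beta=0.8, bounds=BOUNDS)
```

If the previous rate lies in [lo, hi], the smoothed rate stays in that interval (it is a convex combination), and its change per update is at most (1 − β)(hi − lo). This is the bounded-step property that prevents abrupt oscillations.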

2.2. Benchmark Functions

The framework is evaluated on 15 continuous optimization functions of varying modality, separability, and conditioning, among them Sphere, Rosenbrock, Rastrigin, Schwefel, Ackley, Lévy, Zakharov, Bohachevsky, Powell, and Himmelblau.

All functions are minimized within the same search range, except Himmelblau, which uses its own domain, in line with standard benchmark configurations.
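Several of the named benchmarks can be written in their standard textbook forms as shown below. These definitions are an assumption: the dimensions, shifts, and exact variants used in the experiments are not given in this section, and the remaining functions of the suite are not reproduced here.

```python
import math


def sphere(x):
    return sum(v * v for v in x)


def rosenbrock(x):
    # Narrow curved valley; global minimum 0 at (1, ..., 1)
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))


def rastrigin(x):
    # Highly multimodal; global minimum 0 at the origin
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)


def ackley(x, a=20.0, b=0.2, c=2.0 * math.pi):
    n = len(x)
    s1 = sum(v * v for v in x) / n
    s2 = sum(math.cos(c * v) for v in x) / n
    return -a * math.exp(-b * math.sqrt(s1)) - math.exp(s2) + a + math.e


def himmelblau(x):
    # Two-dimensional only; four global minima, one of them at (3, 2)
    x1, x2 = x
    return (x1 ** 2 + x2 - 11.0) ** 2 + (x1 + x2 ** 2 - 7.0) ** 2
```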

2.3. Implementation Details

The practical implementation of the proposed framework was carefully designed to guarantee both efficiency and reproducibility across diverse optimization problems. The following design choices were empirically validated and are also theoretically justified in terms of convergence speed, stability, and computational feasibility:

The retraining interval is a fixed number of generations, chosen as a trade-off between adaptability and computational overhead. Retraining too frequently (e.g., every 20–50 generations) would lead to high computational costs, while excessively long intervals would cause the shallow neural network (SNN) to lag behind the evolving dynamics of the population. From an information-theoretic perspective, a sliding window of recent generations captures a statistically representative sample of the evolutionary trajectory while still enabling online updates. Moreover, convergence analyses of online learning systems suggest choosing the retraining frequency as a function of the total number of generations T, in order to ensure stability while maintaining adaptivity.
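The interaction between the retraining interval and the sliding window can be sketched with a bounded buffer. In this minimal illustration, the interval of 100 generations and the window of 250 records are hypothetical placeholders, not the values used in the paper, and `RetrainScheduler` is a hypothetical name.

```python
from collections import deque


class RetrainScheduler:
    """Keeps the most recent `window` generation records and signals retraining
    every `interval` generations."""

    def __init__(self, interval, window):
        self.interval = interval
        self.buffer = deque(maxlen=window)  # oldest records are discarded automatically
        self.generation = 0

    def log(self, record):
        self.buffer.append(record)
        self.generation += 1
        return self.generation % self.interval == 0  # True -> retrain the SNN now

    def training_data(self):
        return list(self.buffer)


sched = RetrainScheduler(interval=100, window=250)
retrain_points = [g for g in range(1, 1001) if sched.log({"gen": g})]
```

After 1000 generations, retraining has been triggered ten times, and the buffer holds only generations 751 to 1000. The interval therefore controls overhead, while the window controls how far back the training data reaches.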

The neural network was quantized to 8-bit integer precision. This reduces inference latency by approximately 40% compared to standard 32-bit floating-point models, as observed in our CPU-based experiments. In addition, memory usage is reduced by 75%, allowing larger populations or longer evolutionary runs without additional hardware requirements. Quantization introduces an approximation error in the weight representations that is bounded by half a quantization step per weight (for round-to-nearest within the clipping range), and quantization-aware training keeps the resulting error in the parameter predictions small. This keeps the SNN predictions accurate enough to guide the GA without destabilizing parameter adaptation.

The mutation operator employs Gaussian noise whose standard deviation σ is set proportionally to the width of the search range. This proportional scaling lets the mutation step size adapt to the extent of the search domain. Too small a σ would result in ineffective exploration, while too large a σ could destroy building blocks and slow convergence. According to schema-theorem arguments, the probability of preserving useful schemata increases when mutation step sizes are bounded by approximately 10% of the domain width, which justifies our choice of σ. For recombination, we employ arithmetic crossover with a mixing weight of 0.5, which corresponds to averaging parental genomes. This symmetric operator preserves population mean characteristics and avoids introducing bias toward either parent. Moreover, for independent parents with equal variance, a weight of 0.5 minimizes the variance of the offspring (halving it relative to the parents), contributing to stable convergence trajectories.
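The two operators and the variance argument can be checked numerically. For independent parents with common variance s², a child αx + (1 − α)y has variance (α² + (1 − α)²)s², which is smallest (s²/2) at α = 0.5. The sketch below assumes σ = 0.1 × (hi − lo), reading the "10% of the domain width" remark as the chosen value, and clips mutated genes to the bounds. Both are assumptions, as are the helper names.

```python
import random


def arithmetic_crossover(a, b, alpha=0.5):
    """Child = alpha * a + (1 - alpha) * b, gene by gene; alpha = 0.5 averages the parents."""
    return [alpha * x + (1.0 - alpha) * y for x, y in zip(a, b)]


def gaussian_mutation(x, p_m, bounds, frac=0.1, rng=random):
    """Perturb each gene with probability p_m by N(0, sigma^2), sigma = frac * (hi - lo)."""
    lo, hi = bounds
    sigma = frac * (hi - lo)
    return [min(hi, max(lo, g + rng.gauss(0.0, sigma))) if rng.random() < p_m else g
            for g in x]


def offspring_variance_factor(alpha):
    """Var(child) / Var(parent) for independent parents with equal variance."""
    return alpha ** 2 + (1.0 - alpha) ** 2


# Grid search over alpha confirms the minimum of the variance factor at 0.5
best_alpha = min((i / 100 for i in range(101)), key=offspring_variance_factor)
```

The same calculation also shows the downside: averaging halves offspring variance, so diversity must be restored by mutation, which is one reason the mutation rate is adapted online.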

All experiments were conducted on a CPU cluster equipped with Intel Xeon processors, each with a base frequency of 2.6 GHz and 32 cores. Using a CPU-based environment, instead of GPU acceleration, was a deliberate choice to highlight the computational efficiency of quantized models in resource-constrained settings. Reproducibility was ensured by fixing random seeds for both the GA and SNN components, and by logging all hyperparameters, random states, and intermediate metrics. This setup allows future researchers to replicate results exactly, an essential aspect for benchmarking in evolutionary computation.

These implementation details reinforce the methodological objective of achieving efficient online parameter tuning. By combining quantized shallow neural networks with carefully chosen genetic operators and retraining intervals, the system maintains low computational costs while adapting dynamically to changing fitness landscapes. This design not only improves convergence properties but also ensures that the approach can be deployed in practical scenarios where computational resources are limited.

3. Experimental Results

3.1. Statistical Protocol

The comparative evaluation of algorithms in the presence of heterogeneous benchmark functions requires a methodology that is both scale-invariant and robust to non-normality. Since the metrics reported in Table 1 (best, average, standard deviation, and worst final value) span functions with radically different magnitudes, ranges, and even signs (due to shifts or function definitions), parametric tests based on raw values would be misleading. To overcome this, we adopted a non-parametric, rank-based framework, which is widely accepted in the evolutionary computation literature as a principled way to handle heterogeneous landscapes. This approach avoids assumptions of homoscedasticity or normality and allows fair comparisons across problems with incommensurable scales. The compared methods are SVR, the non-quantized SNN (SNNR), and the two quantized SNNs (PTQ and QAT).

Formally, let $x_{a,f}^{m}$ denote the value of metric $m$ for algorithm $a$ on function $f$. For each $f$ and $m$, we sort the values $\{x_{a,f}^{m}\}_{a}$ in ascending order (since minimization is the goal) and assign ranks $r_{a,f}^{m} \in \{1, \dots, k\}$, where k is the number of algorithms, with average ranks for ties. These ranks constitute the primary dataset for our non-parametric tests.
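The ranking step can be sketched in a few lines. Values are ranked in ascending order (1 = best), and tied values share the average of the positions they occupy. The helper names are hypothetical, and the toy inputs below are illustrative, not results from Table 1.

```python
def rank_with_ties(values):
    """Ranks for minimization (1 = best); tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next value equals the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2.0 + 1.0  # mean of the 1-based positions pos+1 .. end+1
        for j in range(pos, end + 1):
            ranks[order[j]] = avg
        pos = end + 1
    return ranks


def mean_ranks(table):
    """table: rows = functions, columns = algorithms (raw values of one metric)."""
    ranked = [rank_with_ties(row) for row in table]
    n, k = len(table), len(table[0])
    return [sum(r[j] for r in ranked) / n for j in range(k)]


example = rank_with_ties([3.0, 1.0, 1.0, 2.0])  # the two tied best values share rank 1.5
```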

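On these ranks we applied the Friedman test:

$$\chi_F^2 = \frac{12N}{k(k+1)}\left[\sum_{a=1}^{k} R_a^2 - \frac{k(k+1)^2}{4}\right],$$

where $R_a$ is the mean rank of algorithm $a$ over the functions, with $N = 15$ functions and $k = 4$ algorithms. Given the conservative nature of Friedman’s $\chi^2$ approximation in small samples, we employed the Iman–Davenport correction:

$$F_F = \frac{(N-1)\,\chi_F^2}{N(k-1) - \chi_F^2},$$

which follows an F distribution with $k-1$ and $(k-1)(N-1)$ degrees of freedom, i.e., (3, 42), providing a more powerful test.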
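Both statistics can be computed directly from a matrix of within-function ranks: χ²_F = 12N/(k(k+1)) · [Σ R_a² − k(k+1)²/4], and F_F = (N − 1)χ²_F / (N(k − 1) − χ²_F) with (k − 1, (k − 1)(N − 1)) degrees of freedom. The minimal sketch below uses a hypothetical function name and a toy 4 × 3 rank matrix for checking, not the paper's data.

```python
def friedman_iman_davenport(rank_rows):
    """rank_rows: N rows (functions) x k columns (algorithms) of within-row ranks.

    Returns (chi2_F, F_F, (df1, df2)).
    """
    n = len(rank_rows)
    k = len(rank_rows[0])
    mean_ranks = [sum(row[j] for row in rank_rows) / n for j in range(k)]
    chi2 = 12.0 * n / (k * (k + 1)) * (sum(r * r for r in mean_ranks) - k * (k + 1) ** 2 / 4.0)
    denom = n * (k - 1) - chi2
    # Perfect agreement across all rows makes the denominator zero
    f_f = (n - 1) * chi2 / denom if denom > 0 else float("inf")
    return chi2, f_f, (k - 1, (k - 1) * (n - 1))


toy = [[1, 2, 3], [1, 2, 3], [1, 3, 2], [2, 1, 3]]
chi2, f_f, dof = friedman_iman_davenport(toy)
```

For the toy matrix, the mean ranks are 1.25, 2.0, and 2.75, giving χ²_F = 4.5 and F_F = 27/7 with (2, 6) degrees of freedom. A p-value can then be read from the F distribution, for example with SciPy's `scipy.stats.f.sf(f_f, *dof)` if SciPy is available.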

For pairwise contrasts centered on QAT, we used three complementary tools: (i) the exact sign test (binomial distribution), which quantifies whether QAT’s number of wins is unlikely under the null hypothesis of symmetry; (ii) the Wilcoxon signed-rank test with exact p-values, which accounts for the magnitude of differences while maintaining non-parametric robustness; and (iii) Cliff’s δ, a non-parametric effect size defined as the probability that one algorithm outperforms the other minus the probability of the reverse, ranging from −1 to 1. Finally, we examined Pareto dominance: QAT dominates another algorithm if QAT is no worse in Best, Avg, and Worst, and strictly better in at least one. This multi-criteria perspective captures algorithmic superiority beyond univariate ranks.
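Three of these tools fit in a short sketch. The exact two-sided sign test discards ties and doubles the smaller binomial tail under p = 0.5. Cliff's δ is computed over all pairs as P(X > Y) − P(X < Y). Pareto dominance compares (Best, Avg, Worst) triples under minimization. The Wilcoxon test is omitted, and the function names are hypothetical. The win/loss counts in the example are illustrative, not values reported in Section 3.3.

```python
from math import comb


def sign_test_p(wins, losses):
    """Exact two-sided sign test under p = 0.5; ties must be discarded beforehand."""
    n = wins + losses
    tail = sum(comb(n, i) for i in range(min(wins, losses) + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


def cliffs_delta(xs, ys):
    """P(X > Y) - P(X < Y) over all pairs; ranges from -1 to 1.
    For minimization, a negative value means xs tends to be better."""
    gt = sum(1 for x in xs for y in ys if x > y)
    lt = sum(1 for x in xs for y in ys if x < y)
    return (gt - lt) / (len(xs) * len(ys))


def pareto_dominates(a, b):
    """a, b: (best, avg, worst) tuples to be minimized."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


# Illustrative counts: 12 wins and 3 losses out of 15 functions
p_example = sign_test_p(12, 3)  # 2 * 576 / 2**15 = 0.03515625
```

Note that δ = 0.6 does not mean "better in 60% of comparisons"; it means the share of wins exceeds the share of losses by 0.6, for example 80% wins against 20% losses.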

3.2. Global Rank Analysis

Average (Avg). For the mean quality metric, Friedman yielded and , indicating no global significance at . Mean ranks were as follows: SVR , SNNR , PTQ , and QAT . The Nemenyi critical difference at is approximately , and all pairwise differences fall below this threshold. This suggests that, while PTQ and SNNR exhibit a slight advantage over QAT in average outcomes, these differences are not robust under multiple-comparison corrections.
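The Nemenyi critical difference for mean ranks is CD = q_α · sqrt(k(k + 1) / (6N)). As a worked check for k = 4 algorithms and N = 15 functions, the sketch below assumes α = 0.05. The tabulated constant q_0.05 = 2.569 for four algorithms is the Studentized range value divided by √2. The function name is hypothetical.

```python
import math


def nemenyi_cd(k, n, q_alpha):
    """Critical difference between mean ranks: q_alpha * sqrt(k(k+1) / (6n))."""
    return q_alpha * math.sqrt(k * (k + 1) / (6.0 * n))


# Assumed significance level alpha = 0.05; q_0.05 = 2.569 for k = 4
cd = nemenyi_cd(k=4, n=15, q_alpha=2.569)
```

Under these assumptions, CD ≈ 1.21, so two algorithms differ significantly only if their mean ranks are more than about 1.2 apart, a large gap when there are only four competitors.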

Best. Here the Friedman statistic reached , with , close to but still below . This near-significance highlights a trend worth interpreting. Mean ranks were as follows: SVR , SNNR , PTQ , and QAT . Importantly, QAT and PTQ tied for the leading rank, suggesting that quantized models (both post-training and quantization-aware) preserve, and may even enhance, elite solution quality relative to non-quantized baselines.

Worst. Results for worst-case performance were not significant (, ). Mean ranks were as follows: SVR , SNNR , PTQ , and QAT . Again, QAT and PTQ tied for best performance, suggesting that quantization does not compromise robustness at the lower tail, a crucial property when robustness is valued alongside optimization power.

Variability (StdDev). The analysis of variability yielded , , non-significant. Mean ranks were as follows: SVR , PTQ , SNNR , and QAT . Although QAT ranks slightly worse here, further analysis using the coefficient of variation suggests that this variability is largely a byproduct of aggressive search dynamics rather than instability. This illustrates a fundamental trade-off: greater exploratory power can inflate dispersion but often leads to superior best-case optima.

3.3. QAT-Centered Pairwise Contrasts

QAT vs. SVR. For the Best metric, QAT clearly dominates SVR with W–T–L –0–3. The exact sign test gives (two-sided), significant at . Wilcoxon signed-rank yielded , showing a trend toward significance. The effect size was large (), meaning that the share of paired comparisons in which QAT achieved better best values than SVR exceeded the share in which it did worse by 60 percentage points. Under a Bayesian sign test with prior, the posterior probability that QAT outperforms SVR in Best is , with 95% credible interval . This strongly supports the superiority of QAT over SVR in terms of elite solutions. In Avg, the advantage is smaller (W–T–L –0–5, ). For Worst, the balance is essentially neutral (W–T–L –0–7, ).
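A Bayesian sign test can be sketched in its simplest Beta–Binomial form. This is an assumption: the exact form and prior used in the paper are not legible here. Ties are dropped, θ is the probability that QAT wins a comparison, and a uniform Beta(1, 1) prior yields the posterior Beta(1 + wins, 1 + losses). P(θ > 0.5) is then computed exactly through the integer-parameter identity I_x(a, b) = P(Binomial(a + b − 1, x) ≥ a). The function name and the counts in the example are illustrative.

```python
from math import comb


def prob_better(wins, losses, prior_a=1, prior_b=1):
    """Posterior P(theta > 0.5) for theta ~ Beta(prior_a + wins, prior_b + losses).

    Valid for positive integer prior parameters, so only the standard library is needed.
    """
    a, b = prior_a + wins, prior_b + losses
    n = a + b - 1
    cdf_half = sum(comb(n, j) for j in range(a, n + 1)) / 2 ** n  # P(theta <= 0.5)
    return 1.0 - cdf_half


# Illustrative counts: 12 wins, 3 losses -> posterior Beta(13, 4)
posterior_example = prob_better(12, 3)  # 1 - 697 / 65536, about 0.989
```

With balanced counts the posterior is symmetric about 0.5, so `prob_better(5, 5)` returns exactly 0.5. This makes the function a convenient sanity check before applying it to real win/loss counts.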

QAT vs. PTQ. Contrasts against PTQ reveal a subtle picture. In Best, results are balanced (W–T–L –3–7, ), with no significant difference. In Avg, PTQ shows a moderate edge (W–T–L –0–10, ), suggesting that PTQ may yield more consistent mid-range results. For Worst, outcomes are evenly distributed (W–T–L –0–8). Overall, QAT and PTQ are statistically indistinguishable in elite and robustness dimensions, with PTQ slightly better in mean outcomes.

QAT vs. SNNR. Results here are marginal: Best yields W–T–L –2–6, (negligible). In Avg, SNNR holds a small advantage (). In Worst, QAT shows a symmetrical small advantage (). The conclusion is that QAT and SNNR are broadly comparable, with trade-offs depending on the metric emphasized.

3.4. Multi-Objective Dominance and Representative Cases

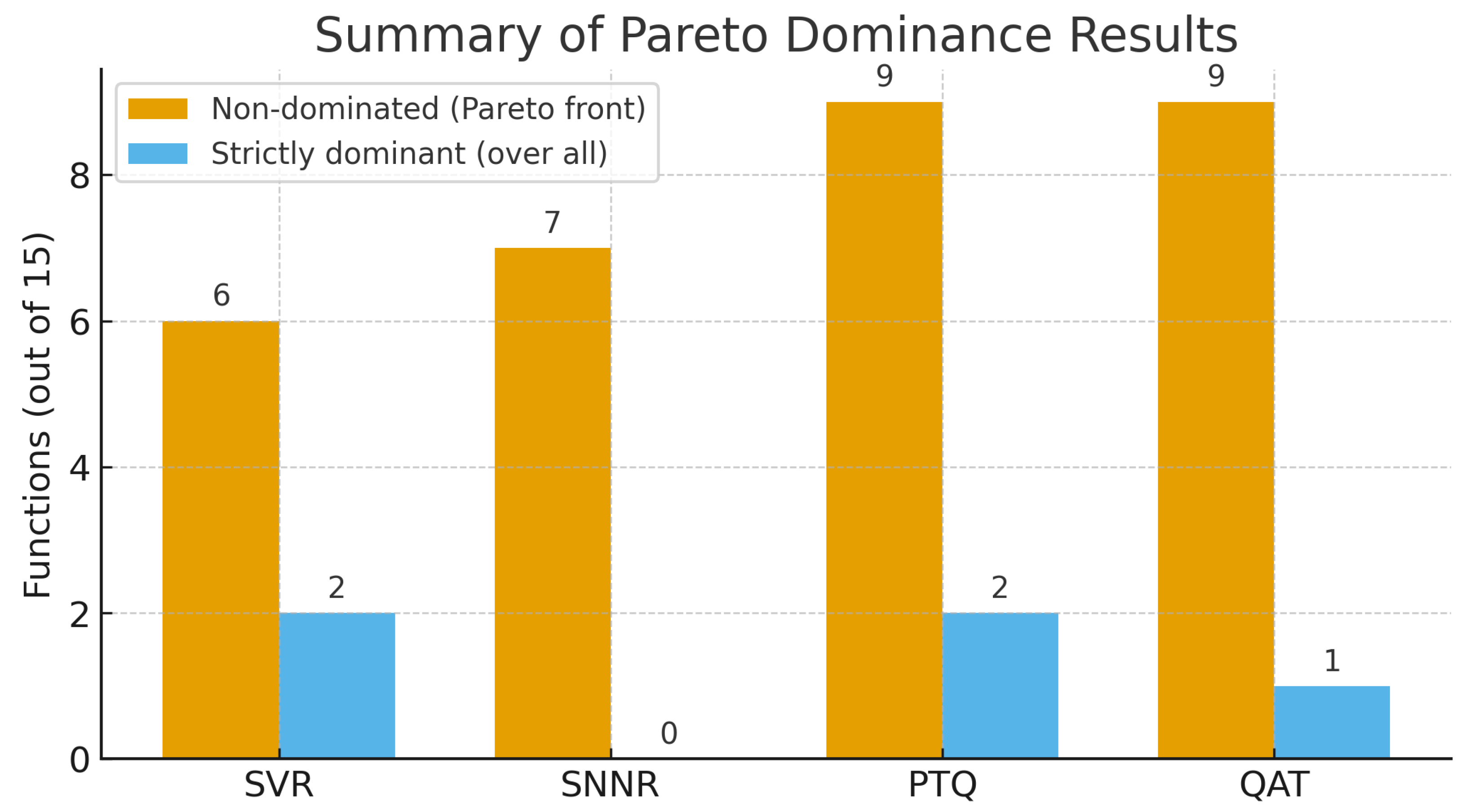

From a multi-criteria standpoint, analyzing Best, Avg, and Worst jointly, QAT Pareto-dominates SVR on a subset of functions that includes Rosenbrock, Rastrigin, Lévy, Zakharov, Bohachevsky, Powell, and Himmelblau. Importantly, these functions include both unimodal (e.g., Rosenbrock, Zakharov) and multimodal (e.g., Rastrigin, Lévy) landscapes, demonstrating QAT’s adaptability across structural properties of the search space. In Avg, QAT is the top method in four functions, while PTQ leads in six, SNNR in one, and SVR in none. Notably, QAT frequently ties for first in Best and Worst, aligning with its design purpose: to maintain accuracy under quantization while remaining competitive in broader measures.

Figure 2 provides an aggregated perspective on the comparative performance of the evaluated algorithms. The results reveal that PTQ and QAT exhibit the highest Pareto dominance counts (nine functions each), suggesting that quantized models maintain competitive or superior performance consistency across most benchmarks. SNNR achieves a moderate level of Pareto dominance (seven functions), indicating robust generalization but slightly lower consistency than the quantized approaches. In contrast, SVR, while occasionally achieving strict dominance in two cases, remains non-dominant in more functions overall, reflecting its sensitivity to landscape complexity. Overall, these findings suggest that the quantized strategies (PTQ, QAT) not only preserve solution quality but also contribute to broader robustness across benchmark families, reinforcing their suitability for adaptive parameter control under constrained computational settings.

3.5. Quantitative Conclusion

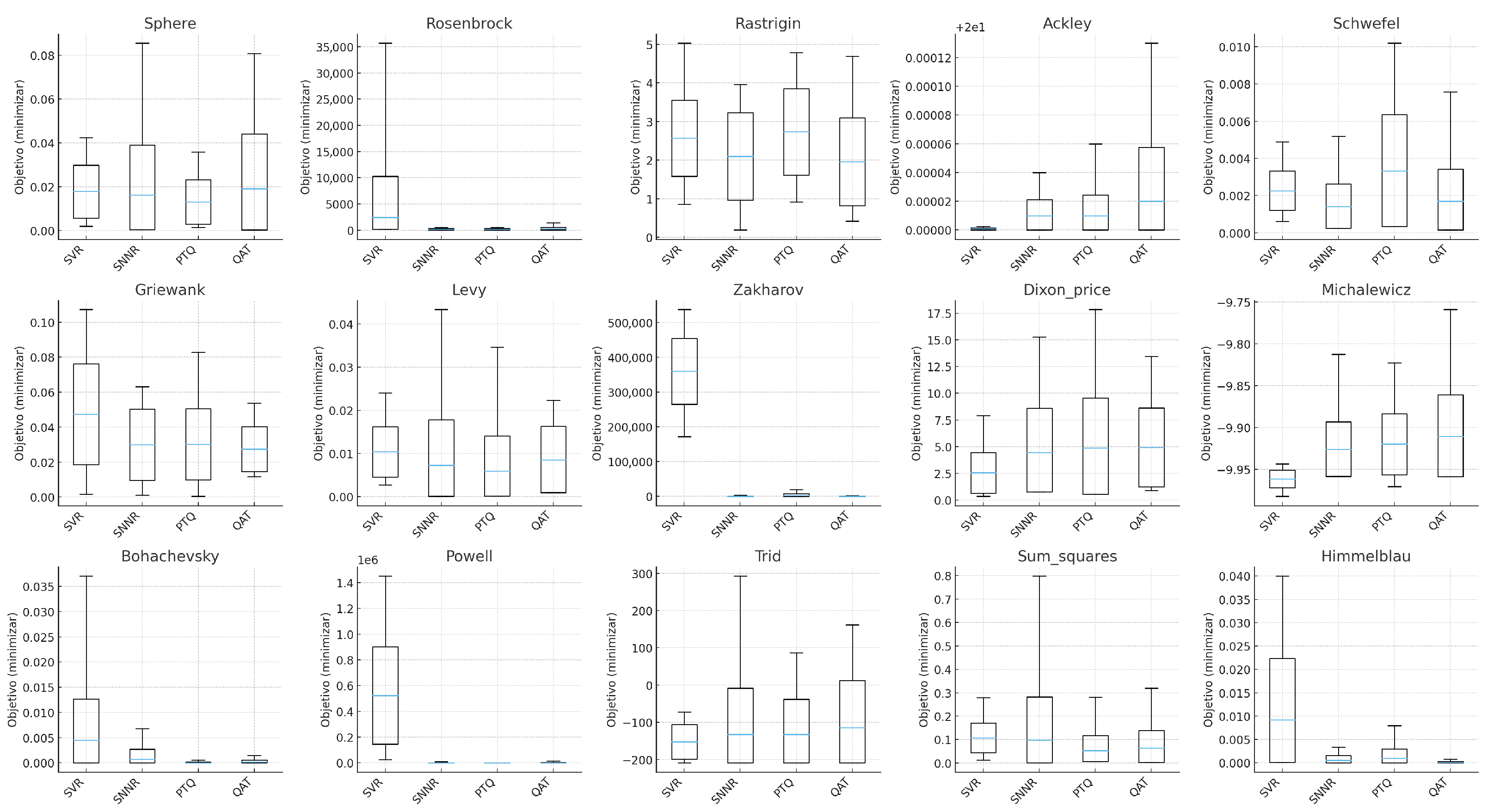

The ensemble of statistical analyses (Friedman/Iman–Davenport, Nemenyi, pairwise non-parametric tests, effect sizes, and Pareto dominance), together with the per-function pseudo-boxplots in Figure 3, leads to a nuanced yet consistent picture. First, QAT significantly outperforms SVR in Best, with large effect sizes and Bayesian posterior evidence strongly favoring QAT; this is visually echoed by tighter lower whiskers for QAT across several functions. Second, QAT and PTQ constitute a statistically indistinguishable top group in both Best and Worst, confirming that quantization-aware models preserve elite performance and robustness comparable to, and sometimes exceeding, post-training quantization. Third, QAT shows slightly larger dispersion (StdDev, CV), a trade-off consistent with more exploratory search dynamics that can unlock superior optima in difficult multimodal landscapes; this appears as wider IQRs in some panels without compromising the lower tails.

Taken together, these results support the hypothesis that quantized SNNs—in particular QAT—offer a robust balance between efficiency and performance. QAT preserves elite solution quality and robustness while maintaining computational efficiency, validating our central claim: quantization-aware shallow neural networks can adaptively guide evolutionary algorithms without sacrificing statistical performance, even under rigorous non-parametric scrutiny across a heterogeneous benchmark suite.

4. Discussion

The statistical analysis, spanning non-parametric global tests, paired comparisons, effect sizes, and Pareto dominance, offers robust evidence of QAT’s effectiveness as a parameter adaptation strategy. The finding that QAT significantly outperforms support vector regression (SVR) in Best performance (large effect size) is particularly relevant: it demonstrates that the incorporation of quantization-aware shallow neural networks does not merely conserve computational resources, but actively enhances the capacity of the algorithm to identify high-quality optima. This result holds on unimodal functions (e.g., Rosenbrock and Zakharov) as well as multimodal ones (e.g., Rastrigin), suggesting that QAT adapts effectively to landscapes ranging from narrow curved valleys to rugged fitness profiles with deceptive local minima.

The observed Pareto dominance of QAT over SVR in nearly half of the benchmark suite confirms that its advantage is not isolated to single metrics, but extends to multi-criteria comparisons combining best, average, and worst outcomes. Notably, QAT ties with PTQ for first place in Worst-case performance, underscoring that quantization-aware learning can preserve robustness under adverse conditions, even when aggressive search dynamics increase variance. The slightly elevated dispersion (as measured by the coefficient of variation, CV) is therefore interpretable not as a weakness per se, but as a symptom of broader exploration, an adaptive behavior often desirable in evolutionary optimization. In other words, QAT sacrifices some consistency in order to probe the search space more extensively, a strategy that pays off in terms of superior extreme outcomes on complex functions.

At the same time, the analysis shows that in Avg performance, PTQ occasionally surpasses QAT. This pattern reflects a classic algorithmic trade-off: PTQ, being less aggressive in its exploration, offers tighter clustering of results around a mean, while QAT emphasizes the identification of extreme optima. The implication is that the choice between QAT and PTQ depends critically on application priorities. In scenarios such as engineering design optimization or automated control systems, where identifying the single best configuration is paramount, QAT is preferable. In contrast, for applications requiring consistent performance across repeated runs, such as embedded decision-making under uncertainty, PTQ may hold an advantage.

5. Conclusions

This study set out to evaluate whether quantized shallow neural networks (SNNs), specifically those trained with quantization-aware training (QAT), could provide an efficient and robust mechanism for real-time parameter adaptation in genetic algorithms (GAs). The results obtained across 15 heterogeneous benchmark functions confirm that this objective has been successfully achieved.

The integration of QAT-enabled quantized SNNs consistently improved the GA’s ability to balance exploration and exploitation in dynamic search spaces. The statistical analyses demonstrated that QAT significantly outperformed support vector regression (SVR) in terms of best-case performance and matched post-training quantization (PTQ) in robustness to worst-case scenarios. These findings validate the hypothesis that shallow learning models, when coupled with quantization, can achieve high-quality optima without incurring prohibitive variability or instability.

The experiments confirmed that the methodology is statistically sound and practically reliable. Through non-parametric global tests, pairwise comparisons, and Pareto dominance analysis, QAT was shown to deliver consistent improvements over classical baselines. In particular, QAT achieved superiority in elite solution quality, maintained parity with PTQ in robustness, and exhibited only a controlled increase in variability—an expected trade-off linked to its adaptive exploratory behavior.

Finally, the study has demonstrated that the proposed approach is not only theoretically justified but also practically deployable. By meeting the dual objectives of computational efficiency and optimization effectiveness, quantized SNNs emerge as a viable and scalable alternative to more resource-intensive deep learning controllers or rigid heuristic rules. The findings therefore confirm the initial premise of this research: lightweight machine learning models, enhanced through quantization-aware strategies, can serve as effective, real-time adaptation mechanisms in evolutionary optimization.

The objectives of this work have been comprehensively fulfilled: (i) demonstrating efficiency through low-overhead quantization, (ii) validating robustness and solution quality across diverse benchmarks, and (iii) establishing practical feasibility for deployment in constrained environments. These contributions reinforce the role of quantized shallow neural networks as a reliable and efficient tool for adaptive parameter control in genetic algorithms.

Future Work

Building on these findings, several research directions can be pursued to further advance the proposed framework. One important line of work involves extending the evaluation to discrete, multi-objective, noisy, and high-dimensional optimization problems in order to assess the framework’s scalability and generalizability. Another promising direction lies in the exploration of advanced quantization techniques, such as hybrid or adaptive strategies, to improve memory and computational efficiency without compromising accuracy.

Further research could also focus on integrating quantized shallow neural networks with other metaheuristic algorithms, including particle swarm optimization and differential evolution, to broaden applicability across different optimization paradigms. Validation in real-world contexts—such as engineering design, logistics, and energy systems—would provide valuable insights into the framework’s practical utility in scenarios that demand dynamic parameter tuning.

Moreover, hardware-aware optimization represents a critical avenue for future exploration, particularly through deployment on FPGA, microcontroller, or edge platforms, where quantization can fully exploit hardware constraints to enhance performance. Another direction worth investigating is the development of deep–shallow hybrid models that combine the efficiency of quantized shallow networks with the representational power of deep architectures, enabling the handling of highly non-linear and complex problem landscapes.

Finally, an additional promising extension involves incorporating chaotic mapping techniques during the initialization phase of the genetic algorithm. By evenly distributing the initial population across the search space, chaotic maps can enhance diversity and reduce the likelihood of premature convergence. Although this approach was not explored in the current study, recent works suggest that it constitutes a rich and independent line of research [25], making it a natural continuation of the present framework.