Abstract

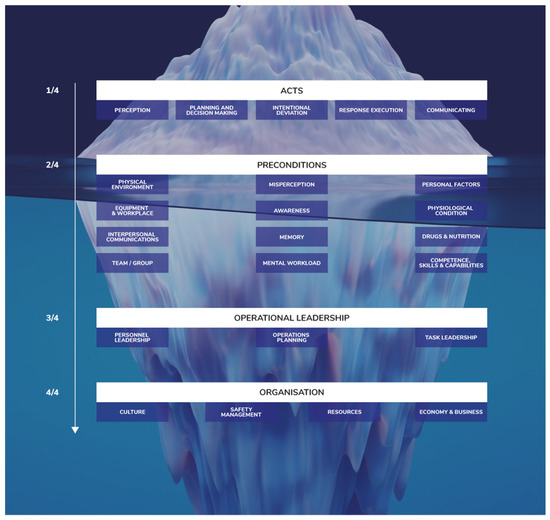

Human factors (HF) in aviation and maritime safety occurrences are not always systematically analysed and reported in a way that makes the extraction of trends and comparisons possible in support of effective safety management and feedback for design. As a way forward, a taxonomy and data repository were designed for the systematic collection and assessment of human factors in aviation and maritime incidents and accidents, called SHIELD (Safety Human Incident and Error Learning Database). The HF taxonomy uses four layers: The top layer addresses the sharp end where acts of human operators contribute to a safety occurrence; the next layer concerns preconditions that affect human performance; the third layer describes decisions or policies of operations leaders that affect the practices or conditions of operations; and the bottom layer concerns influences from decisions, policies or methods adopted at an organisational level. The paper presents the full details, guidance and examples for the effective use of the HF taxonomy. The taxonomy has been effectively used by maritime and aviation stakeholders, as follows from questionnaire evaluation scores and feedback. It was found to offer an intuitive and well-documented framework to classify HF in safety occurrences.

1. Introduction

Human operators play crucial roles in the safe, resilient and efficient conduct of maritime and air transport operations. Consequently, human factors (HF) are often reported as contributors to maritime and aviation incidents and accidents [1]. The importance of supporting the human actors and reducing the potential for human contributions to incidents/accidents by addressing human factors in the design and conduct of transport operations is recognised as being fundamental by researchers, regulators and the transport industry. However, there is a scarcity of suitable HF data derived from the investigation of safety occurrences in support of effective safety management and feedback for design. Details about human contributors are often not systematically analysed or reported in a way that makes the extraction of trends and comparisons possible.

In the maritime sector, guidance and structure to investigate marine accidents and incidents are provided through international organisations such as the International Maritime Organization (IMO) and the European Maritime Safety Agency (EMSA). Various databases and analysis techniques are in use for maritime occurrences, including the European Marine Casualty Information Platform (EMCIP) by EMSA [2], the Confidential Human Factors Incident Reporting Programme (CHIRP) for maritime [3], Nearmiss.dk [4], ForeSea [5], and commercial (non-public) tools. However, there is a lack of sufficiently detailed public guidance and standards for structuring HF-based investigations, and this complicates learning from occurrences. This is consistent with a comparative assessment of the Human Performance Capability Profile (HPCP) of maritime and aviation organisations, where it was found that the maturity levels for the maritime domain were lower than for the aviation domain [6].

Considering HF in safety is complex and multi-layered in nature. Similar to Heinrich’s safety iceberg [7], this may be envisioned as an HF iceberg since (i) what typically can be seen in incident reports is the tip and there is usually much more underneath, and (ii) to prevent the recurrence of a type of incident, one needs to take care with what is under the waterline, and not only focus on what is above:

- At the top of the iceberg, it may be relatively easy to see what happened in an occurrence: Who did what and what happened is usually known or at least strongly suspected. The analysis focus at this level is observable performance. The reasons why it happened are not uncovered, such that there is no change in the design or operation. Somebody (if they survived) can be blamed and re-trained, until the next occurrence. The issue is seen as dealt with, but safety learning has failed.

- The second layer considers a range of factors affecting human performance, including HF concepts such as workload, situation awareness, stress and fatigue, human system interfaces, and teamwork. Investigating incidents at this level can lead to more effective prevention strategies and countermeasures, addressing systemic and structural aspects, e.g., job design and interface design.

- The third layer considers how the operations were locally organised and led by operations leaders. There are internal and external targets placing demands on the operators. These may result in workarounds, which can support the efficiency and productivity of the work but can sometimes also allow risks to emerge. The challenge is determining how jobs and tasks are actually performed in this context. Learning considers the local organisation of the work and leadership strategies in operations.

- The fourth and final layer considers the culture (professional, organisational, national) and socioeconomics (demands, competition) of each sector, which affect individuals, organisations and industries. The analysis focus at this layer is on norms, values and ways of seeing the world and doing things. Such understanding is key to ensuring that derived safety solutions and countermeasures are sustainable for the staff and operating conditions.

A layered structure as in the HF iceberg is well known from the Swiss Cheese Model (SCM) of James Reason [8,9], which is a conceptual model describing multiple contributors at various levels in safety occurrences, as well as from the Human Factors Analysis and Classification System (HFACS) [10], which is derived from the SCM and is more specific. These types of models set a basis for the development in this study of a more detailed data repository for gathering and assessing human factors in safety occurrences in aviation and maritime operations, which is called SHIELD: Safety Human Incident and Error Learning Database. The objective is to support designers of systems and operations, risk assessors and managers in easily retrieving and analysing sets of safety occurrences for selected human performance influencing factors, safety events and contextual conditions. This allows learning from narratives of reported occurrences to identify key contributory factors, determine statistics and set a basis for including human elements in quantitative risk models of maritime and aviation operations. Effective learning requires a proper just culture, where people are not blamed for honest mistakes, and a healthy reporting culture, where incidents are reported as a way to improve safety [11].

The objectives of this paper are to present the development of SHIELD and its HF taxonomy, as well as its evaluation and feedback from users. In particular, this paper presents in full detail the HF taxonomy, including guidance and examples for its use. A more concise conference paper on SHIELD development was presented in [12]. Section 2 presents the HF and other taxonomies of SHIELD and the methods for data collection and evaluation by stakeholders. Section 3 presents illustrative cross-domain HF statistics and the results of evaluation by stakeholders. Section 4 provides a discussion of the developments and results. The details of the HF taxonomy, guidance and examples for all its factors are provided in Appendix A.

2. Materials and Methods

2.1. Human Factors Taxonomy

The design of the SHIELD HF taxonomy began with a review of sixteen different taxonomies [13,14] that were developed for safety occurrences in various domains:

- Aviation, including ADREP/ECCAIRS (Accident/Incident Data Reporting by European Co-ordination Centre for Aviation Incident Reporting System) [15], HERA (Human Error in Air Traffic Management) [16] and RAT (Risk Analysis Tool) [17].

- Maritime, including EMCIP (European Marine Casualty Information Platform) [18] and CASMET (Casualty Analysis Methodology for Maritime Operations) [19].

- Railway, including HEIST (Human Error Investigation Software Tool) [20].

- Nuclear, including SACADA (Scenario Authoring, Characterization, and Debriefing Application) [21].

- Space, including NASA-HFACS (a detailed version of HFACS developed by NASA for analysis of occurrences in space operations) [22].

Following a gap analysis [13,14], the two dominant approaches arising from this review were HERA and NASA-HFACS. HERA applies a detailed cognitive approach for the analysis of acts at the sharp end, while NASA-HFACS uses a layered approach up to the level of organisational factors with an enhanced level of detail in comparison with the original HFACS [10]. Building on these two approaches, a number of factors influencing human performance were adapted and made domain-generic for the purpose of cross-domain analysis of occurrences. Various aviation and maritime stakeholders applied the taxonomy and provided feedback, leading to refinements in the definitions of the factors and guidance for their application.

The final SHIELD HF taxonomy consists of four layers, where each layer comprises several categories with a number of factors (Figure 1). The layers are Acts, describing what happened and did not go according to plan; Preconditions, providing factors that influenced the performance during operation; Operational Leadership, describing policies of operational leadership that may affect the working arrangement; and Organisation, describing influences of the organisation and external business environment on the operations. The elements of these layers are summarized next. The complete HF taxonomy with guidance and examples is provided in Appendix A.

Figure 1.

Illustration of the SHIELD HF taxonomy over a Safety Iceberg.

2.1.1. Acts

The Acts layer describes actions, omissions or intentional deviations from agreed procedures or practices by an operator with an impact on the safety of operations. It consists of five categories including 19 factors (see details in Appendix A.1):

- Perception (4 factors): The operator does not perceive, or perceives too late or inaccurately, information necessary to formulate a proper action plan or make a correct decision. The factors distinguish between the sensory modalities (visual, auditory, kinaesthetic, and other).

- Planning and Decision Making (3 factors): The operator has no, late or an incorrect decision or plan to manage the perceived situation. This includes issues with the interpretation/integration of information streams, but it excludes intentional deviations from procedures.

- Intentional Deviation (4 factors): The operator decides to intentionally deviate from an agreed procedure or practice, including routine and specific workarounds, and sabotage.

- Response Execution (6 factors): The operator has planned to perform an action that is appropriate for the perceived situation, but executes it in a wrong manner, at an inappropriate time or does not execute it at all. It includes slips and lapses, such as switching actions in sequences, pushing a wrong button and a lack of physical coordination. It excludes communication acts.

- Communicating (2 factors): The operator has planned to take an action that is appropriate for the perceived situation but communicates incorrect or unclear information to other actors or does not communicate at all.

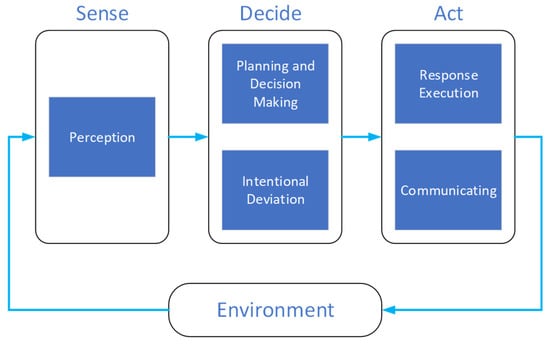

The Acts layer represents a sense–decide–act loop (Figure 2). Sensing issues are represented by the category Perception. It should only be used if there is evidence that information was not well perceived by an operator. Problems with the interpretation/integration of one or more information streams are part of the category Planning and Decision Making. Issues with decision-making on the basis of correctly sensed information are represented in the categories Planning and Decision Making, and Intentional Deviation, where the latter category is strictly used if the operator intentionally deviated from a procedure. Issues with acting on the basis of a correct decision/plan (slips, lapses) are represented in the categories Response Execution and Communicating, where the latter focusses on communication acts.

Figure 2.

Sense–decide–act loop describing the categories in the first layer of the HF taxonomy.
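The category selection along the sense–decide–act loop can be sketched as a simple decision function. This is an illustrative simplification only and not part of SHIELD: the function name `select_acts_category` and its boolean inputs are hypothetical, it handles one act at a time, and actual coding relies on analyst judgement supported by the guidance in Appendix A.

```python
from enum import Enum


class ActsCategory(Enum):
    PERCEPTION = "Perception"
    PLANNING_AND_DECISION_MAKING = "Planning and Decision Making"
    INTENTIONAL_DEVIATION = "Intentional Deviation"
    RESPONSE_EXECUTION = "Response Execution"
    COMMUNICATING = "Communicating"


def select_acts_category(
    information_not_well_perceived: bool,
    intentional_deviation: bool,
    decision_or_plan_issue: bool,
    communication_act: bool,
) -> ActsCategory:
    """Hypothetical helper that mirrors the sense-decide-act loop for one act."""
    # Sense: use Perception only if there is evidence of a perception problem.
    if information_not_well_perceived:
        return ActsCategory.PERCEPTION
    # Decide: intentional deviations from procedures are kept strictly separate.
    if intentional_deviation:
        return ActsCategory.INTENTIONAL_DEVIATION
    # Decide: no, late or incorrect plan, including interpretation problems.
    if decision_or_plan_issue:
        return ActsCategory.PLANNING_AND_DECISION_MAKING
    # Act: the plan was appropriate, so the issue lies in carrying it out.
    if communication_act:
        return ActsCategory.COMMUNICATING
    return ActsCategory.RESPONSE_EXECUTION
```

In this sketch, a slip such as pushing a wrong button maps to Response Execution, whereas an unclear instruction given on the basis of a correct plan maps to Communicating.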

2.1.2. Preconditions

The Preconditions layer describes environmental factors or conditions of individual operators affecting human performance and contributing to the issues described in the Acts layer. It consists of 12 categories including 62 factors (see details in Appendix A.2):

- Physical Environment (9 factors): Conditions in the physical environment such as visibility, noise, vibration, heat, cold, acceleration and bad weather.

- Equipment and Workplace (6 factors): Conditions in the technological environment related to ergonomics, the human–machine interface (HMI), automation, working position and equipment functioning.

- Interpersonal Communication (4 factors): Conditions affecting communication in operations, such as language differences, non-standard or complex communication and the impact of differences in rank.

- Team/Group (6 factors): Conditions in the functioning of a team/group, such as working towards different goals, no cross-checking, no speaking up, no adaptation in demanding situations and groupthink.

- Misperception (4 factors): Misperception conditions leading to misinterpretation, such as motion illusion, visual illusion and the misperception of changing environments or instruments. They provide background for the reason that an operator did not aptly perceive.

- Awareness (7 factors): Lack of focused and appropriate awareness functions leading to misinterpretation of the operation by an operator, such as channelized attention, confusion, distraction, inattention, being ‘lost’, the prevalence of expectations or using an unsuitable mental model.

- Memory (3 factors): Memory issues leading to forgetting, inaccurate recall or using expired information.

- Mental Workload (4 factors): The amount or intensity of effort or information processing in a task degrades the operator’s performance, including low and high workload, information processing overload and the startle effect.

- Personal Factors (7 factors): Personal factors such as emotional state, personality style, motivation, performance pressure, psychological condition, confidence level and complacency.

- Physiological Condition (5 factors): Physiological/physical conditions such as injury, illness, fatigue, burnout, hypoxia and decompression sickness.

- Drugs and Nutrition (3 factors): Use of drugs, alcohol, medication or insufficient nutrition affecting operator performance.

- Competence, Skills and Capability (4 factors): Experience, proficiency, training, strength or biomechanical capabilities are insufficient to perform a task well.

2.1.3. Operational Leadership

The Operational Leadership layer describes the decisions or policies of an operations leader (e.g., a captain in a cockpit or ship, or air traffic control supervisor) that affect the practices, conditions or individual actions of operators, contributing to unsafe situations. It consists of three categories including 15 factors (see details in Appendix A.3):

- Personnel Leadership (4 factors): Inadequate leadership and personnel management, including no personnel measures against regular risky behaviour, a lack of feedback on safety reporting, no role model and personality conflicts.

- Operations Planning (6 factors): Issues in the operations planning, including inadequate risk assessment, inadequate team composition, inappropriate pressure to perform a task and a directed task with inadequate qualification, experience or equipment.

- Task Leadership (5 factors): Inadequate leadership of operational tasks, including a lack of correction of unsafe practices, no enforcement of existing rules, allowing unwritten policies to become standards and directed deviations from procedures.

2.1.4. Organisation

The Organisation layer describes decisions, policies or methods adopted at an organisational level that affect the operational leadership and/or individual operator performance. It consists of four categories including 17 factors (see details in Appendix A.4):

- Culture (2 factors): Safety culture problems or sociocultural barriers causing misunderstandings.

- Safety Management (5 factors): Safety management in the organisation is insufficient, including a lack of organisational structure for safety management and limitations of proactive risk management, reactive safety assurance, safety promotion and training and suitable documented procedures.

- Resources (6 factors): The organisation does not provide sufficient resources for safe operations, including personnel, budgets, equipment, training programs, operational information and support for suitable design of equipment and procedures.

- Economy and Business (4 factors): The economy and business of the organisation pose constraints that affect safety, including relations with contractors, strong competition, economic pressure to keep schedules and costs and the required tempo of operations.
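The layer and category structure summarised in Sections 2.1.1 to 2.1.4 can be written down as a nested mapping, which also makes it easy to check that the per-category factor counts add up to the stated layer totals. The sketch below is a minimal illustration; the variable and function names are hypothetical, and the individual factors of Appendix A are deliberately not enumerated.

```python
# Layer -> category -> number of factors, as listed in Sections 2.1.1 to 2.1.4.
SHIELD_HF_TAXONOMY = {
    "Acts": {
        "Perception": 4,
        "Planning and Decision Making": 3,
        "Intentional Deviation": 4,
        "Response Execution": 6,
        "Communicating": 2,
    },
    "Preconditions": {
        "Physical Environment": 9,
        "Equipment and Workplace": 6,
        "Interpersonal Communication": 4,
        "Team/Group": 6,
        "Misperception": 4,
        "Awareness": 7,
        "Memory": 3,
        "Mental Workload": 4,
        "Personal Factors": 7,
        "Physiological Condition": 5,
        "Drugs and Nutrition": 3,
        "Competence, Skills and Capability": 4,
    },
    "Operational Leadership": {
        "Personnel Leadership": 4,
        "Operations Planning": 6,
        "Task Leadership": 5,
    },
    "Organisation": {
        "Culture": 2,
        "Safety Management": 5,
        "Resources": 6,
        "Economy and Business": 4,
    },
}


def factors_per_layer(taxonomy: dict) -> dict:
    """Sum the factor counts of all categories within each layer."""
    return {layer: sum(cats.values()) for layer, cats in taxonomy.items()}


layer_totals = factors_per_layer(SHIELD_HF_TAXONOMY)
# Layer totals: Acts 19, Preconditions 62, Operational Leadership 15, Organisation 17.
total_factors = sum(layer_totals.values())  # 113 factors in 24 categories
```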

2.2. Other Taxonomies in SHIELD

In addition to the HF taxonomy, SHIELD gathers other occurrence data, such as the involved actors, vehicles and additional context. All these elements enable the designer or risk assessor/manager to relate aspects of the operation and the occurrence with particular human factors. These other taxonomies are often domain-specific, using accustomed terminology from the aviation and maritime domains [23]:

- Occurrence overview: This provides general data on the occurrence, such as headline, date and time, location, number of fatalities and injuries, damage and a narrative of the occurrence.

- Occurrence type: The type of occurrence is described by domain-specific occurrence classes (e.g., accident, serious incident, very serious marine casualty, marine incident) and occurrence categories (e.g., runway incursion, controlled flight into terrain, collision in open sea, capsizing).

- Context: This gathers a range of contextual conditions, such as precipitation, visibility, wind, wake turbulence, light, terrain, sea and tidal conditions, runway conditions and traffic density. This is performed via structured lists, numbers or free texts.

- Vehicle and operation: Information on vehicles involved in a safety occurrence is gathered, such as an operation number, vehicle type (e.g., helicopter, container ship, fishing vessel) and year of build. The operation is specified by operation type (e.g., commercial air transport, recreative maritime operation), operation phase (e.g., take-off, berthing) and level of operation (e.g., normal, training, emergency).

- Actor: The backgrounds of actors involved in a safety occurrence are gathered, describing high-level groups of actor functions (e.g., flight crew, port authority), actor roles (e.g., tower controller, technician, chief officer), activity, qualification, experience, time on shift, age and nationality.

- Prevention, mitigation and learning: The collection of actions by human operators and/or technical systems that prevented the situation from developing into an accident, or that limited the consequences of an accident, is supported by free text fields. Moreover, lessons that have previously been identified following a safety occurrence, e.g., changes in designs, procedures or organisation, can be gathered. These data support the evaluation of the resilience due to operator performance and support safety learning in and between organisations.

2.3. SHIELD Backend and Frontend

The backend of SHIELD uses the modern document-oriented database technology of MongoDB. In document-oriented databases, the data are stored as collections of documents, which are readable and easy to understand. In this way, SHIELD uses a flexible schema, which can be simply changed/maintained and allows quick searches.
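To give an impression of what a document-oriented record and a search over it might look like, the following sketch stores two occurrences as nested dictionaries and filters them with a small equality matcher. All field names and values are hypothetical and do not reflect the actual SHIELD schema. The matcher only loosely mimics a dot-notation equality filter and is evaluated in plain Python, so no database is needed to run it.

```python
from typing import Any

# Hypothetical occurrence documents; field names and values are illustrative only.
occurrences = [
    {
        "domain": "aviation",
        "occurrence": {"class": "incident", "category": "runway incursion"},
        "human_factors": [
            {"layer": "Acts", "category": "Communicating"},
            {"layer": "Preconditions", "category": "Awareness"},
        ],
    },
    {
        "domain": "maritime",
        "occurrence": {"class": "accident", "category": "grounding"},
        "human_factors": [
            {"layer": "Organisation", "category": "Resources"},
        ],
    },
]


def get_path(document: dict[str, Any], dotted_path: str) -> Any:
    """Follow a dot-separated path through nested dicts; None if absent."""
    value: Any = document
    for key in dotted_path.split("."):
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


def find(documents: list[dict[str, Any]], query: dict[str, Any]) -> list[dict[str, Any]]:
    """Return the documents whose fields equal every value in the query."""
    return [
        doc
        for doc in documents
        if all(get_path(doc, path) == wanted for path, wanted in query.items())
    ]


maritime_accidents = find(
    occurrences, {"domain": "maritime", "occurrence.class": "accident"}
)
```

Against a real MongoDB collection, a filter of the same shape would typically be passed to the driver's `find` method; whether SHIELD structures its queries this way is not stated here.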

A user can access SHIELD via an HMI in the online HURID platform [24]. This is a collection of online tools of the SAFEMODE Human Risk Informed Design Framework (HURID). The SHIELD HMI includes the following features:

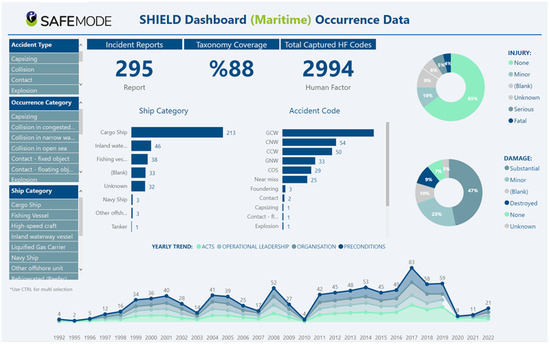

- Dashboard: Overview of statistics on HF and occurrence categories (see Figure 3).

Figure 3. Impression of SHIELD dashboard for maritime occurrences [24].

- Search: Facility to search for occurrences with particular values.

- My reports: Overview and editing of reports created by a user.

- Create new report: Allows the user to store occurrences according to the taxonomy.

- User guidance: Documentation on the functionalities and taxonomy.

2.4. Data Collection

Partners in the SAFEMODE project collected incident and accident reports in the aviation and maritime domains [25]. Original incident and accident reports with full factual and narrative descriptions were used, rather than pre-processed analyses that employed other taxonomies, so as to avoid biases and lack of detail of the occurrences. Incident reports provide concise narratives by involved operators and may include technical data (such as flight trajectories). Accident reports provide detailed descriptions, technical data and analyses by investigators of events and conditions that contributed to the accident.

Aviation data sources used include the public Aviation Safety Reporting System (ASRS), which provides a range of US voluntarily and confidentially reported incidents; deidentified non-public incident reports of European air navigation service providers and airlines; and a number of public accident reports. A total of 176 aviation occurrences were analysed, consisting of 167 incidents and 9 accidents. They occurred worldwide in the period 2009 to 2021, predominantly in 2018 and 2019. Prominent types of analysed aviation occurrences are controlled flight into terrain (29%), runway incursion (17%), and in-flight turbulence encounters (30%).

The maritime data sources used in the analyses stem from public reports provided by various organisations, such as the Marine Accident Investigation Branch (MAIB) in the UK, The Bahamas Maritime Authority, the Malta Marine Safety Investigation Unit and the Australian Transport Safety Bureau (ATSB) amongst others. A total of 256 occurrences were analysed, including 246 marine accidents. They occurred worldwide in the period of 1992 to 2021. Prominent types of analysed maritime occurrences concern collisions in congested waters (19%), narrow waters (20%) and open sea (11%), as well as groundings in coastal/shallow water (36%) and when approaching berth (13%).

2.5. Data Analysis Process

The analysis of an occurrence for inclusion in SHIELD starts with studying the associated incident or accident report and extracting the factual information about the involved vehicles, operations, actors, contextual factors, narrative, etc., using the taxonomies explained in Section 2.2. This task can be completed using domain knowledge. Table 1 shows an example of such information for a loss of separation incident in air traffic management.

Table 1.

Example of an aviation occurrence with main elements of the SHIELD taxonomy (see associated human factors in Table 2).

Next, an analysis is performed to identify the human factors according to the SHIELD HF taxonomy as explained in Section 2.1 and Appendix A. It means identifying appropriate factors in each of the taxonomy layers for the actors in the occurrence, using the detailed guidance material. Table 2 shows as an example the identified human factors in the aviation incident of Table 1. Importantly, the reasoning for the choice of the human factors is included for traceability and to support learning. This analysis requires both domain and human factors expertise and is supported by the detailed definitions and examples listed in Appendix A.

Table 2.

Identified human factors in the example occurrence of Table 1.
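A single entry of such an HF analysis can be thought of as a small record that ties an actor to a factor in one of the four layers, together with the reasoning for the choice. The sketch below is an assumption about how such a record could be represented and is not the SHIELD data model; the class name `HFCoding`, its fields and the example values are hypothetical.

```python
from dataclasses import dataclass

LAYERS = ("Acts", "Preconditions", "Operational Leadership", "Organisation")


@dataclass(frozen=True)
class HFCoding:
    """One identified human factor for one actor (hypothetical record layout)."""

    actor: str
    layer: str
    category: str
    factor: str
    reasoning: str

    def __post_init__(self) -> None:
        # Only the four layers of the HF taxonomy are accepted.
        if self.layer not in LAYERS:
            raise ValueError(f"unknown layer: {self.layer!r}")
        # The reasoning is mandatory, for traceability and to support learning.
        if not self.reasoning.strip():
            raise ValueError("reasoning is required for traceability")


# Illustrative values only; not taken from Table 2.
coding = HFCoding(
    actor="tower controller",
    layer="Preconditions",
    category="Awareness",
    factor="Distraction",
    reasoning="Illustrative text: the narrative indicates attention was drawn away.",
)
```

Making the reasoning a required field reflects the emphasis on traceability in the analysis process.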

Sets of occurrences were divided between partners of the SAFEMODE project, who had the appropriate domain and HF expertise and a learning culture mindset [25]. The HF analyses were performed by teams or by individual assessors in combination with reviews. Next, the prime analyses were reviewed by another project partner and discussed until agreement was reached on the suitable factors. The analysis of accidents takes longer and typically provides more human factors than incidents, given the more detailed knowledge of a larger number of events and conditions that typically contribute to an accident.

2.6. Evaluation by Stakeholders

The evaluation of SHIELD was performed using a questionnaire asking respondents to score statements on a Likert scale from 1 (Strongly Disagree) to 5 (Strongly Agree), and for open commenting. Questions concerned the dashboard, search functionality, report creation, own reports, user guidance and types of applications. The questionnaire was distributed to 15 maritime and 8 aviation stakeholders, including operators, manufacturers, safety agencies and researchers; 18 completed questionnaires were received (78% response rate). Furthermore, SHIELD was evaluated in a maritime case study [26].
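The response rate and the per-statement summary statistics can be computed as in the following sketch. The response counts come from the text above, whereas the Likert scores are hypothetical placeholders and not project data. Using the sample standard deviation is an assumption, since the variant of the standard deviation is not specified.

```python
from statistics import mean, stdev

# Questionnaires distributed (15 maritime + 8 aviation) and completed.
distributed = 15 + 8
completed = 18
response_rate = completed / distributed  # 18 / 23, about 78%

# Hypothetical scores for one statement (1 = Strongly Disagree, 5 = Strongly Agree).
example_scores = [4, 5, 4, 3, 4, 5, 4, 4]


def summarise_likert(scores: list[int]) -> tuple[float, float]:
    """Return (mean, sample SD) after checking that scores lie on the 1-5 scale."""
    if any(not 1 <= score <= 5 for score in scores):
        raise ValueError("Likert scores must be between 1 and 5")
    return mean(scores), stdev(scores)


score_mean, score_sd = summarise_likert(example_scores)
```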

3. Results

3.1. Cross-Domain HF Statistics

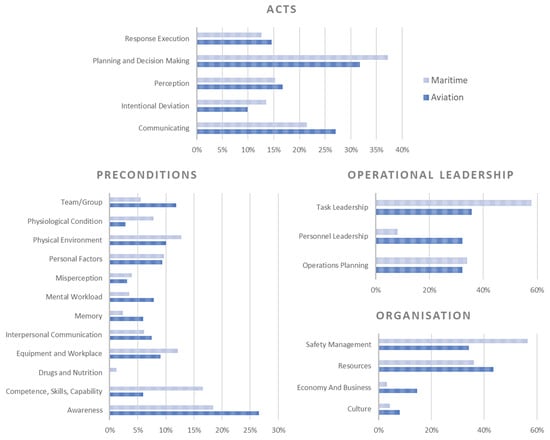

Figure 4 provides an overview of the relative frequencies of the HF categories in each of the four layers of the taxonomy for the maritime and aviation occurrences. These results are based on 176 aviation occurrences and 256 maritime occurrences, which were analysed during the project. They illustrate the potential of SHIELD for cross-domain analysis.

Figure 4.

Relative frequencies of the HF categories per layer in the analysed maritime and aviation occurrences along the layers of the HF taxonomy.

Figure 4 shows that for both transport domains, the most frequently occurring issues at the Acts level concern planning and decision-making, e.g., a pilot deciding to descend too early in mountainous terrain, and communicating, e.g., an air traffic controller not issuing a low-altitude warning.

In the Preconditions layer, many factors are used. The largest category in both the aviation and maritime domains is awareness, with main factors including channelized attention, confusion and distraction, e.g., a chief officer being distracted by constant VHF radio conversations. The category competence, skills and capability is considerably more prominent in maritime occurrences, which is indicative of a higher contribution of inadequate experience, training and a lack of proficiency on ships. In aviation, the category team/group occurs relatively often. In particular, the absence of cross-checking and of speaking up by team members, reflecting crew resource management problems in the cockpit, is prominent.

In the Operational Leadership layer, factors that are frequently used are inadequate risk assessment (e.g., a captain who opted to fly through a bad weather zone, although other aircraft diverted), inadequate leadership or supervision (e.g., an ATC supervisor who allowed a large exceedance of the capacity of an airspace sector) and no enforcement of existing rules (e.g., masters not enforcing speed limitations of ships).

At the Organisation layer, it can be observed in Figure 4 that the categories of safety management and resources are the most prominent. A lack of guidance, e.g., how to operate in restricted visibility, and limitations of risk assessments, e.g., underestimation of the impact of an automatic flight control system, are important factors in Safety Management. Equipment not being available, e.g., the decision not to install an angle of attack indicator, is most prominent in the resources category.
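Relative frequencies of this kind can be obtained by counting the coded categories and normalising the counts. The sketch below assumes normalisation within each combination of domain and layer, which is one plausible reading of "per layer" and is not confirmed as the exact procedure behind Figure 4. The coded entries are hypothetical and are not SHIELD data.

```python
from collections import Counter, defaultdict

# Hypothetical coded factors as (domain, layer, category); not SHIELD data.
coded_factors = [
    ("aviation", "Acts", "Planning and Decision Making"),
    ("aviation", "Acts", "Communicating"),
    ("aviation", "Acts", "Planning and Decision Making"),
    ("aviation", "Preconditions", "Awareness"),
    ("maritime", "Acts", "Planning and Decision Making"),
    ("maritime", "Preconditions", "Awareness"),
    ("maritime", "Preconditions", "Competence, Skills and Capability"),
    ("maritime", "Preconditions", "Awareness"),
]


def relative_frequencies(coded):
    """Share of each category within every (domain, layer) combination."""
    counts = defaultdict(Counter)
    for domain, layer, category in coded:
        counts[(domain, layer)][category] += 1
    return {
        key: {cat: n / sum(counter.values()) for cat, n in counter.items()}
        for key, counter in counts.items()
    }


frequencies = relative_frequencies(coded_factors)
```

Normalising per domain makes the two domains comparable even though the numbers of analysed aviation and maritime occurrences differ.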

3.2. Evaluation by Stakeholders

Basic statistics composed of the mean and standard deviation (SD) of the evaluation scores of the completed questionnaires (n = 18) are provided in Table 3. Most mean scores are close to 4, indicating that the respondents agree that the dashboard, search functionality and report creation and maintenance are useful for their purposes. Several suggestions were received for the extension of the dashboard and user guidance [23]. Users expressed that they expected that the database can be useful for various purposes in their organisations, including informing management, supporting safety promotion, improving training, improving operational procedures and supporting risk assessment and mitigation and safety assurance.

Table 3.

Evaluation scores of aviation and maritime stakeholders.

3.3. Early Application of SHIELD in the Maritime Domain

The European Maritime Safety Agency (EMSA) has developed a methodology to analyse the findings of the safety investigations reported in the European Marine Casualty Information Platform (EMCIP) [2] to detect potential safety issues. EMSA has recently published a safety analysis on navigation accidents based on EMCIP data concerning passenger, cargo and service ships [27]. The document considered a large number of safety investigations reported in the system (351) and identified nine safety issues: Work/operation methods carried out onboard the vessels; organisational factors; risk assessment; internal and external environment; individual factors; tools and hardware; competences and skills; emergency response and operation planning. The SHIELD taxonomy was used as a tool supporting the classification of contributing factors, thus supporting the subsequent analysis. The taxonomy was found to offer an intuitive and well-documented framework to classify human element data.

The Confidential Human Factors Incident Reporting Programme (CHIRP) for the maritime domain is an independent, confidential incident and near-miss reporting programme devoted to improving safety at sea [3]. CHIRP Maritime has recently begun using the SHIELD HF taxonomy in support of its analysis. CHIRP Maritime appreciates the systematic evaluation of human factors down to the levels of operational leadership and organisational aspects. This extra depth and granularity of analysis gives them more confidence in their findings.

In a maritime case study entitled “Implementation of a Human Factors and Risk-based Investigation suite for Maritime End Users (Organizational Level)” by CalMac Ferries Limited, three incidents were investigated [26]. It was found that SHIELD provides a consistent taxonomy for systematically identifying active and latent failures, which can be applied using existing competencies of maritime end users, without requiring additional training. The analysis identified additional factors with respect to a previous in-house HFACS-based analysis of the incidents by CalMac. In particular, in total, 54 factors were found using SHIELD, which more than doubled the 23 factors found in the earlier in-house analysis.

4. Discussion

The SHIELD taxonomy and database have been developed for the gathering and systematic assessment of human factors in safety occurrences in aviation and maritime operations. The objective is to support designers of systems and operations, risk assessors and managers in easily retrieving and analysing sets of safety occurrences for selected human performance influencing factors, safety events and contextual conditions. This allows learning from narratives of reported occurrences to identify key contributory factors and associated risk reduction measures, determine statistics and set a basis for including human elements in risk models of maritime and aviation operations.

It follows from the largely positive feedback in the questionnaire results, as well as from the appreciation expressed by EMSA, CHIRP Maritime and CalMac, that the developed SHIELD taxonomy and database have been recognized as valuable assets for systematic analysis of human factors in safety occurrences and learning from incidents and accidents as part of safety management. The uptake of SHIELD in the maritime domain is especially prominent. This may be explained by the maritime domain’s relative disadvantage in Human Performance Capability Profile (HPCP) with respect to the aviation domain. This may have caused a larger appetite within the maritime domain for the uptake of SHIELD by key stakeholders, as the aviation domain already has a number of ‘legacy’ taxonomies in use.

The most interesting finding for some of the aviation companies who tried SHIELD was the emergence of insights from analysing a set of accidents or incidents, i.e., learning across events. For example, analysing 30 similar runway incidents revealed similar problems at different airports, with the tempo of operations, equipment availability, and safety risk management as prominent factors at the organisational level. Taken one by one, or even for a single airport, the contributory factors of the events looked no more important than a host of other contenders for safety attention. However, when looking across half a dozen airports, certain underlying problems rose to the surface in clarity and priority.

Similarly, during the analysis of a set of loss of separation incidents from a particular air traffic control centre, it was noticed by the two analysts that ‘Tempo of operations’ came up a number of times in the Organisation layer of the SHIELD taxonomy (underpinning ‘High workload’ in the Preconditions layer), along with several Operational Leadership categories. This raised a question in the minds of the analysts as to whether the traffic load was too high in this unit, such that there was insufficient margin when things did not go to plan, resulting in a loss of separation. This could represent a ‘drift towards danger’ scenario, where the rate of incidents is slowly increasing but goes unnoticed, as the air traffic control centre adapts to each incident individually. Since the incidents differed at the Acts layer and had other attendant preconditions, again this issue might have been missed except by looking deeply across a set of incidents.

From the maritime perspective, SHIELD not only captured all the major factors included in the original accident reports but also identified additional contributing factors that were not previously highlighted. In particular, SHIELD was able to systematically capture organisational and operational leadership issues that were not well identified in the original accident reports. For instance, one of the maritime accidents analysed was the collision between the Hampoel and Atlantic Mermaid vessels, where the main cause of the collision was the Atlantic Mermaid failing to observe the presence of Hampoel, which also failed to take avoiding action [28]. Additional contributing factors included in the accident report were related to technological environment factors, inadequate resources, weather conditions and adverse physiological conditions, amongst others. All the above-mentioned factors were also successfully captured via the application of SHIELD. In addition, SHIELD supported identifying details such as an intermittent fault of one radar for at least 12 months before the collision, a chief officer making an unanswered brief VHF call and a chief officer not following COLREG regulations and not using light and sound signals. In this way, various organisational aspects were captured as contributing factors in this accident.

The above examples demonstrate the utility of applying a taxonomy that has depth, particularly when analysing across incident sets, looking for patterns that are otherwise not immediately obvious. This kind of learning across incidents is one of the main advantages of a database and layered taxonomy such as SHIELD.

Learning from occurrence retrieval in SHIELD can be pursued in various ways. One can search for particular types of operations and find the types of related incidents and accidents, the prominent contributing factors in the four layers of the HF taxonomy, and the prevention, mitigation and learning for the occurrences. One can obtain an overview of the human factors that contribute most to particular types of occurrences. By looking at the details of these factors and the reasoning provided in the classification, a safety analyst or an investigator can build an understanding of what may go wrong in what kinds of conditions and what kinds of operational leadership or organisational aspects may contribute. A company can track what factors affecting human performance are most prominent in incidents occurring in its operations and compare this with average numbers in the industry. As sectors, the maritime and aviation domains can compare statistics and use them for cross-domain learning of best practices. An illustrative cross-domain comparison in Section 3.1 showed several differences between the sectors, such as a higher contribution of inadequate experience, training and proficiency in the maritime domain.
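As a minimal sketch of the kind of overview described above, the following Python fragment computes, per factor code, the share of occurrences in a domain that include that factor. The record layout, the function name `factor_share` and the three example occurrences are hypothetical and do not reflect the actual SHIELD database schema or its contents; only the factor codes are taken from Tables A1 and A2.

```python
from collections import Counter

# Hypothetical records: each occurrence lists the factor codes assigned to it.
# The codes exist in Tables A1 and A2; the occurrences themselves are invented.
occurrences = [
    {"id": "occ-1", "domain": "maritime", "factors": ["AP1", "PAW3", "PPE1"]},
    {"id": "occ-2", "domain": "maritime", "factors": ["AP1", "AD2", "PAW3"]},
    {"id": "occ-3", "domain": "aviation", "factors": ["AC1", "PAW7", "PCO3"]},
]


def factor_share(records, domain):
    """Return, per factor code, the fraction of occurrences in `domain` that include it."""
    selected = [r for r in records if r["domain"] == domain]
    if not selected:
        return {}
    # Count each factor at most once per occurrence.
    counts = Counter(code for r in selected for code in set(r["factors"]))
    return {code: n / len(selected) for code, n in counts.items()}


maritime = factor_share(occurrences, "maritime")
for code, share in sorted(maritime.items(), key=lambda item: (-item[1], item[0])):
    print(f"{code}: {share:.0%}")
```

The same function could be applied to a single company's occurrences and to a whole sector to compare the two sets of shares, provided both sets are large enough for the fractions to be meaningful.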

To strengthen the use of SHIELD, the following further steps are advised: to include larger sets of analysed occurrences, both incidents and accidents, in order to support attaining statistically meaningful results; to apply regular validation for its use in safety management and feedback to design (can the right information be extracted or are other data features needed?); and to develop and include a taxonomy for prevention, mitigation and learning, so that users can more easily find and derive statistics on the types of actions that effectively prevented safety occurrences from becoming worse.

The SHIELD HF taxonomy found its basis in earlier taxonomies stemming from multiple domains, namely space and aviation/ATM operations [10,16,22], and was next developed to suit aviation/ATM and maritime operations. A key contribution of this research has been the structuring of all categories and factors, their explicit definitions, and the guidance and examples for their use. This has been recognized as a clear asset, supporting its use with little training. Given the multi-domain support of the HF taxonomy, we expect that it can be used with minor adaptations in other domains. The use of the overall SHIELD taxonomy in other domains would require extensions of its domain-specific taxonomies though (e.g., type of operation, actor).

Author Contributions

Conceptualization, S.S., B.K., O.T., R.E.K. and S.P.; methodology, S.S., B.K., O.T., R.E.K., B.v.D., L.S., A.K., R.V. and S.P.; software, P.J., A.D. and V.d.V.; validation, S.S., B.K. and B.v.D.; investigation, S.S., B.K., O.T., R.E.K., B.v.D., B.N.d.M., Y.B.A.F., B.B.-T., L.d.W. and S.I.A.; data curation, P.J., B.N.d.M., R.V., B.B.-T. and V.d.V.; writing—original draft preparation, S.S., B.K., B.N.d.M. and S.P.; writing—review and editing, S.S. and P.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was performed as part of the SAFEMODE project. This project received funding from the European Union’s Horizon 2020 Research and Innovation Programme under Grant Agreement N°814961, but this document does not necessarily reflect the views of the European Commission.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, following the procedures documented in report D1.4 “Social, Ethical, Legal, Privacy issues identification and monitoring (final)” (7/10/2022) of the SAFEMODE project. This includes the publication of (anonymised) survey results and statistics of human factors in safety occurrences.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Access to SHIELD can be obtained via the SAFEMODE website, https://safemodeproject.eu (accessed on 20 February 2023).

Acknowledgments

We thank partners in the SAFEMODE project for their support and feedback for the SHIELD development.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. SHIELD HF Taxonomy

The SHIELD HF taxonomy consists of four layers (Acts, Preconditions, Operational Leadership and Organisation), where each layer comprises several categories with a number of factors. A high-level description of the HF taxonomy is provided in Section 2.1 and illustrated in Figure 1. This appendix provides all details, including the codes, titles and definitions of all layers, categories and factors. Furthermore, it provides guidance and examples (in italics) on their use. Many examples are based on actual occurrences that were analysed and stored in SHIELD.
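The coding scheme can be illustrated with a short Python sketch that splits a code into its layer, category and factor. It assumes the pattern visible in Tables A1 and A2: a category code is a string of capital letters whose first letter identifies the layer, and a factor code is the category code followed by a number. The function name `split_code` is hypothetical, and the prefixes of the Operational Leadership and Organisation layers are deliberately left out because they are not shown in these two tables.

```python
import re

# Layer prefixes as they appear in Tables A1 and A2 only. Codes from the other
# two layers would map to None here rather than to a guessed layer name.
LAYER_BY_PREFIX = {"A": "Acts", "P": "Preconditions"}


def split_code(code):
    """Split a code such as 'PEW5' or 'AD' into layer, category and factor."""
    match = re.fullmatch(r"([A-Z]+)(\d*)", code)
    if match is None:
        raise ValueError(f"not a SHIELD-style code: {code!r}")
    category, number = match.groups()
    return {
        "layer": LAYER_BY_PREFIX.get(category[0]),
        "category": category,
        # A bare category code (no number) has no factor part.
        "factor": code if number else None,
    }


print(split_code("PEW5"))
print(split_code("AD"))
```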

Appendix A.1. Acts

The Acts layer describes actions, omissions or intentional deviations from agreed procedures or practices by an operator with an impact on the safety of operations. It consists of five categories including 19 factors (see Table A1).

Table A1. Codes, titles, definitions, and guidance and examples of categories and factors in the Acts layer.

| Code | Title | Definition | Guidance and examples |
|---|---|---|---|
| AP | Perception | The operator does not perceive, or perceives too late or inaccurately, information necessary to formulate a proper action plan or make a correct decision. | |

| AP1 | No/wrong/late visual detection | The operator does not detect, or detects too late or inaccurately, a visual signal necessary to formulate a proper action plan or make a correct decision. | • Controller is late in detecting the deviation of the aircraft. • Poor visual lookout by Officer of Watch (OOW). OOW is busy with other tasks and when looking does not look around the horizon. |

| AP2 | No/wrong/late auditory detection | The operator does not detect, or detects too late or inaccurately, an auditory signal necessary to formulate a proper action plan or make a correct decision. | • The pilot did not hear the heading instruction and did not turn. • The operator did not seem to hear (did not react to) the warning chime. |

| AP3 | No/wrong/late kinaesthetic detection | The operator does not detect, or detects too late or inaccurately, a kinaesthetic signal necessary to formulate a proper action plan or make a correct decision. | • Neither of the pilots made any reference to the buffet during the stall of the aircraft. |

| AP4 | No/wrong/late detection with other senses (e.g., smell, temperature) | The operator does not perceive, or perceives too late or inaccurately, information received with senses different from vision, hearing and touch, which is necessary to formulate a proper action plan or make a correct decision. | • The chief engineer did not smell the overheated engine in time. |

| AD | Planning and Decision Making | The operator has no, late or an incorrect decision or plan to manage the perceived situation. | |

| AD1 | Incorrect decision or plan | The operator elaborates an action plan or makes a decision which is insufficient to manage the situation. | This factor should not be used for situations where the operator decides to not act to manage the situation. In such situations it is advised to use AD3: No decision or plan. • The pilot decided to descend too early for the approach in the mountainous area. • The skipper had decided not to use the outward bound channel but to steer a course parallel with the land. |

| AD2 | Late decision or plan | The operator does not elaborate an action plan or make a decision in time to manage the situation. | • The controller decided to provide a heading instruction to avoid entering other airspace but was too late, leading to an area infringement. • It was only during the final minute that the chief officer switched the helm control to manual steering and sounded the whistle. |

| AD3 | No decision or plan | The operator does not elaborate any action plan or make any decision to manage the situation. | This factor should also be used for situations where the operator decides to not act to manage the situation. • Though he was twice informed of the presence of conflicting traffic, the pilot neither took evasive action nor looked for the conflicting aircraft. • It is apparent that at no stage did the officer of watch consider reducing speed. |

| AI | Intentional Deviation | The operator decides to intentionally deviate from an agreed procedure or practice, including workarounds and sabotage. | |

| AI1 | Workaround in normal conditions | The operator decides to intentionally deviate from an agreed procedure or practice in a normal operating condition. | If it is known that the workaround is used on a regular basis, AI2 “Routine workaround” should be used. • In this particular case the master did not post a lookout during the bridge watch. |

| AI2 | Routine workaround | The operator habitually and intentionally deviates from an agreed procedure or practice on a regular basis. | This factor should only be used if it can be shown that the workaround is used on a regular basis; otherwise use AI1 “Workaround in normal conditions”. • Captain as Pilot Flying also regularly performs Pilot Monitoring tasks. • It was the master’s custom not to post a lookout during the bridge watches. |

| AI3 | Workaround in exceptional conditions | The operator decides to intentionally deviate from an agreed procedure or practice in an exceptional operating condition. | • Pilot had an engine failure and could not glide back to his airfield; he opted to land on the highway. • Becoming concerned about the presence of the fishing vessels, the Filipino officer of watch asked the Chinese second officer to call the fishing vessels in Mandarin to ask them to keep clear. |

| AI4 | Sabotage | The operator decides to intentionally deviate from an agreed procedure or practice in order to create damage to the system or organization. | This code should only be used when the operator intends to create damage. It should not be used in other cases where an operator intentionally deviates from a procedure. • The crash of Germanwings Flight 9525 (24 March 2015) was deliberately caused by the co-pilot. |

| AR | Response Execution | The operator has planned to take an action that is appropriate for the perceived situation, but executes it in a wrong manner, at an inappropriate time or does not execute it at all. | |

| AR1 | Timing error | The operator has planned to take an action that is appropriate for the perceived situation but executes it either too early or too late. | This considers timing errors in the response execution only. Timing errors that are due to late perception should be represented by the category Perception. Timing errors that are due to wrong or late decisions should be represented by the category Planning And Decision Making. • The skipper got distracted and acted too late. |

| AR2 | Sequence error | The operator carries out a series of actions in the wrong sequence. | This considers sequence errors in the response execution of an appropriate plan only, for instance switching two actions in a standard sequence. Sequence errors that are the result of wrong planning should be represented by the category Planning And Decision Making. • The pilot put the gear down prior to flaps 20. |

| AR3 | Right action on the wrong object | The operator has planned to take an action that is appropriate for the perceived situation but—when executing it—selects an object (e.g., lever, knob, button, HMI element) different from the intended one. | • The controller plugged the headphone jack into the wrong socket. |

| AR4 | Wrong action on the right object | The operator selects the correct object (e.g., lever, knob, button, HMI element), but performs an action that is not the correct one. | • Adding information to the flight management system (FMS) was not performed well, causing route dropping. Rebuilding the FMS still caused problems. • The master was not using the automatic radar plotting aid (ARPA) properly to check the other vessels’ movements and obtain the correct closest point of approach. |

| AR5 | Lack of physical coordination | The operator takes an action which is appropriate for the perceived situation, but executes it in a wrong manner, due to a lack of physical coordination. | • The operator did not manage to move the heavy obstacle in time. |

| AR6 | No action executed | The operator has planned to take an action that is appropriate for the perceived situation but does not execute it. | This lack of action should be distinguished from no actions due to problems in the category Planning And Decision Making, such as AD3 (No decision or plan). AR6 should be used if an operator had planned to act, but then did not, for instance because the operator forgot to do so (precondition: PME1). • The crew forgot to turn on the heating of the pitot probes before take-off. |

| AC | Communicating | The operator has planned to take an action that is appropriate for the perceived situation but communicates incorrect or unclear information to other actors or does not communicate at all. | |

| AC1 | Incorrect/unclear transmission of information | The operator transmits to other actors information that is incorrect or unclear, e.g., use of wrong callsign. | • Poor pilot readback of instructions. • Second officer fails to specifically inform commanding officer about the overtaking situation. |

| AC2 | No transmission of information | The operator does not transmit information that is necessary for other actors to operate safely/effectively. | • The controller did not issue a low altitude alert. • The master did not leave night orders. |
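The guidance on choosing between the four Intentional Deviation factors can be sketched as a small decision function. This is an illustration only, not part of the taxonomy: the function and parameter names are hypothetical, and the ordering of the checks is an assumption. In particular, the guidance pairs AI2 with AI1 (a workaround in normal conditions), so the sketch assumes that a deviation in an exceptional condition is coded AI3 regardless of how often it has occurred.

```python
def intentional_deviation_factor(intends_damage, exceptional_condition, shown_routine):
    """Pick an AI factor code for an intentional deviation (illustrative only)."""
    # AI4 is reserved for cases where the operator intends to create damage.
    if intends_damage:
        return "AI4"
    # Assumption: exceptional operating conditions take precedence over routine use.
    if exceptional_condition:
        return "AI3"
    # AI2 only if regular use of the workaround can be shown; otherwise AI1.
    return "AI2" if shown_routine else "AI1"


# The master did not post a lookout on this occasion; no evidence of a habit.
print(intentional_deviation_factor(False, False, False))
# It was the master's custom not to post a lookout.
print(intentional_deviation_factor(False, False, True))
```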

Appendix A.2. Preconditions

The Preconditions layer describes environmental factors or conditions of individual operators affecting human performance and contributing to the issues described in the Acts layer. It consists of 12 categories including 62 factors (see Table A2).

Table A2. Codes, titles, definitions, and guidance and examples of categories and factors in the Preconditions layer.

| Code | Title | Definition | Guidance and examples |
|---|---|---|---|
| PPE | Physical Environment | Conditions in the physical environment that affect the perception and/or performance of the operator, such as vision blockage, noise, vibration, heat, cold, acceleration and bad weather. | |

| PPE1 | Vision affected by environment | Environmental conditions affect the operator’s vision. | • Pilot reported: “When looking for traffic on final, I did not ask my passenger to move his head so I could get a clear view. I believe the passenger blocked my seeing the plane on final.” • Due to very poor visibility and fog patches it was very difficult for the master or pilot to have a clear view of the incoming vessels. |

| PPE2 | Operator movement affected by environment | Environmental conditions affect the operator’s movement. | This factor should be used only if the movement of the human operator is being affected. It should not be used if the movement of the operation (aircraft/ship) is affected by the environment. • The repair was complicated by the small space where the maintenance operator had to do the work. |

| PPE3 | Hearing affected by environment | Environmental conditions affect the operator’s ability to hear. | • Instructor mentions: “Having my ear piece in the ear facing the Local Controller I was not hearing their transmissions very well”. • The engines were very loud. |

| PPE4 | Mental processing affected by environment | Environmental conditions affect the operator’s ability to mentally process or think about information. | • I could not think clearly due to the noise. |

| PPE5 | Heat or cold stress | Exposure to heat or cold degrades the operator’s performance capabilities. | • I could not handle well due to the frost. |

| PPE6 | Operation more difficult due to weather and environment | Weather conditions and/or environment make controlling the operation more difficult. | It is advised to use other PPE factors if the weather leads to a specific condition that affects the perception or performance of an operator. • Difficult approach due to the nearby mountains. • The anchor dragged due to the wind speed increasing to over 50 kts and the sea swell. |

| PPE7 | Acceleration | Forces acting on a body by acceleration or gravity degrade the operator’s performance capabilities. | • The pilot’s performance was degraded by the large acceleration. |

| PPE8 | Vibration stress | Excessive exposure to vibration degrades operators’ physical or cognitive performance. | • I could not control well due to the excessive vibration. |

| PPE9 | Long term isolation | An individual is subject to long-term isolation, and this degrades operator performance. | • Being alone that long affected my reasoning. |

| PEW | Equipment and Workplace | Conditions in the technological environment that affect the performance of the operator, such as problems due to ergonomics, HMI, automation, working position and equipment functioning. | |

| PEW1 | Ergonomics and human-machine interface issues | The ergonomics of tools and the human-machine interface, including controls, switches, displays, warning systems and symbology, degrade the operator’s performance. | • The conflict window was full and the predicted conflict between the aircraft that the controller was interacting with appeared off screen. The controller could not see the conflict in the list. • The data link failed to send and the error message was cryptic and partly obscured. |

| PEW2 | Technology creates an unsafe situation | The design, malfunction, failure, symbology, logic or other aspects of technical/automated systems degrade the operator’s performance. | Problems with communication systems are addressed by PEW5 “Communication equipment inadequate”. • The false alert of the ground proximity warning system caused the crew to react on it, and led to confusion about the situation. • Skipper lost the echo of the ship due to interference. |

| PEW3 | Workspace or working position incompatible with operation | The interior or exterior workspace layout or the working position are incompatible with the task requirements of the operator. | Interior workspace refers to physical layout of the building, cabin, bridge, machine room or workspace and the offices within which people are expected to function. Exterior workspace refers to the physical layout of the exterior of the building/ship/aircraft. • Difficult to see the Hold Point location from the tower, due to its height. • Once seated in the bridge chair, visibility was restricted due to the poor height and angle of the chair itself. |

| PEW4 | Personal protective equipment interference | Personal protection equipment (helmets, suits, gloves, etc.) interferes with normal duties of the operator. | • The gloves restricted the fine handling of the apparatus. |

| PEW5 | Communication equipment inadequate | Communication equipment is inadequate or unavailable to support task demands. Communications can be voice, data or multi-sensory. | This factor only considers communication systems that are used for communicating information from one operator to another, but not navigation and surveillance systems (use PEW2 for those). • The air traffic control frequency was busy, and the pilot could not contact the controller. • The bridge/engine room communication facilities were extremely limited. |

| PEW6 | Fuels or materials | Working with fuels, chemicals or materials degrades the operator’s performance. | • Working with the chemicals degraded their performance. |

| PCO | Interpersonal Communication | Conditions affecting communication in operations, such as language differences, non-standard terminology, and impact of rank differences. | |

| PCO1 | Briefing or handover inadequate | Individual or team has not aptly completed the briefing or handover of an activity. | • The initial argument about which noise abatement procedure was correct happened at the taxiing stage. This might have been avoided had the pre-flight briefing been more thorough. • Information was missing when the watch was handed over. |

| PCO2 | Inadequate communication due to team members’ rank or position | Differences in rank or position within the team limit or jeopardize team communication. | • Captain did not take the chief officer’s warning seriously, explaining he was more experienced in these cases. • During the passage, none of the bridge team questioned the pilot about why he had allowed the ship to leave the radar reference line. |

| PCO3 | Language difficulties | Communication between operators with different native languages degrades operator’s performance. | • Language issues prevented the conflict resolution and contributed to the incident since the pilot did not fully understand the clearance to hold short. • The pilot did not seek clarification, and he was isolated from the decision-making process during the discussions between the master and third officer in their native language. |

| PCO4 | Non-standard or complex communication | Operators use terminology/phrases or hand signals differing from standards and training, or they use complex messages that may lead to misunderstanding. | • The controller used confusing phraseology, which was not well understood by the pilot. |

| PTG | Team/Group | Conditions in the functioning of a team/group that affect the performance of operators, such as working towards different goals, no cross-check, no adaptation in demanding situations, and group think. | |

| PTG1 | Team working towards different goals | Team members are working towards different goals, leading to loss of shared situational awareness and not anticipating potential problems. | • There was no agreed cohesive plan of the approach, with each person involved having their own ideas about what was going to happen. • The third engineer did not consult with the chief engineer, and the chief engineer did not seek approval from the bridge before stopping the engines and effectively removing engine power from the master without knowing the navigational situation. |

| PTG2 | No cross-check and speaking-up by team members | Decisions or actions by team members are not cross-checked. There is no multi-person approach to execution of critical tasks/procedures and no communication of concerns, not necessarily due to rank or position. | If the lack of communication is due to differences in team members’ rank or position, it is advised to use factor PCO2 “Inadequate communication due to team members’ rank or position”. • No cross-check by captain of instrument settings made by first officer. • Captain did not speak up when the pilot failed to verbalize-verify-monitor the rest of the approach on the flight management system. |

| PTG3 | No monitoring and speaking-up of team status and functioning | Team members do not monitor the status and functioning of each other for signs of stress, fatigue, complacency, and task saturation, or do not speak up if status and functioning are monitored as being incorrect. | • As a crew we became laser-focused on separate indications and failed to properly communicate our individual loss of situational awareness; we were both in the red. • Other senior officers onboard were aware that the master had been drinking, but took no action. |

| PTG4 | No adaptation of team performance in demanding situation | Team does not adapt to demanding circumstances. There is no change in team roles and task allocation, there is no team prioritisation of plans and tasks, no workload management, and no management of interruptions and distractions. | • Difficult procedure and the first officer was not experienced for it. Captain should have taken over controls. • The commanding officer remained at the end of the bridge, physically remote from the master, even though the company’s blind pilotage procedure was no longer being followed. The helmsman was unsure of his new role, which resulted in him becoming disengaged from the bridge team. Consequently, the cohesive structure of the bridge team was lost. |

| PTG5 | Long term team confinement | Long-term close proximity or confinement degrades team functioning and operator performance. | • Stress and discomfort were manifest in the crew after the long journey. |

| PTG6 | Group think | Excessive tendency towards group, team or organization consensus and conformity over independent critical analysis and decision making degrades operator performance. | • There was a lack of independent critical analysis due to conformity pressure of the bridge team. |

| PER | Misperception | Misperception conditions leading to misinterpretation, such as motion illusion, visual illusion, and misperception of changing environments or instruments. | |

| PER1 | Motion illusion | Erroneous physical sensation of orientation, motion or acceleration by the operator. | • The pilot was acting at the reverse roll following the previous correction due to a false sensation. |

| PER2 | Visual illusion | Erroneous perception of orientation, motion or acceleration following visual stimuli. | • Not appreciating the effect of the off-axis window at the working position. |

| PER3 | Misperception of changing environment | Misperceived or misjudged altitude, separation, speed, closure rate, road/sea conditions, aircraft/vehicle location within the performance envelope. | • As the aircraft turned, its attitude to the wind changed and its flight performance was affected. The controller did not realise this until it was too late. • The apparent proximity of the looming cliffs which surround the bay caused the master to misjudge the vessel’s distance off the shore. |

| PER4 | Misinterpreted or misread instrument | Misread, misinterpreted or not recognized significance of correct instrument reading. | • Landing gear not down alert was not correctly interpreted. • The pilot was reading the electronic bearing line for the course incorrectly, forgetting that the radar origin was off-centred rather than centred. |

| PAW | Awareness | Lack of focussed and appropriate awareness functions leads to misinterpretation of the operation by an operator, such as channelized attention, confusion, distraction, inattention, being lost, prevalence of expectations, or using an unsuitable mental model. | |

| PAW1 | Channelized attention | Operator focuses all attention on a limited number of cues and excludes other cues of an equal or higher priority. This includes tunnel vision. | • Captain focused on the flight management system, while maintaining altitude is more important, and key for his role. • While the third officer relied on the electronic chart display and information system (ECDIS) as the primary means of navigation, he did not appreciate the extent to which he needed to monitor the ship’s position and projected track in relation to the planned track and surrounding hazards. |

| PAW2 | Confusion | Operator does not maintain a cohesive awareness of events and required actions, and this leads to confusion. | • With the airplane in a left descending turn and air traffic control talking on the radio directing us to turn back to the right, I began to feel disoriented and uncomfortable. • The action taken by the skipper of vessel A, which put the vessels on a collision course, was not expected by the master of vessel B and confused him. |

| PAW3 | Distraction | Interruption and/or inappropriate redirection of operator’s attention. | • The pilot was distracted by the presence of his passenger. • The chief officer was distracted by the constant VHF radio conversations. |

| PAW4 | Inattention | Operator is not alert/ready to process immediately available information. | • Controller’s attention was not fully on the pilot at the time and maybe he only heard what he expected to hear. • The master relaxed his vigilance when the pilot was on board. |

| PAW5 | Geographically lost | The operator perceives himself/herself to be at a location different from the one where s/he actually is. | • Pilot was attempting to land on a road he perceived to be the runway. It was raining, cloud base was low, and it was dark. |

| PAW6 | Unsuitable mental model | Operator uses an unsuitable mental model to integrate information and arrives at a wrong understanding of the situation (e.g., wrong understanding of automation behaviour). | • Following the autopilot disconnect, the pilot flying almost consistently applied destabilizing nose-up control inputs and the crew did not recognize the stall condition. |

| PAW7 | Pre-conceived notion or expectancy | Prevalence of expectations on a certain course of action regardless of other cues degrades operator performance. | • The local controller expected aircraft Y to exit via C4 and did not check it had actually done so. • This course alteration resulted in movement towards the right-hand bank, since the selected course was set very close to the embankment. The pilot was aware and tolerated this because he assumed that it would be compensated subsequently or as they passed through the bend. |

| PME | Memory | Memory issues leading to forgetting, inaccurate recall, or using expired information. | |

| PME1 | Forget actions/intentions | The operator has a temporary memory lapse and forgets planned actions or intentions. | • The controller had previously planned things correctly, then after being busy with other aircraft, forgot about aircraft X when executing aircraft Y descent. • At about 0055, the chief officer was required to call the pilot to provide information about the expected arrival time, but it slipped. |

| PME2 | No/inaccurate recall of information | The operator does not retrieve or recall information accurately from memory. | • Pilot mentions: “The captain then asked me if we had entered the ILS Critical Area and neither one of us could remember passing the sign for one.” |

| PME3 | Negative habit | The operator uses a highly learned behaviour from past experiences which is inadequate to handle the present situation. | • The pilot in command (PIC) deselected autothrottle (as is his habit) and refused the first officer (FO) offer to select a vertical mode for climb (also his habit) while he hand-flew the climb out. Because of the PIC’s refusal to use autothrottle, vertical mode control, or autopilot during climbout from a very busy terminal area, the FO was forced to spend additional effort monitoring basic flying in between multiple frequency changes and clearance readbacks. • GPS Guard zone radius set to 0,3’ which is a standard value for the crew since they have previous experiences with false alarms with guard zones of smaller size. |

| PMW | Mental Workload | Amount or intensity of effort or information processing in a task degrades the operator’s performance. | |

| PMW1 | High workload | High workload degrades the operator’s performance. | • Dealing with two resolution advisories such that approach becomes unstable, no time to properly manage. • The master tried to do nearly everything himself, which caused him to become overloaded and to miss the fact that the engines had been left on half ahead inadvertently, and to misinterpret the speed of the vessel and the situation in general. |

| PMW2 | Low workload | Low workload imposes idleness and waiting, and it degrades operator’s performance. | • The low workload at the remote airfield affected the vigilance of the controller. |

| PMW3 | Information processing overload | Available mental resources are insufficient to process the amount of complex information. | • The pilots had difficulty integrating the cockpit displays and understanding the emergency situation. |

| PMW4 | Startle effect | Available mental resources are insufficient to process sudden, high-intensity, unexpected information, leading to the startle effect. | • The master was clearly surprised by the event and not able to effectively respond for a while. |

| PPF | Personal Factors | Personal factors affect operator performance, such as emotional state, personality style, motivation, psychological condition, confidence level, complacency. | |

| PPF1 | Emotional state | Strong positive or negative emotion degrades operator’s performance. | • He (chief officer) was upset about the death of his father, worried about the health of his pregnant wife. • When the first crewmember collapsed, his colleague reacted impulsively to the emergency by entering the enclosed space to rescue him without fully assessing the risk. |

| PPF2 | Personality style | Operator’s personality traits (e.g., authoritarian, over-conservative, impulsive, invulnerable, submissive) contribute to degraded performance and/or negatively affect interaction with other team members. | • This pilot in command is known for difficulties in the cockpit and by most accounts is unwilling or unable to change his habits or cockpit demeanor […] indifference and even hostility to second in command input. |

| PPF3 | Confidence level | Inadequate estimation by the operator of personal capability, of the capability of others or the capability of equipment degrades performance. | • I should have also recognized that my fellow crew member was a fairly low time First Officer and given more guidance/technique on how I might fly a visual. • The late detection of the conflict was due to an over-reliance on automatic identification system (AIS) information shown on the display. |

| PPF4 | Performance/peer pressure | Threat to self-image and feeling of pressure to perform an activity despite concerns about the associated risk degrade performance. | • Controller wanted to avoid contributing to delay and stated “but in hindsight delaying a few aircraft would have been a lot easier to explain to the supervisors and my coworkers as well as being safer.” • The third officer sought clarification about the use of sound signals and was advised by the chief officer not to sound the horn unless traffic was encountered. On previous ships he had sailed on, the horn was used in restricted visibility, regardless of traffic density. The advice caused the third officer concern, but he did not wish to question his superiors and, with reluctance, he carried on as instructed. |

| PPF5 | Motivation | Excessive or inadequate motivation to accomplish a task or prevalence of personal goals over organization’s goals degrades operator performance. | • Pilot mentions pressure to build total time and fly that particular aircraft. |

| PPF6 | Pre-existing psychological condition | Pre-existing acknowledged personality, psychological or psychosocial disorder/problem degrades operator performance. | • The crash of Germanwings Flight 9525 was deliberately caused by the co-pilot, who had previously been treated for suicidal tendencies and declared “unfit to work” by his doctor. |

| PPF7 | Risk underestimation | False sense of safety or complacency leads the operator to ignore hazards or to underestimate the risks associated with them, thus degrading performance. | • The flight crew did not expect to encounter abnormal severe situations include vertical wind, stall warning; so due to lack of adequate situational awareness the flight crew did not [apply] full engine power and flap to solve low energy situation of the aircraft. • Neither vessel reduced speed on entering fog, even though visibility reduced to less than 2 cables. |

| PPC | Physiological Condition | Physiological/physical conditions affect operator performance, such as injury, illness, fatigue, burnout, medical conditions. | |

| PPC1 | Injury or illness existed during operation | Pre-existing physical illness, injury, deficit or diminished physical capability due to the injury, illness or deficit, degrades operator performance. This includes situations where the operator intentionally performs duties with a known (disqualifying) medical condition. | • The officer of watch wore glasses for reading, but had met the acuity standards without the need for wearing them. As a result of the collision, he had his eyes re-tested, because he felt that he could not see the radar screen clearly without his glasses. The doctor who re-tested his eyesight concluded that it was surprising that his eyesight problem had not previously been identified. • The chief officer reported that, at some time before 03:00, he had started suffering from stomach cramps, and went to his cabin to use the toilet; his cabin was two decks below the bridge and towards the aft end of the accommodation. |

| PPC2 | Fatigue | Diminished mental capability due to fatigue, restricted or shortened sleep, mental activity during prolonged wakefulness or disturbance of circadian rhythm degrades operator performance. | • The watchkeepers on both vessels had worked in excess of the hours permitted by Standards of Training, Certification and Watchkeeping (STCW) over the previous 2 days. • The commanding officer was very tired, so tired that he remained asleep for over an hour. |

| PPC3 | Mentally exhausted (burnout) | Exhaustion associated with the wearing effects of high operational and/or lifestyle tempo, in which operational requirements impinge on the ability to satisfy personal requirements degrades operator performance. | • It became apparent that his performance had been affected by a burn-out. |

| PPC4 | Hypoxia | Insufficient oxygen supply to the body impairs operator performance. | • The crew was incapacitated by hypoxia due to a lack of cabin pressurisation. |

| PPC5 | Decompression sickness | Development of nitrogen bubbles in the blood and tissues as a result of too rapid a reduction of atmospheric pressure causes the operator chest pains, bends, difficulty breathing, skin irritation and cramps. | • The crew suffered from decompression sickness due to the rapid decompression of the aircraft. |

| PDN | Drugs and Nutrition | Use of drugs, alcohol, medication or insufficient nutrition affects operator performance. | |

| PDN1 | Recreational drugs and alcohol | Recreational use of drugs or alcohol impairs or interferes with operator performance. | • Post-accident toxicological tests indicated one pilot had smoked marijuana within the 24 h before the accident. • The second officer considered that the master was tired and drunk. |

| PDN2 | Prescribed drugs or OTC medications | Use of prescribed drugs or over-the-counter medications or supplements interferes with operator task performance. | • Diphenhydramine, a first-generation sedative antihistaminic, with potentially impairing side effects, was detected in a toxicology analysis following the accident. |

| PDN3 | Inadequate nutrition, hydration or dietary practice | Inadequate nutritional state, hydration or dietary practice degrades operator performance. | • The crew had not had a meal for over six hours. |

| PCS | Competence, Skills and Capability | Competence, skills, strength or biomechanical capabilities are insufficient to perform a task well. | |

| PCS1 | Inadequate experience | Operator does not have sufficient experience with a task at hand. | • Operator does not have the experience with the instrument landing system characteristics of the plane. • The master was new to the company and to the vessel, and had been in command of her for just a few hours. |

| PCS2 | Lack of proficiency | Operator capability to accomplish a task does not meet the performance levels expected from her/his skill level. | • The flight crew’s actions for stall recovery were not according to abnormal procedures of the aircraft. Pilot did not use flap 15 and maximum engine power to recover from the stall condition. • After initial reactions that depend upon basic airmanship, it was expected that it would be rapidly diagnosed by pilots and managed where necessary by precautionary measures on the pitch attitude and the thrust, as indicated in the associated procedure. |

| PCS3 | Inadequate training or currency | Operator does not meet general training or recurring training requirements for the task assigned to her/him. | • Low exposure time in training to stall phenomena, stall warnings and buffet. • The chief officer had received no crew resource management or bridge team management training. |

| PCS4 | Body size, strength or coordination limitations | Body size, strength, dexterity, coordination, mobility or other biomechanical limitations of the operator degrade the task performance of the operator. | • The crew member did not have enough strength to open the pressurized door. |
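The codes in these tables follow a visible pattern: a letter prefix for the category (e.g., PMW) followed by a number for the factor (e.g., PMW4). A minimal Python sketch of how coded occurrences could be split and tallied on that basis is given below. It is an illustration only, not part of SHIELD: the names `TaxonomyCode`, `parse_code` and `group_by_category` are hypothetical, and the title dictionary covers only a subset of the categories listed in this appendix.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Optional

# Category codes and titles copied from the tables in this appendix (subset only).
CATEGORY_TITLES = {
    "PME": "Memory",
    "PMW": "Mental Workload",
    "PPF": "Personal Factors",
    "PPC": "Physiological Condition",
    "PDN": "Drugs and Nutrition",
    "PCS": "Competence, Skills and Capability",
    "LP": "Personnel Leadership",
    "LO": "Operations Planning",
}


class TaxonomyCode(NamedTuple):
    """Hypothetical container: category prefix plus optional factor number."""
    category: str
    factor: Optional[int]  # None when the code denotes a whole category


# Assumed code shape: uppercase letters, optionally followed by digits.
_CODE_RE = re.compile(r"([A-Z]+)(\d*)")


def parse_code(code: str) -> TaxonomyCode:
    """Split a code such as 'PMW4' into ('PMW', 4); 'LP' gives ('LP', None)."""
    match = _CODE_RE.fullmatch(code.strip())
    if match is None:
        raise ValueError(f"Unrecognised taxonomy code: {code!r}")
    letters, digits = match.groups()
    return TaxonomyCode(letters, int(digits) if digits else None)


def group_by_category(codes: Iterable[str]) -> Dict[str, List[int]]:
    """Group factor numbers by category prefix, sorted within each category."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for code in codes:
        parsed = parse_code(code)
        if parsed.factor is not None:
            grouped[parsed.category].append(parsed.factor)
    return {category: sorted(factors) for category, factors in grouped.items()}
```

For instance, `group_by_category(["PPC2", "PMW1", "PPC4"])` groups the two Physiological Condition factors under `"PPC"` and the single Mental Workload factor under `"PMW"`, which is the kind of aggregation needed before comparing factor frequencies across occurrences.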

Appendix A.3. Operational Leadership

The Operational Leadership layer in the taxonomy concerns decisions or policies of the operations leader that affect the practices, conditions or individual actions of operators, contributing to unsafe situations. It consists of three categories including 15 factors (see Table A3).

Table A3.

Codes, titles, definitions, and guidance and examples of categories and factors in the Operational Leadership layer.

| LP | Personnel Leadership | Inadequate leadership and personnel management by operations leader, including no correction of risky behaviour, lack of feedback on safety reporting, no role model, and personality conflicts. | |

| LP1 | No personnel measures against regular risky behaviour | An operations leader does not identify an operator who regularly exhibits risky behaviours or does not institute the necessary remedial actions. | • The master did not institute remedial actions against the repeated risky acts of the third officer. |

| LP2 | Inappropriate behaviour affects learning | Inappropriate behaviour of operations leader affects learning by operators, which manifests itself in actions that are either inappropriate to their skill level or violate standard procedures. | • The loose interpretation of the rules by the chief officer contributed to the missed checks of the engineer. |

| LP3 | Personality conflict | A “personality conflict” exists between an operations leader and an operator. | • The operator was no longer on speaking terms with the master. |

| LP4 | Lack of feedback on safety reporting | Operations leader does not provide feedback to operator following his/her provision of information on a potential safety issue. | • The operator did not get meaningful feedback on her safety concerns. |

| LO | Operations Planning | Issues in the operations planning by the operations leader, including inadequate risk assessment, inadequate team composition, inappropriate pressure to perform a task, and directed task with inadequate qualification, experience, or equipment. | |