Safety of Automated Agricultural Machineries: A Systematic Literature Review

Abstract

1. Introduction

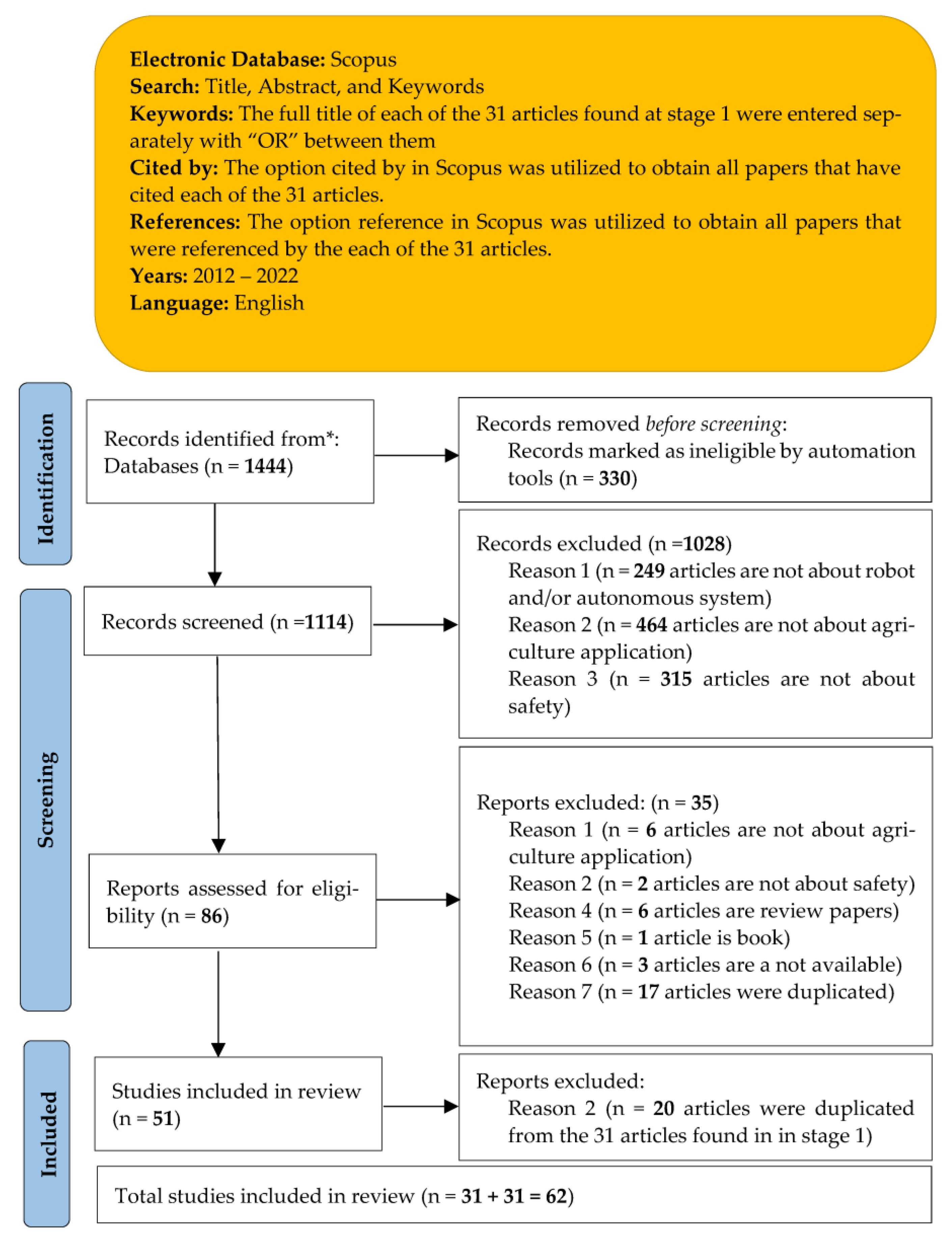

2. Materials and Methods

2.1. Identification

2.2. Screening

- Article is about robots and/or autonomous systems;

- Article is about agriculture applications;

- Article is about safety.

2.3. Eligibility

- The full text of the article is available;

- Article is about automated and autonomous systems;

- Article is about agriculture applications;

- Article is about safety;

- Article is not a review paper;

- Article is not a book.
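The screening and eligibility criteria above amount to a two-stage filter over candidate records. The following is a minimal sketch of that logic, not the authors' actual tooling: the `Record` fields, function names, and sample records are hypothetical, and the yes/no judgments are assumed to have been assigned by a human reviewer beforehand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One candidate article with reviewer-assigned yes/no judgments (hypothetical fields)."""
    title: str
    about_automation: bool      # robots, automated, or autonomous systems
    about_agriculture: bool
    about_safety: bool
    full_text_available: bool
    is_review: bool
    is_book: bool


def passes_screening(r: Record) -> bool:
    """Screening stage: all three topical criteria must hold."""
    return r.about_automation and r.about_agriculture and r.about_safety


def passes_eligibility(r: Record) -> bool:
    """Eligibility stage: topical criteria plus availability and document type."""
    return (
        r.full_text_available
        and passes_screening(r)
        and not r.is_review
        and not r.is_book
    )


# Made-up records, for illustration only.
candidates = [
    Record("Obstacle detection for a robot tractor", True, True, True, True, False, False),
    Record("Survey of agricultural robot safety", True, True, True, True, True, False),
    Record("Warehouse robot collision avoidance", True, False, True, True, False, False),
]

screened = [r for r in candidates if passes_screening(r)]
included = [r for r in screened if passes_eligibility(r)]

print(len(candidates), len(screened), len(included))
```

Keeping the two stages as separate predicates mirrors the way a PRISMA-style flow reports how many records were dropped at each step.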

2.4. Additional Review

3. Results

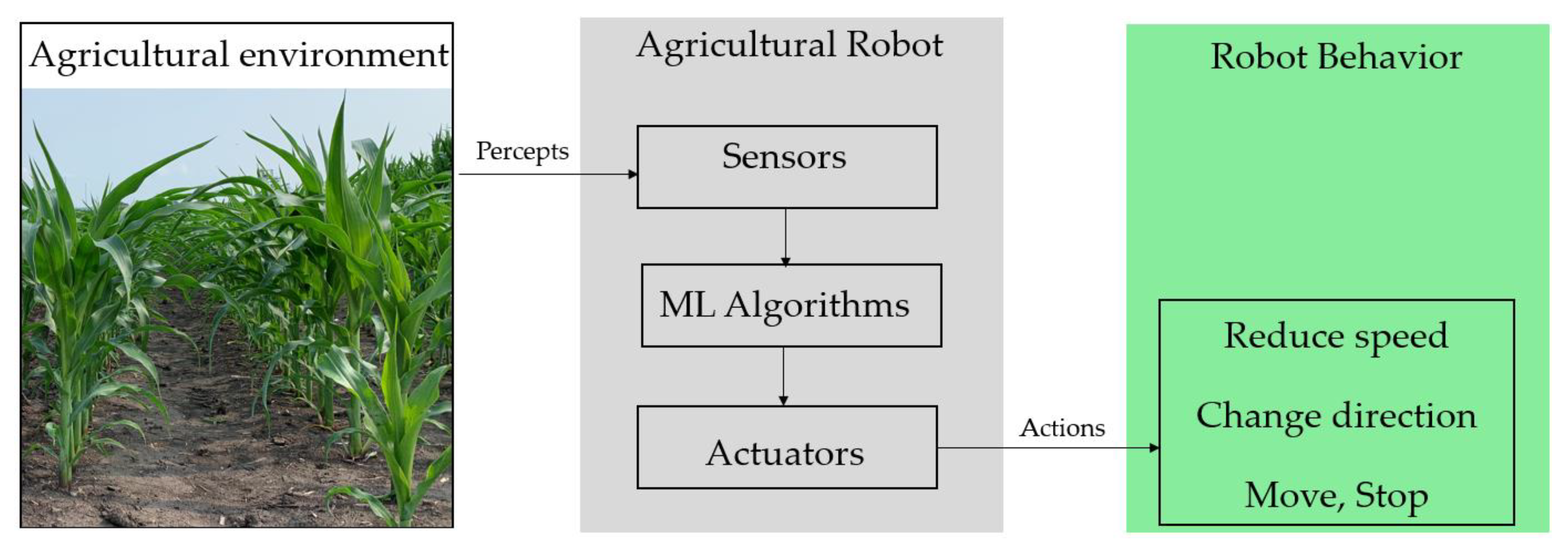

3.1. Environmental Perception

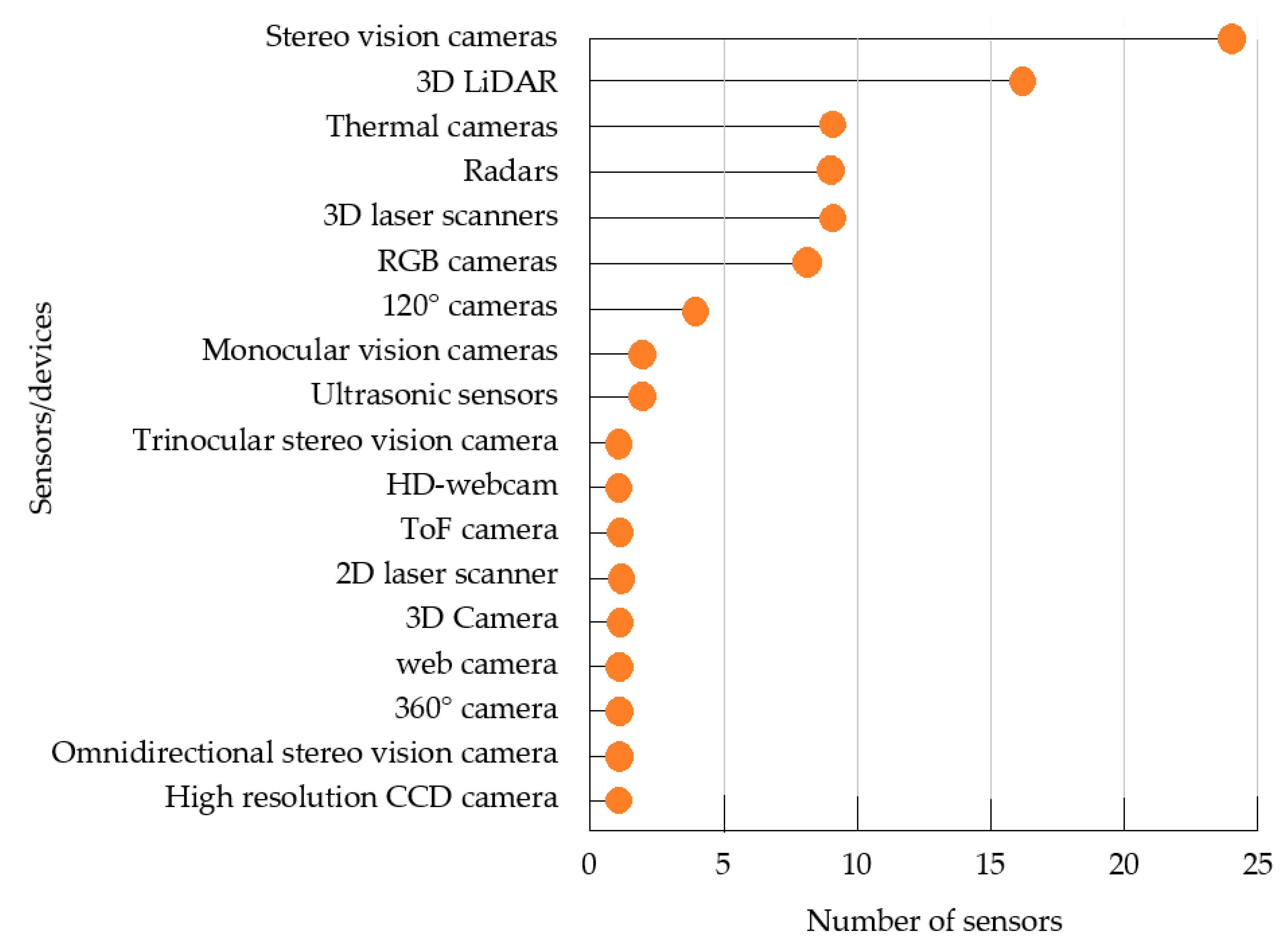

3.1.1. Single Perception Sensor

3.1.2. Multiple Perception Sensors

3.1.3. Datasets and Algorithms

3.1.4. Sensing Strategies

3.2. Risk Assessment and Risk Mitigation

3.3. Human Factors and Ergonomics

4. Discussion

- Environmental perception: The reviewed studies fall into four groups: (1) using a single perception sensor to detect and avoid obstacles; (2) using multiple sensors for obstacle detection and avoidance; (3) building datasets of agricultural environments (covering all types of obstacles) so that the perception systems of automated agricultural machines can be sufficiently trained and subsequently perform well in detecting obstacles; and (4) investigating external ways to improve sensor performance. Most of these studies focused exclusively on obstacle detection and overlooked how the machine reacts afterward. Future research should therefore address how automated agricultural machines can robustly avoid obstacles once they are detected and continue performing their tasks without downtime.

- Risk assessment, hazard analysis, and standards: Because current risk assessment and hazard analysis methods rely on pre-existing knowledge of the equipment, they cannot be effective for new technologies such as automated agricultural machines, for which such data are not available. A promising research direction is the development of real-time situational safety risk assessment methods for these machines. The recommendations in the ISO 18497 standard are valuable, but they apply only to field operations and overlook other situations, including repairs, travel on roadways, mounting and dismounting equipment, and work in farmyards and barns. The standard also lacks guidelines for dealing with hazardous deformable terrain. As a result, full compliance with the current ISO 18497 requirements cannot guarantee the safety of automated agricultural machines. The requirements should be (1) improved so that compliance effectively ensures machine safety and (2) expanded to cover all other areas where automated agricultural machines are likely to be used. ISO 18497 is currently under review, and a future version is expected to be released. Regulatory frameworks and the ability to test the functionalities of automated agricultural machines within a reliable software environment are also efficient ways to mitigate risks.

- Human factors and ergonomics: (1) Testing the interaction between an automated agricultural machine and a human within a simulated environment helps identify safety concerns in the machine’s design in advance, so that the design can be improved before the machine reaches users. (2) Knowing the foreseeable human activity associated with a particular task when interacting with an automated agricultural machine is essential to improving the design of the machine and ensuring safe human–robot interaction. Future simulated-environment experiments should account for the wide variety of human body sizes.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- United Nations (UN). World Population Prospects 2019. 2019. Available online: http://www.ncbi.nlm.nih.gov/pubmed/12283219 (accessed on 3 December 2022).

- Food and Agriculture Organization of the United Nations. Sustainable Development Goals. Available online: https://www.fao.org/sustainable-development-goals/indicators/211/en/ (accessed on 1 December 2022).

- FAO. Migration, Agriculture and Rural Development. Addressing the Root Causes of Migration and Harnessing its Potential for Development. 2016. Available online: http://www.fao.org/3/a-i6064e.pdf (accessed on 3 December 2022).

- Ramin, S.R.; Weltzien, C.A.; Hameed, I. Research and development in agricultural robotics: A perspective of digital farming. Int. J. Agric. Biol. Eng. 2018, 11, 4278.

- Lytridis, C.; Kaburlasos, V.G.; Pachidis, T. An overview of cooperative robotics in agriculture. Agronomy 2021, 11, 1818.

- Drewry, J.L.; Shutske, J.M.; Trechter, D.; Luck, B.D.; Pitman, L. Assessment of digital technology adoption and access barriers among crop, dairy and livestock producers in Wisconsin. Comput. Electron. Agric. 2019, 165, 104960.

- Rial-Lovera, R. Agricultural Robots: Drivers, barriers and opportunities for adoption. In Proceedings of the 14th International Conference on Precision Agriculture, Montreal, QC, Canada, 24–27 June 2018; pp. 1–5.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Tranfield, D.; Denyer, D.; Smart, P. Towards a Methodology for Developing Evidence-Informed Management Knowledge by Means of Systematic Review. Br. J. Manag. 2003, 14, 207–222.

- Gualtieri, L.; Rauch, E.; Vidoni, R. Emerging research fields in safety and ergonomics in industrial collaborative robotics: A systematic literature review. Robot. Comput. Integr. Manuf. 2020, 67, 101998.

- Pishgar, M.; Issa, S.F.; Sietsema, M.; Pratap, P.; Darabi, H. Redeca: A novel framework to review artificial intelligence and its applications in occupational safety and health. Int. J. Environ. Res. Public Health 2021, 18, 6705.

- Reina, G.; Milella, A.; Rouveure, R.; Nielsen, M.; Worst, R.; Blas, M.R. Ambient awareness for agricultural robotic vehicles. Biosyst. Eng. 2016, 146, 114–132.

- Lee, C.; Stefan Lang, A.; Heinz, B. Designing a Perception System for Safe Autonomous Operations in Agriculture. In Proceedings of the American Society of Agricultural and Biological Engineers Annual International Meeting 2019, Boston, MA, USA, 7–10 July 2019.

- Freitas, G.; Hamner, B.; Bergerman, M.; Singh, S. A practical obstacle detection system for autonomous orchard vehicles. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 1–12 October 2012; pp. 3391–3398.

- Kohanbash, D.; Bergerman, M.; Lewis, K.M.; Moorehead, S.J. A safety architecture for autonomous agricultural vehicles. In Proceedings of the American Society of Agricultural and Biological Engineers Annual International Meeting 2012, Dallas, TX, USA, 29 July–1 August 2012; pp. 686–694.

- Bellone, M.; Reina, G.; Giannoccaro, N.I.; Spedicato, L. 3D traversability awareness for rough terrain mobile robots. Sens. Rev. 2014, 34, 220–232.

- Reina, G.; Milella, A.; Underwood, J. Self-learning classification of radar features for scene understanding. Robot. Auton. Syst. 2012, 60, 1377–1388.

- Dvorak, J.S.; Stone, M.L.; Self, K.P. Object detection for agricultural and construction environments using an ultrasonic sensor. J. Agric. Saf. Health 2016, 22, 107–119.

- Yang, L.; Noguchi, N. Human detection for a robot tractor using omni-directional stereo vision. Comput. Electron. Agric. 2012, 89, 116–125.

- Ross, P.; English, A.; Ball, D.; Upcroft, B.; Corke, P. Online novelty-based visual obstacle detection for field robotics. In Proceedings of the IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 3935–3940.

- Fleischmann, P.; Berns, K. A stereo vision based obstacle detection system for agricultural applications. In Field and Service Robotics: Results of the 10th International Conference; Springer: Cham, Switzerland, 2016.

- Campos, Y.; Sossa, H.; Pajares, G. Spatio-temporal analysis for obstacle detection in agricultural videos. Appl. Soft Comput. J. 2016, 45, 86–97.

- Yan, J.; Liu, Y. A Stereo Visual Obstacle Detection Approach Using Fuzzy Logic and Neural Network in Agriculture. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics, Kuala Lumpur, Malaysia, 12–15 December 2018; pp. 1539–1544.

- Oksanen, T. Laser scanner based collision prevention system for autonomous agricultural tractor. Agron. Res. 2015, 13, 167–172.

- Inoue, K.; Kaizu, Y.; Igarashi, S.; Imou, K. The development of autonomous navigation and obstacle avoidance for a robotic mower using machine vision technique. IFAC Pap. 2019, 52, 173–177.

- Discant, A.; Rogozan, A.; Rusu, C.; Bensrhair, A. Sensors for obstacle detection—A survey. In Proceedings of the ISSE 2007—30th International Spring Seminar on Electronics Technology 2007, Cluj-Napoca, Romania, 9–13 May 2007; pp. 100–105.

- Rouveure, R.; Nielsen, M.; Petersen, A. The QUAD-AV Project: Multi-sensory approach for obstacle detection in agricultural autonomous robotics. In Proceedings of the International Conference of Agricultural Engineering CIGR-AgEng 2012, Valencia, Spain, 8–12 July 2012; pp. 1–6.

- Christiansen, P.; Hansen, M.K.; Steen, K.A.; Karstoft, H.; Jorgensen, R.N. Advanced sensor platform for human detection and protection in autonomous farming. Precis. Agric. 2015, 15, 291–297.

- Christiansen, P.; Kragh, M.; Steen, K.A.; Karstoft, H.; Jørgensen, R.N. Platform for evaluating sensors and human detection in autonomous mowing operations. Precis. Agric. 2017, 18, 350–365.

- Ross, P.; English, A.; Ball, D.; Upcroft, B.; Wyeth, G.; Corke, P. Novelty-based visual obstacle detection in agriculture. In Proceedings of the IEEE International Conference on Robotics and Automation, Hong Kong, China, 31 May–7 June 2014; pp. 1699–1705.

- Nissimov, S.; Goldberger, J.; Alchanatis, V. Obstacle detection in a greenhouse environment using the Kinect sensor. Comput. Electron. Agric. 2015, 113, 104–115.

- David, B.; Patrick, R.; Andrew, E.; Tim, P.; Ben Upcroft, R.F.; Salah, S.; Gordon, W.P.C. Field and Service Robotics: Results of the 9th International Conference, Iolanda-Veronica, Brisbane, Australia, 9–11 December 2013; Springer Tracts in Advanced Robotics: Berlin, Germany, 2015; p. 105.

- Franzius, M.; Dunn, M.; Einecke, N.; Dirnberger, R. Embedded Robust Visual Obstacle Detection on Autonomous Lawn Mowers. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 361–369.

- Kragh, M.; Underwood, J. Multimodal obstacle detection in unstructured environments with conditional random fields. J. Field Robot. 2020, 37, 53–72.

- Jung, T.H.; Cates, B.; Choi, I.K.; Lee, S.H.; Choi, J.M. Multi-camera-based person recognition system for autonomous tractors. Designs 2020, 4, 54.

- Skoczeń, M.; Ochman, M.; Spyra, K. Obstacle detection system for agricultural mobile robot application using rgb-d cameras. Sensors 2021, 21, 5292.

- Reina, G.; Milella, A.; Halft, W.; Worst, R. LIDAR and stereo imagery integration for safe navigation in outdoor settings. In Proceedings of the 2013 IEEE International Symposium on Safety, Security, and Rescue Robotics, Linköping, Sweden, 21–26 October 2013.

- Milella, A.; Reina, G.; Underwood, J. A Self-learning Framework for Statistical Ground Classification using Radar and Monocular Vision. J. Field Robot. 2014, 33, 20–41.

- Reina, G.; Milella, A.; Galati, R. Terrain assessment for precision agriculture using vehicle dynamic modelling. Biosyst. Eng. 2017, 162, 124–139.

- Kragh, M.; Jørgensen, R.N.; Pedersen, H. Object detection and terrain classification in agricultural fields using 3d lidar data. Lect. Notes Comput. Sci. 2015, 9163, 188–197.

- Christiansen, P.; Nielsen, L.N.; Steen, K.A.; Jørgensen, R.N.; Karstoft, H. DeepAnomaly: Combining background subtraction and deep learning for detecting obstacles and anomalies in an agricultural field. Sensors 2016, 16, 1904.

- Pezzementi, Z.; Tabor, T.; Hu, P. Comparing apples and oranges: Off-road pedestrian detection on the National Robotics Engineering Center agricultural person-detection dataset. J. Field Robot. 2018, 35, 545–563.

- Kragh, M.F.; Christiansen, P.; Laursen, M.S. FieldSAFE: Dataset for obstacle detection in agriculture. Sensors 2017, 17, 2579.

- Tian, W.; Deng, Z.; Yin, D.; Zheng, Z.; Huang, Y.; Bi, X. 3D Pedestrian Detection in Farmland By Monocular Rgb Image and Far-Infrared Sensing. Remote Sens. 2021, 13, 2896.

- Doerr, Z.; Mohsenimanesh, A.; Laguë, C.; McLaughlin, N.B. Application of the LIDAR technology for obstacle detection during the operation of agricultural vehicles. Can. Biosyst. Eng. 2013, 55, 9–17.

- Yin, X.; Noguchi, N.; Ishii, K. Development of an obstacle avoidance system for a field robot using a 3D camera. Eng. Agric. Environ. Food 2013, 6, 41–47.

- Korthals, T.; Kragh, M.; Christiansen, P.; Karstoft, H.; Jørgensen, R.N.; Rückert, U. Multi-modal detection and mapping of static and dynamic obstacles in agriculture for process evaluation. Front. Robot. AI 2018, 5, 28.

- Sadgrove, E.J.; Falzon, G.; Miron, D.; Lamb, D.W. Real-time object detection in agricultural/remote environments using the multiple-expert colour feature extreme learning machine (MEC-ELM). Comput. Ind. 2018, 98, 183–191.

- Xue, J.; Cheng, F.; Li, Y.; Song, Y.; Mao, T. Detection of Farmland Obstacles Based on an Improved YOLOv5s Algorithm by Using CIoU and Anchor Box Scale Clustering. Sensors 2022, 22, 1790.

- Li, Y.; Li, M.; Qi, J.; Zhou, D.; Zou, Z.; Liu, K. Detection of typical obstacles in orchards based on deep convolutional neural network. Comput. Electron. Agric. 2021, 181, 105932.

- Mujkic, E.; Philipsen, M.P.; Moeslund, T.B.; Christiansen, M.P.; Ravn, O. Anomaly Detection for Agricultural Vehicles Using Autoencoders. Sensors 2022, 22, 3608.

- Son, H.S.; Kim, D.K.; Yang, S.H.; Choi, Y.K. Real-Time Power Line Detection for Safe Flight of Agricultural Spraying Drones Using Embedded Systems and Deep Learning. IEEE Access 2022, 10, 54947–54956.

- Wang, D.; Li, W.; Liu, X.; Li, N.; Zhang, C. UAV environmental perception and autonomous obstacle avoidance: A deep learning and depth camera combined solution. Comput. Electron. Agric. 2020, 175, 105523.

- Huang, X.; Dong, X.; Ma, J. The improved A* Obstacle avoidance algorithm for the plant protection UAV with millimeter wave radar and monocular camera data fusion. Remote Sens. 2021, 13, 3364.

- Periu, C.F.; Mohsenimanesh, A.; Laguë, C.; McLaughlin, N.B. Isolation of vibrations transmitted to a LIDAR sensor mounted on an agricultural vehicle to improve obstacle detection. Can. Biosyst. Eng. 2013, 55, 2.33–2.42.

- Meltebrink, C.; Ströer, T.; Wegmann, B.; Weltzien, C.; Ruckelshausen, A. Concept and realization of a novel test method using a dynamic test stand for detecting persons by sensor systems on autonomous agricultural robotics. Sensors 2021, 21, 2315.

- Xue, J.; Xia, C.; Zou, J. A velocity control strategy for collision avoidance of autonomous agricultural vehicles. Auton. Robot. 2020, 44, 1047–1063.

- Santos, L.C.; Santos, F.N.; Valente, A.; Sobreira, H.; Sarmento, J.; Petry, M. Collision Avoidance Considering Iterative Bézier Based Approach for Steep Slope Terrains. IEEE Access 2022, 10, 25005–25015.

- Shutske, J.; Contact, U.; Society, A.; Engineers, B.; America, N.; America, N.; Operator, R. Risk Assessment of Autonomous Agricultural Machinery by Industry Designers; College of Agricultural and Life Sciences University of Wisconsin-Madison: Madison, WI, USA, 2021.

- Spreafico, C.; Russo, D.; Rizzi, C. A state-of-the-art review of FMEA/FMECA including patents. Comput. Sci. Rev. 2017, 25, 19–28.

- Sandner, K.J. The Efficacy of Risk Assessment Tools and Standards Pertaining to Highly Automated Agricultural Machinery. Master’s Thesis, Department of Biological Systems Engineering, University of Wisconsin, Madison, WI, USA, 2021.

- Shutske, J.M.; Sandner, K.; Jamieson, Z. Risk Assessment Methods for Autonomous Agricultural Machines: Review of Current Practices and Future Needs. Appl. Eng. Agric. 2023, 39, 109–120.

- ISO 12100; Safety of Machinery—General Principles for Design—Risk assessment and Risk Reduction. International Organization for Standardization: Geneva, Switzerland, 2010. Available online: https://www.iso.org/standard/51528.html (accessed on 10 December 2022).

- ISO 25119-1; Tractors and Machinery for Agriculture and Forestry—Safety-Related Parts of Control Systems—Part 1, General Principles for Design and Development. International Organization for Standardization: Geneva, Switzerland, 2018. Available online: https://www.iso.org/standard/69025.html (accessed on 10 December 2022).

- ISO 25119-2; Tractors and Machinery for Agriculture and Forestry—Safety-Related Parts of Control Systems—Part 2, Concept Phase. International Organization for Standardization: Geneva, Switzerland, 2018. Available online: https://www.iso.org/standard/69026.html (accessed on 10 December 2022).

- ISO 25119-3; Tractors and Machinery for Agriculture and Forestry—Safety-Related Parts of Control Systems—Part 3, Series Development; Hardware and Software. International Organization for Standardization: Geneva, Switzerland, 2018. Available online: https://www.iso.org/standard/69027.html (accessed on 10 December 2022).

- ISO 25119-4; Tractors and Machinery for Agriculture and Forestry—Safety-Related Parts of Control Systems—Part 4, Production; Operation; Modification and Supporting Processes. International Organization for Standardization: Geneva, Switzerland, 2018. Available online: https://www.iso.org/standard/69028.html (accessed on 10 December 2022).

- ISO 18497-2; Agricultural Machinery and Tractors—Safety of Highly Automated Agricultural Machines—Principles for Design. International Organization for Standardization: Geneva, Switzerland, 2018. Available online: https://www.iso.org/standard/62659.html (accessed on 10 December 2022).

- ANSI S318.18; Safety for Agricultural Field Equipment. ANSI: Washington, DC, USA, 2017.

- ANSI S354.7; Safety for Farmstead Equipment. ANSI: Washington, DC, USA, 2018.

- Shutske, J.M. Agricultural automation & autonomy: Safety and risk assessment must be at the forefront. J. Agromed. 2023, 28, 5–10.

- Lee, T.Y.; Gerberich, S.G.; Gibson, R.W.; Carr, W.P.; Shutske, J.; Renier, C.M. A population-based study of tractor-related injuries: Regional rural injury study-I (RRIS-I). J. Occup. Environ. Med. 1996, 38, 782–793.

- Aby, G.R.; Issa, S.F.; Chowdhary, G. Safety Risk Assessment of a Highly Automated Agricultural Machine. In Proceedings of the 2022 ASABE Annual International Meeting Sponsored by ASABE, Houston, TX, USA, 19–20 July 2022; pp. 2–12.

- Steen, K.A.; Christiansen, P.; Karstoft, H.; Jørgensen, R.N. Using deep learning to challenge safety standard for highly autonomous machines in agriculture. J. Imaging 2016, 2, 6.

- Basu, S.; Omotubora, A.; Beeson, M.; Fox, C. Legal framework for small autonomous agricultural robots. AI Soc. 2020, 35, 113–134.

- Kelber, C.R.; Reis, B.R.R.; Figueiredo, R.M. Improving functional safety in autonomous guided agricultural self propelled machines using hardware-in-the-loop (HIL) systems for software validation. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Rio de Janeiro, Brazil, 1–4 November 2016; pp. 1438–1444.

- Chiu, Y.C.; Chen, S.; Wu, G.J.; Lin, Y.H. Three-dimensional computer-aided human factors engineering analysis of a grafting robot. J. Agric. Saf. Health 2012, 18, 181–194.

- Bashiri, B. Automation and the situation awareness of drivers in agricultural semi-autonomous vehicles. Biosyst. Eng. 2014, 124, 8–15.

- Baxter, P.; Cielniak, G.; Hanheide, M.; From, P. Safe Human-Robot Interaction in Agriculture. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018; pp. 59–60.

- Benos, L.; Bechar, A.; Bochtis, D. Safety and ergonomics in human-robot interactive agricultural operations. Biosyst. Eng. 2020, 200, 55–72.

- Anagnostis, A.; Benos, L.; Tsaopoulos, D.; Tagarakis, A.; Tsolakis, N.; Bochtis, D. Human activity recognition through recurrent neural networks for human–robot interaction in agriculture. Appl. Sci. 2021, 11, 2188.

| Sensor Modality | Functional Methods | Advantages | Disadvantages |
|---|---|---|---|
| Stereo vision camera | Stereo vision cameras capture wavelengths in the visible range. The images they produce recreate almost exactly what our eyes see. | Natural interpretation for humans; relatively high resolution; relatively high sampling rate | Risk of occlusions; sensitive to lighting conditions; poor performance in low-visibility conditions (rain, fog, smoke, etc.) |
| LiDAR | LiDAR is an optical remote sensing technology that measures the distance or other properties of a target by illuminating the target with light. | High accuracy, high density; narrow beam spread; fast operation | High costs related to accuracy and range; some risk of occlusion; no color or texture information |
| Radar | Radars measure the distance between an emitter and an object by calculating the time of flight (ToF) of an emitted signal and received echo. | Long range (up to 100–150 m); panoramic perception (360°); multiple targets | Three-dimensional map limited to pencil beam radar; difficulty in signal processing and interpretation; no detection of small objects |
| Ultrasonic sensor | An ultrasonic sensor uses sonic waves, in the range of 20 kHz to 40 kHz, to measure the distance to an object. | Reliable in any lighting environment; less impact on the environment | Poor detection range and resolution; sensitive to smoothness and angle to obstacles |
| ToF camera (time of flight camera) | ToF cameras measure distances with modulated light based on the ToF principle. | Provides direct 3D measurements; invariant to illumination and temperature; no moving parts | Low resolution, limited field of view (FoV); relatively short detection range (depends on illumination power) |
| Thermal camera | A thermal camera is a device that forms a heat image and is used to detect heat radiation that is emitted by all living things. | Invariant to illumination; robust against dust and rain; detects humans and animals | Relatively low resolution; some risk of occlusion; difficulty in calibration |
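The time-of-flight principle named in the radar and ToF camera rows reduces to one relation: the range is the propagation speed multiplied by the round-trip time, divided by two. The sketch below works through it for a radar echo and, assuming simple pulse-echo timing, for an ultrasonic sensor. The function name and sample timings are illustrative, and the speed of sound used here (343 m/s, roughly dry air at 20 °C) is an assumed value, not one taken from the reviewed studies.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0   # propagation speed for radar, LiDAR, ToF cameras
SPEED_OF_SOUND_M_S = 343.0           # assumption: dry air at about 20 degrees C


def tof_distance_m(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Range from a round-trip echo time: the signal travels out and back, hence the /2."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return wave_speed_m_s * round_trip_s / 2.0


# A radar echo received 1 microsecond after emission (illustrative value).
radar_range_m = tof_distance_m(1e-6, SPEED_OF_LIGHT_M_S)

# An ultrasonic echo received 10 milliseconds after emission (illustrative value).
ultrasonic_range_m = tof_distance_m(10e-3, SPEED_OF_SOUND_M_S)

print(f"radar: {radar_range_m:.1f} m, ultrasonic: {ultrasonic_range_m:.3f} m")
```

A 1 µs radar round trip corresponds to roughly 150 m, the upper end of the range quoted in the table, which shows how fine the timing resolution must be for electromagnetic sensors compared with ultrasonic ones.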

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aby, G.R.; Issa, S.F. Safety of Automated Agricultural Machineries: A Systematic Literature Review. Safety 2023, 9, 13. https://doi.org/10.3390/safety9010013
