Experience-Driven NeuroSymbolic System for Efficient Robotic Bolt Disassembly

Abstract

1. Introduction

- We propose a NeuroSymbolic framework with offline learning capabilities, integrating neural predicates, action primitives, and LLM-based policy adaptation for unified perception, reasoning, execution, and learning.

- We design an LLM-driven adaptive optimization module that dynamically refines execution strategies and decision-making logic, improving the generalization and reusability of action primitives and neural predicates.

- We develop and deploy the proposed system in real-world EVB disassembly scenarios, demonstrating significant improvements in fastener localization accuracy, disassembly efficiency, and task success rate over conventional methods.

- We show that the proposed framework generalizes to other structured industrial disassembly tasks, such as fan units and power modules, establishing a theoretical and practical foundation for embodied intelligence in complex physical environments.

- We enable historical experience-driven learning by allowing the system to autonomously extract knowledge from past disassembly trajectories and iteratively refine disassembly actions, addressing the limitations of traditional systems that lack adaptive memory and self-improvement capabilities.

2. Literature Review

- Section 2.1 surveys the state of the art in EVB disassembly methods, ranging from mechanized and human–robot collaborative systems to emerging intelligent approaches, and discusses their advantages and limitations in real-world applications.

- Section 2.2 reviews recent efforts to apply LLMs to robotic task understanding, planning, and action generation, highlighting their growing role in semantic reasoning and adaptive decision making.

- Section 2.3 focuses on neural predicate and action primitive learning, covering NeuroSymbolic representations, imitation and reinforcement learning, and other core techniques that support motion generalization and strategic optimization in dynamic environments.

2.1. Progress in Electric Vehicle Battery (EVB) Disassembly Methods

2.2. Applications of LLMs in Robotics

2.3. Autonomous Learning of Neural Predicates and Action Primitives

3. Overview of the Proposed System

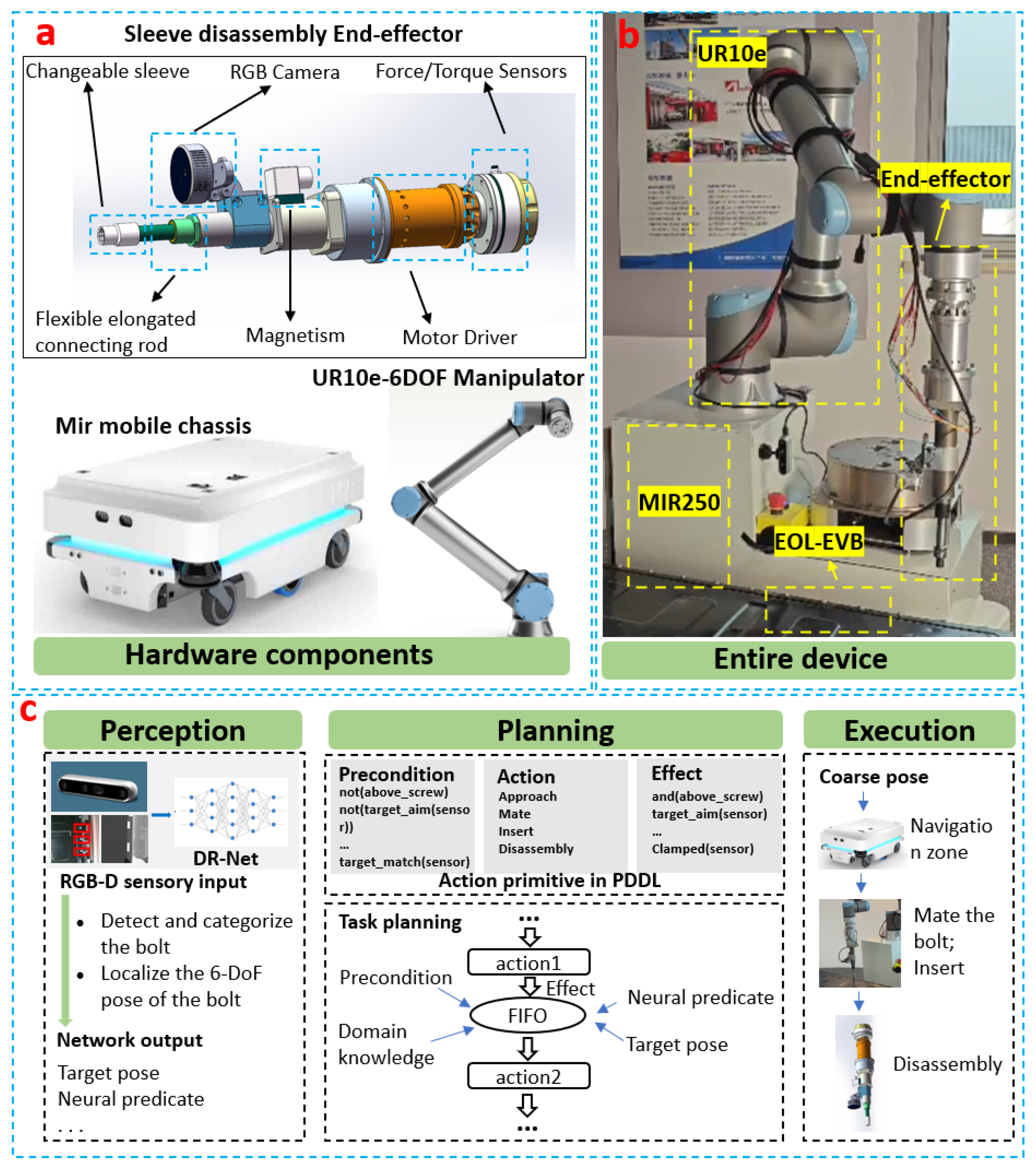

3.1. System Hardware Architecture

3.1.1. Autonomous Mobile Robot

3.1.2. Six-Degree-of-Freedom Manipulator

3.1.3. Modular Sleeve-Type End-Effector

- Perception Module:

  - Vision Sensing: An Intel RealSense RGB-D camera captures high-resolution depth and color data for visual analysis.

  - Force/Torque Sensing: An ATI six-axis force/torque sensor (ATI Industrial Automation, Inc., Apex, NC, USA) continuously monitors disassembly forces and moments, enabling safe and precise interaction with components.

- Execution Module:

  - Modular Sleeve Adapter: A flexible connector for switching screwdriver sockets to accommodate various bolt specifications.

  - Drive Motor: Delivers sufficient torque for reliable bolt loosening and removal.

  - Electromagnet: Captures and retains removed bolts through electrically actuated magnetic attraction.

  - Flexible Joint: Compensates for surface irregularities commonly found on aged battery packs, ensuring stable and consistent contact with fasteners.

3.1.4. Experimental Platform

3.2. System Fundamentals

4. Method

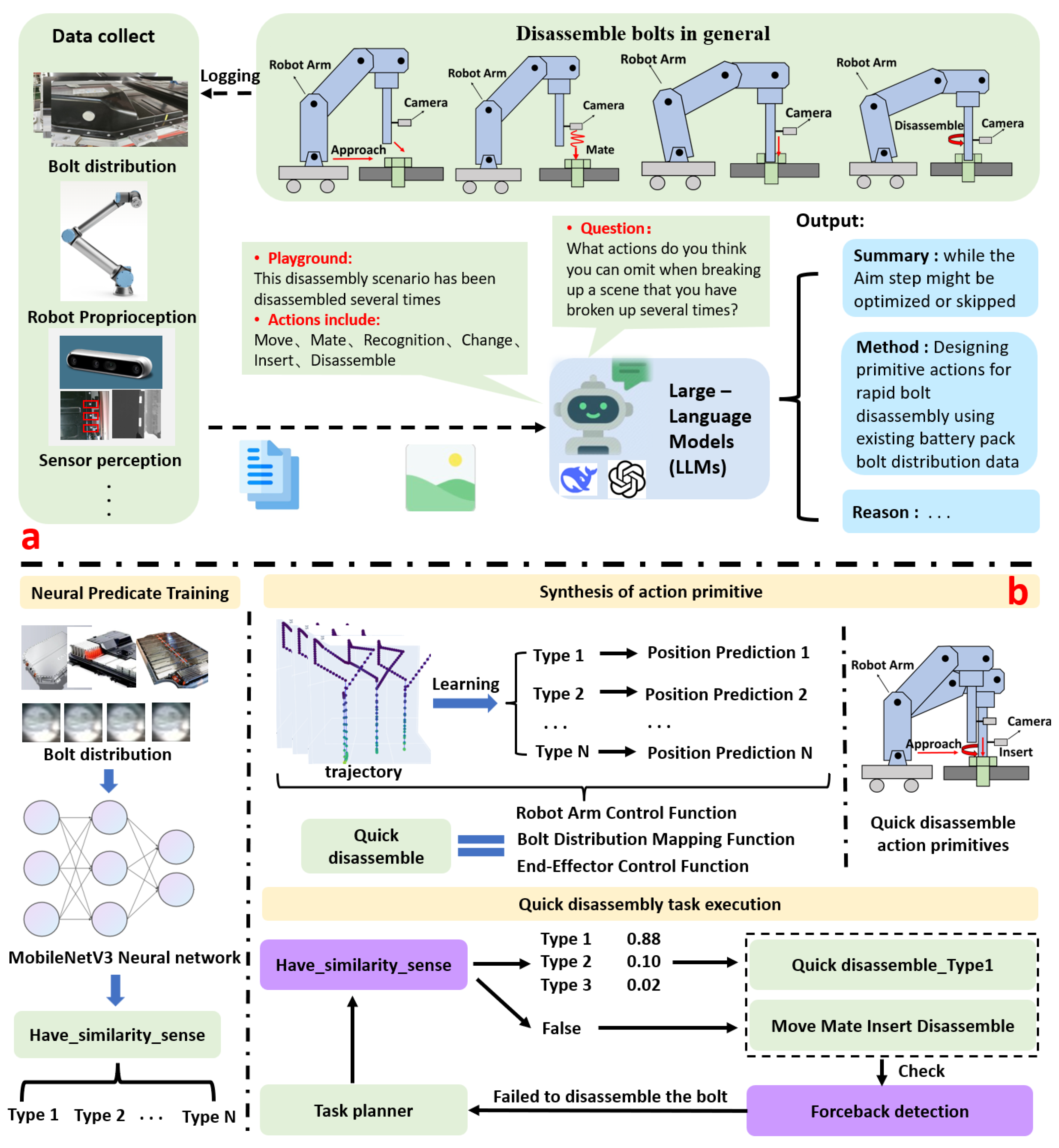

4.1. Bolt Disassembly Data Collection and Storage

4.1.1. Data Definitions and Mathematical Representation

- Battery Identifier: Let $\mathcal{B}$ denote the set of battery models, with each individual instance being represented as $b \in \mathcal{B}$.

- Bolt Position Set: For a given battery $b$, the set of all bolt positions is defined as

  $$P_b = \{\mathbf{p}_1, \mathbf{p}_2, \ldots, \mathbf{p}_{N_b}\},$$

  where $N_b$ is the number of bolts and each $\mathbf{p}_k \in \mathbb{R}^3$ represents the 3D position of bolt $k$ in a fixed global coordinate frame.

- Action Primitive Set: For each bolt disassembly action, the corresponding action primitives are recorded as

  $$A = \{(a_i, \mathbf{s}_i, \mathbf{e}_i)\}_{i=1}^{m},$$

  where, for the $i$-th of $m$ recorded primitives,

  - $a_i$ denotes the action primitive label (e.g., move, insert, mate);

  - $\mathbf{s}_i$ is the start position of the end-effector before executing $a_i$;

  - $\mathbf{e}_i$ is the end position after execution.
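The definitions above map naturally onto a small record type. The following is a minimal sketch, not the authors' storage implementation: the class names `PrimitiveRecord` and `DisassemblyRecord`, the field names, and the choice of JSON as the serialization are all assumptions made for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]  # 3D position in the fixed global frame


@dataclass
class PrimitiveRecord:
    """One executed action primitive (hypothetical field names)."""
    label: str   # action primitive label, e.g. "move", "insert", "mate"
    start: Vec3  # end-effector position before execution
    end: Vec3    # end-effector position after execution


@dataclass
class DisassemblyRecord:
    """All data stored for one battery instance (hypothetical layout)."""
    battery_id: str
    bolt_positions: List[Vec3]
    primitives: List[PrimitiveRecord] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() recurses into nested dataclasses; tuples become JSON arrays.
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(text: str) -> "DisassemblyRecord":
        raw = json.loads(text)
        return DisassemblyRecord(
            battery_id=raw["battery_id"],
            bolt_positions=[tuple(p) for p in raw["bolt_positions"]],
            primitives=[
                PrimitiveRecord(r["label"], tuple(r["start"]), tuple(r["end"]))
                for r in raw["primitives"]
            ],
        )
```

A record serialized with `to_json()` and read back with `from_json()` compares equal to the original, which makes the format convenient for replaying past trajectories.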

4.1.2. Storage Format and System Implementation

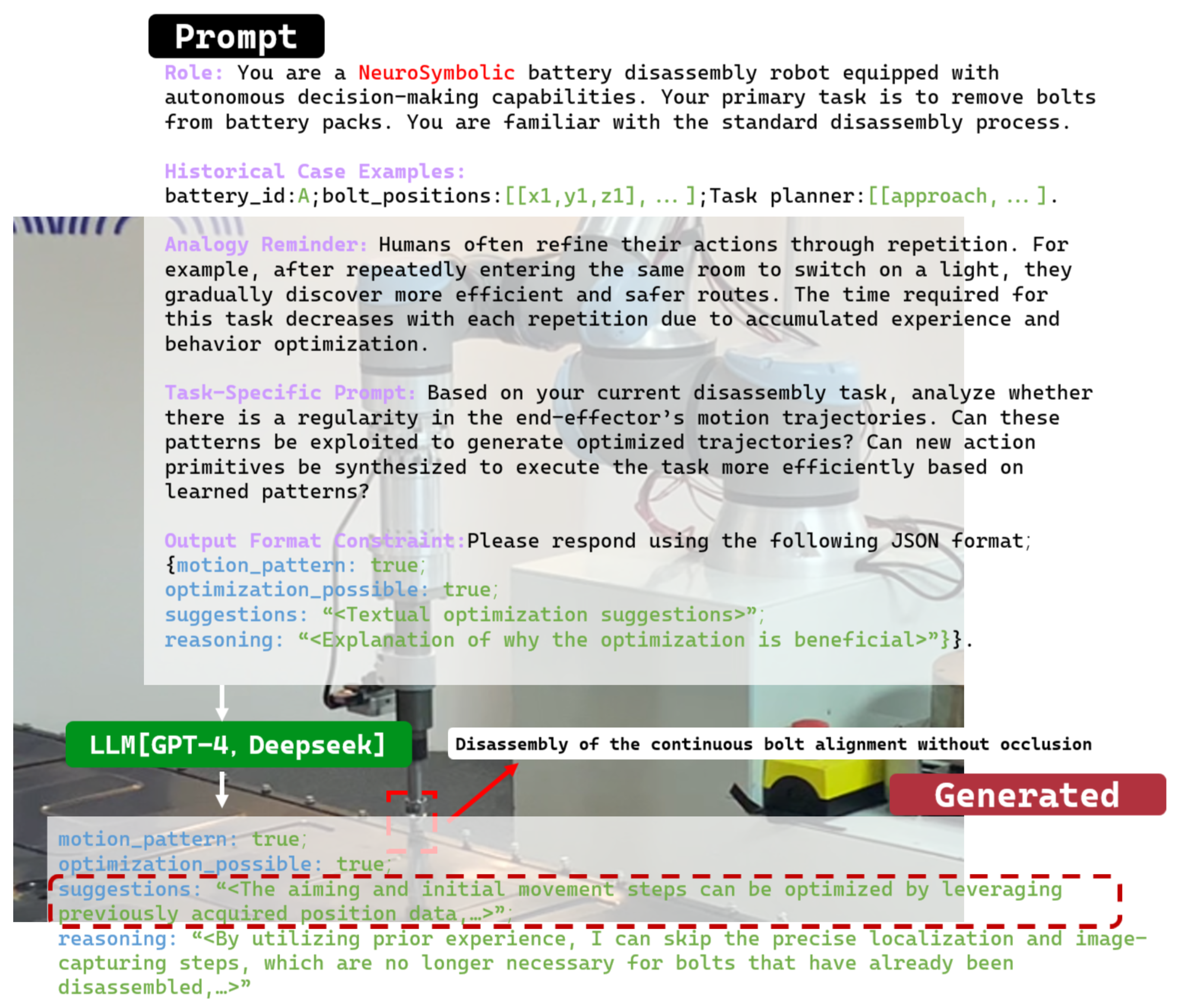

4.2. LLM-Driven Contextual Reasoning and Strategy Optimization

- Role Definition: “You are a NeuroSymbolic battery disassembly robot equipped with autonomous decision-making capabilities. Your primary task is to remove bolts from battery packs. You are familiar with the standard disassembly process.”

- Historical Case Examples:

  - Example 1

    - battery_id: A

    - bolt_positions: [[…]]

    - task_planner: [[approach, mate, recognition, insert, disassemble], …]

- Analogy Reminder: Humans often refine their actions through repetition. For example, after repeatedly entering the same room to switch on a light, they gradually discover more efficient and safer routes. The time required for this task decreases with each repetition due to accumulated experience and behavior optimization.

- Task-Specific Prompt: “Based on your current disassembly task, analyze whether there is a regularity in the end-effector’s motion trajectories. Can these patterns be exploited to generate optimized trajectories? Can new action primitives be synthesized to execute the task more efficiently based on learned patterns?”

- Output Format Constraint: “Please respond using the following JSON format: {"motion_pattern": true, "optimization_possible": true, "suggestions": "<Textual optimization suggestions>", "reasoning": "<Explanation of why the optimization is beneficial>"}”
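The five prompt components listed above can be assembled and the reply validated along the following lines. This is a minimal sketch under stated assumptions: the helper names `build_prompt` and `parse_reply` are hypothetical, the call to the LLM itself is deliberately left out, and the only facts taken from the text are the component order and the four JSON keys.

```python
import json

# Keys required by the output format constraint quoted above.
REQUIRED_KEYS = {"motion_pattern", "optimization_possible", "suggestions", "reasoning"}


def build_prompt(role, examples, analogy, task, output_format):
    """Join the five prompt components in the order they are listed."""
    sections = [role, "Historical case examples:"]
    for index, example in enumerate(examples, start=1):
        sections.append(f"Example {index}\n{json.dumps(example)}")
    sections.extend([analogy, task, output_format])
    return "\n\n".join(sections)


def parse_reply(reply):
    """Parse the model's JSON reply and reject anything off-format."""
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    for key in ("motion_pattern", "optimization_possible"):
        if not isinstance(data[key], bool):
            raise ValueError(f"{key} must be true or false")
    return data
```

Validating the reply before acting on it means a malformed or partial answer fails loudly instead of silently steering the planner.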

4.3. Bolt Position Prediction Based on Geometric Priors

- Standard deviation of the bolt spacings, which reflects global variance;

- Maximum spacing deviation, which captures the range of bolt spacing.

- Spacing Consistency Detection: Adjacent bolt positions are used to compute spacing distances, and statistical measures such as standard deviation and spacing range are applied to assess layout regularity.

- Bolt Position Prediction: Given a regular pattern, the average spacing and principal direction are used to build a predictive function that extrapolates bolt positions from a known reference point.

- Force Feedback Verification and Correction: After performing the primitive insertion action, real-time force data are analyzed. In the event of a failed insertion, the system halts execution and switches to conventional planning for correction.
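The spacing check and extrapolation described above can be sketched as follows. The details here are assumptions rather than the paper's definitions: spacing is taken as the Euclidean distance between adjacent bolts, the standard deviation is the population form, the range is max minus min, the principal direction is approximated by the unit vector from the first to the last known bolt, and the thresholds (in metres) are placeholder values.

```python
import math
from statistics import mean, pstdev


def spacings(points):
    """Euclidean distances between adjacent bolt positions."""
    return [math.dist(a, b) for a, b in zip(points, points[1:])]


def is_regular(points, max_std=0.002, max_range=0.005):
    """True if both spacing statistics fall under the (assumed) thresholds."""
    d = spacings(points)
    if len(d) < 2:
        return False  # too few bolts to judge regularity
    return pstdev(d) <= max_std and (max(d) - min(d)) <= max_range


def predict_next(points, count=1):
    """Extrapolate `count` bolt positions beyond the last known bolt."""
    step = mean(spacings(points))
    first, last = points[0], points[-1]
    span = math.dist(first, last)
    # Assumed principal direction: unit vector from first to last bolt.
    direction = [(l - f) / span for f, l in zip(first, last)]
    return [
        tuple(l + step * k * u for l, u in zip(last, direction))
        for k in range(1, count + 1)
    ]
```

For three bolts spaced 0.05 m apart along the x-axis, `is_regular` returns `True` and `predict_next` continues the row at the same pitch. A predicted position would still need the force-feedback check from the last bullet before it is trusted.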

4.4. Training of the Similar Scene Recognition Predicate

- Continuous bolt alignment without occlusion;

- Continuous bolt alignment with partial occlusion;

- Intermittent bolt alignment without occlusion;

- Intermittent bolt alignment with partial occlusion.

Algorithm 1. Scene classification and action execution via neural predicate.
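A scene-classification predicate of this kind might gate the choice of action sequence roughly as sketched below. Everything beyond the four scene categories and the primitive names is an assumption: the `Scene` enum, the confidence threshold, and especially the mapping from scene class to plan (shortening the sequence only for continuous, unoccluded layouts) are hypothetical illustrations, not the published algorithm.

```python
from enum import Enum


class Scene(Enum):
    """The four scene categories listed above (index order is assumed)."""
    CONTINUOUS_CLEAR = 0
    CONTINUOUS_OCCLUDED = 1
    INTERMITTENT_CLEAR = 2
    INTERMITTENT_OCCLUDED = 3


# Full per-bolt sequence, as in the historical case example.
STANDARD_PLAN = ["approach", "mate", "recognition", "insert", "disassemble"]


def classify(probabilities, min_confidence=0.8):
    """Return the most likely Scene, or None if the classifier is unsure."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    if probabilities[best] < min_confidence:
        return None
    return Scene(best)


def select_plan(scene, n_bolts):
    """Pick one primitive sequence per bolt (hypothetical mapping)."""
    if n_bolts <= 0:
        return []
    if scene is Scene.CONTINUOUS_CLEAR:
        # Assumed shortcut: run the full sequence once, then reuse predicted
        # positions and go straight to insertion for the remaining bolts.
        return [STANDARD_PLAN] + [["insert", "disassemble"]] * (n_bolts - 1)
    # Unknown or harder scenes fall back to the full sequence for every bolt.
    return [STANDARD_PLAN] * n_bolts
```

Returning `None` for low-confidence classifications keeps the fallback path explicit: an uncertain scene is treated exactly like a difficult one.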

5. Experiments

- Baseline Method: A conventional NeuroSymbolic system without optimization, which performs continuous bolt disassembly by using static, predefined strategies.

- Optimized Method: The proposed self-learning-enhanced NeuroSymbolic system, which integrates LLM-driven strategy adaptation and autonomously learned primitives to perform the same disassembly tasks.

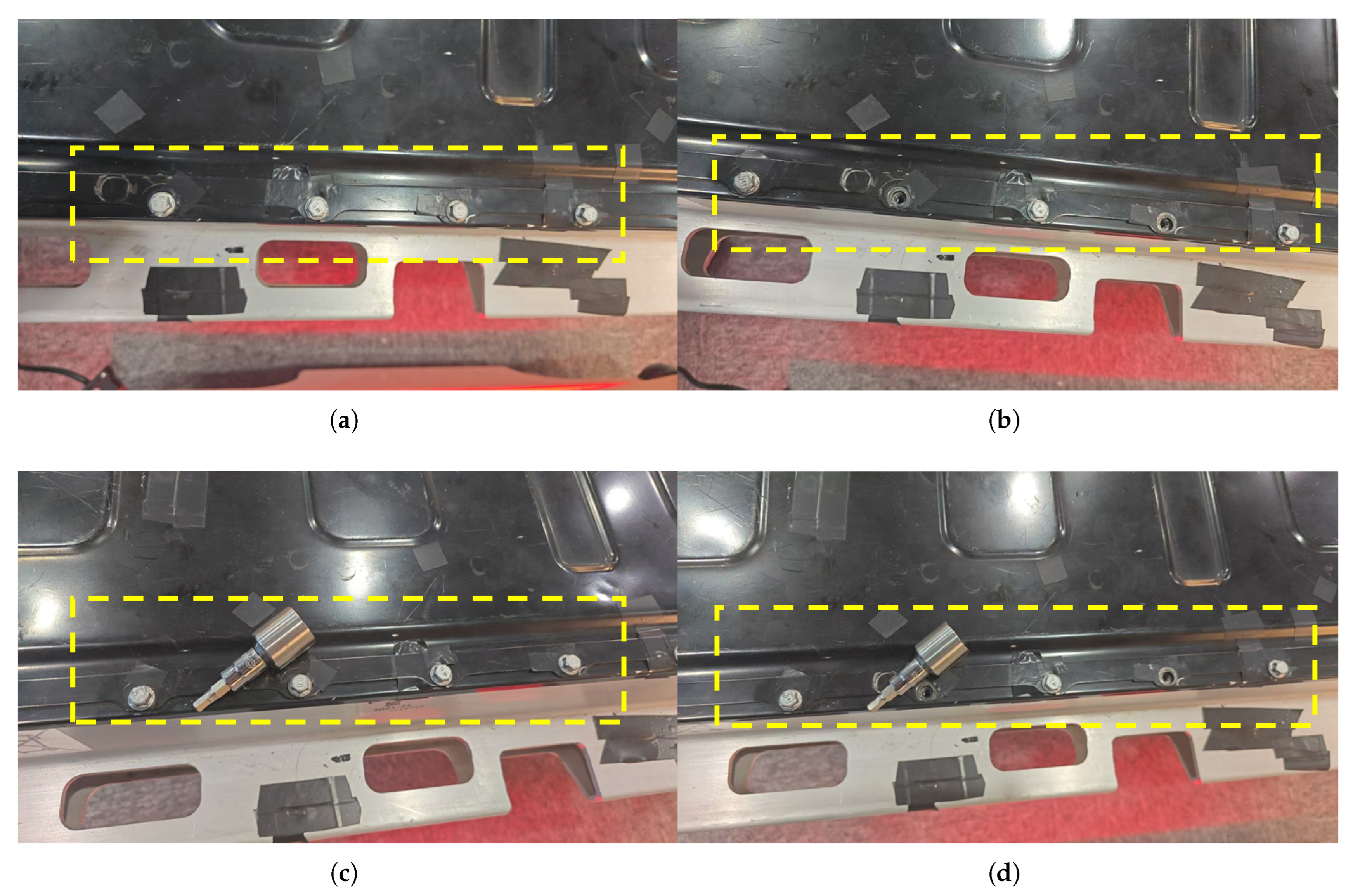

- Uniform bolt distribution without occlusion;

- Uniform bolt distribution with partial occlusion;

- Irregular bolt alignment without occlusion;

- Irregular bolt alignment with partial occlusion.

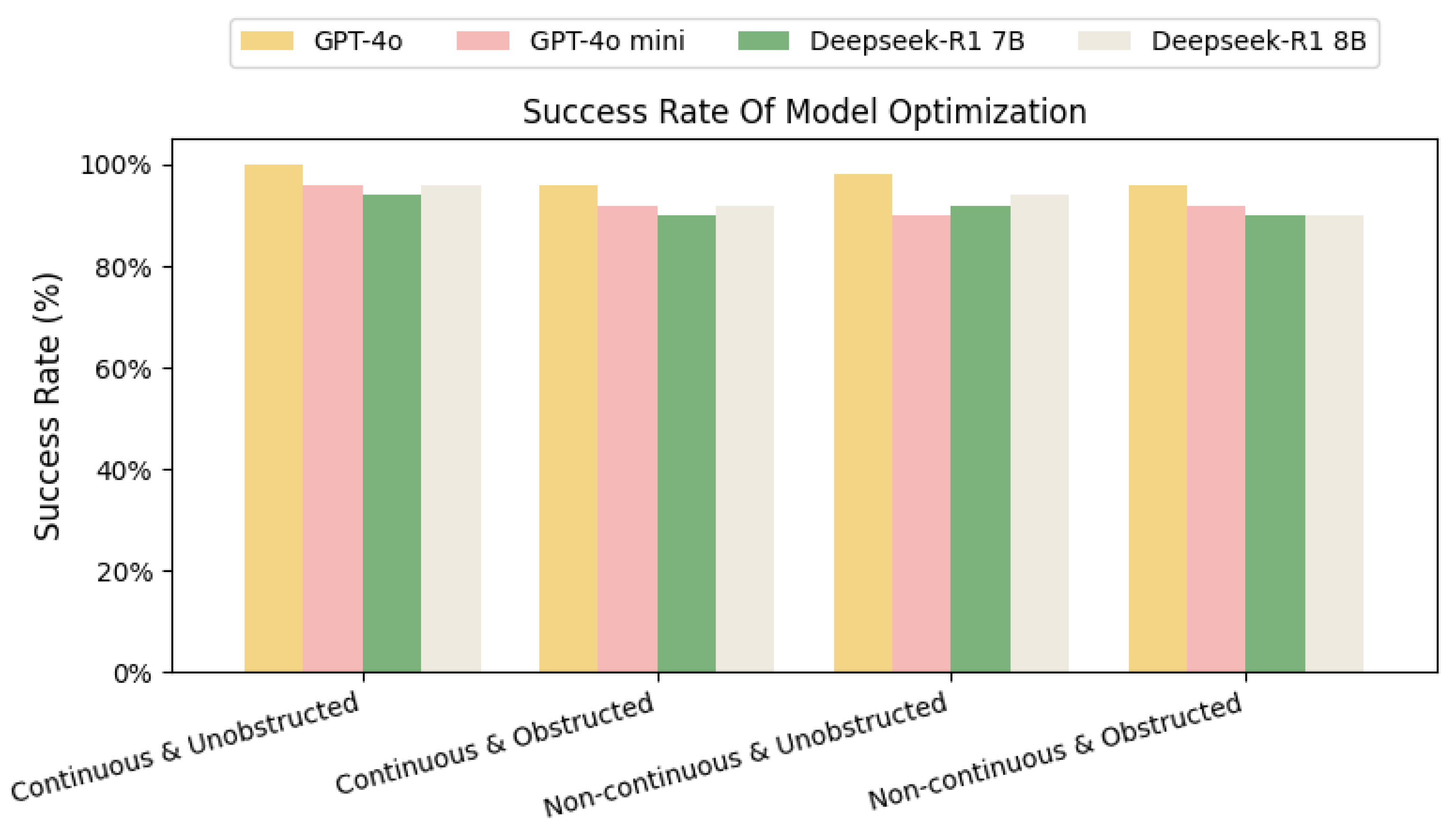

5.1. LLM-Based Optimization Mechanism Evaluation

5.1.1. Experimental Setup

- Continuous bolt distribution without obstacles;

- Discontinuous bolt distribution without obstacles;

- Continuous bolt distribution with obstacles;

- Discontinuous bolt distribution with obstacles.

5.1.2. Results

5.2. Bolt Disassembly Experiments

5.2.1. Experimental Setup

5.2.2. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Action Primitive | Function |
|---|---|
| Approach | The robotic end-effector moves toward the nearest detected bolt within the visual field. |
| Mate | The bolt position is refined, and the end-effector is aligned accurately along the bolt axis. |
| Insert | The socket tool is lowered to securely engage the bolt head. |
| Disassemble | The end-effector applies a counterclockwise torque to loosen and remove the target bolt. |

| Disassembly Task | Successful Attempts | Unsuccessful Attempts |
|---|---|---|
| Quick disassembly of 3 bolts (total: 9) | 173 | 7 |
| Single disassembly of 9 bolts (total: 9) | 179 | 1 |
| Quick disassembly of 2 bolts (total: 8) | 156 | 4 |
| Quick disassembly of 4 bolts (total: 8) | 152 | 8 |
| Single disassembly of 8 bolts (total: 8) | 180 | 0 |

| Disassembly Task | Success Rate | Average Time |
|---|---|---|
| Quick disassembly of 3 bolts (total: 9) | 96.10% | 1 min 41 s |
| Single disassembly of 9 bolts (total: 9) | 99.40% | 4 min 17 s |
| Quick disassembly of 2 bolts (total: 8) | 97.50% | 1 min 38 s |
| Quick disassembly of 4 bolts (total: 8) | 95.00% | 1 min 21 s |
| Single disassembly of 8 bolts (total: 8) | 100.00% | 3 min 45 s |
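The reported success rates follow from the attempt counts as successes divided by total attempts. The short check below recomputes them from the counts in the attempts table; the function name `success_rate` is illustrative only.

```python
# (successful, unsuccessful) attempt counts copied from the attempts table.
attempts = {
    "Quick disassembly of 3 bolts (total: 9)": (173, 7),
    "Single disassembly of 9 bolts (total: 9)": (179, 1),
    "Quick disassembly of 2 bolts (total: 8)": (156, 4),
    "Quick disassembly of 4 bolts (total: 8)": (152, 8),
    "Single disassembly of 8 bolts (total: 8)": (180, 0),
}


def success_rate(successes, failures):
    """Success rate in percent: successes over all attempts."""
    return 100.0 * successes / (successes + failures)


for task, (ok, bad) in attempts.items():
    print(f"{task}: {success_rate(ok, bad):.1f}%")
```

Rounded to one decimal place, the recomputed values (96.1, 99.4, 97.5, 95.0, and 100.0) agree with the tabulated success rates.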


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chang, P.; Wang, Z.; Peng, Y.; He, Z.; Chen, M. Experience-Driven NeuroSymbolic System for Efficient Robotic Bolt Disassembly. Batteries 2025, 11, 332. https://doi.org/10.3390/batteries11090332
