An Advanced Power System Modeling Approach for Transformer Oil Temperature Prediction Integrating SOFTS and Enhanced Bayesian Optimization

Abstract

1. Introduction

- (1)

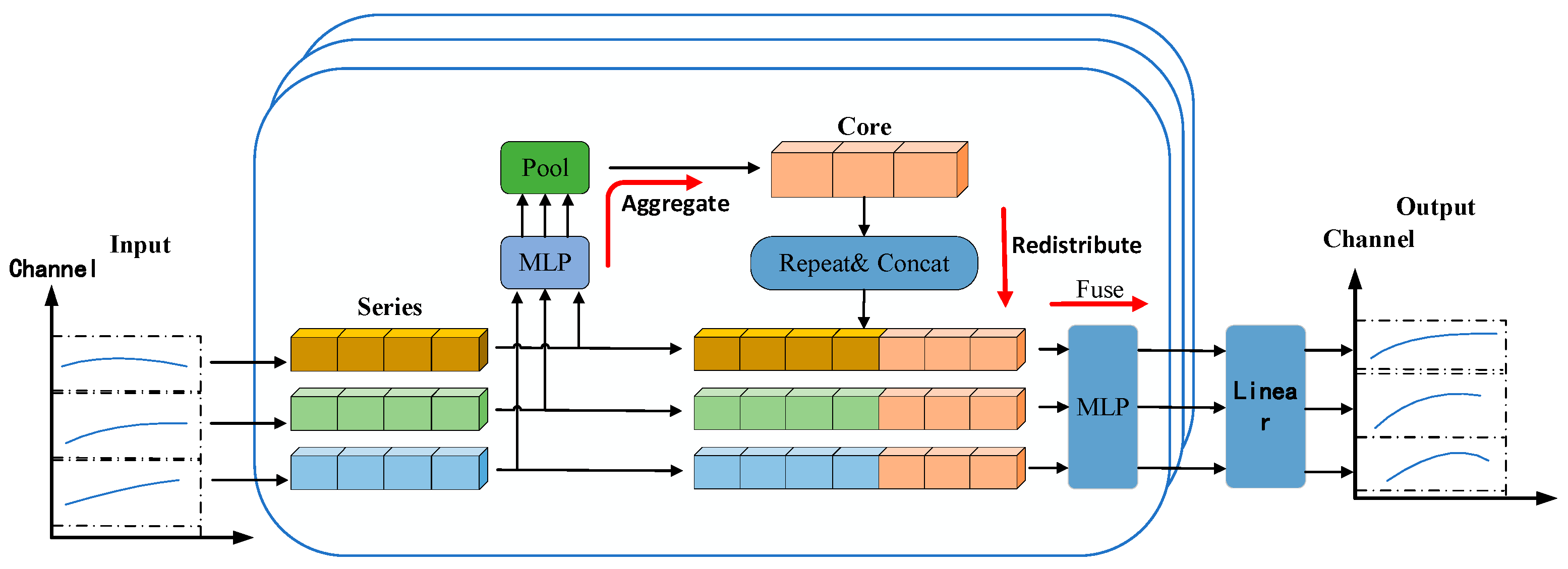

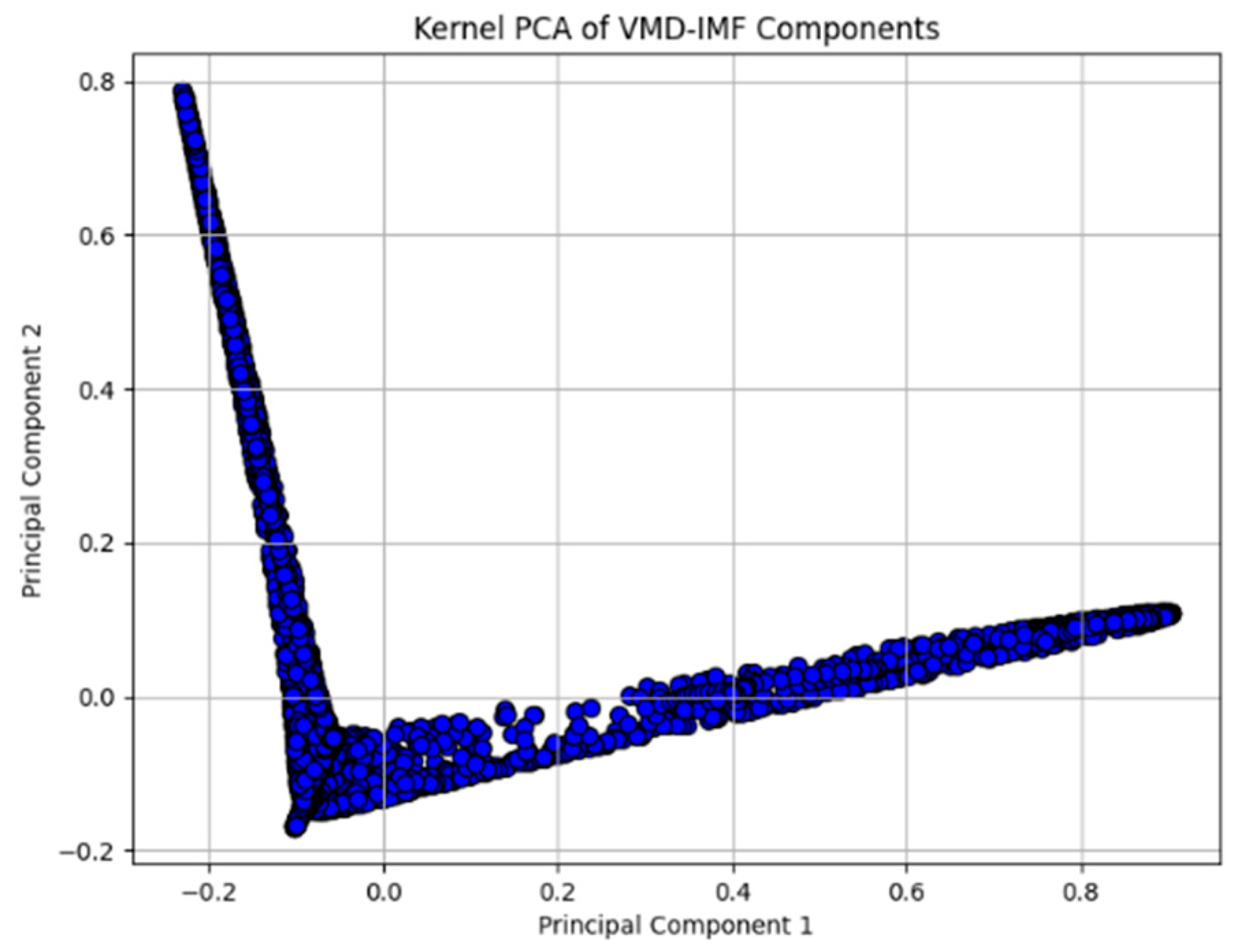

- The top-oil temperature signal is first decomposed using VMD to extract intrinsic mode functions (IMFs) across different frequency bands. These IMFs are then processed via Kernel PCA to perform non-linear dimensionality reduction, thereby mitigating data redundancy and improving computational efficiency.

- (2)

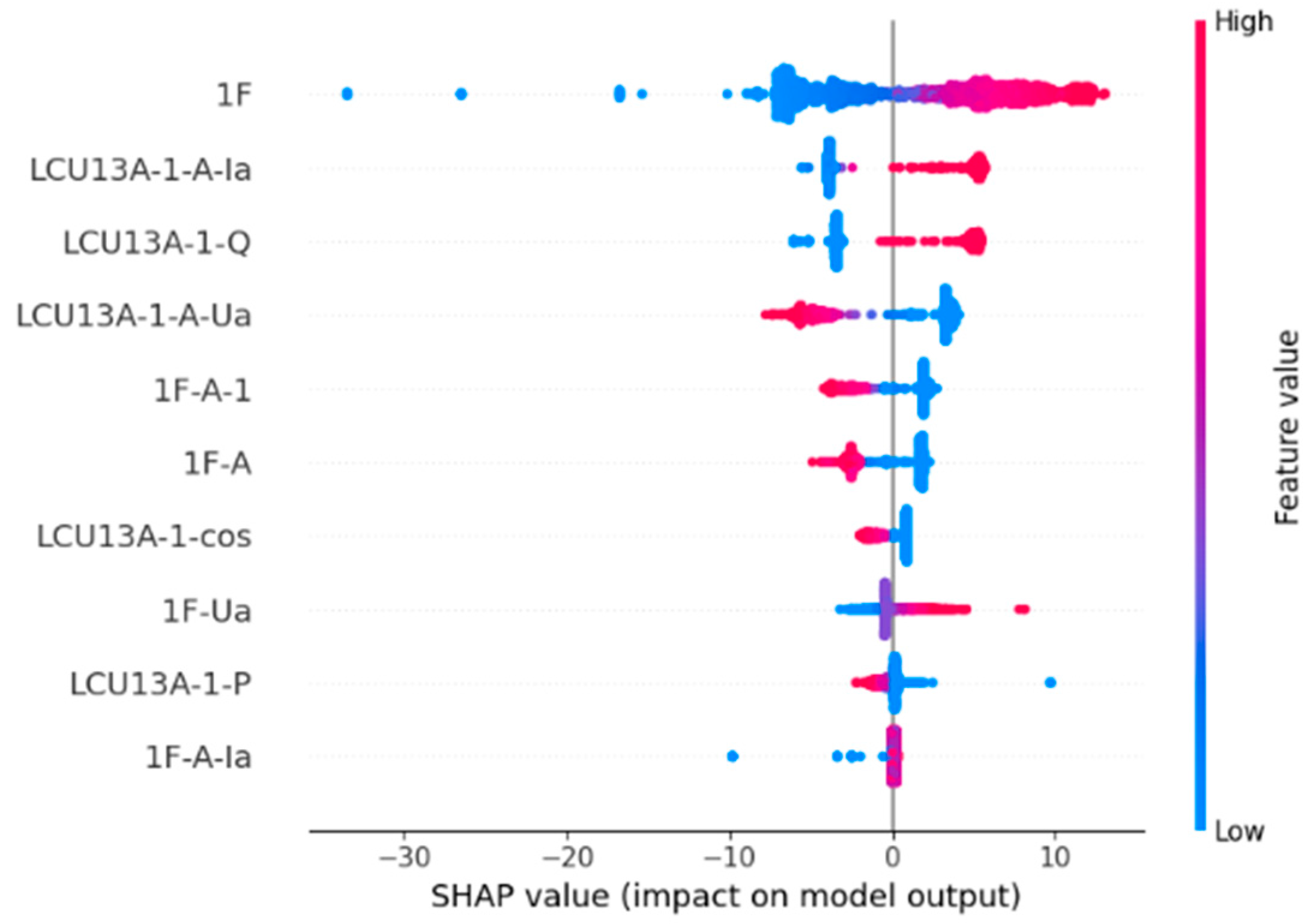

- A TSHAP-MLP approach is introduced to dynamically evaluate the temporal contribution of each feature, incorporating both temporal weighting and a sliding window mechanism. Features with SHAP values exceeding one are retained to reduce the input dimensionality while preserving critical information.

- (3)

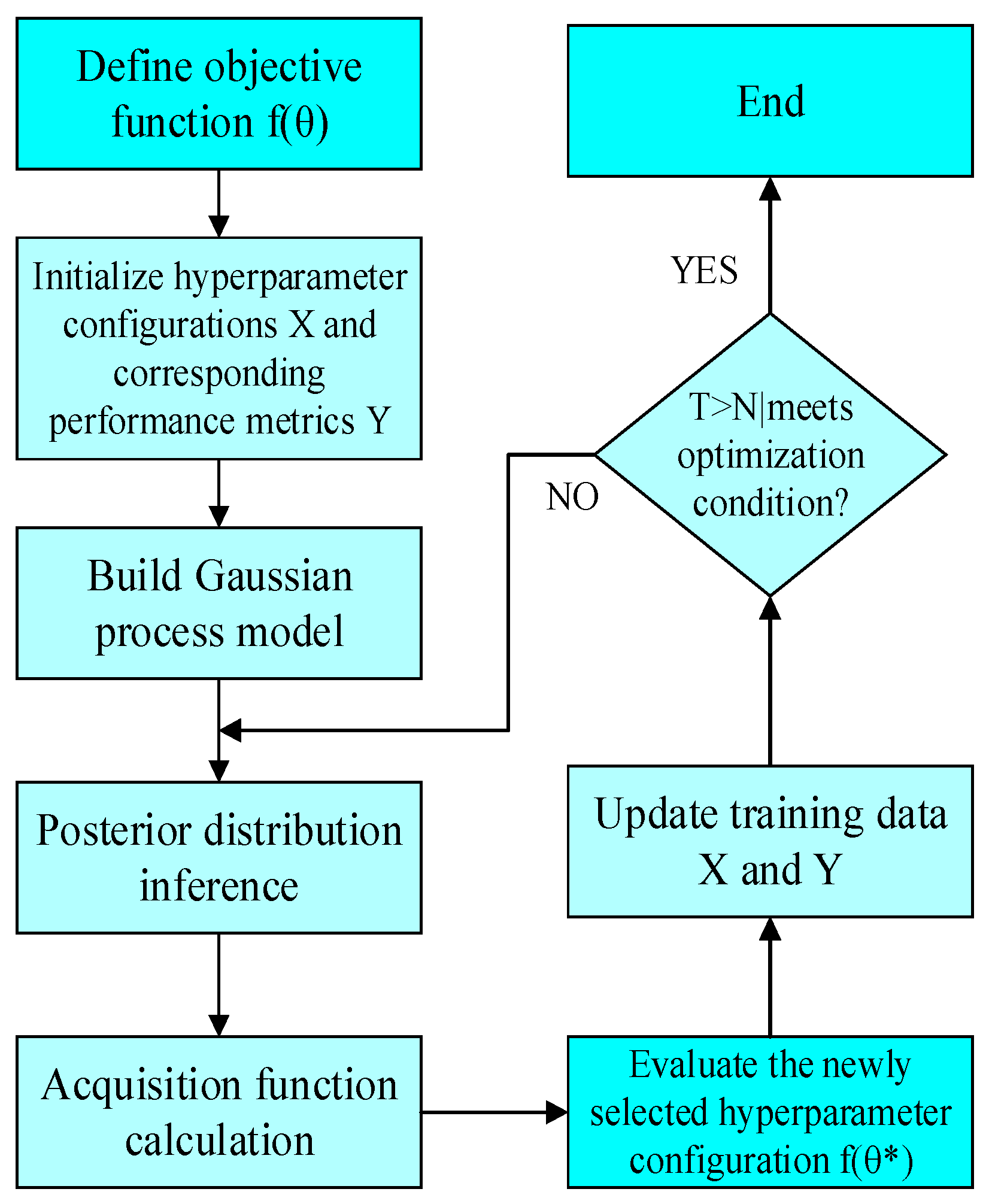

- The SOFTS model is constructed as the core forecasting framework, and its parameters are fine-tuned using an enhanced hierarchical Bayesian optimization algorithm to boost prediction accuracy.
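As a rough illustration of the non-linear reduction step in contribution (1), the sketch below projects a handful of samples onto the first kernel principal component. It is written in plain Python for readability. The RBF kernel, the `gamma` value, the power-iteration eigen-solver, the function names, and the toy data (standing in for IMF samples) are all assumptions made for illustration, not the configuration used in this study.

```python
import math

def rbf_kernel_matrix(X, gamma):
    """Pairwise RBF kernel k(x, y) = exp(-gamma * ||x - y||^2)."""
    n = len(X)
    return [[math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(X[i], X[j])))
             for j in range(n)] for i in range(n)]

def center_kernel(K):
    """Center the (symmetric) kernel matrix in feature space."""
    n = len(K)
    row_mean = [sum(row) / n for row in K]
    total_mean = sum(row_mean) / n
    return [[K[i][j] - row_mean[i] - row_mean[j] + total_mean
             for j in range(n)] for i in range(n)]

def first_kernel_pc(X, gamma=0.5, iters=200):
    """Return training-sample scores on the first kernel PC and its eigenvalue."""
    Kc = center_kernel(rbf_kernel_matrix(X, gamma))
    n = len(Kc)
    # Non-constant start vector: a constant vector lies in the null space of Kc.
    v = [float(i + 1) for i in range(n)]
    for _ in range(iters):
        w = [sum(Kc[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient of the unit vector v approximates the leading eigenvalue.
    lam = sum(v[i] * sum(Kc[i][j] * v[j] for j in range(n)) for i in range(n))
    lam = max(lam, 0.0)
    # Scores of the training samples are sqrt(lambda) * v_i.
    return [math.sqrt(lam) * x for x in v], lam

# Toy data (assumption): two well-separated groups of 2-D samples.
toy_imf_samples = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
                   [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]]
scores, eigenvalue = first_kernel_pc(toy_imf_samples)
```

On this toy input the two groups receive scores of opposite sign, which is the kind of compact non-linear summary the reduction step is meant to provide. A production pipeline would more likely rely on a tested library implementation and retain several components.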

2. Methods

2.1. Min-Max Normalization

2.2. Variational Mode Decomposition

2.3. Kernel Principal Component Analysis (Kernel PCA)

2.4. Feature Extraction Steps

3. TSHAP-MLP Feature Selection

3.1. The Principle Behind TSHAP

3.2. Multilayer Perceptron

3.3. SHAP-MLP Feature Selection

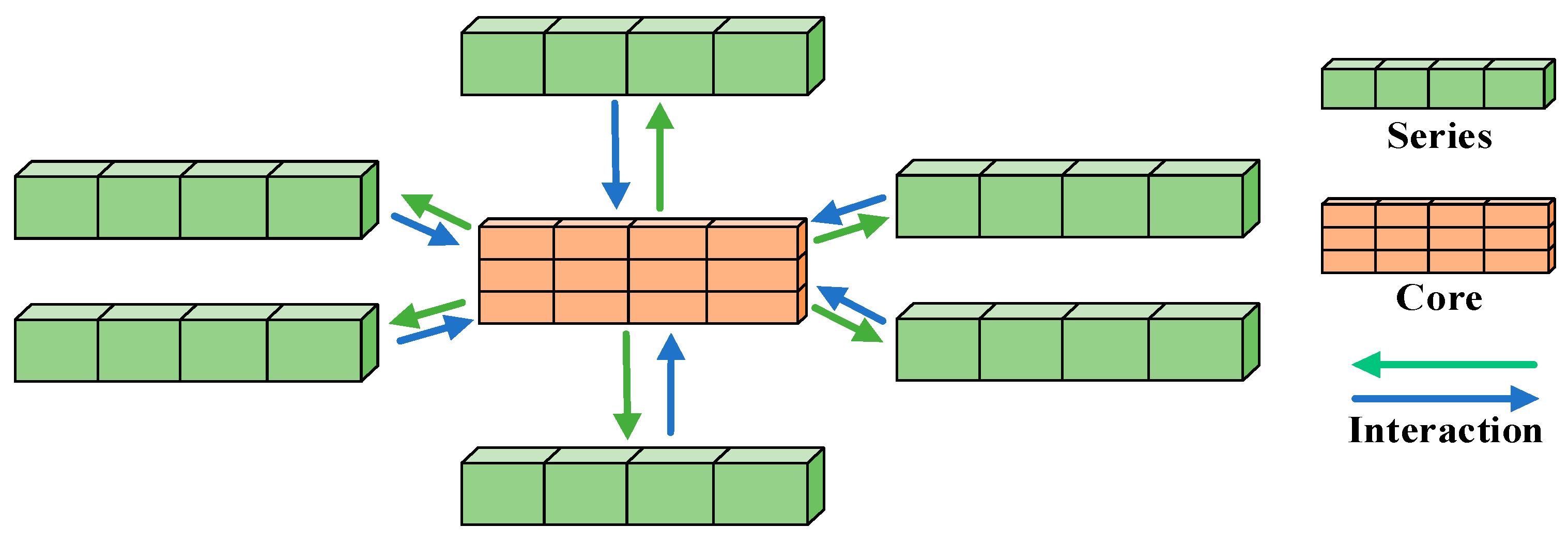

4. SOFTS Model—Hierarchical Bayesian Optimization Algorithm

4.1. SOFTS Model

4.2. Hierarchical Bayesian Optimization Algorithm

5. Case Study

5.1. Data Source and Preprocessing

5.2. Performance Metrics

5.3. Data Processing

5.4. Multivariate Input Feature Selection

6. Results Comparison

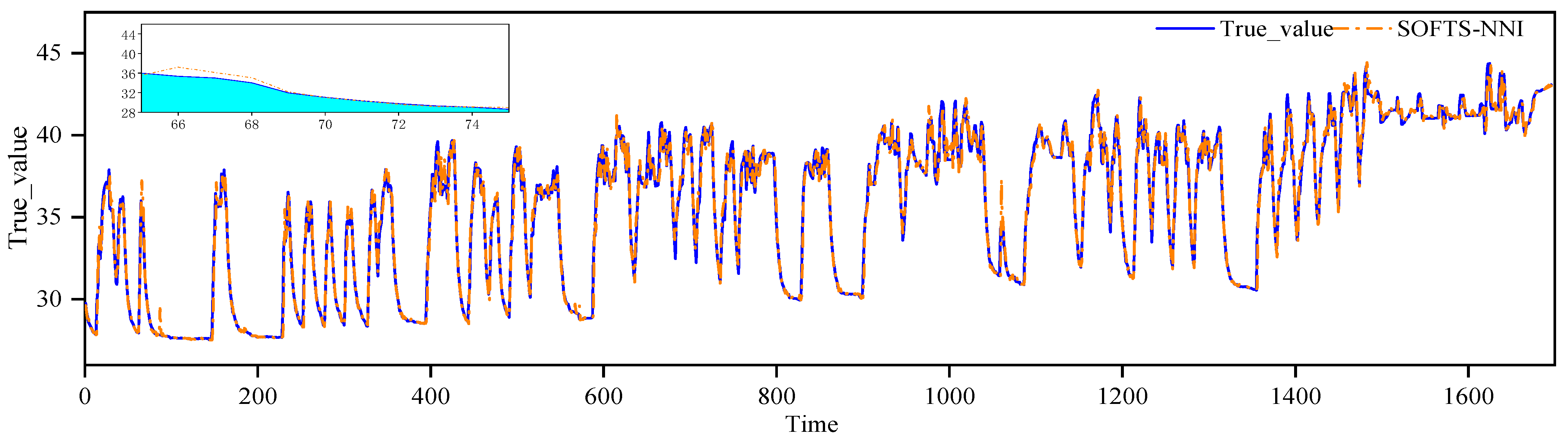

6.1. Analysis of Transformer Top Oil Temperature Prediction Results Based on SOFTS

6.2. Comparison of SOFTS Parameter Tuning Results

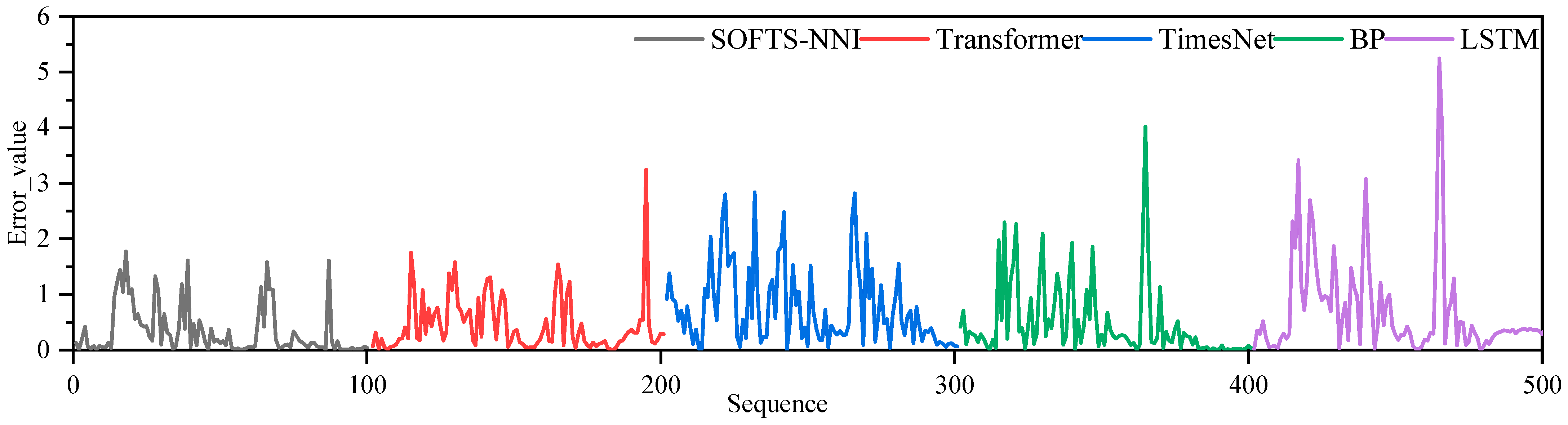

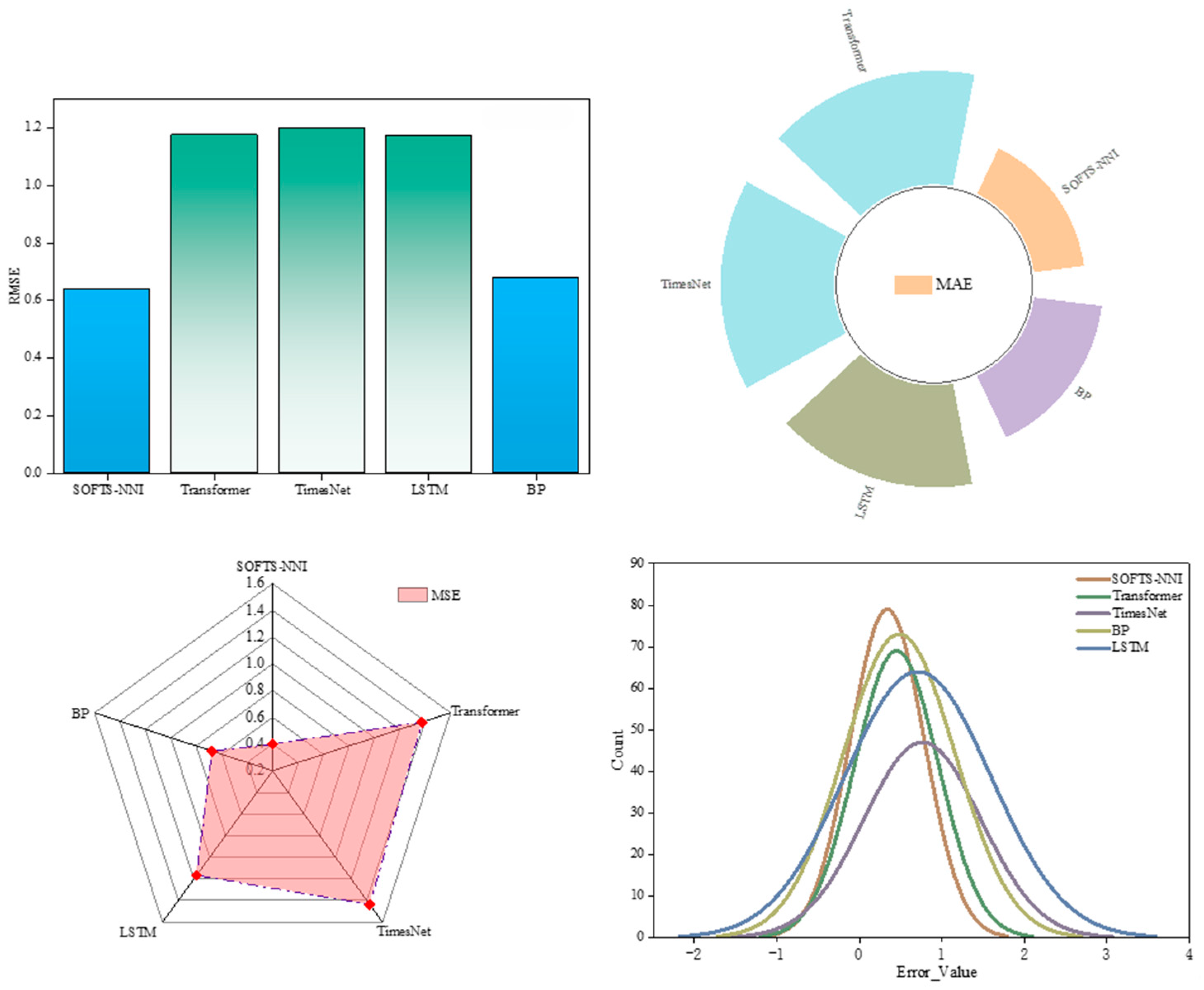

6.3. Comparative Analysis of Different Deep Learning Models

7. Conclusions

- (1) Compared with traditional neural networks such as BP and deep learning models such as LSTM, Transformer, and TimesNet, the SOFTS model showed clear advantages in prediction accuracy and stability. With its hyperparameters tuned by Bayesian optimization, SOFTS achieved the lowest RMSE, MSE, and MAE for top-oil temperature prediction among the compared models (for example, an RMSE of 0.6358 versus 0.8229 for BP, the next-best model).

- (2) This study combines VMD and Kernel PCA for feature extraction from the top-oil temperature signal and applies TSHAP-MLP feature selection to the input features, which improves prediction accuracy (RMSE 0.63 with the seven retained features versus 0.69 with all ten). Together, these techniques reduce the model’s input dimension, remove redundant features, and improve the model’s generalization capability and efficiency.

- (3) This study provides an effective deep learning method for transformer top-oil temperature prediction and demonstrates its practical value for power equipment monitoring. Future research will explore other optimization algorithms and model structures to further improve the prediction of transformer operating conditions, particularly stability and robustness in complex environments.

- (4) This study demonstrates the effectiveness of the SOFTS framework on hydropower transformer data. Leveraging the universal thermal–electrical load coupling, VMD and KPCA extract transferable features, TSHAP-MLP identifies key variables across operating conditions, and the STAR module adapts to diverse load patterns. Future work will validate the method on transformers with varying capacities, cooling methods, and regional grid conditions and explore transfer learning to enhance cross-device generalization.

- (5) The distinctiveness of this study lies in the multi-level integration of decomposition, non-linear reduction, temporal feature selection, and adaptive forecasting, which has not been explored in previous transformer temperature prediction works. Future work will explicitly evaluate the proposed framework under noisy and incomplete data, which are common in real-world monitoring systems, and will extend validation to datasets exhibiting stronger seasonal cycles and more frequent abrupt load fluctuations, thereby providing a more quantitative assessment of its adaptability.

- (6) Beyond methodological improvements, advances in transformer insulating liquids are also reshaping the thermal environment of power equipment. Khelifa et al. [37] showed that adding ZrO2 nanoparticles to mineral, synthetic, and natural esters can significantly enhance AC breakdown voltage at optimal concentrations, improving insulation reliability. Koutras et al. [38] further reported that semiconducting nanoparticles (SiC, TiO2) improve the initial thermal and dielectric performance of natural esters but may accelerate agglomeration and property degradation with aging. These findings highlight that material-driven changes affect heat dissipation and insulation stability, underscoring the need for accurate top-oil temperature forecasting in next-generation transformers.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| VMD | Variational Mode Decomposition |

| BiGRU | Bidirectional Gated Recurrent Unit |

| Kernel PCA | Kernel Principal Component Analysis |

| BWO | Black Widow Optimization |

| ELM | Extreme Learning Machines |

| IMF | Intrinsic Mode Function |

| SVM | Support Vector Machines |

| MLP | Multilayer Perceptron |

| RMSE | Root Mean Square Error |

| CNN | Convolutional Neural Network |

| BiLSTM | Bidirectional Long Short-Term Memory |

| LSTM | Long Short-Term Memory |

| RNN | Recurrent Neural Network |

| CEEMDAN | Complete Ensemble Empirical Mode Decomposition with Adaptive Noise |

| SA | Self-Attention |

| EEMD | Ensemble Empirical Mode Decomposition |

| PSO | Particle Swarm Optimization |

| TOT | Top Oil Temperature |

| HKELM | Hybrid Kernel Extreme Learning Machine |

| IWOA | Improved Whale Optimization Algorithm |

| TCN | Temporal Convolutional Network |

| SOFTS | Series-cOre Fused Time Series forecaster |

| NSE | Nash–Sutcliffe Efficiency |

| MAE | Mean Absolute Error |

| sMAPE | Symmetric Mean Absolute Percentage Error |

| EMD | Empirical Mode Decomposition |

| R2 | Coefficient of Determination |

| MSE | Mean Squared Error |

| KELM | Kernel Extreme Learning Machine |

References

1. Li, S.; Xue, J.; Wu, M.; Xie, R.; Jin, B.; Wang, K. Prediction of transformer top oil temperature based on improved weighted support vector regression based on particle swarm optimization. In Proceedings of the 2021 International Conference on Advanced Electrical Equipment and Reliable Operation (AEERO), Beijing, China, 15–17 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–5.

2. Huang, X.; Zhuang, X.; Tian, F.; Niu, Z.; Chen, Y.; Zhou, Q.; Yuan, C. A Hybrid ARIMA-LSTM-XGBoost Model with Linear Regression Stacking for Transformer Oil Temperature Prediction. Energies 2025, 18, 1432.

3. Zhang, C.; Ma, H.; Hua, L.; Sun, W.; Nazir, M.S.; Peng, T. An evolutionary deep learning model based on TVFEMD, improved sine cosine algorithm, CNN and BiLSTM for wind speed prediction. Energy 2022, 254, 124250.

4. Peng, T.; Zhang, C.; Zhou, J.; Nazir, M.S. An integrated framework of Bi-directional long-short term memory (BiLSTM) based on sine cosine algorithm for hourly solar radiation forecasting. Energy 2021, 221, 119887.

5. Guo, Y.; Chang, Y.; Lu, B. A review of temperature prediction methods for oil-immersed transformers. Measurement 2025, 239, 115383.

6. Dong, N.; Zhang, R.; Li, Z.; Cao, B. Prediction model of transformer top oil temperature based on data quality enhancement. Rev. Sci. Instrum. 2023, 94, 074707.

7. Deng, W.; Yang, J.; Liu, Y.; Wu, C.; Zhao, Y.; Liu, X.; You, J. A Novel EEMD-LSTM Combined Model for Transformer Top-Oil Temperature Prediction. In Proceedings of the 2021 8th International Forum on Electrical Engineering and Automation (IFEEA), Xi’an, China, 3–5 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 52–56.

8. Cao, Y. An Improved Prediction Method of Transformer Oil Temperature. In Proceedings of the 2023 IEEE 6th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 24–26 February 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 987–991.

9. Hu, J.; Liu, Y.; Peng, Y.; Chen, C.; Wan, J.; Cao, B. Data-Driven short-term prediction for top oil temperature of Residential Transformer. In Proceedings of the 2022 China International Conference on Electricity Distribution (CICED), Changsha, China, 7–8 September 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 303–309.

10. Chang, J.; Duan, X.; Tao, J.; Ma, C.; Liao, M. Power Transformer Oil Temperature Prediction Based on Spark Deep Learning. In Proceedings of the 2022 IEEE International Conference on High Voltage Engineering and Applications (ICHVE), Chongqing, China, 25–29 September 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–4.

11. Wang, K.; Zhang, H.; Wang, X.; Li, Q. Prediction method of transformer top oil temperature based on VMD and GRU neural network. In Proceedings of the 2020 IEEE International Conference on High Voltage Engineering and Application (ICHVE), Beijing, China, 6–10 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–4.

12. Wang, X.; Fu, C.; Zhao, Z.; Fu, B.; Jiang, T.; Xu, Y.; Zhang, L.; Hou, Y. Prediction of transformer top oil temperature based on AC-BiLSTM model. In Proceedings of the 2023 4th International Conference on Smart Grid and Energy Engineering (SGEE), Zhengzhou, China, 24–26 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 390–394.

13. Lei, L.; Liao, F.; Liu, B.; Tang, P.; Zheng, Z.; Qi, Z.; Zhang, Z. Research on Transformer Temperature Rise Prediction and Fault Warning Based on Attention-GRU. In Proceedings of the 2023 5th International Conference on Smart Power & Internet Energy Systems (SPIES), Shenyang, China, 1–4 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 94–99.

14. Wang, Y.Q.; Yue, G.L.; He, J.; Liu, H.L.; Bi, J.G.; Chen, S.F. Research on Top-Oil Temperature Prediction of Power Transformer Based on Kalman Filtering Algorithm. High Volt. Appar. 2014, 50, 74–79+86.

15. Dong, X.; Jing, L.; Tian, R.; Dong, X. Transformer Top-Oil Temperature Prediction Method Based on LSTM Model. Proc. China Electr. Power Soc. 2023, 38, 38–45.

16. Yang, J.; Lu, W.; Liu, X. Prediction of Top Oil Temperature for Oil-immersed Transformers Based on PSO-LSTM. In Proceedings of the 2021 4th International Conference on Energy, Electrical and Power Engineering (CEEPE), Chongqing, China, 23–25 April 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 278–283.

17. Zou, D.; Xu, H.; Quan, H.; Yin, J.; Peng, Q.; Wang, S.; Dai, W.; Hong, Z. Top-Oil Temperature Prediction of Power Transformer Based on Long Short-Term Memory Neural Network with Self-Attention Mechanism Optimized by Improved Whale Optimization Algorithm. Symmetry 2024, 16, 1382.

18. Liu, J.; Hou, Z.; Liu, B.; Zhou, X. Mathematical and Machine Learning Innovations for Power Systems: Predicting Transformer Oil Temperature with Beluga Whale Optimization-Based Hybrid Neural Networks. Mathematics 2025, 13, 1785.

19. Hao, Y.; Zhang, Z.; Liu, X.; Yang, Y.; Liu, J. Inversion Method for Transformer Winding Hot Spot Temperature Based on Gated Recurrent Unit and Self-Attention and Temperature Lag. Sensors 2024, 24, 4734.

20. Zhang, Z.; Kong, W.; Li, L.; Zhao, H.; Xin, C. Prediction of transformer oil temperature based on an improved PSO neural network algorithm. In Recent Advances in Electrical & Electronic Engineering (Formerly Recent Patents on Electrical & Electronic Engineering); Bentham Science Publishers: Sharjah, United Arab Emirates, 2024; Volume 17, pp. 29–37.

21. Li, K.; Xu, Y.; Wei, B.; Hua, H.; Qi, X. Transformer Top-Oil Temperature Prediction Model Based on PSO-HKELM. High Volt. Eng. 2018, 44, 2501–2508.

22. Li, R. Research on Transformer Top-Oil Temperature Prediction Method Based on Bayesian Network. Master’s Thesis, Southwest Jiaotong University, Chengdu, China, 2018.

23. Qi, X.; Li, K.; Yu, X.; Lou, J. Transformer Top-Oil Temperature Interval Prediction Based on Kernel Extreme Learning Machine and Bootstrap Method. Proc. Chin. Soc. Electr. Eng. 2017, 37, 5821–5828+5860.

24. Li, S.; Xue, J.; Wu, M.; Xie, R.; Jin, B.; Zhang, H.; Li, Q. Transformer Top-Oil Temperature Prediction Based on Particle Swarm Optimization Improved Weighted Support Vector Regression. High Volt. Appar. 2021, 5, 103–109.

25. Patro, S.; Sahu, K.K. Normalization: A preprocessing stage. arXiv 2015, arXiv:1503.06462.

26. Dragomiretskiy, K.; Zosso, D. Variational Mode Decomposition. IEEE Trans. Signal Process. 2014, 62, 531–544.

27. Zhang, C.; Li, Z.; Ge, Y.; Liu, Q.; Suo, L.; Song, S.; Peng, T. Enhancing short-term wind speed prediction based on an outlier-robust ensemble deep random vector functional link network with AOA-optimized VMD. Energy 2024, 296, 131173.

28. Schölkopf, B.; Smola, A.; Müller, K.-R. Kernel principal component analysis. In Artificial Neural Networks—ICANN’97; Gerstner, W., Germond, A., Hasler, M., Nicoud, J.-D., Eds.; Springer: Berlin/Heidelberg, Germany, 1997; Volume 1327, pp. 583–588.

29. Lundberg, S.M.; Lee, S.-I. A unified approach to interpreting model predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NeurIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

30. Taud, H.; Mas, J.F. Multilayer Perceptron (MLP). In Geomatic Approaches for Modeling Land Change Scenarios; Camacho Olmedo, M.T., Paegelow, M., Mas, J.-F., Escobar, F., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 451–455.

31. Han, L.; Chen, X.-Y.; Ye, H.-J.; Zhan, D.-C. SOFTS: Efficient multivariate time series forecasting with series-core fusion. arXiv 2024, arXiv:2404.14197.

32. Snoek, J.; Larochelle, H.; Adams, R.P. Practical Bayesian optimization of machine learning algorithms. In Proceedings of the 25th International Conference on Neural Information Processing Systems (NeurIPS 2012), Lake Tahoe, NV, USA, 3–6 December 2012; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25.

33. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NeurIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

34. Wu, H.; Hu, T.; Liu, Y.; Zhou, H.; Wang, J.; Long, M. TimesNet: Temporal 2D-variation modeling for general time series analysis. arXiv 2022, arXiv:2210.02186.

35. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

36. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

37. Khelifa, H.; Vagnon, E.; Beroual, A. Effect of zirconia nanoparticles on the AC breakdown voltage of mineral oil, synthetic, and natural esters. IEEE Trans. Dielectr. Electr. Insul. 2024, 31, 731–737.

38. Koutras, K.N.; Peppas, G.D.; Tegopoulos, S.N.; Kyritsis, A.; Yiotis, A.G.; Tsovilis, T.E.; Gonos, I.F.; Pyrgioti, E.C. Aging impact on relative permittivity, thermal properties, and lightning impulse voltage performance of natural ester oil filled with semiconducting nanoparticles. IEEE Trans. Dielectr. Electr. Insul. 2023, 30, 1598–1607.

Parameters of the SOFTS deep learning model

| lr | d_model | d_ff | dropout | Train_Epochs | Batch_Size | Test_Loss |
|---|---|---|---|---|---|---|
| 0.01627 | 16 | 8 | 0.06194 | 16 | 89 | 0.008477 |
| 0.08974 | 8 | 8 | 0.12194 | 17 | 90 | 0.024948 |
| 0.00013 | 32 | 8 | 0.12194 | 15 | 90 | 0.036025 |
| 0.00014 | 8 | 16 | 0.00195 | 8 | 88 | 0.096082 |
| 0.08635 | 32 | 32 | 0.07821 | 16 | 112 | 0.164758 |

| Number of IMFs K | Residual Energy (%) | RMSE | MAE |
|---|---|---|---|
| 3 | 12.4 | 0.72 | 0.57 |
| 4 | 7.1 | 0.65 | 0.52 |
| 5 | 4.8 | 0.63 | 0.50 |
| 6 | 4.6 | 0.65 | 0.51 |
| 7 | 4.5 | 0.66 | 0.52 |
| 8 | 4.5 | 0.67 | 0.53 |

| Abbreviation | Feature Description | SHAP Value |

|---|---|---|

| 1F | 1F: Main transformer phase A winding temperature | 6.158109 |

| LCU13A-1-A-Ia | LCU13A: No. 1 main transformer HV side power factor cos | 4.533494 |

| LCU13A-1-Q | 1F: Outlet A phase voltage Ua | 4.246660 |

| LCU13A-1-A-Ua | LCU13A: Active power on the high-voltage side of No. 1 main transformer | 4.204933 |

| 1F-A-1 | 1F: Active power (transmitter 1) | 2.454016 |

| 1F-A | LCU13A: No. 1 main transformer HV side phase A current Ia | 2.163187 |

| LCU13A-1-cos | 1F: Outlet A phase current Ia | 1.013335 |

| 1F-Ua | LCU13A: No. 1 main transformer high-voltage side reactive power Q | 0.874439 |

| LCU13A-1-P | 1F: Reactive power | 0.454664 |

| 1F-A-Ia | LCU13A: No. 1 main transformer HV side power factor cos | 0.206889 |

| SHAP Threshold | Number of Retained Features | RMSE | MAE |
|---|---|---|---|
| 0.2 | 10 | 0.69 | 0.56 |
| 1.0 | 7 | 0.63 | 0.50 |
| 4.0 | 4 | 0.66 | 0.53 |
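The retained-feature counts in the threshold table can be reproduced directly from the SHAP values reported in the feature table. The short sketch below applies each threshold to those values; the helper name `select_features` is hypothetical, and the keys are simply the abbreviations as listed in the feature table.

```python
# SHAP values copied from the feature table, keyed by the listed abbreviation.
shap_values = {
    "1F": 6.158109,
    "LCU13A-1-A-Ia": 4.533494,
    "LCU13A-1-Q": 4.246660,
    "LCU13A-1-A-Ua": 4.204933,
    "1F-A-1": 2.454016,
    "1F-A": 2.163187,
    "LCU13A-1-cos": 1.013335,
    "1F-Ua": 0.874439,
    "LCU13A-1-P": 0.454664,
    "1F-A-Ia": 0.206889,
}

def select_features(values, threshold):
    """Keep the features whose SHAP value is strictly above the threshold."""
    return [name for name, value in values.items() if value > threshold]

# Evaluate the three thresholds compared in the table.
retained = {t: select_features(shap_values, t) for t in (0.2, 1.0, 4.0)}
for threshold, names in retained.items():
    print(threshold, len(names), names)
```

The counts come out as 10, 7, and 4 features, matching the table, with the threshold of 1.0 corresponding to the lowest reported RMSE and MAE.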

| Metric | SOFTS |
|---|---|
| RMSE | 0.63221 |
| MAE | 0.39568 |
| R2 | 0.98195 |
| MSE | 0.39969 |
| sMAPE | 0.01069 |
| NSE | 0.98204 |
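For readers who want to recompute metrics of this kind, the sketch below evaluates all six on a pair of series using commonly used definitions. The exact formulas used in this study are not restated here, so the following are assumptions: sMAPE is expressed as a fraction rather than a percentage, NSE is taken as 1 − SS_res/SS_tot, and R2 is taken as the squared Pearson correlation (chosen only because the table reports slightly different R2 and NSE values). The function name and the toy data are hypothetical.

```python
import math

def regression_metrics(y_true, y_pred):
    """Compute RMSE, MAE, MSE, R2, sMAPE, and NSE for two equal-length series."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    ss_pred = sum((p - mean_p) ** 2 for p in y_pred)
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    # Assumption: R2 as squared Pearson correlation between observed and predicted.
    r2 = cov * cov / (ss_tot * ss_pred)
    # Assumption: NSE as one minus residual sum of squares over total sum of squares.
    nse = 1.0 - sum(e * e for e in errors) / ss_tot
    # Assumption: sMAPE reported as a fraction, not multiplied by 100.
    smape = sum(2.0 * abs(t - p) / (abs(t) + abs(p))
                for t, p in zip(y_true, y_pred)) / n
    return {"RMSE": math.sqrt(mse), "MAE": mae, "R2": r2,
            "MSE": mse, "sMAPE": smape, "NSE": nse}

# Toy temperatures in degrees Celsius (illustrative values only).
observed = [40.0, 42.0, 44.0, 46.0]
predicted = [41.0, 41.0, 45.0, 45.0]
metrics = regression_metrics(observed, predicted)
```

As a consistency check on the table itself, RMSE is the square root of MSE: the reported RMSE of 0.63221 squares to roughly 0.39969, the reported MSE.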

| Models | RMSE | MSE | MAE |
|---|---|---|---|
| SOFTS-NNI | 0.6358 | 0.3997 | 0.3990 |
| Transformer | 1.1733 | 1.3768 | 0.9009 |
| TimesNet | 1.1986 | 1.4367 | 0.8975 |
| LSTM | 1.0806 | 1.1679 | 0.8089 |
| BP | 0.8229 | 0.6772 | 0.5422 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tong, Z.; Xu, Y.; Meng, X.; Zheng, Y.; Peng, T.; Zhang, C. An Advanced Power System Modeling Approach for Transformer Oil Temperature Prediction Integrating SOFTS and Enhanced Bayesian Optimization. Processes 2025, 13, 2888. https://doi.org/10.3390/pr13092888
