Abstract

Classification prediction technology, which uses labeled data for training to enable autonomous decision-making, has emerged as a pivotal tool across numerous fields. The Elman neural network (ENN) exhibits potential in tackling nonlinear problems. However, its training process is prone to becoming trapped in local optima and suffers from a slow convergence rate. To address these shortcomings, an ENN classifier based on the Hyperactivity Rat Swarm Optimizer (HRSO), named HRSO-ENNC, is proposed in this paper. First, HRSO is divided into two phases, search and mutation, by means of a nonlinear adaptive parameter. Subsequently, five search actions are introduced to enhance the global exploration and local exploitation capabilities of HRSO. Furthermore, a stochastic wandering strategy is employed, which improves the ability to escape local optima. Finally, HRSO replaces the original gradient descent method in ENN to optimize the neural connection weights and thresholds. The experimental results verify the accuracy and stability of HRSO-ENNC through comparisons with other algorithm-based classifiers on benchmark functions, classification datasets and an AlSi10Mg process classification problem.

1. Introduction

Classification prediction [1] is a significant technique within the areas of pattern recognition and data mining. Its objective is to define a model or function (i.e., a classifier) that distinguishes data classes or concepts based on the characteristics of the dataset, which enables the system to predictively label unknown objects and make autonomous decisions. Numerous classification prediction methods have been proposed, such as Bayes [2], Naive Bayes [3], K-Nearest Neighbor (KNN) [4], Support Vector Machine (SVM) [5] and Neural Network [6]. However, some studies [7,8,9] demonstrate that no single classifier is optimal for all datasets. In contrast to standard classification problems such as image or text classification, process classification prediction, as a specific classification task, requires dealing with large volumes of high-dimensional, nonlinear and label-ambiguous data. These challenges lead to suboptimal performance of traditional classification methods on process data [10,11]. Therefore, investigating more efficient classification methods tailored to different process datasets is essential.

As a variant of the Artificial Neural Network (ANN), the Elman neural network (ENN) is inspired by neurobiological principles. It is a local recursion delay feedback neural network that offers better stability and adaptability than ordinary neural networks, which makes it particularly adept at solving nonlinear problems. Consequently, ENN has attracted significant research attention in many engineering and scientific fields. Zhang et al. [12] utilize ENN to calculate the synthetic efficiency of a hydro turbine and find that it possesses superior nonlinear mapping abilities. In [13], an enhanced ENN is presented to solve the problem of quality prediction during product design. Li et al. [14] apply ENN to predict sectional passenger flow in urban rail transit, and the results highlight the accuracy and usefulness of the method. Gong et al. [15] employ ENN and wavelet decomposition to predict wind power generation, achieving good results. A modified ENN [16] is suggested for establishing predictive relationships between the compressive and flexural strength of jarosite mixed concrete. A modified Elman network based on hidden recurrent feedback [17] is proposed to predict the absolute gas emission quantity, resulting in improved accuracy and efficiency.

ENN is also widely applied to classification prediction problems. Chiroma et al. [18] propose the Jordan-ENN classifier to help medical practitioners quickly detect malaria and determine its severity. Boldbaatar et al. [19] develop an intelligent classification system based on a recurrent wavelet ENN to distinguish between benign and malignant breast tumors. An improved Elman-AdaBoost algorithm [20] is proposed for fault diagnosis of rolling bearings operating under random noise conditions, achieving better accuracy and practicability. Arun et al. [21] introduce a deep ENN classifier for static sign language recognition. Zhang et al. [22] propose a hybrid classifier that combines a convolutional neural network (CNN) and ENN for radar waveform recognition, resulting in an improved overall recognition success ratio. A fusion model that integrates Radial Basis Function (RBF) and ENN is suggested for residential load identification, and the results indicate that this method improves identification performance [23].

However, ENN also exhibits inherent limitations, particularly difficulty in escaping local optima and slow convergence, which ultimately result in low accuracy [24,25]. The primary cause is the difficulty of obtaining suitable weights and thresholds [26]. To overcome these weaknesses, it has become increasingly popular to combine neural networks with Swarm Intelligence (SI), a class of nature-inspired stochastic optimization algorithms, to optimize the weights and thresholds. In [27], the flamingo search algorithm is utilized to refine an enhanced Elman Spike neural network, thereby enabling it to effectively classify lung cancer in CT images. In [28], the weights, thresholds and number of hidden layer neurons of ENN are optimized by a genetic algorithm. Wang et al. [29] utilize the adaptive ant colony algorithm to optimize ENN and demonstrate its efficacy in compensating a drilling inclinometer sensor. Although the aforementioned methods of optimizing ENN via SI algorithms have demonstrated significant advantages across various fields, traditional classification methods still dominate the problem of process classification in Selective Laser Melting (SLM). Barile et al. [30,31] employed CNN to classify the deformation behavior of AlSi10Mg specimens fabricated under different SLM processes. Ji et al. [32] achieved effective classification of spatter features under different laser energy densities using SVM and Random Forest (RF). Li et al. [33] classified extracted melt pool feature data with a Backpropagation neural network (BPNN), SVM and a Deep Belief Network (DBN), thereby reducing the likelihood of porosity.

Based on the literature review, a two-phase Rat Swarm Optimizer consisting of five search actions is proposed in this paper, named the Hyperactivity Rat Swarm Optimizer (HRSO). As stated by Moghadam et al. [34], RSO offers a simple structure and fast convergence. However, similar to other SI algorithms, RSO has difficulty escaping local optima when handling complex objective functions or a large number of variables. For this reason, the algorithm proposed in this study is built upon the following four aspects.

First, a nonlinear adaptive parameter is introduced to regulate the balance between the exploration and exploitation parts of the search phase. Second, the center point search and cosine search are introduced to enhance the effectiveness of global search in the exploration part. Third, three methods, namely rush search, contrast search and random search, are introduced into the exploitation part to improve convergence speed and local search ability. Fourth, a stochastic wandering strategy is introduced to enhance the ability to jump out of local extreme values.

Another theme of this paper is data classification prediction. To improve the classification prediction capabilities of ENN, a classifier based on HRSO is proposed, named HRSO-ENNC. Unlike the traditional iterative training method for neural networks, the proposed classifier utilizes HRSO to adjust the weights and thresholds. Tests on benchmark functions, classification datasets and a practical AlSi10Mg process classification problem demonstrate the accuracy and stability of HRSO-ENNC.

The structure of this manuscript is arranged in the following manner. Section 2 provides an overview of the ENN and the original RSO. Section 3 elaborates on the proposed HRSO and the design of an ENN classifier. Section 4 presents the experiments conducted and the corresponding analysis of the results. Section 5 outlines the conclusions drawn from the research presented in this paper.

2. Related Works

2.1. Elman Neural Network

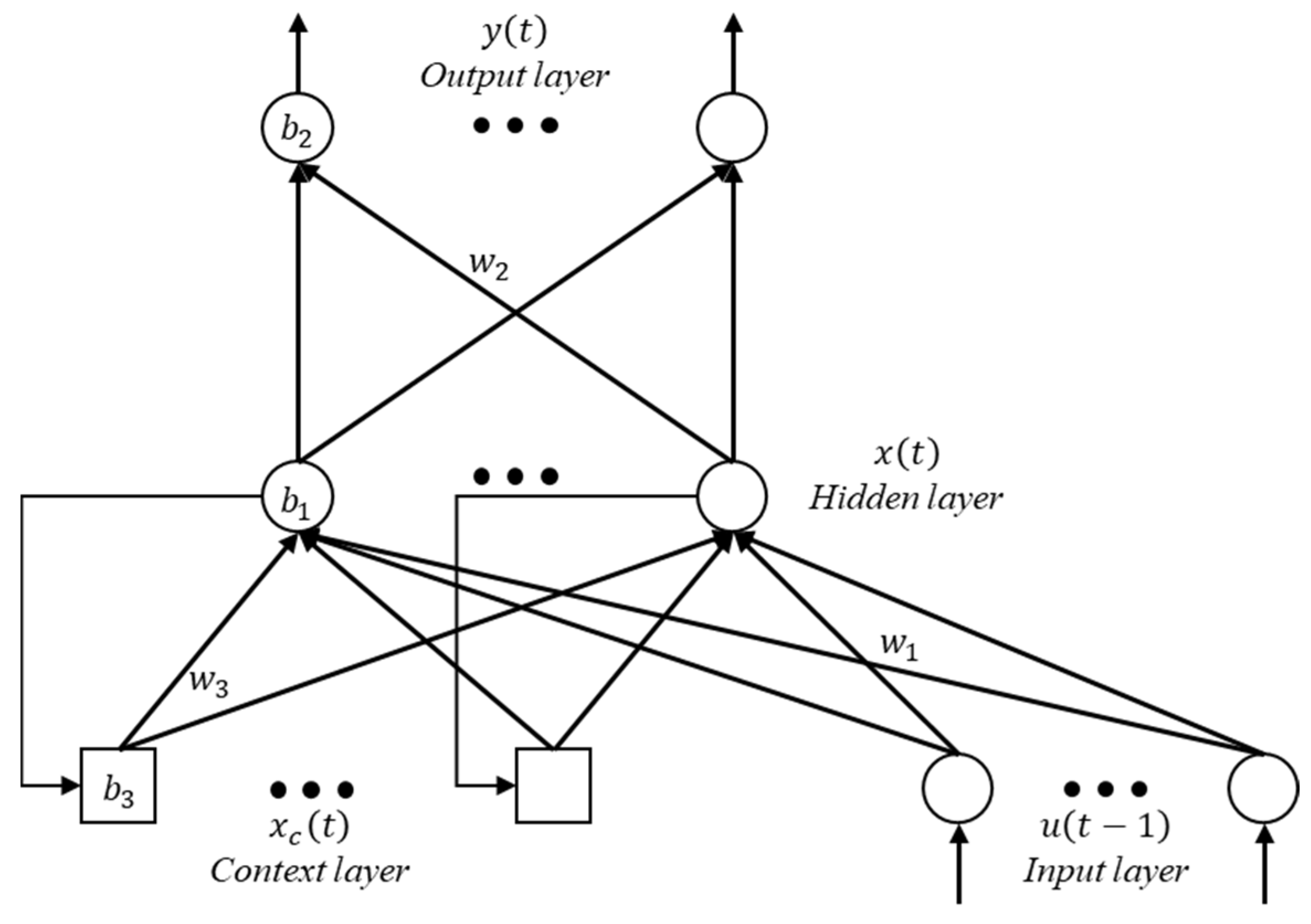

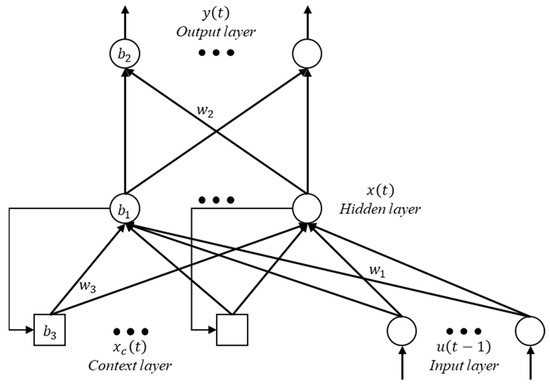

The neural network is an information processing framework and a universal machine learning method with broad applicability in numerous fields. The Elman neural network, first introduced by J. L. Elman [35] in 1990, is a well-known local recursion delay feedback neural network. Its structure is similar to that of the BP network [36], with the addition of a context layer. The architecture of the ENN is shown in Figure 1.

Figure 1.

Architecture diagram of Elman neural network.

The topological structure of ENN typically comprises four distinct layers. The input layer, comprising linear neurons, serves as the entry for external signals and transmits them to the hidden layer. Through the activation function, hidden layer units perform translation or dilation on input signals. Subsequently, the context layer remembers output values from the hidden layer in the previous time step. This layer is used as a one-step delay module to provide a feedback mechanism. Ultimately, the output layer generates the final outputs based on the processed information from previous layers. This structure provides the system with the capacity to handle time-varying characteristics, thereby bolstering network stability and adaptation.

As described above, assume that the ENN has M inputs and N outputs and that the hidden layer and the context layer each consist of K neurons. The weights from the inputs to the hidden layer, from the hidden layer to the outputs and from the context layer to the hidden layer are denoted by w1, w2 and w3, respectively. The thresholds of the hidden layer, the output layer and the context layer are denoted by b1, b2 and b3, respectively. The mathematical model is then expressed by Formula (1):

In Formula (1), t is the current time step. u(t − 1) denotes the inputs provided to the ENN during the previous time step. x(t) represents the outputs generated by the hidden layer at the t-th step, and the outputs of the context layer are denoted as x_c(t). y(t) represents the outputs of the ENN at the current time step. The transfer functions of the hidden layer and the output layer are denoted as f(∙) and g(∙), respectively.
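The symbol definitions above can be made concrete with a minimal sketch of a single ENN time step. It assumes that Formula (1) takes the usual Elman form x(t) = f(w1·u(t − 1) + w3·x_c(t) + b1), x_c(t) = x(t − 1), y(t) = g(w2·x(t) + b2). The function name `elman_step`, the choice of tanh for f and the identity for g are illustrative assumptions, and the context-layer threshold b3 is deliberately left out because its placement is not assumed here.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, v: Sequence[float]) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in w]


def elman_step(
    u_prev: Sequence[float],          # u(t-1), length M
    x_context: Sequence[float],       # x_c(t) = x(t-1), length K
    w1: Matrix,                       # K x M, input -> hidden
    w2: Matrix,                       # N x K, hidden -> output
    w3: Matrix,                       # K x K, context -> hidden
    b1: Sequence[float],              # hidden thresholds, length K
    b2: Sequence[float],              # output thresholds, length N
    f: Callable[[float], float] = math.tanh,        # assumed hidden transfer function
    g: Callable[[float], float] = lambda s: s,      # assumed linear output function
) -> Tuple[Vector, Vector]:
    """Return (x(t), y(t)) under the assumed standard Elman equations."""
    from_input = matvec(w1, u_prev)
    from_context = matvec(w3, x_context)
    x = [f(a + c + b) for a, c, b in zip(from_input, from_context, b1)]
    y = [g(s + b) for s, b in zip(matvec(w2, x), b2)]
    return x, y  # x is fed back as the context-layer output at the next step


# Usage: M = 2 inputs, K = 2 hidden/context neurons, N = 1 output.
x_t, y_t = elman_step(
    u_prev=[0.5, -0.5],
    x_context=[0.0, 0.0],
    w1=[[1.0, 0.0], [0.0, 1.0]],
    w2=[[1.0, 1.0]],
    w3=[[0.0, 0.0], [0.0, 0.0]],
    b1=[0.0, 0.0],
    b2=[0.5],
)
```

Passing the returned x(t) back in as `x_context` on the next call reproduces the one-step delay feedback that the context layer provides.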

2.2. Rat Swarm Optimizer

As a meta-heuristic algorithm based on swarm intelligence, the Rat Swarm Optimizer [37] emulates the rat population behavior of chasing and hunting. This algorithm can be summarized in two main parts. The first part involves rats chasing prey through the optimal individual to obtain a better position for searching. The second part is the process by which the rats attack the prey. The mathematical expressions for the two cases are provided in Formula (2), and RSO’s pseudo-algorithm is presented in Algorithm 1.

In Formula (2), X denotes the position of the rat and t is the current iteration number. X_best^t represents the optimal individual of the population during the t-th iteration. X_i^t signifies the position of the i-th rat and P^t is the prey position for X_i^t. X_i^(t+1) represents the next position of the i-th rat. A and C serve as update coefficients. The coefficient A is calculated by Formula (3):

In Formula (3), R and C are random numbers within [1, 5] and [0, 2], respectively. T is the maximum iteration number.

| Algorithm 1 | RSO |
| --- | --- |
| 1 | Initialization: |
| 2 | Generate the initial population Xi of the RSO |
| 3 | Calculate fitness scores and identify X*best |
| 4 | while t ≤ T do |
| 5 | for i = 1, 2, …, N do |
| 6 | Update parameters using Formula (3) |
| 7 | Update population positions using Formula (2) |
| 8 | end for |
| 9 | Calculate fitness scores and update X*best |
| 10 | end while |
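Algorithm 1 can be sketched in a few lines of Python. The sketch assumes that Formulas (2) and (3) take the commonly published RSO form P = A·X_i + C·(X_best − X_i), X_i(t+1) = |X_best − P| and A = R − t·(R/T). The function name `rso_minimize`, the clamping of positions to the search bounds and the `sphere` test objective are illustrative assumptions rather than details taken from this paper.

```python
import random
from typing import Callable, List, Sequence, Tuple


def rso_minimize(
    objective: Callable[[Sequence[float]], float],
    dim: int,
    lower: float,
    upper: float,
    pop_size: int = 30,
    max_iter: int = 500,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimize `objective` with a basic RSO loop (assumed formula forms)."""
    rng = random.Random(seed)
    # Steps 1-3: random initial population and initial best individual.
    pop = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(pop_size)]
    best = list(min(pop, key=objective))
    best_fit = objective(best)
    for t in range(1, max_iter + 1):
        for i in range(pop_size):
            r = rng.uniform(1.0, 5.0)        # R in [1, 5]
            c = rng.uniform(0.0, 2.0)        # C in [0, 2]
            a = r - t * (r / max_iter)       # assumed Formula (3)
            moved = []
            for x_d, b_d in zip(pop[i], best):
                prey = a * x_d + c * (b_d - x_d)   # chasing the prey
                candidate = abs(b_d - prey)        # attacking the prey
                moved.append(min(max(candidate, lower), upper))  # keep in bounds (assumption)
            pop[i] = moved
        # Step 9: re-evaluate and keep the best individual found so far.
        leader = min(pop, key=objective)
        leader_fit = objective(leader)
        if leader_fit < best_fit:
            best, best_fit = list(leader), leader_fit
    return best, best_fit


def sphere(x: Sequence[float]) -> float:
    """Simple unimodal test objective with its minimum at the origin."""
    return sum(v * v for v in x)


best_pos, best_val = rso_minimize(sphere, dim=5, lower=-10.0, upper=10.0, max_iter=100)
```

The defaults of 30 individuals and 500 iterations mirror the settings reported for the benchmark experiments.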

3. Methods

3.1. Improved Algorithm

In this section, a Hyperactivity Rat Swarm Optimizer (HRSO) based on multiple types of chase search and attack actions is proposed to address the shortcomings of RSO. HRSO implements a two-phase calculation process comprising a search phase and a mutation phase. The search phase is further divided into two parts, exploration and exploitation, by means of a nonlinear adaptive parameter. There are five search actions: center point search and cosine search belong to the exploration part, while rush search, contrast search and random search belong to the exploitation part. The mutation phase employs a stochastic wandering strategy. Algorithm 2 presents the pseudo-algorithm for HRSO.

| Algorithm 2 | HRSO |
| --- | --- |
| 1 | Initialization: |
| 2 | Generate the initial population Xi of the HRSO |
| 3 | Calculate fitness scores and identify X*best |
| 4 | while t ≤ T do |
| 5 | Execute Search Phase |
| 6 | for i = 1, 2, …, N do |
| 7 | Update parameters using Formulas (4) and (5) |
| 8 | if \|E\| ≥ 1 then |
| 9 | Execute Exploration Search using Algorithm 3 |
| 10 | else if \|E\| < 1 then |
| 11 | Execute Exploitation Search using Algorithm 4 |
| 12 | end if |
| 13 | end for |
| 14 | Calculate fitness scores and identify X*best |
| 15 | Execute Mutation Phase using Algorithm 5 |
| 16 | Calculate fitness scores and identify X*best |
| 17 | end while |
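The control flow of Algorithm 2 can be sketched as a skeleton in which the individual formulas are passed in as functions. This is only a structural sketch: `hrso_loop` and its callable parameters `compute_e`, `explore`, `exploit` and `mutate` are hypothetical names standing in for Formulas (4) and (5) and Algorithms 3 to 5, and tracking the best-so-far individual across iterations is an assumption.

```python
from typing import Callable, List, Sequence, Tuple

Position = List[float]
Population = List[Position]


def hrso_loop(
    population: Sequence[Sequence[float]],
    fitness: Callable[[Sequence[float]], float],
    compute_e: Callable[[int, int], float],                       # stands in for Formulas (4)-(5)
    explore: Callable[[Population, int, Position, int], Position],  # stands in for Algorithm 3
    exploit: Callable[[Population, int, Position, int], Position],  # stands in for Algorithm 4
    mutate: Callable[[Population, Position, int], Population],      # stands in for Algorithm 5
    max_iter: int,
) -> Tuple[Position, float]:
    """Run the two-phase loop of Algorithm 2 for a minimization problem."""
    pop: Population = [list(x) for x in population]
    best: Position = list(min(pop, key=fitness))
    for t in range(1, max_iter + 1):
        # Search phase: |E| decides between exploration and exploitation.
        for i in range(len(pop)):
            e = compute_e(t, max_iter)
            if abs(e) >= 1:
                pop[i] = list(explore(pop, i, best, t))
            else:
                pop[i] = list(exploit(pop, i, best, t))
        best = list(min(pop + [best], key=fitness))
        # Mutation phase, followed by a second evaluation of the best individual.
        pop = [list(x) for x in mutate(pop, best, t)]
        best = list(min(pop + [best], key=fitness))
    return best, fitness(best)
```

Keeping the search actions behind callables makes it possible to test the phase switching separately from the update formulas themselves.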

3.2. Nonlinear Adaptive Parameter

A nonlinear adaptive parameter E is employed to enhance RSO. It regulates the proportion of the exploration and exploitation parts during the search phase. Depending on the absolute value of E, the algorithm performs either the exploration part (when |E| ≥ 1) or the exploitation part (when |E| < 1). The mathematical expression for E is given in Formula (4).

In Formula (4), E0 is a random number from –1 to 1. cp is an adaptive parameter that decreases progressively with iterations. Its representation is given as Formula (5):

According to Formulas (4) and (5), the range of values for parameter E can be determined as −2 to 2.

3.3. Exploration Search

To enable RSO to explore unvisited regions of the search space more thoroughly and thus improve the efficiency of discovering the global optimal position, center point search and cosine search are introduced in the exploration part of the proposed algorithm.

Center point search is a linear search method based on the average position of the population, which uses cp to control the extended search. This search method is given as Formula (6).

In Formula (6), mean(X_i^t) denotes the average position of the population, and r2 is a random number within [0, 1].

The cosine search is a search pattern characterized by a wide range of oscillatory variation. A set of adaptive random numbers z is used to control the cosine fluctuation variation. This search method is given as Formula (7).

In Formula (7), the mathematical expression for the parameter z is as follows, in Formula (8):

In Formula (8), r3 and r5 are random numbers, and r6 is a Gaussian-distributed random number whose usable range is controlled by a random number r4. When r4 ≥ cp, the part of r6 greater than 0.5 is used; otherwise, the part less than 0.5 is used.

To maximize the exploration performance of HRSO, a random number r1, with values between 0 and 1, is used to control the search method. Specifically, if r1 ≥ 0.5, the search iteration is performed using Formula (6), otherwise, Formula (7) is used. The pseudo-algorithm for details of the exploration search is presented in Algorithm 3.

| Algorithm 3 | Exploration Search |
| --- | --- |
| 1 | Update parameters using Formulas (5) and (8) |
| 2 | Calculate mean(X_i^t) based on the current population |
| 3 | if \|E\| ≥ 1 then |
| 4 | if r1 ≥ 0.5 then |
| 5 | Update population positions using Formula (6) |
| 6 | else |
| 7 | Update population positions using Formula (7) |
| 8 | end if |
| 9 | end if |

3.4. Exploitation Search

To enhance the convergence speed and local search capability, three search methods are introduced into the exploitation part of the proposed algorithm, which are rush search, contrast search and random search.

The rush search uses the same update as the original algorithm, given in Formula (2), but the ranges of the parameters R and C are set to [1, 3] and [–1, 1], respectively, in order to focus the algorithm on the local neighborhood and enhance local optimization.

The contrast search is performed by randomly selecting two individuals, j1 and j2, from the population and comparing them with the i-th individual, approaching in the direction of the optimal fitness value among the three individuals. The mathematical expression is as in Formulas (9) and (10):

In Formulas (9) and (10), r7 denotes a random number that lies between 0 and 1. m is a constant with the value 1 or 2.

The random search also selects two individuals, and the current individual searches in the direction of the one with the better fitness value between j1 and j2. It can be expressed by Formulas (11) and (12):

In Formulas (11) and (12), r8 is a random number within [0, 1].

To maximize the exploitation performance of the proposed algorithm, a random constant k is introduced to determine the search method, where k takes values of 1, 2 and 3. Specifically, k = 1 employs Formula (2) for the rush search, k = 2 utilizes Formulas (9) and (10) for the contrast search and k = 3 applies Formulas (11) and (12) for the random search. The pseudo-algorithm for details of the exploitation search is presented in Algorithm 4.

| Algorithm 4 | Exploitation Search |
| --- | --- |
| 1 | Update random parameters k, j1, and j2 |
| 2 | if \|E\| < 1 then |
| 3 | if k = 1 then |
| 4 | Update population positions using Formula (2) |
| 5 | else if k = 2 then |
| 6 | Update random parameter jm |
| 7 | if fit(jm) < fit(i) then |
| 8 | Update population positions using Formula (9) |
| 9 | else |
| 10 | Update population positions using Formula (10) |
| 11 | end if |
| 12 | else if k = 3 then |
| 13 | if fit(j1) < fit(j2) then |
| 14 | Update population positions using Formula (11) |
| 15 | else |
| 16 | Update population positions using Formula (12) |
| 17 | end if |
| 18 | end if |
| 19 | end if |
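The branching in Algorithm 4 can be restated as a small dispatch function that reports which formula is applied, without implementing the update formulas themselves. The names `draw_parameters` and `exploitation_formula` are hypothetical. Drawing j1 and j2 as two distinct individuals other than i, and reading the comparison in the contrast search as fit(jm) against fit(i), are assumptions made for this sketch.

```python
import random
from typing import Tuple


def draw_parameters(rng: random.Random, pop_size: int, i: int) -> Tuple[int, int, int, int]:
    """Draw k in {1, 2, 3}, two peers j1 != j2 (both != i, an assumption) and m in {1, 2}."""
    k = rng.randint(1, 3)
    others = [j for j in range(pop_size) if j != i]
    j1, j2 = rng.sample(others, 2)
    m = rng.randint(1, 2)
    return k, j1, j2, m


def exploitation_formula(k: int, fit_i: float, fit_j1: float, fit_j2: float, m: int) -> int:
    """Return the number of the formula that Algorithm 4 applies (minimization)."""
    if k == 1:
        return 2                        # rush search
    if k == 2:
        fit_jm = fit_j1 if m == 1 else fit_j2
        return 9 if fit_jm < fit_i else 10   # contrast search
    if k == 3:
        return 11 if fit_j1 < fit_j2 else 12  # random search
    raise ValueError("k must be 1, 2 or 3")


# Usage: individual i = 0 in a population of 30.
rng = random.Random(0)
k, j1, j2, m = draw_parameters(rng, pop_size=30, i=0)
formula = exploitation_formula(k, fit_i=5.0, fit_j1=3.0, fit_j2=9.0, m=m)
```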

3.5. Stochastic Wandering Strategy

In the mutation phase, a stochastic wandering strategy is employed to improve the ability of the algorithm to jump out of local extreme values. A part of the population is randomly selected, compared with the global optimum and updated iteratively, and the individual with the best fitness is retained. This strategy is mathematically expressed in Formulas (13) and (14):

In Formulas (13) and (14), randn is a set of normally distributed random numbers. X*worst denotes the worst individual in the population at the t-th iteration. It is worth noting that a constant NS is used to control the proportion of individuals selected in this phase, with NS set to 0.2 in this paper. The pseudo-algorithm for details of the mutation phase is presented in Algorithm 5.

| Algorithm 5 | Mutation Phase |
| --- | --- |
| 1 | for i = 1, 2, …, N do |
| 2 | if fit(i) > fit(best) then |
| 3 | Update population positions using Formula (13) |
| 4 | else if fit(i) = fit(best) then |
| 5 | Update population positions using Formula (14) |
| 6 | end if |
| 7 | end for |

3.6. Classifier Design

In constructing a complete HRSO-ENNC, the structural design of the hidden layer is also a key factor, because the number of hidden-layer neurons has a significant influence on the overall performance of the ENN. An insufficient number of neurons can cause feature information to be lost during propagation, preventing the desired accuracy from being achieved. Conversely, an excessive number of neurons may lead to a more complex system that is prone to overfitting. The configuration of the hidden layer also affects the optimization of the weights and thresholds. Therefore, it is necessary to select a reasonable number of hidden-layer neurons. The method presented in [38] is employed in this research, mathematically formalized in Formula (15):

Formula (15) is a common empirical equation for determining the number of hidden-layer neurons in a neural network, where a represents an integer within the range of [1, 10].
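The hidden-layer size also fixes how many values the optimizer has to tune. The sketch below assumes that Formula (15) is the widely used empirical rule K = sqrt(M + N) + a, rounds the square root to the nearest integer (a choice made here, not stated in the text) and counts one value per entry of w1, w2, w3, b1, b2 and b3 as defined in Section 2.1. The function names `hidden_neurons` and `parameter_count` are hypothetical.

```python
import math


def hidden_neurons(m: int, n: int, a: int) -> int:
    """Empirical hidden-layer size K = sqrt(M + N) + a, with a in [1, 10] (assumed form)."""
    if not 1 <= a <= 10:
        raise ValueError("a must be an integer in [1, 10]")
    return round(math.sqrt(m + n)) + a


def parameter_count(m: int, n: int, k: int) -> int:
    """Number of weights and thresholds encoded in one candidate solution."""
    w1 = k * m   # input -> hidden weights
    w2 = n * k   # hidden -> output weights
    w3 = k * k   # context -> hidden weights
    b1 = k       # hidden-layer thresholds
    b2 = n       # output-layer thresholds
    b3 = k       # context-layer thresholds
    return w1 + w2 + w3 + b1 + b2 + b3


# Usage: 7 input features and 3 classes, with a = 5 chosen for illustration.
k_hidden = hidden_neurons(7, 3, 5)          # 8
n_params = parameter_count(7, 3, k_hidden)  # 163
```

Because the count grows with K squared through w3, a larger hidden layer quickly increases the dimension of the optimization problem.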

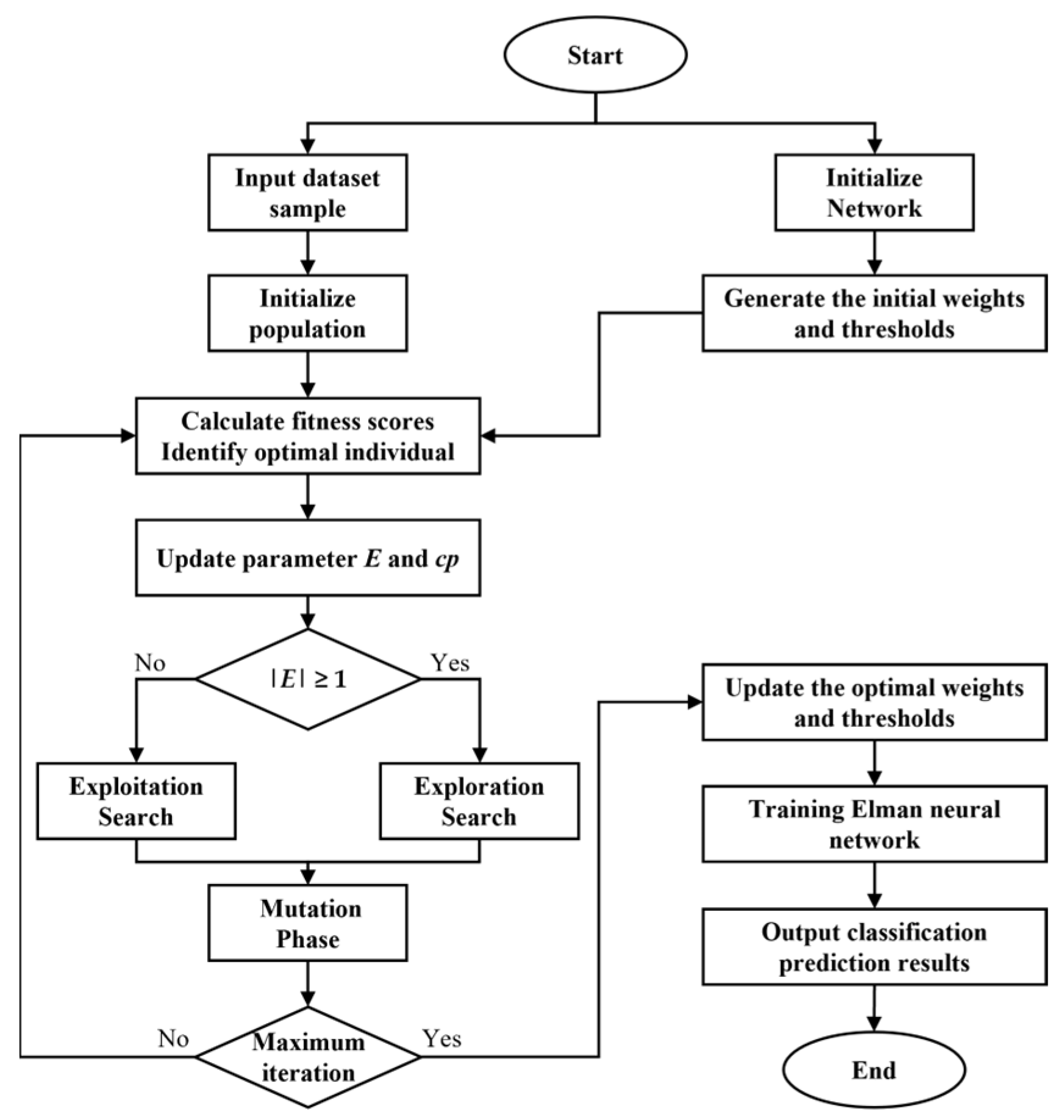

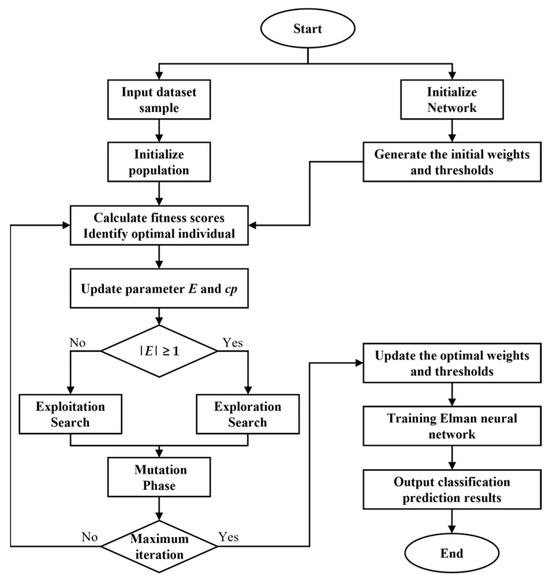

Additionally, the gradient descent method is commonly employed to determine suitable weights and thresholds in the traditional ENN, but it is easily trapped in a local optimum, which ultimately results in low accuracy. As described in the previous sections, the proposed HRSO algorithm balances the proportions of exploration and exploitation and shows a strong ability to jump out of local optima. It therefore serves as an adaptive global training method: the proposed algorithm replaces the traditional training process to overcome the shortcomings of the ENN in the optimization of weights and thresholds. Algorithm 6 presents the pseudo-algorithm for HRSO-ENNC, and Figure 2 shows the corresponding flowchart.

| Algorithm 6 | HRSO-ENNC |
| --- | --- |
| 1 | Input: Dataset sample |
| 2 | Normalize the dataset |
| 3 | Select the training set by the stratified k-fold cross-validation method |
| 4 | for i = 1, 2, …, k do |
| 5 | Initialize ENN and HRSO algorithm parameters |
| 6 | Calculate fitness scores and identify X*best |
| 7 | while t ≤ T do |
| 8 | Update network parameters using Algorithm 2 |
| 9 | Calculate fitness scores and identify X*best |
| 10 | end while |
| 11 | Get X*best for the i-th fold |
| 12 | Update network parameters and train ENN |
| 13 | Output classification prediction results |
| 14 | end for |

Figure 2.

The flowchart of HRSO-ENNC.

4. Experimental Results and Analysis

4.1. Benchmark Functions Test

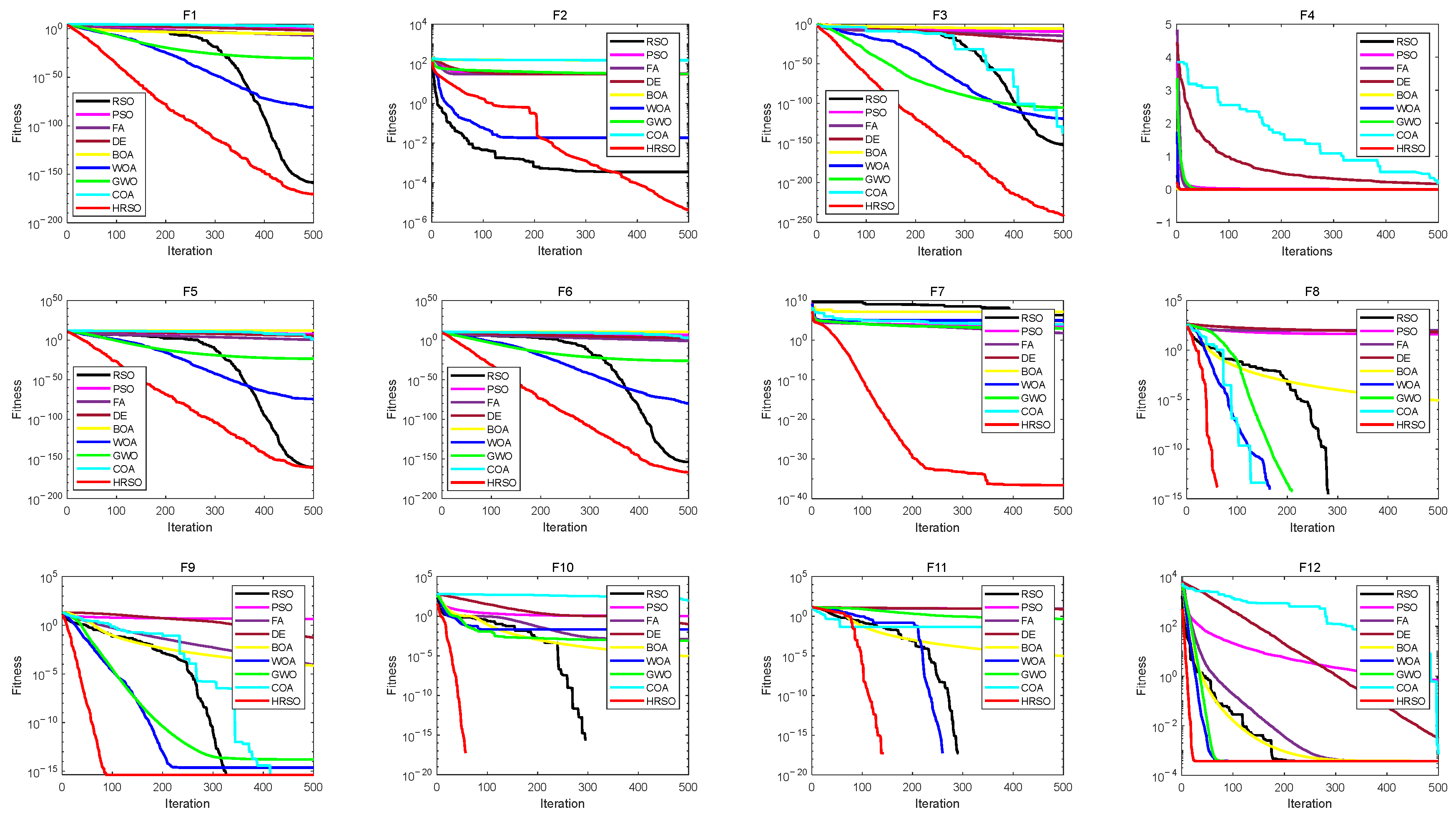

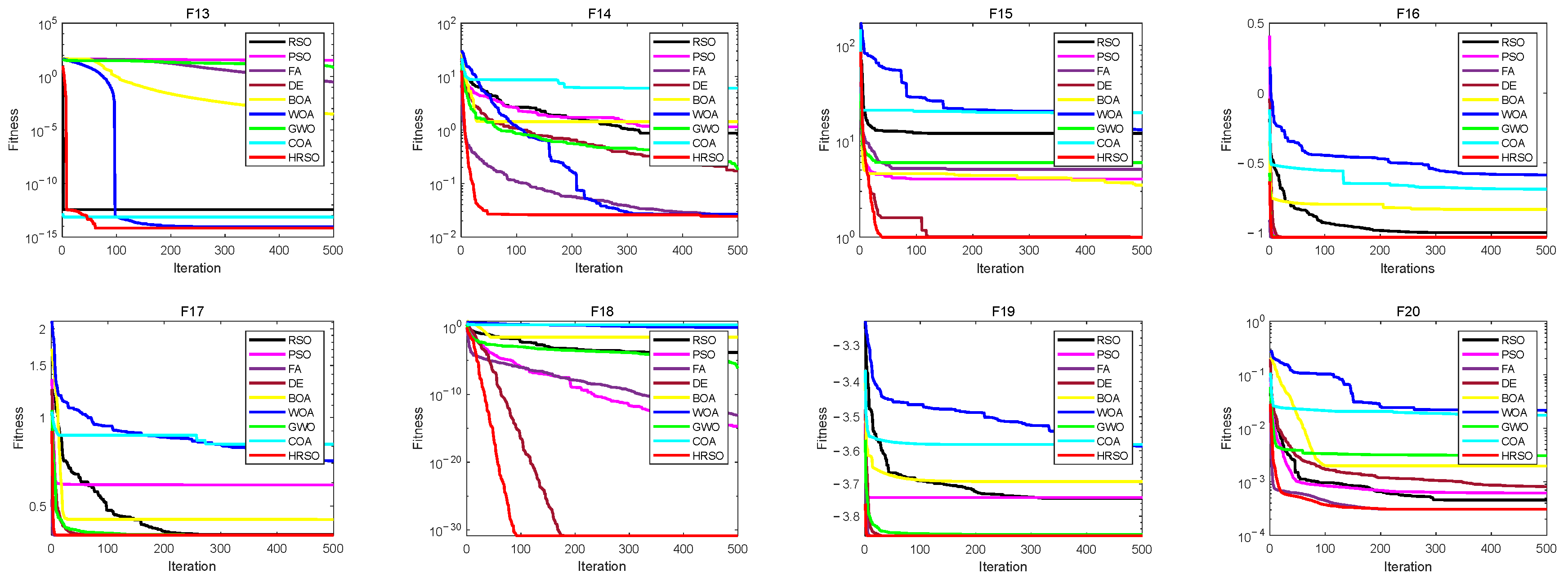

This section evaluates HRSO using benchmark functions F1 through F20. F1–F7, listed in Table 1, are unimodal functions that are employed to evaluate the algorithm's performance when there is only one global minimum. F8–F13, listed in Table 2, are multimodal functions that are employed to evaluate the algorithm's performance in searching for a global solution in the presence of numerous local minima. F14–F20, listed in Table 3, are fixed-dimension multimodal functions that are employed to evaluate the convergence of the algorithm on low-dimensional problems.

Table 1.

Expression of F1–F7 Benchmark Function.

Table 2.

Expression of F8–F13 Benchmark Function.

Table 3.

Expression of F14–F20 Benchmark Function.

In all of the experiments, the proposed HRSO is compared with RSO, PSO [39], FA [40], DE [41], BOA [42], WOA [43], GWO [44] and COA [45]. For all of the algorithms employed in this research, the population size is set to 30 and the maximum number of iterations is set to 500. Each algorithm is independently tested 20 times on the benchmark functions listed in Table 1, Table 2 and Table 3. The results of the test experiments are reported in Table 4, where Best, Mean and Std denote the optimal value, arithmetic mean and sample standard deviation, respectively, of the results from 20 independent runs of the algorithm. These metrics reflect the algorithm's best-case performance, overall average performance and the degree of dispersion of its outputs.
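The three reported statistics can be computed from the final objective values of the independent runs as follows. This is a minimal sketch for minimization benchmarks; the function name `summarize_runs` and the sample values are illustrative and are not results from the paper.

```python
import statistics
from typing import Dict, Sequence


def summarize_runs(final_values: Sequence[float]) -> Dict[str, float]:
    """Best (lowest), arithmetic mean and sample standard deviation over runs."""
    return {
        "best": min(final_values),
        "mean": statistics.mean(final_values),
        "std": statistics.stdev(final_values),  # sample standard deviation (n - 1)
    }


# Usage with three made-up final values from independent runs.
summary = summarize_runs([1.0, 2.0, 3.0])
```

`statistics.stdev` divides by n − 1, which matches the sample standard deviation named in the text; `statistics.pstdev` would give the population version instead.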

Table 4.

Experimental Results for Benchmark Function.

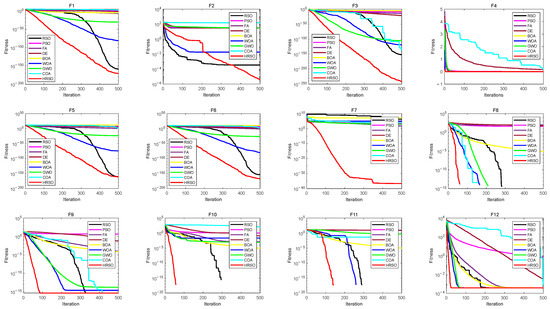

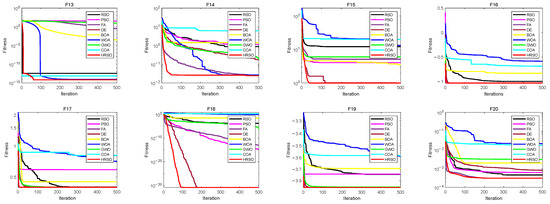

Experimental results in Table 4 reveal that HRSO achieves the best performance on F2, F3, F5 and F8–F20. F1 and F4 are both bowl-shaped unimodal functions. For F1, the best result of HRSO is marginally inferior to that of RSO, but HRSO demonstrates superior mean and standard deviation scores. This likely stems from RSO's strong performance on simple unimodal functions, combined with its susceptibility to local optima. On the more complex F4, consistent with the F1 results, HRSO outperforms RSO and the other algorithms in both best and mean metrics. Although HRSO's standard deviation on F4 is slightly worse than that of RSO, the difference is marginal. F6 and F7 are smooth, plate-shaped unimodal functions. For these functions, although RSO achieves a marginally better best value, HRSO attains the best mean and standard deviation scores among all algorithms, further supporting the aforementioned conjecture.

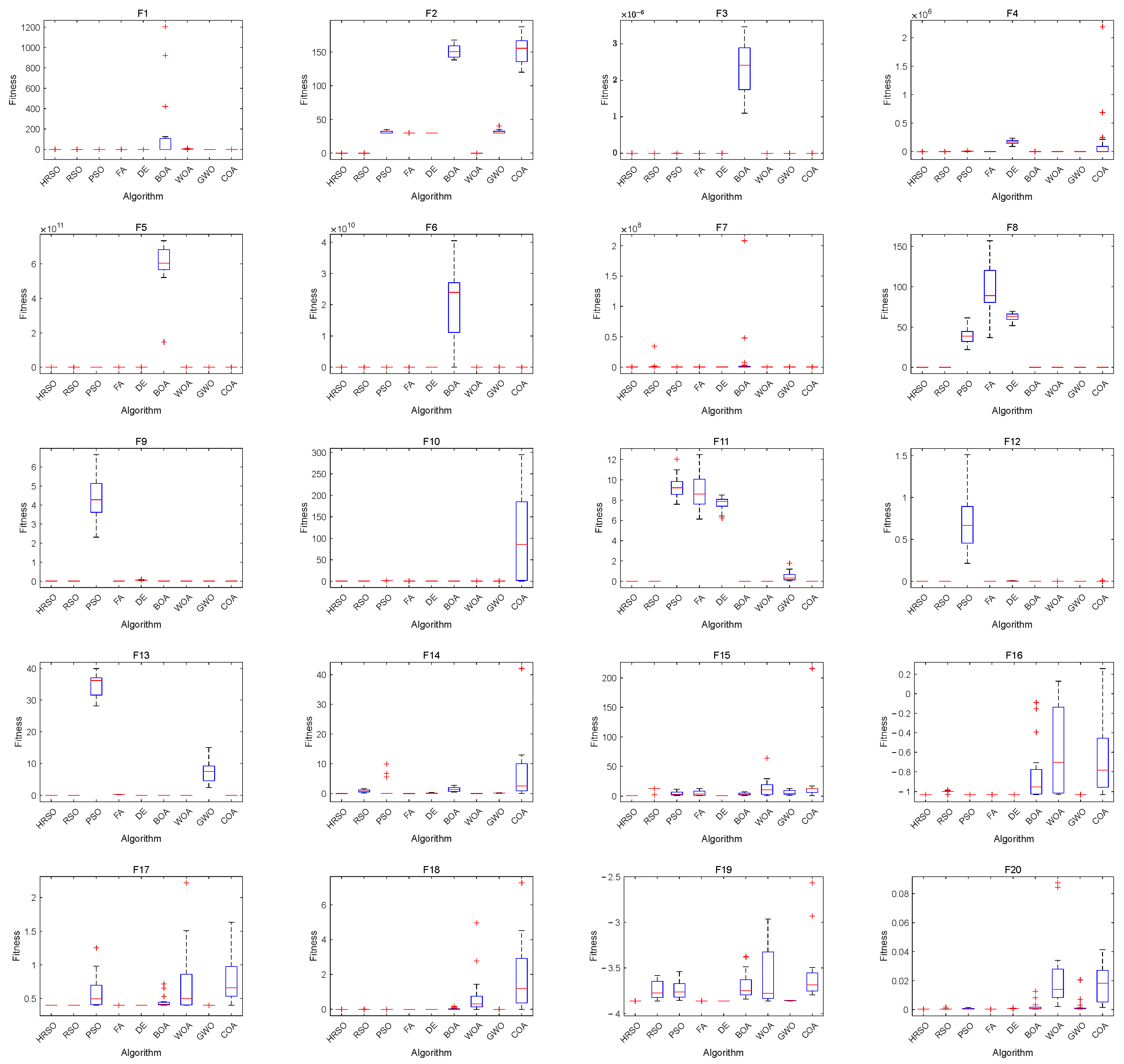

To validate and illustrate the performance of HRSO more intuitively, Figure 3 shows the convergence curves, Figure 4 presents the boxplots and Table 5 presents the results of the parameter sensitivity analysis. According to Figure 3 and Figure 4, HRSO exhibits superior performance compared to the other algorithms in both convergence rate and stability, indicating that HRSO has strong exploitation capability and robustness. To further verify the stability of HRSO, Table 5 presents an evaluation of the nonlinear adaptive parameter E, which governs the balance between exploration and exploitation during the search. Using the benchmark functions from Table 1, Table 2 and Table 3 with a fixed random number generator seed, the experimental results demonstrate that reducing E biases the search toward exploitation and accelerates local convergence, while increasing E shifts the search toward exploration and expands the global search scope. Notably, the volatility of all of the metrics remained below 2 × 10⁻³, with no statistically significant differences. This further confirms that HRSO is highly robust to variations in E: when E is adjusted within a reasonable range, the algorithm maintains stable performance.

Figure 3.

Convergence graphs of HRSO, RSO, PSO, FA, DE, BOA, WOA, GWO and COA.

Figure 4.

Boxplot of HRSO, RSO, PSO, FA, DE, BOA, WOA, GWO and COA.

Table 5.

Experimental Results for Parameter Sensitivity Analysis.

4.2. Classification Benchmarks Dataset Test

The classification performance of HRSO-ENNC is analyzed in this part. Additionally, comparative results with RSO-ENNC, PSO-ENNC, FA-ENNC, DE-ENNC, BOA-ENNC, WOA-ENNC, GWO-ENNC and COA-ENNC are provided. The performance is evaluated by seven classic benchmarks for data classification prediction problems. Table 6 provides the dataset name, number of samples, features, classes and distribution of sample quantities across different classes for the seven data classification prediction problems.

Table 6.

Classification Prediction Benchmarks Dataset.

In all of the experiments, a stratified seven-fold cross-validation method is utilized to assess the classifier's performance metrics more accurately. This means that one-seventh of the overall dataset is randomly selected as the test set, while the rest is used for training. Unlike standard cross-validation, the stratified method randomly divides each class into seven approximately equal-sized sets, so that each fold is a better representation of the overall dataset. The classifier is run seven times based on this method, with a different set as the test set each time. The experimental indicators include the maximum, mean and standard deviation of the prediction accuracy on both the training and test datasets.

Table 7 and Table 8 present the experimental results of HRSO-ENNC and the other algorithm-based ENN classifiers for the data classification prediction problems on the training and test datasets, respectively.
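The stratified split described above can be sketched with the standard library alone. This is a generic illustration of stratified k-fold splitting, not the authors' implementation; the function name `stratified_k_fold` and the round-robin assignment of shuffled class members to folds are assumptions. The usage example borrows the class sizes 243, 145 and 53 from the AlSi10Mg dataset in Section 4.3.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple


def stratified_k_fold(
    labels: Sequence[Hashable], k: int = 7, seed: int = 0
) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, test_indices) pairs with per-class balance."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds: List[List[int]] = [[] for _ in range(k)]
    for label in sorted(by_class, key=str):
        members = by_class[label]
        rng.shuffle(members)                  # random assignment within each class
        for pos, idx in enumerate(members):
            folds[pos % k].append(idx)        # deal class members round-robin

    splits = []
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        splits.append((train, test))
    return splits


# Usage: three classes with 243, 145 and 53 samples, seven folds.
labels = [0] * 243 + [1] * 145 + [2] * 53
splits = stratified_k_fold(labels, k=7, seed=0)
```

Each sample appears in exactly one test fold, and the per-class counts of any two folds differ by at most one.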

Table 7.

Classification Accuracy for Training Dataset.

Table 8.

Classification Accuracy for Testing Dataset.

Table 7 illustrates that, for the Iris, Balancescale and WBC datasets, HRSO-ENNC achieves a better maximum, mean and standard deviation of the prediction accuracy than the other algorithm-based ENN classifiers on the training dataset. For the remaining datasets, HRSO-ENNC exhibits better maximum and mean accuracy, with only a slightly worse standard deviation than DE-ENNC and GWO-ENNC. All of these standard deviations are below 1.07 × 10⁻², and the differences are not statistically significant, so they do not indicate that HRSO-ENNC is unstable.

Similar to the results on the training dataset, HRSO-ENNC achieves the best testing results in Table 8, except on the WBC and Cancer datasets. For these two datasets, the standard deviation of HRSO-ENNC is about 1.76 × 10⁻² and 4.50 × 10⁻³ worse than those of BOA-ENNC and DE-ENNC, respectively. In conclusion, the experimental outcomes indicate that HRSO overcomes the dependence of the Elman neural network on weights and thresholds, demonstrating the accuracy of its classification performance.

4.3. AlSi10Mg Process Classification Problem

In selective laser melting (SLM), single-track formation critically determines the dimensional accuracy and surface quality of components. Distinct morphological variations may induce defects, including internal porosity and poor surface roughness, which can degrade performance. Consequently, effective classification of these morphological features is crucial for ensuring process stability and product quality. In this section, an application of process classification is introduced for single-track AlSi10Mg in SLM.

The dataset was acquired through orthogonal simulation experiments in which the laser power and scanning speed were sampled at equal intervals in the ranges of 150–250 W and 0.6–1.6 m/s, respectively, with multiple repeated trials conducted. Following image sampling, anomalous data were eliminated, resulting in a final dataset of 441 samples. Based on production experience, the samples are classified into three distinct morphological categories: 243 normal class samples are continuous and uniform, 145 over-heat class samples are widened and irregular, and 53 no-continuity class samples display a fractured and discontinuous appearance. Seven critical features are extracted via image measurements: depth, cross-sectional area and effective width, which describe melt pool geometry; linear energy density and laser action depth ratio, which reflect energy transfer efficiency; and standard deviation and coefficient of variation, which quantify process fluctuation. These features collectively characterize geometric attributes, energy distribution and process stability to enable precise classification. Table 9 and Table 10 provide specific details of the dataset and features. Appendix A provides dataset examples across different classes.

Table 9.

Single-track AlSi10Mg classification problem.

Table 10.

Detailed description of dataset features.

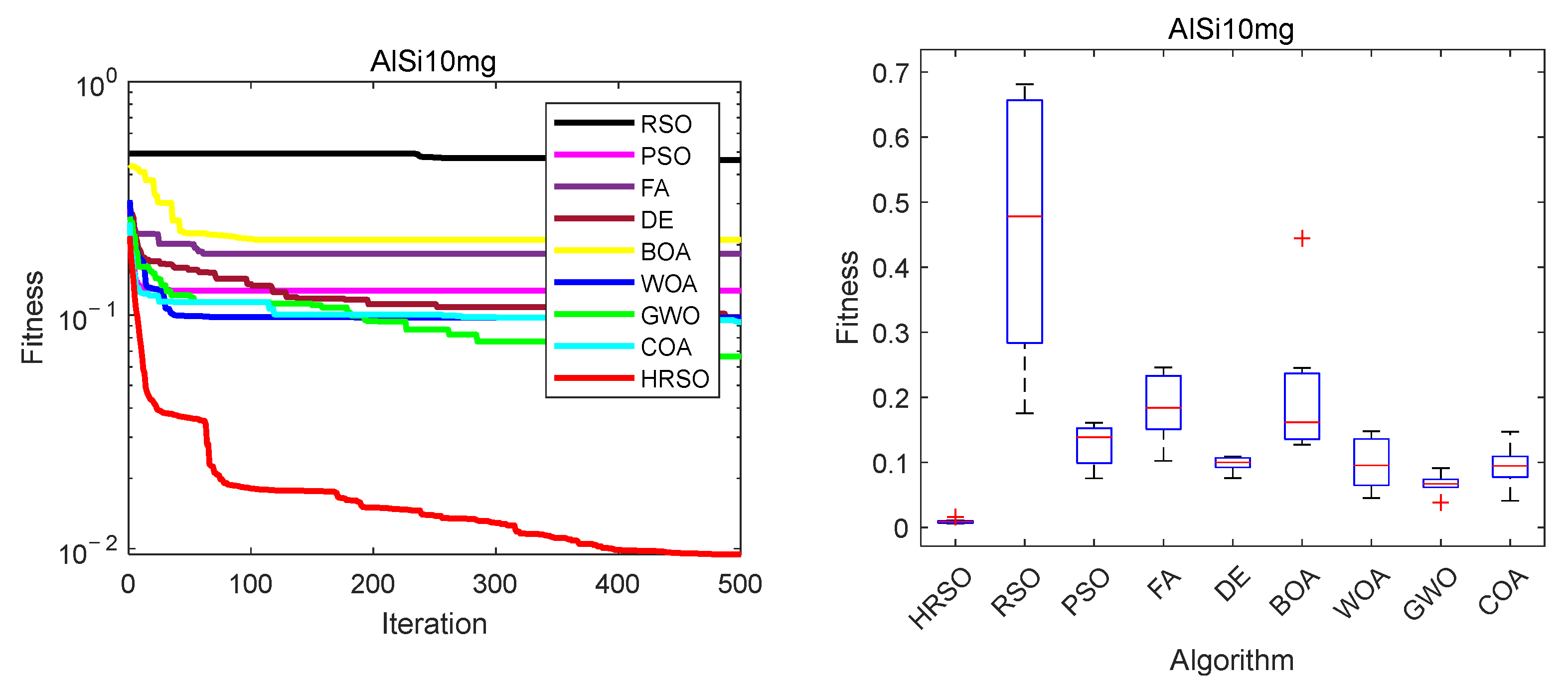

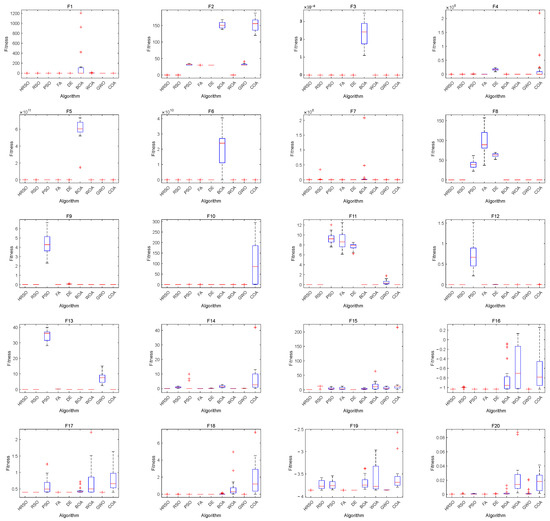

To provide a more objective evaluation of the model's performance, weighted Precision, weighted Recall and weighted F1-score are included in Table 11 and Table 12. These metrics are calculated as weighted averages using the class sample size proportions, which mitigates the dominance of the majority class in the results. Notably, the newly added metrics are the arithmetic means of the results of the stratified seven-fold cross-validation method. The experimental results presented in Figure 5 confirm that the proposed HRSO-ENNC exhibits a superior convergence rate and stability compared to the other classifiers. HRSO-ENNC achieves a maximum identification accuracy of 100% and outperforms all comparison models on the other metrics, as shown in Table 11 and Table 12. This demonstrates the effectiveness and robustness of HRSO-ENNC in the AlSi10Mg process classification problem.
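The weighted metrics can be illustrated with a short sketch that computes per-class Precision, Recall and F1-score and averages them with weights equal to each class's share of the true labels. The function name `weighted_prf` and the toy labels are illustrative assumptions. Treating an undefined precision (a class that is never predicted) as 0 is also a choice made for this sketch.

```python
from collections import Counter
from typing import Hashable, Sequence, Tuple


def weighted_prf(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Tuple[float, float, float]:
    """Support-weighted precision, recall and F1-score over all true classes."""
    support = Counter(y_true)
    total = len(y_true)
    w_precision = w_recall = w_f1 = 0.0
    for cls, n_cls in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        predicted = sum(1 for p in y_pred if p == cls)
        precision = tp / predicted if predicted else 0.0
        recall = tp / n_cls
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        weight = n_cls / total            # class sample size proportion
        w_precision += weight * precision
        w_recall += weight * recall
        w_f1 += weight * f1
    return w_precision, w_recall, w_f1


# Usage with a toy two-class example (not data from the paper).
wp, wr, wf = weighted_prf([0, 0, 0, 1], [0, 0, 1, 1])
```

With these weights, the weighted Recall equals the overall accuracy, which is a convenient sanity check when reading the tables.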

Table 11.

AlSi10Mg Process Classification Accuracy for Training Dataset.

Table 12.

AlSi10Mg Process Classification Accuracy for Testing Dataset.

Figure 5.

Convergence graphs and boxplots of HRSO, RSO, PSO, FA, DE, BOA, WOA, GWO and COA.

5. Conclusions

In the current work, we address two shortcomings of the Elman neural network (ENN): its tendency to become trapped in local optima and its slow convergence rate. To address the data classification prediction problem, an ENN classifier based on the Hyperactivity Rat Swarm Optimizer (HRSO) is proposed. The proposed algorithm is designed as a two-phase calculation process and consists of five search actions. The center point and cosine search significantly enhance the exploration phase of the RSO, while rush, contrast and random search enhance the exploitation phase. Finally, a stochastic roaming strategy is used to jump out of local optimal positions. Experimental results based on benchmark functions confirm that HRSO outperforms other algorithms in both convergence rate and stability. Additionally, the proposed HRSO optimizes the weights and thresholds of ENN, replacing the traditional training method to overcome its shortcomings. The classification performance of HRSO-ENNC is rigorously evaluated on seven benchmark data classification prediction problems, demonstrating the accuracy and generalizability of the model. Furthermore, HRSO-ENNC achieves a maximum accuracy of 100% in the AlSi10Mg process classification problem, with all other metrics significantly outperforming those of other algorithmic classifiers. The results demonstrate that this classifier is an excellent tool for data classification prediction. Further investigation shows that the number of weights and thresholds in the neural network grows with the number of inputs and outputs, which makes it difficult for the algorithms to converge. Therefore, subsequent research will prioritize algorithmic enhancements for high-dimensional optimization challenges, thereby improving algorithmic scalability.
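To make the scalability concern concrete, the short Python sketch below counts the weights and thresholds that an optimizer would have to tune for a basic Elman network. It assumes a standard single-hidden-layer layout with a context layer of the same size as the hidden layer; the encoding used in this work may differ, and the function name and the hidden-layer size of 10 are hypothetical.

```python
def enn_parameter_count(n_inputs, n_hidden, n_outputs):
    """Count weights and thresholds of a basic Elman network (assumed layout)."""
    weights = (
        n_inputs * n_hidden      # input layer -> hidden layer
        + n_hidden * n_hidden    # context layer -> hidden layer
        + n_hidden * n_outputs   # hidden layer -> output layer
    )
    thresholds = n_hidden + n_outputs  # one per hidden and output neuron
    return weights + thresholds


if __name__ == "__main__":
    # Seven features and three classes, as in the AlSi10Mg problem;
    # the hidden-layer size of 10 is an assumption for illustration.
    print(enn_parameter_count(7, 10, 3))
    # Doubling the inputs and outputs enlarges the search space.
    print(enn_parameter_count(14, 10, 6))
```

Under these assumptions, the search dimension rises from 213 to 316 when the inputs and outputs are doubled, which illustrates why convergence becomes harder as the network grows.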

Author Contributions

Conceptualization, H.C. and X.L.; methodology, M.H.; software, R.N.; validation, R.N.; formal analysis, Y.X.; investigation, L.S.; resources, H.C.; data curation, Y.X.; writing—original draft preparation, R.N.; writing—review and editing, R.N.; visualization, R.N.; supervision, L.S.; project administration, H.C. and X.L.; funding acquisition, H.C. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

Dataset examples across different classes.

| Depth | Cross-Sectional Area | Effective Width | Linear Energy Density | Laser Action Depth Ratio | Standard Deviation | Coefficient of Variation | Class |
|---|---|---|---|---|---|---|---|
| 0.0399 | 0.0028 | 0.0669 | 0.2500 | 0.6304 | 0.0771 | 0.1152 | over-heat |
| 0.0349 | 0.0019 | 0.0588 | 0.2100 | 0.6588 | 0.0517 | 0.0880 | normal |
| 0.0201 | 0.0008 | 0.0366 | 0.1188 | 0.8822 | 0.1213 | 0.3312 | no-continuity |

References

- Kotsiantis, S.B.; Zaharakis, I.D.; Pintelas, P.E. Machine learning: A review of classification and combining techniques. Artif. Intell. Rev. 2006, 26, 159–190.

- Sun, D.; Wen, H.; Wang, D.; Xu, J. A random forest model of landslide susceptibility mapping based on hyperparameter optimization using Bayes algorithm. Geomorphology 2020, 362, 107201.

- Kuehn, N.M.; Scherbaum, F. A Naive Bayes Classifier for Intensities Using Peak Ground Velocity and Acceleration. Bull. Seismol. Soc. Am. 2010, 100, 3278–3283.

- Kovacs, G.; Hajdu, A. Translation Invariance in the Polynomial Kernel Space and Its Applications in KNN Classification. Neural Process. Lett. 2013, 37, 207–233.

- Gestel, T.V.; Suykens, J.A.K.; Baesens, B.; Viaene, S.; Vanthienen, J.; Dedene, G.; Moor, D.B.; Vandewalle, J. Benchmarking least squares support vector machine classifiers. Mach. Learn. 2004, 54, 5–32.

- Wang, G.C.; Carr, T.R. Marcellus Shale Lithofacies Prediction by Multiclass Neural Network Classification in the Appalachian Basin. Math. Geosci. 2012, 44, 975–1004.

- Michie, D.; Spiegelhalter, D.J. Machine Learning, Neural and Statistical Classification. Technometrics 1999, 37, 459.

- Zhang, G.P. Neural networks for classification: A survey. IEEE Trans. Syst. Man Cybern. Part C 2000, 30, 451–462.

- Sun, J.Y. Learning algorithm and hidden node selection scheme for local coupled feedforward neural network classifier. Neurocomputing 2012, 79, 158–163.

- Müller, M.; Stiefel, M.; Bachmann, B.-I.; Britz, D.; Mücklich, F. Overview: Machine Learning for Segmentation and Classification of Complex Steel Microstructures. Metals 2024, 14, 553.

- Fang, W.; Huang, J.-X.; Peng, T.-X.; Long, Y.; Yin, F.-X. Machine learning-based performance predictions for steels considering manufacturing process parameters: A review. J. Iron Steel Res. Int. 2024, 31, 1555–1581.

- Zhang, L.; Wang, Y.M.; Liu, D.F. Calculating the synthetic efficiency of hydroturbine based on the BP neural network and Elman neural network. Appl. Mech. Mater. 2013, 457–458, 801–805.

- Xu, L.; Zhang, Y.T. Quality Prediction Model Based on Novel Elman Neural Network Ensemble. Complexity 2019, 2019, 9852134.

- Li, Q.; Qin, Y.; Wang, Z.Y.; Zhao, Z.X.; Zhan, M.H.; Liu, Y. Prediction of Urban Rail Transit Sectional Passenger Flow Based on Elman Neural Network. Appl. Mech. Mater. 2014, 505–506, 1023.

- Gong, X.L.; Hu, Z.J.; Zhang, M.L.; Wang, H. Wind Power Forecasting Using Wavelet Decomposition and Elman Neural Network. Adv. Mater. Res. 2013, 608–609, 628–632.

- Gupta, T.; Kumar, R. A novel feed-through Elman neural network for predicting the compressive and flexural strengths of eco-friendly jarosite mixed concrete: Design, simulation and a comparative study. Soft Comput. 2024, 28, 399–414.

- Wei, L.; Wu, Y.; Fu, H.; Yin, Y. Modeling and Simulation of Gas Emission Based on Recursive Modified Elman Neural Network. Math. Probl. Eng. 2018, 2018, 9013839.

- Chiroma, H.; Abdul-Kareem, S.; Ibrahim, U.; Ahmad, I.G.; Garba, A.; Abubakar, A.; Hamza, M.F.; Herawan, T. Malaria Severity Classification Through Jordan-Elman Neural Network Based on Features Extracted from Thick Blood Smear. Neural Netw. World 2015, 25, 565–584.

- Boldbaatar, E.A.; Lin, L.Y.; Lin, C.M. Breast Tumor Classification Using Fast Convergence Recurrent Wavelet Elman Neural Networks. Neural Process. Lett. 2019, 50, 2037–2052.

- Fu, Q.; Jing, B.; He, P.; Si, S.; Wang, Y. Fault Feature Selection and Diagnosis of Rolling Bearings Based on EEMD and Optimized Elman_AdaBoost Algorithm. IEEE Sens. J. 2018, 18, 5024–5034.

- Arun, C.; Gopikakumari, R. Optimisation of both classifier and fusion based feature set for static American sign language recognition. IET Image Process. 2020, 14, 2101–2109.

- Zhang, M.; Diao, M.; Gao, L.; Liu, L. Neural Networks for Radar Waveform Recognition. Symmetry 2017, 9, 75.

- Akarslan, E.; Dogan, R. A novel approach based on a feature selection procedure for residential load identification. Sustain. Energy Grids 2021, 27, 100488.

- Chen, D.; Wang, P.; Sun, K.; Tang, Y.; Kong, S.; Fan, J. Simulation and prediction of the temperature field of copper alloys fabricated by selective laser melting. J. Laser Appl. 2022, 34, 042001.

- Vaidyaa, P.; John, J.J.; Puviyarasan, M.; Prabhu, T.R.; Prasad, N.E. Wire EDM Parameter Optimization of AlSi10Mg Alloy Processed by Selective Laser Melting. Trans. Indian Inst. Met. 2023, 74, 2869–2885.

- Chaudhry, S.; Soulainmani, A. A Comparative Study of Machine Learning Methods for Computational Modeling of the Selective Laser Melting Additive Manufacturing Process. Appl. Sci. 2022, 12, 2324.

- Prakash, T.S.; Kumar, A.S.; Durai, C.R.B.; Ashok, S. Enhanced Elman spike Neural network optimized with flamingo search optimization algorithm espoused lung cancer classification from CT images. Biomed. Signal Process. Control 2023, 84, 104948.

- Ding, S.; Zhang, Y.; Chen, J.; Jia, W. Research on using genetic algorithms to optimize Elman neural networks. Neural Comput. Appl. 2013, 23, 293–297.

- Wang, X.F.; Liang, C.C.; Jiang, J.G.; Ju, L.L. Sensor Compensation Based on Adaptive Ant Colony Neural Networks. Adv. Mater. Res. 2011, 301–303, 876–880.

- Barile, C.; Casavola, C.; Pappalettera, G.; Kannan, V.P.; Mpoyi, D.K. Acoustic Emission and Deep Learning for the Classification of the Mechanical Behavior of AlSi10Mg AM-SLM Specimens. Appl. Sci. 2022, 13, 189.

- Barile, C.; Casavola, C.; Pappalettera, G.; Kannan, V.P. Damage Progress Classification in AlSi10Mg SLM Specimens by Convolutional Neural Network and k-Fold Cross Validation. Materials 2022, 15, 4428.

- Ji, Z.; Han, Q.Q. A novel image feature descriptor for SLM spattering pattern classification using a consumable camera. Int. J. Adv. Manuf. Technol. 2020, 110, 2955–2976.

- Li, J.; Cao, L.; Xu, J.; Wang, S.; Zhou, Q. In situ porosity intelligent classification of selective laser melting based on coaxial monitoring and image processing. Measurement 2022, 187, 110232.

- Moghadam, A.T.; Aghahadi, M. Adaptive Rat Swarm Optimization for Optimum Tuning of SVC and PSS in a Power System. Int. Trans. Electr. Energy Syst. 2022, 2022, 4798029.

- Elman, J.L. Finding Structure in Time. Cogn. Sci. 1990, 14, 179–211.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Representations by Back-Propagating Errors. Nature 1986, 323, 533–536.

- Dhiman, G.; Garg, M.; Nagar, A.; Kumar, V.; Dehghani, M. A novel algorithm for global optimization: Rat swarm optimizer. J. Amb. Intel. Hum. Comp. 2021, 12, 8457–8482.

- Ye, S. RMB exchange rate forecast approach based on BP neural network. Phys. Scr. 2012, 33, 287–293.

- Khare, A.; Rangnekar, S. A review of particle swarm optimization and its applications in Solar Photovoltaic system. Appl. Soft Comput. 2013, 13, 2997–3006.

- Kumar, V.; Kumar, D. A Systematic Review on Firefly Algorithm: Past, Present, and Future. Arch. Comput. Methods Eng. 2021, 28, 3269–3291.

- Slowik, A.; Kwasnicka, H. Evolutionary algorithms and their applications to engineering problems. Neural Comput. Appl. 2020, 32, 12363–12379.

- Arora, S.; Singh, S. Butterfly optimization algorithm: A novel approach for global optimization. Soft Comput. 2018, 23, 715–734.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Zhang, Q.; Bu, X.; Zhan, Z.-H.; Li, J.; Zhang, H. An efficient Optimization State-based Coyote Optimization Algorithm and its applications. Appl. Soft Comput. 2023, 147, 110827.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).