Proposing an Information Management Framework for Efficient Testing Strategies of Automotive Integration

Abstract

1. Introduction

- Content boundary: The framework focuses on “information classification and evaluation” for advanced testing strategies, rather than developing specific testing tools or information security technologies.

- Scenario boundary: We provide a universal framework for general automotive software but do not customize it for specific software modules or vehicle types; adapting it to such scenarios requires enterprise-specific data.

- No application verification: Without unified baseline data, presenting isolated test results would be misleading; instead, we provide an operational guide to enable OEMs to verify effectiveness using their own data in subsequent applications.

2. Methodology

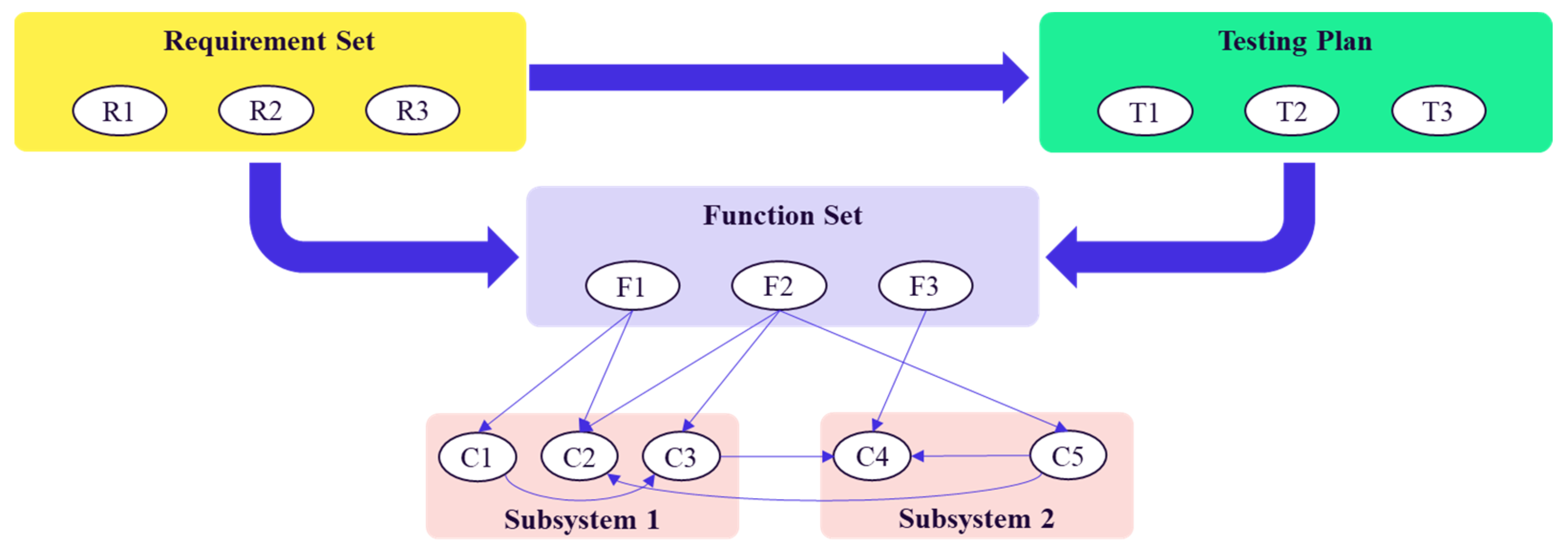

- Requirement Set serves as the origin: the requirements analysis department first defines user and product requirements (e.g., functional safety, performance boundaries) and translates these into actionable specifications.

- Function Set is the set of capabilities that developers implement from the requirements documents. A complete Function Set should satisfy every requirement in the Requirement Set.

- Subsystems & Components: Each function in the Function Set relies on multiple software components distributed across Subsystem 1 and Subsystem 2. For instance, F1 may depend on C1 and C2 from Subsystem 1, while F2 may share C2 with F1 and additionally utilize C3 from Subsystem 2.
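The relationship between functions, components, and subsystems described above can be sketched as a small traceability map. This is only an illustration: the dictionaries and helper names are hypothetical, and the data simply mirrors the F1/F2 example in the text.

```python
from collections import defaultdict

# Hypothetical traceability data mirroring the F1/F2 example in the text.
FUNCTION_COMPONENTS = {
    "F1": {"C1", "C2"},
    "F2": {"C2", "C3"},
}
COMPONENT_SUBSYSTEM = {
    "C1": "Subsystem 1",
    "C2": "Subsystem 1",
    "C3": "Subsystem 2",
}


def functions_per_component(function_components):
    """Invert the function -> components map to see which functions use each component."""
    users = defaultdict(set)
    for function, components in function_components.items():
        for component in components:
            users[component].add(function)
    return dict(users)


def subsystems_of(function, function_components, component_subsystem):
    """Return the subsystems a function touches through its components."""
    return {component_subsystem[c] for c in function_components[function]}


users = functions_per_component(FUNCTION_COMPONENTS)
# Components used by more than one function (C2 in the example above).
shared = {component for component, funcs in users.items() if len(funcs) > 1}
```

Inverting the map makes shared components explicit, which is the information that later factors such as Commonality rely on.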

2.1. Stage 1: Investigation on Testing Strategies and Required Information

2.2. Stage 2: Evaluation of Information Usage Difficulty

3. Investigation Results

3.1. Testing Strategies and Required Information

3.1.1. Eliminating Low-Risk High-Level Tests

- Functional Safety is directly related to vehicle safety during operation, and related issues are harder to repair. ISO 26262 provides its definition and assessment method [36].

- Subsystem Complexity describes the number of components in a subsystem and the interactions between them. It is often quantified with graph-theoretic metrics. It is widely agreed in the industry that the more complex a subsystem is, the more likely it is to contain errors [40].

- Driver/Environment Influence considers whether the software function is affected by environmental or driver factors when performing tasks. Since environmental or driver factors are often uncontrollable, problems may arise during development due to the inability to cover all possible scenarios [39].

- Fault Detection describes whether the software function is designed with runtime fault detection mechanisms. In some cases, fault detection mechanisms can replace certain test cases [41].

- User Relevance, Legal Relevance and Insurance Relevance describe whether the software function is mentioned in user experience design, legal restrictions, or insurance terms. If it is, the software function requires dedicated test cases [42].

- Drivability Impact describes whether the software function has a direct impact on drivability. As drivability involves multiple complex evaluation dimensions, including safety, comfort, power, operability, etc., the test cases for the related software function also need to be specifically designed [43].
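Subsystem Complexity is described above in terms of components and the interactions between them. One simple graph-theoretic reading, offered here as an assumption and not as the metric used in [40], treats the subsystem as an undirected graph and reports node count, edge count, and density. The function name and the input format are hypothetical.

```python
def subsystem_complexity(components, interactions):
    """Summarise a subsystem as an undirected graph of components and interactions."""
    nodes = set(components)
    # Normalise each interaction to an unordered pair; drop self-loops and duplicates.
    edges = {frozenset(pair) for pair in interactions if pair[0] != pair[1]}
    # Keep only interactions between components that belong to this subsystem.
    edges = {edge for edge in edges if edge <= nodes}
    n = len(nodes)
    max_edges = n * (n - 1) // 2
    density = len(edges) / max_edges if max_edges else 0.0
    return {"components": n, "interactions": len(edges), "density": density}


example = subsystem_complexity(
    ["C1", "C2", "C3", "C4"],
    [("C1", "C2"), ("C2", "C1"), ("C2", "C3"), ("C3", "C4")],
)
```

Density is only one possible indicator; richer measures such as degree distribution or cyclomatic structure could be substituted without changing the interface.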

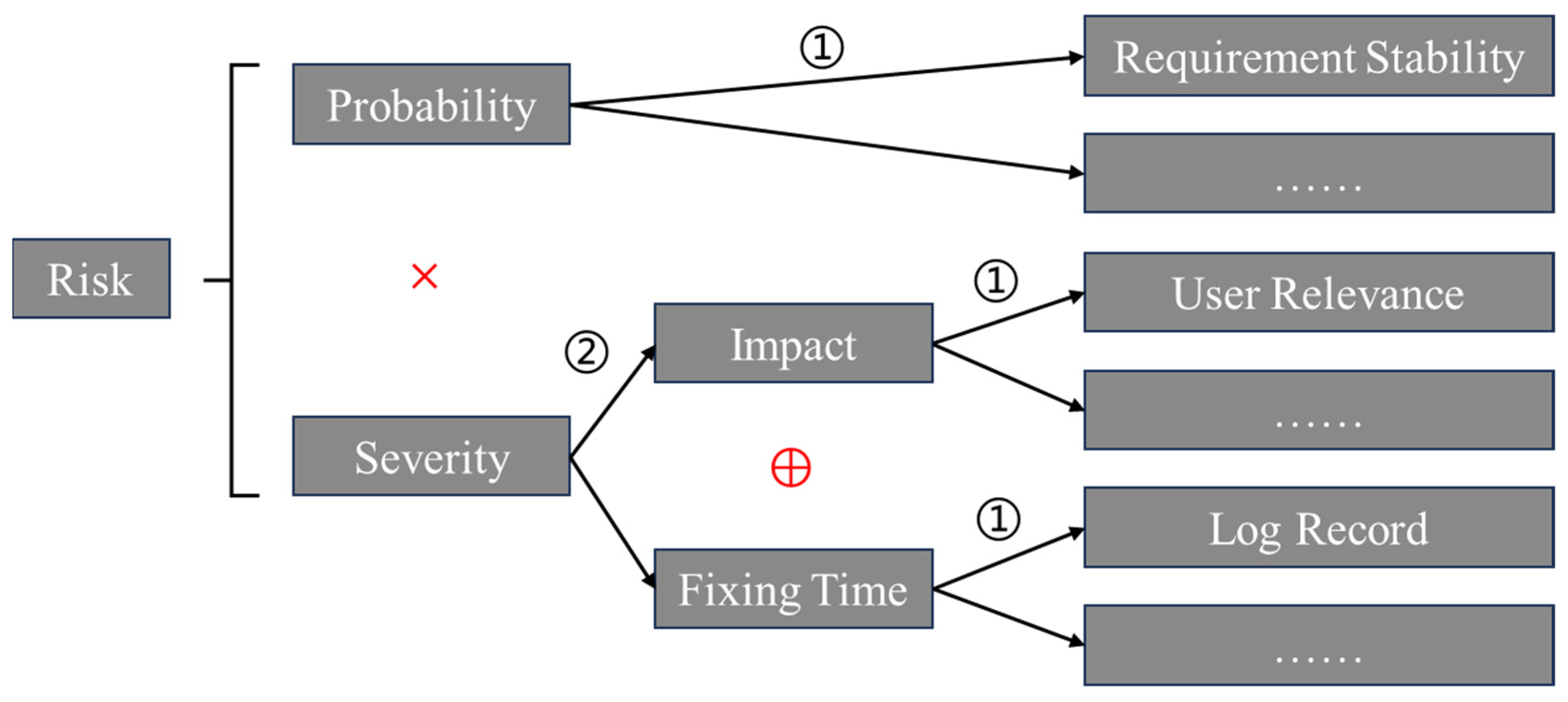

3.1.2. Adjusting the Test Plan Based on Function Risks

- Requirement Stability primarily considers whether the requirements description of a software function has remained largely unchanged over a long period. The more stable the requirements, the higher the probability of reusing relevant software, leading to a lower probability of errors [25].

- Requirement Complexity can be described by the target parameters defined in requirements and the number of interaction logics. Generally, the more complex the requirements, the more challenging it is to develop the software, resulting in a higher probability of errors [25].

- Maturity primarily considers whether the components utilized in a software function are newly developed or reused. The probability of errors is lower in previously validated mature software [25].

- Number of Variants considers the number of branches in the components used by a software function. Variants often pose potential sources of errors in software development and management [44].

- Redundancy considers whether a software function is designed with redundant backup mechanisms. Functions with good redundancy often have a smaller impact even when issues occur [45].

- Commonality considers whether the components used by a software function are also used by other functions. If a function shares many components with others, errors can propagate to other functions, resulting in more severe consequences [46].

- Encryption considers whether the components used by a software function involve strict encryption. Information security issues can have broader impacts, such as privacy breaches [47].

- Log Record and Structured Decomposition respectively consider whether a software function is designed with logging mechanisms and structured testable units. Comprehensive and detailed logging, as well as structured software architecture, aid developers in quickly identifying and fixing defects [48,49].

- Usage considers the frequency of a software function’s usage or execution. The higher the usage frequency, the greater the probability of errors and the more pronounced the impact [25].

- Deployment to Multi-Environments considers the number of platforms that a software function needs to adapt to. Developing highly universal software for embedded systems, such as in vehicles, is challenging. This implies that the more environments a software function needs to accommodate, the higher the probability of failure and impact [25].

- Part of V2X considers whether a software function uses V2X components. The use of V2X components increases the avenues for error occurrence and propagation [47]. For example, the “V2I (vehicle-to-infrastructure) traffic light information reception function” relies on V2X components to interact with road-side units; if the V2X component fails to receive traffic light signals, the function will be disabled, and the failure may also propagate to the “adaptive cruise based on traffic lights” function.

- Performance includes metrics such as real-time capability, precision, and accuracy. High-performance requirements not only demand a high level of proficiency from developers but also mean that even minor errors can have significant consequences [25]. For example, the “adaptive cruise control function” requires a real-time response to the front vehicle’s speed changes (latency ≤ 100 ms) and speed control precision (error ≤ 1 km/h); even a 200 ms latency may cause rear-end collisions.

- Dependency considers how many other software functions a software function relies on for its operation. Higher dependency implies a need for coordination among more software functions during development and operation, which is related to the probability of errors and the fixing time [50].

- Code Complexity includes metrics such as the number of statements, code line length, number of loop paths, depth of function nesting, recursion depth, number of function calls, parameter count, and comment density. Muscedere et al. proposed a gray-box testing algorithm and process that uses non-intrusive code analysis tools to detect interaction patterns between functions, thus predicting the probability of errors and the difficulty of fixes [51].

- Requirement Density considers how many user requirements are associated with a software function. More associated requirements lead to more complex software design and larger error impacts [25].

- Interactivity considers the scale of signals for the input and output of a software function. Muscedere et al., through modeling and case studies, found a clear positive correlation between interactivity and the probability of errors. The scale of signals also reflects the scope and complexity of data propagation, which is similarly associated with impact and fixing time [52].
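The factors above each inform the probability of errors, their impact, the fixing time, or a combination of these. A minimal sketch of how such ratings might be aggregated follows. The 1 to 5 rating scale, the averaging, and the probability times impact score are assumptions of this sketch and are not taken from the framework itself; only a handful of factors are included, and ratings are assumed to be expressed so that a higher value means higher risk.

```python
from statistics import mean

# Which risk dimension each factor informs, following the factor descriptions above.
# Only a few factors are listed; the 1-5 rating scale is an assumption of this sketch.
FACTOR_DIMENSIONS = {
    "requirement_complexity": ("probability",),
    "redundancy": ("impact",),
    "usage": ("probability", "impact"),
    "dependency": ("probability", "fixing_time"),
    "interactivity": ("probability", "impact", "fixing_time"),
}


def risk_profile(ratings):
    """Average the ratings per dimension and derive a probability x impact score."""
    buckets = {"probability": [], "impact": [], "fixing_time": []}
    for factor, rating in ratings.items():
        for dimension in FACTOR_DIMENSIONS[factor]:
            buckets[dimension].append(rating)
    profile = {dim: (mean(vals) if vals else 0.0) for dim, vals in buckets.items()}
    profile["risk"] = profile["probability"] * profile["impact"]
    return profile


# Hypothetical ratings for one software function (higher means riskier).
profile = risk_profile({
    "requirement_complexity": 5,
    "redundancy": 2,
    "usage": 4,
    "dependency": 1,
    "interactivity": 3,
})
```

Keeping fixing time as a separate value, instead of folding it into the score, lets a test planner weigh it independently when adjusting the test plan.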

3.1.3. Troubleshooting Based on Component Risks

- Interface Complexity considers the difficulty of external interactions with software components. The more complex the interface, the more likely it is to encounter issues when accessing the component externally [54].

- Multidisciplinary Relevance considers the number of disciplines involved in the development of software components. As automobiles are complex products with high levels of mechanical, electrical, and hydraulic coupling, the development of certain software components may require modeling methods from different fields. The probability of errors in such models is relatively high [55].

- Team Capability and Team Complexity consider the background of the component development team, which can be quantitatively described using the Capability Maturity Model Integration. It is generally believed that software quality is higher when developed by more professional teams [56].

- Open Source Code Proportion and Automatically Generated Code Proportion consider the composition of the source code of software components. Due to the risks associated with open source code and automatically generated code that has not undergone rigorous review in practical projects, there may be some unknown errors [57,58].

- Coupling with Hardware considers whether modifications to a software component require knowledge of the hardware. If there is a strong coupling between the component and the hardware, debugging will be complex, and defect fixes will be slow [59].

- Developer Familiarity primarily considers whether the developer responsible for fixing a software component is sufficiently familiar with the code. The more familiar the developer is with the code, the more efficient the fixes. If the software components are not developed in-house, the OEM’s developers will naturally not be familiar with the specific code. Moreover, if the lifecycle of a software component is long, the developer’s familiarity may decrease over time [60].

- Code Readability primarily considers the structure of the source code of software components and the understandability of text comments. The easier it is to read, the easier it is to modify [61].

- Connection Coupling considers the degree of mutual influence between software components through their connections. For an uncoupled connection, a change in one component does not affect the other. For a weakly coupled connection, a change in one component affects the other in one direction only. For a strongly coupled connection, a change in either component affects the other. Stronger connection coupling therefore not only propagates errors but also increases the effort required for fixes [62].
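The three connection coupling levels can be illustrated with a small classifier over directed influence pairs. This sketch assumes that strong coupling means influence in both directions, in contrast to the unidirectional weak case; the function name and data format are hypothetical.

```python
def classify_connection(influences, a, b):
    """Classify the connection between components a and b.

    `influences` is a set of directed (source, target) pairs meaning
    "a change in source affects target".
    """
    forward = (a, b) in influences
    backward = (b, a) in influences
    if forward and backward:
        return "strong"      # assumed reading: changes propagate both ways
    if forward or backward:
        return "weak"        # changes propagate in one direction only
    return "uncoupled"       # changes do not propagate at all


# Hypothetical influence relations between three components.
influences = {("C1", "C2"), ("C2", "C3"), ("C3", "C2")}
levels = {
    ("C1", "C2"): classify_connection(influences, "C1", "C2"),
    ("C2", "C3"): classify_connection(influences, "C2", "C3"),
    ("C1", "C3"): classify_connection(influences, "C1", "C3"),
}
```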

| Testing Strategy | Factor | Level | Probability / Impact / Fixing Time | Basis/Source Reference |
|---|---|---|---|---|
| High-Level Test Elimination | Functional Safety | Function Level | ✓ ✓ | ISO 26262 [36] |
| | Subsystem Complexity | Architecture Level | ✓ | Graph theory application in software [40] |
| | Driver/Environment Influence | Function Level | ✓ | Hodel et al. [39] |
| | Fault Detection | Function Level | ✓ | Theissler [41] |
| | User Relevance | Function Level | ✓ | Fabian et al. [42] |
| | Legal Relevance | Function Level | ✓ | Fabian et al. [42] |
| | Insurance Relevance | Function Level | ✓ | Fabian et al. [42] |
| | Drivability Impact | Function Level | ✓ | Schmidt et al. [43] |
| Function Risk Assessment | Requirement Stability | Requirement Level | ✓ | Kugler et al. [25] |
| | Requirement Complexity | Requirement Level | ✓ | Kugler et al. [25] |
| | Maturity | Component Level | ✓ | Kugler et al. [25] |
| | Number of Variants | Component Level | ✓ | Antinyan et al. [44] |
| | Redundancy | Function Level | ✓ | Alcaide et al. [45] |
| | Commonality | Component Level | ✓ | Vogelsang et al. [46] |
| | Encryption | Component Level | ✓ | Luo et al. [47] |
| | Log Record | Function Level | ✓ | Ardimento and Dinapoli [48], Du et al. [49] |
| | Structured Decomposition | Function Level | ✓ | Ardimento and Dinapoli [48], Du et al. [49] |
| | Usage | Architecture Level | ✓ ✓ | Kugler et al. [25] |
| | Deployment to Multi-Environments | Architecture Level | ✓ ✓ | Kugler et al. [25] |
| | Part of V2X | Component Level | ✓ ✓ | Luo et al. [47] |
| | Performance | Component Level | ✓ ✓ | Kugler et al. [25] |
| | Dependency | Function Level | ✓ ✓ | Vogelsang et al. [50] |
| | Code Complexity | Source Code Level | ✓ ✓ | Muscedere et al. [51] |
| | Requirement Density | Function Level | ✓ | Kugler et al. [25] |
| | Interactivity | Function Level | ✓ ✓ ✓ | Muscedere [52] |
| Risk-Based Troubleshooting | Interface Complexity | Component Level | ✓ | Durisic et al. [54] |
| | Multidisciplinary Relevance | Component Level | ✓ | Ryberg [55] |
| | Team Capability | Component Level | ✓ | Sholiq et al. (CMMI) [56] |
| | Team Complexity | Component Level | ✓ | Sholiq et al. (CMMI) [56] |
| | Open Source Code Proportion | Requirement Level | ✓ | Kochanthara et al. [57], Waez and Rambow [58] |
| | Automatically Generated Code Proportion | Requirement Level | ✓ | Kochanthara et al. [57], Waez and Rambow [58] |
| | Coupling with Hardware | Component Level | ✓ | Hewett and Kijsanayothin [59] |
| | Developer Familiarity | Source Code Level | ✓ | Wang et al. [60] |
| | Code Readability | Source Code Level | ✓ | Scalabrino et al. [61] |
| | Connection Coupling | Component Level | ✓ ✓ | Lee and Wang [62] |

3.1.4. Eliminating Redundant Test Cases

- Requirement Types: Requirements are classified into six categories: vehicle function, sub-function, pre-condition, trigger, function contribution, and end-condition. Vehicle function requirements encompass multiple sub-function requirements, while each sub-function requirement includes a pre-condition, trigger, function contribution, and end-condition. This hierarchical structure implies certain logical dependencies between requirements [63].

- Parent Requirement: The parent–child relationship signifies that a parent requirement only needs to be tested if all its child requirements are satisfied; otherwise, the test cases associated with the parent requirement are considered redundant. For example, if engineers know that a test for detecting rainwater using a rain sensor has failed, it can be inferred that the test for automatically closing the sunroof when it rains is redundant since it is already known to fail. Based on the definition of requirement types, there exists a parent–child relationship chain from vehicle function to sub-function to end-condition to function contribution to trigger to pre-condition [63].
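The parent–child rule above can be sketched as a small traversal: once a child requirement's test has failed, tests of every ancestor requirement are known to fail and can be skipped. The requirement names extend the rain sensor example and are hypothetical, as is the function name; the sketch assumes the hierarchy contains no cycles.

```python
# Hypothetical requirement hierarchy: parent requirement -> child requirements.
CHILDREN = {
    "rain_protection": ["close_sunroof_when_raining"],
    "close_sunroof_when_raining": ["detect_rain"],
    "detect_rain": [],
}


def redundant_tests(children, failed):
    """Return requirements whose tests are redundant because a descendant already failed."""
    memo = {}

    def has_failed_descendant(req):
        # Memoise so shared sub-hierarchies are only walked once.
        if req not in memo:
            memo[req] = any(
                child in failed or has_failed_descendant(child)
                for child in children.get(req, [])
            )
        return memo[req]

    return {req for req in children if has_failed_descendant(req)}


# The rain detection test failed, so both ancestors need no further testing.
skipped = redundant_tests(CHILDREN, failed={"detect_rain"})
```

The failed requirement itself is not reported as redundant, since its test has already been executed.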

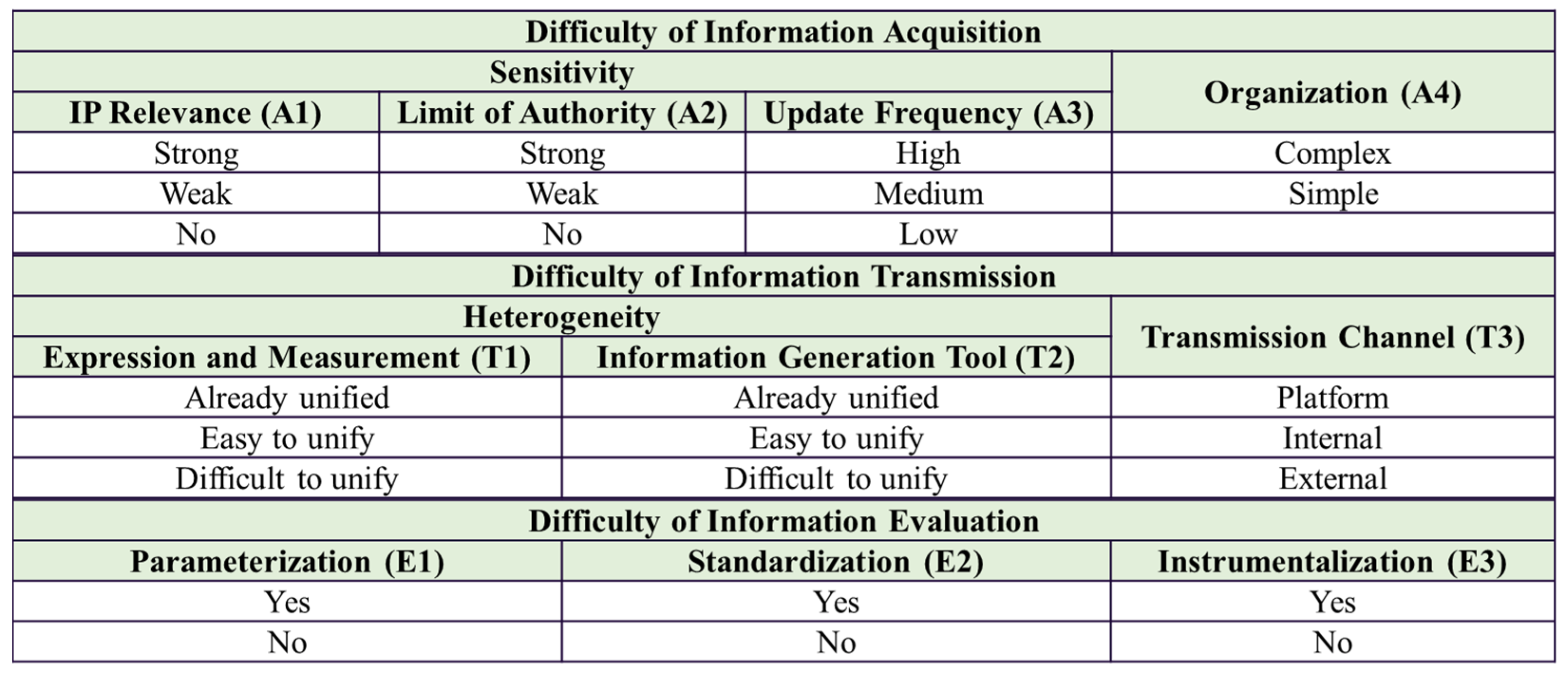

3.2. Difficulty of Using Information

- Technical research and development capability: Information within the company is generally easier to access and more reliable than information from external suppliers. Therefore, OEMs with more in-house software development capabilities have a greater advantage in terms of information availability and efficiency [65]. In industry practice, new automakers that invest heavily in software development tend to have faster product release and iteration cycles than traditional OEMs.

- Supply chain bargaining power: Even when obtaining information from the same supplier, OEMs with stronger supply chain bargaining power tend to acquire more information more quickly [66]. Sometimes, dominant supplier giants may refuse to provide relevant information to smaller-scale OEMs with weaker bargaining power.

- Internal management system: Cross-departmental communication within the enterprise has always been a challenge for OEMs, particularly during periods of industry transformation [67]. The use of certain information may appear to be a simple request and response relationship, but in reality, it may involve the division of power, responsibilities, and interests among departments.

- Platformization for the project: A high level of platformization for a project indicates that many cross-organizational collaborations around the project have become more stable, and there is a wealth of historical data and mature experience to serve as references. This undoubtedly reduces the information usage difficulty.

- Supplier collaboration models around the project: Current automotive software delivery is no longer a turnkey engineering process for black-box software as it used to be [1]. OEMs and suppliers have adopted various new collaboration models. For example, OEMs and suppliers establish joint ventures to jointly develop core technologies. Alternatively, suppliers may deploy a team to work within the OEM, collaborating with the internal development team. Additionally, suppliers may provide open technology licenses, allowing OEMs direct access to source code. Different collaboration models result in varying levels of difficulty in accessing relevant information.

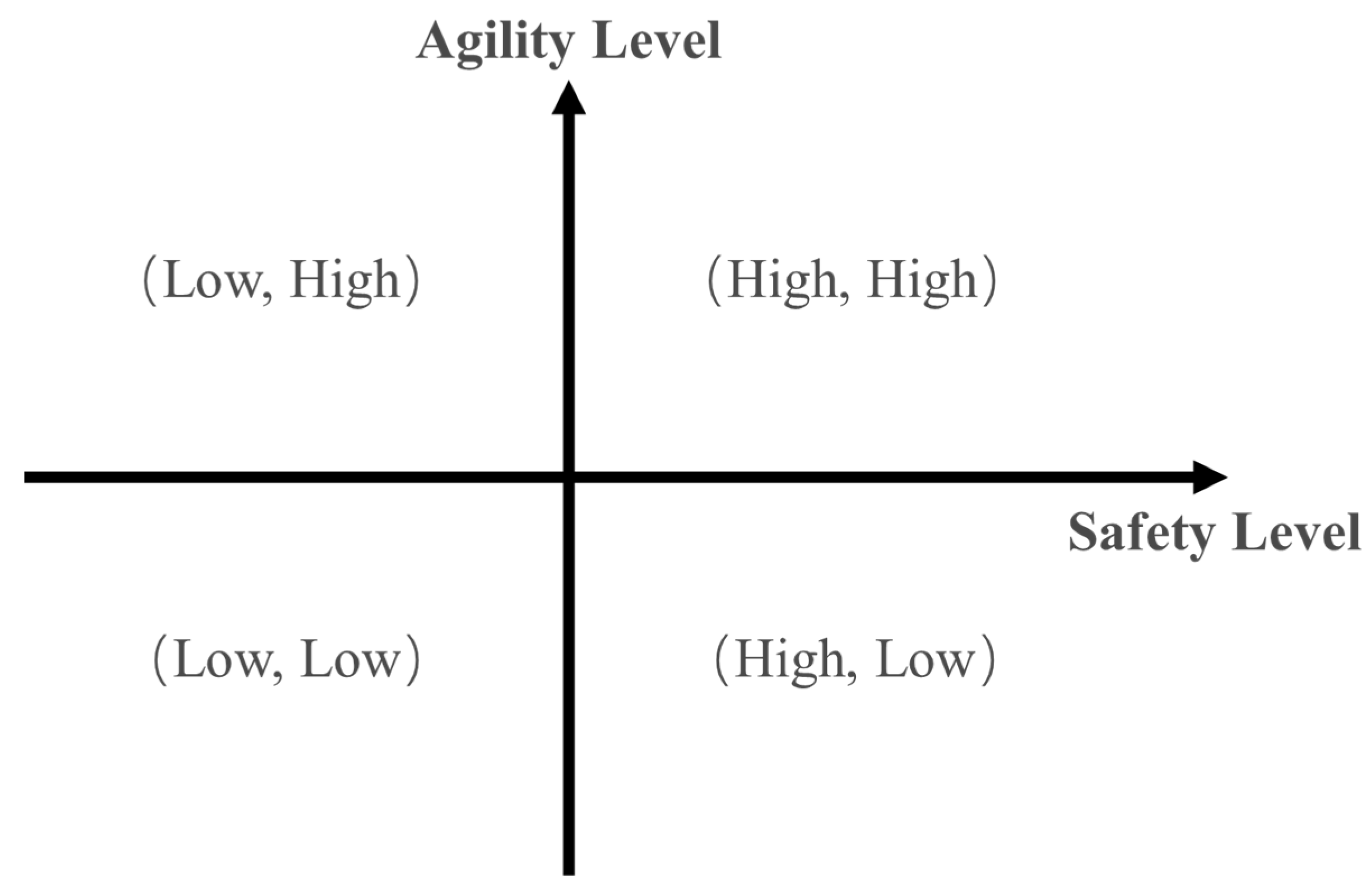

4. Operating Guide

4.1. Potential Application Fields

4.2. Application Outlook

5. Conclusions

- In terms of research methods, although the expert survey participants in the second stage cover multiple roles, the small sample size may limit the universality of the default difficulty values in Table 2. In addition, the information difficulty evaluation focuses on the industry average, regardless of the differences between enterprise types or project scales.

- In terms of framework implementation, the weight distribution of the framework depends on the accumulation of historical project data. OEMs with limited data reserves may face difficulties in implementation, leading to over-reliance on human expert judgment and potential subjectivity. In addition, the framework assumes that information sharing across departments and organizations is technically feasible, but it does not fully consider the non-technical obstacles that may hinder information flow.

- In terms of information security, the framework encourages obtaining detailed supplier information. Without a strict data security protocol, this may disclose the supplier’s core technology or the OEM’s internal testing strategy, resulting in legal disputes or competitive disadvantages.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Liu, Z.; Zhang, W.; Zhao, F. Impact, challenges and prospect of software-defined vehicles. Automot. Innov. 2022, 5, 180–194.

2. Du, B.; Azimi, S.; Moramarco, A.; Sabena, D.; Parisi, F.; Sterpone, L. An automated continuous integration multitest platform for automotive systems. IEEE Syst. J. 2021, 16, 2495–2506.

3. Kang, J.; Ryu, D.; Baik, J. Predicting just-in-time software defects to reduce post-release quality costs in the maritime industry. Softw. Pract. Exp. 2021, 51, 748–771.

4. Rana, R.; Staron, M.; Berger, C.; Hansson, J.; Nilsson, M. Analysing defect inflow distribution of automotive software projects. In Proceedings of the 10th International Conference on Predictive Models in Software Engineering, Turin, Italy, 17 September 2014; pp. 22–31.

5. Matloob, F.; Ghazal, T.M.; Taleb, N.; Aftab, S.; Ahmad, M.; Khan, M.A.; Abbas, S.; Soomro, T.R. Software defect prediction using ensemble learning: A systematic literature review. IEEE Access 2021, 9, 98754–98771.

6. Petrenko, A.; Timo, O.N.; Ramesh, S. Model-based testing of automotive software: Some challenges and solutions. In Proceedings of the 52nd Annual Design Automation Conference, San Francisco, CA, USA, 7–11 June 2015; pp. 1–6.

7. Kriebel, S.; Markthaler, M.; Salman, K.S.; Greifenberg, T.; Hillemacher, S.; Rumpe, B.; Schulze, C.; Wortmann, A.; Orth, P.; Richenhagen, J. Improving model-based testing in automotive software engineering. In Proceedings of the 40th International Conference on Software Engineering: Software Engineering in Practice, Gothenburg, Sweden, 27 May–3 June 2018; pp. 172–180.

8. Lita, A.I.; Visan, D.A.; Ionescu, L.M.; Mazare, A.G. Automated testing system for cable assemblies used in automotive industry. In Proceedings of the 2018 IEEE 24th International Symposium for Design and Technology in Electronic Packaging (SIITME), Brasov, Romania, 22–25 October 2018; pp. 276–279.

9. Kim, Y.; Lee, D.; Baek, J.; Kim, M. MAESTRO: Automated test generation framework for high test coverage and reduced human effort in automotive industry. Inf. Softw. Technol. 2020, 123, 106221.

10. Dobaj, J.; Macher, G.; Ekert, D.; Riel, A.; Messnarz, R. Towards a security-driven automotive development lifecycle. J. Softw. Evol. Process 2023, 35, e2407.

11. Dadwal, A.; Washizaki, H.; Fukazawa, Y.; Iida, T.; Mizoguchi, M.; Yoshimura, K. Prioritization in Automotive Software Testing: Systematic Literature Review. In Proceedings of the 6th International Workshop on Quantitative Approaches to Software Quality co-located with 25th Asia-Pacific Software Engineering Conference (APSEC 2018), QuASoQ, Nara, Japan, 4–7 December 2018; pp. 52–58.

12. Utesch, F.; Brandies, A.; Pekezou Fouopi, P.; Schießl, C. Towards behaviour based testing to understand the black box of autonomous cars. Eur. Transp. Res. Rev. 2020, 12, 1–11.

13. Hoffmann, A.; Quante, J.; Woehrle, M. Experience report: White box test case generation for automotive embedded software. In Proceedings of the 2016 IEEE Ninth International Conference on Software Testing, Verification and Validation Workshops (ICSTW), Chicago, IL, USA, 11–15 April 2016; pp. 269–274.

14. Konzept, A.; Reick, B.; Pintaric, I.; Osorio, C. HIL Based Real-Time Co-Simulation for BEV Fault Injection Testing; SAE Technical Papers; SAE International: Warrendale, PA, USA, 2023; pp. 1–12.

15. Loyola-Gonzalez, O. Black-box vs. white-box: Understanding their advantages and weaknesses from a practical point of view. IEEE Access 2019, 7, 154096–154113.

16. Moukahal, L.J.; Zulkernine, M.; Soukup, M. Vulnerability-oriented fuzz testing for connected autonomous vehicle systems. IEEE Trans. Reliab. 2021, 70, 1422–1437.

17. Beckers, K.; Côté, I.; Frese, T.; Hatebur, D.; Heisel, M. A structured and systematic model-based development method for automotive systems, considering the OEM/supplier interface. Reliab. Eng. Syst. Saf. 2017, 158, 172–184.

18. Morozov, A.; Ding, K.; Chen, T.; Janschek, K. Test suite prioritization for efficient regression testing of model-based automotive software. In Proceedings of the 2017 International Conference on Software Analysis, Testing and Evolution (SATE), Harbin, China, 3–4 November 2017; pp. 20–29.

19. Juhnke, K.; Tichy, M.; Houdek, F. Challenges concerning test case specifications in automotive software testing: Assessment of frequency and criticality. Softw. Qual. J. 2021, 29, 39–100.

20. Enisz, K.; Fodor, D.; Szalay, I.; Kovacs, L. Reconfigurable real-time hardware-in-the-loop environment for automotive electronic control unit testing and verification. IEEE Instrum. Meas. Mag. 2014, 17, 31–36.

21. Sini, J.; Mugoni, A.; Violante, M.; Quario, A.; Argiri, C.; Fusetti, F. An automatic approach to integration testing for critical automotive software. In Proceedings of the 2018 13th International Conference on Design & Technology of Integrated Systems in Nanoscale Era (DTIS), Taormina, Italy, 9–12 April 2018; pp. 1–2.

22. Amalfitano, D.; De Simone, V.; Maietta, R.R.; Scala, S.; Fasolino, A.R. Using tool integration for improving traceability management testing processes: An automotive industrial experience. J. Softw. Evol. Process 2019, 31, e2171.

23. Choi, K.Y.; Lee, J.W. Fault Localization by Comparing Memory Updates between Unit and Integration Testing of Automotive Software in an Hardware-in-the-Loop Environment. Appl. Sci. 2018, 8, 2260.

24. Beckers, K.; Holling, D.; Côté, I.; Hatebur, D. A structured hazard analysis and risk assessment method for automotive systems—A descriptive study. Reliab. Eng. Syst. Saf. 2017, 158, 185–195.

25. Kugler, C. Systematic Derivation of Feature-Driven and Risk-Based Test Strategies for Automotive Applications; RWTH Aachen University: Aachen, Germany, 2023.

26. Grandell, J. Aspects of Risk Theory; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

27. Bühlmann, H. Mathematical Methods in Risk Theory; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007.

28. De Almeida, C.D.A.; Feijó, D.N.; Rocha, L.S. Studying the impact of continuous delivery adoption on bug-fixing time in apache’s open-source projects. In Proceedings of the 19th International Conference on Mining Software Repositories, Pittsburgh, PA, USA, 18–20 May 2022; pp. 132–136.

29. Lee, H.W. The cost and benefit of interdepartmental collaboration: An evidence from the US Federal Agencies. Int. J. Public Adm. 2019, 43, 294–302.

30. Yang, T.M.; Maxwell, T.A. Information-sharing in public organizations: A literature review of interpersonal, intra-organizational and inter-organizational success factors. Gov. Inf. Q. 2011, 28, 164–175.

31. Markos, E.; Milne, G.R.; Peltier, J.W. Information sensitivity and willingness to provide continua: A comparative privacy study of the United States and Brazil. J. Public Policy Mark. 2017, 36, 79–96.

32. Xie, P.; Rui, Z. Study on the Integration Framework and Reliable Information Transmission of Manufacturing Integrated Services Platform. J. Comput. 2013, 8, 146–154.

33. Sandmann, G.; Seibt, M. Autosar-Compliant Development Workflows: From Architecture to Implementation-Tool Interoperability for Round-Trip Engineering and Verification and Validation; SAE Technical Papers; SAE International: Warrendale, PA, USA, 2012.

34. Riasanow, T.; Galic, G.; Böhm, M. Digital Transformation in the automotive industry: Towards a generic value network. In Proceedings of the 25th European Conference on Information Systems (ECIS), Guimaraes, Portugal, 5–10 June 2017.

35. Ginsburg, S.; van der Vleuten, C.P.M.; Eva, K.W. The hidden value of narrative comments for assessment: A quantitative reliability analysis of qualitative data. Acad. Med. 2017, 92, 1617–1621.

36. Birch, J.; Rivett, R.; Habli, I.; Bradshaw, B.; Botham, J.; Higham, D.; Jesty, P.; Monkhouse, H.; Palin, R. Safety cases and their role in ISO 26262 functional safety assessment. In Computer Safety, Reliability, and Security, Proceedings of the 32nd International Conference, SAFECOMP 2013, Toulouse, France, 24–27 September 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 154–165.

37. Döringer, S. ‘The problem-centred expert interview’. Combining qualitative interviewing approaches for investigating implicit expert knowledge. Int. J. Soc. Res. Methodol. 2021, 24, 265–278.

38. Vöst, S. Vehicle level continuous integration in the automotive industry. In Proceedings of the 2015 10th Joint Meeting on Foundations of Software Engineering, Bergamo, Italy, 30 August–4 September 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 1026–1029.

39. Hodel, K.N.; Da Silva, J.R.; Yoshioka, L.R.; Justo, J.F.; Santos, M.M.D. FAT-AES: Systematic Methodology of Functional Testing for Automotive Embedded Software. IEEE Access 2021, 10, 74259–74279.

40. Zimmermann, T.; Nagappan, N. Predicting subsystem failures using dependency graph complexities. In Proceedings of the 18th IEEE International Symposium on Software Reliability (ISSRE’07), Trollhattan, Sweden, 5–9 November 2007; pp. 227–236.

41. Theissler, A. Detecting known and unknown faults in automotive systems using ensemble-based anomaly detection. Knowl.-Based Syst. 2017, 123, 163–173.

42. Fabian, P.Ü.T.Z.; Murphy, F.; Mullins, M.; Maier, K.; Friel, R.; Rohlfs, T. Reasonable, adequate and efficient allocation of liability costs for automated vehicles: A case study of the German Liability and Insurance Framework. Eur. J. Risk Regul. 2018, 9, 548–563.

43. Schmidt, H.; Buettner, K.; Prokop, G. Methods for virtual validation of automotive powertrain systems in terms of vehicle drivability-A systematic literature review. IEEE Access 2023, 11, 27043–27065.

44. Antinyan, V. Revealing the complexity of automotive software. In Proceedings of the 28th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Online, 8–13 November 2020; pp. 1525–1528.

45. Alcaide, S.; Kosmidis, L.; Hernandez, C.; Abella, J. Software-only based diverse redundancy for asil-d automotive applications on embedded hpc platforms. In Proceedings of the 2020 IEEE International Symposium on Defect and Fault Tolerance in VLSI and Nanotechnology Systems (DFT), Frascati, Italy, 19–21 October 2020; pp. 1–4.

46. Vogelsang, A. Feature dependencies in automotive software systems: Extent, awareness, and refactoring. J. Syst. Softw. 2020, 160, 110458.

47. Luo, F.; Zhang, X.; Yang, Z.; Jiang, Y.; Wang, J.; Wu, M.; Feng, W. Cybersecurity testing for automotive domain: A survey. Sensors 2022, 22, 9211.

48. Ardimento, P.; Dinapoli, A. Knowledge extraction from on-line open source bug tracking systems to predict bug-fixing time. In Proceedings of the 7th International Conference on Web Intelligence, Mining and Semantics, Amantea, Italy, 19–22 June 2017; pp. 1–9.

49. Du, J.; Ren, X.; Li, H.; Jiang, F.; Yu, X. Prediction of bug-fixing time based on distinguishable sequences fusion in open source software. J. Softw. Evol. Process 2023, 35, e2443.

50. Vogelsang, A.; Teuchert, S.; Girard, J.F. Extent and characteristics of dependencies between vehicle functions in automotive software systems. In Proceedings of the 2012 4th International Workshop on Modeling in Software Engineering (MISE), Zurich, Switzerland, 2–3 June 2012; pp. 8–14.

51. Muscedere, B.J.; Hackman, R.; Anbarnam, D.; Atlee, J.M.; Davis, I.J.; Godfrey, M.W. Detecting feature-interaction symptoms in automotive software using lightweight analysis. In Proceedings of the 2019 IEEE 26th International Conference on Software Analysis, Evolution and Reengineering (SANER), Hangzhou, China, 24–27 February 2019; pp. 175–185.

52. Muscedere, B.J. Detecting Feature-Interaction Hotspots in Automotive Software Using Relational Algebra. Master’s Thesis, University of Waterloo, Waterloo, ON, Canada, 2018.

53. Pett, T.; Eichhorn, D.; Schaefer, I. Risk-based compatibility analysis in automotive systems engineering. In Proceedings of the 23rd ACM/IEEE International Conference on Model Driven Engineering Languages and Systems: Companion Proceedings, Montreal, QC, Canada, 18–23 October 2020; pp. 1–10.

54. Durisic, D.; Staron, M.; Nilsson, M. Measuring the size of changes in automotive software systems and their impact on product quality. In Proceedings of the 12th International Conference on Product Focused Software Development and Process Improvement, Torre Canne, Italy, 20–22 June 2011; pp. 10–13.

55. Ryberg, A.B. Metamodel-Based Multidisciplinary Design Optimization of Automotive Structures; Linköping University Electronic Press: Linkoping, Sweden, 2017.

56. Sholiq, S.; Sarno, R.; Astuti, E.S.; Yaqin, M.A. Implementation of COSMIC Function Points (CFP) as Primary Input to COCOMO II: Study of Conversion to Line of Code Using Regression and Support Vector Regression Models. Int. J. Intell. Eng. Syst. 2023, 16, 92–103.

57. Kochanthara, S.; Dajsuren, Y.; Cleophas, L.; van den Brand, M. Painting the landscape of automotive software in GitHub. In Proceedings of the 19th International Conference on Mining Software Repositories, Pittsburgh, PA, USA, 18–20 May 2022; pp. 215–226.

58. Waez, M.T.B.; Rambow, T. Verifying Auto-generated C Code from Simulink. In Formal Methods, Proceedings of the 22nd International Symposium, FM 2018, Held as Part of the Federated Logic Conference, FloC 2018, Oxford, UK, 15–17 July 2018; Springer: Berlin/Heidelberg, Germany, 2018; Volume 10951, pp. 312–328.

59. Hewett, R.; Kijsanayothin, P. On modeling software defect repair time. Empir. Softw. Eng. 2009, 14, 165–186.

60. Wang, C.; Li, Y.; Chen, L.; Huang, W.; Zhou, Y.; Xu, B. Examining the effects of developer familiarity on bug fixing. J. Syst. Softw. 2020, 169, 110667.

61. Scalabrino, S.; Linares-Vásquez, M.; Oliveto, R.; Poshyvanyk, D. A comprehensive model for code readability. J. Softw. Evol. Process 2018, 30, e1958.

62. Lee, J.; Wang, L. A method for designing and analyzing automotive software architecture: A case study for an autonomous electric vehicle. In Proceedings of the 2021 International Conference on Computer Engineering and Artificial Intelligence (ICCEAI), Shanghai, China, 27–29 August 2021; pp. 20–26.

63. Arlt, S.; Morciniec, T.; Podelski, A.; Wagner, S. If A fails, can B still succeed? Inferring dependencies between test results in automotive system testing. In Proceedings of the 2015 IEEE 8th International Conference on Software Testing, Verification and Validation (ICST), Graz, Austria, 13–18 April 2015; pp. 1–10.

- Haidry, S.-e.-Z.; Miller, T. Using dependency structures for prioritization of functional test suites. IEEE Trans. Softw. Eng. 2012, 39, 258–275. [Google Scholar] [CrossRef]

- Barsalou, M.; Perkin, R. Statistical problem-solving teams: A case study in a global manufacturing organization in the automotive industry. Qual. Reliab. Eng. Int. 2024, 40, 513–523. [Google Scholar] [CrossRef]

- Bodendorf, F.; Lutz, M.; Franke, J. Valuation and pricing of software licenses to support supplier–buyer negotiations: A case study in the automotive industry. Manag. Decis. Econ. 2021, 42, 1686–1702. [Google Scholar] [CrossRef]

- Bustinza, O.F.; Lafuente, E.; Rabetino, R.; Vaillant, Y.; Vendrell-Herrero, F. Make-or-buy configurational approaches in product-service ecosystems and performance. J. Bus. Res. 2019, 104, 393–401. [Google Scholar] [CrossRef]

- Bisht, R.; Ejigu, S.K.; Gay, G.; Filipovikj, P. Identifying Redundancies and Gaps Across Testing Levels During Verification of Automotive Software. In Proceedings of the 2023 IEEE International Conference on Software Testing, Verification and Validation Workshops (ICSTW), Dublin, Ireland, 16–20 April 2023; pp. 131–139. [Google Scholar]

- Rana, R.; Staron, M.; Hansson, J.; Nilsson, M. Defect prediction over software life cycle in automotive domain state of the art and road map for future. In Proceedings of the 2014 9th International Conference on Software Engineering and Applications (ICSOFT-EA), Vienna, Austria, 29–31 August 2014; pp. 377–382. [Google Scholar]

- Lai, Z.; Shen, Y.; Zhang, G. A security risk assessment method of website based on threat analysis combined with AHP and entropy weight. In Proceedings of the IEEE International Conference on Software Engineering & Service Science, Beijing, China, 24–26 November 2017. [Google Scholar]

- Heeager, L.; Nielsen, P. A conceptual model of agile software development in a safety-critical context: A systematic literature review. Inf. Softw. Technol. 2018, 103, 22–39. [Google Scholar] [CrossRef]

- Birchler, C.; Khatiri, S.; Bosshard, B.; Gambi, A.; Panichella, S. Machine learning-based test selection for simulation-based testing of self-driving cars software. Empir. Softw. Eng. 2023, 28, 71. [Google Scholar] [CrossRef]

- Khan, M.F.I.; Mahmud, F.U.; Hoseen, A.; Masum, A.K.M. A new approach of software test automation using AI. J. Basic Sci. Eng. 2024, 21, 559–570. [Google Scholar]

Column groups: A1–A4 are the Acquisition indicators, T1–T3 are the Transmission indicators, and E1–E3 are the Evaluation indicators.

| Information | Source | Value Range | A1 | A2 | A3 | A4 | T1 | T2 | T3 | E1 | E2 | E3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Requirement Stability | Requirement Analysis Department | {Stable, Unstable} | No | No | Low | Simple | Easy to unify | Easy to unify | Platform | No | No | Yes |
| Requirement Complexity | Requirement Analysis Department | {High, Medium, Low} | Weak | Weak | Medium | Simple | Easy to unify | Easy to unify | Internal | No | No | No |
| Subsystem Complexity | Architecture Design Department | Self-defined formula | No | No | Low | Simple | Easy to unify | Easy to unify | Internal | No | No | No |
| Driver/Environment Influence | Internal R&D Team/Supplier | {High, Medium, Low} | Weak | Weak | Low | Simple | Difficult to unify | Easy to unify | Internal | No | No | No |
| Fault Detection | Internal R&D Team/Supplier | {Yes, No} | Weak | Weak | Medium | Simple | Already unified | Easy to unify | Platform | Yes | No | No |
| Interface Complexity | Internal R&D Team/Supplier | Self-defined formula | No | No | Low | Complex | Easy to unify | Difficult to unify | Platform | Yes | No | No |
| Multidisciplinary Relevance | Internal R&D Team/Supplier | 0–10 | Weak | Weak | Medium | Complex | Easy to unify | Easy to unify | External | No | No | No |
| Maturity | Internal R&D Team/Supplier | {New development, Modified carry-over, Carry-over} | No | Weak | Medium | Simple | Easy to unify | Easy to unify | External | Yes | No | Yes |
| Number of Variants | Internal R&D Team/Supplier | 0–1000 | No | No | Low | Simple | Already unified | Difficult to unify | Internal | Yes | Yes | No |
| Team Capability | Supplier Management Department | {Performed, Managed, Defined, Quantitatively Managed, Optimizing} | No | No | Low | Simple | Already unified | Easy to unify | Internal | Yes | No | No |
| Team Complexity | Supplier Management Department | 0–5 | No | No | Medium | Simple | Easy to unify | Easy to unify | External | Yes | Yes | No |
| Open Source Code Proportion | Internal R&D Team/Supplier | % | No | No | Medium | Complex | Already unified | Difficult to unify | External | Yes | Yes | No |
| Automatically Generated Code Proportion | Internal R&D Team/Supplier | % | Strong | Strong | Medium | Complex | Already unified | Difficult to unify | Platform | Yes | No | Yes |
| User Relevance | Internal R&D Team/Supplier | {Yes, No} | No | No | Low | Simple | Difficult to unify | Difficult to unify | Platform | No | No | No |
| Legal Relevance | Internal R&D Team/Supplier | {Yes, No} | No | No | Low | Simple | Easy to unify | Easy to unify | Platform | No | No | No |
| Insurance Relevance | Internal R&D Team/Supplier | {Yes, No} | No | No | Low | Simple | Easy to unify | Easy to unify | Platform | No | No | No |
| Drivability Impact | Internal R&D Team/Supplier | {Yes, No} | No | No | Low | Simple | Difficult to unify | Difficult to unify | Platform | No | No | No |
| Redundancy | Internal R&D Team/Supplier | {Yes, No} | Weak | No | Low | Simple | Easy to unify | Easy to unify | Platform | No | No | No |
| Commonality | Architecture Design Department | 0–100 | Weak | Weak | Medium | Complex | Easy to unify | Easy to unify | Internal | No | No | No |
| Encryption | Internal R&D Team/Supplier | {Yes, No} | No | Weak | Low | Simple | Easy to unify | Easy to unify | Internal | Yes | Yes | Yes |
| Log Record | Internal R&D Team/Supplier | {Yes, No} | No | No | Low | Simple | Easy to unify | Easy to unify | Platform | Yes | No | No |
| Structured Decomposition | Internal R&D Team/Supplier | {Yes, No} | No | No | Low | Simple | Difficult to unify | Difficult to unify | Platform | Yes | No | No |
| Coupling with Hardware | Internal R&D Team/Supplier | {Yes, No} | Weak | Weak | Low | Complex | Easy to unify | Difficult to unify | Platform | No | No | No |
| Developer Familiarity | Internal R&D Team/Supplier | {White, Gray, Black} | No | No | Medium | Complex | Difficult to unify | Difficult to unify | External | No | No | No |
| Code Readability | Internal R&D Team | {High, Medium, Low} | Weak | Weak | Medium | Simple | Difficult to unify | Difficult to unify | Platform | Yes | No | Yes |
| Functional Safety | Internal R&D Team/Supplier | {ASIL A/B/C/D, QM} | Weak | Weak | Low | Simple | Already unified | Difficult to unify | Platform | No | Yes | No |
| Connection Coupling | Internal R&D Team/Supplier | {Strong, Medium, Weak} | Weak | Weak | Medium | Complex | Easy to unify | Difficult to unify | Internal | No | No | No |
| Usage | Architecture Design Department | Self-defined formula | Weak | Weak | High | Simple | Easy to unify | Easy to unify | Internal | Yes | No | Yes |
| Deployment to Multi-Environments | Architecture Design Department | 0–10 | Weak | Weak | Medium | Simple | Easy to unify | Easy to unify | Internal | Yes | No | Yes |
| Part of V2X | Internal R&D Team/Supplier | {Yes, No} | No | No | Low | Simple | Already unified | Easy to unify | Platform | Yes | No | Yes |
| Performance | Internal R&D Team/Supplier | Self-defined formula | Weak | Weak | Low | Simple | Easy to unify | Difficult to unify | Platform | Yes | No | Yes |
| Dependency | Internal R&D Team/Supplier | 0–100 | Weak | Weak | Medium | Complex | Easy to unify | Difficult to unify | Platform | Yes | No | Yes |
| Code Complexity | Internal R&D Team/Supplier | Self-defined formula | Strong | Strong | Medium | Simple | Easy to unify | Difficult to unify | External | No | No | Yes |
| Requirement Density | Internal R&D Team/Supplier | 0–100 | Weak | Weak | High | Simple | Easy to unify | Difficult to unify | Platform | Yes | No | Yes |
| Interactivity | Internal R&D Team/Supplier | Self-defined formula | Weak | Weak | Medium | Complex | Easy to unify | Difficult to unify | Platform | Yes | No | Yes |
| Requirement Category | Requirement Analysis Department | {VF, SF, EC, FC, TR, PC} | Weak | No | Low | Simple | Easy to unify | Easy to unify | Platform | No | Yes | Yes |
| Parent Requirement | Requirement Analysis Department | code name | Weak | No | Low | Simple | Easy to unify | Easy to unify | Platform | No | Yes | Yes |
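For readers who want to query the table with their own data, the following is a minimal sketch of how its rows could be loaded and filtered. It is an illustration only, not part of the proposed framework: the helper names `parse_rows` and `select` are hypothetical, the column keys simply mirror the table header (A1–A4, T1–T3, E1–E3), and the three sample rows are copied verbatim from the table above.

```python
# Column keys mirror the table header; the lowercase names are assumptions.
COLUMNS = ("information", "source", "value_range",
           "A1", "A2", "A3", "A4", "T1", "T2", "T3", "E1", "E2", "E3")

# Three rows copied verbatim from the table (pipe-delimited).
ROWS = """
| Requirement Stability | Requirement Analysis Department | {Stable, Unstable} | No | No | Low | Simple | Easy to unify | Easy to unify | Platform | No | No | Yes |
| Fault Detection | Internal R&D Team/Supplier | {Yes, No} | Weak | Weak | Medium | Simple | Already unified | Easy to unify | Platform | Yes | No | No |
| Developer Familiarity | Internal R&D Team/Supplier | {White, Gray, Black} | No | No | Medium | Complex | Difficult to unify | Difficult to unify | External | No | No | No |
"""


def parse_rows(text):
    """Turn pipe-delimited table rows into a list of dicts keyed by COLUMNS."""
    items = []
    for line in text.strip().splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        if len(cells) != len(COLUMNS):
            raise ValueError(f"expected {len(COLUMNS)} cells, got {len(cells)}")
        items.append(dict(zip(COLUMNS, cells)))
    return items


def select(items, **criteria):
    """Return the items whose columns equal every given criterion."""
    return [item for item in items
            if all(item[key] == value for key, value in criteria.items())]


items = parse_rows(ROWS)

# Example query: items rated "Difficult to unify" on both T1 and T2.
hard_to_unify = select(items, T1="Difficult to unify", T2="Difficult to unify")
print([item["information"] for item in hard_to_unify])
```

Keeping each row as a plain dictionary means the same `select` call works for any combination of indicators, for example `select(items, T3="Platform", E1="Yes")`.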

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, W.; Shi, M.; Liu, X.; Ren, L. Proposing an Information Management Framework for Efficient Testing Strategies of Automotive Integration. Processes 2025, 13, 3296. https://doi.org/10.3390/pr13103296
