Abstract

Recent advances in Industry 4.0 have pushed manufacturing industries towards the use of augmented reality (AR), virtual reality (VR), and mixed reality (MR) for visualization and training applications. AR assistance is extremely helpful for visualizing assembly tasks during product assembly and for visualizing disassembly plans during the repair and maintenance of a product or system. Generating such assembly and disassembly task animations consumes a lot of time and demands skilled user intervention. In assembly or disassembly processes, each operation must be validated for geometric feasibility with respect to its practical implementation on the real product. In this manuscript, a novel method for automated assembly task simulation with improved geometric feasibility testing is proposed and verified. The proposed framework takes the assembly sequence plan as input in the form of textual instructions and generates a virtual assembly task plan for the product; furthermore, these instructions are checked for collisions using a combination of multiple linear directions. Once the textual instructions are found to be geometrically feasible for the entire assembly operation, visual animations of the assembly operations are produced in a game engine and integrated with the AR platform so that they can be visualized in the physical environment. The framework is implemented on various products and validated for its correctness and completeness.

1. Introduction

Assembly is the art of joining multiple parts together to form a product that is suitable for its intended use. The assembly stage comprises 20–30% of the overall time and 30–40% of the overall production cost [1,2,3,4]. A well-organized and feasible assembly sequence minimizes assembly cost and time by reducing the tool travel distances, the number of tool changes, and the total number of changes in the assembly direction [2,3,4]. As such, assembly sequence planning (ASP) is critical for the successful production of large-scale products containing a high number of parts, such as airplanes, cargo/cruise ships, and products used in the aerospace industries. Typically, an industrial engineer provides the assembly sequence for a product, based on prior experience and knowledge [5,6]. However, ASP generates a finite number of sequences, of which some are optimal and only a few are practically beneficial because of tool and fixture usage [2,3,5]. For every new product configuration, assembly workers have to be trained in the assembly operations and in the usage of appropriate tools.

The introduction of AR technology minimizes the cognitive load on assembly workers by visualizing the assembly task simulations in a real-time environment [7,8,9,10]. To create such a visual assembly simulation, virtual assembly sequence validation (VASV) is a critical process that occurs during the prototyping phase, before the development of a physical model of a product. VASV utilizes geometrical information of the product components and user constraints to validate a given set of assembly sequences. This process is essential for ensuring the geometric feasibility and compliance of the generated sequences using geometrical constraints and collision-free paths. By performing VASV during the prototyping phase, manufacturers can identify and resolve the potential assembly conflicts before the actual assembly process to reduce the risk of production errors and to improve the assembly efficiency. Moreover, VASV plays a significant role in optimizing tool and fixture usage, as it helps manufacturers to identify the most efficient assembly sequence, thereby improving productivity and reducing the lead time.

Several researchers have used computational methods [11,12], in which assembly attributes such as the geometrical relations between parts and precedence relations are taken as input to generate feasible assembly sequence plans. As the part count increases, researchers have used search techniques to find an optimal feasible assembly plan for large products [13,14,15,16,17]. Graph-based methods have become efficient at finding optimal assembly sequences with skilled user intervention [18,19]. The application programming interface (API) of computer-aided design (CAD) tools offers greater flexibility to retrieve the geometrical and topological information necessary for assembly and disassembly sequence planning [20,21,22,23,24,25,26]. However, most of the prominent research on ASP is limited to generating a feasible assembly sequence plan without further validation in the real-time environment using assembly tools. The current generation of CAD software (https://www.autodesk.com/) does not include assembly feasibility testing as a standard feature. While similar tools exist, they do not consider interference testing between parts while moving them in the virtual space, which is a crucial phase in validating an assembly sequence plan. A lack of assembly feasibility testing in the physical environment can result in production errors, increased costs, and delays in the production process. To mitigate these risks, there is an urgent need for advanced CAD software that includes assembly feasibility testing as a standard feature. By incorporating this feature, manufacturers can identify and resolve potential assembly issues before the actual assembly process. Moreover, these assembly plans can be directly exported to AR platforms for visualization in the shop-floor environment.

As a result of the limitations in the existing methods, there is a strong need to develop an automated assembly sequence validation and automated assembly simulation framework that can be extended for AR applications. In this paper, Section 2 lists the assumptions made in constructing the framework. Section 3 describes an improved geometric feasibility testing method for assembly sequence validation. Section 4 describes the assembly sequence validation and automation framework. Case studies are presented in Section 5 to demonstrate the completeness of the proposed framework. The conclusions and future scope of the work are presented in Section 6.

2. Assumptions Followed in the Assembly Sequence Validation Framework

The assumptions made in constructing the assembly sequence validation (ASV) framework are listed below.

- All of the components in the given product are rigid, i.e., there will not be any change in their geometrical shape or size during the assembly operation.

- The assembly fixtures/tools hold the part when there is no physical stability for it after it is positioned in its assembly state.

- The friction between parts during assembly operation is not considered for the verification of stability.

- Only rectilinear paths along the principal axes and oblique orientations, and combinations of these, are used for assembling parts.

- Rotation of a component during the assembly operation is not considered.

- Tool feasibility during the assembly operation is not considered; only the primary and secondary parts of the product are considered for assembly sequence validation.

3. Improved Geometric Feasibility Testing

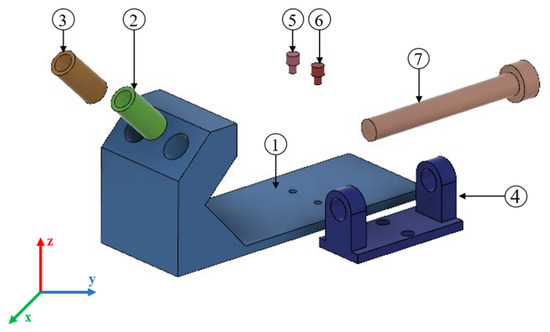

An assembly operation should be conducted through a collision-free path to bring a defined part to its assembled state in the presence of prior existing components. Interference testing is crucial to validate the collision-free path during assembly operations. A bench vise product with seven parts, as shown in Figure 1, is considered for the purpose of implementation.

Figure 1.

Bench vise assembly.

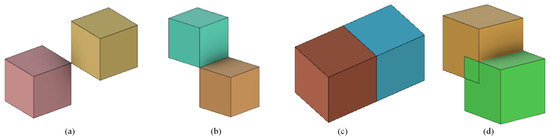

The analysis of interference between two parts in space (Pi and Pj) involves examining the type of contact (point, line, or surface) or the overlap between the parts, as illustrated in Figure 2. Traditional methods for analyzing interference between moving parts require repeated calculations while moving part Pi toward part Pj along the specified assembly direction with an incremental step size, which is computationally expensive. In contrast, the proposed approach extrudes the projection of part Pi at its center of mass (COM) in the given direction to form an extruded volume Vpi and then performs a static contact analysis to compute the interference with part Pj.

Figure 2.

Possible contact interferences in a pair of parts: (a) point contact, (b) line contact, (c) surface contact, and (d) overlap.

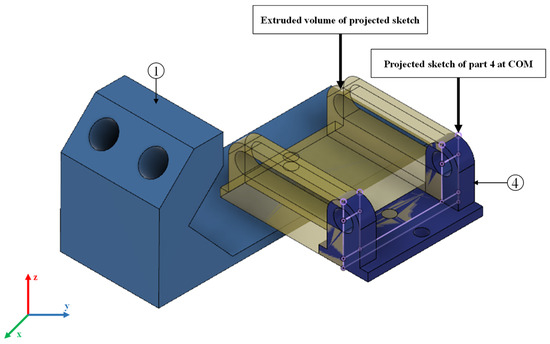

The COM is considered as the point at which the vertices are concentrated. The purpose of this approach is to minimize the loss of geometric information of part Pi during the extrusion process, which is necessary to perform conflict analysis and to identify the type of contact between two parts in the CAD environment. Using the COM as the reference point, a plane normal to the assembly direction is created that passes through it. The appending part is projected onto the plane to generate a sketch that consists of the bounding elements of the part. The projected boundary (sketch) is then extruded in the specified assembly direction over the same distance as the assembly path. Thus, the extruded volume Vpi is generated as a 3D solid body that retains the necessary geometrical information of the original part, as shown in Figure 3. The extruded solid body is then used for interference testing with the existent part or subassembly.

Figure 3.

Extrusion of P4 from its COM along the direction of assembly operation.
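The projection and extrusion steps described above can be illustrated with a minimal pure-Python sketch. It is not the authors' Blender implementation: the function names are hypothetical, the COM is approximated by the mean of the vertices (an assumption), the direction is assumed to be a unit vector, and only vertices are projected, whereas a CAD kernel would also build the closed boundary sketch and the solid body.

```python
from typing import List, Tuple

Vec = Tuple[float, float, float]


def vertex_centroid(vertices: List[Vec]) -> Vec:
    """Mean of the vertices, used here as a stand-in for the COM (assumption)."""
    n = len(vertices)
    return (
        sum(v[0] for v in vertices) / n,
        sum(v[1] for v in vertices) / n,
        sum(v[2] for v in vertices) / n,
    )


def project_onto_plane(vertices: List[Vec], origin: Vec, normal: Vec) -> List[Vec]:
    """Project vertices onto the plane through `origin` with unit `normal`."""
    projected = []
    for v in vertices:
        # Signed distance of the vertex from the plane, measured along the normal.
        d = sum((v[i] - origin[i]) * normal[i] for i in range(3))
        projected.append(tuple(v[i] - d * normal[i] for i in range(3)))
    return projected


def extrude(profile: List[Vec], direction: Vec, distance: float):
    """Return the start and end caps of the prism swept along `direction`."""
    end_cap = [tuple(p[i] + distance * direction[i] for i in range(3)) for p in profile]
    return profile, end_cap


# Hypothetical part: a unit cube assembled along +z over 5 units.
cube = [(x, y, z) for x in (0.0, 1.0) for y in (0.0, 1.0) for z in (0.0, 1.0)]
com = vertex_centroid(cube)
direction = (0.0, 0.0, 1.0)
start_cap, end_cap = extrude(project_onto_plane(cube, com, direction), direction, 5.0)
```

In this example, every projected vertex lies on the plane through the COM, and the end cap is the same profile shifted by the assembly distance.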

3.1. Interference Testing Algorithm

Interference testing is required during the assembly operation in order to identify the collision-free path, i.e., interference testing has to be done between the existent part and the appending part during each step of the assembly operation. Component snapping and contact analysis for small movements of the appending component is an iterative process that takes a huge amount of time. An algorithm is therefore proposed that converts interference testing between moving objects into a static collision analysis between the volume of the appending part and the existent part using Equation (1), where the interference is analyzed individually for each direction vector by creating the extruded volume along that vector. The volume of an appending part can be generated by extruding the projected sketch at the COM in the direction of the assembly operation, as shown in Figure 3. The volume of interference can be determined by analyzing the geometry of the intersected parts.

Interference between two components is detected using a bounding volume hierarchy (BVH) tree structure. The development of a BVH involves two steps: creating a bounding volume (BV) for each tessellated triangle and building a tree structure that incorporates the BVs. Axis-aligned bounding boxes (AABBs) are generated by determining their maximum and minimum coordinates in Euclidean space. To optimize the computation time of the AABB coordinates, a rotation matrix is further used to rotate each BV about its axis. The top-down algorithm is employed in this study for tree structure development. The BVs of the triangles (S) are computed after tessellation to determine whether the BV is a leaf node (minimum BV). The BVH overlap intersection result is then converted into a mesh to calculate the volume of intersection. The volume of intersection is further used to distinguish the type of contact, such as point contact, line contact, surface contact, or overlap of objects. The proposed interference testing algorithm (Algorithm 1) is given below.
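As a simplified illustration of how the contact types of Figure 2 can be told apart, the sketch below computes AABBs from point sets and classifies a pair of boxes by the number of axes on which they overlap with positive length. This is a box-level approximation under stated assumptions, not the mesh-level volume computation described above; the function names and the tolerance `tol` are hypothetical.

```python
from typing import Iterable, Optional, Tuple

Vec = Tuple[float, float, float]
Box = Tuple[Vec, Vec]  # (minimum corner, maximum corner)


def aabb(points: Iterable[Vec]) -> Box:
    """Axis-aligned bounding box from the min and max coordinates of the points."""
    pts = list(points)
    lo = tuple(min(p[i] for p in pts) for i in range(3))
    hi = tuple(max(p[i] for p in pts) for i in range(3))
    return lo, hi


def overlap_extents(a: Box, b: Box) -> Optional[Tuple[float, float, float]]:
    """Per-axis overlap length, or None if the boxes are separated on any axis."""
    extents = []
    for i in range(3):
        length = min(a[1][i], b[1][i]) - max(a[0][i], b[0][i])
        if length < 0:
            return None
        extents.append(length)
    return tuple(extents)


def classify_contact(a: Box, b: Box, tol: float = 1e-9) -> str:
    """Classify box-level contact as none, point, line, surface, or overlap."""
    extents = overlap_extents(a, b)
    if extents is None:
        return "none"
    positive_axes = sum(1 for e in extents if e > tol)
    return {0: "point", 1: "line", 2: "surface", 3: "overlap"}[positive_axes]


unit = aabb([(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)])
print(classify_contact(unit, ((1.0, 0.0, 0.0), (2.0, 1.0, 1.0))))  # shares a face
print(classify_contact(unit, ((0.5, 0.5, 0.5), (2.0, 2.0, 2.0))))  # interpenetrates
```

Only the last category has a non-zero intersection volume, which is the quantity the framework uses to separate a feasible mating contact from an infeasible overlap.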

| Algorithm 1: Interference Algorithm |
| --- |
| Input: Parts, target, direction, magnitude |
| Output: True/False, volume |
| geo_center, com, bb ← BoundingBox(target) /* calculates the centroid, center of mass, and bounding box dimensions */ |
| Create a thin cuboid Ctarget at com with dimensions bb.length ∗ bb.width ∗ 0.2 |
| Ctarget ← intersection of Ctarget and target |
| Ctarget ← extrusion of Ctarget along direction by magnitude units |
| for Pi in Parts do |
| if Pi is not equal to target then |
| overlap, volume ← check_overlap(Pi, Ctarget) |
| if overlap is True then |
| if volume > 0 then |
| return True, volume /* overlapping */ |
| else |
| return False, volume /* touching */ |
| end if |
| else |
| return False, 0 |
| end if |
| end if |
| end for |

The algorithm takes the part geometries (existent part and appending part), the direction of assembly, and the distance travelled during the assembly operation as input. The COM is computed for the appending part to generate an extruded volume. Axis-aligned bounding boxes are then created, and the analysis is completed to verify the type of conflict between the existent part and the volume created by the appending part. Based on the type of intersection, mating (point/line/surface contact) is considered a feasible operation, and volume intersection is considered an infeasible assembly operation.
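The control flow of Algorithm 1 can be sketched in plain Python with each part reduced to an axis-aligned box. This is a sketch under several assumptions and not the authors' Blender code: the swept volume is approximated by the box enclosing the part at both ends of its path (exact only for axis-aligned directions), the thin COM slice is omitted, the helper names are hypothetical, and the loop continues past non-overlapping parts instead of returning at the first one.

```python
from typing import Dict, Tuple

Vec = Tuple[float, float, float]
Box = Tuple[Vec, Vec]  # (minimum corner, maximum corner)


def swept_box(box: Box, direction: Vec, magnitude: float) -> Box:
    """Box enclosing `box` and its copy translated by direction * magnitude."""
    lo, hi = box
    shift = tuple(d * magnitude for d in direction)
    new_lo = tuple(min(lo[i], lo[i] + shift[i]) for i in range(3))
    new_hi = tuple(max(hi[i], hi[i] + shift[i]) for i in range(3))
    return new_lo, new_hi


def intersection_volume(a: Box, b: Box) -> float:
    """Volume shared by two boxes; 0.0 when they are apart or only touching."""
    volume = 1.0
    for i in range(3):
        length = min(a[1][i], b[1][i]) - max(a[0][i], b[0][i])
        if length <= 0.0:
            return 0.0
        volume *= length
    return volume


def interference(parts: Dict[str, Box], target: str, direction: Vec,
                 magnitude: float) -> Tuple[bool, float]:
    """Return (True, volume) for the first solid overlap, else (False, 0.0)."""
    path = swept_box(parts[target], direction, magnitude)
    for name, box in parts.items():
        if name == target:
            continue
        volume = intersection_volume(path, box)
        if volume > 0.0:
            return True, volume  # overlapping: infeasible operation
    return False, 0.0  # touching or clear: feasible operation


# Hypothetical scene: a block lowered onto a base plate along -z.
scene = {
    "base": ((0.0, 0.0, 0.0), (4.0, 4.0, 1.0)),
    "block": ((1.0, 1.0, 5.0), (2.0, 2.0, 6.0)),
}
clear = interference(scene, "block", (0.0, 0.0, -1.0), 4.0)
scene["lid"] = ((0.0, 0.0, 3.0), (4.0, 4.0, 3.5))
blocked = interference(scene, "block", (0.0, 0.0, -1.0), 4.0)
```

In the first call the swept box only touches the top face of the base, so the operation is reported as feasible; once the hypothetical lid lies across the path, the shared volume becomes positive and the operation is rejected.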

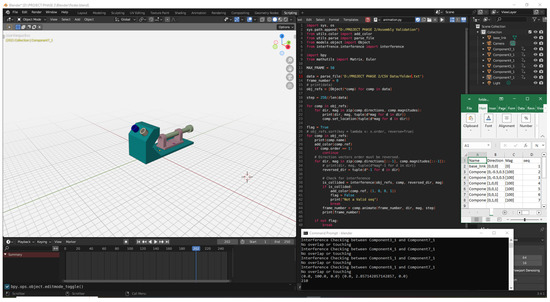

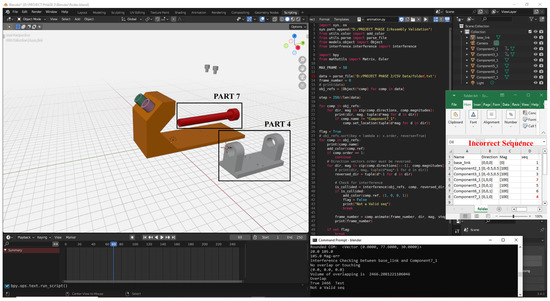

The algorithm was written in Python to interface with the Blender CAD tool using the necessary assembly textual information. The assembly textual information was supplied through an Excel sheet to verify the efficacy of the algorithm for feasible and infeasible assembly sequence plans, as shown in Figure 4 and Figure 5. A sample of the textual information consists of the part name/identity, a vector to describe the assembly orientation, the distance travelled, and the order of the part in the assembly sequence.
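One possible in-memory representation of this textual information is sketched below. The column names (`part`, `dx`, `dy`, `dz`, `distance`, `order`), the `Instruction` class, and the sample rows are hypothetical placeholders and are not taken from the authors' spreadsheet; CSV text is used in place of an Excel file so that the example needs only the standard library.

```python
import csv
import io
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Instruction:
    part: str                              # part name/identity
    direction: Tuple[float, float, float]  # vector describing the assembly orientation
    distance: float                        # distance travelled along the direction
    order: int                             # position in the assembly sequence


def load_instructions(text: str) -> List[Instruction]:
    """Parse CSV text into instructions sorted by assembly order."""
    rows = csv.DictReader(io.StringIO(text))
    items = [
        Instruction(
            part=row["part"],
            direction=(float(row["dx"]), float(row["dy"]), float(row["dz"])),
            distance=float(row["distance"]),
            order=int(row["order"]),
        )
        for row in rows
    ]
    return sorted(items, key=lambda item: item.order)


# Placeholder rows for illustration only.
SAMPLE = """part,dx,dy,dz,distance,order
P2,0,0,1,40,2
P1,0,0,1,0,1
P3,1,0,0,25,3
"""

instructions = load_instructions(SAMPLE)
```

Sorting by `order` at load time means that later stages can simply iterate over the list in assembly sequence.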

Figure 4.

The result of the implementation of the interference framework in Blender for a feasible assembly sequence.

Figure 5.

The result of the implementation of the interference framework in Blender for an infeasible assembly sequence.

4. Assembly Sequence Validation and Automation

The proposed framework requires the parts in their assembled state as input, along with user-specified assembly attributes, which include unit vectors to describe the linear direction of the assembly path, the distance travelled by a specific part to reach the assembled state in a given direction, and the sequence in which the parts should be assembled. The part identity is a nomenclature based on the hierarchy from the product tree. Table 1 lists the textual assembly information for the seven-part bench vise product shown in Figure 1.

Table 1.

Sample of the textual assembly instructions for assembling the seven-part bench vise.

4.1. Assembly Sequence Validation and Automation Algorithm

For a product with “n” individual components, P1 to Pn−1 are moved in a specific direction (opposite to the direction stated in the provided textual instructions) in reverse assembly order so as to generate an exploded view. While generating the exploded view, the part with order “1” is considered the base part, which starts the assembly operations. Subsequently, each component Pi undergoes a collision-free path check along the assembly direction vector provided by the user: this vector is taken as the normal of a plane at the COM of part Pi, and the projected sketch is extruded for the designated magnitude. The framework described in Section 3.1 then reports the output volume, which is “0” in the case of point, line, or surface contact, or no contact. If a collision-free path is detected between component Pi (appending part) and component Pj (where Pj can be an existent part or a sub-assembly), component Pi is snapped to its original position in the assembled state. This process is repeated for all components, as specified in the assembly sequence order. However, if any interference occurs between component Pk and component Pj (i.e., Pj ⋂ Pk is a solid), the algorithm halts at component Pk. The algorithm’s output verifies the validity of the assembly sequence and creates an animation of the components’ assembly operations. The assembly sequence validation algorithm (Algorithm 2) is presented below.

| Algorithm 2: Assembly Sequence Validation Algorithm |
| --- |
| Input: Pi → (xi, yi, zi) ∗ mi → si |
| Output: True or False |
| is_sequence_valid ← False |
| n ← number of parts |
| Parts ← Pi (i ∈ [0, n]) |
| direction ← (xi, yi, zi) (i ∈ [0, n]) |
| magnitude ← mi (i ∈ [0, n]) |
| sequence ← si (i ∈ [0, n]) |
| sort Parts according to sequence in ascending order |
| for i in n do |
| set initial location of Part[i] as direction[i] ∗ magnitude[i] |
| reversed_direction ← (direction[i].x ∗ −1, direction[i].y ∗ −1, direction[i].z ∗ −1) |
| Collision ← interference(Parts, Part[i], reversed_direction, magnitude[i]) |
| if Collision is True then |
| is_sequence_valid ← False |
| stop |
| else |
| move Part[i] along reversed_direction |
| end if |
| end for |
| is_sequence_valid ← True |
| stop |

Once a sequence passes the assembly sequence validation, the assembly operations are visualized as virtual animations in the Blender software (https://www.blender.org/). Furthermore, these visual animations can be exported to a game engine for AR visualization using a handheld device (HHD) or a head-mounted device (HMD).
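The validation loop of Algorithm 2 can be sketched as follows, again with parts reduced to axis-aligned boxes. The sketch follows the pseudocode convention that a part starts at its assembled position offset by direction ∗ magnitude and then travels along the reversed direction. It is an illustration under assumptions, not the authors' implementation: helper names are hypothetical, the swept path is a bounding box, and each part is tested only against parts that are already placed.

```python
from typing import Dict, List, Optional, Tuple

Vec = Tuple[float, float, float]
Box = Tuple[Vec, Vec]            # (minimum corner, maximum corner)
Step = Tuple[str, Vec, float]    # (part name, direction, magnitude), in sequence order


def translate(box: Box, direction: Vec, distance: float) -> Box:
    """Move a box by direction * distance."""
    lo, hi = box
    return (
        tuple(lo[i] + direction[i] * distance for i in range(3)),
        tuple(hi[i] + direction[i] * distance for i in range(3)),
    )


def swept_box(box: Box, direction: Vec, distance: float) -> Box:
    """Box enclosing the part at both ends of a straight move."""
    moved = translate(box, direction, distance)
    return (
        tuple(min(box[0][i], moved[0][i]) for i in range(3)),
        tuple(max(box[1][i], moved[1][i]) for i in range(3)),
    )


def solid_overlap(a: Box, b: Box) -> bool:
    """True only when the boxes share a positive volume (touching is allowed)."""
    return all(min(a[1][i], b[1][i]) - max(a[0][i], b[0][i]) > 0.0 for i in range(3))


def validate_sequence(assembled: Dict[str, Box],
                      steps: List[Step]) -> Tuple[bool, Optional[str]]:
    """Return (True, None) if every part reaches its place, else (False, part)."""
    placed: List[Box] = []
    for name, direction, magnitude in steps:
        start = translate(assembled[name], direction, magnitude)  # exploded position
        reverse = tuple(-d for d in direction)
        path = swept_box(start, reverse, magnitude)
        if any(solid_overlap(path, other) for other in placed):
            return False, name           # halt at the colliding part
        placed.append(assembled[name])   # snap the part to its assembled state
    return True, None


# Hypothetical product: a block sits on a base plate and is covered by a lid.
product = {
    "base": ((0.0, 0.0, 0.0), (4.0, 4.0, 1.0)),
    "block": ((1.0, 1.0, 1.0), (2.0, 2.0, 2.0)),
    "lid": ((0.0, 0.0, 2.0), (4.0, 4.0, 3.0)),
}
up = (0.0, 0.0, 1.0)
good = validate_sequence(product, [("base", up, 5.0), ("block", up, 5.0), ("lid", up, 5.0)])
bad = validate_sequence(product, [("base", up, 5.0), ("lid", up, 5.0), ("block", up, 5.0)])
```

Placing the lid before the block makes the block's path cut through the lid, so the second sequence is rejected at the block, mirroring the halt at component Pk described above.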

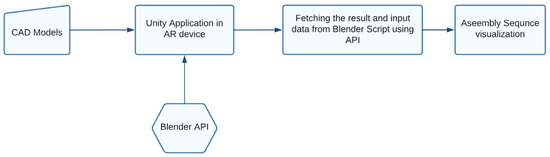

4.2. Augmented Reality Visualization

To visualize the virtual assembly simulations, the assembly animations should be generated in a game engine that supports augmented reality. Unity is one such game engine platform; it was developed by Unity Technologies and first released in 2005 for Mac OS. The engine has since been progressively extended to support a wide variety of desktop, mobile, and AR/VR platforms. It is particularly popular for iOS and Android mobile app development in order to show interactive simulations. In this work, the Unity application is used as the interface for visualizing the assembly operations.

After the ASV algorithm has tested the assembly sequence, and if the sequence is deemed valid, the resulting assembly animations are exported to Unity, which provides a visualization of the assembly operations on an AR-compatible device. This integration of CAD models, validation algorithms, and AR technology enhances the user’s experience and facilitates accurate assembly processes. This process is presented as a flow chart in Figure 6 and involves the following steps:

Figure 6.

AR interfacing Mechanism for visualization.

- a. CAD models: The user supplies the CAD models of the assembled product to the Unity application. These CAD models serve as the basis for the validation and visualization process.

- b. Validation using API: The Unity application interacts with the assembly sequence validation (ASV) framework to perform the validation. The input data, including the CAD models and the assembly sequence, are passed to the ASV algorithm. The ASV algorithm then processes the input and determines whether the assembly sequence is valid or not. The output of the ASV algorithm, which runs in Blender, is a Boolean value (true/false) indicating the validity of the sequence, and it is stored in a cloud database such as Firebase.

- c. Data exchange with Unity: After the validation process is completed, the input data and the validation result (true/false) are transferred back to the Unity application using an API (application programming interface). This allows for the transfer of data between the ASV algorithm and the Unity application.

- d. Visualization in augmented reality: If the assembly sequence is determined to be valid, Unity takes the validated input data and provides a visualization of the assembly on an augmented reality (AR) device such as the HoloLens 2. The AR device overlays virtual components onto the real-world environment, allowing the user to see the virtual assembly in the context of the physical space. This visualization serves as a guide for the user during the assembly process, enhancing their understanding of the correct sequence and positioning of the components.
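The data exchange in steps b to d can be pictured as a small JSON record that carries the Boolean validation result. The sketch below is purely illustrative: the field names and both functions are hypothetical, and no actual Firebase or Unity API is called, since the source does not describe the record layout.

```python
import json
from typing import List, Optional


def build_result_record(product: str, sequence: List[int], is_valid: bool,
                        failed_part: Optional[int] = None) -> str:
    """Serialize a validation result (hypothetical field names) to JSON."""
    record = {
        "product": product,
        "sequence": sequence,
        "is_valid": is_valid,
        "failed_part": failed_part,  # part at which validation halted, if any
    }
    return json.dumps(record, sort_keys=True)


def should_visualize(payload: str) -> bool:
    """The AR visualization is offered only for sequences marked valid."""
    return json.loads(payload)["is_valid"] is True


valid_payload = build_result_record("bench vise", [1, 2, 3, 4, 5, 6, 7], True)
invalid_payload = build_result_record("bench vise", [1, 2, 3, 7, 4, 5, 6], False, failed_part=4)
```

Keeping the failed part in the record is a design choice of this sketch; it would let the client report where a rejected sequence stopped rather than only that it was rejected.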

5. Case Studies

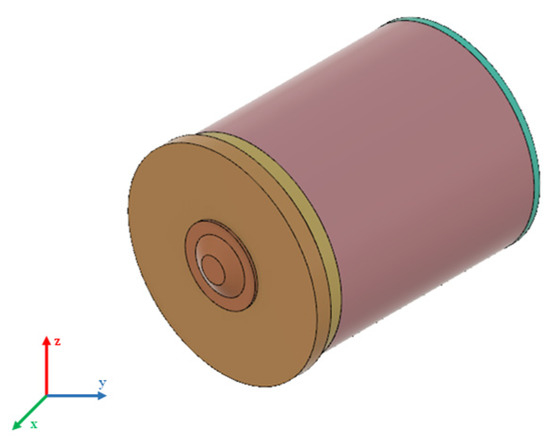

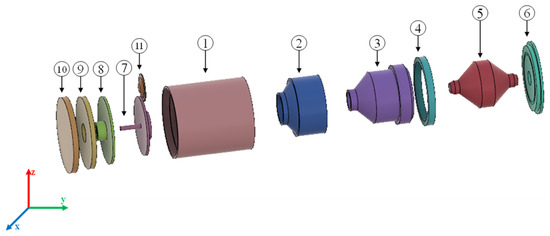

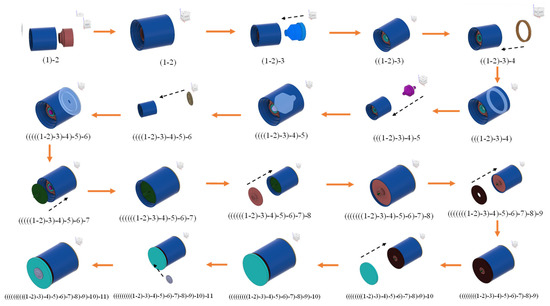

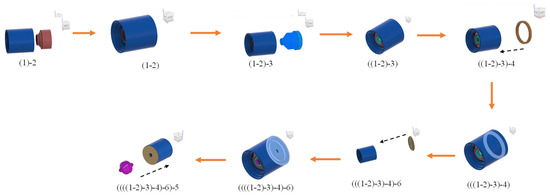

The bench vise assembly consists of seven parts, as shown in Figure 1. The transmission assembly, shown in Figure 7, consists of 11 parts. Both products are considered for implementation. The proposed framework is applied to assembly sequences for the bench vise (an asymmetric product) and the transmission assembly (a symmetric product), and each sequence is validated using the improved geometric feasibility testing method. The textual assembly instructions for these two products, which are fed to the algorithm, are given in Table 2 and Table 3. The textual instructions include both feasible and infeasible sequences in order to test the correctness of the framework and its completeness for use with other product variants.

Figure 7.

Transmission box product (assembled state).

Table 2.

User input for the bench vise assembly.

Table 3.

User input for the transmission assembly.

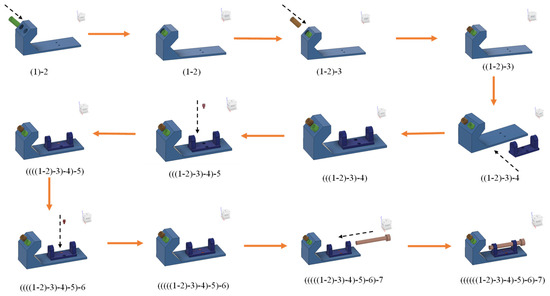

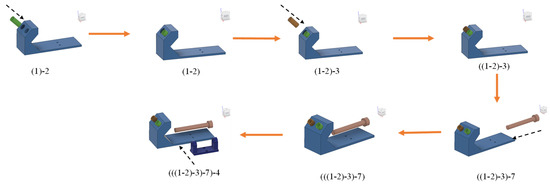

The seven-part bench vise assembly has the precedence restriction that part 4 cannot be assembled after part 7 in the given direction (0, 0, 1). Similarly, Figure 8 illustrates the 11-part transmission box assembly, in which part 5 cannot be assembled after part 6 in the given direction (0, 1, 0). The results of the framework are listed in Table 4 for the bench vise assembly and the transmission assembly, and the steps involved in ASV for feasible and infeasible assembly sequences are illustrated in Figure 9 and Figure 10 for the bench vise assembly and in Figure 11 and Figure 12 for the transmission assembly.

Figure 8.

Exploded view of the transmission box assembly.

Table 4.

Results of assembly sequence validation framework.

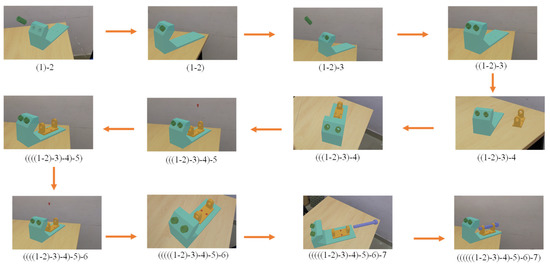

Figure 9.

Steps involved in ASV for the bench vise assembly with a valid assembly sequence (1–2–3–4–5–6–7).

Figure 10.

Steps involved in ASV for the bench vise assembly with an invalid assembly sequence (1–2–3–7–4–5–6).

Figure 11.

Steps involved in ASV for the transmission assembly with a valid sequence (1–2–3–4–5–6–7–8–10–9–11).

Figure 12.

Steps involved in ASV for transmission assembly with an invalid sequence (1–2–3–4–6–5–7–8–10–9–11).
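The two precedence restrictions stated above can be checked against the four sequences in Figures 9 to 12 with a few lines of Python. This only tests the ordering of parts and is not the geometric interference test performed by the framework; the function name and the `(earlier, later)` pair encoding are hypothetical.

```python
from typing import Iterable, List, Tuple


def violated_precedences(sequence: List[int],
                         must_precede: Iterable[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """Return the (earlier, later) pairs whose required order is broken."""
    position = {part: index for index, part in enumerate(sequence)}
    return [(a, b) for a, b in must_precede if position[a] > position[b]]


# Part 4 cannot be assembled after part 7 (bench vise);
# part 5 cannot be assembled after part 6 (transmission assembly).
bench_rules = [(4, 7)]
transmission_rules = [(5, 6)]

bench_valid = violated_precedences([1, 2, 3, 4, 5, 6, 7], bench_rules)
bench_invalid = violated_precedences([1, 2, 3, 7, 4, 5, 6], bench_rules)
trans_valid = violated_precedences([1, 2, 3, 4, 5, 6, 7, 8, 10, 9, 11], transmission_rules)
trans_invalid = violated_precedences([1, 2, 3, 4, 6, 5, 7, 8, 10, 9, 11], transmission_rules)
```

An empty list means that no stated restriction is broken; the two invalid sequences each return the single pair that the geometric test would be expected to flag.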

The qualified assembly sequence is then transferred to an augmented reality (AR) engine, and the sequence of assembly operations is visualized in augmented reality using devices such as the Microsoft HoloLens 2 for step-by-step guidance, as shown in Figure 13.

Figure 13.

Assembly sequence steps visualized in HoloLens 2.

Sequences that fail the assembly sequence validation can be modified by changing the order of the assembly operations or by changing the direction vectors.

6. Conclusions

Employing an assembly sequence validation algorithm to generate user-friendly animations for product assembly provides several benefits. It saves a significant amount of time and effort when creating assembly guides, particularly in industries where the product assembly process is complex or involves numerous steps. The animations can be customized to the specific needs of the user and provide a more personalized and effective approach to product assembly guidance. This paper proposes a framework for automated assembly sequence validation and simulation for augmented reality (AR) to provide visual guides for an assembly. The proposed framework is validated for its feasibility using multiple case studies. This approach can significantly reduce the time required for manual verification during assembly operations. Furthermore, a knowledge-based approach for geometric feasibility testing is proposed that takes the geometrical data of the CAD components and converts the dynamic interference problem into a static interference problem by extruding the swept volume of a component and then checking it for interference. The framework is implemented in Blender and is tested for correctness. The output of this framework is used in AR-based assembly simulations, which provide greater visual appeal for users assembling a product in the workshop. Furthermore, real-time object identification and tracking mechanisms can be integrated for mapping purposes, which would ease the process of assembly guidance and inspection. The future scope of this research could be overlaying the virtual model on a physical model in order to enhance the user experience. This involves training deep learning models to detect the parts in three-dimensional space.

Author Contributions

Methodology, B.P.; Validation, M.V.A.R.B. and B.P.; Formal analysis, M.V.A.R.B.; Investigation, M.V.A.R.B. and B.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data were created as part of this research.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kalpakjian, S.; Schmid, S.R. Manufacturing Engineering. In Technology; Prentice Hall: London, UK, 2009; pp. 568–571.

- Bahubalendruni, M.R.; Biswal, B.B. A review on assembly sequence generation and its automation. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2016, 230, 824–838.

- Deepak, B.B.V.L.; Bala Murali, G.; Bahubalendruni, M.R.; Biswal, B.B. Assembly sequence planning using soft computing methods: A review. Proc. Inst. Mech. Eng. Part E J. Process Mech. Eng. 2019, 233, 653–683.

- Whitney, D.E. Mechanical Assemblies: Their Design, Manufacture, and Role in Product Development; Oxford University Press: New York, NY, USA, 2004; Volume 1.

- Champatiray, C.; Bahubalendruni, M.R.; Mahapatra, R.N.; Mishra, D. Optimal robotic assembly sequence planning with tool integrated assembly interference matrix. AI EDAM 2023, 37, e4.

- Bahubalendruni, M.R.; Biswal, B.B.; Kumar, M.; Nayak, R. Influence of assembly predicate consideration on optimal assembly sequence generation. Assem. Autom. 2015, 35, 309–316.

- Deshpande, A.; Kim, I. The effects of augmented reality on improving spatial problem solving for object assembly. Adv. Eng. Inform. 2018, 38, 760–775.

- Eswaran, M.; Gulivindala, A.K.; Inkulu, A.K.; Raju Bahubalendruni, M.V.A. Augmented reality-based guidance in product assembly and maintenance/repair perspective: A state of the art review on challenges and opportunities. Expert Syst. Appl. Int. J. 2023, 213, 1–18.

- Dong, J.; Xia, Z.; Zhao, Q. Augmented Reality Assisted Assembly Training Oriented Dynamic Gesture Recognition and Prediction. Appl. Sci. 2021, 11, 9789.

- Eswaran, M.; Bahubalendruni, M.R. Challenges and opportunities on AR/VR technologies for manufacturing systems in the context of industry 4.0: A state of the art review. J. Manuf. Syst. 2022, 65, 260–278.

- Ong, S.; Pang, Y.; Nee, A.Y.C. Augmented reality aided assembly design and planning. CIRP Ann. 2007, 56, 49–52.

- Bahubalendruni, M.R.; Gulivindala, A.K.; Varupala, S.P.; Palavalasa, D.K. Optimal assembly sequence generation through computational approach. Sādhanā 2019, 44, 174.

- Raju Bahubalendruni, M.V.A.; Biswal, B.B. Liaison concatenation—A method to obtain feasible assembly sequences from 3D-CAD product. Sādhanā 2016, 41, 67–74.

- Suszyński, M.; Peta, K.; Černohlávek, V.; Svoboda, M. Mechanical Assembly Sequence Determination Using Artificial Neural Networks Based on Selected DFA Rating Factors. Symmetry 2022, 14, 1013.

- Bahubalendruni, M.R.; Deepak, B.B.V.L.; Biswal, B.B. An advanced immune based strategy to obtain an optimal feasible assembly sequence. Assem. Autom. 2016, 36, 127–137.

- Gunji, A.B.; Deepak, B.B.B.V.L.; Bahubalendruni, C.R.; Biswal, D.B.B. An optimal robotic assembly sequence planning by assembly subsets detection method using teaching learning-based optimization algorithm. IEEE Trans. Autom. Sci. Eng. 2018, 15, 1369–1385.

- Gulivindala, A.K.; Bahubalendruni, M.V.A.R.; Chandrasekar, R.; Ahmed, E.; Abidi, M.H.; Al-Ahmari, A. Automated disassembly sequence prediction for industry 4.0 using enhanced genetic algorithm. Comput. Mater. Contin. 2021, 69, 2531–2548.

- Shi, X.; Tian, X.; Gu, J.; Yang, F.; Ma, L.; Chen, Y.; Su, T. Knowledge Graph-Based Assembly Resource Knowledge Reuse towards Complex Product Assembly Process. Sustainability 2022, 14, 15541.

- Murali, G.B.; Deepak, B.B.V.L.; Raju, M.V.A.; Biswal, B.B. Optimal robotic assembly sequence planning using stability graph through stable assembly subset identification. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2019, 233, 5410–5430.

- Seow, K.T.; Devanathan, R. Temporal logic programming for assembly sequence planning. Artif. Intell. Eng. 1993, 8, 253–263.

- Bahubalendruni, M.R.; Biswal, B.B. A novel concatenation method for generating optimal robotic assembly sequences. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2017, 231, 1966–1977.

- Dong, T.; Tong, R.; Zhang, L.; Dong, J. A knowledge-based approach to assembly sequence planning. Int. J. Adv. Manuf. Technol. 2007, 32, 1232–1244.

- Rashid, M.F.F.; Hutabarat, W.; Tiwari, A. A review on assembly sequence planning and assembly line balancing optimisation using soft computing approaches. Int. J. Adv. Manuf. Technol. 2012, 59, 335–349.

- Anil Kumar, G.; Bahubalendruni, M.R.; Prasad, V.S.S.; Sankaranarayanasamy, K. A multi-layered disassembly sequence planning method to support decision-making in de-manufacturing. Sādhanā 2021, 46, 102.

- Kumar, G.A.; Bahubalendruni, M.R.; Prasad, V.V.; Ashok, D.; Sankaranarayanasamy, K. A novel Geometric feasibility method to perform assembly sequence planning through oblique orientations. Eng. Sci. Technol. Int. J. 2022, 26, 100994.

- Prasad, V.V.; Hymavathi, M.; Rao, C.S.P.; Bahubalendruni, M.A.R. A novel computative strategic planning projections algorithm (CSPPA) to generate oblique directional interference matrix for different applications in computer-aided design. Comput. Ind. 2022, 141, 103703.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).