ChatGPT and the Future of Digital Health: A Study on Healthcare Workers’ Perceptions and Expectations

Abstract

1. Introduction

2. Methods

2.1. Study Design

2.2. Sampling Strategy and Participants Recruitment

2.3. Sample Size

2.4. Ethical Considerations

2.5. Statistical Analysis

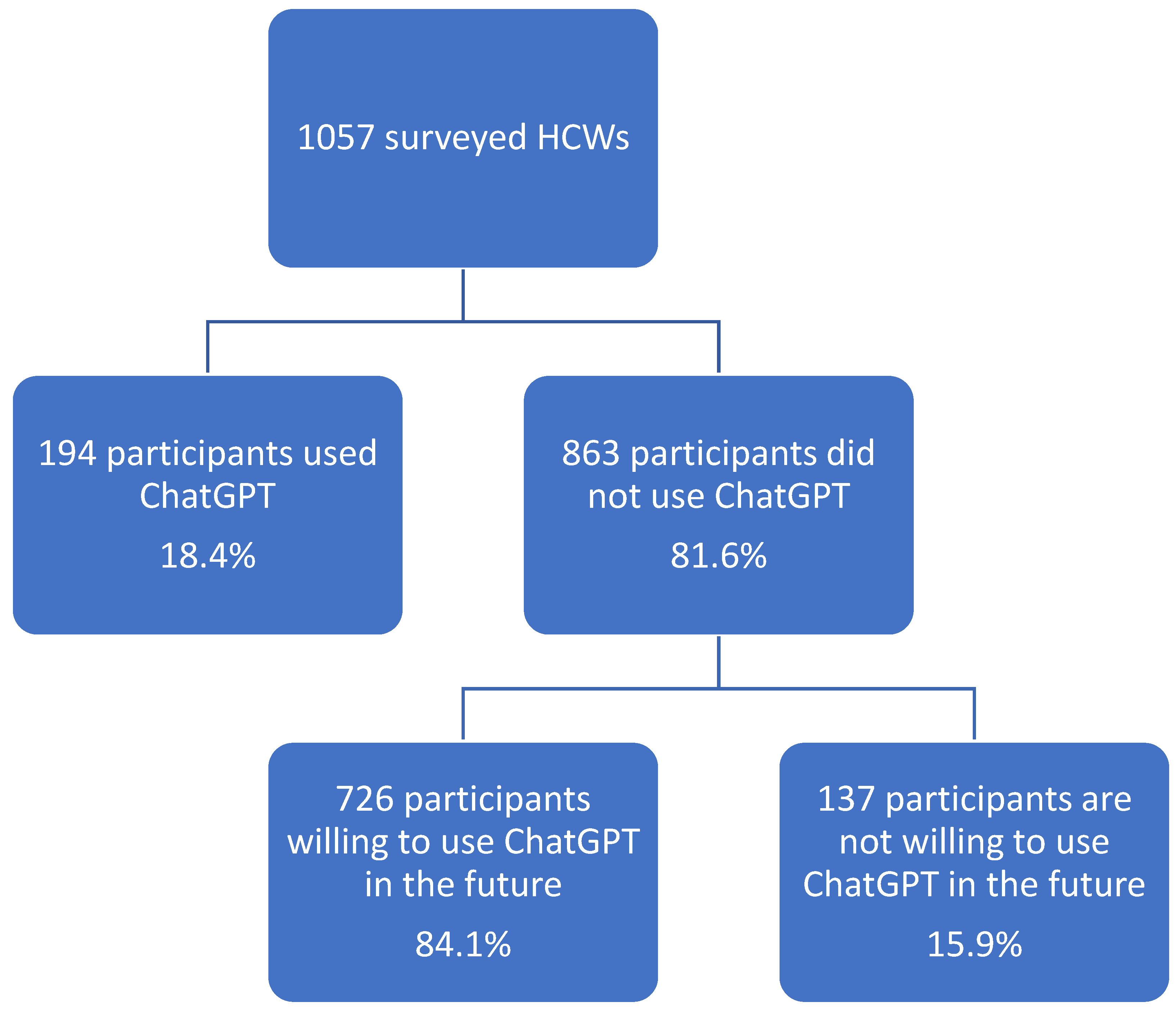

3. Results

4. Discussion

4.1. Principal Results

4.2. Perceived Usefulness of ChatGPT

4.3. Trust and Credibility of ChatGPT

4.4. Obstacles and Concerns about ChatGPT

4.5. Medicolegal Implications of ChatGPT

4.6. Limitations

4.7. Comparison with Prior Work

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CGA | ChatGPT Improved Accuracy |
| ChatGPT | Chat Generative Pre-trained Transformer |
| HCWs | Healthcare workers |
| USMLE | United States Medical Licensing Examination |


| Characteristic | Frequency | Percentage (%) |
|---|---|---|
| Sex | | |
| Female | 450 | 42.6 |
| Male | 607 | 57.4 |
| Age group | | |
| 18–24 years | 302 | 28.6 |
| 25–34 years | 418 | 39.5 |
| 35–44 years | 170 | 16.1 |
| 45–54 years | 105 | 9.9 |
| 55–64 years | 62 | 5.9 |
| Clinical role | | |
| Physician | 516 | 48.8 |
| Medical interns and students | 332 | 31.4 |
| Nurse | 139 | 13.2 |
| Technicians, therapists, and pharmacists | 70 | 6.6 |
| Healthcare experience | | |
| <5 years | 556 | 52.6 |
| 5–10 years | 187 | 17.7 |
| 10–20 years | 178 | 16.8 |
| >20 years | 136 | 12.9 |
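Each single-choice block in the table above sums to the same total (450 + 607 = 1,057 for sex; the age, clinical role, and experience blocks also sum to 1,057), so each percentage appears to be the frequency divided by 1,057. The short Python sketch below reproduces that arithmetic under that assumption; the helper `percentages` is a hypothetical name introduced only for illustration.

```python
# Sketch: recompute the table's percentages from the reported frequencies.
# Assumption: the denominator is the total number of respondents, taken
# here as the sum of the Sex rows (450 + 607 = 1057).


def percentages(counts: dict[str, int], total: int) -> dict[str, float]:
    """Return each count as a percentage of `total`, rounded to one decimal."""
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


sex = {"Female": 450, "Male": 607}
age_group = {
    "18–24 years": 302,
    "25–34 years": 418,
    "35–44 years": 170,
    "45–54 years": 105,
    "55–64 years": 62,
}
N = sum(sex.values())  # total respondents implied by the Sex block

# Every single-choice block should sum to the same N.
assert sum(age_group.values()) == N

print(N)                          # 1057
print(percentages(sex, N))        # {'Female': 42.6, 'Male': 57.4}
print(percentages(age_group, N))
```

The same check applied to the clinical role and experience blocks also returns the published one-decimal percentages.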

| Item | Frequency | Percentage (%) |
|---|---|---|
| Participants’ computer skills/expertise | | |
| Not so familiar | 78 | 7.4 |
| Familiar to some degree | 468 | 44.3 |
| Very familiar | 511 | 48.3 |
| How familiar are you with the term “ChatGPT”? | | |
| Not so familiar | 538 | 50.9 |
| Familiar to some degree | 359 | 34.0 |
| Very familiar | 160 | 15.1 |
| How comfortable would you be using ChatGPT in your healthcare practice? | | |
| Not comfortable at all | 263 | 24.9 |
| Comfortable to some extent | 654 | 61.9 |
| Very comfortable | 140 | 13.2 |
| Did this survey raise your interest in reading about ChatGPT and other AI models? | | |
| No | 192 | 18.2 |
| Yes | 865 | 81.8 |
| Perceived usefulness of ChatGPT in healthcare practice (multiple selections allowed) | | |
| Providing medical decisions | 418 | 39.5 |
| Providing support to patients and families | 473 | 44.7 |
| Providing an appraisal of medical literature | 513 | 48.5 |
| Medical research aid (e.g., drafting manuscripts) | 697 | 65.9 |

| Obstacle or concern (multiple selections allowed) | Frequency | Percentage (%) |
|---|---|---|
| Lack of credibility/unknown source of information or data in the AI model | 496 | 46.9 |
| Worry about harmful or wrong medical decision recommendations | 425 | 40.2 |
| Not available in my setting | 403 | 38.1 |
| AI chatbots are not yet well developed | 397 | 37.6 |
| Medicolegal implications of using AI for patients’ care | 326 | 30.8 |
| I do not know which AI model can be used in healthcare | 311 | 29.4 |
| Unfamiliarity with using AI chatbots | 296 | 28.0 |
| Worry about patient confidentiality | 273 | 25.8 |
| Resistance to adopting AI chatbots in medical decisions | 249 | 23.6 |
| Worry about AI taking over the human role in healthcare practice | 218 | 20.6 |
| Others (lack of personalized care and inability to adapt to prognostic factors) | 43 | 4.1 |
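The obstacle items in the table above were evidently multiple-selection: their frequencies sum to 3,437, far more than the 1,057 respondents, so the percentages add up to well over 100%. A minimal Python sketch of that arithmetic, assuming each percentage uses all 1,057 respondents as the denominator (the item labels are shortened here for readability and are not the survey's wording):

```python
# Sketch: multiple-selection items, where each percentage is computed
# against all respondents (assumed N = 1057), so the column exceeds 100%.
N = 1057

# Shortened labels for the obstacle/concern rows, with reported frequencies.
obstacles = {
    "Lack of credibility/unknown source": 496,
    "Harmful or wrong recommendations": 425,
    "Not available in my setting": 403,
    "AI chatbots not yet well developed": 397,
    "Medicolegal implications": 326,
    "Unsure which AI model can be used": 311,
    "Unfamiliarity with AI chatbots": 296,
    "Patient confidentiality": 273,
    "Resistance to adoption": 249,
    "AI taking over the human role": 218,
    "Others": 43,
}

total_selections = sum(obstacles.values())
pct = {item: round(100 * n / N, 1) for item, n in obstacles.items()}

print(total_selections)              # 3437
print(round(sum(pct.values()), 1))   # 325.1
print(round(total_selections / N, 2))  # 3.25
```

On this reading, respondents selected about 3.25 obstacles each on average, which is why the column cannot be read as a distribution summing to 100%.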

| Variable | Multivariate adjusted odds ratio | 95% CI lower | 95% CI upper | p-Value |
|---|---|---|---|---|
| Sex | 0.772 | 0.536 | 1.112 | 0.164 |
| Age | 0.960 | 0.822 | 1.122 | 0.609 |
| Clinical role | 1.048 | 0.858 | 1.281 | 0.646 |
| Trust * | 0.804 | 0.627 | 1.031 | 0.085 |
| History of ChatGPT use at the time of the survey | 1.902 | 1.226 | 2.950 | 0.004 |
| Familiarity with ChatGPT | 2.023 | 1.508 | 2.714 | <0.001 |
| Medical decisions @ | 1.463 | 0.994 | 2.154 | 0.054 |
| Comfort level Ψ | 2.327 | 1.650 | 3.281 | <0.001 |
| Patients’ outcomes Σ | 7.927 | 5.046 | 12.452 | <0.001 |
| Constant | 0.006 | | | <0.001 |
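The odds ratios, 95% confidence intervals, and p-values in the table above can be cross-checked against one another if one assumes Wald-type intervals computed on the log-odds scale, a common default in logistic regression software. The estimation method is an assumption here, not something the table states, and `wald_check` is a hypothetical helper introduced for illustration.

```python
import math

# Sketch: relate an adjusted odds ratio, its 95% CI, and its p-value,
# ASSUMING a Wald-type interval on the log-odds scale:
#   CI = exp(log(OR) ± 1.96 * SE)
# This is illustrative only; the paper's exact method is not stated here.

Z_975 = 1.959963984540054  # 97.5th percentile of the standard normal


def wald_check(odds_ratio: float, lower: float, upper: float) -> tuple[float, float, float]:
    """Return (CI midpoint on the OR scale, SE of log OR, two-sided p)."""
    log_lo, log_hi = math.log(lower), math.log(upper)
    se = (log_hi - log_lo) / (2 * Z_975)           # CI half-width divided by 1.96
    midpoint_or = math.exp((log_lo + log_hi) / 2)  # should be close to the OR
    z = math.log(odds_ratio) / se                  # Wald z statistic
    p = math.erfc(abs(z) / math.sqrt(2))           # two-sided normal p-value
    return midpoint_or, se, p


# Row: history of ChatGPT use at the time of the survey
mid, se, p = wald_check(1.902, 1.226, 2.950)
print(round(mid, 2), round(se, 3), round(p, 3))
```

For the history-of-ChatGPT-use row this yields a CI midpoint of about 1.90 and a two-sided p of about 0.004, in line with the table; small discrepancies in the third decimal for other rows would be expected from rounding of the published values.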

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Temsah, M.-H.; Aljamaan, F.; Malki, K.H.; Alhasan, K.; Altamimi, I.; Aljarbou, R.; Bazuhair, F.; Alsubaihin, A.; Abdulmajeed, N.; Alshahrani, F.S.; et al. ChatGPT and the Future of Digital Health: A Study on Healthcare Workers’ Perceptions and Expectations. Healthcare 2023, 11, 1812. https://doi.org/10.3390/healthcare11131812


APA Style: Temsah, M.-H., Aljamaan, F., Malki, K. H., Alhasan, K., Altamimi, I., Aljarbou, R., Bazuhair, F., Alsubaihin, A., Abdulmajeed, N., Alshahrani, F. S., Temsah, R., Alshahrani, T., Al-Eyadhy, L., Alkhateeb, S. M., Saddik, B., Halwani, R., Jamal, A., Al-Tawfiq, J. A., & Al-Eyadhy, A. (2023). ChatGPT and the Future of Digital Health: A Study on Healthcare Workers’ Perceptions and Expectations. Healthcare, 11(13), 1812. https://doi.org/10.3390/healthcare11131812