Abstract

In this paper, the adaptive lasso method is used to screen variables, and separate neural network models are built for the seven countries from the selected variables. Gross domestic product (GDP) is modeled as a function of land area, cultivated land, population, enrollment rate, gross capital formation, exports of goods and services, general government final consumption expenditure, and broad money. An empirical analysis of these factors over 1973–2016 shows that, according to multiple summary statistics, the BP neural network model performs better without increasing the number of parameters and predicts short-term GDP better. In addition, the variation and error of the model are small, so it has some reference value.

1. Introduction

This paper builds economic models for the Group of Seven (G7) from 1973–2016 data, using the adaptive lasso method and BP neural network models to describe the evolution of gross domestic product (GDP) as a function of several factors. The quality of the models is assessed, showing the advantage of BP neural network models for this purpose, and their ability to predict the short-term evolution of GDP is also assessed. Possible explanations for this advantage are presented, along with the mechanism behind the BP neural network models. The results show how the weights of the neural network and the number of hidden layers can be tuned to fit the data better, and the models are expected to help forecast GDP growth.

BP neural network models have long been used to develop financial and economic models, but there is still room to improve their accuracy. In recent years, applications of the BP neural network to economic growth have been studied in [1,2,3,4]. The grey prediction model [5] is widely used to forecast economic growth, and experimental results show that the BP neural network model outperforms it. In addition, fractional calculus is widely used to construct economic models because it incorporates memory effects in evolutionary processes, and experimental results show that fractional-order models outperform integer-order models, as in [6,7,8,9,10,11,12,13,14].

Recently, a better way to eliminate redundant variables was proposed in [15], and Ming et al. [9] improved the fractional EGM model in [16], but the error is still difficult to control.

In this paper, we adopt the idea of [15] and the BP neural network economic model of [5] to study GDP growth in the G7 countries. To compare the fit of the BP neural network with that of the fractional-order model, we use the mean absolute deviation (MAD), the coefficient of determination ($R^2$), and the Bayesian information criterion (BIC). Finally, we use the relative error to evaluate the prediction performance of the model.

In summary, this paper models the economic growth of the G7 with a BP neural network model. A case study shows that the BP neural network forecasts GDP with a smaller error.

The G7

The G7 is a forum in which major industrial countries meet and discuss policy. Its members are the United States (USA), the United Kingdom (GBR), Germany (DEU), France (FRA), Japan (JPN), Italy (ITA), and Canada (CAN), with the European Union (EUU) also participating. After the first oil crisis hit the western economies in the early 1970s, six major industrial countries, the United States, the United Kingdom, Germany, France, Japan, and Italy, established the G6 at the initiative of France in November 1975. Canada joined the following year, and the Group of Seven (G7) was born. The addition of Russia in 1997 transformed the G7 into the Group of Eight (G8). On 4 June 2014, the G7 leaders’ meeting was hosted by the European Union in Brussels, Belgium; this was the first time Russia had been excluded since joining the group in 1997. The summits discuss foreign policy, economic, trade, and energy security issues.

Some G7 members have economies of comparable size, while other large economies, such as China and India, are not members. It is therefore worth studying the factors related to changes in GDP in these countries in order to compare the similarities and differences of GDP growth across countries.

We collected data for the G7 countries over the 44 years from 1973 to 2016 and used the eight explanatory variables to build separate BP neural network models that describe the changes in GDP in each country.

2. Model Description

We selected the following eight explanatory variables (see Table 1): land area (LA) (km²), arable land (AL) (hectares), total population (TP) (million), average years of schooling (AYS) (years), gross capital formation (GCF) (dollars), exports of goods and services (EGS) (dollars), general government final consumption expenditure (GGFCE) (dollars), and broad money (BM) (dollars). All data were obtained from the World Bank for 1973–2016.

In order to express this more simply, we have defined the symbols as follows:

Table 1.

Symbol definitions.

| Symbol | $x_1$ | $x_2$ | $x_3$ | $x_4$ | $x_5$ | $x_6$ | $x_7$ | $x_8$ | $y$ | $t$ |
|---|---|---|---|---|---|---|---|---|---|---|
| Variable | LA | AL | TP | AYS | GCF | EGS | GGFCE | BM | GDP | year |

The adaptive lasso method and the BP neural network model are described below.

2.1. Adaptive Lasso Method

Although the lasso method is widely used in high-dimensional data analysis, it has some shortcomings; for example, it does not have the so-called oracle property proposed by Fan and Li [17], which is associated with unbiasedness, sparsity, and continuity. The lasso can be regarded as an improvement on ridge regression: its penalty function is based on the absolute value function, whose derivative is singular at zero, and this singularity allows the coefficients of one or more unimportant variables to be shrunk exactly to zero. However, the lasso also shrinks the coefficients of the important variables, so its estimates do not meet the unbiasedness requirement. Zou [15] therefore proposed the adaptive lasso method, which has the oracle property.
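The bias of the lasso, and how adaptive weights reduce it, can be seen in the textbook special case of an orthonormal design ($X^\top X = I$), where both estimators reduce to soft-thresholding of the OLS estimates $z_j$: the lasso subtracts the same $\lambda$ from every $|z_j|$, while the adaptive lasso subtracts $\lambda/|z_j|^{\gamma}$. The Python sketch below assumes that special case, the objective $\frac{1}{2}\|y - X\beta\|^2 + \lambda\sum_j w_j|\beta_j|$ with $w_j = 1/|z_j|^{\gamma}$, and hypothetical OLS estimates; it is an illustration only, not the computation used in this paper.

```python
import numpy as np

def soft_threshold(z, thresh):
    """Minimizer of 0.5*(b - z)**2 + thresh*|b| over b (applied elementwise)."""
    return np.sign(z) * np.maximum(np.abs(z) - thresh, 0.0)

def lasso_orthonormal(z, lam):
    # Lasso: every coefficient is shrunk by the same amount lam.
    return soft_threshold(z, lam)

def adaptive_lasso_orthonormal(z, lam, gamma=1.0):
    # Adaptive lasso: weight w_j = 1/|z_j|**gamma, so large OLS estimates
    # are shrunk less and small ones are shrunk more.
    w = 1.0 / np.abs(z) ** gamma
    return soft_threshold(z, lam * w)

z = np.array([10.0, 2.0, 0.5])  # hypothetical OLS estimates
lam = 1.0
print(lasso_orthonormal(z, lam))           # values 9, 1, 0
print(adaptive_lasso_orthonormal(z, lam))  # values 9.9, 1.5, 0
```

With $\lambda = 1$, both methods set the smallest estimate to zero, but the large estimate 10 is shrunk to 9 by the lasso and only to 9.9 by the adaptive lasso, which is the bias reduction described above.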

The adaptive lasso uses the ordinary least squares (OLS) coefficient estimates of the full model to set the penalty on each variable. A variable with a large absolute OLS coefficient is likely to belong to the true model, so it receives a small penalty; conversely, a variable with a small absolute coefficient receives a large penalty. Based on this idea, the adaptive lasso estimate is defined as follows:

$$\hat{\beta} = \arg\min_{\beta} \left\{ \frac{1}{2}\left\| y - X\beta \right\|_2^2 + \lambda \sum_{j=1}^{p} \hat{w}_j \left| \beta_j \right| \right\}, \qquad \hat{w}_j = \frac{1}{\left| \hat{\beta}_j^{\mathrm{ols}} \right|^{\gamma}}, \tag{1}$$

where $y$ is the response vector, $X$ is the matrix of the $p$ explanatory variables, $\lambda$ and $\gamma$ are two non-negative tuning parameters, and $\hat{\beta}_j^{\mathrm{ols}}$ is the initial least squares estimate of the $j$th coefficient. Therefore, the lasso algorithm can be used directly to compute the adaptive lasso, and because the weights $\hat{w}_j$ are introduced, the lasso's shrinkage of the non-zero coefficients is weakened, which reduces the bias and yields the oracle property in the asymptotic sense. We note that the adaptive lasso is a convex optimization problem, so there is no need to worry about multiple local minima, and it can be solved quickly with existing algorithms. For the selection of the tuning parameters in the adaptive lasso, see Shojaie and Michailidis [18]. In addition, Fan and Li [17] proposed the LQA (local quadratic approximation) algorithm, which can also be used to compute the adaptive lasso; each of these algorithms has advantages and disadvantages.

The R package used in this paper is based on the LQA algorithm, which is briefly introduced as follows. Let $p_\lambda(|\beta_j|) = \lambda \hat{w}_j |\beta_j|$ denote the penalty on the $j$th coefficient in Equation (1), and let $\beta^{(0)} = (\beta_1^{(0)}, \dots, \beta_p^{(0)})^\top$ be the selected initial solution. When $\beta_j^{(0)} \neq 0$, for $\beta_j$ close to $\beta_j^{(0)}$, a simple calculation gives

$$\frac{\partial p_\lambda(|\beta_j|)}{\partial \beta_j} = \lambda \hat{w}_j \operatorname{sgn}(\beta_j) \approx \frac{\lambda \hat{w}_j}{|\beta_j^{(0)}|}\, \beta_j.$$

Performing a second-order Taylor expansion of the penalty term in Equation (1) and omitting higher-order terms gives the approximation

$$p_\lambda(|\beta_j|) \approx p_\lambda(|\beta_j^{(0)}|) + \frac{1}{2}\,\frac{\lambda \hat{w}_j}{|\beta_j^{(0)}|}\left(\beta_j^2 - \left(\beta_j^{(0)}\right)^2\right).$$

Then, the Newton-Raphson iterative method is used to compute the estimate, as follows:

- Compute the lasso solution with the LARS algorithm and use it as the initial solution $\beta^{(0)}$;

- For $k = 0, 1, 2, \dots$, let $\beta^{(k+1)} = \left(X^\top X + \Sigma_\lambda(\beta^{(k)})\right)^{-1} X^\top y$, where $\Sigma_\lambda(\beta^{(k)}) = \operatorname{diag}\left\{\lambda \hat{w}_1/|\beta_1^{(k)}|, \dots, \lambda \hat{w}_p/|\beta_p^{(k)}|\right\}$;

- For a sufficiently small positive number $\varepsilon$, the algorithm stops when $\|\beta^{(k+1)} - \beta^{(k)}\| < \varepsilon$.

The method is relatively fast and stable, but its drawback is that once a regression coefficient becomes 0 during the iteration, the corresponding variable is permanently excluded from the model. In addition, the result depends on the choice of the precision $\varepsilon$: different values of $\varepsilon$ may lead to some differences in the sparsity of the model and in the parameter estimates. For more details, see Fan and Li [17].
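The LQA iteration described above can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not the R package used in the paper: the function name `adaptive_lasso_lqa`, the zero threshold `zero_tol`, and the use of the OLS fit (instead of a LARS lasso fit) as the initial solution are all hypothetical choices, and the data are synthetic.

```python
import numpy as np

def adaptive_lasso_lqa(X, y, lam, gamma=1.0, eps=1e-8, zero_tol=1e-4, max_iter=500):
    """Sketch of LQA (Newton-Raphson) iterations for
        0.5 * ||y - X b||^2 + lam * sum_j w_j * |b_j|,  w_j = 1 / |b_ols_j| ** gamma.
    """
    n, p = X.shape
    b_ols = np.linalg.lstsq(X, y, rcond=None)[0]  # full-model least squares fit
    w = 1.0 / np.abs(b_ols) ** gamma               # adaptive weights
    beta = b_ols.copy()                            # initial solution (OLS, not LARS)
    active = np.abs(beta) > zero_tol
    for _ in range(max_iter):
        new = np.zeros(p)
        if not active.any():
            return new
        Xa = X[:, active]
        # Sigma_lambda(beta^(k)) = diag(lam * w_j / |beta_j^(k)|) on the active set
        sigma = np.diag(lam * w[active] / np.abs(beta[active]))
        new[active] = np.linalg.solve(Xa.T @ Xa + sigma, Xa.T @ y)
        # Coefficients that reach (numerical) zero are dropped permanently,
        # which is the drawback of LQA noted above.
        active &= np.abs(new) > zero_tol
        new[~active] = 0.0
        if np.linalg.norm(new - beta) < eps:  # stopping rule with precision eps
            return new
        beta = new
    return beta

# Usage on synthetic data (hypothetical, not the paper's World Bank data)
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 4))
true_beta = np.array([3.0, 0.0, -2.0, 0.0])
y = X @ true_beta + 0.1 * rng.normal(size=100)
print(adaptive_lasso_lqa(X, y, lam=1.0))
```

Coefficients with small OLS estimates receive large weights and are driven to zero, while the two truly non-zero coefficients remain close to 3 and -2.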

2.2. BP Neural Network

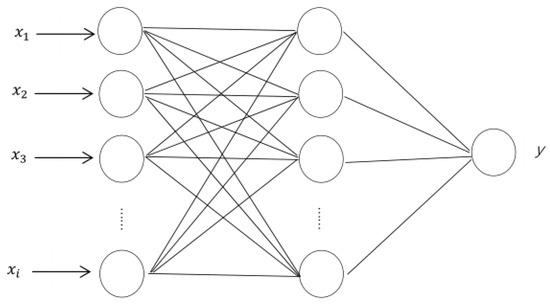

The BP (error back-propagation) neural network consists of an input layer, one or more hidden layers, and an output layer. Each layer is composed of neurons: neurons in adjacent layers are fully connected, while neurons within the same layer are not connected. The n input signals enter the network through the input layer, are transformed by the activation (excitation) function on reaching the hidden layer, and are then passed to the output layer, where the activation function produces m output signals [5].

Figure 1.

Neural network diagram.

In the above formula, is the parameter of the model, m is the number of nodes of the input layer, and n is the number of nodes of the hidden layer.
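For a network with a single hidden layer, the forward pass can be sketched as follows, with $m$ input nodes and $n$ hidden nodes as stated above. The logistic sigmoid as the activation function, the function name `bp_forward`, and the layer sizes in the usage lines are assumptions made for illustration; the actual network parameters are those in Table 2.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation (assumed; the text only says 'excitation function')."""
    return 1.0 / (1.0 + np.exp(-z))

def bp_forward(x, W1, b1, W2, b2):
    """Forward pass of a BP network with one hidden layer.

    x  : (m,)   input signals
    W1 : (n, m) input-to-hidden weights,  b1 : (n,) hidden-layer thresholds
    W2 : (k, n) hidden-to-output weights, b2 : (k,) output-layer thresholds
    """
    h = sigmoid(W1 @ x + b1)     # states of the n hidden neurons
    return sigmoid(W2 @ h + b2)  # k output signals

# Usage with hypothetical sizes: 8 inputs (the 8 variables), 5 hidden nodes, 1 output (GDP)
rng = np.random.default_rng(1)
m, n = 8, 5
W1, b1 = rng.uniform(-1, 1, (n, m)), rng.uniform(-1, 1, n)
W2, b2 = rng.uniform(-1, 1, (1, n)), rng.uniform(-1, 1, 1)
print(bp_forward(rng.uniform(0, 1, m), W1, b1, W2, b2))  # one value in (0, 1)
```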

Algorithm steps of the BP neural network:

- Step 1: The sample input and output parameters are normalized to the interval ;

- Step 2: Initialize the weights and thresholds by assigning random values in ;

- Step 3: Calculate the state of the hidden layer of the network and the output value of the output layer;

- Step 4: Calculate the error between the output value and the actual value;

- Step 5: Determine whether the sample error is within the acceptable range. If it is, training ends; if not, update the weights and thresholds and return to Step 3, repeating until the error is within the acceptable range.
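Steps 1 to 5 can be sketched as a batch gradient-descent training loop in Python with NumPy. The sketch assumes min-max normalization to [0, 1], initial weights and thresholds drawn from [-1, 1], a logistic sigmoid in both layers, the mean squared error as the training error, and hypothetical values for the hidden-layer size, learning rate, and error tolerance; these are placeholders, not the settings in Table 2.

```python
import numpy as np

def minmax(a):
    """Step 1: scale each column to [0, 1]; also return the bounds to undo it."""
    lo, hi = a.min(axis=0), a.max(axis=0)
    return (a - lo) / (hi - lo), lo, hi

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_bp(X, y, n_hidden=5, lr=0.5, tol=1e-3, max_epochs=5000, seed=0):
    """Batch back-propagation for one hidden layer.
    X: (N, m) normalized inputs; y: (N, 1) normalized targets."""
    rng = np.random.default_rng(seed)
    N, m = X.shape
    # Step 2: random initial weights and thresholds
    W1, b1 = rng.uniform(-1, 1, (m, n_hidden)), rng.uniform(-1, 1, n_hidden)
    W2, b2 = rng.uniform(-1, 1, (n_hidden, 1)), rng.uniform(-1, 1, 1)
    for epoch in range(max_epochs):
        # Step 3: hidden-layer states and output-layer values
        H = sigmoid(X @ W1 + b1)
        out = sigmoid(H @ W2 + b2)
        # Step 4: error between output and actual values
        err = out - y
        mse = np.mean(err ** 2)
        # Step 5: stop if the error is acceptable ...
        if mse < tol:
            break
        # ... otherwise back-propagate the error and update weights/thresholds
        d_out = err * out * (1 - out)         # output-layer error signal
        d_hid = (d_out @ W2.T) * H * (1 - H)  # hidden-layer error signal
        W2 -= lr * H.T @ d_out / N
        b2 -= lr * d_out.mean(axis=0)
        W1 -= lr * X.T @ d_hid / N
        b1 -= lr * d_hid.mean(axis=0)
    return (W1, b1, W2, b2), mse, epoch

# Usage on hypothetical synthetic data (not the World Bank series)
rng = np.random.default_rng(42)
raw_X = rng.uniform(0, 100, size=(40, 2))
raw_y = 3 * raw_X[:, :1] + 5 * raw_X[:, 1:] + 10
Xn, _, _ = minmax(raw_X)
yn, y_lo, y_hi = minmax(raw_y)
params, mse, epochs = train_bp(Xn, yn)
print(f"training MSE {mse:.4f}, stopped at epoch {epochs}")
```

Network outputs are on the normalized scale; they can be mapped back to GDP units with `y_lo + pred * (y_hi - y_lo)`.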

The BP neural network parameters are given in Table 2; the learning rate of the network is .

Table 2.

Network parameters.

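To measure the performance and predictive ability of the BP neural network models, we used 70% of the data as the training sample and the remaining 30% as the test sample. In addition, the mean absolute deviation (MAD) and the coefficient of determination ($R^2$) were used to evaluate the model, and the absolute error was used to describe its prediction performance. For observed values $y_i$ and fitted values $\hat{y}_i$, $i = 1, \dots, N$, with $\bar{y}$ the mean of the observed values, the MAD, $R^2$, and the absolute error are defined as

$$\mathrm{MAD} = \frac{1}{N}\sum_{i=1}^{N} \left| y_i - \hat{y}_i \right|,$$

$$R^2 = 1 - \frac{\sum_{i=1}^{N} \left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{N} \left(y_i - \bar{y}\right)^2},$$

and

$$e_i = \left| y_i - \hat{y}_i \right|.$$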

We usually use the BIC to evaluate the quality of a model. The smaller the BIC value, the better the model.
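The evaluation measures above, together with the relative error mentioned in the Introduction and a BIC, can be computed as in the following Python sketch. The BIC formula is an assumption, since the paper does not state which form it uses: we take the common Gaussian-error form $N\ln(\mathrm{SSE}/N) + k\ln N$, where $k$ is the number of fitted parameters. The chronological 70/30 split (earliest years for training) and all function names are likewise assumptions.

```python
import numpy as np

def mad(y, y_hat):
    """Mean absolute deviation between observed and fitted values."""
    return np.mean(np.abs(y - y_hat))

def r_squared(y, y_hat):
    """Coefficient of determination R^2."""
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - np.mean(y)) ** 2)
    return 1.0 - ss_res / ss_tot

def abs_error(y, y_hat):
    return np.abs(y - y_hat)

def rel_error(y, y_hat):
    return np.abs(y - y_hat) / np.abs(y)

def bic(y, y_hat, k):
    """Gaussian-error BIC (assumed form): N*ln(SSE/N) + k*ln(N)."""
    n = len(y)
    sse = np.sum((y - y_hat) ** 2)
    return n * np.log(sse / n) + k * np.log(n)

def chrono_split(n_years, train_frac=0.7):
    """Indices for a time-ordered split: earliest 70% train, rest test (assumption)."""
    cut = int(round(train_frac * n_years))
    return np.arange(cut), np.arange(cut, n_years)

# Usage with hypothetical numbers
y = np.array([1.0, 2.0, 3.0, 4.0])
y_hat = np.array([1.0, 2.0, 3.0, 5.0])
print(mad(y, y_hat), r_squared(y, y_hat))  # MAD 0.25, R^2 0.8
train_idx, test_idx = chrono_split(44)      # 44 years, 1973-2016
print(len(train_idx), len(test_idx))        # 31 training years, 13 test years
```

Because the penalty term $k\ln N$ grows with the number of parameters, a network with more weights must reduce the SSE enough to compensate before it obtains a smaller (better) BIC.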

3. Main Results

3.1. Variable Selection

We used the adaptive lasso method to select variables (see Table 3).

Table 3.

Importance of the eight variables for Model (1) for each country.

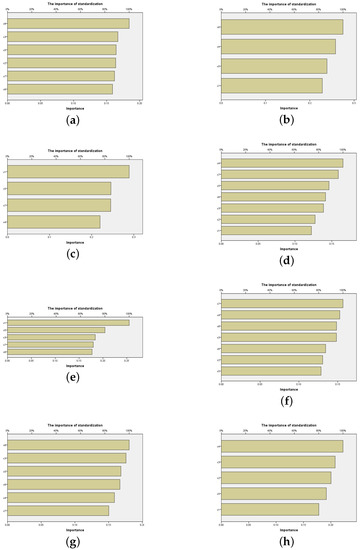

3.2. The Importance of Input Variables

Training the BP neural network also reveals which inputs have the greatest influence on the output. Table 4 and Figure 2 show the most and least influential variables in the training process.
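The paper does not state how the importance of the input variables was computed. One widely used option for a network with a single hidden layer is Garson's connection-weight algorithm, sketched below in one common formulation: each hidden node's absolute output weight is distributed over the inputs in proportion to their absolute weights into that node. Treat it as an illustrative alternative, not the method behind Table 4 and Figure 2; the function name `garson_importance` is hypothetical.

```python
import numpy as np

def garson_importance(W1, w2):
    """Relative importance of each input (one common form of Garson's algorithm).

    W1 : (m, n) input-to-hidden weights (m inputs, n hidden nodes)
    w2 : (n,)   hidden-to-output weights for a single output
    Returns an (m,) array of non-negative shares that sum to 1.
    """
    A = np.abs(W1)
    v = np.abs(np.ravel(w2))
    # Share of hidden node j's incoming weight that comes from input i,
    # scaled by how strongly node j feeds the output.
    contrib = A / A.sum(axis=0, keepdims=True) * v
    s = contrib.sum(axis=1)
    return s / s.sum()

# Usage with a hypothetical 2-input, 2-hidden-node network
W1 = np.array([[1.0, 0.0],
               [0.0, 1.0]])
w2 = np.array([3.0, 1.0])
print(garson_importance(W1, w2))  # input 1 gets 0.75, input 2 gets 0.25
```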

Table 4.

The importance of input variables.

Figure 2.

The importance of input variables of the BP neural network model for the G7 countries: (a) Canada (b) France (c) Germany (d) Italy (e) Japan (f) the United Kingdom (g) the United States (h) European Union.

Then, we used the selected variables to build the neural network model of each country in turn and calculated the MAD, $R^2$, and BIC values on the training sample (see Table 5).

Table 5.

MAD, $R^2$, and BIC values of the neural network models.

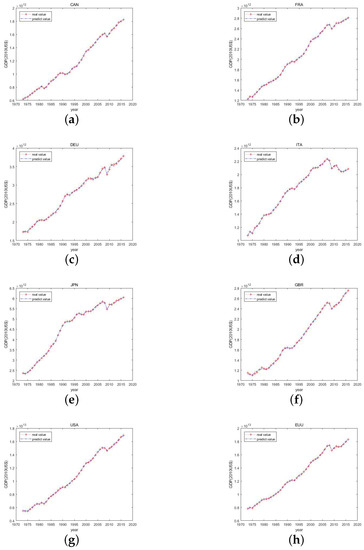

3.3. Fitting Result

Figure 3 shows the fitting results of the BP neural network, obtained with R (version 3.6.1).

Figure 3.

Fitting results for the BP neural network model for the G7 countries: (a) Canada (b) France (c) Germany (d) Italy (e) Japan (f) the United Kingdom (g) the United States (h) European Union.

3.4. Predicted Result

Finally, Table 6 presents the neural network model forecasts of GDP for the G7 countries for 2012–2016, together with the corresponding index values.

Table 6.

Neural network model for G7 countries, GDP data from 2012–2016.

All the figures and tables are the results of our research.

4. Conclusions

Unlike the approach in [8], we used a BP neural network economic model to study GDP growth in the G7 countries. Comparing the fitting results shows that the BP neural network model is more efficient than the fractional-order model in [8]. In addition, the model identifies the variables with the greatest and the smallest impact on the GDP of each country, and we found that the input-layer variables of the neural network model have different impacts on the output layer. To further illustrate the forecasting performance of the BP neural network model, we presented GDP forecasts for the G7 countries for 2012–2016 and compared them with the actual values. The BP neural network model not only fits the GDP growth of the G7 countries better but also predicts it better. Finally, because the BP neural network suffers from slow convergence and local extrema, we intend to extend this study by using a hybrid genetic algorithm based on multi-encoding and gradient descent to optimize the structure and parameters of BP (back-propagation) neural networks.

Author Contributions

The contributions of all authors (X.W., J.W., and M.F.) are equal. All the main results were developed together. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (11661016), by the Training Object of High Level and Innovative Talents of Guizhou Province ((2016)4006), by the Major Research Project of Innovative Group in Guizhou Education Department ([2018]012), and by the Slovak Research and Development Agency under the contract No. APVV-18-0308 and by the Slovak Grant Agency VEGA No. 2/0153/16 and No. 1/0078/17.

Acknowledgments

The authors thank the referees for their careful reading of the article and insightful comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sadeghi, B. A BP-neural network predictor model for plastic injection molding process. J. Mater. Process. Technol. 2000, 103, 411–416.

- Xiao, Z.; Ye, S.; Zhong, B.; Sun, C. BP neural network with rough set for short term load forecasting. Appl. Artif. Intell. Rev. 2009, 36, 273–279.

- Yu, S.; Zhu, K.; Diao, F. A dynamic all parameters adaptive BP neural networks model and its application on oil reservoir prediction. Appl. Math. Comput. 2008, 195, 66–75.

- Guo, Z.; Wu, J.; Lu, H.; Wang, J. A case study on a hybrid wind speed forecasting method using BP neural network. Knowl.-Based Syst. 2011, 24, 1048–1056.

- Feng, L.; Zhang, J. Application of artificial neural networks in tendency forecasting of economic growth. Am. Econ. J.-Econ. Polic. 2014, 40, 76–80.

- Wang, H.; Leng, C. A note on adaptive group lasso. Comput. Stat. Data Anal. 2008, 52, 5277–5286.

- Ren, Y.; Zhang, X. Model Selection for Vector Autoregressive Processes via Adaptive Lasso. Commun. Stat.-Theory Methods 2013, 42, 2423–2436.

- Tejado, I.; Pérez, E.; Valério, D. Fractional calculus in economic growth modelling of the group of seven. Fract. Calc. Appl. Anal. 2019, 22, 139–157.

- Ming, H.; Wang, J.; Fečkan, M. The application of fractional calculus in Chinese economic growth models. Mathematics 2019, 7, 665.

- Gerardo-Giorda, L.; Germano, G.; Scalas, E. Large-scale simulations of synthetic markets. Commun. Appl. Ind. Math. 2014, 6, 535–842.

- Tarasov, V.E.; Tarasova, V.V. Dynamic Keynesian model of economic growth with memory and lag. Mathematics 2019, 7, 178.

- Tarasova, V.V.; Tarasov, V.E. Elasticity for economic processes with memory: Fractional differential calculus approach. Fract. Differ. Calc. 2016, 6, 219–232.

- Luo, D.; Wang, J.; Fečkan, M. Applying Fractional calculus to analyze economic growth modelling. J. Appl. Math. Stat. Inform. 2018, 14, 25–36.

- Tarasov, V.E.; Tarasova, V.V. Macroeconomic models with long dynamic memory: Fractional calculus approach. Appl. Math. Comput. 2018, 338, 466–486.

- Zou, H. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 2006, 101, 1418–1429.

- Fulger, D.; Scalas, E.; Germano, G. Monte Carlo simulation of uncoupled continuous-time random walks yielding a stochastic solution of the space-time fractional diffusion equation. Phys. Rev. E 2008, 77, 021122.

- Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

- Shojaie, A.; Michailidis, G. Penalized likelihood methods for estimation of sparse high-dimensional directed acyclic graphs. Biometrika 2010, 97, 519–538.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).