Abstract

This work presents EDICA, a hybrid two-stage architecture for object detection and fine-grained image classification. The model employs YOLOv8 for the detection stage and an ensemble of deep learning classifiers, combined through a majority voting strategy, for the fine-grained classification stage. The proposed model aims to improve classification precision by integrating classification models trained on the same classes, thereby exploiting the strengths of each model across a range of test instances. The experiments involved a diverse set of classes, including dogs, cats, birds, fruits, frogs, and foliage; each class is divided into subclasses for finer-grained classification, such as specific dog and cat breeds, bird species, and fruit types. The experimental results show that the hybrid model outperforms classification approaches that use a single model, demonstrating greater robustness on ambiguous, complex images and in uncontrolled environments.

MSC: 68T45

1. Introduction

The automatic detection and classification of objects in images have become highly relevant issues in various fields, including surveillance [1,2], medicine [3,4,5], agriculture [6,7], and industry [8,9,10]. Deep learning-based models, particularly YOLO (You Only Look Once)-type architectures, have distinguished themselves since their first version [11] by their capacity to perform real-time object detection with high accuracy and speed.

In recent years, the YOLO family of models has undergone significant evolution, expanding its capabilities beyond object detection to tasks such as image classification [11,12,13,14]. This evolution has produced a range of models that address multiple challenges in computer vision, from accurate real-time object localization to the identification of specific classes in cropped images. Recent versions include YOLOv5 [15], YOLOv6 [16], YOLOv7 [17], YOLOv8 [18], YOLOv9 [19], YOLOv10 [20], YOLO11 [21], and the latest version, YOLO12 [12]. Since the initial release in 2015, these versions have brought improvements in accuracy, inference speed, and modular structure, together with support for classification, segmentation, and pose estimation tasks. These enhancements have made the models versatile and accessible for a wide range of applications. Despite this progress, accurately classifying visually similar subclasses (such as specific breeds or species) remains a significant challenge in computer vision. To address this challenge, the present work proposes a hybrid architecture, EDICA (Ensemble Deep Image Classification Architecture), which integrates a detection stage and a classification stage, the latter applying a set of classifiers to fine-grained image classification. EDICA evaluates the performance of the classifiers both individually and jointly.

The objective of EDICA is to enhance object classification in uncontrolled environments by using ensemble models that capitalize on the strengths of their members in categorizing various types of objects. Compared with similar research, the architecture makes several contributions. First, EDICA implements two stages: (1) a detection stage that identifies the object, or main class, using YOLOv8 and (2) a classification stage that implements ensemble models, which combine recent YOLO architectures that have performed well with the types of images used in this study. Second, EDICA can detect and classify objects in images that contain multiple objects of different classes. Finally, EDICA implements a majority voting strategy that allows the identification of the ensemble models that perform best for a given type of object.

This paper is organized as follows: Section 1 introduces the work. Section 2 presents the work related to this research. Section 3 describes the materials and methods, where the proposed architecture is presented, and the metrics used are explained. Section 4 presents the results obtained from the experimental process, evaluating the model using various instances. Finally, Section 5 presents the conclusions.

2. Related Work

Object recognition has been a subject of extensive study in the field of computer vision, with numerous architectures developed to enhance the detection and classification of visual elements. A notable example of combining accuracy and speed through deep convolutional networks is the SSD model (Single Shot MultiBox Detector), proposed by Liu et al. [22]. Another related model is Faster R-CNN, proposed by Ren et al. [23]. Moreover, comprehensive reviews, such as that by Zhao et al. [24], have underscored the continuous balance between accuracy, inference speed, and architectural complexity that has characterized the evolution of object detectors. These works provide a comprehensive framework for understanding the advances and limitations of these models.

The YOLO model was introduced by Redmon and collaborators; it was submitted in 2015 and published in 2016 [11]. It has since become one of the most efficient solutions for real-time detection. From its early versions to recent iterations such as YOLOv8, YOLOv10, YOLO11, and YOLO12, the architecture has incorporated substantial improvements, including enhancements to the neural network, attention mechanisms, and self-directed learning, as well as to latency and accuracy [12,25]. Recent reviews, such as the one by Terven et al. [26], provide a comprehensive analysis of the evolution from the first YOLO to YOLOv8 and its variants, including YOLO-NAS, highlighting structural changes, training strategies, and domain-specific applications. Moreover, Sapkota et al. [27] present a systematic comparison of YOLOv8 and YOLO12 in real-world fruit detection scenarios, highlighting the continuous improvements in accuracy, recall, and inference speed that characterize the latest YOLO generations. Tan and Le [28] proposed EfficientNet, an architecture that has been integrated into classifiers used in recent versions of YOLO due to its efficiency and performance.

2.1. Hybrid Models for Image Classification

Recent work in the computer vision field has focused on ensemble-based approaches. For instance, Mustapha et al. [29] investigated hybrid systems that integrate YOLOv8 with custom classifiers for specific tasks such as waste classification, obtaining favorable outcomes by applying CNNs to the regions identified by YOLO. In particular, their study compared the hybrid YOLOv8-CNN model with a standalone CNN for classification, demonstrating improvements in key metrics: accuracy (hybrid 88.3%, CNN 82.5%), precision (hybrid 0.89, CNN 0.85), recall (hybrid 0.86, CNN 0.80), and F1-score (hybrid 0.87, CNN 0.83). Although the hybrid model was marginally slower at inference, this drawback was outweighed by its significantly better classification quality and reliability. Alashjaee et al. [30] proposed a hybrid model named HAODVIP-ADL, which integrates YOLOv10 with CapsNet and InceptionV3 for a computer vision application. HAODVIP-ADL achieved an outstanding object detection accuracy of 99.74% on an indoor environment dataset, outperforming conventional methods such as IOD155 + tfidf, IOD90 + tfidf, Xception + tfidf, YOLOv5n, YOLOv5m, YOLO Tiny, YOLOv3, ResNet101, Support Vector Machine (SVM), and Linear Discriminant Analysis (LDA), which attained significantly lower performance.

The HAODVIP-ADL model showed superior capability in recognizing small, occluded, or complex objects compared to classical approaches, highlighting its substantial contribution to improving real-time visual assistance systems for individuals with visual impairments.

In addition, other studies have evaluated various hybrid CNN-transformer architectures in comparison to a baseline CNN model, specifically YOLOv8, for the detection of illicit objects in X-ray images [31]. The research explored different combinations of backbones and detection heads (HGNetV2, Next-ViT S, YOLOv8, and RT-DETR) using three particularly challenging public X-ray inspection datasets: EDS, HiXray, and PIDray. The results showed that, although YOLOv8 achieved higher detection precision in standard scenarios (HiXray and PIDray), the hybrid architectures demonstrated greater robustness when facing substantial domain shifts, as seen with the EDS dataset, a crucial factor for real-world security applications in X-ray inspection.

Ali et al. [32] present a comprehensive review of hybrid approaches that integrate CNN, YOLO, and Vision Transformer (ViT) models for challenging applications. Their work highlights how combining these architectures leverages the strengths of each (robust feature extraction with CNNs, rapid object detection with YOLO, and enriched global context modeling with ViTs), resulting in substantial improvements in accuracy and efficiency under demanding conditions compared to standalone YOLO, CNN, or ViT models.

Despite these advances, most hybrid approaches employ only a single classifier after detection. Studies investigating the simultaneous use of multiple classifiers to improve the accuracy and robustness of class and subclass assignment are scarce. One notable example is the work of Abirami et al. [33], who propose a model integrating YOLOv9 with three advanced classifiers: a Deep Neural Network (DNN), a Deep Belief Network (DBN), and a Long Short-Term Memory (LSTM) network. This model demonstrates that combining multiple approaches improves object detection and classification in underwater environments. However, such approaches are uncommon, and they do not incorporate a formal voting strategy or probabilistic comparison. Beyond Abirami’s work, general approaches to ensemble learning provide a theoretical and methodological background. For example, Ganaie et al. [34] provide a comprehensive review of deep ensemble learning models, categorizing them and analyzing their application across domains such as computer vision, natural language processing, and medicine. They conclude that ensemble strategies improve generalization, robustness, and performance, especially for complex problems and scenarios characterized by high uncertainty.

In contrast to research focused on maximizing performance, the work by Zhang et al. [35] presents a Fragmented Neural Network (FNN) ensemble method. Inspired by the random forest algorithm, this approach trains multiple simple networks in parallel on random fragments of images, utilizing a voting system to combine their predictions for enhanced accuracy. The main contribution is offering a pragmatic, accessible, and low-cost modeling paradigm. This facilitates the “mass production” of effective AI models without the enormous computational resources demanded by state-of-the-art systems.

In the field of multi-object detection, Lee and Kwon proposed Dynamic Belief Fusion (DBF), an advanced technique that combines the scores of several object detectors using the Dempster–Shafer theory. Unlike previous approaches, such as Bayesian fusion or conventional ensembles, DBF implements a probabilistic model capable of assigning and merging differentiated belief levels according to the confidence and consistency of each detector. DBF demonstrated robustness and superior performance in the presence of ambiguous or conflicting results among detectors in multi-object detection tasks compared to individual detectors [36].

2.2. Fine-Grained Image Classification

In the classification area, several researchers have applied deep learning models to fine-grained image classification. In particular, Jia et al. [37] present a classification method for blood cell images that combines a Masked Autoencoder with an enhanced Vision Transformer (ViT) architecture. Using a fine-grained classification approach, the model attains 93.51% accuracy for the blood cell class and 81.41% for the erythrocyte subtype, surpassing other models such as CNN, EfficientNet, and VGG. Shi et al. [38] and Chen et al. [39] also applied ViT models to fine-grained images. Zhu et al. [40] presented a fine-grained classification model that combines a CNN with a ViT: the ViT extracts global features while the CNN reinforces local details, achieving accuracies of 95.2%, 97.1%, and 96.9% on three different datasets. Moreover, a 2025 review [41] surveys current methodologies for fine-grained classification with limited data, such as meta-learning, data augmentation, knowledge distillation, and self-supervised learning. It also covers numerous applications of fine-grained classification, including species recognition, medical image recognition, and monitoring.

In summary, the related work reviewed here shows that the YOLO architecture has been widely used in object detection and classification, with remarkable results. Likewise, ensemble models are being applied to object classification, with results that surpass models with a single classifier, as shown by Abirami et al. [33]. Section 2.2 presented combined models that enhance fine-grained image classification. Our approach differs in that it combines the detection and classification stages in a single architecture, EDICA, a hybrid architecture that integrates a detection stage and a classification stage with ensemble models built from YOLO versions to enhance robustness in fine-grained image classification.

3. Materials and Methods

This section presents the proposed hybrid architecture, which uses YOLOv8m for object detection and ensemble models for fine-grained image classification. Furthermore, the metrics for the detection and classification tasks are described.

3.1. Ensemble Deep Image Classification Architecture

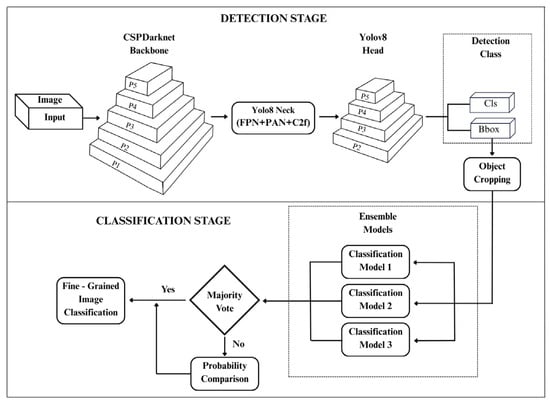

The EDICA architecture uses recent high-performance deep learning models for object detection and an ensemble architecture dedicated to fine-grained classification. Figure 1 illustrates the two stages of the EDICA architecture: (1) the detection stage and (2) the classification stage. These stages are explained in detail below.

Figure 1.

EDICA architecture based on the YOLOv8m model.

Detection stage: This stage applies the YOLOv8m architecture, previously trained on seven classes. YOLOv8m was selected after comparative experiments with YOLOv9, YOLOv10, YOLO11, YOLO12, and RT-DETR-L, in which it achieved the best performance on the dataset used in this study (Section 4.1). Once YOLOv8m detects an object of interest, the region inside its bounding box is cropped and passed to the next stage.
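A minimal sketch of the cropping step, assuming boxes in (x1, y1, x2, y2) pixel coordinates such as those Ultralytics exposes as `results[0].boxes.xyxy`. The helper name `crop_detections` is hypothetical, and the image is a plain nested list so that the example needs no third-party packages; with a NumPy image the same crop is simply `img[top:bottom, left:right]`.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[object]]  # row-major: image[y][x] -> pixel value
Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixel coordinates


def crop_detections(image: Image, boxes: Sequence[Box], min_size: int = 1) -> List[Image]:
    """Crop every bounding box out of the image, clipping boxes to the image bounds."""
    height = len(image)
    width = len(image[0]) if height else 0
    crops: List[Image] = []
    for x1, y1, x2, y2 in boxes:
        # Expand to whole pixels (floor the top-left, ceil the bottom-right), then clip.
        left, top = max(0, math.floor(x1)), max(0, math.floor(y1))
        right, bottom = min(width, math.ceil(x2)), min(height, math.ceil(y2))
        # Skip degenerate boxes, e.g. boxes lying entirely outside the image.
        if right - left < min_size or bottom - top < min_size:
            continue
        crops.append([row[left:right] for row in image[top:bottom]])
    return crops
```

Each crop is then handed to every classifier in the ensemble; skipping out-of-bounds boxes avoids feeding empty regions to the classification stage.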

Classification stage: Each object detected and cropped by YOLOv8m in the preceding stage is classified by every model in an ensemble of classifiers. A voting strategy is then applied to the classifiers' outputs to determine the winning prediction, which assigns the subclass label to the object. This process yields a fine-grained classification of each object, and the ensemble leverages the distinctive characteristics of each classification model.
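The voting step can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: each classifier is a hypothetical callable that returns a (subclass, confidence) pair, the label with the most votes wins, and ties (for example, when a two-classifier ensemble disagrees) are broken by mean confidence, a rule the text does not specify.

```python
from collections import Counter, defaultdict
from typing import Callable, Sequence, Tuple

Prediction = Tuple[str, float]  # (subclass label, confidence)


def majority_vote(predictions: Sequence[Prediction]) -> Prediction:
    """Return the label with the most votes; ties are broken by mean confidence (assumed rule)."""
    if not predictions:
        raise ValueError("need at least one prediction")
    votes = Counter(label for label, _ in predictions)
    confs = defaultdict(list)
    for label, conf in predictions:
        confs[label].append(conf)
    top = max(votes.values())
    tied = [label for label, count in votes.items() if count == top]
    # Among tied labels, prefer the one the voters were most confident about.
    winner = max(tied, key=lambda lab: sum(confs[lab]) / len(confs[lab]))
    return winner, sum(confs[winner]) / len(confs[winner])


def classify_crop(crop, classifiers: Sequence[Callable[[object], Prediction]]) -> Prediction:
    """Run every ensemble member on one crop and fuse their outputs by voting."""
    return majority_vote([clf(crop) for clf in classifiers])
```

With three members, two agreeing votes always decide the label; the confidence tie-break only matters when no label has a strict majority.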

3.2. Evaluation Metrics

To comprehensively evaluate the performance of the proposed hybrid system, specific metrics were used for both object detection and classification. These metrics allow for the quantification of performance in terms of accuracy, generalization capability, temporal efficiency, and inter-model consistency.

3.2.1. Object Detection Metrics

A variety of metrics have been applied to assess performance in object detection, particularly in scenarios involving multiple classes and instances. In their comprehensive review, Padilla et al. [42] analyze the advantages and limitations of these metrics, highlighting the importance of combining precision, recall, and mAP (mean Average Precision) under different intersection over union (IoU) thresholds to evaluate both the detection and classification capabilities of a deep learning model. In this paper, several of these metrics are used to evaluate how accurately the deep learning models identify the general class of objects in images. Table 1 presents the metrics for the object detection stage.

Table 1.

Object detection metrics.
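The building blocks behind these detection metrics can be sketched as below: IoU between two boxes, and precision and recall for one class in one image at a given IoU threshold, using greedy confidence-ordered matching. Evaluation toolkits differ in their matching details, so this is an illustration of the technique rather than the exact procedure used to produce the detection results.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: Sequence[Tuple[Box, float]], gts: Sequence[Box],
                     thr: float = 0.5) -> Tuple[float, float]:
    """Greedy matching for one class: higher-confidence predictions claim ground truths first."""
    matched = set()
    tp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground truth can be matched only once
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= thr:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp  # unmatched or duplicate detections
    fn = len(gts) - tp    # missed objects
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall
```

Raising `thr` to 0.75 gives the stricter criterion behind mAP75; duplicate detections of the same object count as false positives.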

3.2.2. Object Classification Metrics

In the second stage of the EDICA architecture, image classification performance metrics are obtained. The accuracy metric measures how well the models classify subclasses using a single classifier or an ensemble of classifiers. Additionally, we propose using the consistency between classifiers and the Average Confidence Probability (ACP). The metrics used for object classification are described in Table 2.

Table 2.

Object classification metrics.
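Because the formal definitions in Table 2 are not reproduced here, the sketch below assumes plausible ones: accuracy as the share of samples whose final ensemble label is correct, consistency as the share of samples on which all ensemble members predict the same label, and ACP as the mean confidence of the final predictions. The function name and the data layout are hypothetical.

```python
from typing import Sequence, Tuple

Prediction = Tuple[str, float]  # (subclass label, confidence)


def classification_metrics(member_preds: Sequence[Sequence[Prediction]],
                           final_preds: Sequence[Prediction],
                           labels: Sequence[str]) -> dict:
    """member_preds[i] holds every ensemble member's prediction for sample i."""
    n = len(labels)
    accuracy = sum(pred[0] == y for pred, y in zip(final_preds, labels)) / n
    # Assumed definition: share of samples on which all members agree.
    consistency = sum(len({lab for lab, _ in preds}) == 1 for preds in member_preds) / n
    # Assumed definition: mean confidence of the final (ensemble) predictions.
    acp = sum(conf for _, conf in final_preds) / n
    return {"accuracy": accuracy, "consistency": consistency, "acp": acp}
```

For a single-classifier configuration, consistency is trivially 1.0 under this definition, so it is only informative for ensembles.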

To ascertain the efficiency of the ensemble model, we propose complementary metrics presented in Table 3. These metrics allow a more detailed analysis of system performance, especially in multi-class scenarios with multiple objects per image. These metrics are not included in conventional standards. Instead, they are derived from prior studies on model calibration and confidence analysis, such as the research conducted by Guo et al. [44]. This study demonstrated that the confidence a model assigns to its predictions can be miscalibrated even when the accuracy is high.

Table 3.

Metrics for evaluating the ensemble model in images with multiple classes.

The proposed metrics, the Image Classification Index (ICI), Correct Average Confidence (CAC), and Incorrect Average Confidence (IAC), are designed to complement conventional metrics, offering a more comprehensive perspective on the performance of the ensemble model in terms of certainty, error dispersion, and inter-class consistency.

4. Results

This section presents the results obtained with the proposed hybrid architecture for object detection and classification. The architecture was evaluated at two levels: the detection of general classes using the YOLOv8m model and the final classification of specific subclasses using ensembles of up to three independently trained classifiers for fine-grained image classification. The detection stage is evaluated with the following metrics: (a) mAP at IoU thresholds of 0.50, 0.75, and 0.50–0.95, (b) precision, (c) recall, (d) inference time, and (e) training time. The hybrid model achieved strong results in correctly identifying both general classes and specific subclasses.

The present study used a dataset created by integrating several widely used datasets: African wildlife [45], 510 bird species [46], plant classification [47], PlantVillage [48], Oxford-IIIT Pet [49], and Frog (Roboflow) [50]. This dataset, used in the detection stage, comprises 3700 images labeled with seven classes, of which 84% were used for training and the remaining 16% for validation (see Table 4 for details).

Table 4.

Dataset for the detection stage.

For the fine-grained classification stage, a dataset comprising 3467 images was employed, systematically organized into 15 subclasses. Among these, 82% of the images were used for training, whereas the remaining 18% were allocated for validation purposes, as shown in Table 5.

Table 5.

Dataset for the classification stage.

To adequately assess the efficacy of the proposed classification model at varying levels of complexity, two distinct image datasets were utilized, each designed independently. The initial evaluation set comprises 547 images, each containing a single subclass. This configuration enables the analysis of the classification models’ behavior in simple classification scenarios, where ambiguity in label assignment is absent, and each prediction can be directly compared with a single target class.

Additionally, we designed a second dataset that encompasses 29 images, each featuring two or more objects present concurrently. This set simulates more intricate scenarios, where the system is tasked with accurately identifying multiple objects within a single scene. This set of experiments evaluates two key aspects of the models’ capabilities: first, the models’ ability to detect and localize specific regions of interest and, second, the models’ accuracy in classifying these regions without confounding similar objects.

4.1. Object Detection Results

In the first stage of the EDICA architecture, object detection, several models were trained and evaluated on the same dataset: distinct versions of YOLO and one RT-DETR-L model. Table 6 compares these detection models in terms of precision, recall, mAP at varying IoU thresholds, inference time, and training time. This comparison identifies the models with the best detection capability and those with the highest efficiency.

Table 6.

Comparative results of object detection with YOLO models and RT-DETR-L.

As Table 6 shows, the YOLOv8m model obtained the most balanced performance across precision (0.918), recall (0.860), and mAP50 (0.908), as well as the other metrics evaluated, outperforming the other YOLO versions and the RT-DETR-L model. This positions YOLOv8m as the most competitive model at this stage. Furthermore, it has one of the lowest inference times (7.7 ms), making it particularly well-suited for real-time vision applications. The other models show comparable efficiency, with YOLOv9m and YOLO12 standing out among them. Table 7 presents the precision of each evaluated model by class; YOLOv8m shows superior performance.

Table 7.

Results of precision by class for each model.

As shown in Table 7, the leaf and bird classes exhibited the highest performance across all detection models, with precision exceeding 0.97, which indicates a high degree of visual discriminability within these categories. Conversely, the frog class exhibited the lowest performance, indicating greater difficulty in its identification: on this class, RT-DETR-L performed worst, while YOLOv8m performed best, with a precision of 0.793. Overall, YOLOv8m is the most balanced model, with high precision in most classes, outperforming recent models such as YOLO12 and RT-DETR-L.

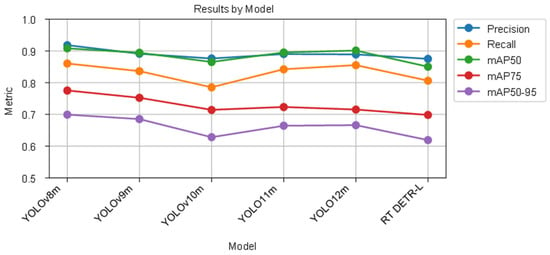

To complement the quantitative analysis, Figure 2 presents a comparative graph of five key metrics used in this work: precision, recall, mAP50, mAP75, and mAP (50–95). This figure makes performance trends across the models visible. YOLOv8m consistently outperforms RT-DETR-L across nearly all metrics. Notably, RT-DETR-L shows a substantial decline in mAP (50–95), underscoring its diminished capability in this setting, particularly at the stricter IoU thresholds over which this metric averages.

Figure 2.

Comparison of YOLO models and RT-DETR-L using the following metrics: precision, recall, and mAP with different thresholds.

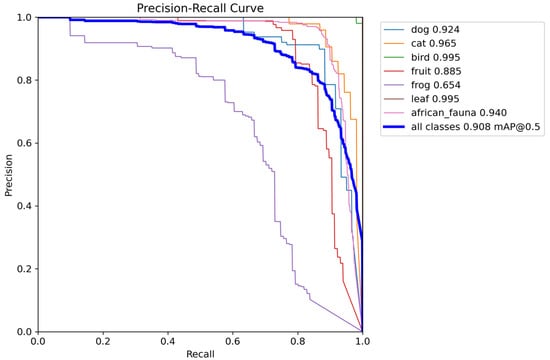

Figure 3 presents the precision–recall curve of the YOLOv8m detection model. Each line represents the performance on a specific class, showing the relationship between precision and recall (the ability to detect all real objects). The “leaf” and “bird” classes perform remarkably well, attaining mAP50 values of 0.995. In contrast, the “frog” class performs worse, with an mAP50 of 0.654. The thick blue curve shows the mean over all classes, with an mAP50 of 0.908, which supports the model’s capacity to detect objects precisely on the dataset used.

Figure 3.

Precision vs. recall curve of the YOLOv8m model with all the classes.
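The per-class curves in Figure 3 are summarized by average precision (AP), the area under the precision–recall curve; mAP50 averages AP over classes at an IoU threshold of 0.5, and mAP (50–95) additionally averages over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below uses all-point interpolation, one common convention; the exact scheme behind Figure 3 is not stated, and COCO-style evaluation instead interpolates at 101 recall points.

```python
from typing import Sequence


def average_precision(recall: Sequence[float], precision: Sequence[float]) -> float:
    """All-point interpolated AP from a PR curve sorted by increasing recall."""
    # Sentinels so the curve spans recall 0..1 (precision 0 beyond the last point).
    r = [0.0] + list(recall) + [1.0]
    p = [0.0] + list(precision) + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))
```

mAP50 is then the mean of `average_precision` over the seven classes, each computed from detections matched at IoU ≥ 0.5.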

The detection-stage results for all evaluated models were robust, with the majority of the classes accurately located in the test images. The detection metrics (precision, recall, and mAP at several thresholds), together with inference and training times, indicate the superior performance of the YOLOv8m model, and the qualitative results corroborate its capacity to generalize, even in complex scenarios. These findings support the selection of YOLOv8m for the detection stage of the EDICA architecture.

In summary, the object detection experiments showed YOLOv8m to be the best-performing model, although YOLO12 obtained very similar results on the same performance metrics. As Table 6 shows, YOLOv8m achieved higher precision than all the other models evaluated on the proposed dataset, and it also compares favorably in training and inference times. We cannot conclude with certainty that YOLOv8 is a better model than YOLO12 or YOLO11, since we experimented only with the dataset described in this research and did not perform an exhaustive comparison on more challenging image sets. However, in this experiment and with this dataset, YOLOv8m obtained slightly better results, so it was chosen as the main model for the detection stage of the EDICA architecture.

4.2. Object Classification Results

The results presented in this section pertain to the object classification stage of the EDICA architecture, which performs fine-grained image classification: once the YOLOv8m model detects the objects, an ensemble of classifiers assigns each object to its subclass. The base classifiers used to build the ensembles are YOLOv8m (Model 1), YOLO11m (Model 2), YOLOv8m with ResNet50 (Model 3), and ShuffleNet [51] (Model 4), each trained independently. The ensembles were evaluated on two test sets: (a) images containing a single object and (b) images containing multiple objects of different subclasses. The results of the ensemble models are shown in Table 8.
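The number of configurations can be checked with a short enumeration. Assuming, as the one-, two-, and three-classifier configurations and the 91 pairwise comparisons reported in Section 4.2.1 suggest, that every combination of up to three of the four base classifiers was evaluated, there are 14 configurations (10 of them hybrid ensembles) and C(14, 2) = 91 pairs of configurations.

```python
from itertools import combinations
from math import comb

# Base classifiers, numbered as in Section 4.2.2 (Model 1 to Model 4).
BASE_MODELS = {1: "YOLOv8m", 2: "YOLO11m", 3: "YOLOv8m-ResNet50", 4: "ShuffleNet"}


def ensemble_configurations(model_ids, max_size=3):
    """Every ensemble of 1..max_size distinct base classifiers, as sorted ID tuples."""
    ids = sorted(model_ids)
    return [c for k in range(1, max_size + 1) for c in combinations(ids, k)]


configs = ensemble_configurations(BASE_MODELS)
n_configs = len(configs)                      # 4 single models + 6 pairs + 4 triples
n_hybrid = sum(len(c) > 1 for c in configs)   # configurations with 2 or 3 members
n_pairwise = comb(n_configs, 2)               # pairwise post hoc comparisons

for c in configs:
    print(" + ".join(BASE_MODELS[i] for i in c))
```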

Table 8.

Results of accuracy, consistency, and average confidence by number of classifiers.

As Table 8 shows, the results were evaluated with the metrics of accuracy, consistency, ACP, and average inference time. The findings indicate that ensemble models improve classification performance compared with a single model.

4.2.1. Classification Results for a Single Class

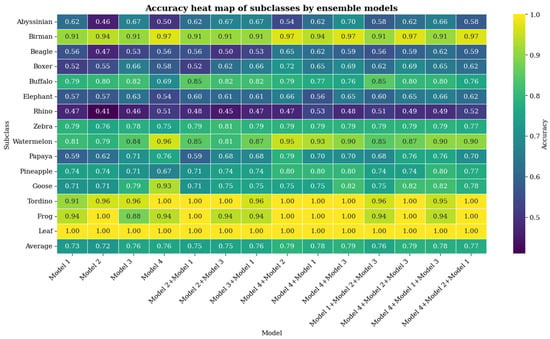

In this section, we present the results on the dataset containing a single object per image, which allows direct evaluation with metrics such as per-class and overall accuracy. Configurations with one, two, and three classifiers were considered to evaluate the effect of combining several classifiers to classify object subclasses. Table 8 reports a range of metrics for these configurations, from a single classifier to three-classifier ensembles. In addition to the overall performance, Figure 4 breaks down the accuracy by subclass, showing the value obtained for each category. This breakdown identifies the subclasses that EDICA recognizes most readily, as well as those that remain challenging.

Figure 4.

Heat map of ensemble models.

Figure 4 presents the heat map using the accuracy metric of the individual and ensemble models for the classification of each subclass. This comparison shows the efficacy of using an ensemble model for fine-grained image classification. The analysis indicates that subclasses such as Leaf, Frog, Tordino, and Birman demonstrated accuracies exceeding 91% in the majority of models. In contrast, other subclasses, including Abyssinian, Rhino, and Beagle, exhibited lower and more variable results. In addition, the heat map illustrates that, when models are ensembled, the accuracy results demonstrate a slight improvement compared to using a single model for fine-grained classification.

Moreover, the heterogeneity in the performance of the models was assessed using Cochran’s Q test, a nonparametric statistical procedure designed explicitly for related samples. This approach was appropriate, given that all models were applied to the same dataset, with categorical outputs indicating whether the predictions were correct or incorrect.

The global Cochran’s Q test yielded a statistically significant result at the α = 0.05 significance level, with an observed statistic of Q ≈ 75.47 and an associated p-value of 7.773 × 10⁻¹¹. This outcome led to the rejection of the null hypothesis that all models achieve the same proportion of correct classifications. Consequently, a post hoc analysis was conducted using McNemar’s test with the Holm adjustment to identify which specific pairs of models differed significantly in predictive accuracy.
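For reference, Cochran's Q compares k related binary outcomes (here, correct or incorrect on each test image for each of k model configurations). With column totals C_j, row totals R_i, and grand total N, Q = (k − 1)(k Σ C_j² − N²) / (k N − Σ R_i²), which is approximately chi-squared with k − 1 degrees of freedom under the null hypothesis. The function below is a minimal sketch; the p-value can be obtained with `scipy.stats.chi2.sf(q, k - 1)`, and statsmodels offers `statsmodels.stats.contingency_tables.cochrans_q` as a ready-made alternative.

```python
from typing import Sequence


def cochrans_q(outcomes: Sequence[Sequence[int]]) -> float:
    """Cochran's Q for an N x k matrix of 0/1 outcomes (rows: test images, columns: models)."""
    k = len(outcomes[0])
    col_totals = [sum(row[j] for row in outcomes) for j in range(k)]
    row_totals = [sum(row) for row in outcomes]
    total = sum(row_totals)
    numerator = (k - 1) * (k * sum(c * c for c in col_totals) - total * total)
    denominator = k * total - sum(r * r for r in row_totals)
    if denominator == 0:
        # Every row is all-correct or all-incorrect: the models never disagree.
        raise ValueError("Q is undefined when no row contains disagreement")
    return numerator / denominator
```

Rows on which all models agree contribute nothing to Q, so the test is driven entirely by images where the models disagree.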

Table 9 reports the pairs of models that differ significantly. Of the 91 pairwise comparisons, nine were statistically significant, and in each of them the ensemble model (Model B) performed better.

Table 9.

Ensemble hybrid models with statistically significant performance differences.
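The post hoc step can be sketched as follows. The text does not say which McNemar variant was used, so this sketch assumes the exact (binomial) version, computed from the discordant pairs b (only model A correct) and c (only model B correct), followed by the Holm step-down correction across all comparisons. For production use, statsmodels provides `statsmodels.stats.contingency_tables.mcnemar` and `statsmodels.stats.multitest.multipletests(..., method="holm")`.

```python
from math import comb
from typing import Dict, Sequence


def mcnemar_exact(correct_a: Sequence[bool], correct_b: Sequence[bool]) -> float:
    """Two-sided exact McNemar p-value from the discordant pairs of two models."""
    b = sum(1 for x, y in zip(correct_a, correct_b) if x and not y)
    c = sum(1 for x, y in zip(correct_a, correct_b) if y and not x)
    n = b + c
    if n == 0:
        return 1.0  # the models never disagree
    # Under H0 each discordant pair is a fair coin flip: Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def holm_adjust(pvalues: Dict[str, float]) -> Dict[str, float]:
    """Holm step-down adjusted p-values (monotone, capped at 1)."""
    m = len(pvalues)
    ordered = sorted(pvalues.items(), key=lambda kv: kv[1])
    adjusted, running_max = {}, 0.0
    for rank, (name, p) in enumerate(ordered):
        running_max = max(running_max, min(1.0, (m - rank) * p))
        adjusted[name] = running_max
    return adjusted
```

A pair is declared significant when its adjusted p-value is below α = 0.05; with 91 comparisons, the smallest p-value is multiplied by 91.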

The results indicate that YOLO11m performed significantly worse than 7 of the 10 hybrid models, and YOLOv8m performed significantly worse than two of them. No other pairs showed statistically significant differences. These results suggest that ensemble hybrid models can outperform some individual classification models.

4.2.2. Classification Results for Multi-Class

This section presents the evaluation of individual and ensemble models on images containing multiple objects from different subclasses. The test dataset comprises images that were not part of the training process and that contain multiple classes within a single image, which tests the ability of the ensemble models to differentiate between subclasses. To assess the performance of the EDICA architecture in this more complex scenario, the following metrics examine both instance-based and image-based classification:

- CAC (Correct Average Confidence): average confidence in correct predictions.

- IAC (Incorrect Average Confidence): average confidence in incorrect predictions.

- ICI (Image Classification Index): measures how many predictions were correct per image.
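A minimal sketch of these metrics, assuming each detected object yields one prediction record with its image identifier, predicted subclass, ground-truth subclass, and confidence. CAC and IAC follow the definitions above directly. The text does not give a formula for ICI, so this sketch assumes it is the fraction of correct predictions within each image, averaged over images. The `Prediction` record and `multi_class_metrics` function are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Prediction:
    image_id: str
    predicted: str      # subclass chosen by the (ensemble) classifier
    actual: str         # ground-truth subclass of the detected object
    confidence: float   # confidence attached to the predicted subclass


def multi_class_metrics(preds: List[Prediction]) -> Dict[str, float]:
    if not preds:
        raise ValueError("no predictions")
    correct = [p for p in preds if p.predicted == p.actual]
    incorrect = [p for p in preds if p.predicted != p.actual]
    per_image = defaultdict(list)
    for p in preds:
        per_image[p.image_id].append(p.predicted == p.actual)
    return {
        "accuracy": len(correct) / len(preds),
        # CAC: mean confidence over correct predictions.
        "CAC": mean(p.confidence for p in correct) if correct else float("nan"),
        # IAC: mean confidence over incorrect predictions.
        "IAC": mean(p.confidence for p in incorrect) if incorrect else float("nan"),
        # ICI (assumed definition): fraction correct per image, averaged over images.
        "ICI": mean(sum(flags) / len(flags) for flags in per_image.values()),
    }
```

Under this reading, a well-behaved model shows a high CAC and a low IAC, that is, it is confident when right and hesitant when wrong.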

Table 10 presents the evaluation results using the proposed metrics. In this more challenging setting, the ensemble Model 4 + Model 2 + Model 3 (ShuffleNet, YOLO11m, and YOLOv8m-ResNet50) achieves the best balance in subclass classification, with an accuracy of 72.6%, an ICI of 0.70, and the highest average confidence in its correct predictions (CAC = 0.958). Two other ensembles showed similar results: Model 4 (ShuffleNet) + Model 2 (YOLO11m) + Model 1 (YOLOv8m), and Model 4 + Model 1 + Model 3 (YOLOv8m-ResNet50). Although other models also achieved good accuracy, their consistency across images (as reflected by the ICI) was always lower than that of this ensemble. These results indicate that combining classifiers improves not only classification accuracy but also the stability of the ensemble model.
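The combination step can be illustrated with a plurality (majority) vote over the subclasses predicted by the member classifiers for one detected object. This is a sketch, not the authors’ implementation: the tie-breaking rule (the tied label with the largest summed confidence wins) and the reported confidence (mean confidence of the winning voters) are assumptions. They matter most for two-classifier ensembles, where any disagreement produces a tie.

```python
from collections import Counter, defaultdict
from typing import List, Tuple


def majority_vote(votes: List[Tuple[str, float]]) -> Tuple[str, float]:
    """Combine (label, confidence) votes, one per member classifier, for one object."""
    if not votes:
        raise ValueError("at least one vote is required")
    counts = Counter(label for label, _ in votes)
    confidence_sum = defaultdict(float)
    for label, conf in votes:
        confidence_sum[label] += conf
    top = max(counts.values())
    tied = [label for label, n in counts.items() if n == top]
    # Assumed tie-break: the tied label with the largest summed confidence wins.
    winner = max(tied, key=lambda label: confidence_sum[label])
    # Assumed output confidence: mean confidence of the classifiers that chose the winner.
    return winner, confidence_sum[winner] / counts[winner]
```

For example, two votes for one breed outweigh a single, more confident vote for another, whereas a one-to-one split in a two-classifier ensemble is settled by confidence alone.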

Table 10.

Classification results based on multiple classes per image.

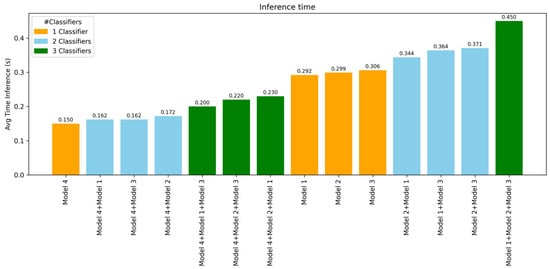

Figure 5 presents the inference time of each model configuration. Model 4 (ShuffleNet) has the shortest inference time. Conversely, the ensemble models generally require more time, with Model 1 + Model 2 + Model 3 (the ensemble of YOLOv8m, YOLO11m, and YOLOv8m-ResNet50) being the slowest. However, the inference times of Model 1 (YOLOv8m) and Model 2 (YOLO11m) are slightly higher than those of some ensemble models. This may be due to the inclusion of Model 4 in those ensembles, which helps reduce the inference time of the classification stage.
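Inference times such as those in Figure 5 depend heavily on how they are measured. A minimal timing harness, assuming each model or ensemble is exposed as a Python callable that processes one image, could use `time.perf_counter`, discard one warm-up call, and report the median over repeated runs. The `sequential_ensemble` wrapper is hypothetical and runs its members one after another; an implementation that runs members in parallel or shares preprocessing would produce different totals.

```python
import statistics
import time
from typing import Callable, List, Sequence


def median_latency(fn: Callable[[object], object], inputs: Sequence[object], repeats: int = 5) -> float:
    """Median wall-clock seconds per call of fn, measured after one warm-up call."""
    if not inputs:
        raise ValueError("inputs must not be empty")
    fn(inputs[0])  # warm-up: excludes one-off costs such as lazy initialisation
    samples: List[float] = []
    for _ in range(repeats):
        for x in inputs:
            start = time.perf_counter()
            fn(x)
            samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def sequential_ensemble(members: Sequence[Callable[[object], object]]) -> Callable[[object], list]:
    """Hypothetical wrapper that runs each member classifier one after another."""
    def run(x: object) -> list:
        return [member(x) for member in members]
    return run
```

The median is preferred over the mean here because occasional scheduling or caching hiccups inflate the mean but barely move the median.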

Figure 5.

Inference time in seconds for each ensemble model.

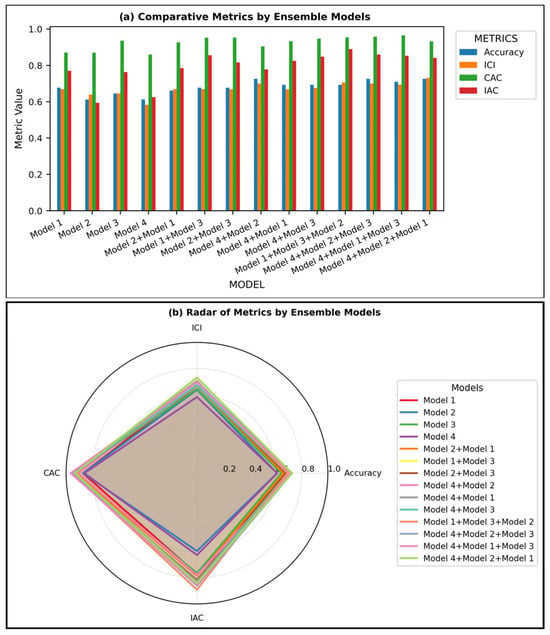

Figure 6 compares the performance of EDICA with a single classifier against several ensemble configurations using different classification metrics. Figure 6a presents a bar chart of each model’s performance on four key metrics: accuracy, ICI, CAC, and IAC. The ensemble models outperform the individual models in both accuracy and prediction confidence, which suggests that the hybrid approach is more reliable and robust. Figure 6b presents a radar chart that combines these metrics into a single representation, enabling a holistic comparison of the models. It shows that the ensemble models achieve more balanced performance across all metrics: the three-classifier ensemble attains the highest values, whereas the individual models yield lower results. Overall, these findings support the hypothesis that ensemble models not only improve accuracy but also reinforce the stability and reliability of the EDICA architecture in complex multi-class classification scenarios.

Figure 6.

Comparative performance of EDICA using multiple classification models evaluated through accuracy, ICI, CAC, and IAC metrics: (a) bar chart comparison; (b) radar chart visualization.

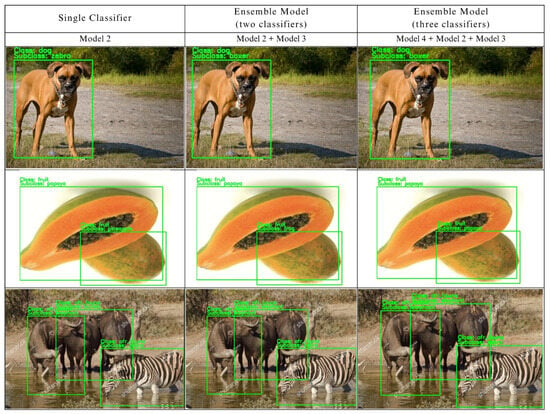

Finally, Figure 7 visually illustrates the performance of the ensemble model in the classification stage of the EDICA architecture across three scenarios. The first row corresponds to a simple scenario with a single object in the image. The second row shows an intermediate case with two objects of the same subclass. The third row shows a complex scenario in which multiple objects of different subclasses coexist in a single image. In each case, the results obtained with a single classifier, Model 2 (YOLO11m), with a two-classifier ensemble, Model 2 + Model 3, and with a three-classifier ensemble, Model 4 + Model 2 + Model 3, are compared. As the visual complexity of the images increases, using multiple classifiers appears to reduce labeling errors and improve the consistency of object classification across subclasses.
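One way to see why adding classifiers can reduce labeling errors is the classical majority-vote calculation. Under the strong, illustrative assumption that n classifiers err independently and each is correct with probability p, a strict majority is correct with probability Σ_{k > n/2} C(n, k) p^k (1 − p)^(n − k). Classifiers trained on the same classes are usually correlated, so real gains are smaller; the function name is hypothetical and the snippet is not part of EDICA.

```python
from math import comb


def majority_correct_prob(p: float, n: int) -> float:
    """P(a strict majority of n independent classifiers is correct), each correct w.p. p."""
    if n < 1 or not 0.0 <= p <= 1.0:
        raise ValueError("need n >= 1 and 0 <= p <= 1")
    need = n // 2 + 1  # smallest strict majority
    return sum(comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(need, n + 1))
```

For p = 0.7, three independent voters give about 0.784, whereas two voters reach a strict majority only with probability p² = 0.49, which is why the tie-breaking rule dominates the behavior of two-classifier ensembles. When p < 0.5, voting makes matters worse.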

Figure 7.

Visual comparison of results in the object classification stage using one to three classifiers for fine-grained image classification.

In summary, the results of the classification stage show that the ensemble strategy for fine-grained image classification outperforms individual models both in simple scenarios (one class per image) and in more complex ones (multiple classes per image). Conventional metrics such as accuracy were complemented by additional metrics, namely ICI, CAC, and IAC, which made it possible to assess not only the accuracy but also the stability and confidence of the EDICA architecture with three classifiers. The comparison of accuracy, ICI, CAC, and IAC shown in Figure 6 highlights the advantage of the EDICA architecture when three classifiers are used. These findings underscore the value of ensemble models in fine-grained image classification tasks and provide a solid foundation for future enhancements and real-world deployments of EDICA.

5. Conclusions

This study presents the EDICA architecture, which combines an object detection stage with an image classification stage for fine-grained image classification. In the detection stage, a set of experiments was carried out on 3102 images containing different objects, which made it possible to select the model with the best object detection performance and to determine appropriate hyperparameters for each evaluated model. The models evaluated in this stage ranged from YOLOv8 to YOLO12, together with RT-DETR-L. The analysis revealed that YOLOv8m achieved the most consistent object detection performance on the proposed dataset. Although YOLO12 also obtained very good results, YOLOv8 had shorter training and inference times, which was an important factor in the choice of model.

Subsequently, a second experiment was conducted on 2839 images for fine-grained image classification. The object classes used in this study were divided into subclasses and evaluated with a joint model of several classifiers. The EDICA architecture was evaluated with one, two, and three combined classifiers to determine which configuration performed best. The models combined in this stage were YOLOv8m, YOLO11m, YOLOv8m combined with ResNet50, and ShuffleNet. The experiment showed that a set of multiple classifiers improves the accuracy of fine-grained classification by leveraging the distinct strengths of each classifier, which is particularly advantageous for identifying specific objects in uncontrolled environments.

Finally, in future work, we recommend experimenting with ensembles of multiple classifiers on different contexts and datasets to identify the strengths of each classifier, as well as the environmental conditions and object-set characteristics under which they perform well, thereby enabling a more robust model. Experiments could also be conducted in real-time applications to verify the performance of the architecture.

Author Contributions

Conceptualization, A.J.G.H., J.P.S.H. and J.F.S.; methodology, A.J.G.H., J.P.S.H., D.L.H.R. and J.F.S.; software, A.J.G.H., J.P.S.H. and D.L.H.R.; validation, J.F.S. and J.G.-B.; formal analysis, J.F.S. and J.P.S.H.; investigation, A.J.G.H., J.P.S.H., D.L.H.R. and J.F.S.; resources, J.G.-B. and G.C.V.; data curation, A.J.G.H. and J.P.S.H.; writing—original draft preparation, A.J.G.H., J.P.S.H. and J.F.S.; writing—review and editing, A.J.G.H., J.P.S.H., D.L.H.R., G.C.V. and J.F.S.; visualization, A.J.G.H.; supervision, J.P.S.H. and J.F.S.; project administration, J.F.S. and J.P.S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to acknowledge SECIHTI (Secretaria de Ciencia, Humanidades, Tecnologías e Innovación), TecNM/Instituto Tecnológico de Ciudad Madero, and the National Laboratory of Information Technologies (LaNTI). Alan J. González Hernández would like to thank SECIHTI for his Ph.D. Scholarship.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| EDICA | Ensemble Deep Image Classification Architecture |
| YOLO | You Only Look Once |
| SSD | Single Shot MultiBox Detector |
| R-CNN | Region-based Convolutional Neural Network |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| DNN | Deep Neural Network |
| DBN | Deep Belief Network |
| DBF | Dynamic Belief Fusion |
| ViT | Vision Transformer |
| VGG | Visual Geometry Group |
| RT-DETR | Real-Time DEtection Transformer |
| mAP | mean Average Precision |
| IoU | Intersection over Union |
| COCO | Common Objects in Context |
| ACP | Average Confidence Probability |
| ICI | Image Classification Index |
| CAC | Correct Average Confidence |
| IAC | Incorrect Average Confidence |
| TP | True Positives |
| FP | False Positives |

References

- Divya, N.; Al-Omari, O.; Pradhan, R.; Ulmas, Z.; Krishna, R.V.V.; El-Ebiary, T.Y.A.B.; Rao, V.S. Object detection in real-time video surveillance using attention based transformer-YOLOv8 model. Alex. Eng. J. 2025, 118, 482–495.

- Alamri, F. Comprehensive study on object detection for security and surveillance: A concise review. Multimed. Tools Appl. 2025, 84, 42321–42352.

- Ragab, M.G.; Abdulkadir, S.J.; Muneer, A.; Alqushaibi, A.; Sumiea, E.H.; Qureshi, R. A Comprehensive Systematic Review of YOLO for Medical Object Detection (2018 to 2023). IEEE Access 2024, 12, 57815–57836.

- Saraei, M.; Lalinia, M.; Lee, E.-J. Deep Learning-Based Medical Object Detection: A Survey. IEEE Access 2025, 13, 53019–53038.

- Alanazi, H. Optimizing Medical Image Analysis: A Performance Evaluation of YOLO-Based Segmentation Models. Int. J. Adv. Comput. Sci. Appl. 2025, 16, 1167.

- Kanna, S.K.; Ramalingam, K.; Pazhanivelan, P.; Jagadeeswaran, R.; Prabu, P.C. YOLO deep learning algorithm for object detection in agriculture: A review. J. Agric. Eng. 2024, 55, 1.

- Das, A.; Pathan, F.; Jim, J.R.; Kabir, M.M.; Mridha, M.F. Deep learning-based classification, detection, and segmentation of tomato leaf diseases: A state-of-the-art review. Artif. Intell. Agric. 2025, 15, 192–220.

- Gheorghe, C.; Duguleana, M.; Boboc, R.G.; Postelnicu, C.C. Analyzing Real-Time Object Detection with YOLO Algorithm in Automotive Applications: A Review. Comput. Model. Eng. Sci. 2024, 141, 1939–1981.

- Kang, S.; Hu, Z.; Liu, L.; Zhang, K.; Cao, Z. Object Detection YOLO Algorithms and Their Industrial Applications: Overview and Comparative Analysis. Electronics 2025, 14, 1104.

- El Kalach, F.; Farahani, M.; Wuest, T.; Harik, R. Real-time defect detection and classification in robotic assembly lines: A machine learning framework. Robot. Comput.-Integr. Manuf. 2025, 95, 103011.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

- Ajayi, O.G.; Ashi, J.; Guda, B. Performance evaluation of YOLO v5 model for automatic crop and weed classification on UAV images. Smart Agric. Technol. 2023, 5, 100231.

- Saranya, M.; Praveena, R. Accurate and real-time brain tumour detection and classification using optimized YOLOv5 architecture. Sci. Rep. 2025, 15, 25286.

- Jocher, G. YOLOv5; Ultralytics: Frederick, MD, USA, 2020; Available online: https://github.com/ultralytics/yolov5 (accessed on 23 April 2025).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv 2022, arXiv:2207.02696.

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLOv8, version 8.0.0; [Software]; Ultralytics: Frederick, MD, USA, 2023; Available online: https://github.com/autogyro/yolo-V8 (accessed on 28 April 2025).

- Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

- Jocher, G.; Qiu, J. YOLO11, version 11.0.0; [Software]; Ultralytics: Frederick, MD, USA, 2024; Available online: https://github.com/ultralytics/ultralytics (accessed on 12 May 2025).

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- YOLO, version 8. Ultralytics Official Documentation. Ultralytics: Frederick, MD, USA, 2023. Available online: https://docs.ultralytics.com (accessed on 13 May 2025).

- Terven, J.; Córdova Esparza, D.M.; Romero González, J.A. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

- Sapkota, R.; Meng, Z.; Churuvija, M.; Du, X.; Ma, Z.; Karkee, M. Comprehensive performance evaluation of YOLOv12, YOLOv11, YOLOv10, YOLOv9 and YOLOv8 on detecting and counting fruitlet in complex orchard environments. Comput. Electron. Agric. 2024, 215, 108622.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning (ICML 2019), Long Beach, CA, USA, 9–15 June 2019; PMLR: New York, NY, USA, 2019; pp. 6105–6114.

- Mustapha, A.A.; Saruchi, S.A.; Supriyono, H.; Solihin, M.I. A Hybrid Deep Learning Model for Waste Detection and Classification Utilizing YOLOv8 and CNN. Eng. Proc. 2025, 84, 82.

- Alashjaee, A.; AlEisa, H.; Darem, A.; Marzouk, R. A Hybrid Object Detection Approach for Visually Impaired Persons Using Pigeon-Inspired Optimization and Deep Learning Models. Sci. Rep. 2025, 15, 9688.

- Cani, J.; Diou, C.; Evangelatos, S.; Radoglou-Grammatikis, P.; Argyriou, V.; Sarigiannidis, P.; Varlamis, I.; Papadopoulos, G.T. X-ray Illicit Object Detection Using Hybrid CNN-Transformer Neural Network Architectures. arXiv 2025.

- Ali, M.A.M.; Aly, T.; Raslan, A.T.; Gheith, M.; Amin, E.A. Advancing crowd object detection: A review of YOLO, CNN and ViTs hybrid approach. J. Intell. Learn. Syst. Appl. 2024, 16, 175–221.

- Abirami, G.; Nagadevi, S.; Jayaseeli, J.; Rao, T.; Patibandla, R.S.M.L.; Aluvalu, R.; Srihari, K. An Integration of Ensemble Deep Learning with Hybrid Optimization Approaches for Effective Underwater Object Detection and Classification Model. Sci. Rep. 2025, 15, 10902.

- Ganaie, M.A.; Hu, M.; Malik, A.K.; Tanveer, M.; Suganthan, P.N. Ensemble deep learning: A review. arXiv 2021.

- Zhang, X.; Liu, S.; Wang, X.; Li, Y. A fragmented neural network ensemble method and its application to image classification. Sci. Rep. 2024, 14, 2291.

- Lee, H.; Kwon, H. DBF: Dynamic belief fusion for combining multiple object detectors. arXiv 2022.

- Jia, N.; Guo, J.; Li, Y.; Tang, S.; Xu, L.; Liu, L.; Xing, J. A fine-grained image classification algorithm based on self-supervised learning and multi-feature fusion of blood cells. Sci. Rep. 2024, 14, 22964.

- Shi, Y.; Hong, Q.; Yan, Y.; Li, J. LDH-ViT: Fine-grained visual classification through local concealment and feature selection. Pattern Recognit. 2025, 161, 111224.

- Chen, C.-S.; Chen, G.-Y.; Zhou, D.; Jiang, D.; Chen, D.; Chang, S.-H. Improving fine-grained food classification using deep residual learning and selective state space models. PLoS ONE 2025, 20, e0322695.

- Zhu, S.; Zhang, X.; Wang, Y.; Wang, Z.; Sun, J. A fine-grained image classification method based on information interaction. IET Image Process 2024, 18, 4852–4861.

- Lim, J.M.; Lim, K.M.; Lee, C.P.; Lim, J.Y. A review of few-shot fine-grained image classification. Expert Syst. Appl. 2025, 275, 127054.

- Padilla, R.; Netto, S.L.; da Silva, E.A.B. A survey on performance metrics for object-detection algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), IEEE, Niteroi, Brazil, 1–3 July 2020; pp. 237–242.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Guo, C.; Pleiss, G.; Sun, Y.; Weinberger, K.Q. On calibration of modern neural networks. In Proceedings of the 34th International Conference on Machine Learning (ICML 2017), Sydney, Australia, 6–11 August 2017; PMLR: New York, NY, USA; Volume 70, pp. 1321–1330.

- Ultralytics. African Wildlife Dataset [Dataset]; Ultralytics: Frederick, MD, USA. Available online: https://docs.ultralytics.com/datasets/detect/african-wildlife/ (accessed on 15 February 2025).

- Gpiosenka, S.F. Explore 510 Bird Species [Dataset]; Kaggle: San Francisco, CA, USA. Available online: https://www.kaggle.com/code/gpiosenka/explore-510-bird-species-dataset (accessed on 15 February 2025).

- Yudha, I.S. Plants Type Datasets [Dataset]; Kaggle: San Francisco, CA, USA, 2023.

- Hughes, D.P.; Salathé, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics through machine learning and crowdsourcing. arXiv 2015.

- Parkhi, O.M.; Vedaldi, A.; Zisserman, A.; Jawahar, C.V. The Oxford-IIIT Pet Dataset [Dataset]. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; University of Oxford: Oxford, UK, 2012. Available online: https://www.robots.ox.ac.uk/~vgg/data/pets/ (accessed on 15 February 2025).

- Roboflow. Frog Dataset [Dataset]; Roboflow Universe, March 2024. Available online: https://universe.roboflow.com/frog-y6qeg/frog-ukiu5 (accessed on 15 February 2025).

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).