Abstract

Robot-aided outdoor cleaning is a challenging task with plenty of room for improvement. The cleaning profile of a robot should be adjusted based on the availability of litter to ensure proper and efficient cleaning. This article proposes a novel system that integrates a Glasius Bio-Inspired Neural Network (GBNN) for coverage path planning with a vision-based cleaning profile adaptation scheme. The vision-based adaptation occurs based on the presence of leaves on the ground. The proposed system operates in two phases: an initial phase with low-power cleaning to cover areas with no leaves, while localizing leaf spots that require high-power cleaning, and a secondary phase focusing on cleaning these detected leaf spots using a high-power cleaning profile. This cleaning profile adaptation enables more efficient and effective cleaning. Simulation results showed a 47% improvement in energy efficiency for the proposed method compared to a method with no cleaning profile adaptation. Robot hardware tests conducted using the Panthera 2.0 outdoor cleaning robot have demonstrated the real-world applicability of the proposed method.

Keywords:

outdoor cleaning robots; glasius bio-inspired neural network (GBNN); coverage path planning; embodied AI; adaptive cleaning MSC:

68T40

1. Introduction

Outdoor cleaning has traditionally involved the use of manual methods and simple tools to maintain the cleanliness and appearance of exterior spaces. For outdoor places such as public walkways and parks, this usually means employing human cleaners to manually sweep the rubbish or debris using a broom. This type of traditional cleaning method is generally dull, dirty and time-consuming [1,2]. This type of conventional cleaning is also labor-intensive, requiring a substantial workforce to maintain the cleanliness of large outdoor spaces during the day and night. Robots have been developed to alleviate these limitations [3,4].

The outdoor cleaning industry is still largely dominated by manual sweepers or non-autonomous gas and electric powered sweepers. Such methods pose severe health risks, with occupational health surveys reporting musculoskeletal strain and respiratory symptoms in more than half of street cleaning staff [5]. In addition, conventional sweeping also affects road-air quality due to resuspended particulate matter. As such, these issues have sparked the creation of a new class of electrified autonomous street sweepers with higher efficiency due to longer operating hours and consistent performance.

In this space, several commercial solutions have been introduced to prove the feasibility of autonomous robots for such purposes. Trombia Free, the first full power electric sweeper, pairs LiDAR and Global Navigation Satellite System (GNSS) localization with a novel vacuum head and claims 85% lower energy use than diesel counterparts during its Helsinki trials [6]. The Idriverplus S100N “Spring”, built in China, completed its pavement pilots in Kongsberg, and demonstrated reliable gravel and leaf collection even under Nordic winter conditions [7]. In Singapore, Enway’s Bucher CityCat V20e has become the world’s first autonomous compact sweeper approved for public road use [8]. However, these platforms still depend on software advances in coverage planning, adaptive cleaning and dirt type perception to realize their full potential.

Early coverage path planning (CPP) approaches laid the foundations for today’s robot sweeper navigation. Boustrophedon cellular decomposition divides free space into simple cells that a robot can traverse with back-and-forth strokes, guaranteeing full coverage with provable completeness [9]. On the other hand, Spanning Tree Coverage (STC) treats those cells as graph nodes and cuts redundant passes while still visiting every cell once [10]. Newer algorithms replace right angle turns with clothoid curves, giving continuous paths that prevent unnecessary speed reductions, thereby improving traversal times [11]. Actor–critic deep reinforcement learning builds on this by learning coverage policies that further lower energy use and coverage redundancy using reward functions that penalize unnecessary movements and encourage exploration [12].

Glasius et al. [13] proposed a biologically inspired two-layered neural network for trajectory planning and obstacle avoidance. This model, also known as Glasius Bio-Inspired Neural Network (GBNN), consists of two topographically ordered neural maps: a sensory map and a motor map. The sensory map receives sensory inputs and generates an activity pattern representing the optimal path to the target, while the motor map processes the activity pattern, resulting in a moving cluster of neural activity that guides the system along the optimal path. GBNN-based coverage path planning has been applied to robots for autonomous navigation in complex environments. To address the challenges in path planning for Autonomous Underwater Vehicles (AUVs), ref. [14] uses the GBNN algorithm to enhance path planning efficiency for AUVs. This algorithm overcomes the limitations of traditional biologically inspired neural networks, such as high computational complexity and long path planning times. A GBNN with a comprehensive energy consideration model for energy-efficient coverage of ship-hull maintenance robot has been proposed in [15]. In order to enhance the efficiency of area coverage in complex and confined environments, ref. [16,17] proposed modified GBNN algorithms, which incorporate multiple reconfiguration states. This enhancement enables robots to autonomously adjust their width during path planning, optimizing area coverage in open spaces and accessibility in tight areas. An overlapping and reconfiguration reduction criteria to further improve the coverage efficiency has been introduced in [18]. A complete GBNN algorithm for coverage cleaning in hospital context was proposed in [19]. The work [20] introduced an improved GBNN algorithm to enable the robot to operate effectively in 3D space and address the unique challenges posed by the complex, curved surfaces of ship hulls. This enhancement reduces data volume and computational demands, making the path planning process more efficient. A summary of these CPP methods is given in Table 1.

Table 1.

Summary of state-of-the-art CPP methods.

Cleaning efficiency is equally dependent on how well cleaning parameters are matched to specific surface as well as debris types. However, the CPP methods discussed earlier consider only the efficient navigation of a robot for coverage. Thai et al. [21] designed a penetration depth controller that adjusts brush torque to maintain constant contact across surfaces such as asphalt, concrete and cobblestone, thereby improving pick-up consistency by 18%. Several studies on rotary brush cleaning showed that variable speeds can prevent kick-back of pebbles while removing fine dust [22]. Furthermore, vision-based methods that control suction activation can reduce energy costs by up to 80% without sacrificing cleanliness [23]. A recent fuzzy logic method leverages on YOLO v5 road surface detection and can control brush pressure and vacuum power for efficient pick-up of debris [24]. Similarly, the work [25] proposed a method to adapt the cleaning brush pressure based on the surface roughness perceived through LiDAR. However, these methods are limited to cleaning adaptation. Adaptive cleaning control paired with an optimal coverage method could improve energy as well as cleaning efficiency.

In order to detect various dirt types such as food wrappers, bottles, street dirt, wet and dry leaves on the ground, vision systems have been developed extensively in recent years. For cleaning in office environments, ref. [26] presents a vision-based dirt detection system for mobile cleaning robots that can be applied to any surface and dirt without previous training. A dirt recognition vision system was developed utilizing RGB images from a camera to detect and classify dirty areas on different floor surfaces. The system processes surface images pixel by pixel using a spectral residual filtering approach to create cleanliness maps, identifying dirty areas for focused cleaning, thus making it suitable for application on mobile cleaning robots. Bormann et al. [27] introduced a learning-based dirt and object detection system, DirtNet, that is an improvement on the method in [26] both in performance and functionality. The dirt and object detection system developed in [27] is based on YOLOv3, which offers three branches for small, medium and large object detection, as needed for detecting small dirt spots and large objects simultaneously. The paper [28] proposed a vision system based on the YOLOv5 framework for detecting dirty spots on the floor. It employs the YOLOv5 deep learning model that is trained to detect solid dirt and liquid dirt in real time. A similar deep learning-based approach to classify indoor dirt into solid, liquid and stain, and map their locations, has been proposed in [29]. In [30], an automatic leaf blower robot was developed with a ResNet50 deep Convolutional Neural Network (CNN) model to detect and recognize fallen leaves on the ground. Single leaf images, multiple leaf images and ground images without leaves were gathered and used to train the CNN model. After training, the robot was able to distinguish between clean and leaf-covered ground, autonomously maneuvering itself to collect leaves into a garbage bag. To quantify litter on city streets and improve cleanliness of cities, [31] introduced a fully automated computer vision application for littering quantification based on images taken from streets and sidewalks. The application uses the OverFeat-GoogLeNet deep learning CNN-based model to localize and classify different types of urban wastes, such as bottles and leaves in an automated manner within RGB images. These images are taken by a camera mounted on top of a street sweeper vehicle. The results from this application could then be merged to produce a waste density map of the city.

One of the current limitation in robot-aided outdoor cleaning is the lack of a complete solution for efficient and effective cleaning and CPP. In order to solve this limitation, we propose a novel system based on a GBNN and a vision-based adaptive cleaning scheme. The system identifies spots demanding high-power cleaning profile while performing low-power cleaning and then switch to high-power cleaning in the second phase. The locations with leaves are treated as the spots requiring high-power cleaning profile. The proposed strategy follows the following workflow:

- Fully cover the open spaces in a given area with low-power cleaning profile.

- Monitor for special target spots (in our case, leaves) and map them while avoiding the spots.

- Switch to a second-phase cleaning strategy with a high-power cleaning profile.

- Clean the identified leaf spots in the area.

This strategy is modeled focusing on avoiding peaks of currents when modulating the vacuum and brush cleaning system in a dynamic way. This framework is platform-agnostic and developed on top of a Robot Operating System (ROS). Integration in various platforms should be straightforward. The rest of the paper is structured as follows. Section 2 describes the robot platform used for validations and experimentation. Section 3 describes the algorithms used in the proposed system. Section 4 presents the experimentation results. Section 5 contains the conclusions of the paper.

2. Robot Platform

2.1. Robot Hardware

The Panthera 2.0 is an outdoor cleaning robot and its main features are shown in Figure 1. The Panthera 2.0 robot platform has several modules to aid it in cleaning and navigating around its environment. These comprise the cleaning module, the sensing module and the locomotion module.

Figure 1.

Panthera 2.0 robot platform overview.

2.1.1. Cleaning Module

The cleaning module is made up of three key components: brush assemblies, a vacuum system and a standard bin with its mounting mechanism. For sweeping of dirt and rubbish, the Panthera 2.0 is fitted with two side brushes located at the front corners of the robot, as seen in Figure 1. These brushes are each powered by a brush motor, providing the rotational motion required for sweeping. The primary function of the side brushes is to collect dirt and debris from the ground and direct it toward the center of the robot, where the cylindrical brushes are positioned. There are two counter-rotating cylindrical brushes, operating at rotational speeds between 700 and 1200 RPM, fitted underneath the robot, and these work to clean and collect all debris swept in by the side brushes. The cylindrical brushes are powered by dedicated brush motors, which drive them via a belt and pulley mechanism. Once gathered, the debris is picked up and deposited into a cleaning tray for temporary storage.

Subsequently, the vacuum system extracts debris from the cleaning tray by exerting suction power. The debris is then transferred through a hose and into a standard-sized bin at the rear of the robot, as indicated in Figure 1, for storage. The bin measuring 0.9 m in height, 0.4 m in width and length, and weighing approximately 2.2 kg is able to store 120 L of trash before needing to be replaced. This bin is supported by a lift frame that moves vertically along with it. During cleaning operations, the bin is elevated to a height of 140 mm above the ground. When full, it is lowered to ground level so that a human operator can easily remove the bin. A trash level sensor mounted on the bin enclosure monitors the amount of waste in the bin and sends updates to the user interface, alerting robot operators when the bin needs to be emptied.

2.1.2. Sensors Setup

The Panthera 2.0 robot has a variety of sensors to help it perform object detection, localization, mapping of its surroundings and navigation around its environment. The placements of these sensors, such as cameras, LiDARs and ultrasonics, are illustrated in Figure 1. Two stereo cameras, one mounted at the front and another at the rear, capture real-time visual data for detecting both static and moving obstacles. Two surveillance cameras are positioned on top of the robot to record its surroundings during operation, enabling remote monitoring. For obstacle detection, two 32-channel LiDAR sensors are mounted on the front-right and rear-left sides of the robot. While a 128-channel LiDAR mounted on top of the robot assists with localization, path planning and navigation. This sensor configuration provides broad visual coverage and enhances obstacle detection. To support short-range object detection, ultrasonic sensors are installed along the bottom front, left and right sides of the robot. To support short-range object detection, ultrasonic sensors are installed along the bottom front, left and right sides of the robot. These sensors accurately measure distance and detect the presence of nearby obstacles, ensuring reliable detection of any obstacle close to the robot.

2.1.3. Locomotion Mechanism

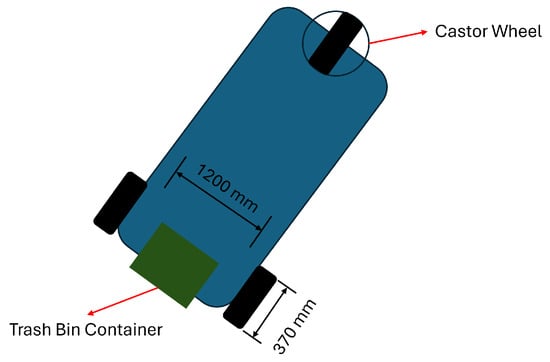

The Panthera 2.0 robot is equipped with a two-wheel differential drive system for locomotion, whereby two drive wheels of diameter 370 mm are mounted near the rear corners of the robot, as shown in Figure 2. The drive wheels are each attached with a 400 W drive motor. These motors are mounted onto the robot’s chassis and provide the necessary power to the drive wheels, via a chain sprocket mechanism. For improved maneuverability, a single caster wheel is attached to the front of the chassis, enabling the robot to turn smoothly with a small turning radius. This configuration enhances the robot’s ability to navigate complex outdoor environments, enabling it to negotiate sharp bends and steer around obstacles easily.

Figure 2.

Robot locomotion system.

2.2. Robot Software

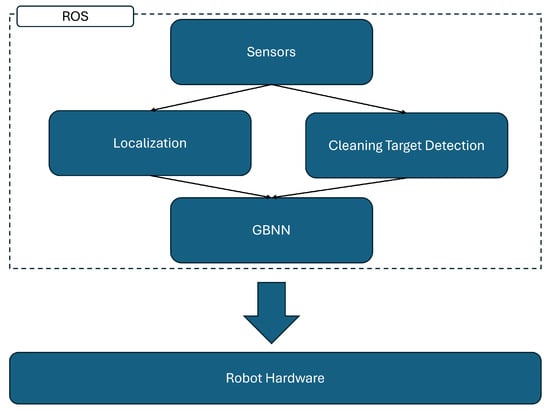

ROS is the central core of the data processing and communication in the proposed system. Through it, the sensor array will run and enable localization and other functionalities. ROS provides a modular and scalable infrastructure for real-time message passing, device abstraction and system orchestration, making it ideal for integrating diverse robotic components. Sensor data such as LiDAR, IMU, GPS and camera feeds are published as standardized topics, which are then subscribed to by various modules for processing tasks like mapping, state estimation and environment awareness.

As shown in Figure 3, GBNNs have control over the robot movement, and every other aspect of hardware control is handled by the machine state and driver software running on top of ROS. The GBNN module, integrated within the autonomy layer, is responsible for interpreting environmental stimuli and dynamically generating movement decisions based on activity fields. It receives processed inputs from the localization and mapping modules and outputs optimal navigation commands that adapt in real time to the presence of obstacles and coverage objectives. Meanwhile, low-level motor commands, hardware interfacing and safety operations are managed by dedicated ROS nodes operating within the machine state framework, ensuring a robust and reactive control loop.

Figure 3.

Software architecture.

3. Coverage Path Planning

3.1. GBNN

A GBNN is inspired by how the biological brain of foraging animals reacts to stimuli. It is a very efficient way to handle and explore unknown environments, even when only partial observations are available, and it also responds appropriately to obstacles.

As given in Algorithm 1, the standard GBNN CPP algorithm starts initializing the environment as a grid; in parallel, a copy of such environment is held to register the already visited spaces. After selecting the starting point, a local scan of the area surrounding the mobile agent will determine the next target. If it is an obstacle, that position is ignored. If not, then the grid space is updated to hold a value representing the stimuli for moving towards that space. After doing that, the grid with a higher stimuli value is chosen as the target, and the agent moves there; this process repeats iteratively until there are no more open spaces.

| Algorithm 1 GBNN coverage path planning (standard). |

|

Inspired by the core functionality of a GBNN, several modifications to the main loop were made in the proposed system to achieve a proper coverage strategy in two phases: low-power cleaning phase and high-power cleaning phase. Dynamically switching the cleaning between high-power and low-power setting would degrade the cleaning modules. Therefore, the cleaning adaptation is switched as two phases. The proposed GBNN CPP algorithm with two phases is given in Algorithm 2. It first prioritizes cleaning open space using low-power cleaning profile while avoiding spotted leaf areas. After that, it switches to the leaf cleaning phase, where the robot will switch to the high-power cleaning profile.

3.1.1. Neighborhood Definitions

For the algorithm to work, it is necessary to fine-tune three hyperparameters: the observation radius, which determines the size of the portion around the robot analyzed every iteration, and the activity influence, which determines the priority that the algorithm assigns to each grid cell. The adjacency of the algorithm is defined by the movement directions; in this case, the cardinal directions are considered. The defined values are given below.

- Observation radius: .

- Activity influence: .

- Movement directions: .

| Algorithm 2 Glasius-inspired coverage navigation with phase switching (proposed). |

|

3.1.2. Grid Initialization Probabilities

Each grid cell is initialized by sampling a discrete random variable , as defined in (1):

The probabilities of each category are defined as

These values are then used as integer encodings in the environment grid:

- 0: OPEN space.

- 1: OBSTACLE.

- 2: LEAF SPOT.

- 3: COVERED.

- Note that COVERED is not part of the initialization process, but a value used in the execution loop to indicate the traversed places.

3.1.3. Activity Decay Function

Lines 14–25 of Algorithm 2 are explained in (2). They represent the attention placed on the unexplored and available space. They are in charge of guiding the robot towards the desired goals. Activity from a target cell is propagated to nearby cells using the inverse square decay function.

where

3.1.4. Movement Scoring Function

In order to control the behavior of the robot and to influence it so that it does not overlap with already visited places, each candidate neighboring cell is assigned a movement score based on (3) (see Algorithm 2 line 37).

Penalty is defined as in (4).

3.1.5. Open Area Coverage

In the first phase, when the robot traverses around an open area, the condition explained in (5) classifies it as COVERED.

3.1.6. Leaf Spot Coverage

In the second phase, after cleaning the open areas, the robot traverses around all the leaf-spotted places; the logic behind the identification of leaf-spotted places is given in (6).

3.1.7. Loop Detection via Repetition Ratio

In practice, GBNN algorithms tend to fall within execution loops, whether the robot is surrounded by already visited areas or the penalty accumulates to the point where the robot becomes trapped in local minima. As explained in (7), looping behavior is detected using a repetition ratio r over a moving window of previous positions (Algorithm 2 line 26).

If , the agent enters escape mode, which is a heuristic solution based on Breath-First Search (BFS). BFS moves the robot to the closest open and non-explored area.

3.2. Detecting Special Target Spots

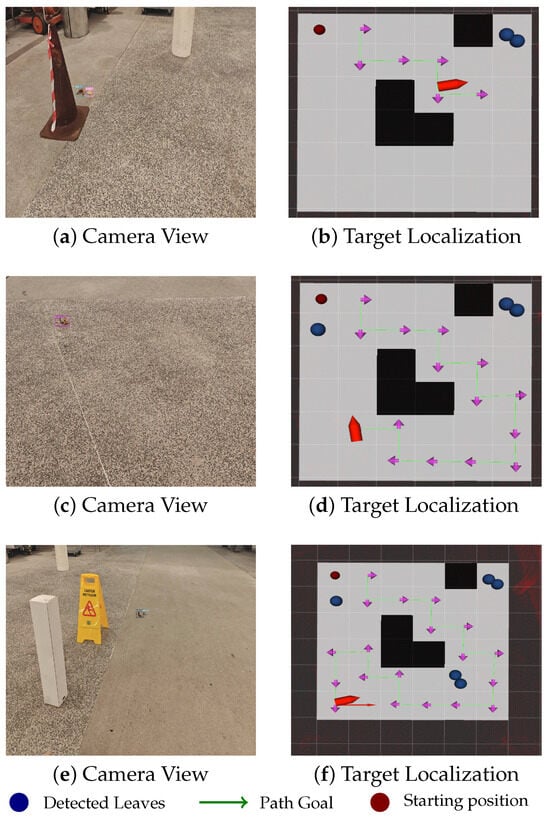

An intermediary computer vision-based model is necessary to detect the leaves on the ground. The leaf detection model proposed in [32] is used in this regard. This leaf detection method is based on custom trained Ground DINO. By combining the leaf detection results with the depth image from the Realsense camera in the front, the system can localize leaf spots in the cleaning area and tag them in the respective grids of the occupancy grid, which tracks environmental conditions. This workflow is detailed in Algorithm 3.

| Algorithm 3 Leaf detection and 3D localization with Grounding DINO. |

|

After assigning a prompt, the model takes the current frame in the camera buffer and produces inference to obtain the centroids of the detected bounding boxes. The method initially calculates the position with respect to the camera_link and subsequently performs the transformation into the base_link, resulting in the world coordinates of every spot; after that, the spot is extrapolated into the occupancy grid and the area is represented as obstacle in the proposed GBNN algorithm.

4. Results

4.1. Simulations

The proposed system was initially tested with simulations. In addition, the energy performance of the proposed two-phase GBNN method has been compared against the standard GBNN CPP (single-phase cleaning with high-power profile). The high-power and low-power cleaning profiles are set up as 800 W and 240 W, respectively, based on the observed behavior of the robot.

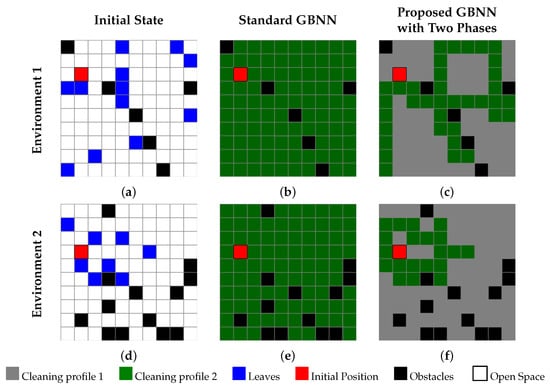

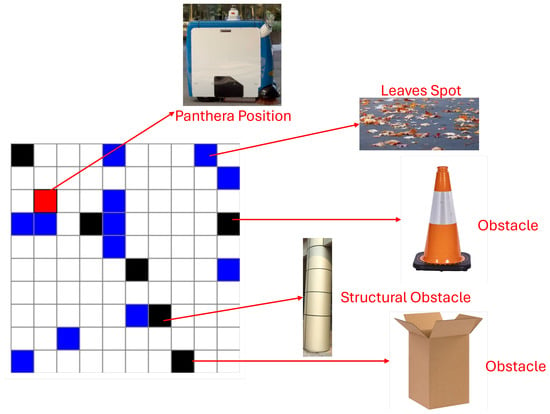

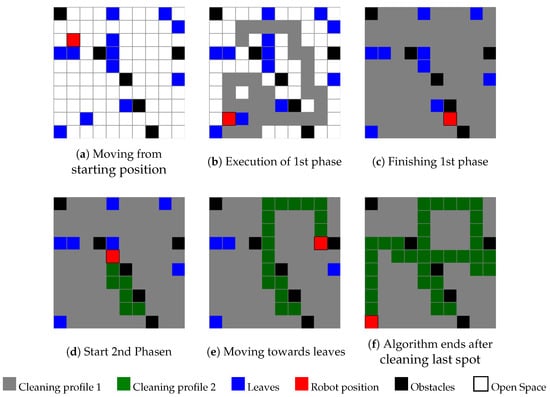

Three simulation test cases were considered. The first two cases were static environments, shown in Figure 4, and were considered for the simulations. The arrangement of Environment 1 is shown in Figure 4a including locations of leaf spot, obstacles and the initial position of the robot. A brief explanation of the representation of the corresponding environment components in the grid map is shown in Figure 5. The standard GBNN approach achieved complete cleaning of the environment with use of 0.1333 kWh energy with only the high-power cleaning profile (see coverage results in Table 2 and Figure 4b). In contrast, the proposed method performed the coverage in two phases, as detailed in Figure 6 with intermediate status. The first phase of the cleaning ends at the state shown in Figure 6c. In the second phase, the goal of the robot was to cover the leaf spots. According to the results, the proposed method effectively switched the cleaning profile and achieved the complete coverage (100% coverage). The energy used by the proposed method was 0.0712 kWh, which is considerably lower than the standard GBNN approach with a single phase.

Figure 4.

Comparison between the standard GBNN and the proposed two-phase GBNN across two environments. (a): initial state of the Environment 1, (b): coverage results in Environment 1 using standard GBNN, (c): coverage results in Environment 2 using the proposed GBNN with two phases, (d): initial state of the Environment 2, (e): coverage results in Environment 2 using standard GBNN, and (f): coverage results in Environment 2 using the proposed GBNN with two phases.

Figure 5.

Representation of environment components in a grid map. Here, the black grids correspond to any obstacle, from objects in the area to structural stuff. The blue grids correspond to the leaf spots, and the red grid is the robot’s position.

Table 2.

Comparison of standard one-phase vs proposed two-phase method.

Figure 6.

Execution steps of the proposed algorithm in Environment 1.

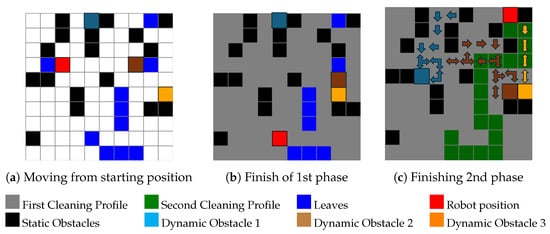

Similar improved energy performance was observed in Environment 2. The standard GBNN method used 0.1166 kWh of energy, while the proposed two-phase GBNN only used 0.0535 kWh energy. A scenario with dynamic obstacles was considered for the third case, shown in Figure 7. Here, the dynamic obstacles were moved randomly. The algorithm consistently circumvented obstacles, showcasing its capability of handling dynamic obstacles. The proposed method has shown superiority in terms of energy efficiency in this dynamic obstacle scenario too. These observations from the simulation results confirm the performance improvement of the proposed method compared to the standard GBNN method with one phase.

Figure 7.

Experiment with dynamic obstacles.

4.2. Robot Hardware Experiments

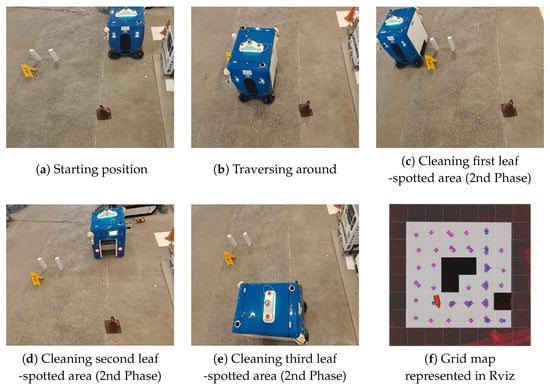

A robot hardware test case has been conducted considering the Panthera 2.0 robot to validate the real-world applicability of the proposed method. Figure 8a shows the initial arrangement of the test environment with the robot. Figure 9 shows the moments when the robot detects each of the leaf spot during the traversing of phase 1. The robot has detected four leaf spot during phase 1 (see Figure 9f). These results confirm its effectiveness.

Figure 8.

Robot hardware test results.

Figure 9.

Leaf spot detection and localization during the robot hardware experiment.

Figure 8f shows the final paths of the robot in Rviz. According to the results of this experiment case, the proposed method was successful in completely cleaning the environment by switching the cleaning profile between the two phases effectively.

4.3. Discussion of Results

A standard GBNN-based CPP approach follows a single-profile cleaning workflow. The objective of the proposed development is to improve the energy efficiency by adapting a GBNN-based algorithm to have two phases for cleaning. According to the simulation results, the proposed method uses on average 48% less energy compared to the standard approach while accomplishing the same cleaning task. Both proposed method and the standard approach are capable of handling dynamic obstacles. The proposed method is better even in dynamic obstacle scenarios in terms of energy efficiency.

5. Conclusions

This paper proposed a novel system for efficient cleaning and CPP of an outdoor cleaning robot. The proposed system leverages a computer vision-based adaptive cleaning scheme and a Glasius Bio-Inspired Neural Network-based CPP. The proposed method uses a two-phase cleaning approach to increase the energy efficiency. To validate the applicability of the proposed method, we conducted simulation and hardware experiments, demonstrating a 48% average energy saving in the proposed method compared to a single-profile CPP cleaning approach while ascertaining 100% coverage. Therefore, the proposed method would be useful in improving the performance of robot-aided outdoor cleaning. As future work, it is planned to integrate more behaviors like the dynamic detection of humans to influence planning. For example, if a place is a common walk-path for humans, that specific area can be left for later or one can switch to a specific cleaning profile to reduce sound contamination and discomfort among people in a public place. The integration of foundation models into cleaning workflows could help robots detect risky situations to trigger complex behaviors in environments with a dense amount of dynamic obstacles. Exploring such algorithms is also an important future action to improve outdoor cleaning robots.

Author Contributions

Conceptualization, B.F.G., M.A.V.J.M. and M.R.E.; methodology, B.F.G.; software, B.F.G., J.W.S.L. and A.J.; validation, B.F.G., J.W.S.L. and A.J.; formal analysis, B.F.G., M.A.V.J.M., M.R.E. and J.W.S.L.; investigation, B.F.G.; writing—original draft preparation, B.F.G.; writing—review and editing, M.A.V.J.M. and M.R.E.; visualization, B.F.G.; supervision, M.A.V.J.M. and M.R.E.; project administration, M.A.V.J.M. and M.R.E.; funding acquisition, M.R.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the National Robotics Programme under its National Robotics Programme (NRP) BAU, PANTHERA 2.0: Deployable Autonomous Pavement Sweeping Robot through Public Trials, Award No. M23NBK0065, and also supported by A*STAR under its RIE2025 IAF-PP programme, Modular Reconfigurable Mobile Robots (MR)2, Grant No. M24N2a0039.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yang, W. Road-Sweeping Vehicles to Replace Manual Cleaning in More Private Landed Estates. 2025. Available online: https://www.straitstimes.com/singapore/housing/road-sweeping-vehicles-to-replace-manual-cleaning-in-more-private-landed-estates (accessed on 5 June 2025).

- Coastal News. Ride-On Sweepers vs. Traditional Cleaning Methods: Pros and Cons. 2024. Available online: https://www.coastalnews.com.au/house-garden/39395-ride-on-sweepers-vs-traditional-cleaning-methods-pros-and-cons (accessed on 5 June 2025).

- Chang, M.S.; Chou, J.H.; Wu, C.M. Design and implementation of a novel outdoor road-cleaning robot. Adv. Robot. 2010, 24, 85–101. [Google Scholar] [CrossRef]

- Jeon, J.; Jung, B.; Koo, J.C.; Choi, H.R.; Moon, H.; Pintado, A.; Oh, P. Autonomous robotic street sweeping: Initial attempt for curbside sweeping. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics (ICCE), Berlin, Germany, 3–6 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 72–73. [Google Scholar]

- van Kampen, V.; Hoffmeyer, F.; Seifert, C.; Brüning, T.; Bünger, J. Occupational health hazards of street cleaners—A literature review considering prevention practices at the workplace. Int. J. Occup. Med. Environ. Health 2020, 33, 701–732. [Google Scholar] [CrossRef] [PubMed]

- Trombia Technologies. Trombia Free—Autonomous Street Sweeper Product Brief. 2024. Available online: https://trombia.com (accessed on 15 May 2025).

- Idriverplus. S100N “Spring” Autonomous Sweeper Field Trials in Kongsberg. 2022. Available online: https://idriverplus.com (accessed on 15 May 2025).

- Enway GmbH. Autonomous Bucher CityCat V20e Sweeper Approved for Public-Road Use in Singapore. 2021. Press Release, 12 July 2021. Available online: https://enway.ai (accessed on 15 May 2025).

- Choset, H.; Pignon, P. Coverage Path Planning: The Boustrophedon Cell Decomposition. In Proceedings of the Proceedings IEEE International Conference Field and Service Robotics, London, UK, December 1998; pp. 203–209. [Google Scholar]

- Zelinsky, A.; Jarvis, R.A.; Byrne, J.; Yuta, S. Planning Paths of Complete Coverage of an Unstructured Environment by a Mobile Robot. In Proceedings of the International Conference on Advanced Robotics, Atlanta, GA, USA, 2–6 May 1993; pp. 533–538. [Google Scholar]

- Šelek, A.; Seder, M.; Brezak, M.; Petrović, I. Smooth Complete Coverage Trajectory Planning Algorithm for a Nonholonomic Robot. Sensors 2022, 22, 9269. [Google Scholar] [CrossRef] [PubMed]

- Garrido-Castañeda, S.I.; Vasquez, J.I.; Antonio-Cruz, M. Coverage Path Planning Using Actor–Critic Deep Reinforcement Learning. Sensors 2025, 25, 1592. [Google Scholar] [CrossRef] [PubMed]

- Glasius, R.; Komoda, A.; Gielen, S.C. A biologically inspired neural net for trajectory formation and obstacle avoidance. Biol. Cybern. 1996, 74, 511–520. [Google Scholar] [CrossRef] [PubMed]

- Zhu, D.; Tian, C.; Sun, B.; Luo, C. Complete coverage path planning of autonomous underwater vehicle based on GBNN algorithm. J. Intell. Robot. Syst. 2019, 94, 237–249. [Google Scholar] [CrossRef]

- Muthugala, M.V.J.; Samarakoon, S.B.P.; Elara, M.R. Toward energy-efficient online complete coverage path planning of a ship hull maintenance robot based on glasius bio-inspired neural network. Expert Syst. Appl. 2022, 187, 115940. [Google Scholar] [CrossRef]

- Muthugala, M.V.J.; Samarakoon, S.B.P.; Elara, M.R. Online coverage path planning scheme for a size-variable robot. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 5688–5694. [Google Scholar]

- Yi, L.; Wan, A.Y.S.; Le, A.V.; Hayat, A.A.; Tang, Q.R.; Mohan, R.E. Complete coverage path planning for reconfigurable omni-directional mobile robots with varying width using GBNN(n). Expert Syst. Appl. 2023, 228, 120349. [Google Scholar] [CrossRef]

- Muthugala, M.V.J.; Samarakoon, S.B.P.; Wijegunawardana, I.D.; Elara, M.R. Improving Coverage Performance of a Size-reconfigurable Robot based on Overlapping and Reconfiguration Reduction Criteria. In Proceedings of the 2025 IEEE International Conference on Robotics and Automation (ICRA), Atlanta, GA, USA, 19–23 May 2025; IEEE: Piscataway, NJ, USA, 2025. [Google Scholar]

- Wan, A.Y.S.; Yi, L.; Hayat, A.A.; Gen, M.C.; Elara, M.R. Complete area-coverage path planner for surface cleaning in hospital settings using mobile dual-arm robot and GBNN with heuristics. Complex Intell. Syst. 2024, 10, 6767–6785. [Google Scholar] [CrossRef]

- Wang, X.; Xu, C.; Ji, D. Coverage Path Planning for Ship Hull Based on Improved GBNN Algorithm. In Proceedings of the 2024 43rd Chinese Control Conference (CCC), Kunming, China, 28–31 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 4451–4456. [Google Scholar]

- Thanh, H.T.; Viet, T.V.; Duc, T.H.; Quoc, T.D. Control of the street cleaning machine with a side brush for different road surfaces using PID and slick mode controllers. FME Trans. 2023, 51, 318–328. [Google Scholar] [CrossRef]

- Wang, C.; Parker, G. Analysis of rotary brush control characteristics for a road sweeping robot vehicle. In Proceedings of the 2014 International Conference on Mechatronics and Control (ICMC), Jinzhou, China, 3–5 July 2014; pp. 1799–1804. [Google Scholar] [CrossRef]

- Donati, L.; Fontanini, T.; Tagliaferri, F.; Prati, A. An Energy Saving Road Sweeper Using Deep Vision for Garbage Detection. Appl. Sci. 2020, 10, 8146. [Google Scholar] [CrossRef]

- Wang, H.; Wang, C.; Ao, Y.; Zhang, X. Fuzzy control algorithm of cleaning parameters of street sweeper based on road garbage volume grading. Sci. Rep. 2025, 15, 8405. [Google Scholar] [CrossRef] [PubMed]

- Azcarate, R.F.G.; Jayadeep, A.; Zin, A.K.; Lee, J.W.S.; Muthugala, M.V.J.; Elara, M.R. Adaptive Outdoor Cleaning Robot with Real-Time Terrain Perception and Fuzzy Control. Mathematics 2025, 13, 2245. [Google Scholar] [CrossRef]

- Bormann, R.; Weisshardt, F.; Arbeiter, G.; Fischer, J. Autonomous dirt detection for cleaning in office environments. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1260–1267. [Google Scholar]

- Bormann, R.; Wang, X.; Xu, J.; Schmidt, J. Dirtnet: Visual dirt detection for autonomous cleaning robots. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1977–1983. [Google Scholar]

- Canedo, D.; Fonseca, P.; Georgieva, P.; Neves, A.J. A deep learning-based dirt detection computer vision system for floor-cleaning robots with improved data collection. Technologies 2021, 9, 94. [Google Scholar] [CrossRef]

- Singh, I.S.; Wijegunawardana, I.; Samarakoon, S.B.P.; Muthugala, M.V.J.; Elara, M.R. Vision-based dirt distribution mapping using deep learning. Sci. Rep. 2023, 13, 12741. [Google Scholar] [CrossRef] [PubMed]

- Hsia, S.C.; Wang, S.H.; Yeh, J.Y.; Chang, C.Y. A Smart Leaf Blow Robot Based on Deep Learning Model. IEEE Access 2023, 11, 111956–111962. [Google Scholar] [CrossRef]

- Rad, M.S.; von Kaenel, A.; Droux, A.; Tieche, F.; Ouerhani, N.; Ekenel, H.K.; Thiran, J.P. A Computer Vision System to Localize and Classify Wastes on the Streets. In Proceedings of the 2017 International Conference on Computer Vision Systems, Cham, Switzerland, 10–13 July 2017; pp. 195–204. [Google Scholar]

- Gómez, B.F.; Jayadeep, A.; Muthugala, M.A.V.J.; Elara, M.R.; You, W. A Vision-Language Model Approach to Synthetic Data Generation for Outdoor Cleaning Robots; Singapore University of Technology and Design: Singapore, 2025; Manuscript in preparation. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).