RAMHA: A Hybrid Social Text-Based Transformer with Adapter for Mental Health Emotion Classification

Abstract

1. Introduction

Problem Statement

- RAMHA Architecture: We propose a novel multi-stage hybrid model that integrates RoBERTa-large, lightweight adapters, BiLSTM, and attention mechanisms in an interpretable and parameter-efficient framework for mental health classification.

- Emotion-to-Mental Mapping: We perform a psychologically meaningful remapping of an emotion-rich dataset into three diagnostic classes—depression, anxiety, and stress—enhancing real-world applicability.

- Adapter Efficiency: Leveraging Pfeiffer Adapter Fusion, RAMHA fine-tunes task-specific parameters while largely preserving the generalization capabilities of the pretrained language model, with minimal training costs.

- Empirical Verification: RAMHA is evaluated on GoEmotions data and demonstrates superior performance compared to baseline Transformer-based classifiers, particularly in detecting subtle emotional signals indicative of mental health conditions.

- Binary and Ternary Classification: The model addresses both binary classification tasks (e.g., detecting the presence or absence of depression) and ternary classification tasks (e.g., distinguishing between depression, anxiety, and control).

2. Related Work

2.1. Machine Learning Approaches

2.2. Deep Learning Approaches

2.3. Transformer-Based Methods

2.4. Adapter-Based Fine-Tuning

2.5. Attention-Based Hybrid Architectures

3. Methodology and Model Design

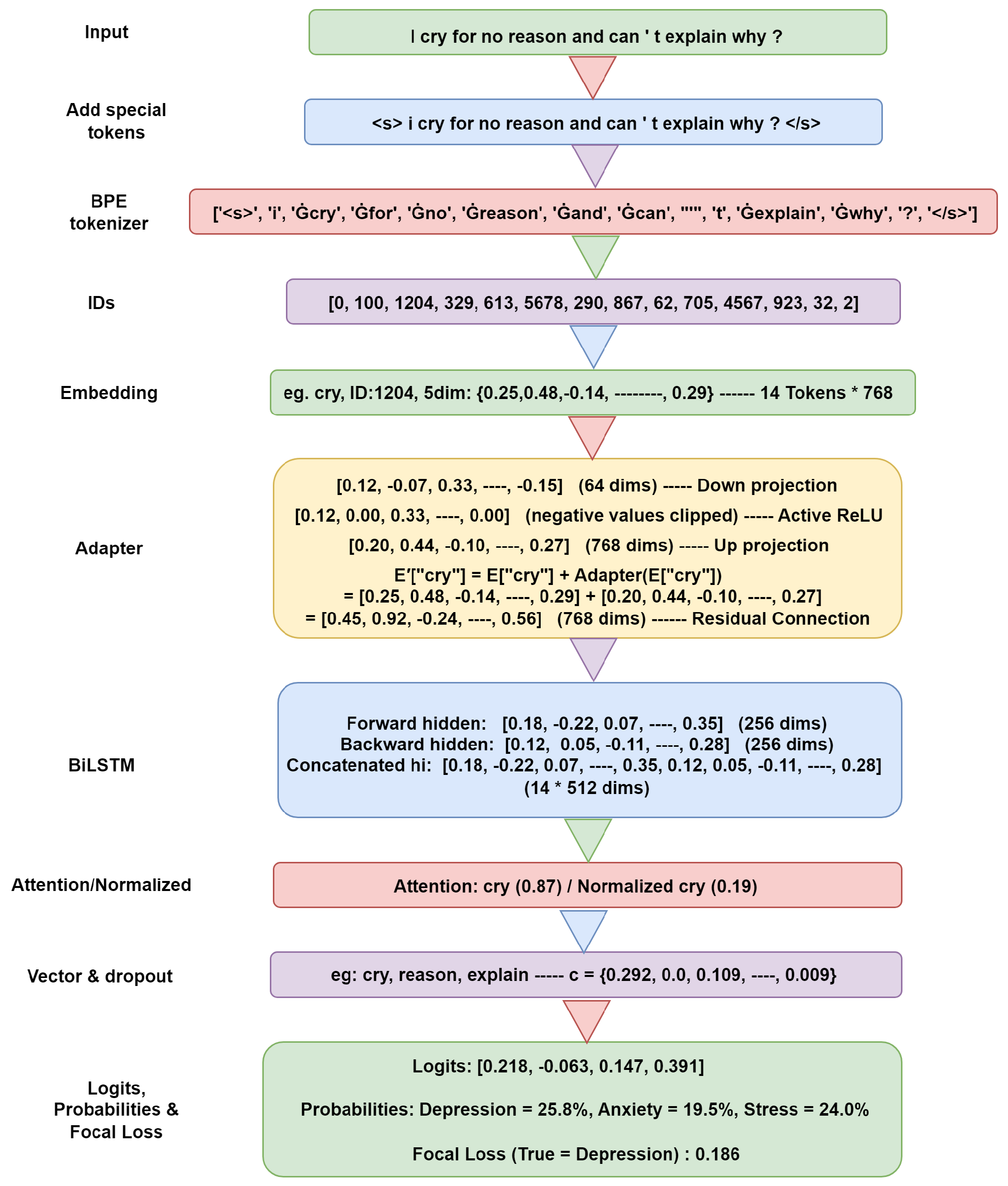

Algorithm 1. RAMHA: RoBERTa–Adapter–BiLSTM–Attention framework.

Require: Input text x

Ensure: Predicted class probabilities p

1–4: …

5: for each hidden state do

6: …

7: end for

8–13: …

14: return p
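As a rough illustration of the attention and classification stages named in Algorithm 1, the sketch below pools a sequence of hidden states into one context vector and turns it into class probabilities. It is a minimal sketch under stated assumptions, not the authors' implementation: the scoring function `w . tanh(h_t)`, the scoring vector `w`, and the toy weights are all hypothetical, and the hidden states stand in for BiLSTM outputs.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, w):
    """Collapse T hidden states (each a list of d floats) into one context vector.

    w is a hypothetical scoring vector of length d (an assumption of this sketch).
    """
    # One scalar score per hidden state: e_t = w . tanh(h_t)
    scores = [sum(wi * math.tanh(hi) for wi, hi in zip(w, h)) for h in hidden_states]
    alphas = softmax(scores)  # attention weights, sum to 1
    d = len(hidden_states[0])
    # Context vector: attention-weighted sum of the hidden states
    context = [sum(a * h[j] for a, h in zip(alphas, hidden_states)) for j in range(d)]
    return context, alphas


def classify(context, weight_rows, bias):
    """Linear layer followed by softmax, giving class probabilities p."""
    logits = [sum(wi * ci for wi, ci in zip(row, context)) + b
              for row, b in zip(weight_rows, bias)]
    return softmax(logits)


# Toy example: three hidden states of dimension 2, three output classes.
H = [[0.1, -0.2], [0.9, 0.4], [0.0, 0.0]]
context, alphas = attention_pool(H, w=[1.0, 1.0])
p = classify(context,
             weight_rows=[[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]],
             bias=[0.0, 0.0, 0.0])
```

The attention weights `alphas` can be inspected per token, which is one way such a layer supports interpretability.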

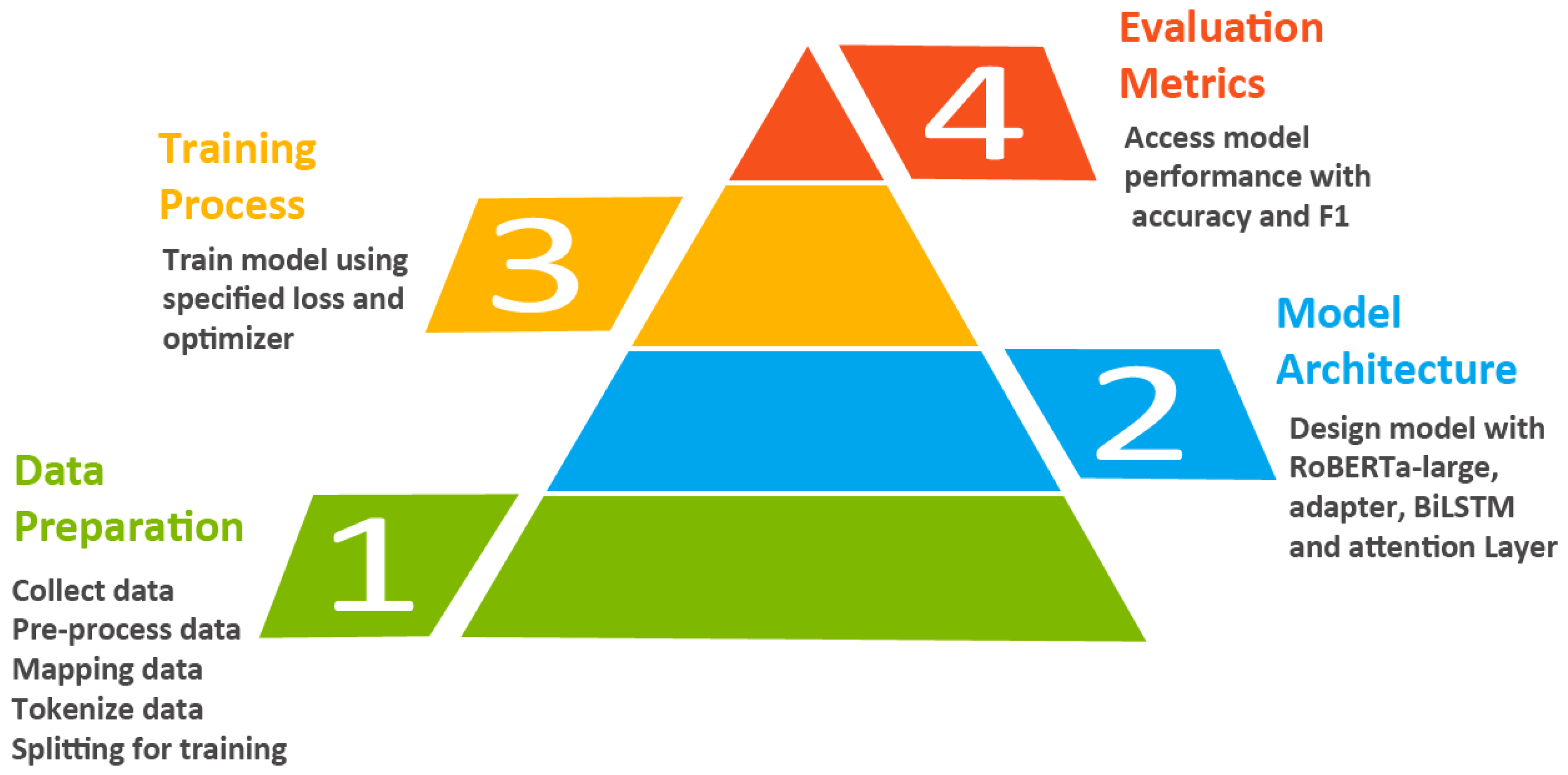

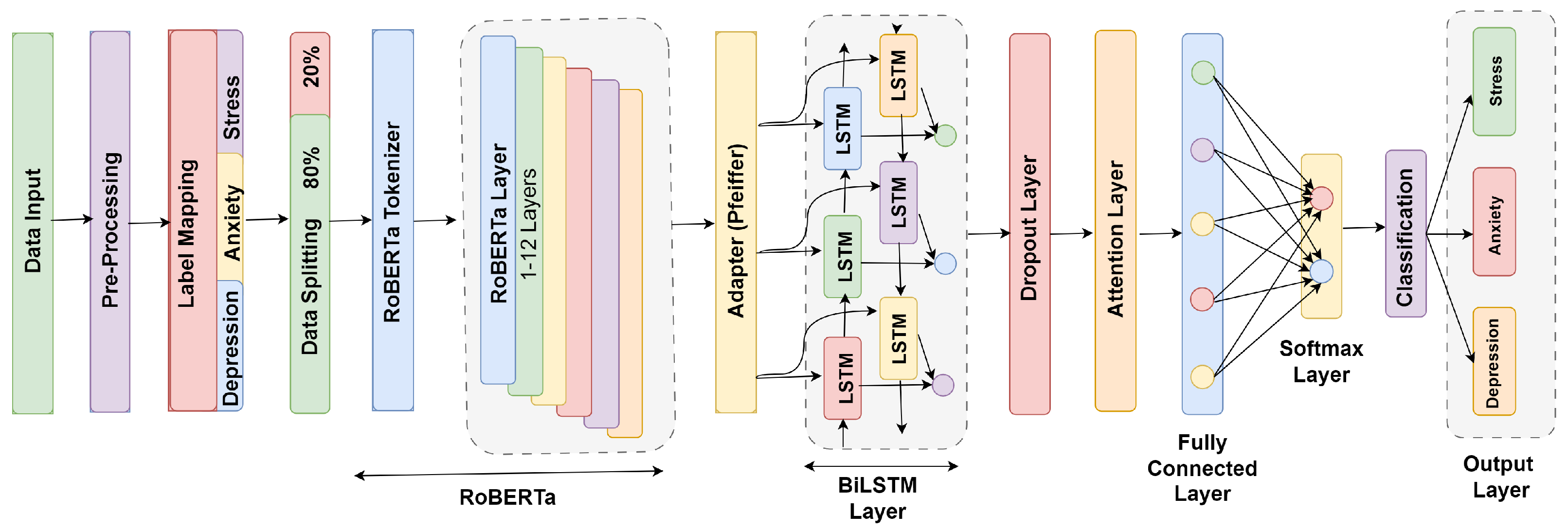

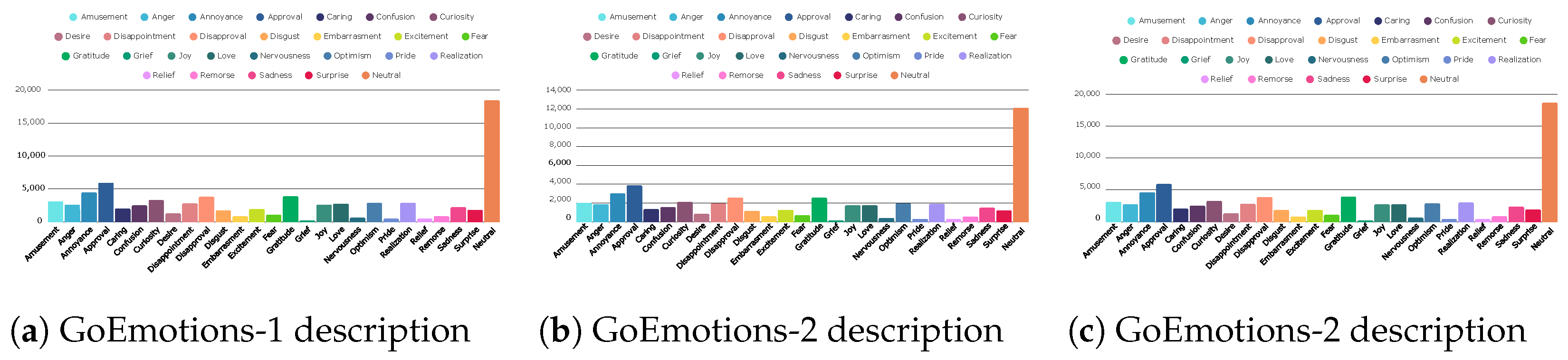

3.1. Data Preparation

- Loading and Preprocessing: The data is read from CSV files. Each text item is cleaned by removing unnecessary punctuation and symbols (dots, commas, special characters), HTML tags, and excess whitespace. This reduces noise in the input before tokenization and downstream modeling.

- Mapping Emotions to Mental Health Categories: A mapping dictionary assigns the original 28 emotion labels to three high-level mental health categories:

  - Depression: sadness, grief, loneliness, and disappointment.

  - Anxiety: fear, nervousness, worry, and embarrassment.

  - Stress: annoyance, confusion, nervousness, disappointment, embarrassment, and surprise.

- Choice of Primary Labels: A single Reddit comment can carry several emotion labels. An argmax operation selects the most prominent mental health label for each sample, which reduces the multi-label setting to a multi-class classification problem.

- Label Encoding: The mental health category labels (depression, anxiety, stress) are encoded as integers (e.g., 0, 1, 2) so that they are compatible with PyTorch models.

- Data Balancing: To mitigate class imbalance in the combined datasets, we applied proportional sampling before splitting so that each target class (depression, anxiety, and stress) has the same number of samples.

- Data Splitting: The dataset was divided into training and validation subsets using an 80/20 split. The validation set was employed to monitor model performance during training and to mitigate the risk of overfitting. To ensure fairness and balanced representation of classes, we applied class-balancing techniques along with focal loss, making the validation set a reliable indicator of the model’s generalization performance, effectively serving as a substitute for a separate test set.
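The preparation steps above can be sketched end to end with the Python standard library. This is a minimal sketch under stated assumptions rather than the authors' pipeline: the mapping is an illustrative subset, "argmax" is interpreted as picking the category that matches the most emotion labels, balancing is done by downsampling to the smallest class, and the helper names (`clean_text`, `primary_label`, `balance`, `split_80_20`) and the toy data are hypothetical.

```python
import random
import re

# Illustrative subset of the emotion-to-category dictionary (not the full mapping).
CATEGORY_EMOTIONS = {
    "depression": {"sadness", "grief", "disappointment"},
    "anxiety": {"fear", "nervousness"},
    "stress": {"annoyance", "confusion"},
}
# Integer label encoding: depression=0, anxiety=1, stress=2.
LABEL_TO_ID = {name: i for i, name in enumerate(CATEGORY_EMOTIONS)}


def clean_text(text):
    """Strip HTML tags, punctuation and symbols, and extra whitespace."""
    text = re.sub(r"<[^>]+>", " ", text)      # HTML tags
    text = re.sub(r"[^\w\s]", " ", text)      # punctuation and special characters
    return re.sub(r"\s+", " ", text).strip()  # collapse whitespace


def primary_label(emotions):
    """Pick the category that matches the most emotions (argmax over match counts)."""
    counts = {cat: sum(e in members for e in emotions)
              for cat, members in CATEGORY_EMOTIONS.items()}
    best = max(counts, key=counts.get)  # ties resolve to the first category listed
    return best if counts[best] > 0 else None


def balance(samples, rng):
    """Downsample every class to the size of the smallest class (an assumption)."""
    by_class = {}
    for text, label in samples:
        by_class.setdefault(label, []).append((text, label))
    n = min(len(items) for items in by_class.values())
    balanced = []
    for items in by_class.values():
        balanced.extend(rng.sample(items, n))
    return balanced


def split_80_20(samples, rng):
    """Shuffle, then split into 80% training and 20% validation."""
    shuffled = samples[:]
    rng.shuffle(shuffled)
    cut = int(0.8 * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


# Toy data: (raw text, emotion labels) pairs with deliberately unequal class sizes.
raw = (
    [("<p>I feel so   alone...</p>", ["sadness", "grief"])] * 10
    + [("What if it goes wrong?!", ["fear", "nervousness", "sadness"])] * 6
    + [("This is SO annoying, and confusing", ["annoyance", "confusion"])] * 5
    + [("Great news :)", ["joy"])] * 4
)

rng = random.Random(0)
labelled = [(clean_text(t), primary_label(e)) for t, e in raw]
labelled = [(t, LABEL_TO_ID[c]) for t, c in labelled if c is not None]  # drop unmapped samples
balanced = balance(labelled, rng)
train, val = split_80_20(balanced, rng)
```

Note that this simple split is not stratified, so the validation subset may not contain exactly equal class counts even after balancing.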

3.2. Tokenization

- Truncation and Padding: Each input sequence is truncated to the maximum sequence length or padded up to it, so that all inputs have the same fixed length.

- Special Tokens: RoBERTa-specific special tokens and padding tokens are added where necessary.

- Token Type and Attention Masks: These are generated automatically by the tokenizer; the attention mask distinguishes real tokens from padding so that attention is computed only over real tokens.
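The fixed-length encoding described above can be illustrated without loading a tokenizer. The sketch below assumes the subword ids have already been produced; the function name `encode_fixed_length` and the example ids are hypothetical, while the special-token ids follow the standard RoBERTa vocabulary (`<s>` = 0, `<pad>` = 1, `</s>` = 2). In practice a library tokenizer would perform these steps.

```python
BOS_ID, PAD_ID, EOS_ID = 0, 1, 2  # <s>, <pad>, </s> in the standard RoBERTa vocabulary


def encode_fixed_length(token_ids, max_length=128):
    """Add <s> and </s>, truncate or pad to max_length, and build the attention mask."""
    body = token_ids[: max_length - 2]      # leave room for the two special tokens
    input_ids = [BOS_ID] + body + [EOS_ID]
    attention_mask = [1] * len(input_ids)   # 1 marks a real token
    pad = max_length - len(input_ids)
    input_ids += [PAD_ID] * pad
    attention_mask += [0] * pad             # 0 marks padding, ignored by attention
    return input_ids, attention_mask


# A short sequence is padded; a long one is truncated (max_length=8 for readability).
short_ids, short_mask = encode_fixed_length([100, 200, 300], max_length=8)
long_ids, long_mask = encode_fixed_length(list(range(10, 30)), max_length=8)
```

Here `short_ids` ends in three padding ids with matching zeros in `short_mask`, while `long_ids` keeps only the first six subword ids between the two special tokens.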

3.3. Model Architecture

3.4. Pretrained Encoder (RoBERTa-Large)

3.5. Adapter Integration: Pfeiffer Configuration

3.6. BiLSTM

3.7. Attention Mechanism

3.8. Dropout

3.9. Classification Head

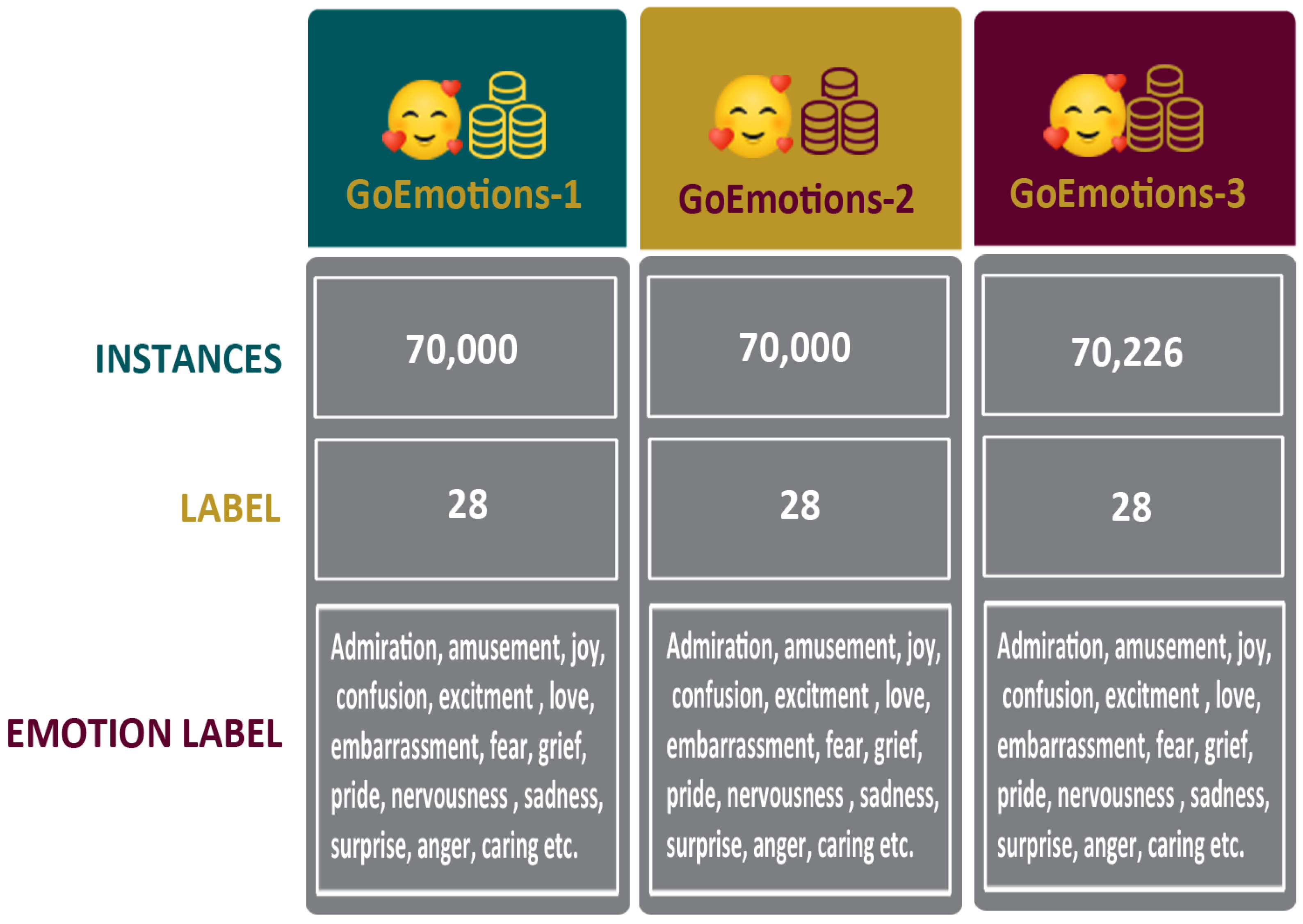

4. Dataset and Hyper-Parameters

4.1. Dataset

4.2. Computational Environment and Hyper-Parameters

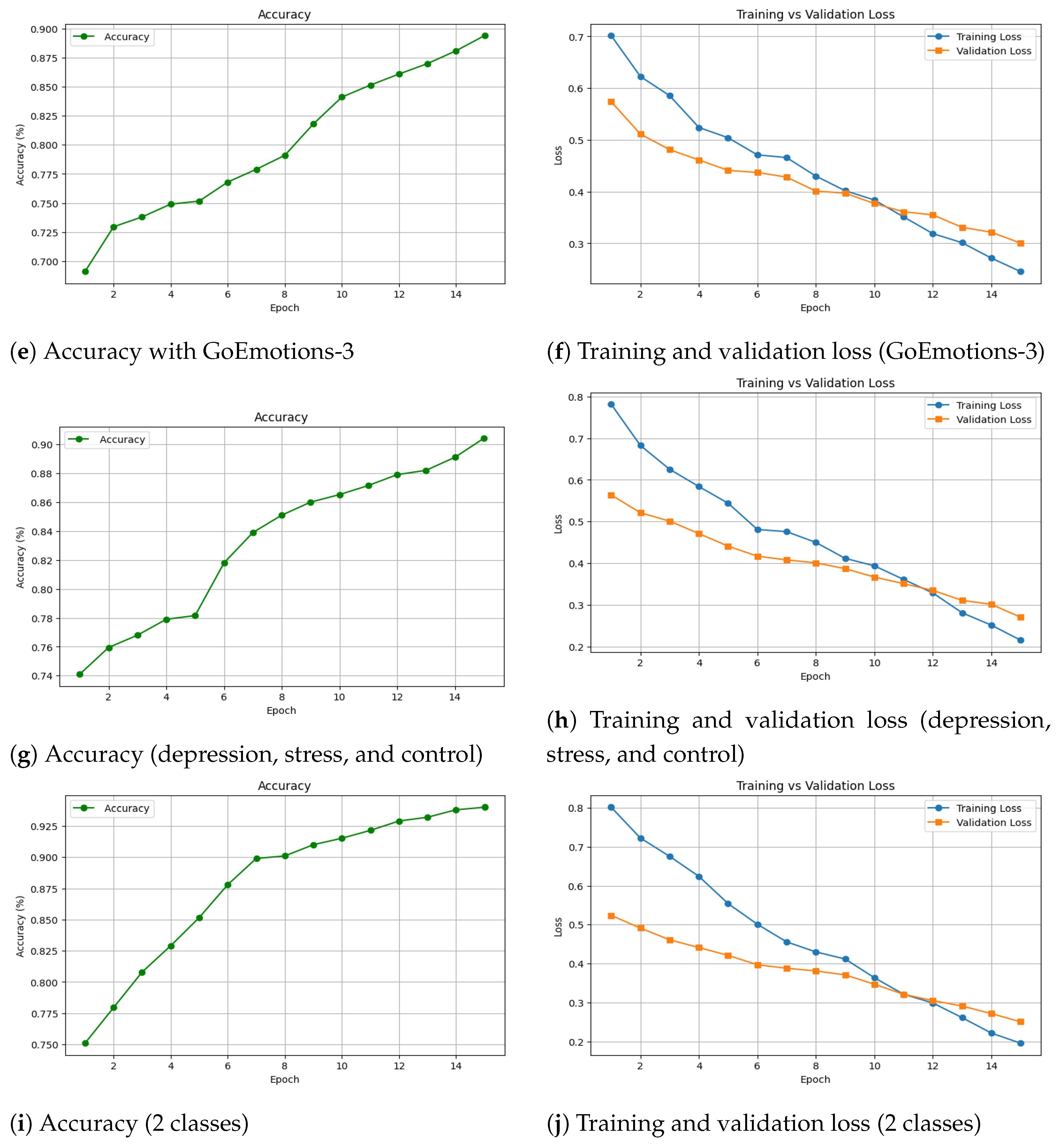

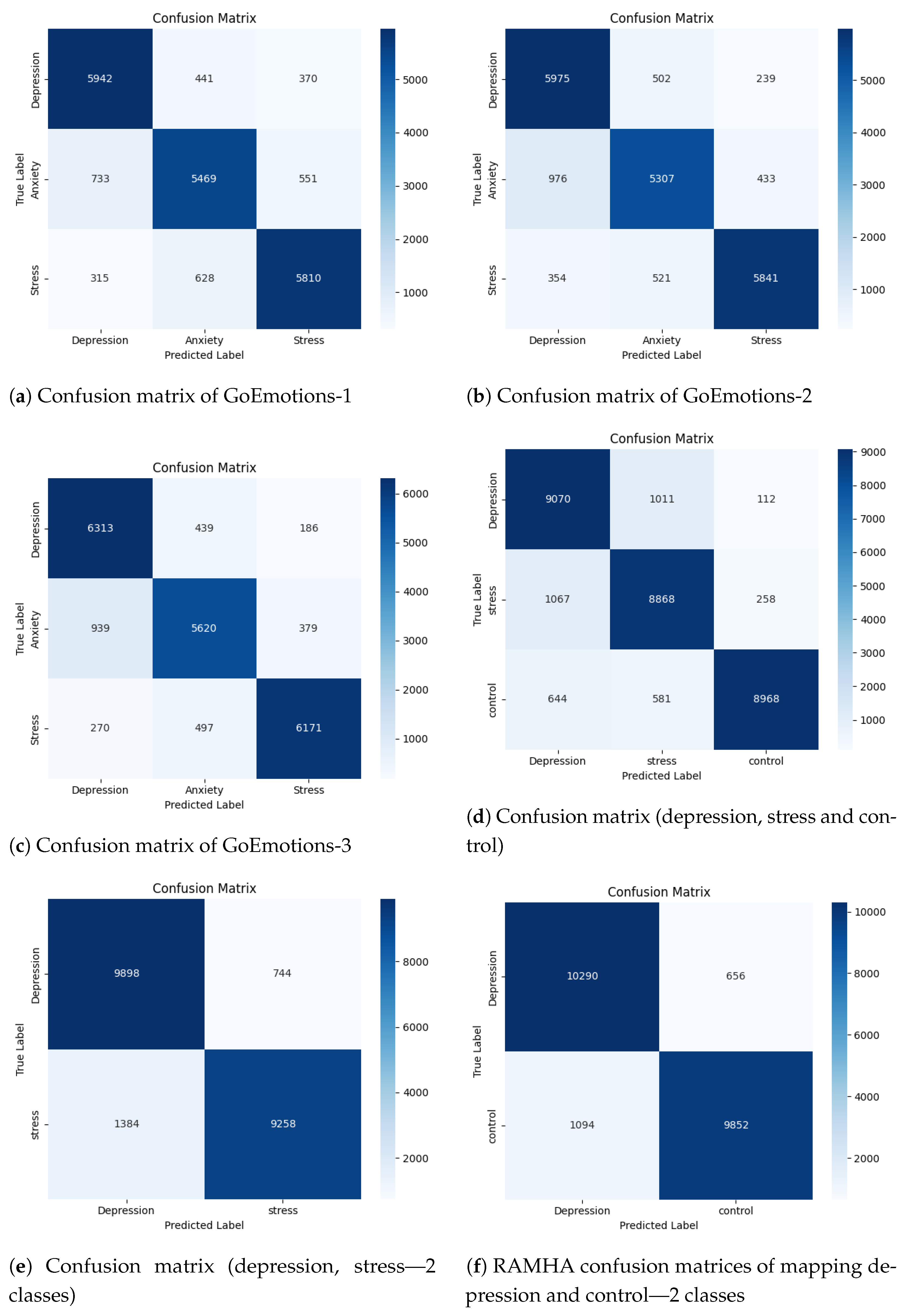

5. Results and Discussion

5.1. Ablation Study

5.2. Comparative Study

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. What Are the Types of Mental Disorders? (my.clevelandclinic.org). Available online: https://my.clevelandclinic.org/health/diseases/22295-mental-health-disorders (accessed on 1 August 2025).

2. International Classification of Diseases (ICD) (who.int). Available online: https://www.who.int/standards/classifications/classification-of-diseases?utm_source=chatgpt.com (accessed on 17 August 2025).

3. Mental Health of Adolescents (who.int). Available online: https://www.who.int/news-room/fact-sheets/detail/adolescent-mental-health (accessed on 1 August 2025).

4. Depressive Disorder (Depression) (who.int). Available online: https://www.who.int/news-room/fact-sheets/detail/depression (accessed on 1 August 2025).

5. Jin, Y.; Liu, J.; Li, P.; Wang, B.; Yan, Y.; Zhang, H.; Ni, C.; Wang, J.; Li, Y.; Bu, Y.; et al. The Applications of Large Language Models in Mental Health: Scoping Review. J. Med. Internet Res. 2025, 27, e69284.

6. Ilias, L.; Mouzakitis, S.; Askounis, D. Calibration of transformer-based models for identifying stress and depression in social media. IEEE Trans. Comput. Soc. Syst. 2023, 11, 1979–1990.

7. Kumar, M.; Khan, L.; Chang, H.T. Evolving techniques in sentiment analysis: A comprehensive review. PeerJ Comput. Sci. 2025, 11, e2592.

8. Khan, L.; Amjad, A.; Afaq, K.M.; Chang, H.T. Deep sentiment analysis using CNN-LSTM architecture of English and Roman Urdu text shared in social media. Appl. Sci. 2022, 12, 2694.

9. Conway, M.; O’Connor, D. Social media, big data, and mental health: Current advances and ethical implications. Curr. Opin. Psychol. 2016, 9, 77–82.

10. Ernala, S.K.; Birnbaum, M.L.; Candan, K.A.; Rizvi, A.F.; Sterling, W.A.; Kane, J.M.; De Choudhury, M. Methodological gaps in predicting mental health states from social media: Triangulating diagnostic signals. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Scotland, UK, 4–9 May 2019; pp. 1–16.

11. Tolentino, J.C.; Schmidt, S.L. DSM-5 criteria and depression severity: Implications for clinical practice. Front. Psychiatry 2018, 9, 450.

12. Hassan, A.U.; Hussain, J.; Hussain, M.; Sadiq, M.; Lee, S. Sentiment analysis of social networking sites (SNS) data using machine learning approach for the measurement of depression. In Proceedings of the 2017 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 18–20 October 2017; pp. 138–140.

13. Khan, L.; Shahreen, M.; Qazi, A.; Jamil Ahmed Shah, S.; Hussain, S.; Chang, H.T. Migraine headache (MH) classification using machine learning methods with data augmentation. Sci. Rep. 2024, 14, 5180.

14. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

15. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. Roberta: A robustly optimized bert pretraining approach. arXiv 2019, arXiv:1907.11692.

16. Pfeiffer, J.; Kamath, A.; Rücklé, A.; Cho, K.; Gurevych, I. Adapterfusion: Non-destructive task composition for transfer learning. arXiv 2020, arXiv:2005.00247.

17. Han, S.; Mao, R.; Cambria, E. Hierarchical attention network for explainable depression detection on Twitter aided by metaphor concept mappings. arXiv 2022, arXiv:2209.07494.

18. Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

19. Kerasiotis, M.; Ilias, L.; Askounis, D. Depression detection in social media posts using transformer-based models and auxiliary features. Soc. Netw. Anal. Min. 2024, 14, 196.

20. Chiong, R.; Budhi, G.S.; Dhakal, S.; Chiong, F. A textual-based featuring approach for depression detection using machine learning classifiers and social media texts. Comput. Biol. Med. 2021, 135, 104499.

21. Kabir, M.; Ahmed, T.; Hasan, M.B.; Laskar, M.T.R.; Joarder, T.K.; Mahmud, H.; Hasan, K. DEPTWEET: A typology for social media texts to detect depression severities. Comput. Hum. Behav. 2023, 139, 107503.

22. Resnik, P.; Armstrong, W.; Claudino, L.; Nguyen, T.; Nguyen, V.A.; Boyd-Graber, J. Beyond LDA: Exploring supervised topic modeling for depression-related language in Twitter. In Proceedings of the 2nd Workshop on Computational Linguistics and Clinical Psychology: From Linguistic Signal to Clinical Reality, Denver, CO, USA, 5 June 2015; pp. 99–107.

23. Coppersmith, G.; Leary, R.; Whyne, E.; Wood, T. Quantifying suicidal ideation via language usage on social media. In Proceedings of the Joint Statistics Meetings Proceedings, Statistical Computing Section, JSM, Seattle, WA, USA, 8–13 August 2015; Volume 110, p. 8.

24. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

25. AlSagri, H.S.; Ykhlef, M. Machine learning-based approach for depression detection in twitter using content and activity features. IEICE Trans. Inf. Syst. 2020, 103, 1825–1832.

26. Sato, J.R.; Moll, J.; Green, S.; Deakin, J.F.; Thomaz, C.E.; Zahn, R. Machine learning algorithm accurately detects fMRI signature of vulnerability to major depression. Psychiatry Res. Neuroimaging 2015, 233, 289–291.

27. Mehmood, F.; Mumtaz, N.; Mehmood, A. Next-Generation Tools for Patient Care and Rehabilitation: A Review of Modern Innovations. Actuators 2025, 14, 133.

28. Amjad, A.; Khan, L.; Chang, H.T. Effect on speech emotion classification of a feature selection approach using a convolutional neural network. PeerJ Comput. Sci. 2021, 7, e766.

29. Amjad, A.; Khan, L.; Ashraf, N.; Mahmood, M.B.; Chang, H.T. Recognizing semi-natural and spontaneous speech emotions using deep neural networks. IEEE Access 2022, 10, 37149–37163.

30. Ashraf, N.; Khan, L.; Butt, S.; Chang, H.T.; Sidorov, G.; Gelbukh, A. Multi-label emotion classification of Urdu tweets. PeerJ Comput. Sci. 2022, 8, e896.

31. Khan, L.; Amjad, A.; Ashraf, N.; Chang, H.T.; Gelbukh, A. Urdu sentiment analysis with deep learning methods. IEEE Access 2021, 9, 97803–97812.

32. Orabi, A.H.; Buddhitha, P.; Orabi, M.H.; Inkpen, D. Deep learning for depression detection of twitter users. In Proceedings of the Fifth Workshop on Computational Linguistics and Clinical Psychology: From Keyboard to Clinic, New Orleans, LA, USA, 5 June 2018; pp. 88–97.

33. Kim, J.; Lee, J.; Park, E.; Han, J. A deep learning model for detecting mental illness from user content on social media. Sci. Rep. 2020, 10, 11846.

34. Mehmood, F.; Mehmood, A.; Whangbo, T.K. Alzheimer’s Disease Detection in Various Brain Anatomies Based on Optimized Vision Transformer. Mathematics 2025, 13, 1927.

35. Amanat, A.; Rizwan, M.; Javed, A.R.; Abdelhaq, M.; Alsaqour, R.; Pandya, S.; Uddin, M. Deep learning for depression detection from textual data. Electronics 2022, 11, 676.

36. Vandana; Marriwala, N.; Chaudhary, D. A hybrid model for depression detection using deep learning. Meas. Sens. 2023, 25, 100587.

37. Karim, M.R.; Syeed, M.M.; Fatema, K.; Hossain, S.; Khan, R.H.; Uddin, M.F. AnxPred: A Hybrid CNN-SVM Model with XAI to Predict Anxiety among University Students. In Proceedings of the 2024 IEEE 17th International Scientific Conference on Informatics (Informatics), Poprad, Slovakia, 13–15 November 2024; pp. 132–137.

38. Khan, L.; Amjad, A.; Ashraf, N.; Chang, H.T. Multi-class sentiment analysis of urdu text using multilingual BERT. Sci. Rep. 2022, 12, 5436.

39. Mitra, S. Suicidal Intention Detection in Tweets Using BERT-Based Transformers. In Proceedings of the 2022 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), Greater Noida, India, 4–5 November 2022.

40. Houlsby, N.; Giurgiu, A.; Jastrzebski, S.; Morrone, B.; De Laroussilhe, Q.; Gesmundo, A.; Attariyan, M.; Gelly, S. Parameter-efficient transfer learning for NLP. In Proceedings of the International Conference on Machine Learning. PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 2790–2799.

41. Qasim, A.; Mehak, G.; Hussain, N.; Gelbukh, A.; Sidorov, G. Detection of depression severity in social media text using transformer-based models. Information 2025, 16, 114.

42. Tavchioski, I.; Robnik-Šikonja, M.; Pollak, S. Detection of depression on social networks using transformers and ensembles. arXiv 2023, arXiv:2305.05325.

43. Pfeiffer, J.; Rücklé, A.; Poth, C.; Kamath, A.; Vulić, I.; Ruder, S.; Cho, K.; Gurevych, I. Adapterhub: A framework for adapting transformers. arXiv 2020, arXiv:2007.07779.

44. Chakravarthi, B.R.; Rajiakodi, S.; Ponnusamy, R.; Sivagnanam, B.; Thakare, S.Y.; Thangasamy, S. Detecting caste and migration hate speech in low-resource Tamil language. In Language Resources and Evaluation; Springer: Cham, Switzerland, 2025; pp. 1–36.

45. Sun, C.; Huang, L.; Qiu, X. Utilizing BERT for aspect-based sentiment analysis via constructing auxiliary sentence. arXiv 2019, arXiv:1903.09588.

46. Qorich, M.; Ouazzani, R.E. BERT-Based Models with BiLSTM for Self-chronic Stress Detection in Tweets. In Proceedings of the International Conference on Artificial Intelligence and Smart Environment, Errachidia, Morocco, 23–25 November 2023; Springer: Cham, Switzerland, 2023; pp. 376–383.

47. Zanwar, S.; Wiechmann, D.; Qiao, Y.; Kerz, E. Exploring hybrid and ensemble models for multiclass prediction of mental health status on social media. arXiv 2022, arXiv:2212.09839.

48. Thekkekara, J.P.; Yongchareon, S.; Liesaputra, V. An attention-based CNN-BiLSTM model for depression detection on social media text. Expert Syst. Appl. 2024, 249, 123834.

49. Hossain, M.M.; Hossain, M.S.; Mridha, M.; Safran, M.; Alfarhood, S. Multi task opinion enhanced hybrid BERT model for mental health analysis. Sci. Rep. 2025, 15, 3332.

50. Khan, L.; Qazi, A.; Chang, H.T.; Alhajlah, M.; Mahmood, A. Empowering Urdu sentiment analysis: An attention-based stacked CNN-Bi-LSTM DNN with multilingual BERT. Complex Intell. Syst. 2025, 11, 10.

51. Sardelich, M.; Manandhar, S. Multimodal deep learning for short-term stock volatility prediction. arXiv 2018, arXiv:1812.10479.

52. Suresh, V.; Ong, D.C. Using knowledge-embedded attention to augment pre-trained language models for fine-grained emotion recognition. In Proceedings of the 2021 9th International Conference on Affective Computing and Intelligent Interaction (ACII), Nara, Japan, 28 September–1 October 2021; pp. 1–8.

53. Wang, K.; Jing, Z.; Su, Y.; Han, Y. Large language models on fine-grained emotion detection dataset with data augmentation and transfer learning. arXiv 2024, arXiv:2403.06108.

54. Singh, G.; Brahma, D.; Rai, P.; Modi, A. Fine-grained emotion prediction by modeling emotion definitions. In Proceedings of the 2021 9th International Conference on Affective Computing and Intelligent Interaction (ACII), Nara, Japan, 28 September–1 October 2021; pp. 1–8.

55. Huang, C.; Trabelsi, A.; Qin, X.; Farruque, N.; Mou, L.; Zaiane, O.R. Seq2Emo: A sequence to multi-label emotion classification model. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 4717–4724.

56. Sitoula, R.S.; Pramanik, M.; Panigrahi, R. Fine-Grained Classification for Emotion Detection Using Advanced Neural Models and GoEmotions Dataset. J. Soft Comput. Data Min. 2024, 5, 62–71.

| Model | Design Highlights | Relation to RAMHA |

|---|---|---|

| BERT–BiLSTM for Stress Detection (2024) [46] | Uses BERT embeddings followed by BiLSTM to detect chronic stress in tweets. | Similar pipeline but lacks attention and adapter modules. RAMHA introduces attention and adapter-based fine-tuning for better interpretability and efficiency. |

| Hybrid Transformer + BiLSTM + Feature Fusion (2022) [47] | Combines BERT/RoBERTa, BiLSTM, and handcrafted linguistic features for multiclass mental health prediction. | Fuses multimodal features but without adapters or focal loss. RAMHA is fully end-to-end and handles class imbalance effectively. |

| Attention-based CNN–BiLSTM for Depression (2024) [48] | Applies an attention-enhanced CNN–BiLSTM network for depression detection on social media text. | Adds attention but lacks Transformer backbone and adapter efficiency; RAMHA uses a Transformer core with adapter-based tuning. |

| Opinion-BERT (2025) [49] | Multi-task model using BERT, CNN/BiGRU, and opinion embeddings for sentiment and mental health status classification. | Hybrid structure with local and sequential layers, but no adapter fine-tuning or focal loss. RAMHA enhances efficiency, generalizability, and imbalance handling. |

| RAMHA (proposed) | RoBERTa-Large with adapter fine-tuning, BiLSTM, attention modules, and focal loss—designed for efficient and robust mental health emotion classification. | Unique integration of adapters, attention, and class-imbalance-aware design. |

| Target Class | Label Used |

|---|---|

| Depression | Sadness, grief, remorse, disgust, disappointment, disapproval |

| Anxiety | Fear, nervousness, embarrassment, desire |

| Stress | Annoyance, anger, realization, confusion |

| Control | Joy, love, optimism, gratitude, pride, relief, admiration, amusement, approval, caring, excitement |

| Hyperparameter | Value / Setting |

|---|---|

| Pretrained Model | RoBERTa-Large |

| Tokenizer | RobertaTokenizer (Max Length: 128) |

| Learning Rate | 2 × |

| Weight Decay | 0.01 |

| Batch Size | 16 |

| Optimizer | AdamW |

| Dropout Rate | 0.5 |

| Learning Rate Scheduler | ReduceLROnPlateau (patience = 2, factor = 0.5) |

| Loss Function | Focal Loss () |

| Number of Epochs | 10 |

| Attention Hidden Size | 512 |

| Maximum Sequence Length | 128 |

| Evaluation Metrics | Accuracy, F1-Score |
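The table lists focal loss as the training objective. For one sample with predicted probability p_t for the true class, focal loss is FL = -α (1 - p_t)^γ log(p_t), which down-weights examples the model already classifies confidently. The sketch below is illustrative only: the values γ = 2.0 and α = 1.0 are placeholder assumptions, not settings taken from this paper, and the function names are hypothetical.

```python
import math


def cross_entropy(probs, target):
    """Standard cross-entropy for one sample: -log(p_t)."""
    return -math.log(probs[target])


def focal_loss(probs, target, gamma=2.0, alpha=1.0):
    """Focal loss for one sample: -alpha * (1 - p_t)^gamma * log(p_t).

    gamma and alpha are placeholder values chosen for illustration.
    """
    p_t = probs[target]
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


# An easy sample (true class predicted with 0.9) and a hard one (0.1).
easy = [0.9, 0.05, 0.05]
hard = [0.1, 0.6, 0.3]

easy_fl, easy_ce = focal_loss(easy, 0), cross_entropy(easy, 0)
hard_fl, hard_ce = focal_loss(hard, 0), cross_entropy(hard, 0)
```

With γ = 0 and α = 1 the focal loss reduces to cross-entropy. For larger γ, the easy sample's loss shrinks far more than the hard sample's, so training focuses on difficult and minority-class examples.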

| Model | GoEmotions-1 Accuracy | GoEmotions-1 Precision | GoEmotions-1 Recall | GoEmotions-1 F1-Score | GoEmotions-2 Accuracy | GoEmotions-2 Precision | GoEmotions-2 Recall | GoEmotions-2 F1-Score | GoEmotions-3 Accuracy | GoEmotions-3 Precision | GoEmotions-3 Recall | GoEmotions-3 F1-Score |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SVM | 0.66 | 0.61 | 0.59 | 0.59 | 0.67 | 0.62 | 0.60 | 0.61 | 0.66 | 0.61 | 0.60 | 0.59 |

| LR | 0.67 | 0.61 | 0.58 | 0.59 | 0.68 | 0.63 | 0.61 | 0.61 | 0.68 | 0.63 | 0.60 | 0.59 |

| NB | 0.61 | 0.60 | 0.55 | 0.54 | 0.61 | 0.60 | 0.54 | 0.54 | 0.62 | 0.61 | 0.55 | 0.54 |

| LSTM | 0.71 | 0.68 | 0.66 | 0.67 | 0.71 | 0.69 | 0.67 | 0.67 | 0.72 | 0.70 | 0.68 | 0.67 |

| R-CNN | 0.72 | 0.69 | 0.67 | 0.68 | 0.70 | 0.68 | 0.68 | 0.68 | 0.72 | 0.70 | 0.67 | 0.68 |

| GRU | 0.73 | 0.70 | 0.68 | 0.69 | 0.73 | 0.69 | 0.68 | 0.68 | 0.74 | 0.71 | 0.69 | 0.69 |

| Bi-GRU | 0.75 | 0.72 | 0.71 | 0.71 | 0.74 | 0.71 | 0.70 | 0.69 | 0.74 | 0.72 | 0.70 | 0.69 |

| BERT | 0.78 | 0.76 | 0.74 | 0.75 | 0.77 | 0.75 | 0.74 | 0.75 | 0.77 | 0.74 | 0.75 | 0.75 |
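Accuracy, precision, recall, and F1-score of the kind reported in these tables can be computed from predicted and true labels as sketched below. The averaging scheme is not stated here, so macro averaging (an unweighted mean over classes) is an assumption of this sketch, and the function name and toy labels are hypothetical.

```python
def classification_metrics(y_true, y_pred, labels):
    """Return accuracy and macro-averaged precision, recall, and F1."""
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    precisions, recalls, f1s = [], [], []
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)  # true positives for class c
        fp = sum(t != c and p == c for t, p in pairs)  # false positives
        fn = sum(t == c and p != c for t, p in pairs)  # false negatives
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)

    n = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Toy labels: 0 = depression, 1 = anxiety, 2 = stress.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
metrics = classification_metrics(y_true, y_pred, labels=[0, 1, 2])
```

Macro averaging treats every class equally regardless of its size, which is a common choice when classes have been balanced beforehand.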

| Model | GoEmotions-1 Accuracy | GoEmotions-1 Precision | GoEmotions-1 Recall | GoEmotions-1 F1-Score | GoEmotions-2 Accuracy | GoEmotions-2 Precision | GoEmotions-2 Recall | GoEmotions-2 F1-Score | GoEmotions-3 Accuracy | GoEmotions-3 Precision | GoEmotions-3 Recall | GoEmotions-3 F1-Score |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BERT | 0.78 | 0.76 | 0.74 | 0.75 | 0.77 | 0.75 | 0.74 | 0.75 | 0.77 | 0.74 | 0.75 | 0.75 |

| DistilBERT | 0.78 | 0.75 | 0.73 | 0.74 | 0.78 | 0.74 | 0.73 | 0.74 | 0.79 | 0.76 | 0.75 | 0.75 |

| ALBERT | 0.79 | 0.76 | 0.75 | 0.75 | 0.78 | 0.76 | 0.74 | 0.74 | 0.79 | 0.76 | 0.75 | 0.75 |

| XLNET | 0.80 | 0.77 | 0.76 | 0.76 | 0.79 | 0.77 | 0.75 | 0.75 | 0.81 | 0.77 | 0.77 | 0.77 |

| RoBERTa | 0.81 | 0.79 | 0.78 | 0.79 | 0.80 | 0.78 | 0.77 | 0.77 | 0.81 | 0.79 | 0.78 | 0.78 |

| RoBERTa+BiLSTM | 0.83 | 0.80 | 0.80 | 0.80 | 0.81 | 0.78 | 0.77 | 0.78 | 0.83 | 0.79 | 0.79 | 0.80 |

| RAMHA (our Model) | 0.88 | 0.86 | 0.85 | 0.85 | 0.87 | 0.85 | 0.84 | 0.85 | 0.89 | 0.87 | 0.86 | 0.87 |

| RAMHA with Different Class Combinations | GoEmotions-1 Accuracy | GoEmotions-1 Precision | GoEmotions-1 Recall | GoEmotions-1 F1-Score | GoEmotions-2 Accuracy | GoEmotions-2 Precision | GoEmotions-2 Recall | GoEmotions-2 F1-Score | GoEmotions-3 Accuracy | GoEmotions-3 Precision | GoEmotions-3 Recall | GoEmotions-3 F1-Score |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Depression, stress, Anxiety (3 classes) | 0.88 | 0.86 | 0.85 | 0.85 | 0.87 | 0.85 | 0.84 | 0.85 | 0.89 | 0.87 | 0.86 | 0.87 |

| Depression, stress, control (3 classes) | 0.90 | 0.88 | 0.87 | 0.88 | 0.89 | 0.88 | 0.87 | 0.87 | 0.90 | 0.88 | 0.88 | 0.88 |

| Depression, stress (2 classes) | 0.92 | 0.89 | 0.91 | 0.90 | 0.91 | 0.89 | 0.88 | 0.90 | 0.91 | 0.89 | 0.90 | 0.90 |

| Depression, control (2 classes) | 0.93 | 0.91 | 0.92 | 0.92 | 0.92 | 0.90 | 0.91 | 0.91 | 0.93 | 0.91 | 0.92 | 0.91 |

| RAMHA Model Components | GoEmotions-1 Accuracy | GoEmotions-1 Precision | GoEmotions-1 Recall | GoEmotions-1 F1-Score | GoEmotions-2 Accuracy | GoEmotions-2 Precision | GoEmotions-2 Recall | GoEmotions-2 F1-Score | GoEmotions-3 Accuracy | GoEmotions-3 Precision | GoEmotions-3 Recall | GoEmotions-3 F1-Score |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| RoBERTa | 0.81 | 0.79 | 0.78 | 0.79 | 0.80 | 0.78 | 0.77 | 0.77 | 0.81 | 0.79 | 0.78 | 0.78 |

| RoBERTa +Adapter (Pfeiffer) | 0.82 | 0.80 | 0.79 | 0.80 | 0.80 | 0.79 | 0.78 | 0.79 | 0.82 | 0.80 | 0.79 | 0.80 |

| RoBERTa+BiLSTM | 0.83 | 0.80 | 0.80 | 0.80 | 0.81 | 0.78 | 0.77 | 0.78 | 0.83 | 0.79 | 0.79 | 0.80 |

| RoBERTa +Adapter (Pfeiffer) + BiLSTM | 0.84 | 0.82 | 0.81 | 0.82 | 0.83 | 0.80 | 0.80 | 0.80 | 0.83 | 0.81 | 0.80 | 0.81 |

| RoBERTa +Adapter (Pfeiffer) + BiLSTM + Attention | 0.85 | 0.83 | 0.81 | 0.83 | 0.84 | 0.82 | 0.81 | 0.82 | 0.85 | 0.83 | 0.82 | 0.83 |

| RAMHA (our Model) | 0.88 | 0.86 | 0.85 | 0.85 | 0.87 | 0.85 | 0.84 | 0.85 | 0.89 | 0.87 | 0.86 | 0.87 |

| Study | Year | Dataset | Method | F1-Score |

|---|---|---|---|---|

| [52] | 2022 | EmpatheticDialogues, GoEmotions, Affect in Tweets | SBERT FT+WordNet | 49.00 |

| [52] | 2021 | EmpatheticDialogues, GoEmotions, Affect in Tweets | KEA-ELECTRA | 49.60 |

| [53] | 2024 | GoEmotions | BERT | 52.00 |

| [54] | 2021 | GoEmotions | BERT+MLM | 51.25 |

| [54] | 2021 | GoEmotions | MLM+BERT+CDP | 51.96 |

| [54] | 2021 | GoEmotions | BERT+CDP | 52.34 |

| [55] | 2021 | SemEval18+GoEmotions | BERT | 58.49 |

| [55] | 2021 | SemEval18+GoEmotions | Seq2Emo | 59.57 |

| [56] | 2024 | GoEmotions | CNN+BERT+RoBERTa | 84.58 |

| RAMHA (Our Proposed model) | 2025 | GoEmotions | RoBERTa+adapter+BiLSTM | 87.00 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kumar, M.; Khan, L.; Choi, A. RAMHA: A Hybrid Social Text-Based Transformer with Adapter for Mental Health Emotion Classification. Mathematics 2025, 13, 2918. https://doi.org/10.3390/math13182918
