Abstract

Partially linear time series models often suffer from multicollinearity among regressors and autocorrelated errors, both of which can inflate estimation risk. This study introduces a generalized ridge-type kernel (GRTK) framework that combines kernel smoothing with ridge shrinkage and augments it through ordinary and positive-part Stein adjustments. Closed-form expressions and large-sample properties are established, and data-driven criteria—including GCV, AICc, BIC, and RECP—are used to tune the bandwidth and shrinkage penalties. Monte-Carlo simulations indicate that the proposed procedures usually reduce risk relative to existing semiparametric alternatives, particularly when the predictors are strongly correlated and the error process is dependent. An empirical study of US airline-delay data further demonstrates that GRTK produces a stable, interpretable fit, captures a nonlinear air-time effect overlooked by conventional approaches, and leaves only a modest residual autocorrelation. By tackling multicollinearity and autocorrelation within a single, flexible estimator, the GRTK family offers practitioners a practical avenue for more reliable inference in partially linear time series settings.

Keywords:

shrinkage estimation; partially linear models; multicollinearity; ridge-type kernel smoother; parameter selection

MSC:

62G08; 62J07; 62M10

1. Introduction

In many time series applications, one must balance interpretability and flexibility in the modeling. Partially linear regression models achieve this by expressing the response as a parametric linear function of some covariates alongside a nonparametric smooth function of an additional variable. Specifically, we consider the following model setup:

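$$y_t=\mathbf{x}_t^{\top}\boldsymbol{\beta}+f(z_t)+\varepsilon_t,\qquad t=1,\dots,n,\qquad(1)$$

where $y_t$ denotes the stationary response at time $t$, and its prediction depends on the $p$-dimensional vector of explanatory variables $\mathbf{x}_t=(x_{t1},\dots,x_{tp})^{\top}$ and an extra univariate variable $z_t$. Also, $\boldsymbol{\beta}=(\beta_1,\dots,\beta_p)^{\top}$ is an unknown $p$-dimensional parameter vector to be estimated, $f(\cdot)$ is an unknown smooth function, and $\varepsilon_t$ are the error terms.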

Unlike classical linear models, (1) offers the interpretability of $\boldsymbol{\beta}$ while retaining the capacity to model complex, nonlinear effects through $f(\cdot)$ (see [1]). However, two major issues often arise:

- Multicollinearity among the explanatory variables $\mathbf{x}_t$. When the columns of the design matrix $\mathbf{X}$ are nearly linearly dependent, ordinary least squares (OLS) or unpenalized nonparametric estimators can have extremely high variances [2].

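- Autocorrelation or dependence among the errors $\varepsilon_t$, as is common in time series or longitudinal settings. We capture this by a first-order autoregressive process,

$$\varepsilon_t=\rho\,\varepsilon_{t-1}+u_t,\qquad |\rho|<1,\qquad(2)$$

where the innovations $u_t$ are i.i.d. with mean zero and variance $\sigma^{2}$, ensuring that $\varepsilon_t$ is stationary and serially dependent over time ([3,4]).

In addition, to simplify the notation, model (1) can be written in matrix–vector form as

$$\mathbf{y}=\mathbf{X}\boldsymbol{\beta}+\mathbf{f}+\boldsymbol{\varepsilon},\qquad(3)$$

where $\mathbf{y}=(y_1,\dots,y_n)^{\top}$, $\mathbf{X}=(\mathbf{x}_1,\dots,\mathbf{x}_n)^{\top}$, $\mathbf{f}=(f(z_1),\dots,f(z_n))^{\top}$ and $\boldsymbol{\varepsilon}=(\varepsilon_1,\dots,\varepsilon_n)^{\top}$. The main goal is to estimate the unknown parameter vector $\boldsymbol{\beta}$, the smooth function $f(\cdot)$ and the mean vector $\boldsymbol{\mu}=\mathbf{X}\boldsymbol{\beta}+\mathbf{f}$ based on the observations $\{(y_t,\mathbf{x}_t^{\top},z_t)\}_{t=1}^{n}$. Partially linear models make the effect of each variable easy to interpret and, owing to the "curse of dimensionality", can be preferred to a fully nonparametric regression model. They are also more practical than the classical linear model because they combine a parametric part, reported numerically, with a nonparametric part, displayed graphically.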

Even though partially linear models have specific advantages, they typically assume uncorrelated errors ([1,5]). In real-world time series or panel data, ignoring the dependence described in (2) can degrade efficiency and produce biased inferences ([6,7]). Moreover, multicollinearity among the columns of $\mathbf{X}$ amplifies these problems, inflating variances to the point that estimates become unreliable ([8,9]).

Despite steady progress on partially linear models, two shortcomings persist. First, kernel-based estimators that handle autocorrelated errors (e.g., efficient GLS-type fits [4]) leave the multicollinearity problem untreated. Second, ridge or Stein-type shrinkage estimators developed for i.i.d. settings ([8,10]) do not accommodate serial dependence. To our knowledge, no existing method tackles collinearity and autocorrelation simultaneously within a single, closed-form estimator, nor do prior studies examine how data-driven criteria jointly tune the kernel bandwidth and the shrinkage weight. Filling this gap is the focus of the present work. Accordingly, our objective is to develop and rigorously evaluate a unified estimator that stabilizes the parametric coefficients under multicollinearity, adapts to unknown nonlinear structure, and remains efficient in the presence of autoregressive errors.

To mitigate these issues, we adopt ridge-type kernel smoothing for the parametric component. In essence, a shrinkage penalty is added so that the linear portion is "shrunk" toward zero to stabilize the estimation. For instance, a ridge-type kernel (RTK) estimator of $\boldsymbol{\beta}$ can be expressed (after a suitable data transformation) as in (6), which has been studied extensively by [11]. This ridge-inspired framework stems from Stein-type shrinkage theory, which shows that biased estimators can dominate unbiased ones, particularly in moderate-to-high dimensions or under strong correlation ([12,13]). Indeed, Stein's paradox reveals that, for dimension $p\ge 3$, shrinking an estimator can lower the overall mean squared error despite introducing bias, a phenomenon explored extensively by [12,14,15].

When errors follow (2), we further incorporate transformations to handle the autocovariance structure, yielding generalized ridge-type kernel (GRTK) estimators that exploit the correlation $\rho$. These GRTK methods align with earlier biased estimation approaches for linear or partially linear models with correlated errors ([10,13,16]). By merging Stein-type shrinkage logic with kernel smoothing, one can obtain robust estimators of both $\boldsymbol{\beta}$ and $f$ that address multicollinearity and autocorrelation in a unified fashion.

In this paper, we formalize and extend such modified ridge-type kernel smoothing estimators for partially linear time series models. We focus on:

- The precise data transformation to accommodate (2).

- The interplay between the shrinkage parameter $k$ and the bandwidth $\lambda$ of the kernel smoother.

- Selecting these tuning parameters $(\lambda,k)$ using multiple criteria, including GCV, AICc, BIC, and RECP.

This framework is motivated by the broader research of [17] on shrinkage-based variable selection and penalty methods, which shows that penalized procedures (including ridge, lasso, and Stein-like estimators) can vastly reduce variance in semiparametric regressions, particularly with correlated data or limited samples. Consequently, the main goal is to fill a gap in semiparametric regression by uniting autoregressive error modeling (2), the handling of multicollinearity, and Stein-type shrinkage in kernel-based estimation. Based on the focus stated above, the paper makes the following contributions:

- We introduce a generalized ridge-type kernel (GRTK) estimator that embeds an $L_2$ penalty inside each kernel-weighted fit, yielding a closed-form solution that simultaneously controls collinearity and accounts for AR(1) errors.

- Under mild regularity conditions we derive the closed-form bias, variance and risk expansions, and prove that GRTK dominates the unpenalised local-linear estimator when the condition number of the local design exceeds a modest threshold.

- We show how four information-based criteria (GCV, AICc, BIC, RECP) can jointly choose the kernel bandwidth and ridge weight—an aspect unexplored in earlier work.

- Extensive simulations and an airline-delay application demonstrate that GRTK and its Stein-type shrinkage extensions yield more stable coefficient estimates and cleaner residual series than existing semiparametric alternatives.

The rest of the paper is organized as follows. Section 2 explains how we fit the autoregressive structure (2) and handle the collinearity. Section 3 provides the mathematical formulations of our GRTK shrinkage estimators for both $\boldsymbol{\beta}$ and the smooth function $f$, including their asymptotic properties. Section 4 details the procedures for choosing the shrinkage parameter $k$ and the bandwidth $\lambda$. Section 5 illustrates, through an extensive simulation study and an airline-delay time series dataset, how the proposed methodology outperforms traditional methods under multicollinearity and autocorrelation. Finally, Section 6 concludes by discussing key findings and future research directions.

2. Fitting the Model Error Structure

In partially linear time series models, two phenomena often inflate the estimation risk: high correlation among the explanatory variables and serial dependence in the errors. A local-kernel fit alone addresses the non-parametric component but inherits numerical instability when the local design matrix is nearly singular. Conversely, ordinary ridge regression stabilises the parametric fit but cannot capture an unknown smooth function $f$. Combining the two resolves both issues: the ridge penalty absorbs multicollinearity at each kernel centre, while the kernel weights allow $f$ to vary flexibly with $z$. The resulting estimator admits a closed-form solution, and its bias–variance balance can be tuned automatically through data-driven information criteria. This synergy yields a practical, single-step procedure that is robust to ill-conditioning yet retains the local adaptivity required for non-linear structure.

Assume that $\{(y_t,\mathbf{x}_t^{\top},z_t)\}_{t=1}^{n}$ is a realization from a stationary time series described by model (1) with autoregressive error terms satisfying the following assumption.

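Assumption 1.

The error $\varepsilon_t$ is a stationary dependent sequence with $E(\varepsilon_t)=0$ and $E(\varepsilon_t^{2})\le C$ for some constant $0<C<\infty$. Let $\boldsymbol{\varepsilon}=(\varepsilon_1,\dots,\varepsilon_n)^{\top}$ and $E(\boldsymbol{\varepsilon}\boldsymbol{\varepsilon}^{\top})=\sigma^{2}\mathbf{V}$, where $\mathbf{V}$ is an $n\times n$ positive definite symmetric matrix.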

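Here, Assumption 1 is standard in time series analysis because it allows stationary errors with finite variance to be modeled; such an assumption is common in time series models where the statistical properties of the error term remain constant over time. Note that, with Gaussian innovations, the autoregressive errors follow an $n$-dimensional multivariate normal distribution with mean zero and a stationary covariance matrix, which we write equivalently as $\boldsymbol{\varepsilon}\sim N_n(\mathbf{0},\sigma^{2}\mathbf{V})$, where $\mathbf{V}$ is the covariance matrix (up to the factor $\sigma^{2}$) given by

$$\mathbf{V}=\frac{1}{1-\rho^{2}}\begin{pmatrix}1&\rho&\rho^{2}&\cdots&\rho^{n-1}\\ \rho&1&\rho&\cdots&\rho^{n-2}\\ \vdots&\vdots&\vdots&\ddots&\vdots\\ \rho^{n-1}&\rho^{n-2}&\rho^{n-3}&\cdots&1\end{pmatrix},\qquad(4)$$

where $\rho$ denotes the correlation between $\varepsilon_t$ and $\varepsilon_{t-1}$, as defined in (2). It should be emphasized that once we have an estimate $\hat{\boldsymbol{\beta}}$ of the parameter vector and an estimator $\hat f$ of the unknown smooth function, the parameter $\rho$ can be estimated from the residuals of the semiparametric regression, defined as

$$\hat\varepsilon_t=y_t-\mathbf{x}_t^{\top}\hat{\boldsymbol{\beta}}-\hat f(z_t),\qquad t=1,\dots,n.\qquad(5)$$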
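The AR(1) matrix $\mathbf{V}$ in (4) can be built directly from its entries $\rho^{|i-j|}/(1-\rho^{2})$. The following is a minimal NumPy sketch, assuming unit innovation variance so that $\mathrm{Cov}(\boldsymbol{\varepsilon})=\sigma^{2}\mathbf{V}$; the function name `ar1_covariance` is our own and not part of any library.

```python
import numpy as np


def ar1_covariance(rho: float, n: int) -> np.ndarray:
    """Return V with entries rho**|i - j| / (1 - rho**2) for a stationary AR(1)."""
    if not abs(rho) < 1.0:
        raise ValueError("stationarity requires |rho| < 1")
    idx = np.arange(n)
    lags = np.abs(idx[:, None] - idx[None, :])  # |i - j| for every pair (i, j)
    return rho ** lags / (1.0 - rho ** 2)


V = ar1_covariance(0.6, 5)
print(np.round(V, 4))
```

In practice $\rho$ is unknown and would be replaced by an estimate $\hat\rho$ obtained from the residuals in (5).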

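There are several methods to estimate $\boldsymbol{\beta}$ and $f$. In this work, we adopt the ridge-type kernel (RTK) method discussed by [11], which generalizes the partial kernel method proposed by [14]. Specifically, the RTK estimator of $\boldsymbol{\beta}$ has the form

$$\hat{\boldsymbol{\beta}}_{RTK}=\left(\tilde{\mathbf{X}}^{\top}\tilde{\mathbf{X}}+k\mathbf{I}_p\right)^{-1}\tilde{\mathbf{X}}^{\top}\tilde{\mathbf{y}},\qquad(6)$$

where $k>0$ is a shrinkage parameter, $\mathbf{I}_p$ is the $p\times p$ identity matrix, $\tilde{\mathbf{X}}=(\mathbf{I}_n-\mathbf{S}_\lambda)\mathbf{X}$, $\tilde{\mathbf{y}}=(\mathbf{I}_n-\mathbf{S}_\lambda)\mathbf{y}$, and $\mathbf{S}_\lambda$ is a kernel smoother matrix of weights satisfying Assumption 4, with entries

$$[\mathbf{S}_\lambda]_{ij}=\frac{K\left((z_i-z_j)/\lambda\right)}{\sum_{l=1}^{n}K\left((z_i-z_l)/\lambda\right)},\qquad i,j=1,\dots,n,\qquad(7)$$

where $\lambda>0$ is a bandwidth parameter to be selected, and $K(\cdot)$ is the kernel function defined in Remark 2; for example, $K$ could be the Gaussian or the Epanechnikov kernel. Then, an estimator of the nonparametric part is obtained by

$$\hat{\mathbf{f}}_{RTK}=\mathbf{S}_\lambda\left(\mathbf{y}-\mathbf{X}\hat{\boldsymbol{\beta}}_{RTK}\right).\qquad(8)$$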
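The RTK fit in (6)–(8) takes only a few lines of NumPy. This is a sketch under stated assumptions: we take the smoother in (7) to be the Nadaraya–Watson form with row-normalised Gaussian kernel weights, and the data, bandwidth and $k$ below are illustrative only; `smoother_matrix` and `rtk_fit` are hypothetical helper names.

```python
import numpy as np


def smoother_matrix(z, lam):
    """Nadaraya-Watson weights K((z_i - z_j)/lam), normalised so each row sums to 1."""
    u = (z[:, None] - z[None, :]) / lam
    w = np.exp(-0.5 * u ** 2)  # Gaussian kernel; its constant cancels in the normalisation
    return w / w.sum(axis=1, keepdims=True)


def rtk_fit(X, y, z, lam, k):
    """Ridge-type kernel estimator (6) and the curve estimate (8)."""
    n, p = X.shape
    S = smoother_matrix(z, lam)
    X_t = X - S @ X  # X-tilde = (I - S) X
    y_t = y - S @ y  # y-tilde = (I - S) y
    beta = np.linalg.solve(X_t.T @ X_t + k * np.eye(p), X_t.T @ y_t)
    f_hat = S @ (y - X @ beta)  # smooth the partial residuals y - X beta
    return beta, f_hat, S


# illustrative data with i.i.d. noise
rng = np.random.default_rng(0)
n = 200
z = rng.uniform(size=n)
X = rng.normal(size=(n, 3))
beta_true = np.array([1.0, -0.5, 2.0])
y = X @ beta_true + np.sin(2 * np.pi * z) + 0.3 * rng.normal(size=n)
beta_hat, f_hat, S = rtk_fit(X, y, z, lam=0.05, k=0.1)
print(np.round(beta_hat, 3))
```

Solving the linear system with `np.linalg.solve` rather than forming the inverse in (6) explicitly is the usual numerically safer choice.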

Hence, the autoregressive coefficient $\hat\rho$ is estimated from the residuals in (5), and the AR(1) innovations are obtained as $\hat u_t=\hat\varepsilon_t-\hat\rho\,\hat\varepsilon_{t-1}$. Note that since the errors in model (1) are serially correlated, the estimators defined in (6) and (8) are not asymptotically efficient. To improve efficiency, we use ridge-type kernel weighted estimation based on transformed data.
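The residual-based estimate of $\rho$ and the innovations $\hat u_t$ can be illustrated as follows. As an assumption, we use the conditional least-squares (lag-1 regression) estimator rather than maximum likelihood, and a simulated AR(1) series stands in for the residuals in (5); both function names are hypothetical.

```python
import numpy as np


def estimate_rho(resid):
    """Conditional least-squares estimate of rho: regress eps_t on eps_{t-1}."""
    e = np.asarray(resid, dtype=float)
    return float(e[1:] @ e[:-1] / (e[:-1] @ e[:-1]))


def innovations(resid, rho):
    """u_hat_t = eps_hat_t - rho * eps_hat_{t-1} for t = 2, ..., n."""
    e = np.asarray(resid, dtype=float)
    return e[1:] - rho * e[:-1]


# a simulated stationary AR(1) series stands in for the residuals in (5)
rng = np.random.default_rng(1)
n, rho_true = 5000, 0.6
u = rng.normal(size=n)
eps = np.empty(n)
eps[0] = u[0] / np.sqrt(1.0 - rho_true ** 2)  # draw from the stationary distribution
for t in range(1, n):
    eps[t] = rho_true * eps[t - 1] + u[t]

rho_hat = estimate_rho(eps)
u_hat = innovations(eps, rho_hat)
print(round(rho_hat, 3), round(float(np.corrcoef(u_hat[1:], u_hat[:-1])[0, 1]), 3))
```

If the AR(1) model is adequate, the estimated innovations should show little remaining lag-1 correlation, which is what the second printed value checks.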

3. Generalized Ridge Type Kernel Estimation

The generalized ridge-type kernel (GRTK) estimator of the parametric component in model (1) is constructed by combining the partial kernel method with a suitable transformation that accounts for the autocorrelation in the AR(1) errors. We then extend this GRTK estimator to incorporate shrinkage estimation, analyze its asymptotic properties, and discuss its statistical characteristics. For notational clarity we first derive (9)–(12) under the working assumption that the true $\rho$ and $\mathbf{V}$ are known; these quantities are replaced by consistent preliminary estimators in practice. Using the assumption $E(\boldsymbol{\varepsilon})=\mathbf{0}$, one can see that $E(\mathbf{y}-\mathbf{X}\boldsymbol{\beta})=\mathbf{f}$. Thus, for a given $\boldsymbol{\beta}$, the natural estimator of the nonparametric component is

$$\hat{\mathbf{f}}(\boldsymbol{\beta})=\mathbf{S}_\lambda(\mathbf{y}-\mathbf{X}\boldsymbol{\beta}),\qquad(9)$$

where $\mathbf{S}_\lambda$ is the kernel smoother matrix defined in (7). Since $\mathbf{V}$ defined in Assumption 1 is positive definite, there exists an $n\times n$ matrix $\mathbf{R}$ such that $\mathbf{R}\mathbf{V}\mathbf{R}^{\top}=\mathbf{I}_n$, equivalently $\mathbf{R}^{\top}\mathbf{R}=\mathbf{V}^{-1}$. The following conditions are also needed; they mirror those in standard local-linear and ridge analyses and hold for most applied data after routine diagnostics.
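For the AR(1) matrix $\mathbf{V}$ in (4), one convenient choice of the transformation matrix (call it $\mathbf{R}$) is the Prais–Winsten transformation: rescale the first observation by $\sqrt{1-\rho^{2}}$ and quasi-difference the remaining ones. The sketch below (helper names are ours) builds it and checks numerically that $\mathbf{R}\mathbf{V}\mathbf{R}^{\top}=\mathbf{I}_n$ and $\mathbf{R}^{\top}\mathbf{R}=\mathbf{V}^{-1}$.

```python
import numpy as np


def ar1_covariance(rho, n):
    """V in (4): rho**|i - j| / (1 - rho**2)."""
    idx = np.arange(n)
    return rho ** np.abs(idx[:, None] - idx[None, :]) / (1.0 - rho ** 2)


def prais_winsten_matrix(rho, n):
    """Lower-bidiagonal R with R V R^T = I_n for the AR(1) matrix V."""
    R = np.eye(n)
    R[0, 0] = np.sqrt(1.0 - rho ** 2)             # rescale the first observation
    R[np.arange(1, n), np.arange(n - 1)] = -rho   # eps_t - rho * eps_{t-1} for t >= 2
    return R


rho, n = 0.7, 6
V = ar1_covariance(rho, n)
R = prais_winsten_matrix(rho, n)
print(np.round(R @ V @ R.T, 10))
```

Because $\mathbf{R}$ is bidiagonal, applying it costs $O(n)$ operations, so in large samples one would transform vectors directly rather than store the full matrix.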

A1. Under Assumption 1, $\varepsilon_t$ follows a weakly stationary AR(1) process with $|\rho|<1$.

A2. The eigenvalues of $n^{-1}\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}$ are bounded away from 0; the ridge term in (11) enforces this when multicollinearity is severe.

A3. The kernel $K(\cdot)$ is symmetric, bounded and integrates to 1, and the bandwidth satisfies $\lambda\to 0$ and $n\lambda\to\infty$ as $n\to\infty$.

In practice, the GRTK estimator is obtained through a short plug-in routine. First, we compute an initial estimate $\hat{\boldsymbol{\beta}}^{(0)}$ from a ridge-free partial-kernel fit. Second, we estimate the AR(1) coefficient $\rho$ (and thus the matrix $\mathbf{V}$) from the resulting residuals by a standard $\sqrt{n}$-consistent method, such as maximum likelihood. Finally, $\hat\rho$ and $\hat{\mathbf{V}}=\mathbf{V}(\hat\rho)$ are substituted into (9)–(12), and the system is solved once to give $\hat{\boldsymbol{\beta}}_{GRTK}$. Appendix B proves that this one-shot plug-in estimator has the same asymptotic distribution as the oracle version that uses the true $\rho$ and $\mathbf{V}$.

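We first assume that $\mathbf{V}$ and, hence, $\mathbf{R}$ are known. Then, we account for the error structure through the following transformed residuals:

$$\tilde{\mathbf{y}}^{*}=\mathbf{R}\tilde{\mathbf{y}},\qquad \tilde{\mathbf{X}}^{*}=\mathbf{R}\tilde{\mathbf{X}}.\qquad(10)$$

Here, $\tilde{\mathbf{y}}=(\mathbf{I}_n-\mathbf{S}_\lambda)\mathbf{y}$ and $\tilde{\mathbf{X}}=(\mathbf{I}_n-\mathbf{S}_\lambda)\mathbf{X}$, as defined in (6), are the locally centered residuals of $\mathbf{y}$ and $\mathbf{X}$, respectively. Using these partial residuals, the GRTK estimator of $\boldsymbol{\beta}$ is obtained by minimizing the following penalized weighted least squares (WLS) criterion:

$$\left\|\mathbf{R}(\tilde{\mathbf{y}}-\tilde{\mathbf{X}}\boldsymbol{\beta})\right\|^{2}+k\left\|\boldsymbol{\beta}\right\|^{2}=(\tilde{\mathbf{y}}-\tilde{\mathbf{X}}\boldsymbol{\beta})^{\top}\mathbf{V}^{-1}(\tilde{\mathbf{y}}-\tilde{\mathbf{X}}\boldsymbol{\beta})+k\,\boldsymbol{\beta}^{\top}\boldsymbol{\beta},\qquad(11)$$

where $\|\cdot\|$ denotes the Euclidean norm, $k>0$ is a shrinkage parameter to be chosen by a selection method, and $\tilde{\mathbf{y}}$ and $\tilde{\mathbf{X}}$ are the centered residuals defined in (6). The bandwidth $\lambda$ of the kernel smoothing matrix $\mathbf{S}_\lambda$ is also to be selected.

Setting the gradient of (11) with respect to $\boldsymbol{\beta}$ to zero yields the GRTK estimator

$$\hat{\boldsymbol{\beta}}_{GRTK}=\left(\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}+k\mathbf{I}_p\right)^{-1}\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{y}}.\qquad(12)$$

Replacing $\boldsymbol{\beta}$ in (9) with $\hat{\boldsymbol{\beta}}_{GRTK}$ from (12) gives the GRTK estimator of the nonparametric component in the partially linear model:

$$\hat{\mathbf{f}}_{GRTK}=\mathbf{S}_\lambda\left(\mathbf{y}-\mathbf{X}\hat{\boldsymbol{\beta}}_{GRTK}\right).\qquad(13)$$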
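The GRTK fit for a known $\rho$, together with the one-shot plug-in routine that first estimates $\rho$ from a ridge-free partial-kernel fit, can be sketched as below. Assumptions: a Gaussian Nadaraya–Watson smoother, the Prais–Winsten factor for $\mathbf{V}^{-1}$, and a conditional least-squares estimate of $\rho$ in place of maximum likelihood; all data settings and function names are hypothetical.

```python
import numpy as np


def smoother_matrix(z, lam):
    """Row-normalised Gaussian (Nadaraya-Watson) weights; an assumed form of S_lambda."""
    w = np.exp(-0.5 * ((z[:, None] - z[None, :]) / lam) ** 2)
    return w / w.sum(axis=1, keepdims=True)


def prais_winsten_matrix(rho, n):
    """R with R^T R = V^{-1} for the AR(1) matrix V."""
    R = np.eye(n)
    R[0, 0] = np.sqrt(1.0 - rho ** 2)
    R[np.arange(1, n), np.arange(n - 1)] = -rho
    return R


def grtk(X, y, z, lam, k, rho):
    """GRTK estimates of beta and f, treating rho as known."""
    n, p = X.shape
    S = smoother_matrix(z, lam)
    R = prais_winsten_matrix(rho, n)
    Xs = R @ (X - S @ X)  # R X-tilde
    ys = R @ (y - S @ y)  # R y-tilde
    # (X~' V^-1 X~ + k I) beta = X~' V^-1 y~, using V^-1 = R^T R
    beta = np.linalg.solve(Xs.T @ Xs + k * np.eye(p), Xs.T @ ys)
    f_hat = S @ (y - X @ beta)
    return beta, f_hat


def grtk_plugin(X, y, z, lam, k):
    """Plug-in routine: ridge-free fit with rho = 0, lag-1 estimate of rho, then GRTK."""
    beta0, f0 = grtk(X, y, z, lam, k=0.0, rho=0.0)  # rho = 0 gives R = I
    e = y - X @ beta0 - f0                           # residuals
    rho_hat = float(e[1:] @ e[:-1] / (e[:-1] @ e[:-1]))
    beta, f_hat = grtk(X, y, z, lam, k, rho_hat)
    return beta, f_hat, rho_hat


# illustrative data (all settings hypothetical)
rng = np.random.default_rng(2)
n, rho_true = 300, 0.5
z = rng.uniform(size=n)
X = rng.normal(size=(n, 3))
beta_true = np.array([1.5, -1.0, 0.5])
u = rng.normal(scale=0.5, size=n)
eps = np.empty(n)
eps[0] = u[0] / np.sqrt(1.0 - rho_true ** 2)
for t in range(1, n):
    eps[t] = rho_true * eps[t - 1] + u[t]
f_true = np.sin(2 * np.pi * z)
y = X @ beta_true + f_true + eps
beta_hat, f_hat, rho_hat = grtk_plugin(X, y, z, lam=0.05, k=0.5)
print(np.round(beta_hat, 3), round(rho_hat, 3))
```

Multiplying by $\mathbf{R}$ turns the weighted problem into an ordinary ridge problem on transformed data, which is why `Xs.T @ Xs` appears where $\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}$ stands in the formula.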


3.1. Shrinkage Estimators with GRTK Estimators

While the GRTK estimator in (12) addresses multicollinearity and autocorrelation simultaneously, further variance reduction is possible via Stein-type shrinkage. By combining the full model GRTK estimator with a more parsimonious submodel estimator, we can develop Stein-type shrinkage estimators that may achieve lower risk under certain conditions. This approach follows the framework developed by [14] and extends it to the partially linear time series context. To develop the shrinkage estimators, we consider two models:

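1. Full Model (FM): the complete model with all predictors,

$$\mathbf{y}=\mathbf{X}_1\boldsymbol{\beta}_1+\mathbf{X}_2\boldsymbol{\beta}_2+\mathbf{f}+\boldsymbol{\varepsilon},$$

where $\boldsymbol{\beta}_1$ collects the $p_1$ significant coefficients and $\boldsymbol{\beta}_2$ the $p_2$ sparse coefficients ($p=p_1+p_2$), estimated using GRTK as in Equation (12).

2. Submodel (SM): a reduced model of dimension $p_1$ ($p_1<p$), obtained by setting $\boldsymbol{\beta}_2=\mathbf{0}$, with the retained predictors selected by the Bayesian information criterion (BIC); its GRTK fit yields $\hat{\boldsymbol{\beta}}^{SM}$.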

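Let $\hat{\boldsymbol{\beta}}^{FM}$ be the parametric estimate from ridge-type kernel smoothing (GRTK) on all predictors, i.e., the full-model estimate from Equation (12), and let $\hat{\boldsymbol{\beta}}^{SM}$ be the corresponding estimate when only the submodel predictors selected by BIC are retained (with zeros in the positions of the dropped coefficients). Accordingly, the ordinary Stein-type shrinkage estimator $\hat{\boldsymbol{\beta}}^{S}$ is given by

$$\hat{\boldsymbol{\beta}}^{S}=\hat{\boldsymbol{\beta}}^{SM}+\left(1-(p_2-2)T_n^{-1}\right)\left(\hat{\boldsymbol{\beta}}^{FM}-\hat{\boldsymbol{\beta}}^{SM}\right),\qquad p_2\ge 3,\qquad(14)$$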

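where $T_n$ is a "distance measure" given below, and $(p_2-2)$ parallels the classical shrinkage factor. Intuitively, we pull $\hat{\boldsymbol{\beta}}^{FM}$ toward $\hat{\boldsymbol{\beta}}^{SM}$, introducing some bias but potentially reducing variance and risk. The distance is given by

$$T_n=\frac{1}{\hat\sigma^{2}}\,\hat{\boldsymbol{\beta}}_2^{\top}\left(\tilde{\mathbf{X}}_2^{\top}\mathbf{M}_1\tilde{\mathbf{X}}_2\right)\hat{\boldsymbol{\beta}}_2,\qquad \mathbf{M}_1=\mathbf{I}_n-\tilde{\mathbf{X}}_1\left(\tilde{\mathbf{X}}_1^{\top}\tilde{\mathbf{X}}_1\right)^{-1}\tilde{\mathbf{X}}_1^{\top},$$

where $\hat{\boldsymbol{\beta}}_2$ is the portion of $\hat{\boldsymbol{\beta}}^{FM}$ (or $\hat{\boldsymbol{\beta}}_{GRTK}$) corresponding to the sparse indices excluded from the submodel, $\tilde{\mathbf{X}}_2$ is the part of the (centered) design matrix associated with those sparse indices, and $\mathbf{M}_1$ is a partial residual projection that removes the other block of parametric covariates from the fit. The variance estimate $\hat\sigma^{2}$ is given in (25), but note that here it is computed from the SM-based partial kernel fit (see [10] for details).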

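To prevent over-shrinking when $(p_2-2)T_n^{-1}$ exceeds one, that is, when the shrinkage factor in (14) becomes negative, we define a positive-part version:

$$\hat{\boldsymbol{\beta}}^{PS}=\hat{\boldsymbol{\beta}}^{SM}+\left(1-(p_2-2)T_n^{-1}\right)^{+}\left(\hat{\boldsymbol{\beta}}^{FM}-\hat{\boldsymbol{\beta}}^{SM}\right),\qquad(15)$$

where $a^{+}=\max(0,a)$. Thus, if $1-(p_2-2)T_n^{-1}$ is negative, the estimator is set to $\hat{\boldsymbol{\beta}}^{SM}$ instead of overshooting past it; if it is positive, we shrink as in (14).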
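The combination step in (14)–(15) is mechanical once $\hat{\boldsymbol{\beta}}^{FM}$, $\hat{\boldsymbol{\beta}}^{SM}$ and $T_n$ are available. The sketch below assumes that $\hat{\boldsymbol{\beta}}^{SM}$ is stored in full length with zeros in the $p_2$ dropped positions, and that $T_n$ takes the Wald-type form $\hat\sigma^{-2}\hat{\boldsymbol{\beta}}_2^{\top}(\tilde{\mathbf{X}}_2^{\top}\mathbf{M}_1\tilde{\mathbf{X}}_2)\hat{\boldsymbol{\beta}}_2$, with $\mathbf{M}_1$ the residual projection that removes the $\tilde{\mathbf{X}}_1$ block; the numbers and helper names are illustrative.

```python
import numpy as np


def distance_stat(X1_t, X2_t, beta2, sigma2):
    """Assumed Wald-type T_n = beta2' (X2' M1 X2) beta2 / sigma2."""
    n = X1_t.shape[0]
    # M1 = I - X1 (X1' X1)^{-1} X1' removes the X1 block
    M1 = np.eye(n) - X1_t @ np.linalg.solve(X1_t.T @ X1_t, X1_t.T)
    return float(beta2 @ (X2_t.T @ M1 @ X2_t) @ beta2 / sigma2)


def stein_estimators(beta_fm, beta_sm, Tn, p2):
    """Ordinary (14) and positive-part (15) Stein-type estimators."""
    if p2 < 3:
        raise ValueError("Stein-type shrinkage needs p2 >= 3")
    c = 1.0 - (p2 - 2) / Tn                  # shrinkage factor
    diff = beta_fm - beta_sm
    beta_s = beta_sm + c * diff
    beta_ps = beta_sm + max(c, 0.0) * diff   # truncate a negative factor at zero
    return beta_s, beta_ps


beta_fm = np.array([1.2, -0.8, 0.15, -0.05, 0.10])
beta_sm = np.array([1.1, -0.75, 0.0, 0.0, 0.0])  # submodel, zeros in dropped slots
for Tn in (0.5, 50.0):
    s, ps = stein_estimators(beta_fm, beta_sm, Tn, p2=3)
    print(Tn, np.round(s, 3), np.round(ps, 3))

rng = np.random.default_rng(3)
X_t = rng.normal(size=(100, 5))
T = distance_stat(X_t[:, :2], X_t[:, 2:], beta_fm[2:], sigma2=0.25)
print(round(T, 3))
```

With a small $T_n$ (weak evidence against the submodel) the ordinary estimator overshoots past $\hat{\boldsymbol{\beta}}^{SM}$, while the positive-part version stops at it; with a large $T_n$ both stay close to $\hat{\boldsymbol{\beta}}^{FM}$.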

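Following the partially linear kernel-smoothing approach (GRTK), the nonparametric function can then be recomputed (or simply computed once) from the final shrunken parametric estimates using the smoothing matrix $\mathbf{S}_\lambda$:

$$\hat{\mathbf{f}}^{S}=\mathbf{S}_\lambda\left(\mathbf{y}-\mathbf{X}\hat{\boldsymbol{\beta}}^{S}\right),\qquad \hat{\mathbf{f}}^{PS}=\mathbf{S}_\lambda\left(\mathbf{y}-\mathbf{X}\hat{\boldsymbol{\beta}}^{PS}\right).\qquad(16)$$

Hence, the final semiparametric estimators are $(\hat{\boldsymbol{\beta}}^{S},\hat{\mathbf{f}}^{S})$ and $(\hat{\boldsymbol{\beta}}^{PS},\hat{\mathbf{f}}^{PS})$.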

3.2. Asymptotic Distribution of the Estimators

This subsection presents the assumptions and theorems needed to analyze the asymptotic properties of the proposed $\hat{\boldsymbol{\beta}}_{GRTK}$ and $\hat{\mathbf{f}}_{GRTK}$ estimators. Understanding the asymptotic distribution is crucial for statistical inference. In this context, we introduce the following assumptions, which are mild and easily satisfied.

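Assumption 2.

In the setting of the semiparametric regression model, the covariates $x_{ij}$ and $z_i$ are related via the nonparametric regression model

(a) $x_{ij}=h_j(z_i)+\eta_{ij}$, $i=1,\dots,n$, $j=1,\dots,p$,

where $\boldsymbol{\eta}_i=(\eta_{i1},\dots,\eta_{ip})^{\top}$ are real sequences satisfying

(b) $\lim_{n\to\infty}n^{-1}\sum_{i=1}^{n}\boldsymbol{\eta}_i\boldsymbol{\eta}_i^{\top}=\mathbf{B}$, and

(c) $\limsup_{n\to\infty}\,(\sqrt{n}\log n)^{-1}\max_{1\le m\le n}\left\|\sum_{i=1}^{m}\boldsymbol{\eta}_{j_i}\right\|<\infty$ for any permutation $(j_1,\dots,j_n)$ of $(1,\dots,n)$,

where $\mathbf{B}$ is a $p\times p$ positive definite matrix and $\|\cdot\|$ indicates the Euclidean norm.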

Assumption 3.

The functions $f(\cdot)$ and $h_j(\cdot)$, $j=1,\dots,p$, are Lipschitz continuous of order 1 on the data domain.

Assumption 4.

The weight functions

satisfy these conditions:

- (i.)

- (ii.)

- (iii.)

- where $I(A)$ is the indicator function of a set $A$.

- (iv.)

- , where $\mathrm{tr}(\mathbf{A})$ denotes the trace of a square matrix $\mathbf{A}$.

Regarding the assumptions given above, Assumption 2, which relates to smoothness, requires two bounded derivatives of $f$, the minimal condition for the bias expansion that underlies well-known kernel methods. In practice, visual inspection of a pilot kernel fit or a spline smoother is usually sufficient to rule out abrupt changes. For Assumption 3, which refers to the local design, the ridge term in (11) guarantees a strictly positive lower eigenvalue bound even when the raw design is ill-conditioned (see [18,19] for analogous arguments in linear GLS). For Assumption 4 on error dependence, while we state an AR(1) model for clarity, Theorems 1–2 require only strong mixing with finite fourth moments [18].

Remark 1.

The sequence $\boldsymbol{\eta}_i$ stated in (a) of Assumption 2 behaves like a zero-mean, uncorrelated sequence of stationary random variables independent of $z_i$. If the covariates $\mathbf{x}_i$ and $z_i$ are observations of independent and identically distributed random variables, then $\boldsymbol{\eta}_i$ can be taken as $\mathbf{x}_i-E(\mathbf{x}_i\mid z_i)$; see [14] for more details. In this case, (b) holds with probability 1 by the law of large numbers, and (c) is provided by Lemma 1 in [16].

Remark 2.

If $K(\cdot)$ is a kernel function, then $K_\lambda(u)=\lambda^{-1}K(u/\lambda)$ is also a kernel function based on a positive bandwidth parameter $\lambda$, and $K$ satisfies the following properties:

$$\int K(u)\,du=1,\qquad K(-u)=K(u),\qquad \int uK(u)\,du=0,\qquad \int u^{2}K(u)\,du=\mu_2(K)<\infty.$$

These properties show that a kernel function needs to be a symmetric, continuous probability density function with mean zero and finite variance. Note that the bandwidth parameter $\lambda$ should be chosen optimally by a selection criterion in kernel estimation or smoothing. For example, a large $\lambda$ yields an overly smooth curve, while a small $\lambda$ produces a wiggly curve.
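The kernel properties in Remark 2 are easy to verify numerically. The sketch below checks the zeroth, first and second moments for the Gaussian and Epanechnikov kernels and for a rescaled kernel $K_\lambda(u)=\lambda^{-1}K(u/\lambda)$, whose second moment becomes $\lambda^{2}$ times that of $K$; the midpoint-rule integrator is a simple stand-in for the exact integrals, and the helper names are ours.

```python
import numpy as np


def gaussian(u):
    return np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)


def epanechnikov(u):
    return np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u ** 2), 0.0)


def rescaled(kernel, lam):
    """K_lambda(u) = K(u / lambda) / lambda for a bandwidth lambda > 0."""
    return lambda u: kernel(u / lam) / lam


def integrate(g, a, b, m=200_000):
    """Midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / m
    u = a + (np.arange(m) + 0.5) * h
    return float(np.sum(g(u)) * h)


def moments(kernel, a, b):
    """(integral of K, first moment, second moment) over the support [a, b]."""
    return (integrate(kernel, a, b),
            integrate(lambda u: u * kernel(u), a, b),
            integrate(lambda u: u ** 2 * kernel(u), a, b))


m_gauss = moments(gaussian, -10.0, 10.0)                   # about (1, 0, 1)
m_epan = moments(epanechnikov, -1.0, 1.0)                  # about (1, 0, 0.2)
m_resc = moments(rescaled(epanechnikov, 0.3), -0.3, 0.3)   # about (1, 0, 0.018)
print(np.round(m_gauss, 6), np.round(m_epan, 6), np.round(m_resc, 6))
```

The rescaled case shows why the bandwidth controls smoothness: it leaves the total mass and the zero mean unchanged but scales the spread of the weights by $\lambda$.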

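Theorem 1.

Suppose that Assumption 1 and Assumptions 2–3 hold, and assume that $\boldsymbol{\Sigma}=\lim_{n\to\infty}n^{-1}\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}$ is a non-singular covariance matrix with $k=o(\sqrt{n})$. Then, as $n\to\infty$, we have

$$\sqrt{n}\left(\hat{\boldsymbol{\beta}}_{GRTK}-\boldsymbol{\beta}\right)\xrightarrow{d}N_p\left(\mathbf{0},\sigma^{2}\boldsymbol{\Sigma}^{-1}\right),$$

where $\xrightarrow{d}$ denotes convergence in distribution. See [9] for the proof of Theorem 1.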

The asymptotic normality established in Theorem 1 allows for valid statistical inference based on the GRTK estimator. Intuitively, this result holds because the transformed data approach effectively accounts for autocorrelation, while the kernel smoothing effectively separates the nonparametric component, allowing the parametric component to be estimated consistently with a regular convergence rate.

Theorem 2.

If Assumptions 1 and 2–3 hold, then

$$\sup_{z}\left|\hat f_{GRTK}(z)-f(z)\right|=O\left(n^{-1/3}\log n\right)\quad\text{almost surely}.$$

See [9] for the proof of Theorem 2. The asymptotic normality and convergence results established in Theorems 1 and 2 provide a theoretical foundation for the GRTK estimators. In the following subsection, we analyze additional statistical properties, such as bias and variance.

This convergence rate for the nonparametric component is optimal in the sense that no estimator can achieve a faster uniform convergence rate without additional structural assumptions. The logarithmic factor arises from the need to establish uniform rather than pointwise convergence. Also note that the results of both Theorems 1 and 2 still hold if Assumption 4 is replaced by the strong-mixing condition $\sum_{j=1}^{\infty}\alpha(j)^{\delta/(2+\delta)}<\infty$ for some $\delta>0$, where $\alpha(j)$ is the Rosenblatt mixing coefficient at lag $j$ (see [18] for details). Intuitively, this summation condition says that the temporal dependence in the error process decays quickly enough for observations far apart to behave almost independently, which allows the usual kernel-based central limit theory to go through (see [20] for similar insights).

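Note that Theorem 2 states that $\hat f_{GRTK}$ attains the optimal strong convergence rate. Also, a consistent estimator of the asymptotic covariance matrix of $\hat{\boldsymbol{\beta}}_{GRTK}$ is required for statistical inference. It can be obtained by substituting $\hat\sigma^{2}$ from (25), $\hat{\mathbf{V}}=\mathbf{V}(\hat\rho)$ and the selected $(\lambda,k)$ into the variance expression (22).

Regarding the asymptotic properties of the shrinkage estimators in the currently considered low-dimensional ($p<n$) setting, we outline the key results. Let $p_2$ be the size of the block $\boldsymbol{\beta}_2$ that the submodel sets to zero. Suppose $\boldsymbol{\beta}_2$ is subject to a local alternatives framework:

$$K_{(n)}:\ \boldsymbol{\beta}_2=\frac{\boldsymbol{\omega}}{\sqrt{n}},\qquad \boldsymbol{\omega}\in\mathbb{R}^{p_2}\ \text{fixed},$$

so that $\boldsymbol{\beta}_2\to\mathbf{0}$ as $n\to\infty$. Additionally, let $\boldsymbol{\beta}_1$ denote the complementary block (nonzero coefficients) of dimension $p_1=p-p_2$. Under standard regularity conditions ([10]) we can claim that: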

- Both $\hat{\boldsymbol{\beta}}^{S}$ and $\hat{\boldsymbol{\beta}}^{PS}$ remain consistent for $\boldsymbol{\beta}$.

- By construction, these estimators introduce shrinkage-based bias in exchange for variance reduction. The bias depends on $\boldsymbol{\omega}$ (through a noncentrality parameter) and on $p_2$, and generally involves expectations of noncentral $\chi^{2}$ variables.

- Similar expansions hold for the asymptotic quadratic bias (AQDB), the asymptotic covariance and the asymptotic distributional risk (ADR), showing how $\hat{\boldsymbol{\beta}}^{S}$ and $\hat{\boldsymbol{\beta}}^{PS}$ combine the ridge-type (FM) estimator with Stein corrections.

Exact closed-form expressions for the bias, covariance, and risk match those in [10] or [14], with minor notational changes for $\mathbf{V}$ and the partial kernel smoothing, so we do not repeat them here; for completeness, detailed bias and risk expansions for these estimators appear in Appendix B. The main takeaway is that $\hat{\boldsymbol{\beta}}^{PS}$ typically achieves the smallest asymptotic distributional risk among the four choices (FM, SM, ordinary Stein, positive-part Stein) whenever the ordinary Stein factor $1-(p_2-2)T_n^{-1}$ would become negative, which shows the advantage of positive-part truncation in a partially linear framework.

3.3. Statistical Properties of the Estimators

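Here, we detail the statistical properties of the GRTK estimator, including its expected value, bias, and variance. These properties help to characterize the estimator's behavior and quality. As defined in (12), when $k=0$ the GRTK estimators of the parametric and nonparametric components reduce to ordinary kernel smoothing (KS) estimators for a partially linear model with correlated errors. They can be defined, respectively, as

$$\hat{\boldsymbol{\beta}}_{KS}=\left(\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}\right)^{-1}\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{y}},\qquad \hat{\mathbf{f}}_{KS}=\mathbf{S}_\lambda\left(\mathbf{y}-\mathbf{X}\hat{\boldsymbol{\beta}}_{KS}\right).$$

Using the abbreviations

$$\mathbf{A}_k=\left(\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}+k\mathbf{I}_p\right)^{-1},\qquad \tilde{\mathbf{f}}=(\mathbf{I}_n-\mathbf{S}_\lambda)\mathbf{f},$$

the expected value, bias and variance of the estimator $\hat{\boldsymbol{\beta}}_{GRTK}$ can be written, respectively, as

$$E(\hat{\boldsymbol{\beta}}_{GRTK})=\mathbf{A}_k\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\left(\tilde{\mathbf{X}}\boldsymbol{\beta}+\tilde{\mathbf{f}}\right),\qquad(20)$$

$$\mathrm{Bias}(\hat{\boldsymbol{\beta}}_{GRTK})=\mathbf{A}_k\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{f}}-k\mathbf{A}_k\boldsymbol{\beta},\qquad(21)$$

$$\mathrm{Var}(\hat{\boldsymbol{\beta}}_{GRTK})=\sigma^{2}\mathbf{A}_k\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}(\mathbf{I}_n-\mathbf{S}_\lambda)\mathbf{V}(\mathbf{I}_n-\mathbf{S}_\lambda)^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}\mathbf{A}_k.\qquad(22)$$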

The implementation details of Equations (20)–(22) are given in Appendix A.1.

Clearly, in general $E(\hat{\boldsymbol{\beta}}_{GRTK})\neq\boldsymbol{\beta}$ for $k>0$; hence, the GRTK estimator is biased. From the expression above it is also clear that the expectation of the GRTK estimator vanishes as $k$ tends to infinity:

$$\lim_{k\to\infty}E(\hat{\boldsymbol{\beta}}_{GRTK})=\mathbf{0}.$$

Hence, all coefficients of the parametric component are shrunk towards zero as the ridge (or shrinkage) parameter $k$ increases.

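As (22) shows, the variance of $\hat{\boldsymbol{\beta}}_{GRTK}$ depends on the unknown error variance $\sigma^{2}$; although the smoothing method provides estimates of the components in the model, it does not directly provide an estimate of $\sigma^{2}$. In a general partially linear model, $\sigma^{2}$ can be estimated from the residual sum of squares

$$RSS=\left\|(\mathbf{I}_n-\mathbf{H}_{\lambda,k})\mathbf{y}\right\|^{2},\qquad(23)$$

where $\mathbf{H}_{\lambda,k}$ is a hat matrix that depends on the smoothing parameter $\lambda$ and the shrinkage parameter $k>0$. The fitted values of model (1) are obtained with this matrix as $\hat{\mathbf{y}}=\mathbf{X}\hat{\boldsymbol{\beta}}_{GRTK}+\hat{\mathbf{f}}_{GRTK}=\mathbf{H}_{\lambda,k}\mathbf{y}$, where

$$\mathbf{H}_{\lambda,k}=\mathbf{S}_\lambda+\tilde{\mathbf{X}}\left(\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}+k\mathbf{I}_p\right)^{-1}\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}(\mathbf{I}_n-\mathbf{S}_\lambda),\qquad(24)$$

with $\tilde{\mathbf{X}}$ and $\mathbf{S}_\lambda$ as in (6)–(7). The implementation details of Equation (24) are given in Appendix A.2. Thus, similar to ordinary least squares regression, the error variance can be estimated as

$$\hat\sigma^{2}=\frac{RSS}{n-\mathrm{tr}(\mathbf{H}_{\lambda,k})},\qquad(25)$$

where $n-\mathrm{tr}(\mathbf{H}_{\lambda,k})$ denotes the residual degrees of freedom (see, for example, [4]). The denominator of Equation (25) shows that these degrees of freedom can be read as the sample size minus the effective number of estimated parameters, $\mathrm{tr}(\mathbf{H}_{\lambda,k})$. For the standard errors, we use $\hat\sigma^{2}$ from (25), $\hat\rho$ and the selected $(\lambda,k)$ to form a plug-in covariance matrix by substituting these quantities into the theoretical variance in (22); the reported standard errors are the square roots of its diagonal entries.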
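The identity $\hat{\mathbf{y}}=\mathbf{H}_{\lambda,k}\mathbf{y}$, the variance estimate and the plug-in standard errors can be checked numerically. In this sketch we assume a Gaussian Nadaraya–Watson smoother, an AR(1) $\mathbf{V}$ with $\rho$ fixed at an illustrative value, the degrees-of-freedom correction $n-\mathrm{tr}(\mathbf{H}_{\lambda,k})$, and the sandwich form $\sigma^{2}\mathbf{A}_k\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}(\mathbf{I}-\mathbf{S}_\lambda)\mathbf{V}(\mathbf{I}-\mathbf{S}_\lambda)^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}\mathbf{A}_k$ for the variance, with $\mathbf{A}_k=(\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}+k\mathbf{I}_p)^{-1}$; all helper names are hypothetical.

```python
import numpy as np


def smoother_matrix(z, lam):
    w = np.exp(-0.5 * ((z[:, None] - z[None, :]) / lam) ** 2)  # Gaussian kernel
    return w / w.sum(axis=1, keepdims=True)


def ar1_covariance(rho, n):
    idx = np.arange(n)
    return rho ** np.abs(idx[:, None] - idx[None, :]) / (1.0 - rho ** 2)


def grtk_pieces(X, z, lam, k, rho):
    """Return S, V, X-tilde, A_k and the linear map B with beta_GRTK = B @ y."""
    n, p = X.shape
    S = smoother_matrix(z, lam)
    V = ar1_covariance(rho, n)
    Vinv = np.linalg.inv(V)
    X_t = X - S @ X
    A = np.linalg.inv(X_t.T @ Vinv @ X_t + k * np.eye(p))
    B = A @ X_t.T @ Vinv @ (np.eye(n) - S)
    return S, V, X_t, A, B


rng = np.random.default_rng(4)
n = 120
z = rng.uniform(size=n)
X = rng.normal(size=(n, 2))
y = X @ np.array([1.0, -2.0]) + np.cos(3 * z) + 0.3 * rng.normal(size=n)

S, V, X_t, A, B = grtk_pieces(X, z, lam=0.1, k=0.5, rho=0.4)
beta = B @ y
f_hat = S @ (y - X @ beta)
H = S + X_t @ B                                     # hat matrix
resid = y - H @ y
sigma2 = float(resid @ resid / (n - np.trace(H)))  # RSS / (n - tr H)

G = X_t.T @ np.linalg.inv(V) @ (np.eye(n) - S)     # maps y to X~' V^{-1} y~
cov_beta = sigma2 * A @ G @ V @ G.T @ A            # plug-in sandwich variance
se = np.sqrt(np.diag(cov_beta))
print(np.round(beta, 3), np.round(se, 4), round(sigma2, 4))
```

The check `H @ y == X @ beta + f_hat` confirms that the hat matrix reproduces the fitted values of the two-component fit, which is what makes $\mathrm{tr}(\mathbf{H}_{\lambda,k})$ usable as an effective number of parameters.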

3.4. Assessing the Risk and Efficiency

To further evaluate the estimators, we now consider measures of risk and efficiency. As discussed earlier, bias and variance are important criteria for assessing estimator quality. This subsection introduces the mean squared deviation (MSD) and the quadratic risk function to compare the performance of different estimators. In general, the ill effect of estimating $\boldsymbol{\beta}$ by $\hat{\boldsymbol{\beta}}$ is known as information loss, and this loss can be measured by the mean squared deviation, the risk function corresponding to the expected value of the squared error loss. Under squared error loss, the MSD is the matrix formed by the sum of the variance–covariance matrix and the squared bias:

$$\mathrm{MSD}(\hat{\boldsymbol{\beta}})=E\left[(\hat{\boldsymbol{\beta}}-\boldsymbol{\beta})(\hat{\boldsymbol{\beta}}-\boldsymbol{\beta})^{\top}\right]=\mathrm{Var}(\hat{\boldsymbol{\beta}})+\mathrm{Bias}(\hat{\boldsymbol{\beta}})\,\mathrm{Bias}(\hat{\boldsymbol{\beta}})^{\top}.\qquad(26)$$

Equation (26) gives detailed information about the quality of an estimator, but comparing matrices directly is challenging. Therefore, we also use a scalar-valued version, the expected loss: taking the trace of the MSD matrix gives $\mathrm{tr}\,\mathrm{MSD}(\hat{\boldsymbol{\beta}})=E\|\hat{\boldsymbol{\beta}}-\boldsymbol{\beta}\|^{2}$, the sum of the mean squared errors of all coefficient estimates. For convenience, we work with this scalar-valued mean squared deviation, which Definition 1 generalizes with a weight matrix.

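Definition 1.

The quadratic risk function of an estimator $\hat{\boldsymbol{\beta}}$ of the vector $\boldsymbol{\beta}$ is defined as the scalar-valued version of the mean squared deviation matrix (SMDE), given by

$$R(\hat{\boldsymbol{\beta}},\mathbf{W})=E\left[(\hat{\boldsymbol{\beta}}-\boldsymbol{\beta})^{\top}\mathbf{W}(\hat{\boldsymbol{\beta}}-\boldsymbol{\beta})\right]=\mathrm{tr}\left[\mathbf{W}\,\mathrm{MSD}(\hat{\boldsymbol{\beta}})\right],$$

where $\mathbf{W}$ is a symmetric and non-negative definite matrix. Based on this risk function, we can define the following criterion to compare estimators.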

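Definition 2.

Let the vectors $\hat{\boldsymbol{\beta}}_1$ and $\hat{\boldsymbol{\beta}}_2$ be two competing estimators of a parameter vector $\boldsymbol{\beta}$. If the difference of the matrices

$$\boldsymbol{\Delta}=\mathrm{MSD}(\hat{\boldsymbol{\beta}}_2)-\mathrm{MSD}(\hat{\boldsymbol{\beta}}_1)$$

is non-negative definite, the estimator $\hat{\boldsymbol{\beta}}_1$ is said to be superior to the estimator $\hat{\boldsymbol{\beta}}_2$.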

Accordingly, we get the following result.

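Theorem 3

([21]). Let the vectors $\hat{\boldsymbol{\beta}}_1$ and $\hat{\boldsymbol{\beta}}_2$ be two different estimators of a parameter vector $\boldsymbol{\beta}$. Then the following two statements are equivalent:

- (i.) $R(\hat{\boldsymbol{\beta}}_1,\mathbf{W})\le R(\hat{\boldsymbol{\beta}}_2,\mathbf{W})$ for all non-negative definite matrices $\mathbf{W}$;

- (ii.) $\mathrm{MSD}(\hat{\boldsymbol{\beta}}_2)-\mathrm{MSD}(\hat{\boldsymbol{\beta}}_1)$ is a non-negative definite matrix.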

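Theorem 3 states that $\hat{\boldsymbol{\beta}}_1$ has a smaller MSD matrix than $\hat{\boldsymbol{\beta}}_2$ if and only if its quadratic risk is no larger than that of $\hat{\boldsymbol{\beta}}_2$ for every non-negative definite weight matrix $\mathbf{W}$. Thus, the superiority of $\hat{\boldsymbol{\beta}}_1$ over $\hat{\boldsymbol{\beta}}_2$ can be checked by comparing their MSD matrices.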

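Substituting Equations (21) and (22) into Equation (26), the MSD matrix of the proposed estimator is obtained as

$$\mathrm{MSD}(\hat{\boldsymbol{\beta}}_{GRTK})=\mathrm{Var}(\hat{\boldsymbol{\beta}}_{GRTK})+\left(\mathbf{A}_k\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{f}}-k\mathbf{A}_k\boldsymbol{\beta}\right)\left(\mathbf{A}_k\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{f}}-k\mathbf{A}_k\boldsymbol{\beta}\right)^{\top},$$

where $\mathbf{A}_k=(\tilde{\mathbf{X}}^{\top}\mathbf{V}^{-1}\tilde{\mathbf{X}}+k\mathbf{I}_p)^{-1}$, $\tilde{\mathbf{f}}=(\mathbf{I}_n-\mathbf{S}_\lambda)\mathbf{f}$, and $\mathrm{Var}(\hat{\boldsymbol{\beta}}_{GRTK})$ is given in (22). Furthermore, considering Definition 1, the quadratic risk function for $\hat{\boldsymbol{\beta}}_{GRTK}$ can be stated as follows:

$$R(\hat{\boldsymbol{\beta}}_{GRTK},\mathbf{W})=\mathrm{tr}\left[\mathbf{W}\,\mathrm{MSD}(\hat{\boldsymbol{\beta}}_{GRTK})\right].$$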
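As an illustration of Theorem 3, the sketch below evaluates the MSD matrix of a GRTK-type estimator for a fixed, strongly collinear design and compares $k=0$ with a small $k>0$. To keep the example exact, it assumes no smoothing bias ($\tilde{\mathbf{f}}=\mathbf{0}$), uses the design directly in place of $\tilde{\mathbf{X}}$, and takes $\sigma^{2}\mathbf{V}$ as the error covariance, so it reduces to a generalized ridge versus GLS comparison; in that case a classical ridge result says the MSD difference is non-negative definite for sufficiently small $k$. All names and settings are hypothetical.

```python
import numpy as np


def grtk_msd(X_t, Vinv, Sigma_eps, beta, f_t, k):
    """MSD = Var + bias bias', with A = (X~' V^-1 X~ + k I)^-1,
    bias = A X~' V^-1 f~ - k A beta and Var = A X~' V^-1 Sigma V^-1 X~ A."""
    p = X_t.shape[1]
    A = np.linalg.inv(X_t.T @ Vinv @ X_t + k * np.eye(p))
    G = A @ X_t.T @ Vinv
    bias = G @ f_t - k * A @ beta
    return G @ Sigma_eps @ G.T + np.outer(bias, bias)


def quadratic_risk(msd, W):
    """R(beta_hat, W) = tr(W MSD)."""
    return float(np.trace(W @ msd))


def is_superior(msd_1, msd_2, tol=1e-10):
    """Estimator 1 beats estimator 2 iff MSD_2 - MSD_1 is non-negative definite."""
    D = msd_2 - msd_1
    return bool(np.linalg.eigvalsh((D + D.T) / 2.0).min() >= -tol)


rng = np.random.default_rng(5)
n, p, gamma, rho, sigma2 = 50, 3, 0.99, 0.5, 1.0
w = rng.standard_normal((n, p + 1))
X = np.sqrt(1 - gamma ** 2) * w[:, :p] + gamma * w[:, [p]]  # collinear design
idx = np.arange(n)
V = rho ** np.abs(idx[:, None] - idx[None, :]) / (1 - rho ** 2)
Vinv = np.linalg.inv(V)
beta = np.ones(p)
f_t = np.zeros(n)                                            # no smoothing bias

msd_gls = grtk_msd(X, Vinv, sigma2 * V, beta, f_t, k=0.0)
msd_ridge = grtk_msd(X, Vinv, sigma2 * V, beta, f_t, k=0.1)
W = np.eye(p)
print(quadratic_risk(msd_ridge, W) < quadratic_risk(msd_gls, W),
      is_superior(msd_ridge, msd_gls))
```

Checking the smallest eigenvalue of the MSD difference is a direct way to test statement (ii) of Theorem 3; by the theorem, the risk comparison then holds for every non-negative definite $\mathbf{W}$, not only the identity.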

The theoretical properties established in this section demonstrate that GRTK estimators effectively balance bias and variance while accounting for both multicollinearity and error autocorrelation. However, the practical performance of these estimators depends critically on the selection of appropriate tuning parameters $(\lambda,k)$. In the following section, we address this challenge by introducing several parameter selection criteria.

4. Choosing the Penalty Parameters

This section focuses on selecting the bandwidth parameter $\lambda$ and the shrinkage parameter $k$, both of which enter the generalized weighted least squares criterion (11), through $\mathbf{S}_\lambda$ and the penalty, respectively. The goal is to determine suitable values of $\lambda$ and $k$ based on different criteria. The parameters $\lambda$ and $k$ are therefore data-adaptive, chosen by minimizing GCV, AICc, BIC or RECP; under standard regularity conditions each criterion yields a selection that is asymptotically risk-optimal, though not necessarily the unique oracle optimum in finite samples. The most widely used selection criteria are summarized below:

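Generalized cross-validation: The bandwidth and shrinkage parameter can be determined by minimizing the score function

$$\mathrm{GCV}(\lambda,k)=\frac{n^{-1}\left\|(\mathbf{I}_n-\mathbf{H}_{\lambda,k})\mathbf{y}\right\|^{2}}{\left[n^{-1}\mathrm{tr}(\mathbf{I}_n-\mathbf{H}_{\lambda,k})\right]^{2}},\qquad(27)$$

where $\mathbf{H}_{\lambda,k}$, as defined in Equations (23) and (24), is the smoother (hat) matrix based on the parameters $\lambda$ and $k$.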

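Improved Akaike information criterion: To reduce overfitting when the sample size is relatively small, [22] proposed AICc, an improved version of the classical Akaike information criterion:

$$\mathrm{AICc}(\lambda,k)=\log\left(n^{-1}\left\|(\mathbf{I}_n-\mathbf{H}_{\lambda,k})\mathbf{y}\right\|^{2}\right)+1+\frac{2\left\{\mathrm{tr}(\mathbf{H}_{\lambda,k})+1\right\}}{n-\mathrm{tr}(\mathbf{H}_{\lambda,k})-2}.$$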

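Bayesian information criterion: Schwarz's criterion, also known as BIC, is another statistical measure for selecting the penalty parameters. The criterion is expressed as

$$\mathrm{BIC}(\lambda,k)=\log\left(n^{-1}\left\|(\mathbf{I}_n-\mathbf{H}_{\lambda,k})\mathbf{y}\right\|^{2}\right)+\frac{\log(n)\,\mathrm{tr}(\mathbf{H}_{\lambda,k})}{n}.$$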

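Risk estimation using classical pilots (RECP): The key idea is to estimate the risk $n^{-1}E\|\boldsymbol{\mu}-\mathbf{H}_{\lambda,k}\mathbf{y}\|^{2}$ by plugging in pilot estimates of the mean vector $\boldsymbol{\mu}$ and of $\sigma^{2}$, and to choose the $\lambda$ and $k$ that minimize the resulting criterion (see [11]):

$$\mathrm{RECP}(\lambda,k)=\frac{1}{n}\left\{\left\|(\mathbf{I}_n-\mathbf{H}_{\lambda,k})\hat{\boldsymbol{\mu}}_{p}\right\|^{2}+\hat\sigma_{p}^{2}\,\mathrm{tr}\left(\mathbf{H}_{\lambda,k}\mathbf{H}_{\lambda,k}^{\top}\right)\right\}.$$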

Each of these four criteria offers a valid approach for parameter selection; however, they behave differently in practice. GCV generally provides a good balance between bias and variance across different sample sizes. AICc is generally more suitable for smaller samples, where overfitting is a concern. BIC tends to produce more parsimonious models and is often preferred when the true model is believed to be sparse. RECP often provides good fits for the nonparametric component but can be more sensitive to the correlation structure. The simulation study in the next section provides further guidance on criterion selection under different data scenarios.
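For a given hat matrix, each of the four criteria is a one-liner. The sketch below assumes the standard smoothing-literature forms: GCV as the residual mean square divided by $(1-\mathrm{tr}(\mathbf{H})/n)^{2}$, the AICc of Hurvich and co-authors, BIC with a $\log(n)\,\mathrm{tr}(\mathbf{H})/n$ penalty, and RECP as the pilot-based risk estimate $n^{-1}\{\|(\mathbf{I}-\mathbf{H})\hat{\boldsymbol{\mu}}_p\|^{2}+\hat\sigma_p^{2}\,\mathrm{tr}(\mathbf{H}\mathbf{H}^{\top})\}$, which ignores error correlation. The toy hat matrices and the pilot variance are illustrative only.

```python
import numpy as np


def _rss_over_n(y, H):
    r = y - H @ y
    return float(r @ r) / len(y)


def gcv(y, H):
    n = len(y)
    return _rss_over_n(y, H) / (1.0 - np.trace(H) / n) ** 2


def aicc(y, H):
    n, tr = len(y), np.trace(H)
    return np.log(_rss_over_n(y, H)) + 1.0 + 2.0 * (tr + 1.0) / (n - tr - 2.0)


def bic(y, H):
    n = len(y)
    return np.log(_rss_over_n(y, H)) + np.log(n) * np.trace(H) / n


def recp(H, mu_pilot, sigma2_pilot):
    """Pilot-based risk estimate: (||(I - H) mu_p||^2 + s2_p tr(H H')) / n."""
    n = len(mu_pilot)
    r = mu_pilot - H @ mu_pilot
    return (float(r @ r) + sigma2_pilot * np.trace(H @ H.T)) / n


y = np.array([1.0, 2.0, 3.0, 4.0])
H0 = np.zeros((4, 4))          # "fit nothing": tr(H) = 0
H_avg = np.full((4, 4), 0.25)  # fit the overall mean: tr(H) = 1
for H in (H0, H_avg):
    print(gcv(y, H), aicc(y, H), bic(y, H), recp(H, y, 0.5))
```

In a real fit, `H` would be the GRTK hat matrix for each candidate $(\lambda,k)$ and `mu_pilot`, `sigma2_pilot` would come from a preliminary fit.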

In practice, we interpret $\lambda$ as the bandwidth that sets the smoothness of the nonparametric curve and $k$ as the ridge weight that governs the shrinkage of the linear part. We start from the rule-of-thumb anchors suggested by asymptotic theory (a bandwidth of the usual bias–variance order and a shrinkage weight on the scale of the inverse sample size) and place a modest logarithmic grid around those anchors. Each candidate pair is evaluated with the four information criteria introduced earlier, and for each criterion the pair that attains the lowest value is selected; if two pairs tie, the one with the smaller bandwidth is kept to avoid over-smoothing. We also note that the mean squared error surface is flat in a neighborhood of the chosen point, which makes the estimator insensitive to small tuning changes, and that the entire grid search is computationally inexpensive on standard hardware.
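The grid search described above can be sketched as follows. The anchors $\lambda_0=n^{-1/5}$ and $k_0=1/n$, the half-width of one decade and the nine grid points per parameter are our assumptions for illustration; `criterion` stands for any of GCV, AICc, BIC or RECP evaluated at $(\lambda,k)$, and the toy criterion is a placeholder.

```python
import numpy as np


def log_grid(anchor, half_width=1.0, num=9):
    """anchor * 10**s for num values of s evenly spaced in [-half_width, half_width]."""
    return anchor * np.logspace(-half_width, half_width, num)


def select_pair(criterion, lam_grid, k_grid, rtol=1e-9):
    """Minimise criterion(lam, k) over the grid; ties keep the smaller bandwidth."""
    best_val, best_pair = np.inf, None
    for lam in np.sort(lam_grid):  # ascending bandwidths
        for k in k_grid:
            val = criterion(lam, k)
            # replace only on a strict improvement, so ties keep the earlier (smaller) lam
            if val < best_val and not np.isclose(val, best_val, rtol=rtol, atol=0.0):
                best_val, best_pair = val, (float(lam), float(k))
    return best_pair


n = 200
lam0, k0 = n ** (-1 / 5), 1.0 / n
lam_grid, k_grid = log_grid(lam0), log_grid(k0)


def toy(lam, k):
    # placeholder criterion with its minimum at the anchors
    return np.log(lam / lam0) ** 2 + np.log(k / k0) ** 2


print(select_pair(toy, lam_grid, k_grid))
```

Scanning bandwidths in ascending order and replacing the incumbent only on a strict improvement is a simple way to implement the "smaller bandwidth wins ties" rule.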

5. Numerical Examples

5.1. Simulation Study

This section presents a Monte Carlo simulation study designed to compare the estimation performance of the introduced generalized ridge-type kernel (GRTK) estimator and the shrinkage estimators for model (1) in the presence of correlated errors. To generate the parametric part, we use a sparse coefficient vector $\boldsymbol{\beta}$ with both zero and non-zero entries. To introduce multicollinearity, the covariates are generated with a specified level of collinearity, denoted by $\gamma$, using the following equation:

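$$x_{ij}=(1-\gamma^{2})^{1/2}w_{ij}+\gamma\,w_{i,p+1},\qquad i=1,\dots,n,\quad j=1,\dots,p,\qquad(31)$$

where $p$ is the number of predictors, the $w_{ij}$'s are generated independently as $N(0,1)$, and $\gamma$ determines the two correlation levels between the predictors of the parametric component. In this context, the simulated data sets are generated from the following model,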
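The collinear design can be generated as below, assuming (31) is the scheme $x_{ij}=(1-\gamma^{2})^{1/2}w_{ij}+\gamma w_{i,p+1}$ with $w_{ij}\sim N(0,1)$; under this scheme each predictor has unit variance and any two predictors have correlation $\gamma^{2}$. The sample size, $p$ and $\gamma$ values here are illustrative, not the study's settings, and `collinear_design` is a hypothetical name.

```python
import numpy as np


def collinear_design(n, p, gamma, rng):
    """x_ij = sqrt(1 - gamma^2) w_ij + gamma w_{i,p+1}, with w_ij iid N(0, 1)."""
    w = rng.standard_normal((n, p + 1))
    return np.sqrt(1.0 - gamma ** 2) * w[:, :p] + gamma * w[:, [p]]  # shared last column


rng = np.random.default_rng(6)
for gamma in (0.5, 0.9):
    X = collinear_design(20_000, 4, gamma, rng)
    C = np.corrcoef(X, rowvar=False)
    off = C[~np.eye(4, dtype=bool)]
    print(gamma, round(off.mean(), 3), round(np.linalg.cond(X.T @ X), 1))
```

The condition number of $\mathbf{X}^{\top}\mathbf{X}$ grows quickly with $\gamma$, which is the ill-conditioning that the ridge term in GRTK is meant to absorb.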

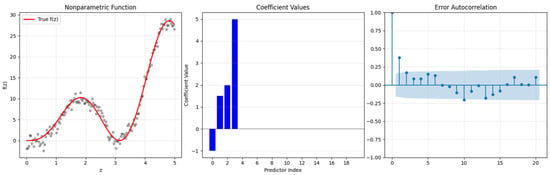

as defined in (1). In model (32), $\mathbf{x}_t$ is constructed by Equation (31); the regression function $f(\cdot)$ and the nonparametric covariate $z_t$ are as specified in (32); and the error terms are generated from the first-order autoregressive process $\varepsilon_t=\rho\varepsilon_{t-1}+u_t$, as in (2). Using the data-generation procedure given by Equations (31) and (32), we consider three different sample sizes, representing low, medium and large samples, to investigate the performance of the introduced estimators, and we use 1000 replications for each simulation combination. The simulation results are provided in the following figures and tables. In addition, Figure 1 shows the generated data to clarify the data-generation procedure.
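One replication of the data-generation step can be sketched as below. Everything specific is a placeholder: the coefficient vector, $f$, the equally spaced $z_t$, $\rho$ and $\sigma_u$ are hypothetical choices, since (32) fixes the actual values. A burn-in period is used so that the AR(1) errors start close to stationarity.

```python
import numpy as np


def ar1_errors(n, rho, sigma_u, rng, burn_in=200):
    """eps_t = rho * eps_{t-1} + u_t with u_t ~ N(0, sigma_u^2); burn-in discarded."""
    u = rng.normal(0.0, sigma_u, n + burn_in)
    eps = np.zeros(n + burn_in)
    for t in range(1, n + burn_in):
        eps[t] = rho * eps[t - 1] + u[t]
    return eps[burn_in:]


def simulate_once(n, beta, gamma, rho, sigma_u, f, rng):
    """One draw of (y, X, z): collinear X, a smooth f, AR(1) errors."""
    p = len(beta)
    w = rng.standard_normal((n, p + 1))
    X = np.sqrt(1.0 - gamma ** 2) * w[:, :p] + gamma * w[:, [p]]
    z = (np.arange(1, n + 1) - 0.5) / n  # hypothetical equally spaced design
    y = X @ beta + f(z) + ar1_errors(n, rho, sigma_u, rng)
    return y, X, z


rng = np.random.default_rng(8)
beta = np.array([2.0, -1.0, 0.0, 0.0, 0.0, 1.5])  # hypothetical sparse coefficients
y, X, z = simulate_once(150, beta, 0.9, 0.5, 0.5, lambda s: np.sin(2 * np.pi * s), rng)

eps = ar1_errors(20_000, 0.5, 1.0, rng)
print(y.shape, X.shape, round(np.corrcoef(eps[1:], eps[:-1])[0, 1], 3), round(eps.var(), 3))
```

Repeating `simulate_once` with a fresh generator state for each replication gives the Monte Carlo loop; the long error series at the end only checks that the lag-1 correlation and the variance $\sigma_u^{2}/(1-\rho^{2})$ come out as expected.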

Figure 1.

Simulated data showing the true smooth function, the sparse coefficients (zero and nonzero), and the autocorrelation function of the error terms for a selected configuration.
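The data-generating steps can be illustrated with the sketch below. Because the exact forms of Equations (31) and (32) are not reproduced here, it assumes a McDonald-Galarneau-type collinearity scheme, $x_{ij} = (1-\rho^2)^{1/2} z_{ij} + \rho z_{i,p+1}$ with standard normal $z$, under which the pairwise correlation between columns is $\rho^2$; the coefficient values, the smooth function, the AR(1) coefficient, and the choice $p = 20$ are hypothetical.

```python
import numpy as np


def simulate_plm(n, p=20, rho=0.95, phi=0.5, sigma=1.0, seed=0):
    """Simulate y = X beta + f(t) + eps with collinear X and AR(1) errors.

    All settings (p, coefficient values, f, phi) are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    # Assumed McDonald-Galarneau-type scheme: the common factor z[:, p]
    # induces pairwise correlation rho**2 between the columns of X.
    z = rng.standard_normal((n, p + 1))
    X = np.sqrt(1 - rho**2) * z[:, :p] + rho * z[:, [p]]
    # Hypothetical sparse coefficients: three nonzero, the rest zero.
    beta = np.zeros(p)
    beta[:3] = [1.0, -1.5, 2.0]
    # Hypothetical smooth function of the nonparametric covariate t.
    t = np.sort(rng.uniform(0.0, 1.0, n))
    f = np.sin(2 * np.pi * t)
    # AR(1) errors eps_i = phi * eps_{i-1} + u_i, started from stationarity.
    u = rng.normal(0.0, sigma, n)
    eps = np.empty(n)
    eps[0] = u[0] / np.sqrt(1 - phi**2)
    for i in range(1, n):
        eps[i] = phi * eps[i - 1] + u[i]
    y = X @ beta + f + eps
    return y, X, t, beta, f, eps
```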

From a computational perspective, the GRTK estimation procedure involves five steps: (1) initial parameter estimation to obtain residuals, (2) estimation of the autocorrelation parameter, (3) data transformation, (4) selection of the (bandwidth, k) pair, and (5) final estimation. The most computationally intensive step is typically the selection of the (bandwidth, k) pair, which requires a two-dimensional grid search. In our implementation, the GCV-based estimator was the most computationally efficient, followed by BIC, AICc, and RECP; RECP involves a pilot variance estimation, which makes it more costly than the others.
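Steps (1)–(3) can be sketched as below. This is an illustration under the assumption of a Prais-Winsten-type quasi-differencing with a least-squares AR(1) estimate computed from pilot residuals; the transformation actually used for the GRTK estimators may differ in details, and the function names are hypothetical.

```python
import numpy as np


def estimate_ar1(resid):
    """Least-squares estimate of phi in e_t = phi * e_{t-1} + u_t (step 2)."""
    e = np.asarray(resid, dtype=float)
    return float(e[:-1] @ e[1:] / (e[:-1] @ e[:-1]))


def quasi_difference(v, phi):
    """Prais-Winsten-type transform along axis 0 (step 3).

    Row 0 is scaled by sqrt(1 - phi**2); row t becomes v_t - phi * v_{t-1}.
    Works for a response vector or a design matrix.
    """
    v = np.asarray(v, dtype=float)
    out = np.empty_like(v)
    out[0] = np.sqrt(1 - phi**2) * v[0]
    out[1:] = v[1:] - phi * v[:-1]
    return out
```

How the transformed response and design then enter steps (4) and (5) follows the paper's own formulation and is not reproduced here.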

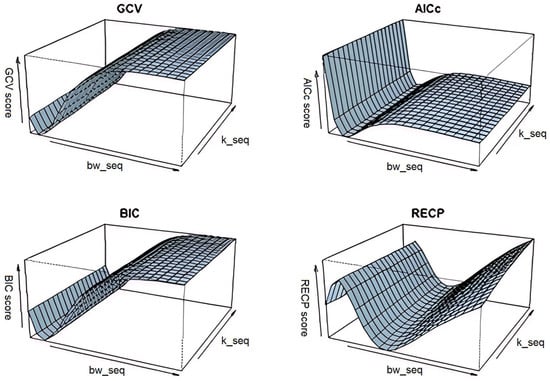

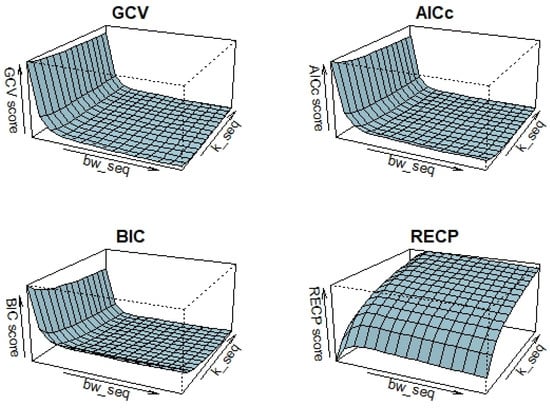

Before presenting the results, we recall that the choice of the kernel bandwidth and of the shrinkage (ridge) parameter k is critical because of its effect on estimation accuracy. The pairs chosen for the different simulation configurations are therefore presented in Table 1 and Figure 2, which show how these parameters are affected by the multicollinearity level between the predictors and by the sample size. Table 1 lists the bandwidth and shrinkage parameters chosen by each of the four criteria for every simulation configuration. Both components of the chosen pair increase as the correlation level rises, which may degrade estimation quality in terms of smoothing. The increase in k, particularly when multicollinearity is high, is expected, since stronger shrinkage counteracts the ill-conditioning of the variance-covariance matrix. For large sample sizes, the criteria generally tend to select lower bandwidths but higher k values. A similar selection pattern is observed in the remaining configurations. We use the tuning pairs obtained in Table 1 and follow the same procedure for the shrinkage estimators, which are built on the GRTK estimator.

Table 1.

Pairs chosen by the GCV, AICc, BIC, and RECP criteria for different simulation configurations for GRTK.

Figure 2.

Selection of the (bandwidth, k) pair by GCV, AICc, BIC, and RECP for a selected simulation configuration.

Additionally, Figure 2 illustrates the selection procedure of each criterion over a grid of bandwidth and shrinkage values simultaneously. GCV, AICc, and BIC exhibit patterns closer to one another than to RECP. This difference evidently stems from the risk estimation procedure of RECP, which uses the pilot variance shown in (30). Accordingly, the RECP-based estimator often performs differently from the others under different conditions.

After the tuning parameters have been chosen data-adaptively, the time series models are estimated and the performance of the estimated regression coefficients of the parametric component is evaluated for each selection criterion. The bias, variance, and SMSD values are presented in Table 2. In addition, a 3D figure is given to show the effect of both the sample size and the correlation level on the quality of the parametric estimates.
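The Monte Carlo summaries can be computed from the replicate estimates as sketched below. The exact SMSD definition is not reproduced here, so the sketch reports per-coefficient bias, variance (with divisor R, so that MSE equals squared bias plus variance), MSE, and a summed MSE as a hypothetical aggregate; the function name is illustrative.

```python
import numpy as np


def mc_summary(estimates, beta_true):
    """Per-coefficient bias, variance and MSE over R Monte Carlo replicates.

    estimates: array of shape (R, p); beta_true: array of shape (p,).
    Returns (bias, variance, mse, total_mse); total_mse is a hypothetical
    aggregate, not necessarily the paper's SMSD.
    """
    est = np.asarray(estimates, dtype=float)
    beta_true = np.asarray(beta_true, dtype=float)
    bias = est.mean(axis=0) - beta_true
    var = est.var(axis=0)                  # divisor R, so mse = bias**2 + var
    mse = ((est - beta_true) ** 2).mean(axis=0)
    return bias, var, mse, float(mse.sum())
```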

Table 2.

Outcomes of the simulations with bias, variance, and SMSD scores for the GRTK estimator.

Table 2 reports the numerical performance of the baseline Generalized Ridge-Type Kernel (GRTK) estimator under various sample sizes and collinearity levels. The bias, variance, and aggregated measures such as SMSD show that high correlation generally inflates estimation error compared with moderate correlation (0.95). Conversely, larger sample sizes mitigate these issues, resulting in smaller bias, variance, and SMSD. These findings are consistent with our theoretical claim that ridge-type kernel smoothing handles partially linear models with correlated errors more robustly as the sample size grows. Nevertheless, because the design contains a relatively large number of predictors, the variance inflation remains non-trivial, suggesting that additional shrinkage mechanisms beyond baseline GRTK might yield further improvements.

Table 3 summarizes the performance of the Stein shrinkage estimator (denoted S), which further regularizes the GRTK approach by incorporating Stein-type shrinkage toward a submodel. Table 3 also reports the average number of coefficients selected with the penalty functions during the simulations, for both the nonzero and the zero (sparse) coefficients. Relative to the baseline GRTK results in Table 2, the shrinkage estimator consistently displays a more favorable bias-variance trade-off across most combinations of sample size and correlation level. In particular, when the predictors are highly collinear, the additional penalization proves beneficial by reducing the inflated variance typically caused by near-linear dependencies among regressors. These results therefore support one of our key arguments: combining kernel smoothing with shrinkage can outperform a pure ridge-type kernel approach in high-dimensional or strongly correlated settings, especially for moderate sample sizes.

Table 3.

Outcomes of the simulations with bias, variance, and SMSD scores for the Stein shrinkage (S) estimator.

Table 4 presents the outcomes for the positive-part Stein (PS) shrinkage estimator, which modifies the ordinary Stein estimator by truncating the shrinkage factor at zero. By design, PS avoids overshrinking when the shrinkage factor becomes negative and is thus expected to perform best among the three estimators, particularly in the high-collinearity scenarios considered here. Indeed, the bias, variance, and SMSD values in Table 4 are generally on par with, or better than, those reported in Table 2 and Table 3. When the collinearity level is especially high, the PS estimator still keeps both the bias and the variance relatively contained, showing that positive-part shrinkage offers robust protection against severe collinearity. These findings support our claim that the PS shrinkage mechanism provides an appealing balance of bias and variance under challenging data conditions, outperforming both the baseline GRTK and the standard Stein shrinkage (S) estimator in many of the tested simulation settings.

Table 4.

Outcomes of the simulations with bias, variance, and SMSD scores for the positive-part Stein (PS) estimator.

Overall, the results in Table 2, Table 3 and Table 4 demonstrate that each proposed estimator, namely baseline GRTK, Stein shrinkage (S), and positive-part Stein shrinkage (PS), responds predictably to varying sample sizes and degrees of multicollinearity. Larger samples result in smaller bias, variance, and SMSD values, confirming the benefit of additional information for parameter estimation. At the same time, strong correlation among predictors inflates these metrics, illustrating the challenge posed by multicollinearity. Moving from GRTK to the two shrinkage-based estimators generally yields progressively better performance, particularly in the higher-dimensional, strongly collinear settings. The positive-part Stein approach (PS) often delivers the most stable results, indicating that its careful control of shrinkage helps mitigate both variance inflation and overshrinking.

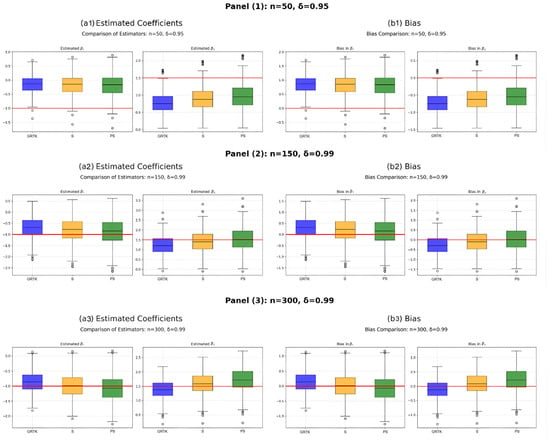

Figure 3 visually compares the distributions of the coefficients estimated by GRTK, S, and PS via boxplots under different sample sizes and correlation levels, complementing the numerical findings in Table 2, Table 3 and Table 4. In each panel, the baseline GRTK estimator often deviates from the true coefficient values (and its bias from zero), but it remains competitive with the shrinkage estimators in terms of variance, even under high collinearity, where Table 2 reports variance inflation. By contrast, the boxplots of the two shrinkage methods, S (Table 3) and PS (Table 4), lie close to the true coefficient values, indicating smaller biases and more stable estimates. Particularly under higher correlation and moderate sample sizes, PS tends to show less spread than the other estimators, consistent with the idea that positive-part Stein shrinkage controls overshrinking while tackling multicollinearity. Overall, these graphical patterns support the numerical trends in the tables, emphasizing how adding shrinkage to a kernel-based estimator improves both bias reduction and variance stabilization in partially linear models.

Figure 3.

Boxplots of the bias and of the estimated regression coefficients obtained with the four criteria for selected simulation configurations. The horizontal line in each subfigure marks zero bias as a reference, and outliers are shown as black circles.

Table 5 reports the mean squared errors (MSE) of the estimated nonparametric component under various combinations of sample size and correlation level. Reflecting the patterns observed for the parametric estimates, a higher correlation level typically increases the MSE values, while a larger sample size reduces them. Notably, the proposed GRTK and its shrinkage versions (S and PS) all perform better as the sample size grows, in line with the theoretical expectation that more data stabilize both kernel smoothing and shrinkage in a partially linear context.

Table 5.

MSE values for the estimated nonparametric component for all simulation combinations.

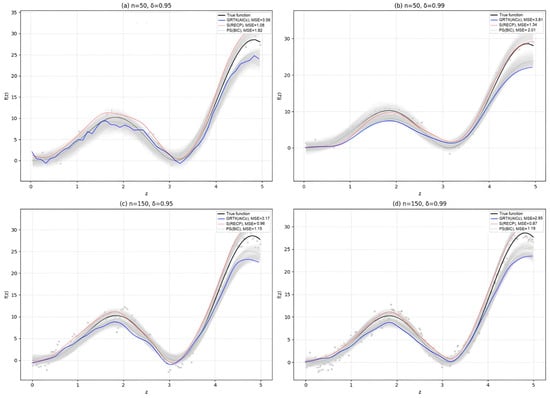

Figure 4 further illustrates these differences by depicting the fitted nonparametric curves for selected simulation settings. Panels (a) and (b) highlight how increasing the correlation level can distort the estimated function, leading to higher MSE, an effect that is more pronounced for smaller samples. Panels (c) and (d) show that when n grows, each estimator recovers the true nonlinear pattern more closely, supporting the numerical results in Table 5. Taken together, Table 5 and Figure 4 confirm that although multicollinearity and small sample sizes can hamper nonparametric estimation, the combination of kernel smoothing with shrinkage remains robust and delivers reasonable reconstructions of the nonparametric component in challenging time series settings.

Figure 4.

Smooth curves obtained for different configurations, showing the effect of the correlation level in panels (a,b) and (c,d) and the effect of the sample size in panels (a,c) and (b,d).

5.2. Simulation Study with Large Samples

In this section, we report results for larger sample sizes (n = 500 and n = 1000) as empirical evidence for the asymptotic properties, using fewer configurations than in Section 5.1. The results are presented in Table 6, Table 7, Table 8 and Table 9 and Figure 5.

Table 6.

Large-sample results of simulations with bias, variance and SMSD scores for the GRTK estimator.

Table 7.

Large-sample results of simulations with bias, variance and SMSD scores for the Stein shrinkage (S) estimator.

Table 8.

Large-sample results of simulations with bias, variance and SMSD scores for the positive-part Stein (PS) estimator.

Table 9.

MSE values for the estimated nonparametric component for large samples.

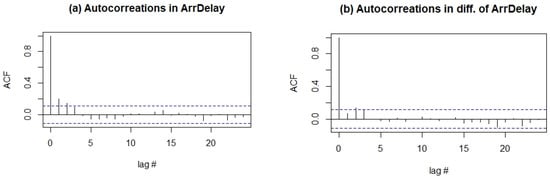

Figure 5.

Autocorrelation functions (ACFs) for the model error in (33): panel (a) shows the ACF of the original response variable; panel (b) shows the ACF of the differenced variable. In both panels, “lag #” denotes the number of lags.

In Table 6, the GRTK estimator serves as the baseline semiparametric estimator. In terms of sample size, bias decreases by about 27% and variance by about 42% from n = 500 to n = 1000. Regarding the correlation impact, performance under the higher correlation level is roughly three times worse than under the lower level, confirming the effect of multicollinearity. Moreover, RECP is consistently the best criterion (lowest SMSD), while AICc varies the most because its correction term is sensitive to changes in both the sample size and the degrees of freedom.

In Table 7, the Stein shrinkage estimator creates a bias-variance trade-off through intentional shrinkage. The benefits of shrinkage are evident in the SMSD reduction relative to GRTK, demonstrating the advantage of Stein-type shrinkage. Sample size effects follow patterns similar to those of GRTK, with bias and variance reductions consistent with the theoretical scaling. Shrinkage provides larger relative improvements under the higher correlation level, helping to mitigate multicollinearity effects. The ranking of the selection criteria is preserved under shrinkage, indicating robust performance across different regularization approaches. Additionally, the average selected submodel changes from (3.2, 16.8) for n = 500 to (3.4, 16.6) for n = 1000, suggesting improved signal-noise discrimination with larger samples.

In Table 8, the positive-part Stein estimator prevents overshrinking by eliminating negative shrinkage factors. This estimator attains the lowest SMSD in the majority of scenarios, improving on both GRTK and S. The theoretical dominance property is empirically confirmed across all scenarios. Sample size effects follow the same asymptotic scaling patterns, while correlation impacts are similar to those of the other estimators but with enhanced robustness. Among the selection criteria, RECP continues to perform best, while AICc shows the highest variability because of the sensitivity of its correction term. Importantly, the advantages of positive-part shrinkage persist even at n = 1000, confirming that the theoretical benefits extend to large samples and validating the methodology in large-sample settings.

In Table 9, the nonparametric component is estimated with the Nadaraya-Watson weights defined in Equation (7). By Theorem 2, the nonparametric estimator achieves the convergence rate stated there, and the MSE decreases from n = 500 to n = 1000 as expected. The shrinkage methods also reduce the MSE substantially relative to GRTK: a better estimate of the parametric coefficients improves the partial residuals in Equation (13), so improvements in the parametric part carry over to the recovery of the nonparametric component.

The higher correlation level increases the MSE by approximately 15–30% compared with the lower level, which is much smaller than the increase observed for the parametric components in Table 6, Table 7 and Table 8, suggesting that the nonparametric component is more robust to multicollinearity in the parametric part. Regarding the selection criteria, unlike the parametric case where RECP consistently dominated (see Section 4), the nonparametric component shows more varied optimal criteria, with GCV performing best for the S estimator and BIC for the PS estimator, indicating that optimal bandwidth selection from Equations (27)–(30) may require different approaches depending on the underlying parametric estimation method.

The superior performance of shrinkage estimators validates the interconnected nature of parametric and nonparametric components in semiparametric models, consistent with the bias-variance decomposition in Equation (26), where improvements in one component gradually provide benefits to the other component.
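The partial-residual step behind Table 9 can be sketched as follows: once the parametric coefficients are estimated, the nonparametric component is obtained by smoothing $y - X\hat\beta$ over the nonparametric covariate. The sketch assumes a Gaussian kernel with row-normalized Nadaraya-Watson weights; Equation (7) may use a different kernel, and the function names are hypothetical.

```python
import numpy as np


def nadaraya_watson(t_eval, t, r, h):
    """Gaussian-kernel Nadaraya-Watson estimate of E[r | t] at t_eval."""
    d = (np.asarray(t_eval, float)[:, None] - np.asarray(t, float)[None, :]) / h
    w = np.exp(-0.5 * d**2)
    w /= w.sum(axis=1, keepdims=True)   # each row of weights sums to one
    return w @ np.asarray(r, float)


def fit_nonparametric(y, X, beta_hat, t, h):
    """Smooth the partial residuals y - X @ beta_hat over t (hypothetical helper)."""
    return nadaraya_watson(t, t, y - X @ beta_hat, h)
```

In a toy setting, shifting the coefficients away from the truth typically raises the MSE of the smoothed curve, which mirrors the mechanism by which better parametric estimates improve nonparametric recovery.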

5.3. Real Data Example: Airline Delay Dataset

5.3.1. Data and Model

In this section, we use the airline delay dataset to show the performance of the modified GRTK estimators. The Bureau of Transportation Statistics (BTS), a division of the U.S. Department of Transportation (DOT), monitors the punctuality of domestic flights operated by major air carriers. Since June 2003, BTS has collected comprehensive daily time series information on the causes of flight delays. Using these data, we fit the partially linear time series model with the GRTK estimators and their shrinkage versions, and we compare their performance under the four selection criteria for the shrinkage and bandwidth parameters. The original dataset contains 1,048,576 data points, which is impractical to process in full for this analysis, so we extracted a representative consecutive series from the data. Our aim is not to solve a specific applied problem but to demonstrate the merits of the introduced semiparametric estimators; a series of this length is therefore sufficient to illustrate both the modelling procedure and its alignment with the simulation settings. To construct the semiparametric time series model, we use six explanatory variables for the parametric component: departure time, CRS departure time, actual elapsed time, CRS elapsed time, departure delay, and distance. The air time of the aircraft is used as the nonparametric covariate, because the hypothetical curve in Figure 6(d) suggests a nonlinear relationship between the response variable, arrival delay, and air time. Accordingly, the model to be estimated is given by:

where the regressors are the six explanatory covariates defined above with their corresponding regression coefficients, and the error terms are autocorrelated as shown in (2).

5.3.2. Pre-Processing

The autocorrelation parameter is estimated from the differenced response. Figure 5 shows the autocorrelation functions of both the original (non-stationary) and the differenced series.
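The differencing step and the ACF comparison in Figure 5 can be sketched as below. It assumes that the autocorrelation parameter is estimated by the lag-1 sample autocorrelation of the differenced series, which may differ from the estimator used in the paper; a simulated random walk stands in for the non-stationary response.

```python
import numpy as np


def sample_acf(x, max_lag):
    """Sample autocorrelations r_0, ..., r_max_lag of a 1-D series."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    denom = x @ x
    return np.array([x[: len(x) - k] @ x[k:] / denom for k in range(max_lag + 1)])


# Illustration with a simulated random walk standing in for the raw response.
rng = np.random.default_rng(0)
walk = np.cumsum(rng.standard_normal(500))
acf_level = sample_acf(walk, 10)           # decays slowly: non-stationary
acf_diff = sample_acf(np.diff(walk), 10)   # close to zero after differencing
phi_hat = acf_diff[1]                      # assumed lag-1 estimate of the AR parameter
```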

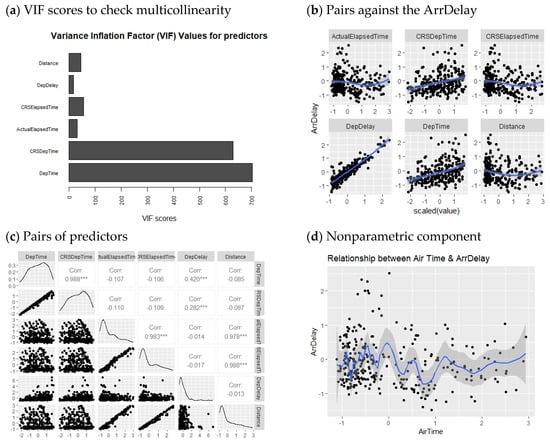

Each panel of Figure 6 describes an important property of the time series. In panel (a), the variance inflation factors (VIFs) of the predictors in the parametric component show that five of the six variables have VIF values greater than 10, indicating a serious multicollinearity problem that needs to be addressed. Panel (b) shows the linear relationship between the predictors and the response variable, which is also evident in panel (c), depicting the pairwise correlations between variables. Finally, panel (d) illustrates the relationship between arrival delay and the nonparametric covariate, air time, with a hypothetical curve.

Figure 6.

Informative plots for the airline delay dataset. In panel (c), asterisks beside the correlation values indicate statistically significant correlations. In panels (b) and (d), blue lines indicate hypothetical relationships between variables.
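The VIF diagnostic in panel (a) of Figure 6 can be illustrated with the sketch below, which regresses each predictor on the others plus an intercept and returns $1/(1-R_j^2)$. It is a generic implementation, not the code used to produce the figure.

```python
import numpy as np


def vif(X):
    """VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing column j on the rest."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        # Other predictors plus an intercept column.
        Z = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(Z, target, rcond=None)
        resid = target - Z @ coef
        r2 = 1.0 - resid @ resid / np.sum((target - target.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out
```

Values above 10, as for five of the six airline predictors, are the conventional warning sign of serious multicollinearity.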

As in the simulation study, 3D plots are generated to illustrate the selection of the bandwidth and the shrinkage parameter k for the four criteria, as shown in Figure 7, and the pair selected by each criterion is obtained from this procedure. After obtaining the chosen tuning parameters, the performance of the GRTK estimators is presented in Table 10. This table includes the model variance, the MSE of the nonparametric component estimate, and the overall variance of the regression coefficients, computed from the covariance matrix given in (19). The best scores are shown in bold in Table 10. Consistent with the findings from the simulation study, the criteria yield close performances.

Figure 7.

Selection of the (bandwidth, k) pair based on the four criteria GCV, AICc, BIC, and RECP for the airline delay dataset.

The estimators based on the different criteria perform similarly: the best-performing estimator is closely followed by the second best, and the remaining two also perform well and close to them. Additionally, to assess the statistical significance of the estimated regression coefficients, Student t-test statistics and their p-values are presented in Table 5. The estimated models are also tested with the F-test, and the p-values are provided. The results indicate that all estimated models based on the four criteria are statistically significant. However, three of the covariates do not make a significant contribution to the estimated model, despite the overall significance of the models. In conclusion, all criteria yield meaningful models through their parameter selection procedures.

5.3.3. Model Fitting and Results

Table 10 presents the final performance measures of the GRTK-based estimators (including their shrinkage variants) for the airline delay dataset, covering both the parametric and the nonparametric components. These metrics (model variance, MSE of the nonparametric estimate, and overall variance of the regression coefficients) show that all four selection criteria (GCV, AICc, BIC, RECP) yield closely comparable results, mirroring the pattern observed in the simulation study for moderate-to-large sample sizes. RECP has a slight edge in some measures, yet the other criteria remain competitive. This consistency with the simulation outcomes underscores two key conclusions: (1) the GRTK framework and its shrinkage modifications mitigate variance inflation in the presence of multicollinearity; and (2) once an adequately sized dataset is available, different penalty-parameter selection methods converge toward similarly effective solutions in partially linear time series models.

Table 10.

Performance of the GRTK estimators for parametric and nonparametric components of the model.

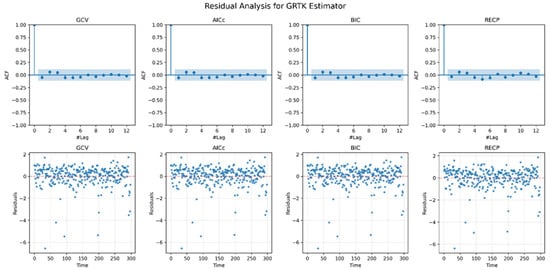

Figure 8 provides a residual analysis for each of the four GRTK-based models (including shrinkage versions) applied to the airline delay dataset. The top row displays the autocorrelation functions (ACFs) of the residuals, indicating whether significant time dependence remains after fitting. In all four cases, autocorrelation appears modest and tapers off quickly, suggesting that the estimated models have captured most of the serial dependence in the data. The bottom row shows residuals scattered around zero without any pronounced pattern, hinting at an absence of systematic bias or heteroscedasticity. Consequently, the visual diagnostic supports the conclusion that, regardless of the specific selection criterion (GCV, AICc, BIC, RECP), the introduced GRTK estimators adequately address the combined challenges of autocorrelation and multicollinearity in this real-world time series setting.

Figure 8.

Residual analysis for the airline delay dataset. The plots at the top of the figure show the ACFs of the residuals of the models estimated with the corresponding criterion. The plots at the bottom show scatterplots of the residuals around zero (red dashed line).

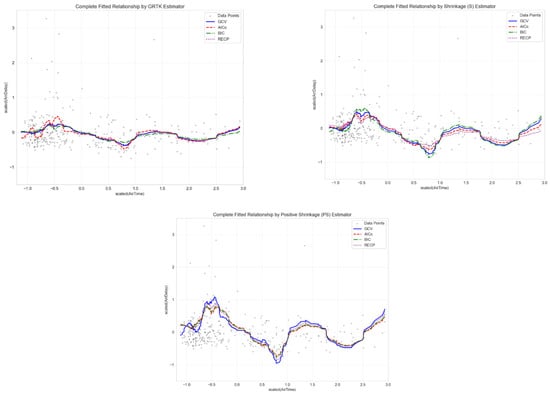

Figure 9 presents the estimated curves of the nonparametric component under the proposed GRTK framework, for the baseline GRTK as well as its ordinary Stein (S) and positive-part Stein (PS) shrinkage versions. Each panel corresponds to one of the four selection criteria (GCV, AICc, BIC, and RECP), which choose the pair of bandwidth and shrinkage parameter k. All four criteria minimize a penalized objective function to balance bias (from potentially excessive smoothing or shrinkage) and variance (from insufficient shrinkage). As seen in the figure, different choices of the pair yield subtle variations in the smoothness and curvature of the estimated function. For example, one of the two information criteria (AICc or BIC) typically enforces a slightly larger bandwidth to address overfitting risks in moderate samples, resulting in a smoother curve, whereas the other can pick a smaller bandwidth or a larger k, leading to more parsimonious fits with sharper inflection points. GCV often strikes a middle ground, and RECP sometimes selects a relatively large bandwidth or a smaller shrinkage parameter if its pilot-based risk estimation deems this configuration optimal.

Figure 9.

Fitted curves obtained based on the four criteria.

Beyond these distinctions, the figure also highlights the effect of adding shrinkage to the baseline GRTK estimates. Visually, the PS-based curves often appear slightly more adaptive in regions where GRTK or S might be overly penalized, reflecting a more balanced bias–variance trade-off. Despite these finer differences, all curves capture the primary nonlinear trends and fall within reasonable bounds, reinforcing the broader conclusion that combining kernel smoothing with either Stein or positive-part Stein shrinkage effectively handles both autocorrelation and multicollinearity in semiparametric time series models.

6. Conclusions

This paper introduces and analyzes modified ridge-type kernel smoothing estimators tailored for semiparametric time series models with multicollinearity in the parametric component. By combining the generalized ridge-type kernel (GRTK) methodology with Stein and positive-part Stein shrinkage versions, we address both near-linear dependencies among regressors and autocorrelation in the error structure. We also explore how to select the bandwidth and the shrinkage parameter k data-adaptively using four widely used criteria: GCV, AICc, BIC, and RECP.

From the detailed simulation study and the real-world airline delay dataset, the following conclusions can be drawn:

- The GCV-based GRTK estimator effectively balances bias and variance for both parametric and nonparametric components. In simulations, it consistently provides stable estimates of the linear coefficients and suitably smooth fits of the nonlinear function.

- All four criteria show instability in small samples, as expected. However, in medium and large samples, the proposed estimators achieve more reliable performance, with reduced bias, variance, and SMSD. The GRTK-based approach effectively manages the autoregressive nature of the errors, highlighting the importance of accounting for correlation in time series data.

- Shrinkage estimators, especially positive-part Stein, excel at mitigating variance inflation and overshrinking under strong multicollinearity. They outperform standard methods when predictors are strongly correlated, particularly in larger samples.

- The large-sample results (n = 500 and n = 1000) empirically confirm the asymptotic properties established in Theorems 1–2. Bias and variance decrease as the sample size increases from 500 to 1000, as expected, indicating that the GRTK estimators largely achieve their theoretical performance in practice.

- The positive-part Stein estimator demonstrates superior and consistent dominance over both baseline GRTK and ordinary Stein shrinkage across all large-sample scenarios, with the theoretical risk ordering being empirically confirmed. This dominance persists even at n = 1000, where the three estimators perform very closely, indicating that the benefits of positive-part shrinkage are not merely finite-sample phenomena but extend to asymptotic regimes, making it the recommended approach for practical applications regardless of sample size.

- The airline delay results align with the simulations. All selection methods yield comparable models, demonstrating their ability to balance penalty tuning. This advantage becomes most pronounced in moderate and large samples, and GCV often provides a straightforward yet effective way of setting the (bandwidth, k) pair.

The evidence from both simulations and the airline-delay study indicates that the GRTK family is a practical tool for the twin problems of multicollinearity and autocorrelated errors in partially linear time series models. Even so, the estimator’s effectiveness rests on several working assumptions and design choices, which raise open questions and lead to the following caveats:

- The estimators assume weakly stationary, short-memory errors;

- A two-dimensional grid search over the (bandwidth, k) pair is required, which can impose a serious computational burden;

- The method assumes that only one of the covariates has a nonparametric relationship with the response variable;

- Shrinkage may introduce finite-sample bias when true coefficients are large;

- The method relies on a consistent preliminary estimate of the autocorrelation parameter.

As a result, the proposed estimators fill an important gap in semiparametric modeling by jointly tackling multicollinearity and autocorrelation, thereby yielding more robust and interpretable results for partially linear models in time series contexts.

Author Contributions

Conceptualization, S.E.A. and D.A.; methodology, D.A. and E.Y.; software, E.Y.; validation, S.E.A. and D.A.; formal analysis, E.Y.; investigation, E.Y.; resources, S.E.A.; data curation, E.Y.; writing—original draft preparation, S.E.A. and E.Y.; writing—review and editing, S.E.A.; visualization, E.Y.; supervision, S.E.A. and D.A.; project administration, S.E.A. All authors have read and agreed to the published version of the manuscript.

Funding

The research of S. Ejaz Ahmed was supported by the Natural Sciences and Engineering Research Council (NSERC) of Canada. This research received no other external funding.

Data Availability Statement

The R functions used in the simulation studies can be accessed at https://github.com/yilmazersin13/Partially-linear-time-series-model (accessed on 5 April 2025), and the AirDelay dataset used in the real data example is publicly available on the Kaggle platform at https://www.kaggle.com/datasets/undersc0re/flight-delay-and-causes (accessed on 5 April 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Derivations of GRTK Estimators

Appendix A.1. Derivations of Equations (12)–(14)

Accordingly, Equation (20) can be expressed as:

Equivalently, from (17), we obtain the bias as in Equation (21):

Also, the variance of an estimator given in (22) can be derived as follows:

As a result, it can be written in the abbreviated form of (22):

This completes the derivation.

Appendix A.2. Proof of Equation (24)

Thus, the hat matrix is written as follows:

Appendix B. Asymptotic Supplement: Bias, AQDB, and ADR

This appendix provides a treatment of the asymptotic bias, Asymptotic Quadratic Distributional Bias (AQDB), and Asymptotic Distributional Risk (ADR) for the GRTK-based estimators introduced in Section 3. Specifically, we focus on:

- The baseline GRTK (FM) estimator from Equation (12),

- The ordinary Stein shrinkage estimator from Equation (14), and

- The positive-part Stein shrinkage estimator from Equation (15).

We consider errors with a covariance matrix that captures possible autocorrelation (for example, an AR(1) structure). Throughout, we assume that this covariance is known (or replaced with a consistent estimator, which suffices for the same asymptotic properties). We also assume standard regularity conditions on the kernel smoothing of the nonparametric component (bandwidth and smoothness conditions as n → ∞). Under these conditions, all estimators in Section 3 are consistent for the true coefficient vector, and we can derive their bias, variance, and risk expansions.

Appendix B.1. Baseline GRTK (FM) Estimator

Recall that the full-model GRTK estimator may be written as

where the partially centered response is obtained by subtracting the estimated nonparametric component from the response, and the design matrix collects the parametric covariates. Define

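Hence, the estimator is obtained by applying this matrix to the partially centered response. If the partially centered response admits the decomposition obtained after partial kernel smoothing of the model, then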

One can show that the resulting matrix effectively acts as a ridge-type operator, capturing the ridge shrinkage on the parametric coefficients. Hence,

Exact Bias. Taking expectations (conditionally on the covariates) and using the large-n approximation of the smoothing step, we get

plus small terms from the smoothing residual. Since the Gram matrix of the smoothed design grows at order $n$ in typical regressions and $k$ may vanish with $n$ (or remain bounded), this bias goes to zero as $n \to \infty$. The asymptotic behavior of this bias term depends crucially on how $k$ scales with $n$. We can distinguish several cases:

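(i) If $k = O(1)$ ($k$ remains constant or bounded as $n \to \infty$): since the Gram matrix of the smoothed design is of order $n$, its ridge-regularized inverse is $O(n^{-1})$, and the bias term becomes $O(k/n)$, which converges to zero at rate $O(n^{-1})$.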

(ii) If $k = O(n^{\alpha})$ for some $\alpha > 0$: the bias term becomes $O(n^{\alpha - 1})$, which still converges to zero when $\alpha < 1$, but at a slower rate than in case (i).

(iii) If $k$ grows at least as fast as $n$: the bias may not vanish asymptotically, potentially compromising consistency.
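The rates in cases (i)–(iii) can be checked numerically. The sketch below is a simplified stand-in: it uses an ordinary ridge estimator on a Gaussian design in place of the smoothed GRTK design, assuming the Gram matrix grows like $n$, and evaluates the exact conditional bias $-k(X^\top X + kI)^{-1}\beta$ for a fixed $k$, for $k = n^{1/2}$, and for $k = n$. The coefficient vector and sample sizes are hypothetical.

```python
import numpy as np


def ridge_bias(X, beta, k):
    """Exact conditional bias of (X'X + kI)^{-1} X'y when E[y | X] = X beta.

    Equals -k (X'X + kI)^{-1} beta; a plain ridge stand-in for GRTK.
    """
    G = X.T @ X
    return -k * np.linalg.solve(G + k * np.eye(G.shape[0]), beta)


rng = np.random.default_rng(0)
beta = np.array([1.0, -1.0, 0.5])
for n in (250, 1000, 4000):
    X = rng.standard_normal((n, 3))
    # Cases (i) k fixed, (ii) k = n**0.5, (iii) k = n.
    norms = [np.linalg.norm(ridge_bias(X, beta, k)) for k in (5.0, n**0.5, float(n))]
    print(n, np.round(norms, 4))
```

With fixed k the bias norm should fall roughly fourfold each time n quadruples, roughly twofold for k = n**0.5, and stay near half of the norm of beta for k = n.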

Therefore, consistency of the estimator requires $k/n \to 0$ as $n \to \infty$, which is satisfied when $k = o(n)$. This asymptotic requirement aligns with our parameter selection methods in Section 4, which implicitly balance the bias-variance trade-off by choosing appropriate values of $k$. Hence, the GRTK estimator is consistent, with an $O(k/n)$ bias in finite samples that reflects shrinkage toward zero. In summary:

Appendix B.2. Shrinkage Estimators

Denote by SM the submodel estimator, obtained by fitting the same GRTK procedure to a subset of regressors selected with the BIC criterion, with the omitted coefficients set to zero. Then the ordinary Stein-type shrinkage estimator is

where the shrinkage factor is determined from the data (discussed in Subsection B3). If the factor equals 1, no shrinkage is applied (we use the full model); if it equals 0, we revert to the submodel; for values between 0 and 1, we form a weighted compromise. The positive-part shrinkage estimator is

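so that negative values of $\omega$ (which might arise due to sampling error) are replaced by 0 to prevent over-shrinkage.

Regarding the bias of the shrinkage estimators, note that $\hat{\boldsymbol\beta}_{\mathrm{SM}}$ itself has some bias (particularly if the omitted coefficients are not truly zero), while $\hat{\boldsymbol\beta}_{\mathrm{FM}}$ has the ridge bias but includes all parameters. Let $\mathbf b_{\mathrm{SM}} = \mathbb E(\hat{\boldsymbol\beta}_{\mathrm{SM}}) - \boldsymbol\beta$ and $\mathbf b_{\mathrm{FM}} = \mathbb E(\hat{\boldsymbol\beta}_{\mathrm{FM}}) - \boldsymbol\beta$. A first-order approximation, assuming $\omega$ is not strongly correlated with the random errors in $\hat{\boldsymbol\beta}_{\mathrm{FM}} - \hat{\boldsymbol\beta}_{\mathrm{SM}}$, gives

$$\operatorname{Bias}(\hat{\boldsymbol\beta}_{\mathrm S}) \approx \mathbb E(\omega)\,\mathbf b_{\mathrm{FM}} + \{1 - \mathbb E(\omega)\}\,\mathbf b_{\mathrm{SM}}.$$

Hence the bias of $\hat{\boldsymbol\beta}_{\mathrm S}$ is approximately a linear mixture of the submodel bias and the full-model bias. For large $n$, we typically find $\mathbf b_{\mathrm{FM}}$ small, while $\mathbf b_{\mathrm{SM}}$ is either $\mathbf 0$ (when the submodel is correct) or, if some omitted coefficients are actually nonzero, contains a larger $O(1)$ or $O(n^{-1/2})$ term. Thus, $\hat{\boldsymbol\beta}_{\mathrm S}$ has a smaller bias than the submodel estimator whenever $\mathbb E(\omega) > 0$ (recall $\omega \le 1$) and $\mathbf b_{\mathrm{FM}}$ is negligible.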
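The quality of this first-order mixture can be checked by simulation in a simplified setting. The sketch assumes, for illustration only, the canonical limiting experiment for the omitted block: $\sqrt n\,\hat{\boldsymbol\beta}_{2,\mathrm{FM}} \sim N(\boldsymbol\delta, \mathbf I_{p_2})$, the submodel sets the block to zero (so $\mathbf b_{\mathrm{FM}} = \mathbf 0$ and $\mathbf b_{\mathrm{SM}} = -\boldsymbol\delta$ on the $\sqrt n$ scale), and $\omega = 1 - (p_2 - 2)/D_n$ with $D_n = n\lVert\hat{\boldsymbol\beta}_{2,\mathrm{FM}}\rVert^2$. In this setting the exact Stein bias is $-(p_2 - 2)\,\mathbb E\{\chi^{-2}_{p_2+2}(\Delta)\}\,\boldsymbol\delta$, so the mixture is close but not identical, because $\omega$ is correlated with the errors. The function name `stein_bias_check` is hypothetical.

```python
import numpy as np

def stein_bias_check(delta, reps=200000, seed=3):
    """Exact Monte Carlo Stein bias vs the mixture E(omega) b_FM + (1 - E(omega)) b_SM.

    Canonical sqrt(n)-scale setting (an assumption): b_FM = 0 and b_SM = -delta.
    """
    rng = np.random.default_rng(seed)
    p2 = len(delta)
    fm = delta + rng.standard_normal((reps, p2))        # sqrt(n) * b2_FM
    omega = 1.0 - (p2 - 2) / np.sum(fm ** 2, axis=1)    # Stein factor from D_n
    exact = (omega[:, None] * fm).mean(axis=0) - delta  # E[sqrt(n) (b2_S - beta2)]
    mixture = (1.0 - omega.mean()) * (-delta)           # first-order approximation
    return exact, mixture

exact, mixture = stein_bias_check(np.full(5, np.sqrt(5.0)))   # Delta = ||delta||^2 = 25
print("exact  :", np.round(exact, 3))
print("mixture:", np.round(mixture, 3))
```

For $\Delta = 25$ the two bias vectors agree closely, and both are much smaller in magnitude than the submodel bias $-\boldsymbol\delta$.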

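Positive-Part Variant. For $\hat{\boldsymbol\beta}_{\mathrm{PS}}$, the same expansion holds with $\omega$ replaced by $\omega^{+} = \max(0, \omega)$. Since $\omega \le \omega^{+} \le 1$, we have $0 \le 1 - \mathbb E(\omega^{+}) \le 1 - \mathbb E(\omega)$, so when $\mathbf b_{\mathrm{FM}}$ is negligible,

$$\left|\operatorname{Bias}(\hat{\boldsymbol\beta}_{\mathrm{PS}})\right| \lesssim \left|\operatorname{Bias}(\hat{\boldsymbol\beta}_{\mathrm S})\right|$$

elementwise, in the approximate sense of the first-order expansion above. That is, the positive-part rule improves on, or matches, the ordinary Stein rule in bias magnitude, because it disallows negative $\omega$.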
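The two combination rules are simple to compute once the full-model and submodel fits are available. The sketch below assumes the James-Stein-type factor $\omega = 1 - (p_2 - 2)/D_n$ used in the main text, and the coefficient vectors are hypothetical numbers, not results from the paper. The function name `stein_estimators` is illustrative.

```python
import numpy as np

def stein_estimators(beta_fm, beta_sm, D, p2):
    """Ordinary (S) and positive-part (PS) Stein combinations of the FM and SM fits.

    Assumes omega = 1 - (p2 - 2) / D with p2 >= 3 omitted coefficients.
    """
    if p2 < 3:
        raise ValueError("Stein-type shrinkage needs p2 >= 3 omitted coefficients")
    omega = 1.0 - (p2 - 2) / D
    omega_plus = max(0.0, omega)                      # positive-part rule: clip negatives to 0
    beta_s = beta_sm + omega * (beta_fm - beta_sm)
    beta_ps = beta_sm + omega_plus * (beta_fm - beta_sm)
    return beta_s, beta_ps, omega, omega_plus

# Hypothetical fits: p = 5 coefficients, the last p2 = 3 are set to zero in the submodel.
beta_fm = np.array([1.2, -0.8, 0.10, -0.05, 0.08])
beta_sm = np.array([1.1, -0.7, 0.0, 0.0, 0.0])

for D in (0.5, 3.0, 50.0):
    b_s, b_ps, w, w_plus = stein_estimators(beta_fm, beta_sm, D, p2=3)
    print(f"D={D:5.1f} omega={w:+.3f} omega+={w_plus:.3f} S={np.round(b_s, 3)} PS={np.round(b_ps, 3)}")
```

For $D_n = 0.5$ the factor is $\omega = -1$, so the ordinary Stein estimate overshoots the submodel and flips the sign of the omitted-block estimates, whereas the positive-part estimate stops at the submodel. For large $D_n$ both rules essentially return the full-model fit.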

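As given in Section 3, the distance measure $D_n$ quantifies how far $\hat{\boldsymbol\beta}_{\mathrm{FM}}$ is from the submodel estimator $\hat{\boldsymbol\beta}_{\mathrm{SM}}$. A convenient choice is the squared Mahalanobis distance of the full-model estimate of the omitted block, $\hat{\boldsymbol\beta}_{2,\mathrm{FM}}$ (the last $p_2$ coefficients), from zero:

$$D_n = \hat{\boldsymbol\beta}_{2,\mathrm{FM}}^{\top}\,\widehat{\operatorname{Var}}\big(\hat{\boldsymbol\beta}_{2,\mathrm{FM}}\big)^{-1}\,\hat{\boldsymbol\beta}_{2,\mathrm{FM}}.$$

Under the “null” submodel assumption (that the omitted parameters are actually zero), $D_n$ approximately follows a $\chi^2$ distribution with $p_2$ degrees of freedom; the distribution becomes noncentral if the omitted effects are not truly zero. The main text (see Equation (14)) uses $D_n$ in the formula for the shrinkage factor:

$$\omega = 1 - \frac{p_2 - 2}{D_n},$$

where $p_2 \ge 3$. If $D_n < p_2 - 2$, then $\omega$ becomes negative; the positive-part approach sets $\omega^{+} = \max(0, \omega) = 0$.

Asymptotic Distribution of $D_n$. Under standard conditions (normal or asymptotically normal errors, large $n$):

$$D_n \xrightarrow{\;d\;} \chi^2_{p_2}(\Delta),$$

where $\Delta$ is a noncentrality parameter linked to how large the omitted true coefficients are. In a local-alternatives framework with $\boldsymbol\beta_2 = n^{-1/2}\boldsymbol\delta$ for the omitted block, $\Delta$ remains finite as $n \to \infty$. If $\boldsymbol\delta = \mathbf 0$ (null case), then $\Delta = 0$ and $D_n$ is asymptotically central $\chi^2_{p_2}$, with mean $p_2$. If $\Delta$ is large, $D_n$ tends to be large, so $\omega \approx 1$. This adaptivity is what drives the shrinkage phenomenon.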

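- If $D_n$ is large ($D_n \gg p_2 - 2$), we suspect that the omitted coefficients are nonzero, so $\omega \approx 1$ retains the full-model estimate.

- If $D_n$ is near $p_2$ (its null mean), then $\omega \approx 2/p_2$ is moderate ($0 < \omega < 1$), partially shrinking FM toward SM.

- If $D_n < p_2 - 2$, then $\omega$ is negative, which the positive-part rule clips to 0.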
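The behavior of $D_n$ and $\omega$ in these three regimes can be illustrated by simulation. This sketch makes simplifying assumptions that are not part of GRTK: it drops the nonparametric component and the ridge penalty, uses an ordinary least-squares full model with independent standard-normal covariates and unit error variance, and computes $D_n$ as a Wald-type Mahalanobis distance for the omitted block. Under these assumptions $\Delta = \lVert\boldsymbol\delta\rVert^2$. The names `wald_distance` and `simulate` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p1, p2, reps = 200, 2, 4, 2000
beta1 = np.array([1.0, -1.0])

def wald_distance(X, y, p1):
    """D_n = b2' [Var(b2)]^{-1} b2 for the last p2 coefficients of an OLS full-model fit."""
    XtX_inv = np.linalg.inv(X.T @ X)
    b = XtX_inv @ (X.T @ y)
    resid = y - X @ b
    sigma2 = resid @ resid / (len(y) - X.shape[1])
    b2 = b[p1:]
    return b2 @ np.linalg.solve(sigma2 * XtX_inv[p1:, p1:], b2)

def simulate(delta):
    """Draw D_n and omega = 1 - (p2 - 2) / D_n under the local alternative beta2 = delta / sqrt(n)."""
    beta2 = delta / np.sqrt(n)
    D = np.empty(reps)
    for r in range(reps):
        X = rng.standard_normal((n, p1 + p2))
        y = X[:, :p1] @ beta1 + X[:, p1:] @ beta2 + rng.standard_normal(n)
        D[r] = wald_distance(X, y, p1)
    return D, 1.0 - (p2 - 2) / D

D0, w0 = simulate(np.zeros(p2))        # null case: Delta = 0
D1, w1 = simulate(np.full(p2, 2.5))    # Delta = ||delta||^2 = 25 under these assumptions

print(f"null: mean D = {D0.mean():.2f}, share omega < 0 = {(w0 < 0).mean():.3f}")
print(f"alt:  mean D = {D1.mean():.2f}, mean omega+ = {np.maximum(w1, 0).mean():.3f}")
```

Under the null, the mean of $D_n$ should be close to $p_2 = 4$ and the share of negative $\omega$ close to $P(\chi^2_4 < 2) = 1 - 2e^{-1} \approx 0.26$; under the alternative, $D_n$ is large and $\omega^{+}$ is close to 1.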

Appendix B.3. Asymptotic Quadratic Distributional Bias (AQDB) and Distributional Risk (ADR)

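We next assess each estimator’s risk and define the Asymptotic Quadratic Distributional Bias (AQDB). For an estimator $\hat{\boldsymbol\beta}^{*}$ and a positive-definite weight matrix $\mathbf W$, define the weighted quadratic loss

$$L(\hat{\boldsymbol\beta}^{*}; \mathbf W) = n\,(\hat{\boldsymbol\beta}^{*} - \boldsymbol\beta)^{\top}\mathbf W\,(\hat{\boldsymbol\beta}^{*} - \boldsymbol\beta).$$

In the local-alternatives setting (where the omitted block is $\boldsymbol\beta_2 = n^{-1/2}\boldsymbol\delta$), we look at the limit of $\mathbb E\{L(\hat{\boldsymbol\beta}^{*}; \mathbf W)\}$ as $n \to \infty$, which splits into an asymptotic variance component plus an asymptotic (squared) bias component:

$$\lim_{n\to\infty}\mathbb E\{L(\hat{\boldsymbol\beta}^{*}; \mathbf W)\} = \operatorname{tr}\{\mathbf W\boldsymbol\Gamma(\hat{\boldsymbol\beta}^{*})\} + \mathbf b(\hat{\boldsymbol\beta}^{*})^{\top}\mathbf W\,\mathbf b(\hat{\boldsymbol\beta}^{*}).$$

Here $\mathbf b(\hat{\boldsymbol\beta}^{*}) = \lim_{n\to\infty}\mathbb E\{\sqrt n(\hat{\boldsymbol\beta}^{*} - \boldsymbol\beta)\}$ and $\boldsymbol\Gamma(\hat{\boldsymbol\beta}^{*}) = \lim_{n\to\infty}\operatorname{Var}\{\sqrt n(\hat{\boldsymbol\beta}^{*} - \boldsymbol\beta)\}$. We denote

$$\operatorname{AQDB}(\hat{\boldsymbol\beta}^{*}) = \mathbf b(\hat{\boldsymbol\beta}^{*})^{\top}\mathbf W\,\mathbf b(\hat{\boldsymbol\beta}^{*}), \qquad \operatorname{ADR}(\hat{\boldsymbol\beta}^{*}) = \operatorname{tr}\{\mathbf W\boldsymbol\Gamma(\hat{\boldsymbol\beta}^{*})\} + \operatorname{AQDB}(\hat{\boldsymbol\beta}^{*}),$$

so the AQDB is the squared-bias component and the Asymptotic Distributional Risk (ADR) is the sum of the variance and squared-bias components.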

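- Full-Model (FM): $\hat{\boldsymbol\beta}_{\mathrm{FM}}$ has negligible asymptotic bias (so $\mathbf b(\hat{\boldsymbol\beta}_{\mathrm{FM}}) = \mathbf 0$ and $\operatorname{AQDB}(\hat{\boldsymbol\beta}_{\mathrm{FM}}) = 0$), but a higher variance from estimating all $p$ coefficients. Hence $\operatorname{ADR}(\hat{\boldsymbol\beta}_{\mathrm{FM}}) = \operatorname{tr}\{\mathbf W\boldsymbol\Gamma(\hat{\boldsymbol\beta}_{\mathrm{FM}})\}$, which does not depend on $\boldsymbol\delta$.

- Submodel (SM): $\hat{\boldsymbol\beta}_{\mathrm{SM}}$ has lower variance (only $p_1$ parameters are estimated) but possibly a large bias if $\boldsymbol\delta \ne \mathbf 0$, giving $\operatorname{AQDB}(\hat{\boldsymbol\beta}_{\mathrm{SM}}) > 0$ and a large ADR. Specifically, if the omitted block is $\boldsymbol\beta_2 = n^{-1/2}\boldsymbol\delta$, then $\mathbf b(\hat{\boldsymbol\beta}_{\mathrm{SM}})$ is linear in $\boldsymbol\delta$, so $\operatorname{AQDB}(\hat{\boldsymbol\beta}_{\mathrm{SM}})$ grows quadratically in $\boldsymbol\delta$.

- Stein (S): $\hat{\boldsymbol\beta}_{\mathrm S}$ interpolates between the two. Its variance is typically smaller than that of FM, but its bias is nonzero when $\boldsymbol\delta \ne \mathbf 0$. The bias is smaller than the SM's if the omitted effects are actually nonzero, but it is not zero: for the omitted block it is roughly $-(p_2 - 2)\,\mathbb E\{\chi^{-2}_{p_2+2}(\Delta)\}\,\boldsymbol\delta$, so $\operatorname{AQDB}(\hat{\boldsymbol\beta}_{\mathrm S}) < \operatorname{AQDB}(\hat{\boldsymbol\beta}_{\mathrm{SM}})$.

- Positive-Part Stein (PS): $\hat{\boldsymbol\beta}_{\mathrm{PS}}$ ensures no negative shrinkage, since it uses $\omega^{+} = \max(0, \omega) \ge \omega$. Consequently, its bias is typically no larger in magnitude than that of $\hat{\boldsymbol\beta}_{\mathrm S}$. Moreover, $\operatorname{ADR}(\hat{\boldsymbol\beta}_{\mathrm{PS}}) \le \operatorname{ADR}(\hat{\boldsymbol\beta}_{\mathrm S})$ in typical settings ($p_2 \ge 3$), so $\hat{\boldsymbol\beta}_{\mathrm{PS}}$ dominates $\hat{\boldsymbol\beta}_{\mathrm S}$ in ADR.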
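To see how AQDB and ADR order the four estimators, the sketch below evaluates them by Monte Carlo in an assumed canonical limiting experiment for the omitted block: $\sqrt n(\hat{\boldsymbol\beta}_{2,\mathrm{FM}} - \boldsymbol\beta_2) \sim N(\mathbf 0, \mathbf I_{p_2})$ (orthogonal design, unit variance), $\mathbf W = \mathbf I_{p_2}$, the submodel sets the block to zero, and $D_n = n\lVert\hat{\boldsymbol\beta}_{2,\mathrm{FM}}\rVert^2$. This is an illustration of the definitions above, not the GRTK risk itself. The function name `adr_canonical` is hypothetical.

```python
import numpy as np

def adr_canonical(delta, reps=20000, seed=2):
    """Monte Carlo (AQDB, ADR) with W = I for the omitted block in an assumed canonical limit.

    Assumes sqrt(n) * (b2_FM - beta2) ~ N(0, I), beta2 = delta / sqrt(n), the submodel sets
    the block to zero, and D_n = ||sqrt(n) * b2_FM||^2. Requires len(delta) >= 3.
    """
    rng = np.random.default_rng(seed)
    p2 = len(delta)
    fm = delta + rng.standard_normal((reps, p2))      # sqrt(n) * b2_FM
    D = np.sum(fm ** 2, axis=1)
    omega = 1.0 - (p2 - 2) / D
    omega_plus = np.maximum(omega, 0.0)
    errors = {                                        # sqrt(n) * (estimate - beta2)
        "FM": fm - delta,
        "SM": np.zeros_like(fm) - delta,
        "S": omega[:, None] * fm - delta,
        "PS": omega_plus[:, None] * fm - delta,
    }
    out = {}
    for name, e in errors.items():
        b = e.mean(axis=0)                            # asymptotic bias vector
        aqdb = float(b @ b)                           # b' W b with W = I
        adr = float(e.var(axis=0).sum()) + aqdb       # tr(W Gamma) + AQDB
        out[name] = (aqdb, adr)
    return out

p2 = 5
for Delta in (0.0, 1.0, 25.0):
    delta = np.full(p2, np.sqrt(Delta / p2))          # gives ||delta||^2 = Delta
    res = adr_canonical(delta)
    print(f"Delta={Delta:5.1f}", {name: round(v[1], 2) for name, v in res.items()})
```

At $\Delta = 0$ the FM risk is close to $p_2 = 5$, the Stein risk is close to its exact value $p_2 - (p_2 - 2) = 2$, and PS is lower still; as $\Delta$ grows, the SM risk (equal to $\Delta$ here) exceeds the FM risk, while S and PS remain below $p_2$.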

Thus, positive-part shrinkage is usually recommended when $p_2 \ge 3$ or under moderate correlation/collinearity, as it yields the lowest or nearly lowest ADR across different parameter regimes. This is consistent with the numerical results in Section 5 of the main text and with references such as [10,14].

References

- Speckman, P. Kernel smoothing in partial linear models. J. R. Stat. Soc. Ser. B 1988, 50, 413–435.

- Hu, H. Ridge estimation of a semiparametric regression model. J. Comput. Appl. Math. 2005, 176, 215–222.

- Gao, J. Asymptotic theory for partly linear models. Commun. Stat. Theory Methods 1995, 24, 1985–2009.

- Schick, A. Efficient estimation in a semiparametric additive regression model with autoregressive errors. Stoch. Process. Their Appl. 1996, 61, 339–361.

- Green, P.J.; Silverman, B.W. Nonparametric Regression and Generalized Linear Models; Chapman & Hall: London, UK, 1994.

- You, J.; Zhou, Y. Empirical likelihood for semiparametric varying-coefficient partially linear regression models. Stat. Probab. Lett. 2006, 76, 412–422.

- Kazemi, M.; Shahsavani, D.; Arashi, M.; Rodrigues, P.C. Identification for partially linear regression model with autoregressive errors. J. Stat. Comput. Simul. 2021, 91, 1441–1454.

- Özkale, M.R. A jackknifed ridge estimator in the linear regression model with heteroscedastic or correlated errors. Stat. Probab. Lett. 2008, 78, 3159–3169.

- You, J.; Chen, G. Semiparametric generalized least squares estimation in partially linear regression models with correlated errors. J. Stat. Plan. Inference 2007, 137, 117–132.

- Nooi Asl, M.; Bevrani, H.; Arabi Belaghi, R.; Mansson, K. Ridge-type shrinkage estimators in generalized linear models with an application to prostate cancer data. Stat. Pap. 2021, 62, 1043–1085.

- Kuran, Ö.; Yalaz, S. Kernel ridge prediction method in partially linear mixed measurement error model. Commun. Stat. Simul. Comput. 2024, 53, 2330–2350.

- Ahmed, S.E. Penalty, Shrinkage and Pretest Strategies: Variable Selection and Estimation; Springer: New York, NY, USA, 2014.

- Ahmed, S.E. Penalty, shrinkage and pretest strategies in statistical modeling. In Frontiers in Statistics; Springer: Cham, Switzerland, 2018.

- Ahmed, S.E.; Ahmed, F.; Yüzbaşı, B. Post-Shrinkage Strategies in Statistical and Machine Learning for High-Dimensional Data; CRC Press: Boca Raton, FL, USA, 2023.

- Chen, C.M.; Weng, S.C.; Tsai, J.R.; Shen, P.S. The mean residual life model for the right-censored data in the presence of covariate measurement errors. Stat. Med. 2023, 42, 2557–2572.

- Shi, J.; Lau, T.S. Empirical likelihood for partially linear models. J. Multivar. Anal. 2000, 72, 132–148.

- Lee, T.C.M. Smoothing parameter selection for smoothing splines: A simulation study. Comput. Stat. Data Anal. 2003, 42, 139–148.

- Andrews, D.W.K. Laws of large numbers for dependent non-identically distributed random variables. Econom. Theory 1988, 4, 458–467.

- White, H. Asymptotic Theory for Econometricians; Academic Press: New York, NY, USA, 1984.

- Slaoui, Y. Recursive kernel regression estimation under α-mixing data. Commun. Stat. Theory Methods 2022, 51, 8459–8475.

- Theobald, C.M. Generalizations of mean square error applied to ridge regression. J. R. Stat. Soc. Ser. B 1974, 36, 103–106.

- Hurvich, C.M.; Simonoff, J.S.; Tsai, C.L. Smoothing parameter selection in nonparametric regression using an improved Akaike information criterion. J. R. Stat. Soc. Ser. B 1998, 60, 271–293.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).