Abstract

Robust image processing systems require input images that closely resemble real-world scenes. However, external factors, such as adverse environmental conditions or errors in data transmission, can alter the captured image, leading to information loss. These factors may include poor lighting conditions at the time of image capture or the presence of noise, necessitating procedures to restore the data to a representation as close as possible to the real scene. This research project proposes an architecture based on an autoencoder capable of handling both poor lighting conditions and noise in digital images simultaneously, rather than processing them separately. The proposed methodology has been demonstrated to outperform competing techniques specialized in noise reduction or contrast enhancement. This is supported by both objective numerical metrics and visual evaluations using a validation set with varying lighting characteristics. The results indicate that the proposed methodology effectively restores images by improving contrast and reducing noise without requiring separate processing steps.

MSC:

68T07

1. Introduction

Gaussian noise is one of the most common types of noise observed in digital images, often introduced during image acquisition due to various factors. These factors include adverse lighting conditions, thermal instabilities in camera sensors, and failures in the electronic structure of the camera [1]. Adverse lighting conditions have been shown to degrade the visual quality of digital images and exacerbate the noise inherent to camera sensors, thereby increasing the presence of Gaussian noise in the captured image.

The term “adverse lighting conditions” refers to two specific scenarios: low illumination and high illumination. In the case of low illumination, the resulting digital image exhibits reduced brightness and low contrast [2]. Additionally, within the electronic structure of the camera, sensors exhibit increased sensitivity to weak signals, amplifying any signal variations and resulting in the generation of Gaussian noise. Conversely, under high illumination conditions, the camera sensors become saturated, leading to a nonlinear response in the captured data [3] and contributing to the presence of Gaussian noise. Moreover, the heat generated, along with other types of noise, such as thermal noise, further affects the image quality.

Several techniques have been developed to mitigate the impact of Gaussian noise in images captured under poor lighting conditions. These include both classical image processing methods and advanced deep learning-based approaches [4]. Some of these techniques focus on separately addressing noise reduction and illumination enhancement [5]. However, it is important to distinguish between illumination enhancement and contrast enhancement, as they are two distinct techniques in image processing that address different aspects of the visual quality of an image. While illumination enhancement adjusts the overall brightness to improve visibility and make the image clearer and easier to interpret [6], contrast enhancement increases the difference between light and dark areas to make details more perceptible [7]. This research highlights the importance of simultaneously enhancing image details through contrast adjustment and reducing noise using an autoencoder neural network. This is achieved by analyzing the image and applying trained models based on the features present in the input data.

2. Background Work

Inadequate lighting during scene capture can lead to several issues that negatively impact the visual quality of an image. These issues range from underexposure or oversaturation to noise generation, as illustrated in Figure 1 (derived from Smartphone Image Denoising Dataset (SIDD) [8]).

Figure 1.

The noise present in saturated and underexposed images.

Noise in digital images refers to variations in pixel values that distort the original information. This alteration can occur during image capture, digitization, storage, or transmission. Different types of noise are characterized by how they interact with the image and can be identified based on their source. One of the most common types of noise in images is Gaussian noise. Various techniques, both traditional and deep learning-based, have been developed to reduce this type of noise. Traditional approaches include methods such as the median filter [9], while deep learning techniques can overcome the limitations of certain conventional algorithms. Deep learning-based denoisers include the following:

- Denoising Convolutional Neural Network (DnCNN): This study introduced the design of a Convolutional Neural Network (CNN) specifically developed to reduce Gaussian noise in digital images. The network consists of multiple convolutional layers, followed by batch normalization and ReLU activation functions. A distinctive feature of this architecture is that it does not directly learn to generate a denoised image; instead, it employs residual learning, where the model learns to predict the difference between the noisy image and its denoised counterpart. This approach facilitates learning and improves model convergence [10].

- Nonlinear Activation Free Network (NAFNet): This study presented an alternative designed for image denoising. The network was developed with the objective of improving architectural efficiency and simplifying computations by minimizing the use of nonlinear activation functions, such as Rectified Linear Unit (ReLU). This design choice enhances computational efficiency while maintaining optimal model performance [11].

- Restoration Transformer (Restormer): This network is based on the Transformer architecture and is specifically designed for image restoration tasks, including Gaussian noise reduction. This approach leverages the advantages of Transformer networks to optimize memory usage and computational efficiency while simultaneously capturing long-range dependencies [12].
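The residual-learning idea described for DnCNN can be illustrated without a trained network: if a model outputs an estimate of the noise rather than the clean image, the restored image is the noisy input minus that estimate. The following is a toy sketch in NumPy, not the DnCNN model itself; `predict_residual` is a hypothetical stand-in for a trained network and simply uses a local-mean baseline.

```python
import numpy as np

def box_blur(img, k=3):
    """Mean filter with edge padding; a crude stand-in for a learned denoiser."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros_like(img, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def predict_residual(noisy):
    """Hypothetical residual predictor: returns an estimate of the noise."""
    return noisy - box_blur(noisy)

def denoise_residual(noisy):
    """Residual learning at inference: clean estimate = noisy - predicted noise."""
    return noisy - predict_residual(noisy)

rng = np.random.default_rng(0)
clean = np.full((32, 32), 0.5)
noisy = clean + rng.normal(0.0, 0.1, clean.shape)
restored = denoise_residual(noisy)
```

In a real residual network, only `predict_residual` would be learned; the subtraction step stays the same.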

In the context of digital images, contrast is defined as the measure of the difference between the brightness levels of the lightest and darkest areas in an image. Adequate contrast enhances the visual quality of an image, whereas insufficient contrast can result in a visually flat appearance [7]. To address the issue of poor contrast in images, several algorithms have been developed in recent years to enhance contrast. Examples include An Advanced Whale Optimization Algorithm for Grayscale Image Enhancement [13], Pixel Intensity Optimization and Detail-Preserving Contextual Contrast Enhancement for Underwater Images [14], and Optimal Bezier Curve Modification Function for Contrast-Degraded Images [15]. However, these algorithms primarily focus on enhancing specific image channels or improving images under a single lighting condition.

Some algorithms that operate across all image channels and are capable of functioning under extreme lighting conditions, whether in low or high illumination, include the following:

- Single-Scale Retinex (SSR) is a technique designed to enhance the contrast and illumination of digital images. It is based on human perception of color and luminance in real-world scenes, simulating how the human eye adapts to different lighting conditions by adjusting color perception and scene illumination [16]. The application process of SSR is outlined in Equations (1)–(6):

  $$I(x,y) = R(x,y)\,L(x,y) \tag{1}$$

  where $I(x,y)$ represents the original image pixel, which can be decomposed into two components: $R(x,y)$, the reflectance component; and $L(x,y)$, the illumination component.

  To facilitate the distinction between reflectance and illumination, the following logarithmic transformation is applied:

  $$\log I(x,y) = \log R(x,y) + \log L(x,y) \tag{2}$$

  Clarity enhancement is achieved by applying a Gaussian filter to smooth the original image, which yields an estimate of the illumination:

  $$\hat{L}(x,y) = G(x,y) * I(x,y) \tag{3}$$

  where $G(x,y)$ is the Gaussian filter, and ∗ denotes two-dimensional convolution, which is formally defined as follows:

  $$(G * I)(x,y) = \sum_{u}\sum_{v} G(u,v)\,I(x-u,\,y-v) \tag{4}$$

  The reflectance component is obtained by subtracting the illumination component in the logarithmic domain:

  $$\log R(x,y) = \log I(x,y) - \log \hat{L}(x,y) \tag{5}$$

  Finally, an inverse transformation is performed to reconstruct the processed image:

  $$R(x,y) = \exp\left(\log I(x,y) - \log \hat{L}(x,y)\right) \tag{6}$$

- Multiscale Retinex (MSR) is an extension of the SSR algorithm, and it was designed to overcome the limitations of Gaussian filter scale sensitivity. Unlike SSR, this algorithm operates across multiple filter scales, utilizing the results from different scales to achieve a balance between local and global details [17]. The computation of MSR is given by Equation (7):

  $$R_{\mathrm{MSR}}(x,y) = \sum_{i=1}^{n} w_i \left[\log I(x,y) - \log\left(G_i(x,y) * I(x,y)\right)\right] \tag{7}$$

  where $R_{\mathrm{MSR}}(x,y)$ represents the output value at coordinates $(x,y)$, $n$ is the number of scales, and $w_i$ and $G_i$ denote the weight and the Gaussian filter at scale $i$, respectively.

- Multiscale Retinex with Color Restoration (MSRCR) is an enhancement of the MSR algorithm that combines the contrast and detail enhancement capabilities of MSR with a function designed to preserve the natural colors of the image, thereby preventing the loss of color fidelity [18]. The computation of MSRCR is described in Equations (8) and (9):

  $$R_{\mathrm{MSRCR}}(x,y) = C(x,y)\,R_{\mathrm{MSR}}(x,y) \tag{8}$$

  $$C(x,y) = \beta \log\left(\frac{I_k(x,y)}{\sum_{j} I_j(x,y)}\right) \tag{9}$$

  where $R_{\mathrm{MSRCR}}(x,y)$ represents the output value at coordinates $(x,y)$, $C(x,y)$ is the color restoration function, $\beta$ is a gain-related constant, and $I_k(x,y)$ corresponds to the pixel intensity in the $k$ different channels of the image.

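- Multiscale Retinex with Chromaticity Preservation (MSRCP) is a refinement of MSRCR, and it was designed to preserve the chromaticity of the image while enhancing its contrast and detail. This approach ensures a more faithful representation of the original colors [18]. The computation of MSRCP is described in Equations (10)–(12):

  $$\mathrm{Int}(x,y) = \frac{1}{K}\sum_{k=1}^{K} I_k(x,y) \tag{10}$$

  $$P_k(x,y) = \frac{I_k(x,y)}{\mathrm{Int}(x,y)} \tag{11}$$

  $$R_{\mathrm{MSRCP},k}(x,y) = P_k(x,y)\,R_{\mathrm{MSR}}^{\mathrm{Int}}(x,y) \tag{12}$$

  where $\mathrm{Int}(x,y)$ represents the average intensity of the pixel at coordinates $(x,y)$ over the $K$ channels, $P_k(x,y)$ denotes the chromatic proportions, $R_{\mathrm{MSR}}^{\mathrm{Int}}(x,y)$ is the MSR output computed on the intensity image, and $R_{\mathrm{MSRCP},k}(x,y)$ is the resulting value.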

- Gamma correction is a technique used to enhance the brightness and contrast of a digital image. It adjusts the relationship between intensity levels and their perceived brightness, thereby helping to correct distortions [19]. The computation of Gamma correction is given by Equation (13):

  $$S = I^{\gamma} \tag{13}$$

  where $S$ represents the output intensity, $I$ is the input intensity, and $\gamma$ is the correction factor.

- Histogram equalization is a widely used algorithm for contrast enhancement. It improves contrast by redistributing intensity levels (Equation (14)) so that the histogram approaches a uniform distribution. This process enhances details in low-contrast images.

  $$s_k = L \sum_{j=0}^{k} p(r_j) \tag{14}$$

  where $s_k$ is the output level assigned to input level $r_k$, $L$ represents the maximum intensity value in the image, and $p(r_j)$ is the probability that a pixel takes intensity level $r_j$.
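The classical enhancement methods listed above can be sketched compactly in NumPy. The code below is a minimal illustration under stated assumptions, not the implementation used in the comparison: intensities are assumed to lie in [0, 1] for gamma correction and Retinex, histogram equalization assumes 8-bit grayscale input, the Retinex output is left in the logarithmic domain, and all function names are illustrative.

```python
import numpy as np

def gamma_correction(img, gamma):
    """Power-law mapping S = I**gamma for intensities in [0, 1]."""
    return np.clip(img, 0.0, 1.0) ** gamma

def histogram_equalization(img_u8):
    """Map 8-bit gray levels through the scaled cumulative histogram."""
    hist = np.bincount(img_u8.ravel(), minlength=256)
    cdf = np.cumsum(hist) / img_u8.size          # cumulative probability per level
    lut = np.round(255 * cdf).astype(np.uint8)   # 255 is the maximum 8-bit level
    return lut[img_u8]

def gaussian_blur(img, sigma):
    """Separable Gaussian smoothing of a 2-D image with reflect padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def single_scale_retinex(img, sigma, eps=1e-6):
    """Log-domain reflectance estimate: log I - log(G * I)."""
    return np.log(img + eps) - np.log(gaussian_blur(img, sigma) + eps)

def multiscale_retinex(img, sigmas, weights=None):
    """Weighted sum of single-scale outputs; equal weights by default."""
    weights = weights or [1.0 / len(sigmas)] * len(sigmas)
    return sum(w * single_scale_retinex(img, s) for w, s in zip(weights, sigmas))
```

A gamma value below 1 brightens an image and a value above 1 darkens it, which is why the same operator can be applied to both underexposed and saturated inputs.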

3. Proposed Model

The aforementioned algorithms prioritize a single objective, either noise reduction or contrast enhancement. In contrast, the algorithm proposed here performs both tasks simultaneously, yielding superior results compared to existing methods. The proposed algorithm, Denoising Vanilla Autoencoder with Contrast Enhancement (DVACE), was designed to simultaneously address noise reduction and contrast enhancement in images represented mathematically as multidimensional arrays. First, consider an original image X, defined as a two-dimensional matrix (Gray Scale (GS) image) or a three-dimensional tensor (Red, Green, Blue (RGB) image), where each entry corresponds to the pixel intensity at position $(i, j)$.

Then, let the original multidimensional image be the following:

$$X \in \mathbb{R}^{M \times N \times C} \tag{15}$$

where $M \times N$ is the spatial resolution of the image, and $C$ is the number of channels ($C = 1$ for GS, and $C = 3$ for RGB).

Considering a multidimensional Gaussian noise model [20], the observed noisy image is expressed as follows:

$$Y = X + \eta, \qquad \eta \sim \mathcal{N}(\mu, \Sigma) \tag{16}$$

where $Y$ is the observed noisy image, $X$ is the original noise-free image, $\eta$ is additive Gaussian noise, $\mu$ is the multidimensional mean matrix (local or global) of pixels, and $\Sigma$ is the covariance matrix representing the multidimensional noise dispersion (typically $\Sigma = \sigma^2 I$ for stationary, uncorrelated noise between pixels and channels, where $I$ is the multidimensional identity matrix).

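For each pixel at a specific position $(i, j)$ with observed value $y_{i,j}$ (vector for RGB and scalar for GS), the Gaussian noise probability distribution is as follows:

$$p(y_{i,j}) = \frac{1}{(2\pi)^{C/2}\,|\Sigma|^{1/2}} \exp\left(-\frac{1}{2}\,(y_{i,j} - \mu_{i,j})^{\top}\,\Sigma^{-1}\,(y_{i,j} - \mu_{i,j})\right) \tag{17}$$

where $y_{i,j}$ is the column vector (for RGB, $C = 3$) or the scalar (for GS, $C = 1$) observed at spatial position $(i, j)$, $\mu_{i,j}$ is the original local mean at position $(i, j)$, and $\Sigma$ is the noise covariance matrix (often simplified to $\sigma^2 I$, with $I$ as the identity matrix).

If noise is stationary and isotropic (equal in all directions), the equation simplifies to the following:

$$p(y_{i,j}) = \frac{1}{(2\pi\sigma^2)^{C/2}} \exp\left(-\frac{\left\|y_{i,j} - \mu_{i,j}\right\|^2}{2\sigma^2}\right) \tag{18}$$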

The joint probability for the entire observed image, assuming independence among pixels and channels, is as follows:

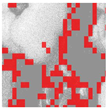

This provides the mathematical foundation on which the DVACE model optimizes the estimation of the original image X: maximizing the joint probability is equivalent to minimizing the squared error in the exponent, that is, the squared error between the observed noisy image Y and the restored image. By adjusting the variance, the density of noise present in the image can be increased or decreased. Additionally, by modifying the mean, the image can appear underexposed (Figure 2a) or overexposed (Figure 2b). This demonstrates how the illumination of the image changes, either darkening or brightening. Finally, the histogram corresponding to the simulated image is presented.
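The effect of the noise mean and variance on exposure can be reproduced with a short simulation. The sketch below assumes intensities normalized to [0, 1] and clips the result to that range; the mean values of ±0.3 and the flat test image are illustrative choices, not the settings used for Figure 2.

```python
import numpy as np

def add_gaussian_noise(image, mean, variance, seed=None):
    """Return Y = X + eta with eta ~ N(mean, variance), clipped to [0, 1]."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(loc=mean, scale=np.sqrt(variance), size=image.shape)
    return np.clip(image + noise, 0.0, 1.0)

# A flat mid-gray RGB image stands in for a clean photograph.
clean = np.full((64, 64, 3), 0.5)

# A negative mean darkens the image; a positive mean brightens it.
dark = add_gaussian_noise(clean, mean=-0.3, variance=0.01, seed=0)
bright = add_gaussian_noise(clean, mean=0.3, variance=0.01, seed=0)
```

Increasing `variance` while keeping `mean` fixed spreads the histogram without shifting it, whereas changing `mean` shifts the whole histogram toward black or white.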

Figure 2.

Image simulation: (a) underexposed with its respective Gaussian distribution, with and , and its resulting histogram when corrupted; (b) saturated with its respective Gaussian distribution, with and , and its resulting histogram when corrupted.

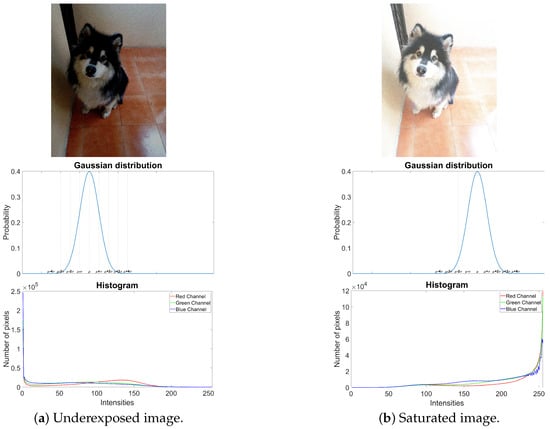

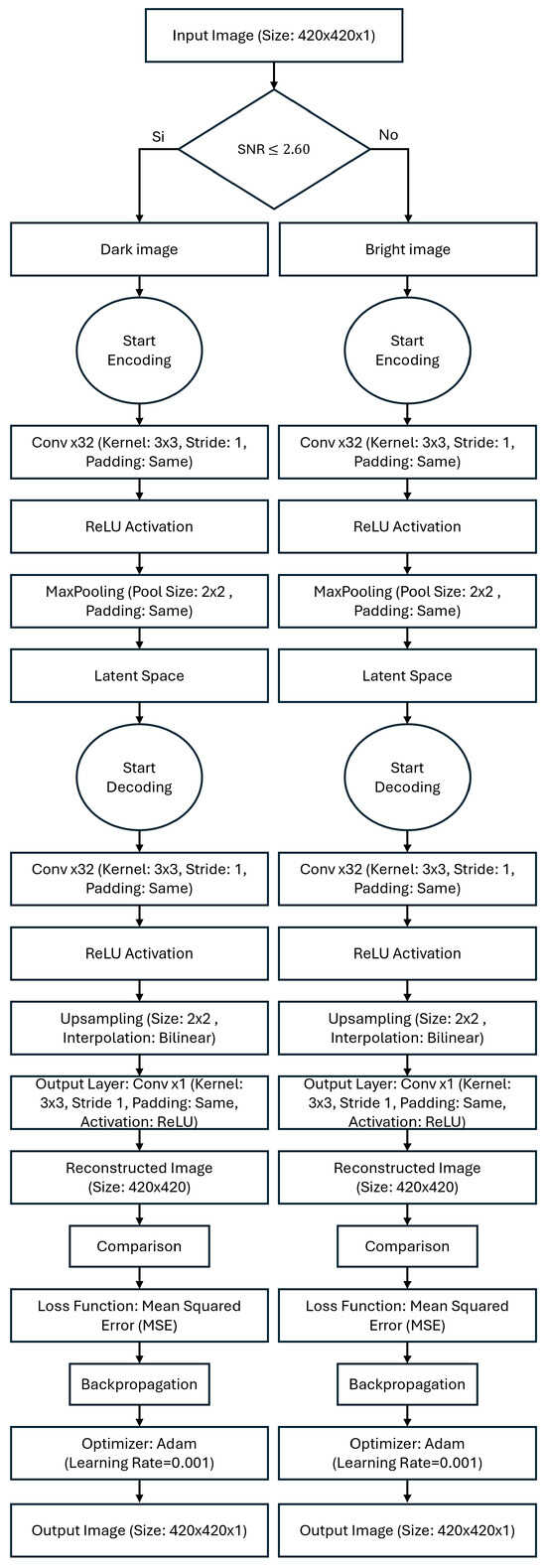

Figure 3 presents the flowchart of the proposed model architecture for RGB images, while Figure 4 shows the flowchart of the proposed model architecture for GS images.

Figure 3.

Flowchart of the proposed DVACE for RGB images.

Figure 4.

Flowchart of the proposed DVACE for GS images.

Each architecture calculates the Signal-to-Noise Ratio (SNR) of the input image. The SNR metric [21] is used to enhance the network’s ability to determine the most suitable model (one that brightens dark images or one that darkens bright images) during the actual processing stage. The SNR is defined for an image $X(i, j, c)$, where $(i, j)$ and $c$ represent the spatial and channel dimensions. The SNR quantifies the mean intensity relative to the variance in the image:

where the mean and the variance are computed as follows:

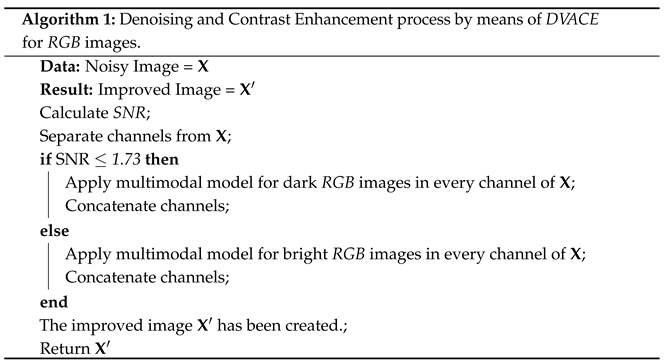

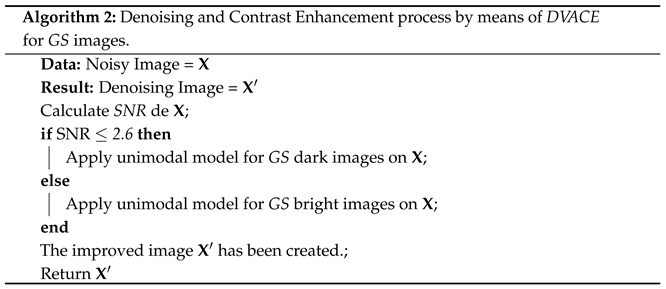

This formulation provides a robust measure of the image intensity relative to its noise distribution. Algorithms 1 and 2 were designed on the basis of the proposed architectures.

The SNR thresholds used in both the RGB and GS algorithms were determined experimentally by calculating the average SNR of the corrupted images used to train the network. Equations (23)–(31) illustrate the DVACE procedure.

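Given a set of images in different modalities ($C = 1$ for GS images and $C = 3$ for RGB images), the classification process based on the SNR can be rigorously expressed as a decision function, which is defined as follows:

$$\hat{X} = \begin{cases} U_{d}(X), & X \in \mathbb{R}^{M \times N},\ \mathrm{SNR}(X) < \tau_{GS} \\ U_{b}(X), & X \in \mathbb{R}^{M \times N},\ \mathrm{SNR}(X) \geq \tau_{GS} \\ M_{d}(X), & X \in \mathbb{R}^{M \times N \times C},\ \mathrm{SNR}(X) < \tau_{RGB} \\ M_{b}(X), & X \in \mathbb{R}^{M \times N \times C},\ \mathrm{SNR}(X) \geq \tau_{RGB} \end{cases} \tag{23}$$

where $X$ represents the input image; $\hat{X}$ is the image processed by the DVACE model; $\mathbb{R}^{M \times N}$ represents the GS image space; $\mathbb{R}^{M \times N \times C}$ represents the RGB image space with $C$ channels; $\mathrm{SNR}(\cdot)$ is the function computing the SNR of the image; and $\tau_{GS}$ and $\tau_{RGB}$ are predefined SNR thresholds for GS and RGB images, respectively.

$U_{d}$ and $U_{b}$ are the unimodal enhancement functions for GS images, and the following apply:

- $U_{d}$ is applied to images with low SNR (dark images).

- $U_{b}$ is applied to images with high SNR (bright images).

$M_{d}$ and $M_{b}$ are the multimodal enhancement functions for RGB images, and the following apply:

- $M_{d}$ is applied to images with low SNR (dark images).

- $M_{b}$ is applied to images with high SNR (bright images).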
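The SNR-based routing described above can be sketched as a small selection function. This is a minimal illustration under two assumptions that are not taken from the article: the SNR is computed here as the global mean divided by the global standard deviation, and an image exactly at the threshold is routed to the bright-image model. The labels, function names, and threshold values are hypothetical.

```python
import numpy as np

def snr(image):
    """Assumed SNR form: global mean divided by global standard deviation."""
    std = float(np.std(image))
    return float(np.mean(image)) / std if std > 0 else float("inf")

def select_model(image, tau_gs, tau_rgb):
    """Return a label for the model that the SNR rule would pick."""
    if image.ndim == 2:
        # Grayscale input: one unimodal model per lighting condition.
        return "gs_dark" if snr(image) < tau_gs else "gs_bright"
    if image.ndim == 3 and image.shape[2] == 3:
        # RGB input: one multimodal (per-channel) model per lighting condition.
        return "rgb_dark" if snr(image) < tau_rgb else "rgb_bright"
    raise ValueError("expected an M x N or M x N x 3 array")

rng = np.random.default_rng(0)
dark_gs = 0.1 + rng.normal(0.0, 0.1, (64, 64))
bright_rgb = 0.9 + rng.normal(0.0, 0.1, (64, 64, 3))
```

With thresholds of, say, 4.0 for both modalities, `dark_gs` is routed to the dark-image model and `bright_rgb` to the bright-image model.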

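The convolutional operation ∗ between an input tensor $X$ and a kernel $W$ is defined as follows:

$$(X * W)_{i,j,k} = \sum_{m=-p}^{p}\sum_{n=-p}^{p}\sum_{c=1}^{C} W_{m,n,c,k}\,X_{i+m,\,j+n,\,c} + b_k \tag{24}$$

where $p$ accounts for padding in the kernel size, and $b_k$ is the bias term for channel $k$.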

A non-linear transformation is applied to the convolutional result:

where the activation function is defined as follows:

this introduces non-linearity, enabling feature extraction from high-dimensional spaces.

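Dimensional reduction is performed through max-pooling:

$$P_{i,j,k} = \max_{0 \leq m < s_h,\ 0 \leq n < s_w} A_{i s_h + m,\ j s_w + n,\ k} \tag{27}$$

where $A$ is the activated feature map and $s_h \times s_w$ define the pooling window size. This operation selects the most dominant feature per region.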

A secondary convolutional pass refines the extracted features:

where represents a new set of learned weights.

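To restore spatial resolution, we applied weighted bilinear interpolation:

$$U_{i,j,k} = \sum_{m=0}^{1}\sum_{n=0}^{1} w_{m,n}\,F_{i'+m,\ j'+n,\ k} \tag{29}$$

where $F$ is the refined low-resolution feature map, $(i', j')$ is the top-left low-resolution neighbor of the output position $(i, j)$, and $w_{m,n}$ are interpolation weights satisfying the following:

$$\sum_{m=0}^{1}\sum_{n=0}^{1} w_{m,n} = 1, \qquad w_{m,n} \geq 0 \tag{30}$$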

A final convolutional step reconstructs the enhanced image as follows:

where represents a final learned weight set for output feature mapping.
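The sequence of operations above (convolution, non-linearity, max-pooling, a second convolution, bilinear upsampling, and a final convolution) can be traced in a small NumPy forward pass. This is a sketch under stated assumptions rather than the trained DVACE network: ReLU is assumed as the activation, the output layer is left linear, the pooling window is 2 × 2, and the filter count, kernel size, and random weights are placeholders.

```python
import numpy as np

def conv2d_same(x, w, b):
    """Zero-padded convolution (cross-correlation form used by CNNs).
    x: (H, W, C_in), w: (k, k, C_in, C_out), b: (C_out,)."""
    k = w.shape[0]
    p = k // 2
    h, wd = x.shape[:2]
    padded = np.pad(x, ((p, p), (p, p), (0, 0)))
    out = np.zeros((h, wd, w.shape[3]))
    for m in range(k):
        for n in range(k):
            out += padded[m:m + h, n:n + wd, :] @ w[m, n]
    return out + b

def relu(x):
    return np.maximum(x, 0.0)

def max_pool_2x2(x):
    """Keep the largest value in each 2 x 2 block (H and W must be even)."""
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))

def upsample_bilinear(x, factor=2):
    """Bilinear interpolation; the four neighbor weights sum to one."""
    h, w, _ = x.shape
    rows = np.linspace(0, h - 1, h * factor)
    cols = np.linspace(0, w - 1, w * factor)
    r0 = np.floor(rows).astype(int)
    c0 = np.floor(cols).astype(int)
    r1 = np.minimum(r0 + 1, h - 1)
    c1 = np.minimum(c0 + 1, w - 1)
    fr = (rows - r0)[:, None, None]
    fc = (cols - c0)[None, :, None]
    top = (1 - fc) * x[r0][:, c0] + fc * x[r0][:, c1]
    bottom = (1 - fc) * x[r1][:, c0] + fc * x[r1][:, c1]
    return (1 - fr) * top + fr * bottom

def init_params(channels, filters=8, k=3, seed=0):
    """Random placeholder weights; a trained model would load learned ones."""
    rng = np.random.default_rng(seed)
    def layer(c_in, c_out):
        return rng.normal(0.0, 0.1, (k, k, c_in, c_out)), np.zeros(c_out)
    return {"enc": layer(channels, filters),
            "mid": layer(filters, filters),
            "out": layer(filters, channels)}

def forward(x, params):
    h = relu(conv2d_same(x, *params["enc"]))   # feature extraction
    h = max_pool_2x2(h)                        # dimensional reduction
    h = relu(conv2d_same(h, *params["mid"]))   # feature refinement
    h = upsample_bilinear(h, 2)                # restore spatial resolution
    return conv2d_same(h, *params["out"])      # reconstruct the image
```

For example, `forward(np.zeros((8, 8, 1)), init_params(1))` returns an array with the same 8 × 8 × 1 shape as its input, which is the property that lets the autoencoder map a degraded image to a restored one of equal size.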

Following the Denoising Vanilla Autoencoder (DVA) training structure and methodology [22], two databases were created using images from the “1 Million Faces” dataset [23], from which only 7000 images were selected.

The first database contains images corrupted with a noise mean ranging from 0.01 to 0.5 and a variance of 0.01 (bright images), while the second database contains images corrupted with a noise mean ranging from −0.01 to −0.05 and a variance of 0.01 (dark images). Each database includes images in both RGB and GS. The implementation details to ensure reproducibility are provided in Table 1.

Table 1.

Hyperparameter and training setup.

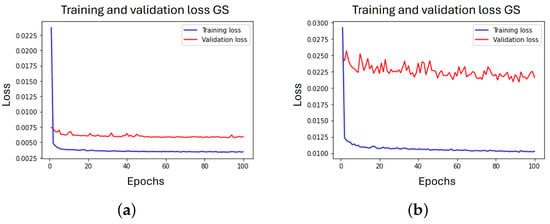

Figure 5.

Learning curves of the algorithm DVACE for the GS images, (a) Unimodal model for dark images, (b) Unimodal model for light images.

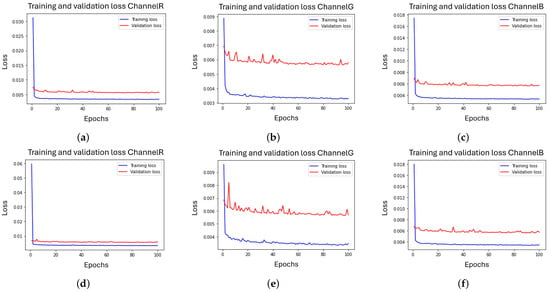

Figure 6.

Learning curves of the DVACE algorithm for the RGB images: (a) multimodal model R for dark images, (b) multimodal model G for dark images, (c) multimodal model B for dark images, (d) multimodal model R for light images, (e) multimodal model G for light images, (f) multimodal model B for light images.

4. Experimental Results

It is essential to recognize that all algorithms require a validation process to assess their effectiveness in comparison to existing methods. To gain a comprehensive understanding of their performance, it is crucial to employ techniques that quantitatively and/or qualitatively evaluate their outcomes.

Therefore, the following quantitative and qualitative quality criteria were used to assess and validate the results obtained by DVACE in comparison to the other specialized techniques discussed in Section 2.

Quantitative metrics provide a means of evaluating the quality of digital images after processing. These metrics can be categorized into reference-based metrics, which compare the processed image against a ground truth, and non-reference metrics, which assess image quality without requiring a reference. The metrics used in this study are as follows:

- Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS) [22,24].

- Mean Square Error (MSE) [22].

- Normalized Color Difference (NCD) estimates the perceptual error between two color vectors by converting from the RGB space to the CIELuv space. This conversion is necessary because human color perception cannot be accurately represented using the RGB model as it is a non-linear space [25]. The perceptual color error between the two color vectors is defined as the Euclidean distance between them, as given by Equation (32):

  $$\Delta E_{Luv} = \sqrt{(\Delta L^{*})^{2} + (\Delta u^{*})^{2} + (\Delta v^{*})^{2}} \tag{32}$$

  where $\Delta E_{Luv}$ is the error, and $\Delta L^{*}$, $\Delta u^{*}$, and $\Delta v^{*}$ are the differences between the components $L^{*}$, $u^{*}$, and $v^{*}$, respectively, of the two color vectors under consideration.

  Once $\Delta E_{Luv}$ was found for each of the pixels of the images under consideration, the NCD was estimated according to Equation (33):

  $$\mathrm{NCD} = \frac{\sum_{i=1}^{M}\sum_{j=1}^{N} \left\|\Delta E_{Luv}(i,j)\right\|}{\sum_{i=1}^{M}\sum_{j=1}^{N} \left\|E^{*}_{Luv}(i,j)\right\|} \tag{33}$$

  where $\left\|E^{*}_{Luv}(i,j)\right\|$ is the norm (magnitude) of the vector of the pixel of the original uncorrupted image in the $L^{*}u^{*}v^{*}$ space, and $M$ and $N$ are the dimensions of the image.

- Perception-based Image Quality Evaluator (PIQE) is a no-reference image quality assessment method that evaluates perceived image quality based on visible distortion levels [26]. Despite being a numerical metric, it is particularly useful for identifying regions of high activity, artifacts, and noise, as it generates masks that indicate the areas where these distortions occur. Consequently, PIQE is also classified as a qualitative metric as it is based on human perception and assesses visual quality from a non-mathematical perspective [26].

  The activity mask of an image is a tool that quantifies the level of detail or complexity in a specific region based on intensity variations. Its computation is derived from Equations (34) and (35):

  $$G(i,j) = \sqrt{G_x(i,j)^{2} + G_y(i,j)^{2}} \tag{34}$$

  where $G(i,j)$ is the gradient of the image, and $G_x(i,j)$ and $G_y(i,j)$ are the derivatives of the image at position $(i,j)$.

  $$\sigma_B^{2} = \frac{1}{n^{2}} \sum_{(i,j) \in B} \left(G(i,j) - \bar{G}_B\right)^{2} \tag{35}$$

  where $\sigma_B^{2}$ is the variance in each block $B$ of size $n \times n$, and $\bar{G}_B$ is the average of the gradient in the block.

  The artifact mask in an image indicates distortions, such as irregular edges that degrade visual quality. These distortions are detected by analyzing non-natural patterns in regions with high activity levels, where inconsistent blocks are identified and classified as artifacts.

  The noise mask is evaluated based on variations in undesired activity within low-activity regions, measuring the dispersion of intensity values within a block, as shown in Equation (36). If the dispersion significantly exceeds the expected level, the region is classified as noise.

- Peak Signal-to-Noise Ratio (PSNR) [22,27].

- Relative Average Spectral Error (RASE) [22,28].

- Root Mean Squared Error (RMSE) [22,29].

- Spectral Angle Mapper (SAM) [22,30].

- Structural Similarity Index (SSIM) [22,31].

- Universal Quality Image Index (UQI) [22,32].
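Several of the reference-based metrics listed above follow directly from their standard definitions. The sketch below shows MSE, RMSE, PSNR, and SAM in NumPy, assuming images normalized to [0, 1] (so the PSNR peak value is 1.0) and an RGB layout of height × width × channels for SAM; the function names are illustrative and this is not the evaluation code used to produce the reported tables.

```python
import numpy as np

def mse(ref, test):
    """Mean squared error between a reference and a processed image."""
    diff = ref.astype(np.float64) - test.astype(np.float64)
    return float(np.mean(diff ** 2))

def rmse(ref, test):
    """Root mean squared error."""
    return float(np.sqrt(mse(ref, test)))

def psnr(ref, test, peak=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(ref, test)
    return float("inf") if err == 0 else float(10.0 * np.log10(peak ** 2 / err))

def sam(ref, test, eps=1e-12):
    """Mean spectral angle (radians) between per-pixel channel vectors."""
    a = ref.reshape(-1, ref.shape[-1]).astype(np.float64)
    b = test.reshape(-1, test.shape[-1]).astype(np.float64)
    norms = np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1) + eps
    cos = np.sum(a * b, axis=1) / norms
    return float(np.mean(np.arccos(np.clip(cos, -1.0, 1.0))))
```

Lower values are better for MSE, RMSE, and SAM, while higher values are better for PSNR. SAM is insensitive to a uniform brightness scaling of a pixel's channel vector, which is why it is read as a measure of spectral rather than intensity fidelity.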

The DVACE evaluation was performed using classic benchmark images commonly used for algorithm assessment, including Airplane, Baboon, Barbara, Cablecar, Goldhill, Lenna, Mondrian, and Peppers, in both RGB and GS formats. Each evaluation image was corrupted with Gaussian noise, with a variance of and a mean intensity ranging from to , in increments of . Figure 7 presents a close-up of the original peppers in both RGB and GS formats.

Figure 7.

Close-up image of the original peppers.

As shown in Table 2 and Table 3, the quantitative results for the peppers RGB image are presented for and , respectively, with . It is evident that, in most cases where the mean was nonzero, DVACE achieved superior image restoration.

Table 2.

Quantitative results for the peppers image in RGB with .

Table 3.

Quantitative results for the peppers image in RGB with .

Similarly, Table 4 and Table 5 present the quantitative results for the peppers GS image for different mean values and . It was observed that, in most cases where , DVACE achieved superior image restoration compared to all the other algorithms used for comparison.

Table 4.

Quantitative results for the peppers image in GS with .

Table 5.

Quantitative results for the peppers image in GS with .

As shown in Table 4 and Table 5, the peppers image was evaluated under different noise conditions. DVACE consistently achieved the highest SSIM and PSNR, with the lowest MSE, RMSE, and NCD, indicating effective noise reduction and contrast enhancement. It also minimized ERGAS, RASE, and SAM, confirming its superior spectral fidelity. Histogram Equalization and Gamma Correction improved contrast but introduced spectral distortions. The deep learning-based methods (DnCNN, NAFNet, and Restormer) showed variability, while the MSR-based techniques and SSR exhibited higher error rates. DVACE maintained the best trade-off between denoising and structural fidelity.

Table 6 presents a visual comparison of the results obtained by DVACE and the aforementioned algorithms for both noise reduction and contrast enhancement on the baboon image in RGB with . This table illustrates that, while the proposed algorithm introduces some distortions, it achieves the best noise reduction results alongside the NAFNet network. Additionally, in terms of contrast enhancement, DVACE demonstrated superior restoration (comparable to Histogram Equalization).

Table 6.

Qualitative results for the peppers image in RGB.

Table 7 presents a visual comparison for the peppers image in RGB with . Visually, DVACE and the median filter exhibited less noise reduction. However, the contrast enhancement achieved by DVACE was comparable to that of the dedicated algorithms designed for this task.

Table 7.

Qualitative results for the peppers image in RGB.

Table 8 presents a comparison for the peppers image in GS with . The results indicate that DVACE achieved the best performance in both noise reduction and contrast enhancement.

Table 8.

Qualitative results for the peppers image in GS.

Table 9 presents a comparison for the peppers GS image with , confirming the trend observed with DVACE, which achieved the best results in both noise reduction and contrast enhancement.

Table 9.

Qualitative results for the peppers image in GS.

Taken together, Tables 6–9 provide a visual assessment of DVACE against alternative methods. DVACE, DnCNN, and NAFNet produced cleaner images with well-preserved details, while Histogram Equalization and Gamma Correction enhanced contrast but amplified artifacts. The activity masks show that DVACE retained details with minimal distortions. The artifact masks reveal that DVACE introduced fewer distortions than the median filter and MSRCP, while the noise masks confirmed superior noise suppression compared to the MSR-based methods and SSR. Overall, DVACE provided the most balanced restoration.

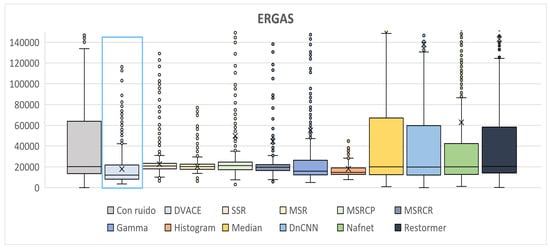

To comprehensively present the results of the metrics calculated from the images in the validation dataset, which were processed by each of the aforementioned methods, box plots are provided below. Figure 8 presents the ERGAS metric distribution across different methods. The noisy image showed the highest values, with DVACE achieving a low median and minimal variance, confirming its stable performance. Histogram Equalization and Gamma Correction also performed well, whereas MSR and MSRCR exhibited higher ERGAS values, indicating weaker global reconstruction. DVACE maintained a consistent advantage with fewer outliers.

Figure 8.

Box plots of the quantitative ERGAS results obtained.

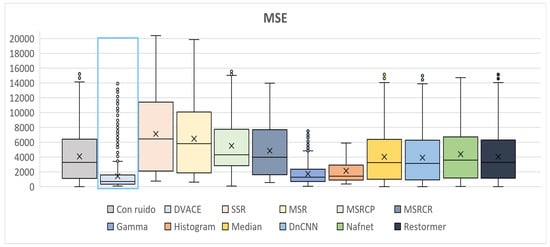

Figure 9 illustrates the MSE distribution. The noisy image exhibited high error and dispersion, while DVACE achieved a lower median MSE with reduced variance, indicating effective reconstruction. The deep learning models (DnCNN and NAFNet) showed greater variability, and the MSR-based methods performed inconsistently. DVACE remained one of the most reliable techniques.

Figure 9.

Box plots of the quantitative MSE results obtained.

Notably, Gamma Correction and Histogram Equalization, despite not being deep learning techniques or having noise reduction capabilities, achieved the next best results. In contrast, SSR demonstrated the poorest performance as both its dispersion and average error were significantly higher than those of the other methods.

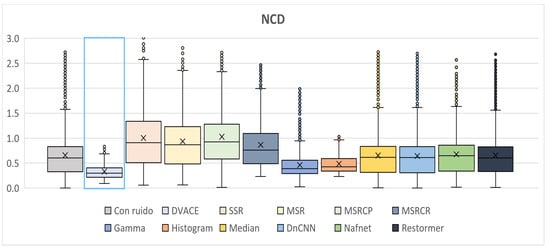

Figure 10 shows the distribution of the NCD metric, which reflects color fidelity. DVACE achieved one of the lowest median NCD values with minimal dispersion, confirming its effectiveness in preserving perceptual color accuracy. While Histogram Equalization and Gamma Correction yielded competitive results, they introduced greater variability. The deep learning methods performed well but with slightly higher dispersion.

Figure 10.

Box plots of the quantitative NCD results obtained.

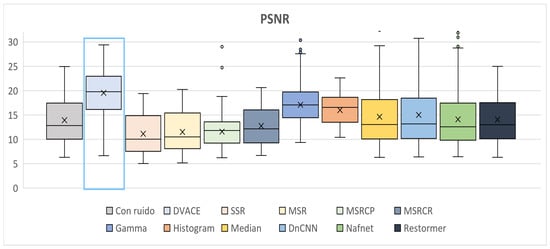

Figure 11 presents the PSNR distribution. The noisy image exhibited the lowest values, while DVACE achieved a high median PSNR with low variance, ensuring effective noise reduction and image fidelity. The deep learning models maintained competitive values but showed dataset-dependent behavior. The MSR-based methods performed worse in key metrics.

Figure 11.

Box plots of the quantitative PSNR results obtained.

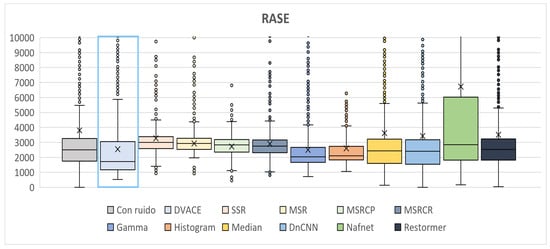

Figure 12 shows the RASE values, which indicate spectral reconstruction accuracy. The noisy image had the highest values, whereas DVACE maintained a lower median with reduced variance. Histogram Equalization and Gamma Correction achieved good results but exhibited more variability. The deep learning models and MSR-based methods showed inconsistent performance.

Figure 12.

Box plots of the quantitative RASE results obtained.

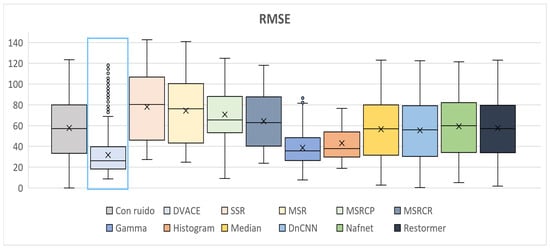

Figure 13 illustrates the RMSE values, reflecting the reconstruction accuracy. The noisy image exhibited the highest RMSE, while DVACE achieved a low median with reduced dispersion, confirming its stability. The deep learning models remained competitive but more variable. The MSR-based methods and SSR showed weaker performance.

Figure 13.

Box plots of the quantitative RMSE results obtained.

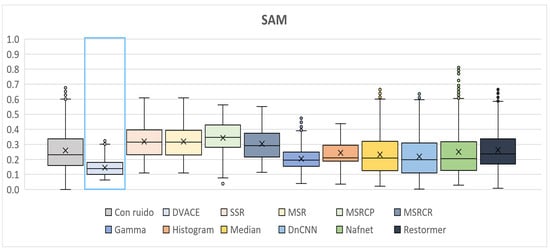

Figure 14 presents the SAM values, which measure the spectral fidelity. The noisy image showed significant spectral distortions, while DVACE achieved one of the lowest median SAM values, ensuring improved spectral consistency. Histogram Equalization and Gamma Correction performed well but introduced more variability.

Figure 14.

Box plots of the quantitative SAM results obtained.

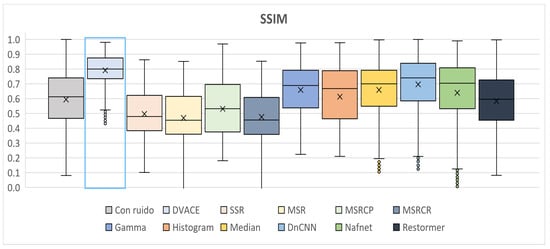

Figure 15 shows the SSIM values, which reflect image quality in terms of structural similarity. The noisy image had the lowest values, while DVACE achieved a high median with minimal variance, confirming its structural preservation. The deep learning models showed competitive performance, while the MSR-based methods underperformed.

Figure 15.

Box plots of the quantitative SSIM results obtained.

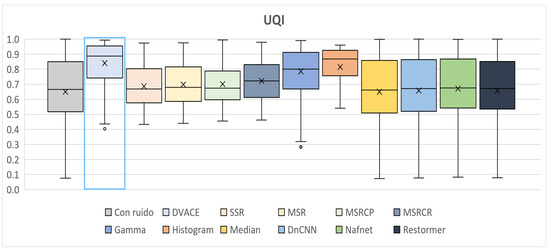

Finally, Figure 16 shows the UQI values, which assess the perceptual quality. The noisy image exhibited the lowest UQI, while DVACE achieved one of the highest medians with low dispersion, indicating strong consistency. The deep learning models performed well but exhibited slightly higher variability.

Figure 16.

Box plots of the quantitative UQI results obtained.

Another critical factor to consider when evaluating the effectiveness of an image restoration method is its execution speed. Table 10 presents the execution times of DVACE for images with dimensions , , , , , and , all of which were corrupted via Gaussian noise with and .

Table 10.

The average processing time for different image sizes, noise densities, and image types.

Table 11 compares DVACE with two versions of DnCNN, showing that DVACE maintained competitive execution times, especially for larger image resolutions. For 512 × 512 and 1024 × 1024, DVACE outperformed DnCNN in efficiency, with processing times of 0.049 s and 0.075 s, respectively, demonstrating its advantage in speed without compromising restoration quality.

Table 11.

Comparison of the processing time between DVACE and DnCNN for GS images.

5. Conclusions

This research highlights the importance of proper image processing in addressing two distinct yet simultaneous challenges that can arise during image capture: poor lighting and noise. Based on this, a methodology is proposed using an autoencoder capable of processing images of any size and type (RGB or GS) under noisy and low-light conditions.

When analyzing the results presented, it was observed that DVACE effectively reduces Gaussian noise in images and enhances their contrast through deep learning techniques implemented in the proposed algorithm, regardless of the average noise level in the degraded images. The results of DVACE, both visually and across various quantitative metrics, demonstrate superior noise reduction and contrast enhancement compared to classical and deep learning-based specialized techniques.

One limitation observed in this research was that DVACE introduces distortions and reduces image activity. Therefore, we recommend using DVACE as a foundation for further improvements (such as integrating a sharpness enhancement algorithm to mitigate distortions and increase image activity).

Author Contributions

Conceptualization, A.A.M.-G., A.J.R.-S., and D.M.-V.; methodology, A.A.M.-G., A.J.R.-S., and D.M.-V.; software, A.A.M.-G., A.J.R.-S., D.M.-V., E.E.S.-R., and J.P.F.P.-D.; validation, E.E.S.-R., D.U.-H., E.V.-L., and F.J.G.-F.; formal analysis, A.A.M.-G., A.J.R.-S., D.M.-V., E.E.S.-R., J.P.F.P.-D., D.U.-H., E.V.-L., and F.J.G.-F.; investigation, A.A.M.-G., A.J.R.-S., D.M.-V., and E.E.S.-R.; writing—original draft preparation, A.A.M.-G.; writing—review and editing, A.A.M.-G., A.J.R.-S., D.M.-V., E.E.S.-R., J.P.F.P.-D., D.U.-H., E.V.-L., and F.J.G.-F.; supervision, A.A.M.-G., A.J.R.-S., and D.M.-V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

The authors wish to thank Instituto Politécnico Nacional and Consejo Nacional de Humanidades, Ciencias y Tecnologías for their support in carrying out this research work.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CNN | Convolutional Neural Network |
| DnCNN | Denoising Convolutional Neural Network |
| DVA | Denoising Vanilla Autoencoder |
| DVACE | Denoising Vanilla Autoencoder with Contrast Enhancement |
| ERGAS | Erreur Relative Globale Adimensionnelle de Synthèse |
| GS | Gray Scale |
| MSE | Mean Square Error |
| MSR | Multiscale Retinex |
| MSRCP | Multiscale Retinex with Chromaticity Preservation |
| MSRCR | Multiscale Retinex with Color Restoration |
| NAFNet | Nonlinear Activation Free Network |
| NCD | Normalized Color Difference |
| PIQE | Perception-based Image Quality Evaluator |
| PSNR | Peak Signal-to-Noise Ratio |
| RASE | Relative Average Spectral Error |
| ReLU | Rectified Linear Unit |
| Restormer | Restoration Transformer |
| RGB | Red, Green, Blue |
| RMSE | Root Mean Squared Error |
| SAM | Spectral Angle Mapper |
| SIDD | Smartphone Image Denoising Dataset |
| SNR | Signal-to-Noise Ratio |
| SSIM | Structural Similarity Index |
| SSR | Single-Scale Retinex |
| UQI | Universal Quality Image Index |

References

- Kumar-Boyat, A.; Kumar-Joshi, B. A Review Paper: Noise Models in Digital Image Processing. Signal Image Process. Int. J. (SIPIJ) 2015, 6, 63–75.

- He, Z.; Ran, W.; Liu, S.; Li, K.; Lu, J.; Xie, C.; Liu, Y.; Lu, H. Low-Light Image Enhancement With Multi-Scale Attention and Frequency-Domain Optimization. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 2861–2875.

- Wang, Z.; Bovik, A.; Evans, B. Blind measurement of blocking artifacts in images. In Proceedings of the 2000 International Conference on Image Processing, Vancouver, BC, Canada, 10–13 September 2000; Volume 3, pp. 981–984.

- Akyüz, A.O.; Reinhard, E. Noise reduction in high dynamic range imaging. J. Vis. Commun. Image Represent. 2007, 18, 366–376.

- Ren, W.; Liu, S.; Ma, L.; Xu, Q.; Xu, X.; Cao, X.; Du, J.; Yang, M.H. Low-Light Image Enhancement via a Deep Hybrid Network. IEEE Trans. Image Process. 2019, 28, 4364–4375.

- Pitas, I. Digital Image Processing Algorithms and Applications; Wiley-Interscience: Hoboken, NJ, USA, 2000; Volume 1, p. 432.

- Wang, S.; Zheng, J.; Hu, H.M.; Li, B. Naturalness Preserved Enhancement Algorithm for Non-Uniform Illumination Images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

- Abdelhamed, A.; Lin, S.; Brown, M.S. A High-Quality Denoising Dataset for Smartphone Cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Smith, S.; Brady, J.M. SUSAN - A New Approach to Low Level Image Processing. Int. J. Comput. Vis. 1997, 23, 45–78.

- Zhang, K.; Zuo, W.; Chen, Y. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Chen, L.; Chu, X.; Zhang, X. Simple Baselines for Image Restoration. In Computer Vision–ECCV 2022, Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; pp. 17–33.

- Waqas-Zamir, S.; Arora, A.; Khan, S. Restormer: Efficient Transformer for High-Resolution Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 1–13.

- Han, Y.; Hu, P.; Su, Z.; Liu, L.; Panneerselvam, J. An Advanced Whale Optimization Algorithm for Grayscale Image Enhancement. Biomimetics 2024, 9, 760.

- Subramani, B.; Veluchamy, M. Pixel intensity optimization and detail-preserving contextual contrast enhancement for underwater images. Opt. Laser Technol. 2025, 180, 111464.

- Subramani, B.; Bhandari, A.K.; Veluchamy, M. Optimal Bezier Curve Modification Function for Contrast Degraded Images. IEEE Trans. Instrum. Meas. 2021, 70, 1–10.

- Jobson, D.J.; Rahman, Z.; Woodell, G. Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

- Jobson, D.J.; Rahman, Z.; Woodell, G.A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

- Petro, A.; Sbert, C.; Morel, J. Multiscale Retinex; Image Processing On Line: Paris, France, 2014; pp. 71–88.

- Poynton, C.A. Gamma and Its Disguises: The Nonlinear Mappings of Intensity in Perception, CRTs, Film, and Video. SMPTE J. 1993, 102, 1099–1108.

- Kaur, S. Noise types and various removal techniques. Int. J. Adv. Res. Electron. Commun. Eng. 2015, 4, 226–230.

- Collins, C.M. Fundamentals of Signal-to-Noise Ratio (SNR). In Electromagnetics in Magnetic Resonance Imaging; Morgan and Claypool Publishers: Bristol, UK, 2016; pp. 1–9.

- Miranda-González, A.A.; Rosales-Silva, A.J.; Mújica-Vargas, D.; Escamilla-Ambrosio, P.J.; Gallegos-Funes, F.J.; Vianney-Kinani, J.M.; Velázquez-Lozada, E.; Pérez-Hernández, L.M.; Lozano-Vázquez, L.V. Denoising Vanilla Autoencoder for RGB and GS Images with Gaussian Noise. Entropy 2023, 25, 1467.

- Bojan, T. 1 Million Faces. Kaggle. 2020. Available online: https://www.kaggle.com/competitions/deepfake-detection-challenge/discussion/121173 (accessed on 4 June 2024).

- Du, Q.; Younan, N.H.; King, R. On the Performance Evaluation of Pan-Sharpening Techniques. IEEE Geosci. Remote Sens. Lett. 2007, 4, 518–522.

- Poynton, C. Poynton’s Color FAQ. Electronic Preprint. 1995, p. 24. Available online: https://poynton.ca/ (accessed on 1 June 2024).

- Venkatanath, N.; Praneeth, D.; Bh, M.C.; Channappayya, S.S.; Medasani, S.S. Blind image quality evaluation using perception based features. In Proceedings of the 2015 Twenty First National Conference on Communications (NCC), Mumbai, India, 27 February–1 March 2015; pp. 1–6.

- Naidu, V. Discrete Cosine Transform-based Image Fusion. J. Commun. Navig. Signal Process. 2012, 1, 35–45.

- Panchal, S.; Thakker, R. Implementation and comparative quantitative assessment of different multispectral image pansharpening approaches. Signal Image Process. Int. J. (SIPIJ) 2015, 6, 35–48.

- Liviu-Florin, Z. Quality Evaluation of Multiresolution Remote Sensing Image Fusion. UPB Sci. Bull. 2009, 71, 37–52.

- Alparone, L.; Wald, L.; Chanussot, J. Comparison of Pansharpening Algorithms: Outcome of the 2006 GRS-S Data-Fusion Contest. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3012–3021.

- Wang, Z.; Bovik, A.C.; Sheikh, H. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Alparone, L.; Aiazzi, B.; Baronti, S. Multispectral and Panchromatic Data Fusion Assessment Without Reference. Photogramm. Eng. Remote Sens. 2008, 2, 193–200.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).