Abstract

As oil and gas exploration has deepened, the complexity and risk of workover (well repair) operations have increased, and traditional description methods based on text and charts are limited in accuracy and efficiency. This study therefore proposes a workover scene description method based on vision language technology and a cross-modal coupling prompt enhancement mechanism. The research first analyzes the characteristics of workover scenes and clarifies the key information requirements. A set of prompt-enhanced vision language models is then designed that can automatically extract key information from well site images and generate structured natural language descriptions. Experiments show that this method significantly improves the accuracy of target recognition (from 0.7068 to 0.8002) and the quality of text generation (the perplexity drops from 3414.88 to 74.96). Moreover, the method is general and scalable: it can be applied to similar complex scene description tasks, providing new ideas for applying vision language technology to workover operations and to the industrial field more broadly. Future work will further optimize model performance and expand the application scenarios to contribute to the development of oil and gas exploration.

Keywords:

workover operation; vision language model; prompt learning; scenario description; industrial large model

MSC:

68T45

1. Introduction

In recent years, as large-scale deep learning models have proliferated, many industries have applied them widely in downstream scenarios [1,2]. The integration of artificial intelligence with industrial applications has become a focus of both research and practice across a wide range of fields, driving technological innovation and creating new opportunities for socioeconomic development [3]. In the petroleum industry, the application of large-scale models remains exploratory [4]. Research groups have collected large amounts of text data from the oil and gas industry, providing new ideas for developing large language models in this field, and have constructed an intelligent petroleum engineering system by extracting and searching well site and historical data [5]. This exploration of large language models has opened new avenues for the intelligent development of petroleum engineering and supports scientific research and technological applications in related fields. However, the application of vision language models in the petroleum industry remains nascent, and traditional methods often fail to meet the challenges posed by workover operations. Given the abundance of video monitoring footage and work report texts in workover operations, vision language models are a promising solution. By combining visual information with natural language descriptions, such a model is expected to understand and interpret the workover process intelligently, opening new possibilities for technological advancement and safety management in this field.

The on-site monitoring data generated during workover operations in oil fields is immense. Despite the high value of these video records, their substantial storage requirements present a bottleneck in data management and analysis [6]. Enhanced visual-language technology offers an innovative solution to this challenge: it can analyze and comprehend image content in workover scenarios in real time. By integrating deep learning and computer vision, it converts scene images into detailed textual descriptions. The storage-intensive video and image data can thus be condensed into text, significantly improving storage and retrieval efficiency. This alleviates the strain on storage resources and makes historical monitoring information easier to analyze and use. Managers can quickly browse and search textual descriptions of key events, obtaining timely and reliable data support for safety audits, operational efficiency assessments, and the development of accident prevention strategies [7].

Scene understanding uses integrated vision language models to interpret on-site visual data and provide machine analysis feedback for the operational site. This approach bridges gaps inherent in traditional manual monitoring [8] and supports safer and more efficient operations. Scene description also improves remote monitoring and strategic decision-making, particularly at geographically remote sites. It transforms visual information into precise natural language reports that remote expert teams can use for monitoring and guidance, thus promoting intelligent fault prediction and health management [9]. By learning from historical workover cases, the model can identify precursory features of equipment failures and trigger early warning mechanisms, with the potential to advance well site digital twins [10,11]. However, applying vision language models to workover scene description raises several significant challenges. The workover environment is highly complex, involving intricate mechanical equipment operations [12] and dense human activity [13], so simple visual recording cannot capture all key information comprehensively and accurately. Uncontrollable natural lighting and harsh weather cause the quality of on-site images and video to fluctuate significantly, constraining the model’s ability to parse and interpret the data. Furthermore, the specialized terminology and deep professional knowledge of the workover domain place high demands on the model’s semantic understanding and logical reasoning, making accurate scene description even more difficult.

In this work, we present an approach utilizing prompt-enhanced vision language models, which preserves the robust domain generalization capability of pre-trained vision language models [14,15]. This method enables the transformation of downstream task inputs with minimal task-specific annotation data, facilitating data space adaptation and significantly reducing the overhead and difficulty of adapting tuning strategies for downstream tasks. Through prompt enhancement on a small-sample real workover scene dataset, the model demonstrates outstanding adaptability in complex workover operation scenarios. Overall, this study encompasses several innovative aspects:

This study proposes an effective method of prompt-enhanced modeling, which successfully enables large-scale vision language models to adapt to new domains in downstream applications. By designing adaptive prompts at the input of the model encoders, the vision language model is guided to understand and recognize specific visual and linguistic information in the new domain, thereby achieving accurate execution of tasks in the new domain.

Leveraging the concept of auxiliary annotation, a real workover scene image description dataset is generated to train the prompt-enhanced model, and its adaptability to workover operation scenarios is validated. The powerful generalization capability of large-scale vision language models is utilized to generate pseudo-annotations for workover scene images, which are then manually adjusted with domain expertise and incorporated into the experimental dataset for prompt enhancement.

This research explores the application of large-scale vision language models in the petroleum industry. Their successful application to complex scene description demonstrates their potential utility in downstream fields such as workover operations, providing new perspectives and methods for addressing the complex and ever-changing challenges in the petroleum industry.

2. Prior Research Review

To clarify how prompts can enhance vision language models for describing complex workover scenarios, this section reviews prior work along three dimensions: workover scene understanding, vision language models, and scene description. It summarizes the latest theoretical methods and practical achievements in these research areas.

2.1. Workover Scene Understanding

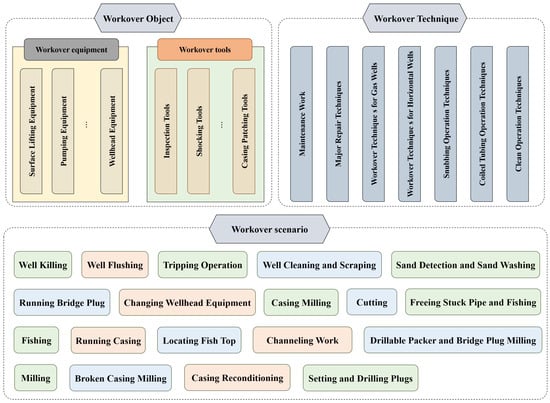

Workover operations, as an indispensable part of the petroleum production industry, refer to a series of complex engineering activities involving the maintenance, repair, or modification of drilled oil wells throughout their lifecycle [16]. This process encompasses various interventions aimed at improving the efficiency of oil and gas well production and extending the longevity of wellbores. Specifically, workover operations may involve clearing obstructions such as sediment or accumulated sand and debris at the wellbore bottom to restore oil and gas flow, implementing acidizing or fracturing operations to enhance reservoir permeability, repairing damaged wellbore structures, replacing aging equipment, and even re-completing wells to adapt to geological changes or adopt new technologies to improve recovery rates. The theoretical structure of workover operations [16] is illustrated in Figure 1. In practical operations, workover requires high technical proficiency and is often conducted in harsh environments characterized by high pressure, high temperature, and the presence of harmful gases, thereby necessitating stringent safety controls, the use of precision instruments, and highly skilled personnel.

Figure 1.

Theoretical Structure of Workover Operations.

Workover operations rely on permanent downhole monitoring technologies to obtain real-time production data, supporting intelligent operational decision-making and optimization [17]. By assessing workload efficiency indicators, developing best practices, and optimizing models, workover operations aim to reduce operational time losses, optimize resource allocation, and decrease production losses [18]. Through the analysis of operational data, the establishment of performance baselines, and the optimization of operational processes, significant improvements in cost and efficiency can be achieved [19]. The core requirement of workover operations is therefore to use advanced technologies for intelligent decision-making, precise assessment, and accurate optimization, so that operations are executed efficiently and at controlled cost while safety and environmental protection are safeguarded. Within these constraints, the goal is to maximize well production capacity, optimize reservoir management, and extend the economic life of oil fields. With the continuous integration of intelligent and automated technologies, modern workover operations are gradually evolving towards remote monitoring [20], precise guidance, and minimal human intervention.

Machine learning is being applied ever more widely in petroleum engineering, and it shows particular promise in workover and drilling operations. Recent studies have focused on developing intelligent models to improve the safety and efficiency of operations. For example, Ref. [21] proposed a regional drilling risk pre-assessment method based on deep learning and multi-source data, which enhanced the model’s generalization ability for pre-drilling risk prediction in new exploration areas by constructing a drilling risk profile rich in geological and engineering information. Addressing the nonlinear fluctuation characteristics of monitoring data during natural gas drilling, Ref. [22] used convolutional algorithms to extract multi-parameter correlation features that vary over time and applied neural networks with nonlinear classification capabilities for risk classification, significantly improving identification accuracy and response speed. Ref. [23] tackled the problem of recognizing the working state of workover pump systems and proposed a few-shot working condition recognition method based on four-dimensional time-frequency features and meta-learning convolutional neural networks, enabling rapid and accurate identification when samples are scarce. These studies not only promote the application of machine learning in petroleum engineering but also provide new ideas for the intelligent development of workover and drilling operations. Workover operations require both precise perception and understanding of the downhole environment and deep knowledge of professional equipment and operational procedures, which sets high standards for the scene understanding and description capabilities of vision language models.

2.2. Vision Language Models and Scene Description

Vision language models, serving as a bridge between visual perception and language understanding, have made rapid progress in recent years alongside the broader growth of artificial intelligence research. Originating in the deep learning era, these models initially focused on tasks such as image captioning and simple description generation, using convolutional neural networks to extract image features [24] and combining them with recurrent neural networks [25] or, later, Transformer architectures [26] to generate textual descriptions, thereby achieving the initial conversion from pixels to language. With the construction of large-scale datasets such as MS COCO [27] and Visual Genome [28], together with leaps in computational power, the performance of vision language models has improved significantly. They can now not only recognize objects and actions in images but also capture more complex contextual relationships between scenes and objects. In recent years, the emergence of the vision language pre-training paradigm, especially Contrastive Language-Image Pre-training (CLIP) by OpenAI [29] and A Large-scale ImaGe and Noisy-text embedding (ALIGN) by Google [30], has revolutionized the field. Additionally, Transformer architectures and their variants, such as Bidirectional Encoder Representations from Transformers (BERT) [31] and Vision Transformer (ViT) [32], have equipped vision language models with powerful self-attention mechanisms, enabling them to handle long-distance dependencies and complex contextual information flexibly. These advances have not only improved the accuracy and diversity of descriptions but also led to cutting-edge applications such as end-to-end multimodal generation [33] and image content synthesis [34].
These models are pre-trained on large-scale multimodal data to learn shared representation spaces across modalities and are then fine-tuned on specific downstream tasks, such as visual question answering (VQA), image-text retrieval (ITR), and visual reasoning, achieving significantly better performance than traditional methods. They have deepened the understanding of visual scenes and promoted the exploration of interaction mechanisms between vision and language. We analyzed three currently popular vision language models [14,15,35]. Since each model is available in versions with different numbers of parameters, we uniformly analyze the 7B version, as shown in Table 1. LLaVA is an end-to-end multimodal model. With its efficient fusion of vision and language, it achieves strong performance with a small amount of data, demonstrating good generalization and understanding capabilities. Its architecture includes a pre-trained CLIP visual encoder and a large language model (LLM); a linear projection converts visual features into the representation space of the language model to enable multimodal dialogue. Mini-Gemini combines a CNN and a ViT, focuses on high-resolution image processing, and provides a series of model versions with different parameter counts to meet the requirements of different scenarios. It adopts dual visual encoders to provide low-resolution visual embeddings and high-resolution candidate objects, and proposes patch information mining to enhance model performance.

Table 1.

Comparison of the characteristics of vision language models.

Furthermore, we also made comparisons with mainstream models such as BLIP [36], OFA [37], MiniGPT-4 [38], and Flamingo [39]. BLIP adopts the Multimodal mixture of Encoder-Decoder (MED) architecture and the CapFilt data augmentation technique. It is good at cross-modal retrieval and image captioning, but it has deficiencies in Chinese language support and fine-grained localization. OFA is based on a unified Seq2Seq framework and instruction learning and is suitable for multi-task generalization, but its performance in complex visual reasoning is limited. MiniGPT-4 performs well in creative content generation through its vision-language alignment layer and fine-tuning on detailed descriptions, but it requires manual data curation and has limited Chinese support. Flamingo, by leveraging the Perceiver Resampler and gated cross-attention, performs exceptionally well in few-shot learning and contextual reasoning, but it has high computational costs and poor Chinese adaptability.

Considering both performance and applicability in Chinese multimodal applications, we selected the Qwen-VL model as the base model for this research. Qwen-VL not only performs well in image-text understanding and visual reasoning but also integrates a deep understanding of Chinese Internet text and images. It supports megapixel image processing and has end-to-end capabilities in both Chinese and English, demonstrating strong performance and wide applicability in Chinese multimodal applications.

Scene description generation aims to provide detailed explanations of image content by automatically generating accurate and context-rich natural language texts. This process goes beyond object recognition and requires the model to deeply understand the scene structure, complex interactions between entities, dynamic behaviors, and potential narrative clues to construct a multidimensional, coherent visual narrative scene. Early scene description techniques were mainly based on template matching and retrieval mechanisms [40]. These methods faced bottlenecks due to the limitations of description templates and the scarcity of expression. With the development of deep learning models combining convolutional neural networks and recurrent neural networks, automatic generation of descriptions became possible, marking a significant leap in the field towards dynamic and diversified text production. These deep learning models, trained on massive image-text paired datasets [41], significantly enhanced the accuracy and fluency of descriptions. In recent years, with the introduction of large-scale pre-training, the performance boundaries of scene description have been completely changed. This not only deepens the understanding of visual contexts but also leads to more human-like language expression generation. Through cross-modal contrastive learning strategies, these models achieve a high degree of coordination between visual features and language expressions, ensuring that the generated descriptions are both nuanced and imbued with profound semantic layers. Despite significant progress, the description generation of well workover scenes still faces specific challenges. Precise naming of professional tools and techniques, assessment descriptions of workover operations, and clear expression of event sequences in complex mechanical interactions remain pressing issues.

3. Materials and Methods

Qwen-VL is an open-source, commercially usable large-scale vision language model introduced by Alibaba. It combines a vision encoder with a position-aware vision language adapter, endowing the language model with visual understanding capabilities. Below, we introduce a vision language model based on Qwen-VL that is enhanced by cross-modal prompt learning.

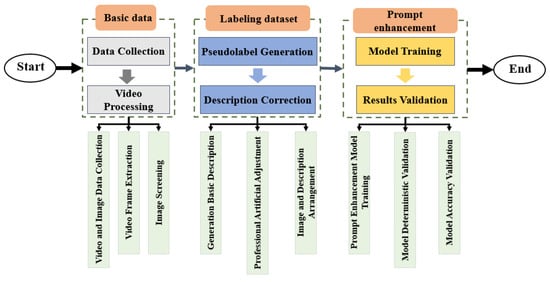

3.1. Workflow

In response to the complex image description challenges frequently encountered in oilfield workover operations, this study proposes a comprehensive and systematic methodology. This methodology not only focuses on how to deeply mine valuable information from vast video image resources but also emphasizes the scientific and systematic organization of this information to construct a high-quality, representative, and widely applicable dataset. Through the construction of this dataset, we can more accurately capture and understand the key details and dynamic changes in the workover process, thereby further optimizing the decision support system for workover operations and improving operational efficiency and safety. As shown in Figure 2, the implementation process of this methodology covers multiple stages, including data collection, processing, analysis, and application, ensuring the rigor and reliability of the entire research process.

Figure 2.

Workflow of prompt-enhanced vision language model for workover scene description.

First, we collected a large amount of workover operation video data from multiple well sites, covering a wide range of routine workover operations and complex fault-handling processes, to provide rich visual material for subsequent image analysis. Video processing algorithms were then used to extract frames at predetermined intervals and obtain key frame images. This step reduced redundancy in the video data and focused on images containing scene information. Next, object detection was applied to filter the selected images: deep learning models identified and classified key workover elements, such as equipment, tools, and wellhead conditions, achieving precise target recognition in complex scenes and improving the accuracy and efficiency of data processing. The resulting foundational dataset was organized, and pre-trained vision language models were used to generate preliminary descriptions of the images, known as pseudo-labels. Leveraging their robust cross-domain generalization capabilities, these models generated semantically rich descriptions for each image, albeit with some potential errors, providing an effective starting point for manual review. Professional personnel then reviewed and corrected the machine-generated pseudo-labels to ensure the accuracy and professionalism of each description. Finally, the manually refined image descriptions were integrated into a high-precision training and validation dataset for prompt enhancement of the vision language model. Training on this dataset significantly improved the model’s adaptability to the workover domain, enabling more accurate understanding and judgment and providing an intuitive and reliable basis for subsequent workover operation research.
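The first two filtering stages of this workflow (interval-based frame extraction and detection-based screening) can be illustrated with a minimal Python sketch. Everything concrete here is an assumption made for illustration: the function names, the sampling interval, the class labels, and the score threshold are hypothetical, and the detector output is a hand-written stand-in rather than the output of the model used in the study.

```python
def sample_frame_indices(total_frames: int, fps: float, interval_s: float) -> list[int]:
    """Return the indices of frames taken every `interval_s` seconds."""
    if total_frames < 0 or fps <= 0 or interval_s <= 0:
        raise ValueError("total_frames must be >= 0; fps and interval_s must be > 0")
    step = max(1, round(fps * interval_s))  # never sample more densely than every frame
    return list(range(0, total_frames, step))


def filter_frames(detections: dict, relevant: set, min_score: float = 0.5) -> list[int]:
    """Keep frames with at least one relevant detection at or above `min_score`.

    `detections` maps a frame index to a list of (label, score) pairs,
    a hypothetical format standing in for an object detector's output.
    """
    kept = []
    for index in sorted(detections):
        if any(label in relevant and score >= min_score
               for label, score in detections[index]):
            kept.append(index)
    return kept


# Illustrative values only: a 4-second clip at 25 fps, sampled once per second.
indices = sample_frame_indices(total_frames=100, fps=25.0, interval_s=1.0)

# Hand-written stand-in for detector output on the sampled frames.
fake_detections = {
    0: [("wellhead", 0.9)],
    25: [("sky", 0.8)],
    50: [("tubing", 0.3)],
    75: [("tubing", 0.7), ("worker", 0.6)],
}
kept_frames = filter_frames(fake_detections, relevant={"wellhead", "tubing"})
```

In this toy run, frames 25 and 50 are dropped because they contain no relevant element above the threshold; only the remaining frames would be passed on to pseudo-label generation.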

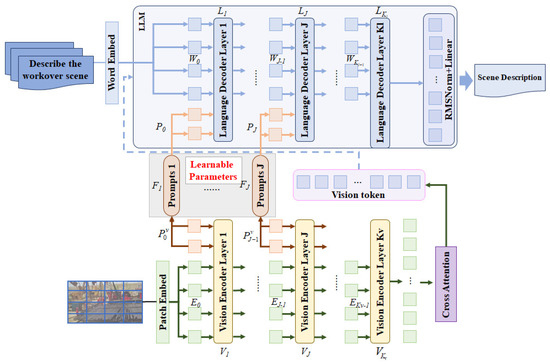

3.2. Prompt-Enhanced Vision Language Models

The study aims to utilize the Qwen-VL architecture as the foundational model and enhance its performance through prompt-based guidance, focusing on the core knowledge of workover scenes. Research has demonstrated that precise adjustments of textual prompts at different transformation stages can effectively enhance the synergistic effects between vision and language modalities [42], thereby improving the model’s generalization ability when facing new categories, cross-dataset transfer, and domain shift datasets. Based on this finding, we explore cross-modal prompt enhancement for vision language models to further enhance their performance. The overall model structure is illustrated in Figure 3.

Figure 3.

Overall architecture of the prompt-enhanced vision language model for workover scene description.

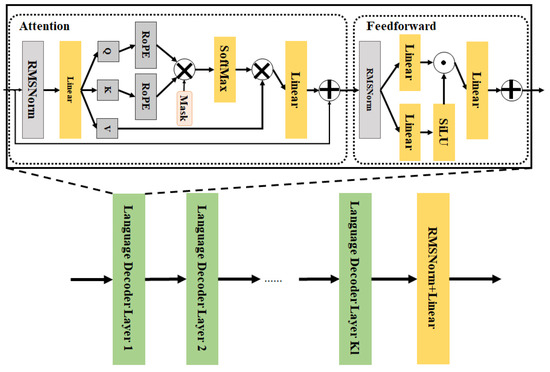

The core architecture of Qwen-VL comprises three major components: a large language model (LLM), a vision encoder, and a position-aware vision language adapter. Through a three-stage training process, it achieves in-depth image understanding, localization, and text reading. The large language model Qwen-7B [43] is based on an improved Transformer architecture that employs untied embeddings (separate weights for the input embedding and the output projection, in place of tied weights) and rotary position embeddings (RoPE) to enhance performance while maintaining memory efficiency. Carefully designed training strategies, including Flash Attention and the AdamW optimizer, ensure effective learning of the model, as depicted in Figure 4. The vision encoder uses a large variant of the Vision Transformer architecture, ViT-G/14, which processes visual signals with self-attention and, owing to its significantly increased model size, captures and represents complex visual features at a finer level. The position-aware vision language adapter is implemented as a single-layer cross-attention module. It uses a set of query vectors that are randomly initialized and then trained end-to-end, and these queries attend to the feature maps output by the vision encoder. Through this interaction, the visual features are compressed into a fixed-length sequence of 256 tokens, giving a compact representation of the original vision input while maintaining high information fidelity.

Figure 4.

Structural and operational diagram of large-scale language model components.
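To make the adapter described above more concrete, the following is a simplified sketch of how a fixed set of learnable queries can compress a variable number of patch features into a fixed-length sequence through cross-attention. It is not the Qwen-VL implementation: the learned query/key/value projections and the positional terms are omitted, the feature dimension and patch count are illustrative assumptions, and random vectors stand in for trained queries and real image features.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equal-length sequences."""
    return sum(x * y for x, y in zip(u, v))


def cross_attention_pool(queries, features):
    """Single-head cross-attention without learned projections.

    queries:  num_queries x dim (stand-ins for the learnable query vectors)
    features: num_patches x dim (stand-ins for vision encoder outputs)
    Returns a num_queries x dim list, regardless of num_patches.
    """
    scale = 1.0 / math.sqrt(len(queries[0]))
    columns = list(zip(*features))  # feature values grouped per channel
    pooled = []
    for query in queries:
        weights = softmax([dot(query, feat) * scale for feat in features])
        pooled.append([dot(weights, column) for column in columns])
    return pooled


rng = random.Random(0)
dim, num_patches, num_queries = 4, 1024, 256  # dim and num_patches are assumed values
features = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_patches)]
queries = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_queries)]
compressed = cross_attention_pool(queries, features)
```

Whatever the number of input patches, the output always has one row per query, which is why the sequence passed to the language model has a fixed length of 256.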

To enhance the adaptability of the Qwen-VL model for downstream tasks in workover scene description, we investigated the potential of multimodal prompt enhancement involving both vision and language modalities. Research indicates that learning prompts at deeper levels of the Transformer architecture is crucial for progressively refining feature representations [44]. Building upon this understanding, this study proposes a strategy in which trainable prompts are integrated into the first J layers of both the vision and language branches of the Qwen-VL model. These multimodal hierarchical prompts aim to leverage the intrinsic knowledge base of the vision language model to optimize the representation of context information relevant to specific tasks.

Before we delve into the formal introduction, let us briefly discuss some simple concepts of vision language models. Tokens can be regarded as fragments or basic units of words. In natural language processing, text is often segmented into a series of tokens to facilitate computer processing and analysis. These tokens are not always precisely divided at the beginning or end of words; they may include trailing spaces, punctuation marks, or even in some cases, be part of subwords. Embedding is a technique that converts high-dimensional data, such as text or images, into lower-dimensional vector representations. These vector representations capture the essential features of the data, making them more efficient for processing, analysis, and machine learning tasks. This technique is widely used to transform image and text information into low-dimensional vector representations. These vector representations can be compared and manipulated in a shared vector space, enabling correlation and matching between images and text. Patch refers to the practice of dividing an image into a series of small blocks when processing image inputs. This approach helps the model capture local features and details in the image, thereby better understanding the image content. By dividing the image into patches, the model can individually process and analyze each patch’s information and then integrate these local details to generate an understanding of the entire image.
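As a small illustration of the patch concept, the sketch below splits a toy image (a nested list of pixel values) into non-overlapping square patches in row-major order. The function name and the toy image are hypothetical; the patch size of 14 in the final comment follows the "/14" in ViT-G/14, while the 448 x 448 input size is only an assumed example value.

```python
def split_into_patches(image, patch):
    """Split an H x W image (list of rows) into non-overlapping patch x patch blocks.

    Patches are returned in row-major order (left to right, then top to bottom).
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            block = [row[left:left + patch] for row in image[top:top + patch]]
            patches.append(block)
    return patches


# Toy 4 x 4 "image" whose pixel value encodes its position.
toy_image = [[r * 4 + c for c in range(4)] for r in range(4)]
toy_patches = split_into_patches(toy_image, patch=2)

# With an assumed 448 x 448 input and 14 x 14 patches: (448 // 14) ** 2 patches.
patches_per_image = (448 // 14) ** 2
```

Each patch is then embedded as one vector, so the number of patches determines the length of the visual token sequence the encoder processes.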

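Specifically, in the language branch, we introduce $b_l$ trainable tokens, denoted as $\{P^k\}_{k=1}^{b_l}$, where $b_l$ represents the number of tokens. Consequently, the input embeddings are transformed as follows:

$$[P^1, P^2, \dots, P^{b_l}, W_0]$$

where $W_0 = [w^1, w^2, \dots, w^N]$ represents the set of tokens input before the prompt enhancement, and $w^i$ denotes the i-th token in the set. New learnable tokens are further introduced into each transformer block of the language encoder $L_i$ until the J-th layer:

$$[\,\_\,, W_i] = L_i([P_{i-1}, W_{i-1}]), \quad i = 1, 2, \dots, J$$

where $[\cdot, \cdot]$ denotes the concatenation operation and $\_$ marks the output position of the language prompts $P_{i-1}$, which is refilled with the learnable tokens of the next layer. After the J-th layer of the transformer, subsequent layers process the prompts output by the preceding layers, with $K$ denoting the total number of layers:

$$[P_j, W_j] = L_j([P_{j-1}, W_{j-1}]), \quad j = J+1, \dots, K$$

Similarly, for the input image tokens $E_0$ in the vision branch, $b_v$ learnable tokens, denoted as $\{\tilde{P}^k\}_{k=1}^{b_v}$, are introduced, and new learnable tokens are introduced into the image encoder $V_i$ up to the J-th layer:

$$[E_i, \,\_\,] = V_i([E_{i-1}, \tilde{P}_{i-1}]), \quad i = 1, 2, \dots, J$$

$$[E_j, \tilde{P}_j] = V_j([E_{j-1}, \tilde{P}_{j-1}]), \quad j = J+1, \dots, K$$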

The deep prompts offer flexibility in learning prompts across different feature levels in the ViT architecture. Sharing prompts across stages is preferred over independent prompts because features processed by consecutive transformer blocks are more strongly correlated. Therefore, unlike the early stages, the later stages are not given additional independently learned prompts.
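The layer-wise prompting scheme described above can be sketched as a short control-flow example. This is a toy illustration under stated assumptions, not the training code of the study: scalars stand in for token vectors, each "layer" is a trivial function, the function name is hypothetical, and the choice to pass the prompt outputs of layer J on to layer J+1 is an assumption about how the later layers are fed.

```python
def run_with_deep_prompts(layers, layer_prompts, tokens):
    """Run a stack of layers, inserting fresh prompts in the first J layers.

    layers:        list of K callables, each mapping a sequence to a sequence
    layer_prompts: list of J prompt lists (J <= K), one per prompted layer
    tokens:        the ordinary input tokens (W_0 in the text)
    """
    depth = len(layer_prompts)           # J
    num_prompts = len(layer_prompts[0])  # number of prompt tokens per layer
    prompts = layer_prompts[0]
    for index, layer in enumerate(layers):
        if index < depth:
            # Layers 1..J: discard the previous prompt outputs and
            # insert this layer's own learnable prompts instead.
            prompts = layer_prompts[index]
        # Layers J+1..K: simply keep processing the prompts from the layer before.
        output = layer(prompts + tokens)
        prompts, tokens = output[:num_prompts], output[num_prompts:]
    return prompts, tokens


# Toy setup: K = 4 layers that add 1 to every element, J = 2 prompted layers.
toy_layers = [lambda seq: [x + 1 for x in seq]] * 4
toy_prompts = [[100, 100], [200, 200]]
final_prompts, final_tokens = run_with_deep_prompts(toy_layers, toy_prompts, [0, 0, 0])
```

In the toy run, the prompts injected at the second layer (value 200) replace the outputs derived from the first layer's prompts, and from the third layer onward the prompt values are only propagated, which mirrors the distinction between the first J layers and the remaining ones.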

During prompt enhancement, it is essential to adopt a multimodal approach that optimizes the vision and language branches of the Qwen-VL model simultaneously to ensure comprehensive contextual optimization. A preliminary strategy is to simply combine the deep vision prompts with the language prompts, allowing the language prompts $P$ and the vision prompts $\tilde{P}$ to be learned together in a unified training process. Although this method satisfies the principle of prompt completeness, it lacks interaction between the vision and language branches when learning task-specific contexts, and thus fails to fully exploit synergistic effects. Recognizing this limitation, Ref. [42] introduced a branch-aware multimodal prompting mechanism that coordinates the vision and language branches by sharing prompt information between the two modalities, enabling close collaboration between them; it is adopted here for the Qwen-VL model. Specifically, as with the deep language prompts $P$ described above, the language prompts are integrated into the language branch up to the J-th transformer block. To ensure collaborative operation between the vision prompts $\tilde{P}$ and the language prompts $P$, a vision-language coupling function $F(\cdot)$ is employed. This function ties the vision prompts $\tilde{P}_i$ to the language prompts $P_i$ through a projection between the language and vision domains:

$$\tilde{P}_i = F(P_i)$$

where the coupling function $F(\cdot)$ is a linear layer that serves as a communication channel between the vision and language modalities, allowing gradients to be exchanged between them and thereby enhancing the overall collaborative learning capability of the model.
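A linear coupling function of this kind can be sketched in a few lines. The sketch assumes, following the branch-aware prompting idea of Ref. [42], that the vision prompts are produced from the language prompts by the linear layer; the function name, dimensions, weights, and bias values are purely illustrative and are not parameters of the actual model.

```python
def couple(language_prompts, weight, bias):
    """Linear coupling F: map each language prompt (dim d_l) to a vision prompt (dim d_v).

    weight: d_v x d_l matrix as a list of rows; bias: list of length d_v.
    Both would be trainable parameters in practice (assumed here as fixed numbers).
    """
    return [
        [sum(w * x for w, x in zip(row, prompt)) + b for row, b in zip(weight, bias)]
        for prompt in language_prompts
    ]


# Illustrative sizes: two language prompts with d_l = 2, projected to d_v = 3.
language_prompts = [[1.0, 2.0], [0.0, 1.0]]
weight = [[1.0, 0.0],
          [0.0, 1.0],
          [1.0, 1.0]]
bias = [0.0, 0.0, 0.5]
vision_prompts = couple(language_prompts, weight, bias)
```

Because the vision prompts are a function of the language prompts, a loss computed on the vision side also produces gradients for the language prompts and for the coupling weights, which is the sense in which the layer acts as a communication channel between the two branches.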

3.3. The Semi-Supervised Vision Semantic Augmentation Labeling Method

The semi-supervised learning method is a machine learning approach that combines the characteristics of both supervised and unsupervised learning [45]. It aims to utilize a large amount of unlabeled data along with limited labeled data to train the model. This approach involves training the model on labeled data and then using the acquired knowledge to infer the categories of unlabeled data, thus improving learning efficiency and generalization, especially in scenarios where acquiring labeled data is costly or labeling resources are limited. Our research strategy ingeniously integrates the core principles of semi-supervised learning with advanced vision language model technology, aiming to establish an efficient and economical data labeling process. Specifically, this method leverages the generalization capabilities of the foundational vision language model to automatically generate descriptive pseudo-labels based on image content. This process fully leverages the model’s understanding of visual scenes, allowing for the initial assignment of labels to images without manual intervention, despite the possibility of some uncertainty or errors in these labels.

The subsequent key step introduces “human-machine collaboration”, in which human experts review and refine the automatically generated pseudo-labels at relatively low cost. This manual correction improves label accuracy and ensures data quality while avoiding the expense and time of fully manual annotation. Compared with traditional image labeling methods, the strategy reduces the dependence on large amounts of precisely annotated data and offers a feasible, economical way to process large-scale image data. The method also forms an iterative optimization cycle: once the initial pseudo-labels are manually corrected, the resulting high-quality samples can be fed back into the vision language model for retraining, which improves its description generation capability and moves the automatic labels progressively closer to the required accuracy. This process optimizes model performance and illustrates the advantages of semi-supervised learning in reducing annotation costs and improving learning efficiency.
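The labeling cycle described above can be sketched as a single function that is called once per round. This is only an illustration of the control flow: `describe`, `review`, and `retrain` are hypothetical callables standing in for the vision language model, the expert review step, and the retraining step, and the toy stand-ins below are not part of the method itself.

```python
def pseudo_label_round(images, describe, review, retrain):
    """One round: draft pseudo-labels, let experts correct them, retrain on the result."""
    drafts = {img: describe(img) for img in images}
    corrected = {img: review(img, text) for img, text in drafts.items()}
    n_changed = sum(1 for img in images if corrected[img] != drafts[img])
    return retrain(describe, corrected), corrected, n_changed

# Toy stand-ins (hypothetical): the "expert" knows the right label for each image.
EXPERT_LABELS = {"img_001": "tripping tubing", "img_002": "sand flushing"}

def base_describe(image_id):
    return "unknown scene"

def expert_review(image_id, draft):
    return EXPERT_LABELS.get(image_id, draft)

def toy_retrain(describe, corrected):
    memory = dict(corrected)  # the "retrained" model remembers corrected samples
    def updated(image_id):
        return memory.get(image_id, describe(image_id))
    return updated

images = list(EXPERT_LABELS)
model, labels_1, changed_1 = pseudo_label_round(images, base_describe, expert_review, toy_retrain)
model, labels_2, changed_2 = pseudo_label_round(images, model, expert_review, toy_retrain)
print(changed_1, changed_2)  # prints: 2 0
```

The number of labels that experts still have to change per round is one simple way to monitor whether the feedback loop is reducing manual effort.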

4. Results

This section reports experiments on datasets collected from actual oilfield sites to validate the effectiveness and reliability of the proposed method. The “Dataset Overview” subsection describes the characteristics of the dataset. The “Experimental Design” subsection details the configuration and execution of the experiments. The “Results Analysis” and “Comparative Analysis” subsections examine the experimental results and compare them with the model before improvement to evaluate the performance and advantages of the proposed approach.

4.1. Dataset Overview

We chose images of workover operations in oilfield well sites as the experimental dataset. These images cover a wide range of workover operation scenarios, including but not limited to tripping tubing, hydraulic fracturing, sand flushing, and well stimulation operations. The image data of these scenarios are crucial for understanding the complex workover environment.

The data collection process followed strict standards to ensure the quality and diversity of the data. The first part consisted of over 24,000 images collected through a network of high-resolution industrial-grade cameras deployed at key positions on the workover platforms. These cameras were positioned to capture operational details from different angles and under different lighting conditions. To minimize environmental interference, all cameras were equipped with anti-glare and automatic exposure adjustment functions, ensuring clear images even in extreme weather conditions. The second part comprised over 2000 high-definition images collected manually by researchers at the workover operation sites. Compared with images from the camera network, these higher-resolution images reveal finer details of the scenes and thus enhance the representation of complex visual information. During the construction of the dataset, we emphasized the continuity of the time series so as to record the entire workover operation process. When annotating image scene descriptions with the semi-supervised vision semantic augmentation labeling method, each step of the operation, including but not limited to the type, status, and object of the operation, was added to the pseudo-annotation results. Note that we took the last sentence of each scene description as the overall summary of the image, as shown in Figure 5. The scenario is described as follows: This image illustrates the process of Tripping Tubing during well workover operations. In the top left of the image is the pumping unit, below which lies a multitude of tubing. There are workover rigs and some wellhead equipment present in the scene. Several personnel are handling the Tubing while Tripping Tubing.

After all labeling work for the dataset was completed, we invited experienced oilfield engineers to review the image labels, ensuring the accuracy, consistency, and professionalism of the annotations. After processing, the dataset comprised over 26,000 images along with their corresponding annotations. To help the vision language model learn the characteristics of the scenes while ensuring that both the training and validation sets contained all workover operation scenarios, the study used 25,000 annotated images as the training set and the rest as the validation set.

Figure 5.

Example of workover scenario.

4.2. Experimental Design

After data preparation and preprocessing, the workover scene images and their corresponding annotations were obtained. On this basis, we prepared the pre-trained Qwen-VL model and then applied prompt enhancement to it using the workover scene dataset. The input image size was uniformly normalized, because details are lost during Patch Embedding if the image is too large, and many details are likewise lost if the image is too small. The dimension dv of the vision prompt enhancement branch is therefore 588 (3 × 14² = 588), and the dimension dl of the language prompt branch is 32,000. The coupling function is thus a linear layer that maps 32,000 dimensions to 588 dimensions. In the experiment, the depth J of prompt enhancement is set to 15, and the length bv of prompt language branch is set to 28. The language prompt branch is obtained by linear mapping of vision branches, and the training parameters are randomly initialized.

During the training phase, we used 4 GPUs (V100 32G) for parallel accelerated training. Each batch contained 16 samples, and training ran for 500 epochs. In each epoch, the dataset was randomly shuffled before being fed to the model in order to improve generalization. We selected AdamW [46] as the optimization algorithm:

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t,\\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^{2},\\
\hat{m}_t &= \frac{m_t}{1-\beta_1^{t}}, \qquad \hat{v}_t = \frac{v_t}{1-\beta_2^{t}},\\
\theta_{t+1} &= \theta_t - \eta\left(\frac{\hat{m}_t}{\sqrt{\hat{v}_t}+\epsilon} + \lambda\,\theta_t\right)
\end{aligned}
$$

In the equation above, $m_t$ and $v_t$ represent the first-order moment estimate and the second-order moment estimate of the gradient at the current time step t, respectively, and $\hat{m}_t$ and $\hat{v}_t$ are their bias-corrected values; $\beta_1$ and $\beta_2$ are the exponential decay rates controlling the exponential moving averages of the first and second moments, respectively; $\eta$ denotes the learning rate; $\epsilon$ is a small constant to prevent division by zero; $\lambda$ is the weight decay coefficient controlling the extent of weight decay; and $\theta_t$ and $g_t$ represent the parameters and gradients at the current time step, respectively. During training, we employed the Warmup method, in which the learning rate starts at a small value and gradually increases to the designated initial learning rate. This stabilizes convergence at the beginning of training and prevents overly rapid parameter updates and fluctuations in the loss function caused by an excessively large learning rate in the initial stage. The training process parameters are listed in Table 2.
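To make the update rule and the warmup schedule concrete, the following sketch applies them to a one-dimensional toy problem. It is an illustration under stated assumptions: the linear warmup shape, the hyperparameter values, and the toy objective are chosen here for demonstration and are not the settings of Table 2; `warmup_lr` and `adamw_step` are hypothetical helper names.

```python
import math

def warmup_lr(step, base_lr, warmup_steps):
    """Linear warmup (assumed shape): ramp up to base_lr over warmup_steps steps."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    return base_lr

def adamw_step(theta, grad, m, v, t, lr,
               beta1=0.9, beta2=0.999, eps=1e-8, weight_decay=0.01):
    """One AdamW update for a scalar parameter; t is the 1-based step index."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    # Decoupled weight decay: applied to theta directly, not added to the gradient.
    theta = theta - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * theta)
    return theta, m, v

# Toy objective f(theta) = (theta - 3)^2 with gradient 2 * (theta - 3).
theta, m, v = 0.0, 0.0, 0.0
for t in range(1, 2001):
    grad = 2.0 * (theta - 3.0)
    lr = warmup_lr(t - 1, base_lr=0.05, warmup_steps=100)
    theta, m, v = adamw_step(theta, grad, m, v, t, lr)
print(theta)  # settles close to 3; weight decay pulls it slightly toward 0
```

During the first 100 steps the step size is small, so the early, large gradients cannot move the parameter abruptly, which is the stabilizing effect attributed to Warmup above.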

Table 2.

Training hyperparameter table.

When tackling the challenging task of image scene description, the core criterion for evaluating model performance lies in accurately quantifying the disparity between the text descriptions generated by the model and the predefined target texts. The cross-entropy loss function systematically reduces discrepancies between generated descriptions and actual scene descriptions by computing the deviation of probability distributions of the two sequences. It not only considers the accuracy of generated vocabulary but also deeply integrates the appropriateness of word selection at each position in the sequence. Consequently, it comprehensively and meticulously guides model learning. The calculation formula is as follows:

$$
L = -\frac{1}{N}\sum_{i=1}^{N}\frac{1}{C}\sum_{t=1}^{C} p_t \log q_t
$$

where L represents the loss function value; N represents the number of samples; C represents the length of the generated text tokens; $p_t$ denotes the vocabulary distribution of the true labels; $q_t$ represents the predicted vocabulary distribution, which is a probability distribution normalized by the SoftMax function; and $x_t$ is the sequence output by the model. The predicted distribution is calculated as follows:

$$
q_t = \mathrm{SoftMax}\!\left(\frac{x_t}{T}\right), \qquad \mathrm{SoftMax}(z)_k = \frac{\exp(z_k)}{\sum_{j}\exp(z_j)}
$$

where T represents the temperature parameter. During the validation stage, the batch size remains 16, and the input images are preprocessed to the same size as in the training stage. Given the emphasis on output quality in industrial applications, and to balance output diversity with quality, we set the temperature parameter to 0.2 and the Top-p sampling threshold to 0.1. Since the order of samples in the test set does not affect the final predictive performance, the data batches are fed sequentially at this stage.
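The effect of the two decoding settings can be seen in a small sketch. It assumes the common definition of Top-p (nucleus) sampling, in which the smallest set of most probable tokens whose cumulative probability reaches p is kept and renormalized; the function names and the toy logits are hypothetical.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """SoftMax(x / T); subtracting the maximum keeps exp() numerically stable."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the most probable tokens until their cumulative mass reaches top_p."""
    order = sorted(range(len(probs)), key=lambda k: probs[k], reverse=True)
    kept, mass = [], 0.0
    for k in order:
        kept.append(k)
        mass += probs[k]
        if mass >= top_p:
            break
    filtered = [0.0] * len(probs)
    for k in kept:
        filtered[k] = probs[k] / mass  # renormalize over the kept tokens
    return filtered

def sample(logits, temperature=0.2, top_p=0.1, rng=random):
    probs = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

toy_logits = [2.0, 1.0, 0.5, -1.0]
print(softmax_with_temperature(toy_logits, 1.0))  # relatively flat distribution
print(softmax_with_temperature(toy_logits, 0.2))  # mass concentrates on index 0
print(sample(toy_logits))                         # prints: 0
```

With a temperature of 0.2 and Top-p of 0.1, the most probable token alone usually exceeds the threshold, so decoding is close to greedy; this favors consistent output over diversity.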

4.3. Results Analysis

In the research on vision language model prompt enhancement, through a thorough analysis of the experimental results, we found that this technique can significantly improve the performance of the model in joint visual and textual comprehension tasks. Below, we will provide a detailed analysis of these experimental results, while also analyzing the impact of hyperparameters and prompt enhancement designs on the outcomes.

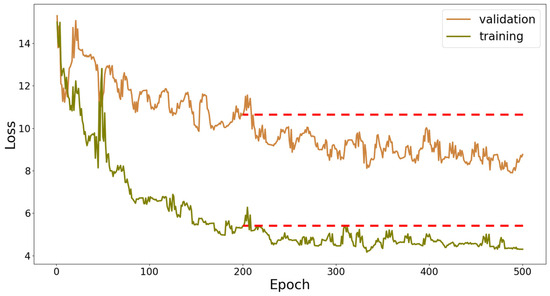

During the training and validation phases, we assessed the convergence of the model from the loss function curve, as illustrated in Figure 6. The model converges rapidly in the early stages of training, with the loss decreasing quickly within the first 220 epochs, at which point the training loss reaches 4.7154. In the subsequent epochs the loss fluctuates but maintains an overall decreasing trend. At the end of training, the training loss has decreased to 4.3169 and the validation loss to 8.1359, indicating that the model has converged to a satisfactory result.

Figure 6.

Loss function curve during training and validation (the red line marks the benchmark result at 220 epochs).

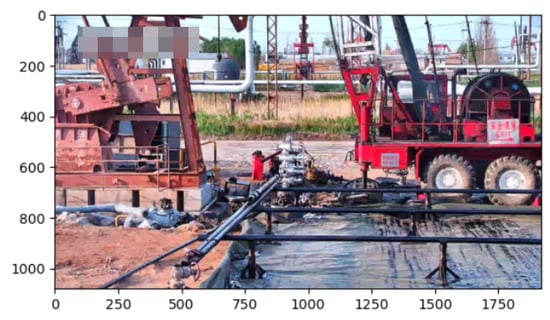

The trained prompt-enhanced model is integrated into Prompt_VL for direct invocation, and its test results after training are shown in Figure 7. The figure shows that the model effectively integrates information from the visual and textual modalities. It accurately identifies the depicted location as the wellhead position in a workover scenario and correctly recognizes the pumping unit, workover rig, and wellhead equipment. It also determines that the scene is in a non-operational state, which matches the actual scenario in the image. These results indicate that the model can describe the workover scenes in images accurately.

Figure 7.

Test Results of the Model’s Description of the Workover Scenario.

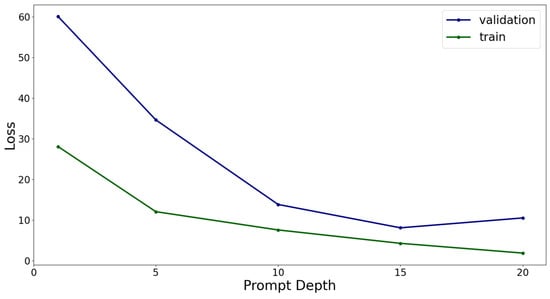

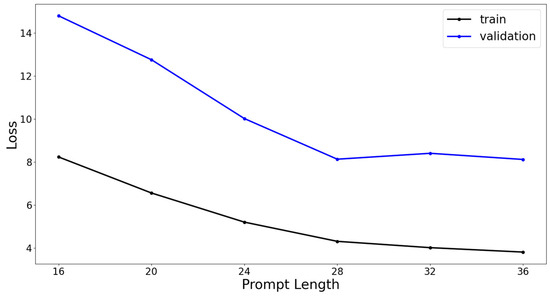

As mentioned in the experimental design, “the depth J of prompt enhancement is set to 15, and the length bv of prompt language branch is set to 28”. Both the depth of prompt enhancement and the length of the vision-language dual-branch prompt have a significant impact on the model’s final expressive capability. Here, we will explore the influence of these hyperparameters on the model’s performance, while keeping the experimental environment and other parameters consistent with those previously established. Generally, the depth of prompt enhancement is positively correlated with performance, but it also brings about more parameters and computational overhead. Given the 48-layer Transformers structure of the vision encoder ViT-G/14 [47] and the 32-layer encoder structure of the language model Qwen-7B, there is considerable flexibility in choosing the depth, as shown in Figure 8. From the graph, it can be observed that, as the depth of the prompt increases, the convergence value of the model’s training loss gradually decreases, indicating that greater depth indeed provides the model with more learning capacity. However, it is worth noting that when the depth of prompt enhancement J is chosen as 20 in the experiment, the validation loss tends to increase. This suggests that the model has begun to exhibit signs of overfitting. Therefore, the final choice for the prompt depth is set to 15 layers.

Figure 8.

The influence curve of prompt depth on the model.

On the other hand, we explored the influence of prompt length on the model, as shown in Figure 9. From the graph, it is evident that the prompt length also has a significant impact on the model’s expressive capability. The training loss function shows a negative correlation with the prompt length, indicating that, as the prompt length increases, the model’s learning capacity significantly improves. However, the validation set loss of the model achieves its optimal value around a prompt length of 28, with marginal improvement observed for prompt lengths beyond this point. Therefore, we choose 28 as the final prompt length based on these results.

Figure 9.

The influence curve of prompt length on the model.

Finally, let us analyze the impact of prompt enhancement on the model’s parameter count. With the effect of the vision-language bimodal coupling function, there is a certain increase in the training parameters due to prompt enhancement. The total number of training parameters can be calculated using the following formula:

where Params represents the total number of training parameters. According to the structure of the Qwen-VL model, its three parts, namely the visual encoder, the large language model, and the position-aware vision-language adapter, contain 9.6 B parameters in total. We analyzed the influence of prompt enhancement on the parameter count and performance of the model. Based on Formula (12), with dl = 32,000 and dv = 588, we calculated Params for different prompt depths J and prompt lengths bv; the results are shown in Table 3 and Table 4. Params grows linearly with J, whereas it remains essentially stable when bv varies within a certain range. When J = 15 and bv = 28, the number of training parameters is 0.293 B, only 3.05% of the total number of parameters (9.6 B) of the Qwen-VL model. This indicates that the prompt enhancement strategy adapts the model efficiently to downstream tasks at a low additional cost (an increase of 3.05% in the number of parameters). Tables 3 and 4 provide a reference for the selection of model parameters.
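As a rough cross-check of the order of magnitude, and not a restatement of Formula (12), the sketch below counts only the weights of the coupling layers. It assumes one bias-free linear layer of size dl × dv per prompt depth; this assumption and the helper name `coupling_params` are introduced here for illustration, and any further terms in Formula (12), such as the prompt tokens themselves, are deliberately left out.

```python
def coupling_params(depth, d_l, d_v, with_bias=False):
    """Weights of `depth` linear coupling layers mapping d_l to d_v dimensions."""
    per_layer = d_l * d_v + (d_v if with_bias else 0)
    return depth * per_layer

n_coupling = coupling_params(depth=15, d_l=32_000, d_v=588)
print(n_coupling)                    # prints: 282240000
print(round(n_coupling / 9.6e9, 4))  # share of the 9.6 B base parameters
```

Under this assumption the coupling layers alone contribute about 0.282 B parameters, which is close to the reported 0.293 B and is independent of bv. This is consistent with the observation that Params grows linearly with J and changes little with the prompt length.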

Table 3.

Model parameters for different prompt depths J at a fixed prompt length bv = 28.

Table 4.

Model parameters for different prompt lengths bv at a fixed prompt depth J = 15.

4.4. Comparative Analysis

To better demonstrate the improved adaptability of the enhanced model to workover scenes, we conducted comparative experiments between the enhanced model and the basic Qwen-VL model. We evaluated the performance of the models in terms of both the accuracy of scene interpretation and the reliability of text generation.

4.4.1. Comparative Analysis of the Accuracy of Scene Interpretation

The focus of this experiment is on accurately identifying various types of equipment and workers at workover sites to ensure the outstanding performance of the recognition system. The system should not only quickly and accurately distinguish key operational equipment, such as drilling rigs, pump trucks, and cranes, but also be sensitive enough to differentiate the identities and activity statuses of operators, safety supervisors, and other on-site workers. Achieving high-precision capture of dynamic elements in complex workover scenes is crucial for enhancing safety management, optimizing workflows, and early detection and prevention of accident risks at construction sites. This lays a solid foundation for the digital transformation and intelligent management of the oil and gas extraction industry. During our research process on the validation set, which included 1243 images with corresponding annotations, we selectively focused on key workover equipment and on-site workers, as shown in Table 5, as the main research subjects, and quantified the performance differences of the vision language model before and after the implementation of prompt enhancement technology. It is worth mentioning that the table lists different names for the same target, and the content mentioned here has been processed for plural vocabulary. The study covered the evaluation of the precise identification and classification capabilities of workover equipment and personnel, revealing how prompt enhancement technology effectively enhances the practicality and robustness of vision language models in complex industrial environments, especially in oil extraction workover scenes. This provides a solid theoretical and practical basis for further advancing the intelligent management technology of oil fields.

Table 5.

Workover scene identification equipment and personnel behavior table.

During the testing phase, the scene assessment of the vision-language model covers 8 targets. The prompt “List the workover targets in the image” is input to the language branch so that the model output matches the task requirements and its recognition performance can be evaluated statistically. The study uses accuracy, precision, and recall as three metrics to assess the model’s scene recognition capability objectively. Accuracy is the proportion of all samples that the model classifies correctly and gives an intuitive view of its overall discrimination ability. Precision is the proportion of samples predicted as positive that are truly positive; a high precision means that the model labels a sample as positive only when it is highly confident, so an increase in precision reflects a better ability to reject negative samples. Recall is the proportion of truly positive samples that the model detects; a higher recall means that fewer positive instances are missed. The calculation formulas are as follows:

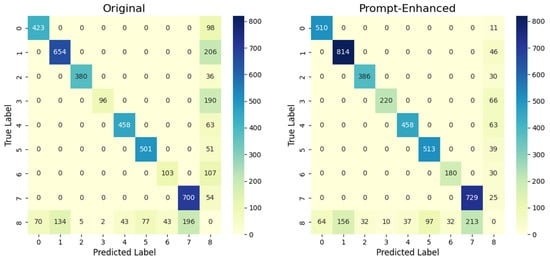

$$
\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}
$$

where TP represents True Positives, FP represents False Positives, FN represents False Negatives, and TN represents True Negatives. Because a large number of images contain multiple target classes, the results are reported as multi-class confusion matrices, illustrated in Figure 10. In the figure, category 8 represents the set of samples in which none of the classes 0 to 7 exists.
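The three metrics can be computed per class from a multi-class confusion matrix by treating that class as positive and all others as negative. The sketch below shows this one-vs-rest reading; it is an assumption about how the per-class counts are obtained, the function names are hypothetical, and the 3 × 3 matrix is made-up toy data rather than a result from Figure 10.

```python
def per_class_counts(cm, k):
    """TP, FP, FN, TN for class k; cm[i][j] = samples of true class i predicted as j."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                 # true class k, predicted as another class
    fp = sum(row[k] for row in cm) - tp  # another class, predicted as k
    tn = total - tp - fn - fp
    return tp, fp, fn, tn

def metrics(tp, fp, fn, tn):
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

# Toy confusion matrix (rows: true class, columns: predicted class).
toy_cm = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]
counts = per_class_counts(toy_cm, 0)
print(counts)            # prints: (8, 2, 2, 18)
print(metrics(*counts))  # accuracy, precision, recall for class 0
```

Averaging these per-class values over all classes gives summary figures of the kind reported in a comparison table; whether the averaging is macro or weighted is a separate choice that the sketch does not make.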

Figure 10.

Comparison of confusion matrix images.

The confusion matrices show that, after prompt enhancement, the model’s recognition accuracy and precision improve markedly for the various target categories, while recall increases slightly, as summarized in Table 6. The Modular building and Pumping equipment categories showed relatively low precision with the original vision language model. The former may be because wellsite equipment is not conventionally understood to include this category; we nevertheless introduced it because a modular building serves as the rest area for construction personnel and is therefore informative for delineating wellsite areas. Pumping equipment, on the other hand, may be overlooked by the model because of its scale, a problem inherent in current Vision Transformer-based vision encoders. Overall, compared with the original model, accuracy improved by 13.21%, precision by 14.94%, and recall by 0.32%. The prompt-enhanced model shows a clear gain in scene judgment capability and can identify workover scene targets.

Table 6.

Comparison of classification indexes.

Furthermore, we calculated the precision and recall for each category before and after prompt enhancement. The results, presented in Table 7, show that most categories improved in both precision and recall. These results further verify that the proposed prompt-enhanced model recognizes targets of different categories better than the original model.

Table 7.

Pre- and post-prompt enhancement precision and recall rates by category.

4.4.2. Comparative Analysis of the Reliability of Text Generation

This experiment analyzes the specific impact of the prompt enhancement strategy on the performance of the vision language model, focusing on the accuracy, naturalness, and contextual coherence of text generation. By providing clear guiding information to the vision language model, prompt enhancement reduces the ambiguity and inaccuracy of the output while maintaining the diversity of predictions. The experiment employs standardized evaluation metrics. The change in perplexity (PPL) of the model’s generated text after prompt enhancement serves as an indirect indicator of language fluency and logical coherence [48]; the calculation formula is as follows:

$$
\mathrm{PPL}(W) = \exp\left(-\frac{1}{N}\sum_{i=1}^{N}\frac{1}{C}\sum_{t=1}^{C} p_t \log q_t\right)
$$

where PPL(W) denotes the perplexity of the generated text W; exp represents the exponential function with base e; N represents the number of samples; C represents the length of the generated text tokens; $p_t$ denotes the vocabulary distribution of the true labels; and $q_t$ represents the predicted vocabulary distribution. Perplexity serves as a reliability metric for language generation models because it reflects the quality and internal logical consistency of generated text. It indicates the uncertainty of the model’s predictions given the data: lower values indicate more accurate predictions and a better grasp of language patterns. In vision language generation tasks, low perplexity indicates that the model can better understand vision inputs and generate natural and coherent text, and it also reflects the model’s progress in capturing the relationship between vision features and language descriptions.
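A short sketch shows how perplexity follows from per-token probabilities. It assumes one-hot true labels, so that the cross-entropy term for each position reduces to the negative log-probability the model assigns to the reference token; tokens are pooled across samples, which matches a per-sample average when all samples have the same length. The function name and the toy numbers are hypothetical.

```python
import math

def perplexity(token_probs_per_sample):
    """Exponential of the mean negative log-probability of the reference tokens.

    token_probs_per_sample: one list per sample, holding the probability the
    model assigned to each reference token of that sample.
    """
    total_nll, total_tokens = 0.0, 0
    for probs in token_probs_per_sample:
        total_nll += -sum(math.log(p) for p in probs)
        total_tokens += len(probs)
    return math.exp(total_nll / total_tokens)

uncertain = [[0.25, 0.25], [0.25, 0.25]]  # model spreads mass over 4 options
confident = [[0.9, 0.8], [0.95, 0.85]]
print(perplexity(uncertain))  # close to 4: like a uniform choice among 4 tokens
print(perplexity(confident))  # close to 1: little uncertainty
```

Perplexity can therefore be read as the effective number of equally likely tokens the model is choosing among at each position, which is why lower values indicate more reliable generation.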

Recall-Oriented Understudy for Gisting Evaluation (ROUGE) is an evaluation metric for assessing the quality of generated text by comparing it with reference text [49]. It focuses on evaluating the recall of the generated text concerning the reference text, which measures the extent to which the generated content covers the information present in the reference text. This is achieved by calculating metrics, such as the longest common subsequence, including the consideration of out-of-vocabulary terms. The formula for calculating ROUGE is as follows:

$$
R_{lcs} = \frac{LCS(X,Y)}{l_X}, \qquad
P_{lcs} = \frac{LCS(X,Y)}{l_Y}, \qquad
F_{lcs} = \frac{(1+\beta^{2})\, R_{lcs}\, P_{lcs}}{R_{lcs} + \beta^{2} P_{lcs}}
$$

where X and Y represent the reference text and the generated text, respectively; $l_X$ and $l_Y$ represent the lengths of the reference text and the generated text, respectively; LCS(X,Y) denotes the length of the longest common subsequence of X and Y; $R_{lcs}$ and $P_{lcs}$ represent recall and precision, respectively; $F_{lcs}$ represents the ROUGE score; and $\beta$ is a hyperparameter representing the balance between recall and precision, which was set to 8 during the experimental process so that recall dominates and no key information is overlooked.
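The ROUGE score based on the longest common subsequence can be sketched in a few lines. The example assumes whitespace tokenization and uses two made-up sentences; the function names are hypothetical, and β = 8 follows the setting stated above.

```python
def lcs_length(x, y):
    """Length of the longest common subsequence (dynamic programming, one row at a time)."""
    prev = [0] * (len(y) + 1)
    for xi in x:
        curr = [0]
        for j, yj in enumerate(y, start=1):
            curr.append(prev[j - 1] + 1 if xi == yj else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]

def rouge_l(reference, candidate, beta=8.0):
    """Return (recall, precision, F) for whitespace-tokenized texts."""
    x, y = reference.split(), candidate.split()
    lcs = lcs_length(x, y)
    if lcs == 0:
        return 0.0, 0.0, 0.0
    recall = lcs / len(x)
    precision = lcs / len(y)
    f_score = (1 + beta ** 2) * recall * precision / (recall + beta ** 2 * precision)
    return recall, precision, f_score

toy_reference = "workers are tripping tubing at the wellhead"
toy_candidate = "workers tripping tubing near the wellhead"
r_lcs, p_lcs, f_lcs = rouge_l(toy_reference, toy_candidate)
print(r_lcs, p_lcs, f_lcs)
```

With β = 8 the F score stays very close to the recall value, so a generated description is rewarded mainly for covering the reference content rather than for being concise.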

Consensus-based Image Description Evaluation (CIDEr) is a crucial evaluation metric for image description tasks, focusing on measuring the similarity and semantic consistency between machine-generated image descriptions and reference descriptions. CIDEr [50] aggregates the co-occurrence of n-grams, weighted by Term Frequency-Inverse Document Frequency (TF-IDF), between model-generated descriptions and multiple reference descriptions into a single score, emphasizing the importance of reaching consensus with multiple reference descriptions. TF-IDF plays a key role in mining keywords in the text and assigning weights to each word to reflect its importance to the document’s topic. TF-IDF consists of two parts: Term Frequency (TF), representing the frequency of a word appearing in a document, usually normalized to prevent bias towards longer documents, and Inverse Document Frequency (IDF), representing the reciprocal of a word’s frequency across the entire corpus, typically logarithmically scaled to prevent bias towards rare words. This approach helps capture the overall quality of descriptions and favors generated content that closely aligns with human consensus, providing a comprehensive indicator of description quality under multiple reference standards. The calculation method for CIDEr is as follows:

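$$
g_k(s_{ij}) = \frac{h_k(s_{ij})}{\sum_{w_l \in \Omega} h_l(s_{ij})}\,
\log\!\left(\frac{|I|}{\sum_{I_p \in I} \min\!\left(1, \sum_{q} h_k(s_{pq})\right)}\right)
$$

$$
CI_n(c_i, S_i) = \frac{1}{m}\sum_{j=1}^{m}
\frac{g^{n}(c_i)\cdot g^{n}(s_{ij})}{\lVert g^{n}(c_i)\rVert\,\lVert g^{n}(s_{ij})\rVert},
\qquad
CI(c_i, S_i) = \sum_{n=1}^{N} \omega_n\, CI_n(c_i, S_i)
$$

where $g_k$ represents the TF-IDF weight of the n-gram $w_k$, and $g^{n}$ is the TF-IDF vector formed by these weights for all n-grams of length n; $I_i$ represents the evaluated image; $\Omega$ represents the vocabulary of n-grams; I represents the set of images in the dataset; $S_i = [s_{i1},\dots,s_{im}]$ represents the image descriptions for $I_i$, where $s_{ij}$ is one of its reference sentences; $h_k(s_{ij})$ denotes the frequency of occurrence of the phrase $w_k$ in the reference sentence $s_{ij}$; min() denotes the minimum value; CI represents the CIDEr metric result; $c_i$ represents the candidate sentence; N denotes the highest order of n-grams used; $\omega_n$ represents the weight of n-grams of length n, typically set to 1/N; m represents the number of reference sentences; and $\lVert g^{n}\rVert$ denotes the magnitude of the vector $g^{n}$.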
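The computation can be illustrated with a compact sketch of the plain CIDEr form given by the definitions above. It is a simplification under stated assumptions: whitespace tokenization, no stemming, no length penalty or score scaling as used in some CIDEr variants, and n-grams that never occur in any reference are given a document frequency of 1 to keep the logarithm defined. The function names and the three toy reference sets are hypothetical.

```python
import math
from collections import Counter

def ngrams(sentence, n):
    tokens = sentence.lower().split()
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def document_frequency(all_refs, n):
    """For each n-gram, the number of images whose references contain it."""
    df = Counter()
    for refs in all_refs:
        seen = set()
        for ref in refs:
            seen.update(ngrams(ref, n))
        df.update(seen)
    return df

def tfidf(sentence, n, df, num_images):
    counts = ngrams(sentence, n)
    total = sum(counts.values())
    return {
        gram: (h / total) * math.log(num_images / max(1, df.get(gram, 0)))
        for gram, h in counts.items()
    }

def cosine(a, b):
    dot = sum(w * b.get(g, 0.0) for g, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def cider(candidate, refs, all_refs, max_n=4):
    """Average TF-IDF cosine similarity to the references, averaged over n = 1..max_n."""
    score = 0.0
    for n in range(1, max_n + 1):
        df = document_frequency(all_refs, n)
        g_cand = tfidf(candidate, n, df, len(all_refs))
        sims = [cosine(g_cand, tfidf(r, n, df, len(all_refs))) for r in refs]
        score += (1.0 / max_n) * sum(sims) / len(sims)
    return score

# Toy corpus: one reference sentence per image.
toy_refs = [
    ["a workover rig stands at the wellsite"],
    ["workers are tripping tubing at the wellhead"],
    ["a pumping unit is next to the tubing"],
]
print(cider("a workover rig stands at the wellsite", toy_refs[0], toy_refs))
print(cider("blue sky over the sea", toy_refs[0], toy_refs))  # prints: 0.0
```

Words that appear in the references of every image, such as "the" in the toy corpus, receive an IDF of zero and do not contribute, so the score is driven by n-grams that are specific to the scene being described.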

Comparative analysis of model reliability using perplexity as a metric not only allows for the evaluation of prediction efficiency from a statistical perspective but also provides insights into the model’s generalization ability, internal mechanisms, and adaptability to complex tasks. ROUGE evaluates the quality by comparing the overlap between generated content and reference text, providing a multidimensional, practical, and interpretable assessment that effectively reflects the content overlap between generated and reference texts. CIDEr, stemming from statistical consensus, emphasizes the contextual consistency and consensus information between generated content and multiple reference descriptions, capturing contextual relevance in descriptions. However, this metric exhibits poorer adaptability for evaluating longer sentences. Therefore, we utilized the image’s overall description sentence, as mentioned earlier, as the reference text for CIDEr. Additionally, we guided text generation with the prompt “Describe the image in one sentence” for the vision language model’s textual input. These three metrics were employed to assess the generated text of the vision language model before and after prompt enhancement, as shown in Table 8.

Table 8.

Comparison of Scene Description Indexes.

The results from the table indicate that the perplexity of the vision-language model decreased from 3414.88 to 74.96 after prompt enhancement, suggesting a reduction in uncertainty when handling workover scene description tasks. The model’s predictive capability improved, leading to more precise text generation. Moreover, the ROUGE metric increased from 0.217 to 0.379, indicating that the generated scene descriptions are closer to the original information content, enhancing information coverage. Additionally, the generated text exhibits closer adherence to the sentence structure and grammatical features of the reference text, demonstrating improved quality or information recall capability. Lastly, the model’s CIDEr score increased from 0.038 to 3.324. The original model’s performance in scene description was disappointing, as it failed to meet application requirements due to a lack of domain-specific language expression capabilities. However, the improvement indicates a significant enhancement in performance in tasks such as image description or related text generation, achieving a high degree of consistency with human evaluation standards in image description tasks. In summary, the prompt-enhanced vision-language model demonstrates the capability to provide language descriptions for workover scenes.

To better demonstrate the impact of prompt enhancement on model performance, we present text generation results for a test scenario, as shown in Figure 11. The scenario is described as follows: This image illustrates the process of Well Overhaul scene during workover operations. In the lower left corner of the image is the workover rig, and the derrick equipment with a platform for workover is in the middle, along with lifting equipment and vertical tubing. A large workover rig and equipment stand in an outdoor setting. The result of Qwen-VL is as follows: The picture depicts a bustling scene at a large workover platform against a backdrop of a clear blue sky. The platform, composed of red and yellow structures, features a prominent crane arm, numerous cables and pipelines, indicating its industrial nature. On the platform, workers dressed in safety gear are engaged in workover operations, while several trucks are parked nearby, likely used for transporting equipment or materials. A large red and yellow oil rig is being used to extract crude from a well. The result of Prompt_VL is as follows: In a clear sky, a large workover rig stands prominently in an open area, surrounded by several trees. The rig features a tall tower with a red and white platform near the top, and below it, multiple pieces of machinery and equipment are arranged, including a derrick equipment and pipelines. The scene portrays a hectic yet organized atmosphere at the workover platform. The baseline model gives a partly usable description of the image, focusing on the workover platform and correctly identifying the pipes. However, it also hallucinates the presence of “workers” and “several trucks”. In contrast, the prompt-enhanced model eliminates these hallucinations and correctly identifies the large-scale workover rig, derrick, and pipes.

Figure 11.

Test scenario of a well workover operation.

5. Discussion

The findings presented in this study shed light on the efficacy of prompt enhancement in enhancing the performance of vision-language models for workover scene description tasks. Through extensive experimentation and analysis, several key observations and implications emerge, warranting further discussion and exploration.

Effectiveness of prompt enhancement. The results demonstrate a notable improvement in the model’s ability to accurately recognize and describe workover scenes after prompt enhancement. This enhancement spans various aspects, including scene recognition accuracy, language generation quality, information coverage, and logical coherence. By providing explicit guidance to the model during the generation process, prompt enhancement enables clearer instructions or cues, thereby aiding the model in focusing on relevant visual features and generating more precise and contextually appropriate descriptions. Additionally, prompt enhancement ensures that the generated descriptions encapsulate essential details and nuances present in the visual input, thereby enhancing information coverage. Furthermore, it promotes logical coherence in the generated descriptions by guiding the model to produce outputs that are contextually consistent and logically connected to the visual input. In essence, the effectiveness of prompt enhancement lies in its ability to provide explicit guidance, enhance information coverage, and promote logical coherence, thus facilitating a more effective integration of visual and textual information and resulting in the observed improvements in model performance.

Transferability to other domains. Beyond workover scene description, the study suggests that prompt enhancement techniques could transfer to other domains within the oil and gas industry. By leveraging multimodal data and similar augmentation strategies, vision-language models could tackle a range of downstream tasks, such as oil and gas production [51] and reservoir engineering [52]. Whether such transfer succeeds depends on three factors: data availability, task complexity, and model generalization. First, transfer is feasible only if multimodal data relevant to the target domain are available; access to datasets containing both visual and textual information increases the likelihood of successful adaptation. Second, the complexity of the downstream task influences the applicability of prompt enhancement, and more complex tasks may require additional fine-tuning or customized augmentation strategies. Finally, the generalization ability of the base model is crucial. Assessing how well the model performs across different domains and tasks provides insight into its robustness and adaptability, and a base model that generalizes well is better able to exploit prompt enhancement. Examining these factors together clarifies the potential challenges and benefits of applying prompt enhancement in diverse contexts within the oil and gas industry, and can inform future research and practical implementations.

Limitations and future directions. Despite the promising results, this study has several limitations that future research should address. For instance, the current architecture’s reliance on global self-attention mechanisms may limit its robustness to certain image transformations and to small-scale features [53]. To address this, future research could explore hybrid architectures that integrate convolutional neural networks for enhanced feature extraction. Further work is also needed to increase data diversity and to improve the model’s ability to handle more complex scenes.

6. Conclusions

In this work, we propose a method for well workover scene description based on cross-modal coupled prompt-enhanced vision language models, aimed at intelligent analysis and scene recognition at the well site. The method outperforms baseline large models in both scene judgment and description generation, indicating a significant improvement in domain adaptation to well workover scenes. We use Qwen-VL as the base model, leveraging the general knowledge it acquired during pre-training on 1.4 billion image-text pairs. When constructing prompt vectors for the vision and language branches, we ensure deep knowledge fusion between the two by mapping the prompts from the text branch to the vision branch through a coupling function. We conduct comparative experiments on scene target recognition and on validation of the generated text. The model achieves an accuracy of 0.8002 and a precision of 0.9248 in target recognition, effectively addressing the issue of objective judgment in scene description. It also improves the accuracy, reliability, and logicality of text generation, with a perplexity of 74.96, a ROUGE score of 0.379, and a CIDEr score of 3.324. Detailed experiments and analysis show that freezing the parameters of the large-scale vision language model and adding only bimodal prompts, which account for 3.05% of the total parameters, can effectively guide the model to adapt to downstream knowledge in industrial scenes. The results demonstrate that large-scale multimodal models can adapt to different domains with a modest amount of professional domain data and minimal computational cost, providing insights for the future development of large-scale models in the oil field domain.
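
To make the coupling idea concrete, the sketch below maps text-branch prompt vectors to vision-branch prompt vectors through a linear projection and computes the share of trainable parameters when the base model is frozen. It is an illustration under stated assumptions rather than the implementation used in this study: the coupling function is assumed to be a single linear map, all dimensions and the frozen-parameter count are small hypothetical values, and the names `make_linear`, `couple`, and `trainable_fraction` are invented for this example.

```python
import random


def make_linear(d_in, d_out, seed=0):
    """Create a small random weight matrix (d_out x d_in) and a zero bias."""
    rng = random.Random(seed)
    weight = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(d_out)]
    bias = [0.0] * d_out
    return weight, bias


def couple(text_prompts, weight, bias):
    """Map each text prompt (length d_in) to a vision prompt (length d_out).

    Assumed form of the coupling function: vision_prompt = W @ text_prompt + b.
    """
    return [
        [sum(w * x for w, x in zip(row, prompt)) + b for row, b in zip(weight, bias)]
        for prompt in text_prompts
    ]


def trainable_fraction(n_trainable, n_frozen):
    """Share of all parameters that receive gradient updates."""
    return n_trainable / (n_trainable + n_frozen)


if __name__ == "__main__":
    # Hypothetical sizes, far smaller than any real vision language model.
    n_prompts, d_text, d_vision = 4, 8, 6
    rng = random.Random(1)
    text_prompts = [[rng.gauss(0.0, 0.02) for _ in range(d_text)]
                    for _ in range(n_prompts)]

    weight, bias = make_linear(d_text, d_vision)
    vision_prompts = couple(text_prompts, weight, bias)
    print(len(vision_prompts), len(vision_prompts[0]))

    # Trainable: the text prompts plus the coupling weights and bias.
    n_trainable = n_prompts * d_text + d_vision * d_text + d_vision
    n_frozen = 10_000  # placeholder for the frozen base-model parameters
    print(f"{trainable_fraction(n_trainable, n_frozen):.4%}")
```

Because the vision prompts are derived from the text prompts instead of being learned independently, gradients from the vision branch flow back into the shared text prompts, which is one way to tie the two modalities together while the base model stays frozen.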

Author Contributions

Conceptualization, X.L.; Methodology, X.L.; Software, R.Z.; Validation, X.L.; Formal analysis, X.L. and C.W.; Resources, L.Z.; Data curation, X.L.; Writing—original draft, X.L.; Writing—review & editing, X.L., Z.S., J.W. and W.L.; Visualization, Z.S. and R.Z.; Supervision, L.Z.; Project administration, L.Z.; Funding acquisition, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under Grants 52325402, 52441411, and 52274057; the National Science and Technology Major Project under Grant 2024ZD1004300; the National Key R&D Program of China under Grant 2023YFB4104200; and the 111 Project under Grant B08028.

Data Availability Statement

The datasets presented in this article are not readily available because of funding requirements. Requests to access the datasets should be directed to xingyuliu23@163.com.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Deshmukh, S.; Elizalde, B.; Singh, R.; Wang, H. Pengi: An audio language model for audio tasks. Adv. Neural Inf. Process. Syst. 2023, 36, 18090–18108.

- Wu, C.; Lin, W.; Zhang, X.; Zhang, Y.; Xie, W.; Wang, Y. PMC-LLaMA: Toward building open-source language models for medicine. J. Am. Med. Inform. Assoc. 2024, 31, 1833–1843.

- Tang, B.-J.; Ji, C.-J.; Zheng, Y.-X.; Liu, K.-N.; Ma, Y.-F.; Chen, J.-Y. Risk assessment of oil and gas investment environment in countries along the Belt and Road Initiative. Pet. Sci. 2024, 21, 1429–1443.

- Abijith, P.; Patidar, P.; Nair, G.; Pandya, R. Large language models trained on equipment maintenance text. In Proceedings of the Abu Dhabi International Petroleum Exhibition and Conference, Abu Dhabi, United Arab Emirates, 2–5 October 2023; p. D021S065R003.

- Alquraini, A.; Al-Kadem, M.; Al Bori, M.; Bukhamseen, I. Optimizing ESP Well Analysis Using Natural Language Processing and Machine Learning Techniques. In Proceedings of the SPE Annual Technical Conference and Exhibition, San Antonio, TX, USA, 16–18 October 2023; p. D031S032R006.

- Mohammadpoor, M.; Torabi, F. Big Data analytics in oil and gas industry: An emerging trend. Petroleum 2020, 6, 321–328.

- Enyekwe, A.; Urubusi, O.; Yekini, R.; Azoom, I. Impact of Data Quality on Well Operations: Case Study of Workover Operations. In Proceedings of the SPE Nigeria Annual International Conference and Exhibition, Lagos, Nigeria, 2–4 August 2016; p. SPE-184300-MS.

- Cheng, Z.; Wan, H.; Niu, H.; Qu, X.; Ding, X.; Peng, H.; Zheng, Y. China offshore intelligent oilfield production monitoring system: Design and technical path forward for implementation. In Proceedings of the Abu Dhabi International Petroleum Exhibition and Conference, Abu Dhabi, United Arab Emirates, 2–5 October 2023; p. D021S048R005.

- Sayed, R.A.F.; Hashim, S.M.; Shahin, E.S.; Abd-Elmaksoud, M.H. Effective Use of Real-Time Remote Monitoring System in Offshore Oil Wells Optimization, Case Study from Gulf of Suez-July Field. In Proceedings of the Offshore Technology Conference, Houston, TX, USA, 4–7 May 2020; p. D031S032R001.

- Wang, X.; Zhu, J.; Zhang, W.; Zeng, Q.; Zhang, Z.; Tan, Y. Enhancing Oilfield Management: The Digital Wellsite Advantages. In Proceedings of the Abu Dhabi International Petroleum Exhibition and Conference, Abu Dhabi, United Arab Emirates, 2–5 October 2023; p. D011S020R006.

- Lai, W.; Zhang, H.; Jiang, D.; Wang, Y.; Wang, R.; Zhu, J.; Chen, Q.; Gao, Y.; Li, W.; Xie, D. Digital twin and big data technologies benefit oilfield management. In Proceedings of the Abu Dhabi International Petroleum Exhibition and Conference, Abu Dhabi, United Arab Emirates, 31 October–3 November 2022; p. D031S079R002.

- Epelle, E.I.; Gerogiorgis, D.I. A review of technological advances and open challenges for oil and gas drilling systems engineering. AIChE J. 2020, 66, e16842.

- Kiran, R.; Salehi, S.; Teodoriu, C. Implementing human factors in oil and gas drilling and completion operations: Enhancing culture of process safety. In Proceedings of the International Conference on Offshore Mechanics and Arctic Engineering, Madrid, Spain, 17–21 June 2018; American Society of Mechanical Engineers: New York, NY, USA, 2018; p. V008T11A022.

- Li, Y.; Zhang, Y.; Wang, C.; Zhong, Z.; Chen, Y.; Chu, R.; Liu, S.; Jia, J. Mini-Gemini: Mining the potential of multi-modality vision language models. arXiv 2024, arXiv:2403.18814.

- Bai, J.; Bai, S.; Yang, S.; Wang, S.; Tan, S.; Wang, P.; Lin, J.; Zhou, C.; Zhou, J. Qwen-VL: A frontier large vision-language model with versatile abilities. arXiv 2023, arXiv:2308.12966.

- Qun, L.; Yiliang, L.; Tao, L.; Hui, L.; Baoshan, G.; Guoqiang, B.; Jialu, W.; Dingwei, W.; Huang, S.; Weiye, H. Technical status and development direction of workover operation of PetroChina. Pet. Explor. Dev. 2020, 47, 161–170.

- Ballinas, J. Evaluation and Control of Drilling, Completion and Workover Events with Permanent Downhole Monitoring: Applications to Maximize Production and Optimize Reservoir Management. In Proceedings of the SPE International Oil Conference and Exhibition in Mexico, Villahermosa, Mexico, 10–12 February 2002; p. SPE-74395-MS.

- Mansour, H.; Munir Ahmad, M.; Dhafr, N.; Ahmed, H. Evaluation of operational performance of workover rigs activities in oilfields. Int. J. Product. Perform. Manag. 2013, 62, 204–218.

- Nace, C.L.; Miller, J.H.; Mercer, A.M. Optimizing Workover Rig Drillout Operations Through Data Acquisition and Analytics. In Proceedings of the SPE/ICoTA Well Intervention Conference and Exhibition, The Woodlands, TX, USA, 22–23 March 2022; p. D021S012R004.

- Payette, G.; Spivey, B.; Wang, L.; Bailey, J.; Sanderson, D.; Kong, R.; Pawson, M.; Eddy, A. A real-time well-site based surveillance and optimization platform for drilling: Technology, basic workflows and field results. In Proceedings of the SPE/IADC Drilling Conference and Exhibition, The Hague, The Netherlands, 14–16 March 2017; p. D011S002R001.

- Xu, Y.-Q.; Liu, K.; He, B.-L.; Pinyaeva, T.; Li, B.-S.; Wang, Y.-C.; Nie, J.-J.; Yang, L.; Li, F.-X. Risk pre-assessment method for regional drilling engineering based on deep learning and multi-source data. Pet. Sci. 2023, 20, 3654–3672.

- Xia, W.-H.; Zhao, Z.-X.; Li, C.-X.; Li, G.; Li, Y.-J.; Ding, X.; Chen, X.-D. Intelligent risk identification of gas drilling based on nonlinear classification network. Pet. Sci. 2023, 20, 3074–3084.

- He, Y.-P.; Zang, C.-Z.; Zeng, P.; Wang, M.-X.; Dong, Q.-W.; Wan, G.-X.; Dong, X.-T. Few-shot working condition recognition of a sucker-rod pumping system based on a 4-dimensional time-frequency signature and meta-learning convolutional shrinkage neural network. Pet. Sci. 2023, 20, 1142–1154.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1–9.

- Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and tell: A neural image caption generator. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–15 June 2015; pp. 3156–3164.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.-J.; Shamma, D.A. Visual genome: Connecting language and vision using crowdsourced dense image annotations. Int. J. Comput. Vis. 2017, 123, 32–73.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; PMLR: Birmingham, UK, 2021; pp. 8748–8763.

- Jia, C.; Yang, Y.; Xia, Y.; Chen, Y.-T.; Parekh, Z.; Pham, H.; Le, Q.; Sung, Y.-H.; Li, Z.; Duerig, T. Scaling up visual and vision-language representation learning with noisy text supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; PMLR: Birmingham, UK, 2021; pp. 4904–4916.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the NAACL-HLT, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.