Abstract

In this paper, a two-player nonzero-sum stochastic differential game is studied in which both players use switching controls. A verification theorem associated with a set of variational inequalities is established as a sufficient criterion for Nash equilibrium, in which the equilibrium switching strategies for the two players, indicating when and where it is optimal to switch, are characterized in terms of the so-called switching regions and continuation regions. The verification theorem is proved in a piecewise way along the sequence of total decision times of the two players. Detailed explanations are also provided of why each condition is imposed in the verification theorem.

Keywords:

stochastic differential game; switching control; verification theorem; variational inequality; sufficient condition

MSC:

91A05; 91A15; 91A23

1. Introduction

A differential game concerns multiple players who make decisions in the context of a dynamic system, each according to its own advantage and in trade-off with its peers. More precisely, suppose that there are N players acting on a dynamic system through their decisions $u_1, \ldots, u_N$, and each of them has an individual cost functional $J_i(u_1, \ldots, u_N)$, $i = 1, \ldots, N$. The goal is to find a Nash equilibrium $(u_1^*, \ldots, u_N^*)$ for the N players, which satisfies $J_i(u_1^*, \ldots, u_N^*) \le J_i(u_1^*, \ldots, u_{i-1}^*, u_i, u_{i+1}^*, \ldots, u_N^*)$ for $i = 1, \ldots, N$, where $u_i$ is an arbitrary decision for Player i. This property means that when the N players act with $(u_1^*, \ldots, u_N^*)$, it is advantageous for each of them to maintain its decision: if one of them unilaterally changes its decision while the others maintain theirs, it is penalized. The problem described above is called a nonzero-sum differential game. In this paper, we assume $N = 2$ and focus our attention on the two-player case. In particular, if the dynamic system is given by a stochastic differential equation, then the game is called a nonzero-sum stochastic differential game, and there is much literature on this subject; see Hamadène et al. [1], Hamadène [2,3], Wu [4], and Sun and Yong [5]. On the other hand, the theory of optimal control and differential games has recently been found to be very useful in the field of human–machine interaction systems; see [6,7,8].

Optimal switching is used to determine a sequence of stopping times at which to shift the mode of the control process to another. When there are more than two modes, one needs to decide not only when to switch, but also where to switch. As an important branch of control theory, optimal switching has been extensively investigated by means of variational inequalities (see Yong [9,10], Tang and Yong [11], Pham [12], and Song et al. [13]) or backward stochastic differential equations (see Hamadène and Jeanblanc [14], El Asri and Hamadène [15], Hamadène and Zhang [16], Hu and Tang [17], and El Asri [18,19]). In addition, zero-sum switching game problems have been discussed by Tang and Hou [20], Hamadène et al. [21], and El Asri and Mazid [22]; however, nonzero-sum switching game problems have not yet been investigated. Apart from the mathematical interest in its own right, optimal switching enjoys a wide range of applications, such as resource extraction (Brekke and Øksendal [23]), investment decisions (Duckworth and Zervos [24]), and electricity production (Carmona and Ludkovski [25]).

In this paper, we consider, for the first time in the literature, a two-player nonzero-sum stochastic differential game with both players using switching controls. The main contribution of this paper is to establish a verification theorem (see Theorem 1) as a sufficient criterion for Nash equilibrium between the two players, in which a set of variational inequalities is given and a regularity condition is imposed to require, taking player 1 for example, the solution to be on the opponent’s continuation region , and to be on except for the boundary of its own continuation region . It turns out that the Nash equilibrium strategies for the two players can be constructed based on these variational inequalities, and the solutions and coincide with the corresponding value functions (or, Nash equilibrium payoffs in the terminology of Buckdahn et al. [26]) of the two players.

On the one hand, it is emphasized that, if we only want to prove the verification theorem, then the regularity condition given is stricter than what we actually need; however, the seemingly superfluous regularity condition is really necessary if we want to further apply the so-called smooth-fit principle to solve specific examples, since otherwise we cannot find enough pasting equations for the undetermined parameters. On the other hand, we would like to mention that in this paper, the verification theorem is proved in a piecewise or stage-by-stage way along the sequence of total decision times of the two players (here, a stage means a period between two adjacent decision times), which, to the best of our knowledge, is new in the nonzero-sum switching game literature.

2. Problem Formulation

Let $\mathbb{R}$ be the one-dimensional real space. For a subset $D \subseteq \mathbb{R}$, $C(D)$, $C^1(D)$, and $C^2(D)$ denote the spaces of all real-valued continuous, continuously differentiable, and twice continuously differentiable functions on D, respectively. Let $(\Omega, \mathcal{F}, \mathbb{P})$ be a probability space on which a one-dimensional standard Brownian motion $W_t$, $t \ge 0$, is defined. $\{\mathcal{F}_t\}_{t \ge 0}$ denotes the natural filtration of $W$ augmented by all the null sets.

Let the two players in the game be labeled Player 1 and Player 2. In order to formulate the problem precisely, we first provide the definition of admissible switching controls for the two players.

Definition 1.

Let and be two finite sets of possible modes for Player 1 and Player 2, respectively. An admissible switching control for Player 1 is a sequence of pairs , where is a sequence of stopping times with and as , representing the decisions on “when to switch,” and is a sequence of -valued random variables with each being -measurable, representing the decisions on “where to switch.” The collection of all admissible switching controls for Player 1 is denoted by .

An admissible switching control for Player 2 is defined similarly. The collection of all admissible switching controls for Player 2 is denoted as .
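As an illustration of Definition 1, one way to write an admissible switching control for Player 1 and the mode process it induces is sketched below. The symbols $I$ (mode set of Player 1), $\alpha$, $\tau_m$, $\xi_m$, $\alpha_t$, the initial mode $i$, and the indexing from $m = 1$ are labels assumed here for illustration; they are not taken from the source.

```latex
\begin{gather*}
\alpha = \{(\tau_m, \xi_m)\}_{m \ge 1}, \qquad
\tau_m \le \tau_{m+1}, \qquad
\tau_m \to \infty \ \text{a.s. as } m \to \infty, \qquad
\xi_m \in I \ \text{is } \mathcal{F}_{\tau_m}\text{-measurable}, \\
\alpha_t = i\,\mathbf{1}_{[0,\tau_1)}(t)
  + \sum_{m \ge 1} \xi_m\,\mathbf{1}_{[\tau_m,\tau_{m+1})}(t), \qquad t \ge 0 .
\end{gather*}
```

Under this reading, $\alpha_t$ is the mode in force for Player 1 at time $t$: it starts at $i$ and jumps to $\xi_m$ at the decision time $\tau_m$. The requirement $\tau_m \to \infty$ rules out infinitely many switches in finite time.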

Remark 1.

Here, an example from electricity production management is provided to illustrate the meaning of “possible modes for players” in Definition 1. Typically, a power plant has multiple modes, such as operating at full capacity, operating at partial capacity, or even shutting down all generators. Suppose that two managers run the power plant by switching its mode from one to another, and that a fixed cost is associated with each switch. Both managers make decisions to maximize their payoffs and eventually reach a Nash equilibrium. In this example, the multiple modes of the power plant that can be chosen by the two managers are the so-called “possible modes for players”.

The state process is described by the following:

where b and $\sigma$ are two given functions satisfying the usual Lipschitz condition, so that (1) admits a unique strong solution. Note that b and $\sigma$, as well as f and the switching-cost and gain functions appearing in the payoff functionals below, are deterministic, because this paper adopts the verification theorem approach, which is associated with a set of ordinary differential equations in the form of variational inequalities.
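For concreteness, a switched diffusion consistent with the description above could be written as follows. This is only a sketch under assumed notation: $X$ for the state, $x$ for the initial state, $W$ for the Brownian motion, and $\alpha_t$, $\beta_t$ for the modes in force at time $t$ for Player 1 and Player 2 are labels introduced here, and the dependence of the coefficients on both modes is an assumption.

```latex
\begin{equation*}
\begin{cases}
dX_t = b(X_t, \alpha_t, \beta_t)\,dt + \sigma(X_t, \alpha_t, \beta_t)\,dW_t, & t \ge 0, \\
X_0 = x .
\end{cases}
\end{equation*}
```

In this form the controls act only through the modes: a switch changes the drift and diffusion coefficients from that time on, while the state path itself remains continuous.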

The payoff functionals for Player 1 and Player 2 to maximize are given, respectively, by

and

where f is a given function, and are the switching costs for Player 1 and Player 2, respectively, and are the corresponding gains for Player 1 and Player 2 due to the opponent’s actions, respectively, and is the discount factor. It is emphasized that no specific conditions are imposed here on f or on the switching-cost and gain functions; all of the conditions we need are listed in the verification theorem.
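The structure of such a payoff (discounted running payoff, minus the player's own switching costs, plus gains from the opponent's switches) can be explored numerically. The following is a minimal sketch, not the authors' method: it uses an Euler–Maruyama discretization, truncates the infinite horizon at `horizon`, and polls Player 1 before Player 2 at each step. All names (`payoff_player1`, `policy1`, `policy2`, `f1`, `c1`, `g1`, `r`) and the feedback form of the policies are assumptions made for illustration.

```python
import math
import random


def payoff_player1(x0, i0, j0, policy1, policy2, b, sigma, f1, c1, g1,
                   r=0.1, dt=0.01, horizon=50.0, rng=None):
    """Simulate one Euler-Maruyama path and return Player 1's discounted
    payoff, truncated at `horizon` (an approximation of the infinite horizon)."""
    rng = rng if rng is not None else random.Random(0)
    x, i, j, total = x0, i0, j0, 0.0
    for n in range(int(round(horizon / dt))):
        disc = math.exp(-r * n * dt)
        # Assumed convention: Player 1 is polled first, then Player 2.
        k = policy1(x, i, j)
        if k != i:                       # Player 1 switches i -> k and pays a cost
            total -= disc * c1(i, k)
            i = k
        l = policy2(x, i, j)
        if l != j:                       # Player 2 switches j -> l; Player 1 gains
            total += disc * g1(j, l)
            j = l
        total += disc * f1(x, i, j) * dt                 # running payoff
        noise = math.sqrt(dt) * rng.gauss(0.0, 1.0)      # Brownian increment
        x += b(x, i, j) * dt + sigma(x, i, j) * noise
    return total


def monte_carlo_payoff(n_paths, seed=0, **kwargs):
    """Average `payoff_player1` over independent simulated paths."""
    rng = random.Random(seed)
    return sum(payoff_player1(rng=rng, **kwargs) for _ in range(n_paths)) / n_paths


if __name__ == "__main__":
    # Hypothetical two-mode example: Player 1 uses a threshold rule,
    # Player 2 never switches.
    estimate = monte_carlo_payoff(
        100, seed=1, x0=0.0, i0=0, j0=0,
        policy1=lambda x, i, j: 1 if x < -1.0 else (0 if x > 1.0 else i),
        policy2=lambda x, i, j: j,
        b=lambda x, i, j: (0.5 if i == 1 else -0.5) - 0.2 * x,
        sigma=lambda x, i, j: 0.3,
        f1=lambda x, i, j: -x * x,
        c1=lambda i, k: 0.1,
        g1=lambda j, l: 0.0,
        horizon=10.0,
    )
    print("estimated payoff for Player 1:", estimate)
```

The threshold policy in the usage example mimics a strategy described by switching and continuation regions: Player 1 does nothing while the state stays in an interval and switches when it leaves. Comparing the estimate across different thresholds gives a rough numerical check of whether a unilateral deviation pays off.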

The objective is to find a Nash equilibrium , i.e.,

If such a Nash equilibrium exists, then we denote

as the corresponding value function. Note that are not uniquely defined, but depend on the Nash equilibrium under consideration.
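Since both payoff functionals are maximized, the Nash equilibrium property and the associated value functions can be spelled out as in the sketch below. The notation is assumed for illustration: $J_1$, $J_2$ for the payoff functionals, $(x, i, j)$ for the initial state and initial modes, $(\alpha^*, \beta^*)$ for the equilibrium pair, and $V_1^{ij}$, $V_2^{ij}$ for the value functions.

```latex
\begin{align*}
J_1(x, i, j; \alpha^*, \beta^*) &\ge J_1(x, i, j; \alpha, \beta^*)
  && \text{for every admissible } \alpha, \\
J_2(x, i, j; \alpha^*, \beta^*) &\ge J_2(x, i, j; \alpha^*, \beta)
  && \text{for every admissible } \beta, \\
V_p^{ij}(x) &= J_p(x, i, j; \alpha^*, \beta^*), && p = 1, 2 .
\end{align*}
```

Each inequality varies only one player's control while the opponent's is frozen at its equilibrium value, which is why different equilibria can yield different value functions.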

Remark 2.

In this paper, we assume the Brownian motion and the state process to be one-dimensional just for simplicity of presentation. There is no essential difficulty in generalizing the results to the multi-dimensional case, although the notation becomes more complex.

3. Verification Theorem

In this section, we establish a verification theorem as a sufficient criterion that can be used to obtain a Nash equilibrium.

Let

and

Then, let

and

Remark 3.

The definitions of and have an immediate explanation: If Player 1 (respectively, Player 2) makes a switching from i to k (respectively, j to l), then the present Nash equilibrium payoff can be written as (respectively, ); we have considered the payoff in the present mode of the switching control and the switching cost. The maximum point of (respectively, ) is actually the best new mode that Player 1 (respectively, Player 2) would choose in case it wants to switch; otherwise, it would be in its interest to deviate by the definition of the Nash equilibrium.

Similarly, (respectively, ) represents the payoff for Player 1 (respectively, Player 2) when Player 2 (respectively, Player 1) takes the best switching action and behaves optimally afterward.
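The two operators explained in Remark 3 can be illustrated at a single state point with small lookup tables. The sketch below assumes the forms $M_1 V_1^{ij} = \max_{k \ne i}\{V_1^{kj} - c_1(i,k)\}$ for Player 1's best own switch and $H_1 V_1^{ij} = V_1^{i l^*} + g_1(j, l^*)$, where $l^*$ is Player 2's best new mode; these formulas, the function names, and the table layout `V[i][j]` are assumptions made for illustration rather than the source's definitions.

```python
def best_switch_p1(V1, c1, i, j):
    """Return (value, k*): the best payoff Player 1 can get by switching
    from mode i to some k != i (net of its switching cost), and that mode."""
    candidates = [(V1[k][j] - c1[i][k], k) for k in range(len(V1)) if k != i]
    return max(candidates)


def best_switch_p2(V2, c2, i, j):
    """Return (value, l*): the analogous quantity for Player 2 switching j -> l."""
    candidates = [(V2[i][l] - c2[j][l], l) for l in range(len(V2[i])) if l != j]
    return max(candidates)


def payoff_after_opponent_switch_p1(V1, V2, c2, g1, i, j):
    """Player 1's payoff when Player 2 makes its best switch j -> l*:
    Player 1's value in the new mode pair plus the gain it receives."""
    _, l_star = best_switch_p2(V2, c2, i, j)
    return V1[i][l_star] + g1[j][l_star]


# Hypothetical values of V1, V2 at one fixed state x, indexed as V[i][j].
V1 = [[1.0, 2.0], [3.0, 0.5]]
V2 = [[0.0, 4.0], [1.0, 1.0]]
c1 = [[0.0, 0.5], [0.5, 0.0]]   # Player 1's cost of switching i -> k
c2 = [[0.0, 1.0], [1.0, 0.0]]   # Player 2's cost of switching j -> l
g1 = [[0.0, 0.2], [0.2, 0.0]]   # Player 1's gain when Player 2 switches j -> l

m1_value, k_star = best_switch_p1(V1, c1, 0, 0)
h1_value = payoff_after_opponent_switch_p1(V1, V2, c2, g1, 0, 0)
```

In this toy table, Player 1's current value in modes (0, 0) is smaller than the value of its best switch, so under the assumed definitions this state would not lie in Player 1's continuation region.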

Let

and

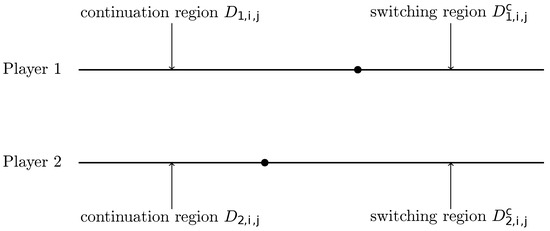

In fact, (respectively, ) is the so-called continuation (or, no-switching) region for Player 1 (respectively, Player 2), in which it is better for Player 1 (respectively, Player 2) to do nothing than make a switching; see Figure 1 for a graphical representation of and . The boundaries of and are denoted by and , respectively.

Figure 1.

A graphical representation of and .

Denote

where and are the first-order and second-order derivatives of an arbitrary twice differentiable function , respectively.
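For a one-dimensional diffusion with drift b and diffusion coefficient $\sigma$, the natural second-order operator of this kind is the infinitesimal generator. A sketch in assumed notation follows: the symbol $\mathcal{L}^{ij}$, the test function $\varphi$, and the dependence of the coefficients on the mode pair $(i, j)$ are assumptions, as is the choice to keep the discount term out of the operator.

```latex
\begin{equation*}
\mathcal{L}^{ij}\varphi(x)
  = b(x, i, j)\,\varphi'(x) + \tfrac{1}{2}\,\sigma^2(x, i, j)\,\varphi''(x).
\end{equation*}
```

With this convention, the discounted dynamics enter the variational inequalities through the combination $\mathcal{L}^{ij}\varphi - r\varphi$, where $r$ stands for the discount factor.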

Now, we state and prove the verification theorem for the nonzero-sum stochastic differential game with switching controls.

Theorem 1.

Let and be real-valued functions, such that:

(i) For ,

and

(ii) For and ,

and

(iii) For and ,

(iv) For and ,

(v) For and ,

(vi) For and ,

Define and inductively as

and

Then, we have that is a Nash equilibrium for the two players and are the corresponding value functions of the game.

Remark 4.

It should be noticed that along the boundaries of (respectively, ), the function (respectively, ), , only belongs to , but does not necessarily belong to on (respectively, ). In this situation, we can apply the smooth approximation argument introduced by Øksendal [27] (Theorem 10.4.1 and Appendix D) to complement the smoothness needed for Itô’s formula. Here, in this proof, for convenience, we simply consider (respectively, ), , to be on (respectively, ); in this connection, see also Guo and Zhang [28] (Theorem 3.1) and [29] (Theorem 2) and Aïd et al. [30] (Theorem 1).

Proof.

We only show the part for Player 1, and the counterpart for Player 2 is symmetric. We first prove that

where is an arbitrary switching control for Player 1. Denote (with ) as the sequence of total switching times in the game: at each , we have either for some m or for some n. Then, based on , the payoff functional for Player 1 can be rewritten as

Applying Itô’s formula to between and , , we have

where the inequality follows from condition (iii), as when and the last equality is due to the fact that no switching occurs between and , thus, we have and .
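The stage-wise estimate described above can be sketched as follows. The notation is assumed for illustration: $\theta_k$ for the total decision times, $(a_k, b_k)$ for the mode pair in force on $[\theta_k, \theta_{k+1})$, $r$ for the discount factor, $f_1$ for Player 1's running payoff, and $\mathcal{L}^{a_k b_k}$ for the generator of the state process in that mode pair. The sketch also assumes enough integrability for the stochastic integral to have zero expectation.

```latex
\begin{align*}
&\mathbb{E}\Big[e^{-r\theta_{k+1}}\,V_1^{a_k b_k}(X_{\theta_{k+1}})\Big]
 - \mathbb{E}\Big[e^{-r\theta_k}\,V_1^{a_k b_k}(X_{\theta_k})\Big] \\
&\quad = \mathbb{E}\int_{\theta_k}^{\theta_{k+1}} e^{-rt}
   \big(\mathcal{L}^{a_k b_k}V_1^{a_k b_k} - rV_1^{a_k b_k}\big)(X_t)\,dt \\
&\quad \le -\,\mathbb{E}\int_{\theta_k}^{\theta_{k+1}} e^{-rt}\,f_1(X_t, a_k, b_k)\,dt .
\end{align*}
```

The equality is Itô's formula applied to $e^{-rt}V_1^{a_k b_k}(X_t)$ on a stage where the modes are frozen. The inequality uses $\mathcal{L}V_1 - rV_1 + f_1 \le 0$, which is available because the state stays in Player 2's continuation region between decision times when Player 2 follows its equilibrium strategy.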

Summing the indices , we have

Combining (8) and (9) yields

In the following, the analysis is divided into three cases:

(a) If for some m, then

(b) If for some n, then

Remark 5.

Here, we comment on conditions (i)–(vi) of the verification theorem. First, condition (i) is the regularity requirement on the solutions of the variational inequalities. It is important in specific cases, where one solves the variational inequalities and obtains analytical solutions by using the so-called smooth-fit principle. Condition (ii) is a typical assumption in optimal switching control theory, which comes from the dynamic programming principle. Regarding condition (iii), if Player 2 does not make a switching (i.e., ), then the problem for Player 1 becomes a classical one-player optimal switching control problem, so we have (2). Conversely, if Player 2 makes a switching (i.e., ), by the definition of Nash equilibrium, we expect that Player 1 does not lose anything. This is equivalent to (4) in condition (v); otherwise, it would be in its interest to deviate. Finally, the conditions (iv) and (vi) on are imposed for the same reason.
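Read together, these comments suggest the following shape for Player 1's part of the system. This is a sketch under assumed notation, not a restatement of Theorem 1: $\mathcal{M}_1$ denotes Player 1's best-own-switch operator, $\mathcal{H}_1$ its payoff after Player 2's best switch, $C_2^{ij}$ Player 2's continuation region, $\mathcal{L}^{ij}$ the generator, $r$ the discount factor, and $f_1$ Player 1's running payoff.

```latex
\begin{align*}
& V_1^{ij} \ge \mathcal{M}_1 V_1^{ij}
  && \text{everywhere (dynamic programming principle)}, \\
& \max\big\{\mathcal{L}^{ij}V_1^{ij} - rV_1^{ij} + f_1(\cdot, i, j),\;
            \mathcal{M}_1 V_1^{ij} - V_1^{ij}\big\} = 0
  && \text{on } C_2^{ij} \text{ (Player 2 does not switch)}, \\
& V_1^{ij} = \mathcal{H}_1 V_1^{ij}
  && \text{off } C_2^{ij} \text{ (Player 2 switches)} .
\end{align*}
```

The middle line is the classical one-player optimal switching variational inequality. The last line encodes the requirement that Player 1 neither gains nor loses at the instant Player 2 intervenes. Player 2's part is obtained by exchanging the roles of the two players.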

Remark 6.

The proof for the case with three or more players can be given in a similar way to that of Theorem 1. Note that in the proof of Theorem 1, we show the property of the Nash equilibrium, that and separately and independently. It can be naturally generalized to the case with three or more players, and one needs only to modify accordingly the conditions (i)–(vi) imposed on and to for in Theorem 1.

4. Concluding Remarks

This paper is the first attempt to study a two-player nonzero-sum stochastic differential game with both players using switching controls. The verification theorem method is adopted with a set of variational inequalities, and an appropriate regularity condition is proposed for Nash equilibria.

This paper is mainly devoted to the theoretical aspect of a stochastic differential game with switching controls. The numerical aspect is also an important and interesting issue, and applying the verification theorem to practical problems arising in the real world would be very useful. We will consider this topic in our future study.

Author Contributions

Writing—original draft, Y.L.; Writing—review & editing, H.M. All authors have read and agreed to the published version of the manuscript.

Funding

The work is supported by the National NSF of China (12001278, 12001023), the NSF of the Higher Education Institutions of Jiangsu Province (20KJB110017) and the Science and Technology Project of Beijing Municipal Education Commission (KM202410005014).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Hamadène, S.; Lepeltier, J.P.; Peng, S. BSDEs with continuous coefficients and stochastic differential games. In Backward Stochastic Differential Equations (Paris, 1995–1996); Pitman Research Notes in Mathematics Series 364; Longman: Harlow, UK, 1997; pp. 115–128.

2. Hamadène, S. Backward-forward SDE’s and stochastic differential games. Stoch. Process. Appl. 1998, 77, 1–15.

3. Hamadène, S. Nonzero sum linear-quadratic stochastic differential games and backward-forward equations. Stoch. Anal. Appl. 1999, 17, 117–130.

4. Wu, Z. Forward-backward stochastic differential equations, linear quadratic stochastic optimal control and nonzero sum differential games. J. Syst. Sci. Complex. 2005, 18, 179–192.

5. Sun, J.; Yong, J. Linear-quadratic stochastic two-person nonzero-sum differential games: Open-loop and closed-loop Nash equilibria. Stoch. Process. Appl. 2019, 129, 381–418.

6. Kille, S.; Leibold, P.; Karg, P.; Varga, B.; Hohmann, S. Human-variability-respecting optimal control for physical human-machine interaction. arXiv 2024, arXiv:2405.03502.

7. Kille, S.; Varga, B.; Hohmann, S. Study on human-variability-respecting optimal control affecting human interaction experience. arXiv 2024, arXiv:2408.08620.

8. Varga, B. Toward adaptive cooperation: Model-based shared control using LQ-differential games. arXiv 2024, arXiv:2403.11146.

9. Yong, J. Differential games with switching strategies. J. Math. Anal. Appl. 1990, 145, 455–469.

10. Yong, J. A zero-sum differential game in a finite duration with switching strategies. SIAM J. Control Optim. 1990, 28, 1234–1250.

11. Tang, S.; Yong, J. Finite horizon stochastic optimal switching and impulse controls with a viscosity solution approach. Stochastics 1993, 45, 145–176.

12. Pham, H. On the smooth-fit property for one-dimensional optimal switching problem. In Séminaire de Probabilités XL; Springer: Berlin/Heidelberg, Germany, 2007; pp. 187–199.

13. Song, Q.; Yin, G.; Zhu, C. Optimal switching with constraints and utility maximization of an indivisible market. SIAM J. Control Optim. 2012, 50, 629–651.

14. Hamadène, S.; Jeanblanc, M. On the starting and stopping problem: Applications in reversible investments. Math. Oper. Res. 2007, 32, 182–192.

15. El Asri, B.; Hamadène, S. The finite horizon optimal multi-modes switching problem: The viscosity solution approach. Appl. Math. Optim. 2009, 60, 213–235.

16. Hamadène, S.; Zhang, J. Switching problem and related system of reflected backward SDEs. Stoch. Process. Appl. 2010, 120, 403–426.

17. Hu, Y.; Tang, S. Multi-dimensional BSDE with oblique reflection and optimal switching. Probab. Theory Relat. Fields 2010, 147, 89–121.

18. El Asri, B. Optimal multi-modes switching problem in infinite horizon. Stoch. Dyn. 2010, 10, 231–261.

19. El Asri, B. Stochastic optimal multi-modes switching with a viscosity solution approach. Stoch. Process. Appl. 2013, 123, 579–602.

20. Tang, S.; Hou, S.H. Switching games of stochastic differential systems. SIAM J. Control Optim. 2007, 46, 900–929.

21. Hamadène, S.; Martyr, R.; Moriarty, J. A probabilistic verification theorem for the finite horizon two-player zero-sum optimal switching game in continuous time. Adv. Appl. Probab. 2019, 51, 425–442.

22. El Asri, B.; Mazid, S. Stochastic differential switching game in infinite horizon. J. Math. Anal. Appl. 2019, 474, 793–813.

23. Brekke, K.A.; Øksendal, B. Optimal switching in an economic activity under uncertainty. SIAM J. Control Optim. 1994, 32, 1021–1036.

24. Duckworth, K.; Zervos, M. A model for investment decisions with switching costs. Ann. Appl. Probab. 2001, 11, 239–260.

25. Carmona, R.; Ludkovski, M. Pricing asset scheduling flexibility using optimal switching. Appl. Math. Financ. 2008, 15, 405–447.

26. Buckdahn, R.; Cardaliaguet, P.; Rainer, C. Nash equilibrium payoffs for nonzero-sum stochastic differential games. SIAM J. Control Optim. 2004, 43, 624–642.

27. Øksendal, B. Stochastic Differential Equations, 6th ed.; Springer: Berlin/Heidelberg, Germany, 2003.

28. Guo, X.; Zhang, Q. Closed-form solutions for perpetual American put options with regime switching. SIAM J. Appl. Math. 2004, 64, 2034–2049.

29. Guo, X.; Zhang, Q. Optimal selling rules in a regime switching market. IEEE Trans. Automat. Control 2005, 50, 1450–1455.

30. Aïd, R.; Basei, M.; Callegaro, G.; Campi, L.; Vargiolu, T. Nonzero-sum stochastic differential games with impulse controls: A verification theorem with applications. Math. Oper. Res. 2020, 45, 205–232.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).