Abstract

Mixed zero-sum games consider zero-sum and non-zero-sum differential game problems simultaneously. In this paper, multiplayer mixed zero-sum games (MZSGs) are studied by means of an integral reinforcement learning (IRL) algorithm under a dynamic event-triggered control (DETC) mechanism for completely unknown nonlinear systems. First, an on-policy approach based on adaptive dynamic programming (ADP) is proposed for solving the MZSG problem for nonlinear systems with multiple players. Second, to avoid using information about the system dynamics, a model-free control strategy is developed by utilizing actor–critic neural networks (NNs) to address the MZSG problem for unknown systems. On this basis, to avoid wasting communication and computing resources, the dynamic event-triggered mechanism is integrated into the IRL algorithm, in which a dynamic triggering condition is designed to further reduce the number of triggering events. Using the Lyapunov stability theorem, the system states and the NN weights are proven to be uniformly ultimately bounded (UUB). Finally, two examples are presented to show the effectiveness and feasibility of the developed control method. Compared with the static event-triggering mode, the simulation results show that the number of actuator updates under the DETC mechanism is reduced by 55% and 69%, respectively.

Keywords:

dynamic event-triggered control; integral reinforcement learning; adaptive dynamic programming; adaptive critic design; mixed zero-sum games

MSC:

49L12; 49L20; 49N70; 35F21; 90C39

1. Introduction

In recent years, differential game theory has been extensively applied in various fields such as economic and financial decision-making [1,2,3,4], automation control systems [5,6], and computer science [7]. In these studies, multiple participants engaged in continuous games and strove to optimize their respective independent and potentially conflicting objectives so that a Nash equilibrium could be achieved. In two-player zero-sum games (ZSGs) [8,9], one player's gain inevitably resulted in a loss for the other player, which precluded any possibility of cooperation. In contrast, in multiplayer non-zero-sum games (NZSGs) [7,10], the total sum of the benefits or losses of the parties involved was not zero, which allowed for the possibility of a win–win outcome and subsequent cooperation. Based on the characteristics and advantages of these two types of games, Ref. [11] proposed a novel game formulation distinct from ZSGs and NZSGs, termed MZSGs. In such scenarios, determining the Nash equilibrium typically involved solving coupled Hamilton–Jacobi–Bellman (HJB) equations. When the system was linear, the solution could be obtained by reducing these equations to algebraic Riccati equations. However, when the system was nonlinear, obtaining analytical solutions became challenging due to the coupling terms in the equations.

To address the lack of analytical solutions, researchers devised methods based on the ADP algorithm to approximate optimal solutions [12,13,14,15]. Such methods have been applied in numerous control domains, such as H∞ tracking control [16], time-delay system control [17], optimal spin polarization control [18], and reinforcement learning (RL) control [19]. In [18], a new ADP structure was proposed for three-dimensional spin polarization control. In [20], a novel ADP method, entirely independent of system information, was introduced for nonlinear Markov jump systems to solve the two-player Stackelberg differential game. In [21], an iterative ADP algorithm was proposed to solve the parameterized Bellman equation for the global stabilization of continuous-time linear systems under control constraints.

The integral reinforcement learning (IRL) algorithm was proposed as a derivative of the RL algorithm in [22]. In policy iteration-based ADP algorithms, knowledge of the system dynamics was required to approximately solve the HJB equation. In the IRL algorithm, by contrast, a model-free implementation was achievable, meaning that the HJB equation could be solved without any knowledge of the system dynamics [23]. Because system dynamics are often difficult to model or describe accurately in practical problems, the IRL algorithm has been widely applied to optimal control problems. In [24], it was demonstrated that data-based IRL algorithms were equivalent to model-based policy iteration algorithms. Researchers used the IRL algorithm to obtain iterative control laws, thereby carrying out policy evaluation and policy improvement [25]. Moreover, a novel guaranteed cost control was designed in [26] using the IRL algorithm to relax the requirement for knowledge of the dynamics of stochastic systems modulated by stochastic time-varying parameters. In [27], an adaptive optimal bipartite consensus scheme with a critic-only controller structure was developed using the IRL algorithm. However, all of these control methods were developed on the basis of a time-triggered mechanism, whose defining characteristic is that data sampling and control updates are periodic. As systems gradually stabilize or operate within permissible performance margins, it becomes desirable for controllers to remain static rather than update at every period [28].

In response to these requirements, researchers proposed static event-triggered control (SETC) for the sampling process, which can ensure stable system operation. Specifically, a triggering condition associated with a predefined threshold was designed in advance, and the controller was updated only when this condition was met, significantly reducing the waste of communication resources [29,30,31,32]. Nevertheless, the triggering rules of static event-triggered mechanisms depended solely on the current state and error signals and disregarded their previous values. This limitation was addressed by Girard [33], who incorporated an internal dynamic variable into the triggering mechanism. This made the triggering mechanism dependent not only on current values but also on historical data, which led to the development of DETC. The advantage of this mechanism lies in its ability to enlarge the intervals between consecutive triggering instants. In subsequent studies [34,35,36,37], researchers extensively explored the application of DETC to multi-agent systems and theoretically proved its feasibility. Ref. [38] investigated fault-tolerant control based on DETC, in which the dynamic variable adopted a form more general than exponential functions. Ref. [39] investigated non-fragile dissipative filters for discrete-time interval type-2 fuzzy Markov jump systems.

In this paper, an online ADP-based algorithm is proposed to solve the IRL-based adaptive DETC problem for MZSGs with unknown nonlinear systems. The main contributions are as follows:

1. Unlike the studies on ZSG or NZSG problems in [8,19,24,30], ZSG and NZSG problems are considered simultaneously, and an event-triggered ADP approach is used to solve them while reducing the consumption of communication and computing resources.

2. By establishing actor NNs to approximate the optimal control strategies and auxiliary control inputs of each player, a novel event-triggered IRL algorithm is proposed to solve the MZSG problem without using information about the system functions.

3. In the developed off-policy DETC method, dynamic adaptive parameters are introduced into the triggering conditions; compared with the static event-triggered mechanism, this further reduces the triggering frequency and thus makes more efficient use of communication resources.

The rest of this paper is organized as follows. Section 2 describes the unknown nonlinear systems and introduces background on multiplayer MZSGs. Section 3 proposes ADP-based near-optimal control for MZSGs under the DETC mechanism. Section 4 develops a dynamic event-triggered IRL algorithm for unknown systems. Section 5 proves the UUB property of the closed-loop system states and the convergence of the critic NN weight estimation errors. Section 6 presents two examples that validate the effectiveness of the developed algorithms. Finally, Section 7 concludes the paper.

2. Problem Formulation

Consider the following multiplayer games system

where is the system state, and are unknown system and control dynamics, are the control inputs, and is the initial system state.

Assumption 1.

, is a positive constant. and are Lipschitz continuous on a compact set , which contains the origin, and the system is stabilizable.

For system (1), the participants exhibit different competitive relationships: the first N participants interact as in NZSGs, whereas the N-th and (N+1)-th participants compete as in ZSGs. To identify equilibrium strategies that stabilize the system, the cost function of the i-th participant is defined according to its interactions with the others:

where , and is a positive constant.

Definition 1.

The value functions corresponding to (4) and (5) are

where . Let = , and = . The objective is to find the optimal control inputs .

If the optimal value functions (2) and (3) are differentiable on , the Hamiltonian functions are defined as

where . The coupled HJ equations are given by

At the equilibrium, the following stationarity conditions hold:

Using these stationarity conditions, we obtain
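As a minimal sketch of this step, assume the input-affine form $\dot{x}=f(x)+\sum_{j=1}^{N+1}g_j(x)u_j$ that is common in the MZSG literature, symmetric positive definite weights $R_{ij}$ on the control inputs in each player's cost, and a term $-\gamma^{2}u_{N+1}^{\top}u_{N+1}$ in the cost of player $N$, which player $N+1$ maximizes. The symbols $Q_i$, $R_{ij}$, and $\gamma$ are illustrative assumptions and may differ from the paper's notation. Setting the derivative of each Hamiltonian with respect to the player's own input to zero then gives:

```latex
\begin{aligned}
H_i &= \nabla V_i^{\top}\Big(f(x)+\sum_{j=1}^{N+1} g_j(x)\,u_j\Big) + Q_i(x) + \sum_{j=1}^{N} u_j^{\top}R_{ij}u_j, \qquad i=1,\dots,N-1,\\
H_N &= \nabla V_N^{\top}\Big(f(x)+\sum_{j=1}^{N+1} g_j(x)\,u_j\Big) + Q_N(x) + \sum_{j=1}^{N} u_j^{\top}R_{Nj}u_j - \gamma^{2}u_{N+1}^{\top}u_{N+1},\\
\frac{\partial H_i}{\partial u_i} &= g_i^{\top}(x)\nabla V_i + 2R_{ii}u_i = 0
 \;\Longrightarrow\; u_i^{*} = -\tfrac{1}{2}R_{ii}^{-1}g_i^{\top}(x)\nabla V_i^{*}, \qquad i=1,\dots,N,\\
\frac{\partial H_N}{\partial u_{N+1}} &= g_{N+1}^{\top}(x)\nabla V_N - 2\gamma^{2}u_{N+1} = 0
 \;\Longrightarrow\; u_{N+1}^{*} = \tfrac{1}{2\gamma^{2}}\,g_{N+1}^{\top}(x)\nabla V_N^{*}.
\end{aligned}
```

Under these assumptions, the first $N$ players share the familiar $-\tfrac{1}{2}R^{-1}g^{\top}\nabla V$ structure, while the maximizing player $N+1$ acts along $+\nabla V_N$; this sign difference is what makes the $N$-th and $(N+1)$-th players adversaries.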

Remark 1.

3. ADP-Based Near-Optimal Control for MZSGs Under DETC Mechanism

In the dynamic event-triggered mechanism proposed in this paper, the triggering instants form a monotonically increasing sequence that satisfies the condition . The state at triggering instant is denoted by , and the event-triggered errors are defined as:

Recalling (10) and (11), the event-triggered optimal control policies are obtained as

where with . By substituting (15) and (16), the event-triggered HJ equations are represented as:

Assumption 2.

All optimal control policies are locally Lipschitz continuous with respect to the event-triggered error . That is, with , and , there always exists a positive constant such that holds.

Theorem 1.

Proof.

The Lyapunov function is , where and .

When implementing the optimal control policies (15) and (16) under the DETC method, the derivative of along the trajectory of system (1) is

The augmented optimal control signal is denoted by and the augmented control error by . Substituting (22) into (21) yields:

where , , and

. is the pseudo-inverse of .

It can be observed that B, R, and are all positive definite, thus the eigenvalues of are greater than zero. Because Y is not a zero matrix, both the smallest eigenvalue of B and the largest eigenvalue of are greater than zero; then, we have

On the other hand, from (20), we have

When the designed dynamic triggering condition (19) is satisfied, it follows that . According to Lyapunov’s theorem, the state of system (1) asymptotically converges to zero.

This proof is completed. □
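The effect of the internal dynamic variable can be illustrated on a toy loop. The sketch below is hypothetical: it uses a scalar plant $\dot{x}=Ax+Bu$ with sampled feedback $u=-Kx(t_j)$ and a Girard-type rule [33] (internal variable $\eta$, parameters `SIGMA`, `BETA`, `THETA`, all assumed) in place of the paper's condition (19). Because $\eta$ stays nonnegative, the dynamic rule can only fire when the static rule $e^2>\sigma x^2$ is also violated, so in this scale-invariant scalar loop every dynamic inter-event interval is at least as long as the static one.

```python
# Static vs. dynamic (Girard-type) event triggering on a hypothetical scalar loop.
# Plant dx/dt = A*x + B*u with sampled feedback u = -K*x(t_j); all values are illustrative.
A, B, K = 1.0, 1.0, 2.0
SIGMA, BETA, THETA = 0.04, 1.0, 1.0   # threshold weight, eta decay rate, eta weight
DT, T_END = 1e-3, 10.0                # forward-Euler step and horizon


def simulate(dynamic, x0=1.0, eta0=0.1):
    """Return (final state, number of events, minimum of eta) for one run."""
    x, xj, eta = x0, x0, eta0
    events, eta_min = 1, eta0          # the sample taken at t = 0 counts as an event
    for _ in range(int(round(T_END / DT))):
        e = xj - x                      # event-triggered (sampling) error
        margin = SIGMA * x * x - e * e  # static rule fires when margin < 0
        fire = (eta + THETA * margin < 0.0) if dynamic else (margin < 0.0)
        if fire:
            xj, events = x, events + 1
            margin = SIGMA * x * x      # error is reset to zero at the event
        if dynamic:
            eta += DT * (-BETA * eta + margin)  # internal dynamic variable
            eta_min = min(eta_min, eta)
        x += DT * (A * x - B * K * xj)  # zero-order-hold control between events
    return x, events, eta_min


x_s, n_static, _ = simulate(dynamic=False)
x_d, n_dynamic, eta_min = simulate(dynamic=True)
print(f"static: {n_static} events, dynamic: {n_dynamic} events, min eta = {eta_min:.3g}")
```

With these settings the loop converges under both rules, and the dynamic rule never triggers more often than the static one; how large the saving is depends on `THETA`, `BETA`, and the initial value of $\eta$.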

Remark 2.

Using Young’s inequality, the unknown term can be eliminated. As a result, the triggering condition can be evaluated without control input information while the stability of the system is still guaranteed, which also conserves computational resources.
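For reference, the vector form of Young’s inequality typically used in such derivations is given below; $a$, $b$, and $\varepsilon$ are generic placeholders rather than the specific terms of the proof of Theorem 1.

```latex
0 \le \Big\|\sqrt{\varepsilon}\,a - \tfrac{1}{\sqrt{\varepsilon}}\,b\Big\|^{2}
  = \varepsilon\,a^{\top}a - 2a^{\top}b + \tfrac{1}{\varepsilon}\,b^{\top}b
\quad\Longrightarrow\quad
2a^{\top}b \le \varepsilon\,a^{\top}a + \tfrac{1}{\varepsilon}\,b^{\top}b,
\qquad \forall\,\varepsilon>0 .
```

In a Lyapunov argument, this replaces a cross term involving an unknown signal by two quadratic terms that can be bounded separately and absorbed by the negative definite part of the derivative.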

4. Dynamic Event-Triggered IRL Algorithm for MZSG Problem

Since the HJ equations can be solved iteratively through the associated Bellman equations, an on-policy iterative algorithm is proposed first. The model-based ADP design outlined above is summarized in Algorithm 1, which serves as the basis for the subsequent online learning algorithm for unknown systems.

| Algorithm 1 On-Policy ADP Algorithm |
| --- |
| Step 1: The initial admissible control inputs are expressed as ; also let . |
| Step 2: Solve the following Bellman Equation (27) for . |
| Step 3: Update the control policies using (28) and (29). |
| Step 4: If max ( is a preset positive number), stop; otherwise, return to Step 2. |
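To show the structure of Algorithm 1 on a case that can be checked by hand, the sketch below applies it to a hypothetical scalar linear-quadratic MZSG with three players: players 1 and 2 minimize their own costs, and player 3 maximizes the cost of player 2. With quadratic value functions $V_i=p_ix^2$, policy evaluation reduces to scalar Bellman equations, and the stationarity conditions give $u_i=-(b_ip_i/R_{ii})x$ for the minimizing players and $u_3=(b_3p_2/\gamma^2)x$ for the maximizing player. The plant, weights, and cost structure are assumptions chosen for illustration, not the paper's examples.

```python
# Policy iteration (structure of Algorithm 1) on a hypothetical scalar three-player MZSG:
#   dx/dt = A*x + B1*u1 + B2*u2 + B3*u3
#   J1 = int(Q1*x^2 + R11*u1^2) dt             -> minimized by player 1
#   J2 = int(Q2*x^2 + R22*u2^2 - G^2*u3^2) dt  -> minimized by player 2, maximized by player 3
# With V_i = p_i*x^2 the policies are u1 = -k1*x, u2 = -k2*x, u3 = l*x.
A, B1, B2, B3 = -1.0, 1.0, 1.0, 0.5
Q1, Q2, R11, R22, G = 1.0, 1.0, 1.0, 1.0, 1.0


def closed_loop(k1, k2, l):
    """Closed-loop coefficient a_c of dx/dt = a_c*x under the current policies."""
    return A - B1 * k1 - B2 * k2 + B3 * l


def improve(p1, p2):
    """Policy improvement from the stationarity conditions dH_i/du_i = 0."""
    return B1 * p1 / R11, B2 * p2 / R22, B3 * p2 / G**2


def policy_iteration(k1=0.0, k2=0.0, l=0.0, eps=1e-12, max_iter=100):
    """Alternate policy evaluation and improvement until max |p_i change| < eps."""
    p1 = p2 = None
    for it in range(1, max_iter + 1):
        ac = closed_loop(k1, k2, l)
        if ac >= 0.0:
            raise ValueError("policies are not admissible (closed loop unstable)")
        # Policy evaluation: scalar Bellman equations 2*p_i*a_c + (running cost)_i = 0.
        new_p1 = (Q1 + R11 * k1**2) / (-2.0 * ac)
        new_p2 = (Q2 + R22 * k2**2 - G**2 * l**2) / (-2.0 * ac)
        converged = p1 is not None and max(abs(new_p1 - p1), abs(new_p2 - p2)) < eps
        p1, p2 = new_p1, new_p2
        k1, k2, l = improve(p1, p2)
        if converged:
            return p1, p2, it
    raise RuntimeError("policy iteration did not converge")


def hj_residuals(p1, p2):
    """Residuals of the coupled HJ equations at the policies induced by (p1, p2)."""
    k1, k2, l = improve(p1, p2)
    ac = closed_loop(k1, k2, l)
    return (2.0 * p1 * ac + Q1 + R11 * k1**2,
            2.0 * p2 * ac + Q2 + R22 * k2**2 - G**2 * l**2)


p1, p2, iters = policy_iteration()
print(f"p1 = {p1:.6f}, p2 = {p2:.6f} after {iters} iterations")
```

Starting from the admissible zero policies (the plant is open-loop stable here), the iteration stops when the largest change in $p_i$ falls below `eps`, mirroring Step 4; `hj_residuals` then checks that the limit satisfies the coupled HJ equations.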

Inspired by [38], we can establish the Bellman equations as follows:

where .

Based on the equilibrium condition, we have

In this section, motivated by the iterative idea of Algorithm 1, a model-free IRL algorithm for the MZSG problem is given. Since the system dynamics and are entirely unknown, a series of exploration signals is introduced into the control inputs to relax the need for precise knowledge of and , and system (1) can be reformulated as:

| Algorithm 2 Model-Free IRL Algorithm for MZSGs |
| --- |
| Step 1: Set the initial policies . |
| Step 2: Solve the Bellman Equation (31) to acquire . |
| Step 3: If max ( is a preset positive number), stop; otherwise, return to Step 2. |
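The key point of Algorithm 2, that policies can be evaluated and improved from measured data without the system functions, can be seen in a single-player scalar linear-quadratic case. The sketch below is a hypothetical simplification, not the MZSG or NN implementation: data are generated once with a probing input $u_b$, and for each target policy $u=-kx$ the off-policy IRL Bellman equation $p\,[x(t)^2-x(t+T)^2]+2Rk^{+}\int_t^{t+T}x\,(u_b+kx)\,d\tau=\int_t^{t+T}(Q+Rk^2)\,x^2\,d\tau$ (which follows from $\dot V=2px\dot x$ with $k^{+}=Bp/R$) is solved for $p$ and the improved gain $k^{+}$ by least squares over many intervals. The true `A` and `B` are used only to simulate the plant.

```python
import math

# Off-policy IRL on a hypothetical scalar LQ problem; A and B only generate the data.
A, B, Q, R = -1.0, 1.0, 1.0, 1.0
DT, T, N_INT = 1e-4, 0.1, 40   # Euler step, IRL interval length, number of intervals


def probe(t):
    """Exploratory behaviour input (the probing signal)."""
    return 0.5 * math.sin(2.0 * t) + 0.5 * math.cos(5.0 * t)


def collect_data(x=1.0):
    """Simulate once; per interval store x(t)^2 - x(t+T)^2, int x^2 and int x*u_b."""
    rows, t, steps = [], 0.0, int(round(T / DT))
    for _ in range(N_INT):
        x_start, s_xx, s_xu = x, 0.0, 0.0
        for _ in range(steps):
            u_b = probe(t)
            s_xx += DT * x * x
            s_xu += DT * x * u_b
            x += DT * (A * x + B * u_b)
            t += DT
        rows.append((x_start**2 - x**2, s_xx, s_xu))
    return rows


def irl_policy_iteration(rows, k=0.0, eps=1e-8, max_iter=50):
    """Solve the IRL Bellman equation for (p, k_next) by least squares, then iterate."""
    p = None
    for _ in range(max_iter):
        m11 = m12 = m22 = v1 = v2 = 0.0
        for dphi, s_xx, s_xu in rows:
            c1 = dphi
            c2 = 2.0 * R * (s_xu + k * s_xx)   # 2R * int x*e, where e = u_b + k*x
            y = (Q + R * k * k) * s_xx
            m11 += c1 * c1
            m12 += c1 * c2
            m22 += c2 * c2
            v1 += c1 * y
            v2 += c2 * y
        det = m11 * m22 - m12 * m12           # normal equations of the 2x2 fit
        p = (m22 * v1 - m12 * v2) / det
        k_next = (m11 * v2 - m12 * v1) / det
        if abs(k_next - k) < eps:
            return p, k_next
        k = k_next
    return p, k


p, k = irl_policy_iteration(collect_data())
print(f"learned p = {p:.4f}, k = {k:.4f}; LQR value sqrt(2) - 1 = {math.sqrt(2) - 1:.4f}")
```

The learned gain approaches the LQR gain $\sqrt{2}-1$ for this plant, obtained from the Riccati equation $2Ap-B^2p^2/R+Q=0$, even though `irl_policy_iteration` never reads `A` or `B`; the same recorded data are reused for every policy update, which is what makes the scheme off-policy.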

Based on [23,38], auxiliary probing signals are considered, and the Bellman equation can be given in the following form:

To eliminate the requirement for the input dynamics, the control policies, defined analogously to Equations (28) and (29), are given by:

Theorem 2.

Proof.

, satisfies Equations (27)–(29) if and only if it is a solution to Equation (31). To establish sufficiency, it suffices to show that Equation (31) has a unique solution, which is proven by contradiction.

Assume that there exists another solution () to Equation (31). It can then be easily shown that

In addition, one has

It is known that Equation (37) holds . Let , and it is concluded that . From , constant . Furthermore, , to .

In this section, the following NNs are used to approximate the value functions and the control inputs over the compact set :

where are the ideal weight vectors. are the activation function vectors for the NN. are the NN approximation errors.

The estimated value of can be expressed as

with representing the estimated critic weight vector.

The target control policies are estimated by

where are the ideal weight vectors. are the activation functions for the actor NNs. are the NN approximation errors.

Denote the estimated values of as . The estimated value of is given as follows:

Refer to the event as the sampled reconstruction error . When , the optimal control signals are designed as

□

Assumption 3.

(1) The ideal NN weight vectors and are bounded by , . (2) The NN activation functions and are all bounded by , . (3) The gradients of NN activation functions and the NN approximation error and its gradient are bounded by , and .

The estimated value functions are shown in (39) and control signals can be obtained as

Define the estimated value of the augmented NN weight vectors as

The desired weight vector of is denoted as

Define

Then, the residual error becomes

where .

Let , and update the augmented weight vectors using the gradient descent algorithm as

where is an adaptive gain value.

Assumption 4.

Consider the system in (1), let , and there exist constants and and satisfies

which ensures that remains persistently exciting over the interval .
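The gradient-descent update and the role of persistent excitation can be sketched in isolation. The example below assumes a normalized update of the form $\hat W\leftarrow\hat W-\alpha\,\Delta\phi\,e/(1+\Delta\phi^{\top}\Delta\phi)^2$ with residual $e=\hat W^{\top}\Delta\phi-\rho$, which is common in IRL critic designs but is not necessarily identical to (50). The regressors $\Delta\phi$ are drawn at random so that they are persistently exciting, and the targets $\rho$ are generated from known, hypothetical weights.

```python
import random


def critic_update(w, dphi, rho, alpha):
    """One normalized gradient step on e^2/2 with e = w.dphi - rho."""
    e = sum(wi * di for wi, di in zip(w, dphi)) - rho
    norm = (1.0 + sum(d * d for d in dphi)) ** 2
    return [wi - alpha * di * e / norm for wi, di in zip(w, dphi)]


def train_critic(w_true, steps=5000, alpha=1.0, seed=0):
    """Fit critic weights from noise-free targets rho = w_true.dphi."""
    rng = random.Random(seed)
    w = [0.0] * len(w_true)
    for _ in range(steps):
        # Random regressors stand in for persistently exciting data.
        dphi = [rng.uniform(-1.0, 1.0) for _ in w_true]
        rho = sum(wt * d for wt, d in zip(w_true, dphi))
        w = critic_update(w, dphi, rho, alpha)
    return w


w_hat = train_critic([0.5, 0.2, 0.3])
print([round(v, 4) for v in w_hat])
```

If the regressors were confined to a subspace (for example, if one component were always zero), the corresponding weight would never be corrected; this is the failure mode that Assumption 4 excludes.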

Remark 3.

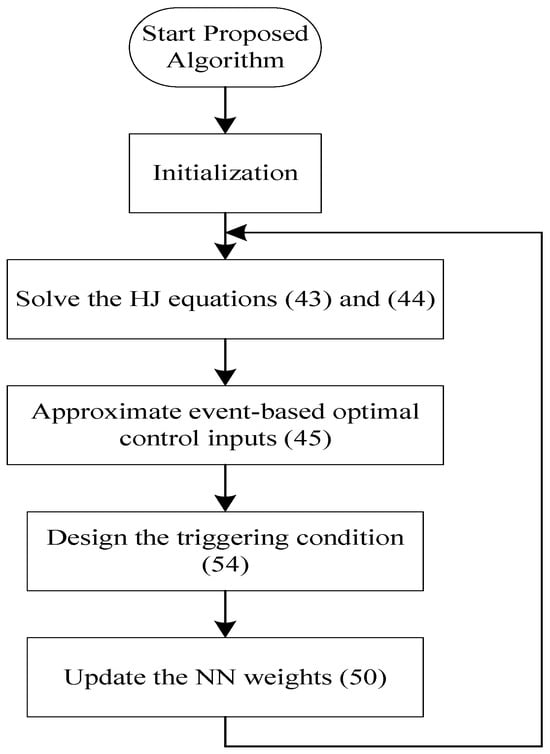

The flowchart of the proposed method in Theorem 3 is given in Figure 1. To ensure that the NNs are thoroughly trained, the variable must be persistently excited so that the estimated weights converge to the ideal weights , and a term involving is incorporated into the NN weight update law for the stability analysis.

Figure 1.

The flowchart of the proposed method in Theorem 3.

Considering the system dynamics (1), the augmented system state is set as with , and the combined control system can be derived as

Additionally, we have

where , where .

5. Stability Analysis

Theorem 3.

Assume that Assumptions 1–4 hold and that a solution of Bellman Equation (31) exists. The DETC strategies are given in (39) and (41) for system (1), and the augmented weight vector is updated according to (50). Provided that the triggering condition is given by

where and are defined in (59) and (64), and is a design parameter.

Proof.

The Lyapunov function is chosen as

where with and , and .

Case 1: When , , considering system dynamics (1) and applying the DETC policies in (45), we have with and , and .

Considering (38), becomes

where .

In light of (10), (11), and (58) and based on the fact and Assumption 2, becomes

where B is given in (23), and, according to Assumption 3, is a positive constant. Based on (49), we obtain

where and .

If the sampling interval T is sufficiently small, the integral terms , , , , and can be approximated as . When , .

Recalling Assumption 3, there exists a constant that satisfies .

Then, . Thus, we have

If the triggering condition (54) is satisfied, we have

where .

Let the parameter , and the interval T be chosen such that and . Then, if

Therefore, the system states and the NN weight estimation errors are UUB.

Case 2: When , the differential of this function can be expressed as

Based on Case 1, is monotonically decreasing on . Thus, one obtains , . Taking the limit on both sides of the inequality yields . Based on this fact, we have

Moreover, is continuous at the triggering instant with . According to the results of Case 1, one has . Based on the above analysis, we obtain . □

6. Simulation

In this section, two nonlinear examples are provided to verify the effectiveness of the algorithm.

6.1. A Nonlinear Example

In this example, to assess the efficacy of our method, we consider the four-player system from [39]:

Define the cost performances as

Set , , , where I is the identity matrix of appropriate dimensions, and let , . The values of the other quantities are the same as in the previous simulation.

In the design, all initial probing control inputs are assumed to be . The simulation parameters are shown in Table 1.

Table 1.

Parameter settings.

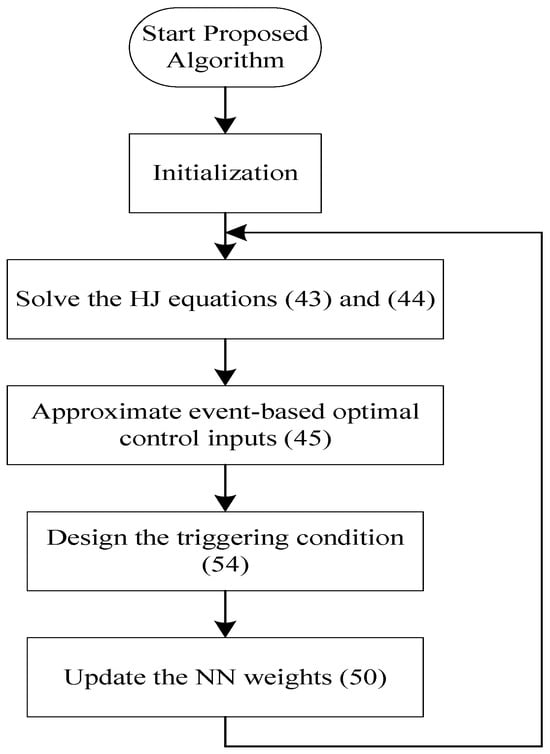

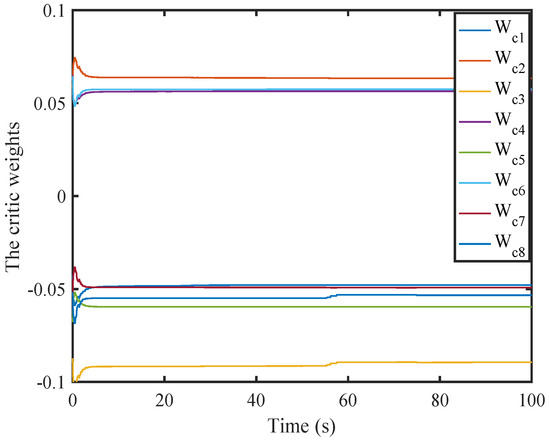

The weight values of the first critic NN converge to [0.0735, −0.0450, −0.0391, 0.0933, −0.0863, 0.0406, −0.0506, 0.0049].

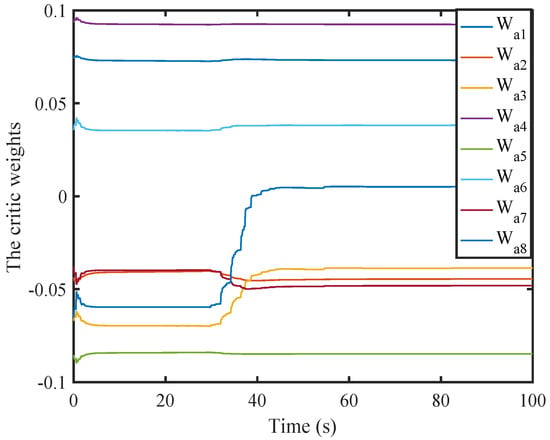

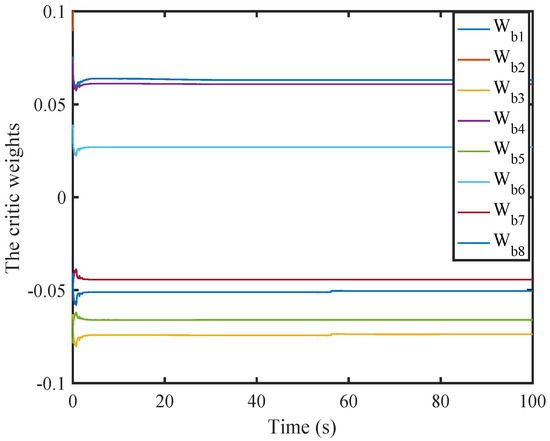

The weight values of the second critic NN converge to [0.0630, 0.1011, −0.0737, 0.0608, −0.0660, 0.0269, −0.0443, −0.0504].

The weight values of the third critic NN converge to [−0.0478, 0.0633, −0.0894, 0.0563, −0.0596, 0.0573, −0.0492, −0.0533].

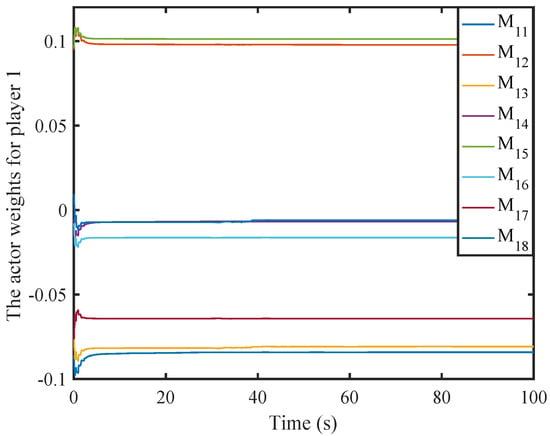

The weight values of the first actor NN converge to [−0.0841, 0.0986, −0.0840, −0.0064, 0.1016, −0.0182, −0.0609, −0.0122].

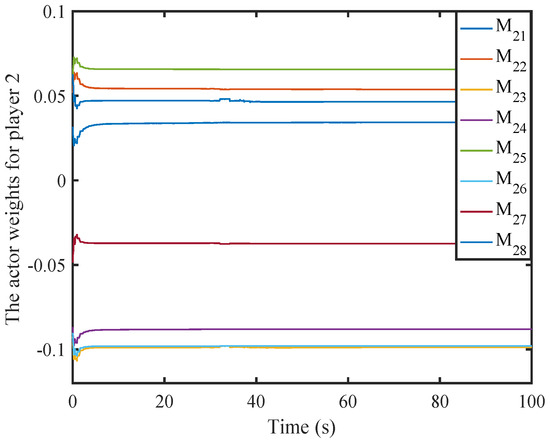

The weight values of the second actor NN converge to [0.0368, 0.0522, −0.0989, −0.0856, 0.0639, −0.0972, −0.0370, 0.0443].

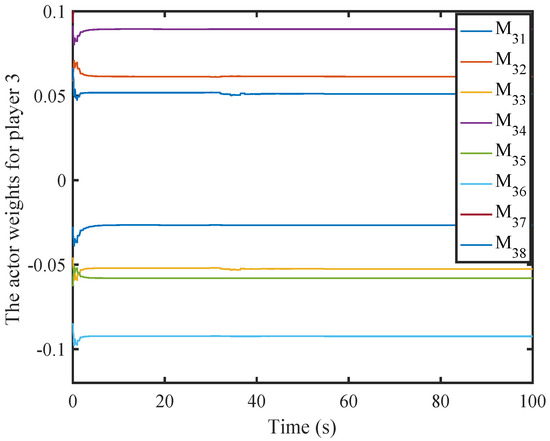

The weight values of the third actor NN converge to [−0.0234, 0.0595, −0.0533, 0.0918, −0.0593, −0.0925, 0.1057, 0.0467].

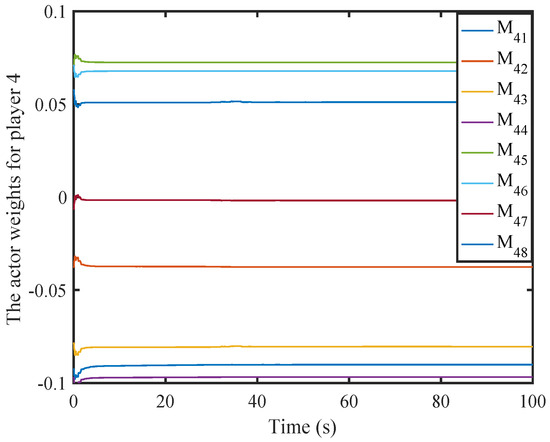

The weight values of the fourth actor NN converge to [−0.0901, −0.0371, −0.0823, −0.0966, 0.0726, 0.0672, −0.0004, 0.0482].

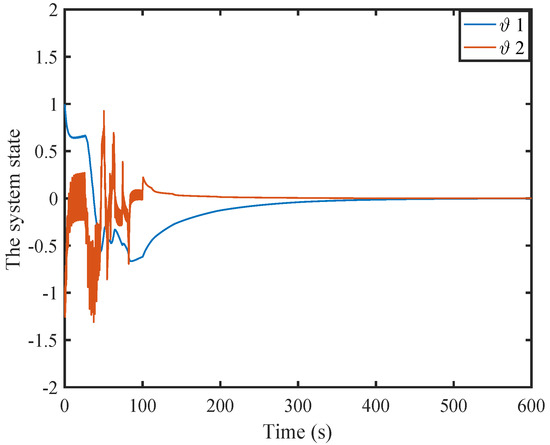

It is clear that the system state converges to zero.

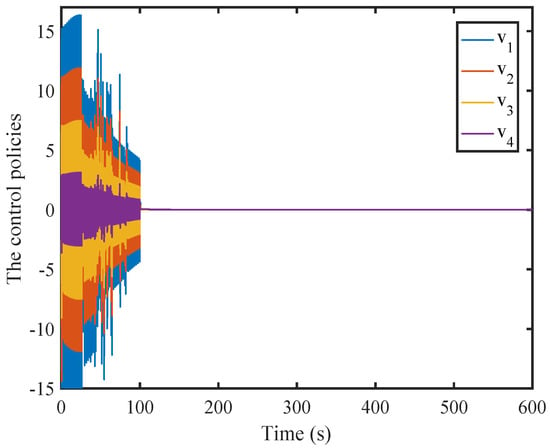

It is obvious that the control input converges to zero.

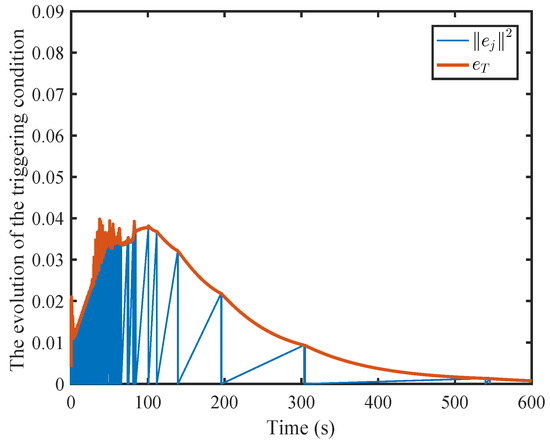

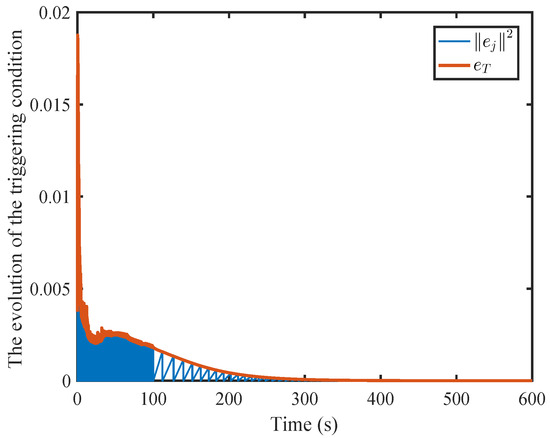

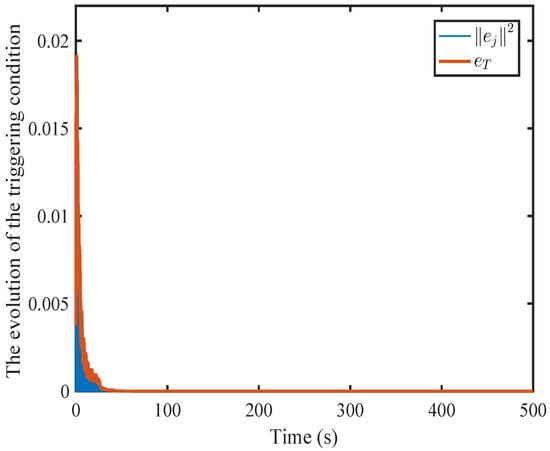

It can be found that the norm of sampling error under dynamic event-triggering converges to zero.

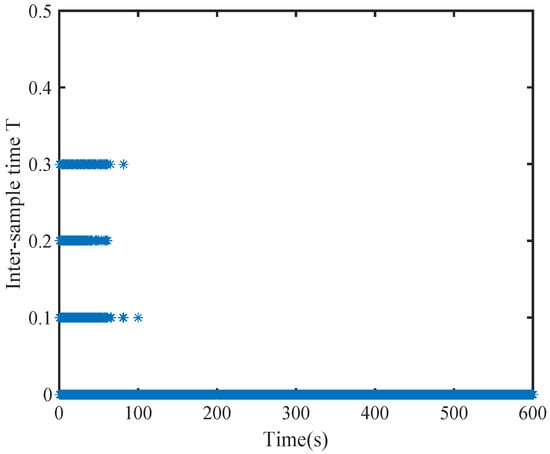

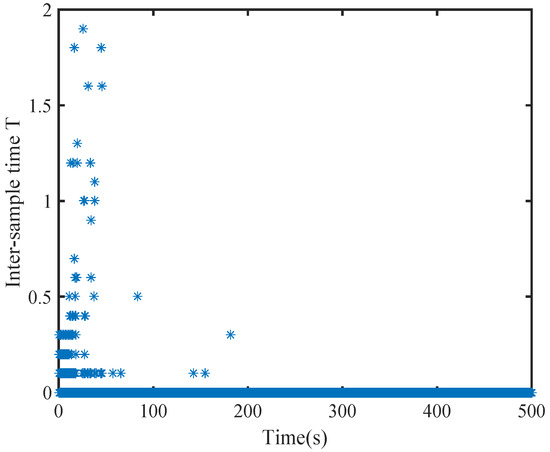

Compared with static event triggering, the number of actuator updates under dynamic event triggering decreased by 55%.

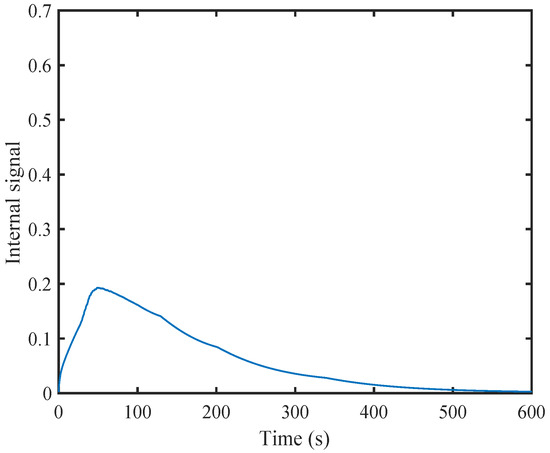

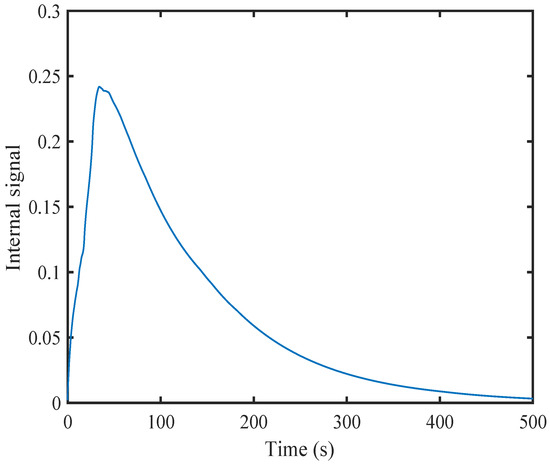

It can be seen that the internal dynamic signals converge to zero.

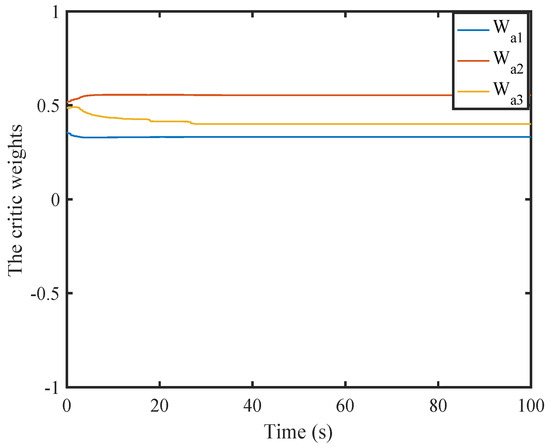

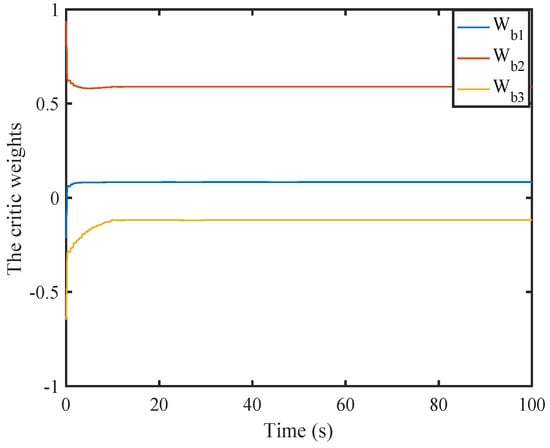

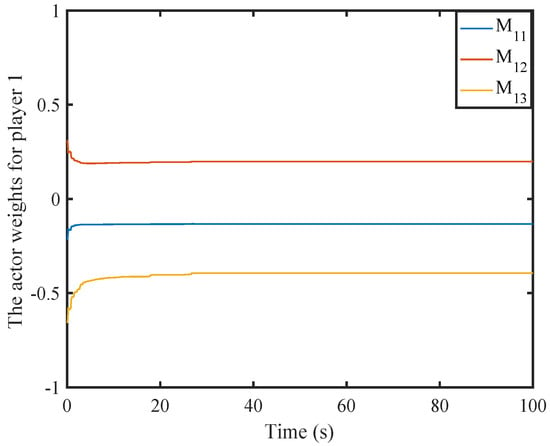

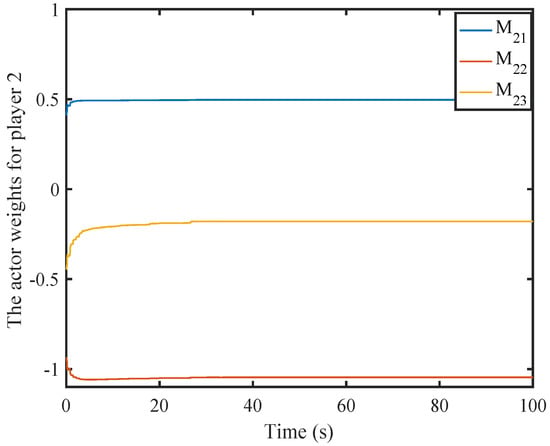

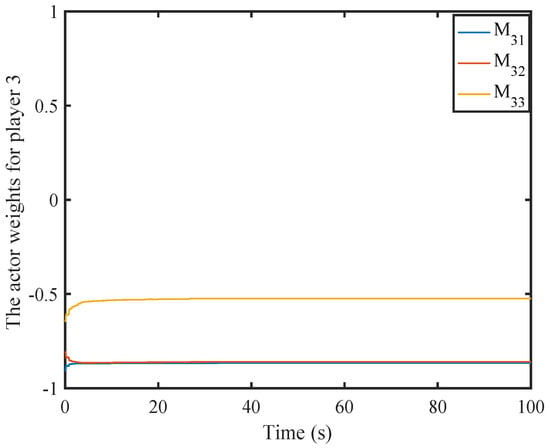

Figures 2–15 display the simulation results. Figures 2–8 show the convergence of the weight vectors of the critic and actor NNs.

Figure 2.

Weight history of the first critic NN.

Figure 3.

Weight history of the second critic NN.

Figure 4.

Weight history of the third critic NN.

Figure 5.

Weight history of the first actor NN.

Figure 6.

Weight history of the second actor NN.

Figure 7.

Weight history of the third actor NN.

Figure 8.

Weight history of the fourth actor NN.

Figure 9.

The evolutions of the system state.

Figure 10.

The control input.

Figure 11.

Sampling error norm and triggering threshold norm under DETC.

Figure 12.

Sampling period of the learning stage by DETC.

Figure 13.

The internal dynamic signals.

Figure 14.

Sampling error norm and triggering threshold using static mechanisms proposed in [29].

Figure 15.

Sampling period of the learning stage using static mechanisms proposed in [29].

It is clear that the norm of sampling error under static event-triggering converges to zero.

In Figure 12 and Figure 15, the horizontal coordinate of each “*” marker represents a triggering instant, and its vertical coordinate denotes the interval between the current and the previous triggering instants. The evolutions of the system states and the control inputs are displayed in Figure 9 and Figure 10, respectively, and show that both rapidly converge to zero. Figure 11 and Figure 14 show the sampling error norms and the triggering thresholds under dynamic and static event triggering, respectively. The sampling periods under dynamic and static event triggering are shown in Figure 12 and Figure 15. Figure 13 shows the internal dynamic signal, which remains positive.

By comparison, the dynamic event-triggering strategy with the dynamic internal signal has a larger threshold and triggers fewer events. Compared with static event triggering, the number of actuator updates is reduced by 55%. This indicates that, compared with the static triggering mechanism, the dynamic event-triggering mechanism proposed in this paper can significantly reduce the computational burden.
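The quantities compared in this subsection can be computed directly from logged triggering instants. In the sketch below, the hypothetical helper `inter_event_intervals` returns the points plotted as “*” in the sampling-period figures, and `update_reduction_percent` gives the relative saving of DETC over SETC; the input numbers are hypothetical and are not taken from the simulations.

```python
def inter_event_intervals(instants):
    """Pairs (t_k, t_k - t_{k-1}) for k >= 1: the '*' points of a sampling-period plot."""
    return [(t, t - prev) for prev, t in zip(instants, instants[1:])]


def update_reduction_percent(n_static, n_dynamic):
    """Relative reduction (%) in actuator updates of DETC with respect to SETC."""
    if n_static <= 0:
        raise ValueError("n_static must be positive")
    return 100.0 * (n_static - n_dynamic) / n_static


# Hypothetical inputs for illustration only (not the paper's logged data).
pairs = inter_event_intervals([0.0, 0.12, 0.30, 0.55])
reduction = update_reduction_percent(200, 90)  # 55.0
```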

6.2. A Comparison Simulation

Consider the following system dynamics from [38]:

Define the cost performances as

Set , , , where I is the identity matrix of appropriate dimensions, , and . For the critic NNs, the initial weights are set to [−0.1, 0.1], and the initial weight vectors of the actor NNs are randomly set in the interval . The NN activation function is selected as . The initial system state is , and the sampling time is set as s. Moreover, the parameter is selected as . The added probing control inputs are .

The weight values of the first critic NN converge to .

The weight values of the second critic NN converge to .

The weight values of the first actor NN converge to .

The weight values of the second actor NN converge to .

The weight values of the third actor NN converge to .

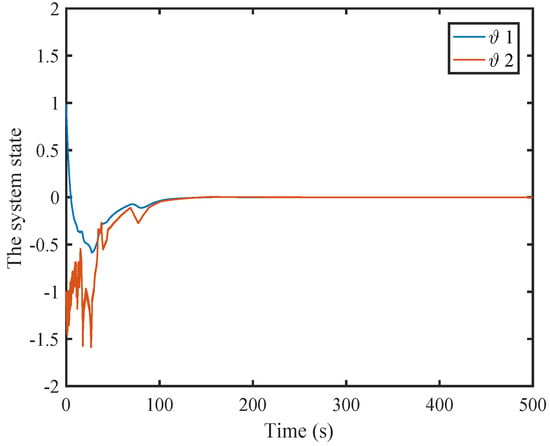

It is clear that the system state converges to zero.

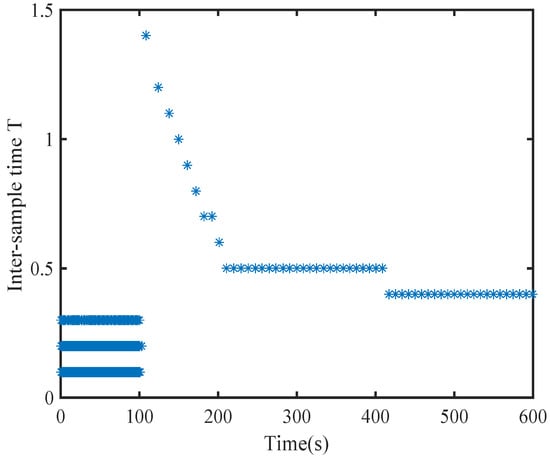

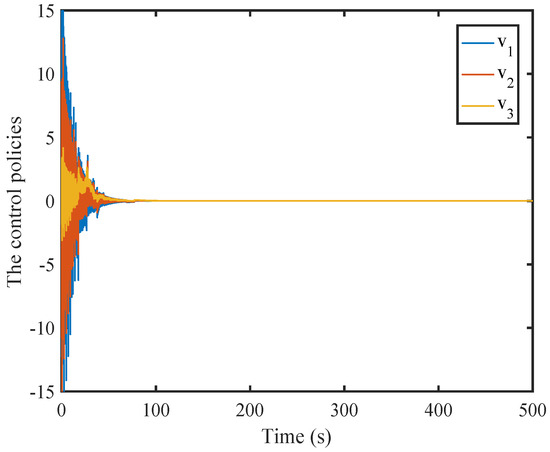

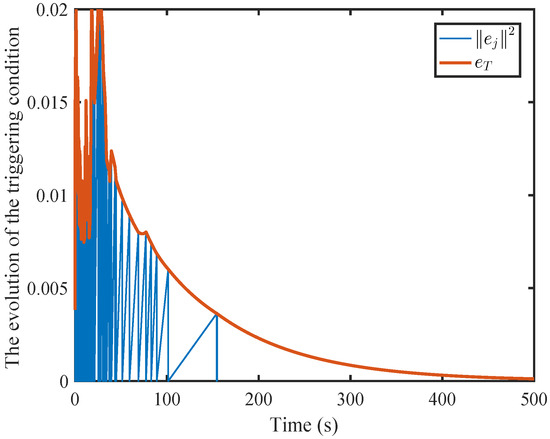

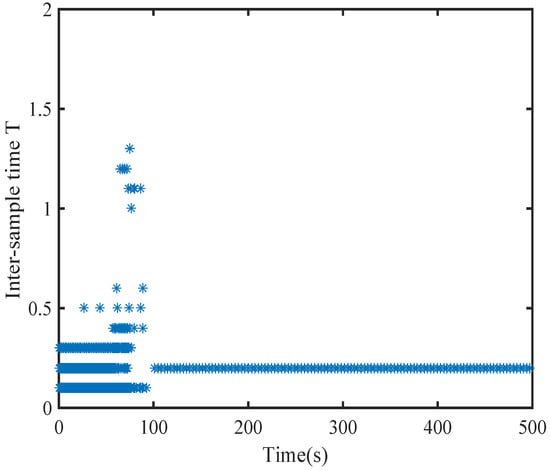

Figures 16–20 illustrate the convergence of the weight vectors of the critic and actor NNs. The evolutions of the system states and the control inputs are displayed in Figure 21 and Figure 22, which show that the system states converge and the control signals rapidly converge to zero. Figure 23 and Figure 24 present the norm of the sampling error and the norm of the triggering threshold , and the sampling time , respectively. The internal dynamic signals are depicted in Figure 25, which shows that the internal signal remains positive.

Figure 16.

Weight history of the first critic NN.

Figure 17.

Weight history of the second critic NN.

Figure 18.

Weight history of the first actor NN.

Figure 19.

Weight history of the second actor NN.

Figure 20.

Weight history of the third actor NN.

Figure 21.

The evolutions of the system state.

Figure 22.

The control input.

Figure 23.

Sampling error norm and triggering threshold norm under DETC.

Figure 24.

Sampling period of the learning stage by DETC.

Figure 25.

The internal dynamic signals.

It is obvious that the control input converges to zero.

It can be found that the norm of sampling error under dynamic event-triggering converges to zero.

To highlight the benefits of the proposed dynamic event-triggering mechanism, Figure 26 and Figure 27 show the sampling error, threshold norm, and the sample interval of the systems under the static event-triggering condition. By comparing Figure 23 and Figure 24 with Figure 26 and Figure 27, it is evident that the dynamic event-triggering strategy incorporating the positive dynamic internal signal has a larger threshold and fewer event-triggering occurrences. The dynamic event-triggering mechanism introduced in this paper can significantly reduce the computational burden.

Figure 26.

Sampling error norm and triggering threshold using static mechanisms proposed in [31].

Figure 27.

Sampling period of the learning stage using static mechanisms proposed in [31].

In Figure 24 and Figure 27, the horizontal coordinate of each “*” marker represents a triggering instant, and its vertical coordinate denotes the interval between the current and the previous triggering instants.

Compared with static event triggering, the number of actuator updates under dynamic event triggering decreased by 69%.

It can be seen that the internal dynamic signals converge to zero.

It is clear that the norm of sampling error under static event-triggering converges to zero.

7. Conclusions

In this paper, a DETC approach based on IRL has been designed for the MZSG problem of nonlinear systems with completely unknown dynamics. The algorithm relaxes the requirements on knowledge of the system drift dynamics. In the adaptive control process, an actor–critic approach has been adopted to design synchronized weight update laws. The IRL algorithm designed in this framework is implemented online, providing a new approach that combines NNs and DETC to solve the MZSG problem. The proposed dynamic event-triggering conditions reduce the communication resources consumed by the controlled object. Finally, the effectiveness of the proposed method has been validated through two examples: compared with the static event-triggering mode, the number of actuator updates under the DETC mechanism is reduced by 55% and 69%, respectively.

Author Contributions

Conceptualization, Y.L.; methodology, Y.L.; software, Z.S. and X.M.; validation, Z.S., H.S. and L.L.; formal analysis, H.S.; data curation, L.L.; writing—original draft preparation, Z.S. and Y.L.; writing—review and editing, Z.S.; visualization, X.M.; supervision, L.L. and H.S.; funding acquisition, L.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Key R&D Program Project (2022YFF0610400); Liaoning Province Applied Basic Research Program Project (2022JH2/101300246); Liaoning Provincial Science and Technology Plan Project (2023-MSLH-260); Liaoning Provincial Department of Education Youth Program for Higher Education Institutions (JYTQN2023287); and Project of Shenyang Key Laboratory for Innovative Application of Industrial Intelligent Chips and Network Systems.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors have no competing interests or conflicts of interest to declare that are relevant to the content of this article.

References

- Chen, L.; Yu, Z.Y. Maximum principle for nonzero-sum stochastic differential game with delays. IEEE Trans. Automa. Control 2014, 60, 1422–1426. [Google Scholar] [CrossRef]

- Yasini, S.; Sitani, M.B.N.; Kirampor, A. Reinforcement learning and neural networks for multi-agent nonzero-sum games of nonlinear constrained-input systems. Int. J. Mach. Learn. Cybern. 2016, 7, 967–980. [Google Scholar] [CrossRef]

- Rupnik Poklukar, D.; Žerovnik, J. Double Roman Domination: A Survey. Mathematics 2023, 11, 351. [Google Scholar] [CrossRef]

- Leon, J.F.; Li, Y.; Peyman, M.; Calvet, L.; Juan, A.A. A Discrete-Event Simheuristic for Solving a Realistic Storage Location Assignment Problem. Mathematics 2023, 11, 1577. [Google Scholar] [CrossRef]

- Tanwani, A.; Zhu, Q.Y.Z. Feedback nash equilibrium for randomly switching differential-algebraic games. IEEE Trans. Automa. Control 2020, 65, 3286–3301. [Google Scholar] [CrossRef]

- Peng, B.W.; Stancu, A.; Dang, S.P. Differential graphical games for constrained autonomous vehicles based on viability theory. IEEE Trans. Cybern. 2022, 52, 8897–8910. [Google Scholar] [CrossRef]

- Wu, S. Linear-quadratic non-zero sum backward stochastic differential game with overlapping information. IEEE Trans. Autom. Control 2023, 60, 1800–1806. [Google Scholar] [CrossRef]

- Dong, B.; Feng, Z.; Cui, Y.M. Event-triggered adaptive fuzzy optimal control of modular robot manipulators using zero-sum differential game through value iteration. Int. J. Adapt. Control Signal Process. 2023, 37, 2364–2379. [Google Scholar] [CrossRef]

- Wang, D.; Zhao, M.M.; Ha, M.M. Stability and admissibility analysis for zero-sum games under general value iteration formulation. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 8707–8718. [Google Scholar] [CrossRef]

- Du, W.; Ding, S.F.; Zhang, C.L. Modified action decoder using Bayesian reasoning for multi-agent deep reinforcement learning. Int. J. Mach. Learn. Cybern. 2021, 12, 2947–2961. [Google Scholar] [CrossRef]

- Lv, Y.F.; Ren, X.M. Approximate nash solutions for multiplayer mixed-zero-sum game with reinforcement learning. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 2739–2750. [Google Scholar] [CrossRef]

- Qin, C.B.; Zhang, Z.W.; Shang, Z.Y. Adaptive optimal safety tracking control for multiplayer mixed zero-sum games of continuous-time systems. Appl. Intell. 2023, 53, 17460–17475. [Google Scholar] [CrossRef]

- Ming, Z.Y.; Zhang, H.G.; Zhang, J.; Xie, X.P. A novel actor-critic-identifier architecture for nonlinear multiagent systems with gradient descent method. Automatica 2023, 155, 645–657. [Google Scholar] [CrossRef]

- Ming, Z.Y.; Zhang, H.G.; Wang, Y.C.; Dai, J. Policy iteration Q-learning for linear ito stochastic systems with markovian jumps and its application to power systems. IEEE Trans. Cybern. 2024, 54, 7804–7813. [Google Scholar] [CrossRef] [PubMed]

- Song, R.Z.; Liu, L.; Xia, L.; Frank, L. Online optimal event-triggered H∞ control for nonlinear systems with constrained state and input. IEEE Trans. Syst. Man Cybern. Syst. 2023, 53, 1–11. [Google Scholar] [CrossRef]

- Liu, Y.; Zhang, H.G.; Yu, R. H∞ tracking control of discrete-time system with delays via data-based adaptive dynamic programming. IEEE Trans. Syst. Man Cybern. Syst. 2020, 50, 4078–4085. [Google Scholar] [CrossRef]

- Zhang, H.G.; Liu, Y.; Xiao, G.Y. Data-based adaptive dynamic programming for a class of discrete-time systems with multiple delays. IEEE Trans. Syst. Man Cybern. Syst. 2020, 50, 432–441. [Google Scholar] [CrossRef]

- Wang, R.G.; Wang, Z.; Liu, S.X. Optimal spin polarization control for the spin-exchange relaxation-free system using adaptive dynamic programming. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 5835–5847. [Google Scholar] [CrossRef]

- Wen, Y.; Si, J.; Brandt, A. Online reinforcement learning control for the personalization of a robotic knee prosthesis. IEEE Trans. Cybern. 2020, 50, 2346–2356. [Google Scholar] [CrossRef]

- Yu, S.H.; Zhang, H.G.; Ming, Z.Y. Adaptive optimal control via continuous-time Q-learning for Stackelberg-Nash games of uncertain nonlinear systems. IEEE Trans. Syst. Man Cybern. Syst. 2023, 54, 2346–2356. [Google Scholar] [CrossRef]

- Rizvi, S.A.A.; Lin, Z.L. Adaptive dynamic programming for model-free global stabilization of control constrained continuous-time systems. IEEE Trans. Cybern. 2022, 52, 1048–1060.

- Vrabie, D.; Pastravanu, O.; Abu-Khalaf, M. Adaptive optimal control for continuous-time linear systems based on policy iteration. Automatica 2009, 45, 477–484.

- Cui, X.H.; Zhang, H.G.; Luo, Y.H. Adaptive dynamic programming for tracking design of uncertain nonlinear systems with disturbances and input constraints. Int. J. Adapt. Control Signal Process. 2017, 31, 1567–1583.

- Zhang, Q.C.; Zhao, D.B. Data-based reinforcement learning for nonzero-sum games with unknown drift dynamics. IEEE Trans. Cybern. 2019, 48, 2874–2885.

- Song, R.Z.; Lewis, F.L.; Wei, Q.L. Off-policy integral reinforcement learning method to solve nonlinear continuous-time multiplayer nonzero-sum games. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 704–713.

- Liang, T.L.; Zhang, H.G.; Zhang, J. Event-triggered guarantee cost control for partially unknown stochastic systems via explorized integral reinforcement learning strategy. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 7830–7844.

- Ren, H.; Dai, J.; Zhang, H.G. Off-policy integral reinforcement learning algorithm in dealing with nonzero sum game for nonlinear distributed parameter systems. Trans. Inst. Meas. Control 2024, 42, 2919–2928.

- Yoo, J.; Johansson, K.H. Event-triggered model predictive control with a statistical learning. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 2571–2581.

- Huang, Y.L.; Xiao, X.; Wang, Y.H. Event-triggered pinning synchronization and robust pinning synchronization of coupled neural networks with multiple weights. Int. J. Adapt. Control Signal Process. 2022, 37, 584–602.

- Sun, L.B.; Huang, X.C.; Song, Y.D. A novel dual-phase based approach for distributed event-triggered control of multiagent systems with guaranteed performance. IEEE Trans. Cybern. 2024, 54, 4229–4240.

- Li, Z.X.; Yan, J.; Yu, W.W. Adaptive event-triggered control for unknown second-order nonlinear multiagent systems. IEEE Trans. Cybern. 2021, 51, 6131–6140.

- Fan, Y.; Yang, Y.; Zhang, Y. Sampling-based event-triggered consensus for multi-agent systems. Neurocomputing 2016, 191, 141–147.

- Girard, A. Dynamic triggering mechanisms for event-triggered control. IEEE Trans. Autom. Control 2015, 60, 1992–1997.

- Zhang, J.; Yang, D.; Wang, Y.; Zhou, B. Dynamic event-based tracking control of boiler turbine systems with guaranteed performance. IEEE Trans. Autom. Sci. Eng. 2023, 21, 4272–4282.

- Xu, H.C.; Zhu, F.L.; Ling, X.F. Observer-based semi-global bipartite average tracking of saturated discrete-time multi-agent systems via dynamic event-triggered control. IEEE Trans. Circuits Syst. II Express Briefs 2024, 71, 3156–3160.

- Chen, J.J.; Chen, B.S.; Zeng, Z.G. Adaptive dynamic event-triggered fault-tolerant consensus for nonlinear multiagent systems with directed/undirected networks. IEEE Trans. Cybern. 2023, 53, 3901–3912.

- Hou, Q.H.; Dong, J.X. Cooperative fault-tolerant output regulation of linear heterogeneous multiagent systems via an adaptive dynamic event-triggered mechanism. IEEE Trans. Cybern. 2023, 53, 5299–5310.

- Song, R.Z.; Yang, G.F.; Lewis, F.L. Nearly optimal control for mixed zero-sum game based on off-policy integral reinforcement learning. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 2793–2804.

- Han, L.H.; Wang, Y.C.; Ma, Y.C. Dynamic event-triggered non-fragile dissipative filtering for interval type-2 fuzzy Markov jump systems. Int. J. Mach. Learn. Cybern. 2024, 15, 4999–5013.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).